84 results in 94Axx

Extropy-based dynamic cumulative residual inaccuracy measure: properties and applications

- Journal: Probability in the Engineering and Informational Sciences, First View
- Published online by Cambridge University Press: 26 February 2025, pp. 1-25
- Article
- Open access

Some generalized information and divergence generating functions: properties, estimation, validation, and applications

- Journal: Probability in the Engineering and Informational Sciences, First View
- Published online by Cambridge University Press: 25 February 2025, pp. 1-34
- Article
- Open access

ON THE BINARY SEQUENCE $(1,1,0,1,0^3,1,0^7,1,0^{15},\ldots )$

- Journal: Bulletin of the Australian Mathematical Society / Volume 111 / Issue 2 / April 2025
- Published online by Cambridge University Press: 23 October 2024, pp. 260-271
- Print publication: April 2025
- Article
- Open access

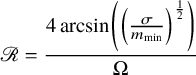

A mathematical theory of super-resolution and two-point resolution

- Journal: Forum of Mathematics, Sigma / Volume 12 / 2024
- Published online by Cambridge University Press: 21 October 2024, e83
- Article
- Open access

ON A CONJECTURE REGARDING THE SYMMETRIC DIFFERENCE OF CERTAIN SETS

- Journal: Bulletin of the Australian Mathematical Society, First View
- Published online by Cambridge University Press: 10 October 2024, pp. 1-8
- Article
- Open access

Optimal experimental design: Formulations and computations

- Journal: Acta Numerica / Volume 33 / July 2024
- Published online by Cambridge University Press: 04 September 2024, pp. 715-840
- Article
- Open access

Quantile-based information generating functions and their properties and uses

- Journal: Probability in the Engineering and Informational Sciences / Volume 38 / Issue 4 / October 2024
- Published online by Cambridge University Press: 22 May 2024, pp. 733-751
- Article
- Open access

Phase retrieval on circles and lines

- Journal: Canadian Mathematical Bulletin / Volume 67 / Issue 4 / December 2024
- Published online by Cambridge University Press: 10 May 2024, pp. 927-935
- Print publication: December 2024
- Article

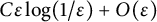

Convergence rate of entropy-regularized multi-marginal optimal transport costs

- Journal: Canadian Journal of Mathematics, First View
- Published online by Cambridge University Press: 15 March 2024, pp. 1-21
- Article
- Open access

On the Ziv–Merhav theorem beyond Markovianity I

- Journal: Canadian Journal of Mathematics, First View
- Published online by Cambridge University Press: 07 March 2024, pp. 1-25
- Article
- Open access

Unified bounds for the independence number of graphs

- Journal: Canadian Journal of Mathematics / Volume 77 / Issue 1 / February 2025
- Published online by Cambridge University Press: 11 December 2023, pp. 97-117
- Print publication: February 2025
- Article

t-Design Curves and Mobile Sampling on the Sphere

- Journal: Forum of Mathematics, Sigma / Volume 11 / 2023
- Published online by Cambridge University Press: 23 November 2023, e105
- Article
- Open access

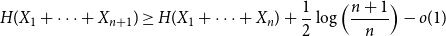

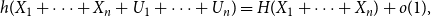

Approximate discrete entropy monotonicity for log-concave sums

- Journal: Combinatorics, Probability and Computing / Volume 33 / Issue 2 / March 2024
- Published online by Cambridge University Press: 13 November 2023, pp. 196-209
- Article

Markov capacity for factor codes with an unambiguous symbol

- Journal: Ergodic Theory and Dynamical Systems / Volume 44 / Issue 8 / August 2024
- Published online by Cambridge University Press: 07 November 2023, pp. 2199-2228
- Print publication: August 2024
- Article
- Open access

The unified extropy and its versions in classical and Dempster–Shafer theories

- Journal: Journal of Applied Probability / Volume 61 / Issue 2 / June 2024
- Published online by Cambridge University Press: 23 October 2023, pp. 685-696
- Print publication: June 2024
- Article

Encoding subshifts through sliding block codes

- Journal: Ergodic Theory and Dynamical Systems / Volume 44 / Issue 6 / June 2024
- Published online by Cambridge University Press: 03 August 2023, pp. 1609-1628
- Print publication: June 2024
- Article
- Open access

Information-theoretic convergence of extreme values to the Gumbel distribution

- Journal: Journal of Applied Probability / Volume 61 / Issue 1 / March 2024
- Published online by Cambridge University Press: 21 June 2023, pp. 244-254
- Print publication: March 2024
- Article

On asymptotic fairness in voting with greedy sampling

- Journal: Advances in Applied Probability / Volume 55 / Issue 3 / September 2023
- Published online by Cambridge University Press: 10 May 2023, pp. 999-1032
- Print publication: September 2023
- Article

Costa’s concavity inequality for dependent variables based on the multivariate Gaussian copula

- Journal: Journal of Applied Probability / Volume 60 / Issue 4 / December 2023
- Published online by Cambridge University Press: 12 April 2023, pp. 1136-1156
- Print publication: December 2023
- Article

A new lifetime distribution by maximizing entropy: properties and applications

- Journal: Probability in the Engineering and Informational Sciences / Volume 38 / Issue 1 / January 2024
- Published online by Cambridge University Press: 28 February 2023, pp. 189-206
- Article
- Open access