An analysis of the transformative potential of Australia’s national food policies and policy actions to promote healthy and sustainable food systems

- Journal: Public Health Nutrition / Volume 27 / Issue 1 / 2024

- Published online by Cambridge University Press: 20 February 2024, e75

- Article

- Open access

10 Pupil Dilation During the Stroop Task Offers a Sensitive and Scalable Biomarker of Locus Coeruleus Integrity

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 802-803

- Article

49 Locus Coeruleus MR Signal Interacts with CSF p-tau/AB42 to Predict Attention, Executive Function, and Verbal Memory

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 921-922

- Article

The Global Dynamics of Inequality (GINI) project: analysing archaeological housing data

- Article

- Open access

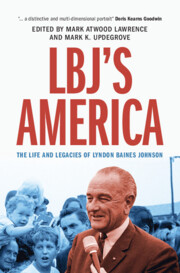

Index

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. 358-374

- Chapter

Introduction

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. 1-14

- Chapter

Notes

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. 323-357

- Chapter

Acknowledgments

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. 321-322

- Chapter

Contributors

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. ix-xiv

- Chapter

Illustrations

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. vii-viii

- Chapter

Copyright page

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, p. iv

- Chapter

Contents

- Book: LBJ's America

- Published online: 19 October 2023

- Print publication: 19 October 2023, pp. v-vi

- Chapter

LBJ's America

- The Life and Legacies of Lyndon Baines Johnson

- Published online: 19 October 2023

- Print publication: 19 October 2023

An approach for collaborative development of a federated biomedical knowledge graph-based question-answering system: Question-of-the-Month challenges

- Journal: Journal of Clinical and Translational Science / Volume 7 / Issue 1 / 2023

- Published online by Cambridge University Press: 14 September 2023, e214

- Article

- Open access

The Rapid ASKAP Continuum Survey III: Spectra and Polarisation In Cutouts of Extragalactic Sources (SPICE-RACS) first data release

- Journal: Publications of the Astronomical Society of Australia / Volume 40 / 2023

- Published online by Cambridge University Press: 30 August 2023, e040

- Article

- Open access

The need for particular scrutiny of claims made by researchers associated with ultra-processed food manufacturers

- Journal: Public Health Nutrition / Volume 26 / Issue 7 / July 2023

- Published online by Cambridge University Press: 13 April 2023, pp. 1392-1393

- Article

- Open access

Ultra-processed foods: a fit-for-purpose concept for nutrition policy activities to tackle unhealthy and unsustainable diets – ADDENDUM

- Journal: Public Health Nutrition / Volume 26 / Issue 7 / July 2023

- Published online by Cambridge University Press: 11 April 2023, p. 1389

- Article

- Open access

The need for particular scrutiny of claims made by researchers associated with ultra-processed food manufacturers

- Journal: British Journal of Nutrition / Volume 130 / Issue 8 / 28 October 2023

- Published online by Cambridge University Press: 20 February 2023, pp. 1469-1470

- Print publication: 28 October 2023

- Article

- Open access

Ultra-processed foods: a fit-for-purpose concept for nutrition policy activities to tackle unhealthy and unsustainable diets – ADDENDUM

- Journal: British Journal of Nutrition / Volume 129 / Issue 10 / 28 May 2023

- Published online by Cambridge University Press: 11 January 2023, p. 1840

- Print publication: 28 May 2023

- Article

- Open access

Medical Care Among Individuals with a Concussion in Ontario: A Population-based Study

- Journal: Canadian Journal of Neurological Sciences / Volume 51 / Issue 1 / January 2024

- Published online by Cambridge University Press: 20 December 2022, pp. 87-97

- Article
