219 results

Unintended consequences, conflict and resilience in a small-scale irrigation development, Marakwet, Kenya

- Article

- Open access

Head and Neck Cancer: United Kingdom National Multidisciplinary Guidelines, Sixth Edition

- Journal: The Journal of Laryngology & Otology / Volume 138 / Issue S1 / April 2024

- Published online by Cambridge University Press: 14 March 2024, pp. S1-S224

- Print publication: April 2024

- Article

- Open access

System fertilization in the pasture phase enhances productivity in integrated crop–livestock systems

- Journal: The Journal of Agricultural Science / Volume 161 / Issue 6 / December 2023

- Published online by Cambridge University Press: 14 December 2023, pp. 755-762

- Article

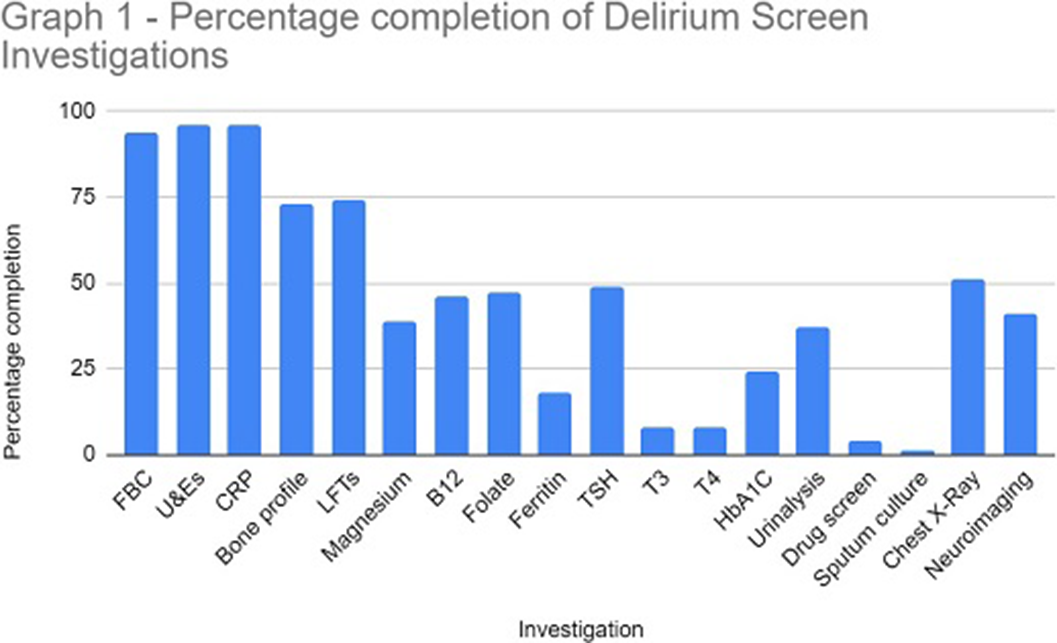

Are We Adequately Assessing Delirium? An Analysis Of Liaison Psychiatry Referrals

- Journal: European Psychiatry / Volume 66 / Issue S1 / March 2023

- Published online by Cambridge University Press: 19 July 2023, pp. S518-S519

- Article

- Open access

Risk and resilience factors for psychopathology during pregnancy: An application of the Hierarchical Taxonomy of Psychopathology (HiTOP)

- Journal: Development and Psychopathology / Volume 36 / Issue 2 / May 2024

- Published online by Cambridge University Press: 03 February 2023, pp. 545-561

- Article

- Open access

Depression, anxiety and PTSD symptoms before and during the COVID-19 pandemic in the UK

- Journal: Psychological Medicine / Volume 53 / Issue 12 / September 2023

- Published online by Cambridge University Press: 26 July 2022, pp. 5428-5441

- Article

- Open access

Associations between unpasteurised camel and other milk consumption, livestock ownership, and self-reported febrile and gastrointestinal symptoms among semi-pastoralists and pastoralists in the Somali Region of Ethiopia

- Journal: Epidemiology & Infection / Volume 151 / 2023

- Published online by Cambridge University Press: 02 May 2022, e44

- Article

- Open access

Seed-shattering phenology at soybean harvest of economically important weeds in multiple regions of the United States. Part 3: Drivers of seed shatter

- Journal: Weed Science / Volume 70 / Issue 1 / January 2022

- Published online by Cambridge University Press: 15 November 2021, pp. 79-86

- Article

- Open access

Outcomes of clinical decision support for outpatient management of Clostridioides difficile infection

- Journal: Infection Control & Hospital Epidemiology / Volume 43 / Issue 10 / October 2022

- Published online by Cambridge University Press: 29 September 2021, pp. 1345-1348

- Print publication: October 2022

- Article

The MAGPI survey: Science goals, design, observing strategy, early results and theoretical framework

- Journal: Publications of the Astronomical Society of Australia / Volume 38 / 2021

- Published online by Cambridge University Press: 26 July 2021, e031

- Article

Role of age, gender and marital status in prognosis for adults with depression: An individual patient data meta-analysis

- Journal: Epidemiology and Psychiatric Sciences / Volume 30 / 2021

- Published online by Cambridge University Press: 04 June 2021, e42

- Article

- Open access

The treatment gap for mental disorders in adults enrolled in HIV treatment programmes in South Africa: a cohort study using linked electronic health records

- Journal: Epidemiology and Psychiatric Sciences / Volume 30 / 2021

- Published online by Cambridge University Press: 17 May 2021, e37

- Article

- Open access

Turbulent impurity transport simulations in Wendelstein 7-X plasmas

- Journal: Journal of Plasma Physics / Volume 87 / Issue 1 / February 2021

- Published online by Cambridge University Press: 02 February 2021, 855870103

- Article

Seed-shattering phenology at soybean harvest of economically important weeds in multiple regions of the United States. Part 1: Broadleaf species

- Journal: Weed Science / Volume 69 / Issue 1 / January 2021

- Published online by Cambridge University Press: 04 November 2020, pp. 95-103

- Article

- Open access

Seed-shattering phenology at soybean harvest of economically important weeds in multiple regions of the United States. Part 2: Grass species

- Journal: Weed Science / Volume 69 / Issue 1 / January 2021

- Published online by Cambridge University Press: 26 October 2020, pp. 104-110

- Article

- Open access

Validation and calibration of the Eating Assessment in Toddlers FFQ (EAT FFQ) for children, used in the Growing Up Milk – Lite (GUMLi) randomised controlled trial

- Journal: British Journal of Nutrition / Volume 125 / Issue 2 / 28 January 2021

- Published online by Cambridge University Press: 17 August 2020, pp. 183-193

- Print publication: 28 January 2021

- Article

Impact of unit-specific metrics and prescribing tools on a family medicine ward

- Journal: Infection Control & Hospital Epidemiology / Volume 41 / Issue 11 / November 2020

- Published online by Cambridge University Press: 01 July 2020, pp. 1272-1278

- Print publication: November 2020

- Article

The Role of Zinc in Depressed Pregnant and Non-Pregnant Women: A Systematic Review and Meta-Analysis

- Journal: Proceedings of the Nutrition Society / Volume 79 / Issue OCE2 / 2020

- Published online by Cambridge University Press: 10 June 2020, E542

- Article

Documentation of acute change in mental status in nursing homes highlights opportunity to augment infection surveillance criteria

- Journal: Infection Control & Hospital Epidemiology / Volume 41 / Issue 7 / July 2020

- Published online by Cambridge University Press: 28 April 2020, pp. 848-850

- Print publication: July 2020

- Article

PKS 2250–351: A giant radio galaxy in Abell 3936

- Journal: Publications of the Astronomical Society of Australia / Volume 37 / 2020

- Published online by Cambridge University Press: 25 March 2020, e013

- Article