544 results

Methylation profiles at birth linked to early childhood obesity

- Journal:

- Journal of Developmental Origins of Health and Disease / Volume 15 / 2024

- Published online by Cambridge University Press:

- 25 April 2024, e7

- Article

- Open access

Prehospital Surgical Cricothyrotomy in a Ground-Based 9-1-1 EMS System: A Retrospective Review

- Journal:

- Prehospital and Disaster Medicine, First View

- Published online by Cambridge University Press:

- 23 April 2024, pp. 1-4

- Article

The authors’ reply to Jensen et al’s Letter to the Editor

- Journal:

- Infection Control & Hospital Epidemiology / Volume 45 / Issue 6 / June 2024

- Published online by Cambridge University Press:

- 16 April 2024, pp. 799-800

- Print publication:

- June 2024

- Article

Disinfection of flexible fibre-optic endoscopes out-of-hours: confidential telephone survey of ENT units in England – 20 years on

- Journal:

- The Journal of Laryngology & Otology, First View

- Published online by Cambridge University Press:

- 12 February 2024, pp. 1-6

- Article

The Pleistocene footprints are younger than we thought: correcting the radiocarbon dates of Ruppia seeds, Tularosa Basin, New Mexico

- Journal:

- Quaternary Research / Volume 117 / January 2024

- Published online by Cambridge University Press:

- 10 January 2024, pp. 67-78

- Article

Rethinking Transnational Activism through Regional Perspectives: Reflections, Literatures and Cases

- Journal:

- Transactions of the Royal Historical Society, First View

- Published online by Cambridge University Press:

- 08 January 2024, pp. 1-27

- Article

- Open access

Producing and researching podcasts as a reflective medium in English language teaching

- Journal:

- Language Teaching / Volume 57 / Issue 1 / January 2024

- Published online by Cambridge University Press:

- 09 January 2024, pp. 139-142

- Print publication:

- January 2024

- Article

Rapid Dehydroxylation of Nickeliferous Goethite in Lateritic Nickel Ore: X-Ray Diffraction and TEM Investigation

- Journal:

- Clays and Clay Minerals / Volume 57 / Issue 6 / December 2009

- Published online by Cambridge University Press:

- 01 January 2024, pp. 751-770

- Article

9 Four-Year Practice Effects on the RBANS in a Longitudinal Study of Older Adults

- Journal:

- Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press:

- 21 December 2023, p. 694

- Article

Severe mental illness, race/ethnicity, multimorbidity and mortality following COVID-19 infection: nationally representative cohort study – ADDENDUM

- Journal:

- The British Journal of Psychiatry / Volume 224 / Issue 1 / January 2024

- Published online by Cambridge University Press:

- 13 December 2023, p. 29

- Print publication:

- January 2024

- Article

- Open access

Developing a framework to improve global estimates of conservation area coverage

- Article

- Open access

Essential team science skills for biostatisticians on collaborative research teams

- Journal:

- Journal of Clinical and Translational Science / Volume 7 / Issue 1 / 2023

- Published online by Cambridge University Press:

- 06 November 2023, e243

- Article

- Open access

Severe mental illness, race/ethnicity, multimorbidity and mortality following COVID-19 infection: nationally representative cohort study

- Journal:

- The British Journal of Psychiatry / Volume 223 / Issue 5 / November 2023

- Published online by Cambridge University Press:

- 25 October 2023, pp. 518-525

- Print publication:

- November 2023

- Article

- Open access

Radiofrequency ice dielectric measurements at Summit Station, Greenland

- Journal:

- Journal of Glaciology, First View

- Published online by Cambridge University Press:

- 09 October 2023, pp. 1-12

- Article

- Open access

Factors associated with loss to follow-up in outpatient parenteral antimicrobial therapy: A retrospective cohort study

- Journal:

- Infection Control & Hospital Epidemiology / Volume 45 / Issue 3 / March 2024

- Published online by Cambridge University Press:

- 02 October 2023, pp. 387-389

- Print publication:

- March 2024

- Article

- Open access

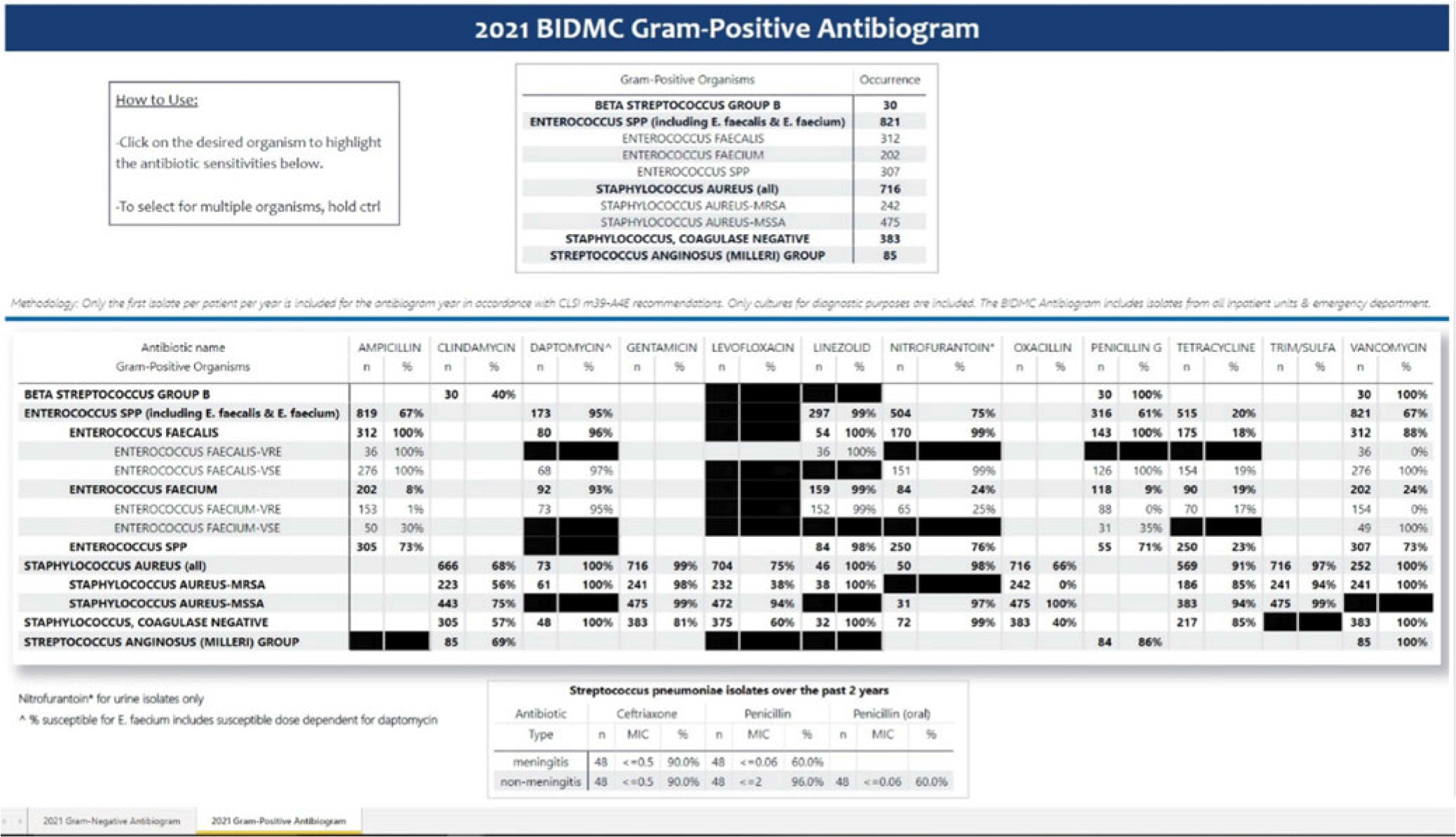

Creating an electronic antibiogram using visualization software: Easily updatable and removes the need for yearly manual review

- Journal:

- Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023

- Published online by Cambridge University Press:

- 29 September 2023, p. s34

- Article

- Open access

Agricultural Research Service Weed Science Research: Past, Present, and Future

- Journal:

- Weed Science / Volume 71 / Issue 4 / July 2023

- Published online by Cambridge University Press:

- 16 August 2023, pp. 312-327

- Article

- Open access

Successful implementation of telehealth visits in the paediatric heart failure and heart transplant population

- Journal:

- Cardiology in the Young / Volume 34 / Issue 3 / March 2024

- Published online by Cambridge University Press:

- 31 July 2023, pp. 531-534

- Article

Outcomes of Pre-Existing Diabetes in People With/without New Onset Severe Mental Illness: A Primary-Secondary Mental Healthcare Linkage in South London, United Kingdom

- Journal:

- BJPsych Open / Volume 9 / Issue S1 / July 2023

- Published online by Cambridge University Press:

- 07 July 2023, pp. S2-S3

- Article

- Open access

Chapter 14 - Working with Complex Trauma and Dissociation in Schema Therapy

- from Part III - Applications and Adaptations for Mental Health Presentations

- Book:

- Cambridge Guide to Schema Therapy

- Published online:

- 27 July 2023

- Print publication:

- 29 June 2023, pp. 266-278

- Chapter