Psychometric and adherence considerations for high-frequency, smartphone-based cognitive screening protocols in older adults

- Journal: Journal of the International Neuropsychological Society, First View
- Published online by Cambridge University Press: 20 September 2024, pp. 1-9
- Article
- Open access

Interventions targeting social determinants of mental disorders and the Sustainable Development Goals: A systematic review of reviews

- Journal: European Psychiatry / Volume 67 / Issue S1 / April 2024
- Published online by Cambridge University Press: 27 August 2024, p. S283
- Article
- Open access

Development and initial evaluation of a clinical prediction model for risk of treatment resistance in first-episode psychosis: Schizophrenia Prediction of Resistance to Treatment (SPIRIT)

- Journal: The British Journal of Psychiatry, First View
- Published online by Cambridge University Press: 05 August 2024, pp. 1-10
- Article
- Open access

Pathways to adolescent social anxiety: Testing interactions between neural social reward function and perceived social threat in daily life

- Journal: Development and Psychopathology, First View
- Published online by Cambridge University Press: 27 May 2024, pp. 1-16
- Article
- Open access

Development and implementation of a nationwide multidrug-resistant organism tracking and alert system for Veterans Affairs medical centers

- Journal: Infection Control & Hospital Epidemiology, First View
- Published online by Cambridge University Press: 24 May 2024, pp. 1-6
- Article
- Open access

Sex-dependent differences in vulnerability to early risk factors for posttraumatic stress disorder: results from the AURORA study

- Journal: Psychological Medicine, First View
- Published online by Cambridge University Press: 22 May 2024, pp. 1-11
- Article
- Open access

The contribution of cannabis use to the increased psychosis risk among minority ethnic groups in Europe

- Journal: Psychological Medicine, First View
- Published online by Cambridge University Press: 09 May 2024, pp. 1-10
- Article
- Open access

Chapter 2 - Friends and Family

- from Part I - Personal
- Book: Jonathan Swift in Context
- Published online: 02 May 2024
- Print publication: 09 May 2024, pp. 10-17
- Chapter

Associations between food groups and biomarkers of inflammation: Are some foods groups more protective than others?

- Journal: Proceedings of the Nutrition Society / Volume 83 / Issue OCE1 / April 2024
- Published online by Cambridge University Press: 07 May 2024, E189
- Article

Reduction in systolic blood pressure following dietary fibre intervention is dependent on baseline gut microbiota composition

- Journal: Proceedings of the Nutrition Society / Volume 83 / Issue OCE1 / April 2024
- Published online by Cambridge University Press: 07 May 2024, E14
- Article

367 The Effect of a Culturally-tailored and Theory-based Resistance Exercise Intervention on Motivation, Self-Regulation, and Adherence in Young Black Women

- Journal: Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024
- Published online by Cambridge University Press: 03 April 2024, pp. 110-111
- Article
- Open access

163 Knowledge and Implementation of Tobacco Control Practices in Rural Louisiana Community Health Centers

- Journal: Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024
- Published online by Cambridge University Press: 03 April 2024, p. 49
- Article
- Open access

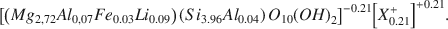

Mixed-Layer Kerolite/Stevensite from the Amargosa Desert, Nevada

- Journal: Clays and Clay Minerals / Volume 30 / Issue 5 / October 1982
- Published online by Cambridge University Press: 02 April 2024, pp. 321-326
- Article

Origin of Magnesium Clays from the Amargosa Desert, Nevada

- Journal: Clays and Clay Minerals / Volume 30 / Issue 5 / October 1982
- Published online by Cambridge University Press: 02 April 2024, pp. 327-336
- Article

Using latent class analysis to investigate enduring effects of intersectional social disadvantage on long-term vocational and financial outcomes in the 20-year prospective Chicago Longitudinal Study

- Journal: Psychological Medicine, First View
- Published online by Cambridge University Press: 25 March 2024, pp. 1-13
- Article
- Open access

Changing sustainable diet behaviours during the COVID-19 Pandemic: inequitable outcomes across a sociodemographically diverse sample of adults

- Journal: Journal of Nutritional Science / Volume 13 / 2024
- Published online by Cambridge University Press: 14 March 2024, e16
- Article
- Open access

Report of the target end-states for defined benefit pension schemes working party

- Journal: British Actuarial Journal / Volume 29 / 2024
- Published online by Cambridge University Press: 07 March 2024, e5
- Article
- Open access

Cation Ordering in Synthetic Layered Double Hydroxides

- Journal: Clays and Clay Minerals / Volume 45 / Issue 6 / December 1997
- Published online by Cambridge University Press: 28 February 2024, pp. 803-813
- Article

Developmental trajectories of adolescent internalizing symptoms and parental responses to distress

- Journal: Development and Psychopathology, First View
- Published online by Cambridge University Press: 23 February 2024, pp. 1-12
- Article

Understanding the role of antibiotic-associated adverse events in influencing antibiotic decision-making

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 4 / Issue 1 / 2024
- Published online by Cambridge University Press: 30 January 2024, e13
- Article
- Open access