122 results

Stakeholder perspectives of mental healthcare services in Bangladesh, its challenges and opportunities: a qualitative study

- Journal: Cambridge Prisms: Global Mental Health / Volume 11 / 2024
- Published online by Cambridge University Press: 12 March 2024, e37
- Article
- Open access

Development of the conversation tool “I-HARP for COPD” for early identification of palliative care needs in patients with chronic obstructive pulmonary disease

- Journal: Palliative & Supportive Care, First View
- Published online by Cambridge University Press: 16 February 2024, pp. 1-9
- Article
- Open access

Bioclimatic predictors of forest structure, composition and phenology in the Paraguayan Dry Chaco

- Journal: Journal of Tropical Ecology / Volume 40 / 2024
- Published online by Cambridge University Press: 04 January 2024, e1
- Article
- Open access

84 Feasibility and Validity of Remote Digital Assessment of Multi-Day Learning in Cognitively Unimpaired Older Adults

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023
- Published online by Cambridge University Press: 21 December 2023, pp. 487-488
- Article

How old is the Ordovician–Silurian boundary at Dob’s Linn, Scotland? Integrating LA-ICP-MS and CA-ID-TIMS U-Pb zircon dates

- Journal: Geological Magazine / Volume 160 / Issue 9 / September 2023
- Published online by Cambridge University Press: 22 November 2023, pp. 1775-1789
- Article
- Open access

Severe and common mental disorders and risk of emergency hospital admissions for ambulatory care sensitive conditions among the UK Biobank cohort

- Journal: BJPsych Open / Volume 9 / Issue 6 / November 2023
- Published online by Cambridge University Press: 07 November 2023, e211
- Article
- Open access

Radiofrequency ice dielectric measurements at Summit Station, Greenland

- Journal: Journal of Glaciology, First View
- Published online by Cambridge University Press: 09 October 2023, pp. 1-12
- Article
- Open access

- HTML

- Export citation

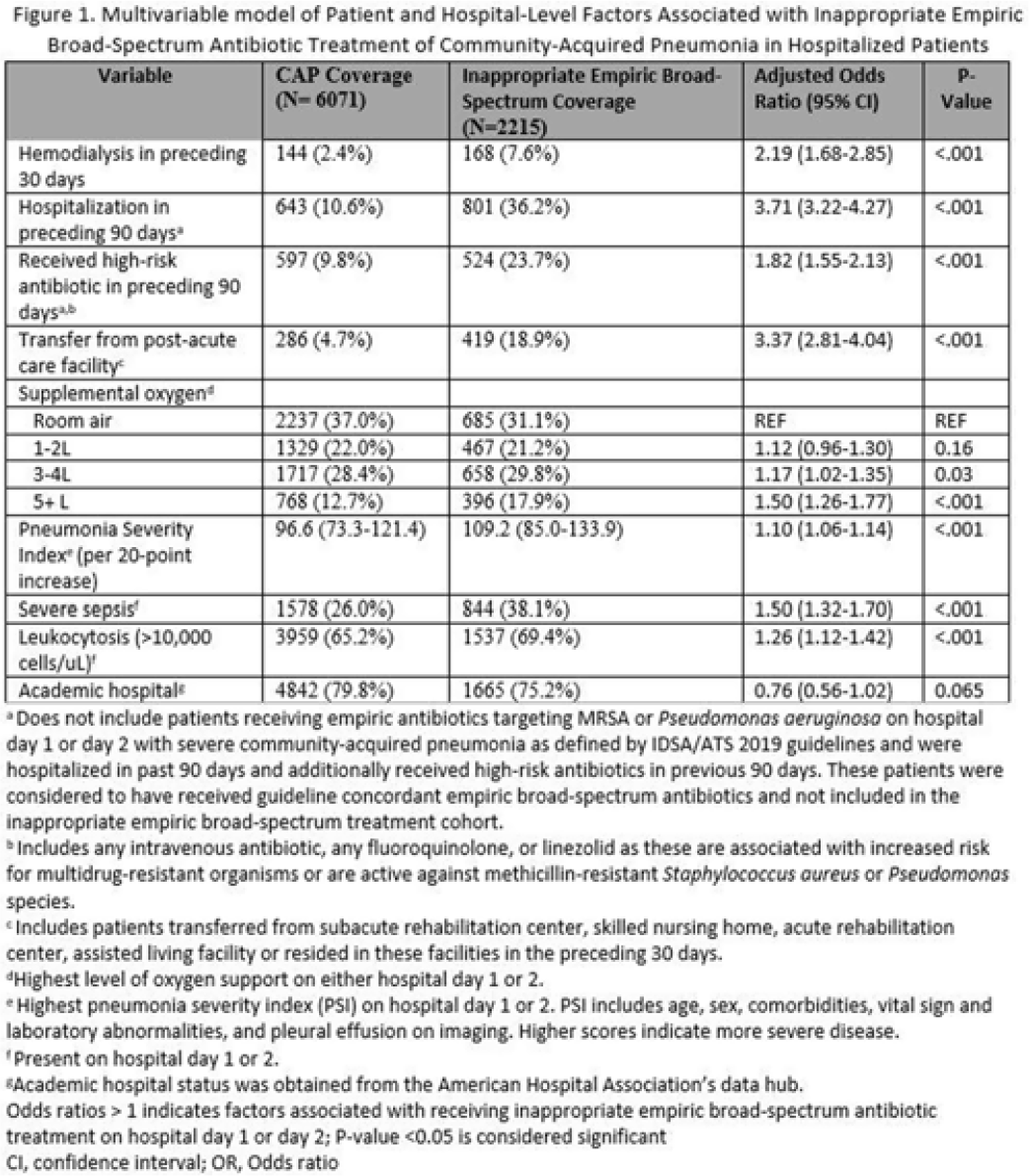

Risk Factors and outcomes associated with inappropriate empiric broad-spectrum antibiotic use in hospitalized patients with community-acquired pneumonia

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, pp. s31-s32
- Article
- Open access

Patterns, predictors, and patient-reported reasons for antidepressant discontinuation in the WHO World Mental Health Surveys

- Journal: Psychological Medicine / Volume 54 / Issue 1 / January 2024
- Published online by Cambridge University Press: 14 September 2023, pp. 67-78
- Article

Effectiveness and long-term stability of outpatient cognitive behavioural therapy (CBT) for children and adolescents with anxiety and depressive disorders under routine care conditions

- Journal: Behavioural and Cognitive Psychotherapy / Volume 51 / Issue 4 / July 2023
- Published online by Cambridge University Press: 13 March 2023, pp. 320-334
- Print publication: July 2023
- Article

Examining the relation between bilingualism and age of symptom onset in frontotemporal dementia

- Journal: Bilingualism: Language and Cognition / Volume 27 / Issue 2 / March 2024
- Published online by Cambridge University Press: 09 March 2023, pp. 274-286
- Article
- Open access

Non-suicidal self-injury and emotional burden among university students during the COVID-19 pandemic: cross-sectional online survey

- Journal: BJPsych Open / Volume 9 / Issue 1 / January 2023
- Published online by Cambridge University Press: 01 December 2022, e1
- Article
- Open access

- HTML

- Export citation

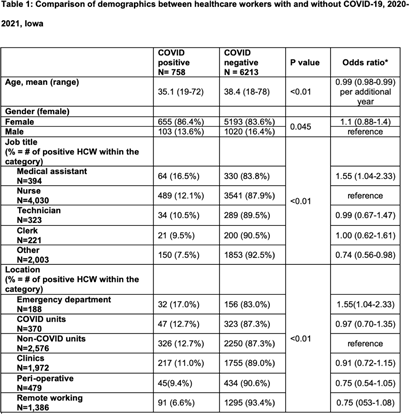

Association between job role and coronavirus disease 2019 (COVID-19) among healthcare personnel, Iowa, 2021

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue 1 / 2022
- Published online by Cambridge University Press: 01 December 2022, e188
- Article
- Open access

Milk yield and composition in dairy goats fed extruded flaxseed or a high-palmitic acid fat supplement

- Journal: Journal of Dairy Research / Volume 89 / Issue 4 / November 2022
- Published online by Cambridge University Press: 13 December 2022, pp. 355-366
- Print publication: November 2022
- Article
- Open access

Characterizing Emergency Supply Kit Possession in the United States During the COVID-19 Pandemic: 2020–2021

- Journal: Disaster Medicine and Public Health Preparedness / Volume 17 / 2023
- Published online by Cambridge University Press: 17 October 2022, e283
- Article

Developing and sustaining a community advisory committee to support, inform, and translate biomedical research

- Journal: Journal of Clinical and Translational Science / Volume 7 / Issue 1 / 2023
- Published online by Cambridge University Press: 11 October 2022, e20
- Article
- Open access

Governments commit to forest restoration, but what does it take to restore forests?

- Journal: Environmental Conservation / Volume 49 / Issue 4 / December 2022
- Published online by Cambridge University Press: 09 September 2022, pp. 206-214
- Article
- Open access

Sex and age differences in the proportion of experienced symptoms by SARS-CoV-2 serostatus in a community-based cross-sectional study

- Journal: Epidemiology & Infection / Volume 150 / 2022
- Published online by Cambridge University Press: 10 August 2022, e157
- Article
- Open access

- HTML

- Export citation

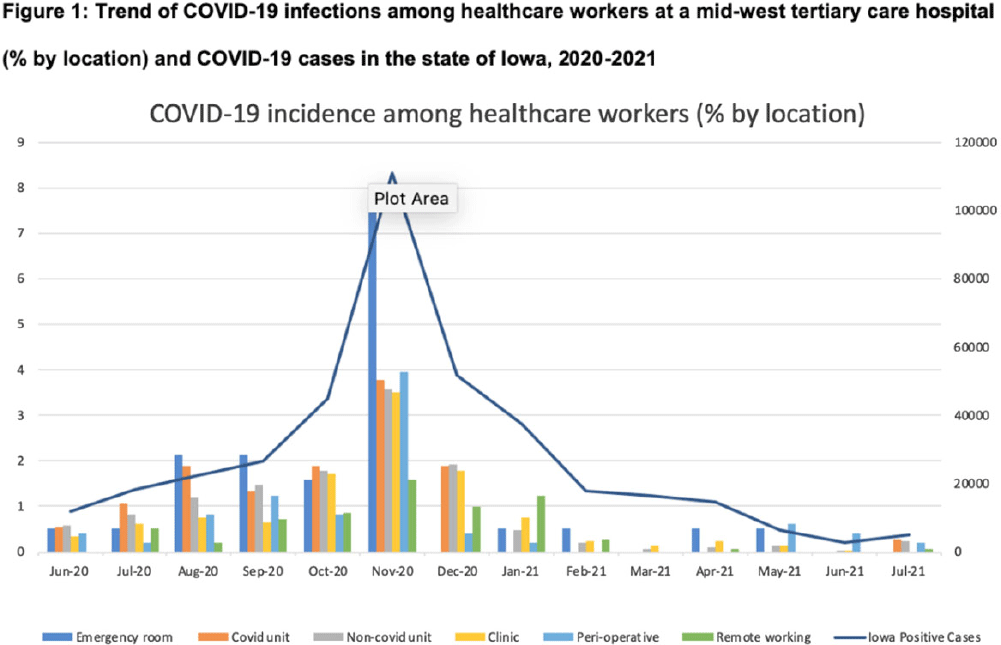

Coronavirus disease 2019 (COVID-19) among nonphysician healthcare personnel by work location at a tertiary-care center, Iowa, 2020–2021

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 8 / August 2023
- Published online by Cambridge University Press: 02 June 2022, pp. 1351-1354
- Print publication: August 2023
- Article
- Open access

COVID-19 incidence among nonphysician healthcare workers at a tertiary care center–Iowa, 2020–2021

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue S1 / July 2022
- Published online by Cambridge University Press: 16 May 2022, pp. s6-s7
- Article
- Open access
