Book contents

- Frontmatter

- Contents

- Preface to the English Version

- Preface

- 1 Bayesian Inference

- 2 Representation of Prior Information

- 3 Bayesian Inference in Basic Problems

- 4 Inference by Monte Carlo Methods

- 5 Model Assessment

- 6 Markov Chain Monte Carlo Methods

- 7 Model Selection and Trans-dimensional MCMC

- 8 Methods Based on Analytic Approximations

- 9 Software

- Appendix A Probability Distributions

- Appendix B Programming Notes

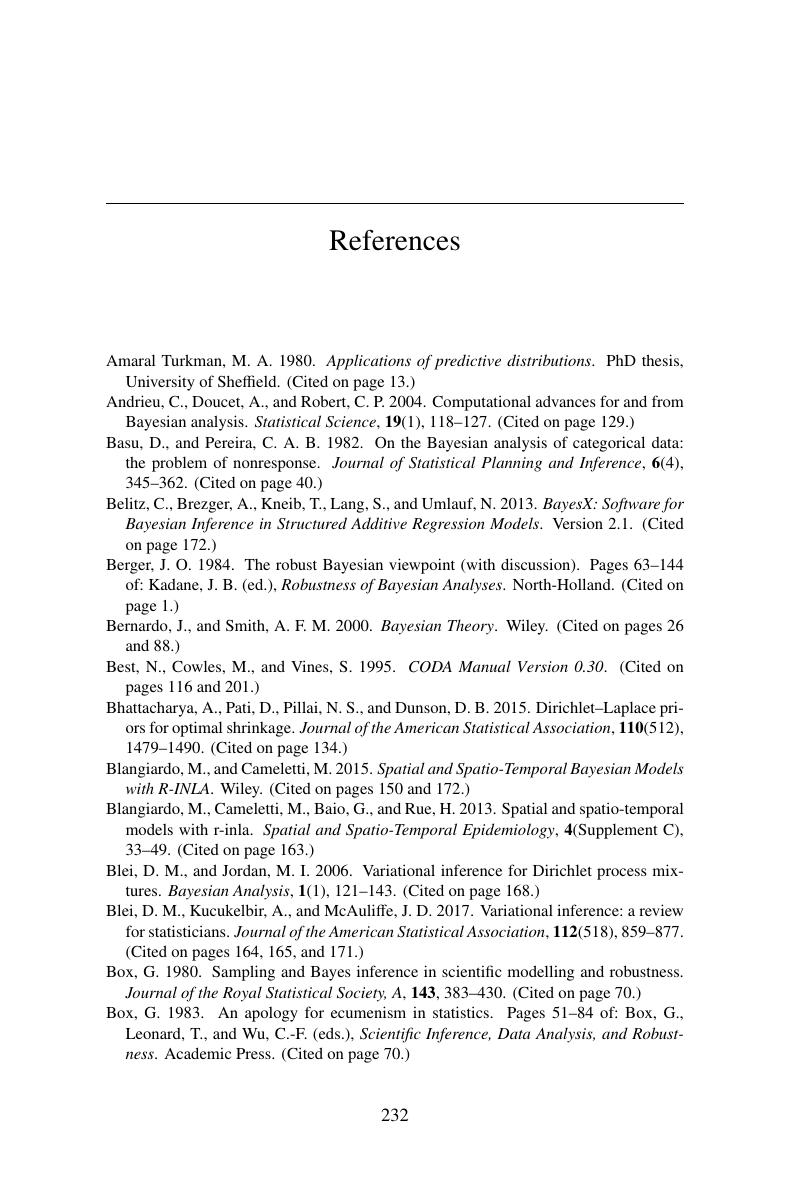

- References

- Index

References

Published online by Cambridge University Press: 18 February 2019

- Type: Chapter

- Information: Computational Bayesian Statistics: An Introduction, pp. 232-240

- Publisher: Cambridge University Press

- Print publication year: 2019