INTRODUCTION

A core concern in contemporary democratic politics is whether adherents to different political ideologies might have fundamentally incompatible moral outlooks. Moral foundations theory (MFT; Haidt 2012; Haidt and Graham 2007)—which aims to document and explain variation in the moral perspectives of different political and social groups—suggests that differences in moral intuitions may make reasoned political debate challenging. Viewing morality as residing in the intuitive reflexes that individuals give in response to moral stimuli, MFT suggests that there are five central “foundations” that inform people’s moral outlooks: care, fairness, loyalty, authority, and sanctity. While these foundations are considered the “irreducible basic elements” (Graham et al. 2013, 56) of human morality, MFT suggests that the moral weight assigned to each of these foundations by any given individual will be a function of experience, upbringing, and culture. As a consequence, MFT suggests that there will be predictable differences in the moral values of those who occupy different parts of the political spectrum.

Empirical research suggests that conservatives and liberals do, in fact, differentially endorse the five moral foundations, at least when asked to reflect explicitly on their own moralities. The main evidence on which such conclusions rest comes from public opinion surveys, most of which employ the Moral Foundations Questionnaire (MFQ; Graham et al. 2011) to measure moral attitudes. Although this literature has provided important insights into the relationship between political ideologies and moral endorsement, in this article, we argue that a number of methodological features—both of the MFQ and of the empirical literature more broadly—are likely to lead scholars to overstate differences in the moral intuitions of liberals and conservatives. We make three main arguments.

First, existing work typically relies on respondents’ explicit ratings of abstract and generalized moral principles, rather than trying to assess the intuitions that structure their moral evaluations. Consistent with the arguments of other scholars (e.g., Clifford et al. 2015), we argue that this approach to measuring moral priorities is in tension with a core theoretical assumption of MFT. Second, the surveys used to assess the political predictions of MFT are not well suited for drawing conclusions about the relative importance of each foundation for moral evaluation. Third, even when measurement approaches in this literature aim to measure intuitive moral attitudes, they tend to do so by prompting respondents to provide judgments on a very small number of specific moral violations. Taken together, we argue, these methodological features of existing approaches are likely to have led prior literature to overestimate differences in the moral intuitions of liberals and conservatives.

We address these issues by introducing a new experimental design and modeling strategy which aims to measure the relative importance of the five moral foundations to respondents of different political ideologies. In our design, we ask survey respondents from the United Kingdom and the United States to compare pairs of vignettes, each of which describes a single, specific violation that is relevant to one of the foundations. Rather than asking respondents to reflect explicitly on their own moral codes, we ask them to simply compare the vignettes and then indicate which they think constitutes the worse moral transgression. We analyze responses to these comparisons using hierarchical Bradley–Terry models and present three main findings.
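For readers less familiar with this model class, the sketch below fits a basic, pooled Bradley–Terry model, in which each item i has a positive strength s_i and the probability that i is judged the worse transgression when paired with j is s_i / (s_i + s_j). It is a minimal illustration under stated assumptions: the input is a list of (judged worse, other) pairs, "about the same" responses are dropped, every item wins and loses at least once, and estimation uses standard minorization-maximization (MM) updates. The hierarchical models used in this article add structure that this sketch does not attempt to reproduce, and the function names and toy data are hypothetical.

```python
from collections import defaultdict


def fit_bradley_terry(comparisons, n_iter=1000, tol=1e-10):
    """Estimate pooled Bradley-Terry strengths with MM updates.

    comparisons: iterable of (winner, loser) tuples, where the winner is the
    item judged more wrong. Assumes each item wins and loses at least once,
    so the maximum-likelihood estimate exists. Strengths are rescaled to
    average 1 after every update.
    """
    wins = defaultdict(float)
    n_pair = defaultdict(float)  # sorted (i, j) -> number of comparisons
    items = set()
    for winner, loser in comparisons:
        wins[winner] += 1.0
        n_pair[tuple(sorted((winner, loser)))] += 1.0
        items.update((winner, loser))
    items = sorted(items)
    strength = {i: 1.0 for i in items}
    for _ in range(n_iter):
        new = {}
        for i in items:
            # MM update: s_i <- W_i / sum_j n_ij / (s_i + s_j)
            denom = sum(n / (strength[a] + strength[b])
                        for (a, b), n in n_pair.items() if i in (a, b))
            new[i] = wins[i] / denom
        scale = len(items) / sum(new.values())
        new = {i: s * scale for i, s in new.items()}
        converged = max(abs(new[i] - strength[i]) for i in items) < tol
        strength = new
        if converged:
            break
    return strength


def prob_more_wrong(strength, i, j):
    """Model probability that item i is judged more wrong than item j."""
    return strength[i] / (strength[i] + strength[j])


# Toy data for hypothetical vignettes A, B, C: (judged worse, other).
data = ([("A", "B")] * 3 + [("B", "A")]
        + [("B", "C")] * 3 + [("C", "B")]
        + [("A", "C")] * 3 + [("C", "A")])
s = fit_bradley_terry(data)
print({k: round(v, 3) for k, v in s.items()})
```

Because each pair in the toy data is compared equally often, the fitted ordering follows the win counts; with real survey data, the pooled strengths would summarize perceived severity across all respondents.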

We first show that, across all respondents, estimates of foundation importance based on our measure of intuitive attitudes differ in important ways from existing work. Second, we show that extreme liberals and conservatives do appear to put different weights on the foundations when making moral judgments, and the differences are in the direction predicted by MFT, at least with respect to the care, authority, and loyalty foundations. However, our third (and most important) finding is that the ideology-by-foundation interactions that are the central focus of MFT’s political predictions explain very little of the variation in moral judgments across violations. In both the United Kingdom and the United States, when we compare the rankings of violation severity across all of the vignettes in our experiment for respondents with different ideologies, we find large, positive correlations, even between respondents from opposite extremes of the political spectrum. When we apply a similar experimental design to the MFQ items, we find much lower correlations, showing that ideological divisions that appear when respondents are asked to self-theorize about their morality are not present when they make intuitive moral judgments about concrete moral violations.
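The comparison of severity rankings across ideological groups can be illustrated with a rank correlation. The sketch below computes a Spearman correlation (the Pearson correlation of average ranks) between two vectors of severity scores. This passage does not state which correlation measure the authors use, so Spearman is an assumption chosen because it compares rankings directly, and the severity values are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1  # positions are 0-based
        for k in order[pos:end + 1]:
            ranks[k] = mean_rank
        pos = end + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical severity estimates for the same five vignettes,
# estimated separately for two ideological groups.
severity_left = [2.1, 1.4, 0.3, -0.5, -1.2]
severity_right = [1.6, 1.9, 0.1, -0.2, -1.5]
print(spearman(severity_left, severity_right))
```

In this toy example the two groups swap only the top two vignettes, so the rankings remain highly correlated even though the groups' scores are not identical.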

Our design provides a test of the core political predictions of MFT. While several papers criticize MFT on the basis of theoretical objections to the theory’s core concepts (Suhler and Churchland 2011), the ambiguity between the descriptive and prescriptive components of the theory (Jost 2012), the causal process assumed by MFT (Hatemi, Crabtree, and Smith 2019), or empirical inconsistencies with the theory’s key assumptions (Smith et al. 2017; Walter and Redlawsk 2023), we take the central ideas of MFT as a given, and ask whether political ideology is predictive of the comparisons individuals make between violations of the five foundations. Though previous work has questioned the degree to which the political findings from the MFQ generalize across different country contexts and respondent subgroups (e.g., Davis et al. 2016; Iurino and Saucier 2020; Nilsson and Erlandsson 2015), our argument focuses on the degree to which such findings generalize to a novel and theoretically motivated survey instrument. We believe that our design provides a sharper test than previous studies of the core political ideas of MFT, as our experiment is designed explicitly to solicit the types of fast, automatic, intuitive judgments that are central to the theory. By rooting our study in these types of moral judgments, we show that the political differences predicted by the theory have been overstated in the existing literature and that ideologically distant voters make strikingly similar intuitive moral decisions.

The basic descriptive claim that liberals and conservatives differ in terms of their moral intuitions is often used as an empirical touchstone for a wide range of studies that rely upon MFT as a theoretical approach. This claim features in work on, inter alia, political rhetoric (e.g., Clifford and Jerit 2013; Jung 2020), political persuasion (e.g., Kalla, Levine, and Broockman 2022), voting behavior (e.g., Franks and Scherr 2015), and public attitudes on foreign policy (e.g., Kertzer et al. 2014) and “culture war” issues (e.g., Koleva et al. 2012). Our results—which cast doubt on how robust the liberal-conservative morality gap is to different measurement strategies—are therefore likely to be salient for many scholars working on a broad range of questions in political behavior.

IDEOLOGICAL DIFFERENCES IN MORAL JUDGMENT

Moral Foundations Theory

How do people form moral judgments? MFT (Graham et al. 2013; Haidt 2012; Haidt and Joseph 2004, 2008) suggests that judgments on moral issues typically arise from fast and automatic processes which are rooted in people’s moral intuitions (Haidt 2001). From this perspective, when encountering a situation that requires moral evaluation, people come to decisions “quickly, effortlessly, and automatically” (Haidt 2001, 1029) without being consciously aware of the criteria they use to form moral conclusions. These intuitions are therefore considered to be the key causal factors in moral judgment, while conscious and deliberate moral reasoning—in which people search for and weigh evidence in order to infer appropriate conclusions—is thought to be employed only post hoc, as people search for arguments that support their intuitive conclusions.Footnote 1

Understanding the moral decisions that people make therefore requires understanding the sources of their moral intuitions. MFT adopts an evolutionary account of morality, in which the central “foundations” of people’s moral intuitions are thought to have evolved in response to a series of broad challenges: the need to protect the vulnerable (especially children); the need to form partnerships to benefit from cooperation; the need to form cohesive coalitions to compete with other groups; the need to form stable social hierarchies; and the need to avoid parasites, pathogens, and contaminants. In response to these challenges, humans are said to have evolved distinct cognitive modules that underpin the “moral matrices” of contemporary cultures. Each challenge is thought to be associated with a distinct moral foundation—care, fairness, loyalty, authority, and sanctity—which are the “irreducible basic elements” (Graham et al. 2013, 56) needed to explain and understand the moral domain. These foundations are typically further grouped into two broader categories, where care and fairness are thought of as individualizing foundations (because they link closely to the focus on the rights and welfare of individuals), and loyalty, authority, and sanctity are referred to as the binding foundations (because they emphasize virtues connected to binding individuals together into well-functioning groups).Footnote 2

An evolutionary origin of the foundations implies that they are innate and universally shared, at least in the sense that human minds are “organized in advance of experience” (Graham et al. 2013, 61) to be receptive to concerns that are relevant to these five criteria. However, proponents of MFT also argue that “innateness” does not preclude the possibility that individual or group moralities might be responsive to environmental influences. As Graham et al. (2013, 65) argue, “the foundations are not the finished buildings [but they] constrain the kinds of buildings that can be built most easily.” At the level of individuals, while the human mind might show some innate concern for all five foundations, environmental factors—like upbringing, education, and cultural traditions—will result in different people and social groups constructing moralities that weight the foundations differently.

MFT combines these arguments—that morality is pluralistic, constituted of moral concerns beyond care and justice, and expressed primarily through intuitive reactions which are shaped by experience—to provide an explanation for observable variation in expressed morality. On this basis, MFT has been used to explain moral similarities and differences across societies, changes in moral values over time, and—crucially—variation in expressed moral values across individuals from different parts of the political spectrum.

Moral Foundations and Political Ideology

One of the most prominent applications of MFT is as an explanation for moral differences between people with different political ideologies. The core political claim made by MFT’s proponents is that liberals and conservatives, in the United States and elsewhere, put systematically different weight on the different foundations (Graham, Haidt, and Nosek 2009; Haidt and Graham 2007). An extensive empirical literature, primarily based on survey evidence that connects respondents’ self-reported political ideology to questions designed to measure reliance on each of the foundations in moral decision-making, appears to offer support for this hypothesis.Footnote 3 As we discuss in greater detail below, the broad finding of these studies is that liberals (or those on the political left) prioritize the care and fairness foundations, while conservatives (or those on the political right) have moral systems that rely to a greater degree on the loyalty, authority, and sanctity foundations (Enke 2020; Enke, Rodríguez-Padilla, and Zimmermann 2022; Graham et al. 2011; Graham, Haidt, and Nosek 2009; Haidt and Graham 2007; Kertzer et al. 2014).

These results have been interpreted in stark terms. For instance, Graham, Haidt, and Nosek (2009, 1030) argue that MFT accounts for “substantial variation in the moral concerns of the political left and right, especially in the United States, and that it illuminates disagreements underlying many ‘culture war’ issues.” Similarly, Graham, Nosek, and Haidt (2012, 1) suggest that “liberal and conservative eyes seem to be tuned to different wavelengths of immorality.” Likewise, Haidt and Graham (2007, 99) suggest that “Conservatives have many moral concerns that liberals simply do not recognize as moral concerns.” Taken together, this literature conveys the impression that there is a fundamental incompatibility between the moral outlooks of liberals and conservatives.

MFT clearly envisages the relationship between morality and political ideology to be causal, with moral intuitions shaping political stances (Enke 2020; Enke, Rodríguez-Padilla, and Zimmermann 2022; Franks and Scherr 2015; Haidt 2012; Kertzer et al. 2014; Koleva et al. 2012). However, recent work has questioned this account by showing that changes in political ideology predict changing moral attitudes, rather than the reverse (Hatemi, Crabtree, and Smith 2019). Similarly, in contrast to the stable, dispositional traits required to be convincing determinants of political ideology, moral attitudes are subject to substantial individual-level variability over time (Smith et al. 2017). Survey experimental evidence also suggests that endorsement of the different moral foundations is sensitive to political and ideological framing effects (Ciuk 2018), and the morality gap between liberals and conservatives is sensitive to other attitudes of individuals, such as how closely aligned a person is with their social group (Talaifar and Swann 2019) and how politically sophisticated they are (Milesi 2016).

While these papers complicate the causal story told by MFT, they do not dispute the idea that, descriptively, liberals and conservatives endorse different sets of moral principles. As Hatemi, Crabtree, and Smith (2019, 804) suggest, “our findings provide reasons to reconsider MFT as a causal explanation of political ideology [but] do nothing to diminish the importance of the relationship between these two concepts.” More generally, throughout the empirical literature on MFT, disagreement focuses on how to interpret the correlation between moral and political attitudes, not on whether such a correlation exists or how large it is. Regardless of the overarching causal story, interpretations of the available descriptive evidence are relatively uniform: there are substantial differences in the moral outlooks of liberals and conservatives, both in the United States and elsewhere.

Measuring Foundation Importance

The strength of the descriptive association between moral and political attitudes is likely, however, to be related to the instruments used for measuring individuals’ reliance on the five moral foundations. Existing empirical research relies heavily on the MFQ (Graham et al. 2011; Graham, Haidt, and Nosek 2009), a survey instrument designed to assess individual-level endorsement of the moral foundations, which is composed of two question batteries. The first battery asks participants to rate how relevant various concerns are to them when making moral judgments.Footnote 4 The “moral relevance” items used in this battery are typically abstract and generalized statements such as “whether or not someone was harmed” (care), or “whether or not someone did something disgusting” (sanctity). The second battery aims to assess levels of agreement with more specific “moral judgments” by asking participants to rate (from strongly disagree to strongly agree) their agreement with statements such as “respect for authority is something all children need to learn” (authority) or “when the government makes laws, the number one principle should be ensuring that everyone is treated fairly” (fairness). These two batteries have been used extensively throughout existing research which measures political differences in moral endorsement (e.g., Enke 2020; Enke, Rodríguez-Padilla, and Zimmermann 2022; Franks and Scherr 2015; Graham et al. 2011; Graham, Haidt, and Nosek 2009; Haidt 2012; Kertzer et al. 2014; Koleva et al. 2012).

Despite the ubiquity of this survey instrument, the MFQ is subject to a number of shortcomings. First, MFT assumes that moral intuitions—fast, effortless reactions to moral stimuli—are central to moral decision-making. Crucially, the cognitive processes that lead to intuitive reactions are thought to be inaccessible to respondents. Moral intuitions are marked by the sudden appearance of moral conclusions in the mind, “without any conscious awareness” of having gone through the process of forming an opinion, or any recognition of the factors that lead to a particular conclusion being reached (Haidt 2001, 1029). However, while MFT assumes the primacy of intuition, the vast majority of the survey questions used to assess morality differences between liberals and conservatives solicit slow, self-reflective, “System 2”-style responses. The MFQ prompts respondents to self-assess their own motivations for their moral choices; motivations which are—by MFT’s own assumptions—inaccessible to them. The gap between theoretical assumptions and empirical methods in MFT research represents, for some, “a substantial impediment to testing and developing theories of morality” (Clifford et al. 2015, 1179).

Prompts asking respondents to explicitly endorse moral values may lead to a different distribution of responses than items that elicit more automatic moral judgments. In particular, respondents who are prompted to self-theorize about their own moralities may be more likely to engage in motivated moral reasoning (Ditto, Pizarro, and Tannenbaum 2009), stressing the moral principles that are most supportive of the positions they take on particular moral issues. This creates a pathway for spurious political differences to arise in MFQ responses as respondents give the responses that make sense of their politics. However, political differences in the ways in which people justify their moral choices are distinct from differences in the ways that people intuitively evaluate specific moral scenarios. This argument is shared by Ditto, Pizarro, and Tannenbaum (2009, 1041), who suggest that “studies using implicit measurement methods will be essential for understanding the ways in which liberals and conservatives make moral judgments.”

In addition, the items included in the MFQ typically describe abstract moral principles, which respondents may interpret differently. When asked to rate the moral relevance of “Whether or not someone showed a lack of respect for authority,” for instance, people might imagine very different scenarios, which may differ in moral importance. One respondent might imagine a situation in which a child is rude to a parent, while another might imagine a soldier refusing to follow the instructions of their commanding officer. In general, because the MFQ fails to prompt respondents to consider specific moral violations, it risks respondents imputing scenarios that they associate with general categories of moral wrong, and the scenarios that respondents impute might differ systematically across respondents with different ideologies.

Second, the survey prompts used in this literature do not easily facilitate comparisons of the relative importance of the different foundations to moral decision-making. In particular, the MFQ contains sets of items which respondents are asked to rate one at a time, against an abstract scale. In contrast, the pairwise comparison approach we adopt facilitates the intuitive responses that are most theoretically relevant to MFT, because it does not require respondents to compare each item against an abstract scale that must first be theorized and then mentally carried across items. By avoiding this cognitive burden, pairwise comparisons also facilitate consistent responses for an individual across prompts. Several authors have argued that future empirical work on MFT should consider adopting comparison-based, rather than rating-based, questions in order to encourage respondents to directly consider trade-offs between different foundations (Ciuk 2018; Jost 2012).
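A toy example can make the contrast between one-at-a-time ratings and pairwise comparisons concrete. The sketch assumes two hypothetical respondents who share the same underlying severity ordering but use a rating scale differently: each maps severity onto a six-point scale through a respondent-specific offset and stretch, which is an assumed linear model of scale use, and pairwise choices are assumed to be noiseless. The foundation labels and numbers are illustrative only.

```python
from itertools import combinations

# Hypothetical latent severities shared by both respondents (higher = worse).
LATENT = {"care": 1.2, "fairness": 0.7, "loyalty": -0.4, "authority": -0.9}


def internal_values(offset, stretch):
    """Respondent's unrounded evaluations under assumed linear scale use."""
    return {k: offset + stretch * v for k, v in LATENT.items()}


def ratings(offset, stretch, lo=1, hi=6):
    """One-at-a-time ratings: rounded and clipped to a 1-6 scale."""
    return {k: max(lo, min(hi, round(v)))
            for k, v in internal_values(offset, stretch).items()}


def pairwise_choices(offset, stretch):
    """Noiseless paired comparisons: the higher-valued item is 'more wrong'."""
    vals = internal_values(offset, stretch)
    return {(a, b): (a if vals[a] > vals[b] else b)
            for a, b in combinations(sorted(vals), 2)}


resp_a = dict(offset=3.5, stretch=1.0)  # uses the middle of the scale
resp_b = dict(offset=5.0, stretch=0.5)  # compresses answers toward the top
print(ratings(**resp_a))
print(ratings(**resp_b))
print(pairwise_choices(**resp_a) == pairwise_choices(**resp_b))
```

Here the ratings differ across respondents (loyalty is rated 3 by one and 5 by the other) and rounding collapses loyalty and authority into a tie, whereas the pairwise choices are identical, because any increasing transformation of the scale preserves the ordering that a comparison reveals.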

Third, even when researchers have used specific moral violations (rather than abstract moral principles) as the basis of inference for political differences in expressed morality, they have tended to rely on a small number of examples which are supposed to be representative of each foundation. For example, three prominent examples of sanctity violations—one involving incest, one involving eating a dead dog, and one involving having sex with a dead chicken—have been used extensively in a number of studies relating to moral judgment (Eskine, Kacinik, and Prinz 2011; Feinberg et al. 2012; Schnall et al. 2008; Wheatley and Haidt 2005). More generally, while researchers have used a variety of vignettes in past work on MFT, these vignettes do not “cover the full breadth of the moral domain” (Clifford et al. 2015, 1180) and may be “unrepresentative of the full spectrum of moral judgments that people make” (Frimer et al. 2013, 1053). Importantly, reliance on a small number of issues might lead to overestimates of political differences in moral judgment if the issues selected are marked by unusually large levels of partisan disagreement.

In addition, experimental designs in which researchers study the effects of latent concepts (such as the moral content of a given scenario) using a small number of specific implementations are subject to potential confounding concerns. The scenarios that researchers construct may differ from each other in multiple ways, not only in terms of the latent treatment concept they are intended to capture (Grimmer and Fong 2021). Differences in moral foundation endorsement may not result from differences in moral content, but rather from the fact that the researcher-generated MFT scenarios used to typify each foundation are confounded by differing levels of “weirdness and severity” (Gray and Keeney 2015, 859). Furthermore, studies that use single implementations of latent treatment concepts tend to have low levels of external validity, as the treatment effects of one implementation of a given latent treatment may differ in both sign and magnitude from the effects of another implementation of the same concept (Blumenau and Lauderdale 2024a; Hewitt and Tappin 2022).

Finally, most of the studies that document morality differences between liberals and conservatives are based on self-selected, convenience samples which are unlikely to be representative in terms of political ideology or other covariates (Graham et al. 2011; Graham, Haidt, and Nosek 2009). Although sample selection is only consequential for conclusions about the political dimension of moral endorsement when there are interaction effects between participation decisions, political orientations, and expressed morality, this is nevertheless an aspect of existing designs where there is room for improvement.

Together, these features of existing survey measurement approaches suggest that moral foundation-based differences between political liberals and conservatives may have been overstated in the previous literature on MFT. In the next section, we propose a new experimental design and modeling strategy that aims to elicit the types of intuitive responses that are central to MFT; emphasizes comparative evaluations of the different dimensions of morality; deploys a large number of specific, concrete examples of violations associated with each foundation; and constructs estimates for nationally representative samples in the United Kingdom and the United States.

EXPERIMENTAL DESIGN

In this section, we describe the design of two survey experiments. The first experiment asked respondents to make choices between pairs of moral foundation vignettes (MFV) which describe specific violations of particular moral foundations. This experiment was fielded by YouGov to samples of U.S. and U.K. respondents in February 2022. To sharpen the contrast between the results from this survey and those from the existing literature, we conducted a second pairwise-comparison experiment (also fielded by YouGov) in April 2023, in which a sample of U.S. respondents compared pairs of items from the MFQ itself.Footnote 5 While the treatment texts we use vary across these experiments, the experimental design and modeling strategy we use for analysis are common to both.

Moral Foundation Vignettes

The design of our first experiment is based around 74 short vignettes, each of which describes a behavior that violates one specific moral foundation. The vignettes we use are drawn from Clifford et al. (2015), who develop the texts with the goal of providing standardized stimulus sets which map directly to each foundation. Each vignette describes a situation “that could plausibly occur in everyday life” (Clifford et al. 2015, 1181) and the vignettes are written to minimize variability in both text length and reading difficulty. In order to maintain the distinction between moral and political intuitions, the violations avoid any “overtly political content and reference to particular social groups” (Clifford et al. 2015, 1181). Clifford et al. (2015) show that survey respondents associate these vignettes with the foundations to which they are intended to apply and that respondents’ perceptions of the moral wrongness of these vignettes correlate broadly with their answers to the MFQ.

We lightly edited these vignettes for use in our context. In particular, as we fielded them to respondents in both the United Kingdom and the United States, we changed the wording of some vignettes to make them consistent with the idioms and political contexts of each country. We also use two versions of each vignette: one in which the person committing the moral violation is a man, and one in which it is a woman.Footnote 6 Finally, we removed five of the Clifford et al. (2015) vignettes entirely from our sample because they did not translate into realistic scenarios in both countries.Footnote 7 The sample of violations is not balanced across the five foundations: we have 26 care violations, 12 fairness violations, 14 authority violations, 13 loyalty violations, and 9 sanctity violations. We present all vignettes in Table 1 in the Supplementary Material.
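The arithmetic implied by these counts is easy to check. The sketch below tallies the vignettes per foundation as reported in the text, counts the male and female versions, and counts how many distinct unordered pairs of vignettes exist, splitting them into within-foundation and cross-foundation pairs. Treating pairs at the vignette level (ignoring the gender versions) is an assumption made for illustration; the passage does not say how gender versions enter the pairing.

```python
from math import comb

# Vignette counts per foundation, as reported in the text.
COUNTS = {"care": 26, "fairness": 12, "authority": 14, "loyalty": 13, "sanctity": 9}
# MFT's grouping: care and fairness are "individualizing"; the rest are "binding".
INDIVIDUALIZING = {"care", "fairness"}

n_vignettes = sum(COUNTS.values())
n_texts = 2 * n_vignettes  # a male and a female version of each vignette
n_pairs = comb(n_vignettes, 2)  # unordered pairs of distinct vignettes
n_within = sum(comb(k, 2) for k in COUNTS.values())  # both from one foundation
n_across = n_pairs - n_within  # pairs that contrast two different foundations
n_individualizing = sum(k for f, k in COUNTS.items() if f in INDIVIDUALIZING)
n_binding = n_vignettes - n_individualizing

print(n_vignettes, n_texts, n_pairs, n_within, n_across)
print(n_individualizing, n_binding)
```

Under uniform random pairing, roughly three in four pairs would set violations of two different foundations against each other, which is the kind of trade-off the comparison design is meant to elicit.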

The key virtue of the treatments we include in our MFV experiment is that each vignette describes a specific action that constitutes a violation of a specific foundation. Accordingly, rather than asking respondents to reflect on the importance of each foundation to their own moral reasoning (as in the MFQ), we instead try to infer the degree to which respondents rely on the different foundations by examining their judgments of these scenarios. This approach is consistent with the idea that respondents’ theories of their own moralities may differ from the ways in which they actually make intuitive moral judgments, and it is the latter that are central to the political predictions of MFT.

An additional benefit of our approach is that we use a wide range of vignettes to operationalize violations of each of the five foundations. Using multiple violations relevant to each foundation reduces the risk that our inferences will be skewed by confounding factors present in any specific treatment implementation, a common problem in survey-experimental designs employing text-based treatments (Blumenau and Lauderdale 2024a; Grimmer and Fong 2021). Of particular concern is that any given implementation of a foundation-specific violation may be subject to especially pronounced political differences. For instance, if we used a single-implementation design and our example of a sanctity violation related to the issue of abortion, we might expect very large political differences that are not necessarily representative of typical differences in the moral intuitions of liberals and conservatives relating to sanctity concerns. By using a large number of violations of each foundation, we reduce the risk that the differences we estimate will be attributable to the idiosyncrasies of any particular treatment implementation.
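The value of many implementations can be illustrated with a small simulation. The sketch assumes a hypothetical model in which each vignette's liberal-conservative gap equals a foundation-level gap plus a vignette-specific deviation drawn from a normal distribution; the foundation-level gap is estimated by averaging over the vignettes used. All parameter values are invented, and respondent-level sampling noise is ignored, so the sketch isolates only the implementation-specific variation discussed above.

```python
import random
from statistics import mean, pvariance


def simulate_gap_estimates(n_vignettes, n_sims=2000, true_gap=0.2,
                           vignette_sd=0.5, seed=1):
    """Simulate estimates of a foundation-level ideological gap.

    Hypothetical model: each vignette's gap is true_gap plus a
    vignette-specific deviation ~ Normal(0, vignette_sd). Each estimate is
    the mean gap over n_vignettes randomly drawn vignettes.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_sims):
        gaps = [true_gap + rng.gauss(0.0, vignette_sd) for _ in range(n_vignettes)]
        estimates.append(mean(gaps))
    return estimates


def share_exaggerated(estimates, true_gap=0.2, factor=3.0):
    """Share of estimates at least `factor` times the true gap."""
    return sum(e >= factor * true_gap for e in estimates) / len(estimates)


single = simulate_gap_estimates(n_vignettes=1)  # one vignette per foundation
many = simulate_gap_estimates(n_vignettes=13)   # e.g., as many as the loyalty set
print(pvariance(single), pvariance(many))
print(share_exaggerated(single), share_exaggerated(many))
```

Under these assumptions, the spread of the estimate shrinks roughly in proportion to the number of vignettes averaged, and a single unusual vignette is far more likely to produce a gap several times larger than the foundation-level one.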

Finally, it is important to note that the set of violations we use is not in any sense a representative sample from a well-defined population of violations of each type. In fact, it is not clear that it would be possible even to characterize such a population. However, the large number of violations (both overall and of each foundation) and the variation in the substance of each of the scenarios is consistent with calls to use “a broader set of cases to explore judgments of right and wrong” (Clifford et al. 2015, 1179) in the process of evaluating MFT. We discuss the differences and similarities between the results in Clifford et al. (2015) and the results from our experiment in detail below.

Moral Foundations Questionnaire

The design of our second experiment is based on the 30-item version of the MFQ (Graham et al. Reference Graham, Nosek, Haidt, Iyer, Koleva and Ditto2011) which has been used extensively in the empirical literature on MFT. The items in the MFQ are of two types. First, there are 15 “moral relevance” items that seek respondents’ views on a set of abstract statements about the relevance of particular moral values. Second, there are 15 “moral judgment” items which constitute a mixture of normative declarations, virtue endorsements, and opinions about the principles of government policy (Graham et al. Reference Graham, Nosek, Haidt, Iyer, Koleva and Ditto2011, 371), with which respondents are asked to indicate how strongly they agree or disagree. We list all the MFQ items in Appendix A2 of the Supplementary Material.

The core advantage of using the MFQ items in this second experiment is that it allows us to compare directly how respondents judge concrete moral violations with how they judge more abstract moral statements (which form the basis of the existing empirical literature), while holding constant both the paired-comparison survey design and the modeling approach.

Randomization, Prompts, and Sample

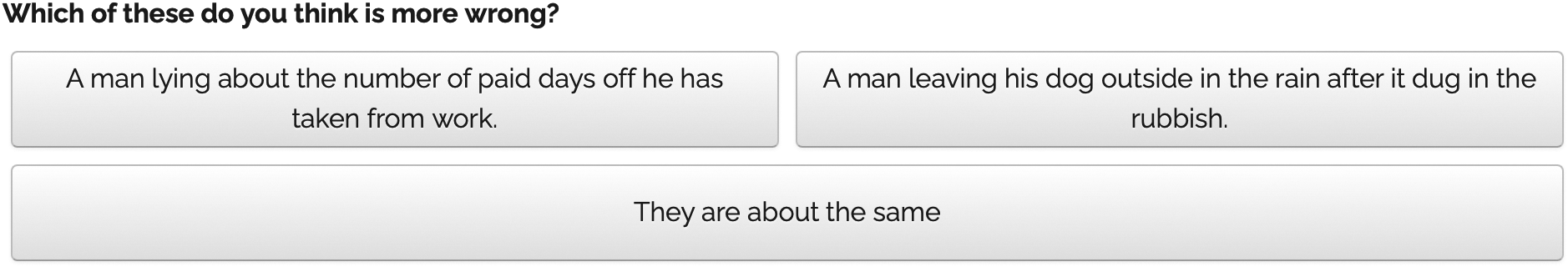

In both experiments, we present randomly selected pairs of items—either from the MFV or the MFQ—to survey respondents and ask them to select between them. For the first experiment, we ask respondents to select which of a given pair of violations is “more wrong.”Footnote 8 In the example given in Figure 1, the respondent sees one vignette about a man lying about the number of days he has taken off from work (a violation of the “fairness” foundation), and one vignette about a man who punished his dog for bad behavior by leaving it out in the rain (a violation of the “care” foundation). Respondents could click on whichever of the two vignettes they thought was worse or, alternatively, select “They are about the same.”

Figure 1. Experimental Prompt, Moral Foundation Vignette (MFV) Items

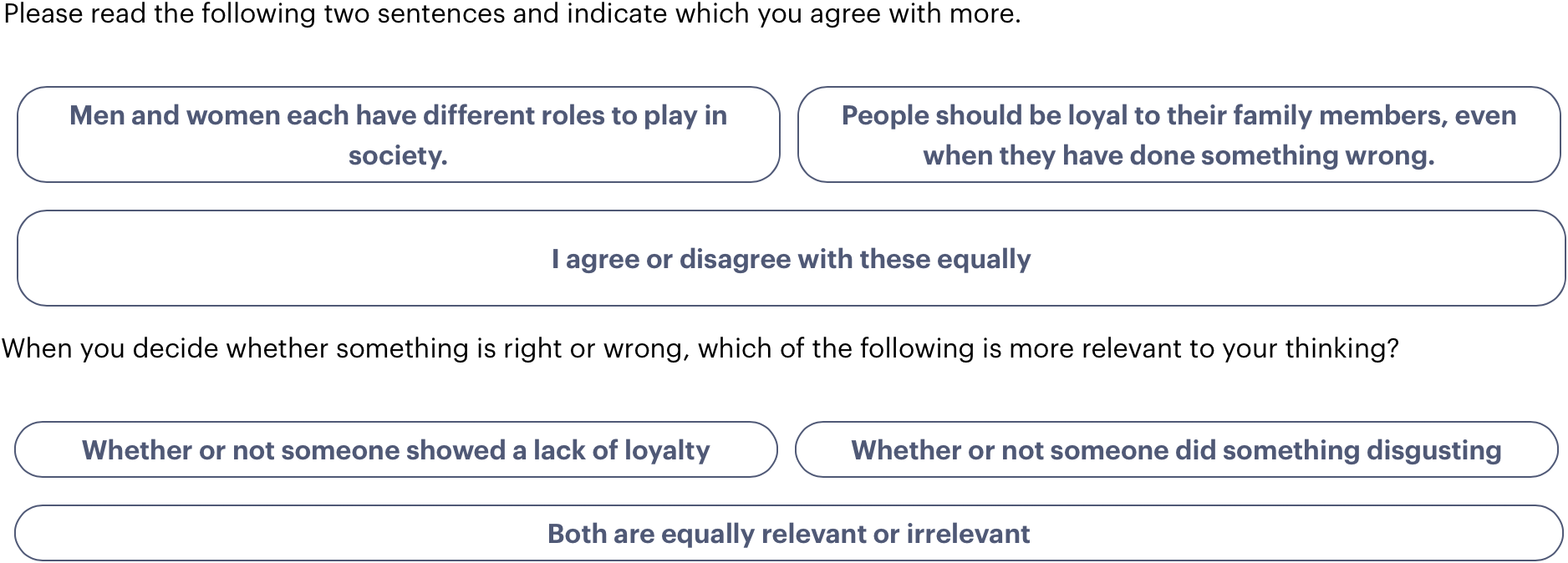

In the second experiment, half of the respondents were presented with randomly selected pairs of items from the “judgment” item battery and asked to indicate which item they agreed with more, and the other half of the respondents were presented with pairs of items from the “relevance” item battery and were asked to indicate which of the items was “more relevant to your moral thinking.” In both cases, the respondents could also select an intermediate option that favored neither treatment text. Examples of both forms of prompt are given in Figure 2.

Figure 2. Experimental Prompts, Moral Foundation Questionnaire (MFQ) Items

For each comparison, we first sampled two foundations and then, conditional on the foundation drawn, we sampled two of the vignettes/items, without replacement, from the full set of 74 MFV violations or, in the MFQ experiment, from the 15 items relevant to a respondent’s treatment arm (i.e., relevance items or judgment items). This sampling strategy means that we have equal numbers of observations of each foundation in expectation but unequal numbers of observations for each violation (because we have more violations for some foundations than for others). For each of the MFV vignettes, we also randomly sampled whether the person committing the relevant violation was a man or a woman. In both experiments, each respondent answered six pairwise comparisons. For experiment one, we collected data from 1,598 respondents in the United Kingdom and 2,375 respondents in the United States, giving us a total of 9,472 and 14,063 observations from the United Kingdom and the United States, respectively.Footnote 9 In experiment two, we collected data from 2,321 respondents in the United States, giving us a total of 13,926 observations.
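The sampling scheme described above can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not the authors' code: the per-foundation vignette counts are hypothetical (only the total of 74 is given here), the two foundations are assumed to be drawn independently and uniformly (so both draws can land on the same foundation), and the two vignettes in a pair are assumed to be distinct. It shows why each foundation appears equally often in expectation while individual vignettes from smaller foundations appear more often.

```python
import random
from collections import Counter

# Hypothetical split of the 74 MFV vignettes across the five foundations;
# the actual per-foundation counts are not reported in this section.
VIGNETTES = {
    "care": [f"care_{i}" for i in range(20)],
    "fairness": [f"fairness_{i}" for i in range(14)],
    "loyalty": [f"loyalty_{i}" for i in range(14)],
    "authority": [f"authority_{i}" for i in range(14)],
    "sanctity": [f"sanctity_{i}" for i in range(12)],
}
FOUNDATIONS = list(VIGNETTES)


def sample_pair(rng: random.Random) -> tuple[str, str]:
    """Draw two foundations, then one vignette per draw, never repeating a vignette."""
    f1, f2 = rng.choice(FOUNDATIONS), rng.choice(FOUNDATIONS)
    if f1 == f2:
        # Same foundation drawn twice: pick two distinct vignettes from it.
        a, b = rng.sample(VIGNETTES[f1], 2)
    else:
        a, b = rng.choice(VIGNETTES[f1]), rng.choice(VIGNETTES[f2])
    return a, b


def simulate(n_pairs: int, seed: int = 0) -> tuple[Counter, Counter]:
    """Count how often each foundation and each vignette is shown."""
    rng = random.Random(seed)
    by_foundation, by_item = Counter(), Counter()
    for _ in range(n_pairs):
        for item in sample_pair(rng):
            by_item[item] += 1
            by_foundation[item.rsplit("_", 1)[0]] += 1
    return by_foundation, by_item


by_foundation, by_item = simulate(100_000)
print(by_foundation)  # each foundation's expected count is 2 * 100_000 / 5 = 40_000
print(by_item["care_0"], by_item["sanctity_0"])  # expected values 2_000 and about 3_333
```

With this hypothetical split, a care vignette (one of 20) is expected to appear about 2,000 times in 100,000 comparisons, whereas a sanctity vignette (one of 12) is expected to appear about 3,333 times, even though both foundations fill the same share of slots.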

MEASURING MORAL INTUITIONS

Model Definition

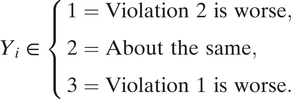

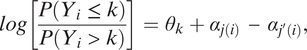

Each of our experiments results in an ordered response variable with three categories. We present the models below with reference to our first (MFV) experiment; we use an identical model to investigate the response distributions of our second (MFQ) experiment. For experiment one, we have

$$ Y_i \in \begin{cases} 1 = \text{Violation 2 is worse}, \\ 2 = \text{About the same}, \\ 3 = \text{Violation 1 is worse}. \end{cases} $$

To model this outcome, we adopt a variation on the Bradley–Terry model for paired comparisons (Bradley and Terry Reference Bradley and Terry1952; Rao and Kupper Reference Rao and Kupper1967) where we model the log-odds that violation j is worse than violation j′ in a pairwise comparison:

$$ \log\left[\frac{P(Y_i \le k)}{P(Y_i > k)}\right] = \theta_k + \alpha_{j(i)} - \alpha_{j'(i)}, $$

where $\theta_k$ is the cutpoint for response category k. We can interpret $\alpha_j$ as the “severity” of violation j (or, equivalently, as the “relevance” or “importance” of item j in the MFQ experiment). This parameter increases with the frequency with which respondents choose violation j as the “worse” moral violation in paired comparisons with other violations.
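With three ordered categories there are two cutpoints, $\theta_1 < \theta_2$. The sketch below is a minimal illustration of how the cumulative-logit form of Equation 2 turns cutpoints and a severity difference into category probabilities; it is not the authors' Stan code, and the function and argument names are hypothetical. Following Equation 2 as printed, increasing $\alpha_{j(i)} - \alpha_{j'(i)}$ raises $P(Y_i \le k)$ and so shifts probability toward category 1.

```python
import math


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def category_probs(theta: tuple[float, float], alpha_j: float, alpha_jprime: float) -> list[float]:
    """Return [P(Y=1), P(Y=2), P(Y=3)] with P(Y <= k) = logistic(theta_k + alpha_j - alpha_jprime)."""
    t1, t2 = theta
    if not t1 < t2:
        raise ValueError("cutpoints must be strictly increasing")
    eta = alpha_j - alpha_jprime
    cum1 = logistic(t1 + eta)  # P(Y <= 1)
    cum2 = logistic(t2 + eta)  # P(Y <= 2)
    return [cum1, cum2 - cum1, 1.0 - cum2]


# Equal severities with symmetric cutpoints give equal probabilities to the
# two outer categories; the middle category ("About the same") takes the rest.
print(category_probs((-1.0, 1.0), 0.0, 0.0))
```

Because the cutpoints are shared across all pairs, the width of the gap $\theta_2 - \theta_1$ governs how often respondents choose the intermediate option, while the $\alpha$ parameters only shift the distribution.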

In this section, our primary goal is to understand how the severity of these violations varies according to which of the five moral foundations they violate. That is, we are not primarily interested in the relative severity of the 74 individual moral scenarios, but rather in how the distribution of severity differs for violations of different types. We have a moderate number of observations for each of the violations that we include in our experiment: on average, each vignette appears in 256 comparisons in the U.K. data and 380 comparisons in the U.S. data. As a consequence, our design is only likely to be well powered to detect reasonably large differences in average moral evaluations of the individual violations. However, we have far more information about the average moral evaluations of the different foundations and about the levels of variation across vignettes, which are our main targets of interest. We therefore use a hierarchical approach to estimating the average and distribution of effects of each of the five foundations by specifying a second-level model for the $\alpha_j$ parameters.
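Because each paired comparison shows two vignettes, the per-vignette averages quoted above follow directly from the observation totals reported for experiment one, spread across the 74 vignettes:

$$ \frac{2 \times 9{,}472}{74} = 256 \quad \text{(U.K.)}, \qquad \frac{2 \times 14{,}063}{74} \approx 380.1 \quad \text{(U.S.)}. $$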

Where f(j) is the moral foundation violated in vignette j, we model the severity of each violation as the sum of a foundation effect, $\mu_{f(j)}$, plus a violation-specific random effect, $\nu_j$:

$$ \alpha_j = \mu_{f(j)} + \nu_j. $$

The model described by Equation 3 implies that the severity of a given violation equals the average severity of violations of a given foundation type plus a deviation that is attributable to that specific violation. One advantage of our modeling approach is that it allows us to quantify the distribution of violation severity for each foundation.

The model is completed by prior distributions for the $\nu_j$ and $\mu_{f(j)}$ parameters, which we assume are drawn from normal distributions with mean 0 and standard deviations $\sigma_\nu$ and $\sigma_\mu$, respectively. We estimate a single scale parameter for the distribution of violation effects, regardless of the foundation to which they apply. We estimate the model using Hamiltonian Monte Carlo as implemented in Stan (Carpenter et al. Reference Carpenter, Gelman, Hoffman, Lee, Goodrich, Betancourt and Brubaker2017). The results presented below are based on four parallel chains, each run for one thousand iterations after five hundred warm-up iterations.
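The generative structure of the second-level model and its priors can be sketched as a forward simulation in NumPy. This is an illustration only, not the Stan program used for estimation; the function name, the per-foundation vignette counts, and the values of $\sigma_\mu$ and $\sigma_\nu$ are hypothetical. It shows how a single shared $\sigma_\nu$ produces a spread of violation severities around each foundation mean.

```python
import numpy as np


def simulate_severity(foundation_of, n_foundations, sigma_mu, sigma_nu, seed=0):
    """Forward-simulate alpha_j = mu_{f(j)} + nu_j.

    foundation_of[j] is the 0-based foundation index f(j) of violation j.
    mu_f ~ Normal(0, sigma_mu) and nu_j ~ Normal(0, sigma_nu), with one
    sigma_nu shared by all violations regardless of foundation.
    """
    rng = np.random.default_rng(seed)
    foundation_of = np.asarray(foundation_of)
    mu = rng.normal(0.0, sigma_mu, size=n_foundations)
    nu = rng.normal(0.0, sigma_nu, size=foundation_of.shape[0])
    alpha = mu[foundation_of] + nu
    return mu, nu, alpha


# Hypothetical split of 74 violations over 5 foundations.
foundation_of = np.repeat(np.arange(5), [20, 14, 14, 14, 12])
mu, nu, alpha = simulate_severity(foundation_of, 5, sigma_mu=1.0, sigma_nu=0.8)

# Foundation mean and range of simulated severities within each foundation.
for f in range(5):
    vals = alpha[foundation_of == f]
    print(f, round(mu[f], 2), round(vals.min(), 2), round(vals.max(), 2))
```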

Finally, as we argued above, existing survey evidence in support of MFT is largely based on convenience samples that are unlikely to be representative. We maximize the representativeness of our estimates from both experiments by incorporating demographic survey weights, provided by YouGov, via a quasi-likelihood approach. The estimates are substantively identical to estimates produced without using the weights.
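This section does not spell out how the weights enter the likelihood. One common quasi- (or pseudo-) likelihood approach multiplies each observation's log-likelihood contribution by its survey weight; the sketch below assumes that form for the three-category model in Equation 2. The function name and inputs are hypothetical, and in practice this would be written inside the Stan program rather than in Python.

```python
import math


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def weighted_loglik(y, eta, theta, weights):
    """Survey-weighted log-likelihood for a three-category cumulative logit.

    y[i] in {1, 2, 3}; eta[i] = alpha_{j(i)} - alpha_{j'(i)};
    theta = (theta_1, theta_2) with theta_1 < theta_2.
    Each observation's log-likelihood is multiplied by weights[i].
    """
    if not (len(y) == len(eta) == len(weights)):
        raise ValueError("y, eta, and weights must have the same length")
    t1, t2 = theta
    total = 0.0
    for yi, ei, wi in zip(y, eta, weights):
        c1 = logistic(t1 + ei)  # P(Y <= 1)
        c2 = logistic(t2 + ei)  # P(Y <= 2)
        prob = (c1, c2 - c1, 1.0 - c2)[yi - 1]
        total += wi * math.log(prob)
    return total


# With unit weights this reduces to the ordinary log-likelihood.
print(weighted_loglik([1, 2, 3], [0.3, -0.2, 0.0], (-1.0, 1.0), [1.0, 1.0, 1.0]))
```

Under this formulation, a respondent with weight 2 counts as much as two unweighted respondents with the same answers, which is how the weights pull the estimates toward the population margins.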

Results

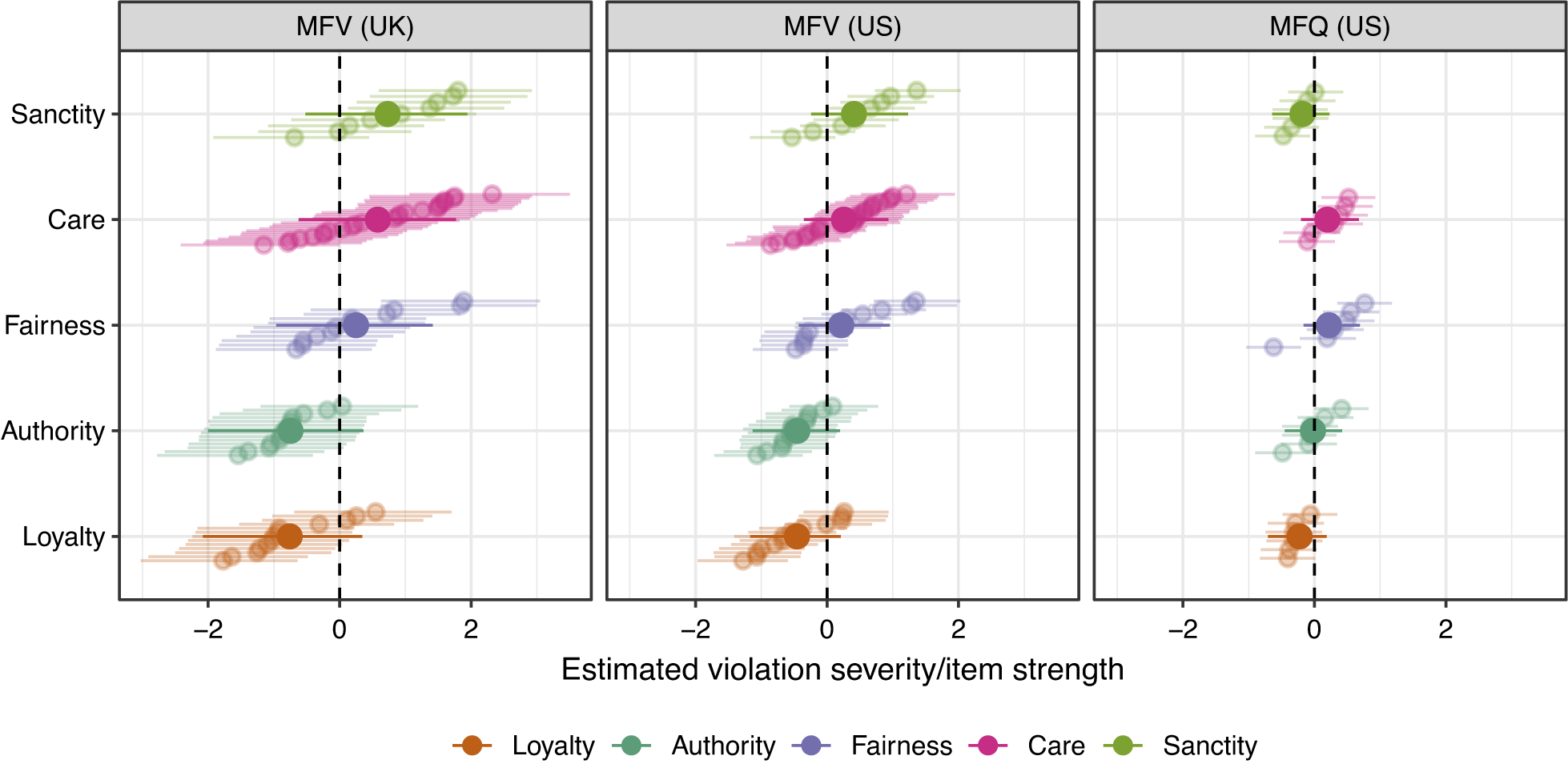

We present the main results from this baseline model in Figure 3. The figure shows the estimated average severity of violations of each of the five moral foundations ($\mu_f$) from experiment one in both the United Kingdom (left panel) and the United States (center panel), as well as the average relevance of the five moral foundations from experiment two (right panel). It also depicts the estimated severity/relevance of each of the 74 individual MFV violations and 30 MFQ items ($\mu_{f(j)} + \nu_j$) that we include in the two experiments (transparent points and intervals). Numerical estimates are provided in Appendix A7 of the Supplementary Material (see Blumenau and Lauderdale Reference Blumenau and Lauderdale2024b for the replication materials).

Figure 3. Estimates of $\mu_{f(j)}$ and $\alpha_j$ from Equations 2 and 3

Note: Left and center panels give estimates from the U.K. and U.S. versions of the moral foundation vignette experiment, respectively. The right panel gives estimates from the Moral Foundations Questionnaire version of the experiment.

The figure reveals two main findings. First, we recover systematic differences in the average severity of the tested violations across the different foundations, and the ordering of the importance of the foundations differs between the MFV and MFQ versions of the experiment. In particular, the figure clearly demonstrates that the violations of the care, fairness, and sanctity foundations that we tested are, on average, considered to be morally worse by our respondents—in both the United Kingdom and the United States—than violations of either the authority or loyalty foundations. By contrast, the MFQ experiment suggests that care and fairness are more relevant to respondents’ moral thinking than all three of the other foundations, including sanctity.

This ordering of the moral wrongness of violations of different types is consistent with the findings of Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015, 1188) but contrasts with the generally accepted ordering of moral foundation importance in the literature. For instance, Graham, Haidt, and Nosek (Reference Graham, Haidt and Nosek2009, 1032) find that, averaging over individuals of different political positions, the moral relevance of the “individualizing” foundations of care and fairness is significantly higher than that of the “binding” foundations of loyalty and authority, and our results agree on this point. However, our finding that respondents also rate violations of the sanctity foundation, on average, as worse than either loyalty or authority violations, and roughly as bad as care and fairness violations, contrasts with findings presented in Graham, Haidt, and Nosek (Reference Graham, Haidt and Nosek2009) and Haidt (Reference Haidt2012), where, even among the most conservative respondents, sanctity considerations are considered less important to moral decision-making than either harm or fairness concerns.

One interpretation of this difference is that it reflects the different design choices in our two experiments (and the differences between our violations experiment and the existing literature). When survey respondents are asked to reflect in the abstract on the considerations that matter most in their moral decisions, they tend to rate sanctity concerns as less important than other moral criteria. But when respondents are asked to compare concrete examples of human action, scenarios describing degrading (but harmless) situations are often selected as the worst violations of acceptable moral behavior.

An alternative interpretation is that the sanctity violations included in Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) are relatively more severe, within the set of all possible sanctity violations, than the violations we presented for the other foundations are within their respective sets. A parallel concern exists for the MFQ as well, as it is not at all clear what severity of violation respondents are imagining when asked to self-assess how responsive they are to a type of violation in the abstract, or indeed how sensitive the measures are to the wording of the abstract violation items. As noted above, and as we discuss further below, it is difficult to define a population of violations, concerns, or principles in a way that would allow one to ensure representativeness and thus make reliable claims about which foundations are more important overall.

This observation leads to our second main finding from this initial model: we observe significant heterogeneity in violation severity both across and within foundation types. First, the overall range of the MFV violation severity estimates dwarfs that of the MFQ item strength estimates, implying that respondents are significantly more decisive when judging which violations are worse than when judging which MFQ items are more relevant to their moral thinking.Footnote 10 Second, in the MFV experiment, there is a large degree of overlap in the severity of violations of different types. Only a limited fraction of the variation in respondents’ evaluations of severity can be explained by the foundations to which the violations apply. The foundation effects ($\mu_{f(j)}$) explain 38% of the variation in violation severity ($\alpha_j$) for U.K. respondents and 31% of the variation for U.S. respondents. The same is true for experiment two, where the foundation effects explain 30% of the variation in MFQ item relevance.Footnote 11 The foundation with which each MFV vignette or MFQ item is associated is predictive of respondents’ judgments, but roughly two-thirds of the variation across vignettes and items remains unexplained by foundation.
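The variance shares reported above can be illustrated as a simple ratio: the variance of the foundation component $\mu_{f(j)}$ across violations divided by the variance of $\alpha_j$. The precise definition behind these figures is given in Footnote 11, which is not reproduced here, so the sketch below is one plausible formulation rather than the authors' calculation; applied to each posterior draw, it would yield a posterior distribution for the share. The function name and toy inputs are hypothetical.

```python
import numpy as np


def foundation_share(mu, alpha, foundation_of):
    """Variance of mu_{f(j)} across violations divided by the variance of alpha_j."""
    mu = np.asarray(mu, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    component = mu[np.asarray(foundation_of)]
    return float(np.var(component) / np.var(alpha))


# Toy example: two foundations with two violations each (hypothetical values).
foundation_of = [0, 0, 1, 1]
mu = [1.0, -1.0]
nu = [0.5, -0.5, 0.5, -0.5]
alpha = np.asarray(mu)[foundation_of] + np.asarray(nu)
print(foundation_share(mu, alpha, foundation_of))  # 0.8
```

Here the violation-specific deviations average to zero within each foundation, so the foundation component accounts for 1.0 / 1.25 = 80% of the variance; larger deviations relative to the foundation means drive the share down toward the 30-38% range reported in the text.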

In Appendix A3 of the Supplementary Material, we present the violations considered most and least severe for each foundation, in each country. The violations that feature in the U.S. and U.K. lists in the tables are very similar, suggesting a high degree of correlation in moral evaluations across the two countries in our sample.

MEASURING MORAL INTUITIONS BY IDEOLOGY

Model Definition

The model above allows us to measure the extent to which the different moral foundations predict respondents’ judgments between pairs of moral violations or pairs of abstract moral statements. However, the central political claim made by MFT is that the relevance of each of the foundations to moral decisions will depend on the ideological position of a given individual, and this model does not yet allow us to describe how these foundation-level effects vary by respondent ideology.

We therefore modify the model in Equations 2 and 3 to allow the violation-level parameters, $\alpha_j$, to vary according to the self-reported ideological position of the respondent. As before, we describe this model in relation to the MFV experiment but implement an identical model for the MFQ experiment.

We follow Graham, Haidt, and Nosek (Reference Graham, Haidt and Nosek2009) and ask all respondents to place themselves on a seven-point ideological scale before they complete the violation comparison task.Footnote 12 We include this variable in a model of the following form:

$$ \log\left[\frac{P(Y_i \le k)}{P(Y_i > k)}\right] = \theta_k + \alpha_{j(i),p(i)} - \alpha_{j'(i),p(i)}, $$

where $\alpha_{j,p}$ is the severity of violation j for ideology group p. We then model these parameters with an adapted second-level model in which we allow the foundation effects, $\mu_{f(j)}$, to also vary by respondent ideology:

$$ \alpha_{j,p} = \mu_{f(j)} + \gamma_{f(j),p} + \nu_j. $$

In this specification, $\gamma_{f,p}$ is a matrix of coefficients which describe how the main effects of foundation severity ($\mu_f$) vary as a function of the ideology of the respondent making the comparison between violations. That is, $\gamma_{f,p}$ collects the set of foundation-by-ideology interaction effects that are central to the political claims made by MFT. To identify the model, we set one foundation, fairness, as the baseline category ($\mu_{\text{fairness}}$ is constrained to be zero). As for the model in the previous section, we assume a normal prior for $\nu_j$, with mean zero and standard deviation $\sigma_\nu$. We assume improper uniform priors for $\mu_f$.

For the interaction effects for each foundation, we use a first-order random-walk prior, such that the effect for a given ideology group on a given foundation is drawn from a distribution with mean equal to the effect for the adjacent ideological group and standard deviation $\sigma_\gamma$:

$$ \gamma_{f,p} \sim \mathcal{N}\left(\gamma_{f,p-1}, \sigma_\gamma^2\right). $$

We estimate all the ideology-level interaction effects, $\gamma_{f,p}$, relative to the “moderate” group of respondents, meaning that $\gamma_{f,4} = 0$ for all foundations. The random-walk prior encourages smooth coefficient changes between adjacent ideological groups unless the data provide sufficiently strong evidence otherwise.
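Because a first-order random-walk prior only constrains differences between adjacent groups, a prior draw pinned at the moderate group can be simulated by stepping outward from that baseline in both directions. The sketch below does this and then forms the foundation-by-ideology effects $\mu_f + \gamma_{f,p}$ plotted in Figure 4. It is an illustration, not the authors' code: the function name, the value of $\sigma_\gamma$, the $\mu_f$ values, and the assumption that higher scale points are more conservative are all hypothetical.

```python
import numpy as np

FOUNDATIONS = ["care", "fairness", "loyalty", "authority", "sanctity"]
MODERATE = 3  # 0-based column for group 4 on the seven-point scale


def draw_gamma(sigma_gamma, n_foundations=5, n_groups=7, baseline=MODERATE, seed=0):
    """Draw gamma[f, p] from a first-order random walk pinned to zero at the baseline group."""
    rng = np.random.default_rng(seed)
    gamma = np.zeros((n_foundations, n_groups))
    # Step outward to the right of the baseline (assumed more conservative groups).
    for p in range(baseline + 1, n_groups):
        gamma[:, p] = gamma[:, p - 1] + rng.normal(0.0, sigma_gamma, n_foundations)
    # Step outward to the left of the baseline (assumed more liberal groups).
    for p in range(baseline - 1, -1, -1):
        gamma[:, p] = gamma[:, p + 1] + rng.normal(0.0, sigma_gamma, n_foundations)
    return gamma


# Hypothetical foundation main effects, with fairness fixed at zero for identification.
mu = np.array([0.8, 0.0, -0.6, -0.5, 0.7])
effects = mu[:, None] + draw_gamma(sigma_gamma=0.15)  # mu_f + gamma_{f,p}, shape (5, 7)
```

A small $\sigma_\gamma$ keeps adjacent groups close together a priori, which is the smoothing behavior described in the text; strong evidence in the data can still pull particular groups away from their neighbors.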

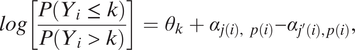

Results

We present the estimates of the foundation-level effects for each level of ideology ($\mu_f + \gamma_{f,p}$) from this model in Figure 4.Footnote 13 The figure reveals important differences between our two experiments in relation to the association between ideology and moral judgment. The results from the MFQ experiment in the bottom row broadly replicate classic findings from the literature on moral foundations. When comparing abstract moral ideals, liberals care primarily about the fairness and care foundations, while conservatives appear to have a “broader moral matrix” (Haidt Reference Haidt2012, 357), placing similar weight on all five foundations. The figure also reveals steep ideological gradients for all five foundations in the MFQ experiment, with concern for authority, loyalty, and sanctity all sharply increasing when moving from liberal to conservative respondents, and concern for fairness and care decreasing among right-wing respondents.

Figure 4. Estimates of $\mu_f + \gamma_{f,p}$ from Equation 5

Note: Top and Middle rows: MFV experiment, U.K. and U.S. respondents. Bottom row: MFQ experiment, U.S. respondents only.

By contrast, the top and middle rows of the figure demonstrate that the ideological associations are much weaker when estimated on the basis of responses to the moral violation vignettes. Compared to the estimates based on the MFQ, the ideological associations are noticeably shallower across the sanctity, care, fairness, and authority foundations, while the relationship between ideology and loyalty is broadly similar in the two experiments. For two of the foundations—fairness and sanctity—we find no relationship at all between ideology and moral judgments. Although there is some indication that the most extreme liberal respondents in the United States put greater weight on fairness violations than other respondents, there is no difference between the other six ideological categories on this dimension, and ideology does not affect perceptions of fairness violations in the United Kingdom either. Similarly, with respect to sanctity, although thought to be a key dimension on which liberals and conservatives differ (Graham, Haidt, and Nosek Reference Graham, Haidt and Nosek2009; Haidt and Graham Reference Haidt and Graham2007), we find that no group places significantly more weight than any other group on violations of this type when making moral judgments.

One potential caveat relating to our null result on the fairness foundation is that the fairness vignettes developed in Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) are arguably more closely linked to the notion of fairness as “proportionality,” the desire to see people rewarded or punished in proportion to the moral quality of their deeds, than to fairness as “equality,” a form of social reciprocity marked by equal treatment, equal opportunity, and equal shares (Clifford et al. Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015, 1193). The original conceptualization of the fairness foundation combined elements of both proportionality and equality, but later revisions have suggested that this foundation should be primarily about proportionality (Haidt Reference Haidt2012, 209). So conceptualized, the prediction of MFT is that “[e]veryone—left, right, and center—cares about proportionality…But conservatives care more, and they rely on the Fairness foundation more heavily—once fairness is restricted to proportionality” (Haidt Reference Haidt2012, 213). This prediction contrasts with earlier work in MFT which suggested that the fairness foundation would be more strongly associated with those on the left than with those on the right (Graham, Haidt, and Nosek Reference Graham, Haidt and Nosek2009). However, even if the items developed in Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) more closely correspond to the notion of proportionality, our results still challenge the claims of MFT, as we show that there is no systematic relationship between ideology and judgments of moral violations of this type.Footnote 14

Moreover, when making paired comparisons of the MFV items, liberals and conservatives appear to have very similar rank orderings of the relative severity of violations of the five foundations. This is because even where we do detect ideological differences in moral judgments, these are in general much smaller than the foundation-level variation in violation severity. Those on the political right may object less to violations of the care foundation than those on the left, but the right still views the care violations we presented as substantially worse, on average, than either the loyalty or the authority violations. Likewise, even if the right put marginally more weight on loyalty considerations than those on the left, they nevertheless ranked the loyalty violations as much less severe than those involving fairness, care, or sanctity, on average. As a result, the foundation-level effects from the moral violations experiment imply that all respondents, regardless of ideology, rank the tested set of sanctity, fairness, and care violations as systematically more important than the authority or loyalty violations. This again contrasts sharply with the results of the MFQ analysis, in which liberals and conservatives report very different rankings of the foundations.

An important implication of these results is therefore that estimates of political differences in moral judgment depend heavily on whether survey items aim to capture explicitly stated moral principles versus intuitive moral responses to concrete situations. When asked to articulate theories of their own morality, conservatives cite both authority and loyalty as central concerns, but when faced with specific violations of those norms, they appear to view them as less important than actions that violate principles of care or fairness. Similarly, liberals might say, when asked, that they do not think sanctity concerns are relevant to their moral evaluations, but they still object when presented with scenarios in which people engage in activities that transgress ideas of temperance, chastity, and cleanliness.

Our findings share some similarities with the analysis reported in Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015, 1193). Like us, Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) show that there is no correlation between ideology and judgments of fairness violations and that the relationship between ideology and authority, and ideology and loyalty, is slightly weaker than the same relationship when measured from the MFQ. By contrast, in their analysis, Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) show a stronger relationship between ideology and the care foundation in the MFV than the MFQ (with respect to violations involving physical harm, though not emotional harm), where we find a weaker relationship between ideology and judgments of care violations. Finally, Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) find a strong relationship between sanctity concerns and ideology, while we find no evidence of such a relationship.

We attribute these differences largely to differences in survey design and sample composition. The analysis in Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) is based on 416 responses to a nonrepresentative survey of 18–40 year olds in the United States, in which respondents rated the moral wrongness of 132 vignettes using a five-point scale, completed the 30-item MFQ, and answered a series of political and demographic questions. These design decisions are clearly appropriate for surveys aimed at developing and validating new items for moral judgment, but are not necessarily optimal for data collection which aims to test subsequent hypotheses about the relationship between political ideology and moral decision making. In addition to using two large and nationally representative samples, and a significantly simpler survey instrument, our analysis also differs from Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015) in that we ask respondents to make comparisons between vignettes rather than providing single-vignette ratings. We therefore see our article as a helpful extension to the work of Clifford et al. (Reference Clifford, Iyengar, Cabeza and Sinnott-Armstrong2015), as we build directly on their work to explore in detail the relationship between political ideology and moral judgments using the vignettes that they developed.

MEASURING AGREEMENT ABOUT INDIVIDUAL VIOLATION SEVERITY

Model Definition

Given the small foundation-level differences in the MFV experiment uncovered above, we might also be interested in whether there are large political differences regarding specific violations, regardless of the foundation to which they apply. If conservatives and liberals react to the world through fundamentally different moral intuitions, then we might expect there to be little similarity in their relative assessments of specific moral violations, even if these differences do not map neatly onto the foundation-based categorization proposed by MFT.

To estimate directly the correlation between the relative judgments of violation severity across different ideological groups at the vignette level, we again build on the first-stage model described in Equation 4. We use a “correlated severity” model in which we assume that the vector of group-specific severities for each violation, $\alpha_{j,p}$ for the P ideology groups, is drawn from a multivariate normal distribution with mean zero and covariance matrix $\Sigma$:

$$ \left(\alpha_{j,1}, \ldots, \alpha_{j,P}\right)^{\top} \sim \mathrm{MVN}\left(\mathbf{0}, \Sigma\right). $$

Here, $\Sigma$ has diagonal elements $\sigma_p^2$ and off-diagonal elements $\sigma_p \sigma_{p'} \rho_{p,p'}$. The correlations $\rho$ are our primary interest, as these tell us whether the relative severity of the violations, across our entire MFV experiment, tends to be very similar for groups p and p′ ($\rho_{p,p'} > 0$), whether the violations that are considered bad by one group are uncorrelated with those that are considered bad by the other ($\rho_{p,p'} \approx 0$), or whether the groups systematically disagree about which violations are worse from a moral perspective ($\rho_{p,p'} < 0$). We estimate this model twice, once using the seven-category version of the ideology scale for each country described above, and once using a simplified three-category version of the scale in which we put respondents into {Left, Moderate, Right} groups. We present results from both models (for each country) below. In addition, and as before, we use the same modeling approach to analyze the results from our second experiment, based on the MFQ. Here, the correlation parameters capture the degree to which left- and right-wing respondents agree on the relative importance of the MFQ items when they think about morality.
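The covariance structure just described can be written as $\Sigma = \operatorname{diag}(\sigma)\, R\, \operatorname{diag}(\sigma)$, where $R$ holds the correlations $\rho_{p,p'}$ with ones on the diagonal. The sketch below builds $\Sigma$ this way and draws group-specific severities for 74 violations; the function name, standard deviations, and correlations are hypothetical and chosen only for illustration, not taken from the estimates.

```python
import numpy as np


def build_sigma(sd, rho):
    """Sigma[p, p] = sd_p**2 and Sigma[p, q] = sd_p * sd_q * rho[p, q]."""
    sd = np.asarray(sd, dtype=float)
    rho = np.asarray(rho, dtype=float)
    if not (np.allclose(rho, rho.T) and np.allclose(np.diag(rho), 1.0)):
        raise ValueError("rho must be a symmetric matrix with unit diagonal")
    return np.diag(sd) @ rho @ np.diag(sd)


# Hypothetical three-group example (Left, Moderate, Right).
SD = [1.0, 0.8, 1.2]
RHO = np.array([[1.0, 0.9, 0.6],
                [0.9, 1.0, 0.8],
                [0.6, 0.8, 1.0]])
sigma = build_sigma(SD, RHO)

# Draw group-specific severities for 74 violations; the sample correlation
# between Left (column 0) and Right (column 2) scatters around 0.6.
rng = np.random.default_rng(0)
alpha = rng.multivariate_normal(np.zeros(3), sigma, size=74)  # shape (74, 3)
print(np.corrcoef(alpha[:, 0], alpha[:, 2])[0, 1])
```

With only 74 violations, the sample correlation varies noticeably from draw to draw, which is one reason the estimated $\rho$ values in the text are reported with credible intervals.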

Results

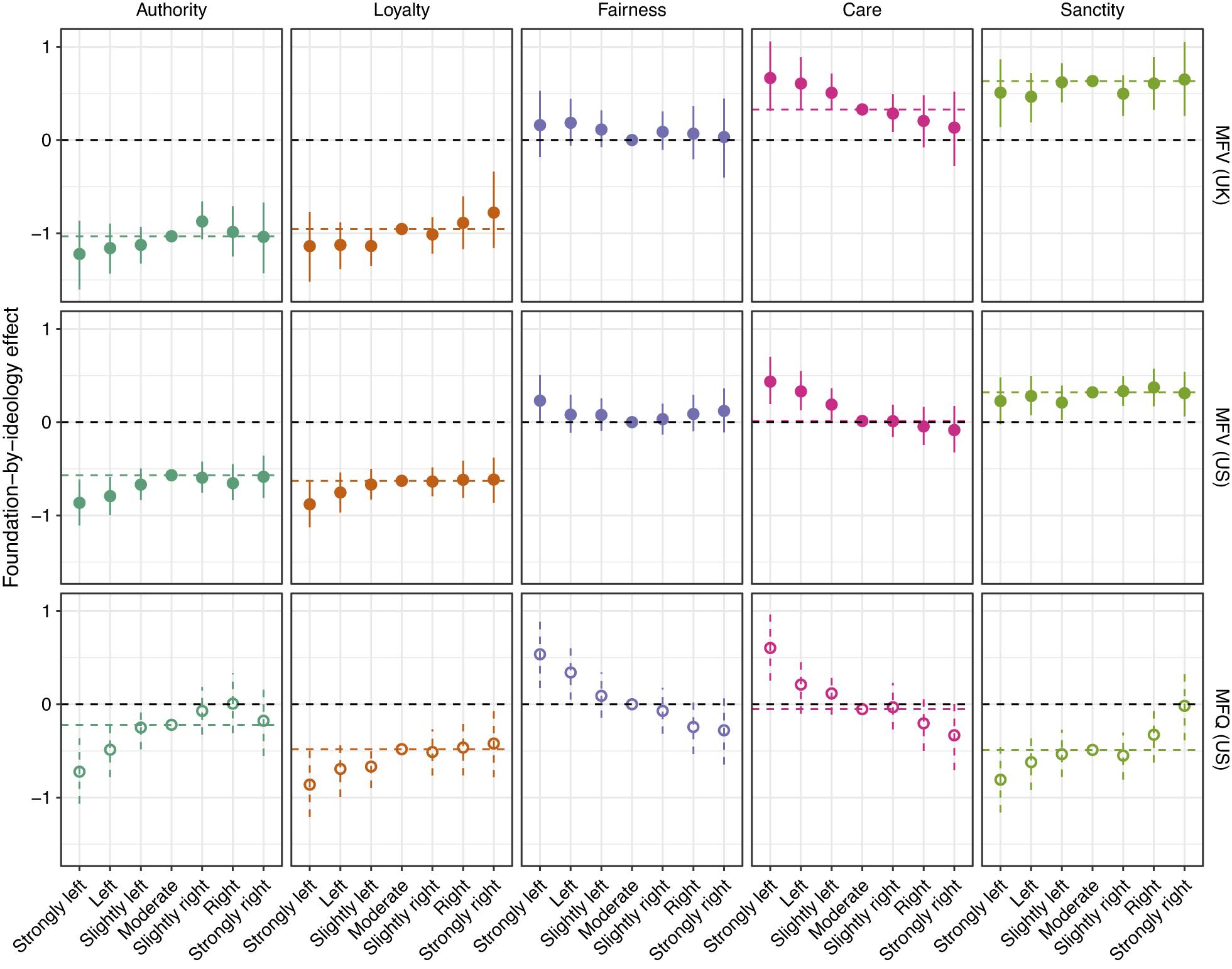

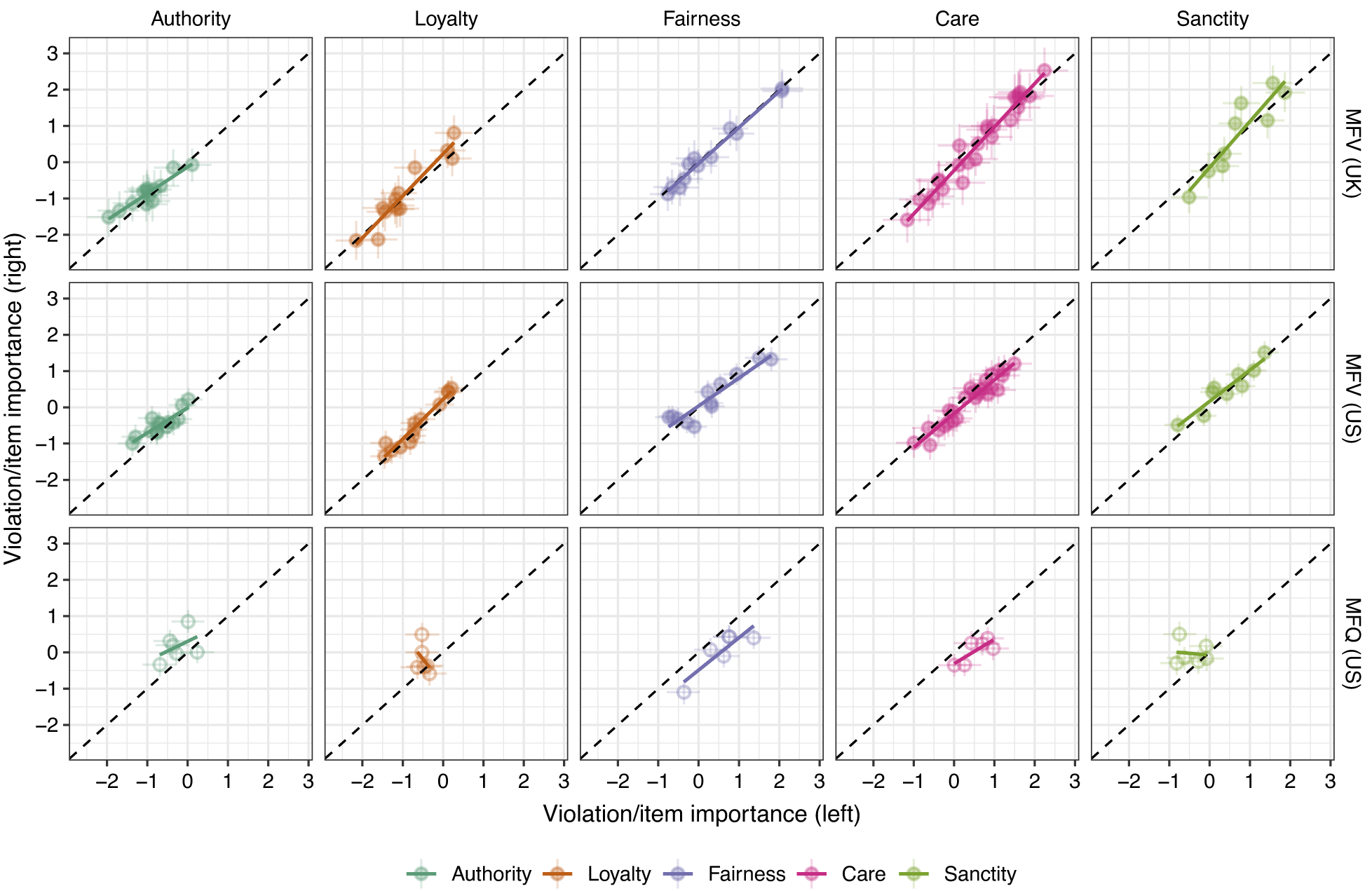

Figure 5 presents our estimates for each of the 74 MFV violations and the 30 MFQ items separately for those on the left and the right of the political spectrum from the model estimated using our three-category decomposition of ideology. The top and middle rows give results from the U.K. and U.S. versions of the MFV experiment, respectively, and the bottom row presents results from the MFQ experiment. While liberal and conservative rankings of abstract moral concerns are heterogeneous, those on the left and the right are largely uniform in their perceptions of the severity of concrete moral violations. Averaging across the five foundations, the correlation in the rankings of the MFV violations between liberals and conservatives is remarkably high in both the United Kingdom (0.94 [0.89, 0.97]) and the United States (0.91 [0.85, 0.95]), and very few vignettes show differences between liberals and conservatives that are significantly different from zero. By contrast, the correlation in the rankings between left- and right-leaning respondents of the MFQ items is much lower (0.37 [−0.02, 0.68]), and for the sanctity and loyalty foundations, the correlation is actually negative.

Figure 5. Correlation of Violation Severity/Item Importance between Respondents on the Left and Right

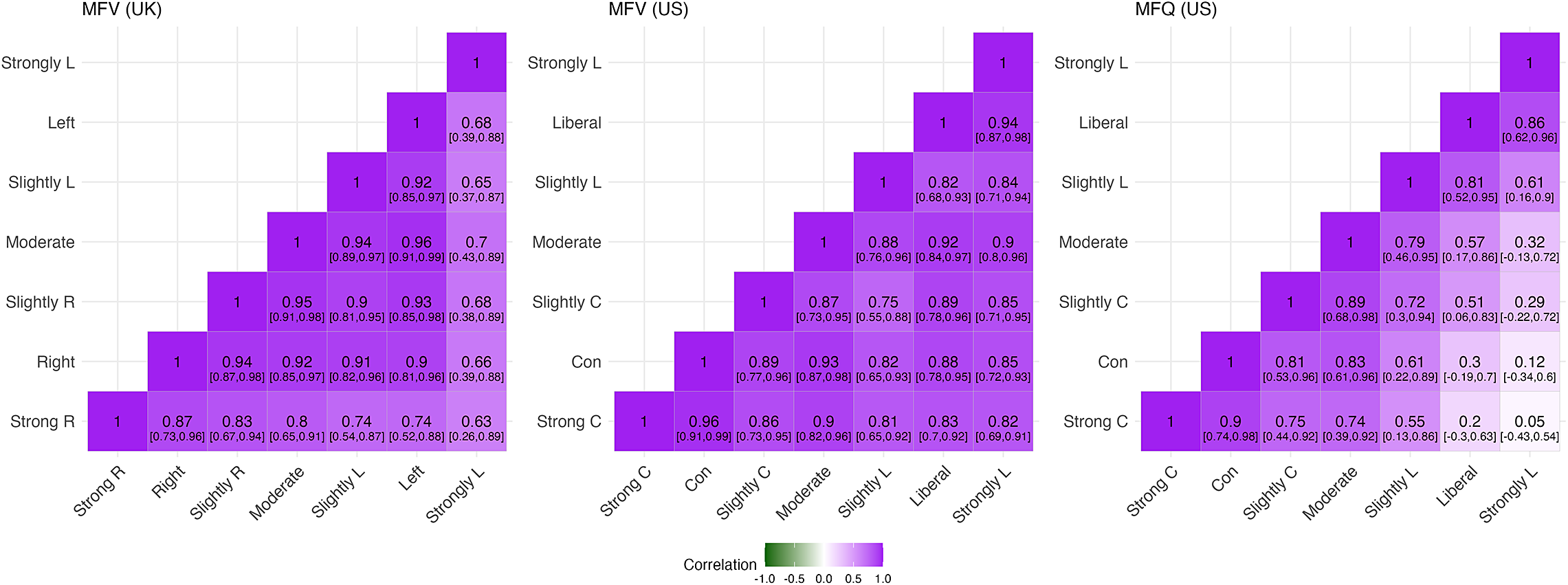

To investigate whether these results mask heterogeneity at more extreme ideological positions, we present the estimates for the correlation between respondents in each group ($\rho_{p,p'}$) of the seven-category ideological variable (alongside their associated 95% credible intervals) in Figure 6. The left and center panels depict estimates from the MFV experiment in the United Kingdom and the United States, and the right panel shows estimates from the MFQ experiment.

Figure 6. Correlation of Violation Severity by Ideology

Note: Correlation in violation severity, across all foundations, for respondents with different self-reported ideological positions.

The figure shows that the correlation in perceptions of violation severity is positive for all pairs of ideological groups of respondents. The lowest correlation we measure is between those who are “Strongly left” and “Strongly right” in the United Kingdom, but even here the correlation is positive and reasonably strong at 0.63 [0.26, 0.89]. In the United States, the correlation between “Strongly liberal” and “Strongly conservative” respondents is even higher at 0.82 [0.69, 0.91]. The respondents falling into these groups represent small fractions of the population, particularly in the United Kingdom. In the United States, 23% of respondents describe themselves as being “Strongly” liberal or conservative, and in the United Kingdom, just 4.5% report being “Strongly” left or right. It is therefore striking that even these small groups at the ideological extremes, regardless of their political differences, tend to have very similar moral intuitions about the relative severity of the violations that we included in our experiment.

By contrast, the right panel of Figure 6 reveals that, when evaluating the relevance of the abstract moral statements contained in the MFQ, the rankings of items are very different for conservative and liberal respondents. For example, the estimated correlation between MFQ item rankings for “Strongly liberal” and “Strongly conservative” respondents is just 0.05 [−0.43, 0.54]. While we find that U.S. liberals and conservatives largely agree on the relative severity of specific moral violations, there is almost no correlation in their judgments of the relevance of abstract moral principles. There is also a clear relationship between ideological proximity and the correlation of item strengths: the more ideologically proximate respondents are, the more they tend to agree about the relevance of different MFQ items. The stark difference between the highly correlated responses to the concrete moral violation vignettes and the low ideological correlation in responses to the MFQ items is hard to square with an interpretation that differences in moral intuitions between liberals and conservatives “illuminate the nature and intractability of moral disagreements” (Graham, Haidt, and Nosek Reference Graham, Haidt and Nosek2009, 1029). Although liberals and conservatives may express different moral priorities when asked to reflect on their own moral reasoning, when confronted with concrete moral dilemmas they tend to react in strikingly similar ways.

Are the high correlations in perceived violation severity due to measurement error in our ideology variable? Our measure of political ideology is identical to the one used in existing work on MFT, but we can also reestimate the correlated strength model to assess whether other voter-level characteristics are associated with different perceptions of violation severity. We find little evidence that they are. Most notably, respondents’ voting histories seem generally uninformative about their moral intuitions. For instance, in the United Kingdom, the correlation in perceptions of the moral wrongness of the different violations is strongly positive both between those who voted “Leave” and those who voted “Remain” in the 2016 EU referendum (0.98) and between Conservative and Labour voters in the 2019 General Election (0.97). Likewise, Trump and Biden voters in the 2020 U.S. Presidential Election make very similar judgments about which violations are morally worse: the estimated ρ parameter for these groups is 0.95. In general, whether we use an attitudinal or a behavioral measure of political ideology, the public consensus on relative violation severity explains far more of the variation in individual respondents’ judgments of moral wrongness than do any systematic differences between respondents of different ideological or political groupings.
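The ρ parameter summarizes how similarly two groups order the same set of items. To make that logic concrete, the Python sketch below uses a simplified two-step procedure that is an illustrative assumption, not the estimator used in this article: it simulates forced choices between random pairs of items for two hypothetical groups whose true item strengths have a chosen correlation, fits a separate Bradley-Terry model to each group with standard MM (minorization-maximization) updates, and then computes the Pearson correlation between the two sets of estimated log-strengths. The Bradley-Terry choice rule, the function names (`simulate_group`, `fit_bradley_terry`, `pearson`, `demo`), the one-pseudo-tie-per-pair shrinkage, and all parameter values are hypothetical choices made for illustration.

```python
# Illustrative sketch only: a hypothetical two-step approximation,
# not the correlated strength model estimated in the article.
import math
import random


def simulate_group(true_log_strengths, n_comparisons, rng):
    """Simulate forced choices between random pairs of items.

    Assumed Bradley-Terry rule: item i is chosen over item j with
    probability 1 / (1 + exp(theta_j - theta_i)).
    Returns a list of (winner, loser) index pairs.
    """
    k = len(true_log_strengths)
    choices = []
    for _ in range(n_comparisons):
        i, j = rng.sample(range(k), 2)
        p_i = 1.0 / (1.0 + math.exp(true_log_strengths[j] - true_log_strengths[i]))
        choices.append((i, j) if rng.random() < p_i else (j, i))
    return choices


def fit_bradley_terry(choices, n_items, iters=300):
    """Estimate mean-zero log-strengths with MM updates.

    Every pair starts with one pseudo-tie (half a win to each item), a
    hypothetical shrinkage device that keeps all strengths finite.
    """
    wins = [0.5 * (n_items - 1)] * n_items
    n = [[0.0 if i == j else 1.0 for j in range(n_items)] for i in range(n_items)]
    for winner, loser in choices:
        wins[winner] += 1
        n[winner][loser] += 1
        n[loser][winner] += 1
    p = [1.0] * n_items
    for _ in range(iters):
        # MM update: p_i <- W_i / sum_j n_ij / (p_i + p_j)
        p = [wins[i] / sum(n[i][j] / (p[i] + p[j]) for j in range(n_items) if j != i)
             for i in range(n_items)]
        # Strengths are identified only up to scale: fix geometric mean at 1.
        log_gm = sum(math.log(x) for x in p) / n_items
        p = [x / math.exp(log_gm) for x in p]
    return [math.log(x) for x in p]


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def demo(rho, n_items=40, n_comparisons=8000, seed=1):
    """Simulate two groups whose true strengths have correlation rho,
    fit each group separately, and correlate the estimates."""
    rng = random.Random(seed)
    z1 = [rng.gauss(0, 1) for _ in range(n_items)]
    z2 = [rng.gauss(0, 1) for _ in range(n_items)]
    theta_a = z1
    theta_b = [rho * a + math.sqrt(1 - rho ** 2) * b for a, b in zip(z1, z2)]
    est_a = fit_bradley_terry(simulate_group(theta_a, n_comparisons, rng), n_items)
    est_b = fit_bradley_terry(simulate_group(theta_b, n_comparisons, rng), n_items)
    return pearson(est_a, est_b)
```

Calling `demo(0.9)` should return a correlation near the simulated value of 0.9, and `demo(0.0)` one near zero. Because this two-step version treats each group's estimated strengths as if they were known exactly, it tends to attenuate the correlation slightly and provides no interval for it; a model that estimates the correlation jointly with the item strengths can propagate that uncertainty into an interval.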

In the Supplementary Material, we use the same correlated strength model described above to analyze the similarities in moral judgment between respondents of different ages, education levels, genders, races, and incomes. Looking across all the covariates, we find a consistent pattern: the correlation in judgments of specific moral violations is always high, whereas the correlation in judgments of the relevance of the MFQ items is not. In general, while respondent demographics allow us to predict variation in the endorsement of abstract moral principles, there is very little disagreement across different groups of respondents when people make judgments of concrete moral violations. Finally, we find that respondents’ perceptions of violation severity are very similar regardless of whether the vignette describes the perpetrator of a particular transgression as male or female.

CONCLUSION

In this article, we have argued that existing empirical work on MFT has overstated differences in the moral intuitions of liberals and conservatives because of a mismatch between theory and measurement. Measuring the moral priorities of individuals by asking them to reflect explicitly on the abstract principles of their own moralities is unlikely to capture the automatic and effortless moral intuitions that lie at the conceptual heart of MFT. We proposed an alternative approach that asks respondents to choose between pairs of concrete moral transgressions, which comes closer to eliciting the kinds of intuitions that proponents of MFT claim underpin political disagreement between the left and the right. Empirically, we fielded two new survey experiments, which show that although liberals and conservatives articulate different sets of moral priorities, they make very similar moral judgments when confronted with specific moral comparisons. To the degree that political differences in moral evaluation do exist, they are small relative both to the overall variation in judgments across scenarios and to the variation in support for abstract moral statements. As our final analysis demonstrates, while ideology strongly predicts the importance that citizens assign to abstract moral concerns, citizens agree closely with one another, regardless of ideology, when judging concrete scenarios related to those concerns.

Our findings have important implications for assessing potential explanations for contemporary political disagreement. In particular, a concern raised by previous studies on MFT is that the large moral differences between liberals and conservatives are likely to make the resolution of morally loaded political issues intractable (Graham, Haidt, and Nosek 2009; Haidt 2012; Koleva et al. 2012). Haidt (2012, 370–1) suggests that when disagreement is driven by instinctive moral responses, it becomes “difficult…to connect with those who live in other [moral] matrices.” These fears are especially pronounced for “culture wars” issues (such as those related to sex, gender, and multiculturalism), where voters’ policy positions are particularly strongly associated with their expressed moral attitudes (Koleva et al. 2012). However, our results suggest that divergent moral intuitions are unlikely to be the most important obstacle to productive conversation across lines of political disagreement. If conservatives and liberals react in largely similar ways to concrete moral questions (as we show in our MFV experiment) but express much more variation in their self-assessed moral attitudes (as documented in existing work and in our MFQ experiment), then the latter may reflect differences in how people talk about moral questions rather than genuine moral conflict. Indeed, our results align more with those who have argued for the unifying potential of morality in politics (Jung 2022) and with work suggesting that the real-world differences in moral behavior between people with different politics “appear to be more a matter of nuance than stark contrast” (Hofmann et al. 2014, 1342).

Looking beyond the literature on politics and morality, our central methodological argument, namely that survey questions asking people to reflect on abstract concepts can produce different response distributions than questions asking them to evaluate concrete manifestations of those concepts, has implications for many other literatures. For instance, much existing work in political behavior draws on survey questions that ask respondents to reflect on their support for democracy and the normative ideals associated with democratic government. Would the ways in which responses to these questions correlate with respondent covariates persist if surveys instead asked respondents to evaluate specific violations of democratic ideals? Our findings raise the possibility that analyses based on soliciting reactions to specific stimuli might reveal very different patterns of “support for democracy” than analyses based on questions that encourage voters to self-theorize about their democratic attitudes. As partial evidence for this point, recent survey-based work on the acceptability of political violence suggests that the specificity with which such questions are posed can “cause the magnitude of the relationship between previously identified correlates and partisan violence to be overstated” (Westwood et al. 2022, 1).

One objection to our conclusion is that the vignettes we use are mostly apolitical in nature, which may suppress political differences in moral expression. However, we think this property is helpful because it limits endogenous responses, in which people infer the moral positions they think they ought to take on different issues from their partisan or ideological allegiances. If we asked about a highly politicized moral issue (for instance, abortion), we might find highly polarized views between liberals and conservatives, but it would not be clear whether such polarization stems from intuitive moral concerns or from the fact that voters’ views on that issue have been deeply shaped by politics. In fact, our findings suggest that where prior work finds such political differences, they may not stem from fundamentally incompatible moral views on the importance of sanctity (or another foundation). More generally, proponents of MFT view moral intuitions as causally constitutive of political attitudes, but given the very high degree of consensus about moral judgments that we document, it is hard to see how differences in moral intuitions could be the root cause of either policy-based or affective polarization between political groups. The differences in the intuitive morality of those on the left and the right are simply too small to account for the well-documented polarization between ideological groups.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/S0003055424000492.

DATA AVAILABILITY STATEMENT

Research documentation and data that support the findings of this study are openly available at the American Political Science Review Dataverse: https://doi.org/10.7910/DVN/BWZ3N0.

ACKNOWLEDGEMENTS

We thank seminar participants at the European Political Science Association 2022 Conference, the Elections, Parties and Public Opinion 2022 Conference, the European University Institute, and University College London.

FUNDING STATEMENT

This research was funded by the Leverhulme Trust (Grant No. RF-2021-327/7).

CONFLICT OF INTEREST

The authors declare no ethical issues or conflicts of interest in this research.

ETHICAL STANDARDS

Our study received ethical review from the UCL Research Ethics panel (Project ID: 21793/001) before it was fielded. The authors affirm that this article adheres to the principles concerning research with human participants laid out in APSA’s Principles and Guidance on Human Subject Research (2020).
