1. Introduction

In science and engineering, different computational models can be derived to make realizations of the quantities of interest (QoIs) of a process or an event happening in reality. High-fidelity (HF) models can produce highly accurate and robust realizations, but running them is typically computationally expensive. In contrast, low-fidelity (LF) models with lower computational cost can be developed for the same process; however, they potentially yield less accurate QoIs because the relevant physics is only partially captured, or missing entirely, in the model. At the same time, many applications arising in uncertainty quantification (UQ), data fusion and optimization require numerous realizations of the QoIs, associated with samples taken from the space of the inputs/parameters, in order to make reliable estimations (these non-intrusive problems are referred to as outer-loop problems). In this regard, multifidelity models (MFMs) can be constructed by combining realizations of the HF and LF models such that a balance between the overall computational cost and the predictive accuracy is achieved. The goal is to provide, by combining HF and LF models, an estimate of the QoI that is better than that of any of the models alone.

In recent years, different types of MFMs have been applied to a wide range of problems; see e.g. the review by Peherstorfer, Willcox & Gunzburger (2018). The use of MFMs in studies of turbulent flows can be greatly advantageous, considering the wide range of engineering applications relying on these flows and the high cost generally involved in HF computations (such as scale-resolving simulations) and experiments of turbulent flows. There is a distinguishable hierarchy in the fidelity of the computational models used for simulating turbulence (see e.g. Sagaut, Deck & Terracol 2013). Consider wall-bounded turbulent flows, where a turbulent boundary layer forms at the wall boundaries. Direct numerical simulation (DNS) provides the highest-fidelity results for a given turbulent flow; however, it can become prohibitively expensive at the high Reynolds numbers relevant to practical applications. The computational cost can be reduced by employing large eddy simulation (LES), which directly resolves the scales larger than a defined size and models the effects of the unresolved ones. At the lowest cost and fidelity level, Reynolds-averaged Navier–Stokes (RANS) simulations can be performed, which avoid directly resolving any flow fluctuations by resorting to a statistical description of turbulence. Between RANS and wall-resolving LES, other approaches such as hybrid RANS–LES and wall-modelled LES can be considered (see Sagaut et al. 2013; Larsson et al. 2016).
Although this clear hierarchy is extremely beneficial when constructing MFMs, as will be discussed and demonstrated in the present paper, there is a challenge to be dealt with: the realizations of different turbulence simulation approaches are, in general, sensitive to various modelling and numerical parameters as well as to the inputs. At lower fidelities such as RANS, modelling effects are dominant, whereas moving towards LES and DNS, numerical factors, including grid resolution and discretization properties, become more relevant. Hereafter, these fidelity-specific controlling parameters are referred to as tuning or calibration parameters.

By combining training data from different turbulence simulation approaches, MFMs are constructed over the space of design and uncertain parameters/inputs. An appropriate approach to constructing MFMs for turbulent flow problems should systematically allow for simultaneous calibration of the tuning parameters. The methodology employed in the present study is the hierarchical multifidelity predictive model developed in Higdon et al. (2004) and Goh et al. (2013), in which the calibration parameters of the involved fidelities are estimated using the data of the higher-fidelity models. This MFM, hereafter referred to as HC-MFM, can also incorporate observational uncertainties. The HC-MFM can be seen as an extension of the model by Higdon et al. (2004), which was employed to combine experimental (field) and simulation data. A fundamental component of this class of MFMs is the Bayesian calibration of computer models, as described in the landmark paper by Kennedy & O'Hagan (2001). At each level of the MFM, Gaussian process regression (GPR) (Rasmussen & Williams 2005) is employed to construct surrogates for the simulators.

The application of the HC-MFM in the field of computational fluid dynamics (CFD) and turbulent flows is novel, and we make specific adaptations suitable for turbulence simulations. In this regard, the present paper aims at assessing the potential of the HC-MFM by applying it to three examples relevant to engineering wall-bounded turbulent flows. To highlight the contributions of the present work, the existing studies devoted to the development and application of MFMs to CFD and turbulent flows are briefly reviewed here, classified according to their underlying MFM strategy. (i) A model was originally introduced by Kennedy & O'Hagan (2000), where a QoI at each fidelity is expressed as a first-order autoregressive model of the same QoI at the immediately lower fidelity. Co-kriging, which uses GPR to construct the surrogates, falls into this category; see e.g. Fatou Gomez (2018) and Voet et al. (2021) for applications to turbulence simulations. To enhance the computational efficiency of co-kriging for several fidelity levels, recursive algorithms have been proposed and applied to CFD problems; see Gratiet & Garnier (2014) and Perdikaris et al. (2015). (ii) A class of MFMs has been developed based on non-intrusive polynomial chaos expansion (PCE) and stochastic collocation methods (Ng & Eldred 2012; Palar, Tsuchiya & Parks 2016), where an additive or a multiplicative term is considered to correct the LF model's predictions against the HF model. (iii) The multilevel multifidelity Monte Carlo (MLMF-MC) models (Fairbanks et al. 2017; Geraci, Eldred & Iaccarino 2017) are appropriate for forward UQ problems.
These models are developed by combining multilevel (Giles 2008) and control-variate (Pasupathy et al. 2012) MC methods to improve the rate of convergence of the stochastic moments of the QoIs compared with the standard MC method. Jofre et al. (2018) applied MLMF-MC models to an irradiated particle-laden turbulent flow. The HF model was DNS, and the two LF models were based on a surrogate particle approach and on lower resolutions for the flow and the particles. (iv) Other models include the hierarchical kriging model based on GPR, where the predictions of an LF model are taken as the trend in the HF kriging; see Han & Görtz (2012).

Recently, Voet et al. (2021) compared inverse distance weighting-, PCE- and co-kriging-based MFMs using RANS and DNS data for the turbulent flow over a periodic hill, and concluded that the co-kriging model outperforms the others in terms of accuracy. This is the first (and, to our knowledge, only) study in which MFMs have been applied to engineering-relevant RANS and DNS data for the purpose of uncertainty propagation. Voet et al. (2021) also found that the performance of co-kriging can deteriorate when there is no significant correlation between the RANS and DNS data and, at the same time, there is a significant deviation between them. Motivated by this deficiency, we adapt and use the HC-MFM, in which the discrepancy between the data (and not their correlation) over the space of design parameters is learned using independent Gaussian processes. The model is fully generative and can be extended to an arbitrary number of fidelity levels. Besides the systematic calibration of the fidelity-specific parameters during its training stage, the HC-MFM is also capable of handling uncertain data, as happens, for instance, when QoIs are turbulence statistics computed over a finite time-averaging interval (a framework to combine these observational uncertainties with parametric ones was recently proposed by Rezaeiravesh, Vinuesa & Schlatter (2022)). Owing to these characteristics, the HC-MFM is suitable for application to data from various turbulence simulation methodologies to address different types of outer-loop problems. In contrast to all previous studies (at least in CFD), we adopt Bayesian inference to construct the HC-MFM, a feature that results in more accurate models and also provides confidence intervals for the predictions.

The rest of the paper is organized as follows. In § 2, the various elements of the HC-MFM approach are introduced and explained. Section 3 is devoted to the application of the HC-MFM to an illustrative example as well as to turbulent channel flow, the polars of an airfoil and the analysis of geometrical uncertainties in the turbulent flow over a periodic hill. A summary of the paper along with the conclusions is presented in § 4.

2. Method

In this section, the hierarchical MFM with calibration (HC-MFM) developed by Goh et al. (2013), which forms the basis for the present study, is reviewed. We proceed by going sequentially through GPR, model calibration and, finally, the HC-MFM formulation. The workflow of the HC-MFM represented in figure 1 may help connect the following technical details.

Figure 1. Schematic representation of the machinery for constructing the HC-MFM over the space of design/uncertain parameters $\boldsymbol {x}$. To make realizations of the QoI of the problem in hand, there is a hierarchy of fidelities, where each fidelity may have its own parameters and also some parameters shared with others (together called $\boldsymbol {\theta }$). For joint samples of $\boldsymbol {x}$ and $\boldsymbol {\theta }$, training data for the QoI are generated (or are available) such that more samples are taken for the LF models, which are less costly to evaluate. The priors for $\boldsymbol {\theta }$ and the GPs' hyperparameters $\boldsymbol {\beta }$ are defined after the HC-MFM is formulated as, for instance, (2.6). Given the training data, an MCMC sampling method is used to infer the posteriors of $\boldsymbol {\theta }$ and $\boldsymbol {\beta }$.

2.1. Gaussian process regression

In general, Gaussian processes (GPs) provide a way to systematically build a representation of a QoI as a function of the various inputs to a model. Regression can then be performed by evaluating the GP at new inputs not seen by the model before. Let $\boldsymbol {x}\in \mathbb {X}\subset \mathbb {R}^{p_{\boldsymbol {x}}}$ represent the controllable inputs and parameters, adopting the notation of Kennedy & O'Hagan (2001). As a convention, all boldface letters hereafter denote a vector or a matrix. The design and uncertain parameters appearing in optimization and UQ analyses, respectively, can also be classified as $\boldsymbol {x}$. A GP $\hat {f}(\boldsymbol {x})$ can be employed to map the inputs $\boldsymbol {x}$ to a QoI or an output $y\in \mathbb {R}$ of the computer codes (simulators) or field data. For a finite set of training samples $\{\boldsymbol {x}_1, \boldsymbol {x}_2,\ldots,\boldsymbol {x}_n\}$ with corresponding observations $\{y_1,y_2,\ldots,y_n\}$, the collection $\{\hat {f}(\boldsymbol {x}_1),\hat {f}(\boldsymbol {x}_2),\ldots,\hat {f}(\boldsymbol {x}_n)\}$ has a joint Gaussian (multivariate normal) distribution (Rasmussen & Williams 2005). The GP $\hat {f}(\boldsymbol {x})$ is written as

\begin{align} \hat{f}(\boldsymbol{x}) \sim \mathcal{GP}\big(m(\boldsymbol{x}),k(\boldsymbol{x},\boldsymbol{x}')\big), \end{align}

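which is fully described by its mean $m(\boldsymbol {x})$ and covariance function $k(\boldsymbol {x},\boldsymbol {x}')$, defined as

\begin{align} m(\boldsymbol{x}) &= \mathbb{E}\big[\hat{f}(\boldsymbol{x})\big], \\ k(\boldsymbol{x},\boldsymbol{x}') &= \mathbb{E}\big[\big(\hat{f}(\boldsymbol{x})-m(\boldsymbol{x})\big)\big(\hat{f}(\boldsymbol{x}')-m(\boldsymbol{x}')\big)\big]. \end{align}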

GPs can also be used when there is observation noise $\boldsymbol {\varepsilon }$ in the $y$ data. Using an additive error model, we have

\begin{align} y_i = \hat{f}(\boldsymbol{x}_i) + \varepsilon_i, \quad i=1,2,\ldots,n, \end{align}

where the noises are assumed to be independent and Gaussian-distributed, $\boldsymbol {\varepsilon }\sim \mathcal {N}(0,\sigma ^2)$.

In GPR, given a set of training data $\mathcal {D}=\{\boldsymbol {x}_i,y_i\}_{i=1}^n$, the posterior and posterior predictive distributions of $\hat {f}({\cdot })$ and $y$, respectively, at test inputs $\boldsymbol {x}^* \in \mathbb {X}$ can be inferred in a Bayesian framework (Rasmussen & Williams 2005). To this end, a prior distribution for $\hat {f}(\boldsymbol {x})$, see (2.1), is first assumed by specifying functions for the mean and covariance in (2.2) and (2.3), which contain unknown hyperparameters $\boldsymbol {\beta }$. Using the training data, the posterior distribution of $\boldsymbol {\beta }$ is learned. A main advantage of GPR is that the predictions at test samples are accompanied by an estimate of uncertainty; see (A1) and (A2) in Appendix A.
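To make the GPR step concrete, the following is a minimal sketch of zero-mean GP prediction with fixed hyperparameters. It assumes an exponentiated-quadratic kernel with one length scale per input dimension, an amplitude `amp2` and a noise variance `noise_var`; these names, and the choice to fix rather than infer the hyperparameters $\boldsymbol {\beta }$, are illustrative assumptions. The predictive mean and variance follow the standard formulas of Rasmussen & Williams (2005) and are not necessarily written in the same form as (A1) and (A2). A hand-written Cholesky factorization is used only to keep the example dependency-free.

```python
import math

def sq_exp_ard(a, b, lengths, amp2):
    """Exponentiated-quadratic kernel with one length scale per input dimension."""
    d2 = sum((ai - bi) ** 2 / ell ** 2 for ai, bi, ell in zip(a, b, lengths))
    return amp2 * math.exp(-0.5 * d2)

def cholesky(A):
    """Return lower-triangular L such that A = L L^T (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def forward_sub(L, b):
    """Solve L z = b for lower-triangular L."""
    z = []
    for i in range(len(b)):
        z.append((b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z

def backward_sub_T(L, z):
    """Solve L^T x = z using the lower-triangular factor L."""
    n = len(z)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_predict(X, y, x_star, lengths, amp2, noise_var):
    """Posterior predictive mean and variance of y at x_star for a zero-mean GP."""
    n = len(X)
    # Training covariance K + sigma^2 I (additive Gaussian noise model)
    K = [[sq_exp_ard(X[i], X[j], lengths, amp2) + (noise_var if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = backward_sub_T(L, forward_sub(L, y))   # alpha = (K + sigma^2 I)^{-1} y
    k_star = [sq_exp_ard(xi, x_star, lengths, amp2) for xi in X]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = forward_sub(L, k_star)                      # v = L^{-1} k_*
    var_f = sq_exp_ard(x_star, x_star, lengths, amp2) - sum(vi * vi for vi in v)
    return mean, var_f + noise_var                  # variance of y includes the noise

# Usage: nearly noise-free samples of sin(x) at four 1-D inputs
X = [[0.0], [0.5], [1.0], [1.5]]
y = [math.sin(x[0]) for x in X]
mean, var = gp_predict(X, y, [0.75], lengths=[0.5], amp2=1.0, noise_var=1e-6)
```

Far from the training inputs, the predictive variance returns to the prior amplitude plus the noise variance, which is the kind of uncertainty estimate that accompanies every GPR prediction.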

2.2. Model calibration

As pointed out in § 1, the outputs of computational models (simulators) at a given $\boldsymbol {x}$ may depend on different tuning or calibration parameters, $\boldsymbol {t}\in \mathbb {T}\subset \mathbb {R}^{p_{\boldsymbol {t}}}$. Given a set of observations, these parameters can be calibrated by solving a UQ inverse problem, which can be expressed in a Bayesian framework (Kennedy & O'Hagan 2001). The calibrated model can then be employed not only for prediction, but also for fusion of field and simulation data; see Higdon et al. (2004). Suppose that $n_1$ data samples $\{(\boldsymbol {x}_i,y_i)\}_{i=1}^{n_1}$ are observed for a physical process $\zeta (\boldsymbol {x})$. To statistically model the observations, a simulator $\hat {f}(\boldsymbol {x},\boldsymbol {\theta })$ can be employed, in which $\boldsymbol {\theta }$ are the true or optimal values of $\boldsymbol {t}$ and are to be estimated from the training data. However, in general, even the calibrated simulator $\hat {f}(\boldsymbol {x},\boldsymbol {\theta })$ may produce outputs that systematically deviate from reality. To remove such a bias, a model discrepancy term $\hat {\delta }(\boldsymbol {x})$ can be added to the simulator (see Kennedy & O'Hagan 2001; Higdon et al. 2004). In many practical applications, particularly in CFD and turbulent flow simulations where the computational cost can be excessively high, the computational budget allows for only a limited number of simulations. In any of these realizations, the adopted values of the tuning parameters $\boldsymbol {t}$ are not necessarily optimal and hence may lead to outputs that systematically differ from the QoIs in reality. For the described calibration problem, the Kennedy & O'Hagan (2001) model reads as

\begin{align} \left.\begin{array}{ll@{}} y(\boldsymbol{x}_i) = {\hat{f}(\boldsymbol{x}_i,\boldsymbol{\theta})} + {\hat{\delta}(\boldsymbol{x}_i)} + \varepsilon_{i} & i=1,2,\ldots,n_1 \\ y(\boldsymbol{x}_{i}) = {\hat{f}(\boldsymbol{x}_i,\boldsymbol{t}_{i})} + \varepsilon_{i} & i=1+n_1,2+n_1, \ldots , n_2+n_1 \end{array}\right\}, \end{align}

where $\hat {\cdot }$ specifies a GP and $n_2$ is the number of simulated data. Note that the samples $\{\boldsymbol {x}_i\}_{i=1+n_1}^{n_2+n_1}$ are not necessarily the same as the samples $\{\boldsymbol {x}_i\}_{i=1}^{n_1}$ at which the observations are made. The index $i$ should be seen as a global index, which implies that a different model is used for each of the two subranges of $i$. Given the $n_1+n_2$ data, the posterior distribution of the calibration parameters $\boldsymbol {\theta }$, along with that of the hyperparameters in the GPs, $\boldsymbol {\beta }$, is estimated. Further details are provided in § 2.3.
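The calibration step can be illustrated with a deliberately small sketch of the Kennedy & O'Hagan model (2.5) for one scalar calibration parameter. Everything specific in it is an assumption made for illustration: a hypothetical simulator $f(x,t)=x+2t$ sampled on a 3 × 3 grid, synthetic field data generated at $\theta=0.5$, exponentiated-quadratic kernels for $\hat f$ and $\hat\delta$, GP hyperparameters held fixed instead of being inferred jointly with $\boldsymbol {\theta }$, a uniform prior on $[0,1]$, and random-walk Metropolis as a simple stand-in for the MCMC sampler. The key point is that field rows enter the emulator with the candidate $\theta$, whereas simulation rows enter with the tuning values actually used in the runs, and only field rows receive the discrepancy covariance.

```python
import math
import random

def sq_exp(a, b, lengths, amp2):
    """Exponentiated-quadratic kernel with per-dimension length scales."""
    d2 = sum((ai - bi) ** 2 / ell ** 2 for ai, bi, ell in zip(a, b, lengths))
    return amp2 * math.exp(-0.5 * d2)

def cholesky(A):
    """Return lower-triangular L with A = L L^T."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def log_likelihood(theta, x_field, y_field, sim_inputs, y_sim, hyp):
    """Gaussian log-likelihood of the stacked [field; simulation] data for a scalar theta."""
    # Emulator inputs: field rows use the candidate theta, simulation rows the run's t.
    z = [(x, theta) for x in x_field] + list(sim_inputs)
    y = list(y_field) + list(y_sim)
    n, n1 = len(z), len(x_field)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            K[i][j] = sq_exp(z[i], z[j], hyp["ell_f"], hyp["amp2_f"])
            if i < n1 and j < n1:  # the discrepancy delta(x) acts on field rows only
                K[i][j] += sq_exp((x_field[i],), (x_field[j],), hyp["ell_d"], hyp["amp2_d"])
        K[i][i] += hyp["noise_var"]
    L = cholesky(K)
    # w = L^{-1} y by forward substitution, so that y^T K^{-1} y = w^T w
    w = []
    for i in range(n):
        w.append((y[i] - sum(L[i][k] * w[k] for k in range(i))) / L[i][i])
    log_det = 2.0 * sum(math.log(L[i][i]) for i in range(n))
    return -0.5 * (sum(wi * wi for wi in w) + log_det + n * math.log(2.0 * math.pi))

def metropolis(log_post, theta0, n_steps, step, rng):
    """Random-walk Metropolis sampler for a scalar parameter."""
    theta, lp = theta0, log_post(theta0)
    chain = []
    for _ in range(n_steps):
        prop = theta + rng.gauss(0.0, step)
        lp_prop = log_post(prop)
        if rng.random() < math.exp(min(0.0, lp_prop - lp)):
            theta, lp = prop, lp_prop
        chain.append(theta)
    return chain

# Hypothetical simulator f(x, t) = x + 2t, run on a 3 x 3 grid of (x, t)
grid = [0.0, 0.5, 1.0]
sim_inputs = [(x, t) for x in grid for t in grid]
y_sim = [x + 2.0 * t for x, t in sim_inputs]
# Synthetic "field" data generated at theta = 0.5 (no true discrepancy, for the demo)
x_field = [0.0, 0.5, 1.0]
y_field = [x + 2.0 * 0.5 for x in x_field]
hyp = {"ell_f": (0.5, 0.5), "amp2_f": 4.0, "ell_d": (0.5,), "amp2_d": 0.01, "noise_var": 1e-4}

def log_post(theta):
    # Uniform prior on [0, 1]; hyperparameters are held fixed in this sketch
    if not 0.0 <= theta <= 1.0:
        return -math.inf
    return log_likelihood(theta, x_field, y_field, sim_inputs, y_sim, hyp)

chain = metropolis(log_post, theta0=0.1, n_steps=2000, step=0.05, rng=random.Random(0))
posterior = chain[500:]
theta_mean = sum(posterior) / len(posterior)
```

With these settings the chain should concentrate near the value used to generate the field data. In the full formulation, the hyperparameters $\boldsymbol {\beta }$ would be sampled together with $\boldsymbol {\theta }$ rather than fixed.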

2.3. The hierarchical MFM with automatic calibration (HC-MFM)

Goh et al. (2013) extended the model (2.5) to an arbitrary number of fidelity levels, which together form a modelling hierarchy for a physical process. As a main feature of the resulting MFM, each fidelity can, in general, have its own calibration parameters and also share some calibration parameters with other fidelities. The basics of an MFM comprising three fidelity levels are explained below; adapting the formulation to any number of fidelities with different combinations of parameters is straightforward. We assume that the fidelity of the models decreases from $M_1$ to $M_3$ and that, in practice, owing to budget limitations, the number of training data decreases as the model fidelity increases. The HC-MFM for three fidelities reads as

\begin{align} \left.\begin{array}{ll@{}} y_{M_1}(\boldsymbol{x}_i) = {\hat{f}(\boldsymbol{x}_i,\boldsymbol{\theta}_3,\boldsymbol{\theta}_s)} + {\hat{g}(\boldsymbol{x}_i,\boldsymbol{\theta}_{2},\boldsymbol{\theta}_{s})}+ {\hat{\delta}(\boldsymbol{x}_i)} + \varepsilon_{1_i} & i=1,2,\ldots,n_1 \\ y_{M_2}(\boldsymbol{x}_{i}) = {\hat{f}(\boldsymbol{x}_i,\boldsymbol{\theta}_{3},\boldsymbol{t}_{s_i})} + {\hat{g}(\boldsymbol{x}_i,\boldsymbol{t}_{2_i},\boldsymbol{t}_{s_i})}+ \varepsilon_{2_i} & {i=1+n_1,2+n_1, \ldots , n_2+n_1} \\ y_{M_3}(\boldsymbol{x}_{i}) = {\hat{f}(\boldsymbol{x}_i,\boldsymbol{t}_{3_i},\boldsymbol{t}_{s_i})}+ \varepsilon_{3_i} & i=1+n_1+n_2, \ldots , n_3+n_1+n_2 \end{array}\right\}, \end{align}


where subscript $s$ denotes the parameters shared between the models, whereas $\boldsymbol {t}_2$ and $\boldsymbol {t}_3$ are the calibration parameters specific to fidelities $M_2$ and $M_3$, respectively. The noises are assumed to have Gaussian distributions with zero mean. At each fidelity level, the associated simulator is created by adding a model discrepancy term to the simulator describing the immediately lower fidelity. Concatenating all training data yields an augmented vector $\boldsymbol {Y}$ of size $n_1+n_2+n_3$, whose covariance matrix can be written in terms of the covariances of $\hat {f}({\cdot })$, $\hat {g}({\cdot })$, $\hat {\delta }({\cdot })$ and the observational noise:

\begin{align} \boldsymbol{\varSigma} &= {\boldsymbol{\varSigma}_{f}} +\begin{bmatrix} {\boldsymbol{\varSigma}_{g}} & \boldsymbol{0}_{(n_1+n_2)\times n_3} \\ \boldsymbol{0}_{n_3\times (n_1+n_2)} & \boldsymbol{0}_{n_3\times n_3} \\ \end{bmatrix} +\begin{bmatrix} {\boldsymbol{\varSigma}_\delta} & \boldsymbol{0}_{n_1\times (n_2+n_3)} \\ \boldsymbol{0}_{(n_2+n_3)\times n_1} & \boldsymbol{0}_{(n_2+n_3)\times (n_2+n_3)} \\ \end{bmatrix} \nonumber\\ & \quad +\begin{bmatrix} \boldsymbol{\varSigma}_{\varepsilon_1} & \boldsymbol{0}_{n_1\times n_2} & \boldsymbol{0}_{n_1\times n_3} \\ \boldsymbol{0}_{n_2\times n_1} & \boldsymbol{\varSigma}_{\varepsilon_2} & \boldsymbol{0}_{n_2\times n_3} \\ \boldsymbol{0}_{n_3\times n_1} & \boldsymbol{0}_{n_3\times n_2} & \boldsymbol{\varSigma}_{\varepsilon_3}\\ \end{bmatrix} . \end{align}

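The zero-padded block structure of (2.7), together with the rule in (2.6) for which inputs enter each kernel, can be sketched as follows. The sketch assumes scalar $x$, $t_2$, $t_3$ and $t_s$, exponentiated-quadratic kernels as stand-ins for $k_f$, $k_g$ and $k_\delta$ (the kernels actually used are discussed below), and one noise variance per fidelity level; the function name, dictionary keys and numerical values are hypothetical.

```python
import math

def sq_exp(a, b, lengths, amp2):
    """Exponentiated-quadratic kernel; an illustrative stand-in for k_f, k_g, k_delta."""
    d2 = sum((ai - bi) ** 2 / ell ** 2 for ai, bi, ell in zip(a, b, lengths))
    return amp2 * math.exp(-0.5 * d2)

def assemble_sigma(data, theta, hyp):
    """Covariance of Y = [y_M1; y_M2; y_M3] with the block structure of (2.7).

    data["M1"]: list of x values (highest fidelity, uses the calibrated theta)
    data["M2"]: list of (x, t2, ts) tuples actually run with fidelity M2
    data["M3"]: list of (x, t3, ts) tuples actually run with fidelity M3
    theta:      calibrated values under keys "t2", "t3", "ts"
    """
    f_in, g_in, d_in, noise = [], [], [], []
    for x in data["M1"]:          # y_M1 = f(x, th3, ths) + g(x, th2, ths) + delta(x) + eps
        f_in.append((x, theta["t3"], theta["ts"]))
        g_in.append((x, theta["t2"], theta["ts"]))
        d_in.append((x,))
        noise.append(hyp["var_eps"][0])
    for x, t2, ts in data["M2"]:  # y_M2 = f(x, th3, ts) + g(x, t2, ts) + eps
        f_in.append((x, theta["t3"], ts))
        g_in.append((x, t2, ts))
        noise.append(hyp["var_eps"][1])
    for x, t3, ts in data["M3"]:  # y_M3 = f(x, t3, ts) + eps
        f_in.append((x, t3, ts))
        noise.append(hyp["var_eps"][2])
    n, n12, n1 = len(f_in), len(g_in), len(d_in)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            S[i][j] = sq_exp(f_in[i], f_in[j], hyp["ell_f"], hyp["amp2_f"])
            if i < n12 and j < n12:   # Sigma_g fills the leading (n1 + n2) block
                S[i][j] += sq_exp(g_in[i], g_in[j], hyp["ell_g"], hyp["amp2_g"])
            if i < n1 and j < n1:     # Sigma_delta fills the leading n1 block
                S[i][j] += sq_exp(d_in[i], d_in[j], hyp["ell_d"], hyp["amp2_d"])
        S[i][i] += noise[i]           # block-diagonal Sigma_eps
    return S

# Usage with hypothetical sample sizes n1 = 2, n2 = 2, n3 = 3
data = {"M1": [0.2, 0.8],
        "M2": [(0.1, 0.3, 0.5), (0.6, 0.4, 0.5)],
        "M3": [(0.0, 0.2, 0.4), (0.5, 0.6, 0.6), (1.0, 0.9, 0.5)]}
theta = {"t2": 0.5, "t3": 0.5, "ts": 0.5}
hyp = {"ell_f": (0.3, 0.5, 0.5), "amp2_f": 1.0,
       "ell_g": (0.3, 0.5, 0.5), "amp2_g": 0.25,
       "ell_d": (0.3,), "amp2_d": 0.04, "var_eps": (1e-4, 1e-4, 1e-4)}
S = assemble_sigma(data, theta, hyp)
```

Because $\hat g$ and $\hat\delta$ contribute only to the leading blocks, the $M_3$ rows are correlated with the other levels solely through $\hat f$, which is how the hierarchy in (2.6) carries information from the lower to the higher fidelities.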

Appropriate kernel functions should be chosen to express the structure of the covariances. Using samples $i$ and $j$ of the inputs and parameters, the associated element in the covariance matrix $\boldsymbol {\varSigma }_f$ is obtained from

where $\lambda _f$ is a hyperparameter and $\bar {d}_{f_{ij}}$ is the scaled Euclidean distance between two samples $i$ and $j$ over the space of $(\boldsymbol {x},\boldsymbol {t}_3,\boldsymbol {t}_s)$,

\begin{align} \bar{d}^2_{f_{ij}} &=\sum_{l=1}^{p_{\boldsymbol{x}}} \frac{(x_{l_i}-x_{l_j})^2}{\ell^2_{f_{x_l}}} + \sum_{l=1}^{p_{{\boldsymbol{t}}_3}} \frac{(t_{3_{l_i}}-t_{3_{l_j}})^2}{\ell^2_{f_{{t_3}_l}}} + \sum_{l=1}^{p_{{\boldsymbol{t}}_s}} \frac{(t_{s_{l_i}}-t_{s_{l_j}})^2}{\ell^2_{f_{{t_s}_l}}}. \end{align}

Here, $p_{\boldsymbol {x}}, p_{{\boldsymbol {t}}_3}$ and $p_{{\boldsymbol {t}}_s}$ specify the dimensions of $\boldsymbol {x}, \boldsymbol {t}_3$ and $\boldsymbol {t}_s$, respectively. Correspondingly, the length scale over the $l$th dimension of each of these spaces is represented by $\ell _{f_{x_l}}, \ell _{f_{{t_3}_l}}$ and $\ell _{f_{{t_s}_l}}$, respectively. These length scales are among the hyperparameters $\boldsymbol {\beta }$ to be learned when constructing the HC-MFM. There are various options for modelling the covariance kernel function $k({\cdot })$ (see e.g. Rasmussen & Williams 2005; Gramacy 2020), among which the exponentiated quadratic and Matérn-5/2 (Matérn 1986) functions are used in the examples in § 3. These two functions read, respectively, as

and

Similar expressions can be derived for $\boldsymbol {\varSigma }_{g_{ij}} = k_{g}(\boldsymbol {x}_i,\boldsymbol {t}_{2_i},\boldsymbol {t}_{s_i},\boldsymbol {x}_j,\boldsymbol {t}_{2_j},\boldsymbol {t}_{s_j})$ and ${\boldsymbol {\varSigma }_{\delta _{ij}} =k_\delta (\boldsymbol {x}_i,\boldsymbol {x}_j)}$ appearing in (2.7), which introduces new hyperparameters associated with these GPs. Note that, given how the training vector $\boldsymbol {Y}$ and the associated inputs are assembled, the correct combinations of training data for the inputs and parameters are used in the kernels. The unknowns to be estimated are the calibration parameters of the different models, $\boldsymbol {\theta }$, and the hyperparameters $\boldsymbol {\beta }$ appearing in the GPs. Following Bayes' rule, the posterior distribution of these parameters given the training data $\boldsymbol {Y}$ can be inferred from (Kennedy & O'Hagan 2001; Higdon et al. 2004; Goh et al. 2013)

\begin{align} {\rm \pi}(\boldsymbol{\theta},\boldsymbol{\beta}|\boldsymbol{Y}) \propto {\rm \pi}(\boldsymbol{Y}|\boldsymbol{\theta},\boldsymbol{\beta})\,{\rm \pi}_0(\boldsymbol{\theta})\,{\rm \pi}_0(\boldsymbol{\beta}), \end{align}

where ${\rm \pi} (\boldsymbol {Y}|\boldsymbol {\theta },\boldsymbol {\beta })$ specifies the likelihood function and ${\rm \pi} _0({\cdot })$ represents a prior distribution. Note that all priors are assumed to be independent. For all GPs in § 3, the prior distribution for $\lambda$ appearing in the covariance matrices such as (2.8) is taken to be half-Cauchy, whereas the length scales $\ell$ in (2.11) and (2.12) are assumed to have gamma distributions. The exact definition of the priors will be provided later for each case in § 3, and table 2 in Appendix B summarizes the formulation of the standard distributions used as priors. The standard deviations of the noise are assumed to be the same for all fidelities, and a half-Cauchy prior is adopted for them. For the calibration parameters $\boldsymbol {\theta }$, Gaussian or uniform priors are considered. In some cases, we may consider a constant mean function for the GPs, with a Gaussian prior on the constant. Consequently, predictions of a trained MFM used to extrapolate in $\boldsymbol {x}$ (outside the range of the training samples) should be treated with caution. To avoid potential inaccuracies, more elaborate mean functions can in general be used when constructing the HC-MFMs (this, however, is not the subject of the present study). Further details about the choice of the kernels and priors for the parameters/hyperparameters, as well as the use of the HC-MFM for extrapolation, can be found in Appendix A.
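For readers who want to experiment with the two kernels named above, a minimal NumPy sketch follows. It assumes the common textbook parameterization (Rasmussen & Williams 2005) with a multiplicative variance factor `amp` and one length scale per input column; the paper's exact forms (2.11) and (2.12), including where $\lambda$ enters, may differ, and all function names are hypothetical.

```python
import numpy as np


def scaled_distance(a, b, length_scales):
    """Pairwise Euclidean distance between rows of a and b, after dividing
    each input column by its own length scale (rows are samples)."""
    a = np.atleast_2d(a) / length_scales
    b = np.atleast_2d(b) / length_scales
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt(np.sum(diff**2, axis=-1))


def exp_quadratic(a, b, length_scales, amp=1.0):
    """Exponentiated quadratic kernel: amp * exp(-r^2 / 2)."""
    r = scaled_distance(a, b, length_scales)
    return amp * np.exp(-0.5 * r**2)


def matern52(a, b, length_scales, amp=1.0):
    """Matern-5/2 kernel: amp * (1 + sqrt(5) r + 5 r^2 / 3) * exp(-sqrt(5) r)."""
    r = scaled_distance(a, b, length_scales)
    s5r = np.sqrt(5.0) * r
    return amp * (1.0 + s5r + s5r**2 / 3.0) * np.exp(-s5r)


# Usage: joint inputs (x, t) stacked as columns, one length scale per column
xt = np.array([[0.0, 6.0], [0.4, 5.9], [1.0, 6.1]])
ls = np.array([0.2, 0.1])
K_eq = exp_quadratic(xt, xt, ls)
K_m52 = matern52(xt, xt, ls)
```

Separate length scales per column let the kernel treat the design input $x$ and a calibration input $t$ on different scales, which is the role the length scales $\ell$ play in the text.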

Given the training data $\boldsymbol {Y}$, a Markov chain Monte Carlo (MCMC) technique can be used to draw samples from the posterior distributions of $\boldsymbol {\theta }$ and $\boldsymbol {\beta }$, and hence construct an HC-MFM. In the present study, the described HC-MFM (2.6) has been implemented in Python using the PyMC3 package (Salvatier, Wiecki & Fonnesbeck 2016) with the no-U-turn sampler (NUTS) MCMC sampling approach (Hoffman & Gelman 2014). As will be shown in § 3.6, MCMC sampling may lead to more accurate results than point estimators.
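The paper relies on PyMC3's NUTS sampler. As a dependency-free illustration of the same idea (sampling the posterior of GP hyperparameters, i.e. likelihood times independent priors), the sketch below uses plain random-walk Metropolis, a simpler stand-in for NUTS and not the authors' method. It treats a single zero-mean GP with an exponentiated quadratic kernel and no calibration parameters; the prior families mirror the text (half-Cauchy, gamma), but their numerical values, the toy data and all function names are hypothetical.

```python
import numpy as np


def log_prior(log_params):
    """Log prior of (signal variance, length scale, noise sd), given in log space.

    Illustrative choices: half-Cauchy(scale=5) on signal variance and noise sd,
    Gamma(shape=1, rate=5) on the length scale. The log-Jacobian of the exp
    transform is included so the chain targets the right density.
    """
    amp, ell, sigma = np.exp(log_params)
    lp = -np.log1p((amp / 5.0) ** 2) - np.log1p((sigma / 5.0) ** 2)
    lp += -5.0 * ell  # Gamma(1, rate=5) reduces to an exponential density
    return lp + np.sum(log_params)


def log_likelihood(log_params, x, y):
    """Zero-mean GP marginal likelihood log N(y | 0, K + sigma^2 I)."""
    amp, ell, sigma = np.exp(log_params)
    d2 = (x[:, None] - x[None, :]) ** 2
    cov = amp * np.exp(-0.5 * d2 / ell**2) + (sigma**2 + 1e-8) * np.eye(len(x))
    try:
        chol = np.linalg.cholesky(cov)
    except np.linalg.LinAlgError:
        return -np.inf  # reject numerically non-positive-definite proposals
    alpha = np.linalg.solve(chol, y)  # alpha @ alpha equals y^T K^{-1} y
    return (-0.5 * alpha @ alpha - np.sum(np.log(np.diag(chol)))
            - 0.5 * len(x) * np.log(2.0 * np.pi))


def metropolis(x, y, n_samples=3000, n_burn=1000, step=0.3, seed=0):
    """Random-walk Metropolis in log-parameter space; burn-in draws are discarded."""
    rng = np.random.default_rng(seed)
    current = np.zeros(3)  # start at signal variance = length scale = noise sd = 1
    current_lp = log_prior(current) + log_likelihood(current, x, y)
    chain, accepted = [], 0
    for i in range(n_burn + n_samples):
        proposal = current + step * rng.standard_normal(3)
        prop_lp = log_prior(proposal) + log_likelihood(proposal, x, y)
        if np.log(rng.uniform()) < prop_lp - current_lp:
            current, current_lp = proposal, prop_lp
            accepted += 1
        if i >= n_burn:
            chain.append(np.exp(current))
    return np.array(chain), accepted / (n_burn + n_samples)


# Toy single-fidelity data (not from the paper)
rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 12)
y = np.sin(6.0 * x) + 0.1 * rng.standard_normal(x.size)
samples, acceptance = metropolis(x, y)
print(samples.mean(axis=0), acceptance)
```

NUTS uses gradients of the log posterior to propose distant states efficiently, which matters for the many hyperparameters of the HC-MFM; random-walk Metropolis is shown only because it fits in a few lines.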

After being constructed, an HC-MFM can be used for predicting the QoI $y$ for any new sample taken from the space of inputs $\boldsymbol {x}$. The accuracy of the predicted QoIs will be assessed by measuring their deviation from the validation data of the highest fidelity ${M_1}$. As detailed in Goh et al. (2013), the joint distribution of the training data $\boldsymbol {Y}$ and a new $y^*$ (associated with a test sample $\boldsymbol {x}^*$), conditioned on $\boldsymbol {\theta },\boldsymbol {\beta }$, is multivariate normal with a covariance matrix of the same structure as $\boldsymbol {\varSigma }$ in (2.7). For any joint sample drawn from the posterior distribution ${\rm \pi} (\boldsymbol {\theta },\boldsymbol {\beta }|\boldsymbol {Y})$, a sample prediction for $y^*$ is made. Repeating this procedure a large number of times yields a sample-based estimate of the posterior of the predictions $y^*$, from which the confidence in the predictions follows directly. Note that at this stage, various UQ analyses can be performed using the HC-MFM as a surrogate of the physical process over $\boldsymbol {x}$.
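The prediction step can be sketched for a single zero-mean GP: for one posterior sample of the hyperparameters, the joint normal distribution of $(\boldsymbol {Y}, y^*)$ is conditioned on $\boldsymbol {Y}$ with the standard Gaussian formulas, and one $y^*$ is drawn; looping over posterior samples gives draws from the posterior predictive. This is a simplified illustration (one fidelity, exponentiated quadratic kernel, no calibration parameters, noise-free latent prediction); the full HC-MFM would use the block covariance of (2.7) instead, and the function names are hypothetical.

```python
import numpy as np


def eq_kernel(a, b, amp, ell):
    """Exponentiated quadratic kernel for 1-D inputs."""
    return amp * np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / ell**2)


def conditional_normal(x_train, y_train, x_test, amp, ell, sigma):
    """Mean and covariance of the latent y* given Y for a zero-mean GP."""
    k_tt = eq_kernel(x_train, x_train, amp, ell) + sigma**2 * np.eye(len(x_train))
    k_st = eq_kernel(x_test, x_train, amp, ell)
    k_ss = eq_kernel(x_test, x_test, amp, ell)
    chol = np.linalg.cholesky(k_tt)
    # alpha = K_tt^{-1} Y via two triangular solves with the Cholesky factor
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    v = np.linalg.solve(chol, k_st.T)
    mean = k_st @ alpha
    cov = k_ss - v.T @ v
    return mean, cov


def posterior_predictive(x_train, y_train, x_test, hyper_samples, seed=0):
    """One draw of y* per posterior sample (amp, ell, sigma)."""
    rng = np.random.default_rng(seed)
    draws = []
    for amp, ell, sigma in hyper_samples:
        mean, cov = conditional_normal(x_train, y_train, x_test, amp, ell, sigma)
        jitter = 1e-9 * np.eye(len(x_test))  # guards against round-off asymmetry
        draws.append(rng.multivariate_normal(mean, cov + jitter))
    return np.array(draws)


# Usage with placeholder data and a placeholder "posterior" of 20 identical samples
x_train = np.array([0.0, 0.4, 0.6, 1.0])
y_train = np.sin(6.0 * x_train)
x_test = np.linspace(0.0, 1.0, 5)
draws = posterior_predictive(x_train, y_train, x_test, [(1.0, 0.3, 1e-3)] * 20)
```

The spread of `draws` across rows combines the Gaussian conditional uncertainty with the variability of the hyperparameter samples, which is why the resulting intervals reflect both sources of uncertainty.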

3. Results and discussion

The HC-MFM described in the previous section is applied to four examples. The first example, in § 3.1, is used to validate the implementation of the MFM, and the next three examples are relevant to fundamental and engineering analysis of wall-bounded turbulent flows.

3.1. An illustrative example

Consider the following analytical model taken from Forrester, Sóbester & Keane (2007) to generate HF and LF samples of the QoI $y$ for input $x\in [0,1]$:

In Forrester et al. (2007), $\theta$ is fixed and equal to $6$, but here it is treated as an uncertain calibration parameter to be estimated during the construction of the MFM. Note that the notation of the general model (2.5) can be adopted for (3.1). For the simulator $\hat {f}(x,t)$ and the model discrepancy $\hat {\delta }(x)$, the covariance matrix in (2.8) is used with the exponentiated quadratic kernel (2.11). The following prior distributions are considered: $\lambda _f, \lambda _\delta \sim \mathcal {HC}(\alpha =5)$, $\ell _{f_x}, \ell _{f_t}, \ell _{\delta _x} \sim \varGamma (\alpha =1,\beta =5)$, $\varepsilon \sim \mathcal {N}(0,\sigma )$ with $\sigma \sim \mathcal {HC}(\alpha =5)$, and $\theta \sim \mathcal {U}[5.8,6.2]$. Here, $\mathcal {HC}, \varGamma, \mathcal {N}$ and $\mathcal {U}$ denote the half-Cauchy, gamma, Gaussian and uniform distributions, respectively (see table 2). The HF training samples are taken at $x=\{0,0.4,0.6,1\}$; therefore, $n_H=4$ is fixed. To investigate the effect of $n_L$, three sets of LF samples of size $10, 15$ and $20$ are considered, generated by Latin hypercube sampling from the admissible space $[0,1]\times [5.8,6.2]$ corresponding to $x$ and $t$ (the uncalibrated instance of $\theta$), respectively.
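The LF designs described above can be produced with a basic Latin hypercube sampler: each axis of $[0,1]\times [5.8,6.2]$ is split into $n_L$ equal strata, one point is drawn uniformly inside each stratum, and the strata are shuffled independently per axis. The paper does not state which LHS implementation was used; the sketch below is a minimal NumPy version with a hypothetical function name and arbitrary seeds (SciPy's `scipy.stats.qmc.LatinHypercube` is an off-the-shelf alternative).

```python
import numpy as np


def latin_hypercube(n, bounds, seed=None):
    """Draw n points in a box with exactly one point per stratum along every axis.

    bounds: sequence of (low, high) pairs, one per dimension.
    """
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)
    dim = len(bounds)
    # Stratum k occupies [k/n, (k+1)/n); place one uniform point inside each
    u = (np.arange(n)[:, None] + rng.uniform(size=(n, dim))) / n
    for j in range(dim):
        u[:, j] = rng.permutation(u[:, j])  # decouple the strata across axes
    low, high = bounds[:, 0], bounds[:, 1]
    return low + u * (high - low)


# LF inputs (x, t) for the three designs n_L = 10, 15, 20; HF locations are fixed
designs = {n_l: latin_hypercube(n_l, [(0.0, 1.0), (5.8, 6.2)], seed=n_l)
           for n_l in (10, 15, 20)}
x_hf = np.array([0.0, 0.4, 0.6, 1.0])  # n_H = 4
```

Compared with plain random sampling, this stratification guarantees that even a small LF set spans the whole range of both $x$ and $t$.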

Using the data, the HC-MFM (2.6) for problem (3.1) is constructed. The first row in figure 2 shows the predicted $y$ with the associated $95\,\%$ confidence interval (CI), along with the training data and the reference true data generated with $\theta =6$. For all $n_L$, the predicted $y$ is closer to the HF data than to the LF data; however, for $n_L=15$ and $20$, the agreement between the mean of the predicted $y$ and the true data is significantly improved. A better validation can be made via the plots in the second row of figure 2, where the predicted $y$ and the true values of $y_H$ at $50$ uniformly spaced test samples for $x\in [0,1]$ are plotted. Clearly, increasing the number of LF samples while keeping $n_H=4$ fixed improves the predictions and reduces the uncertainty. In the third row of figure 2, the posterior densities of $\theta$ are presented. In all cases, a uniform (non-informative) prior distribution over $[5.8,6.2]$ was considered for $\theta$. Only for $n_L=20$ is the resulting posterior density of $\theta$ high near the true value $6$. This confirms that, as explained by Goh et al. (2013), the main capability of the HC-MFM (2.6) lies in making accurate predictions for $y$; accurate distributions for the calibration parameters are obtained only if a sufficient number of training data are available. This is shown here by fixing $n_H$ and increasing $n_L$, which is favourable in practice. It is also noteworthy that if $\theta$ were known and hence treated as a fixed parameter, very accurate predictions for $y$ could already be achieved with $n_L=10$ (not shown here).
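Given a matrix of posterior predictive draws (one row per MCMC sample, one column per test point), the mean prediction, an equal-tailed $95\,\%$ interval and simple validation metrics against true values can be computed as in the following sketch. The function name and the synthetic draws are placeholders for illustration, not results from the paper, and the stand-in truth is not (3.1).

```python
import numpy as np


def summarize_predictions(draws, y_true, level=0.95):
    """Posterior mean, equal-tailed interval, RMSE and empirical coverage.

    draws: array (n_mcmc, n_test) of predictive samples; y_true: array (n_test,).
    """
    tail = 100.0 * (1.0 - level) / 2.0
    mean = draws.mean(axis=0)
    lower, upper = np.percentile(draws, [tail, 100.0 - tail], axis=0)
    rmse = np.sqrt(np.mean((mean - y_true) ** 2))
    coverage = np.mean((y_true >= lower) & (y_true <= upper))  # fraction inside CI
    return mean, lower, upper, rmse, coverage


# Placeholder example with 50 uniformly spaced test points and 10^4 draws
rng = np.random.default_rng(0)
x_test = np.linspace(0.0, 1.0, 50)
y_true = np.sin(6.0 * x_test)  # stand-in for y_H
draws = y_true + 0.05 * rng.standard_normal((10_000, 50))
mean, lower, upper, rmse, coverage = summarize_predictions(draws, y_true)
```

The interval width (`upper - lower`) is the quantity that shrinks as $n_L$ grows in figure 2, while RMSE and coverage summarize how well the mean and the interval match the validation data.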

Figure 2. (a–c) Predicted QoI $y$ by HC-MFM (2.6) along with the training and true data, (d–f) predicted $y$ vs true observations at $50$ test samples of $x\in [0,1]$ with error bars representing $95\,\%$ CI, (g–i) posterior probability density function (PDF) of $\theta$ based on $10^4$ MCMC samples. The $y_H$ and $y_L$ training data are generated from (3.1) using $B=C=10$. The training data include $4$ HF samples combined with (left column) $10$, (middle column) $15$ and (right column) $20$ LF samples. The true data are generated by (3.1) using $\theta =6$.

It should also be noted that the mean of the posterior distribution of the noise standard deviation $\sigma$ is found to be negligible, as expected. This is in fact the case for all other examples in this section.

3.2. Turbulent channel flow

Turbulent channel flow is one of the most canonical wall-bounded turbulent flows. The flow develops between two parallel flat walls a distance $2\delta$ apart, and is periodic in the streamwise and spanwise directions. Channel flow is fully defined by a Reynolds number, for instance the bulk Reynolds number $Re_b=U_b\delta /\nu$, where $U_b$ and $\nu$ are the streamwise bulk velocity and kinematic viscosity, respectively. Among the possible QoIs, here we focus only on the friction velocity $\langle u_\tau \rangle$ as a function of the Reynolds number. This quantity is defined as $\sqrt {\langle \tau _w \rangle /\rho }$, where $\tau _w$ and $\rho$ are the magnitude of the wall-shear stress and the fluid density, respectively, and $\langle {\cdot } \rangle$ represents averaging over time and the periodic directions. Three fidelity levels are considered: DNS ($M_1$), wall-resolved LES (WRLES) ($M_2$) and a reduced-order algebraic model ($M_3$), with fidelity decreasing from the first to the last. We use the DNS data of Iwamoto, Suzuki & Kasagi (2002), Lee & Moser (2015) and Yamamoto & Tsuji (2018).

The WRLES of channel flow have been performed at different Reynolds numbers without any explicit subgrid-scale model using OpenFOAM (Weller et al. 1998), an open-source finite-volume flow solver. Linear interpolation is used to evaluate the face fluxes, and a second-order backward-differencing scheme is used for time integration. The pressure-implicit with splitting of operators (PISO) algorithm is used for pressure–velocity coupling. For further details on the simulation set-up, see Rezaeiravesh & Liefvendahl (2018). The results in that paper show that, for a fixed resolution in the wall-normal direction, varying the grid resolution in the wall-parallel directions can significantly affect the accuracy of the flow QoIs. Therefore, in the context of the HC-MFM, the calibration parameters for WRLES are taken to be ${{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$, the cell dimensions in the streamwise and spanwise directions, respectively, expressed in wall units (${{\rm \Delta} x^+}={\rm \Delta} x u^\circ _\tau /\nu$, where $u^\circ _\tau$ is the reference $u_\tau$ from DNS).
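As a worked example of the wall-unit conversion: for a uniform grid with $N_x$ cells over a streamwise domain length $L_x$, ${{\rm \Delta} x^+} = (L_x/N_x)\,u^\circ _\tau /\nu = (L_x/\delta )\,Re^\circ _\tau /N_x$, where $Re^\circ _\tau = u^\circ _\tau \delta /\nu$ is the reference friction Reynolds number; the spanwise case is analogous. The domain sizes, cell counts and $Re^\circ _\tau$ below are hypothetical values chosen for illustration, not the grids of Rezaeiravesh & Liefvendahl (2018).

```python
import math


def cell_size_plus(length_over_delta, n_cells, re_tau_ref):
    """Uniform cell size in wall units: (L / delta) * Re_tau_ref / N."""
    return length_over_delta * re_tau_ref / n_cells


# Hypothetical grid: L_x = 2*pi*delta with 128 cells, L_z = pi*delta with 96 cells
re_tau_ref = 300.0  # hypothetical reference Re_tau = u_tau * delta / nu from DNS
dx_plus = cell_size_plus(2.0 * math.pi, 128, re_tau_ref)
dz_plus = cell_size_plus(math.pi, 96, re_tau_ref)
print(f"dx+ = {dx_plus:.2f}, dz+ = {dz_plus:.2f}")
```

Because the cell size in wall units scales with $Re^\circ _\tau$, keeping ${{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$ fixed across Reynolds numbers requires the number of cells to grow roughly in proportion to $Re^\circ _\tau$.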

At the lowest fidelity, the following reduced-order algebraic model is considered, which is derived by averaging the streamwise momentum equation for channel flow over time and the periodic directions:

where $\eta$ is the distance from the wall normalized by the channel half-height $\delta$, and $\zeta (\eta )$ is the eddy viscosity $\nu _t$ normalized by $\nu$, i.e. $\zeta (\eta )=\nu _t(\eta )/\nu$. Reynolds & Tiederman (1967) proposed the following closed form for $\zeta (\eta )$:

\begin{align} \zeta(\eta) =\frac{1}{2} \left[1+\frac{{\kappa}^2 Re^2_\tau}{9}(1- (\eta-1)^2)^2 (1+2(\eta-1)^2)^2 \left(1-\exp\left(\frac{-\eta Re_\tau}{A^+}\right) \right)^2 \right]^{1/2} \!\!-\frac{1}{2} , \end{align}

where ${Re}_\tau =\langle u_\tau \rangle \delta /\nu$ is the friction-based Reynolds number, and $\kappa$ and $A^+$ are two modelling parameters. At any ${Re}_b$ (and for given values of $\kappa$ and $A^+$), (3.2) is integrated over $\eta \in [0,1]$ and solved iteratively together with (3.3) to estimate $\langle u_\tau \rangle$. Expressed in the terminology of the MFM (2.6), the channel flow example has the QoI $y=\langle u_\tau \rangle /U_b$, the input $x=Re_b$, and the calibration parameters $\boldsymbol {t}_3=(\kappa,A^+)$ and $\boldsymbol {t}_2=({{\rm \Delta} x^+},{{\rm \Delta} z^+})$. The training data set consists of the following databases. For DNS, $\langle u_\tau \rangle$ is taken from Iwamoto et al. (2002), Lee & Moser (2015) and Yamamoto & Tsuji (2018) at $Re_b=5020$, $6962$, $10\ 000$, $20\ 000$, $125\ 000$ and $200\ 400$. In total, $16$ WRLES samples of $\langle u_\tau \rangle$ are obtained from a design of experiment based on the prior distributions ${{\rm \Delta} x^+}\sim \mathcal {U}[15,85]$ and ${{\rm \Delta} z^+}\sim \mathcal {U}[9.5,22]$ at ${Re}_b=5020$, $6962$, $10\ 000$ and $20\ 000$. Here, we do not consider the observational uncertainty in $\langle u_\tau \rangle$, which could, for instance, be due to finite time averaging in DNS and WRLES, although in general the HC-MFM could take such information into account. The computationally cheap reduced-order model (3.2) is run for $10$ values of ${Re}_b$ in the range $[2000,200\ 200]$. For each ${Re}_b$, $9$ joint samples of $(\kappa,A^+)$ are generated assuming $\kappa \sim \mathcal {U}[0.36,0.43]$ and $A^+\sim \mathcal {U}[26.5,29]$ (note that $\kappa$ is the von Kármán coefficient). For all the GPs in the MFM (2.6), the exponentiated quadratic covariance function (2.11) is used. The prior distributions of the hyperparameters are set as follows: $\lambda _f, \lambda _g \sim \mathcal {HC}(\alpha =5)$, $\lambda _{\delta } \sim \mathcal {HC}(\alpha =3)$, $\ell _{f_x}, \ell _{f_{t_3}},\ell _{g_x}, \ell _{g_{t_2}}, \ell _{\delta _x} \sim \varGamma (\alpha =1,\beta =5)$, and the noise standard deviation $\sigma \sim \mathcal {HC}(\alpha =5)$ (assumed to be the same for all fidelities).
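The iterative solution of the algebraic model can be sketched as follows. Since (3.2) is not reproduced here, the sketch assumes it is the standard time- and plane-averaged streamwise momentum balance written in wall units, $(1+\zeta )\,\mathrm {d}U^+/\mathrm {d}y^+ = 1-\eta$ with $y^+=\eta Re_\tau$ and $U^+=U/\langle u_\tau \rangle$, closed by the bulk constraint $Re_b = Re_\tau \int _0^1 U^+\,\mathrm {d}\eta$; the eddy viscosity follows (3.3). $Re_\tau$ is found by bisection on $\log Re_\tau$, which is our choice of iteration. Function names, the wall-clustered grid and the bracketing interval are assumptions.

```python
import numpy as np


def eddy_viscosity(eta, re_tau, kappa, a_plus):
    """Normalized eddy viscosity zeta = nu_t / nu from (3.3)."""
    s = eta - 1.0  # signed distance from the channel centreline, in units of delta
    damping = 1.0 - np.exp(-eta * re_tau / a_plus)  # van Driest-type damping in y+
    inner = 1.0 + (kappa * re_tau) ** 2 / 9.0 * (1.0 - s**2) ** 2 \
        * (1.0 + 2.0 * s**2) ** 2 * damping**2
    return 0.5 * np.sqrt(inner) - 0.5


def trapezoid_cumulative(f, x):
    """Cumulative trapezoidal integral of f over x, starting at zero."""
    return np.concatenate(([0.0], np.cumsum(0.5 * (f[1:] + f[:-1]) * np.diff(x))))


def bulk_reynolds(re_tau, kappa, a_plus, n=2001):
    """Re_b implied by a given Re_tau under the assumed momentum balance."""
    eta = np.linspace(0.0, 1.0, n) ** 2  # grid clustered towards the wall
    dudeta = re_tau * (1.0 - eta) / (1.0 + eddy_viscosity(eta, re_tau, kappa, a_plus))
    u_plus = trapezoid_cumulative(dudeta, eta)  # mean velocity profile U+(eta)
    ub_plus = trapezoid_cumulative(u_plus, eta)[-1]  # U_b / <u_tau>
    return re_tau * ub_plus


def friction_velocity_ratio(re_b, kappa=0.4, a_plus=27.0, tol=1e-10):
    """Return <u_tau>/U_b by bisecting on log(Re_tau) until Re_b is matched."""
    lo, hi = np.log(10.0), np.log(1.0e5)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if bulk_reynolds(np.exp(mid), kappa, a_plus) < re_b:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    re_tau = np.exp(0.5 * (lo + hi))
    return re_tau / re_b


for re_b in (5020.0, 20000.0, 125000.0):
    print(re_b, friction_velocity_ratio(re_b))
```

Bisection works here because the implied $Re_b$ grows monotonically with $Re_\tau$; in an LF training design, this function would simply be evaluated at each sampled $({Re}_b,\kappa,A^+)$ triple.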

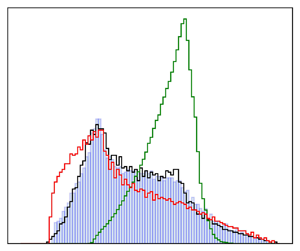

The model is constructed by using the described training data in the HC-MFM (2.6) and running the MCMC chain for $7000$ samples after discarding an initial $2000$ burn-in samples; that is, additional samples are generated at the start of the chain and discarded to avoid any bias introduced by the initialization. According to figure 3(a), the predicted mean of $\langle u_\tau \rangle /U_b$ follows the trend of the DNS data. This approximately holds even at high Reynolds numbers, where the algebraic model has a large systematic error and no WRLES data are available. As expected, the uncertainty in the predictions is high in this range owing to the scarcity of DNS data. Figure 3(b) shows the joint posterior distributions of the calibration parameters $\kappa, A^+, {{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$, along with the histogram of each parameter. As mentioned above, the prior distributions of all of these parameters were assumed to be uniform and mutually independent. However, the resulting posterior densities for $\kappa$ and $A^+$ are not uniform, and the samples of these two parameters are correlated. More interestingly, the peak of the posterior density of $\kappa$ is close to the value $0.4$ that is commonly assumed to be universal across various flows and Reynolds numbers within the turbulence community. On the other hand, the distribution of $A^+$ does not show a clear maximum, which may indicate that the range of admissible values in the prior could be extended. Indeed, the value $A^+=25$, which lies below the prior range $[26.5,29]$, is associated with the van Driest wall damping commonly used in eddy-viscosity models. In contrast, the posteriors of ${{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$ remain close to the uniform priors, and no correlation between their samples is observed. This may be at least partially due to the limited number of WRLES data, which are obtained at only 4 Reynolds numbers. Nevertheless, over this range of Reynolds numbers the posterior prediction of the QoI $\langle u_\tau \rangle$ is very accurate and has the lowest uncertainty, which seems to indicate that the significant dependence of the wall-shear stress on the WRLES resolution did not lead to a bias in the prediction by the HC-MFM. This may in fact be an important aspect for building future wall models.
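The quantities read off figure 3(b), namely marginal summaries of each calibration parameter and the correlation between samples of $\kappa$ and $A^+$, can be extracted from a raw chain as in the sketch below. The chain is a synthetic correlated Gaussian placeholder standing in for the PyMC3 trace, so its numbers carry no physical meaning (including the sign of the correlation); only the $2000$ burn-in and $7000$ retained samples follow the text, and the function name is hypothetical.

```python
import numpy as np


def posterior_summary(chain, names, n_burn):
    """Drop burn-in, then report mean, 95% interval and correlation matrix."""
    kept = chain[n_burn:]
    stats = {}
    for j, name in enumerate(names):
        lo, hi = np.percentile(kept[:, j], [2.5, 97.5])
        stats[name] = (kept[:, j].mean(), lo, hi)
    corr = np.corrcoef(kept, rowvar=False)  # Pearson correlation between parameters
    return stats, corr


# Synthetic placeholder chain: 2000 burn-in + 7000 retained draws of (kappa, A+)
rng = np.random.default_rng(0)
cov = np.array([[0.01**2, 0.6 * 0.01 * 0.5],
                [0.6 * 0.01 * 0.5, 0.5**2]])
chain = rng.multivariate_normal([0.40, 27.5], cov, size=9000)
stats, corr = posterior_summary(chain, ["kappa", "A_plus"], n_burn=2000)
```

A near-zero off-diagonal entry of `corr`, as reported for ${{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$, together with marginals close to the prior, is the usual sign that the data carry little information about those parameters.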

Figure 3. (a) Mean prediction of $\langle u_\tau \rangle /U_b$ and the associated $95\,\%$ CI along with the training data and validation data from DNS of Iwamoto et al. (2002), Lee & Moser (2015) and Yamamoto & Tsuji (2018), (b) diagonal, posterior density of parameters $\kappa, A^+, {{\rm \Delta} x^+}$ and ${{\rm \Delta} z^+}$; off diagonal, contour lines of the joint posterior densities of these parameters. The value of the contour lines increases from the lightest to the darkest colour.

3.3. Polars for the NACA0015 airfoil

In this section, the HC-MFM (2.6) is applied to a set of data for the lift and drag coefficients, $C_L$ and $C_D$, respectively, of a wing with a NACA0015 airfoil profile at Reynolds number $1.6\times 10^6$. The angle of attack (AoA) between the wing and the ambient flow is taken to be the design parameter $x$.

The data consist of the following sources, with their fidelity labels in parentheses: wind-tunnel experiments by Bertagnolio (2008) ($M_1$), detached-eddy simulations (DES) ($M_2$) and two-dimensional RANS ($M_3$), the latter two both by Gilling, Sørensen & Davidson (2009). The simulations of Gilling et al. (2009) were performed with a finite-volume code using a fourth-order central-difference scheme and a second-order accurate dual time-stepping algorithm to integrate the momentum equations. The PISO algorithm was used to enforce pressure–velocity coupling. In that study, the authors investigated the sensitivity of the DES results to the resolved turbulence intensity (TI) of the fluctuations imposed at the inlet boundary and found it to be particularly significant near the stall angle. Therefore, when constructing the MFM, the calibration parameter $t_2$ in fidelity $M_2$ is taken to be the TI.

For the covariance of the Gaussian processes $\hat {f}(x)$ and $\hat {g}(x,t_2)$ in HC-MFM (2.6), the exponentiated quadratic and Matern-5/2 kernel functions (2.11) and (2.12) are used, respectively. The following prior distributions are assumed for the hyperparameters: $\lambda _f , \lambda _g \sim \mathcal {HC}(\alpha =5)$, $\ell _{f_x}\sim \varGamma (\alpha =1,\beta =5)$, $\ell _{g_x},\ell _{g_{t_2}}\sim \varGamma (\alpha =1,\beta =3)$ and, for the noise standard deviation (assumed to be the same for all fidelities), $\sigma \sim \mathcal {HC}(\alpha =5)$. To enable the model to capture large-scale separation, the stall AoA, $x_{stall}$, is included as a new calibration parameter in the MFM. We propose a piecewise kernel function for the covariance of $\hat {\delta }(x)$, with $x_{stall}$ as the merging point. If the kernel functions for AoAs smaller and larger than $x_{stall}$ are denoted by $k_{\delta _1}({\cdot })$ and $k_{\delta _2}({\cdot })$, respectively, then the covariance function for $\hat {\delta }(x)$ may be constructed as

where $\bar {d}_{\delta _{ij}}$ is defined similarly to those in (2.9) and (2.10) but only in $x$, and $\varphi (x)$ is a function that smoothly merges the two covariance functions. In particular, we use the logistic function:

where $\alpha _{stall}$ is a new hyperparameter. The kernel functions $k_{\delta _1}({\cdot })$ and $k_{\delta _2}({\cdot })$ are both modelled by the Matern-5/2 function (2.12). As prior distributions, we assume $\lambda _{\delta _1}\sim \mathcal {HC}(\alpha =3)$, $\lambda _{\delta _2}\sim \mathcal {HC}(\alpha =5)$, $\ell _{\delta _1}\sim \varGamma (\alpha =1,\beta =5)$, $\ell _{\delta _2}\sim \varGamma (\alpha =1,\beta =0.5)$, $\alpha _{stall}\sim \mathcal {HC}(\alpha =2)$ and $x_{stall}\sim \mathcal {N}(14,0.2)$. The prior for the TI is also taken to be Gaussian: ${TI}\sim \mathcal {N}(0.5,0.15)$. These Gaussian priors are selected based on our prior knowledge of the approximate stall AoA and on the discussion of the influence of TI in Gilling et al. (2009).
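The piecewise covariance for $\hat{\delta}(x)$ can be sketched as follows, under several explicit assumptions. The displayed expressions for the merged covariance and the logistic function are not reproduced here, so the sketch assumes the common changepoint form $k_\delta(x,x') = (1-\varphi(x))\,k_{\delta_1}(x,x')\,(1-\varphi(x')) + \varphi(x)\,k_{\delta_2}(x,x')\,\varphi(x')$ with $\varphi(x) = 1/(1+\exp(-\alpha_{stall}(x-x_{stall})))$. It also assumes the textbook Matern-5/2 form for (2.12) with $\bar{d} = |x-x'|/\ell$, and it reads $\mathcal{HC}(\alpha)$ as a half-Cauchy with scale $\alpha$, $\varGamma(\alpha,\beta)$ as shape $\alpha$ and rate $\beta$, and $\mathcal{N}(\mu,s)$ as mean $\mu$ and standard deviation $s$. The conventions of the study may differ, whether $x$ is rescaled before applying the length-scale priors is not stated here, and all function names are hypothetical.

```python
import numpy as np


def matern52(xa, xb, lam, ell):
    """Matern-5/2 kernel in one input (assumed form):
    lam^2 (1 + r + r^2 / 3) exp(-r), with r = sqrt(5) |xa - xb| / ell."""
    r = np.sqrt(5.0) * np.abs(xa[:, None] - xb[None, :]) / ell
    return lam**2 * (1.0 + r + r**2 / 3.0) * np.exp(-r)


def logistic(x, x_stall, alpha_stall):
    """Assumed merging function phi(x) = 1 / (1 + exp(-alpha_stall (x - x_stall))).

    Written via tanh, which is algebraically identical but cannot overflow."""
    return 0.5 * (1.0 + np.tanh(0.5 * alpha_stall * (x - x_stall)))


def changepoint_cov(xa, xb, hp):
    """Assumed piecewise covariance of delta(x), merged smoothly at x_stall."""
    pa = logistic(xa, hp["x_stall"], hp["alpha_stall"])
    pb = logistic(xb, hp["x_stall"], hp["alpha_stall"])
    k1 = matern52(xa, xb, hp["lam1"], hp["ell1"])  # pre-stall kernel k_delta1
    k2 = matern52(xa, xb, hp["lam2"], hp["ell2"])  # post-stall kernel k_delta2
    # Each term is a PSD kernel times a rank-one PSD weight, so the sum is PSD.
    return np.outer(1.0 - pa, 1.0 - pb) * k1 + np.outer(pa, pb) * k2


def sample_prior(rng):
    """One draw of the delta-kernel hyperparameters from the stated priors.

    Assumed conventions: HC(a) = half-Cauchy with scale a,
    Gamma(a, b) = shape a and rate b, N(m, s) = mean m and std dev s."""

    def half_cauchy(scale):
        return scale * abs(rng.standard_cauchy())

    return {
        "lam1": half_cauchy(3.0),
        "lam2": half_cauchy(5.0),
        "ell1": rng.gamma(shape=1.0, scale=1.0 / 5.0),  # rate beta = 5
        "ell2": rng.gamma(shape=1.0, scale=1.0 / 0.5),  # rate beta = 0.5
        "alpha_stall": half_cauchy(2.0),
        "x_stall": rng.normal(14.0, 0.2),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    aoa = np.linspace(0.0, 20.0, 41)  # AoA in degrees (no rescaling assumed)
    K = changepoint_cov(aoa, aoa, sample_prior(rng))
    print(K.shape, np.linalg.eigvalsh(K).min())
```

Far below $x_{stall}$ the weights reduce the covariance to $k_{\delta_1}$, and far above it they reduce it to $k_{\delta_2}$. The cross-covariance between a pre-stall and a post-stall AoA vanishes, which lets $\hat{\delta}(x)$ behave differently on the two sides of stall. The sharpness of that transition is controlled by $\alpha_{stall}$.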

The admissible range of $x={AoA}$ is assumed to be $[0^\circ,20^\circ ]$, over which the experimental and RANS data (Bertagnolio 2008; Gilling et al. 2009) are available. The HF training data are taken to be a subset of size $7$ of the experimental data of Bertagnolio (2008); the rest of the experimental data are used to validate the predictions of the MFM. To examine the capability of the MFM in a more challenging situation, the HF training samples are explicitly selected to exclude the range of AoAs where stall occurs. The DES data of Gilling et al. (2009) are available at $7$ AoAs in $[8^\circ,19^\circ ]$ and for $5$ different values of TI. Employing these training data in the HC-MFM (2.6) and drawing $10^4$ MCMC samples, after discarding an additional $5000$ initial samples as burn-in, yields the predictions for $C_L$ and $C_D$ shown in figure 4(a,c). The expected value of the predictions follows a trend similar to that of the experimental validation data of Bertagnolio (2008) and does not drift towards either the physically invalid RANS data or the scattered DES data at ${AoA}\gtrsim 10^\circ$. A more detailed comparison is made through scatter plots of the MFM predictions against the validation data in figure 4(b,d). For both $C_L$ and $C_D$, the agreement between the predicted mean values and the validation data is excellent at lower AoAs (before stall) and very good at most higher AoAs, even near and after stall. Due to the scarcity of the HF training data and the systematic errors in the RANS and DES data, the error bars of the predicted values can be relatively large, as is most evident for $C_D$ in figure 4(d).
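The post-processing behind the mean predictions, the $95\,\%$ CIs and the scatter-plot comparison can be sketched as below. It assumes the MCMC output has already been turned into posterior-predictive draws of the QoI at the validation AoAs. The array layout, the function names and the use of an equal-tailed percentile interval are our assumptions, and the study may construct its CIs differently (for instance from a predictive mean and variance). The first `n_burn` draws are discarded as burn-in, mirroring the $5000$ discarded samples described above.

```python
import numpy as np


def summarize_posterior(draws, n_burn):
    """Drop the first n_burn draws, then return the mean and the 95 % equal-tailed
    interval (2.5th and 97.5th percentiles) at each input location.

    draws: array of shape (n_draws, n_locations), e.g. hypothetical
    posterior-predictive samples of C_L at the validation AoAs."""
    kept = draws[n_burn:]
    mean = kept.mean(axis=0)
    lower, upper = np.percentile(kept, [2.5, 97.5], axis=0)
    return mean, lower, upper


def validation_metrics(mean, lower, upper, y_val):
    """RMSE of the mean prediction against validation data (the vertical
    deviation from the diagonal of a scatter plot) and the fraction of
    validation points that fall inside the CI."""
    rmse = np.sqrt(np.mean((mean - y_val) ** 2))
    coverage = np.mean((y_val >= lower) & (y_val <= upper))
    return rmse, coverage


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in: 5000 burn-in + 10^4 retained draws at 3 locations.
    y_val = np.array([0.6, 1.0, 1.2])  # hypothetical validation values
    draws = y_val + 0.05 * rng.standard_normal((15_000, 3))
    mean, lower, upper = summarize_posterior(draws, n_burn=5000)
    print(validation_metrics(mean, lower, upper, y_val))
```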

Figure 4. (a) Lift coefficient $C_L$ and (c) drag coefficient $C_D$ plotted against the AoA: the HC-MFM (2.6) is trained on the experimental data of Bertagnolio (2008) (yellow circles), as well as the DES (squares) and RANS (crosses) data by Gilling et al. (2009). The DES were performed with the resolved turbulence intensities ${TI}=0\,\%,0.1\,\%,0.5\,\%,1\,{\%}$ and $2\,{\%}$ at the inlet. The validation data (red triangles) are also taken from the experiments of Bertagnolio (2008). The mean prediction by the HC-MFM (2.6) is represented by the solid line along with the associated $95\,{\%}$ CI (shaded area). Scatter plots of (b) $C_L$ and (d) $C_D$ predictions by the HC-MFM (vertical axis) against the validation data (horizontal axis). The red straight line is the diagonal and is provided to evaluate the accuracy of the HC-MFM predictions: the hollow markers represent the mean posterior predictions by the HC-MFM at the AoAs for which validation data are available, and the closer they lie to the diagonal, the more accurate the predictions. Each mean prediction has an error bar representing the associated $95\,{\%}$ CI.

Figure 5 shows the posterior densities of the different parameters appearing in the MFMs constructed for $C_L$ and $C_D$. As expected, the distribution of the kernels’ hyperparameters varies between the two QoIs. More importantly, however, the posterior distributions of