1. Introduction

A core principle of creating a scientific evidence base is that results can be replicated in independent experiments, in clinical trials and in other well-designed studies of interventions that aim to improve health outcomes. It is also important that interventions are described in enough detail for them to be implemented in practice when shown to be effective. For an evaluation of a drug, this standardisation of the intervention is achieved through a formal quality control process in production and release and is documented in an investigator’s brochure. Non-pharmacological interventions cannot be standardised in the same way with chemical descriptors, and as they become increasingly complex it has been recognised that we need to improve the way in which they are developed, specified and quality controlled. The Medical Research Council guidance on complex intervention development and evaluation [Reference Moore, Audrey, Barker, Bond, Bonell, Hardeman, Moore, O’Cathain, Tinati, Wight and Baird1] highlights the importance of developing interventions with a strong theoretical underpinning, and of modelling potential processes and outcomes. This has led to the increased use of logic models, borrowed from programme theory [2], which are frameworks used to conceptualise the components of a complex intervention and the pathways by which it achieves its outcomes. It has also led to the development of the TIDieR (Template for Intervention Description and Replication) checklist, which summarises the key items needed when reporting clinical trials and other well-designed evaluations of complex interventions so that findings can be replicated or built on reliably [Reference Hoffmann, Glasziou, Boutron, Milne, Perera, Moher, Altman, Barbour, Macdonald, Johnston, Lamb, Dixon-Woods, McCulloch, Wyatt, Chan and Michie3].

The TIDieR checklist summarises 12 items which should be reported under the six domains of What, Why, When, How, Where and Who. These draw out a detailed description of What is done as part of the intervention (including materials and process), Why it is done (including underlying theory), When (and how often and how much), How it is done (including mode of delivery), Where (location and infrastructure) and Who (who delivers the components, what is their skill set and training). Other items also include the degree to which all of the above can be tailored for an individual setting or patient, and intended and actual quality of delivery targets (fidelity). Whilst the TIDieR checklist is intended to improve the reporting of complex interventions, it can also be utilised to explore potential variation in intervention definition and delivery (i.e. between participants, or across settings), which may otherwise be insufficiently captured. Understanding areas of commonality and difference should contribute to the discussion and debate as to what an intervention is, how it should be defined and how much adaptation or variation is appropriate.

Neurofeedback describes a group of technologies that involve real-time processing of brain signals to provide feedback to participants and train them in the self-regulation of the targeted brain signal [Reference Linden4]. Neurofeedback with electroencephalogram (EEG) signals has a long tradition in psychiatric research and an established group of clinical practitioners, although the evidence for even its most popular application, the treatment of attention deficit/hyperactivity disorder (ADHD), is mixed [Reference Holtmann, Sonuga-Barke, Cortese and Brandeis5]. A meta-analysis found significant effects of EEG-neurofeedback on both parent- and teacher-rated assessments [Reference Micoulaud-Franchi, Geoffroy, Fond, Lopez, Bioulac and Philip6], but a recent RCT found no benefit for real compared to sham EEG-neurofeedback on self-reported ADHD symptoms [Reference Schönenberg, Wiedemann, Schneidt, Scheeff, Logemann and Keune7].

Neurofeedback with functional magnetic resonance imaging (fMRI) signals is a more recent field of technological development [Reference Stoeckel, Garrison, Ghosh, Wighton, Hanlon and Gilman8], but has recently seen a marked increase in clinical interest, particularly from psychiatry [Reference Fovet, Jardri and Linden9]. A search on clinicaltrials.gov, a widely used trials registration database, conducted on 22 August 2017 with the search terms “fMRI” and “neurofeedback” yielded 33 trials using fMRI-based neurofeedback, most of them ongoing, in psychiatric and neurological diseases and chronic pain. These trials are generally rather small and represent the early stages of treatment development. They are also characterised by considerable heterogeneity in terms of the clinical populations studied, target areas and systems in the brain, dosage (how many training sessions), duration, outcome measures and control conditions. Another indicator of increasing work in this area is the number of relevant publications found with the search terms “fMRI” and “neurofeedback”: 15 papers in 2011 and 43 in 2016.

Neurofeedback using fMRI is a multicomponent intervention that should be considered a complex intervention. As such, best practice would be to use appropriate reporting standards already in place for such interventions. Here, we report on the first application of a TIDieR checklist to neurofeedback. Minor modifications were made to TIDieR, to include additional items that captured the specific requirements of neurofeedback (for example, specification of targeted brain signals) and its application to document the growing field of fMRI-based neurofeedback.

A field like neurofeedback, in which presently most trials have relatively low numbers of patients (in the low teens, in the case of most fMRI-neurofeedback trials) and which is applied across a wide range of psychiatric and neurological diseases, is particularly dependent on standardised intervention delivery and reporting in order to minimise inter-trial and inter-site variability and enable the meta-analyses that will be crucial to provide reliable evidence on its efficacy. As a first step it is important to establish where commonalities lie within this research, which was one of the aims of the present study. We also wanted to highlight areas for discussion and provide a basis for recommendations for harmonisation and standardisation.

2. Method

The standard 12-item TIDieR checklist (Appendix A) was reviewed by the authors to establish whether it might be applicable to neurofeedback (NF) research studies. It was agreed that wording in the checklist needed to be refined to make it more specific to NF interventions. After several rounds of discussion and review, consensus was reached by the authors that the main TIDieR checklist domains (What, Why, When, How, Where and Who) would be applicable but with some additional clarifications (Table 1). One such clarification related to control conditions/groups (Heading 2). The authors of the TIDieR checklist [Reference Hoffmann, Glasziou, Boutron, Milne, Perera, Moher, Altman, Barbour, Macdonald, Johnston, Lamb, Dixon-Woods, McCulloch, Wyatt, Chan and Michie3] recommend that the checklist is completed separately for control conditions; however, including them in our adapted NF-specific checklist could help in understanding what responders consider effective and ‘inert’ aspects of the intervention.

Once consensus on the content of the checklist was reached, it was laid out as a web-based survey which could then be sent to the international NF research community. The Bristol Online Survey (BOS) tool was well suited to this small-scale piece of online research as it allowed the authors to design a dedicated webpage where the survey could be completed. All questions in the survey were designed to elicit descriptive responses.

A link to the unique BOS webpage was sent to 14 partners working on BRAINTRAIN collaborations at 10 different institutions. The EU funded BRAINTRAIN collaboration includes seven proof of principle early studies in different clinical populations [Reference Cox, Subramanian, Linden, Lührs, McNamara and Playle10]. The main objective of the collaboration is to ‘improve and adapt methods of real-time fMRI-NF for clinical use, including the combination with electroencephalography (EEG) and the development of standardised procedures for the mapping of brain networks that can be targeted with neurofeedback’ [11]. In addition, five other researchers known to be carrying out work in this area were also sent the link along with those subscribed to an international mailing list used by the fMRI-neurofeedback community (rtFIN@utlists.utexas.edu). The link was available from the 30th June 2017 until the 14th August 2017 (45 days). Once closed, survey responses were extracted and examined for commonalities by the authors.

3. Results

There were 16 responses to the survey (Appendix B). Responders work in the clinical areas of depression (x4), obsessive compulsive disorder, alcohol dependence (x2), chronic pain (x2), Huntington’s disease, eating disorders, schizophrenia, ADHD (x2), post-traumatic stress disorder (PTSD) and Borderline Personality Disorder (BPD). 14 responders reported on fMRI-protocols, one on an MEG (magnetoencephalography) based protocol, and one group combines fMRI and EEG in their neurofeedback delivery. All responses have been summarised under heading titles.

3.1. Heading 1: Materials

These questions were designed to identify whether common materials, software and equipment are used among researchers. The responses to ‘what materials do you use as part of the intervention?’ were very varied and perhaps the question was not interpreted as intended. Some responses were to list the outcome measures provided to participants. The aim of the question was to establish how participants as well as those delivering interventions are provided with enough information to be able to carry out intervention tasks. Responses indicated that the majority relied on the study protocol rather than detailed user manuals for details of how to carry out the intervention. Only three responders explicitly reported use of manuals or standard operating procedures developed for delivery of the intervention.

Table 1 NF checklist survey questions sent to the NF research community using the BOS tool.

Responses to the questions on software and interface were clearer, and it was apparent that these could be answered succinctly by researchers.

3.2. Heading 2: Procedures

As described earlier, the authors wanted to ensure that not only intervention procedures could be detailed, but also those in any control conditions, the exact nature of which is rarely described fully. Groups described their means of identifying target brain areas, systems for delivering feedback, neurofeedback protocol (timing of regulation and rest/control blocks) and some of the strategies provided where applicable.

Feedback was always visual and delivered in one of three ways, using an analogue representation (e.g., thermometer) of activation levels, or a change of stimulus properties (e.g., size of an alcohol-related picture) based on activation level, or a combination of a disease-relevant visual cue (e.g., alcohol- or food-related picture) and an analogue representation. Thus participants either have to regulate their brain activity whilst they are only watching the changing feedback signal, or they have to regulate their brain activity whilst watching a disease-relevant cue, and feedback is provided either directly through this cue (changing size) or with an additional signal appearing next to the cue.
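As an illustration only, the analogue and stimulus-based feedback displays described above could be driven by a mapping like the one sketched below. The function names, the 0 to 1% signal-change range, the ten thermometer levels, the picture scale limits and the direction of the mapping (higher activation gives a larger picture) are all assumptions made for this sketch; responders did not report these details.

```python
import math


def clamp_fraction(signal_change, max_change=1.0):
    """Express a signal change as a fraction of max_change, clipped to [0, 1].

    max_change is a hypothetical ceiling (e.g. 1% signal change).
    """
    if max_change <= 0:
        raise ValueError("max_change must be positive")
    return min(1.0, max(0.0, signal_change / max_change))


def thermometer_level(signal_change, max_change=1.0, n_levels=10):
    """Analogue display: number of thermometer bars to fill (0..n_levels)."""
    fraction = clamp_fraction(signal_change, max_change)
    return math.floor(fraction * n_levels + 0.5)  # round half up


def picture_scale(signal_change, max_change=1.0, min_scale=0.5, max_scale=1.5):
    """Stimulus-based display: size factor applied to a cue picture."""
    fraction = clamp_fraction(signal_change, max_change)
    return min_scale + fraction * (max_scale - min_scale)
```

A combined display, as used by some groups, would simply show the cue picture at a fixed size and draw the thermometer next to it.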

One group working with adolescents reported using a token system, under which participants’ actual financial reward depended on the number of successful neurofeedback trials.

Use of control conditions varied according to study design and commonly included sham treatment groups, upregulation of a different target area, or treatment as usual, but also psychological interventions not involving scanning. Definitions of ‘placebo’ were mostly described as ‘sham feedback’ or ‘active control neurofeedback of another region’. Two ways of implementing sham feedback were described, through use of a random activation time course or through yoked feedback which uses the signal from another participant.
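The two sham approaches can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, feedback values are taken to lie between 0 and 1, and the random time course is drawn from a uniform distribution, which is one of several possible choices and was not specified by responders.

```python
import random


def random_sham(n_timepoints, seed=None, low=0.0, high=1.0):
    """Sham feedback from a random activation time course.

    Assumption: values are drawn independently and uniformly in [low, high].
    """
    rng = random.Random(seed)
    return [rng.uniform(low, high) for _ in range(n_timepoints)]


def yoked_sham(donor_timecourse, n_timepoints):
    """Sham feedback that replays the signal recorded from another participant."""
    if len(donor_timecourse) < n_timepoints:
        raise ValueError("donor time course is shorter than the run")
    return list(donor_timecourse[:n_timepoints])
```

In both cases the displayed signal is unrelated to the participant's own brain activity, while the visual experience of the session is broadly preserved.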

3.3. Heading 3: Provision and training

All responses show that the intervention is typically delivered by either post-graduate (Masters or Doctoral students) or post-Doctoral researchers. In line with this, those delivering the intervention will have had considerable academic and practical experience of using appropriate technologies and perhaps relatively little clinical experience. Although perhaps not entirely relevant to descriptions of interventions, none of the responders listed having had specific training in clinical trials.

The use of standard operating procedures (SOP) was reported. SOPs were being used to supplement experience and to specify exact working practices for using the scanning equipment.

Quality assurance (QA) and supervision tended to be provided by Principal investigators and senior members of the research team. Areas covered by QA and supervision included scanning procedures, participant handling and data quality which covered mainly the handling of imaging rather than psychometric data. The frequency of QA/supervision varied from session by session feedback to monthly observations. A couple of researchers reported that no QA or supervision was provided or that it was very informal.

3.4. Heading 4: How

Targeted areas of the brain were well described (incorporating information from question 4). The groups used either structurally or functionally defined regions of interest. The definition of structural areas was through anatomical labelling (e.g., amygdala, ventral striatum) whereas functionally defined areas were identified through a preceding localiser scan employing fMRI during exposure to stimuli relevant to the targeted neuropsychological process, for example contrasting alcohol cues vs. neutral visual stimuli or pain-catastrophising vs. neutral statements. Of the groups using structurally defined target areas four reported targeting the amygdala (albeit varying between uni- and bilateral localisation) but otherwise there was no overlap in structural or functional localisers. Studies also varied in whether they requested participants to up- or downregulate activity in the target areas, or whether they used correlation coefficients or other measures of functional relatedness/connectivity as target signals.
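Where a correlation coefficient is used as the target signal, the feedback value is derived from the time courses of two regions rather than from the activation level of one. The sketch below computes a Pearson correlation over a window of samples. The function name and the use of a plain Pearson coefficient are assumptions for illustration; responders did not specify how their connectivity measures were computed.

```python
import math


def pearson_r(roi_a, roi_b):
    """Pearson correlation between two equally long ROI time courses."""
    if len(roi_a) != len(roi_b) or len(roi_a) < 2:
        raise ValueError("need two time courses of equal length (>= 2 samples)")
    n = len(roi_a)
    mean_a = sum(roi_a) / n
    mean_b = sum(roi_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(roi_a, roi_b))
    var_a = sum((a - mean_a) ** 2 for a in roi_a)
    var_b = sum((b - mean_b) ** 2 for b in roi_b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant time course")
    return cov / math.sqrt(var_a * var_b)
```

The resulting value between -1 and 1 could then be presented to the participant in the same way as an activation level.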

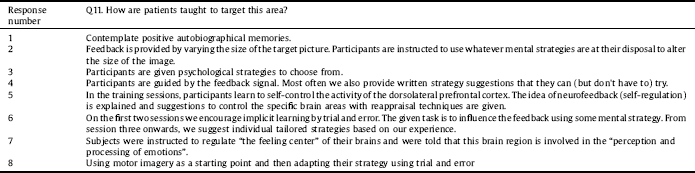

In response to whether patients were taught to target specific areas of the brain, participants were mainly encouraged to identify their own strategies through learning phases. Also adducing information from question 4, eight out of 16 responders reported providing instructions or guidance (or at least some information about the target area from which they could infer useful strategies) to participants as summarised in Table 2.

All responders reported using post treatment questionnaires and interviews to allow participants to identify the strategies they had used during the intervention.

3.5. Heading 5: Where

Interventions were delivered in research facilities located in either universities or academic hospital research centres. Specialist scanning facilities and clinic spaces were commonly listed as venues.

3.6. Heading 6: When and how

Responders were able to report the specific number of runs and sessions used in the intervention. All interventions were fairly brief, with little variation across them in terms of length. Thirteen groups reported running up to five sessions and just three groups reported running between six and 17 sessions. Feedback runs were reported to last between one and 10 min. Sessions varied little in length and averaged about an hour. The period of time over which sessions were spread also varied, and averaged 2 weeks for all sessions.

The majority of researchers (nine of 14 responses) reported that they do not incorporate homework elements into their studies. Five confirmed that while homework was not a formal task, they encouraged participants to apply their training strategies in real life conditions.

3.7. Heading 7: Tailoring

Nine responders reported elements of tailoring to individual patients. These elements included individual definition of target areas through functional localisers, adjustment of difficulty to participants’ self-regulation success rates (shaping) and use of visual stimuli that were adapted to the patient’s clinical situation (e.g. alcohol cues reflecting their drinks preferences).
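Shaping, in the sense used above, can be illustrated by a simple rule that raises or lowers the regulation threshold between runs according to the participant's recent success rate. The function name, the target success band of 50 to 80%, the step size and the minimum threshold are hypothetical values chosen for this sketch and do not come from the survey responses.

```python
def adjust_threshold(threshold, success_rate,
                     target_low=0.5, target_high=0.8,
                     step=0.1, min_threshold=0.1):
    """Return the threshold for the next run (shaping).

    success_rate is the proportion of successful trials in the last run.
    All default values are illustrative assumptions.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    if success_rate > target_high:
        return threshold + step  # task too easy: make it harder
    if success_rate < target_low:
        return max(min_threshold, threshold - step)  # too hard: ease off
    return threshold  # within the target band: leave unchanged
```

A rule of this kind keeps the reward experience roughly comparable across participants with different self-regulation ability.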

3.8. Heading 8: Fidelity

Of the 15 responses to questions under this heading, five researchers reported that they do not measure adherence or fidelity, 10 reported that they do. Distinction was not made between adherence and fidelity.

Six researchers reported using questionnaires to measure adherence or fidelity; four used visual confirmation that participants were completing tasks as desired and two reported that attendance rates and homework adherence were monitored. Average dropout rates were listed in percentages from 0% to 30%.

4. Discussion

The responses to the TIDieR checklist survey provide encouraging insight into the feasibility of mapping neuroimaging interventions to a structured framework for reporting purposes. Regardless of the considerable variability of design components, all studies could be described in standard terms of dose/duration, targeted areas/signals, and psychological strategies and learning models. In addition to more general questions that capture parameters across complex interventions (e.g. training status of the person delivering the intervention; number of sessions) we included several neurofeedback-specific questions (2, 3, 6, 10–12) that allowed investigators across signal modalities (mainly fMRI but also EEG and MEG) to capture the neurophysiological principles of their procedures. Although not included in the survey as a specific question, it was also possible for researchers to add detail describing the diagnostic groups they work with. This information is useful to capture and could be made explicit in any future use of the checklist. Similar NF interventions could potentially be applied to different diagnostic groups (e.g. an alcohol cue paradigm could be modified to address eating disorders, pathological gambling, drug addictions etc.).

Some interesting topics were highlighted by the survey. It is notoriously difficult to design “placebo” conditions for complex interventions. Because it is impossible for these conditions to be psychologically inert, one should probably call them “control” rather than “placebo” conditions. In the survey responses, comparators included protocols of sham feedback, upregulation of a different target area, a psychological intervention not involving scanning, and treatment as usual (TAU). All of these have been used in published trials and protocols: yoked feedback [Reference Kirsch, Gruber, Ruf, Kiefer and Kirsch12], a target area in the parietal lobe as control for amygdala neurofeedback [Reference Young, Siegle, Zotev, Phillips, Misaki and Yuan13], other interventions matched for time/intensity [Reference Linden, Habes, Johnston, Linden, Tatineni and Subramanian14, Reference Subramanian, Busse Morris, Brosnan, Turner, Morris and Linden15], and TAU [Reference Cox, Subramanian, Linden, Lührs, McNamara and Playle10]. They all have advantages and disadvantages, and the variability in trial designs reflects the experience that there is no ideal control condition for neurofeedback. Comparing a neurofeedback intervention with TAU is useful if the aim is to determine whether any additional clinical benefits can be expected and whether further, larger scale effectiveness trials are justified. However, this comparison does not differentiate between the many potential mechanisms that can lead to positive effects of neurofeedback, which include the experience of the high-technology environment, the time and effort of researchers, the experience of self-control/self-efficacy and the experience of reward, in addition to any direct physiological effects of the self-regulation. Non-scanning intervention controls match for non-specific investigator effects and general training effects, but not for any of the NF-specific components.
A neurofeedback intervention targeting a different area, which is not obviously involved in the neuropsychological process supposed to underlie the expected therapeutic benefit, is probably the tightest experimental control in current use, but has its own challenges [Reference Alegria, Wulff, Brinson, Barker, Norman and Brandeis16, Reference Young, Siegle, Zotev, Phillips, Misaki and Yuan17]. It may be too conservative if it turns out that the targeted area is, in fact, involved in the process (for example a control area in sensorimotor cortex in a depression trial may still be relevant to emotion processing), and it will be challenging to adjust the target and control area for self-regulation difficulty (which is needed in order to match the interventions for reward experience). Finally sham feedback (yoked feedback from another participant or random feedback) controls for training effort and the neurofeedback environment but differs from the real feedback in terms of the experience of self-efficacy and can potentially frustrate participants by providing low reward levels and/or a false sense of control [Reference Sulzer, Haller, Scharnowski, Weiskopf, Birbaumer and Blefari18].

Table 2 Methods of targeting brain areas.

Although this information was not specifically requested as part of the BOS, the authors would like to invite the community to think about including the rationale for their intervention design within study protocols. This would include being more explicit about the reasons behind the length and dose of any given intervention as well as details of any theories or mechanisms underlying the choice of brain regions being targeted and interfaces being used. Researchers may also want to think about the use of intervention handbooks and methods of standardising instructions for delivery of interventions, for participants and the researchers themselves, but also to enable replication by other research teams. This might also include formal ways of issuing homework where it is set. Using such tools could aid further understanding of intervention fidelity, that is to say whether or not the content of the intervention was delivered and received as it should be. This may be something different to adherence to the study protocol.

Our findings highlight the appropriateness and suitability of using a checklist based on the TIDieR to report, evaluate and design neurofeedback interventions. They also document a considerable variability in the neuropsychological and neurophysiological processes targeted with neurofeedback, in the ways in which feedback is delivered, in the amount of instructions provided and individual tailoring and in the dosage and duration of the intervention. This is to be expected in an emerging field of therapeutic research but highlights the need for standardisation and harmonisation of neurofeedback interventions. Such a focus on definition and clear reporting will also potentially increase the speed of development of this intervention as the field moves on towards larger and definitive clinical trials.

Funding

BRAINTRAIN is funded by the European Commission 7th Framework Programme for Research, Technological Development and Demonstration under the Grant Agreement n° 602186. The Centre for Trials Research is funded by Health and Care Research Wales.

Disclosure of interests

There are no conflicts of interest.

Appendix A. TIDieR Checklist

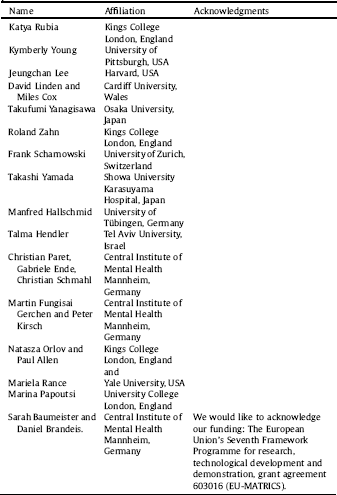

Appendix B. Survey contributors

Those who contributed to the BOS agreed to provide their names so that we could acknowledge their contribution to this piece of work.
