1. Introduction

We stabilize the wake behind a fluidic pinball using a hierarchy of model-free self-learning control methods, ranging from a one-parameter study of open-loop control to gradient-enriched machine learning feedback control. Flow control is at the heart of many engineering applications. Transport alone benefits from flow control via drag reduction of vehicles (Choi, Jeon & Kim 2008), lift increase of wings (Semaan et al. 2016), mixing control for more efficient combustion (Dowling & Morgans 2005) and noise reduction (Jordan & Colonius 2013).

The control logic is a critical component for improving performance once the actuators and sensors have been deployed. The hardware is typically chosen on the basis of engineering experience (Cattafesta & Sheplak 2011), whereas the control law may be designed with a rich arsenal of mathematical methods. Control theory offers powerful methods for control design, with considerable success for the model-based stabilization of low-Reynolds-number flows or of simple first- and second-order dynamics (Rowley & Williams 2006). Transport-related engineering applications operate at high Reynolds numbers and, thus, involve turbulent flows. So far, turbulence has eluded most attempts at model-based control, apart from a few simple exceptions (Brunton & Noack 2015). These exceptions relate to first- and second-order dynamics, e.g. the quasi-steady response to quasi-steady actuation (Pfeiffer & King 2012), opposition control near walls (Choi, Moin & Kim 1994; Fukagata & Nobuhide 2003), stabilizing phasor control of oscillations (Pastoor et al. 2008) and two-frequency crosstalk (Glezer, Amitay & Honohan 2005; Luchtenburg et al. 2009). In general, control design is challenged by the high dimensionality of the dynamics, by nonlinearity with many frequency crosstalk mechanisms and by the large time delay between actuation and sensing.

Hence, most closed-loop control studies of turbulence resort to a model-free approach. A simple example is extremum seeking (Gelbert et al. 2012) for the online tuning of one or a few actuation parameters, such as the amplitude and frequency of periodic actuation. More complex examples involve high-dimensional parameter optimization with machine learning methods, such as evolutionary strategies (Koumoutsakos, Freund & Parekh 2001) and genetic algorithms (Benard et al. 2016). Even regression problems for nonlinear feedback laws have been solved by genetic programming (Ren, Hu & Tang 2020) and reinforcement learning (Rabault et al. 2019).

Genetic programming control (GPC) was pioneered by Dracopoulos (1997) more than 20 years ago and has proven particularly successful for nonlinear feedback turbulence control in experiments. Examples include drag reduction of the Ahmed body (Li et al. 2018) and of the same obstacle under yaw (Li et al. 2019), mixing layer control (Parezanović et al. 2016), separation control of a turbulent boundary layer (Debien et al. 2016), recirculation zone reduction behind a backward-facing step (Gautier et al. 2015) and jet mixing enhancement (Zhou et al. 2020), to name just a few. Genetic programming control has consistently outperformed existing optimized control approaches, often by exploiting unexpected frequency crosstalk mechanisms (Noack 2019). It has a powerful capability to discover new mechanisms (exploration) and to populate the best minima (exploitation). Yet, the exploitation is inefficient, leading to increasingly redundant testing of similar control laws and poor convergence to the minimum. This challenge is well known and is addressed in this study.

As a benchmark control problem, we choose the fluidic pinball, the flow around three parallel cylinders placed one radius apart from each other (Noack et al. 2016; Chen et al. 2020; Deng et al. 2020). The triangle of centres points upstream, i.e. against the oncoming flow. The actuation is performed by rotating each cylinder independently. The flow is monitored by nine velocity probes downstream. The control goal is the complete stabilization of the unstable symmetric steady Navier–Stokes solution. This choice is motivated by several reasons. First, the unforced fluidic pinball already exhibits surprisingly rich dynamics. With increasing Reynolds number, the steady wake successively undergoes a Hopf bifurcation, a pitchfork bifurcation and another Hopf bifurcation before a chaotic state is eventually reached. Second, the cylinder rotations can emulate the most common wake stabilization approaches, such as Coanda forcing (Geropp & Odenthal 2000), base bleed (Wood 1964; Bearman 1967), low-frequency forcing (Pastoor et al. 2008), high-frequency forcing (Thiria, Goujon-Durand & Wesfreid 2006), phasor control (Roussopoulos 1993) and circulation control (Cortelezzi, Leonard & Doyle 1994). Third, the rich unforced and controlled dynamics mimic the nonlinear behaviour of turbulence, while the computation of the two-dimensional flow remains manageable on workstations. To summarize, the fluidic pinball is an attractive all-weather plant for non-trivial multiple-input multiple-output (MIMO) control dynamics.

This study focuses on the stabilization of the unstable symmetric steady solution of the fluidic pinball in the pitchfork regime, i.e. in the presence of asymmetric vortex shedding. This goal is pursued with symmetric steady actuation, general non-symmetric steady actuation and general nonlinear feedback control. We aim to explore the physical actuation mechanisms in a rich search space and to efficiently exploit the performance gains with gradient-based approaches. As a beneficial side effect, this twofold objective leads to improvements of previously employed parameter optimization and regression solvers.

The manuscript is organized as follows. Section 2 introduces the fluidic pinball problem and the corresponding direct numerical simulation. Section 3 reviews and augments machine learning control strategies. In § 4 a hierarchy of increasingly complex control laws is optimized for wake stabilization. Section 5 discusses design aspects of the proposed methodology. Section 6 summarizes the results and indicates directions for future research.

2. The fluidic pinball – a benchmark flow control problem

In this section we describe the fluid system studied for the control optimization, the fluidic pinball. First we present the fluidic pinball configuration and the unsteady two-dimensional Navier–Stokes solver in § 2.1, then the spatio-temporal dynamics of the unforced flow in § 2.2 and finally the control problem for the fluidic pinball in § 2.3.

2.1. Configuration and numerical solver

The test case is a two-dimensional uniform flow past a cluster of three cylinders of identical diameter $D$. The centres of the cylinders form an equilateral triangle pointing upstream. The flow is controlled by the independent rotation of the cylinders about their axes. The rotation of the cylinders enables the steering of incoming fluid particles, as in a pinball machine; hence, we refer to this configuration as the fluidic pinball. In our study, the side length of the equilateral triangle is $1.5D$, so that neighbouring cylinders are separated by a gap of $0.5D$, i.e. one radius. This spacing gives rise to interesting flip-flopping dynamics (Chen et al. 2020).

The flow is described in a Cartesian coordinate system whose origin is located midway between the two rearward cylinders. The $x$-axis is parallel to the streamwise direction and the $y$-axis is orthogonal to the cylinder axis. The velocity field is denoted by $\boldsymbol {u}=(u,v)$ and the pressure field by $p$. Here, $u$ and $v$ are, respectively, the streamwise and transverse components of the velocity. We consider a Newtonian fluid of constant density $\rho$ and kinematic viscosity $\nu$. For the direct numerical simulation, the unsteady incompressible viscous Navier–Stokes equations are non-dimensionalized with the cylinder diameter $D$, the incoming velocity $U_{\infty }$ and the fluid density $\rho$. The corresponding Reynolds number is $Re_D = U_{\infty } D/\nu$. Throughout this study, only $Re_D =100$ is considered.

The computational domain $\varOmega$ is the rectangle $[-6,20]\times [-6,6]$ excluding the interior of the cylinders,

\begin{align} \varOmega &= \{[x,y]^\intercal \in \mathbb{R}^2 \colon [x,y]^\intercal \in [{-}6,20]\times[{-}6,6] \land (x-x_i)^2\nonumber\\ &\quad +(y-y_i)^2 \geq 1/4,\ i=1, 2, 3 \}. \end{align}

Here $[x_i,y_i]^\intercal$, with $i=1,2,3$, are the coordinates of the cylinder centres, numbered in the mathematically positive (counter-clockwise) direction starting from the front cylinder,

\begin{equation} \begin{array}{ll} x_1 ={-}3/2\cos(30^{{\circ}}), & y_1= 0,\\ x_2 = 0, & y_2={-}3/4,\\ x_3 = 0, & y_3= 3/4. \end{array} \end{equation}
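As a quick consistency check of the geometry, the following Python sketch (our own illustration, not part of the solver) encodes the cylinder centres and the definition of $\varOmega$ given above, and verifies that a side length of $1.5D$ leaves a surface-to-surface gap of one radius, $0.5D$, between every pair of cylinders. The names `CENTRES`, `in_domain` and `pairwise_gaps` are hypothetical.

```python
import math

R = 0.5  # cylinder radius in units of the diameter D

# Cylinder centres (front, bottom, top), numbered counter-clockwise from the front cylinder.
CENTRES = [
    (-1.5 * math.cos(math.radians(30.0)), 0.0),
    (0.0, -0.75),
    (0.0, 0.75),
]


def in_domain(x: float, y: float) -> bool:
    """Return True if (x, y) lies in Omega: inside the box and outside every cylinder."""
    in_box = -6.0 <= x <= 20.0 and -6.0 <= y <= 6.0
    # (x - x_i)^2 + (y - y_i)^2 >= R^2 = 1/4 for all three cylinders
    outside = all((x - xc) ** 2 + (y - yc) ** 2 >= R ** 2 for xc, yc in CENTRES)
    return in_box and outside


def pairwise_gaps() -> list:
    """Surface-to-surface gaps between all pairs of cylinders (centre distance minus 2R)."""
    gaps = []
    for i in range(len(CENTRES)):
        for j in range(i + 1, len(CENTRES)):
            (xi, yi), (xj, yj) = CENTRES[i], CENTRES[j]
            gaps.append(math.hypot(xi - xj, yi - yj) - 2.0 * R)
    return gaps


GAPS = pairwise_gaps()  # each entry should equal one radius, 0.5
```

The origin, midway between the rearward cylinders, lies in the fluid, whereas the centre of the top cylinder does not.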

The computational domain $\varOmega$ is discretized on an unstructured grid comprising 4225 triangles and 8633 nodes. The grid is designed to balance computational speed and accuracy. Grid independence of the direct Navier–Stokes solutions has been established by Deng et al. (2020).

The boundary conditions at the inflow, upper and lower boundaries are $\boldsymbol {u}=U_{\infty }\boldsymbol {e}_x$, while a stress-free condition is imposed at the outflow boundary. The fluidic pinball is controlled by the rotation of the cylinders. A no-slip condition is adopted on the cylinders: the flow adopts the circumferential velocities of the front, bottom and top cylinders, specified by $b_1 = U_F$, $b_2 = U_B$ and $b_3 = U_T$, respectively. The actuation command comprises these velocities, $\boldsymbol {b} = [b_1,b_2,b_3]^\intercal$. A positive (negative) value of the actuation command corresponds to counter-clockwise (clockwise) rotation of the cylinders about their axes. The Navier–Stokes equations are integrated numerically by an in-house solver using a fully implicit finite-element method. The time integration is performed with an iterative Newton–Raphson-like approach. The chosen time step of 0.1 corresponds to about 1 % of the characteristic shedding period. The method is third-order accurate in time and space and employs a pseudo-pressure formulation. The solver has been employed in recent fluidic pinball investigations for reduced-order modelling (Noack et al. 2016; Deng et al. 2020) and for control (Ishar et al. 2019). We refer to Noack et al. (2003, 2016) for further information on the numerical method. The code is available on GitLab upon request by email.

The initial condition for the numerical simulations is the symmetric steady solution, computed with a Newton–Raphson method applied to the steady Navier–Stokes equations. A short, small initial rotation of the front cylinder is used to kick-start the transient to natural vortex shedding in the first period (Deng et al. 2020). This rotation has a circumferential velocity of $+0.5$ for $t<6.25$ and of $-0.5$ for $6.25<t<12.5$. The transient regime lasts around 400 convective time units. Figure 1 shows the vorticity field of the symmetric steady solution and of the natural unforced flow after 400 convective time units. The snapshot at $t=400$ in figure 1(b) serves as the initial condition for all subsequent simulations.
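The kick protocol amounts to a piecewise-constant actuation command. The sketch below is a hypothetical helper (`kick_command`), assuming that only the front cylinder rotates during the kick and that the command already takes the negative value at exactly $t=6.25$, an instant the text leaves unspecified. It also relates the time step of 0.1 to the natural frequency $f_0=0.116$ reported in § 2.2.

```python
DT = 0.1    # solver time step in convective units
F0 = 0.116  # natural shedding frequency of the unforced flow


def kick_command(t: float) -> tuple:
    """Actuation command (b1, b2, b3) of the initial kick at time t.

    Only the front cylinder rotates: +0.5 for t < 6.25, -0.5 for 6.25 <= t < 12.5,
    and no rotation afterwards. The value at exactly t = 6.25 is an assumption.
    """
    if t < 6.25:
        b1 = 0.5
    elif t < 12.5:
        b1 = -0.5
    else:
        b1 = 0.0
    return (b1, 0.0, 0.0)


# Front-cylinder command sampled at the solver time step over the first 20 time units.
history = [kick_command(k * DT)[0] for k in range(200)]

# Time steps per natural shedding period, 1 / (F0 * DT): roughly 86, i.e. a step
# of about 1 % of the period.
steps_per_period = 1.0 / (F0 * DT)
```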

Figure 1. Vorticity fields for the unforced fluidic pinball at $Re_D=100$. Blue (red) regions bounded by dashed lines represent negative (positive) vorticity. Darker regions indicate higher values of vorticity magnitude. (a) Symmetric steady solution, (b) unforced flow at $t=400$.

2.2. Flow characteristics

The fluidic pinball is a geometrically simple configuration that exhibits key features of real-life flows, such as successive bifurcations and frequency crosstalk between modes. Deng et al. (2020) show that the unforced fluidic pinball undergoes successive bifurcations with increasing Reynolds number before reaching a chaotic regime. The first bifurcation, a Hopf bifurcation at Reynolds number $Re\approx 18$, breaks the symmetry of the flow and initiates von Kármán vortex shedding. The second bifurcation, at $Re \approx 68$, is of pitchfork type and gives rise to a transverse deflection of the jet-like flow between the two rearward cylinders. The bistability of this jet deflection has been reported by Deng et al. (2020). At $Re = 100$, the jet deflection is rapid and occurs before the vortex shedding is fully established. Figure 2(a) shows an increase of the lift coefficient $C_L$ before oscillations set in; the lift coefficient then converges to a periodic oscillation around a slightly reduced mean value. These bifurcations are a consequence of multiple instabilities present in the flow: two shear-layer instabilities, on the top and bottom cylinders, and a jet bistability originating from the gap between the two rearward cylinders. The shear-layer instabilities synchronize to produce von Kármán vortex shedding.

Figure 2. Characteristics of the unforced natural flow starting from the steady solution ($t=0$). The transient spans until $t \approx 400$. (a) Time evolution of the lift coefficient $C_L$, (b) phase portrait, (c) time evolution of the instantaneous cost function $j_a$ and (d) power spectral density (PSD) showing the natural frequency $f_0=0.116$ and its first harmonic. The phase portrait is computed during the post-transient regime $t \in [900,1400]$ and the PSD is computed over the last 1000 convective time units, $t \in [400,1400]$.

Figure 2 illustrates the dynamics of the unforced flow from the unstable steady symmetric solution to the post-transient periodic flow. The phase portrait in figure 2(b) and the PSD in figure 2(d) show a periodic regime with frequency $f_0=0.116$ and its harmonic. Figure 2(a) shows that the mean value of the lift coefficient $C_L$ is non-zero. This is due to the deflection of the jet behind the two rearward cylinders during the post-transient regime. In this regime, the jet remains deflected to one side, as illustrated in figure 3(a–h) over one period and in figure 3(j) for the mean field. This deflection explains the asymmetry of the lift coefficient $C_L$: the upward-oriented jet increases the pressure on the lower part of the top cylinder, leading to an increase of the lift coefficient. In figure 2(a), the initial downward spike of the lift coefficient is due to the initial kick. The unforced natural flow is our reference simulation for subsequent comparisons.
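The two signatures discussed above, a dominant spectral peak at $f_0$ and a non-zero mean lift, can be extracted from a lift history with a plain periodogram. The NumPy sketch below is illustrative only: the input is a synthetic lift-like signal with an assumed mean of 0.5 and assumed amplitudes, not simulation data, sampled with the solver time step over the same window $t \in [400,1400]$ as the PSD in figure 2(d). The helper name `dominant_frequency` is hypothetical.

```python
import numpy as np


def dominant_frequency(signal, dt: float) -> float:
    """Frequency of the largest non-zero-frequency peak of the one-sided spectrum."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                      # remove the (possibly non-zero) mean value
    power = np.abs(np.fft.rfft(x)) ** 2   # periodogram up to a constant factor
    freqs = np.fft.rfftfreq(x.size, d=dt)
    k = 1 + int(np.argmax(power[1:]))     # skip the zero-frequency bin
    return float(freqs[k])


# Synthetic lift-like signal, NOT simulation data: a non-zero mean,
# an oscillation at f0 and a weaker first harmonic at 2 f0.
dt, f0 = 0.1, 0.116
t = 400.0 + dt * np.arange(10_000)        # samples covering t in [400, 1400)
c_l = 0.5 + 0.3 * np.sin(2 * np.pi * f0 * t) + 0.05 * np.sin(4 * np.pi * f0 * t)

f_peak = dominant_frequency(c_l, dt)      # recovers f0 = 0.116
mean_lift = float(c_l.mean())             # recovers the non-zero mean 0.5
```

With 1000 time units, the frequency resolution is 0.001, so $f_0$ falls exactly on a bin here; for real data, a windowed estimate such as Welch's method would reduce leakage.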

Figure 3. Vorticity fields of the unforced flow. (a)–(h) Time evolution of the vorticity field in the last period of the simulation, (i) the target symmetric steady solution and (j) the mean field of the unforced flow. The colour code is the same as in figure 1. Here $T_0$ is the natural period associated with the natural frequency $f_0$. The mean field has been computed by averaging the flow over 100 periods.

Owing to the rotation of the cylinders, the fluidic pinball can reproduce six actuation mechanisms that are inspired by the wake stabilization literature and exploit distinct physics. Examples of these mechanisms can be found in Ishar et al. (2019). First, the wake can be stabilized by shaping the wake region more aerodynamically, a strategy also called fluidic boat tailing. Here, the shear layers are vectored towards the centre region, either with passive devices such as vanes (Flügel 1930) or with active control through Coanda blowing (Geropp 1995; Geropp & Odenthal 2000; Barros et al. 2016). For the fluidic pinball, this effect can be mimicked by counter-rotating rearward cylinders which accelerate the boundary layers and delay separation. Fluidic boat tailing is typically associated with significant drag reduction. Second, the two rearward cylinders can also rotate in the opposite sense, ejecting a fluid jet on the centreline. Thus, the interaction between the upper and lower shear layers is suppressed, preventing the development of a von Kármán vortex in the vicinity of the cylinders. Such a base bleeding mechanism has a similar physical effect as a splitter plate behind a bluff body and has proven to be an effective means of wake stabilization (Wood 1964; Bearman 1967). Third, phasor control can be performed by estimating the oscillation phase and feeding it back with a phase shift and gain (Protas 2004). Fourth, unified rotation of the three cylinders in the same direction gives rise to higher velocities, and thus larger vorticity, on one side at the expense of the other side, destroying the vortex shedding. This effect relates to the Magnus effect and to stagnation point control (Seifert 2012).
Fifth, high-frequency forcing can be effected by symmetric periodic oscillation of the rearward cylinders. With vigorous cylinder rotation (Thiria et al. 2006), the upper and lower shear layers are re-energized, reducing the transverse gradients of the wake profile and, thus, the instability of the flow. In addition, the effective eddy viscosity in the von Kármán vortices increases, adding a damping effect. Sixth and finally, symmetric forcing at a frequency lower than that of the natural vortex shedding may stabilize the wake (Pastoor et al. 2008). This is due to the mismatch between the antisymmetric vortex shedding and the forced symmetric dynamics, whose clockwork is distinctly out of sync with the shedding period. High- and low-frequency forcing lead to frequency crosstalk between actuation and vortex shedding via the mean flow, as described by the low-dimensional generalized mean-field model (Luchtenburg et al. 2009).
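To make the sign convention concrete, the sketch below expresses the open-loop mechanisms above as actuation commands $\boldsymbol{b}=[b_1,b_2,b_3]^\intercal$. It is our own illustration, derived only from the stated convention (positive values mean counter-clockwise rotation; cylinders 2 and 3 are the bottom and top cylinders). The function names and amplitudes are hypothetical, and phasor control is omitted because it requires a feedback law. Mirroring about the $x$-axis reverses every sense of rotation and swaps cylinders 2 and 3, so a symmetric command must satisfy $b_1=0$ and $b_2=-b_3$.

```python
import math

F0 = 0.116  # natural shedding frequency of the unforced flow

# Convention: b_i > 0 is counter-clockwise rotation; cylinder 2 is the bottom,
# cylinder 3 the top cylinder. On a counter-clockwise cylinder the lower surface
# moves downstream; on a clockwise one the upper surface does.


def boat_tailing(a: float) -> tuple:
    """Rear cylinders move their outer surfaces downstream (a > 0)."""
    return (0.0, a, -a)


def base_bleed(a: float) -> tuple:
    """Rear cylinders move their gap-facing surfaces downstream, ejecting a jet (a > 0)."""
    return (0.0, -a, a)


def magnus(a: float) -> tuple:
    """All three cylinders rotate in the same direction."""
    return (a, a, a)


def symmetric_periodic(a: float, f: float, t: float) -> tuple:
    """Symmetric oscillation of the rear cylinders at frequency f:
    high-frequency forcing if f > F0, low-frequency forcing if f < F0."""
    s = a * math.sin(2.0 * math.pi * f * t)
    return (0.0, s, -s)


def is_mirror_symmetric(b, tol: float = 1e-12) -> bool:
    """Invariance under y -> -y requires b1 = 0 and b2 = -b3."""
    return abs(b[0]) <= tol and abs(b[1] + b[2]) <= tol
```

Under this reading, boat tailing and base bleed are the two signs of the same symmetric steady command, whereas Magnus-type rotation is inherently asymmetric.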

The fluidic pinball is thus an interesting MIMO control benchmark. The configuration exhibits well-known physical wake stabilization mechanisms. From a dynamical perspective, nonlinear frequency crosstalk can easily be enforced. In addition, even long-term simulations can be performed on a laptop within an hour.

2.3. Control objective and optimization problem

Several control objectives related to the suppression or reduction of undesired forces can be considered for the fluidic pinball. We can reduce the net drag power, increase the recirculation bubble length, reduce lift fluctuations or even mitigate the total fluctuation energy.

In this study, we aim to stabilize the unstable steady symmetric Navier–Stokes solution at $Re_D=100$. The associated objectives are $J_a$, quantifying the closeness to the symmetric steady solution, and $J_b$, the actuation power. The cost $J_a$ is defined as the temporal average of the residual fluctuation energy of the actuated flow field $\boldsymbol {u}_{\boldsymbol {b}}$ with respect to the symmetric steady flow $\boldsymbol {u}_s$,

\begin{equation} J_a(\boldsymbol{b})=\frac{1}{T_{ev}}\int_{t_0}^{t_0+T_{ev}} j_a(t) \,\mathrm{d} t, \end{equation}

with the instantaneous cost function

\begin{equation} j_a(t)=\Vert \boldsymbol{u}_{\boldsymbol{b}}(\boldsymbol{x},t)-\boldsymbol{u}_s(\boldsymbol{x}) \Vert_\varOmega^2, \end{equation}

based on the $L_2$-norm

\begin{equation} \Vert \boldsymbol{u} \Vert_\varOmega = \sqrt{ \iint_{\varOmega} {u}^2 + {v}^2\,\mathrm{d} \boldsymbol{x}}. \end{equation}
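In a discrete setting, $j_a$ and $J_a$ can be approximated from velocity snapshots. The following NumPy sketch takes $j_a$ as the squared $L_2$-norm of $\boldsymbol{u}_{\boldsymbol{b}}-\boldsymbol{u}_s$ and assumes nodal velocities with hypothetical lumped area weights $w_k$ approximating the area integral over $\varOmega$, uniformly spaced snapshots from $t_0$ to $t_0+T_{ev}$, and the trapezoidal rule in time. It illustrates the definitions; it does not reproduce the solver's finite-element quadrature, and the function names are our own.

```python
import numpy as np


def squared_norm(du, dv, weights) -> float:
    """Discrete ||(du, dv)||^2 over Omega: sum_k w_k (du_k^2 + dv_k^2)."""
    return float(np.sum(weights * (du ** 2 + dv ** 2)))


def time_average(values, dt: float) -> float:
    """Trapezoidal time average of uniformly spaced samples."""
    v = np.asarray(values, dtype=float)
    integral = dt * (v.sum() - 0.5 * (v[0] + v[-1]))
    return float(integral / (dt * (v.size - 1)))


def cost_ja(u_snaps, v_snaps, u_s, v_s, weights, dt: float):
    """Return J_a and the history of j_a.

    u_snaps, v_snaps: arrays of shape (n_times, n_nodes) sampled from t0 to t0 + T_ev;
    u_s, v_s: symmetric steady solution at the same nodes; weights: nodal areas.
    """
    j_a = np.array([squared_norm(u - u_s, v - v_s, weights)
                    for u, v in zip(u_snaps, v_snaps)])
    return time_average(j_a, dt), j_a
```

A flow that coincides with the steady solution yields $J_a=0$, while a uniform unit deviation yields the area of $\varOmega$, here the sum of the weights.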

The control is activated at $t_0=400$ convective time units after the starting kick on the steady solution, i.e. once the post-transient regime is fully established. The cost function is evaluated until $t_0+T_{ev}=1400$ convective time units. Thus, the time average is taken over $T_{ev}=1000$ convective time units, ensuring that the transient response to actuation carries far less weight than the actuated regime. Yet, a faster stabilizing response to actuation is clearly desirable and is rewarded with a lower cost.

The cost $J_b$ is naturally chosen as a measure of the actuation energy investment. Evidently, a low actuation energy is desirable. The actuation power is computed as the power of the torque applied by the fluid on the cylinders. Here, $J_b$ is the actuation power averaged over $T_{ev} = 1000$ time units,

\begin{equation} J_b(\boldsymbol{b})=\frac{1}{T_{ev}}\int_{t_0}^{t_0+T_{ev}} \sum_{i=1}^{3}\mathcal{P}_{act,i} \,\mathrm{d} t, \end{equation}

where $\mathcal {P}_{act,i}$ is the actuation power supplied to cylinder $i$, integrated over its circumference $\mathcal{C}_i$,

\begin{equation} \mathcal{P}_{act,i} = -\,b_i \oint_{\mathcal{C}_i} F^{\theta}_{s,i} \,\mathrm{d}s, \end{equation}

where $F^{\theta}_{s,i}\,\mathrm{d}s$ is the azimuthal component of the local fluid force applied to cylinder $i$. The negative sign denotes that the power is supplied, and not received, by the cylinders. The numerical value of $J_b$ may be compared with the unforced drag coefficient $c_D=3.75$, which is also the non-dimensionalized parasitic drag power.
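A discrete version of $J_b$ can be sketched in the same spirit. The sketch assumes $\mathcal{P}_{act,i} = -b_i\oint F^{\theta}_{s,i}\,\mathrm{d}s$, i.e. the circumferential velocity times the azimuthal fluid force integrated along the surface of cylinder $i$, approximated by a sum over hypothetical surface points with arc-length elements `ds`, followed by a trapezoidal time average. This is our reading of the definition, not the authors' implementation, and the names are illustrative.

```python
import numpy as np


def actuation_power(b, f_theta, ds) -> float:
    """Instantaneous actuation power summed over the three cylinders.

    b:       circumferential velocities (b1, b2, b3), shape (3,)
    f_theta: azimuthal fluid force per unit arc length at surface points, shape (3, n_pts)
    ds:      arc-length elements of the surface points, shape (3, n_pts)
    Assumes P_i = -b_i * sum_k f_theta[i, k] * ds[i, k] (discrete surface integral).
    """
    surface_force = np.sum(np.asarray(f_theta) * np.asarray(ds), axis=1)
    return float(-np.sum(np.asarray(b, dtype=float) * surface_force))


def cost_jb(b_hist, f_theta_hist, ds, dt: float) -> float:
    """Trapezoidal time average of the actuation power over uniformly spaced samples."""
    p = np.array([actuation_power(b, f, ds) for b, f in zip(b_hist, f_theta_hist)])
    integral = dt * (p.sum() - 0.5 * (p[0] + p[-1]))
    return float(integral / (dt * (p.size - 1)))
```

A fluid force opposing the rotation makes each $\mathcal{P}_{act,i}$ positive, and a vanishing command $\boldsymbol{b}\equiv 0$ gives $J_b=0$ whatever the surface forces.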

In this study, optimization is based on the cost function $J=J_a$, and the actuation investment $J_b$ is evaluated separately. We refrain from a cost function $J$ which employs the objective function $J_a$ and penalizes the actuation investment $J_b$ with a suitable weight $\gamma$, i.e. $J = J_a + \gamma J_b$, for three reasons. First, the distance between two flows and the actuation energy belong to two different worlds, kinematics and dynamics. Any choice of the penalization parameter $\gamma$ would be subjective and would require a sensitivity discussion. Moreover, a strong penalization would constrain the search space and might rule out relevant actuation mechanisms. In this study we look for stabilization mechanisms rather than the most power-efficient solutions. Second, complete stabilization of the steady solution would lead to a vanishing actuation $\boldsymbol{b} \equiv 0$ and, thus, a vanishing energy $J_b$. Hence, the optimization problem without actuation energy can be expected to be well posed. Third, a Pareto front of $J_a$ and $J_b$ reveals how much actuation power is required for a given closeness to the steady solution. Using Pareto optimality, there is no need to decide in advance on the subjective weight $\gamma$. Foreshadowing the results, the best performance $J_a$ turns out to be achieved with the least actuation energy $J_b$. This result corroborates a posteriori the decision not to include the actuation energy in the cost.
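A Pareto front of $J_a$ and $J_b$ keeps only the non-dominated control laws, i.e. those for which no other tested law is at least as good in both objectives and strictly better in one. A minimal sketch, assuming each tested control law is summarized by a hypothetical pair `(J_a, J_b)`, both to be minimized:

```python
def pareto_front(points):
    """Return the non-dominated (J_a, J_b) pairs, sorted by J_a."""
    front = []
    for i, (ja, jb) in enumerate(points):
        # A point is dominated if another one is no worse in both costs
        # and strictly better in at least one.
        dominated = any(
            ja2 <= ja and jb2 <= jb and (ja2 < ja or jb2 < jb)
            for j, (ja2, jb2) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((ja, jb))
    return sorted(front)
```

Because no weight $\gamma$ enters the filter, the trade-off between closeness to the steady solution and actuation power can be read off afterwards.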

The instantaneous cost function $j_a$ of the unforced flow is shown in figure 2(c). We notice a slight overshoot around $t=200$ before the flow converges to a post-transient fluctuating regime. The post-transient regime shows the expected periodic behaviour of von Kármán vortex shedding. The cost averaged over 1000 convective time units is $J_0=39.08$ and serves as the reference for actuation success.

To reach the steady symmetric solution, the flow is controlled by the rotation of the three cylinders. The actuation command $\boldsymbol{b}=[b_1,b_2,b_3]^\intercal$ is determined by the control law $\boldsymbol{K}$. This control law may operate in open loop or in closed loop with flow input. The considered open-loop actuations are steady rotations or harmonic oscillations around a vanishing mean. The considered feedback uses velocity sensor signals in the wake. Thus, in the most general formulation, the control law reads as

\begin{equation} \boldsymbol{b}(t)=\boldsymbol{K}\big(\boldsymbol{h}(t),\boldsymbol{s}(t)\big), \end{equation}

with $\boldsymbol{h}(t)$ and $\boldsymbol{s}(t)$ being vectors comprising, respectively, time-dependent harmonic functions and sensor signals. The sensor signals include the instantaneous velocity signals as well as three recorded values over one period, as elaborated in § 4.3. In the following, $N_b$ represents the number of actuators, $N_h$ the number of time-dependent functions and $N_s$ the number of sensor signals. Then the optimal control problem determines the control law which minimizes the cost,

\begin{equation} \boldsymbol{K}^{\ast}=\mathop{\arg\min}_{\boldsymbol{K}\in\mathcal{K}} J(\boldsymbol{K}), \end{equation}

with $\mathcal{K}:X \mapsto Y$ being the space of control laws. Here, $X$ is the input space, e.g. the space of sensor signals, and $Y$ is the space of all possible outputs. In general, (2.9) is a challenging non-convex optimization problem.

3. Control optimization framework

In this section we present the control optimization for stabilizing the fluidic pinball. This constitutes a challenging nonlinear non-convex optimization problem in which the possibility of several local minima must be expected. Hence, we specifically address how to explore new minima while keeping the convergence rate and efficiency of gradient-based approaches. In § 3.1 the principles of exploration and exploitation are discussed for parameter and control law optimization. Then, the employed algorithms are described: the explorative gradient method (EGM) for parametric optimization (§ 3.2) and the gradient-enriched machine learning control (gMLC) for control law optimization (§ 3.3).

3.1. Optimization principles – exploration vs exploitation

The two algorithms, EGM and gMLC, enable model-free control optimization. These algorithms combine the advantages of exploitation and exploration. Exploitation is based on a downhill simplex method applied to the best-performing of all tested control laws, also called ‘individuals’. The goal is to ‘slide down’ into the best identified minimum.

Exploration is performed with another algorithm using all previously tested individuals. The goal is to find potentially new and better minima, ideally the global minimum. The method for exploration depends on the search space. For a low-dimensional parameter space, a space-filling version of the Latin hypercube sampling (LHS) guarantees optimal geometric coverage of the search space. For a high-dimensional function space, genetic programming is found to be efficient.

The EGM and gMLC start with an initial set of individuals to be evaluated. Then, exploitive and explorative phases alternate until a convergence criterion is reached. This iteration hedges against several worst-case scenarios. The control landscape may have only a single minimum, accessible from any other point by steepest descent. In this case, exploration is often inefficient, although it might help to avoid slow marches through long shallow valleys (Li et al. Reference Li, Cui, Jia, Li, Yang, Morzyński and Noack2020). The control landscape may also have many minima accessible by gradient-based searches. In this case, exploitation is likely to improve performance only incrementally within suboptimal minima, and the search strategy should invest significantly in exploration. Finally, the control landscape may feature minima with narrow basins of attraction for gradient-based iterations, as well as extended plateaus. This is another scenario where alternating between exploitation and exploration is advised.

Many optimizers balance exploration and exploitation and gradually shift from the former to the latter. This strategy sounds reasonable but is not a good hedge against the described worst-case scenarios, in which predominantly exploitative or predominantly explorative algorithms are doomed to fail.

Note that the chances of exploration landing close to a new, better minimum are small. Yet, the explorative phases may find new basins of attraction for successful gradient-based descents. This is another argument for the alternating execution of exploration and exploitation.

Finally, we note that the proposed explorative–exploitive schemes allow both kinds of iterations to be adjusted to the control landscape. For instance, LHS in a high-dimensional search space initially explores only the boundary and may better be replaced by Monte Carlo sampling or a genetic algorithm. We refer to Li et al. (Reference Li, Cui, Jia, Li, Yang, Morzyński and Noack2020) for a thorough comparison of EGM with five common optimizers and to Duriez, Brunton & Noack (Reference Duriez, Brunton and Noack2016) for GPC. The next two sections detail both optimizers, EGM and gMLC.

3.2. Parameter optimization with the EGM

The EGM optimizes $N_p$ parameters $\boldsymbol{b} = [ b_1, \ldots , b_{N_p} ]^\intercal$ with respect to the cost $J(\boldsymbol{b})$ and comprises exploration and exploitation phases. In the context of parameter optimization, we do not differentiate between the control law $\boldsymbol{K}=\text{const.}$ and the associated actuation command $\boldsymbol{b}=\boldsymbol{K}$. The search space, or actuation domain, is a compact subset $\mathcal{B}$ of $\mathbb{R}^{N_p}$, typically defined by upper and lower bounds for each parameter. The exploration phase is based on a space-filling variant of LHS (McKay, Beckman & Conover Reference McKay, Beckman and Conover1979) whereas the exploitation phase is carried out by Nelder–Mead's downhill simplex (Nelder & Mead Reference Nelder and Mead1965).

The first $N_p+1$ initial individuals $\boldsymbol{b}_m$, $m=1,\ldots , N_p+1$, define the first ‘amoeba’ of the downhill simplex method. The first individual $\boldsymbol{b}_1$ is typically placed at the centre of $\mathcal{B}$. The $N_p$ remaining vertices are slightly displaced along the $b_m$ axes. In other words, $\boldsymbol{b}_m = \boldsymbol{b}_1 + h_m \boldsymbol{e}_{m-1}$ for $m=2, \ldots , N_p+1$. Here, $\boldsymbol{e}_m := [ \delta_{m,1},\ldots ,\delta_{m,N_p} ]^\intercal$ is the unit vector in the $m$th direction and $h_m$ is the corresponding step size. The increment $h_m$ is chosen to be small compared with the range of the corresponding dimension.
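The initial amoeba can be built directly from the box bounds of $\mathcal{B}$. A minimal sketch, assuming $\mathcal{B}$ is given by per-parameter `lower` and `upper` bounds and that each step $h_m$ is a fixed fraction `rel_step` (a hypothetical parameter) of the corresponding range:

```python
def initial_simplex(lower, upper, rel_step=0.05):
    """Return the N_p + 1 vertices b_1, ..., b_{N_p+1} of the first amoeba.

    b_1 is the centre of the box; each further vertex is b_1 displaced by a
    small step h_m along one coordinate axis.
    """
    n_p = len(lower)
    centre = [(lo + up) / 2 for lo, up in zip(lower, upper)]
    simplex = [centre]
    for axis in range(n_p):
        h = rel_step * (upper[axis] - lower[axis])  # small compared with the range
        vertex = list(centre)
        vertex[axis] += h
        simplex.append(vertex)
    return simplex
```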

The exploitation phase employs the downhill simplex method. This method is robust and widely used for data-driven optimization in low- and moderate-dimensional search spaces, as it requires neither an analytical expression of the cost function nor local gradient information. Each new individual is a linear combination of the simplex vertices, constructed by geometric reasoning. The vertex with the worst performance is replaced by its reflection through the centroid of the opposite face of the simplex. This step yields a mirror-symmetric version of the simplex in which the new vertex has the best performance if the cost function depends linearly on the input. Subsequent operations like expansion, single contraction and global shrinking ensure that the iterations exploit a favourable downhill behaviour and do not get stuck because of nonlinearities. We refer to Li et al. (Reference Li, Cui, Jia, Li, Yang, Morzyński and Noack2020) for a detailed description.
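The reflection, expansion, contraction and shrink steps can be sketched as one iteration function. This is an illustrative textbook variant (coefficients 1, 2, 1/2 and 1/2, inside contraction only), not the implementation of Li et al.; it also returns the number of new cost evaluations: 1 for a plain reflection, 2 for an expansion or contraction and $n+2$ for a shrink of an $n$-dimensional simplex.

```python
def nelder_mead_step(simplex, costs, cost_fn,
                     reflect=1.0, expand=2.0, contract=0.5, shrink=0.5):
    """One downhill simplex iteration.

    simplex : list of n + 1 vertices (lists of floats)
    costs   : cost of each vertex
    Returns the updated (simplex, costs) and the number of new cost evaluations.
    """
    order = sorted(range(len(simplex)), key=lambda i: costs[i])
    simplex = [simplex[i] for i in order]
    costs = [costs[i] for i in order]
    best, worst = simplex[0], simplex[-1]
    n = len(simplex) - 1

    def towards(a, b, coeff):
        # Point a + coeff * (b - a), component-wise.
        return [ai + coeff * (bi - ai) for ai, bi in zip(a, b)]

    # Centroid of the face opposite the worst vertex.
    centroid = [sum(v[d] for v in simplex[:-1]) / n for d in range(len(best))]

    # Reflection of the worst vertex through the centroid.
    reflected = towards(centroid, worst, -reflect)
    f_r = cost_fn(reflected)
    if costs[0] <= f_r < costs[-2]:
        simplex[-1], costs[-1] = reflected, f_r
        return simplex, costs, 1

    # Expansion: the reflected point is the new best, so try going further.
    if f_r < costs[0]:
        expanded = towards(centroid, reflected, expand)
        f_e = cost_fn(expanded)
        if f_e < f_r:
            simplex[-1], costs[-1] = expanded, f_e
        else:
            simplex[-1], costs[-1] = reflected, f_r
        return simplex, costs, 2

    # Single (inside) contraction of the worst vertex towards the centroid.
    contracted = towards(centroid, worst, contract)
    f_c = cost_fn(contracted)
    if f_c < costs[-1]:
        simplex[-1], costs[-1] = contracted, f_c
        return simplex, costs, 2

    # Global shrink of all vertices towards the best one.
    for i in range(1, n + 1):
        simplex[i] = towards(best, simplex[i], shrink)
        costs[i] = cost_fn(simplex[i])
    return simplex, costs, 2 + n
```

Repeatedly calling `nelder_mead_step` on a smooth convex cost, e.g. a two-parameter quadratic, drives the simplex into the minimum.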

The explorative phase of EGM is inspired by the LHS method. Latin hypercube sampling aims to fill the complete domain $\mathcal{B}$ optimally. A predefined number $m$ of individuals is placed such that the minimum distance between any two of them is maximized,

\begin{equation} \{\boldsymbol{b}_1,\ldots,\boldsymbol{b}_m\}=\mathop{\arg\max}_{\boldsymbol{b}_1,\ldots,\boldsymbol{b}_m\in\mathcal{B}}\ \min_{i\neq j}\Vert \boldsymbol{b}_i-\boldsymbol{b}_j\Vert. \end{equation}

Here, $\Vert \cdot \Vert$ denotes the Euclidean norm. The number of individuals has to be determined in advance and cannot be augmented. This static feature is incompatible with the iterative nature of the EGM algorithm. Thus, we resort to a recursive ‘greedy’ version. Let $\boldsymbol{b}^{\bullet}_1$ be the first individual. Then, $\boldsymbol{b}^{\bullet}_2$ maximizes the distance from $\boldsymbol{b}^{\bullet}_1$,

\begin{equation} \boldsymbol{b}^{\bullet}_2=\mathop{\arg\max}_{\boldsymbol{b}\in\mathcal{B}}\Vert \boldsymbol{b}-\boldsymbol{b}^{\bullet}_1\Vert. \end{equation}

The $m$th individual maximizes the minimum distance to all previous individuals,

\begin{equation} \boldsymbol{b}^{\bullet}_m=\mathop{\arg\max}_{\boldsymbol{b}\in\mathcal{B}}\ \min_{i=1,\ldots,m-1}\Vert \boldsymbol{b}-\boldsymbol{b}^{\bullet}_i\Vert. \end{equation}

This recursive definition allows for adding explorative phases from any given set of individuals.
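The greedy recursion requires a maximization over $\mathcal{B}$ at every step. A simple way to approximate it, assumed here rather than taken from the EGM implementation, is to draw random candidates uniformly in the box and keep the one with the largest minimum distance to all individuals tested so far:

```python
import math
import random


def greedy_maximin(existing, lower, upper, n_new, n_candidates=2000, seed=0):
    """Add n_new points, each maximizing its minimum distance to all previous points.

    The argmax over the box [lower, upper] is approximated by the best of
    n_candidates uniformly drawn candidates (an assumption of this sketch).
    """
    rng = random.Random(seed)
    points = [list(p) for p in existing]
    new_points = []
    for _ in range(n_new):
        best_candidate, best_distance = None, -1.0
        for _k in range(n_candidates):
            candidate = [rng.uniform(lo, up) for lo, up in zip(lower, upper)]
            # Minimum Euclidean distance to all individuals evaluated so far.
            distance = min((math.dist(candidate, p) for p in points), default=math.inf)
            if distance > best_distance:
                best_candidate, best_distance = candidate, distance
        points.append(best_candidate)
        new_points.append(best_candidate)
    return new_points
```

Starting from a single individual at the centre, the first added point lands close to a corner of the box, which illustrates why LHS-type exploration initially favours the boundary.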

Exploitation and exploration are iteratively continued until the stopping criterion is reached. In our study, the stopping criterion is the total number of cost function evaluations, i.e. a given budget of simulations. This criterion is validated after the run by checking the convergence of the performance. The EGM phases are summarized in algorithm 1.

Algorithm 1 Explorative Gradient Method

3.3. Multiple-input multiple-output control optimization with gMLC

In this section we address a weakness of linear GPC: the suboptimal exploitation of gradient information. The starting point is machine learning control (MLC) based on linear genetic programming (LGP). Machine learning control optimizes a control law without assuming a polynomial or other structure of the mapping from input to output. The only assumption is that the law can be expressed by a finite number of mathematical operations with a finite memory, i.e. that it is computable. The optimization process relies on a stochastic recombination of the control laws, also called evolution. Machine learning control has been remarkably efficient in outperforming existing optimal control laws, often with surprising frequency crosstalk mechanisms, in dozens of experiments (Noack Reference Noack2019). Machine learning control explores actuation mechanisms well but converges slowly to an optimum, as it increasingly tests redundant, similar control laws.

The proposed gMLC departs from MLC in two aspects. First, the concept of evolution from generation to generation is not adopted. The genetic operations include all tested individuals, since discarding previous generations might imply a loss of important information. Second, the exploitation is accelerated by downhill subplex iteration (Rowan Reference Rowan1990). The best $k+1$ individuals are chosen to define a $k$-dimensional subspace, and a downhill simplex algorithm optimizes the control law in this subspace.

Machine learning control and gMLC share the representation of control laws used for LGP (Brameier & Banzhaf Reference Brameier and Banzhaf2006). The individuals are considered as little computer programs, using a finite number $N_{inst}$ of instructions, a given register of variables and a set of constants. The instructions employ basic operations ($+$, $-$, $\times$, $\div$, $\cos$, $\sin$, $\tanh$, etc.) using inputs ($h_i$ time-dependent functions and $s_i$ sensor signals) and yield the control commands as outputs. A matrix representation conveniently comprises the operations of each individual. Every row describes one instruction. The first two columns define the register indices of the arguments, the third column the index of the operation and the fourth column the output register. Before execution, all registers are zeroed. Then, the first registers are initialized with the input arguments, while the output is read from the last registers after the execution of all instructions. This leads to an $N_{inst}\times 4$ matrix representing the control law $\boldsymbol{K}$. We refer to Li et al. (Reference Li, Noack, Cordier, Borée, Kaiser and Harambat2018) for details.
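To make the matrix representation concrete, the sketch below executes an $N_{inst}\times 4$ matrix on a register array. The operation table, the protected division, the placement of constants right after the inputs and the rule that unary operations ignore their second argument are assumptions for illustration; the actual conventions are those of the cited LGP references.

```python
import math

# Hypothetical operation table indexed by the third matrix column.
# Unary operations (cos, sin, tanh) use only the first argument.
OPS = [
    lambda a, b: a + b,
    lambda a, b: a - b,
    lambda a, b: a * b,
    lambda a, b: a / b if abs(b) > 1e-3 else a,  # protected division (assumed)
    lambda a, b: math.cos(a),
    lambda a, b: math.sin(a),
    lambda a, b: math.tanh(a),
]


def execute_lgp(matrix, inputs, n_registers, n_outputs, constants=()):
    """Run an N_inst x 4 instruction matrix and return the control commands.

    Each row is (argument register 1, argument register 2, operation index,
    output register).
    """
    if len(inputs) + len(constants) > n_registers:
        raise ValueError("not enough registers for inputs and constants")
    registers = [0.0] * n_registers                      # all registers zeroed
    registers[:len(inputs)] = inputs                     # inputs in the first registers
    start = len(inputs)
    registers[start:start + len(constants)] = constants  # constants next (assumed)
    for arg1, arg2, op, out in matrix:
        registers[out] = OPS[op](registers[arg1], registers[arg2])
    return registers[-n_outputs:]                        # outputs from the last registers
```

For example, with two sensor inputs in registers 0 and 1 and the constant 0.5 in register 2, the matrix `[[0, 1, 2, 3], [3, 2, 0, 5]]` encodes $b = s_1 s_2 + 0.5$ and writes it to the last register.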

The algorithm begins with a Monte Carlo (MC) initialization of $N_{MC}$ individuals, i.e. the indices of their matrices are drawn randomly. The costs of these randomly generated functions are evaluated in the plant. The number of individuals $N_{MC}$ needs to balance exploration and cost. Too few individuals may lead to descent into a suboptimal local minimum. Too many individuals may lead to unnecessary, inefficient testing, as Monte Carlo sampling is purely explorative.
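Monte Carlo initialization amounts to drawing every matrix index at random. A sketch, assuming uniform draws over the register and operation indices; the function names and sizes are hypothetical:

```python
import random


def random_individual(n_inst, n_registers, n_ops, rng):
    """Random N_inst x 4 matrix: two argument registers, operation, output register."""
    return [[rng.randrange(n_registers), rng.randrange(n_registers),
             rng.randrange(n_ops), rng.randrange(n_registers)]
            for _ in range(n_inst)]


def monte_carlo_initialization(n_mc, n_inst, n_registers, n_ops, seed=0):
    """Draw the N_MC initial individuals; their costs are then evaluated in the plant."""
    rng = random.Random(seed)
    return [random_individual(n_inst, n_registers, n_ops, rng) for _ in range(n_mc)]
```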

Once the initial individuals are evaluated, an exploration phase is carried out. New individuals are generated by crossover and mutation operations; this phase is therefore also referred to as the evolution phase. These operations are performed on the matrix representation of the individuals. As for MLC, crossover combines two individuals by exchanging lines in their matrix representation, whereas mutation randomly replaces values of some lines by new ones. In this approach, we no longer consider a population but the database of all the individuals evaluated so far. Thus, we no longer need the replication and elitism operators of MLC. This choice is justified by the fact that we want to learn as much as possible from what we already know and avoid re-evaluating individuals. To perform the crossover and mutation operations, individuals are selected from the database by tournament selection. A tournament selection of size 7 for a population of 100 individuals is used in Duriez et al. (Reference Duriez, Brunton and Noack2016). That means that for a population of 100 individuals, 7 individuals are selected randomly and, among these 7, the best one is chosen for the crossover or mutation operation. For gMLC, as the individuals are selected among all the evaluated individuals, the tournament size is rescaled at each call to preserve the $7/100$ ratio between the tournament size and the size of the database. The crossover and mutation operations are repeated randomly, with crossover probability $P_c$ and mutation probability $P_m$, until $N_G$ individuals are generated. The probabilities $P_c$ and $P_m$ satisfy $P_m+P_c=1$.
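The evolution phase can be sketched as follows. The database is a list of `(matrix, cost)` pairs, and the tournament size is rescaled with the database size to keep the $7/100$ ratio. The one-point row crossover, the per-row mutation rule, the rounding of the tournament size and all function names are assumptions of this sketch, not the gMLC source code.

```python
import random


def tournament_size(n_database, ratio=7 / 100):
    """Rescale the tournament so that size / database size stays close to 7/100."""
    return max(1, round(ratio * n_database))


def tournament_select(database, rng):
    """database: list of (matrix, cost); return the best matrix of a random tournament."""
    contestants = rng.sample(database, tournament_size(len(database)))
    return min(contestants, key=lambda item: item[1])[0]


def crossover(m1, m2, rng):
    """Exchange the blocks of rows after random cut points (one-point crossover, assumed)."""
    c1, c2 = rng.randrange(1, len(m1) + 1), rng.randrange(1, len(m2) + 1)
    return m1[:c1] + m2[c2:], m2[:c2] + m1[c1:]


def mutate(matrix, rng, n_registers, n_ops, p_row=0.3):
    """Redraw one randomly chosen entry in each row with probability p_row (assumed rule)."""
    child = [row[:] for row in matrix]
    for row in child:
        if rng.random() < p_row:
            col = rng.randrange(4)
            row[col] = rng.randrange(n_ops) if col == 2 else rng.randrange(n_registers)
    return child


def evolve(database, n_generate, p_c, n_registers, n_ops, seed=0):
    """Generate N_G new matrices by crossover (probability P_c) or mutation (P_m = 1 - P_c)."""
    rng = random.Random(seed)
    offspring = []
    while len(offspring) < n_generate:
        if rng.random() < p_c:
            a, b = crossover(tournament_select(database, rng),
                             tournament_select(database, rng), rng)
            offspring += [[row[:] for row in a], [row[:] for row in b]]
        else:
            offspring.append(mutate(tournament_select(database, rng), rng, n_registers, n_ops))
    return offspring[:n_generate]
```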

Once the evolution phase is complete, $N_G$ new individuals are generated by downhill subplex iterations. In an infinite-dimensional function space, Nelder–Mead's downhill simplex is impractical as an exploitation tool. Thus, we propose a variant of downhill simplex inspired by Rowan (Reference Rowan1990), commonly called downhill subplex. Like downhill simplex, this approach derives its strength from exploiting local gradient information in the search space. In the original approach of Rowan (Reference Rowan1990), downhill simplex is applied to several orthogonal subspaces. However, in order to limit the number of cost function evaluations, we apply downhill simplex to only one subspace. This subspace is initialized by selecting $N_{sub}$ individuals. Two ways to build the subspace after the Monte Carlo process are listed below.

• Choose the best individuals: select the best $N_{sub}$ individuals evaluated so far in the whole database.

• Individuals near a minimum: select the best individual evaluated so far and the $N_{sub}-1$ individuals closest to it.

The first approach has the benefit of comprising several candidate minima, whereas the second one is bound to lead to a minimum in the neighbourhood of the best individual and relies on a given metric. Once the subspace is built, the next steps are similar to the downhill simplex method. As subplex and simplex are essentially the same algorithm applied to different spaces, we use the two terms interchangeably.
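The two subspace-building strategies can be sketched as follows. Each database entry is a `(signature, cost)` pair, where the signature is assumed to be a numerical vector describing the individual (for instance its outputs on a fixed set of sample inputs), and the Euclidean distance between signatures serves as the metric; both choices are assumptions of this sketch.

```python
import math


def best_individuals(database, n_sub):
    """Indices of the n_sub individuals with the lowest cost in the whole database."""
    return sorted(range(len(database)), key=lambda i: database[i][1])[:n_sub]


def individuals_near_minimum(database, n_sub):
    """Index of the best individual followed by its n_sub - 1 nearest neighbours."""
    best = min(range(len(database)), key=lambda i: database[i][1])
    others = [i for i in range(len(database)) if i != best]
    # Rank the remaining individuals by their distance to the best one.
    others.sort(key=lambda i: math.dist(database[i][0], database[best][0]))
    return [best] + others[:n_sub - 1]
```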

Depending on the situation, downhill subplex calls the cost function $1$ (reflection only), $2$ (expansion or single contraction) or $N_{sub}+1$ (shrink) times. Several iterations of downhill subplex are repeated until at least $N_G$ individuals are generated. In this study, the evolution phase and the downhill subplex phase generate the same number of individuals, in order to balance exploration and exploitation.

If the stopping criterion is reached, the best-performing individual in the database is returned. Otherwise, we start a new cycle by generating new individuals with a new evolution phase, combining and modifying individuals derived by evolution and downhill subplex. However, the individuals built by downhill subplex are linear combinations of the original $N_{sub}$ individuals. These new individuals do not have a matrix representation, which is necessary to generate new individuals with genetic operators in the exploration phase. To overcome this problem, we introduce a new phase to compute a matrix representation for the linearly combined control laws. The matrix representation is computed by solving a regression problem of the first kind, similar to a function fitting problem, for all the linearly combined control laws. First, each control law $\boldsymbol{K}_i$ is evaluated on randomly sampled inputs $\boldsymbol{s}_{rand}$. The resulting output $\boldsymbol{K}_i(\boldsymbol{s}_{rand})$ is used to solve a secondary optimization problem,

\begin{equation} \boldsymbol{K}_{\boldsymbol{M}}^{\ast}=\mathop{\arg\min}_{\boldsymbol{M}}\Vert \boldsymbol{K}_{\boldsymbol{M}}(\boldsymbol{s}_{rand})-\boldsymbol{K}_i(\boldsymbol{s}_{rand})\Vert, \end{equation}

where $\boldsymbol{K}_{\boldsymbol{M}}$ denotes the control law encoded by the matrix $\boldsymbol{M}$ and $\Vert \cdot \Vert$ denotes the Euclidean norm. This optimization problem is a function fitting problem that we solve with LGP. The LGP parameters are the same as those used for gMLC, so the computed individuals are compatible with those in the database. The best-fitting control law $\boldsymbol{K}_{\boldsymbol{M}}^\ast$ then has a matrix representation and is used as a substitute for the original linear combination of control laws. The substitutes are then employed in the evolution phase even though they may not be perfect substitutes for the original control laws. Indeed, depending on the stopping criterion and population size of the secondary LGP optimization, the substitutes may not reproduce all the characteristics of the linearly combined control laws. An accurate but costly representation may not be needed, as the control laws will be recombined afterwards. Moreover, the introduction of some error may benefit the exploration phase and enrich our database.
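The fitting target of the reconstruction phase, a linear combination of control laws evaluated on random inputs, can be illustrated with plain Python callables. Here a finite list of candidate laws stands in for the secondary LGP search, and the two-dimensional sensor input, the scalar output and the sampling range are assumptions of this sketch.

```python
import math
import random


def linear_combination(laws, weights):
    """Control law s -> sum_j w_j K_j(s), as produced by a downhill subplex step."""
    return lambda s: sum(w * k(s) for w, k in zip(weights, laws))


def fitting_error(candidate, target, samples):
    """Euclidean norm of the output mismatch over the random input samples."""
    return math.sqrt(sum((candidate(s) - target(s)) ** 2 for s in samples))


def best_substitute(candidates, target, n_samples=200, seed=0):
    """Pick the candidate law that best reproduces the target on random inputs s_rand."""
    rng = random.Random(seed)
    samples = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(n_samples)]
    return min(candidates, key=lambda c: fitting_error(c, target, samples))
```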

Algorithm 2 Gradient-enriched MLC

Once the matrix representations are computed, a new cycle may begin with a new evolution phase. In this phase, if any individual performs better than individuals of the simplex, the worst-performing individuals among the $N_{sub}$ simplex individuals are replaced. Thus, each evolution phase replaces elements in the simplex, allowing exploration beyond the initial subspace. Then, the optimization continues with the exploitation phase on the updated $N_{sub}$ individuals.
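The simplex update after an evolution phase can be written compactly: keeping the $N_{sub}$ best individuals of the union of the simplex and the newly evaluated individuals is one way to replace the worst simplex members by better ones. A sketch, assuming hypothetical `(individual, cost)` pairs:

```python
def update_simplex(simplex, new_individuals):
    """Replace the worst simplex members by better new individuals.

    Both arguments are lists of (individual, cost) pairs; the simplex size N_sub
    is preserved by keeping the N_sub best entries of the union.
    """
    pool = sorted(simplex + new_individuals, key=lambda item: item[1])
    return pool[:len(simplex)]
```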

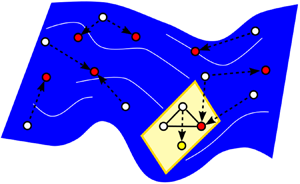

Figure 4 illustrates the initialization, exploration and exploitation of gMLC. The exploration is based on LGP, and the exploitation also requires LGP. In the downhill simplex method, the individuals are linear combinations of the subplex basis and are finally approximated as matrices. This process is repeated until the stopping criterion is reached. The gMLC is summarized in pseudocode in algorithm 2. The source code is freely available at https://github.com/gycm134/gMLC. Finally, figure 5 summarizes the exploration and exploitation phases for EGM and gMLC.

Figure 4. Schematic of the gMLC algorithm (b) and distribution of individuals in the search space (a). First (1), Monte Carlo initialization performs a first coarse exploration of the search space. Second (2), further exploration is performed by genetic programming. Individuals are selected from the whole database and combined by genetic operators to generate new individuals (blue dots). Then the database is augmented with the new individuals. Third (3), exploitation focuses on a finite-dimensional subspace (represented in yellow) where downhill simplex iterations build new individuals by linear combination (yellow dots). A matrix representation is computed for the downhill subplex individuals by LGP, allowing them to be included in the database.

Figure 5. Summary of the EGM (left column) and gMLC (right column). The level plots are a schematic representation of the control landscape. Darker regions depict poor performances and light regions depict good performances. Three minima are shown, two on the top left and the global one on the top right. The map represents an affine space (of finite dimension) for EGM and a Hilbert function space for gMLC. The initialization step is depicted with black diamonds for EGM and black dots for gMLC. The individuals generated thanks to an exploration phase are represented by blue dots. Exploration is carried out with LHS for EGM and evolution with genetic operators (crossover and mutation) for gMLC. The individuals generated thanks to an exploitation phase are represented in yellow. For EGM, downhill simplex steps are carried out. The associated level plot depicts one iteration of downhill simplex: the reflected individual (yellow triangle) and the expanded individual (reversed yellow triangle), the star is the centroid of the two best black diamonds. For gMLC, the simplex steps are carried out in a subspace (downhill subplex) of finite dimension. The associated level plot depicts two distinct simplex steps: first, a reflection step (yellow triangle) with the two best black dots and the best blue dot; then a contraction step (yellow diamond) with the same black dots and the newly evaluated yellow triangle. The stars are the centroids for each step. This process is repeated until the stopping criterion is reached. In this figure only one iteration of the loop is depicted. The reconstruction phase is not depicted for the sake of clarity.

4. Flow stabilization

In this section we stabilize the fluidic pinball with optimized control laws in increasingly more general search spaces. First (§ 4.1), we consider symmetric steady actuation with a parametric study reduced to one parameter, $b_2=-b_3=\text{const}$. Then (§ 4.2), we optimize steady actuation allowing also for non-symmetric forcing, i.e. three independent inputs $b_1$, $b_2$, $b_3$. Finally (§ 4.3), we optimize sensor-based feedback from nine downstream sensor signals driving the three cylinder rotations. Evidently, the three search spaces are successive generalizations.

4.1. Symmetric steady actuation – parametric study

This section describes the behaviour of the fluidic pinball under symmetric steady actuation. In this configuration, only the two rearward cylinders rotate, at equal but opposite rotation speeds, $b_2=-b_3$. When $b_2$ is positive, the rearward cylinders accelerate the outer boundary layers and suck near-wake fluid upstream. This forcing delays separation, mimics Coanda forcing and leads to fluidic boat tailing. When $b_2$ is negative, the cylinders eject fluid into the near wake, as in base bleed, and oppose the outer boundary-layer velocities. Figure 6 shows the evolution of $J_a/J_0$ (a), $J_b$ (b) and the bifurcation diagram (c) as a function of $b_2$.

Figure 6. Parametric study for symmetric steady forcing. The velocity of the bottom cylinder is $b_2=-b_3$. The normalized distance to the steady solution $J_a/J_0$ (a) and the actuation power $J_b$ (b) are plotted as a function of $b_2$. The bifurcation diagram (c) comprises all local maximum and minimum lift values. The vertical red dashed line corresponds to $b_2=0$ and separates the base bleeding and the boat tailing configurations. The global minimum of $J_a/J_0$ is reached at $b_2=-0.375$, as indicated by a vertical blue dashed line.

We limited our study to $b_2 \in [-5, 6]$. The trends are resolved with a discretization step of $0.25$ and a finer resolution in the ranges $[-2.5,0]$ and $[1,2]$. For each parameter, the cost $J_a$ and actuation power $J_b$ have been computed over 1000 convective time units. The bifurcation diagram has been built by detecting the extrema of the lift coefficient over the last 600 convective time units. The bifurcation diagram reveals the following five regimes.
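The construction of the bifurcation diagram can be sketched as follows, assuming a uniformly sampled lift history $C_L(t)$. The helper `lift_extrema` is hypothetical: it keeps the last 600 convective time units and flags a sample as a local maximum (minimum) when it exceeds (falls below) its neighbours, without interpolating between samples. Reporting the final value when no extremum is found, so that a steady state appears as a single point, is our own assumption.

```python
import numpy as np

def lift_extrema(t, cl, window=600.0):
    """Local maxima and minima of the lift coefficient over the last `window` time units.

    Assumes uniformly sampled arrays t (time) and cl (lift coefficient).
    """
    t = np.asarray(t, dtype=float)
    cl = np.asarray(cl, dtype=float)
    x = cl[t >= t[-1] - window]  # keep only the final window
    left, mid, right = x[:-2], x[1:-1], x[2:]
    maxima = mid[(mid > left) & (mid >= right)]  # discrete local maxima
    minima = mid[(mid < left) & (mid <= right)]  # discrete local minima
    if maxima.size == 0 and minima.size == 0:
        # No oscillation detected: treat the flow as steady and report the final value.
        maxima = minima = x[-1:]
    return maxima, minima
```

Plotting `maxima` and `minima` against each value of $b_2$ in the sweep yields a diagram of the type shown in figure 6(c): a single point per parameter for a steady flow, two branches for a periodic flow and a scatter of values for a chaotic one.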

• Regime $b_2<-4$: the lift amplitude decreases to zero and the cost decreases to the first minimum.
• Regime $-4<b_2<-2.5$: the extremal lift values first increase and then decrease to zero again. The cost approaches another local minimum near $b_2 \approx -2.5$.
• Regime $-2.5<b_2<0$: a period-doubling cascade is observed for decreasing $b_2$, leading to a chaotic regime. At $b_2 \approx -0.375$, the cost assumes its global minimum with residual fluctuation of the lift coefficient.
• Regime $0<b_2<2.375$: the cost and the extremal lift values increase monotonically.
• Regime $2.375<b_2$: the Coanda forcing completely stabilizes a symmetric steady solution. The cost increases with the rotation speed.

Interestingly, the boat-tailing transition at $b_2=2.375$, visible as a discontinuity in the bifurcation diagram, does not appear in the graph of the cost function $J_a/J_0$. This continuity, even in the derivative, corresponds to a continuous passage from a periodic symmetric solution to a stationary solution which is itself symmetric. As the value of the cost function indicates, this stationary solution is quite far from the unforced symmetric steady solution. The global minimum, $J_a/J_0=0.51$, is reached near $b_2=-0.375$, i.e. for a base bleeding configuration, with a small actuation power $J_b=0.0490$, roughly 0.1 % of the unforced cost $J_0$.

The characteristics of the best base bleeding solution, i.e. the one leading closest to the symmetric steady solution, are depicted in figure 7. In figure 7(a), the lift coefficient is displayed for the unforced transient (blue curve) and the forced flow (red curve). The unforced flow terminates in asymmetric shedding with positive lift values. After the start of forcing, the lift coefficient oscillates vigorously around a vanishing mean value. This forced statistical symmetry is corroborated by the oscillating jet in figure 8(a–h). Base bleeding increases the velocity of the rearward jet compared with the unforced flow. This jet instability mitigates the Coanda effect on the bottom and top cylinders, i.e. the jet does not stay long on either side.

Figure 7. Characteristics of the best base bleeding solution. (a) Time evolution of the lift coefficient $C_L$, (b) phase portrait, (c) time evolution of the instantaneous cost function $j_a$ and (d) PSD showing a broad spectral peak at $f_1=0.132$. The control starts at $t=400$. The unforced phase is depicted in blue and the forced one in red. The phase portrait is computed over $t \in [900,1400]$ and the PSD is computed over the forced regime $t \in [400,1400]$.

Figure 8. Vorticity fields of the best base bleeding solution. (a–h) Time evolution of the vorticity field throughout the last period of the 1400 convective time units, (i) the objective symmetric steady solution and (j) the mean field of the forced flow. The colour code is the same as in figure 1. Here $T_1$ is the period associated with the main frequency $f_1$ of the forced flow. The mean field has been computed by averaging over 100 periods.

As in the unforced flow, vortex shedding persists. However, the dominant frequency increases from $f_0=0.116$ to $f_1=0.132$. The instantaneous cost function $j_a$ in figure 7(c) shows unsteady non-periodic behaviour, intermittently reaching low levels. The broad spectral peak in figure 7(d) is characteristic of a chaotic regime. The phase portrait in figure 7(b) corroborates the non-periodic oscillatory behaviour. The mean field in figure 8(j) shows that the actuated mean jet is symmetric, unlike the mean field of the unforced flow. Moreover, the shear layers on the upper and lower sides extend further downstream than in the unforced state.
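The dominant frequency of a lift history, such as the value $f_1=0.132$ read from the PSD in figure 7(d), can be estimated from a single Hann-windowed periodogram. The sketch below assumes uniform sampling; the function name `dominant_frequency` and the choice of a plain periodogram instead of, for example, Welch averaging are assumptions and not necessarily how the PSD of figure 7 was computed. The frequency resolution is $1/(t_{end}-t_{start})$, i.e. about $0.001$ for the forced window $t \in [400,1400]$.

```python
import numpy as np

def dominant_frequency(t, signal):
    """Frequency of the highest peak in a Hann-windowed periodogram.

    Assumes `signal` is sampled uniformly at the times `t`.
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(signal, dtype=float)
    dt = t[1] - t[0]                          # uniform sampling step
    x = (x - x.mean()) * np.hanning(x.size)   # remove the mean, taper the ends
    power = np.abs(np.fft.rfft(x)) ** 2       # one-sided periodogram (unnormalized)
    freqs = np.fft.rfftfreq(x.size, d=dt)
    return freqs[1:][np.argmax(power[1:])]    # skip the zero-frequency bin
```

With $f_1=0.132$, the corresponding period is $T_1=1/f_1 \approx 7.58$ convective time units, so the 100 periods used for the mean field of figure 8(j) span about 758 time units, which fits inside the forced window $t \in [400,1400]$.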

This parametric study reveals that base bleeding is the best symmetric steady forcing strategy to bring the flow close to the symmetric steady solution. However, even though the cost $J_a/J_0$ is almost halved, the best base bleeding control fails to stabilize the flow.

4.2. General non-symmetric steady actuation – EGM

In this section we aim to stabilize the symmetric steady solution by commanding the three cylinders with constant actuation without symmetry constraint. This three-dimensional parameter space is explored with the EGM presented in § 3.2. The mirror symmetry of the fluidic pinball about the $x$-axis allows us to reduce the search space and to explore only positive values of $b_1$. A coarse initial parametric study carried out on $b_1$, $b_2$ and $b_3$ in steps of unity indicates that the global minimum of $J_a/J_0$ should be near $[b_1,b_2,b_3]^\intercal = [1,0,0]^\intercal$. Thus, we limit our search to the actuation domain $\mathcal{B} = [0,2]\times[-2,2]\times[-2,2]$, where the restriction of $b_1$ to positive values exploits the mirror symmetry of the configuration. Figure 9(b) depicts the cost function in the actuation domain $\mathcal{B}$. Three planes ($b_1=\text{const.}$) are computed by interpolating the cost on a coarse parameter grid. All individuals computed with EGM are shown in the three-dimensional space. The four initial control laws for EGM are the centre of the box and three points shifted from this centre by $10\,\%$ of the box size in the positive coordinate directions. Thus, the four initial control laws are $[1,0,0]^\intercal$,