1 Introduction

Holistic marking is often the norm for assessing essays in academic contexts. An on-going question is what makes a rater give higher or lower marks to different essays. This Element aims to gain an understanding of the linguistic and non-linguistic variables that may play a role in shaping the writing quality scores awarded to student essays by raters. Our work answers one central question: what linguistic and non-linguistic variables may play a role in shaping the writing quality scores awarded to student essays in a first-year composition (FYC) writing context?

The underlying premise is that collocation may play a role in understanding what shapes writing quality scores. We also assume that non-linguistic factors such as variation in individual writers, the writing task, and the language status of the writers may play a role in shaping scores.

To understand these variables, our work engages with the following methodologically driven sub-questions:

(i) How can we choose appropriate measures of collocation?

(ii) How can we measure and understand the potential role of different linguistic and non-linguistic variables involved in shaping writing quality scores in an appropriate way?

By answering these questions, we hope to illuminate the complexity of the linguistic and non-linguistic variables themselves, as well as how these variables operate with a degree of nuance in the rating process overall. We hope to illuminate, and to some extent demystify, different aspects of the rating process in a relatively underexplored FYC writing context in the United States (see Section 2 for details of the writing context).

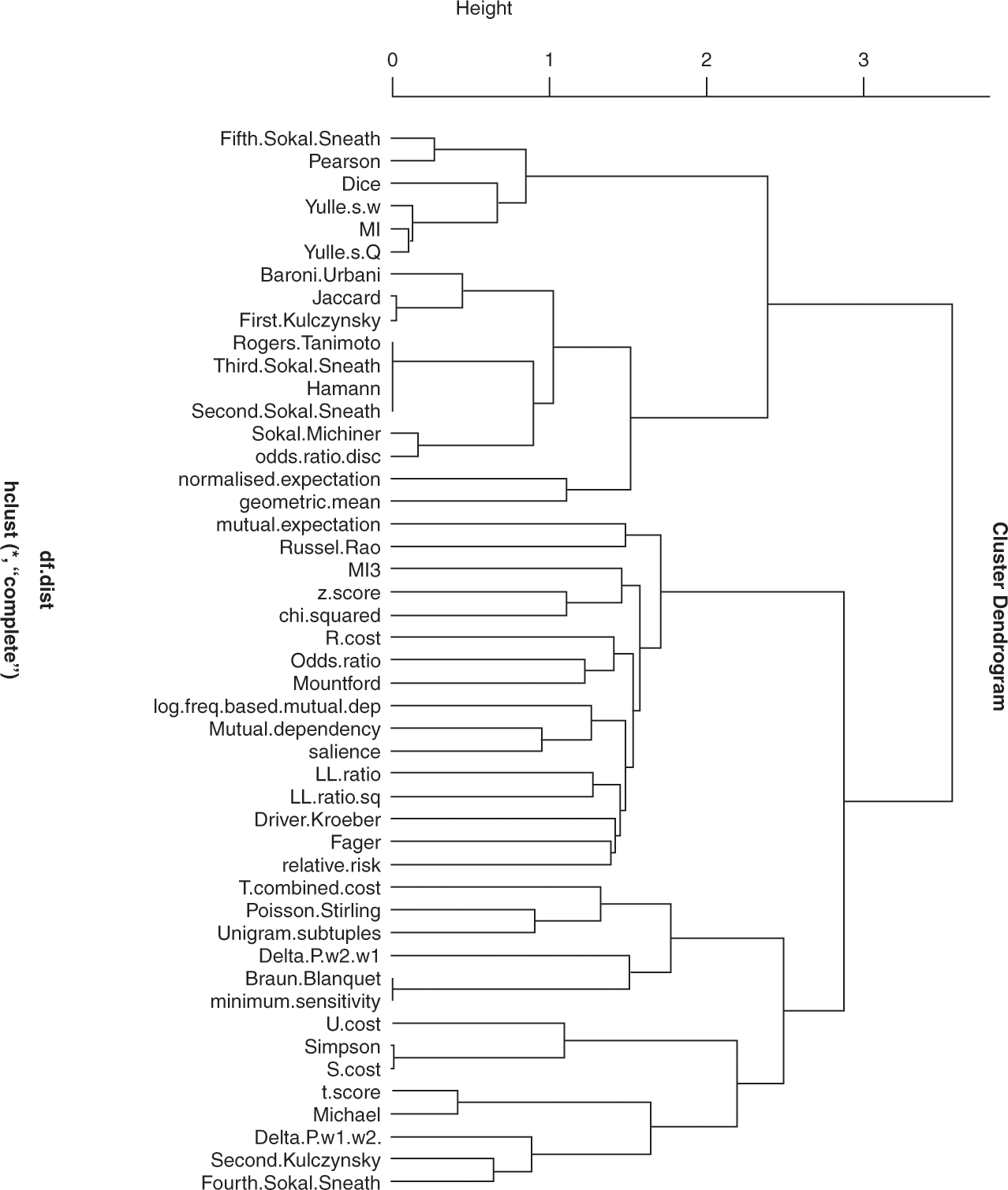

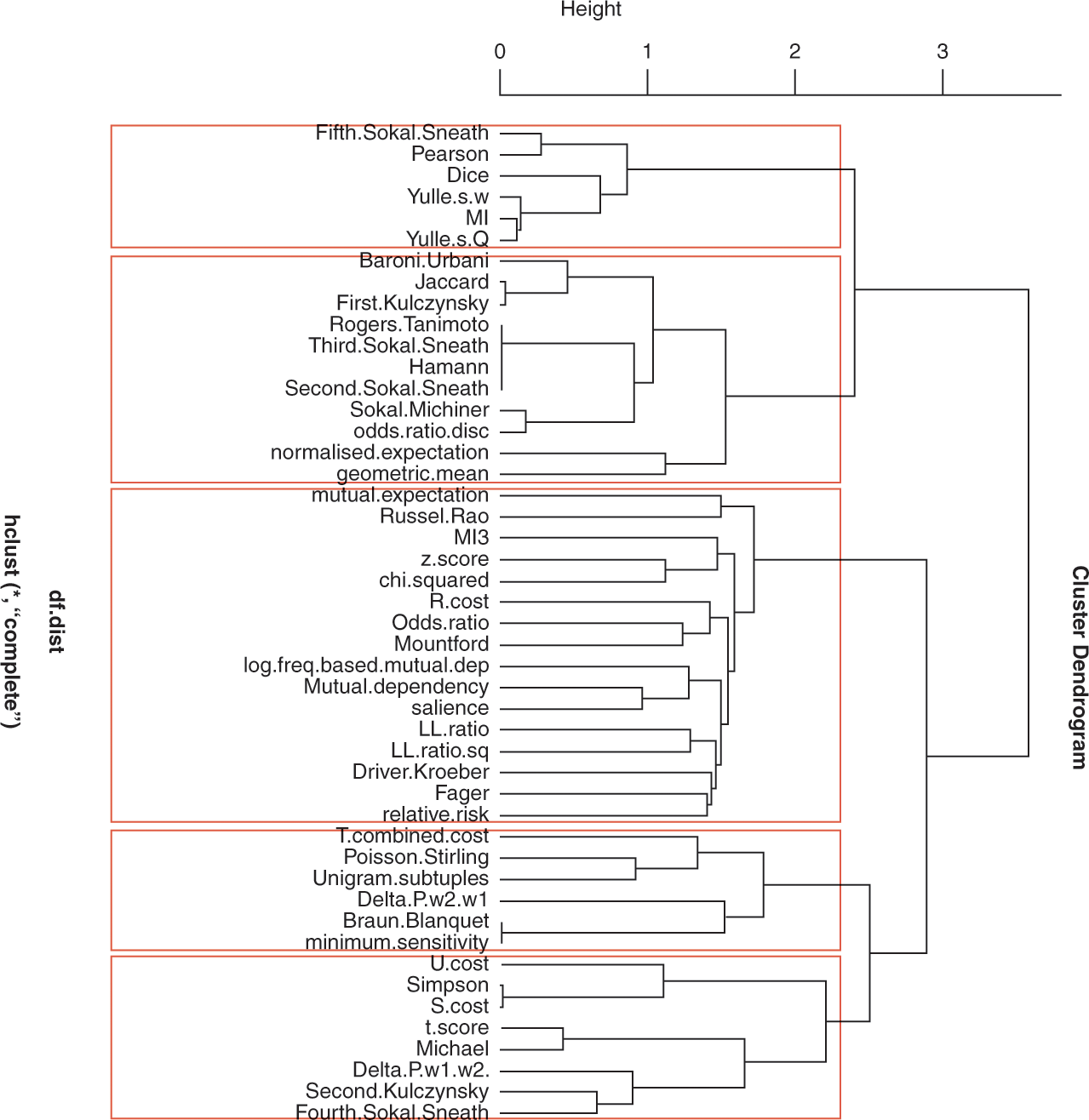

Three empirical studies are carried out to answer these questions. The first question is answered by engaging with past literature and through the study of collocation measures in a cluster analysis. The second question is answered via the use of a cumulative-link mixed effects regression model. This model can accommodate different variable types to appreciate how they may play a role in shaping writing scores. A follow-up qualitative study provides a deeper understanding of how writers use collocations in their writing and also helps answer the second question.

The introductory section of this Element presents the rationale for the focus on these particular questions and our methods for answering them.

1.1 Understanding Writing Quality via Quantitative Linguistic Features

There has been a long-standing interest in understanding rater judgements of writing quality in first and second language research. This interest has adopted several theoretical and methodological lenses (e.g., see the overview in Reference Durrant, Brenchley and McCallumDurrant et al., 2021). One popular lens used to tap into these judgements and the inferences we can (and cannot) make from them has been the quantitative study of the relationship between linguistic features and writing quality grades. Under this lens, linguistic features are identified (normally by adhering to a specific theoretical framework that governs how to identify the features) and counted (manually or automatically with corpus software), and then relationships between these frequencies and writing quality grades are established numerically using statistical techniques such as correlation and regression analyses. Writing quality grades represent subjective ratings made by text evaluators who largely make their judgements from a predetermined set of criteria which to an extent presupposes what ‘good’ writing involves. These criteria therefore guide evaluators in their judgements (e.g., see the IELTS and TOEFL grade bandings mentioned in Reference Durrant, Brenchley and McCallumDurrant et al., 2021). This quantitative approach has enjoyed sustained popularity in the literature and is currently experiencing something of a ‘boom’, thanks to the increasing creation of corpus software tools which make the counting and analyses of such features increasingly user-friendly. This boom is well documented across overviews provided in Reference Durrant, Brenchley and McCallumDurrant et al. (2021) and Reference CrossleyCrossley (2020).

In their studies, researchers make two key assumptions. First, there is an assumption of, or perhaps appreciation for, the role that linguistic features themselves may play in our understandings of writing quality judgements as a measurable construct. This means there is an underlying belief that by counting linguistic features and looking at their relationships with writing quality via statistical methods, we can learn something about how these features may be being judged/perceived by raters. Second, and linking back to the first assumption, is the belief that the linguistic features chosen are (a) worth counting (because they have an established linguistic history/history in models of writing proficiency/quality), and (b) that they can indeed be reliably counted.

Findings of past feature-writing quality work have gone on to inform two often connected areas of research: the development of writing proficiency scales/rubrics by referring to differences in linguistic feature use across bandscales (e.g., Reference Hawkins and FilipovicHawkins & Filipovic, 2012), and/or the training of large-scale feedback and grading systems (e.g., Reference Chen, Zhang and BejarChen et al., 2017). Researchers have most persistently studied features of grammar (e.g., clauses (see Reference Bulté and HousenBulté & Housen, 2014)) and vocabulary (e.g., percentage of words appearing in the Academic Word List (Reference Daller, Turlik, Weir, Jarvis and DallerDaller et al., 2013)), with features of cohesion occupying an inconsistent position of interest for researchers (e.g., see the review in Reference Durrant, Brenchley and McCallumDurrant et al. (2021)). Features of phraseology, for example lexical bundles (e.g., see Reference Appel and WoodAppel & Wood, 2016), occupy an increasingly prominent position, especially in second language literature (e.g., see reference made to this emerging importance in Reference Durrant, Brenchley and McCallumDurrant et al. (2021) and Reference PaquotPaquot (2018, Reference Paquot2019)).

It is this latter linguistic area that this Element focusses on. The following sub-sections make an explicit case for the study of one specific area of phraseology: that of collocation. The sub-sections present the rationale for such a focus and explain how this Element contributes to understanding the role collocation may play alongside several non-linguistic writing assessment variables in shaping writing quality judgements.

1.2 The Rationale for Studying Collocations and Writing Quality

The use of appropriate language is viewed as a key component of success for meeting programme outcomes in the FYC programme our Element focusses on (CWPA, 2014; CWPA et al., 2011). In this sense, using appropriate academic language is therefore a requirement of fitting into students’ respective academic disciplines/communities. Reference Wray and BrownWray (2006, p. 593) notes on this matter that ‘when we speak, we select particular turns of phrase that we perceive to be associated with certain values, styles and groups’, with the learning of these phrases or word combinations acting as a badge of identity and this badge is linked to particular academic communities.

Later, Reference Wray, Siyanova-Chanturia and Pellicer-SánchezWray (2019, p. 267) emphasises that the status of a word combination as a formula lies in the decision-making or perceptions of the agent. She states that a formulaic sequence is ‘any multiword string that is perceived by the agent (i.e., learner, researcher, etc.) to have an identity or usefulness as a single lexical unit’. Reference Siyanova-Chanturia, Pellicer-Sánchez, Siyanova-Chanturia and Pellicer-SánchezSiyanova-Chanturia and Pellicer-Sánchez (2019, p. 6) highlight that there are several frameworks or reasons that guide the perceiver’s decision-making. It may entail high frequency of occurrence (since frequently produced strings, other than being useful by virtue of being frequent in language, may also benefit from being treated as a single unit), a teacher’s perceived value of a string (no matter how frequent), some sort of basic holistic storage and processing, a specific pragmatic function, or, indeed, something altogether different.

Although these definitions allow researchers flexibility in their theoretical and methodological approaches to capturing formulas, two particular approaches have dominated the literature: phraseological and frequency-based approaches (see Reference NesselhaufNesselhauf (2005) for an in-depth overview of the differences between these lenses). This Element grounds its theoretical and methodological conceptualisation of collocation in the frequency-based lens. Under a frequency-based lens, the tenets of collocation rest on understandings from Reference Firth and PalmerFirth (1968, p. 181), who believed that part of a word’s meaning is the ‘habitual collocations’ in which it appears. Meaning here is said to include both the concept with which the word is associated and the ways in which it is used. Reference Firth and PalmerFirth (1968) gives the example of ‘dark night’, resting his understanding on the belief that collocating words are part of each other’s meaning. Thus, because dark appears frequently alongside night, collocability with night is one of the meanings of dark (Reference FirthFirth, 1957, p. 196). Later, Reference Firth and PalmerFirth (1968) articulates these thoughts further to indicate that collocation is a type of mutual expectancy between words. Collocating words are said to predict each other, in the sense that the presence of one word makes the presence of the other more likely.

Corpus linguists have unpacked this mutual expectancy further by stating that collocation is ‘the relationship a lexical item has with items that appear with greater than random probability in its (textual) context’ (Reference HoeyHoey, 1991, p. 7), with Reference Jones and SinclairJones and Sinclair (1974) simply stating that words are collocates if they appear together more frequently than their individual word frequencies would predict. Under these views, there is also a psycholinguistic nature to collocation to consider, with Reference Sinclair, Steele and ThreadgoldSinclair’s (1987, p. 391) idiom principle setting out this mental association where ‘a language user has available to him or her a large number of semi-preconstructed phrases that constitute single choices, even though they might appear to be analysable into segments’. Further still, Reference HoeyHoey’s (2005, pp. 3–5) theory of lexical priming also draws attention to the psycholinguistic nature of collocation in that it is seen as the ‘psychological association between words … evidenced by their occurrence together in corpora more often than is explicable in terms of random distribution’. Under these guiding thoughts then, collocation is bound up in the idea that word combinations are more frequent than their individual word frequencies would explain and that there is a degree of non-random use to these pairings.

These thoughts have led researchers to develop multiple taxonomies and dictionaries of collocations (e.g., Reference Benson, Benson and IlsonBenson et al., 2009) with studies presenting word combinations such as (i) ‘heavy rain’, (ii) ‘rancid butter’, and (iii) ‘apologise profusely’, as collocations (e.g., Reference PaquotPaquot, 2018). Under the frequency-based school of thought, researchers have commonly captured collocations like these by focussing on the belief that collocations are pairs of words which regularly co-occur within a given span or window of text, for example two to four words either side of the node/search word. This approach has been criticised as capturing syntactically unrelated or uninteresting collocations (e.g., Reference Evert, Lüdeling and KytöEvert, 2009). More recently a smaller group of studies have identified collocations using syntactic parsers which capture collocations according to a particular syntactic dependency relationship. Dependency pairings are decided by parsing a text using an automated parser. Dependency grammar operates on the notion that in every sentence each word is dependent on another, apart from the root of the sentence which is independent (Reference DebusmannDebusmann, 2000). A word depends on another if it is a complement or a modifier of the latter. For example, dependency pairings which might be collocations include an adjective modifying a noun.

1.2.1 The Study of Collocation in Student Writing

After using a span or syntactic approach, many researchers have focussed on studying the often-arbitrary partnering and the degree of exclusivity in combinations extracted. ‘Arbitrariness’ here refers to the way that collocational preferences can sometimes appear to defy logical explanation. For example, the fact that an idea can be ‘utterly ridiculous’ but not ‘utterly sensible’. ‘Exclusivity’ refers to the way that some words are found almost exclusively in combination with another particular word, or group of words. For example, the fact that few things other than ‘rain’ can be described as ‘torrential’ and that few things can be ‘shrugged’ other than our ‘shoulders’. It is these often arbitrary, complex, and exclusive relationships that researchers have attempted to study in learner writing.

Many of the foundations for studying collocation in learner writing stem from the belief that second language learner writers struggle to use collocations appropriately. This is because the combinations are assumed to be stored mentally as single units and must be used appropriately with an understanding of their arbitrary combinatory nature and intended, expected meaning. However, there is growing evidence that first language learners also struggle with collocation. They struggle to navigate the expected writing of university genres and disciplines for the first time. Both groups therefore encounter barriers in using the language expected and ultimately gaining acceptance, through that language use, into their academic communities (Reference Durrant, Siyanova-Chanturia and Pellicer-SanchezDurrant, 2019; Reference WrayWray, 2002).

Under a frequency-based approach, scholars have used frequency information to statistically show two pieces of information about learner collocation: (i) how confident we can be that the word combination does or does not occur because of random chance and (ii) the degree of exclusivity the words in the combination have with each other; in other words – the degree to which they may in fact have other possible combinatory partners. The formulae used to capture these are known as association measures (Reference EvertEvert, 2004). Reference EvertEvert (2004, p. 75) defines an association measure as ‘a formula that computes an association score from the frequency information in a pair’s contingency table’. A contingency table is a 2 × 2 table that lays out a word combination’s frequency information. The table contains frequency information relating to the frequency of the combination, the frequency of word 1 and word 2 in the pair, the frequency of other possible word combinations using either word 1 or 2, and the size of the reference corpus being used. An illustration of a contingency table is provided in Reference Brezina, McEnery and WattamBrezina et al. (2015, pp. 144–5). Reference EvertEvert (2004) groups measures able to capture confidence as (i) significance measures, and those able to capture exclusivity as measures of (ii) association strength. These types of information have been interpreted as the higher the score, the more confident we can be that the combination is a collocation (i.e., not occurring because of random chance) in (i), and in (ii), the higher the score, the more exclusive the pairing and the less likely it is to have multiple other word partners that it pairs with naturally.

Those researching learner writing have mostly relied on two representative measures from the significance and the degree of strength groups. In the former, this has been the t-score, and in the latter, the mutual information (MI) score. The t-score, as a measure of confidence, has been found to flag up word combinations that comprise high-frequency words (e.g., ‘little bit’, ‘other hand’) (Reference Granger and BestgenGranger & Bestgen, 2014). In contrast, the MI has been found to flag up word combinations that comprise low-frequency words (which make them more exclusive to each other). For example, ‘tectonic plate’ and ‘juvenile delinquency’ (Reference Durrant and SchmittDurrant & Schmitt, 2009; Reference Granger and BestgenGranger & Bestgen, 2014).

Several studies have used these measures to inform understandings of second language learner writing (e.g., Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference ChenChen, 2019; Reference Durrant and SchmittDurrant & Schmitt, 2009; Reference Garner, Crossley and KyleGarner et al., 2019, Reference Garner, Crossley and Kyle2020; Reference Granger and BestgenGranger & Bestgen, 2014; Reference Kim, Crossley and KyleKim et al., 2018), with few studies of first language learner writing (e.g., Reference Durrant, Brenchley, Szudarski and BarclayDurrant & Brenchley, 2021; Reference Kyle, Crossley and BergerKyle et al., 2018). In their English for Academic Purposes (EAP) study, Reference Durrant and SchmittDurrant and Schmitt (2009) found that second language writers used more high-scoring t-score combinations, while first language writers used more high-scoring MI combinations (more exclusive pairings found in discipline- and genre-specific writing). To some extent, this finding has been corroborated in other second language contexts (e.g., Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Garner, Crossley and KyleGarner et al., 2019, Reference Garner, Crossley and Kyle2020; Reference Granger and BestgenGranger & Bestgen, 2014); however, across these individual contexts, increases in MI combination use have not always been linear across year groups of learners or proficiency levels (e.g., Reference Durrant, Brenchley, Szudarski and BarclayDurrant & Brenchley, 2021; Reference PaquotPaquot, 2018, Reference Paquot2019).

1.3 Emerging Questions from Current Studies

1.3.1 How Can We Choose Appropriate Measures of Collocation?

The first question that this Element engages with is ‘How can we choose appropriate measures of collocation’? Engaging with this important question is warranted because, as previous sections of the Element have noted, past studies have raised several issues relating to the use of association measures. Scholars have relied on a narrow set of measures that have been restricted to association measures used in the language learning/assessment literature with the t-score and MI featuring prominently. There has been sparse mention of alternatives or an awareness of how the hundreds of other association measures touted in the literature align with the MI or t-score or may be able to illuminate different collocation properties to those highlighted by the MI and t-score (e.g., see criticisms in Reference Öksuz, Brezina and RebuschatÖksuz et al. (2021) and acknowledgement of the hundreds of measures in Reference PecinaPecina (2005, Reference Pecina2010), Reference WiechmannWiechmann (2008), Reference Gries and EllisGries and Ellis (2015), and more recently Reference Kyle, Crossley and BergerKyle et al. (2018) and Reference Kyle, Eguchi and GrangerKyle and Eguchi (2021)). This Element’s starting position is that the use of these measures needs to be understood against the wider bank of association measures that researchers have access to. The measures need to be understood in terms of their ability to illuminate different types of collocation properties. There is also a need to bring together the fragmented association measure literature. This fragmented picture means measurement choice is often underexplored and/or undertheorised because measures are spread out across different disciplines and scholars (Reference Öksuz, Brezina and RebuschatÖksuz et al., 2021).

1.3.2 How Can We Measure and Understand the Potential Role of Different Linguistic and Non-linguistic Variables Involved in Shaping Writing Quality Scores in an Appropriate Way?

The second question that this Element engages with is ‘How can we measure and understand the potential role of different linguistic and non-linguistic variables involved in shaping writing quality scores in an appropriate way’? Studies in this research area have started to use a wider range of statistical methods, such as recently mixed/multi-effects models (e.g., Reference Garner, Crossley and KyleGarner et al., 2019, Reference Garner, Crossley and Kyle2020; Reference PaquotPaquot, 2018, Reference Garner, Crossley and Kyle2019), to measure relationships between collocations and writing quality. Thus, a key goal of the Element is to explore how these types of models offer an appropriate method of studying writing quality scoring.

1.4 The Organisation of the Element

This Element proceeds by providing an overview of the FYC context in Section 2. Section 3 then guides readers through the current collocation-grade landscape and emphasises how the empirical work in the Element adds to this landscape. Section 4 sets out the methodological steps taken in the three individual studies. Then, Section 5 describes the results of the cluster analysis carried out to answer the first question, while Section 6 describes the results of the mixed effects modelling, carried out to partially answer the second question. Section 7 also helps answer the second question by qualitatively unpacking the possible reasons for the statistical relationships between the measures of collocation and writing quality by looking at text samples from the FYC corpus itself. Section 8 concludes the Element by summing up the key findings and limitations, and importantly how we reflect on our methodological approach and its promise in future work.

2 FYC Programmes and the Writing Context

2.1 Overview of the Section

This section will explain the rationale for focussing on a FYC programme in the United States. The section will explain how these programmes may benefit from closer engagement with language instruction. We chose to base our study on the programme at the University of South Florida (USF) because of its focus on different writing tasks.

2.2 The Nature of the FYC Programme at USF

The University of South Florida is a large public university with a diverse student population. Of its 50,000 students, as many as 41 per cent identify as African American, Black, Asian American, Hispanic, Native American, or multiracial (USF, 2018). The university provides degrees in business, engineering, arts and social sciences, and interdisciplinary sciences ( USF, 2018).

As a state requirement, students who enter a Florida College or University State system have been required since Reference Aull2015–16 to complete thirty-six hours of general education coursework from a list of courses in communication, mathematics, social sciences, humanities, and natural science, among others. This requirement means students develop the academic and numeracy skills needed for the demands of university study.

In the FYC programme, students complete writing as a ‘process’. They develop strategies in pre-writing, co-authoring, revising, and editing, as well as learning to follow academic/disciplinary conventions for different genres. They must achieve a minimum C-grade to continue their studies.

The programme’s learning objectives are set out across two modules: ENC (English Composition) 1101 and ENC 1102. Some of these objectives include the following:

Learning and applying strategies to facilitate a range of skills, including critical reading, the stages of process writing, and giving peer feedback.

Composing academic genres and adhering to academic conventions (structure, citation, and linguistic features).

Synthesising disparate or conflicting thoughts when evaluating questions/problems to form cohesive and collaborative solutions.

2.2.1 Individual Project Information

Students complete six projects: three on each module. They produce drafts, carry out peer review activities, and develop a revision plan from this feedback. Across the two modules, students choose a controversial topic that they explore from different stakeholder perspectives. In ENC 1101, the three projects are: producing an annotated bibliography, analysing a stakeholder’s platform, and synthesising multiple perspectives in the form of a literature review. In ENC 1102, the three projects are: developing a Rogerian argument on common ground between stakeholders and how they can compromise, analysing a visual rhetoric, and finally composing a multimodal argument in the final project.

Course ENC 1101 focusses on solidifying writing practices by introducing and practising paraphrasing, citing sources, drafting and editing work, peer review, and collaboration. Course ENC 1102 focusses more on developing students’ argumentation and reasoning skills as well as their agency. Project 1 from ENC 1102 requires students to develop arguments that look at differences in stakeholder views for their chosen topics and explore how these stakeholders may reach a compromise. This project builds on Project 3 from ENC 1101, which sets out the key arguments for each stakeholder.

2.3 Teaching, Evaluation, and Feedback at USF

Modules are taught by permanent staff, adjunct instructors, and Graduate Teaching Assistants (GTAs). The ethos on the programme is that writing is constructed at a community level. This means peer review and teacher-led writing conferences feature heavily. Writing is commented on and evaluated using ‘My Reviewers’, a bespoke learning management system (LMS) which allows instructors and students to view programme material, draft and final projects, and to give peer and instructor feedback via PDF annotation tools.

Each of the six projects is worth between 20 and 30 per cent of students’ overall grade. Students are awarded the remaining percentage of their grade for homework tasks and class participation, equalling 100 per cent. Projects are evaluated using custom-made rubrics which instructors are trained to use. These rubrics evaluate projects according to analysis, use of evidence, organisation, focus, and style. An overall holistic grade out of fifteen points is awarded, expressed by the letters A–F. These bandings are shown in Table 1.

Table 1 Holistic grades awarded for ENC 1101 and ENC 1102

| Grade Types | Grade Breakdown for ENC 1101 and ENC 1102 | ||

|---|---|---|---|

| A | A+ (97–100) GPA: 4.00 | A (94–96.9) GPA: 4.00 | A– (90–93.9) GPA: 3.67 |

| B | B+ (87–89.9) GPA: 3.33 | B (84–86.9) GPA: 3.00 | B– (80–83.9) GPA: 2.67 |

| C | C+ (77–79.9) GPA: 2.33 | C (74–76.9) GPA: 2.00 | C– (70–73.9) GPA: 1.67 |

| D | D+ (67–69.9) | D (64–66.9) | D– (60–63.9) |

| GPA: 1.33 | GPA: 1.00 | GPA: 0.67 | |

| F | F (59.99 or below) 0.00 | ||

2.4 Language Instruction in FYC Programmes

2.4.1 The Focus on Language Instruction in FYC Programmes

Although the CWPA Outcomes Statement (2014) helps standardise FYC programmes across universities, Reference Eckstein and FerrisEckstein and Ferris (2018) highlight how the statement and the Conference on College Composition and Communication’s fluid guidance on students’ linguistic needs means there is the potential for explicit language input to be overlooked in favour of a focus on traditional composition processes. Indeed, several scholars have started to draw attention to the lack of language focus on FYC programmes (e.g., Reference Matsuda, Saenkhum and AccardiMatsuda et al., 2013). They acknowledge that this lack of language instruction is common despite many raters downgrading coursework because of language problems.

The CWPA Outcomes Statement (2014) makes most specific reference to language instruction on FYC programmes under its ‘Rhetorical Knowledge’ and ‘Knowledge of Conventions’ sections. When developing rhetorical knowledge, students are expected to develop the ability to respond to a variety of different contexts, that is, they must be able to shift tone, level of formality, medium, and/or structure. Instructors are expected to guide students towards learning about the main features of genres.

Despite these connections to language use, Reference AullAull (2015) emphasises that most FYC programmes focus on process pedagogies and neglect focussing on how language use facilitates meeting many of the FYC programme goals (e.g., genre and readership awareness).

2.4.2 The Focus on Language Instruction at USF

There is a strong ethos of focussing on the processes of writing and social text creation, but some attention is paid to language development. My Reviewers facilitates a focus on language through its multimedia library containing ‘community’ comments, a bank of more than 200 instructor-created comments that offer advice on grammar and mechanics, and resources that address different types of writing concerns (e.g., word choice/diction, weak argumentation, and logical organisation) (Reference Moxley and EubanksMoxley & Eubanks, 2015).

The grading rubrics also promote a focus on language. Texts which fail in the evidence component are noted to ‘rarely distinguish between the writer’s ideas and source ideas and quotes, paraphrases and summaries are not clearly and consistently introduced, integrated and analysed to support arguments’. In the style component, weak texts are those where ‘language significantly interferes with communication of ideas with frequent grammar and/or punctuation errors, inconsistent points of view, significant problems with syntax, diction and word choice’.

This lack of language focus is not only prevalent at USF but is a wider FYC issue. Reference Jeffery and WilcoxJeffery and Wilcox (2013) highlight that for US high school students, their National Assessment of Educational Progress exam requires them to write opinion-based essays about large-scale topics where their evidence is personal. Similarly, international university entrants who are second language writers are often asked to discuss the extent to which they agree/disagree with a particular statement (Reference Moore and MortonMoore & Morton, 2005).

Considering these shortcomings, FYC researchers have started to adopt more corpus-based approaches to researching language use by FYC writers (e.g., Reference AullAull, 2017, Reference Aull2019; Reference Eckstein and FerrisEckstein & Ferris, 2018). For example, Reference AullAull (2017) examines the variation in language use across FYC genres with a corpus of USF texts by looking at differences in the keywords that each genre’s texts contain.

While these studies present a picture of student language use on FYC programmes, there are several unexplored paths. There are still unanswered questions as to how writers use language and, importantly, how raters view this language use when judging project tasks. Therefore, the work in this Element aims to further highlight the role language plays in first-year writing by examining the relationship collocation has with the construct of writing quality. In doing so, several contextual and learner variables that relate to the writing task and the language status of the writer are also considered to appreciate how these factors may also influence writing quality score variation.

3 Review of Collocation-Writing Quality Studies

3.1 Overview of Section

This section reviews relevant literature to set out the research landscape of collocation-grade studies. In doing so, we create a space for our work to contribute to filling identified gaps and addressing issues we raise in this area.

3.2 Definitions of Collocation and Methods of Identification

The frequency-based approach to defining and identifying collocations is influenced by two guiding principles: recurrence and co-occurrence. Recurrence is the repetition of the same word combination by a language user or a group of users (Reference Ellis, Meunier and GrangerEllis, 2008). It can be captured by looking at frequencies of word pairings in corpus data (Reference EvertEvert, 2004). Co-occurrence is an attraction between two words, captured by their appearance together more often than their individual frequencies would predict (Reference EvertEvert, 2004). As Reference Evert, Lüdeling and KytöEvert (2009) notes, the mere repetition of a word pair is not a sufficient indicator of a strong attraction between the words; a pair may be frequent without there being a strong attraction between the individual words. This is seen, for example, when a combination is frequent, but its component words are able to take many other partners. Reference Schmitt and SchmittSchmitt and Schmitt (2020) illustrate that the word ‘the’ co-occurs with almost every non-proper noun and thus does not have strong attraction to other words. Reference Schmitt and SchmittSchmitt and Schmitt (2020, p. 5) also explain that some words co-occur with only a small number of other words. The word ‘blonde’, for example, occurs almost exclusively with ‘hair’ and a few other nouns such as ‘woman’ or ‘lady’. We produce ‘blonde hair’, ‘blonde woman’, or ‘blonde lady’ but never ‘blonde wallpaper’ or ‘blonde paint’ (italics in authors’ original), although these latter combinations are syntactically and semantically possible.

Reference Firth and PalmerFirth (1968) discusses attraction as relating to the ‘mutual expectancy’ of words, while Reference SinclairSinclair (1991) draws on the ‘mutual choice’ that words seem to be subject to. These ideas of predictability and chance were also earlier captured in the work of Reference OsgoodOsgood (1952):

If in the past experience of the source, events A and B … have occurred together, the subsequent occurrence of one of them should be a condition facilitating the occurrence of the other: the writing or speaking of one should tend to call forth thinking about and hence producing the other.

Reference SeretanSeretan (2011) ties these notions of frequency and predictability to key principles of statistics, namely: tendency and typicality. She highlights how scholars have defined a collocation as a typical, specific, and characteristic combination of words which are arbitrary, recurrent word combinations. Bringing these views together, collocations therefore comprise two or more words that appear near each other in a recurrent manner, and that co-occur more often than could be explained by random chance.

Traditional methods of capturing collocation have been termed positional as they operate under a span approach. This span is set by the researcher or by the software they are using and has been classically stated as four words to the left or right of the search word. However, this span of four words has been challenged (e.g., Reference SmadjaSmadja (1993), who uses five words). In many second language studies, it has been set at one or two words to only capture adjacent pairs (e.g., Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Granger and BestgenGranger & Bestgen, 2014). Reference SeretanSeretan (2011) recognises the dangers of a span approach because it may capture syntactic noise – that is, word pairs which have no syntactic relationship. This is shown in example [1], whereby a span approach might capture ‘human rights’, and ‘human rights organisations’ as pairings but also the unrelated ‘human organisations’.

[1] Human rights organisations

Reference SeretanSeretan (2011) also notes that the span approach would fail to capture pairs if they fell outside the span boundary, as with ‘problem solved’ in example [2]:

[2] The problem is therefore clearly a deeply rooted one and cannot be solved without concerted action by all parties.

Recognising these limitations, others have recommended capturing syntactically related combinations by using automated tools such as the Stanford parser (Reference Manning, Surdeanu, Bauer, Bontcheva and ZhuManning et al., 2014). These parsers can capture multiple dependencies such as adjectives pre-modifying a common noun, adverbs modifying an adjective. These methods have the advantage of retrieving combinations efficiently. However, their accuracy with learner writing has only recently started to be documented, so researchers need to exercise caution in their use (e.g., see Reference Durrant, Brenchley, Szudarski and BarclayDurrant & Brenchley, 2021; Reference Huang, Murakami, Alexopoulou and KorhonenHuang et al., 2018; and the special issue on working with learner data edited by Reference KyleKyle (2021)).

The sub-sections that follow outline the vast array of association measures that have been used to capture the property of co-occurrence, but importantly have not been acknowledged much in first or second language writing studies. A full discussion of each measure’s respective formula is beyond the scope of this Element; however, full details of the formulas are provided in the supplementary material at: https://leemccallum.net/resources

3.2.1 Measures of Significance and Measures of Association Strength

Association measures can be divided into two main groups, each with its own sub-group: significance-testing measures, and strength of association measures. This distinction overlaps strongly with that between recurrence and co-occurrence discussed in the previous section, with significance-testing measures emphasising combinations that frequently recur, while strength of association measures emphasise combinations that co-occur with high levels of exclusivity.

Significance-testing measures have been divided into different sub-groups which use frequency information sometimes similarly and sometimes markedly differently. Literature (e.g., Reference EvertEvert, 2004; Reference PecinaPecina, 2005, Reference Pecina2010) has presented groupings labelled likelihood, exact hypothesis, and asymptotic measures. These measures have some similarities, but they treat the given frequency information slightly differently from a theoretical perspective and so can allocate high values to certain word combinations over others. For example, Reference EvertEvert’s (2004) discussion of five likelihood measures (multinomial likelihood, hypergeometric likelihood, binomial likelihood, Poisson likelihood, and Poisson-Stirling) draws attention to the fact that these are in fact manipulations of each other. They build on previous measures to phase out mathematical bias by paying attention to skewed frequencies, and in doing so they draw attention to different types of word combinations.

As noted in Section 1, the t-score has emerged as a popular representative of significance measures. In language learning studies, high-scoring t-score combinations tend to include word pairings which are frequently used across different genres, disciplines, and domains. For example, in their study of intermediate and advanced CEFR (Common European Framework of Reference) second language writing, Reference Granger and BestgenGranger and Bestgen (2014) found that pairings such as ‘prime minister’ (t-score: 97.2), ‘other hand’ (t-score: 73.9), ‘long time’ (t-score: 64.2), ‘other people’ (t-score: 61.5), ‘young people’ (t-score: 59.5), ‘other words’ (t-score:56.9), ‘wide range’ (52.3), and ‘little bit’ (49.9) were all amongst the highest scoring t-score combinations. There is a tradition of taking a t-score of 2 or greater to indicate that a word pairing is likely to be a collocation, probably based on a dubious analogy with t-scores of 1.96, marking the threshold for p < 0.05 in inferential statistics. However, there is no real basis for such a threshold in collocation research, where sample sizes are enormous and the assumptions on which parametric statistics are based are not usually met. Like other association measures, t-scores are best seen as a method for ranking collocations, rather than for marking sharp cut-offs between collocations and non-collocations.

Similarly, measures in the strength of association group have also been divided into different sub-groups. These include point estimate, coefficients, information theory, and heuristic measures. Of these measures, the MI has been favoured. It taps into the strength of the association or attraction between words in the pairing and so quantifies the notion of mutual expectancy. The formula captures how much we expect to see word 2 if we see word 1. It therefore quantifies the degree of exclusivity that words in a pairing have with each other. In language studies, the MI has been found to highlight low-frequency pairings which comprise more exclusive pairings, with these pairings also used in a narrower range of genres, disciplines, and domains. For example, Reference Granger and BestgenGranger and Bestgen (2014) found that their highest scoring MI combinations included nitrous oxide (MI: 17.4), hippocractic oath (MI:16.4), conscientious objectors (MI:15.9), juvenile delinquency (MI: 15.1), ultraviolet radiation (MI: 13.8), and conscientious objection (MI: 12.6). Like the t-score, MI has often operated on a threshold basis, with a value of ≥ 3 taken to be evidence of collocation. As with the t-score threshold, there is little basis for such a cut-off, and MI is best seen as a gradient score, ranking more versus less exclusive combinations.

It is important to note here that the measures introduced thus far are based on an understanding that words mutually attract each other in a symmetrical manner. However, later work has shown that attraction is in fact asymmetrical where either word 1 or word 2 has greater power of attraction in the combination.

3.2.2 Asymmetrical Measures

Reference GriesGries (2013a) has explained that a combination is often far more important for one of its elements than for the other. In the pair upside down, for example, upside is strongly associated with down, but the reverse is not true. This becomes especially important in research that depends on the idea that words ‘predict’ each other. While the occurrence of upside strongly predicts the occurrence of down, the occurrence of down does not strongly predict the occurrence of upside.

The asymmetrical nature of attraction between words has been captured by the Delta P measure. Delta P assigns separate scores for the extent to which word 1 predicts word 2 (Delta P w2 | w1: e.g., how strongly upside predicts down) and the extent that word 2 predicts word 1 (Delta P w1| w2: e.g., how strongly down predicts up). Its values range from –1 to +1 (Reference SchneiderSchneider, 2020).

The narratives in the previous sub-sections highlight both the array of measures mentioned across the literature and the difficulty of coherently synthesising their use. The narrative brings to light the number of measures being mentioned but also illuminates the patchy nature of their groupings and attention. The narrative further points to some scholars focussing narrowly on a small group of measures, for example, Reference DunningDunning’s (1993) focus on likelihood measures while others focus on multiple measures with limited explanations between their connections and differences (e.g., Reference PecinaPecina, 2005, Reference Pecina2010). There are now many voices in the language learning community calling for a better understanding of association measures (e.g., Reference Gablasova, Brezina and McEneryGablasova et al., 2017a, Reference Gablasova, Brezina and McEnery2017b; Reference Gries, Durrant, Gries and PaquotGries & Durrant, 2021; Reference SchneiderSchneider, 2020; Reference WiechmannWiechmann, 2008). Among these voices, the fragmented nature of measure attention has been raised (e.g., Reference Gries, Durrant, Gries and PaquotGries & Durrant, 2021), as have calls to understand the relations between measures better (e.g., Reference WiechmannWiechmann, 2008) and for measure selection to be less arbitrary (Reference Gablasova, Brezina and McEneryGablasova et al., 2017b).

We now turn to show how the use of association measures has played a key role in operationalising the construct of phraseological complexity and its relationship to student writing quality. Later, we highlight how the language learning/assessment literature has used a relatively narrow range of association measures and the implications of this.

3.3 Phraseological Complexity and Its Measurement

3.3.1 The Importance of Phraseological Complexity in Learner Writing

Phraseological complexity is a relatively new term, first coined by Reference PaquotPaquot (2019). However, the theoretical sub-constructs it is based on have a longer history. Reference PaquotPaquot (2019, p. 124) defines phraseological complexity ‘as the range of phraseological units that surface in language production and the degree of sophistication of such units’. The sub-constructs of lexical range (also known as diversity) and sophistication have a long history in vocabulary knowledge and language complexity research. For example, this might involve knowledge of the semantic meanings a word may have and/or its syntactic patterns (Reference Durrant, Brenchley and McCallumDurrant et al., 2021). Researchers have often studied the diversity of vocabulary items learners have used (e.g., Reference Kyle and CrossleyKyle & Crossley, 2016). Sophistication has been operationalised in multiple ways but essentially focusses on counting the different words or structures learners use which are beyond ‘everyday’ structures/words. This picture of ‘everyday’ is often built up by looking at the frequencies of the structures/words with less-frequent structures/words assumed to be more sophisticated and genre- and/or discipline-specific. Under these two constructs, there is an assumption that a learner’s knowledge, shown through their produced text, is focussed on being able to vary the types of language items they use and their sophistication, with the latter bound up in ideas of using genre- and/or discipline-specific or appropriate items. However, it is worth remembering that the inferences we make between learners’ use of language/show of knowledge is directly related to simply analysing what is produced in their texts. The picture we obtain is limited to what language learners choose to use, rather than demonstrating all knowledge. We can assume that what is present is not necessarily their whole repertoire of knowledge or language, it is simply the language used in the text.

The production of varied and sophisticated collocation use has important implications for understanding how the use of collocations is judged or perceived by evaluators. The development of collocation use is seen as an important marker of native language use, being accepted into academic communities and signals fluent language production (Reference WrayWray, 2002). Traditionally, second language writers have been known to struggle to develop this use because of the often-arbitrary constraints of combining words as well as struggling to grasp their non-literal fixed meanings (Reference Granger, Paquot, Charles, Pecorari and HunstonGranger & Paquot, 2009).

These struggles often present themselves as writers produce marked combinations such as ‘powerful coffee’ instead of ‘strong coffee’ (Reference NesselhaufNesselhauf, 2005). Making and demonstrating appropriate combination choices has been shown to be well received by raters in assessment studies (e.g., Reference BestgenBestgen, 2017; Reference Granger and BestgenGranger & Bestgen, 2014), with marked choices less favourably so (Reference Granger and BestgenGranger & Bestgen, 2014). Reference Henriksen, Bardel, Lindqvist and LauferHenriksen (2013) notes that the mastery of formulaic language (such as collocation) allows writers to appear fluent and gives the impression that they can fulfil a range of communicative purposes that texts demand. At university, the use of these patterns has also been seen as a kind of expected threshold at undergraduate level of study (Reference WardWard, 2007). However, knowledge/use of collocations should not be taken for granted with first language novice writers either. Indeed, comments from Reference RömerRömer (2009) remind us that first language novice writers are also navigating academic terrain for the first time, while Reference Bychkovska and LeeBychkovska and Lee (2017) remind us that academic writing is not a native language for anyone.

To uncover a clear picture of learners’ knowledge/use of collocation and its subsequent relationship to writing quality, several studies have made implicit and explicit reference to measuring phraseological complexity. However, the clarity of this whole picture varies given the approaches to identifying collocations, methods of analyses, and different learner and reference corpora used.

3.3.2 The Knowledge Base of Phraseological Complexity in Learner Writing

The study of phraseological complexity has a shorter yet rapidly expanding history when compared to studies of syntax or vocabulary. This history is almost entirely led by studies in second language learner writing (Reference Durrant, Brenchley and McCallumDurrant et al., 2021).

Scholars have largely extracted collocations using a span approach (e.g., Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Granger and BestgenGranger & Bestgen, 2014), but more recent work has started to extract syntactically related pairings via automatic parsers (e.g., Reference PaquotPaquot, 2018, Reference Paquot2019). Most studies have focussed on either looking at the strength of association/significance of association between words and looked at how use of these combinations differs across proficiency levels (e.g., Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Garner, Crossley and KyleGarner et al., 2019, Reference Garner, Crossley and Kyle2020; Reference Granger and BestgenGranger & Bestgen, 2014), or also looked at the diversity of collocation use using established measures of diversity (e.g., Reference PaquotPaquot, 2018). These studies have based their analyses on a reference corpus or another resource which is often based on native writers’ use of language. The work is therefore bound up in an understanding of academic writing which advocates comparing learner writing to some form of native writing.

Under this premise, scholars have looked at learners’ use of word combinations in three ways: (i) counting the proportion of attested/non-attested word combinations that are found in a learner text and the reference corpus; (ii) the proportion of attested combinations which appear five or more times in the reference corpus; (iii) mean frequency of combinations where each learner text is assigned a mean frequency score based on the average frequency of all their combinations.

Using the first method, studies have found significant positive correlations between the proportion of attested word combinations and writing quality scores (e.g., Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Garner, Crossley and KyleGarner et al., 2020; Reference Kim, Crossley and KyleKim et al., 2018; Reference Kyle and CrossleyKyle & Crossley, 2016). However, an interesting contrast to these findings has been found in the earlier work of Reference Crossley, Cai, McNamara, McCarthy and YoungbloodCrossley et al. (2012), in their study of 313 Freshman argumentative essays at Mississippi State University. Using the BNC as their reference corpus, they found fewer attested forms associated with the essay quality scores. This interesting contrast highlights the nuanced picture of feature–grade relationships in that what is valued in other contexts (e.g., the later works above are L2 contexts) might not be valued in other contexts, such as the mixed composition context that Reference Crossley, Cai, McNamara, McCarthy and YoungbloodCrossley et al. (2012) studied.

Using the second method, results have been more nuanced. Looking at syntactic pairings such as adjective + noun, verb + noun, adverb + adjective, and noun + noun, Reference Granger and BestgenGranger and Bestgen (2014) found that low-frequency combinations were more common at the advanced proficiency level than at the intermediate level; however, Reference PaquotPaquot’s (2018) study of L2 postgraduate writing did not find significant differences between texts rated B2-C2 on the CEFR. Using the third method, studies found positive correlations between mean frequencies and writing quality (e.g., Reference Garner, Crossley and KyleGarner et al., 2019; Reference Kim, Crossley and KyleKim et al., 2018; Reference Kyle and CrossleyKyle & Crossley, 2016), although results have differed across writing tasks, with Reference Kyle and CrossleyKyle and Crossley (2016) finding positive correlation for independent TOEFL essays but negative correlation for integrated essays, with the latter essay type being essays which are written using two reading texts as evidence. One possible explanation for this is that in the integrated essays, students borrow words from source texts (e.g., see Reference KyleKyle, 2020).

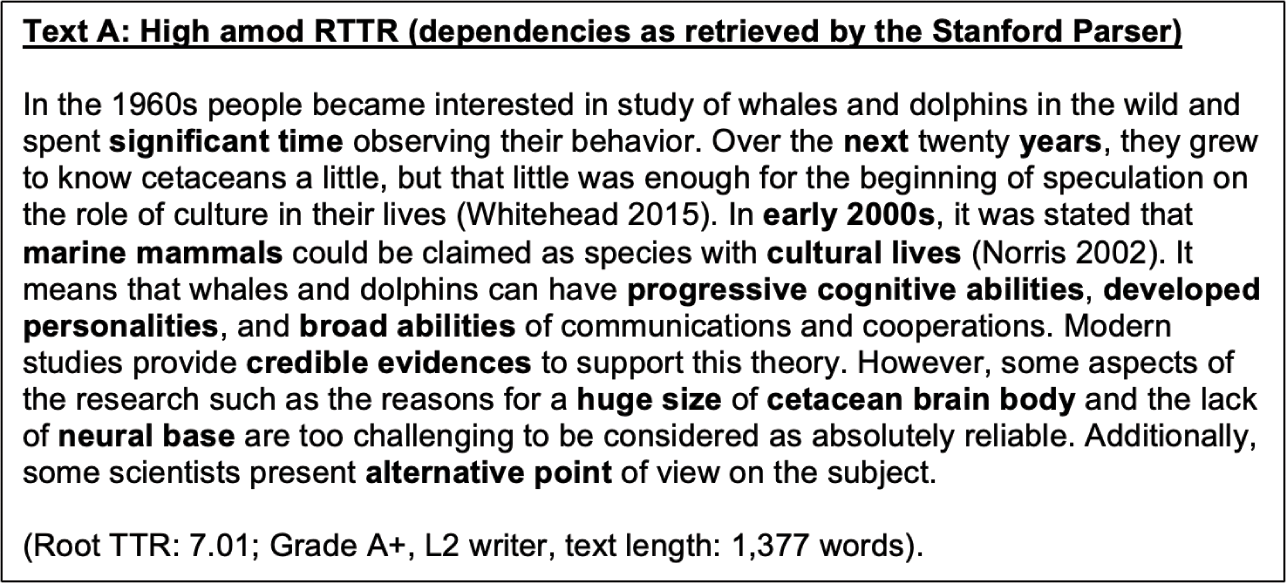

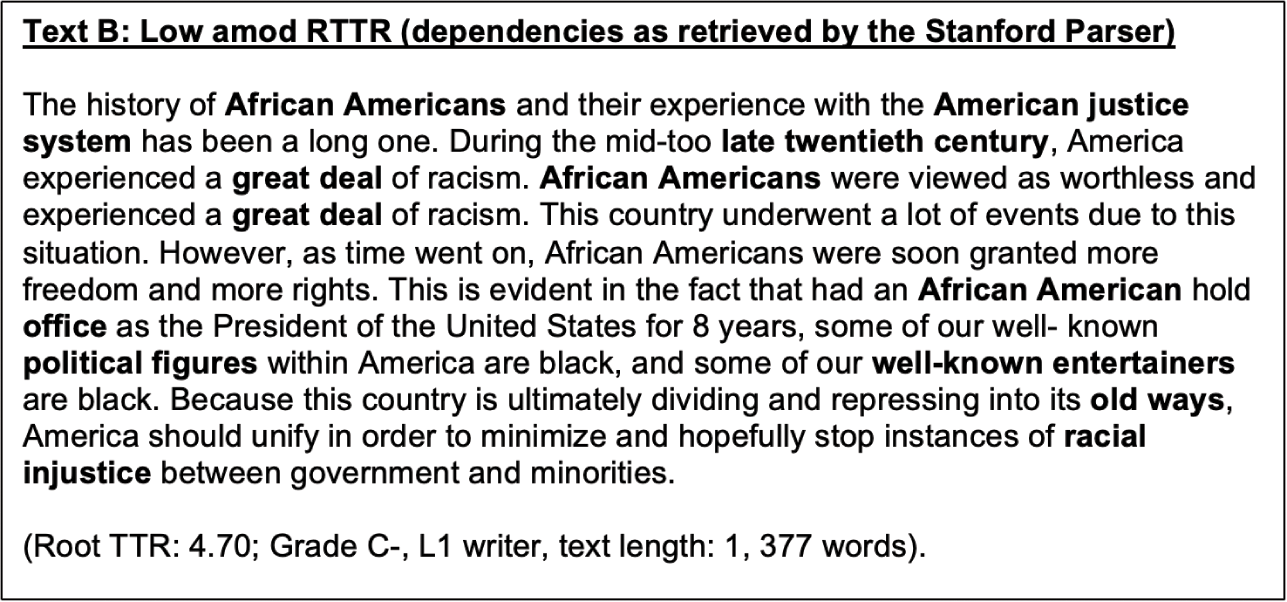

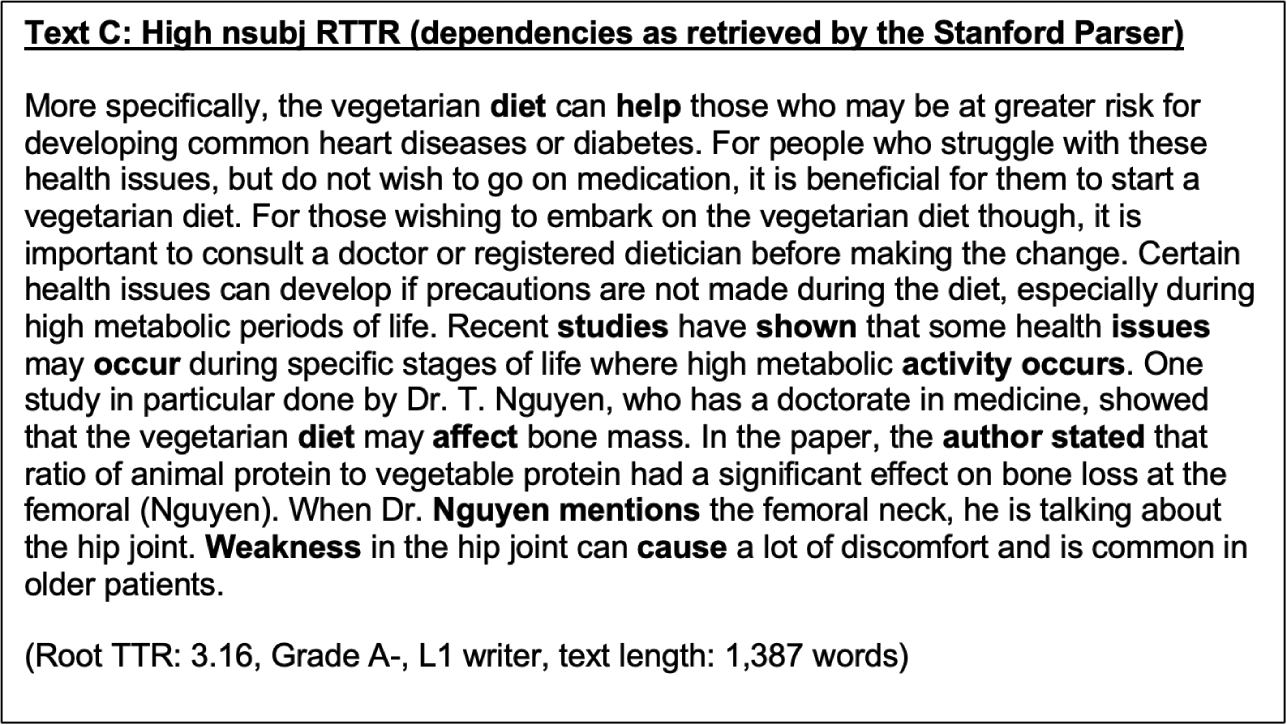

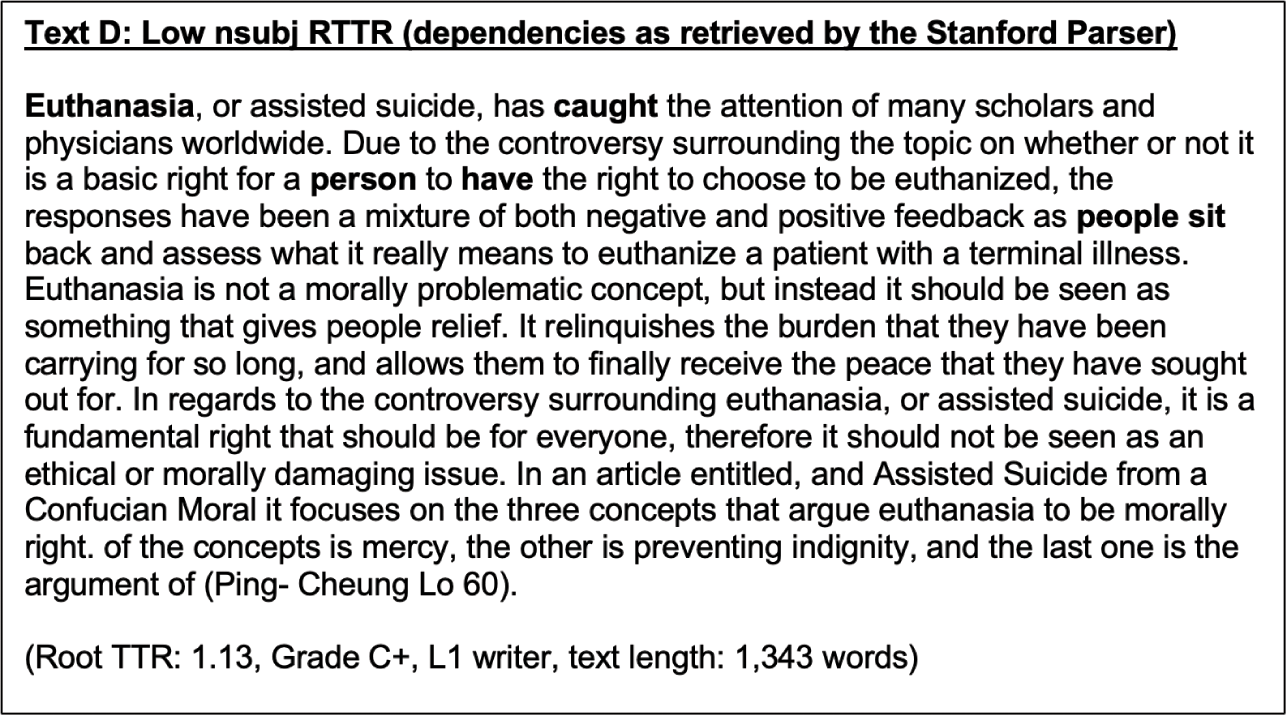

Reference PaquotPaquot (2019) captured collocation diversity by looking at the Root Type Token Ratio (RTTR) of adjective modifying noun (amod) combinations, adverbial modifiers (advmod) combinations, and direct verb object combinations (dobj). Across second language postgraduate writing, she found no significant increase in diversity across CEFR proficiency levels. Reference PaquotPaquot (2019) is also the only study to have looked at the range of collocations via the use of a collocation list obtained from native writing. She looked at the number of collocations in second language postgraduate writing that were found in the Academic Collocation List from Reference Ackermann and ChenAckermann and Chen (2013) but found no significant increase across proficiency levels.

Scholars have also looked at the relationship between association measures and writing quality using threshold bands and mean association scores. Both Reference Granger and BestgenGranger and Bestgen (2014) and Reference PaquotPaquot (2018) use threshold bands in their studies of L2 CEFR-graded texts. They split the MI into four bands: non-collocation (MI < 3), low collocation (MI ≥ 3 and < 5), mid collocation (≥ 5 and < 7), and high collocation (MI > 7). Reference Granger and BestgenGranger and Bestgen (2014) found that advanced writers used a significantly higher proportion of high MI collocations than intermediate writers, with the latter group using more non-collocations. No significant differences were found between low- and mid-MI threshold groups. When split for part of speech, intermediate proficiency level writers used a significantly higher proportion of low-scoring pre-modifier + noun combinations. Reference PaquotPaquot’s (2018) results are harder to bring together. She found significant increases across CEFR proficiency levels for mid-MI premodifier + noun and verb + direct object combinations and for low-MI pre-modifier + noun combinations and a significant decrease for premodifier + noun non-collocations.

A more solid picture of the relationship between association measures and writing quality has emerged when scholars have used the mean MI. L2 studies have shown a consistently positive significant relationship between mean MI scores and writing quality across different L2 contexts (Reference BestgenBestgen, 2017; Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Garner, Crossley and KyleGarner et al., 2020; Reference Kim, Crossley and KyleKim et al., 2018; Reference PaquotPaquot, 2018, Reference Paquot2019). In a rare investigation of FYC projects at USF, Reference Durrant, Moxley and McCallumDurrant et al. (2019) found consistently weak positive non-significant correlations between the MI and the final project grades and also the independent style grade.

Two studies used the MI2 measure, which is said to phase out the MI’s favouring of low-frequency pairings. Both Reference Garner, Crossley and KyleGarner et al. (2019) and (Reference Garner, Crossley and Kyle2020) found a significant increase in means across CEFR proficiency levels, further strengthening the evidence base that the MI and its variations are valid correlates of writing quality.

The t-score has also been studied via threshold bands and mean scores. Reference Granger and BestgenGranger and Bestgen (2014) divided their thresholds into non-collocations (t-score < 2), low collocations (t-score ≥ 2 and 6), mid collocations (≥ 6 and < 10), and high scoring (t-score > 10). They found that intermediate level writer used significantly more high-scoring collocations than advanced level writers. Advanced writers used more low- and mid-scoring collocations. However, intermediate writers used more non-collocations. When split for part of speech, the same trends were found but fewer were statistically significant.

Other studies have used the mean t-score and found different results to Reference Granger and BestgenGranger and Bestgen (2014). Both Reference Bestgen and GrangerBestgen and Granger (2014) and Reference Garner, Crossley and KyleGarner et al. (2020) found that as t-score means increased, writing quality scores increased. Reference Durrant, Moxley and McCallumDurrant et al. (2019) found the opposite in that there was weak negative or no correlation between two- or three-word combinations and FYC project final and style grades. These results suggest a complex picture of how the t-score may be related to writing quality.

From what has been discussed, studies have found different and sometimes opposite relationships between writing quality/development and collocations. This may be due to the different nature of the writing contexts and the methodological approaches adopted. At this point, we wish to offer critique on the methodological approaches taken in some of these studies. The use of association measure thresholds and banding raises questions about the loss of information in doing so. The often-cited premise that an MI value of ≥3 and a t-score value of ≥2 is an appropriate departure point for looking at word combinations has been the subject of increasing criticism from a variety of researchers in different disciplines. In the case of operating under the ‘MI ≥ 3’ captures ‘interesting’ combinations as opposed to <3 being ‘uninteresting’ (Reference Church and HanksChurch & Hanks, 1990), we envisage a potentially huge loss of interesting data under this cut-off point. This is particularly true when examining the production of learner combinations which may violate important language conventions and therefore be allocated low MI and/or t-scores. As already noted, we take the view that work should be based not on thresholds and cut-off points but on ranked lists of combinations, so that researchers can evaluate word combinations across the whole spectrum of scores. The t-score has also been criticised as its significance testing basis makes the measure in essence more likely to always reach significance as its values are influenced by corpus size. If the corpus used is large enough and the combination found frequently enough, it will reach more than a value of 2.0 by virtue of its reliance on corpus size and its formula make-up (Reference Gablasova, Brezina and McEneryGablasova et al., 2017a). These two criticisms are acknowledged in this Element here by adopting a ranking approach over thresholds, and we also encourage use of this ranking in future studies.

It is clear here that many studies have focussed on the MI and t-score in their work with few of these studies acknowledging other alternative measures or indeed providing a concrete rationale for their focus on the MI and/or t-score. However, this is slowly changing through the use of statistical techniques which are able to tap into how association measures may be distinct from one another. Some of these statistical techniques have been shown in the learner corpus work of Reference Kim, Crossley and KyleKim et al. (2018), Reference Kyle, Crossley and BergerKyle et al. (2018), Reference Durrant, Moxley and McCallumDurrant et al. (2019) and Reference KyleEguchi and Kyle (2020). Reference Kim, Crossley and KyleKim et al. (2018) found in their multidimensional factor analysis that Delta P was distinct from other association measures, while Reference Kyle, Crossley and BergerKyle et al. (2018) found that the MI, MI2, and Delta P were all distinct measures worth retaining for analyses in their study of TAALES 2.0 measures. In another study of TAALES, Reference Durrant, Moxley and McCallumDurrant et al. (2019) also found that these association measures were distinct enough from each other to warrant retention and further analyses with their FYC data. Similarly, Reference KyleEguchi and Kyle (2020) found with association measures that the Delta P, MI, and MI2 were all distinct from each other and able to flag up different types of collocations.

Reference Kim, Crossley and KyleKim et al.’s (2018) multidimensional factor analysis study of the Yonsei English Learner Corpus found that directional association, measured by the Delta P, could explain 16.1 per cent of variation in writing proficiency grades and 31 per cent of variation in lexical proficiency grades, when placed in a regression model with other linguistic predictors (content word properties, bigram mutual information, bigram and trigram proportions, and word specificity). Reference Kyle, Crossley and BergerKyle et al. (2018) found in a mixed corpus of L1 and L2 free-writing that Delta P values, taken from various sub-corpora in COCA, were able to explain 58 per cent of variation in lexical proficiency grades when modelled with other types of ngram measures and word property measures. More recently, Reference Durrant, Moxley and McCallumDurrant et al. (2019) also extended the association measure range by studying Delta P alongside the MI and t-score. They found Delta P to have the strongest positive correlations with both final and style grades in FYC projects, although the reason for these results needs further unpacking. Reference Garner, Crossley and KyleGarner et al. (2020) also extended the use of association measures beyond the MI and the t-score. They introduced the collexeme strength measure and found a significant positive correlation to writing quality across A2- B2 CEFR levels in a corpus of L2 writing produced by L1 Korean students.

It should also be highlighted here that the foundations of the MI and t-score being linked to writing quality is often limited to a sole focus on second language writing. The picture emerging via the limited use of the MI in first language school children’s writing from Reference Durrant, Brenchley, Szudarski and BarclayDurrant and Brenchley (2021) is that MI scores do not increase linearly across school year groups. A degree of caution is therefore needed when considering how these results apply to other grading contexts. Findings suggest an idiosyncratic picture of how association measures are related to writing development/quality.

Importantly, the aforementioned narrative highlights a need that is addressed in this Element: the need to understand the trajectories of how the MI and the t-score fit into the association measure literature, how they are distinct from other measures, and how they can act as stand-alone informants of word association information.

A final point that we wish to make relates to the direction of this feature-writing quality work more broadly. The literature base has measured this relationship in a straightforward manner which takes the starting position that linguistic features, and in this Element’s case, those of collocation, are a particularly prominent predictor of writing quality. However, this starting position overlooks the fact that what shapes writing quality judgements is complex when we consider the human judgements being made behind the quantitative score information.

We therefore take up the position in this Element that while we believe collocations have a place in understanding writing quality, we must acknowledge that multiple other variables are likely to play some role in shaping the end quantitative grade scores we have and use. The penultimate sub-sections of this section outline what kinds of variables should be considered and introduce the rationale for the use of mixed effects modelling as an appropriate way to consider these variables.

3.4 How Non-linguistic Variables May Shape Writing Quality

3.4.1 Contextual and Learner Variables

Scholars such as Reference BarkaouiBarkaoui (2008) have noted the complexity involved in the grading process. Variables influencing this process include (i) the writing task, (ii) rater characteristics, and (iii) learner backgrounds. These variables introduce variation into the judgement process. This variation is either seen as a source of measurement error or as a factor worth exploring as the process of making judgements is partly standardised through using assessment rubrics which are intended to constrain individual practices (Reference BarkaouiBarkaoui, 2008). In this Element, we therefore incorporate many of these variables into our work.

One contextual variable that stands out as receiving continuous attention is task (e.g., Reference Carlson, Bridgeman, Greenberg, Weiner and DonovanCarlson & Bridgeman, 1986; Reference Quellmalz, Capell and ChovQuellmalz et al., 1982; Reference Ruth and MurphyRuth & Murphy, 1988; Reference TedickTedick, 1990). For example, in their study of a first language US school context, Reference Quellmalz, Capell and ChovQuellzmalz et al. (1982) found that across 200 eleventh and twelfth grade writers’ texts, raters awarded lower scores to narrative texts over expository texts. They speculate that raters therefore tend to score narratives more harshly. Their study shows that raters vary judgements according to tasks, casting doubt on the assumption that a good writer is a good writer irrespective of assignment. In L2 contexts, Reference Carlson, Bridgeman, Greenberg, Weiner and DonovanCarlson and Bridgeman (1986) note the fluid nature of how we understand writing quality and that it is not stagnant in light of task changes.

In different TOEFL-focussed studies, Reference Guo, Crossley and McNamaraGuo et al. (2013) and Reference Kyle and CrossleyKyle and Crossley (2016) studied how lexical features across two tasks yielded different correlations with writing quality. Reference Guo, Crossley and McNamaraGuo et al. (2013) studied independent and integrated source-based essays and found that many lexical diversity and sophistication measures yielded different positive or negative correlations with writing quality grade scores across the two tasks. Reference Kyle and CrossleyKyle and Crossley (2016) found similarly in their study of independent and integrated source-based essays. Taken together, these studies raise the possibility that certain tasks may introduce sources of variation into the scoring process.

A further variable of interest is the language background of the writer (e.g., see Reference BrownBrown, 1991; Reference Huang and FooteHuang & Foote, 2010). These studies present an inconclusive picture on how language background influences the allocation of writing grades by raters. In the United States, Reference BrownBrown (1991) looked at the degree of difference existing in the writing scores of native and international students taking composition courses at a US university. Results found no statistically significant differences between the two writers’ groups scores; however, faculty did pay attention to different features of the writing, showing that although no major score differences existed, raters may have arrived at their scores from different perspectives. In contrast, the graduate level study from Reference Huang and FooteHuang and Foote (2010) found that L2 writers received consistently lower essay scores than their native counterparts. These studies show a mixed picture of how raters may have (un)conscious patterns of grading, depending on whether the essays are written by first or second language writers. It is worth bearing in mind that these findings have been largely obtained using small sample sizes and in a qualitative manner and therefore generalisation to other grading contexts is not possible.

3.4.2 Random Variation

The previous sections recognised the complexity of the grading process. A further consideration in building up this picture of being able to tap into score variation is the structure of the corpus itself, and how the sampling from the assessment context influences the scores.

In this respect, many corpus studies (e.g., Reference Bestgen and GrangerBestgen & Granger, 2014; Reference Guo, Crossley and McNamaraGuo et al., 2013; Reference Kyle and CrossleyKyle & Crossley, 2016) have assumed the data points are independent from each other in the equation. In other words, scholars interested in measuring writing quality have assumed that the corpus and related contextual and learner variables are independent data points or independent observations (Reference BarkaouiBarkaoui, 2008). A clear example of violating this independence assumption is where the same writer contributes more than one text to the corpus. The data points taken from those texts will be more similar than data points taken from texts written by different writers. Several methodologists have noted that there is a greater chance of Type 1 errors when these dependency assumptions are violated, meaning that the null hypothesis is incorrectly rejected (e.g., Reference HoxHox, 2002).

This violation of independence has implications for how we understand relationships between feature-grades in FYC programmes like the one at USF. The unique assessment structure means that if modelled with monofactorial methods, we risk remaining unaware of the role that individual writers play in modelling collocation–grade relationships.

Overall, the literature review has highlighted the complexity of studying linguistic features such as collocations as well as acknowledging their role in the complex nature of the essay grading process. It allows us to pose the central question: which linguistic and non-linguistic variables may play a role in shaping the writing quality scores awarded to student essays in an FYC writing context?

Within this question, there is a need to understand which measures of collocation are appropriate for tapping into different properties of collocation that might be relevant to understanding learner writing. There is also a need to understand the complexity involved in the grading process when we consider non-linguistic variables and the potential role they might play in shaping grade scores. We therefore also pose two sub-questions which are relevant to providing a comprehensive answer to our central question:

1. How can we choose appropriate measures of collocation?

2. How can we measure and understand the potential role of different linguistic and non-linguistic variables involved in shaping writing quality scores in an appropriate way?

The sections of this Element that follow describe how we carried out our empirical work to answer these questions.

4 Methodology

4.1 Overview of the Section

The literature review in the previous section highlighted two key issues. The first concerns capturing different properties of collocation and leads us to ask: how can we choose appropriate measures of collocation? The second concerns how we can understand the complexity of what shapes writing quality scores, and leads us to ask: how can we measure the potential role of different linguistic and non-linguistic factors in an appropriate way?

This section describes the methodologies underlying the three studies we carried out to answer these two questions. The first study addressed question one. It focussed on carrying out a cluster analysis of a set of association measures to determine the extent to which they measured different properties of collocation. The second study addressed the next question. It focussed on carrying out a type of complex logistic regression for ordinal response variables. In our case, the ordinal response variable was the set of FYC writing quality grade scores. This modelling included several fixed and random effects/predictors. Linguistic predictors included the collocation measures from the cluster analysis described below and a measure of collocation diversity. Non-linguistic predictors comprised the writing task types, the language status of the student writers, and random individual raters and writers. The latter set of predictors were used to capture the complexity of the grading context as set out in Sections 2 and 3. The third study adds an additional level of understanding around what shapes writing quality scores by carrying out a qualitative analysis of the linguistic predictors to shed light on particular patterns that may explain the statistical results uncovered in the second study.

The sub-sections that follow provide details of our study and reference corpora, the rationale for our selection of predictors, and the technical methodological steps we took in each of the two studies.

4.2 The FYC Corpus and Using MICUSP as a Reference Corpus

The study corpus comes from a sample of FYC argumentative essays produced by L1 and L2 writers from USF’s first-year writing programme. The study corpus comprises texts from Project 3 of ENC 1101 and Project 1 of ENC 1102 (see Section 2) because both focus on extensive essay writing, with Project 1 directly building on Project 3. Project 3focusses on setting out and describing key arguments of different stakeholders, while Project 1 considers these key arguments and outlines how stakeholders can reach a compromise (see Section 2 for comprehensive project details).

A breakdown of the corpus is shown in Table 2.

Table 2 Corpus make-up

| Module | Project | Number of Texts | Words per L1 Texts | Words per L2 Texts | |||||

|---|---|---|---|---|---|---|---|---|---|

| L1 | L2 | Mean | Min | Max | Mean | Min | Max | ||

| 1101 | 3 | 262 | 104 | 1,082 | 615 | 2,279 | 1,100 | 655 | 1,849 |

| 1102 | 1 | 404 | 109 | 1,237 | 529 | 2,457 | 1,251 | 784 | 1,951 |

A breakdown of the corpus in terms of grades is also provided in Tables 3 and 4. Both tables show that across the modules, there are a high number of students achieving top A grade bands (scored as 13–15 points out of a total of 15) with fewer students receiving B grades (scored as 12–10 points out of a total of 15), and fewer still only just achieving passing C grade bands (scored 9–7 points out of a total of 15).

Table 3 ENC 1101 grade breakdown

| ENC 1101 | |||||

|---|---|---|---|---|---|

| Grades | Numerical Score | # L1 Texts | # L2 Texts | Total Texts per Grade | (%) of Total Texts |

| A+ | 15 | 24 | 21 | 45 | 12.30 |

| A | 14 | 34 | 12 | 46 | 12.57 |

| A– | 13 | 62 | 26 | 88 | 24.04 |

| B+ | 12 | 46 | 14 | 60 | 16.39 |

| B | 11 | 36 | 9 | 45 | 12.30 |

| B– | 10 | 27 | 11 | 38 | 10.38 |

| C+ | 9 | 17 | 5 | 22 | 6.01 |

| C | 8 | 10 | 0 | 10 | 3.28 |

| C– | 7 | 8 | 4 | 12 | 2.73 |

Note: (# = number), % = percentage

Table 4 ENC 1102 grade breakdown

| ENC 1102 | |||||

|---|---|---|---|---|---|

| Grades | Numerical Score | # L1 Texts | # L2 Texts | Total Texts per Grade | (%) of Total Texts |

| A+ | 15 | 53 | 19 | 72 | 14.04 |

| A | 14 | 63 | 19 | 82 | 15.98 |

| A– | 13 | 84 | 14 | 98 | 19.10 |

| B+ | 12 | 59 | 13 | 72 | 14.04 |

| B | 11 | 56 | 20 | 76 | 14.81 |

| B– | 10 | 32 | 12 | 44 | 8.58 |

| C+ | 9 | 21 | 6 | 27 | 5.26 |

| C | 8 | 15 | 5 | 20 | 3.90 |

| C– | 7 | 20 | 2 | 22 | 4.29 |

Note: (# = number), % = percentage

To calculate the association measures, MICUSP ( Michigan Corpus of Upper-Level Student Papers, 2009) was chosen as the reference corpus. A reference corpus is a larger corpus of language which is used as a comparison to the study corpus. In this case, a reference corpus is used to calculate the association measure values. Each dependency from the FYC ‘study’ corpus is looked up in MICUSP and from the frequency information in MICUSP, the association measure value is calculated. MICUSP was chosen because it contains similar tasks and topics to the tasks FYC writers would be expected to complete in their academic studies after completing the FYC programme. These tasks included argumentative writing like the FYC module tasks but also included literature reviews, lab reports, critical summaries of texts, and empirical research reports. Tasks were completed by L1 and L2 writers across multiple years of study and disciplines, including biology, education, economics, history, and engineering. Topics vary but include ‘fruit fly experiments’ and ‘standardised testing in education’. The use of the corpus complied with the MICUSP Fair Use Statement ( Michigan Corpus of Upper-Level Student Papers, 2009), and the formatted texts that were used to compute association measures were solely based on anonymised metadata.

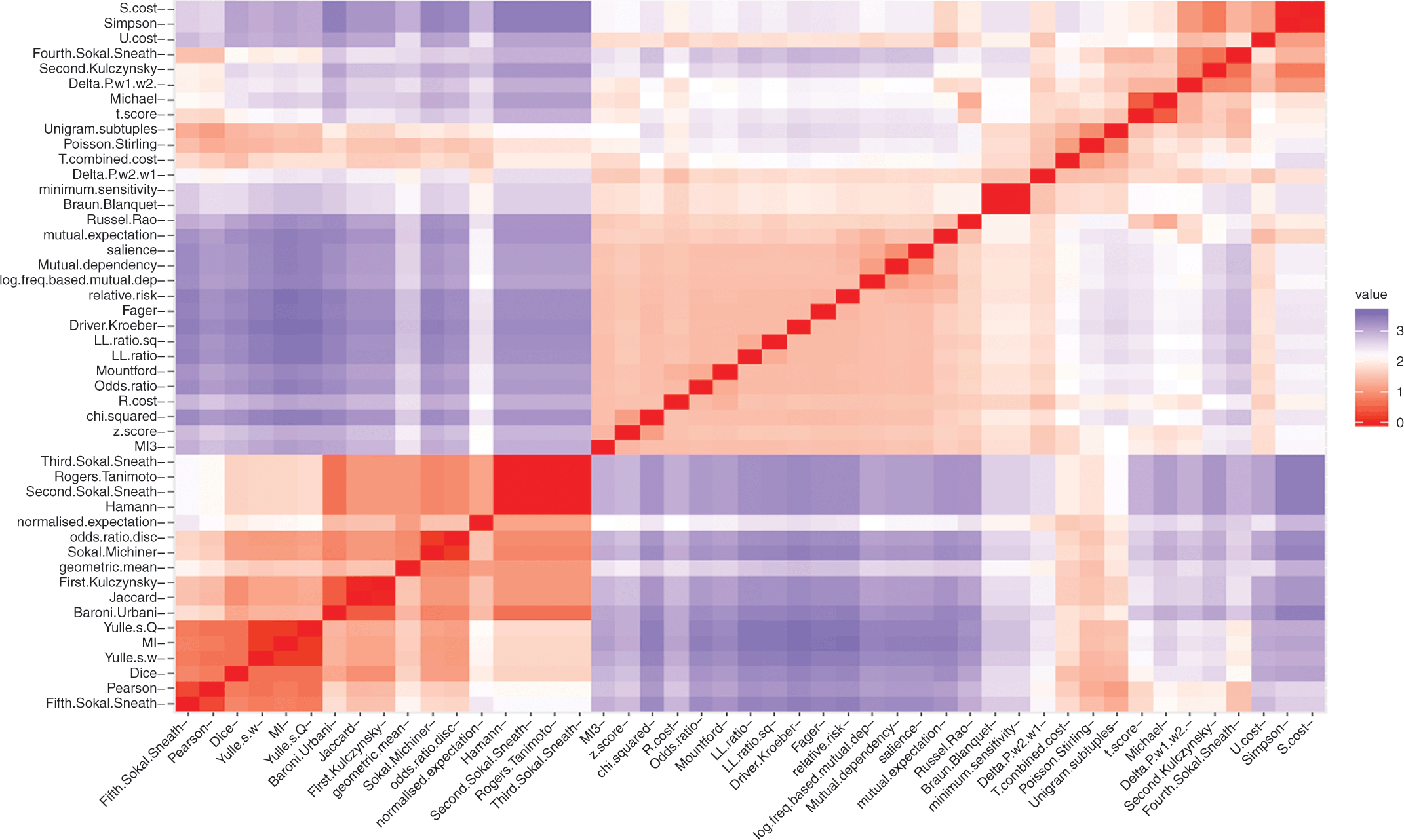

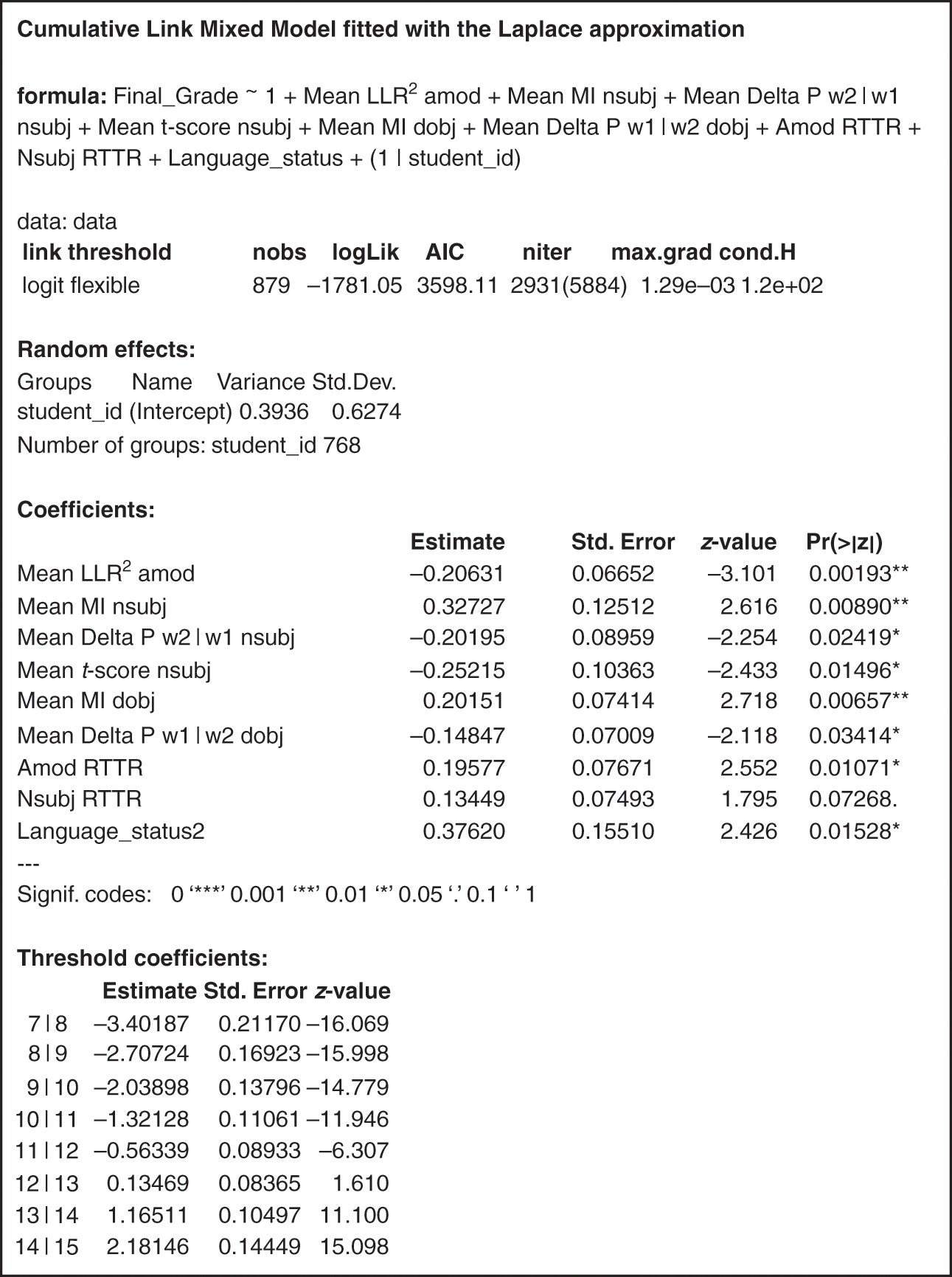

Genre types were decided by two raters (Reference Römer and O’DonnellRömer & O’Donnell, 2011). Forty-four per cent of texts were reports, 22 per cent of texts were argumentative writing, 17 per cent were research papers, 7 per cent were critiques, 6 per cent were proposals, 3 per cent were response papers, and 1 per cent was creative writing. A second reason for choosing MICUSP related to its size, with the corpus itself representing one of the largest corpora of student writing across multiple levels of study (undergraduate and graduate), disciplinary subject, and language backgrounds (in alignment with the study corpus), MICUSP also includes non-native student writers (e.g., Arabic, Chinese, and Spanish L1 speakers). The access to 2.8 million words of student writing contained within MICUSP was also a draw in choosing a reference corpus as it is a valid representative of authentic student writing which could act as a target for the first-year writers in this Element.