In the aftermath of the global COVID-19 crisis, the need for mental health services has risen sharply throughout the world. As a result, most jurisdictions lack sufficient mental health resources to address the surge, and many mental illnesses go undiagnosed or are misdiagnosed. When untreated or undertreated, the dysfunction worsens, causes increasing distress, raises treatment costs, reduces productivity, and, too often, results in loss of life. Thankfully, artificial intelligence (AI) has come to the rescue. AI uses computational tools and algorithms that assist with individual diagnosis and help refine psychiatric diagnostic categories. In the intricate art of pattern recognition, AI far surpasses humans, whose attempts in the field of mental health have fallen appreciably short of expectations. AI can potentially turn this around.

In our opinion, Internet- and mobile-based interventions (IMIs) are the best way to address the long waiting lists for conventional face-to-face psychological therapy. Telephone- and internet-based chatbots already help people access needed information, support, and guidance. Such chatbots offer non-judgmental, unbiased, and personalized care using algorithms that emulate psychotherapeutic skills such as empathy, patience, humor, and positive feedback. By drawing on big data from anonymized patient medical records, as well as from social media posts, blogs, and surveys, AI can arrive at working diagnoses at early stages of a disorder, thereby reducing treatment costs and improving prognosis.

AI is well suited to dynamic therapeutic resources such as virtual reality (VR) tools and natural language processing (NLP) strategies. In the future, these may yield important benefits for the delivery of psychiatric services. One example is individualized therapy. Another is the wider range of psychiatric interventions that could be tailored to the needs of individual patients. Additionally, as has already been shown in Alzheimer’s disease, AI reading of brain scans is likely to improve diagnostic accuracy. Greater diagnostic precision should, in turn, enable effective management of psychiatric conditions that currently respond poorly to treatment.

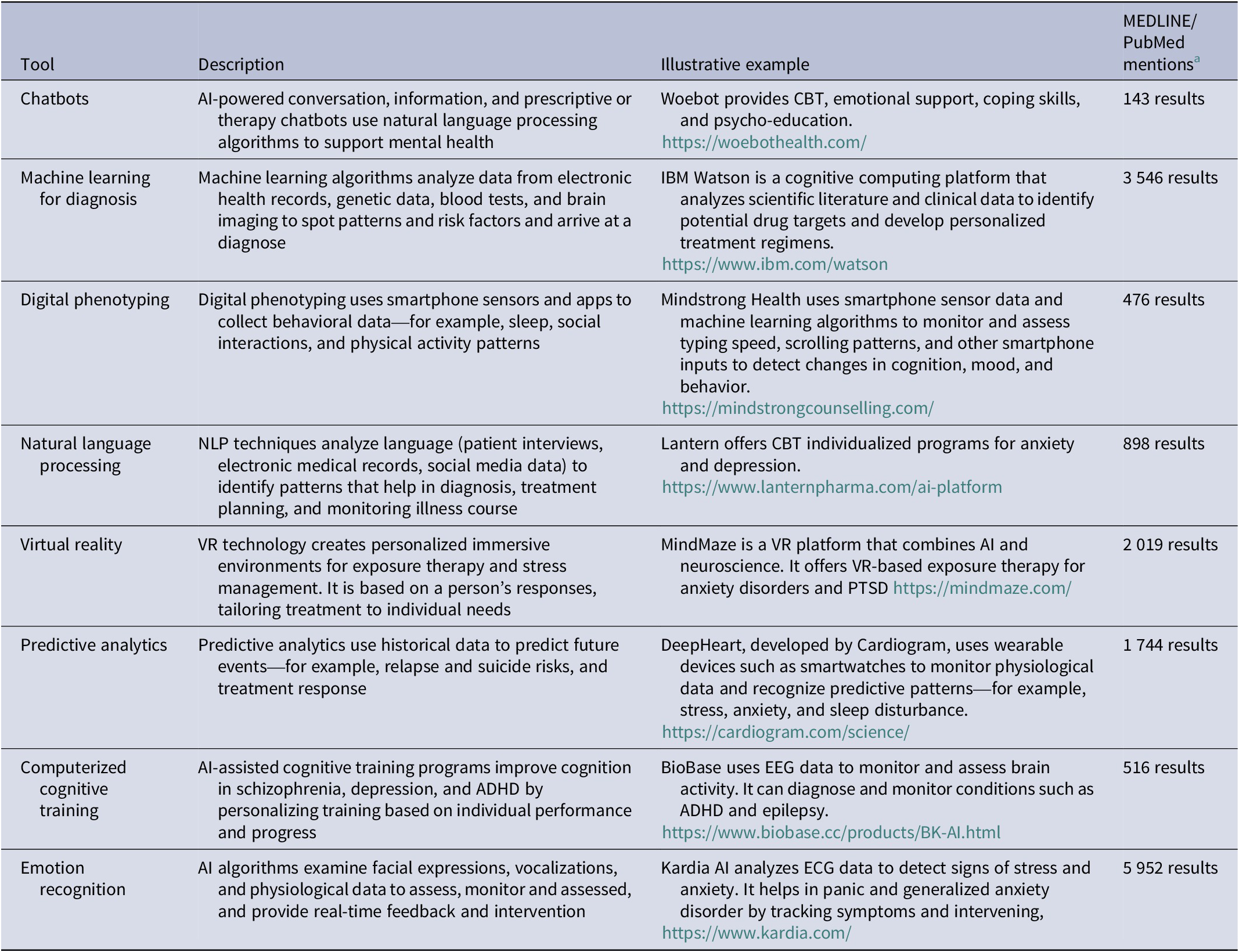

AI can enhance many aspects of mental health care, and the list of possibilities is long. AI can contribute to the early detection of mental disorders. It can also refine diagnostic categories, improve diagnostic accuracy, and personalize treatment plans. Other potential contributions include:

- recognizing risks and predicting outcomes;
- providing remote support and monitoring;
- expanding treatment access and affordability;
- facilitating data-driven interventions.

AI may substantially improve mental healthcare delivery by analyzing patient health information (PHI) such as laboratory results. It can also interpret imaging data and capture electronic health record (EHR) information to detect trends, patterns, and problems in real-time data (RTD). Beyond capture, AI can classify data, track progress and retrogression, and recommend treatment changes. AI algorithms can forecast probable events and provide actionable recommendations for effective triage and the prevention of tragic outcomes. AI may also prove extremely useful in self-assessment and self-management. Once in place, AI could equip patients with the tools they need to automate daily routines and self-manage many distressing features of illness, especially in chronic and/or recurrent disorders. Larger, evidence-based studies are still awaited. Even so, AI for mental health is already making inroads into clinical practice by expanding access (Table 1). In the United States, for example, the Food and Drug Administration (FDA) has relaxed rules to allow greater use of digital treatment tools for people with mental health conditions.

Table 1. AI tools used in psychiatry

Note: Search as of 13 August 2023. Search syntax = ((psychiatry) OR (mental health)) AND (“AI Tool”).

ᵃ MEDLINE/PubMed mentions refer to the generic tools and not necessarily to the specific example selected.

Abbreviations: ADHD, attention deficit hyperactivity disorder; AI, artificial intelligence; CBT, cognitive behavioral therapy; ECG, electrocardiogram; EEG, electroencephalogram; IBM, International Business Machines Corporation; NLP, natural language processing; PTSD, post-traumatic stress disorder; VR, virtual reality.

However, while looking ahead to the promise of AI, it is essential to acknowledge its challenges and drawbacks. These include:

- ethical quandaries;
- privacy and security threats;
- the specter of robotic, inhumane care;
- the potential for bias being programmed into the original algorithm;
- the inability to adapt to patient context;
- the prospect of increasing dependence on technology;
- the possibility of error (which, of course, is omnipresent in clinical medicine).

Of major concern are the following:

- the lack of regulatory frameworks for AI;
- our society’s overemphasis on technology at the expense of experienced human judgment;
- the considerable difficulty of integrating AI into existing systems;
- discrepancies between AI recommendations and clinician decisions that may prove difficult to resolve;
- problems of transparency and interpretability in existing algorithms, including the potential for “black box” decision-making;
- technical limitations and errors;
- limited applicability to minority populations;
- high implementation costs and resource requirements;
- understandable resistance to change within and outside the healthcare profession;
- unintended and unforeseen consequences;
- over-reliance on single responses to problems that may well need multiple solutions.

Legal and accountability repercussions may also result. Mental health is considered the most humanistic field of medicine. This leads to legitimate concern about dehumanization, the erosion of conventional wisdom and professional experience, and the delegation of control to a computer. With care, however, algorithmic decision-making should reduce the inequality and discrimination that currently exist (high-income countries having hijacked biomedicine) and allow for cultural variation in psychotherapeutic methods. The use of AI should be approached with all necessary caution.

An obvious obstacle to implementation is that few mental health personnel are trained in AI technology. It is vital that such training be made mandatory and that mental health providers be offered incentives to keep up with technological advances. Psychologists and psychiatrists must stay current in this fast-moving field. To ensure that as many people in need as possible receive the best possible care, modern technology must become part of psychiatric training and continuing education. We strongly recommend that the healthcare system prepare psychiatric services to meet the demands of a constantly changing world by embracing the power of AI.

The demand is significant, and the possibilities are vast. However, neither patients nor providers are yet convinced. As with any new technology, there is understandable fear of untoward consequences. That fear will be assuaged only if negative social repercussions are foreseen, evaluated, and prevented.

It remains unclear whether AI will be adopted in the delivery of mental healthcare, to what extent, and how quickly. What is unquestionable is that AI is here to stay and that its use will continue to expand. AI models that may not prove useful in real-world situations should not be promoted prematurely. They must first be appropriately validated. They must also be thoroughly scrutinized by experts in law, ethics, public policy, and human rights. Before widespread use, each model must be shown to meet jurisdictional quality and regulatory standards. In the meantime, mental health practitioners have time to learn about, and train themselves in, AI technology.

In the digital age in which we live, the rise of AI brings both benefits and pitfalls. Stakeholders, a group that includes all of us, need to evaluate the current state of AI applications for mental health. We need to understand the trends, gaps, opportunities, challenges, and weaknesses of this new technology. As it evolves, rules, guidelines, standards, policies, and regulatory frameworks will develop and gradually scale up. Mental health practitioners need to be ready.

Data availability statement

No datasets were used or generated in this study.

Author contribution

All authors contributed equally.

Disclosure

The authors declare none.