Health care faces serious challenges regarding the economic sustainability of the system. Decisions must be made on how to achieve and maintain effective and accessible health care. Thorough assessment of the impact of existing and new healthcare practices on relevant outcomes is warranted to ensure delivery of the right care, at the right time, to the right person. Impact can be understood as the (un)intended consequences of healthcare practices for different health and care outcomes, including efficacy, safety, effectiveness, costs, and other aspects of care provision. During the 1980s, health technology assessment (HTA) grew into a discipline, producing and summarizing evidence about the efficacy and efficiency of predominantly pharmaceutical innovations (1–4). Over the years, HTA extended the conceptual and methodological assessment of clinical outcomes and cost-effectiveness of pharmaceuticals to include patient perspectives, organizational dimensions, and other societal aspects (5;6). In parallel, the role of technology in health care gradually expanded from a biomedical orientation toward a more holistic approach that includes medical devices such as imaging tools, robotics, and digital healthcare solutions.

Currently, novel interventions are commonly assessed through formal HTA, which mostly evaluates clinical outcomes to support evidence-informed decision and policy making. In general, HTA applies frameworks that mostly involve quantitative properties of the intervention (7). Such frameworks specify methods for assessing the qualities of the intervention under study, including comparative effectiveness research (CER) and systematic and meta-analytic reviews of the clinical effectiveness of an intervention, expressed as numbers needed to treat, or cost-effectiveness estimates such as incremental cost-effectiveness ratios (7;8).
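The two summary measures named above have standard textbook definitions, written out here for readers less familiar with them. The notation is ours, and the figures in the worked example are hypothetical rather than taken from any of the reviewed frameworks. C denotes the mean cost per patient, E the mean effect (for example, quality-adjusted life years, QALYs), p the risk of the adverse outcome under each option, and ARR the absolute risk reduction.

```latex
\[
\mathrm{ICER} = \frac{C_{\text{new}} - C_{\text{comp}}}{E_{\text{new}} - E_{\text{comp}}},
\qquad
\mathrm{NNT} = \frac{1}{\mathrm{ARR}} = \frac{1}{p_{\text{comp}} - p_{\text{new}}}
\]
% Hypothetical example: costs 1200 vs 1000 per patient, effects 0.80 vs 0.75 QALYs,
% adverse-outcome risk 0.15 with the new intervention vs 0.20 with the comparator.
\[
\mathrm{ICER} = \frac{1200 - 1000}{0.80 - 0.75} = 4000 \text{ per QALY gained},
\qquad
\mathrm{NNT} = \frac{1}{0.20 - 0.15} = 20
\]
```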

Various national and international organizations are engaged in HTA and involved in creating or guiding the development of standards for the evidence required, including for digital technologies. For example, the National Institute for Health and Care Excellence (NICE) in England recently developed an Evidence Standards Framework for Digital Health Technologies (9). This framework provides technology developers with standards for the evidence needed to demonstrate the (cost-)effectiveness of health technologies in the UK health and care system. Zorginstituut Nederland (ZIN, the Dutch National Health Care Institute) has a statutory mandate to systematically assess whether healthcare interventions are deployed in a patient-oriented, clinically effective, and cost-effective manner. Such analyses are part of its flagship program “Zinnige Zorg” (appropriate care), specifically designed to identify ineffective and unnecessary care across a number of themes, including patient-centeredness, shared decision making, and approaches to stepped care (10;11). ZIN currently hosts the secretariat of EUnetHTA, a collaborative network that aims to produce and contribute to HTA in Europe. Following trends of professionalization and a widening focus, HTA practice has evolved toward structured assessments of the impact of a variety of health innovations across a multitude of outcomes related to health and the organization of care.

eHealth refers to the organization, delivery, and innovation of health services and information using the Internet and related digital technologies (12;13). As an umbrella concept, it includes a broad spectrum of digital technologies that purport to assess, improve, maintain, promote, or modify health, functioning, or health conditions through diagnostic, preventive, and treatment interventions in somatic and mental health care. eHealth is expected to improve the accessibility, affordability, and quality of health care (14). It has been argued that eHealth diverges from traditional health care because of its technological properties, its speed of development, and the complexity of implementing it in existing routine care, as eHealth often affects interlocking levels of organizational, staff, and client behaviors, beliefs, and norms (15;16). Meanwhile, investments in eHealth are rising, the need to transform health care is pressing, and a wide array of both proven and unproven eHealth applications is increasingly being used.

Discussions on the need for eHealth-specific HTA frameworks are ongoing. Technology generally evolves quickly, and impact assessment has often been perceived as a hindering factor. Moreover, a technology must reach a certain level of maturity before evidence can be provided to inform decision making. Studies have investigated the use of Rapid Relative Effectiveness Assessments (REAs) to address these concerns and to reduce the time required for obtaining evidence and conducting the assessments (17). However, to improve comparability across healthcare settings, these approaches primarily focus on effectiveness (under routine care conditions) and safety, leaving out topics deemed more context-dependent, such as costs and organizational aspects.

Nevertheless, the necessity of incorporating eHealth into traditional care practices, and the inherent complexity of achieving sustainable change, require a multi-perspective and multi-method approach for assessing the impact of eHealth services on relevant outcomes. Ultimately, eHealth is about the health and care of and for real people, and eHealth services should therefore be no exception to rigorous assessment of their impact on health and care. It is unclear how eHealth services can be assessed systematically to inform and support decision making in policy and practice prior to their implementation (16). Unlike traditional HTA, assessments of eHealth services (eHTA) are less grounded in structured approaches. Reasons may include the diverse and rapidly evolving technological properties of eHealth and its amalgamative nature, that is, the interconnectedness of such services with behavioral, cultural, and organizational aspects of healthcare delivery (11;15;18;19).

We conducted a systematic review of the scientific literature to answer the following question: which frameworks are available to assess the impact of eHealth services on health and care provision? We regard assessment frameworks as providing a conceptual structure for the assessment, but not necessarily specifying the methods and evaluation instruments to be used in conducting the actual assessments. A conceptual structure specifies, for example, that the effectiveness, safety, and required technical infrastructure of an eHealth service should be assessed. Examples of methods and instruments used to assess such concepts include randomized controlled trials measuring changes in blood levels as an indication of symptom reduction, or data transfer speed tests to establish the required bandwidth. Because of this distinction, we also identified the specific assessment methods and instruments proposed by the frameworks.

Methods

A broad, highly sensitive search strategy was built around two key terms: “eHealth” and “health technology assessment.” A total of 155 synonyms were formulated, resulting in a fine-grained search string for use in five online bibliographic databases: PubMed (Medline), Cochrane (Wiley), PsycINFO (EBSCO), Embase, and Web of Science (Thomson Reuters/Clarivate Analytics). A trained librarian guided the development of the search strings (see Supplementary file 1).

All identified papers were examined for eligibility independently by two researchers (CV and LB). Disagreements were resolved by discussion. The following inclusion criteria were applied: (a) the assessment was conducted in a clinical setting, (b) it used an explicit HTA framework, and (c) the clinical intervention included a digital technological component. Articles were excluded if:

• The primary aim was to establish clinical efficacy, effectiveness, cost-effectiveness, feasibility, piloting, usability testing, or design of eHealth services.

• The services included in the assessment could be categorized as medical devices (assistive technology, imaging, and surgical devices), information systems (electronic health records, decision-making tools, scheduling systems, information resources, and training and education), or pharmacological interventions.

• They were viewpoints, commentaries, editorials, study protocols, proposals, presentations, posters, reviews of HTA use, and/or development proposals.

• The full-text article was not available or was not in English.

A standardized data extraction form was developed to extract relevant data from the remaining articles, including:

• General study characteristics.

• eHealth service assessed (participants, inclusion criteria, intervention aim and working principles, target disorder, technological principles, outcomes, and setting).

• HTA framework used (purpose, structure, advantages, methods, evaluation dimensions and criteria, working principles, and intended users).

• Methods and instruments applied (type, aims, purpose, instruments, data collection methods, and link to framework).

A systematic qualitative narrative approach was applied for analysis (20–22). Commonalities across the frameworks were examined to structure the analysis in terms of what, when, and how specific properties of eHealth services can be assessed.

Results

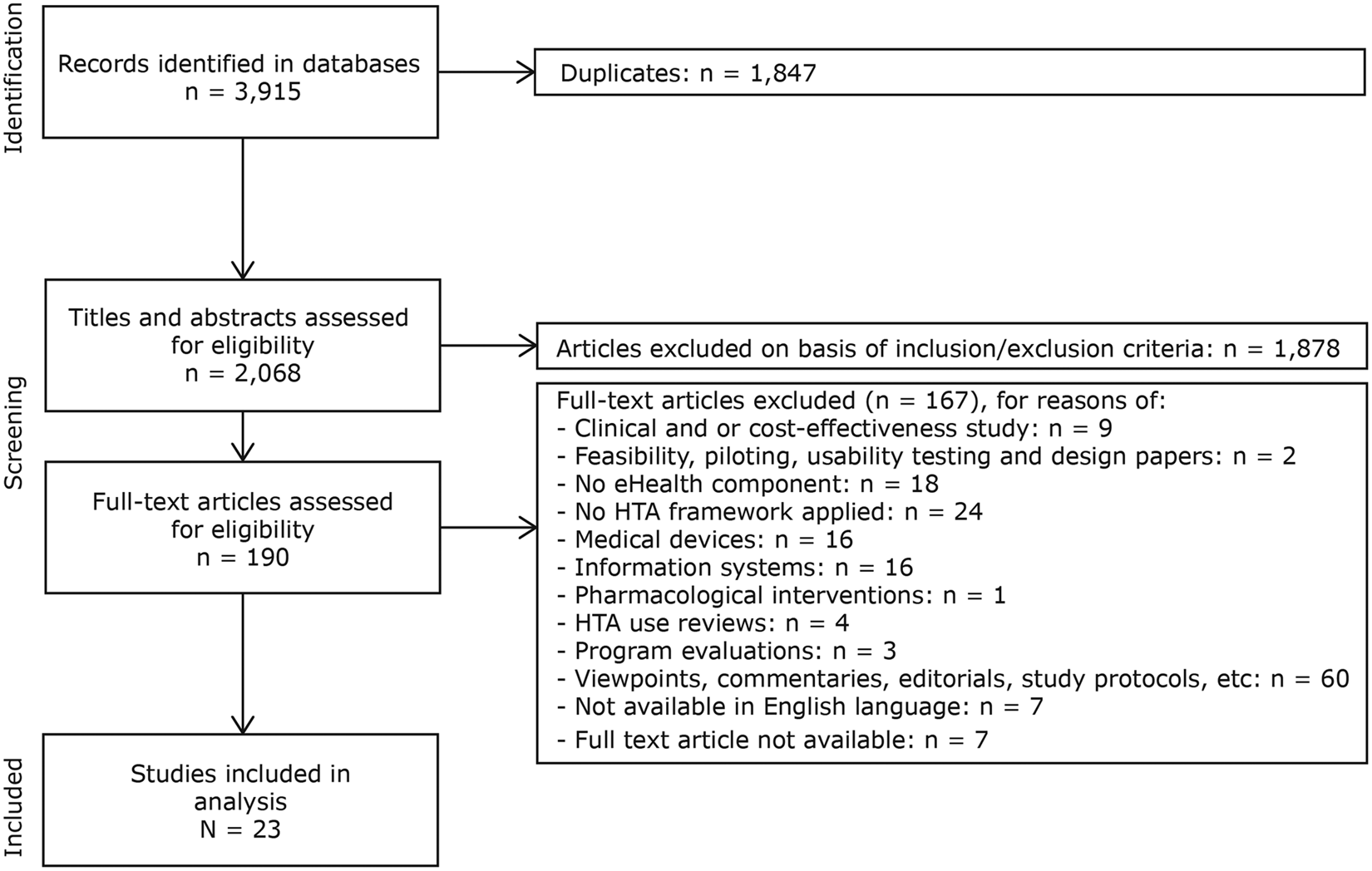

The searches, which were performed in March 2018, resulted in 3,915 articles. After removing duplicates, 2,068 titles and abstracts were screened for eligibility. Figure 1 provides an overview of the identification and selection of studies in different phases of the screening.

Fig. 1. PRISMA flowchart illustrating study identification and selection process.

eHTA Frameworks

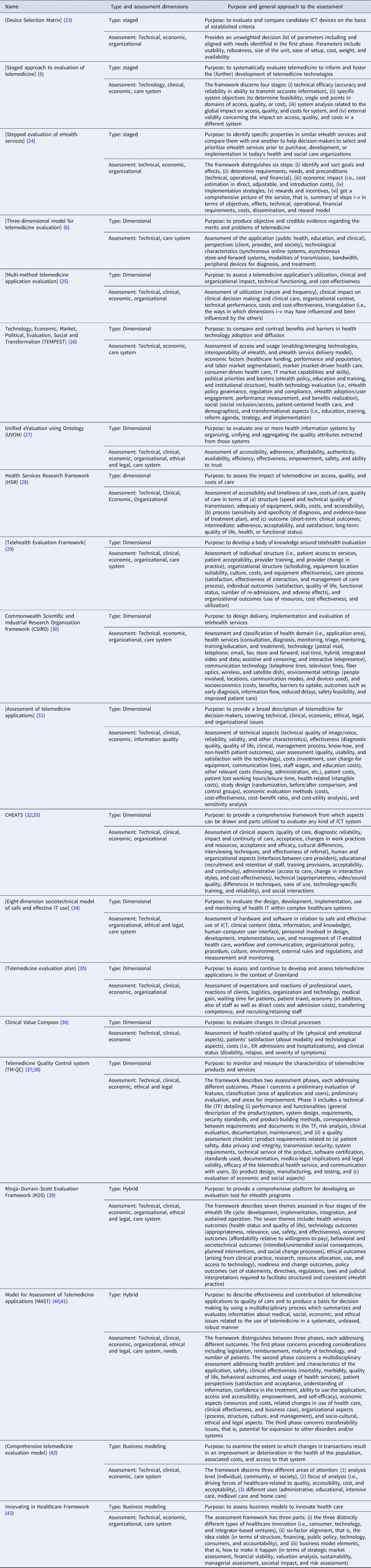

The twenty-three articles included in this review report a total of twenty-one distinct eHTA frameworks. Four categories of frameworks emerged from the analysis of the included literature: (i) staged (n = 3, references: 23;8;24), (ii) dimensional (n = 13, references: 6;25–38), (iii) hybrid (n = 3, described in references: 39–41), and (iv) business modeling (n = 2, references: 42;43). Table 1 provides an overview of the frameworks identified according to their classification. All frameworks included in this review are summarized in Table 2.

Table 1. Classification of the frameworks identified in the review

n and N refer to the number of unique frameworks found in this review.

Columns 2–10 indicate the assessment dimensions:

• Technical: aspects related to the technical characteristics of the service.

• Clinical: aspects of clinical outcomes including effectiveness, well-being, and safety.

• Economic: aspects of cost and cost-effectiveness.

• Organizational: aspects of an organizational nature such as training, resources, procedures, etc.

• Ethical and legal: ethical and legal aspects such as data protection.

• Information quality: the quality of the information included in the assessment.

• Needs: aspects defining the needs of stakeholders regarding the service under assessment.

Table 2. Main characteristics of the eHTA frameworks identified in the systematic review

[]: Square brackets indicate that the name of the framework is not explicitly mentioned by the authors but is derived by analyzing the manuscript.

Staged: indicates that the framework concerned predominantly applies a staged approach to eHealth Technology Assessment.

Dimensional: indicates that the framework concerned predominantly applies a dimensional approach to eHealth Technology Assessment.

Hybrid: indicates that the framework concerned can be categorized as combining a staged with a dimensional approach to eHealth Technology Assessment.

Business modeling: indicates that the framework concerned focuses solely on business modeling and economic aspects of eHealth Technology Assessment.

Staged Frameworks

Staged frameworks apply a sequential, phased approach for assessing outcomes relevant to the developmental phase of the eHealth service. Three frameworks advocate a staged approach: two focus on providing guidance on comparing and selecting eHealth devices, and one on informing (further) development of eHealth services. To highlight these different approaches, two frameworks are explained in more depth. Casper and Kenron (23) take a socio-technical systems approach, aligning users' values with functional elements of the eHealth service. The framework has four phases: (i) development of project needs, (ii) survey of potential eHealth services, (iii) evaluation of candidate services, and (iv) selection of the service. Important considerations in the selection process include technological, user-related, and environmental factors. In phase three, an unweighted decision list is derived from the needs identified in phase one, addressing usability, robustness, size and weight, ease of setup, costs, and availability of the eHealth solution.
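The description of phase three leaves the scoring rule implicit. The sketch below shows one plausible reading, assuming that "unweighted" means every criterion a candidate meets counts as one point and that candidates are ranked by their totals. The candidate names, the yes/no ratings, and the functions `unweighted_score` and `rank_candidates` are hypothetical illustrations, not part of the framework.

```python
# Hypothetical sketch: one reading of an unweighted decision list for
# comparing candidate eHealth services (Casper and Kenron, phase three).
# Candidate names and yes/no ratings are invented for illustration.
from __future__ import annotations

# Criteria named in the framework description; each counts equally.
CRITERIA = (
    "usability",
    "robustness",
    "size_and_weight",
    "ease_of_setup",
    "costs",
    "availability",
)


def unweighted_score(meets: dict[str, bool]) -> int:
    """Count the criteria a candidate satisfies; every criterion weighs 1."""
    missing = set(CRITERIA) - set(meets)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    return sum(1 for criterion in CRITERIA if meets[criterion])


def rank_candidates(candidates: dict[str, dict[str, bool]]) -> list[tuple[str, int]]:
    """Return (name, score) pairs, highest score first; ties keep input order."""
    scored = [(name, unweighted_score(ratings)) for name, ratings in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical shortlist produced by phase two (survey of potential services).
shortlist = {
    "service_a": {**dict.fromkeys(CRITERIA, True), "costs": False},
    "service_b": dict.fromkeys(CRITERIA, True),
    "service_c": {**dict.fromkeys(CRITERIA, False), "usability": True},
}
print(rank_candidates(shortlist))
```

Because the list is unweighted, a cheap service that fails on robustness scores the same as a robust one that fails on costs. A weighted variant would require explicit weights that the framework description does not provide.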

DeChant et al. (8) argue that besides the clinical and technological performance of eHealth services, their overall impact on health care should also be assessed. This is because eHealth services introduced into routine care can affect a range of aspects of care delivery, including care pathways and access to care (8). Consequently, their framework consists of four successive stages addressing (i) technical efficacy, (ii) healthcare system objectives, (iii) analysis, and (iv) external validity. Each stage focuses on the impact of eHealth services on the quality, accessibility, or costs of care, and at each stage the assessment is tailored to the maturity of the eHealth service.

Dimensional Frameworks

Dimensional frameworks prescribe sets of assessment outcomes grouped into dimensions. The dimensions are categorized according to the expected impacts of eHealth services, irrespective of their developmental stage. All thirteen dimensional frameworks address technical features and clinical and economic outcomes, with a few also addressing organizational, ethical, and system-level outcomes. Full details are provided in Table 2. Two frameworks are detailed below because of their distinctive features: the Technology, Economic, Market, Political, Evaluation, Social and Transformation framework (TEMPEST) (26), because of its comprehensiveness, and the Telehealth Evaluation Framework (29), which makes explicit the importance of different perspectives in assessing eHealth services.

The TEMPEST framework specifies seven domains for comparing the benefits of and barriers to eHealth service adoption (26). The domains concern outcomes of (i) access, usage, emerging technologies, interoperability, and the delivery model, (ii) economy, including funding, performance, and human resources, (iii) engagement and use in terms of the healthcare market, (iv) policy-related matters, (v) governance, regulation, and compliance, (vi) sociological aspects such as care access, and (vii) care reformation, strategies, and implementation. These seven domains are divided into twenty-one sub-themes with eighty-four quantitative outcomes covering different perspectives, including market, political, commercial, stakeholder, and individual perspectives.

Hebert (29) places the perspective from which an assessment is conducted at the center of defining the dimensions for evaluation. Building on Donabedian's work (44), the outcomes relate to individual and organizational assessment perspectives, and the underlying premise is that structure, process, and outcome are related. The framework identifies five domains: (i) individual structure, including patients' access and acceptability, and providers' training and changes in practice, (ii) organizational structure, related to scheduling, infrastructure, culture, costs, and equipment effectiveness, (iii) care process, concerning satisfaction, effectiveness, and care management, (iv) individual-level outcomes, such as patient satisfaction, quality of life, functional status, and adverse effects, and (v) organizational-level outcomes, including resource use, costs, and service utilization.

Hybrid Frameworks

Hybrid frameworks combine a phased perspective on service development with an assessment of the current impact of an innovation using varying sets of criteria to be assessed in a particular order. Three frameworks can be classified as hybrid.

The TM-QC framework (37;38) brings a technological and quality assurance perspective to eHealth assessments and consists of two phases. The first phase comprises seven dimensions for classifying the service. In the second phase, a technical dossier is compiled detailing system design, requirements, security standards, risk analysis, clinical evaluation, and maintenance aspects. In addition, a quality assessment is performed on three dimensions: (i) product requirements regarding patient safety and privacy, (ii) product design, manufacturing, and testing, and (iii) economic evaluation and social aspects. Following the quality assessment, a score is calculated and compared with a predefined threshold.
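The scoring step is only summarized above. The sketch below illustrates the general "rate, aggregate, compare with threshold" pattern under stated assumptions: each of the three quality dimensions is rated on a 0 to 10 scale, the ratings are combined by an unweighted mean, and the threshold is 6. None of these choices comes from the TM-QC framework itself, and the names `quality_score` and `meets_threshold` are hypothetical.

```python
# Hypothetical sketch of a TM-QC-style quality check: rate the three quality
# dimensions, aggregate, and compare with a predefined threshold. The 0-10
# scale, the unweighted mean, and the threshold of 6 are assumptions, not
# values taken from the framework.
from __future__ import annotations

from statistics import mean

DIMENSIONS = (
    "product_requirements",          # patient safety and privacy
    "design_manufacturing_testing",  # product design, manufacturing, and testing
    "economic_and_social",           # economic evaluation and social aspects
)


def quality_score(ratings: dict[str, float]) -> float:
    """Unweighted mean of the per-dimension ratings (assumed 0-10 scale)."""
    for dim in DIMENSIONS:
        if not 0 <= ratings[dim] <= 10:
            raise ValueError(f"{dim} rating out of range: {ratings[dim]}")
    return mean(ratings[dim] for dim in DIMENSIONS)


def meets_threshold(ratings: dict[str, float], threshold: float = 6.0) -> bool:
    """True if the aggregate score reaches the (assumed) predefined threshold."""
    return quality_score(ratings) >= threshold


example = {
    "product_requirements": 8,
    "design_manufacturing_testing": 7,
    "economic_and_social": 6,
}
print(quality_score(example), meets_threshold(example))
```

An unweighted mean lets a high rating on one dimension offset a low rating on another. An assessor who wants safety and privacy to act as a hard constraint would instead need a per-dimension minimum, which is a design choice the framework description leaves open.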

The Khoja–Durrani–Scott Evaluation Framework (KDS) (39) aligns its evaluation themes with the life-cycle phases of eHealth services: (i) development, (ii) implementation, (iii) integration, and (iv) sustained operation. Across these phases, seven groups of outcomes are assessed: (i) health outcomes, (ii) technology, including the appropriateness, relevance, use, safety, and effectiveness of the service, (iii) economy, related to affordability and willingness-to-pay, (iv) behavioral and sociotechnical outcomes, covering (un)intended social consequences and social change processes, (v) ethical aspects, (vi) readiness and change outcomes, and (vii) policy outcomes, concerning the facilitation of consistent eHealth service delivery. For each assessment outcome, specific evaluation methods are included (the KDS tools) (45).

The Model for Assessment of Telemedicine applications (MAST) (40;41) defines a three-phase assessment: (i) preceding considerations to determine the relevance of an assessment, (ii) a broad range of outcomes structured in seven domains, and (iii) transferability, to understand the potential for scaling up or out. In phase one, relevant regulatory aspects (financial, maturity, and potential use) are assessed, addressing questions about the purpose, alternatives, the required level of assessment (international, national, regional, or local), and the maturity of the eHealth service. Phase two is based on the EUnetHTA Core Model (46;47) and covers seven domains: (i) the health problem targeted, (ii) clinical and technical safety, (iii) clinical effectiveness, (iv) patient perspectives, including satisfaction, acceptance, usability, literacy, access, empowerment, and self-efficacy, (v) economic evaluation, addressing costs, related changes in healthcare use, and a business case, (vi) organizational aspects, including procedures, structure, culture, and management, and (vii) further socio-cultural, ethical, and legal issues. The third phase focuses on assessing the potential to effectively transfer the eHealth service to other healthcare systems and its scalability in terms of throughput and costs.

Business Modeling Frameworks

Whereas the previous frameworks provide a list of outcomes to consider in assessing eHealth services, business modeling frameworks in this context focus on the economic viability and business models of eHealth services.

Alfonzo et al. (42) place transactional distances in providing and receiving care at the center of their framework. Transactional distance refers to any factor affecting an interaction that creates distance between the parties, such as education, culture, ethnicity, gender, health status, or geography (42). The purpose of the assessment is to examine the extent to which changes in these transactions result in an improvement or deterioration in health, associated costs, and access to care.

The Innovating in Healthcare Framework proposed by Grustam et al. (43) takes a business modeling approach to the assessment of eHealth services. The framework consists of three parts: (i) types of healthcare innovation, (ii) a six-dimension assessment, and (iii) business model elements (43). The assessment includes evaluation of the (i) structure of the system, (ii) financing mechanisms, (iii) regulatory public policies, (iv) technological, developmental, and competitive aspects, (v) consumer empowerment, and (vi) accountability. Business modeling includes assessing the (i) market, (ii) financial viability, (iii) valuations regarding cash flows and rates of return, (iv) financial sustainability, (v) managerial skills and requirements, (vi) societal impact, and (vii) technological risks.

Assessment Methods and Instruments

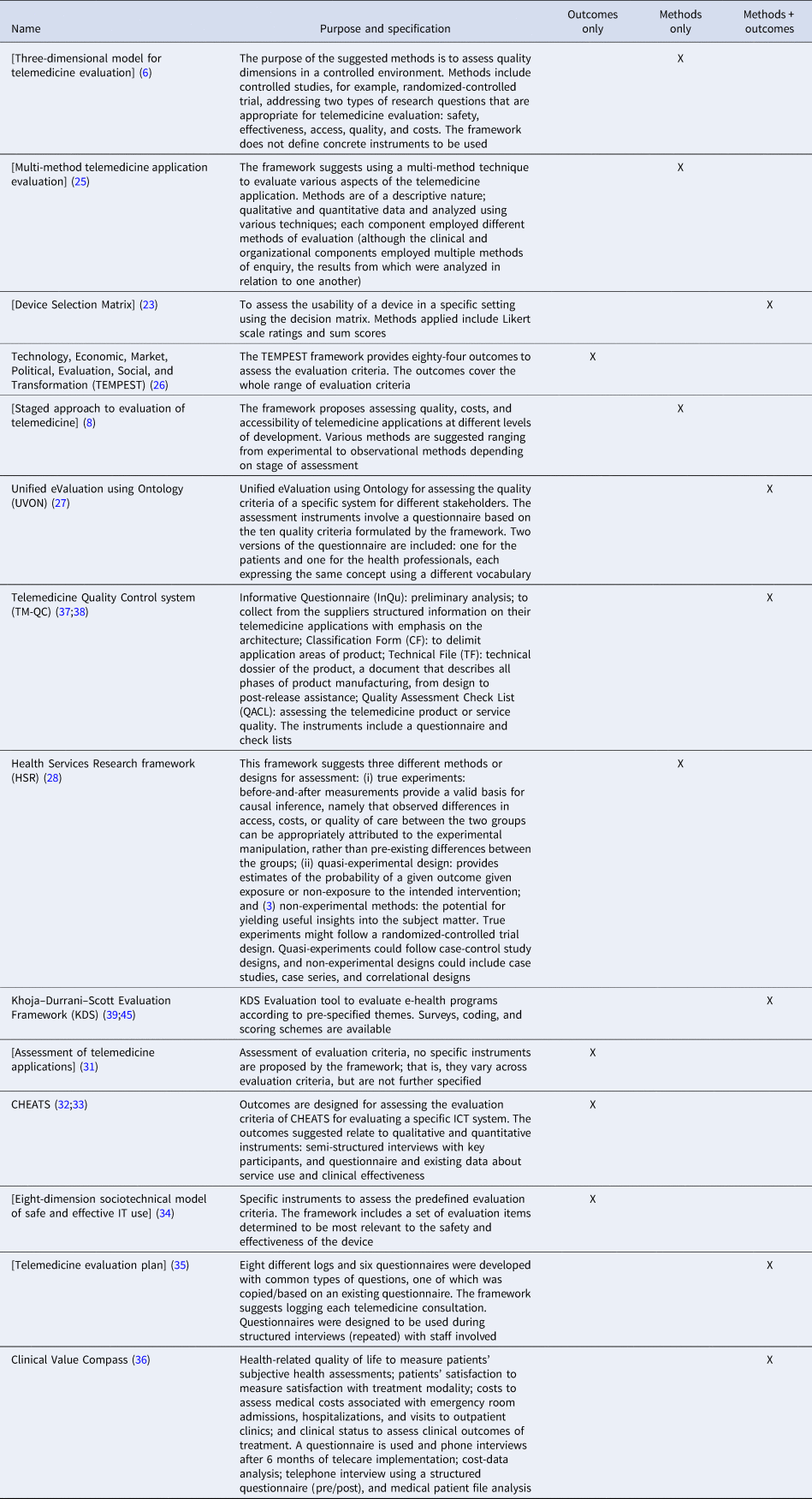

Specific instruments for outcome assessment and data collection methods were reported for six frameworks. Four frameworks specified only the methods for data collection and performing the assessments. Another four frameworks include a list of specific instruments that can be used to assess the outcomes, but not the methods for collecting and evaluating the data. The frameworks, their methods, and instruments are listed in Table 3.

Table 3. Instruments, methods, and outcomes defined by each framework

Frameworks that specify a set of methods for data collection and evaluation do so broadly. For example, the HSR framework suggests a number of broadly defined methodological strategies for assessing outcomes, ranging from randomized controlled trials to quasi-experiments using case-control studies, and non-experimental designs such as case studies and correlational research (28).

Four frameworks include operationalized sets of outcomes (i.e., instruments) related to the assessment domains. The most comprehensive set of instruments is provided by the TEMPEST framework (26). In total, eighty-four specific outcomes are defined, including technology penetration and use to assess emerging technological trends, health expenditures to inform economic assessment, and population-level epidemiological outcomes for assessing health policies.

Six frameworks specify concrete instruments and methods for collecting data and evaluating outcomes. For example, KDS includes four separate evaluation tools corresponding to the four eHealth life-cycle phases specified by the framework (39). Each of these tools includes a set of questions for three types of users (managers, providers, and clients), covering the seven outcome themes included in the framework (39;40).

For seven of the twenty-one frameworks, no specific assessment instruments or methods were reported. The main reason given for not specifying methods or instruments for collecting data is that the operationalization of outcomes depends on the purpose, the technology, the patient group, and the context in which the eHealth service is to be implemented. In addition, the choice of outcomes and data collection methods within each assessment domain must follow current state-of-the-art research methods within that domain to produce valid and reliable assessments (41).

eHealth Services Assessed

The eHealth services reported in the articles were described broadly and served mainly illustrative purposes. The reported clinical purposes were diagnostic (n = 1), monitoring (n = 5), and treatment (n = 2), and areas of application included chronic diseases such as multiple sclerosis, chronic heart failure, AIDS, chronic obstructive pulmonary disease (COPD), diabetes, and diabetic foot ulcers. However, patient profiles were not specified. The settings in which the eHealth services were to be implemented included clinical settings, non-clinical settings, or a combination of both. Examples of clinical settings and disciplines were emergency rooms, and tele-diagnosis and telemonitoring in pathology, psychiatry, ophthalmology, wound care, obstetrics, pediatrics, dermatology, and cardiology. Non-clinical settings related, for example, to patients' homes, with services such as tele-management systems, tele-rehabilitation, home-based self-monitoring, tele-education, and tele-consultation. A third category of eHealth services aimed to enable collaborative and integrated care between care providers, for example to facilitate knowledge transfer for diagnostic and monitoring purposes.

Only a few of the included studies reported on actual application of the proposed framework. For instance, one study assessed the benefits of short-term implementation of a physical tele-rehabilitation system compared with usual care for Hebrew-speaking patients over 18 years of age with relapsing-remitting multiple sclerosis (36). The assessment used the Clinical Value Compass framework, defining outcomes in four domains: clinical symptom severity, health-related quality of life, patient satisfaction, and medical costs (36). Another example of an experimental approach to verifying the utility of a specific eHealth assessment framework is the study reported by Ekeland and Grottland (40), which examined the utility of the MAST framework in the context of twenty-one implementation pilots of telemedicine services for diabetes, COPD, and cardiovascular disease.

Discussion

Main Findings

This study identified twenty-one eHTA frameworks that can be classified into four categories: staged, dimensional, hybrid, and business modeling. Two frameworks were reported in multiple articles: MAST (40;41) and CHEATS (32;33). Most frameworks consist of a set of assessment domains related to technical requirements and functionalities; clinical, economic, organizational, ethical, and legal characteristics; and characteristics of the healthcare system and stakeholders' needs. The guidance these frameworks provide on the operationalization of outcomes, instruments, and concrete methods for assessing the impact of eHealth services varies considerably. The eHealth services presented in the articles served mostly illustrative purposes, leaving the applicability and real-world usefulness of the frameworks unproven.

The MAST framework provides the most comprehensive approach, covering all domains identified in this review. The importance of such comprehensiveness is also highlighted in a review of evaluation criteria for non-invasive telemonitoring of patients with heart failure (48). However, not all domains may be relevant to a particular eHealth service, not only because of its development phase, but also because of its specific aims and properties and the context in which it is to be implemented.

The tension between providing comprehensive standards for assessing all eHealth services and ensuring that assessment outcomes are relevant to a specific eHealth service is underlined by the fact that most authors of the included frameworks share the view that a generic assessment framework for eHealth services is important, and do not include concrete assessment methods. As a result, specificity is sacrificed and the applicability of the frameworks for assessing particular eHealth services is reduced. With its Evidence Standards Framework for Digital Health Technologies, NICE introduces a way to stratify eHealth services into evidence standards proportionate to the potential risk to end users (9). A pragmatic functional classification scheme is proposed that differentiates the main functions of the eHealth service, and for each of these functions, different levels and types of assessment data are required. Nevertheless, this approach (still) focuses solely on the clinical effectiveness of the eHealth service and its economic impact. It does not provide standards for assessing technical requirements and functionalities or organizational, ethical, and legal aspects, nor does it address impacts on the healthcare system as a whole. While eHealth services deserve no exemption from rigorous assessment of their impact on health and care, an extended NICE framework might offer practical guidance that steers toward assessing impacts beyond clinical effectiveness and cost-effectiveness, and toward harmonization and a reduced proliferation of extensive frameworks.

Limitations

This study is limited by its focus on the scientific literature. Because HTA often has an applied emphasis on informing policy, assessment methods and outcomes are not necessarily disseminated through the scientific literature. Gray literature was left out of this study because of issues with accessibility, the selection of relevant sources, and its heterogeneous, unstructured, and often incomplete nature (49). However, methods and guidelines for using these resources in systematic reviews are maturing (50). In addition, practice-oriented expert feedback using qualitative and quantitative methods such as Delphi or Concept Mapping techniques (51) might enrich the results of this study and could prove useful in harmonizing and standardizing frameworks for eHTA.

Recommendations

Considering the variation in the domains assessed, and in the level of detail of outcome definitions and instruments in the eHTA frameworks found, assessment frameworks would gain considerably in transparency, comparability, and applicability if (i) the eHealth service being assessed is defined in a structured manner in terms of its clinical aim, target group, and working mechanism, and of what is to be provided, by whom, and how, and (ii) the assessment methods are standardized in terms of measurable outcomes and instruments. Moreover, eHTA frameworks should be evaluated systematically to validate their utility and applicability in real-world assessment practice.
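As an illustration of recommendation (i), a structured description can be kept as a simple record with one field per element, so that incomplete descriptions are easy to flag before an assessment starts. The sketch below is hypothetical: the class name `EHealthServiceDescription`, its field names, and the example values are ours, chosen to mirror the elements listed in the recommendation, and are not drawn from any of the reviewed frameworks.

```python
# Hypothetical sketch: a structured description of an eHealth service with
# one field per element named in the recommendation. Class and field names
# are illustrative assumptions.
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class EHealthServiceDescription:
    clinical_aim: str        # e.g., symptom monitoring
    target_group: str        # who the service is intended for
    working_mechanism: str   # how the service is expected to bring about change
    what: str                # what is provided (content, materials, technology)
    who: str                 # who provides or supports the service
    how: str                 # mode of delivery

    def missing_elements(self) -> list[str]:
        """Names of elements left empty, so incomplete descriptions can be flagged."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


desc = EHealthServiceDescription(
    clinical_aim="home telemonitoring of symptoms",
    target_group="adults with chronic heart failure",
    working_mechanism="",
    what="tablet app with connected weighing scale",
    who="specialist heart failure nurses",
    how="daily self-measurement with remote review",
)
print(desc.missing_elements())
```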

The Template for Intervention Description and Replication (TIDieR) checklist could be a useful resource for reporting eHealth services for eHTA purposes (Reference Hoffmann, Glasziou, Boutron, Milne, Perera and Moher52). The checklist is designed to be generically applicable to reporting complex interventions. It contains twelve items covering the rationale for the intervention, its materials and procedures, and provision details, as well as measures for ensuring adherence and fidelity.

To improve the standardization of eHTA, a systematic, whole-systems dialog with decision makers, policy makers, and HTA experts using a Delphi or Concept Mapping approach might facilitate consensus-based prioritization and selection of assessment methods and instruments. The outcomes defined by the TEMPEST and CHEATS frameworks, the ontological methods proposed by UVON, and the comprehensive structure of the MAST framework can serve as a starting point. The groundwork laid by EUnetHTA (JA3/WP6) and NICE should also be considered. So should the opinion report on assessing the impact of digital transformation of health services by the European Expert Panel on effective ways of investing in Health (EXPH) (53).

A stepped approach to what eHTA should assess and how, tailored to the functional characteristics of the eHealth service, could help balance the completeness and relevance of assessment outcomes and methods. For example, a first phase could assess safety and (negative) effects on clinical outcomes, combined with an economic assessment of cost-effectiveness. A second phase could then assess the impact on organizational aspects, such as required skills, changes in tasks and roles, and required interoperability with relevant eHealth systems. Such a stepped approach should improve the adequacy and efficiency of assessments. It would match the motto of the Appropriate Care program of the National Health Care Institute (10): "no more than needed and no less than necessary."

Conclusions

HTA frameworks specifically designed for eHealth services are available. Besides technical performance and functionalities, the most frequently described domains are costs and clinical aspects. Standardized sets of concrete evaluation methods and instruments are mostly lacking, and evidence demonstrating the applicability of eHTA frameworks is limited.

The purpose of eHTA is to inform healthcare policy making and to achieve evidence-based health care. To serve that purpose, the field would benefit from (i) standardizing the way in which eHealth services are reported and (ii) developing a standardized set of assessment tools that incorporates a stepped approach tailored to the functional characteristics of the eHealth service. Standardized sets of evaluation methods for each domain, together with guidelines for scoping eHealth services, might improve the transparency, comparability, and efficiency of assessments. They might also facilitate collaboration between eHTA practices across healthcare systems in decision and policy making for digital health care.

Supplementary Material

The supplementary material for this article can be found at https://doi.org/10.1017/S026646232000015X.

Acknowledgments

The authors would like to thank Dr. C. Planting of Amsterdam UMC for providing expert advice and help in operationalizing the search strategy for this systematic review. This study was funded by the Dutch National Health Care Institute because of its relevance to the Institute's Appropriate Care program.

Conflict of Interest

Mr. Vis, Ms. Buhrmann, and Prof. Dr. Riper report that their institute (Vrije Universiteit Amsterdam) received a financial contribution from the National Health Care Institute (Zorginstituut Nederland) during the conduct and for the purpose of this study. The research was conducted independently by the researchers. Dr. Ossebaard is employed by the Dutch National Health Care Institute, which funded this study.