1 Introduction

The kinetic framework is a general paradigm that aims to extend Boltzmann’s kinetic theory for dilute gases to other types of microscopic interacting systems. This approach has been highly informative, and became a cornerstone of the theory of nonequilibrium statistical mechanics for a large body of systems [Reference Spohn43, Reference Spohn44]. In the context of nonlinear dispersive waves, this framework was initiated in the first half of the past century [Reference Peierls41] and developed into what is now called wave turbulence theory [Reference Zakharov, L’vov and Falkovich51, Reference Nazarenko39]. There, waves of different frequencies interact nonlinearly at the microscopic level, and the goal is to extract an effective macroscopic picture of how the energy densities of the system evolve.

The description of such an effective evolution comes via the wave kinetic equation (WKE), which is the analogue of Boltzmann’s equation for nonlinear wave systems [Reference Spohn46]. Such kinetic equations have been derived at a formal level for many systems of physical interest (nonlinear Schrödinger (NLS) and nonlinear wave (NLW) equations, water waves, plasma models, lattice crystal dynamics, etc.; compare [Reference Nazarenko39] for a textbook treatment) and are used extensively in applications (thermal conductivity in crystals [Reference Spohn45], ocean forecasting [Reference Janssen31, Reference Burns49], and more). This kinetic description is conjectured to appear in the limit where the number of (locally interacting) waves goes to infinity and an appropriate measure of the interaction strength goes to zero (weak nonlinearityFootnote 1 ). In such kinetic limits, the total energy of the whole system often diverges.

The fundamental mathematical question here, which also has direct consequences for the physical theory, is to provide a rigorous justification of such wave kinetic equations starting from the microscopic dynamics given by the nonlinear dispersive model at hand. The importance of such an endeavour stems from the fact that it allows an understanding of the exact regimes and the limitations of the kinetic theory, which has long been a matter of scientific interest (see [Reference Denissenko, Lukaschuk and Nazarenko20, Reference Aubourg, Campagne, Peureux, Ardhuin, Sommeria, Viboud and Mordant1]). A few mathematical investigations have recently been devoted to studying problems in this spirit [Reference Faou23, Reference Buckmaster, Germain, Hani and Shatah7, Reference Lukkarinen and Spohn35], yielding some partial results and useful insights.

This manuscript continues the investigation initiated in [Reference Buckmaster, Germain, Hani and Shatah7], aimed at providing a rigorous justification of the wave kinetic equation corresponding to the nonlinear Schrödinger equation,

As we shall explain later, the sign of the nonlinearity has no effect on the kinetic description, so we choose the defocussing sign for concreteness. The natural setup for the problem is to start with a spatial domain given by a torus

![]() ${\mathbb T}^d_L$

of size L, which approaches infinity in the thermodynamic limit we seek. This torus can be rational or irrational, which amounts to rescaling the Laplacian into

${\mathbb T}^d_L$

of size L, which approaches infinity in the thermodynamic limit we seek. This torus can be rational or irrational, which amounts to rescaling the Laplacian into

$$ \begin{align*} \Delta_{\beta} \mathrel{\mathop:}= \frac{1}{2\pi} \sum\limits_{i=1}^d \beta_i \partial_i^2, \qquad \beta \mathrel{\mathop:}= (\beta_1,\dots,\beta_d)\in [1,2]^d, \end{align*} $$

$$ \begin{align*} \Delta_{\beta} \mathrel{\mathop:}= \frac{1}{2\pi} \sum\limits_{i=1}^d \beta_i \partial_i^2, \qquad \beta \mathrel{\mathop:}= (\beta_1,\dots,\beta_d)\in [1,2]^d, \end{align*} $$

and taking the spatial domain to be the standard torus of size L, namely

${\mathbb T}^d_L=[0,L]^d$

with periodic boundary conditions. With this normalisation, an irrational torus would correspond to taking the

${\mathbb T}^d_L=[0,L]^d$

with periodic boundary conditions. With this normalisation, an irrational torus would correspond to taking the

![]() $\beta _j$

to be rationally independent. Our results cover both cases, and in part of them

$\beta _j$

to be rationally independent. Our results cover both cases, and in part of them

![]() $\beta $

is assumed to be generic – that is, avoiding a set of Lebesgue measure

$\beta $

is assumed to be generic – that is, avoiding a set of Lebesgue measure

![]() $0$

.

$0$

.

The strength of the nonlinearity is related to the characteristic size

![]() $\lambda $

of the initial data (say in the conserved

$\lambda $

of the initial data (say in the conserved

![]() $L^2$

space). Adopting the ansatz

$L^2$

space). Adopting the ansatz

![]() $v=\lambda u$

, we arrive at the following equation:

$v=\lambda u$

, we arrive at the following equation:

$$ \begin{align} \begin{cases} i \partial_t u - \Delta_{\beta} u + \lambda^{2} \left\lvert u\right\rvert^{2} u=0, \quad x\in \mathbb{T}_L^d = [0,L]^d, \\ u(0,x) = u_{\text{in}}(x). \end{cases} \end{align} $$

$$ \begin{align} \begin{cases} i \partial_t u - \Delta_{\beta} u + \lambda^{2} \left\lvert u\right\rvert^{2} u=0, \quad x\in \mathbb{T}_L^d = [0,L]^d, \\ u(0,x) = u_{\text{in}}(x). \end{cases} \end{align} $$

The kinetic description of the long-time behaviour is akin to a law of large numbers, and therefore one has to start with a random distribution of the initial data. Heuristically, a randomly distributed,

![]() $L^{2}$

-normalised field would (with high probability) have a roughly uniform spatial distribution, and consequently an

$L^{2}$

-normalised field would (with high probability) have a roughly uniform spatial distribution, and consequently an

![]() $L_x^{\infty }$

norm

$L_x^{\infty }$

norm

![]() $\sim L^{-d/2}$

. This makes the strength of the nonlinearity in (NLS) comparable to

$\sim L^{-d/2}$

. This makes the strength of the nonlinearity in (NLS) comparable to

![]() $\lambda ^2 L^{-d}$

(at least initiallyFootnote

2

), which motivates us to introduce the quantity

$\lambda ^2 L^{-d}$

(at least initiallyFootnote

2

), which motivates us to introduce the quantity

and phrase the results in terms of

![]() $\alpha $

instead of

$\alpha $

instead of

![]() $\lambda $

. The kinetic conjecture states that at sufficiently long time scales, the effective dynamics of the Fourier-space mass density

$\lambda $

. The kinetic conjecture states that at sufficiently long time scales, the effective dynamics of the Fourier-space mass density

$\mathbb E \left \lvert \widehat u(t, k)\right \rvert ^2 \left (k \in \mathbb Z^d_L=L^{-1}\mathbb Z^d\right )$

is well approximated – in the limit of large L and vanishing

$\mathbb E \left \lvert \widehat u(t, k)\right \rvert ^2 \left (k \in \mathbb Z^d_L=L^{-1}\mathbb Z^d\right )$

is well approximated – in the limit of large L and vanishing

![]() $\alpha $

– by an appropriately scaled solution

$\alpha $

– by an appropriately scaled solution

![]() $n(t, \xi )$

of the following WKE:

$n(t, \xi )$

of the following WKE:

$$ \begin{align} \partial_t n(t, \xi) &={\mathcal K}\left(n(t, \cdot)\right), \nonumber \\ {\mathcal K}(\phi)(\xi)&:= \int_{\substack{\left(\xi_1, \xi_2, \xi_3\right)\in {\mathbb R}^{3d} \\ \xi_1-\xi_2+\xi_3=\xi}} \phi \phi_1 \phi_2 \phi_3\left(\frac{1}{\phi}-\frac{1}{\phi_1}+\frac{1}{\phi_2}-\frac{1}{\phi_3}\right)\delta_{{\mathbb R}}\left(\left\lvert\xi_1\right\rvert_{\beta}^2-\left\lvert\xi_2\right\rvert_{\beta}^2+\left\lvert\xi_3\right\rvert_{\beta}^2-\left\lvert\xi\right\rvert_{\beta}^2\right) d\xi_1 d\xi_2 d\xi_3, \end{align} $$

$$ \begin{align} \partial_t n(t, \xi) &={\mathcal K}\left(n(t, \cdot)\right), \nonumber \\ {\mathcal K}(\phi)(\xi)&:= \int_{\substack{\left(\xi_1, \xi_2, \xi_3\right)\in {\mathbb R}^{3d} \\ \xi_1-\xi_2+\xi_3=\xi}} \phi \phi_1 \phi_2 \phi_3\left(\frac{1}{\phi}-\frac{1}{\phi_1}+\frac{1}{\phi_2}-\frac{1}{\phi_3}\right)\delta_{{\mathbb R}}\left(\left\lvert\xi_1\right\rvert_{\beta}^2-\left\lvert\xi_2\right\rvert_{\beta}^2+\left\lvert\xi_3\right\rvert_{\beta}^2-\left\lvert\xi\right\rvert_{\beta}^2\right) d\xi_1 d\xi_2 d\xi_3, \end{align} $$

where we used the shorthand notations

![]() $\phi _j:=\phi \left (\xi _j\right )$

and

$\phi _j:=\phi \left (\xi _j\right )$

and

$\left \lvert \xi \right \rvert ^2_{\beta }=\sum _{j=1}^d \beta _j \left (\xi ^{\left (j\right )}\right )^2$

for

$\left \lvert \xi \right \rvert ^2_{\beta }=\sum _{j=1}^d \beta _j \left (\xi ^{\left (j\right )}\right )^2$

for

$\xi =\left (\xi ^{(1)},\cdots ,\xi ^{(d)}\right )$

. More precisely, one expects this approximation to hold at the kinetic timescale

$\xi =\left (\xi ^{(1)},\cdots ,\xi ^{(d)}\right )$

. More precisely, one expects this approximation to hold at the kinetic timescale

$T_{\mathrm {kin}}\sim \alpha ^{-2}=\frac {L^{2d}}{\lambda ^4}$

, in the sense that

$T_{\mathrm {kin}}\sim \alpha ^{-2}=\frac {L^{2d}}{\lambda ^4}$

, in the sense that

$$ \begin{align} \mathbb E \left\lvert\widehat u(t, k)\right\rvert^2 \approx n\left(\frac{t}{T_{\mathrm{kin}}}, k\right) \quad \text{as } L\to \infty, \alpha \to 0. \end{align} $$

$$ \begin{align} \mathbb E \left\lvert\widehat u(t, k)\right\rvert^2 \approx n\left(\frac{t}{T_{\mathrm{kin}}}, k\right) \quad \text{as } L\to \infty, \alpha \to 0. \end{align} $$

Of course, for such an approximation to hold at time

![]() $t=0$

, one has to start with a well-prepared initial distribution for

$t=0$

, one has to start with a well-prepared initial distribution for

![]() $\widehat u_{\text {in}}(k)$

as follows: denoting by

$\widehat u_{\text {in}}(k)$

as follows: denoting by

![]() $n_{\text {in}}$

the initial data for (WKE), we assume

$n_{\text {in}}$

the initial data for (WKE), we assume

$$ \begin{align} \widehat u_{\mathrm{in}}(k)=\sqrt{n_{\text{in}}(k)} \eta_{k}(\omega), \end{align} $$

$$ \begin{align} \widehat u_{\mathrm{in}}(k)=\sqrt{n_{\text{in}}(k)} \eta_{k}(\omega), \end{align} $$

where

![]() $\eta _{k}(\omega )$

are mean-

$\eta _{k}(\omega )$

are mean-

![]() $0$

complex random variables satisfying

$0$

complex random variables satisfying

![]() $\mathbb E \left \lvert \eta _k\right \rvert ^2=1$

. In what follows,

$\mathbb E \left \lvert \eta _k\right \rvert ^2=1$

. In what follows,

![]() $\eta _k(\omega )$

will be independent, identically distributed complex random variables, such that the law of each

$\eta _k(\omega )$

will be independent, identically distributed complex random variables, such that the law of each

![]() $\eta _k$

is either the normalised complex Gaussian or the uniform distribution on the unit circle

$\eta _k$

is either the normalised complex Gaussian or the uniform distribution on the unit circle

![]() $\lvert z\rvert =1$

.

$\lvert z\rvert =1$

.

Before stating our results, it is worth remarking on the regime of data and solutions covered by this kinetic picture in comparison to previously studied and well-understood regimes in the nonlinear dispersive literature. For this, let us look back at the (pre-ansatz) NLS solution v, whose conserved energy is given by

$$ \begin{align*} {\mathcal E}[v]:=\int_{{\mathbb T}^d_L} \frac{1}{2}\left\lvert\nabla v\right\rvert^2 +\frac{1}{4}\left\lvert v\right\rvert^4 \mathrm{d}x. \end{align*} $$

$$ \begin{align*} {\mathcal E}[v]:=\int_{{\mathbb T}^d_L} \frac{1}{2}\left\lvert\nabla v\right\rvert^2 +\frac{1}{4}\left\lvert v\right\rvert^4 \mathrm{d}x. \end{align*} $$

We are dealing with solutions having an

![]() $L^{\infty }$

-norm of

$L^{\infty }$

-norm of

![]() $O\left (\sqrt \alpha \right )$

(with high probability) and whose total mass is

$O\left (\sqrt \alpha \right )$

(with high probability) and whose total mass is

![]() $O\left (\alpha L^d\right )$

, in a regime where

$O\left (\alpha L^d\right )$

, in a regime where

![]() $\alpha $

is vanishingly small and L is asymptotically large. These bounds on the solutions are true initially, as we have already explained, and will be propagated in our proof. In particular, the mass and energy are very large and will diverge in this kinetic limit, as is common in taking thermodynamic limits [Reference Ruelle42, Reference Minlos37]. Moreover, the potential part of the energy is dominated by the kinetic part – the former of size

$\alpha $

is vanishingly small and L is asymptotically large. These bounds on the solutions are true initially, as we have already explained, and will be propagated in our proof. In particular, the mass and energy are very large and will diverge in this kinetic limit, as is common in taking thermodynamic limits [Reference Ruelle42, Reference Minlos37]. Moreover, the potential part of the energy is dominated by the kinetic part – the former of size

![]() $O\left (\alpha ^3 L^d\right )$

and the latter of size

$O\left (\alpha ^3 L^d\right )$

and the latter of size

![]() $O\left (\alpha L^d\right )$

– which explains why there is no distinction between the defocussing and focussing nonlinearities in the kinetic limit. It would be interesting to see how the kinetic framework can be extended to regimes of solutions which are sensitive to the sign of the nonlinearity; this has been investigated in the physics literature (e.g., [Reference Dyachenko, Newell, Pushkarev and Zakharov22, Reference Fitzmaurice, Gurarie, McCaughan and Woyczynski25, Reference Zakharov, Korotkevich, Pushkarev and Resio50]).

$O\left (\alpha L^d\right )$

– which explains why there is no distinction between the defocussing and focussing nonlinearities in the kinetic limit. It would be interesting to see how the kinetic framework can be extended to regimes of solutions which are sensitive to the sign of the nonlinearity; this has been investigated in the physics literature (e.g., [Reference Dyachenko, Newell, Pushkarev and Zakharov22, Reference Fitzmaurice, Gurarie, McCaughan and Woyczynski25, Reference Zakharov, Korotkevich, Pushkarev and Resio50]).

1.1 Statement of the results

It is not a priori clear how the limits

![]() $L\to \infty $

and

$L\to \infty $

and

![]() $\alpha \to 0$

need to be taken for formula (1.1) to hold or whether there is an additional scaling law (between

$\alpha \to 0$

need to be taken for formula (1.1) to hold or whether there is an additional scaling law (between

![]() $\alpha $

and L) that needs to be satisfied in the limit. In comparison, such scaling laws are imposed in the rigorous derivation of Boltzmann’s equation [Reference Lanford34, Reference Cercignani, Illner and Pulvirenti10, Reference Gallagher, Saint-Raymond and Texier26], which is derived in the so-called Boltzmann–Grad limit [Reference Grad27]: namely, the number N of particles goes to

$\alpha $

and L) that needs to be satisfied in the limit. In comparison, such scaling laws are imposed in the rigorous derivation of Boltzmann’s equation [Reference Lanford34, Reference Cercignani, Illner and Pulvirenti10, Reference Gallagher, Saint-Raymond and Texier26], which is derived in the so-called Boltzmann–Grad limit [Reference Grad27]: namely, the number N of particles goes to

![]() $\infty $

while their radius r goes to

$\infty $

while their radius r goes to

![]() $0$

in such a way that

$0$

in such a way that

![]() $Nr^{d-1}\sim O(1)$

. To the best of our knowledge, this central point has not been adequately addressed in the wave-turbulence literature.

$Nr^{d-1}\sim O(1)$

. To the best of our knowledge, this central point has not been adequately addressed in the wave-turbulence literature.

Our results seem to suggest some key differences depending on the chosen scaling law. Roughly speaking, we identify two special scaling laws for which we are able to justify the approximation (1.1) up to time scales

![]() $L^{-\varepsilon } T_{\text {kin}}$

for any arbitrarily small

$L^{-\varepsilon } T_{\text {kin}}$

for any arbitrarily small

![]() $\varepsilon>0$

. For other scaling laws, we identify significant absolute divergences in the power-series expansion for

$\varepsilon>0$

. For other scaling laws, we identify significant absolute divergences in the power-series expansion for

![]() $\mathbb E \left \lvert \widehat u(t, k)\right \rvert ^2$

at much earlier times. We can therefore only justify this approximation at such shorter times (which are still better than those in [Reference Buckmaster, Germain, Hani and Shatah7]). In these cases, whether or not formula (1.1) holds up to time scales

$\mathbb E \left \lvert \widehat u(t, k)\right \rvert ^2$

at much earlier times. We can therefore only justify this approximation at such shorter times (which are still better than those in [Reference Buckmaster, Germain, Hani and Shatah7]). In these cases, whether or not formula (1.1) holds up to time scales

![]() $L^{-\varepsilon } T_{\text {kin}}$

depends on whether such series converge conditionally instead of absolutely, and thus would require new methods and ideas, as we explain later.

$L^{-\varepsilon } T_{\text {kin}}$

depends on whether such series converge conditionally instead of absolutely, and thus would require new methods and ideas, as we explain later.

We start by identifying the two favourable scaling laws. We use the notation

![]() $\sigma +$

for any numerical constant

$\sigma +$

for any numerical constant

![]() $\sigma $

(e.g.,

$\sigma $

(e.g.,

![]() $\sigma =-\varepsilon $

or

$\sigma =-\varepsilon $

or

![]() $\sigma =-1-\frac {\varepsilon }{2}$

, where

$\sigma =-1-\frac {\varepsilon }{2}$

, where

![]() $\varepsilon $

is as in Theorem 1.1) to denote a constant that is strictly larger than and sufficiently close to

$\varepsilon $

is as in Theorem 1.1) to denote a constant that is strictly larger than and sufficiently close to

![]() $\sigma $

.

$\sigma $

.

Theorem 1.1. Set

![]() $d\geq 2$

and let

$d\geq 2$

and let

![]() $\beta \in [1,2]^d$

be arbitrary. Suppose that

$\beta \in [1,2]^d$

be arbitrary. Suppose that

![]() $n_{\mathrm {in}} \in {\mathcal S}\left ({\mathbb R}^d \to [0, \infty )\right )$

is SchwartzFootnote

3

and

$n_{\mathrm {in}} \in {\mathcal S}\left ({\mathbb R}^d \to [0, \infty )\right )$

is SchwartzFootnote

3

and

![]() $\eta _{k}(\omega )$

are independent, identically distributed complex random variables, such that the law of each

$\eta _{k}(\omega )$

are independent, identically distributed complex random variables, such that the law of each

![]() $\eta _k$

is either complex Gaussian with mean

$\eta _k$

is either complex Gaussian with mean

![]() $0$

and variance

$0$

and variance

![]() $1$

or the uniform distribution on the unit circle

$1$

or the uniform distribution on the unit circle

![]() $\lvert z\rvert =1$

. Assume well-prepared initial data

$\lvert z\rvert =1$

. Assume well-prepared initial data

![]() $u_{\mathrm {in}}$

for (NLS) as in equation (1.2).

$u_{\mathrm {in}}$

for (NLS) as in equation (1.2).

Fix

![]() $0<\varepsilon <1$

(in most interesting cases

$0<\varepsilon <1$

(in most interesting cases

![]() $\varepsilon $

will be small); recall that

$\varepsilon $

will be small); recall that

![]() $\lambda $

and L are the parameters in (NLS) and let

$\lambda $

and L are the parameters in (NLS) and let

![]() $\alpha =\lambda ^2L^{-d}$

be the characteristic strength of the nonlinearity. If

$\alpha =\lambda ^2L^{-d}$

be the characteristic strength of the nonlinearity. If

![]() $\alpha $

has the scaling law

$\alpha $

has the scaling law

![]() $\alpha \sim L^{(-\varepsilon )+}$

or

$\alpha \sim L^{(-\varepsilon )+}$

or

![]() $\alpha \sim L^{\left (-1-\frac {\varepsilon }{2}\right )+}$

, then we have

$\alpha \sim L^{\left (-1-\frac {\varepsilon }{2}\right )+}$

, then we have

$$ \begin{align} \mathbb E \left\lvert\widehat u(t, k)\right\rvert^2 =n_{\mathrm{in}}(k)+\frac{t}{T_{\mathrm{kin}}}{\mathcal K}(n_{\mathrm{in}})(k)+o_{\ell^{\infty}_k}\left(\frac{t}{T_{\mathrm {kin}}}\right)_{L \to \infty} \end{align} $$

$$ \begin{align} \mathbb E \left\lvert\widehat u(t, k)\right\rvert^2 =n_{\mathrm{in}}(k)+\frac{t}{T_{\mathrm{kin}}}{\mathcal K}(n_{\mathrm{in}})(k)+o_{\ell^{\infty}_k}\left(\frac{t}{T_{\mathrm {kin}}}\right)_{L \to \infty} \end{align} $$

for all

![]() $L^{0+} \leq t \leq L^{-\varepsilon } T_{\mathrm {kin}}$

, where

$L^{0+} \leq t \leq L^{-\varepsilon } T_{\mathrm {kin}}$

, where

![]() $T_{\mathrm {kin}}=\alpha ^{-2}/2$

,

$T_{\mathrm {kin}}=\alpha ^{-2}/2$

,

![]() ${\mathcal K}$

is defined in (WKE) and

${\mathcal K}$

is defined in (WKE) and

$o_{\ell ^{\infty }_k}\left (\frac {t}{T_{\mathrm {kin}}}\right )_{L \to \infty }$

is a quantity that is bounded in

$o_{\ell ^{\infty }_k}\left (\frac {t}{T_{\mathrm {kin}}}\right )_{L \to \infty }$

is a quantity that is bounded in

![]() $\ell ^{\infty }_k$

by

$\ell ^{\infty }_k$

by

$L^{-\theta } \frac {t}{T_{\mathrm {kin}}}$

for some

$L^{-\theta } \frac {t}{T_{\mathrm {kin}}}$

for some

![]() $\theta>0$

.

$\theta>0$

.

We remark that in the time interval of the approximation we have been discussing, the right hand sides of formulas (1.1) and (1.3) are equivalent. Also note that any type of scaling law of the form

![]() $\alpha \sim L^{-s}$

gives an upper bound of

$\alpha \sim L^{-s}$

gives an upper bound of

![]() $t\leq L^{-\varepsilon }T_{\mathrm {kin}}\sim L^{2s-\varepsilon }$

for the times considered. Consequently, for the two scaling laws in Theorem 1.1, the time t always satisfies

$t\leq L^{-\varepsilon }T_{\mathrm {kin}}\sim L^{2s-\varepsilon }$

for the times considered. Consequently, for the two scaling laws in Theorem 1.1, the time t always satisfies

![]() $t\ll L^{2}$

, and it is for this reason that the rationality type of the torus is not relevant. As will be clear later, no similar results can hold for

$t\ll L^{2}$

, and it is for this reason that the rationality type of the torus is not relevant. As will be clear later, no similar results can hold for

![]() $t\gg L^2$

in the case of a rational torus,Footnote

4

as this would require rational quadratic forms to be equidistributed on scales

$t\gg L^2$

in the case of a rational torus,Footnote

4

as this would require rational quadratic forms to be equidistributed on scales

![]() $\ll 1$

, which is impossible. However, if the aspect ratios

$\ll 1$

, which is impossible. However, if the aspect ratios

![]() $\beta $

are assumed to be generically irrational, then one can access equidistribution scales that are as small as

$\beta $

are assumed to be generically irrational, then one can access equidistribution scales that are as small as

![]() $L^{-d+1}$

for the resulting irrational quadratic forms [Reference Bourgain4, Reference Buckmaster, Germain, Hani and Shatah7]. This allows us to consider scaling laws for which

$L^{-d+1}$

for the resulting irrational quadratic forms [Reference Bourgain4, Reference Buckmaster, Germain, Hani and Shatah7]. This allows us to consider scaling laws for which

![]() $T_{\mathrm {kin}}$

can be as big as

$T_{\mathrm {kin}}$

can be as big as

![]() $L^{d-}$

on generically irrational tori.

$L^{d-}$

on generically irrational tori.

Remark 1.2. Strictly speaking, in evaluating equation (1.3) one has to first ensure the existence of the solution u. This is guaranteed if

![]() $d\in \{2,3,4\}$

(when (NLS) is

$d\in \{2,3,4\}$

(when (NLS) is

![]() $H^1$

-critical or subcritical). When

$H^1$

-critical or subcritical). When

![]() $d\geq 5$

we shall interpret equation (1.3) such that the expectation is taken only when the long-time smooth solution u exists. Moreover, from our proof it follows that the probability that this existence fails is at most

$d\geq 5$

we shall interpret equation (1.3) such that the expectation is taken only when the long-time smooth solution u exists. Moreover, from our proof it follows that the probability that this existence fails is at most

$O\left (e^{-L^{\theta }}\right )$

, which quickly becomes negligible when

$O\left (e^{-L^{\theta }}\right )$

, which quickly becomes negligible when

![]() $L\to \infty $

.

$L\to \infty $

.

The following theorem covers general scaling laws, including the ones that can only be accessed for the generically irrational torus. By a simple calculation of exponents, we can see that it implies Theorem 1.1.

Theorem 1.3. With the same assumptions as in Theorem 1.1, we impose the following conditions on

![]() $(\alpha , L, T)$

for some

$(\alpha , L, T)$

for some

![]() $\delta>0$

:

$\delta>0$

:

$$ \begin{align}T\leq \begin{cases}L^{2-\delta}&\text{if }\beta_i\text{ is arbitrary},\\ L^{d-\delta}&\text{if }\beta_i\text{ is generic}, \end{cases} \qquad\alpha\leq \begin{cases} L^{-\delta}T^{-1}&\text{if }T \leq L,\\ L^{-1-\delta}&\text{if }L\leq T\leq L^2,\\ L^{1-\delta}T^{-1}&\text{if } T\geq L^2. \end{cases} \end{align} $$

$$ \begin{align}T\leq \begin{cases}L^{2-\delta}&\text{if }\beta_i\text{ is arbitrary},\\ L^{d-\delta}&\text{if }\beta_i\text{ is generic}, \end{cases} \qquad\alpha\leq \begin{cases} L^{-\delta}T^{-1}&\text{if }T \leq L,\\ L^{-1-\delta}&\text{if }L\leq T\leq L^2,\\ L^{1-\delta}T^{-1}&\text{if } T\geq L^2. \end{cases} \end{align} $$

Then formula (1.3) holds for all

![]() $L^{\delta } \leq t \leq T$

.

$L^{\delta } \leq t \leq T$

.

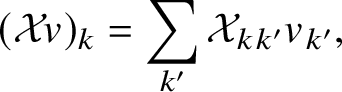

It is best to read this theorem in terms of the

![]() $\left (\log _L \left (\alpha ^{-1}\right ),\log _L T\right )$

plot in Figure 1. The kinetic conjecture corresponds to justifying the approximation in formula (1.1) up to time scales

$\left (\log _L \left (\alpha ^{-1}\right ),\log _L T\right )$

plot in Figure 1. The kinetic conjecture corresponds to justifying the approximation in formula (1.1) up to time scales

![]() $T\lesssim T_{\mathrm {kin}}=\alpha ^{-2}$

. As we shall explain later, the time scale

$T\lesssim T_{\mathrm {kin}}=\alpha ^{-2}$

. As we shall explain later, the time scale

![]() $T\sim T_{\mathrm {kin}}$

represents a critical scale for the problem from a probabilistic point of view. This is depicted by the red line in the figure, and the region below this line corresponds to a probabilistically subcritical regime (see Section 1.2.1). The shaded blue region corresponds to the

$T\sim T_{\mathrm {kin}}$

represents a critical scale for the problem from a probabilistic point of view. This is depicted by the red line in the figure, and the region below this line corresponds to a probabilistically subcritical regime (see Section 1.2.1). The shaded blue region corresponds to the

![]() $(\alpha , T)$

region in Theorem 1.3, neglecting

$(\alpha , T)$

region in Theorem 1.3, neglecting

![]() $\delta $

losses. This region touches the line

$\delta $

losses. This region touches the line

![]() $T=\alpha ^{-2}$

at the two points corresponding to

$T=\alpha ^{-2}$

at the two points corresponding to

![]() $\left (\alpha ^{-1}, T\right )=(1, 1)$

and

$\left (\alpha ^{-1}, T\right )=(1, 1)$

and

![]() $\left (L, L^2\right )$

, whereas the two scaling laws of Theorem 1.1, where

$\left (L, L^2\right )$

, whereas the two scaling laws of Theorem 1.1, where

![]() $\left (\alpha ^{-1},T\right )\sim (L^{\varepsilon -},L^{\varepsilon -})$

and

$\left (\alpha ^{-1},T\right )\sim (L^{\varepsilon -},L^{\varepsilon -})$

and

$\left (\alpha ^{-1},T\right )\sim \left (L^{1+\frac {\varepsilon }{2}-},L^{2-}\right )$

, approach these two points when

$\left (\alpha ^{-1},T\right )\sim \left (L^{1+\frac {\varepsilon }{2}-},L^{2-}\right )$

, approach these two points when

![]() $\varepsilon $

is small.

$\varepsilon $

is small.

Figure 1 Admissible range for

![]() $(\alpha , L, T)$

in the

$(\alpha , L, T)$

in the

![]() $\left (\log _L \left (\alpha ^{-1}\right ),\log _L T\right )$

plot when

$\left (\log _L \left (\alpha ^{-1}\right ),\log _L T\right )$

plot when

![]() $d\geq 3$

. The coloured region is the range of Theorem 1.3 (up to

$d\geq 3$

. The coloured region is the range of Theorem 1.3 (up to

![]() $\varepsilon $

endpoint accuracy). The red line denotes the case when

$\varepsilon $

endpoint accuracy). The red line denotes the case when

![]() $T=T_{\mathrm {kin}}=\alpha ^{-2}$

, which our coloured region touches at two points corresponding to

$T=T_{\mathrm {kin}}=\alpha ^{-2}$

, which our coloured region touches at two points corresponding to

![]() $T\sim 1$

and

$T\sim 1$

and

![]() $T\sim L^{2}$

.

$T\sim L^{2}$

.

These results rely on a diagrammatic expansion of the NLS solution in Feynman diagrams akin to a Taylor expansion. The shaded blue region depicting the result of Theorem 1.3 corresponds to the cases when such a diagrammatic expansion is absolutely convergent for very large L. In the complementary region between the blue region and the line

![]() $T=T_{\text {kin}}$

, we show that some (arbitrarily high-degree) terms of this expansion do not converge to

$T=T_{\text {kin}}$

, we show that some (arbitrarily high-degree) terms of this expansion do not converge to

![]() $0$

as their degree goes to

$0$

as their degree goes to

![]() $\infty $

, which means that the diagrammatic expansion cannot converge absolutely in this region. Therefore, the only way for the kinetic conjecture to be true in the scaling regimes not included in Theorem 1.1 is for those terms to exhibit a highly nontrivial cancellation, which would make the series converge conditionally but not absolutely.

$\infty $

, which means that the diagrammatic expansion cannot converge absolutely in this region. Therefore, the only way for the kinetic conjecture to be true in the scaling regimes not included in Theorem 1.1 is for those terms to exhibit a highly nontrivial cancellation, which would make the series converge conditionally but not absolutely.

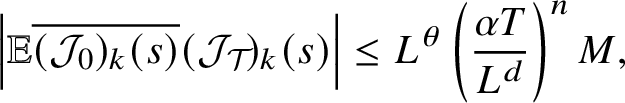

Finally, we remark on the restriction in formula (1.4). The upper bounds on T on the left are necessary from number-theoretic considerations: indeed, if

![]() $T\gg L^2$

for a rational torus, or if

$T\gg L^2$

for a rational torus, or if

![]() $T\gg L^d$

for an irrational one, the exact resonances of the NLS equation dominate the quasi-resonant interactions that lead to the kinetic wave equation. One should therefore not expect the kinetic description to hold in those ranges of T (see Lemma 3.2 and Section 4). The second set of restrictions in formula (1.4) correspond exactly to the requirement that the size of the Feynman diagrams of degree n can be bounded by

$T\gg L^d$

for an irrational one, the exact resonances of the NLS equation dominate the quasi-resonant interactions that lead to the kinetic wave equation. One should therefore not expect the kinetic description to hold in those ranges of T (see Lemma 3.2 and Section 4). The second set of restrictions in formula (1.4) correspond exactly to the requirement that the size of the Feynman diagrams of degree n can be bounded by

![]() $\rho ^n$

with some

$\rho ^n$

with some

![]() $\rho \ll 1$

. In fact, if one aims only at proving existence with high probability (not caring about the asymptotics of

$\rho \ll 1$

. In fact, if one aims only at proving existence with high probability (not caring about the asymptotics of

![]() $\mathbb {E}\left \lvert \widehat {u}(t,k)\right \rvert ^2$

), then the restrictions on the left of formula (1.4) will not be necessary, and one obtains control for longer times. See also the following remark:

$\mathbb {E}\left \lvert \widehat {u}(t,k)\right \rvert ^2$

), then the restrictions on the left of formula (1.4) will not be necessary, and one obtains control for longer times. See also the following remark:

Remark 1.4 Admissible scaling laws

The foregoing restrictions on T impose the limits of the admissible scaling laws, in which

![]() $\alpha \to 0$

and

$\alpha \to 0$

and

![]() $L \to \infty $

, for which the kinetic description of the long-time dynamics can appear. Indeed, since

$L \to \infty $

, for which the kinetic description of the long-time dynamics can appear. Indeed, since

![]() $T_{\mathrm {kin}}=\alpha ^{-2}$

, then the necessary (up to

$T_{\mathrm {kin}}=\alpha ^{-2}$

, then the necessary (up to

![]() $L^{\delta }$

factors) restrictions

$L^{\delta }$

factors) restrictions

![]() $T\ll L^{2-\delta }$

(resp.,

$T\ll L^{2-\delta }$

(resp.,

![]() $T\ll L^{d-\delta }$

) on the rational (resp., irrational) torus already mentioned imply that one should only expect the previous kinetic description in the regime where

$T\ll L^{d-\delta }$

) on the rational (resp., irrational) torus already mentioned imply that one should only expect the previous kinetic description in the regime where

![]() $\alpha \gtrsim L^{-1}$

(resp.,

$\alpha \gtrsim L^{-1}$

(resp.,

![]() $\gtrsim L^{-d/2}$

). In other words, the kinetic description requires the nonlinearity to be weak, but not too weak! In the complementary regime of very weak nonlinearity, the exact resonances of the equation dominate the quasi-resonances – a regime referred to as discrete wave turbulence (see [Reference L’vov and Nazarenko36, Reference Kartashova32, Reference Nazarenko39]), in which different effective equations, like the (CR) equation in [Reference Faou, Germain and Hani24, Reference Buckmaster, Germain, Hani and Shatah6], can arise.

$\gtrsim L^{-d/2}$

). In other words, the kinetic description requires the nonlinearity to be weak, but not too weak! In the complementary regime of very weak nonlinearity, the exact resonances of the equation dominate the quasi-resonances – a regime referred to as discrete wave turbulence (see [Reference L’vov and Nazarenko36, Reference Kartashova32, Reference Nazarenko39]), in which different effective equations, like the (CR) equation in [Reference Faou, Germain and Hani24, Reference Buckmaster, Germain, Hani and Shatah6], can arise.

1.2 Ideas of the proof

As Theorem 1.1 is a consequence of Theorem 1.3, we will focus on Theorem 1.3. The proof of Theorem 1.3 contains three components: (

![]() $1$

) a long-time well-posedness result, where we expand the solution to (NLS) into Feynman diagrams for sufficiently long time, up to a well-controlled error term; (

$1$

) a long-time well-posedness result, where we expand the solution to (NLS) into Feynman diagrams for sufficiently long time, up to a well-controlled error term; (

![]() $2$

) computation of

$2$

) computation of

$\mathbb E\left \lvert \widehat u_k(t)\right \rvert ^2 \left (k \in \mathbb Z^d_L\right )$

using this expansion, where we identify the leading terms and control the remainders; and (

$\mathbb E\left \lvert \widehat u_k(t)\right \rvert ^2 \left (k \in \mathbb Z^d_L\right )$

using this expansion, where we identify the leading terms and control the remainders; and (

![]() $3$

) a number-theoretic result that justifies the large box approximation, where we pass from the sums appearing in the expansion in the previous component to the integral appearing on the right-hand side of (WKE).

$3$

) a number-theoretic result that justifies the large box approximation, where we pass from the sums appearing in the expansion in the previous component to the integral appearing on the right-hand side of (WKE).

The main novelty of this work is in the first component, which is the hardest. The second component follows similar lines to those in [Reference Buckmaster, Germain, Hani and Shatah7]. Regarding the third component, the main novelty of this work is to complement the number-theoretic results in [Reference Buckmaster, Germain, Hani and Shatah7] (which dealt only with the generically irrational torus) by the cases of general tori (in the admissible range of time

![]() $T\ll L^2$

). This provides an essentially full (up to

$T\ll L^2$

). This provides an essentially full (up to

![]() $L^{\varepsilon }$

losses) understanding of the number-theoretic issues arising in wave-turbulence derivations for (NLS). Therefore, we will limit this introductory discussion to the first component.

$L^{\varepsilon }$

losses) understanding of the number-theoretic issues arising in wave-turbulence derivations for (NLS). Therefore, we will limit this introductory discussion to the first component.

1.2.1 The scheme and probabilistic criticality

Though technically involved, the basic idea of the long-time well-posedness argument is in fact quite simple. Starting from (NLS) with initial data of equation (1.2), we write the solution as

where

![]() $u^{(0)}=e^{-it\Delta _{\beta }}u_{\mathrm {in}}$

is the linear evolution,

$u^{(0)}=e^{-it\Delta _{\beta }}u_{\mathrm {in}}$

is the linear evolution,

![]() $u^{(n)}$

are iterated self-interactions of the linear solution

$u^{(n)}$

are iterated self-interactions of the linear solution

![]() $u^{(0)}$

that appear in a formal expansion of u and

$u^{(0)}$

that appear in a formal expansion of u and

![]() $\mathcal R_{N+1}$

is a sufficiently regular remainder term.

$\mathcal R_{N+1}$

is a sufficiently regular remainder term.

Since

![]() $u^{(0)}$

is a linear combination of independent random variables, and each

$u^{(0)}$

is a linear combination of independent random variables, and each

![]() $u^{(n)}$

is a multilinear combination, each of them will behave strictly better (both linearly and nonlinearly) than its deterministic analogue (i.e., with all

$u^{(n)}$

is a multilinear combination, each of them will behave strictly better (both linearly and nonlinearly) than its deterministic analogue (i.e., with all

![]() $\eta _k=1$

). This is due to the well-known large deviation estimates, which yield a ‘square root’ gain coming from randomness, akin to the central limit theorem (for instance,

$\eta _k=1$

). This is due to the well-known large deviation estimates, which yield a ‘square root’ gain coming from randomness, akin to the central limit theorem (for instance,

![]() $\left \lVert u_{\mathrm {in}}\right \rVert _{L^{\infty }}$

is bounded by

$\left \lVert u_{\mathrm {in}}\right \rVert _{L^{\infty }}$

is bounded by

![]() $L^{-d/2}\cdot \left \lVert u_{\mathrm {in}}\right \rVert _{L^2}$

in the probabilistic setting, as opposed to

$L^{-d/2}\cdot \left \lVert u_{\mathrm {in}}\right \rVert _{L^2}$

in the probabilistic setting, as opposed to

![]() $1\cdot \left \lVert u_{\mathrm {in}}\right \rVert _{L^2}$

deterministically by Sobolev embedding, assuming compact Fourier support). This gain leads to a new notion of criticality for the problem, which can be definedFootnote

5

as the edge of the regime of

$1\cdot \left \lVert u_{\mathrm {in}}\right \rVert _{L^2}$

deterministically by Sobolev embedding, assuming compact Fourier support). This gain leads to a new notion of criticality for the problem, which can be definedFootnote

5

as the edge of the regime of

![]() $(\alpha , T)$

for which the iterate

$(\alpha , T)$

for which the iterate

![]() $u^{(1)}$

is better bounded than the iterate

$u^{(1)}$

is better bounded than the iterate

![]() $u^{(0)}$

. It is not hard to see that

$u^{(0)}$

. It is not hard to see that

![]() $u^{(1)}$

can have size up to

$u^{(1)}$

can have size up to

![]() $O(\alpha\sqrt{T})$

(in appropriate norms), compared to the

$O(\alpha\sqrt{T})$

(in appropriate norms), compared to the

![]() $O(1)$

size of

$O(1)$

size of

![]() $u^{(0)}$

(see, e.g., formula (2.25) for

$u^{(0)}$

(see, e.g., formula (2.25) for

![]() $n=1$

). This justifies the notion that

$n=1$

). This justifies the notion that

![]() $T\sim T_{\mathrm {kin}}=\alpha ^{-2}$

corresponds to probabilistically critical scaling, whereas the time scales

$T\sim T_{\mathrm {kin}}=\alpha ^{-2}$

corresponds to probabilistically critical scaling, whereas the time scales

![]() $T\ll T_{\mathrm {kin}}$

are subcritical.Footnote

6

$T\ll T_{\mathrm {kin}}$

are subcritical.Footnote

6

As it happens, a certain notion of criticality might not capture all the subtleties of the problem. As we shall see, some higher-order iterates

![]() $u^{(n)}$

will not be better bounded than

$u^{(n)}$

will not be better bounded than

![]() $u^{(n-1)}$

in the full subcritical range

$u^{(n-1)}$

in the full subcritical range

![]() $T\ll \alpha ^{-2}$

we have postulated, but instead only in a subregion thereof. This is what defines our admissible blue region in Figure 1.

$T\ll \alpha ^{-2}$

we have postulated, but instead only in a subregion thereof. This is what defines our admissible blue region in Figure 1.

We should mention that the idea of using the gain from randomness goes back to Bourgain [Reference Bourgain3] (in the random-data setting) and to Da Prato and Debussche [Reference Da Prato and Debussche14] (later, in the stochastic PDE setting). They first noticed that the ansatz

![]() $u=u^{(0)}+\mathcal R$

allows one to put the remainder

$u=u^{(0)}+\mathcal R$

allows one to put the remainder

![]() $\mathcal R$

in a higher regularity space than the linear term

$\mathcal R$

in a higher regularity space than the linear term

![]() $u^{(0)}$

. This idea has since been applied to many different situations (see, e.g., [Reference Bourgain and Bulut5, Reference Burq and Tzvetkov8, Reference Colliander and Oh11, Reference Deng15, Reference Dodson, Lührmann and Mendelson21, Reference Kenig and Mendelson33, Reference Nahmod and Staffilani38]), though most of these works either involve only the first-order expansion (i.e.,

$u^{(0)}$

. This idea has since been applied to many different situations (see, e.g., [Reference Bourgain and Bulut5, Reference Burq and Tzvetkov8, Reference Colliander and Oh11, Reference Deng15, Reference Dodson, Lührmann and Mendelson21, Reference Kenig and Mendelson33, Reference Nahmod and Staffilani38]), though most of these works either involve only the first-order expansion (i.e.,

![]() $N=0$

) or involve higher-order expansions with only suboptimal bounds (e.g., [Reference Bényi, Oh and Pocovnicu2]). To the best of our knowledge, the present paper is the first work where the sharp bounds for these

$N=0$

) or involve higher-order expansions with only suboptimal bounds (e.g., [Reference Bényi, Oh and Pocovnicu2]). To the best of our knowledge, the present paper is the first work where the sharp bounds for these

![]() $u^{(j)}$

terms are obtained to arbitrarily high order (at least in the dispersive setting).

$u^{(j)}$

terms are obtained to arbitrarily high order (at least in the dispersive setting).

Remark 1.5. There are two main reasons why the high-order expansion (1.5) gives the sharp time of control, in contrast to previous works. The first is that we are able to obtain sharp estimates for the terms

![]() $u^{(j)}$

with arbitrarily high order, which were not known previously due to the combinatorial complexity associated with trees (see Section 1.2.2).

$u^{(j)}$

with arbitrarily high order, which were not known previously due to the combinatorial complexity associated with trees (see Section 1.2.2).

The second reason is more intrinsic. In higher-order versions of the original Bourgain–Da Prato–Debussche approach, it usually stops improving in regularity beyond a certain point, due to the presence of the high-low interactions (heuristically, the gain of powers of low frequency does not transform to the gain in regularity). This is a major difficulty in random-data theory, and in recent years a few methods have been developed to address it, including regularity structure [Reference Hairer29], para-controlled calculus [Reference Gubinelli, Imkeller and Perkowski28] and random averaging operators [Reference Deng, Nahmod and Yue18]. Fortunately, in the current problem this issue is absent, since the well-prepared initial data (1.2) bound the high-frequency components (where

![]() $\lvert k\rvert \sim 1$

) and low-frequency components (where

$\lvert k\rvert \sim 1$

) and low-frequency components (where

![]() $\left \lvert k\right \rvert \sim L^{-1}$

) uniformly, so the high-low interaction is simply controlled in the same way as the high-high interaction, allowing one to gain regularity indefinitely as the order increases.

$\left \lvert k\right \rvert \sim L^{-1}$

) uniformly, so the high-low interaction is simply controlled in the same way as the high-high interaction, allowing one to gain regularity indefinitely as the order increases.

1.2.2 Sharp estimates of Feynman trees

We start with the estimate for

![]() $u^{(n)}$

. As is standard with the cubic nonlinear Schrödinger equation, we first perform the Wick ordering by defining

$u^{(n)}$

. As is standard with the cubic nonlinear Schrödinger equation, we first perform the Wick ordering by defining

Note that

![]() $M_0$

is essentially the mass which is conserved. Now w satisfies the renormalised equation

$M_0$

is essentially the mass which is conserved. Now w satisfies the renormalised equation

and

![]() $\left \lvert \widehat {w_k}(t)\right \rvert ^2=\left \lvert \widehat {u_k}(t)\right \rvert ^2$

. This gets rid of the worst resonant term, which would otherwise lead to a suboptimal time scale.

$\left \lvert \widehat {w_k}(t)\right \rvert ^2=\left \lvert \widehat {u_k}(t)\right \rvert ^2$

. This gets rid of the worst resonant term, which would otherwise lead to a suboptimal time scale.

Let

![]() $w^{(n)}$

be the nth-order iteration of the nonlinearity in equation (1.6), corresponding to the

$w^{(n)}$

be the nth-order iteration of the nonlinearity in equation (1.6), corresponding to the

![]() $u^{(n)}$

in equation (1.5). Since this nonlinearity is cubic, by induction it is easy to see that

$u^{(n)}$

in equation (1.5). Since this nonlinearity is cubic, by induction it is easy to see that

![]() $w^{(n)}$

can be written (say, in Fourier space) as a linear combination of termsFootnote

7

$w^{(n)}$

can be written (say, in Fourier space) as a linear combination of termsFootnote

7

![]() $\mathcal J_{\mathcal{T}\,}$

, where

$\mathcal J_{\mathcal{T}\,}$

, where

![]() $\mathcal{T}\,$

runs over all ternary trees with exactly n branching nodes (we will say it has scale

$\mathcal{T}\,$

runs over all ternary trees with exactly n branching nodes (we will say it has scale

![]() $\mathfrak s(\mathcal{T}\,\,)=n$

). After some further reductions, the estimate for

$\mathfrak s(\mathcal{T}\,\,)=n$

). After some further reductions, the estimate for

![]() $\mathcal J_{\mathcal{T}\,}$

can be reduced to the estimate for terms of the form

$\mathcal J_{\mathcal{T}\,}$

can be reduced to the estimate for terms of the form

$$ \begin{align} \Sigma_k:=\sum_{\left(k_1,\ldots,k_{2n+1}\right)\in S}\eta_{k_1}^{\pm}\cdots \eta_{k_{2n+1}}^{\pm}, \qquad \left(\eta_k^+,\eta_k^{-}\right):=\left(\eta_k(\omega), \overline{\eta_k}(\omega)\right), \end{align} $$

$$ \begin{align} \Sigma_k:=\sum_{\left(k_1,\ldots,k_{2n+1}\right)\in S}\eta_{k_1}^{\pm}\cdots \eta_{k_{2n+1}}^{\pm}, \qquad \left(\eta_k^+,\eta_k^{-}\right):=\left(\eta_k(\omega), \overline{\eta_k}(\omega)\right), \end{align} $$

where

![]() $\eta _k(\omega )$

is as in equation (1.2),

$\eta _k(\omega )$

is as in equation (1.2),

$(k_1,\ldots ,k_{2n+1})\in \left (\mathbb {Z}_L^d\right )^{2n+1}$

, S is a suitable finite subset of

$(k_1,\ldots ,k_{2n+1})\in \left (\mathbb {Z}_L^d\right )^{2n+1}$

, S is a suitable finite subset of

$\left (\mathbb {Z}_L^d\right )^{2n+1}$

and the

$\left (\mathbb {Z}_L^d\right )^{2n+1}$

and the

![]() $(2n+1)$

subscripts correspond to the

$(2n+1)$

subscripts correspond to the

![]() $(2n+1)$

leaves of

$(2n+1)$

leaves of

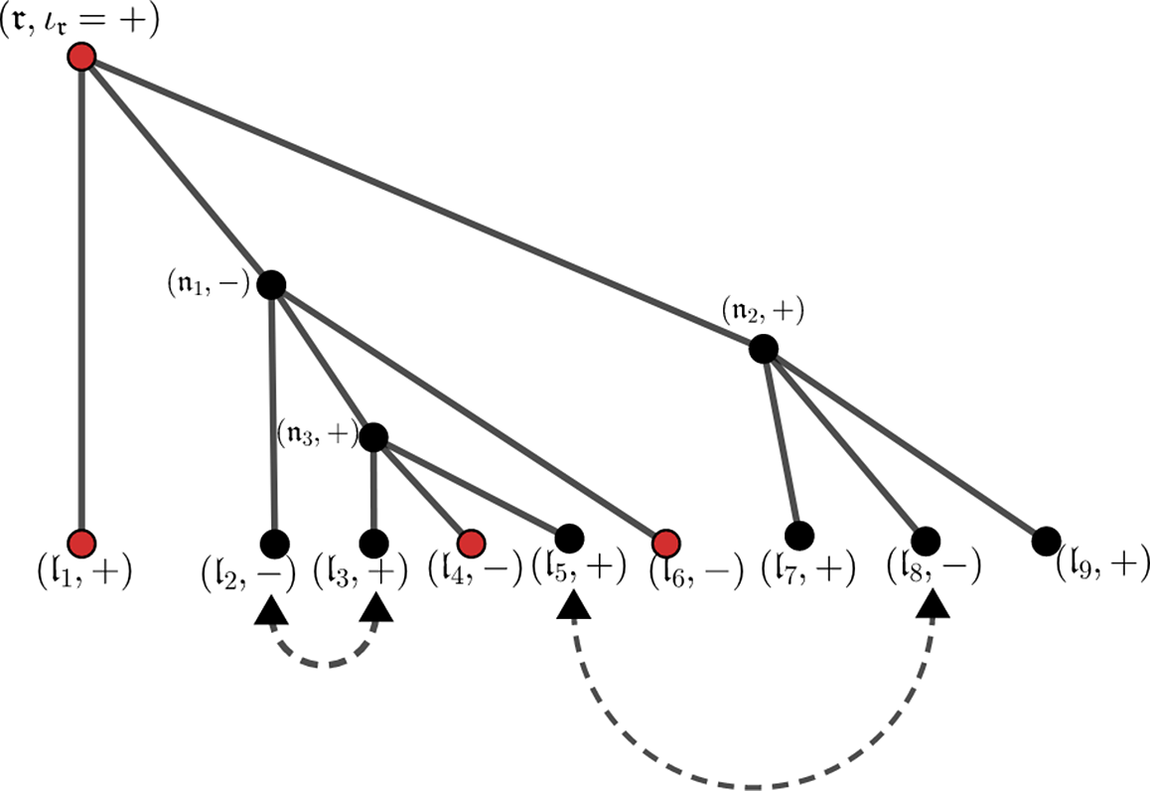

![]() $\mathcal{T}\,$

(see Definition 2.2 and Figure 2).Footnote

8

$\mathcal{T}\,$

(see Definition 2.2 and Figure 2).Footnote

8

Figure 2 On the left, a node

![]() $\mathfrak n$

with its three children

$\mathfrak n$

with its three children

![]() $\mathfrak n_1, \mathfrak n_2, \mathfrak n_3$

, with signs

$\mathfrak n_1, \mathfrak n_2, \mathfrak n_3$

, with signs

![]() $\iota _1=\iota _3=\iota =-\iota _2$

. On the right, a tree of scale

$\iota _1=\iota _3=\iota =-\iota _2$

. On the right, a tree of scale

![]() $4$

$4$

![]() $(\mathfrak s(\mathcal{T}\,\,)=4)$

with root

$(\mathfrak s(\mathcal{T}\,\,)=4)$

with root

![]() $\mathfrak r$

, four branching nodes (

$\mathfrak r$

, four branching nodes (

![]() $\mathfrak r, \mathfrak n_1, \mathfrak n_2, \mathfrak n_3$

) and

$\mathfrak r, \mathfrak n_1, \mathfrak n_2, \mathfrak n_3$

) and

![]() $l=9$

leaves, along with their signatures.

$l=9$

leaves, along with their signatures.

To estimate

![]() $\Sigma _k$

defined in formaul (1.7) we invoke the standard large deviation estimate (see Lemma 3.1), which essentially asserts that

$\Sigma _k$

defined in formaul (1.7) we invoke the standard large deviation estimate (see Lemma 3.1), which essentially asserts that

![]() $\left \lvert \Sigma _k\right \rvert \lesssim (\#S)^{1/2}$

with overwhelming probability, provided that there is no pairing in

$\left \lvert \Sigma _k\right \rvert \lesssim (\#S)^{1/2}$

with overwhelming probability, provided that there is no pairing in

![]() $(k_1,\ldots ,k_{2n+1})$

, where a pairing

$(k_1,\ldots ,k_{2n+1})$

, where a pairing

![]() $\left (k_i,k_j\right )$

means

$\left (k_i,k_j\right )$

means

![]() $k_i=k_j$

and the signs of

$k_i=k_j$

and the signs of

![]() $\eta _{k_i}$

and

$\eta _{k_i}$

and

![]() $\eta _{k_j}$

in formula (1.7) are opposites. Moreover, in the case of a pairing

$\eta _{k_j}$

in formula (1.7) are opposites. Moreover, in the case of a pairing

![]() $\left (k_i,k_j\right )$

we can essentially replace

$\left (k_i,k_j\right )$

we can essentially replace

$\eta _{k_i}^{\pm } \eta _{k_j}^{\pm }=\left \lvert \eta _{k_i}\right \rvert ^2\approx 1$

, so in general we can bound, with overwhelming probability,

$\eta _{k_i}^{\pm } \eta _{k_j}^{\pm }=\left \lvert \eta _{k_i}\right \rvert ^2\approx 1$

, so in general we can bound, with overwhelming probability,

$$ \begin{align*} \left\lvert\Sigma_k\right\rvert^2\lesssim\sum_{\left(\text{unpaired }k_i\right)}\left(\sum_{\substack{\left(\text{paired }k_i\right):\\ \left(k_1,\ldots,k_{2n+1}\right)\in S}}1\right)^2\lesssim\sum_{\left(k_1,\ldots,k_{2n+1}\right)\in S}1\cdot\sup_{\left(\text{unpaired }k_i\right)}\sum_{\substack{\left(\text{paired }k_i\right):\\ \left(k_1,\ldots,k_{2n+1}\right)\in S}}1. \end{align*} $$

$$ \begin{align*} \left\lvert\Sigma_k\right\rvert^2\lesssim\sum_{\left(\text{unpaired }k_i\right)}\left(\sum_{\substack{\left(\text{paired }k_i\right):\\ \left(k_1,\ldots,k_{2n+1}\right)\in S}}1\right)^2\lesssim\sum_{\left(k_1,\ldots,k_{2n+1}\right)\in S}1\cdot\sup_{\left(\text{unpaired }k_i\right)}\sum_{\substack{\left(\text{paired }k_i\right):\\ \left(k_1,\ldots,k_{2n+1}\right)\in S}}1. \end{align*} $$

It thus suffices to bound the number of choices for

![]() $(k_1,\ldots ,k_{2n+1})$

given the pairings, as well as the number of choices for the paired

$(k_1,\ldots ,k_{2n+1})$

given the pairings, as well as the number of choices for the paired

![]() $k_j$

s given the unpaired

$k_j$

s given the unpaired

![]() $k_j$

s.

$k_j$

s.

In the no-pairing case, such counting bounds are easy to prove, since the set S is well adapted to the tree structure of

![]() $\mathcal{T}\,$

; what makes the counting nontrivial is the pairings, especially those between leaves that are far away or from different levels (see Figure 3, where a pairing is depicted by an extra link between the two leaves). Nevertheless, we have developed a counting algorithm that specifically deals with the given pairing structure of

$\mathcal{T}\,$

; what makes the counting nontrivial is the pairings, especially those between leaves that are far away or from different levels (see Figure 3, where a pairing is depicted by an extra link between the two leaves). Nevertheless, we have developed a counting algorithm that specifically deals with the given pairing structure of

![]() $\mathcal{T}\,$

and ultimately leads to sharp counting bounds and consequently sharp bounds for

$\mathcal{T}\,$

and ultimately leads to sharp counting bounds and consequently sharp bounds for

![]() $\Sigma _k$

(see Proposition 3.5).

$\Sigma _k$

(see Proposition 3.5).

Figure 3 A paired tree with two pairings

![]() $(p=2)$

. The set

$(p=2)$

. The set

![]() ${\mathcal S}$

of single leaves is

${\mathcal S}$

of single leaves is

![]() $\{\mathfrak l_1,\mathfrak l_4,\mathfrak l_6,\mathfrak l_7,\mathfrak l_9 \}$

. The subset

$\{\mathfrak l_1,\mathfrak l_4,\mathfrak l_6,\mathfrak l_7,\mathfrak l_9 \}$

. The subset

![]() $\mathcal R\subset \mathcal {S}\cup \{\mathfrak {r}\}$

of red-coloured vertices is

$\mathcal R\subset \mathcal {S}\cup \{\mathfrak {r}\}$

of red-coloured vertices is

![]() $\{\mathfrak r, \mathfrak l_1,\mathfrak l_4,\mathfrak l_6\}$

. Here

$\{\mathfrak r, \mathfrak l_1,\mathfrak l_4,\mathfrak l_6\}$

. Here

![]() $(l, p, r)=(9, 2, 4)$

. A strongly admissible assignment with respect to this pairing, colouring and a certain fixed choice of the red modes

$(l, p, r)=(9, 2, 4)$

. A strongly admissible assignment with respect to this pairing, colouring and a certain fixed choice of the red modes

![]() $\left (k_{\mathfrak r},k_{\mathfrak l_4},k_{\mathfrak l_6}\right )$

corresponds to having the modes

$\left (k_{\mathfrak r},k_{\mathfrak l_4},k_{\mathfrak l_6}\right )$

corresponds to having the modes

![]() $k_{\mathfrak l_2}=k_{\mathfrak l_3}$

,

$k_{\mathfrak l_2}=k_{\mathfrak l_3}$

,

![]() $k_{\mathfrak l_5}=k_{\mathfrak l_8}$

and

$k_{\mathfrak l_5}=k_{\mathfrak l_8}$

and

![]() $\lvert k_{\mathfrak l}\rvert \leq L^{\theta }$

for all the uncoloured leaves. The rest of the modes are determined according to Definition 2.2.

$\lvert k_{\mathfrak l}\rvert \leq L^{\theta }$

for all the uncoloured leaves. The rest of the modes are determined according to Definition 2.2.

1.2.3 An

$\ell ^2$

operator norm bound

$\ell ^2$

operator norm bound

In contrast to the tree terms

![]() $\mathcal J_{\mathcal{T}\,}$

, the remainder term

$\mathcal J_{\mathcal{T}\,}$

, the remainder term

![]() $\mathcal R_{N+1}$

has no explicit random structure. Indeed, the only way it feels the ‘chaos’ of the initial data is through the equation it satisfies, which in integral form and spatial Fourier variables looks like

$\mathcal R_{N+1}$

has no explicit random structure. Indeed, the only way it feels the ‘chaos’ of the initial data is through the equation it satisfies, which in integral form and spatial Fourier variables looks like

where

![]() $\mathcal J_{\sim N}$

is a sum of Feynman trees

$\mathcal J_{\sim N}$

is a sum of Feynman trees

![]() $\mathcal J_{\mathcal{T}\,}$

(already described) of scale

$\mathcal J_{\mathcal{T}\,}$

(already described) of scale

![]() $\mathfrak s (\mathcal{T}\,\,)\sim N$

, and

$\mathfrak s (\mathcal{T}\,\,)\sim N$

, and

![]() $\mathcal L$

,

$\mathcal L$

,

![]() $\mathcal Q$

and

$\mathcal Q$

and

![]() $\mathcal C$

are, respectively, linear, bilinear and trilinear operator in

$\mathcal C$

are, respectively, linear, bilinear and trilinear operator in

![]() $\mathcal R_{N+1}$

. The main point here is that one would like to propagate the estimates on

$\mathcal R_{N+1}$

. The main point here is that one would like to propagate the estimates on

![]() $\mathcal J_{\sim N}$

to

$\mathcal J_{\sim N}$

to

![]() $\mathcal R_{N+1}$

itself; this is how we make rigorous the so-called ‘propagation of chaos or quasi-Gaussianity’ claims that are often adopted in formal derivations. In another aspect, qualitative results on propagation of quasi-Gaussianity, in the form of absolute continuity of measures, have been obtained in some cases (with different settings) by exploiting almost-conservation laws (e.g., [Reference Tzvetkov48]).

$\mathcal R_{N+1}$

itself; this is how we make rigorous the so-called ‘propagation of chaos or quasi-Gaussianity’ claims that are often adopted in formal derivations. In another aspect, qualitative results on propagation of quasi-Gaussianity, in the form of absolute continuity of measures, have been obtained in some cases (with different settings) by exploiting almost-conservation laws (e.g., [Reference Tzvetkov48]).

Since we are bootstrapping a smallness estimate on

![]() $\mathcal R_{N+1}$

, any quadratic and cubic form of

$\mathcal R_{N+1}$

, any quadratic and cubic form of

![]() $\mathcal R_{N+1}$

will be easily bounded. It therefore suffices to propagate the bound for the term

$\mathcal R_{N+1}$

will be easily bounded. It therefore suffices to propagate the bound for the term

![]() $\mathcal L(\mathcal R_{N+1})$

, which reduces to bounding the

$\mathcal L(\mathcal R_{N+1})$

, which reduces to bounding the

![]() $\ell ^2\to \ell ^2$

operator norm for the linear operator

$\ell ^2\to \ell ^2$

operator norm for the linear operator

![]() $\mathcal L$

. By definition, the operator

$\mathcal L$

. By definition, the operator

![]() $\mathcal L$

will have the form

$\mathcal L$

will have the form

![]() $v\mapsto \mathcal {IW}\left (\mathcal J_{\mathcal{T}\,_1}, \mathcal J_{\mathcal{T}\,_2}, v\right )$

, where

$v\mapsto \mathcal {IW}\left (\mathcal J_{\mathcal{T}\,_1}, \mathcal J_{\mathcal{T}\,_2}, v\right )$

, where

![]() $\mathcal {I}$

is the Duhamel operator,

$\mathcal {I}$

is the Duhamel operator,

![]() $\mathcal {W}$

is the trilinear form coming from the cubic nonlinearity and

$\mathcal {W}$

is the trilinear form coming from the cubic nonlinearity and

![]() $\mathcal J_{\mathcal{T}\,_1}, \mathcal J_{\mathcal{T}\,_2}$

are trees of scale

$\mathcal J_{\mathcal{T}\,_1}, \mathcal J_{\mathcal{T}\,_2}$

are trees of scale

![]() $\leq N$

; thus in Fourier space it can be viewed as a matrix with random coefficients. The key to obtaining the sharp estimate for

$\leq N$

; thus in Fourier space it can be viewed as a matrix with random coefficients. The key to obtaining the sharp estimate for

![]() $\mathcal L$

is then to exploit the cancellation coming from this randomness, and the most efficient way to do this is via the

$\mathcal L$

is then to exploit the cancellation coming from this randomness, and the most efficient way to do this is via the

![]() $TT^*$

method.

$TT^*$

method.

In fact, the idea of applying the

![]() $TT^*$

method to random matrices has already been used by Bourgain [Reference Bourgain3]. In that paper one is still far above (probabilistic) criticality, so applying the

$TT^*$

method to random matrices has already been used by Bourgain [Reference Bourgain3]. In that paper one is still far above (probabilistic) criticality, so applying the

![]() $TT^*$

method once already gives adequate control. In the present case, however, we are aiming at obtaining sharp estimates, so applying

$TT^*$

method once already gives adequate control. In the present case, however, we are aiming at obtaining sharp estimates, so applying

![]() $TT^*$

once will not be sufficient.

$TT^*$

once will not be sufficient.

The solution is thus to apply

![]() $TT^*$

sufficiently many times (say,

$TT^*$

sufficiently many times (say,

![]() $D\gg 1$

), which leads to the analysis of the kernel of the operator

$D\gg 1$

), which leads to the analysis of the kernel of the operator

![]() $(\mathcal L\mathcal L^*)^D$

. At first sight this kernel seems to be a complicated multilinear expression which is difficult to handle; nevertheless, we make one key observation, namely that this kernel can essentially be recast in the form of formula (1.7) for some large auxiliary tree

$(\mathcal L\mathcal L^*)^D$

. At first sight this kernel seems to be a complicated multilinear expression which is difficult to handle; nevertheless, we make one key observation, namely that this kernel can essentially be recast in the form of formula (1.7) for some large auxiliary tree

![]() $\mathcal{T}\,=\mathcal{T}\;^D$

, which is obtained from a single root node by attaching copies of the trees

$\mathcal{T}\,=\mathcal{T}\;^D$

, which is obtained from a single root node by attaching copies of the trees

![]() $\mathcal{T}\,_1$

and

$\mathcal{T}\,_1$

and

![]() $\mathcal{T}\,_2$

successively a total of

$\mathcal{T}\,_2$

successively a total of

![]() $2D$

times (see Figure 4). With this observation, the arguments in the previous section then lead to sharp bounds of the kernel of

$2D$

times (see Figure 4). With this observation, the arguments in the previous section then lead to sharp bounds of the kernel of

![]() $(\mathcal L\mathcal L^*)^D$

, up to some loss that is a power of L independent of D; taking the

$(\mathcal L\mathcal L^*)^D$

, up to some loss that is a power of L independent of D; taking the

![]() $1/(2D)$

power and choosing D sufficiently large makes this power negligible and implies the sharp bound for the operator norm of

$1/(2D)$

power and choosing D sufficiently large makes this power negligible and implies the sharp bound for the operator norm of

![]() $\mathcal L$

(see Section 3.3).

$\mathcal L$

(see Section 3.3).

Figure 4 Construction of the tree

![]() $\mathcal{T}\,^D$

by successive plantings of trees

$\mathcal{T}\,^D$

by successive plantings of trees

![]() $\mathcal{T}\,_1$

and

$\mathcal{T}\,_1$

and

![]() $\mathcal{T}\,_2$

onto the first two nodes of a ternary tree, starting with a root

$\mathcal{T}\,_2$

onto the first two nodes of a ternary tree, starting with a root

![]() $\mathfrak r$

and stopping after

$\mathfrak r$

and stopping after

![]() $2D$

steps, leaving a leaf node

$2D$

steps, leaving a leaf node

![]() $\mathfrak r'$

. In the figure,

$\mathfrak r'$

. In the figure,

![]() $D=2$

.

$D=2$

.

1.2.4 Sharpness of estimates

We remark that the estimates we prove for

![]() $\mathcal J_{\mathcal{T}\,}$

are sharp up to some finite power of L (independent of

$\mathcal J_{\mathcal{T}\,}$

are sharp up to some finite power of L (independent of

![]() $\mathcal{T}\,$

). More precisely, from Proposition 2.5 we know that for any ternary tree

$\mathcal{T}\,$

). More precisely, from Proposition 2.5 we know that for any ternary tree

![]() $\mathcal{T}\,$

of scale n and possible pairing structure (see Definition 3.3), with overwhelming probability,

$\mathcal{T}\,$

of scale n and possible pairing structure (see Definition 3.3), with overwhelming probability,

$$ \begin{align}\sup_k\left\lVert(\mathcal J_{\mathcal{T}})_k\right\rVert_{h^{b}}\leq L^{0+}\rho^n, \end{align} $$

$$ \begin{align}\sup_k\left\lVert(\mathcal J_{\mathcal{T}})_k\right\rVert_{h^{b}}\leq L^{0+}\rho^n, \end{align} $$

where

![]() $\rho $

is some quantity depending on

$\rho $

is some quantity depending on

![]() $\alpha $

, L and T (see formula (2.24)), k is the spatial Fourier variable and

$\alpha $

, L and T (see formula (2.24)), k is the spatial Fourier variable and

![]() $h^b$

is a time-Sobolev norm defined in equation (2.22); on the other hand, we will show that that for some particular choice of trees

$h^b$

is a time-Sobolev norm defined in equation (2.22); on the other hand, we will show that that for some particular choice of trees

![]() $\mathcal{T}\,$

of scale n and some particular choice of pairings, with high probability,

$\mathcal{T}\,$

of scale n and some particular choice of pairings, with high probability,

$$ \begin{align}\sup_k\left\lVert(\mathcal J_{\mathcal{T}})_k\right\rVert_{h^{b}}\geq L^{-d}\rho^n. \end{align} $$

$$ \begin{align}\sup_k\left\lVert(\mathcal J_{\mathcal{T}})_k\right\rVert_{h^{b}}\geq L^{-d}\rho^n. \end{align} $$

The timescale T of Theorem 1.3 is the largest that makes

![]() $\rho \ll 1$

; thus if one wants to go beyond T in cases other than Theorem 1.1, it would be necessary to address the divergence of formula (1.9) with

$\rho \ll 1$

; thus if one wants to go beyond T in cases other than Theorem 1.1, it would be necessary to address the divergence of formula (1.9) with

![]() $\rho \gg 1$

by exploiting the cancellation between different tree terms or different pairing choices (see Section 3.4).

$\rho \gg 1$

by exploiting the cancellation between different tree terms or different pairing choices (see Section 3.4).

1.2.5 Discussions

Shortly after the completion of this paper, work of Collot and Germain [Reference Collot and Germain12] was announced that studies the same problem, but only in the rational-torus setting. In the language of this paper, their result corresponds to the validity of equation (1.3) for

![]() $L\leq t\leq L^{2-\delta }$

, under the assumption

$L\leq t\leq L^{2-\delta }$

, under the assumption

![]() $\alpha \leq L^{-1-\delta }$

. This is a special case of Theorem 1.3, essentially corresponding to the rectangle below the horizontal line

$\alpha \leq L^{-1-\delta }$

. This is a special case of Theorem 1.3, essentially corresponding to the rectangle below the horizontal line

![]() $\log _LT=2$

and to the right of the vertical line

$\log _LT=2$

and to the right of the vertical line

![]() $\log _L\left (\alpha ^{-1}\right )=1$

in Figure 1. We also mention later work by the same authors [Reference Collot and Germain13], where they consider a generic nonrectangular torus (as opposed to the rectangular tori here and in [Reference Collot and Germain12]) and prove the existence of solutions (but without justifying equation (1.3)) up to time

$\log _L\left (\alpha ^{-1}\right )=1$

in Figure 1. We also mention later work by the same authors [Reference Collot and Germain13], where they consider a generic nonrectangular torus (as opposed to the rectangular tori here and in [Reference Collot and Germain12]) and prove the existence of solutions (but without justifying equation (1.3)) up to time

![]() $t\leq L^{-\delta }T_{\mathrm {kin}}$

for a wider range of power laws between

$t\leq L^{-\delta }T_{\mathrm {kin}}$

for a wider range of power laws between

![]() $\alpha $

and L.

$\alpha $

and L.

While the present paper was being peer-reviewed, we submitted new work to arXiv [Reference Deng and Hani16], in which we provide the first full derivation of (WKE) from (NLS). Those results reach the kinetic time scale

![]() $t=\tau \cdot T_{\mathrm {kin}}$

, where

$t=\tau \cdot T_{\mathrm {kin}}$

, where

![]() $\tau $

is independent of L (compared to Theorem 1.1 here, where

$\tau $

is independent of L (compared to Theorem 1.1 here, where

![]() $\tau \leq L^{-\varepsilon }$

), for the scaling law

$\tau \leq L^{-\varepsilon }$

), for the scaling law

![]() $\alpha \sim L^{-1}$

on generic (irrational) rectangular tori and the scaling laws

$\alpha \sim L^{-1}$

on generic (irrational) rectangular tori and the scaling laws

![]() $\alpha \sim L^{-\gamma }$

(where

$\alpha \sim L^{-\gamma }$

(where

![]() $\gamma <1$

and is close to

$\gamma <1$

and is close to

![]() $1$

) on arbitrary rectangular tori.

$1$

) on arbitrary rectangular tori.

Shortly after completing [Reference Deng and Hani16], we received a preprint of a forthcoming deep work by Staffilani and Tran [Reference Staffilani and Tran47]. It concerns a high-dimensional (on

![]() $\mathbb {T}^d$

for

$\mathbb {T}^d$

for

![]() $d\geq 14$

) KdV equation under a time-dependent Stratonovich stochastic forcing, which effectively randomises the phases without injecting energy into the system. The authors derive the corresponding wave kinetic equation up to the kinetic time scale, for the scaling law

$d\geq 14$

) KdV equation under a time-dependent Stratonovich stochastic forcing, which effectively randomises the phases without injecting energy into the system. The authors derive the corresponding wave kinetic equation up to the kinetic time scale, for the scaling law

![]() $\alpha \sim L^{-0}$

(i.e., first taking

$\alpha \sim L^{-0}$

(i.e., first taking

![]() $L\to \infty $

and then taking

$L\to \infty $

and then taking

![]() $\alpha \to 0$

). They also prove a conditional result without such forcing, where the condition is verified for some particular initial densities converging to the equilibrium state (stationary solution to the wave kinetic equation) in the limit.

$\alpha \to 0$

). They also prove a conditional result without such forcing, where the condition is verified for some particular initial densities converging to the equilibrium state (stationary solution to the wave kinetic equation) in the limit.

1.3 Organisation of the paper

In Section 2 we explain the diagrammatic expansion of the solution into Feynman trees, and state the a priori estimates on such trees and remainder terms, which yield the long-time existence of such expansions. Section 3 is devoted to the proof of those a priori estimates. In Section 4 we prove the main theorems already mentioned, and in Section 5 we prove the necessary number-theoretic results that allow us to replace the highly oscillating Riemann sums by integrals.

1.4 Notation

Most notation will be standard. Let

![]() $z^+=z$

and

$z^+=z$

and

![]() $z^-=\overline {z}$

. Define

$z^-=\overline {z}$

. Define

![]() $\left \lvert k\right \rvert _{\beta }$

by

$\left \lvert k\right \rvert _{\beta }$

by

$\left \lvert k\right \rvert _{\beta }^2=\beta _1k_1^2+\cdots +\beta _dk_d^2$

for

$\left \lvert k\right \rvert _{\beta }^2=\beta _1k_1^2+\cdots +\beta _dk_d^2$

for

![]() $k=(k_1,\ldots ,k_d)$

. The spatial Fourier series of a function

$k=(k_1,\ldots ,k_d)$

. The spatial Fourier series of a function

$u: {\mathbb T}_L^d \to \mathbb C$

is defined on

$u: {\mathbb T}_L^d \to \mathbb C$

is defined on

$\mathbb Z^d_L:=L^{-1}\mathbb Z^{d}$

by

$\mathbb Z^d_L:=L^{-1}\mathbb Z^{d}$

by

$$ \begin{align} \widehat{u}_k=\int_{{\mathbb T}^d_L} u(x) e^{-2\pi i k\cdot x},\quad \text{so that}\quad u(x)=\frac{1}{L^d}\sum_{k \in \mathbb Z^d_L} \widehat{u}_k e^{2\pi i k\cdot x}. \end{align} $$

$$ \begin{align} \widehat{u}_k=\int_{{\mathbb T}^d_L} u(x) e^{-2\pi i k\cdot x},\quad \text{so that}\quad u(x)=\frac{1}{L^d}\sum_{k \in \mathbb Z^d_L} \widehat{u}_k e^{2\pi i k\cdot x}. \end{align} $$

The temporal Fourier transform is defined by

$$ \begin{align*} \widetilde{f}(\tau)=\int_{\mathbb{R}}e^{-2\pi it\tau}f(t)\mathrm{d}t. \end{align*} $$

$$ \begin{align*} \widetilde{f}(\tau)=\int_{\mathbb{R}}e^{-2\pi it\tau}f(t)\mathrm{d}t. \end{align*} $$

Let

![]() $\delta>0$

be fixed throughout the paper. Let N, s and

$\delta>0$

be fixed throughout the paper. Let N, s and

$b>\frac {1}{2}$

be fixed, such that N and s are large enough and

$b>\frac {1}{2}$

be fixed, such that N and s are large enough and

$b-\frac {1}{2}$

is small enough, depending on d and

$b-\frac {1}{2}$

is small enough, depending on d and

![]() $\delta $

. The quantity C will denote any large absolute constant, not dependent on

$\delta $

. The quantity C will denote any large absolute constant, not dependent on

$\big(N,s,b-\frac {1}{2}\big)$

, and

$\big(N,s,b-\frac {1}{2}\big)$

, and

![]() $\theta $

will denote any small positive constant, which is dependent on

$\theta $

will denote any small positive constant, which is dependent on

$\big(N,s,b-\frac {1}{2}\big)$

; these may change from line to line. The symbols

$\big(N,s,b-\frac {1}{2}\big)$

; these may change from line to line. The symbols

![]() $O(\cdot )$

,

$O(\cdot )$

,

![]() $\lesssim $

and so on will have their usual meanings, with implicit constants depending on

$\lesssim $

and so on will have their usual meanings, with implicit constants depending on

![]() $\theta $

. Let L be large enough depending on all these implicit constants. If some statement S involving

$\theta $

. Let L be large enough depending on all these implicit constants. If some statement S involving

![]() $\omega $

is true with probability

$\omega $

is true with probability

![]() $\geq 1-Ke^{-L^{\theta }}$

for some constant K (depending on

$\geq 1-Ke^{-L^{\theta }}$

for some constant K (depending on

![]() $\theta $

), then we say this statement S is L-certain.

$\theta $

), then we say this statement S is L-certain.

When a function depends on many variables, we may use notations like

to denote a function f of variables

![]() $(x_i:i\in A)$

and

$(x_i:i\in A)$

and

![]() $y_1,\ldots ,y_m$

.

$y_1,\ldots ,y_m$

.

2 Tree expansions and long-time existence

2.1 First reductions

Let

![]() $\widehat {u}_k(t)$

be the Fourier coefficients of

$\widehat {u}_k(t)$

be the Fourier coefficients of

![]() $u(t)$

, as in equation (1.10). Then with

$u(t)$

, as in equation (1.10). Then with

$c_k(t):= e^{2\pi i\left \lvert k\right \rvert _{\beta }^2t} \widehat u_k(t)=\left (\mathcal F_{{\mathbb T}^d_L} e^{-it\Delta _{\beta }} u\right )(k)$

, we arrive at the following equation for the Fourier modes:

$c_k(t):= e^{2\pi i\left \lvert k\right \rvert _{\beta }^2t} \widehat u_k(t)=\left (\mathcal F_{{\mathbb T}^d_L} e^{-it\Delta _{\beta }} u\right )(k)$

, we arrive at the following equation for the Fourier modes: