1 Introduction

Over 50 years ago Strassen [Reference Strassen40] discovered that the usual row-column method for multiplying $\mathbf {n}\times \mathbf {n}$ matrices, which uses $O(\mathbf {n}^3)$ arithmetic operations, is not optimal by exhibiting an explicit algorithm to multiply matrices using $O(\mathbf {n}^{2.81})$ arithmetic operations. Ever since then, substantial efforts have been made to determine just how efficiently matrices may be multiplied. See any of [Reference Bürgisser, Clausen and Shokrollahi12, Reference Bläser8, Reference Landsberg31] for an overview. Matrix multiplication of $\mathbf {n}\times {\boldsymbol \ell }$ matrices with ${\boldsymbol \ell }\times \mathbf {m}$ matrices is a bilinear map, that is, a tensor $M_{\langle {\boldsymbol \ell },\mathbf {m},\mathbf {n}\rangle }\in \mathbb C^{{\boldsymbol \ell }\mathbf {m}}{\mathord { \otimes } } \mathbb C^{\mathbf {m}\mathbf {n}}{\mathord { \otimes } } \mathbb C^{\mathbf {n}{\boldsymbol \ell }}$, and since 1980 [Reference Bini6], the primary complexity measure of the matrix multiplication tensor has been its border rank, which is defined as follows.

A nonzero tensor $T\in \mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {b}}{\mathord { \otimes } } \mathbb C^{\mathbf {c}}=:A{\mathord { \otimes } } B{\mathord { \otimes } } C$ has rank one if $T=a{\mathord { \otimes } } b{\mathord { \otimes } } c$ for some $a\in A$, $b\in B$, $c\in C$, and the rank of T, denoted $\mathbf {R} (T)$, is the smallest r such that T may be written as a sum of r rank one tensors. The border rank of T, denoted $\underline {\mathbf {R}}(T)$, is the smallest r such that T may be written as a limit of sums of r rank one tensors. In geometric language, the border rank is the smallest r such that $[T]\in \sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$. Here, $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$ denotes the r-th secant variety of the Segre variety of rank one tensors. For the relations between rank, border rank and other measures of complexity, see [Reference Bürgisser, Clausen and Shokrollahi12, Ch. 14-15].
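As a concrete instance of these definitions, the following minimal sketch (plain Python; the helper names `matmul_tensor`, `strassen_decomposition`, `sum_of_rank_ones` and `w_tensor_gap` are ours and do not come from the paper) checks two standard facts. First, the seven products of Strassen's algorithm express $M_{\langle 2\rangle }$ as a sum of 7 rank one tensors. Second, the tensor $a{\mathord { \otimes } } a{\mathord { \otimes } } b+a{\mathord { \otimes } } b{\mathord { \otimes } } a+b{\mathord { \otimes } } a{\mathord { \otimes } } a$, which is known to have rank 3, is a limit of sums of 2 rank one tensors, so its border rank is at most 2. The code indexes the third factor by the entries of the output matrix, an assumption of convenience that does not affect rank.

```python
from itertools import product

def matmul_tensor(n):
    """Structure tensor of n x n matrix multiplication as {(a, b, c): 1}.

    Entry a of X times entry b of Y contributes to entry c of XY
    (matrix entries are flattened row-major).
    """
    return {(i * n + j, j * n + k, i * n + k): 1
            for i, j, k in product(range(n), repeat=3)}

def strassen_decomposition():
    """Strassen's seven products as rows (u_p, v_p, w_p) of U, V, W."""
    # Columns: X11, X12, X21, X22
    U = [(1, 0, 0, 1), (0, 0, 1, 1), (1, 0, 0, 0), (0, 0, 0, 1),
         (1, 1, 0, 0), (-1, 0, 1, 0), (0, 1, 0, -1)]
    # Columns: Y11, Y12, Y21, Y22
    V = [(1, 0, 0, 1), (1, 0, 0, 0), (0, 1, 0, -1), (-1, 0, 1, 0),
         (0, 0, 0, 1), (1, 1, 0, 0), (0, 0, 1, 1)]
    # Columns: Z11, Z12, Z21, Z22 (how each product enters the output)
    W = [(1, 0, 0, 1), (0, 0, 1, -1), (0, 1, 0, 1), (1, 0, 1, 0),
         (-1, 1, 0, 0), (0, 0, 0, 1), (1, 0, 0, 0)]
    return U, V, W

def sum_of_rank_ones(U, V, W):
    """Sum over p of u_p (x) v_p (x) w_p, keeping only nonzero entries."""
    T = {}
    for u, v, w in zip(U, V, W):
        for a, b, c in product(range(len(u)), range(len(v)), range(len(w))):
            coeff = u[a] * v[b] * w[c]
            if coeff:
                T[(a, b, c)] = T.get((a, b, c), 0) + coeff
    return {key: val for key, val in T.items() if val != 0}

def w_tensor_gap(t):
    """Euclidean distance between a(x)a(x)b + a(x)b(x)a + b(x)a(x)a and the
    sum of two rank one tensors ((a + t b)^{(x)3} - a^{(x)3}) / t."""
    a, b = (1.0, 0.0), (0.0, 1.0)
    s = tuple(x + t * y for x, y in zip(a, b))
    gap_sq = 0.0
    for i, j, k in product(range(2), repeat=3):
        target = a[i] * a[j] * b[k] + a[i] * b[j] * a[k] + b[i] * a[j] * a[k]
        approx = (s[i] * s[j] * s[k] - a[i] * a[j] * a[k]) / t
        gap_sq += (approx - target) ** 2
    return gap_sq ** 0.5

U, V, W = strassen_decomposition()
print(sum_of_rank_ones(U, V, W) == matmul_tensor(2))  # True
print([w_tensor_gap(t) for t in (1e-1, 1e-2, 1e-3)])  # shrinks as t -> 0
```

The first check only shows $\mathbf {R}(M_{\langle 2\rangle })\leq 7$; lower bounds, the subject of this paper, require different tools.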

Despite the vast literature on matrix multiplication, previous to this paper, the precise border rank of

![]() $M_{\langle {\boldsymbol \ell },\mathbf {m},\mathbf {n}\rangle }$

was known in exactly one nontrivial case, namely

$M_{\langle {\boldsymbol \ell },\mathbf {m},\mathbf {n}\rangle }$

was known in exactly one nontrivial case, namely

![]() $M_{\langle 2\rangle }=M_{\langle 222\rangle }$

[Reference Landsberg29]. We determine the border rank in two new cases,

$M_{\langle 2\rangle }=M_{\langle 222\rangle }$

[Reference Landsberg29]. We determine the border rank in two new cases,

![]() $M_{\langle 223\rangle }$

and

$M_{\langle 223\rangle }$

and

![]() $M_{\langle 233\rangle }$

. We prove new border rank lower bounds for

$M_{\langle 233\rangle }$

. We prove new border rank lower bounds for

![]() $M_{\langle 3\rangle }$

and two infinite sequences of new cases,

$M_{\langle 3\rangle }$

and two infinite sequences of new cases,

![]() $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$

and

$M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$

and

![]() $M_{\langle 3\mathbf {n}\mathbf {n}\rangle }$

. Previous to this paper, there were no nontrivial lower bounds for these sequences. In fact, there were no nontrivial border rank lower bounds for any tensor in

$M_{\langle 3\mathbf {n}\mathbf {n}\rangle }$

. Previous to this paper, there were no nontrivial lower bounds for these sequences. In fact, there were no nontrivial border rank lower bounds for any tensor in

![]() $\mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {b}}$

, where

$\mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {b}}$

, where

![]() $\mathbf {b}>2\mathbf {a}$

other than Lickteig’s near trivial bound [Reference Lickteig37]

$\mathbf {b}>2\mathbf {a}$

other than Lickteig’s near trivial bound [Reference Lickteig37]

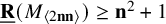

![]() $\underline {\mathbf {R}}(M_{\langle \mathbf {m},\mathbf {n},\mathbf {n}\rangle })\geq \mathbf {n}^2+1$

when

$\underline {\mathbf {R}}(M_{\langle \mathbf {m},\mathbf {n},\mathbf {n}\rangle })\geq \mathbf {n}^2+1$

when

![]() $\mathbf {m}<\mathbf {n}$

, (where the bound of

$\mathbf {m}<\mathbf {n}$

, (where the bound of

![]() $\mathbf {n}^2$

is trivial). We also determine the border rank of the

$\mathbf {n}^2$

is trivial). We also determine the border rank of the

![]() $3\times 3$

determinant considered as a tensor, which is important for proving upper bounds on the exponent of matrix multiplication as discussed below. See §1.2 below for precise statements.

$3\times 3$

determinant considered as a tensor, which is important for proving upper bounds on the exponent of matrix multiplication as discussed below. See §1.2 below for precise statements.

1.1 Methods/History

This paper deals exclusively with lower bounds (“complexity theory’s Waterloo” according to [Reference Arora and Barak5, Chap. 14]). For a history of upper bounds, see, for example, [Reference Bläser8, Reference Landsberg31].

Let $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$ denote the set of tensors of border rank at most r, which is called the r-th secant variety of the Segre variety. Previously, border rank lower bounds for tensors were primarily obtained by finding a polynomial vanishing on $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$ and then showing the polynomial is nonzero when evaluated on the tensor in question. These polynomials were found by reducing multilinear algebra to linear algebra [Reference Strassen41], and also by exploiting the large symmetry group of $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$ to help find the polynomials [Reference Landsberg and Ottaviani35, Reference Landsberg and Ottaviani36]. Such methods are subject to barriers [Reference Efremenko, Garg, Oliveira and Wigderson18, Reference Gałązka21]; see [Reference Landsberg32, §2.2] for an overview. A technique allowing one to go slightly beyond the barriers was introduced in [Reference Landsberg and Michałek34]. The novelty there was, in addition to exploiting the symmetry group of $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$, to also exploit the symmetry group of the tensor one wanted to prove lower bounds on. This border substitution method of [Reference Landsberg and Michałek34] relied on first using the symmetry of the tensor to study its degenerations via the Normal Form Lemma 2.3, and then using polynomials on the degeneration of the tensor.

The classical apolarity method studies the decompositions of a homogeneous polynomial of degree d into a sum of d-th powers of linear forms (these are called Waring rank decompositions); see, for example, [Reference Iarrobino and Kanev27]. It was generalized to study ranks of points with respect to toric varieties [Reference Gałązka22, Reference Gallet, Ranestad and Villamizar23]. To prove rank lower bounds with it, one takes the ideal of linear differential operators annihilating a given polynomial P and proves it does not contain an ideal annihilating r distinct points. In [Reference Buczyńska and Buczyński11], Buczyńska and Buczyński extend this classical method to the border rank setting. They also extend the Normal Form Lemma to the entire ideal associated to the border rank decomposition of the tensor, obtaining their Fixed Ideal Theorem (Theorem 2.4). (In the language introduced below, the Normal Form Lemma is the $(111)$ case of the Fixed Ideal Theorem.) In the present work, we describe an algorithm to enumerate a set of parameterized families of ideals which together exhaust those which could satisfy the conclusion of the Fixed Ideal Theorem, and we show this enumeration fails to produce any candidates in important cases of interest.

The ideals subject to enumeration are homogeneous in three sets of variables, so we have a $\mathbb {Z}^3$-graded ring of polynomials, that is, $I=\bigoplus _{i,j,k} I_{ijk}$, and we may study a putative ideal I in each multidegree. Given r, the ideal enumeration algorithm builds a candidate ideal family step by step, starting in low (multi) degree and building upwards. At each building step, there are tests that restrict a so-far built family to a subfamily, and after these tests empty families are removed. If at any point there are no remaining candidates, one concludes there is no border rank r decomposition. For tensors with large symmetry groups, the dimensions of candidate ideal families one needs to consider during this enumeration are typically small. All the results of this paper require examining only the first few multigraded components of candidate ideal families.

The restrictions to subfamilies result from upper bounding the ranks of certain linear maps. The linear maps are multiplication maps. On one hand, in order for a candidate space of polynomials to be an ideal, it must be closed under multiplication. On the other hand, our hypothesis that the ideal arises via a border rank r decomposition upper-bounds its dimension in each nontrivial multidegree; in fact one may assume it has codimension r in each multidegree.
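The dimension bookkeeping behind these rank conditions can be sketched as follows. This is only an illustration under stated assumptions: the ring is taken to be the polynomial ring in three sets of variables of sizes $\mathbf {a},\mathbf {b},\mathbf {c}$, the candidate component in each multidegree is taken to have codimension exactly r, and the map shown is multiplication of a candidate $I_{110}$ by the degree one variables of the first set, landing in multidegree $(2,1,0)$. The function names are hypothetical.

```python
from math import comb

def ring_dim(dims, multidegree):
    """Dimension of the multidegree (i, j, k) part of the polynomial ring
    in three sets of variables of sizes dims = (a, b, c)."""
    total = 1
    for n_vars, degree in zip(dims, multidegree):
        # Number of monomials of the given degree in n_vars variables.
        total *= comb(n_vars + degree - 1, degree)
    return total

def candidate_dim(dims, multidegree, r):
    """Dimension of a candidate I_{ijk}, assumed to have codimension r."""
    return ring_dim(dims, multidegree) - r

def multiplication_test_shape(dims, r):
    """Sizes for the map  I_110 (x) (first-set variables) -> ring_210.

    Returns (source dimension, target dimension, largest admissible rank).
    The image must lie in a candidate I_210 of codimension r, so a
    candidate I_110 whose map has larger rank is eliminated.
    """
    source = candidate_dim(dims, (1, 1, 0), r) * dims[0]
    target = ring_dim(dims, (2, 1, 0))
    return source, target, target - r

# M_<2> lives in C^4 (x) C^4 (x) C^4; testing border rank r = 6:
print(multiplication_test_shape((4, 4, 4), 6))  # (40, 40, 34)
```

Under these assumptions the test for $M_{\langle 2\rangle }$ with $r=6$ involves a $40\times 40$ matrix whose rank may be at most 34 for a candidate to survive.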

The fact that the elimination conditions are rank conditions implies that the lower bound barriers [Reference Efremenko, Garg, Oliveira and Wigderson18, Reference Gałązka21] still hold for the technique as presented in this paper. In §1.3, we explain how we plan to augment these tests to go beyond the barriers in future work and how our techniques might be used to overcome upper bound barriers for the laser method as well.

We use representation theory at several levels. For tensors with symmetry, the Fixed Ideal Theorem significantly restricts the candidate $I_{ijk}$’s one must consider, namely to those that are fixed by a Borel subgroup of the symmetry group of the tensor. Without this additional condition, even low degree ideal enumeration would likely be impossible to carry out except for very small examples.

We also make standard use of representation theory to put the matrices whose ranks we need to lower-bound in block diagonal format via Schur’s lemma. For example, to prove

![]() $\underline {\mathbf {R}}(M_{\langle 2\rangle })>6$

, the border apolarity method produces three size

$\underline {\mathbf {R}}(M_{\langle 2\rangle })>6$

, the border apolarity method produces three size

![]() $40\times 40$

matrices whose ranks need to be lower bounded. Decomposing the matrices to maps between isotypic components reduces the calculation to computing the ranks of several matrices of size

$40\times 40$

matrices whose ranks need to be lower bounded. Decomposing the matrices to maps between isotypic components reduces the calculation to computing the ranks of several matrices of size

![]() $4\times 8$

with entries

$4\times 8$

with entries

![]() $0,\pm 1$

, making the proof easily hand-checkable.

$0,\pm 1$

, making the proof easily hand-checkable.

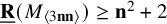

Our results for $M_{\langle 3\rangle }$ and $\operatorname {det}_3$ are obtained by a computer implementation of the ideal enumeration algorithm.

For $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$ and $M_{\langle 3\mathbf {n}\mathbf {n}\rangle }$, we must handle all $\mathbf {n}$ uniformly, and a computer calculation is no longer possible. To do this, we consider potential $I_{110}$ candidates as certain sums of ‘local’ contributions, which we analyze separately (Lemmas 7.2 and 7.4). Given this analysis, it is possible to give a purely combinatorial necessary condition for the suitability of a potential $I_{110}$ candidate, and the analysis of all potential candidates then takes the form of a combinatorial optimization problem over filled Young diagrams (Lemma 7.7). This technique reduces the problem to checking three cases of local contribution for $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$ and eight cases for $M_{\langle 3\mathbf {n}\mathbf {n}\rangle }$. This method for proving lower bounds is completely different from previous techniques.

To enable a casual reader to see the various techniques we employ, we return to the proof that $\underline {\mathbf {R}}(M_{\langle 2\rangle })>6$ multiple times: first using the general algorithm naïvely in §4, then working dually to reduce the calculation (Remark 4.1), then using representation theory to block diagonalize the calculation in §6.2, and finally observing that the result is an immediate consequence of our localization principle and Lemma 7.2 (Remark 7.3).

1.2 Results

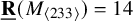

Theorem 1.1.

![]() $\underline {\mathbf {R}}(M_{\langle 3\rangle })\geq 17$

.

$\underline {\mathbf {R}}(M_{\langle 3\rangle })\geq 17$

.

The previous lower bounds were $14$ [Reference Strassen41] in 1983, $15$ [Reference Landsberg and Ottaviani36] in 2015 and $16$ [Reference Landsberg and Michałek34] in 2018.

Let $\operatorname {det}_3\in \mathbb C^9{\mathord { \otimes } } \mathbb C^9{\mathord { \otimes } } \mathbb C^9$ denote the $3\times 3$ determinant polynomial considered as a tensor. That is, as a bilinear map, it inputs two $3\times 3$ matrices and returns a third such that if the input is $(M,M)$, the output is the cofactor matrix of M.

Strassen’s laser method [Reference Strassen39] upper bounds the exponent of matrix multiplication using “simple” tensors. In [Reference Alman and Vassilevska Williams2, Reference Alman and Vassilevska Williams3, Reference Alman1, Reference Christandl, Vrana and Zuiddam13], barriers to proving further upper bounds with the method were found for many tensors. In [Reference Conner, Gesmundo, Landsberg and Ventura15], we showed that the (unique up to scale) skew-symmetric tensor in $\mathbb C^3{\mathord { \otimes } } \mathbb C^3{\mathord { \otimes } } \mathbb C^3$, which we denote $T_{skewcw,2}$, is not subject to these upper bound barriers and could potentially be used to prove the exponent of matrix multiplication is two via its Kronecker powers. Explicitly, if one were to prove that $\lim _{k{\mathord {\;\rightarrow \;}}\infty } \underline {\mathbf {R}}(T_{skewcw,2}^{\boxtimes k})^{\frac 1k}$ equals $3$, that would imply the exponent is two. One has $\underline {\mathbf {R}}(T_{skewcw,2})=5$ and $T_{skewcw,2}^{\boxtimes 2}=\operatorname {det}_3$; see [Reference Conner, Gesmundo, Landsberg and Ventura15]. Thus, the following result is important for matrix multiplication complexity upper bounds:

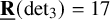

Theorem 1.2.

![]() $\underline {\mathbf {R}}(\operatorname {det}_3)= 17$

.

$\underline {\mathbf {R}}(\operatorname {det}_3)= 17$

.

The upper bound was proved in [Reference Conner, Gesmundo, Landsberg and Ventura15]. In [Reference Boij and Teitler9], a lower bound of $15$ for the Waring rank of $\operatorname {det}_3$ was proven. The previous border rank lower bound was $12$ as discussed in [Reference Farnsworth19], which follows from the Koszul flattening equations [Reference Landsberg and Ottaviani36]. Note that had the result here turned out differently, for example, were the border rank $16$ or lower, $T_{skewcw,2}$ would have immediately been promoted to the most promising tensor for proving the exponent is two; see the discussion in [Reference Conner, Gesmundo, Landsberg and Ventura15].
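The identity $T_{skewcw,2}^{\boxtimes 2}=\operatorname {det}_3$ and the description of $\operatorname {det}_3$ as a bilinear map can be checked on a sample matrix. The sketch below is an illustration under assumptions of our own: it takes the skew-symmetric tensor with the unnormalized coefficients $\pm 1$ (the sign of a permutation), so the trilinear form returns $6\det (M)$ on $(M,M,M)$ and the bilinear map returns twice the cofactor matrix on $(M,M)$. The function names are hypothetical, and the scalars 6 and 2 reflect only this choice of scale.

```python
from itertools import permutations, product

def sign(perm):
    """Sign of a permutation, computed by counting inversions."""
    inversions = sum(1 for i in range(len(perm))
                     for j in range(i + 1, len(perm)) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

# Nonzero coefficients of the skew-symmetric tensor in C^3 (x) C^3 (x) C^3.
EPS = {perm: sign(perm) for perm in permutations(range(3))}

def kronecker_square_trilinear(X, Y, Z):
    """Evaluate the Kronecker square of EPS on three 3 x 3 matrices.

    A pair of indices (p[t], q[t]) is read as a matrix entry.
    """
    return sum(s1 * s2 * X[p[0]][q[0]] * Y[p[1]][q[1]] * Z[p[2]][q[2]]
               for (p, s1), (q, s2) in product(EPS.items(), repeat=2))

def bilinear_output(X, Y):
    """Matrix whose (k, n) entry is the coefficient of Z[k][n] above."""
    N = [[0] * 3 for _ in range(3)]
    for (p, s1), (q, s2) in product(EPS.items(), repeat=2):
        N[p[2]][q[2]] += s1 * s2 * X[p[0]][q[0]] * Y[p[1]][q[1]]
    return N

def det3(M):
    """Leibniz formula for the 3 x 3 determinant."""
    return sum(s * M[0][p[0]] * M[1][p[1]] * M[2][p[2]]
               for p, s in EPS.items())

def cofactor_matrix(M):
    """Matrix of signed 2 x 2 minors of M."""
    def minor(r, c):
        rows = [i for i in range(3) if i != r]
        cols = [j for j in range(3) if j != c]
        return (M[rows[0]][cols[0]] * M[rows[1]][cols[1]]
                - M[rows[0]][cols[1]] * M[rows[1]][cols[0]])
    return [[(-1) ** (r + c) * minor(r, c) for c in range(3)]
            for r in range(3)]

M = [[2, -1, 3], [0, 4, 1], [5, 2, -2]]
print(kronecker_square_trilinear(M, M, M) == 6 * det3(M))  # True
print(bilinear_output(M, M)
      == [[2 * c for c in row] for row in cofactor_matrix(M)])  # True
```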

Remark 1.3. The computation of the trilinear map associated to $\operatorname {det}_3$, which inputs three matrices and outputs a number, is different from the computation of the associated polynomial, which inputs a single matrix and outputs a number. The polynomial may be computed using $12$ multiplications in the naïve algorithm and using $10$ with the algorithm in [Reference Derksen17].

Prior to this paper, $M_{\langle 2\rangle }$ was the only nontrivial matrix multiplication tensor whose border rank had been determined, despite $50$ years of work on the subject. We add two more cases to this list.

Theorem 1.4. $\underline {\mathbf {R}}(M_{\langle 223\rangle })=10$.

The upper bound dates back to Bini et al. in 1980 [Reference Bini, Lotti and Romani7]. Koszul flattenings [Reference Landsberg and Ottaviani36] give $\underline {\mathbf {R}}(M_{\langle 22\mathbf {n}\rangle })\geq 3\mathbf {n}$. Smirnov [Reference Smirnov38] showed that $\underline {\mathbf {R}}(M_{\langle 22\mathbf {n}\rangle })\leq 3\mathbf {n}+1$ for $\mathbf {n}\leq 7$, and we expect equality to hold for all $\mathbf {n}$.

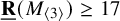

Theorem 1.5.

-

1.

$\underline {\mathbf {R}}(M_{\langle 233\rangle })=14$

.

$\underline {\mathbf {R}}(M_{\langle 233\rangle })=14$

. -

2. We have the following border rank lower bounds:

$$ \begin{align*} \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq & \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq & \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq \\ 4 & \quad 22=4^2+6 & 11 & \quad 136=11^2+15 & 18 & \quad 348= 18^2+24 \\ 5 & \quad 32=5^2+7 & 12 & \quad 161=12^2+17 & 19 & \quad 387=19^2+26 \\ 6 & \quad 44=6^2+8 & 13 & \quad 187=13^2+18 & 20 & \quad 427= 20^2+27 \\ 7 & \quad 58=7^2+9 & 14 & \quad 215=14^2+19 & 21 & \quad 470= 21^2+29 \\ 8 & \quad 75=8^2+11 & 15 & \quad 246=15^2+21 & 22 & \quad 514= 22^2+30 \\ 9 & \quad 93=9^2+12 & 16 & \quad 278=16^2+22 & 23 & \quad 561= 23^2+32 \\ 10 & \quad 114=10^2+14 & 17 & \quad 312=17^2+23 & 24 & \quad 609= 24^2+33. \end{align*} $$

$$ \begin{align*} \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq & \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq & \mathbf{n} &\quad \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle})\geq \\ 4 & \quad 22=4^2+6 & 11 & \quad 136=11^2+15 & 18 & \quad 348= 18^2+24 \\ 5 & \quad 32=5^2+7 & 12 & \quad 161=12^2+17 & 19 & \quad 387=19^2+26 \\ 6 & \quad 44=6^2+8 & 13 & \quad 187=13^2+18 & 20 & \quad 427= 20^2+27 \\ 7 & \quad 58=7^2+9 & 14 & \quad 215=14^2+19 & 21 & \quad 470= 21^2+29 \\ 8 & \quad 75=8^2+11 & 15 & \quad 246=15^2+21 & 22 & \quad 514= 22^2+30 \\ 9 & \quad 93=9^2+12 & 16 & \quad 278=16^2+22 & 23 & \quad 561= 23^2+32 \\ 10 & \quad 114=10^2+14 & 17 & \quad 312=17^2+23 & 24 & \quad 609= 24^2+33. \end{align*} $$

-

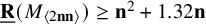

3. For

$0 < \epsilon < \frac {1}{4}$

, and

$0 < \epsilon < \frac {1}{4}$

, and

$\mathbf {n}>\frac {6}{\epsilon } \frac {3\sqrt {6}+6 - \epsilon }{6\sqrt {6} - \epsilon }$

,

$\mathbf {n}>\frac {6}{\epsilon } \frac {3\sqrt {6}+6 - \epsilon }{6\sqrt {6} - \epsilon }$

,

$\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+(3\sqrt {6}-6-\epsilon ) \mathbf {n} $

. In particular,

$\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+(3\sqrt {6}-6-\epsilon ) \mathbf {n} $

. In particular,

$\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+1.32 \mathbf {n}+1$

when

$\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+1.32 \mathbf {n}+1$

when

$\mathbf {n}\geq 25$

.

$\mathbf {n}\geq 25$

.

Previously, only the near trivial result $\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+1$ was known, by [Reference Lickteig37, Rem. p175].
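The arithmetic in the table of Theorem 1.5(2) is easy to check mechanically. The sketch below transcribes each row as a triple (the names `TABLE` and `inconsistent_rows` are ours), confirms that every stated bound equals $\mathbf {n}^2$ plus the stated excess, and evaluates the limiting coefficient $3\sqrt {6}-6$ of $\mathbf {n}$ in Theorem 1.5(3) as $\epsilon $ tends to 0.

```python
from math import sqrt

# (n, stated lower bound, stated excess over n^2), from Theorem 1.5(2).
TABLE = [
    (4, 22, 6), (5, 32, 7), (6, 44, 8), (7, 58, 9), (8, 75, 11),
    (9, 93, 12), (10, 114, 14), (11, 136, 15), (12, 161, 17),
    (13, 187, 18), (14, 215, 19), (15, 246, 21), (16, 278, 22),
    (17, 312, 23), (18, 348, 24), (19, 387, 26), (20, 427, 27),
    (21, 470, 29), (22, 514, 30), (23, 561, 32), (24, 609, 33),
]

def inconsistent_rows(table):
    """Rows whose bound differs from n^2 + excess (expected: none)."""
    return [(n, bound, excess) for n, bound, excess in table
            if bound != n * n + excess]

# Coefficient of n in Theorem 1.5(3) in the limit epsilon -> 0.
asymptotic_slope = 3 * sqrt(6) - 6

print(inconsistent_rows(TABLE))    # []
print(round(asymptotic_slope, 4))  # 1.3485
# Gain of each table entry over the near trivial bound n^2 + 1:
print([(n, excess - 1) for n, _, excess in TABLE])
```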

The upper bound in (1) is due to Smirnov [Reference Smirnov38], who also proved $\underline {\mathbf {R}}(M_{\langle 244\rangle })\leq 24$ and $ \underline {\mathbf {R}}(M_{\langle 255\rangle })\leq 38$. When $\mathbf {n}$ is even, one has the upper bound $\underline {\mathbf {R}}(M_{\langle 2\mathbf {n}\mathbf {n}\rangle })\leq \frac 74\mathbf {n}^2$ by writing $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }=M_{\langle 222\rangle }\boxtimes M_{\langle 1\frac {\mathbf {n}}{2}\frac {\mathbf {n}}{2}\rangle }$, where $\boxtimes $ denotes Kronecker product of tensors; see, for example, [Reference Conner, Gesmundo, Landsberg and Ventura15].
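The arithmetic behind the bound for even $\mathbf {n}$ can be spelled out as follows, assuming three standard facts not restated in this section: border rank is submultiplicative under Kronecker product, $\underline {\mathbf {R}}(M_{\langle 222\rangle })=7$, and $M_{\langle 1\frac {\mathbf {n}}{2}\frac {\mathbf {n}}{2}\rangle }$ has border rank $\frac {\mathbf {n}^2}{4}$.

$$ \begin{align*} \underline{\mathbf{R}}(M_{\langle 2\mathbf{n}\mathbf{n}\rangle}) \leq \underline{\mathbf{R}}(M_{\langle 222\rangle})\cdot \underline{\mathbf{R}}(M_{\langle 1\frac{\mathbf{n}}{2}\frac{\mathbf{n}}{2}\rangle}) = 7\cdot \frac{\mathbf{n}^2}{4} = \frac 74\mathbf{n}^2. \end{align*} $$

For $\mathbf {n}=4$ this gives $28$, which is weaker than Smirnov's bound of $24$ quoted above and lies above the lower bound of $22$ from Theorem 1.5(2).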

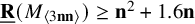

Theorem 1.6. For all

![]() $\mathbf {n}\ge 18$

,

$\mathbf {n}\ge 18$

,

![]() $\underline {\mathbf {R}}(M_{\langle 3\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+\sqrt {\frac 83} \mathbf {n}> \mathbf {n}^2+1.6\mathbf {n} $

.

$\underline {\mathbf {R}}(M_{\langle 3\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+\sqrt {\frac 83} \mathbf {n}> \mathbf {n}^2+1.6\mathbf {n} $

.

Previously, the only bound was the near trivial result that when $\mathbf {n}\geq 4$, $\underline {\mathbf {R}}(M_{\langle 3\mathbf {n}\mathbf {n}\rangle })\geq \mathbf {n}^2+2$ by [Reference Lickteig37, Rem. p175].

Using [Reference Lickteig37, Rem. p175], one obtains:

Corollary 1.7. For all $\mathbf {n} \ge 18$ and $\mathbf {m}\geq 3$, $\underline {\mathbf {R}}(M_{\langle \mathbf {m}\mathbf {n}\mathbf {n}\rangle })\ge \mathbf {n}^2+\sqrt {\frac 83} \mathbf {n} +\mathbf {m}-3$.

Theorems 1.5 and 1.6 are the first nontrivial border rank lower bounds for any tensor in $\mathbb C^{\mathbf {a}}{\mathord { \otimes } } \mathbb C^{\mathbf {b}}{\mathord { \otimes } } \mathbb C^{\mathbf {c}}$ when $\mathbf {c}>2\operatorname {max}\{ \mathbf {a},\mathbf {b}\}$ other than the above-mentioned near trivial result of Lickteig, vastly expanding the classes of tensors for which lower bound techniques exist.

1.3 What comes next?

1.3.1 Breaking the lower bound barriers

The geometric interpretation of the border rank lower bound barriers of [Reference Efremenko, Garg, Oliveira and Wigderson18, Reference Gałązka21] is that all equations obtained by taking minors, called rank methods, are actually equations for a larger variety than $\sigma _r(\operatorname {\mathrm {Seg}}(\mathbb P A\times \mathbb P B\times \mathbb P C))$, called the r-th cactus variety [Reference Buczyńska and Buczyński11]. This cactus variety agrees with the secant variety for $r<13$, but it quickly fills the ambient space of tensors in $\mathbb C^{\mathbf {m}}{\mathord { \otimes } }\mathbb C^{\mathbf {m}}{\mathord { \otimes } } \mathbb C^{\mathbf {m}}$ at the latest when $r=6\mathbf {m}-4$. Thus one cannot prove $\underline {\mathbf {R}}(T)>6\mathbf {m}-4$ for any tensor T via rank/determinantal methods, in particular, with border apolarity alone.

In brief, the r-th secant variety consists of points on limits of spans of zero-dimensional smooth schemes of length r. The r-th cactus variety consists of points on limits of spans of zero-dimensional schemes of length r.

The border apolarity algorithm produces ideals, and thus to break the barrier, one needs to distinguish ideals that are limits of smooth schemes from ideals that are limits of nonsmoothable schemes, and ideals that are not limits of any sequence of saturated ideals. In principle, this can be done using deformation theory (see, e.g., [Reference Hartshorne25]). This is exciting, as it is the first proposed path to overcoming the lower bound barriers.

Remark 1.8. After this paper was posted on arXiv, we went on to find an ideal passing all border apolarity tests for $M_{\langle 3\rangle }$ with $r=17$. We are currently working to effectively implement deformation theory to determine if such an example comes from an actual border rank $17$ decomposition. The obstruction to doing this is the effective implementation of the theory. The naïve implementation, even on a large computer cluster, is not feasible, and we are working to develop effective computational techniques.

1.3.2 Upper bounds, especially for tensors relevant for Strassen’s laser method

There is intense interest in tensors not subject to the upper bound barriers for Strassen’s laser method described in [Reference Ambainis, Filmus and Le Gall4, Reference Alman1, Reference Alman and Vassilevska Williams3, Reference Christandl, Vrana and Zuiddam13]. All tensors used in or proposed for the laser method have positive-dimensional symmetry groups, so the border apolarity method potentially may be applied. For example, the small Coppersmith–Winograd tensor $T_{cw,q}:=\sum _{j=1}^q a_0{\mathord { \otimes } } b_j{\mathord { \otimes } } c_j+ a_j{\mathord { \otimes } } b_0{\mathord { \otimes } } c_j+ a_j{\mathord { \otimes } } b_j{\mathord { \otimes } } c_0$ has a very large symmetry group, namely the orthogonal group $O(q)$ [Reference Conner, Gesmundo, Landsberg and Ventura14], which has dimension $\binom q2$. Since these tensors and their Kronecker squares tend to have border rank below the cactus barrier, we expect to be able to effectively apply the method as is to determine the border rank at least for small Kronecker powers. After this paper was posted on arXiv, border apolarity was utilized to determine the border rank of $T_{cw,2}^{\boxtimes 2}$ in [Reference Conner, Huang and Landsberg16] and the answer ended up being the known upper bound. We are developing techniques to write down usual border rank decompositions guided by the ideals produced by border apolarity to potentially determine new upper bounds for higher Kronecker powers of $T_{cw,2}$ and $\operatorname {det}_3$ (or to show that the known bounds are sharp). In other words, we are working to use border apolarity to inject some “science” into the “art” of finding upper bounds.
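To make the symmetry of the small Coppersmith–Winograd tensor concrete, here is a minimal Python sketch that builds $T_{cw,q}$ in coordinates and checks numerically that it is unchanged when one rotation from $O(q)$ acts simultaneously on the spans of $a_1,\dots ,a_q$, $b_1,\dots ,b_q$ and $c_1,\dots ,c_q$. The helper names `small_cw`, `act` and `rotation` are ours, and the check covers a single group element only, so it illustrates the invariance rather than proving it.

```python
import math

def small_cw(q):
    """Coordinate array of T_{cw,q}; index 0 stands for a_0, b_0, c_0."""
    n = q + 1
    T = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for j in range(1, q + 1):
        T[0][j][j] = 1.0  # a_0 (x) b_j (x) c_j
        T[j][0][j] = 1.0  # a_j (x) b_0 (x) c_j
        T[j][j][0] = 1.0  # a_j (x) b_j (x) c_0
    return T

def act(g, T):
    """Apply the same matrix g to all three tensor factors."""
    idx = range(len(T))
    return [[[sum(g[i][a] * g[j][b] * g[k][c] * T[a][b][c]
                  for a in idx for b in idx for c in idx)
              for k in idx] for j in idx] for i in idx]

def rotation(q, theta):
    """Element of O(q), q >= 2, rotating indices 1 and 2 and fixing index 0."""
    n = q + 1
    g = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    g[1][1], g[1][2] = math.cos(theta), -math.sin(theta)
    g[2][1], g[2][2] = math.sin(theta), math.cos(theta)
    return g

q = 3
T = small_cw(q)
moved = act(rotation(q, 0.7), T)
# Largest coordinate change; it should vanish up to floating point error.
max_change = max(abs(moved[i][j][k] - T[i][j][k])
                 for i in range(q + 1) for j in range(q + 1) for k in range(q + 1))
group_dimension = math.comb(q, 2)  # dimension of O(q)
print(max_change, group_dimension)
```

The invariance comes from the fact that each of the three summands contains a copy of the $O(q)$-invariant form $\sum _j x_j{\mathord { \otimes } } y_j$.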

1.3.3 Geometrization of the $(111)$ test for matrix multiplication

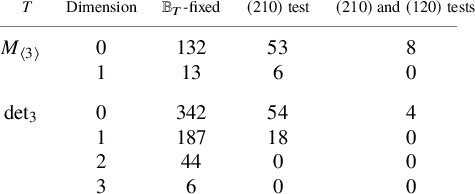

Our results for $M_{\langle 2\mathbf {n}\mathbf {n}\rangle },M_{\langle 3\mathbf {n}\mathbf {n}\rangle }$ for general $\mathbf {n}$ only use the $(210)$ and $(120)$ tests as defined in §3, and we expect to be able to prove stronger results for general $\mathbf {n}$ in these cases once we develop a proper geometric understanding of the $(111)$ test like we have for the $(210)$ test.

1.4 Overview

In §2, we review terminology regarding border rank decompositions of tensors, Borel subgroups and Borel fixed subspaces. We then describe a curve of multigraded ideals one may associate to a border rank decomposition. We also review Borel fixed subspaces and list them in the cases relevant for this paper. In §3, we describe the border apolarity algorithm and accompanying tests. In §4, we review the matrix multiplication tensor. In §5, we describe the computation to prove Theorems 1.1 and 1.2, which are computer assisted calculations, the code for which is available at github.com/adconner/chlbapolar. In §6, we discuss representation theory relevant for applying the border apolarity algorithm to matrix multiplication. In §7, we prove our localization and optimization algorithm and use it to prove Theorems 1.4, 1.5 and 1.6.

2 Preliminaries

2.1 Definitions/Notation

Throughout, $A,B,C,U,V,W$ will denote complex vector spaces, respectively, of dimensions $\mathbf {a},\mathbf {b},\mathbf {c},\mathbf {u},\mathbf {v},\mathbf {w}$. The dual space to A is denoted $A^*$. The space of symmetric degree d tensors is denoted $S^dA$, which may also be viewed as the space of degree d homogeneous polynomials on $A^*$. Set $\operatorname {Sym}(A):=\bigoplus _d S^dA$. The identity map is denoted $\operatorname {Id}_A\in A{\mathord { \otimes } } A^*$. For $X\subset A$, $X^{\perp }:=\{\alpha \in A^*\mid \alpha (x)=0, \forall x\in X\}$ is its annihilator, and $\langle X\rangle \subset A$ denotes the linear span of X. Projective space is $\mathbb P A= (A\backslash \{0\})/\mathbb C^*$, and if $x\in A\backslash \{0\}$, we let $[x]\in \mathbb P A$ denote the associated point in projective space (the line through x). The general linear group of invertible linear maps $A{\mathord {\;\rightarrow \;}} A$ is denoted $\operatorname {GL}(A)$ and the special linear group of determinant one linear maps is denoted $\operatorname {SL}(A)$. The permutation group on r elements is denoted $\mathfrak S_r$.

For a tensor $T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$, define its symmetry group

$$ G_T:=\{ g\in (\operatorname {GL}(A)\times \operatorname {GL}(B)\times \operatorname {GL}(C))/(\mathbb C^*)^{\times 2}\mid g\cdot T=T\}. $$

One quotients by $(\mathbb C^*)^{\times 2}:=\{(\lambda \operatorname {Id}_A,\mu \operatorname {Id}_B,\nu \operatorname {Id}_C)\mid \lambda \mu \nu =1\}$ because $(\nu \operatorname {Id}_A,\mu \operatorname {Id}_B,\frac 1{\nu \mu }\operatorname {Id}_C)$ is the kernel of the map $\operatorname {GL}(A)\times \operatorname {GL}(B)\times \operatorname {GL}(C){\mathord {\;\rightarrow \;}} \operatorname {GL}(A{\mathord { \otimes } } B{\mathord { \otimes } } C)$. Lie algebras of Lie groups are denoted with corresponding symbols in old German script, for example, ${\mathfrak g}_T$ is the Lie algebra corresponding to $G_T$.

The Grassmannian of r planes through the origin is denoted $G(r,A)$, which we will view in its Plücker embedding $G(r,A)\subset \mathbb P {\Lambda ^{r}} A$. Explicitly, given $E\in G(r,A)$, that is, a linear subspace $E\subset A$ of dimension r, if $e_1,\dots ,e_r$ is a basis of E, we represent E as a point in $\mathbb P({\Lambda ^{r}}A)$ by $[e_1\wedge \cdots \wedge e_r]$. Here, the wedge product is defined by $e_1\wedge \cdots \wedge e_r:=\sum _{\sigma \in \mathfrak S_r}\mathrm {{sgn}}(\sigma ) e_{\sigma (1)}{\mathord { \otimes } } \cdots {\mathord { \otimes } } e_{\sigma (r)}$.
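The definition of the wedge product as a signed sum over $\mathfrak S_r$ can be spelled out in coordinates. The following Python sketch (the names `sign` and `wedge` are ours, and tensors are stored as dictionaries from index tuples to coefficients purely for illustration) computes $e_1\wedge \cdots \wedge e_r$ directly from that formula and confirms that it vanishes for linearly dependent vectors.

```python
from itertools import permutations, product

def sign(perm):
    """Sign of a permutation given as a tuple, via its number of inversions."""
    inversions = sum(1 for i in range(len(perm))
                     for j in range(i + 1, len(perm)) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def wedge(vectors):
    """Coordinates of e_1 ^ ... ^ e_r as a dict {index tuple: coefficient}."""
    r, n = len(vectors), len(vectors[0])
    out = {}
    for perm in permutations(range(r)):
        s = sign(perm)
        for idx in product(range(n), repeat=r):
            # Coefficient of e_{perm(1)} (x) ... (x) e_{perm(r)} at position idx.
            coeff = s
            for slot, i in enumerate(idx):
                coeff *= vectors[perm[slot]][i]
            if coeff:
                out[idx] = out.get(idx, 0) + coeff
    # Drop entries that cancelled to zero.
    return {k: v for k, v in out.items() if v != 0}

basis_pair = wedge([[1, 0, 0], [0, 1, 0]])
dependent_pair = wedge([[1, 2, 0], [2, 4, 0]])
print(basis_pair, dependent_pair)
```

Rescaling or changing the basis of E only multiplies the result by a nonzero scalar, which is why the class $[e_1\wedge \cdots \wedge e_r]$ depends on E alone.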

For a set $Z\subset \mathbb P A$, $\overline {Z}\subset \mathbb P A$ denotes its Zariski closure, $\hat Z\subset A$ denotes the cone over Z union the origin, $I(Z)=I(\hat Z)\subset \operatorname {Sym}(A^*)$ denotes the ideal of Z, that is, $I(Z)=\{ P\in \operatorname {Sym}(A^*)\mid P(z)=0\ \forall z\in \hat Z\}$, and $\mathbb C[\hat Z]=\operatorname {Sym}(A^*)/I(Z)$ denotes the homogeneous coordinate ring of $\hat Z$. Both $I(Z)$ and $\mathbb C[\hat Z]$ are $\mathbb {Z}$-graded by degree.

We will be dealing with ideals on products of three projective spaces, that is, we will be dealing with polynomials that are homogeneous in three sets of variables, so our ideals will be $\mathbb {Z}^3$-graded. More precisely, we will study ideals $I\subset \operatorname {Sym}(A^*){\mathord { \otimes } } \operatorname {Sym}(B^*){\mathord { \otimes } } \operatorname {Sym}(C^*)$, and $I_{ijk}$ denotes the component in $S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$.

Given $T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$, we may consider it as a linear map $T_C: C^*{\mathord {\;\rightarrow \;}} A{\mathord { \otimes } } B$, and we let $T(C^*)\subset A{\mathord { \otimes } } B$ denote its image and similarly for permuted statements. A tensor T is concise if the maps $T_A,T_B,T_C$ are injective, that is, if it requires all basis vectors in each of $A,B,C$ to write down in any basis.
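Conciseness can be tested in coordinates: $T_A$ is injective exactly when the $\mathbf {a}\times \mathbf {b}\mathbf {c}$ flattening matrix has rank $\mathbf {a}$, and similarly for $T_B,T_C$. The Python sketch below does this with exact rational arithmetic; the function names and the nested-list encoding `T[i][j][k]` are our own choices for illustration.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    rk = 0
    for col in range(len(m[0])):
        pivot = next((i for i in range(rk, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[rk], m[pivot] = m[pivot], m[rk]
        for i in range(len(m)):
            if i != rk and m[i][col] != 0:
                factor = m[i][col] / m[rk][col]
                m[i] = [x - factor * y for x, y in zip(m[i], m[rk])]
        rk += 1
    return rk

def flattening_ranks(T):
    """Ranks of the three flattenings T_A, T_B, T_C of T[i][j][k]."""
    a, b, c = len(T), len(T[0]), len(T[0][0])
    flat_A = [[T[i][j][k] for j in range(b) for k in range(c)] for i in range(a)]
    flat_B = [[T[i][j][k] for i in range(a) for k in range(c)] for j in range(b)]
    flat_C = [[T[i][j][k] for i in range(a) for j in range(b)] for k in range(c)]
    return rank(flat_A), rank(flat_B), rank(flat_C)

def is_concise(T):
    """True when all three flattenings are injective."""
    return flattening_ranks(T) == (len(T), len(T[0]), len(T[0][0]))

# a1 b1 c2 + a1 b2 c1 + a2 b1 c1 in C^2 (x) C^2 (x) C^2 (index 0 <-> subscript 1).
W = [[[0, 1], [1, 0]], [[1, 0], [0, 0]]]
# a1 b1 c1 viewed in the same ambient space: it only uses one basis vector per factor.
rank_one = [[[1, 0], [0, 0]], [[0, 0], [0, 0]]]
print(is_concise(W), is_concise(rank_one))
```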

We remark that the tensor T may be recovered up to isomorphism from any of the spaces $T(A^*),T(B^*),T(C^*)$; see, for example, [Reference Landsberg and Michałek33].

Elements $P\in S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^k C^*$ may be viewed as differential operators on elements $X\in S^s A {\mathord { \otimes } } S^t B {\mathord { \otimes } } S^u C$. Write $P\,\lrcorner \,X$ for the contraction operation. The annihilator of X, denoted $\text {Ann}\,(X)$, is defined to be the ideal of all $P\in \operatorname {Sym}(A^*){\mathord { \otimes } } \operatorname {Sym}(B^*){\mathord { \otimes } } \operatorname {Sym}(C^*)$ such that $P\,\lrcorner \,X=0$. In the case that $X=T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$, its annihilator consists of all elements in degree $(i,j,k)$ with one of $i,j,k$ greater than one and the annihilators in low degrees are just the usual linear annihilators defined above. Explicitly, the annihilators in low degree are $T(C^*)^{\perp }\subset A^*{\mathord { \otimes } } B^*$, $T(B^*)^{\perp }\subset A^*{\mathord { \otimes } } C^*$, $T(A^*)^{\perp }\subset B^*{\mathord { \otimes } } C^*$ and $T^{\perp }\subset A^*{\mathord { \otimes } } B^*{\mathord { \otimes } } C^*$.

2.2 Border rank decompositions as curves in Grassmannians

A border rank r decomposition of a tensor T is normally viewed as a curve $T(t)=\sum _{j=1}^r T_j(t)$, where each $T_j(t)$ is rank one for all $t\neq 0$, and $\lim _{t{\mathord {\;\rightarrow \;}} 0}T(t)=T$. It will be useful to change perspective, viewing a border rank r decomposition of a tensor $T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$ as a curve $E_t\subset G(r,A{\mathord { \otimes } } B{\mathord { \otimes } } C)$ satisfying

- (i) for all $t\neq 0$, $E_t$ is spanned by r rank one tensors, and

- (ii) $T\in E_0$.

Example 2.1. The border rank decomposition

$$ a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+ a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1+a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1 =\lim _{t{\mathord{\;\rightarrow\;}} 0}\frac 1t\left [(a_1+ta_2){\mathord{ \otimes } } (b_1+tb_2){\mathord{ \otimes } } (c_1+tc_2)-a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1\right ] $$

may be rephrased as the curve

$$ \begin{align*} E_t &= [(a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1)\wedge (a_1+ta_2){\mathord{ \otimes } } (b_1+tb_2){\mathord{ \otimes } } (c_1+tc_2)] \\[2pt] &= \begin{aligned}[t] {[}(a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1)\wedge (&a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1+ t(a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+ a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1+a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1) \\[2pt] {}& +t^2(a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_2+ a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+a_2{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1) +t^3a_2{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_2) ] \end{aligned} \\[2pt] &= \begin{aligned}[t] {[}(a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1)\wedge ( & a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+ a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1+a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1 \\[2pt] {}&+t (a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_2+ a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+a_2{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1) +t^2a_2{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_2) ] \end{aligned} \\[2pt] &\subset G(2,A{\mathord{ \otimes } } B{\mathord{ \otimes } } C). \end{align*} $$

Here, $E_0=[(a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1)\wedge (a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+ a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1+a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1)]$.
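The curve in Example 2.1 can be checked numerically. The Python sketch below, written under our own coordinate conventions (index 0 stands for subscript 1, and the function names are hypothetical), forms the difference quotient of the two rank one tensors spanning $E_t$ and measures how far it is from $a_1{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_2+ a_1{\mathord{ \otimes } } b_2{\mathord{ \otimes } } c_1+a_2{\mathord{ \otimes } } b_1{\mathord{ \otimes } } c_1$, using exact fractions so that no rounding enters.

```python
from fractions import Fraction

def outer3(u, v, w):
    """Coordinates of the rank one tensor u (x) v (x) w."""
    return [[[x * y * z for z in w] for y in v] for x in u]

def approximant(t):
    """(1/t) * [(a1 + t a2) (x) (b1 + t b2) (x) (c1 + t c2) - a1 (x) b1 (x) c1]."""
    fixed = [Fraction(1), Fraction(0)]   # a1, b1, c1
    moving = [Fraction(1), t]            # a1 + t a2, and likewise for b, c
    first = outer3(moving, moving, moving)
    second = outer3(fixed, fixed, fixed)
    return [[[(first[i][j][k] - second[i][j][k]) / t
              for k in range(2)] for j in range(2)] for i in range(2)]

# Limit tensor a1 b1 c2 + a1 b2 c1 + a2 b1 c1.
W = [[[0, 1], [1, 0]], [[1, 0], [0, 0]]]

def error(t):
    """Largest coordinate of approximant(t) - W."""
    approx = approximant(t)
    return max(abs(approx[i][j][k] - W[i][j][k])
               for i in range(2) for j in range(2) for k in range(2))

for t in (Fraction(1, 10), Fraction(1, 1000)):
    print(t, error(t))
```

The error is of order t, matching the expansion of $E_t$ in powers of t displayed in the example.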

2.3 Multigraded ideal associated to a border rank decomposition

Given a border rank r decomposition $T=\lim _{t{\mathord {\;\rightarrow \;}} 0}\sum _{j=1}^r T_j(t)$, we have additional information. Let $I_t\subset \operatorname {Sym}(A^*){\mathord { \otimes } } \operatorname {Sym}(B^*){\mathord { \otimes } } \operatorname {Sym}(C^*)$ denote the $\mathbb {Z}^3$-graded ideal of the set of r distinct points $[T_1(t)]\cup \cdots \cup [T_r(t)]$, where $I_{ijk,t}\subset S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$. Sometimes, it is more convenient to work with $I_{ijk,t}^{\perp }$, which contains equivalent information.

Example 2.2. Consider the ideal of $[a_1{\mathord { \otimes } } b_1{\mathord { \otimes } } c_1]$. In degree $(ijk)$, we have $I_{ijk}=\langle \alpha ^M{\mathord { \otimes } } {\beta }^N{\mathord { \otimes } } \gamma ^P\rangle $, where $\alpha ^M=\alpha ^{m_1}\cdots \alpha ^{m_i}$ etc., and $M,N,P$ range over those triples where at least one of the indices appearing is not equal to $1$. Thus, $I_{ijk}^{\perp }=\langle a_1^i{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^k\rangle $.

When we take the ideal of the union of two points, the ideal is the intersection of the two ideals, and if the points are in general position, for example, $[a_1{\mathord { \otimes } } b_1{\mathord { \otimes } } c_1]\cup [a_2{\mathord { \otimes } } b_2{\mathord { \otimes } } c_2]$, in the notation above one of the indices appearing in $M,N,P$ must not be $1$ and one must not be $2$, so $I_{ijk}^{\perp }=\langle a_1^i{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^k, a_2^i{\mathord { \otimes } } b_2^j{\mathord { \otimes } } c_2^k\rangle $.

Thus, in Example 2.1 above, $I_{ijk,t}^{\perp }=\langle a_1^i{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^k, (a_1+ta_2)^i{\mathord { \otimes } } (b_1+tb_2)^j{\mathord { \otimes } } (c_1+tc_2)^k\rangle $, where the role of $a_2$ in Example 2.2 is played by $(a_1+ta_2)$ and similarly for $b_2,c_2$. As $t{\mathord {\;\rightarrow \;}} 0$, $I_{ijk,t}^{\perp }$ limits to $I_{ijk}^{\perp }=\langle a_1^i{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^k, ia_1^{i-1}a_2{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^k+ ja_1^i{\mathord { \otimes } } b_1^{j-1}b_2{\mathord { \otimes } } c_1^k+ k a_1^i{\mathord { \otimes } } b_1^j{\mathord { \otimes } } c_1^{k-1}c_2 \rangle $.

If the r points are in general position, then $\text {codim}(I_{ijk,t})=r$ as long as $r\leq \operatorname {dim} S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$; see, for example, [Reference Buczyńska and Buczyński11, Lemma 3.9].

Let $\mathbf {a}\leq \mathbf {b}\leq \mathbf {c}$. If $r\leq \binom {\mathbf {a}+1}2$, then for all $(ijk)$ with $i+j+k>1$, one has $r\leq \operatorname {dim} S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$.

In all the examples in this paper $r\leq \binom {\mathbf {a}+1}2$. For example, for $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$, $\binom {\mathbf {a}+1}2=2\mathbf {n}^2+\mathbf {n}$ and we prove border rank lower bounds like $\mathbf {n}^2+1.32\mathbf {n}$.
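The dimension count behind this assumption is easy to verify by machine. The Python sketch below (function names ours; the search is truncated at a finite degree, which is an assumption of the sketch, not of the paper) uses $\operatorname {dim} S^d\mathbb C^{\mathbf {n}}=\binom {\mathbf {n}+d-1}{d}$ and confirms that, among multidegrees with $i+j+k>1$, the smallest graded piece has dimension $\binom {\mathbf {a}+1}2$. For $M_{\langle 2\mathbf {n}\mathbf {n}\rangle }$ the three factors have dimensions $2\mathbf {n}$, $\mathbf {n}^2$, $2\mathbf {n}$, so $\mathbf {a}=2\mathbf {n}$ once $\mathbf {n}\geq 2$.

```python
from math import comb

def dim_sym(d, n):
    """Dimension of the d-th symmetric power of an n-dimensional space."""
    return comb(n + d - 1, d)

def dim_component(i, j, k, a, b, c):
    """Dimension of S^i A* (x) S^j B* (x) S^k C*."""
    return dim_sym(i, a) * dim_sym(j, b) * dim_sym(k, c)

def smallest_component(a, b, c, max_degree=4):
    """Minimum dimension over multidegrees with 1 < i + j + k <= max_degree."""
    return min(dim_component(i, j, k, a, b, c)
               for i in range(max_degree + 1)
               for j in range(max_degree + 1)
               for k in range(max_degree + 1)
               if 1 < i + j + k <= max_degree)

n = 5
a, b, c = sorted((2 * n, n * n, 2 * n))  # factor dimensions for M_<2,n,n>
print(smallest_component(a, b, c), comb(a + 1, 2), 2 * n * n + n)
```

The minimum is attained at $S^2A^*$, since $\mathbf {a}\mathbf {b}\geq \mathbf {a}^2\geq \binom {\mathbf {a}+1}2$ and symmetric powers only grow with the degree.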

Thus, in this paper we may and will assume $\text {codim} (I_{ijk})=r$ for all $(ijk)$ with $i+j+k>1$.

Thus, in addition to $E_0=I_{111,0}^{\perp }$ defined in §2.2, we obtain a limiting ideal I, where we define $I_{ijk}:=\lim _{t{\mathord {\;\rightarrow \;}} 0}I_{ijk,t}$ and the limit is taken in the Grassmannian of codimension r subspaces in $S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$,

$$ G(\operatorname {dim}(S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*)-r,\ S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*). $$

We remark that there are subtleties here: The limiting ideal may not be saturated. See [Reference Buczyńska and Buczyński11] for a discussion.

Thus, we may assume a multigraded ideal I coming from a border rank r decomposition of a concise tensor T satisfies the following conditions:

- (i) I is contained in the annihilator of T, which by definition says $I_{110}\subset T(C^*)^{\perp }$, $I_{101}\subset T(B^*)^{\perp } $, $I_{011}\subset T(A^*)^{\perp }$ and $I_{111}\subset T^{\perp }\subset A^*{\mathord { \otimes } } B^*{\mathord { \otimes } } C^*$.

- (ii) For all $(ijk)$ with $i+j+k>1$, $\text {codim} I_{ijk}=r$.

- (iii) I is an ideal, so the multiplication maps

  $$ \begin{align} I_{i-1,j,k}{\mathord{ \otimes } } A^*{\mathord{\,\oplus }\,} I_{i,j-1,k}{\mathord{ \otimes } } B^* {\mathord{\,\oplus }\,} I_{i,j,k-1}{\mathord{ \otimes } } C^*{\mathord{\;\rightarrow\;}} S^iA^*{\mathord{ \otimes } } S^jB^*{\mathord{ \otimes } } S^kC^* \tag{2} \end{align} $$

  have image contained in $I_{ijk}$.

Here, equation (2) is the sum of three maps, the first of which is the restriction of the symmetrization map $S^{i-1}A^*{\mathord { \otimes } } A^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^k C^*{\mathord {\;\rightarrow \;}} S^iA^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^k C^*$ to $I_{i-1,j,k}{\mathord { \otimes } } A^*$ and similarly for the others. When $i-1=0$, the first map is just the inclusion $I_{0jk}{\mathord { \otimes } } A^*\subset A^*{\mathord { \otimes } } S^jB^*{\mathord { \otimes } } S^kC^*$.

One may prove border rank lower bounds for T by showing that for a given r, no such $I$ exists. For arbitrary tensors, we do not see any effective way to prove this, but for tensors with a large symmetry group, we have a vast simplification of the problem as described in the next subsection.
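The simplest obstruction of this kind comes from conditions (i) and (ii) in degree $(110)$: since $I_{110}$ has codimension r and lies in $T(C^*)^{\perp }$, r is at least the rank of the flattening $C^*\rightarrow A{\mathord { \otimes } } B$. The following Python sketch computes $\dim T(C^*)^{\perp }$ for the $2\times 2$ matrix multiplication tensor. The helper names (`matmul_tensor`, `rank`, `annihilator_dim_110`) and the choice of basis ordering are assumptions made for illustration, not notation from the paper.

```python
from fractions import Fraction


def matmul_tensor(n):
    """Structure tensor of n x n matrix multiplication as a nested list.

    Assumed basis ordering: the matrix unit (i, j) has index i * n + j.
    """
    N = n * n
    T = [[[0] * N for _ in range(N)] for _ in range(N)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                T[i * n + j][j * n + k][k * n + i] = 1
    return T


def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination over Q."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0
    ncols = len(M[0]) if M else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][col] / M[r][col]
            if f != 0:
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


def annihilator_dim_110(T):
    """Dimension of T(C^*)^perp inside A^* (x) B^*."""
    a, b, c = len(T), len(T[0]), len(T[0][0])
    # One row per basis vector of C^*: the contraction of T, flattened in A (x) B.
    flattening = [[T[i][j][k] for i in range(a) for j in range(b)] for k in range(c)]
    return a * b - rank(flattening)
```

For `matmul_tensor(2)`, the space $A^*{\mathord { \otimes } } B^*$ has dimension 16 and the flattening has rank 4, so `annihilator_dim_110` returns 12. A subspace $I_{110}$ of codimension r fits inside the annihilator only if $16-r\leq 12$, that is, $r\geq 4$. This recovers only the trivial bound; the stronger bounds need the higher-degree conditions and the multiplication maps (2).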

2.4 Lie’s theorem and consequences

Lie’s theorem may be stated as: Let H be a connected solvable group and W an H-module. Then a closed H-fixed set $X\subset \mathbb P W$ contains an H-fixed point. Applying this fact to appropriately chosen sets X yields the Normal Form Lemma and its generalization, the Fixed Ideal Theorem.

Theorem 2.3 (Normal form lemma, tensor case [Reference Landsberg and Michałek34]).

Let $T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$, and let $H\subset G_T$ be a connected solvable group. If $\underline {\mathbf {R}}(T)\leq r$, then there exists $E_0\in G(r,A{\mathord { \otimes } } B{\mathord { \otimes } } C)$ corresponding to a border rank r decomposition of T as in §2.2 that is H-fixed, that is, $b\cdot E_0=E_0$ for all $b\in H$.

By the Normal Form Lemma, in order to prove $\underline {\mathbf {R}}(T)>r$, it is sufficient to rule out the existence of a border rank r decomposition $E_t$ where $E_0$ is an H-fixed point of $G(r, A{\mathord { \otimes } } B{\mathord { \otimes } } C)$.

Theorem 2.4 (Fixed Ideal Theorem, tensor case [Reference Buczyńska and Buczyński11]).

Let $T\in A{\mathord { \otimes } } B{\mathord { \otimes } } C$, and let $H\subset G_T$ be a connected solvable group. If $\underline {\mathbf {R}}(T)\leq r$, then there exists an ideal $I\subset \operatorname {Sym}(A^*){\mathord { \otimes } } \operatorname {Sym}(B^*){\mathord { \otimes } } \operatorname {Sym}(C^*)$ as in §2.3 corresponding to a border rank r decomposition of T that is H-fixed, that is, $b\cdot I_{ijk}=I_{ijk}$ for all $b\in H$ and all $(i,j,k)$.

The conclusions of the theorems above are stronger for larger groups of symmetries H, so in this paper we will always fix a Borel subgroup ${\mathbb B}_T \subset G_T$, that is, a maximal connected solvable subgroup of $G_T$. Thus, we may assume a multigraded ideal I coming from a border rank r decomposition of T satisfies the additional condition:

- (iv) Each $I_{ijk}$ is ${\mathbb B}_T$-fixed.

As we explain in the next subsection, for the instances considered in this paper, Borel fixed spaces are easy to list.

2.5 Borel fixed subspaces

All of the ${\mathbb B}_T$-modules for which we would like to study ${\mathbb B}_T$-fixed subspaces are also $G_T$-modules, where $G_T$ is reductive. This fact simplifies the description of ${\mathbb B}_T$-fixed subspaces, so we will assume this in what follows.

It will be convenient for us to linearize the problem by considering Lie algebras instead of Lie groups. Let ${\mathfrak g}_T$ be the Lie algebra of $G_T$, and let $\mathfrak b_T \subset {\mathfrak g}_T$ be the Lie algebra of ${\mathbb B}_T\subset G_T$. A subspace $S \subset M$ is ${\mathbb B}_T$-fixed if and only if it is $\mathfrak b_T$-fixed.
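As a toy illustration of the Lie algebra condition (not one of the modules studied in this paper), take $\mathfrak b\subset \mathfrak {gl}_n$ to be the upper triangular matrices acting on $\mathbb C^n$. Diagonal matrices preserve every subspace spanned by standard basis vectors, so such a subspace is $\mathfrak b$-fixed exactly when it is preserved by the strictly upper triangular matrix units. The Python sketch below enumerates these subspaces; the function names are hypothetical, and only subspaces spanned by standard basis vectors are searched.

```python
from itertools import combinations


def raising_operators(n):
    """Pairs (i, j) with i < j; the matrix unit E_ij sends e_j to e_i
    and kills every other standard basis vector."""
    return [(i, j) for i in range(n) for j in range(i + 1, n)]


def is_borel_fixed(J, n):
    """Check that span{e_j : j in J} is preserved by every E_ij with i < j."""
    return all(i in J for (i, j) in raising_operators(n) if j in J)


def borel_fixed_coordinate_subspaces(n):
    """List the index sets J whose coordinate subspace is fixed by the
    upper triangular matrices."""
    subsets = (
        frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)
    )
    return [sorted(J) for J in subsets if is_borel_fixed(J, n)]
```

For `n = 3`, the call `borel_fixed_coordinate_subspaces(3)` returns `[[], [0], [0, 1], [0, 1, 2]]`, that is, the zero subspace and the members of the standard flag. The check is linear in the generators of $\mathfrak b$, which is the practical advantage of passing from the group to its Lie algebra.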

2.5.1 Weights and weight diagrams

For more details on the material in this section, see any of [Reference Humphreys26, Reference Knapp28, Reference Fulton and Harris20, Reference Bourbaki10].

If one has a diagonalizable matrix, one may choose a basis of eigenvectors, each of which has an associated eigenvalue. If one has a space ${\mathfrak t}\subset \mathfrak {gl}_m$ of simultaneously diagonalizable matrices, one may choose a basis of simultaneous eigenvectors, say $e_1,\dots ,e_m$. Instead of considering the eigenvalues of each individual matrix, it is convenient to think of all the eigenvalues simultaneously as elements of ${\mathfrak t}^*$, and these generalized eigenvalues are called weights. Write the weights as $\mu _1,\dots ,\mu _m$. Then, given $X\in {\mathfrak t}$, one has $Xe_j=\mu _j(X)e_j$, where the number $\mu _j(X)$ is X’s usual eigenvalue for the eigenvector $e_j$. In this context, the eigenvectors are called weight vectors.

Since ${\mathfrak g}_T$ is reductive, there exists a maximal torus ${\mathfrak t} \subset {\mathfrak g}_T$, unique up to conjugation, and the choice of $\mathfrak b_T$ fixes a unique ${\mathfrak t} \subset \mathfrak b_T$. The maximal torus is an abelian subalgebra whose adjoint action on ${\mathfrak g}_T$ is simultaneously diagonalizable and whose weight zero space under this action is exactly ${\mathfrak t}$. That is, ${\mathfrak g}_T = {\mathfrak t}{\mathord {\,\oplus }\,} \bigoplus _{\alpha \ne 0} {\mathfrak g}_{\alpha }$, where ${\mathfrak g}_{\alpha }$ is the weight space under the adjoint action of ${\mathfrak t}$ corresponding to weight $\alpha $