Impact Statement

It is shown in this paper that industrial assets with small amounts of data can significantly improve the performance of their anomaly detection classifiers by collaborating with similar assets that have more data. The proposed technique enables collaborative learning via a hierarchical model of the asset fleet, in which the higher level distributions represent the general behavior of asset clusters and the individual asset level parameters are sampled from these higher level distributions.

1. Introduction

Modern industrial asset operations are monitored in real time using a plethora of embedded sensors. The availability of asset condition time series, combined with readily available computing power and communication technologies, has extensively automated industrial operations over the past decade (Xu et al., 2014; Gilchrist and Gilchrist, 2016).

Asset health management, in particular, has moved from physics-based formulations to machine learning (ML) techniques. As part of asset health management, detecting anomalies in an asset's condition data is critical for accurate prognosis. An ideal anomaly detection algorithm identifies deviations instantaneously, in real time, and activates the prognosis algorithm to plan timely maintenance. Accurate anomaly detection also enables efficient extraction of failure trajectories from historical condition data. Failure trajectories are the time series spanning from the asset's deviation from normal behavior until its failure. Since historical failure trajectories constitute the training dataset for prognosis, the learning capability of prognosis models depends primarily on accurate anomaly detection. Conversely, an inaccurate anomaly detection algorithm could let a failure go undetected, or flag many anomalies that turn out to be benign and require no intervention (Kang, 2018).

Most industries rely on rule-based systems for anomaly detection. These comprise preset warning and trip limits on the sensor measurements (Saxena et al., 2008; Zaidan et al., 2015). Force-tripping an asset often results in production losses, which could have been avoided had planned maintenance been carried out in good time. Moreover, warning-trip systems are inherently unresponsive to deviations within the limits. An asset, for example, could be operating well within the limits while already deviating from its normal behavior. Such a deviation would not be flagged by a warning-trip system until the sensor measurements exceed the preset limits, by which point it could already be too late and the opportunity for timely maintenance lost.

In scenarios where domain knowledge about the underlying distribution is available beforehand, statistical classifiers provide a justifiable solution for anomaly detection. Statistical classifiers posit that the condition monitoring data generated during normal asset operation can be described by underlying distributions. Assuming that an asset commences operation in normal condition, the underlying density function $ p\left(\mathbf{x}|\theta \right) $, $ \theta $ being its parameters, can be estimated to model that asset's normal operation data $ \mathbf{x} $. Developing anomalies change the system dynamics and cause the data to deviate from the estimated density function. Statistical tests are then used to evaluate whether a newly recorded data point differs significantly enough to be deemed anomalous (Rajabzadeh et al., 2016; Kang, 2018).

Statistical classifiers are among the recommended anomaly detection techniques in the recent literature on asset health management (Kang, 2018). Asset condition data are subject to intrinsic and extrinsic measurement errors caused by system instabilities and inefficiencies, even while the asset is operating in stable conditions. In most preliminary algorithm deployments and simulations, the combined random effect of errors and fluctuations in the sensor measurements has been treated as multivariate Gaussian (Kobayashi and Simon, 2005; Saxena et al., 2008; Borguet and Léonard, 2009).
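As a minimal sketch of such a statistical classifier, the code below assumes the multivariate Gaussian model and applies one common test (an illustrative choice, not necessarily the test used in the works cited above): a new point is flagged when its squared Mahalanobis distance from the mean exceeds the chi-square quantile with $ d $ degrees of freedom at a chosen significance level. The helper name `gaussian_anomaly_flags`, the significance level, and the sensor values are hypothetical. The example also illustrates the warning-trip limitation described earlier in this section.

```python
import numpy as np
from scipy.stats import chi2


def gaussian_anomaly_flags(x_new, mu, cov, alpha=0.001):
    """Flag rows of x_new whose squared Mahalanobis distance from N(mu, cov)
    exceeds the (1 - alpha) chi-square quantile with d degrees of freedom.

    Hypothetical helper: the test is exact when mu and cov are the true
    parameters and only approximate when they are estimated from data.
    """
    x_new = np.atleast_2d(x_new)
    diff = x_new - mu
    # Solve cov @ z = diff^T rather than explicitly inverting cov.
    d2 = np.einsum("nd,dn->n", diff, np.linalg.solve(cov, diff.T))
    threshold = chi2.ppf(1.0 - alpha, df=mu.shape[0])
    return d2 > threshold, d2


# Two strongly correlated sensors with unit variances (hypothetical values).
mu = np.zeros(2)
cov = np.array([[1.0, 0.9], [0.9, 1.0]])

# Both readings lie within per-sensor +/-3 sigma limits; the first one moves
# the two sensors in opposite directions, which breaks their correlation.
flags, d2 = gaussian_anomaly_flags(np.array([[2.0, -2.0], [1.0, 1.0]]), mu, cov)
print(flags, d2)
```

The reading (2, -2) stays inside ±3σ trip limits on each sensor, yet its squared Mahalanobis distance is about 80, far above the 99.9% chi-square threshold of about 13.8 for $ d=2 $, so it is flagged; the reading (1, 1) follows the correlation and is not.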

However, modeling each asset independently poses challenges, primarily that of distribution instability. Depending on the variance in the asset data, the estimated distribution parameters do not stabilize until a sufficient amount of data describing the asset's working regime has been obtained. Moreover, owing to the statistically heterogeneous nature of asset operations, collectively modeling fleet-wide data is challenging (Salvador Palau et al., 2019). These characteristics impede the application of statistical classifiers for detecting anomalies in the early periods of asset operation, when sufficient training data are not available. A systematic method is therefore needed for modeling the underlying clusters of similar assets and enabling the assets within each cluster to learn collaboratively from one another.
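A short numerical illustration of this instability, under assumed toy settings (a hypothetical 5-sensor asset whose true covariance is the identity; the seed is arbitrary): with $ {N}_i\le d $ observations the maximum likelihood covariance estimate is singular, so the Gaussian density cannot even be evaluated, and with somewhat more data the estimate is still noticeably off.

```python
import numpy as np


def mle_cov_rank_and_error(n, d=5, seed=0):
    """Draw n samples from N(0, I_d) and return the rank and Frobenius error
    of the maximum likelihood (1/N) covariance estimate."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n, d))            # true mean 0, true covariance I_d
    cov_hat = np.cov(x, rowvar=False, bias=True)
    rank = int(np.linalg.matrix_rank(cov_hat))
    error = float(np.linalg.norm(cov_hat - np.eye(d)))
    return rank, error


for n in (3, 5, 10, 100, 10_000):
    rank, error = mle_cov_rank_and_error(n)
    print(f"N={n:>6}  rank={rank}  error={error:.3f}")
```

Because the data are centered on their own sample mean, the estimate has rank at most $ {N}_i-1 $, so with $ d=5 $ it only becomes full rank from six observations onward; beyond that, the estimation error shrinks roughly in proportion to $ 1/\sqrt{N_i} $.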

This paper addresses the above problem by using a hierarchical model of the asset fleet that systematically identifies similar assets and formulates higher level distributions of the asset level parameters. Hierarchical models enable the individuals of a population comprising statistically coherent subpopulations to learn collaboratively from one another (Eckert et al., 2007; Teacy et al., 2012; Gelman et al., 2013; Hensman et al., 2013). The higher level distributions in this paper represent the general behavior of similar assets, and the individual asset behaviors are described by parameters sampled from the corresponding higher level distributions. Comprehensive information about hierarchical modeling can be found in Gelman et al. (2013) and Gelman and Hill (2006).

The remainder of this paper is structured as follows. Section 2 discusses the prevalent hierarchical modeling and collaborative anomaly detection techniques in the industrial health management literature. Section 3 then describes hierarchical modeling of an asset fleet, including the mathematical description for extending an asset's independent model to a hierarchical fleet-wide model containing clusters of similar assets. An example implementation of the hierarchical model for a simulated fleet of assets is shown in Section 4, which also compares the performance of the hierarchical model with the case where the asset parameters are estimated independently. The results of the experiments are discussed in Section 5. Finally, Sections 6 and 7 summarize the key conclusions and highlight future research directions, respectively.

2. Literature Review

This section discusses the prevalent applications of hierarchical modeling and automated anomaly detection in the context of industrial assets’ health management.

2.1. Hierarchical modeling of the industrial assets

Applied mathematicians have stressed the importance of understanding the heterogeneous nature of industrial assets since at least 1967. Lindley et al. (1967) proposed the use of a simple statistical trend test to quantify the evolving reliability of independent industrial assets. The underlying argument was that a single Poisson process model could not describe the times between failures occurring in multiple independent assets. Ascher (1983) further highlighted the importance of understanding inter-asset heterogeneity with an illustration of “happy,” “noncommittal,” and “sad” assets, corresponding to increasing, constant, and decreasing times between failures, respectively. Ascher (1983) showed that, by using the trend test proposed by Lindley et al. (1967) followed by a nonhomogeneous Poisson process model, independent industrial assets could be described significantly more accurately.

Multiple industrial assets are independent, but not identical in a statistical sense. Yet, for ease of modeling, they are often assumed to be independent and identically distributed (IID) (Arjas and Bhattacharjee, 2004). As modern industrial automation relies almost entirely on data-driven ML algorithms, such disregard for the statistically heterogeneous nature of industrial data poses an ever greater risk. Industrial automation, according to the notion of Industry 4.0, aims at end-to-end, hands-off collaborative control made possible by a series of decision-making algorithms (Gilchrist and Gilchrist, 2016). For example, a maintenance planning procedure broadly comprises anomaly detection, followed by failure prediction, then maintenance planning, and finally resource allocation. In such a serial dependency, inefficiencies or inaccuracies in the algorithm governing any one of these steps can easily propagate through the control pipeline and degrade the decisions of the algorithms in the subsequent steps.

Industrial asset fleets are in fact collections of individuals that are not identical, but similar. For example, automobiles may be manufactured differently, yet they all share similarities in their basic design (Chen and Singpurwalla, 1996). This characteristic makes hierarchical models a suitable solution for the statistical analysis of asset fleets. When modeling asset fleets, the collective behaviors of clusters of similar assets are described using higher level distributions, from which the parameters describing individual asset operations are sampled. In asset health management applications, researchers have proposed using hierarchical modeling to account for system heterogeneity. While most of these applications focus on describing times between failures, there are also some instances in the recent literature where condition-data-driven real-time prognosis is enhanced using hierarchical modeling.

One of the earliest applications used hierarchical Bayesian estimation of Bernoulli model parameters to estimate the reliability of emergency diesel generators in separate nuclear power plants (Chen and Singpurwalla, 1996). The authors showed that the hierarchical Bernoulli model was a better technique for simultaneously modeling the collective “composite” and individual reliabilities of the generators, compared with the prevalent approach of analyzing data from all generators as a single dataset. Most other applications in traditional survival analysis target modeling the times between failures, similar to the illustration described in Ascher (1983). For example, Arjas and Bhattacharjee (2004) used a hierarchical Poisson process model to describe the times between failures of closing valves in the safety systems of nuclear plants, modeling the median times between failures for a collection of valves experiencing different failure rates over a period of observation. An interesting application can also be found in Johnson et al. (2005), where hierarchical modeling was used to estimate the reliability of new spacecraft that had experienced no or few failures. Other similar applications include Economou et al. (2007), Dedecius and Ettler (2014), and Yuan and Ji (2015), all of which model the times between failures of various equipment.

Among the more recent but fewer condition-data-driven prognosis applications, Zaidan et al. (2015) demonstrated the benefits of hierarchical Bayesian modeling for inferring the deterioration pattern of gas turbines operating in various conditions. Their model inferred the health index regression pattern of several gas turbines with respect to operating time, and showed that hierarchical modeling is a statistically robust solution when learning the prediction function from data spanning a large fleet of machines. Kao and Chen (2012) used hierarchical Bayesian neural networks to predict the failure times of fatigue crack growth, where the focus was on quantifying the systemic heterogeneity across the assets rather than enhancing individual predictions.

2.2. Anomaly detection for industrial assets

Traditional applications of anomaly detection mostly target system diagnostics, involving fault identification and classification. However, with condition data readily available, online anomaly detection techniques have recently been gaining popularity.

Anomaly detection in industrial asset operations is challenging because the assets operate over a wide range of environments and operating regimes, and can fail in multiple modes (Khan and Madden, 2010; Michau and Fink, 2019). Every asset has its own unique behavior and failure tendency, and therefore requires an anomaly detector suited to its particular operations. Moreover, assets do not fail frequently, making the classifier's training data highly imbalanced toward the “normal operation” class. Researchers therefore often treat anomaly detection in asset operations as a one-class time series classification problem (Kang, 2018).

This paper focuses only on statistical classifiers, introduced in Section 1, because they are more straightforward to implement than more sophisticated algorithms such as deep learning. Such statistical classifiers have been proposed by several researchers for anomaly detection in gas turbine combustors, cooling fans, and general performance monitoring (Borguet and Léonard, 2009; Jin et al., 2012; Yan, 2016; Kang, 2018).

Interestingly, the literature presents examples where different degrees and forms of collaboration among assets have been shown to improve the performance of anomaly detectors. In the simplest form of collaboration, similar assets are manually identified by the operators based on predetermined indicators, and an overall model is trained using the data from all units as a single IID dataset. This type of collaboration can be found in Zio and Di Maio (2010), González-Prida et al. (2016), and Lapira and Lee (2012), where in every case the operators use a relevant parameter for clustering the corresponding assets. Some researchers have also clustered entire time series of condition monitoring data based on their Euclidean distances, as in Liu (2018), Leone et al. (2016), and Al-Dahidi et al. (2018). In a comparatively more complex collaborative approach, Michau et al. (2018) modeled the functional behavior of each unit using deep neural networks and identified similar units based on the amount of deviation in the neural network parameters. However, each of these applications has its own constraints: the lack of a complete representation in Zio and Di Maio (2010), González-Prida et al. (2016), and Lapira and Lee (2012); the dimensional complexity of evaluating Euclidean distances in Liu (2018), Leone et al. (2016), and Al-Dahidi et al. (2018); and the training data required by each unit to train the neural networks in Michau et al. (2018).

Among examples of collaborative anomaly detection solutions, the closest to the problem discussed in this paper can be found in Michau and Fink (2019). They stress the necessity of one-class classification for industrial systems owing to the wide range of possible operating regimes and the rarity of failures. They also focus on early-life monitoring, where a given asset does not have sufficient data to train a robust classifier, and propose that the asset rely on learning from other similar assets. However, their proposed solution relies on accumulating data from similar assets at a central location (or the target asset), and on augmenting the feature space to define a boundary for normal operation common to all similar assets. It must be noted that while the target problem is similar, Michau and Fink (2019) focus on feature alignment, whereas the current paper focuses on modeling an overall fleet behavior and adapting it to suit individual assets. As such, the solution proposed in this paper differs from theirs in three aspects. First, the proposed hierarchical model is capable of identifying the asset clusters in the fleet, whereas Michau and Fink (2019) assume that all assets within the fleet are similar, or that the similar assets are known beforehand. Second, this paper targets an earlier stage of operation than Michau and Fink (2019), where the assets described as new have 17,000 data points of 24-dimensional data. Finally, the hierarchical modeling presented here is a distributed learning technique and, more importantly, one that enables the assets to learn from each other's models rather than from their data.

In summary, anomaly detection in asset operations has become increasingly important in recent years due to widespread automation. Several researchers have shown that collaborative learning among assets can help improve the performance of fault classification models, albeit each approach with its own constraints. Anomaly detection is especially challenging during the early stages of asset operation, when sufficient data are not available to model the corresponding operating regimes. The authors believe that hierarchical modeling of the asset fleet addresses this challenge by enabling assets with insufficient data to collaborate with other similar assets that have more data. The literature also shows that hierarchical modeling is a reliable technique for modeling heterogeneity in an asset fleet but, to the best of the authors' knowledge, it has not yet been applied to data-driven anomaly detection for industrial assets.

3. Mathematical Description

3.1. Independent asset models

Consider a fleet comprising $ I $ assets. Any given asset $ i $ is monitored using $ d $ sensors measuring internal and external parameters such as temperature, vibration, pressure, and so on. Each measurement is a feature describing that asset's behavior, and thus the $ n $th set of measurements from the $ i $th asset can be represented as a vector $ {\mathbf{x}}_{i, n}\in {\mathbb{R}}^d $.

If $ {N}_i $ measurements are recorded from asset $ i $ over a given time period, then that asset's data can be represented as a matrix $ {\mathbf{X}}_i=\left[{\mathbf{x}}_{i,1},{\mathbf{x}}_{i,2},\dots, {\mathbf{x}}_{i,{N}_i}\right]\in {\mathbb{R}}^{d\times {N}_i} $.

Owing to the random nature of measurement noise, and assuming no manual interventions, the underlying distribution of an individual asset's data can be modeled as a multivariate Gaussian, $ {\mathbf{x}}_{i, n}\sim N\left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right) $, where $ {\boldsymbol{\mu}}_i\in {\mathbb{R}}^d $ is the mean vector and $ {\mathbf{C}}_i\in {\mathbb{R}}^{d\times d} $ is the covariance matrix:

$$ p\left({\mathbf{x}}_{i, n}|{\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right)=\frac{1}{\sqrt{{\left(2\pi \right)}^d\left|{\mathbf{C}}_i\right|}}\exp \left(-\frac{1}{2}{\left({\mathbf{x}}_{i, n}-{\boldsymbol{\mu}}_i\right)}^T{\mathbf{C}}_i^{-1}\left({\mathbf{x}}_{i, n}-{\boldsymbol{\mu}}_i\right)\right) $$

Maximum likelihood estimation can be used to evaluate $ {\hat{\boldsymbol{\mu}}}_i $ and $ {\hat{\mathbf{C}}}_i $ from $ {\mathbf{X}}_i $. A graphical representation of an isolated independent asset model is shown in Figure 1. The following section describes extending the independent asset model to a hierarchical model.
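For reference, the maximum likelihood estimates have the standard closed forms $ {\hat{\boldsymbol{\mu}}}_i=\frac{1}{N_i}{\sum}_{n=1}^{N_i}{\mathbf{x}}_{i,n} $ and $ {\hat{\mathbf{C}}}_i=\frac{1}{N_i}{\sum}_{n=1}^{N_i}\left({\mathbf{x}}_{i,n}-{\hat{\boldsymbol{\mu}}}_i\right){\left({\mathbf{x}}_{i,n}-{\hat{\boldsymbol{\mu}}}_i\right)}^T $. The sketch below, with hypothetical helper names and synthetic data, computes them for a $ d\times {N}_i $ matrix laid out like $ {\mathbf{X}}_i $ and evaluates the log of the Gaussian density above, cross-checking the result against SciPy.

```python
import numpy as np
from scipy.stats import multivariate_normal


def fit_gaussian_mle(X_i):
    """MLE of mean and covariance for a d x N_i matrix whose columns are x_{i,n}."""
    mu_hat = X_i.mean(axis=1)
    centered = X_i - mu_hat[:, None]
    C_hat = centered @ centered.T / X_i.shape[1]   # divides by N_i, not N_i - 1
    return mu_hat, C_hat


def gaussian_logpdf(x, mu, C):
    """Log of the multivariate Gaussian density p(x | mu, C)."""
    d = mu.shape[0]
    diff = x - mu
    _, logdet = np.linalg.slogdet(C)               # numerically stable log|C|
    maha = diff @ np.linalg.solve(C, diff)         # (x - mu)^T C^{-1} (x - mu)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)


# Synthetic asset with d = 3 sensors and N_i = 200 measurements (hypothetical).
rng = np.random.default_rng(1)
X_i = rng.normal(loc=[[20.0], [0.5], [3.0]], scale=1.0, size=(3, 200))
mu_hat, C_hat = fit_gaussian_mle(X_i)
x_new = X_i[:, 0]
print(gaussian_logpdf(x_new, mu_hat, C_hat))
print(multivariate_normal(mean=mu_hat, cov=C_hat).logpdf(x_new))  # should agree
```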

Figure 1. Graphical representation of modeling an asset’s data as multivariate Gaussian.

3.2. Hierarchical modeling

A fleet often comprises assets that are similar in their operational behavior. This could be because certain assets share the same base model, or because they operate in similar conditions (Jin et al., 2015; Leone et al., 2017). This gives rise to statistically homogeneous asset clusters within the fleet. The challenges related to distribution instability mentioned in Section 1 can be alleviated if the individuals comprising such a cluster are jointly modeled with a common underlying distribution over their individual distribution parameters.

The hierarchical model of the asset fleet formalizes this idea by defining distributions at two levels. The parameters describing the distributions of individual asset data are considered to be sampled from their corresponding higher level distributions. The higher level distributions are shared by the asset clusters, and therefore jointly represent the operating regimes of the assets comprising those clusters. The higher level distributions are chosen as the conjugate priors of the asset level distribution parameters. When an asset does not possess sufficient data, its estimated asset level parameters are weighted more heavily toward the higher level distribution. As more data are accumulated over time, however, the weight shifts toward the asset's own data, and the estimates eventually approach those of an independent model. This enables an asset with insufficient data in its early phase of operation to learn collaboratively from other similar assets that have more data.
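To make this weighting concrete, the sketch below uses the standard single-cluster Normal-Inverse-Wishart conjugate update (a textbook result, not necessarily the exact estimator of this paper, which is fitted by EM over several clusters): $ {\beta}_N=\beta +N $, $ {\mathbf{m}}_N=\left(\beta \mathbf{m}+N\bar{\mathbf{x}}\right)/\left(\beta +N\right) $, $ {\alpha}_N=\alpha +N $, and $ {\boldsymbol{\Lambda}}_N=\boldsymbol{\Lambda}+\mathbf{S}+\frac{\beta N}{\beta +N}\left(\bar{\mathbf{x}}-\mathbf{m}\right){\left(\bar{\mathbf{x}}-\mathbf{m}\right)}^T $, where $ \mathbf{S} $ is the scatter matrix. The posterior mean of $ {\boldsymbol{\mu}}_i $ puts weight $ N/\left(\beta +N\right) $ on the asset's own sample mean, so it moves from the cluster mean toward the asset's data as $ N $ grows. The name `niw_posterior` and all numerical values are hypothetical.

```python
import numpy as np


def niw_posterior(X, m, beta, Lam, alpha):
    """Standard conjugate update of a Normal-Inverse-Wishart prior.

    X: (N, d) observations of one asset; (m, beta, Lam, alpha): prior parameters.
    Returns the posterior parameters (m_N, beta_N, Lam_N, alpha_N).
    """
    n = X.shape[0]
    if n == 0:
        return m, beta, Lam, alpha             # no data: posterior equals prior
    xbar = X.mean(axis=0)
    S = (X - xbar).T @ (X - xbar)              # scatter matrix
    beta_n = beta + n
    m_n = (beta * m + n * xbar) / beta_n       # shrinks the sample mean toward m
    dev = (xbar - m)[:, None]
    Lam_n = Lam + S + (beta * n / beta_n) * (dev @ dev.T)
    return m_n, beta_n, Lam_n, alpha + n


rng = np.random.default_rng(2)
d = 2
m_k, beta_k = np.zeros(d), 10.0                # cluster-level prior (hypothetical)
Lam_k, alpha_k = 5.0 * np.eye(d), 7.0
asset_mean = np.array([1.0, -1.0])             # this asset's true mean (hypothetical)
data = rng.normal(asset_mean, 1.0, size=(5000, d))

for n in (0, 5, 50, 5000):
    m_n, beta_n, Lam_n, alpha_n = niw_posterior(data[:n], m_k, beta_k, Lam_k, alpha_k)
    print(n, np.round(m_n, 3), "weight on own data:", round(n / beta_n, 3))
```

With $ \beta =10 $, the weight on the asset's own data grows from 0 (no data) to 1/3 after 5 observations, about 0.83 after 50, and about 0.998 after 5000, mirroring the shift from cluster-level to asset-level behavior described above.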

For asset fleets, the Normal-Inverse-Wishart distribution is chosen as the higher level distribution, since it is the natural conjugate prior of a multivariate Gaussian with unknown mean and covariance. Concretely, the parameters $ \left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right) $ describing the $ i $th asset are assumed to be sampled from the higher level distributions as $ {\boldsymbol{\mu}}_i\sim N\left({\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right) $ and $ {\mathbf{C}}_i\sim IW\left({\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $, where $ k=1,2,\dots, K $ is the cluster index and $ \left({\mathbf{m}}_k\in {\mathbb{R}}^d,{\beta}_k\in \mathbb{R},{\boldsymbol{\Lambda}}_k\in {\mathbb{R}}^{d\times d},{\alpha}_k\in \mathbb{R}\right) $ are the parameters of the cluster level distributions:

$$ p\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k,{\mathbf{C}}_i\right)= N\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right)=\sqrt{\frac{\beta_k^d}{{\left(2\pi \right)}^d\left|{\mathbf{C}}_i\right|}}\exp \left(-\frac{\beta_k}{2}{\left({\boldsymbol{\mu}}_i-{\mathbf{m}}_k\right)}^T{\mathbf{C}}_i^{-1}\left({\boldsymbol{\mu}}_i-{\mathbf{m}}_k\right)\right) $$

$$ p\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right)= IW\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right)=\frac{{\left|{\boldsymbol{\Lambda}}_k\right|}^{\alpha_k/2}}{2^{\alpha_k d/2}{\Gamma}_d\left(\frac{\alpha_k}{2}\right)}{\left|{\mathbf{C}}_i\right|}^{-\left({\alpha}_k+d+1\right)/2}\exp \left(-\frac{1}{2} Tr\left({\boldsymbol{\Lambda}}_k{\mathbf{C}}_i^{-1}\right)\right) $$

where $ {\Gamma}_d $ is the $ d $-dimensional multivariate Gamma function, and $ Tr\left(\cdot \right) $ is the trace function.
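The two cluster level densities can be checked numerically. The sketch below writes out $ \log N\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right)+\log IW\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $ term by term and compares it with SciPy's `multivariate_normal` and `invwishart`, whose inverse Wishart density uses the same (scale, degrees of freedom) parameterization as $ \left({\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $ here. The function name `niw_logpdf` and the parameter values are hypothetical.

```python
import numpy as np
from scipy.special import multigammaln
from scipy.stats import invwishart, multivariate_normal


def niw_logpdf(mu, C, m, beta, Lam, alpha):
    """log N(mu | m, C / beta) + log IW(C | Lam, alpha), written out term by term."""
    d = mu.shape[0]
    _, logdet_C = np.linalg.slogdet(C)
    _, logdet_Lam = np.linalg.slogdet(Lam)
    diff = mu - m
    # Normal term: covariance C / beta has determinant |C| / beta^d.
    log_normal = (0.5 * d * np.log(beta) - 0.5 * d * np.log(2.0 * np.pi)
                  - 0.5 * logdet_C - 0.5 * beta * diff @ np.linalg.solve(C, diff))
    # Inverse Wishart term, with multigammaln giving log Gamma_d(alpha / 2).
    log_iw = (0.5 * alpha * logdet_Lam - 0.5 * alpha * d * np.log(2.0)
              - multigammaln(0.5 * alpha, d)
              - 0.5 * (alpha + d + 1) * logdet_C
              - 0.5 * np.trace(Lam @ np.linalg.inv(C)))
    return log_normal + log_iw


# Hypothetical cluster parameters and asset parameters, d = 2.
m, beta = np.array([1.0, 2.0]), 4.0
Lam, alpha = np.array([[2.0, 0.3], [0.3, 1.0]]), 5.0
mu = np.array([1.2, 1.7])
C = np.array([[0.8, 0.1], [0.1, 0.5]])

ours = niw_logpdf(mu, C, m, beta, Lam, alpha)
ref = (multivariate_normal(mean=m, cov=C / beta).logpdf(mu)
       + invwishart(df=alpha, scale=Lam).logpdf(C))
print(ours, ref)  # the two values should agree
```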

At the higher level, therefore, lies a mixture of Normal-Inverse-Wishart distributions from which the pairs $ \left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right) $ are sampled. The probability density function of a given $ \left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right) $ pair conditional on the higher level parameters can therefore be written as:

$$ p\left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i|{\left\{{\pi}_k,{\mathbf{m}}_k,{\beta}_k,{\boldsymbol{\Lambda}}_k,{\alpha}_k\right\}}_{k=1}^K\right)=\sum \limits_{k=1}^K{\pi}_k N\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right) IW\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $$

where $ {\pi}_k\in \mathbb{R} $, with $ {\sum}_{k=1}^K{\pi}_k=1 $, is the proportion of assets belonging to the $ k $th cluster. The data of each individual asset are in turn sampled from its $ \left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right) $ pair.
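The generative process just described can be sketched as ancestral sampling: draw a cluster $ k $ with probability $ {\pi}_k $, then $ {\mathbf{C}}_i $ from the inverse Wishart, then $ {\boldsymbol{\mu}}_i $ given $ {\mathbf{C}}_i $, and finally the asset's data. This is a minimal illustration assuming NumPy and SciPy; the function `sample_fleet`, the cluster values, and the per-asset sample sizes (including two data-poor assets) are hypothetical and not taken from the paper's experiments.

```python
import numpy as np
from scipy.stats import invwishart


def sample_fleet(pi, clusters, n_obs, seed=0):
    """Draw a synthetic fleet from the two-level model.

    pi: cluster proportions; clusters: list of (m_k, beta_k, Lam_k, alpha_k);
    n_obs: number of measurements N_i for each asset.
    Returns cluster labels, per-asset (mu_i, C_i), and per-asset (N_i, d) data.
    """
    rng = np.random.default_rng(seed)
    labels, params, data = [], [], []
    for n_i in n_obs:
        k = int(rng.choice(len(pi), p=pi))                 # cluster of this asset
        m_k, beta_k, Lam_k, alpha_k = clusters[k]
        C_i = invwishart(df=alpha_k, scale=Lam_k).rvs(random_state=rng)
        mu_i = rng.multivariate_normal(m_k, C_i / beta_k)  # mu_i ~ N(m_k, C_i / beta_k)
        X_i = rng.multivariate_normal(mu_i, C_i, size=n_i)  # x_{i,n} ~ N(mu_i, C_i)
        labels.append(k)
        params.append((mu_i, C_i))
        data.append(X_i)
    return labels, params, data


# Two hypothetical clusters in d = 2; the last two assets have little data.
clusters = [
    (np.array([0.0, 0.0]), 5.0, 3.0 * np.eye(2), 5.0),
    (np.array([8.0, -4.0]), 5.0, 0.6 * np.eye(2), 5.0),
]
labels, params, data = sample_fleet([0.7, 0.3], clusters, n_obs=[500, 500, 20, 5])
print(labels, [X.shape for X in data])
```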

Therefore, the probability density function of the complete data of asset $ i $ is:

$$ p\left({\mathbf{x}}_{i,1},{\mathbf{x}}_{i,2},\dots, {\mathbf{x}}_{i,{N}_i}\right)=\left[\prod \limits_{n=1}^{N_i} N\left({\mathbf{x}}_{i,n}|{\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right)\right]\sum \limits_{k=1}^K{\pi}_k N\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right) IW\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $$

and the probability density function of the entire fleet data across all assets (represented by $ \mathbf{X} $) is:

$$ p\left(\mathbf{X}\right)=\prod \limits_{i=1}^I\left[\left(\prod \limits_{n=1}^{N_i} N\left({\mathbf{x}}_{i,n}|{\boldsymbol{\mu}}_i,{\mathbf{C}}_i\right)\right)\sum \limits_{k=1}^K{\pi}_k N\left({\boldsymbol{\mu}}_i|{\mathbf{m}}_k,{\beta}_k^{-1}{\mathbf{C}}_i\right) IW\left({\mathbf{C}}_i|{\boldsymbol{\Lambda}}_k,{\alpha}_k\right)\right] $$

For a given set of parameters $ \left({\boldsymbol{\mu}}_i,{\mathbf{C}}_i,{\pi}_k,{\mathbf{m}}_k,{\beta}_k,{\boldsymbol{\Lambda}}_k,{\alpha}_k\right) $, the above function is also the likelihood of the data. Obtaining estimates of these parameters therefore requires maximizing the log of the above probability function with respect to the parameters. The log-likelihood objective function of the entire dataset for given parameter values is:

$$ \log p\left(\mathbf{X}\right)=\sum_{i=1}^{I}\sum_{n=1}^{N_i}\log N\left(\mathbf{x}_{i,n}|\boldsymbol{\mu}_i,\mathbf{C}_i\right)+\sum_{i=1}^{I}\log\left(\sum_{k=1}^{K}\pi_k N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right)\right) $$

However, because the summation $ \sum_{k=1}^K $ appears inside the $ \log(\cdot) $ in the second term, setting the partial derivatives to zero does not yield closed-form estimates: the unknown parameters would appear on both the left- and right-hand sides of the resulting equations. The next section explains an iterative expectation maximization (EM) algorithm that solves this problem.
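To make Equation (7) concrete, the sketch below evaluates the fleet log-likelihood with NumPy. It assumes the common parameterization of $ IW(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k) $ with scale matrix $ \boldsymbol{\Lambda}_k $ and $ \alpha_k $ degrees of freedom, whose $ \alpha_k $-dependent terms match line 28 of Algorithm 1; all function names are hypothetical. The sum over $ k $ inside the logarithm is computed with the log-sum-exp trick to avoid numerical underflow. This only evaluates Equation (7); it does not remove the difficulty of maximizing it in closed form.

```python
import math
import numpy as np


def log_gauss(x, mean, cov):
    """Log density of N(x | mean, cov) for each row of x (or a single vector)."""
    d = mean.shape[0]
    diff = np.atleast_2d(x) - mean                  # one row per point
    chol = np.linalg.cholesky(cov)                  # cov = chol @ chol.T
    sol = np.linalg.solve(chol, diff.T)             # chol^{-1} (x - mean)
    maha = np.sum(sol ** 2, axis=0)                 # squared Mahalanobis distances
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (d * math.log(2.0 * math.pi) + logdet + maha)


def log_multigamma(a, d):
    """log Gamma_d(a), the log of the multivariate gamma function."""
    return (d * (d - 1) / 4.0) * math.log(math.pi) + sum(
        math.lgamma(a + (1 - j) / 2.0) for j in range(1, d + 1))


def log_inv_wishart(C, Lam, alpha):
    """Log density of IW(C | Lam, alpha): scale matrix Lam, alpha degrees of freedom."""
    d = C.shape[0]
    logdet_C = np.linalg.slogdet(C)[1]
    logdet_L = np.linalg.slogdet(Lam)[1]
    trace_term = np.trace(Lam @ np.linalg.inv(C))
    return (0.5 * alpha * logdet_L - 0.5 * alpha * d * math.log(2.0)
            - log_multigamma(alpha / 2.0, d)
            - 0.5 * (alpha + d + 1) * logdet_C - 0.5 * trace_term)


def asset_log_likelihood(X_i, mu_i, C_i, pi, m, beta, Lam, alpha):
    """Contribution of one asset to Equation (7)."""
    data_term = float(np.sum(log_gauss(X_i, mu_i, C_i)))
    # log[pi_k N(mu_i | m_k, C_i / beta_k) IW(C_i | Lam_k, alpha_k)] for each cluster k
    log_terms = np.array([
        math.log(pi[k])
        + log_gauss(mu_i, m[k], C_i / beta[k])[0]
        + log_inv_wishart(C_i, Lam[k], alpha[k])
        for k in range(len(pi))
    ])
    # The sum over k sits inside the log: use log-sum-exp for stability.
    top = np.max(log_terms)
    prior_term = top + math.log(np.sum(np.exp(log_terms - top)))
    return data_term + prior_term


def fleet_log_likelihood(X, mu, C, pi, m, beta, Lam, alpha):
    """Equation (7): sum of the per-asset contributions."""
    return sum(asset_log_likelihood(X[i], mu[i], C[i], pi, m, beta, Lam, alpha)
               for i in range(len(X)))
```

For $ d = 1 $, `log_inv_wishart` reduces to an inverse-gamma log density with shape $ \alpha/2 $ and scale $ \Lambda/2 $, which is a convenient check of the parameterization.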

3.2.1. Model parameter estimation

Maximizing the log-likelihood in Equation (7) is difficult specifically because the clusters within the fleet and their constituent assets are not predetermined. The data are therefore in a sense incomplete.

A latent (hidden) binary variable matrix $ \mathbf{z} \in \{0,1\}^{I\times K} $ is introduced to complete the data, such that $ \mathbf{z}_{i,k}=1 $ if the $ i $th asset belongs to the $ k $th cluster and $ \mathbf{z}_{i,k}=0 $ otherwise. For a given asset $ i $ and set of distribution parameters, the probability of $ \mathbf{z}_{i,k}=1 $ is therefore given by

$$ p\left(\mathbf{z}_{i,k}=1|\boldsymbol{\theta}\right)=\pi_k $$

Evaluating this across all values of $ k $, the probability of the $ i $th row vector $ \mathbf{z}_i $ of $ \mathbf{z} $ is

$$ p\left(\mathbf{z}_i|\boldsymbol{\theta}\right)=\prod_{k=1}^{K}\left[\pi_k\right]^{\mathbf{z}_{i,k}} $$

where $ \boldsymbol{\theta} $ represents the set of parameters $ \left(\mathbf{m}_k,\beta_k,\boldsymbol{\Lambda}_k,\alpha_k,\pi_k\right) $.

Moreover, the probability of $ \left(\boldsymbol{\mu}_i,\mathbf{C}_i\right) $ conditioned on $ \mathbf{z}_{i,k}=1 $ is

$$ p\left(\boldsymbol{\mu}_i,\mathbf{C}_i|\mathbf{z}_{i,k}=1,\boldsymbol{\theta}\right)=N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right) $$

Evaluated across all values of $ k $, this becomes

$$ p\left(\boldsymbol{\mu}_i,\mathbf{C}_i|\mathbf{z}_i,\boldsymbol{\theta}\right)=\prod_{k=1}^{K}\left[N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right)\right]^{\mathbf{z}_{i,k}} $$

The joint probability of $ \left(\boldsymbol{\mu}_i,\mathbf{C}_i,\mathbf{z}_i\right) $ can therefore be obtained simply by multiplying Equations (9) and (11):

$$ p\left(\boldsymbol{\mu}_i,\mathbf{C}_i,\mathbf{z}_i|\boldsymbol{\theta}\right)=\prod_{k=1}^{K}\left[\pi_k N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right)\right]^{\mathbf{z}_{i,k}} $$

Continuing as in Equations (5) and (6), the complete data probability for a given set of parameters $ \boldsymbol{\theta} $ is

$$ p\left(\mathbf{X},\mathbf{z}|\boldsymbol{\theta}\right)=\prod_{i=1}^{I}\left[\left(\prod_{n=1}^{N_i} N\left(\mathbf{x}_{i,n}|\boldsymbol{\mu}_i,\mathbf{C}_i\right)\right)\prod_{k=1}^{K}\left[\pi_k N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right)\right]^{\mathbf{z}_{i,k}}\right] $$

The graphical representation in Figure 2 describes the hierarchical model of the whole fleet data, including the hidden cluster indicator $ \mathbf{z} $.

Figure 2. Graphical representation of hierarchically modeled fleet data. Individual asset data are modeled as multivariate Gaussians, whose mean and covariance parameters are sampled from the Normal and Inverse Wishart components, respectively, of higher level Normal-Inverse Wishart distributions.

The complete data log-likelihood for a given set of parameters $ \boldsymbol{\theta} $ is thus

$$ \log p\left(\mathbf{X},\mathbf{z}|\boldsymbol{\theta}\right)=\sum_{i=1}^{I}\sum_{n=1}^{N_i}\log N\left(\mathbf{x}_{i,n}|\boldsymbol{\mu}_i,\mathbf{C}_i\right)+\sum_{i=1}^{I}\sum_{k=1}^{K}\mathbf{z}_{i,k}\log\left(\pi_k N\left(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i\right) IW\left(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k\right)\right) $$

To maximize the complete data log-likelihood in Equation (14), it must be differentiated with respect to each parameter and the derivatives set to zero. However, the values of $ \mathbf{z}_{i,k} $ are unknown, so the resulting equations cannot be solved directly.

The EM algorithm addresses this parameter estimation problem by iterating through two steps, the expectation (E) step and the maximization (M) step, which are explained below. As before, $ \boldsymbol{\theta} $ denotes the model parameters, and the parameters at the $ t $th iteration are written as $ \boldsymbol{\theta}^t $.

In the E-step, a function $ Q\left(\boldsymbol{\theta},\boldsymbol{\theta}^t\right) $ is computed, which is the expectation of the complete data log-likelihood with respect to the distribution of the hidden variable $ \mathbf{z} $ conditioned on the incomplete data $ \mathbf{X} $ and the parameter values $ \boldsymbol{\theta}^t $. Concretely,

$$ Q\left(\boldsymbol{\theta},\boldsymbol{\theta}^t\right)=\mathbb{E}_{\mathbf{z}|\mathbf{X},\boldsymbol{\theta}^t}\left[\log p\left(\mathbf{X},\mathbf{z}|\boldsymbol{\theta}\right)\right] $$

Because Equation (14) is linear in the $ \mathbf{z}_{i,k} $, this amounts to replacing the $ \mathbf{z} $ terms by their expected values given the incomplete data $ \mathbf{X} $ and the parameter values $ \boldsymbol{\theta}^t $; the remaining terms in $ Q\left(\boldsymbol{\theta},\boldsymbol{\theta}^t\right) $ depend on $ \boldsymbol{\theta} $.
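A minimal sketch of the E-step follows, assuming the per-asset, per-cluster log terms $ \log\left[\pi_k N(\boldsymbol{\mu}_i|\mathbf{m}_k,\beta_k^{-1}\mathbf{C}_i) IW(\mathbf{C}_i|\boldsymbol{\Lambda}_k,\alpha_k)\right] $ have already been evaluated at $ \boldsymbol{\theta}^t $ and stored in an $ I\times K $ array; the function name `e_step` is hypothetical. The data term $ \sum_n \log N(\mathbf{x}_{i,n}|\boldsymbol{\mu}_i,\mathbf{C}_i) $ does not depend on $ k $, so it cancels when the expected $ \mathbf{z}_{i,k} $ values are normalized over clusters.

```python
import numpy as np


def e_step(log_joint):
    """Responsibilities gamma[i, k], the expected z_ik under theta^t.

    log_joint[i, k] is assumed to hold
        log pi_k + log N(mu_i | m_k, C_i / beta_k) + log IW(C_i | Lam_k, alpha_k)
    at the current parameters. The data term sum_n log N(x_in | mu_i, C_i)
    is identical for every k, so it cancels in the normalization below.
    """
    log_joint = np.asarray(log_joint, dtype=float)
    shifted = log_joint - log_joint.max(axis=1, keepdims=True)  # guards against underflow
    weights = np.exp(shifted)
    return weights / weights.sum(axis=1, keepdims=True)
```

For example, with two clusters whose log terms differ by $ \log 3 $, the responsibilities are 0.75 and 0.25.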

In the M-step, the parameter values $ \boldsymbol{\theta}^{t+1} $ for the next, $ (t+1) $th, iteration are obtained by maximizing $ Q\left(\boldsymbol{\theta},\boldsymbol{\theta}^t\right) $ over $ \boldsymbol{\theta} $ while treating the expected $ \mathbf{z} $ terms as constants, that is, $ \boldsymbol{\theta}^{t+1}=\arg\max_{\boldsymbol{\theta}} Q\left(\boldsymbol{\theta},\boldsymbol{\theta}^t\right) $.

The estimated values of the model parameters at the M-step of every EM iteration are given in Equations (17)–(22), where the $ \boldsymbol{\gamma}_{i,k} $ terms are the expected $ \mathbf{z}_{i,k} $ values from the preceding E-step. The estimates of $ \alpha_k $ at the M-steps can be obtained using any nonlinear optimization routine. Derivations of the E- and M-steps for our application are given in Appendix A.

$$ \frac{1}{\hat{\beta}_k}=\frac{\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)^T\mathbf{C}_i^{-1}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)}{d\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}} $$

$$ \hat{\mathbf{m}}_k=\left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\right]^{-1}\left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\boldsymbol{\mu}_i\right] $$

$$ \hat{\boldsymbol{\Lambda}}_k=\left[\alpha_k\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\right]\left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\right]^{-1} $$

$$ \hat{\boldsymbol{\mu}}_i=\frac{1}{N_i+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}}\left[\sum_{n=1}^{N_i}\mathbf{x}_{i,n}+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}\mathbf{m}_k\right] $$

$$ \hat{\mathbf{C}}_i=\frac{\sum_{n=1}^{N_i}\left(\mathbf{x}_{i,n}-\boldsymbol{\mu}_i\right)\left(\mathbf{x}_{i,n}-\boldsymbol{\mu}_i\right)^T+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)^T+\sum_{k=1}^{K}\boldsymbol{\gamma}_{i,k}\boldsymbol{\Lambda}_k}{N_i+\sum_{k=1}^{K}\boldsymbol{\gamma}_{i,k}\alpha_k+d+2} $$

Parameters for the zeroth iteration are randomly initialized, and the estimates are considered to have converged when their values remain consistent over consecutive iterations or when the complete data log-likelihood in Equation (14) ceases to increase with further iterations.
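The M-step updates in Equations (17)–(21) vectorize naturally. The sketch below is one possible NumPy translation, assuming arrays shaped as stated in the docstring; the function name is hypothetical, the $ \pi_k $ update follows line 27 of Algorithm 1, and, as in the text, the right-hand sides use the previous iteration's values except for $ \boldsymbol{\gamma}_{i,k} $. The $ \alpha_k $ update is left to a separate one-dimensional optimization.

```python
import numpy as np


def m_step(X, mu, C, gamma, m, beta, Lam, alpha):
    """One M-step following Equations (17)-(21) and line 27 of Algorithm 1.

    X: list of (N_i, d) arrays; mu: (I, d); C: (I, d, d); gamma: (I, K);
    m: (K, d); beta, alpha: (K,); Lam: (K, d, d). Right-hand sides use the
    previous iteration's values, except gamma from the current E-step.
    """
    I, K = gamma.shape
    d = mu.shape[1]
    C_inv = np.linalg.inv(C)                                       # batched, (I, d, d)
    new_beta, new_m = np.empty(K), np.empty((K, d))
    new_Lam = np.empty((K, d, d))
    for k in range(K):
        g = gamma[:, k]
        diff = mu - m[k]                                           # (I, d)
        maha = np.einsum("id,ide,ie->i", diff, C_inv, diff)
        new_beta[k] = d * g.sum() / np.dot(g, maha)                # Eq. (17)
        prec = np.einsum("i,ide->de", g, C_inv)                    # sum_i g_i C_i^-1
        rhs = np.einsum("i,ide,ie->d", g, C_inv, mu)               # sum_i g_i C_i^-1 mu_i
        new_m[k] = np.linalg.solve(prec, rhs)                      # Eq. (18)
        new_Lam[k] = alpha[k] * g.sum() * np.linalg.inv(prec)      # Eq. (19)
    new_pi = gamma.sum(axis=0) / I                                 # line 27
    new_mu, new_C = np.empty_like(mu), np.empty_like(C)
    for i in range(I):
        N_i = X[i].shape[0]
        w = beta * gamma[i]                                        # beta_k * gamma_ik
        new_mu[i] = (X[i].sum(axis=0) + w @ m) / (N_i + w.sum())   # Eq. (20)
        dx = X[i] - mu[i]
        dm = mu[i] - m                                             # (K, d)
        num = (dx.T @ dx
               + np.einsum("k,kd,ke->de", w, dm, dm)
               + np.einsum("k,kde->de", gamma[i], Lam))
        new_C[i] = num / (N_i + gamma[i] @ alpha + d + 2)          # Eq. (21)
    return new_beta, new_m, new_Lam, new_pi, new_mu, new_C
```

Two limiting cases help when checking such a translation: if every $ \mathbf{C}_i $ is the identity, $ \hat{\mathbf{m}}_k $ reduces to the $ \boldsymbol{\gamma} $-weighted mean of the $ \boldsymbol{\mu}_i $ and $ \hat{\boldsymbol{\Lambda}}_k $ to $ \alpha_k\mathbf{I} $; with all $ \beta_k = 0 $, $ \hat{\boldsymbol{\mu}}_i $ reduces to the asset's sample mean.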

The initialization of parameters can also vary by application. Generally, it was observed here that the asset level parameters (i.e., $ \left(\boldsymbol{\mu}_i,\mathbf{C}_i\right)\ \forall i\in\{1,\dots,I\} $) were best initialized with the standard maximum likelihood estimates of each asset's Gaussian model. For the higher level parameters, the $ \beta_k $ were best initialized at low values and the $ \alpha_k $ at the dimension $ d $ of the data. These choices keep the priors weak, which allowed a wider search of the parameter space in the early iterations. The $ \left(\mathbf{m}_k,\boldsymbol{\Lambda}_k\right)\ \forall k\in\{1,\dots,K\} $ were initialized randomly around the observed data values, while ensuring that the initial $ \boldsymbol{\Lambda}_k $ were positive definite matrices.

The steps followed for estimating the hierarchical model parameters in the experiments described here, including the initialization and the EM iterations, are given in Algorithm 1. In Algorithm 1, $ E\left(\mathbf{x}_{i,n}\right) $ in line 4 represents the expectation of the $ \mathbf{x}_{i,n} $ vector; the $ \operatorname{rand}(d) $ and $ \operatorname{rand}(d,d) $ functions in line 9 generate random real-valued arrays of dimensions $ d $ and $ d\times d $, respectively; and $ p\left(clust_i=k\right) $ in line 16 represents the overall data likelihood of the $ i $th asset, assuming that it belongs to cluster $ k $. Moreover, the terms on the right-hand side of the M-step are the values from the previous iteration, except $ \boldsymbol{\gamma}_{i,k} $, which are evaluated at the corresponding E-step.
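The initialization described above (lines 1 to 10 of Algorithm 1) might be sketched as follows. The text specifies $ \mathbf{m}_k $ and $ \boldsymbol{\Lambda}_k $ only as "random around the observed data" and "positive definite", so the choices here (a randomly chosen asset mean plus noise for $ \mathbf{m}_k $, and $ \mathbf{A}\mathbf{A}^T + d\mathbf{I} $ for $ \boldsymbol{\Lambda}_k $), the uniform initial $ \pi_k $, the optional `ridge` term, and the function name are all assumptions.

```python
import numpy as np


def initialize(X, K, rng, beta0=1e-3, ridge=0.0):
    """Initial parameters in the spirit of lines 1-10 of Algorithm 1.

    X: list of (N_i, d) arrays (one per asset); K: number of clusters;
    rng: a numpy.random.Generator. The randomization of m_k and Lam_k, the
    uniform pi_k, and the optional ridge are illustrative assumptions.
    """
    d = X[0].shape[1]
    # Asset level: maximum likelihood mean and covariance (divide by N_i).
    mu = np.array([x.mean(axis=0) for x in X])
    C = np.array([np.atleast_2d(np.cov(x, rowvar=False, bias=True)) + ridge * np.eye(d)
                  for x in X])
    # Cluster level: weak priors, i.e. small beta_k and alpha_k = d.
    beta = np.full(K, beta0)
    alpha = np.full(K, float(d))
    # Assumed: m_k = a randomly chosen asset mean plus noise scaled to the data spread.
    picks = rng.choice(len(X), size=K, replace=False)
    m = mu[picks] + rng.normal(scale=mu.std(axis=0) + 1e-9, size=(K, d))
    # Assumed: A A^T + d I is symmetric positive definite for any real A.
    A = rng.normal(size=(K, d, d))
    Lam = A @ np.transpose(A, (0, 2, 1)) + d * np.eye(d)
    pi = np.full(K, 1.0 / K)
    return mu, C, m, beta, Lam, alpha, pi
```

The `ridge` argument is not described in the text: when an asset has $ N_i \le d $ points, its maximum likelihood covariance is singular, and the inverses $ \mathbf{C}_i^{-1} $ in the M-step would fail without some regularization.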

Algorithm 1: Pseudo-code describing the steps to estimate the hierarchical model parameters for an asset fleet comprising $ K $ clusters and generating $ d $-dimensional condition data

Result: Estimated hierarchical model parameters

1 Initialise the parameters:

2 for each asset i do

3 $ \boldsymbol{\mu}_i\leftarrow \frac{\sum_{n=1}^{N_i}\mathbf{x}_{i,n}}{N_i} $;

4 $ \mathbf{C}_i^{(n,m)}\leftarrow E\left(\left(\mathbf{x}_{i,n}-E\left(\mathbf{x}_{i,n}\right)\right)\left(\mathbf{x}_{i,m}-E\left(\mathbf{x}_{i,m}\right)\right)\right) $;

5 end

6 for each cluster k do

7 $ \beta_k\leftarrow 0.001 $;

8 $ \alpha_k\leftarrow d $;

9 $ \left(\mathbf{m}_k,\boldsymbol{\Lambda}_k\right)\leftarrow\left(\operatorname{rand}(d),\operatorname{rand}(d,d)\right) $;

10 end

11 $ Iter\leftarrow 0 $;

12 The EM iterations:

13 while $ Iter < 20 $ do

14 The E-step:

15 for each asset i and cluster k do

16 $ \boldsymbol{\gamma}_{i,k}\leftarrow \frac{p\left(clust_i=k\right)}{p\left(clust_i=1\right)+p\left(clust_i=2\right)+\dots+p\left(clust_i=K\right)} $;

17 end

18 The M-step:

19 for each asset i do

20 $ \hat{\boldsymbol{\mu}}_i\leftarrow \frac{1}{N_i+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}}\left[\sum_{n=1}^{N_i}\mathbf{x}_{i,n}+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}\mathbf{m}_k\right] $;

21 $ \hat{\mathbf{C}}_i\leftarrow \frac{\sum_{n=1}^{N_i}\left(\mathbf{x}_{i,n}-\boldsymbol{\mu}_i\right)\left(\mathbf{x}_{i,n}-\boldsymbol{\mu}_i\right)^T+\sum_{k=1}^{K}\beta_k\boldsymbol{\gamma}_{i,k}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)^T+\sum_{k=1}^{K}\boldsymbol{\gamma}_{i,k}\boldsymbol{\Lambda}_k}{N_i+\sum_{k=1}^{K}\boldsymbol{\gamma}_{i,k}\alpha_k+d+2} $;

22 end

23 for each cluster k do

24 $ \frac{1}{\hat{\beta}_k}\leftarrow \frac{\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)^T\mathbf{C}_i^{-1}\left(\boldsymbol{\mu}_i-\mathbf{m}_k\right)}{d\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}} $;

25 $ \hat{\mathbf{m}}_k\leftarrow \left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\right]^{-1}\left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\boldsymbol{\mu}_i\right] $;

26 $ \hat{\boldsymbol{\Lambda}}_k\leftarrow \left[\alpha_k\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\right]\left[\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}\mathbf{C}_i^{-1}\right]^{-1} $;

27 $ \hat{\pi}_k\leftarrow \frac{\sum_{i=1}^{I}\boldsymbol{\gamma}_{i,k}}{I} $;

28 $ \alpha_k\leftarrow \text{BFGS}_{\max}\Big(\frac{1}{2}\alpha_k\log|\boldsymbol{\Lambda}_k|\sum_i\boldsymbol{\gamma}_{i,k}-\frac{d}{2}\log(2)\,\alpha_k\sum_i\boldsymbol{\gamma}_{i,k}-\log\Gamma_d\left(\frac{\alpha_k}{2}\right)\sum_i\boldsymbol{\gamma}_{i,k}-\frac{1}{2}\left(\alpha_k+d+1\right)\sum_i\boldsymbol{\gamma}_{i,k}\log|\mathbf{C}_i|\Big) $;

29 end

30 $ Iter\leftarrow Iter+1 $;

31 end

32 return: $ \left(\boldsymbol{\mu}_i,\mathbf{C}_i,\beta_k,\alpha_k,\boldsymbol{\Lambda}_k,\mathbf{m}_k,\pi_k\right)\ \forall i\in\{1,\dots,I\},\ k\in\{1,\dots,K\} $.
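Line 28 of Algorithm 1 maximizes a one-dimensional objective in $ \alpha_k $ with BFGS, and the text notes that any nonlinear optimization routine can be used. The sketch below swaps in a golden-section search instead: the objective is concave in $ \alpha_k $ (terms linear in $ \alpha_k $ minus $ \log\Gamma_d(\alpha_k/2) $, which is convex), so a bracketing search over $ \alpha_k > d-1 $, the usual validity condition for Inverse Wishart degrees of freedom, finds its maximizer without gradients. The upper bracket `hi`, which caps the estimate, and all names are assumptions.

```python
import math
import numpy as np


def log_multigamma(a, d):
    """log Gamma_d(a), the log of the multivariate gamma function."""
    return (d * (d - 1) / 4.0) * math.log(math.pi) + sum(
        math.lgamma(a + (1 - j) / 2.0) for j in range(1, d + 1))


def alpha_objective(alpha, gamma_k, Lam_k, C):
    """alpha_k-dependent terms maximized in line 28 of Algorithm 1.

    gamma_k: (I,) responsibilities for cluster k; Lam_k: (d, d); C: (I, d, d).
    """
    d = Lam_k.shape[0]
    g = float(np.sum(gamma_k))
    logdet_L = np.linalg.slogdet(Lam_k)[1]
    logdet_C = np.array([np.linalg.slogdet(c)[1] for c in C])
    return (0.5 * alpha * logdet_L * g
            - 0.5 * d * math.log(2.0) * alpha * g
            - log_multigamma(alpha / 2.0, d) * g
            - 0.5 * (alpha + d + 1) * float(np.dot(gamma_k, logdet_C)))


def update_alpha(gamma_k, Lam_k, C, hi=1e4, tol=1e-10):
    """Golden-section maximization of alpha_objective over (d - 1, hi].

    Used here in place of BFGS: the objective is concave in alpha, so a
    derivative-free bracketing search converges to its maximizer.
    """
    d = Lam_k.shape[0]

    def f(a):
        return alpha_objective(a, gamma_k, Lam_k, C)

    r = (math.sqrt(5.0) - 1.0) / 2.0               # inverse golden ratio
    left, right = d - 1 + 1e-6, hi                 # IW needs alpha > d - 1
    x1, x2 = right - r * (right - left), left + r * (right - left)
    f1, f2 = f(x1), f(x2)
    while right - left > tol:
        if f1 < f2:                                # maximizer lies in [x1, right]
            left, x1, f1 = x1, x2, f2
            x2 = left + r * (right - left)
            f2 = f(x2)
        else:                                      # maximizer lies in [left, x2]
            right, x2, f2 = x2, x1, f1
            x1 = right - r * (right - left)
            f1 = f(x1)
    return 0.5 * (left + right)
```

As a check, for $ d = 1 $, a single asset with $ C = 1 $, and $ \Lambda = 2e^{\psi(2)} $, where $ \psi $ is the digamma function, the stationarity condition $ \psi(\alpha/2) = \log(\Lambda/2) $ gives $ \alpha = 4 $, which the search recovers to within its tolerance.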

4. Example Implementation

This section discusses the experiments conducted to demonstrate and evaluate the performance of the hierarchical model for anomaly detection. Performance of the hierarchical model is also compared with independent modeling of the assets.

Independent modeling does not consider the presence of similar assets in the fleet. Therefore, the $ \left(\hat{\boldsymbol{\mu}}_i,\hat{\mathbf{C}}_i\right) $ estimates for every asset obtained via independent modeling are their maximum likelihood estimates based on that asset's data only. These estimates are evaluated according to Equations (23) and (24).

$$ \hat{\boldsymbol{\mu}}_i=\frac{\sum_{n=1}^{N_i}\mathbf{x}_{i,n}}{N_i} $$

$$ \hat{\mathbf{C}}_i^{(n,m)}=E\left(\left(\mathbf{x}_{i,n}-E\left(\mathbf{x}_{i,n}\right)\right)\left(\mathbf{x}_{i,m}-E\left(\mathbf{x}_{i,m}\right)\right)\right) $$

where $ \hat{\mathbf{C}}_i^{(n,m)} $ represents the $ (n,m) $th entry of the estimated covariance matrix $ \hat{\mathbf{C}}_i $, and $ E\left(\mathbf{x}_{i,n}\right) $ represents the expectation of the $ \mathbf{x}_{i,n} $ data vector.
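The independent estimates in Equations (23) and (24) correspond to NumPy's sample mean and its covariance with `bias=True`, which divides by $ N_i $ (the maximum likelihood normalization) rather than $ N_i - 1 $. The sketch below assumes each asset's data are stored as an $ (N_i, d) $ array with one observation per row; the function name is hypothetical.

```python
import numpy as np


def independent_mle(X_i):
    """Maximum likelihood mean and covariance for one asset (Eqs. (23)-(24)).

    X_i: (N_i, d) array, one observation per row. bias=True divides by N_i,
    the maximum likelihood normalization, rather than N_i - 1.
    """
    mu_hat = X_i.mean(axis=0)
    C_hat = np.cov(X_i, rowvar=False, bias=True)
    return mu_hat, C_hat


# Low-data case: five points in five dimensions.
rng = np.random.default_rng(0)
_, C_small = independent_mle(rng.normal(size=(5, 5)))
print(np.linalg.matrix_rank(C_small))  # at most 4, so C_small is singular
```

With only five points in five dimensions, as for the low-data assets in Section 4.1.3, the centred observations sum to zero and therefore span at most four dimensions, so $ \hat{\mathbf{C}}_i $ is singular. This illustrates one practical difficulty of modeling low-data assets independently.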

The experimental cases and the performance metric used to evaluate and compare both modeling approaches are described in the following subsections. Section 4.1 explains the synthetic dataset used for the experiments, Section 4.2 describes the evaluation metric, and Sections 4.3 and 4.4 present the experimental results comparing the performances of the hierarchical and independent modeling techniques.

4.1. Experimental data

Synthetic datasets representing a fleet of assets, containing subpopulations of similar assets, were used for the experiments. These constituted the training and the testing datasets.

4.1.1. Training dataset

The data generation method described here ensured that the fleet consisted of coherent subpopulations of assets and that no two assets in the fleet were identical.

The training dataset consisted of multidimensional samples of the assets' condition data over a period of normal operation, collected across the entire fleet. The condition data for each asset consisted of points randomly sampled from a Gaussian distribution with constant mean and covariance. This made the simulated asset data equivalent to those of a real asset operating in steady condition, but with associated noise and fluctuations, as explained in Section 1. The means of the underlying Gaussians were treated as the equivalents of the asset model types, and their covariances as the equivalents of the operating conditions.

Different asset model types are designed to operate in different ranges, so assets of the same model type are expected to operate within a certain permissible range. This was represented in the training dataset by defining ranges for the Gaussian means of assets belonging to separate model types. Similarly, the operating condition of an asset determines how much variation appears in its condition data. For example, older engines are expected to vibrate more than newer ones, and therefore show larger variation around their mean vibration value. This was represented in the dataset by defining a set of possible covariance matrices with which an asset's Gaussian can be associated.

Before simulating the assets, separate ranges for each feature were defined; each set of ranges represented a separate model type present in the fleet. A set of covariance matrices was also defined. While simulating an asset, its model type and operating condition were first characterized. Then, the multidimensional mean of that asset's underlying Gaussian distribution was randomly selected within the range of its model type, and the covariance matrix corresponding to its operating condition was selected from the predefined set. A number of points were sampled from this Gaussian, representing that asset's condition data collected over a period of normal operation. The same process was repeated for all assets in the fleet, and the final collection of points for all assets constituted the training dataset.
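The generation procedure above can be sketched as follows. The text does not state how a mean is drawn within its range or how many points each asset receives, so the uniform draw per feature, the random choice among candidate sample sizes, and the function name are assumptions; `mean_ranges` entries may be scalars or per-feature arrays.

```python
import numpy as np


def simulate_training_fleet(mean_ranges, covariances, assets_per_cluster,
                            n_points_choices, rng):
    """Sketch of the training-data generation of Section 4.1.1.

    mean_ranges: one (low, high) pair per model type; low/high may be scalars
        or per-feature arrays. Each mean is drawn uniformly (an assumption).
    covariances: one (d, d) matrix per operating condition.
    n_points_choices: candidate sample sizes; each asset gets one at random
        (an assumption).
    Every (model type, operating condition) pair forms one cluster.
    Returns a list of (X_i, cluster_id, true_mean, true_cov) tuples.
    """
    d = covariances[0].shape[0]
    fleet = []
    cluster_id = 0
    for low, high in mean_ranges:                       # model types
        for cov in covariances:                         # operating conditions
            for _ in range(assets_per_cluster):
                mean = rng.uniform(low, high, size=d)   # distinct means (almost surely)
                n_points = int(rng.choice(n_points_choices))
                X_i = rng.multivariate_normal(mean, cov, size=n_points)
                fleet.append((X_i, cluster_id, mean, cov))
            cluster_id += 1
    return fleet
```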

4.1.2. Testing dataset

The testing dataset for any given simulated asset described in Section 4.1.1 was a mixture of points sampled from that asset's true underlying distribution and points sampled from an anomalous distribution. The anomalous distribution was generated by inducing a systematic deviation, in the form of a change in mean and covariance, from the true underlying distribution. A large number of points were sampled from both the true and the anomalous distributions to ensure good statistics.

Consider a given asset $ i $ in the fleet whose true underlying distribution has mean $ \mu_i $ and covariance $ C_i $. The anomalous distribution for this asset is a multivariate Gaussian of the same dimension, with mean $ \mu_i+l $ and covariance $ L\odot C_i $, where $ \odot $ denotes element-wise multiplication and $ l $ and $ L $ are the deviations induced into the true mean and covariance values, respectively. The induced deviations were constant across all assets. Moreover, both $ l $ and $ L $ were varied across a wide range to study the sensitivity of the classifiers with respect to the Gaussian's mean and covariance.
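A sketch of the test-data construction for one asset follows, with labels 0 for normal and 1 for anomalous points; the names and the labeling convention are assumptions. `L` may be a scalar or a matrix. In the matrix case, the element-wise product of `L` and $ C_i $ is guaranteed to be positive semidefinite, as a covariance must be, whenever `L` is itself positive semidefinite (Schur product theorem), but not in general.

```python
import numpy as np


def simulate_test_data(true_mean, true_cov, l, L, n_normal, n_anomalous, rng):
    """Mix of normal and anomalous points for one asset (Section 4.1.2).

    Anomalous points come from N(true_mean + l, L * true_cov), where the
    product is element-wise (a scalar L simply scales the covariance).
    Labels: 0 = normal, 1 = anomalous.
    """
    anomalous_cov = L * true_cov                       # element-wise product
    normal = rng.multivariate_normal(true_mean, true_cov, size=n_normal)
    anomalous = rng.multivariate_normal(true_mean + l, anomalous_cov, size=n_anomalous)
    X = np.vstack([normal, anomalous])
    y = np.concatenate([np.zeros(n_normal, dtype=int), np.ones(n_anomalous, dtype=int)])
    return X, y
```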

A schematic description of how the normal and anomalous data for the simulated assets were generated is shown in Figure 3. The figure shows an example for a two-dimensional dataset, where the regions defined for separate model types are shaded in color and the set of covariances is shown using ellipses. The procedure is the same for five-dimensional data; the regions representing the model types have been widened in Figure 3 for easier visualization.

Figure 3. A schematic representation describing how the normal and anomalous data were generated for the experiments. The procedure is shown here for a two-dimensional dataset as an example.

4.1.3. Experimental specifications

The simulated fleet used for the experiments discussed here comprised 800 assets. Each asset belonged to one of two possible operating conditions and to one of two possible model types. The fleet therefore comprised four clusters of assets in total, one for each combination of operating condition and model type. All clusters contained the same number of assets (i.e., 200 assets per cluster).

The simulated condition data was five-dimensional. The features of the asset means lay within the range $ \left(-25,25\right) $ for assets of the first model type, and within the range $ \left(275,325\right) $ for assets of the second model type. The two covariance matrices corresponding to the operating conditions are given in Equation (25). The ranges for the means and the two covariance matrices were chosen arbitrarily.

$$ {C}^1=\left[\begin{array}{ccccc}16.68& 5.43& 3.28& -2.31& 1.76\\ {}5.43& 22.05& -3.74& -1.11& -1.14\\ {}3.28& -3.74& 18.72& 3.91& -3.19\\ {}-2.31& -1.11& 3.91& 20.87& 4.00\\ {}1.76& -1.14& -3.19& 4.00& 23.12\end{array}\right]\hskip0.24em \mathrm{and}\hskip0.24em {C}^2=\left[\begin{array}{ccccc}55.59& 3.39& 3.24& -2.00& -3.95\\ {}3.39& 55.75& 1.22& -24.02& -3.76\\ {}3.24& 1.22& 55.83& 15.29& 1.78\\ {}-2.00& -24.02& 15.29& 63.69& 11.21\\ {}-3.95& -3.76& 1.78& 11.21& 23.12\end{array}\right] $$

where the superscript denotes the operating condition. Moreover, the assets comprising the fleet held different amounts of data (the number of points sampled from their underlying Gaussians). Each asset held a low, medium, or high amount of data: assets in the low data category held only five data points, while assets in the medium and high data categories contained 20 and 100 data points, respectively. To make the setup clear, the corresponding values of the variables defined and derived in Section 3 are summarized in Table 1.
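The fleet specification above can be turned into a short simulation script. This is a sketch under stated assumptions, not the authors’ generator: it assumes each feature of an asset’s mean is drawn uniformly from its model type’s range (the text only states that the mean is a random point in that range), and the names `simulate_asset` and `simulate_fleet` are hypothetical. The covariance matrices, mean ranges, and points per data category are taken from the text.

```python
import numpy as np

# Covariance matrices from Equation (25), keyed by operating condition.
C1 = np.array([
    [16.68,  5.43,  3.28, -2.31,  1.76],
    [ 5.43, 22.05, -3.74, -1.11, -1.14],
    [ 3.28, -3.74, 18.72,  3.91, -3.19],
    [-2.31, -1.11,  3.91, 20.87,  4.00],
    [ 1.76, -1.14, -3.19,  4.00, 23.12],
])
C2 = np.array([
    [55.59,   3.39,  3.24,  -2.00, -3.95],
    [ 3.39,  55.75,  1.22, -24.02, -3.76],
    [ 3.24,   1.22, 55.83,  15.29,  1.78],
    [-2.00, -24.02, 15.29,  63.69, 11.21],
    [-3.95,  -3.76,  1.78,  11.21, 23.12],
])
COVARIANCES = {1: C1, 2: C2}                          # keyed by operating condition
MEAN_RANGES = {1: (-25.0, 25.0), 2: (275.0, 325.0)}   # keyed by model type
POINTS_PER_CATEGORY = {"low": 5, "medium": 20, "high": 100}

def simulate_asset(model_type, operating_condition, category, rng):
    """Return (true mean, true covariance, training data) for one asset."""
    lo, hi = MEAN_RANGES[model_type]
    # Assumption: each feature of the mean is drawn uniformly from the range.
    mean = rng.uniform(lo, hi, size=5)
    cov = COVARIANCES[operating_condition]
    data = rng.multivariate_normal(mean, cov, size=POINTS_PER_CATEGORY[category])
    return mean, cov, data

def simulate_fleet(categories, rng):
    """Simulate every cluster; `categories` maps (model type, operating
    condition) to a list with one data category per asset."""
    fleet = []
    for (model_type, op_cond), cats in categories.items():
        for cat in cats:
            mean, cov, data = simulate_asset(model_type, op_cond, cat, rng)
            fleet.append({"cluster": (model_type, op_cond), "category": cat,
                          "mean": mean, "cov": cov, "data": data})
    return fleet

# Usage: 200 assets per cluster, 30% of them in the low data category.
rng = np.random.default_rng(0)
cats = ["low"] * 60 + ["medium"] * 70 + ["high"] * 70
fleet = simulate_fleet({(m, o): cats for m in (1, 2) for o in (1, 2)}, rng)
print(len(fleet))  # 800
```

Both matrices are strictly diagonally dominant with positive diagonals, so they are valid (positive definite) covariances and sampling raises no warnings.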

Table 1. The values of various parameters introduced in Section 3.

As an example, consider an asset belonging to the first model type and first operating condition. Let this asset belong to the “medium” data category. To simulate this asset, its mean was first selected as a random point with features lying within the range ![]() $ \left(-25,25\right) $. This mean was

$ \left(-25,25\right) $. This mean was ![]() $ \left(10.05,-\mathrm{15.95,4.94},-\mathrm{4.24,0.68}\right) $. Next, with this mean and

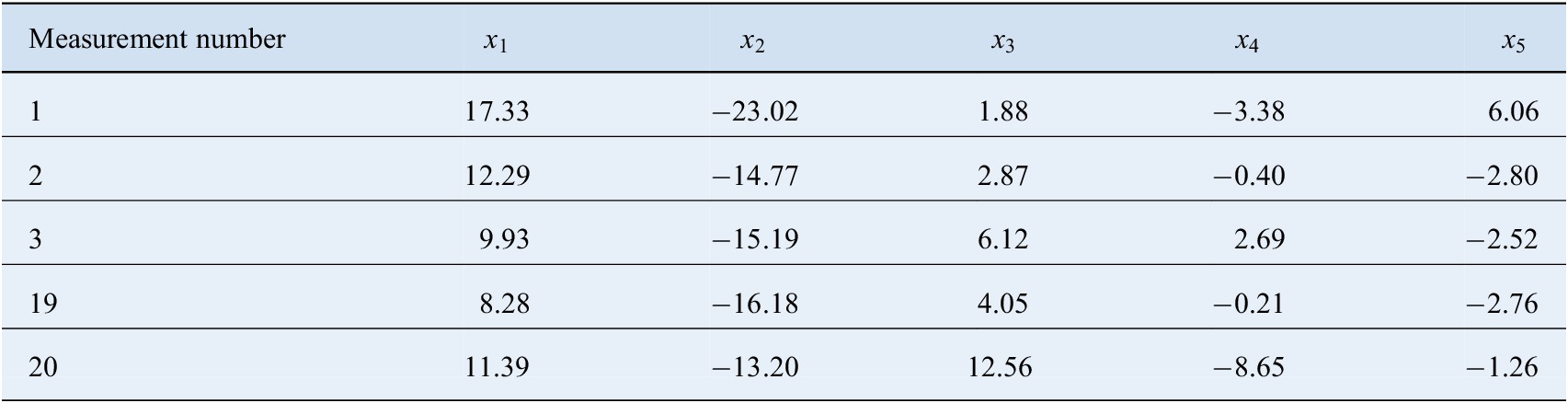

$ \left(10.05,-\mathrm{15.95,4.94},-\mathrm{4.24,0.68}\right) $. Next, with this mean and ![]() $ {C}^1 $ from Equation (25) as the covariance, 20 points were randomly sampled. Twenty points were sampled because this asset belonged to the medium data category. An example of the condition data for this asset is shown in Table 2. The remaining 799 assets in the fleet were similarly simulated based on their model type, operating condition, and the category they belonged to. The complete training dataset can be found at: https://github.com/Dhada27/Hierarchical-Modelling-Asset-Fleets

$ {C}^1 $ from Equation (25) as the covariance, 20 points were randomly sampled. Twenty points were sampled because this asset belonged to the medium data category. An example of the condition data for this asset is shown in Table 2. The remaining 799 assets in the fleet were similarly simulated based on their model type, operating condition, and the category they belonged to. The complete training dataset can be found at: https://github.com/Dhada27/Hierarchical-Modelling-Asset-Fleets
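The worked example can be reproduced in a few lines, assuming NumPy. The random seed is arbitrary, so the sampled points will not match the values in Table 2; the sketch only illustrates the sampling step.

```python
import numpy as np

# Covariance C^1 from Equation (25) (first operating condition).
C1 = np.array([
    [16.68,  5.43,  3.28, -2.31,  1.76],
    [ 5.43, 22.05, -3.74, -1.11, -1.14],
    [ 3.28, -3.74, 18.72,  3.91, -3.19],
    [-2.31, -1.11,  3.91, 20.87,  4.00],
    [ 1.76, -1.14, -3.19,  4.00, 23.12],
])
# Mean of the example asset (first model type, first operating condition).
mean = np.array([10.05, -15.95, 4.94, -4.24, 0.68])

rng = np.random.default_rng(seed=42)   # arbitrary seed
# "Medium" data category: 20 points from N(mean, C1).
data = rng.multivariate_normal(mean, C1, size=20)
print(data.shape)           # (20, 5)
print(data.mean(axis=0))    # a noisy estimate of `mean`
```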

Table 2. An example of condition data for a medium data category asset.

The proportion of assets belonging to the low data category was varied across a wide range, from $ 0.1 $ to $ 0.9 $. The remaining assets were evenly divided between the medium and high data categories. For example, if a $ 0.3 $ proportion of assets belonged to the low data category, then a $ 0.35 $ proportion belonged to each of the medium and high data categories. Moreover, all clusters contained the same number of assets from each of the three categories. Given this dataset, the goal of an anomaly detection algorithm was to model the assets’ normal operation by estimating the parameters of the underlying Gaussians. The algorithm had no indication of which cluster a given asset belonged to.
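The per-cluster split implied by a given low-data proportion can be computed directly. This is a sketch; the text does not say how an odd remainder would be divided between the medium and high categories, so giving the extra asset to the high category is an assumption (the case never arises with 200 assets per cluster when the proportion is a multiple of 0.1). The function name `category_counts` is hypothetical.

```python
def category_counts(p_low, assets_per_cluster=200):
    """Split one cluster's assets into low/medium/high data categories.

    A fraction p_low of the assets is 'low'; the rest is split evenly
    between 'medium' and 'high'.
    """
    n_low = round(p_low * assets_per_cluster)
    remaining = assets_per_cluster - n_low
    n_medium = remaining // 2
    n_high = remaining - n_medium   # assumption: takes the extra asset if odd
    return {"low": n_low, "medium": n_medium, "high": n_high}

print(category_counts(0.3))  # {'low': 60, 'medium': 70, 'high': 70}
```

With four clusters, a proportion of 0.3 gives 240 low, 280 medium, and 280 high data assets in the fleet, i.e., the 0.35 proportions quoted above.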

The testing dataset for each asset comprised 1,500 points randomly sampled from the true underlying distribution and 1,500 points sampled from the anomalous distribution. The deviations $ l $ and $ L $ for the anomalous distributions were each varied while the other was held constant, so that the sensitivity of the algorithms with respect to each parameter could be studied. The values of $ l $ were varied across $ \left\{0,5,10,20,50,100\right\} $ while keeping $ L $ fixed at $ 1 $, and the values of $ L $ were varied across $ \left\{1,1.5,2,5,10\right\} $ while keeping $ l $ fixed at $ 0 $.
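The one-at-a-time sensitivity design can be written out explicitly as a list of $ (l, L) $ pairs. The names below are hypothetical. Note that the pair $ (0, 1) $ appears in both sweeps; for that pair the anomalous distribution is identical to the true one, so no classifier can distinguish the two halves of the test set.

```python
# Sensitivity sweep: vary one deviation while holding the other fixed.
MEAN_SHIFTS = [0, 5, 10, 20, 50, 100]   # values of l, with L = 1
COV_SCALES = [1, 1.5, 2, 5, 10]         # values of L, with l = 0

def sweep_configs():
    """Return the (l, L) pairs for the two sensitivity studies."""
    configs = [(l, 1) for l in MEAN_SHIFTS]
    configs += [(0, L) for L in COV_SCALES]
    return configs

configs = sweep_configs()
print(len(configs))        # 11
print(len(set(configs)))   # 10, since (0, 1) is shared by both sweeps
```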

4.2. Experimental design

The experiments involved comparing four learning scenarios as explained below.

1. Independent learning. In the first scenario, the assets could learn only from their own data. This means that the only source of information for estimating the parameters of the underlying Gaussian was the given asset’s own condition data. The mean and covariance estimates in this scenario were evaluated according to the standard maximum likelihood estimation in Equations (23) and (24).

2. Learning from similar assets. In this scenario, the hierarchical model for the fleet was implemented. Clusters of similar assets were identified, and the parameters of the hierarchical model were estimated using the EM algorithm as explained in Section 3. The EM steps were iterated $ 20 $ times, and the values of $ {\hat{\mu}}_i $ and $ {\hat{C}}_i $ after the 20th iteration were treated as the final estimates of hierarchical modeling. Twenty iterations were deemed sufficient for parameter estimation because the overall data log-likelihood did not increase any further. The number of clusters present in the fleet, $ K $, was set to its true value of $ 4 $.

3. Learning from all. The third scenario was similar to Case 2 above, except that the assets could not identify similar assets. This means that a given asset here learned from all other assets in the fleet. To model this scenario, the same steps as those in Case 2 were followed, but the value of $ K $ was set to 1. As a result, the entire fleet was treated as one cluster, and the density function parameters of all assets shared a common underlying distribution.

4. Only the low data assets learn from others. Finally, a combination of hierarchical and independent modeling was considered. This scenario involved clustering and hierarchical modeling as in Case 2. However, while all 800 assets participated in estimating the hierarchical model parameters, only the assets in the low data category used the final estimates to classify their testing data. The medium and high data category assets used independent modeling to estimate their Gaussian parameters. Concretely, the final estimates for the low data category assets were derived from the hierarchical model, whereas those for the medium and high data category assets were derived from their independent models.
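Equations (23) and (24) are not reproduced in this section; assuming they are the standard maximum likelihood estimates named in scenario 1, they can be sketched as below, together with the rule that decides which estimates each asset uses in the four scenarios. `mle_gaussian` and `final_estimate` are hypothetical helper names, and the hierarchical estimates are treated as given inputs because the EM algorithm of Section 3 is not reproduced here. The example also shows why low data assets struggle on their own: with five points in five dimensions, the ML covariance has rank at most four and is therefore singular.

```python
import numpy as np

def mle_gaussian(X):
    """ML estimates (mean, covariance) of a Gaussian from the rows of X.

    Uses the 1/n normaliser of maximum likelihood, not the unbiased 1/(n - 1).
    """
    X = np.asarray(X, dtype=float)
    mu_hat = X.mean(axis=0)
    centred = X - mu_hat
    C_hat = centred.T @ centred / X.shape[0]
    return mu_hat, C_hat

def final_estimate(scenario, category, independent, hier_k4, hier_k1):
    """Return the (mu_hat, C_hat) pair an asset uses to classify test data.

    independent: ML estimates from the asset's own data  (scenario 1)
    hier_k4:     hierarchical-model estimates with K = 4  (scenario 2)
    hier_k1:     hierarchical-model estimates with K = 1  (scenario 3)
    """
    if scenario == 1:
        return independent
    if scenario == 2:
        return hier_k4
    if scenario == 3:
        return hier_k1
    if scenario == 4:
        # Every asset helps fit the K = 4 model, but only low data assets use it.
        return hier_k4 if category == "low" else independent
    raise ValueError(f"unknown scenario: {scenario}")

# A low data asset: five points in five dimensions.
rng = np.random.default_rng(1)
mu_hat, C_hat = mle_gaussian(rng.normal(size=(5, 5)))
print(np.linalg.eigvalsh(C_hat)[0])   # effectively zero: C_hat is singular
```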

It was observed during the experiments that the accuracy of clustering with the EM algorithm depended on the initialization of the parameters, especially $ {\beta}_k $ and $ {\alpha}_k $. These parameters must be initialized such that the algorithm’s search space is wide enough and the algorithm is not trapped in local optima during the early iterations. Approximate initializations that ensure a wider search space are given in Section 3. However, even with the optimal initialization, the EM algorithm was unable to cluster all of the assets correctly, owing to the wide range of means chosen.

This problem is highlighted in Figure 4, where a sample of 50 assets was taken from each of the four asset clusters and the resulting 200 assets were clustered based on only five or six available data points. The figures show both cases: one where all assets had the same amount of data, and one where the assets were divided into the “low,” “medium,” and “high” data categories explained in Section 4.1.3. In the figures corresponding to the latter case, the assets belonging to the “low,” “medium,” and “high” data categories are shown in red, orange, and green, respectively. In that case, the assets in the low data category held five or six data points, while the amount of data held by the remaining assets was unchanged. In all figures, the assets with ids 1–50 belonged to the same cluster, 51–100 to the next cluster, and so on. These asset ids are therefore expected to be clustered together, which did not happen when only the first five or six data points were available. The wrongly clustered assets are marked with a dotted red circle.

Figure 4. The figures show the clustering performed by the EM algorithm when the assets (the low data category assets in c and d) have only five or six data points. The incorrectly clustered assets are marked with a dotted red circle.

In the real world, this problem can be addressed by including categorical data alongside the time series data. Such categorical data can arise from operational experience, such as an asset’s environment, upkeep, and operation. However, for the experimental results presented here, it was ensured that the assets were correctly clustered in these cases: if an asset was found to be wrongly clustered, it was manually reassigned to its correct cluster and the results were evaluated again. The goal of the experiments is to demonstrate the advantage of collaborative learning between assets through hierarchical modeling over conventional independent modeling.
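Because EM cluster labels are arbitrary, checking a clustering against the known grouping used in Figure 4 (ids 1–50, 51–100, and so on) first requires matching the predicted labels to the true ones. A minimal sketch, assuming NumPy and four clusters of 50 assets; the exhaustive search over label permutations is an illustrative choice (only 24 permutations for four clusters), and `misclustered` is a hypothetical helper, not the procedure used in the paper.

```python
from itertools import permutations

import numpy as np

def misclustered(pred_labels, assets_per_cluster=50):
    """Return positions of wrongly clustered assets and the aligned labels.

    True clusters follow the id convention of Figure 4: consecutive blocks of
    `assets_per_cluster` assets share a cluster. Predicted labels (assumed to
    be 0..K-1) are relabelled with the permutation that agrees most often
    with the true grouping before mismatches are counted.
    """
    pred = np.asarray(pred_labels)
    true = np.arange(pred.size) // assets_per_cluster
    k = int(true.max()) + 1
    best = max(permutations(range(k)),
               key=lambda perm: np.sum(np.array(perm)[pred] == true))
    aligned = np.array(best)[pred]
    wrong = np.flatnonzero(aligned != true)   # 0-based; asset id = position + 1
    return wrong, aligned

# Usage: EM used different label names, and two assets landed in the wrong cluster.
pred = np.repeat([2, 0, 3, 1], 50)
pred[[7, 120]] = [0, 1]
wrong, aligned = misclustered(pred)
print(wrong + 1)   # [  8 121]: asset ids to reassign manually
```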

4.3. Performance evaluation

After the estimated model parameters are obtained, the operator must define a region in multidimensional space that encompasses the asset’s normal operations data. For statistical classifiers, this region is often defined based on a critical value of the probability density function (PDF), such that any point with a PDF value below the critical value lies outside the region and is deemed anomalous. The critical value corresponds to an $ \alpha $ significance level, which separates the most likely