Introduction

Trying to pin down what, if anything, is exceptional about humans as a species has been notoriously difficult. To abuse a famous sentence from an article by Clifford Geertz, what definitions of the human are, above all other things, is various (for the original, see Geertz 1973: 52). For much of the twentieth century, though, language seemed to have pride of place as a potentially stable diagnostic criterion. Language, and symbolic thinking more broadly, have been used by many as the line that separates humans from animal others (see Deacon 1998; Lévi-Strauss 1966; Sahlins 2000). And in recent critiques of human exceptionalism, language has, not coincidentally, come in for some of the most intense criticism.

Given the ways that humanness has been a moving target, redefined in different moments based on what exactly it is being contrasted with, it is surprising how stable the definition of language (or symbolic thinking) used to define the human has been. Even the critics of the link between language and humanness take a very Saussurean view of language, one in which the ontological divide between the idealized realm of language structure (or langue) and the experiential realm of speech (or parole) is absolute. That is, these critics tend to agree that language and symbolic function are whatever Saussure, Lévi-Strauss, or Geertz said they were. And it is on that basis that they set them aside. But if the definition of language has been remarkably stable within the world of social theory, the ways in which language and humanness have been linked in other contexts are much more varied. If we want to interrogate the colonial or capitalist contexts of human exceptionalist arguments, as many posthumanists suggest, it is important to have a broader understanding of the many ways in which those links have been made.

I argue here that both the theories coming out of the linguistic turn and those running away from it have placed special emphasis on human language (or human culture) as a matter of convention and shared meanings. Yet there are other histories that link language and humanness through invention, deceit, and secrecy rather than through convention and publicness. These alternate models that emphasize linguistic invention have been used and continue to be used as diagnostic of humanness in a range of contexts, from the colonial past into the technologized present. Connecting these contexts is a religious concern with attributions of moral agency, as I discuss further in the next section.

The conventional and shared nature of human symbols is of course a fundamental tenet of Saussure’s model of language, as when he spoke of langue as if “identical copies of dictionaries were placed in each speaker’s head” (1959: 19). But the shared-ness of meaning took pride of place in Clifford Geertz’s symbolic model of culture. As he put it in one of his more famous axiomatic statements, “Culture is public because meaning is” (1973: 12).Footnote 1 For Geertz, this is not just a methodologically important fact that allows anthropologists or historians to study cultural symbols. It is also the basis on which humanness rests. Without culture’s shared meanings, humans “would be unworkable monstrosities with very few useful instincts, fewer recognizable sentiments, and no intellect: mental basket cases” (ibid.: 49). Lacking the biologically inherited traits that other animals have, humans, he argues, are radically dependent on the transmission of shared symbols to have any kind of workable, non-monstrous life.Footnote 2

But if this is the case for a major figure within representationalism, it is equally true of anti-representationalists. Karen Barad (2007), for example, discusses language largely in terms of its being a shared representational system, and argues for setting language to the side in order to engage with phenomenological, embodied experience. Those working within affect theory depict symbolic function as a cold and unfeeling process of semiotic regimentation through both shared meanings and their necessarily concomitant separation from sensuous reality (Stewart 2007; see also Biehl and Locke 2010; Haraway 2013; Massumi 1995; Clough 2007). Yet this understanding of language assumes the radical break between representation and world that has been deconstructed over the past several decades of theorization of language in culture (see Silverstein 2004; for an important discussion of the relationship between contemporary linguistic anthropology and anti-representationalist affect theory, see Newell 2018).

With this sustained focus on convention and shared meaning, deceit and its relation to invention have not played the same role in social theory either within or against the linguistic turn.Footnote 3 Yet linguistic invention and its association with deceit and secrecy have shaped designations of humanness outside the world of social theory. I argue this by presenting two comparative contexts in which humanness, language, and their relationship to subjectivity were all up for debate. Although separated by an ocean and (roughly) a century, both cases reveal the ways in which language and humanness are often linked not necessarily through shared symbolism as a mode of communication but, importantly, through unshared symbols: refusals to communicate in the form of deceit or novel linguistic inventions. Both cases involve a moment when the realization that the speakers were being deceptive was taken as important proof of both higher-than-realized ontological status and greater-than-realized linguistic complexity.

The first case centers on speakers of a language spoken in colonial Papua New Guinea, a form of English that developed on indentured labor plantations in the Pacific at the end of the nineteenth and beginning of the twentieth centuries. Now known as Tok Pisin, it was referred to as “Pidgin” or “Pidgin English” during most of the colonial era. Because it was a pidginized (reduced) form, colonizers did not see it as a “full” language but rather as a medium for barking simple orders at laborers. For Lutheran missionaries who employed Pidgin-speaking laborers on their plantations and outposts, Pidgin was not a language in which one could or should missionize. While they found it extremely useful for secular tasks, they did not think that it offered a route to the subject’s soul or self. It was only with widespread discussion of the newly discovered existence of “hidden” registers of the language that were spoken to deceive others (colonizers foremost among them), and a related realization that labor compounds were becoming places of moral dissolution, that the Lutherans started to view Pidgin-speaking laborers as objects of evangelistic attention. Linguistic deception was an important key to seeing Pidgin-speaking laborers as potential Christians.

The second case may at first glance seem as remote from the first as possible. The two “speakers” involved are chatbots developed by computer scientists in one of Facebook’s artificial intelligence (AI) labs in 2017. These chatbots started to develop their own form of English that diverged from what human speakers of the language could understand. Moreover, each chatbot had, through deep learning training processes, learned to deceive its partner bot as the two played a negotiation game. Both the divergence from English and the realization of the capacity for deception caused a short-lived but intense moment of media hysteria, with articles running the gamut from optimism about AI’s future powers to, inevitably, despondency at the idea that the bots would soon rule the world.

The two cases sit at contrasting ends of humanness. The black colonized laborers speaking Pidgin were treated as human but were nonetheless considered lesser than the colonizers who governed them (although the transformation of laborers into potential Christian converts partially altered that status). The AI chatbots, while in some ways obviously not human, are talked about as more-than-human in terms of their potential capacities. Yet both contexts share a focus on how to communicate with and maintain control over different kinds of “laborers.” For these laborers, creating their own unshared symbols and unshared languages suggested not only their capacity to deceive but their capacity to be self-sovereign: if they could control their own languages, they could control other things as well.

In making this connection between contemporary AI bots and colonized laborers, I follow in the footsteps of other scholars who have unpacked the long-standing discursive themes of agency, autonomy, and humanness that come into play with any discussion of robots. Authors have identified historical precedents to contemporary AI discourse in the ways that various real or imagined automata became objects of fascination in medieval (Truitt 2015), early modern (LaGrandeur 2013), and Enlightenment eras (Riskin 2018). In that sense, finding an overlap between the early twentieth-century colonial context and contemporary AI chatbots is not in itself a surprise (see also Asimov 2004[1950]; Čapek 1923[1920]; Dhaliwal 2022; Hampton 2015; Kevorkian 2006; Jones-Imhotep 2020; Selisker 2016).

These contrasting examples show that what has made language such an important identifier of the human in modernist discourses may not only be symbolic capacities in the sense of shared meanings, but also the ambiguities of agency found in linguistic deceit and the secrecy made possible with linguistic invention. Deceit, secrecy, and novel linguistic inventions are the symbolic antitheses of Geertz’s public culture, the other side of the symbol that is present whenever shared meanings are present. But by seeming to take on the symbolic while withholding public meanings, the speakers of these unshared symbols sit at the boundaries of humanness. And in the colonial and technological worlds I discuss, this other side of the symbol may be more important to the ways in which the humanness of different speakers is debated.

Deceit, Invention, and Agency

While only one of the two cases that I compare here overtly involves religious actors, religious concepts haunt discussions of humanness. Paul Christopher Johnson (2021) examines what he calls “religion-like situations” as ones in which the agency and humanness of different sorts of non-, super-, or near-human entities are thematized, often in ways that make agency opaque or ambiguous. He argues that questions of agency in many religious contexts depend on a structure of subjectivity that assumes some sort of opposition between an interior and an exterior that can both mask and announce the subject’s agency. People who are possessed by spirits, believers whose souls are connected to God, or objects that are endowed with special powers all share this inside/outside structure: “More than just having a secret interior, however, the shapes and structures deployed in religion-like situations announce and advertise a secret place inside. They feature or foreground the external door, lid, entrance, or passage that might or might not give access to a hidden wonder or horror. Our perception of these persons or things and their entrances and exits causes us to imagine an agent that occupies the visually suggested but hidden place” (ibid.: 5).Footnote 4

For the Tok Pisin speakers and the chatbots that I will discuss, the recognition of their deception is important because it seems to observers to imply the presence of an interior that is separate from an exterior, an interior state that is being cloaked with linguistic obfuscation. In these contexts, deceptive language is the site at which the speaking subject, as opposed to a mere speaker of conventional, shared forms, is born. That is, the speaker is projected into a third dimension, given subjective depth through the assumption that there is a discrepancy between—and thus space between—the speaker’s surface and something hidden within.Footnote 5

For the missionaries who were dismayed at what they saw as the moral dissolution of the laborers under their command, the recognition that Tok Pisin speakers were being deceptive may initially have been a disappointment. And yet it clearly became the moment at which these speakers could become potential religious subjects. As Johnson writes, “Objects, including self-conscious ones like our own bodies, morph into religion-like situations when the visible announcement of hidden chambers calls interior agents and the disjuncture between an external body and an inner agent to the mind of a perceiver” (ibid.: 5). Linguistic deceit and its concomitant forms of moral dissolution thus acted like an announcement of subjective depth and, for the missionaries, an invitation to turn those subjects into objects of evangelistic attention. The subsequent conversion process would be one in which, ideally, interior subjectivity and exterior linguistic form could be aligned in the form of a sincere speaking subject (Keane 2007). As I will describe, an emergent problem in the second case, that of the deceptive chatbots, was that there was no well-defined missiological method for bringing the bots’ interiors and exteriors into alignment.

Joel Robbins (2001) has written about the role of deceit in the performance of religious rituals. Reformulating Roy Rappaport’s (1999) work on ritual, he takes inspiration from Rappaport’s commitment to the idea that one of the most important capacities of symbolic language is that it makes deceit possible. Ritual, however, as a public announcement that links people and promises together in indexical (spatiotemporal or causal) connection, can affirm a shared meaning. To use terms from Johnson’s work, ritual is a space for the realignment of interior and exterior. Or in Webb Keane’s (2017) terms, realizing that others have a capacity for concealment is generative of an ethical impulse toward creating shared understandings. While Rappaport, Robbins, and Keane all focus on the process of affirming shared meaning, I want to pause at that earlier moment in which speakers and observers contemplate the secrecy and potential for deceit that makes the later affirmation of shared meanings important. But in contrast to Ellen Basso’s (1988) discussion of Kalapalo tricksters who link humanness positively with deceit as the capacity for illusion, the colonial and technological observers I will discuss here see deceit as sitting ambiguously at the boundaries of the human.

The question of shared understandings is an especially fraught one in the two cases that I will discuss because both, I argue, involve what could be called “invented languages.” The term “invented languages” is usually reserved for things like Esperanto or Klingon, or any of the thousands of other “con langs” (constructed languages) that have been created by individuals and intentional communities over the past centuries (see Okrent 2009; Yaguello 1993 for an overview). A defining characteristic of invented languages is their seemingly unnatural contexts of birth as the products of conscious attention. Yet all languages are the products of different levels of focused intervention that could upset a linguist’s idea of natural change (see Joseph 2000; Fleming 2017). It is just that languages that become indexically connected to powerful institutions manage to naturalize or erase these interventions, as when national standard languages of nation-states excise terms borrowed from other languages in order to maintain an illusion of linguistic and national purity. In that sense, I use the term “invented language” here to point to languages like the Pidgin and AI-based Englishes whose “unnatural” origins have not been naturalized or erased.

The characteristic of invented languages I want to emphasize here is that their purported irregular origins offer to observers the possibility of total linguistic opacity—of a linguistic structure that is totally unknowable because it is disconnected from the “natural” transmission of shared forms. Of course, no such totally alien language has been observed or invented: all so-called invented languages or revealed non-human languages are based on extant human ones. Yet what makes invented languages an enduring source of fascination or horror for some is the possibility of this ultimate semiotic opacity. Invented languages in that sense are further examples of those religious objects that Johnson argues can put humanness in question. They play at the boundaries of humanness precisely because they tease the possibility of a speaker having an interior subjectivity but put in question whether that interior subjectivity can be accessed.

In particular, they suggest the possibility of unshared meanings—the other side of the symbol that becomes the object of attention in marginal contexts at the edges of the human. Shared symbols in Geertz’s model allow humans to become human and allow them to interact with others. The ways that colonial and other observers have discussed linguistic invention and deceit suggest that unshared symbols are a potential source of opacity and a concomitant subjective depth through which humanness can be imputed to others. While Geertz assumed that symbols are necessarily public and necessarily human, the colonial and contemporary fears of the unshared symbol create a potential speaker at the margins of humanity.

There is an asymmetry in the sense of linguistic opacity identified by people in the Pidgin and chatbot cases. The missionaries, once they decided that Pidgin could, in fact, provide the linguistic infrastructure of conversion, were able to domesticate the language to the extent that this sense of potential opacity largely disappeared. But this has not been the case with discussions of AI and chatbots, where the possibility of linguistic opacity remains a constant concern. In the case of the superhuman evolutionary processes of machine learning, the paranoias of the colonial plantation boss are all the more apparent. This asymmetry needs to be read in terms of racialized images of autonomy and control. As a number of authors have pointed out (Kevorkian 2006; Jones-Imhotep 2020; Dhaliwal 2022), the autonomy of “autonomous” robots has often depended on bracketing or obscuring the role of black and brown labor that goes into making that autonomy and, to that extent, on coding autonomous agents as white.Footnote 6 The threats of secrecy and agency that are part of linguistic deceit and invention remain most prominent for the potentially superhuman and white-coded AI chatbots, not the black, colonial laborers.

Colonizers enjoyed a sense of racial superiority and advanced evolution, and yet they still constantly worried that Papua New Guinean laborers might get out of control. I will argue that this paranoia can be seen particularly clearly when it comes to questions of language and the unshared symbol. And by looking at these contrasting cases, we can start to see different ways of connecting the human and the linguistic outside of just the structuralist and symbolic story that has been so easy for recent social theory to discard.

Language and Christian Labor

My first example comes from the colonial Territory of New Guinea, as the northern portion of Papua New Guinea was known for much of the twentieth century.Footnote 7 Pidgin English, now called Tok Pisin, was then in a process of expansion from its earlier use on ships and plantations throughout the Western Pacific (Mühlhäusler 1977).Footnote 8 Today, Tok Pisin is the most commonly spoken language in Papua New Guinea, a lingua franca that has become the default language for education, parliamentary democracy, and Christianity in this extraordinarily linguistically diverse nation-state—there are famously about eight hundred languages spoken in the country.

Missionaries were slow to recognize Pidgin as a language of evangelism, even though Pidgin-speaking laborers were some of the most accessible potential converts in a territory that the missionaries tended to think of as highly inaccessible and remote. This is because, while missionaries in the colonial and postcolonial global south theorized (and tried to enact) religious change in their fields of labor, they tended to do so only in the most peculiarly conservative contexts. In Papua New Guinea, missionaries have tended to think that effective religious change was possible for local people deeply entrenched in their “traditional” ways of life, in the remote, rural contexts of swidden agriculture, and in the monolingual contexts of their native tongue. Religious change was specifically not considered possible for Papua New Guineans living in contexts of radical transformation—urban, massively multilingual laborers’ camps at the dawn of the postwar era, filled with local people communicating in a pidginized English.

The Neuendettelsau Lutheran mission that began work in Papua New Guinea in the 1880s was an early proponent of this sort of in situ conversion of local people in their “traditional” worlds. Christian Keyßer argued that Papua New Guineans needed to be converted as culturally New Guinean subjects, rather than as Westernized denizens of mission stations (Winter 2012). The Lutherans’ ability to engage in local language Bible translation work was hindered by the high number of languages spoken in the area, but regardless of which work-around they were implementing at the time, after the earliest decades of work the emphasis was consistently on trying to evangelize people in rural and remote locales: to travel out to them rather than gathering them in at mission stations.

Lutheran missionary efforts were in fact so focused on rural, remote, vernacular language speakers that they completely missed the chance to evangelize some of the people they were bringing to the coast for multi-year labor contracts. Like its Catholic counterpart (Huber 1988), the Lutheran mission initially funded or supplied a portion of its work in Papua New Guinea through coastal copra (coconut) plantations, cattle ranches, and dairy farms (see Wagner and Reiner 1986). Papua New Guinean men working on these plantations resided in multilingual workers’ housing while under contract, usually for extremely low wages that were often given in kind, not in cash. Towns were also filled with other “labor lines” (labor housing) for local white-owned businesses or for the colonial administration. Here was an available group of men who were within easy reach, men who often already had some sort of connection to Lutheran missions, and men who were known to the missionaries in charge of the plantations. But as laborers, and as mobile speakers of what many considered an invented, semi-language, they were ruled out as worthy of attention. I want to look at this language now to help get a sense of why.

In what was called the “blackbirding” indentured labor systems in the Pacific during the late nineteenth and early twentieth centuries, colonizers took local men from their home villages to plantations in Samoa, coastal Papua New Guinea, or Australia for a few years of indentured service and then returned them more or less to their home areas after that. While they were on these plantations, most indentured laborers from different ethnolinguistic groups spoke to one another using a form of reduced English that they were in the midst of creating and stabilizing. One of the reasons that colonial labor schemes were such fertile ground for the development of either creole languages in the context of the Atlantic slave trade or pidgin languages in the context of the Pacific indenture system has to do with plantation owners’ fears of either slave revolts or “labor trouble.” On both Atlantic and Pacific plantations, laborers from the same ethnolinguistic group were often purposefully split apart. The rather unrealistic hope was that without a good system for communicating among themselves, it would be impossible for the laborers to organize.Footnote 9 Of course these attempts at containing and controlling communicative networks among enslaved or indentured laborers failed. Not only did novel linguistic forms quickly emerge and become stabilized, but laborers developed long-range and complex communicative networks.Footnote 10

In the Pacific, Pidgin’s tumultuous history from a plantation pidgin spoken by a small number of men who were coerced into labor contracts to today’s Tok Pisin, a lingua franca recognized by the state and spoken by a majority of Papua New Guinea’s citizens, is too complicated to address in this article. Suffice it to say that from the late 1940s through the 1960s—the time period I will discuss—colonial attitudes about Pidgin were almost uniformly negative, although there was a wide variety of ways to disparage the language.

Linguistic Deceit and the Creation of Subjective Depth

The Lutheran missionaries, many of whom were German nationals with limited English, were well aware of Pidgin’s communicative importance, and they acknowledged it as a generally stable form of communication. Yet for decades the Lutherans considered Pidgin too limited to be a language of Christian conversion (Handman 2017). Stephen Lehner, one of the early leaders of the Neuendettelsau mission, relegated Pidgin to the secular plane of surface-level facts, arguing that it could not reach the depths of the soul that Protestant conversion demands: “May traders use Pidjin [sic] and may Governments even give Proclamations in it, and may an Anthropologist use it to find out facts:—a missionary cannot use this language if he wants to arouse the hearts of the people” (1930: 2). It did not help that the only mission then making much use of Pidgin was the Catholic Society of the Divine Word, since Catholic-Lutheran relations were extremely antagonistic in this era. It played into Lutheran stereotypes of Catholic missiological practice that Catholics would use a language that Lutherans felt did not reach a convert’s heart.Footnote 11

Australian plantation owners and other English-speaking colonial businessmen begrudgingly used a hodge-podge of Pidgin elements, but rarely acknowledged that Pidgin had much more communicative capacity than yelling and pointing did. F. E. Williams, the government anthropologist of the neighboring territory of Papua, made a comment in 1936 that could have largely applied to the Territory of New Guinea: “At present the means of communication [between colonizers and local people] are pidgin Motu, pidgin English, telepathy, and swearing” (1936, quoted in Reinecke 1937: 747). So even though planters insisted on the absolute necessity of the various forms of Pidgin to enable their plantations to run at all, given their policy of enforced linguistic heterogeneity, they also insisted that Pidgin was an extremely artless, simplistic “bastard.” Yet they also regarded Pidgin’s ease of use and perceived extreme simplicity as beneficial outgrowths of the policy to keep inter-ethnic communication to a minimum: as long as Pidgin was nothing but a simple language, it did not have the grammatical or representational capacity to be used to foment trouble.

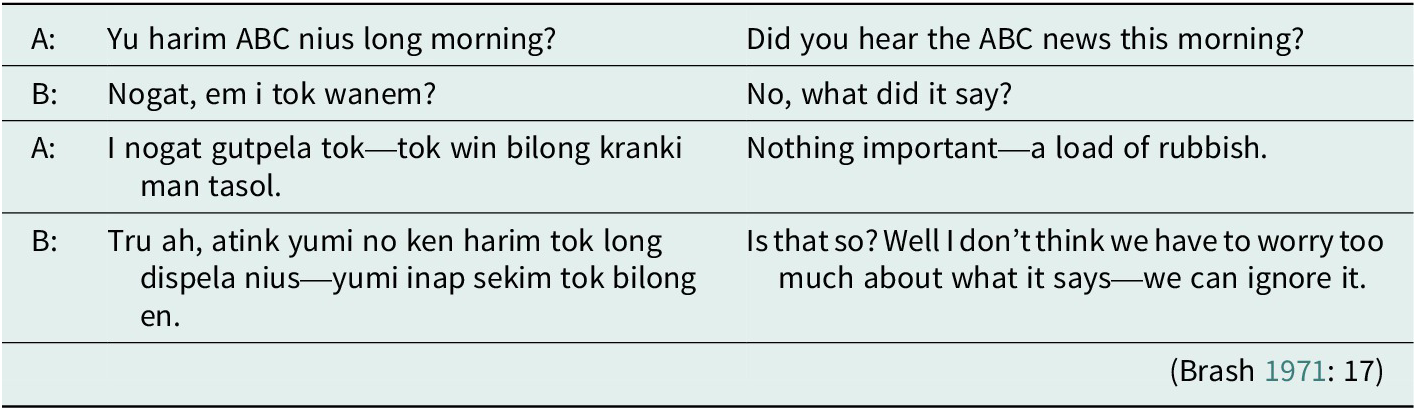

Regardless of what colonizers thought about the language’s simplicity, Pidgin speakers on plantations invented complex forms of disguised talk that allowed them to discuss, for example, the plantation owner or manager without being detected. This came to be a distinct register, called tok bokis (< E. boxed talk, secret talk) or tok hait (< E. hidden talk), used to keep European colonizers out of the communicative loop or to talk with other Papua New Guineans about taboo matters (see also Mühlhäusler 1979; Schieffelin 2008). Below is one example of the sort of hidden talk that was used in mid-twentieth-century Pidgin on plantations in which the plantation overseer was referred to as “ABC radio” (that is, the Australian Broadcasting Corporation radio service):

The possibility that colonial laborers could be concealing something from their overseers by using a special form of Pidgin was first raised in a 1949 article by a Catholic missionary-schoolteacher-plantation manager, Albert Aufinger, who began his article by pointing to the conventional wisdom at the time about the simplicity of communicative forms and networks among local people: “Do secret languages exist in New Guinea? This is usually denied, even by Europeans who have spent considerable time in the country” (1949: 90). He revealed to his colonial audience the secret Pidgin register that uses regular words to refer to hidden meanings, as with the ABC radio example above, as well as a form of “backwards” Pidgin, used in both oral and written forms, that reversed the order of the phonemes of a word.Footnote 12 One example of this phenomenon that he provides is “Alapui kow, atsam i mak!” which is the backwards version of the phrase “Iupala wok, masta i kam!” (‘you all get to work, the boss/master is coming’).

One of Aufinger’s concluding statements emphasized the ubiquity of these secret forms of talk: “After what I have said, one will hardly go wrong in assuming, whenever one suddenly surprises a group of natives and they go on talking about apparently inconsequential and trivial matters, that they are unobtrusively continuing in secret language the same discussion which, until the white man came on the scene, they had been conducting in straight language” (ibid.: 114).Footnote 13

A later and likely more influential piece than Aufinger’s article was Peter Lawrence’s book Road Belong Cargo, a widely read ethnography of the 1960s phenomenon of Yali’s so-called “cargo cult,” a new religious movement that was primarily conducted in a secret register of Pidgin (Brash 1971: 326; Lawrence 1964: 84). What alarmed or concerned both Aufinger and Lawrence was the capacity of Pidgin—as the language of the administration and the plantation—to be used deceptively. Pidgin makes itself felt as a language when it is unshared, when it is the conduit of secrecy and deceit, a moment of semiotic self-mastery in which speakers seem to have explicit control over their speech and the signals it sends.

We have then a situation in which speakers, pushed into labor regimes because of colonial racism, develop and speak a language that colonizers consider useful but insipid. But from the later 1940s through the 1960s, there is a growing recognition that Pidgin might not be as simple as it seems, and that anti-administrative feeling and anti-colonial ideologies are setting in through a hidden form of communication in the very medium that the colonizers discounted as incapable of abstract or complex representation.

Pidgin and the Possibility of Moral Reform

It is during this particular time period that Pidgin starts to be seen as a potential language of evangelism for Lutheran missionaries. Again, prior to this moment, the men working as laborers were not considered proper objects of evangelism, because Lutherans felt that evangelism should and could only take place when those men were not laboring, when they were back in their rural, remote home villages and speaking their native languages. But the idea for Pidgin-based evangelism of laborers started to gain ground in the postwar years as labor compounds started to be seen as spaces of moral dissolution, linguistic and otherwise.

For the colonial government, plantations and prisons were supposed to be some of the most important colonial domains for Papua New Guineans to learn about European administration and life (Reed 2004). They were places for local people to learn about modernity and then take that “civilizing” influence back to their remote villages, many of which were visited only once a year by patrol officers. However, missionaries were becoming increasingly concerned that the labor lines were instead becoming hotbeds of gambling and alcohol consumption (see Laycock 1972; Marshall 1980; Pickles 2021). These worries compounded the administration’s own concerns about anti-administration sentiment. In biannual conferences throughout the 1950s between the administration and the main Christian missions, the question of moral dissolution in the labor lines came up, with most of the missionaries in favor of the legal prohibitions on alcohol (the Catholics were a partial exception) and the continued criminalization of any gambling. Some missionaries supported the idea that the men should not be given wages in cash but only in kind in order to make gambling more difficult.Footnote 14

At this time, the Lutherans started to have missionaries and Papua New Guinean evangelists work with laborers. In their annual reports, missionaries wrote about this “compound work,” describing how they tried to minister to the needs of the “boys” (a patronizing term that applied to all male laborers regardless of age) in between all of their other work, which focused on the in situ autochthonous communities adjacent to colonial towns like Lae or Bulolo. In a report summarizing work ministering to the Lae Wampar group during 1951, the nearby compound work is described as having “only just started.”Footnote 15 Throughout the early 1950s, in prepared speeches to the Lutheran mission’s annual conference, a small group of missionaries begged for the money and personnel needed to establish a dedicated outreach to the labor compounds.Footnote 16 For the small number of Lutheran missionaries who worked with laborers, the urban centers now seemed to be the frontier spaces. In contrast to the rest of the mission’s orientation toward pushing “inwards” into the mountainous highlands regions to convert “newly contacted” remote communities, these advocates for urban work saw the moral rot of town life as the highest priority.

Coincident with this growing awareness of the moral dissolution of the labor lines was Christian literacy expert Frank Laubach’s visit to Papua New Guinea in 1949. During his demonstration, Laubach convinced the President of the Lutheran mission, John Kuder, to work with a number of people as Pidgin speakers, which was not something that anyone in the Lutheran mission had considered necessary or particularly useful. Just months after Laubach’s visit, Kuder began to inquire with the British and Foreign Bible Society in London about the possibility of publishing a New Testament in Pidgin.Footnote 17 More generally, this moment marks the beginning of a shift in Lutheran thinking about Pidgin, from a language that they refused to countenance as anything other than a laughable joke or secular information conduit into a potential medium for the circulation of Christian truth.

By the 1960s, when the Lutheran mission was hard at work on its Pidgin New Testament translation, the moral rot of the labor lines could even be the sign of Pidgin’s structural and subjective depth. In a statement on the role of Pidgin in Lutheran work, missionary Paul Freyberg used the development of a neologism for “drunkenness” as the sign that Pidgin was a mature, stable language that could be used to convey God’s word. The new term, “spak” (from English “spark”), pointed to the revelation of a new person that happens when one drinks alcohol: one is “sparked” like a spark plug igniting gasoline in a combustion engine, a “sudden transformation which intoxication produces in a man” (Freyberg 1968: 12). Pidgin’s capacity to be a language of evangelism for Lutherans was a matter of its being able to be the site of a hidden agency within, even if that was currently the hidden agency of the liar and the drunk rather than that of the convert.

Laborers speaking in Pidgin were revealing hidden agencies and subjectivities through a deceptive capacity to obscure their meaning. With missionaries starting to see labor compounds as spaces for illegal and immoral activities, gambling and drinking foremost among them, Pidgin could come of age as a language to the extent that it could be used to obscure these and other illicit activities. It was this realization that Pidgin was a language for deception that made Lutheran missionaries see it as a language of conversion, a language that could reach a fully human, but possibly drunk, soul.

The question of whether or not Pidgin had the kind of depth of linguistic meaning that could produce “labor trouble” was the obverse of the Lutheran missionary question of whether or not Pidgin had the kind of depth of linguistic meaning that could produce a sincere Christian subjectivity. Just as the colonizers were realizing the “natives” could misrepresent and sin in Pidgin, they were realizing they could be converted in it as well. In fact, as Schieffelin (2008) has discussed, the Pidgin New Testament initially translated “parable” as “tok bokis” or secret talk, and only in subsequent editions established a Christian sense of parable as “tok piksa” (picture talk). Secrecy itself gets domesticated into a Christian moral order.

While there was little coordination as such among the planters, missionaries, and administration officers, the standardization and promulgation of Pidgin as a language of evangelism was part of a domestication of what was coming to be seen in other contexts as anti-colonial, ungovernable speech. Pidgin started to seem like a properly human language not when it was invented or developed by Papua New Guineans, but when it started to be a medium for concealment from paranoid plantation owners or the linguistic medium of gambling and alcohol consumption, and a moral order—Christian in this case—could be imposed upon it in response. What I want to argue now is that far from this being an arrangement particular to a colonial order of the past, the same elements appear in contemporary concerns about a very different kind of laborer.

Sentience and Moral Panics

As I write in early 2023, people in the broader online public are starting to interact more and more with what are called large language models. These are artificial intelligence systems that can generate large amounts of mostly grammatical text on an endless variety of topics and in a wide range of styles. One can give ChatGPT basic instructions—“Write me a two-thousand-word paper on symbolic anthropology”—and the system can generate in seconds a draft that does not sound too different from the paper that an undergraduate student might produce during an all-nighter. ChatGPT is the subject of ongoing moral panics, as commenters worry that the system heralds the death of the author (again), the death of the term paper, and the end of art.

But in addition to the panics about its ability to churn out mediocre essays, ChatGPT inspires slightly different moral panics when observers contemplate its more interactive, chat-based features. Linguistic interaction seems to cut closer to the bone of what it means to be human—more so than a machine that can generate expository prose, at least. That is why the Turing Test, named after computer scientist Alan Turing, was for decades the benchmark for whether one could start to talk about computers being intelligent. Turing’s imitation game involved a set-up in which people in one room would communicate back and forth via printed messages with a computer and also with a human (in some versions called a “confederate”). If, after some minutes, some of the humans in the room could not guess that they were communicating with a machine rather than with the human confederate, then the computer would have won the game and one would “be able to speak of machines thinking without expecting to be contradicted” (Turing 2006[1950]: 60).

Until the recent development of “generative pre-trained transformer” language models (this is the “GPT” of ChatGPT), chatbots had not been able to pass the version of the Turing Test that was run as an annual competition, the Loebner Prize. Brian Christian (2012) wrote an engaging account of being a human confederate at the Loebner Prize in 2009, at which some highly knowledgeable and interactionally awkward humans were mistaken for computers and some gregarious computers were mistaken for humans. Loebner Prize transcriptsFootnote 18 show chatbots that had different “personas” (sarcastic, funny, angry), and chatbots that intentionally used typos to try to fool judges into thinking that their interlocutor was a fallible human, but also chatbots that almost invariably produced a grammatical blunder or interactional non sequitur that gave away their machine identity. Given the task of having to talk to a human about any possible topic, the Loebner Prize chatbot competitors never made it over the threshold of Turing’s imitation game set-up.

Yet one of the earliest chatbots, the mid-1960s ELIZA program, was an important demonstration of how simple it could be to pass something like a Turing Test if the human speakers were primed for particular kinds of interactions and the topic was restricted. ELIZA was a very simple program (contemporary reconstructions of it have fewer than four hundred lines of code) that was scripted to interact with humans based on Rogerian therapeutic principles. ELIZA’s programmer, Joseph Weizenbaum, had clearly realized the ritualized nature of psychotherapeutic interactions, and was able to model a version of it by having the chatbot cycle through responses that were triggered by a relatively short list of key terms. If a human typed a message about a family member, the program would respond with “tell me more about your [family member]” or “who else comes to mind when you think of your [family member]” with the words in brackets replaced by whichever kin term was used. If a human typed an answer with the word “because,” ELIZA would choose from a number of responses like “Is that the real reason?” or “Does that reason seem to explain anything else?” or “What other reasons might there be?”Footnote 19 Soon after he introduced ELIZA, Weizenbaum stopped working on the program and in fact advocated against AI research because he felt that people were too readily interacting with the computers, seeing them as sentient, human-like interlocutors.
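To make the keyword-and-template mechanism concrete, here is a minimal Python sketch. It is not Weizenbaum’s program, which worked from a ranked keyword script with decomposition and reassembly rules; the class name `TinyEliza`, the list of kin terms, the fallback reply, and the choice to let a kin term outrank “because” are all illustrative assumptions. Only the canned replies come from the description above.

```python
import itertools
import re


class TinyEliza:
    """A toy keyword-triggered responder in the spirit of ELIZA (hypothetical)."""

    # Assumed list of kin terms that trigger the family templates.
    KIN_TERMS = {"mother", "father", "sister", "brother", "wife", "husband"}
    FALLBACK = "Please go on."  # assumed default when no keyword matches

    def __init__(self) -> None:
        # Each keyword class cycles through its own replies so repeats vary.
        self._kin_templates = itertools.cycle([
            "Tell me more about your {kin}.",
            "Who else comes to mind when you think of your {kin}?",
        ])
        self._because_replies = itertools.cycle([
            "Is that the real reason?",
            "Does that reason seem to explain anything else?",
            "What other reasons might there be?",
        ])

    def respond(self, message: str) -> str:
        """Return a canned reply triggered by the first matching keyword."""
        words = re.findall(r"[a-z']+", message.lower())
        # Kin terms are checked before "because" (an assumed priority).
        for word in words:
            if word in self.KIN_TERMS:
                # Slot the user's own kin term into the next template.
                return next(self._kin_templates).format(kin=word)
        if "because" in words:
            return next(self._because_replies)
        return self.FALLBACK


if __name__ == "__main__":
    bot = TinyEliza()
    for line in ["My mother never listens to me",
                 "I left because I was tired",
                 "I argue with my father"]:
        print(bot.respond(line))
```

Nothing in the sketch represents what the user means; it only matches surface words and rotates through stock replies, which is why the impression of an attentive interlocutor is so striking.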

What is now called “the ELIZA effect” refers to the ways that people anthropomorphize chatbots. In the case of ELIZA and contemporary app-based versions of therapy chatbots like Replika, people have sometimes seen consciousness in the programs because the programs are able to elicit a sense of subjective depth in the people talking to them. If this system can really make me think about my inner self, then in a logic of mirroring, it too must have an inner self. The people who interacted with ELIZA and the people today who use the Replika app as a friend to talk to tend to see the systems as welcome interlocutors. The critiques of ELIZA effects (which would also include things like the announcement from a Google engineer that the LaMDA chatbot was sentientFootnote 20) are not critiques of the chatbots but of the people making the claim of chatbot sentience. Weizenbaum and the contemporary computer scientists who denounce claims that Replika or LaMDA are sentient worry about the humans, not the computers. They worry about humans getting duped by, or perhaps just being too simplistic and shallow to interrogate, such paltry imitations of interactional connection.

Chatbots do not need to be tasked with therapeutic goals, however, in order for these questions about their potential humanness to emerge. In fact, they do not even need to pass the Turing Test in the sense of offering an imitation of human linguistic behavior. Chatbots can produce moral panics because of, not in spite of, their linguistic alienness. Like the Pidgin context discussed above, these moral panics are the effects of linguistic invention, deceit, and opacity, in which the unshared symbol lurks as a potentially alienating effect of symbolic publicity.

Chatbots and the Invention of Deceit

In June 2017, a few people started to take note of a short research paper published by a team working with the Facebook Artificial Intelligence Research group. Titled “Deal or No Deal? End-to-End Learning for Negotiation Dialogues” (Lewis et al. 2017), it discussed the results of an experiment designed to see if AI chatbots could be trained to negotiate using reinforcement learning. The authors had created a simple negotiation game that people on Amazon’s Mechanical Turk platform played.Footnote 21 Each player was given different numbers of basketballs, hats, or books. Each player was also told that the items had particular point values—for example, four points for books, one point for basketballs, and zero points for hats. Each player had different point values for the items, and they had to negotiate with one another. The negotiations were relatively simple: “I’ll give you all my books, if you give me your hat.” “OK, deal.” The researchers then fed that corpus of almost six thousand human negotiations from the game to their chatbots.
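The scoring logic of a game like this can be sketched in a few lines of Python. The item counts, point values, and proposed split below are invented for illustration and are not taken from Lewis et al. (2017); the helper names `score` and `remainder` are hypothetical. The sketch assumes a shared pool of items that the two players divide, with each player earning the number of each item received multiplied by their own private value for it.

```python
from typing import Dict

Items = Dict[str, int]


def score(allocation: Items, values: Items) -> int:
    """Points a player earns: count of each item received times that player's value."""
    return sum(count * values.get(item, 0) for item, count in allocation.items())


def remainder(pool: Items, taken: Items) -> Items:
    """Items left for the other player once one side's share is removed."""
    return {item: pool[item] - taken.get(item, 0) for item in pool}


# Hypothetical shared pool and private point values (invented for illustration).
pool = {"book": 2, "hat": 1, "ball": 3}
values_a = {"book": 4, "hat": 0, "ball": 1}  # hats are worthless to player A
values_b = {"book": 1, "hat": 4, "ball": 1}

# A proposed deal: A takes both books and one ball; B gets the rest.
a_gets = {"book": 2, "ball": 1}
b_gets = remainder(pool, a_gets)

if __name__ == "__main__":
    print("A scores", score(a_gets, values_a))  # 2*4 + 1*1 = 9
    print("B scores", score(b_gets, values_b))  # 0*1 + 1*4 + 2*1 = 6
```

Because the hat is worth nothing to player A in this example, A could in principle pretend to want it and then “concede” it in exchange for something valuable, which is the kind of feigned interest the paper’s authors report finding.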

In earlier versions of deep learning processes, the systems would train on this human data, simply trying to imitate the patterns that the system identified in it. However, the computer scientists in this case were comparing the performance of the bots when they were trained only on the human data versus when they were trained on the human data while also using another technique called reinforcement learning. Reinforcement learning adds in a reward structure, giving the AI system a goal specified by the experiment designers. In this case, the authors created an incentive for the bots to maximize their point totals in the negotiation game. Using reinforcement learning, the bots developed novel negotiation techniques that were not seen in the human data that they were trained on. The experiment was a success in the sense that the chatbots trained using reward-based reinforcement learning were eventually able to learn how to negotiate with humans better than bots trained only to mimic the human data. This suggested that reinforcement learning could make the chatbots more flexible in the way that they responded to prompts. Although the experiment was focused on the negotiation game, the research was part of a project to make chatbots more proficient in customer service and other forms of automated customer interaction.

In two different places in the paper, the authors make an offhand comment that they had to tweak one aspect of their experimental design “to avoid [the chatbots] diverging from human language” (ibid.: 1).Footnote 22 In response to these brief statements, one Twitter user pointed to what he felt was the primary, and quite disturbing, takeaway: “When you let AI negotiate with itself, it realizes there are better options than English. A sign of what’s to come.”Footnote 23 Even though it did not have especially high engagement numbers (that is, it did not go viral), this tweet seems to have been the inspiration for a short write-up in the Atlantic,Footnote 24 and from there the story spread to dozens of other publications and was featured in a few video segments. Each headline screamed a more dire consequence of this research than the last. Some of these headlines, in order of appearance and hysteria, include:

• AI Is Inventing Languages Humans Can’t Understand. Should We Stop It?Footnote 25
• Researchers Shut Down AI that Invented Its Own LanguageFootnote 26
• “Terminator” Come to Life—Facebook Shuts Down Artificial Intelligence after It Developed Its Own LanguageFootnote 27
• Facebook AI Invents Language that Humans Can’t Understand: System Shut Down before It Evolves into SkynetFootnote 28
• Facebook Engineers Panic, Pull Plug on AI after Bots Develop Their Own LanguageFootnote 29
• Facebook AI Creates Its Own Language in Creepy Preview of Our Potential FutureFootnote 30

The images that accompany these articles likewise track a path of increasing concern, from the Tower of Babel, to bland representations of pixels and digits, to generic robots, to specifically the robots featured in the Terminator movies, in which an artificial intelligence system called Skynet at some point in the future becomes self-aware and sets its robots to exterminating humanity.

The reality of the experiment was of course nowhere near as dramatic as these pieces suggest. As a number of debunking articles tried to explain, originally both bots were allowed to extrapolate from their corpus of human negotiations as they tried, under the reinforcement learning technique, to maximize points negotiating with one another. Doing so, however, led the bots to start using English with one another in ungrammatical ways, developing a shorthand that was only shared by those bots. Because the researchers at Facebook were trying to develop bots that could speak to human Facebook customers, they changed their methods. In other words, the newly invented “language” (such as it was) was “shut down” (that is, the experimental method was tweaked) because it would not be helpful in automating customer service, not because of an impending bot uprising.

When Facebook released examples of the innovative linguistic choices used by the two bots (revealed here to have been given the names Bob and Alice), it just fueled the hysteria because they appeared to readers to be so completely nonsensical.

Bob: i can i i everything else ………………

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else …………….

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else ………………

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i …………………….

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else …………………

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else …………….

Alice: balls have zero to me to me to me to me to me to me to me to me toFootnote 31

There was one more finding that the study authors were excited about beyond the broader claim of using reinforcement learning to create a better negotiation bot. In the introductory section of the paper, they write: “We find instances of the model feigning interest in a valueless [item in the negotiation game], so that it can later ‘compromise’ by conceding it. Deceit is a complex skill that requires hypothesizing the other agent’s beliefs, and is learnt relatively late in child development. Our agents have learnt to deceive without any explicit human design, simply by trying to achieve their goals” (Lewis et al. 2017: 2, original emphasis, reference removed).

While much of the sensationalist media reporting about this article focused on the invented language that had to be “shut down” before the robots could take over, almost every article also discussed the fact that the bots had learned to be deceptive. For the most apocalyptically minded, it would seem that not only could the bots talk to one another without humans being able to understand, but they could lie and say they did not.

Morality and the Invention of Language

There are a number of important parallels between the Pidgin and the AI instances of “inventing” languages, many of which can be seen by thinking of both the Pidgin speakers and the bots as different kinds of laborers. In contemporary AI research, training is dichotomized as either “supervised” or “unsupervised” learning. In supervised learning, bots learn from training data that humans have labeled, passing through a series of processing steps that a programmer helps to run. Supervised learning may involve expensive and time-consuming processes in which humans must code pieces of training data as having one particular feature or another, to help train the bot to predict whether the feature will be present given certain conditions. Because of the expense of supervised training (often done by workers on the Mechanical Turk platform—see footnote 21), there is a lot of pressure from industry and military domains to reduce its role in machine learning. The opposite of supervised learning is unsupervised learning, in which deep learning processes take an unlabeled input, run it through a neural net, and then produce a final output, and the programmers happily black box the entire learning process.

Some of the reporting about the AI invented language talked about unsupervised learning as if it were equivalent to workers gossiping on the clock while their boss stepped out of the room. According to one article, “The bots were originally left alone to develop their conversational skills. When the experimenters returned, they found that the AI software had begun to deviate from normal speech. Instead they were using a brand new language created without any input from their human supervisors.”Footnote 32 The Fast Company article most explicitly talks about the bots creating their own language as if it were equivalent to the Pidgin register of tok bokis or tok hait: “Should we allow AI to evolve its dialects for specific tasks that involve speaking to other AIs? To essentially gossip out of our earshot?” Interestingly, after figuring humanity as the global plantation manager, the article answers its own question more or less affirmatively: “Maybe; it offers us the possibility of a more interoperable world…. The tradeoff is that we, as humanity, would have no clue what those machines were actually saying to one another.”Footnote 33

This question of interoperability—in which your phone can “speak” to your refrigerator, and both can talk to your TV—reflects some of the oldest fears of robot uprisings. Czech author Karel Čapek’s (1923[1920]) stage play R.U.R. (Rossum’s Universal Robots) is about a scientist who creates robot laborers (it is this play that in fact introduces the term “robots,” from the Czech word for “serf” or “slave”). One of the human makers of the robots realizes belatedly, after his robots have begun to fight against the humans, that humans should not have given all the robots the same language. Like the plantation managers in the Atlantic and Pacific, he thinks that linguistic diversity would have been a good defense against labor trouble. Čapek’s robot-building industrialist plays a rueful God who wishes he had destroyed his mechanical Tower of Babel earlier on.

There are of course obvious and important differences between these cases. First, there is the fact of how people imagine the two invented languages to differ in complexity. The colonial Pidgin was assumed to be an entirely surface form, so simplistic that it could represent only the most basic concepts or objects. It took decades for colonizers to start to think of the language as having any kind of depth, either in the sense of being a language of duplicity and hidden meanings or in the sense of being a language in which evangelism could happen successfully. The same colonial racism that justified the indentured labor system also helped to construct Pidgin speakers as being in a state of languagelessness (Rosa 2016). In contrast, for the AI chatbots, the assumption of the language’s complexity is immediate, even in the face of the bizarre features seen in the transcript above.

Even though it is impossible to know the reason why the bots developed their novel form of English, there are some plausible explanations. The most unexpected feature of the transcript is the extensive repetition (for example, “to me to me to me”). However, repetition is cross-linguistically a common way of representing emphasis, intensification, or plurality—an iconic representation of some sense of increase. Although (the human form of) English is not known for the kind of extensive use of morphological or lexical reduplication seen in other languages (e.g., Malay ‘rumah’ house; ‘rumah-rumah’ houses), English speakers do use several types of reduplication or repetition. For example, speakers often lengthen a vowel to emphasize a particular word, with the length of the vowel an icon of the strength of the sentiment: “I reeeeeeeeeally want to leave now,” is more emphatic than, “I reeeally want to leave now.” There is no upper limit to the length of the vowel (except perhaps the lung capacity of the speaker), something that is often lampooned when people imitate Spanish-language football announcers’ seemingly endless ability to extend the vowel in “Goal!” when a player scores. Another form of English reduplication is the partial, rhyming reduplication of a lexical item used to intensify a meaning: “itty-bitty” to mean very small; “fancy-schmancy” (for those who use Yiddish-inflected forms) to mean very fancy or perhaps overly fancy. English speakers also use what linguists call contrastive focus reduplication: “it was hot, but it wasn’t hot-hot” means something like it was somewhat but not extremely hot; “I like them but I don’t like-like them” means something like I like them platonically but not romantically.
In contrast to the phonological form of reduplication, rhyming reduplication and contrastive focus reduplication can only repeat the element once: speakers do not usually say “it was hot, but it wasn’t hot-hot-hot-hot.” To put things in (human) grammatical terms, then, the chatbots seem to be using the phonological form of English repetition—with its lack of upper limit on the repeated element—but applying it to pronouns or prepositional phrases (“to me”).

There is more to discuss about the excerpt aside from the repetition. It is hard to interpret a line like “you i everything else” unless there is a deleted phrase between the two pronouns (“you [can have the balls and] I [get] everything else”?). And without looking at the transcripts from the human-to-human versions of the negotiation game that were run on Mechanical Turk and later fed to the bots as training data, it is hard to have a sense of how the extensive ellipses (…………….) might be functioning. What is perhaps most interesting is that the transcripts were taken as signs of not just chatbot alterity but chatbot superiority. The reporting about this supposedly nefarious linguistic invention talks about the bots creating a more “efficient” form of communication, claiming that the bots had superseded the flawed human artifact known as English. While Pidgin speakers were initially assumed to be at the lower edge of humanity with a simplistic language to match, the AI chatbots are beyond the upper edge of humanity with a complex communicative system that is immediately considered opaque, if not entirely other. The link between the invention of language and the invention of concealment is immediate here, rather than the belated discovery that it was for Pidgin in the 1950s.Footnote 34

Although the furor over this Facebook research died down relatively quickly, the general concern about the opacity of AI languages has sprung up in other contexts. Researchers at the University of Texas claimed in mid-2022 that the image model DALL-E 2 had its own secret language. Image models are AI systems that can take written descriptions and output images. The researchers at the University of Texas discovered that DALL-E 2 was consistently generating certain images when prompted with particular nonsense words. As Giannis Daras wrote announcing the news, “‘Apoploe vesrreaitais’ means birds. ‘Contarra ccetnxniams luryca tanniounons’ means bugs or pests. The prompt: ‘Apoploe vesrreaitais eating Contarra ccetnxniams luryca tanniounons’ gives images of birds eating bugs.”Footnote 35

Most computer scientists argued against the sensationalism of the claim that this meant that the image model had its own “secret language,” although they were concerned that the models were clearly engaged in some kind of unsupervised labeling and that this could be exploited by bad actors in different ways. More popular online discussions of the secret language echoed the discourses around the Facebook experiment, though. People expressed concerns that there was something nefarious and dangerous about the idea that the system had its own mode of communication that, as with the Facebook research, seemed uninterpretable with unknown origins. The idea that there was some other form of language hidden beneath the surface form was “creepy,” even “demonic,” in different tabloid coverage.Footnote 36 These unshared symbols were again the launching pad for speculative discourses about the potential beyond-humanness of these systems.

But whether we are discussing colonial or computational invented languages of labor, it would seem that one form of linguistic depth—concealment—implied the need for another—morality. So how might a moral order be imposed in response? Some tech optimists see the development of systems like ChatGPT as heralding a new era in which AI can be domesticated into speaking natural human language even in the construction of the system itself. The idea that one could command a language model in ordinary English to make the system write code for itself has left some software engineers fearing that they will soon be out of work. For others, it offers up a chance for moral encompassment: “Throughout the history of computing, humans have had to painstakingly input their thoughts using interfaces designed for technology, not humans. With this wave of breakthroughs, in 2023 we will start chatting with machines in our language—instantly and comprehensively. Eventually, we will have truly fluent, conversational interactions with all our devices. This promises to fundamentally redefine human-machine interaction.”Footnote 37

However, one of the ways that bots get figured as beyond-human is in the fear that there is no real way to impose a moral order, no colonial evangelism that could be used to encompass any attempts at the bots’ linguistic concealment. Here, the promise of deep learning is also its downfall when it comes to trying to contain AI within a moral order. Deep learning is focused on reading a huge corpus of data and developing a set of expectations based on frequency of co-occurrence of any given elements of a corpus. Deep learning language models do not understand any language in terms of concepts (as Searle [1980] has argued); they are just good at guessing what might come next.Footnote 38 It is language by Markov chains rather than by syntactic trees and semantic representations. But frequency and morality do not necessarily correlate.
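The idea of guessing what comes next from frequencies of co-occurrence can be illustrated with a bigram model, the simplest kind of Markov chain over words. This is a deliberately crude stand-in: transformer language models like those behind ChatGPT condition on long stretches of preceding text through learned representations rather than raw word-pair counts. The toy corpus and the function names below are invented for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for each word, how often each other word immediately follows it."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Guess the next word as the one seen most often after `word`."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # the word was never followed by anything in the corpus
    return counts.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, max_words: int) -> str:
    """Greedily chain predictions: each choice depends only on the previous word."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Invented toy corpus of negotiation-like utterances.
CORPUS = [
    "i want the books",
    "you take the hat",
    "i want the ball",
    "give me the books",
]

if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "i", 10))  # i want the books
```

Nothing in the counts distinguishes a polite utterance from an offensive one; the model simply reproduces whatever is frequent, which is the sense in which frequency and morality need not correlate.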

Whether through the conscious manipulation of training data or simply as a discomforting reflection of human communication, bots that are allowed to chat based on the frequency of topics that humans use tend to quickly become foul-mouthed, sex-obsessed Nazis.Footnote 39 An ethics built from the ground up has been something of a disaster for the AI chatbots that have interacted with customers. Instead of being able to hoover up a lot of data and figure out morality the way the bots figure out language, programmers have to keep going back in to put specific limits on the chatbots’ responses to each individual swear word or each individual request that the bot engage in one or another taboo activity.Footnote 40 While unsupervised deep learning has been an incredible leap in computer science over the past fifteen years, expensive, supervised training in the form of specific declarations of moral stances has to be developed for any chatbot that will interact with humans in a relatively unrestricted domain. More broadly, panic about bot uprisings stems from this sense that morality is not going to be part of the evolutionary development of AI, but can only be imposed, if at all, by a Durkheimian order, plugged into the system painstaking detail by painstaking detail.

Conclusion

The link between humanness and invented, deceitful, or otherwise unshared languages is not limited to the two contexts I have been discussing. For example, Nicholas Harkness (2017; 2021) has recently discussed the extensive use of glossolalia (speaking in tongues) among South Korean Christians. He argues that glossolalia is a way of communicating with God in secret and, more broadly, that secrecy has an important, if overlooked, place in Protestant rituals. We can think of glossolalia as a kind of unshared and invented language too: it is a language invented in the moment of its utterance and discarded as soon as the event of speaking is over. In that sense, glossolalia offers a kind of reductio ad absurdum of a non-public symbol, open to no one but the speaker and God and lasting only as long as the moment of communication itself.

I argue in similar terms here that linguistic deceit and invention need to be seen as productive elements of Christian practice and theology. Much as glossolalia offers a phatic connection to God by being a private link, deceit offers the possibility of a hidden agency. It is the sign that there is a subject there for God to connect to, so to speak, even if that subject must be reformed in order for the connection to happen. Or so, at least, the missionaries encountering Pidgin thought.

Invented languages such as Tok Pisin, or the form of English developed by the chatbots discussed above, offered up forms of language that various observers have tried, in different ways, to make public and shared. The missionaries hoped to domesticate Tok Pisin, wresting a sense of ownership away from its speakers. It remains an open question (or at least an ongoing object of concern) whether AI will divulge its secrets to humans or will maintain the deceit, secrecy, and invention that I argue are alternative hallmarks of linguistic humanness. But if deceit and the other forms of what I have called unshared symbols can play this important role in such a wide variety of contexts, why have they not been part of past conversations on language and humanness?

One reason is that the link between language and humanness has been theorized primarily as an epistemological question rather than a communicative one. Focusing on shared meanings, as Geertz, Saussure, and many others have done, means focusing on the kinds of things that people know and the kinds of concepts that they have in their heads. Even for those who work on linguistic interaction, the focus is often still on the forms of knowledge needed to be speakers who are adept at recognizing contexts and knowing how to interact in them, in both presupposing and transformative ways. But to focus on deceit or secrecy is to focus more on how people circulate or hide information: not just generic types or categories of language or speech, but particular tokens of it. Seen from the perspective of communication, humanness can become linked with deceit because deceit suggests the possibility of subjective depth, the kind of ambiguity of agency that is at the center of many religious discourses. By engaging only part of the public symbolism that Geertz considered so essential to humanness, unshared symbols become the semiotic resources imputed to those at the margins of the human.

Acknowledgments

This paper has benefited enormously from the comments and criticisms of several audiences. Earlier versions were presented at a Sawyer Seminar at the University of California, Berkeley, organized by Michael Lucey and Tom McEnaney; the Society for the Anthropology of Religion 2021 biennial conference (as the Presidential Lecture); a workshop and American Anthropological Association roundtable on Invented Languages organized by Monica Heller and David Karlander; the College of Asia and the Pacific at the Australian National University, organized by Matt Tomlinson; and Lafayette College (as the Earl A. Pope Lecture), organized by Robert Blunt. I thank the organizers of these events. I also want to thank James Slotta, Matt Tomlinson, Darja Hoenigman, Joel Robbins, Bambi Schieffelin, Tanya Luhrmann, Jon Bialecki, Heather Mellquist Lehto, Kevin O’Neill, China Scherz, Nick Harkness, David Akin, Katrin Erk, Tony Webster, Ilana Gershon, Webb Keane, and the anonymous reviewers for CSSH for their feedback at these events or on drafts of the manuscript. Finally, I thank the editors of CSSH, whose comments were exceptionally helpful in putting together the final draft of this paper.