Our Dead Are Never Dead To Us,

Until We Have Forgotten Them

At the heart of modern conceptions of biomedicine sits a core narrative of ‘progress’, one in which profound scientific breakthroughs from the nineteenth century onwards have cumulatively and fundamentally transformed the individual life course for many patients in the global community. Whilst there remain healthcare inequalities around the world, science has endeavoured to make medical breakthroughs for everybody. Thus for many commentators it has been vital to focus on the ends – the preservation or extension of life and the reduction of human suffering emerging out of new therapeutic regimes – and to accept that the accumulation of past practice cannot be judged against the yardstick of the most modern ethical values. Indeed, scientists, doctors and others in the medical field have consistently tried hard to follow ethical practices even when the law was loose or unfocussed and public opinion was supportive of an ends rather than means approach. Unsystematic instances of poor practice in research and clinical engagement thus had (and have) less contemporary meaning than larger systemic questions of social and political inequalities for the living, related abuses of power by states and corporate entities in the global economy, and the suffering wrought by cancer, degenerative conditions and antibiotic resistant diseases. Perhaps unsurprisingly given how many patients were healed, there has been a tendency in recent laboratory studies of the history of forensic science, pathology and transplant surgery, to clean up, smooth over and thus harmonise the medical past.1 Yet, these processes of ‘progress’ have also often been punctuated by scandals (historical and current) about medical experimentation, failed drug therapies, rogue doctors and scientists and misuse of human research material.2 In this broad context, while the living do have a place in the story of ‘progress’, it is the bodies of the dead which have had and always have a central role. 
They are a key component of medical training and anatomical teaching, provide the majority of resources for organ transplantation and (through the retention and analysis of organs and tissue) constitute one of the basic building blocks of modern medical research. For many in the medical sciences field, the dead could and should become bio-commons given the powerful impact of modern degenerative and other diseases, accelerating problems linked to lifestyle, and the threats of current and future pandemics. Yet, equally inside the medical research community there remain many neglected hidden histories of the dead that are less understood than they should be in global medicine, and for this reason they are central to this new book.

Such perspectives are important. On the one hand, they key into a wider sense that practice in medical science should not be subject to retrospective ethical reconstruction. On the other hand, it is possible to trace a range of modern challenges to the theme of ‘progress’, the ethics of medical research and practice, as well as the scope and limits of professional authority. This might include resistance to vaccination, scepticism about the precision of precision medicine, an increasing willingness to challenge medical decisions and mistakes in the legal system, accelerating public support for assisted dying, and a widening intolerance of the risks associated with new and established drugs. Nowhere is this challenge more acute than in what historians broadly define as ‘body ethics’. By way of recent example, notwithstanding the provisions of the Human Tissue Act (Eliz. 2 c. 30: 2004) (hereafter HTA2004), the BBC reported in 2018 that the NHS had a huge backlog of ‘clinical waste’ because its sub-contracted disposal systems had failed.3 Material labelled ‘anatomical waste’ and kept in secure refrigerated units contained organs awaiting incineration at home or abroad. By July 2019, the Daily Telegraph revealed how such human waste, including body parts and amputations from operative surgeries, was found in 100 shipping containers sent from Britain to Sri Lanka for clinical waste disposal.4 More widely, the global trade in organs for transplantation has come into increasingly sharp relief, while the supply of cadavers, tissue and organs for medical research remains contentious. Some pathologists and scientists, for instance, are convinced that HTA2004 stymied creative research opportunities.5 They point out that serendipity is necessary for major medical breakthroughs. Legislating against kismet may, they argue, have been counterproductive. 
Ethical questions around whose body is it anyway thus continue to attract a lot of media publicity and often involve the meaning of the dead for all our medical futures.

Lately these ethical issues have also been the focus of high-profile discussion in the global medical community, especially amongst those countries participating at the International Federation of Associations of Anatomists (hereafter IFAA). It convened in Beijing, China, in 2014, where a new proposal promised ‘to create an international network on body donation’ with the explicit aim of providing practical ‘assistance to those countries with difficulties setting up donation programmes’.6 The initiative was developed by the Trans-European Pedagogic Anatomical Research Group (TEPARC), following HTA2004 in Britain that had increased global attention on best practice in body donation. Under the TEPARC reporting umbrella, Beat Riederer remarked in 2015: ‘From an ethical point of view, countries that depend upon unclaimed bodies of dubious provenance are [now] encouraged to use these reports and adopt strategies for developing successful donation programmes.’7 Britain can with some justification claim to be a global leader in moving away from a reliance on ‘unclaimed’ corpses for anatomical teaching and research to embracing a system of body bequests based on informed consent. Similar ethical frameworks have begun to gain a foothold in Europe and East Asia, and are starting to have more purchase on the African8 and North and South American subcontinents too.9 Nonetheless, there is a long way to travel. As Gareth Jones explains, although ‘their use is far less in North America’ it is undeniable that ‘unclaimed corpses continue to constitute … around 20 per cent of medical schools’ anatomical programmes’ in the USA and Canada.10 Thus, the New York Times reported in 2016 that a new City of New York state law aimed to stop the use of ‘unclaimed’ corpses for dissection.11 The report came about because of a public exposé that the newspaper ran about the burial of a million bodies on Hart Island in an area of mass graves called Potter’s Field. 
Since 1980, the Hart Island Research project has found 65,801 ‘unclaimed’ bodies, dissected and buried anonymously.12 In a new digital hidden history project called the ‘Passing Cloud Museum’, their stories are being collected for posterity.13 This has some contemporary relevance, for during the Covid-19 pandemic the Hart Island pauper graveyard was re-opened by the New York public health authorities. Today, it once more contains contaminated bodies with untold stories to be told about the part people played in medical ‘progress’. For the current reality is that ‘in some states of the US, unclaimed bodies are passed to state anatomy boards’. Jones thus points out that:

When the scalpel descends on these corpses, no-one has given informed consent for them to be cut up. … Human bodies are more than mere scientific material. They are integral to our humanity, and the manner in which this material is obtained and used reflects our lives together as human beings. The scientific exploration of human bodies is of immense importance, but it must only be carried out in ways that will enhance anatomy’s standing in the human community.14

In a global medical marketplace, then, the legal ownership of human material and the ethical conduct of the healthcare and medical sciences can twist and turn. But with the increasing reach of medical research and intervention, questions of trust, communication, authority, ownership and professional boundaries become powerfully insistent. As the ethicist Heather Douglas reminds us: ‘The question is what we should expect of scientists qua scientists in their behaviour, in their decisions as scientists, engaged in their professional life. As the importance of science in our society has grown over the past half-century, so has the urgency of this question.’ She helpfully elaborates:

The standard answer to this question, arising from the Freedom of Science movement in the early 1940s, has been that scientists are not burdened with the same moral responsibilities as the rest of us, that is, that scientists enjoy ‘a morally unencumbered freedom from permanent pressure to moral self-reflection’. … Because of the awesome power of science to change both our world, our lives, and our conception of ourselves, the actual implementation of scientists’ general responsibilities will fall heavily on them. With full awareness of science’s efficacy and power, scientists must think carefully about the possible impacts and potential implications of their work. … The ability to do harm (and good) is much greater for a scientist, and the terrain almost always unfamiliar. The level of reflection such responsibility requires may slow down science, but such is the price we all pay for responsible behavior.15

Whether increasing public scepticism of experts and medical science will require a deeper and longer process of reflection and regulation is an important and interesting question. There is also, however, a deep need for historical explorations of these broad questions, and particularly historical perspectives on the ownership and use of, authority over and ethical framing of the dead body. As George Santayana reminds us, we must guard against either neglecting a hidden scientific past or embellishing it, since neither storyline is likely to provide a reliable future guide –

Progress, far from consisting in change, depends on retentiveness. When change is absolute there remains no being to improve and no direction is set for possible improvement: and when experience is not retained … infancy is perpetual. Those who cannot remember the past are condemned to repeat it.16

Against this backdrop, in his totemic book The Work of the Dead, Thomas Laqueur reminds us how: ‘the dead body still matters – for individuals, for communities, for nations’.17 This is because there has been ‘an indelible relationship between the dead body and the civilisation of the living’.18 Cultural historians thus criticise those in medico-scientific circles who are often trained to ignore or moderate the ‘work of the dead for the living’ in their working lives. Few appreciate the extent to which power relations, political and cultural imperatives and bureaucratic procedures have shaped, controlled and regulated the taking of dead bodies and body parts for medical research, transplantation and teaching over the longue durée. Yet our complex historical relationships with the dead (whether in culture, legislation, memory, medicine or science) have significant consequences for the understanding of current ethical dilemmas. Again, as George Santayana observed: ‘Our dignity is not in what we do, but in what we understand’ about our recent past and its imperfect historical record.19 It is to this issue that we now turn.

History and Practice

To offer a critique of the means and not the ends of medical research, practice and teaching through the lens of bodies and body parts is potentially contentious. Critics of the record of medical science are often labelled as neo-liberals, interpreting past decisions from the standpoint of the more complete information afforded by hindsight and judging people and processes according to yardsticks which were not in force or enforced at the time. Historical mistakes, practical and ethical, are regrettable but they are also explicable in this view. Such views underplay, however, two factors that are important for this book. First, there exists substantial archival evidence of the scale of questionable practice in medical teaching, research and body ethics in the past, but it has often been overlooked or ignored. Second, there has been an increasing realisation that the general public and other stakeholders in the past were aware of and contested control, ownership and use of bodies and body parts. While much weight has been given to the impact of very recent medical scandals on public trust, looking further back suggests that ordinary people had a clear sense that they were either marginalised in, or had been misinformed about, the major part their bodies played in medical ‘progress’. In see-saw debates about what medicine did right and what it did wrong, intensive historical research continues to be an important counterweight to the success story of biomedicine.

Evidence to substantiate this view is employed in subsequent chapters, but an initial insight is important for framing purposes. Thus, in terms of ownership and control of the dead body, it is now well established that much anatomy teaching and anatomical or biomedical research in the Victorian and Edwardian periods was dependent upon medical schools and researchers obtaining the ‘unclaimed bodies’ of the very poor.20 This past is a distant country, but under the NHS (and notwithstanding that some body-stock was generated through donation schemes promoted from the 1950s) the majority of cadavers were still delivered to medical schools from the poorest and most vulnerable sectors of British society until the 1990s. The extraordinary gift that we all owe in modern society to these friendless and nameless people has until recently been one of the biggest untold stories in medical science. More than this, however, the process of obtaining bodies and then using them for research and teaching purposes raised and raises important questions of power, control and ethics. Organ retention scandals, notably at Liverpool Children’s Hospital at Alder Hey, highlighted the fact that bodies and body parts had been seen as a research resource on a considerable scale. Human material had been taken and kept over many decades, largely without the consent or knowledge of patients and relatives, and the scandals highlighted deep-seated public beliefs in the need to protect the integrity of the body at death. As Laqueur argues: ‘The work of the dead – dead bodies – is possible only because they remain so deeply and complexly present’ in our collective actions and sense of public trust at a time of globalisation in healthcare.21 It is essentially for this reason that a new system of informed consent, with an opt-in clause, in which body donation has to be a positive choice written down by the bereaved and/or witnessed by a person making a living will, was enshrined into HTA2004. 
Even under the terms of that act, however, it is unclear whether those donating bodies or allowing use of tissue and other samples understand all the ways in which that material might be recycled over time or converted into body ‘data’. Questions of ownership, control and power in modern medicine must thus be understood across a much longer continuum than is currently the case.

The same observation might be made of related issues of public trust and the nature of communication. There is little doubt that public trust was fundamentally shaken by the NHS organ retention scandals of the early twenty-first century, but one of the contributions of this book is to trace a much longer history of flashpoints between a broadly conceived ‘public’ and different segments of the medical profession. Thus, when a Daily Mail editorial asked in 1968 – ‘THE CHOICE: Do we save the living … or do we protect the dead?’ – it was crystallising the question of how far society should prioritise and trust the motives of doctors and others involved in medical research and practice.22 There was (as we will see in subsequent chapters) good reason not to, something rooted in a very long history of fractured and incomprehensible communication between practitioners or researchers and their patients and donors. Thus, a largely unspoken aspect of anatomical teaching and research is that some bodies, organs and tissue samples – identified by age, class, disability, ethnicity, gender, sexuality and epidemiology – have always been more valuable than others.23 Equally, when human harvesting saves lives, questions of the quality of life afterwards are often downplayed. The refinement of organ transplantation has saved many lives, and yet there is little public commentary on the impact of anti-rejection drugs and the link between those drugs and a range of other life-reducing conditions. It was informative, therefore, in the summer of 2016 that the BBC reported on how although many patients are living longer after a cancer diagnosis, the standard treatments they undergo have (and always have had) significant long-term side effects even in remission.24 These are physical – a runny nose, loss of bowel control, and hearing loss – as well as mental. Low self-esteem is common for many cancer sufferers. 
A 2016 study by Macmillan Cancer Support, and highlighted in the same BBC report, found that of the ‘625,000 patients in remission’, the majority ‘are suffering with depression after cancer treatment’. We often think that security issues are about protecting personal banking on the Internet, preventing terrorism incidents and stopping human trafficking, but there are also ongoing biosecurity issues in the medical sciences concerning (once more) whose body and mind is it anyway?25

Other communication issues are easily identifiable. How many people, for instance, really understand that coroners, medical researchers and pathologists have relied on the dead body to demarcate their professional standing and still do?26 In the past, to raise the status of the Coronial office (by way of example) there was a concerted campaign to get those coroners who were by tradition legally qualified to become medically qualified. But to achieve that professional outcome, they needed better access to and authority over the dead. And how many people – both those giving consent for use of bodies and body parts and those with a vaguer past and present understanding of the processes of research and cause of death evaluation – truly comprehend the journey on which such human material might embark? In the Victorian and Edwardian periods, people might be dissected to their extremities, with organs, bodies and samples retained or circulated for use and re-use. Alder Hey reminded the public that this was also the normative journey in the twentieth century. Even today, Coronial Inquests create material that is passed on, and time limits on the retention of research material slip and are meant to slip, as we shall see in Part II. The declaration of death by a hospital doctor was (and is) often not the dead-end. As the poet Bill Coyle recently wrote:

But, he asks, ‘could it be the opposite is true?’ To be alive is to experience a future tense ‘through space and time’. To be dead is all about the deceased becoming fixed in time – ‘while you stay where you are’, as the poet reminds us. Yet, this temporal dichotomy – the living in perpetual motion, the dead stock-still – has been and remains deceptive. Medical science and training rely, and have always relied, on the constant movement of bodies, body parts and tissue samples. Tracing the history of this movement is a key part in addressing current ethical questions about where the limits of that process of movement should stand, and thus is central to the novel contribution being made in this book.

A final sense of the importance of historical perspective in understanding current questions about body ethics can be gained by asking the question: When is a body dead? One of the difficulties in arriving at a concise definition of a person’s dead-end is that the concept of death itself has been a very fluid one in European society.28 In early modern times, when the heart stopped the person was declared dead. By the late-Georgian era, the heart and lungs had to cease functioning together before the person became officially deceased. Then by the early nineteenth century, surgeons started to appreciate that brain death was a scientific mystery and that the brain was capable of surviving deep physical trauma. The notion of coma, hypothermia, oxygen starvation, resuscitation and its neurology entered the medical canon. Across the British Empire, meantime, cultures of death and their medical basis in countries like India and on the African subcontinent remained closely associated with indigenous spiritual concepts of the worship of a deity.29 Thus, the global challenge of ‘calling the time of death’ started to be the subject of lively debates from the 1960s as intersecting mechanisms – growing world population levels, the huge costs of state-subsidised healthcare, the rise of do not resuscitate protocols in emergency medicine, and a biotechnological revolution that made it feasible to recycle human material in ways unimaginable fifty years before – gave rise to questions such as when to prolong a whole life and when to accept that the parts of a person are more valuable to others. These now had more focus and meaning. Simultaneously, however, the reach of medical technology in the twentieth century has complicated the answers to such questions. 
As the ability to monitor even the faintest traces of human life – chemically in cells – biologically in the organs – and neurologically in the brain – became more feasible in emergency rooms and Intensive Care Units, hospital staff began to witness the wonders of the human body within. It turned out to have survival mechanisms seldom seen or understood.

In the USA, Professor Sam Parnia’s recent work has highlighted how calling death at twenty minutes in emergency room medicine has tended to be done for customary reasons rather than sound medical ones.30 He points out, ‘My basic message is this: The death we commonly perceive today … is a death that can be reversed’ and resuscitation figures tell their own story: ‘The average resuscitation rate for cardiac arrest patients is 18 per cent in US hospitals and 16 per cent in Britain. But at this hospital [in New York] it is 33 per cent – and the rate peaked at 38 per cent earlier this year.’31 Today more doctors recognise that there is a fine line between peri-mortem – at or near the point of death – and post-mortem – being in death. And it would be a brave medic indeed who claimed that they always know the definitive difference, because it really depends on how much the patient’s blood can be oxygenated to protect the brain from anoxic insults in trauma. Ironically, however, the success story of medical technology has started to reintroduce medical dilemmas with strong historical roots. An eighteenth-century surgeon with limited medical equipment in his doctor’s bag knew that declaring the precise time of death was always a game of medical chance. His counterpart, the twenty-first-century hospital consultant, is now equipped with an array of technology, but calling time still remains a calculated risk. Centuries apart, the historical irony is that in this grey zone, ‘the past may be dead’, but sometimes ‘it is very difficult to make it lie down’.32

In so many ways, then, history matters in a book about disputed bodies and body disputes. Commenting in the press on controversial NHS organ donation scandals in 1999, Lord Winston, a leading pioneer of infertility and IVF treatments, said:

The headlines may shock everyone, but believe me, the research is crucial. … Organs and parts of organs are removed and subjected to various tests – They are weighed and measured, pieces removed and placed under the microscope and biochemically tested. While attempts can be made to restore the external appearance of the body at the conclusion of a post-mortem, it is inevitable some parts may be occasionally missing.33

Winston admitted that someone of Jewish descent (as he was) would be upset to learn that a loved one’s body was harvested for medical research without consent and that what was taken might not be returned. As a scientist, he urged people to continue to be generous in the face of a public scandal. He was, like many leading figures in the medical profession, essentially asking the public to act in a more enlightened manner than the profession had itself done for centuries. The sanctions embodied in HTA2004 – the Human Tissue Authority public information website explains for instance that: ‘It is unlawful to have human tissue with the intention of its DNA being analyzed, without the consent of the person from whom the tissue came’ – are a measure of the threat to public trust that Winston was prefiguring.34 But this was not a new threat. As one leading educationalist pointed out in a feature article for the BBC Listener magazine in March 1961: ‘Besides, there are very few cultural or historical situations that are inert’ – the priority, he pointed out, should be dismembering medicine’s body of ethics – comparable, he thought, to ‘corpses patiently awaiting dissection’.35 By the early twenty-first century it was evident that medical ethics had come to a crucial crossroads and the choice was clear-cut. Medicine had to choose, either ‘proprietorial’ or ‘custodial’ property rights over the dead body, and to concede that the former had been its default position for too long.36 Phrases like ‘public trust’ could no longer simply be about paying lip service to public sensibilities, and there had been some recognition that the medical sciences needed to make a cultural transition in the public imagination from an ethics of conviction to an ethics of responsibility.37 Yet this transition is by no means complete. New legislation crossed a legal threshold on informed consent, but changing ingrained opinions takes a lot longer. 
And wider questions for both the public and scientists remain: Is the body ever a ‘dead-end’ in modern medical research? At what end-of-life stage should no more use be made of human material in a clinical or laboratory setting? Have the dead the moral right to limit future medical breakthroughs for the living in a Genome era? Would you want your body material to live on after you are dead? And if you did, would you expect that contribution to be cited in a transcript at an award ceremony for a Nobel Prize for science? Are you happy for that gift to be anonymous, for medical law to describe your dead body as abandoned to posterity? Or perhaps you agree with the former Archbishop of Canterbury, Dr. Rowan Williams, Master of Magdalene College, Cambridge, who believes that ‘the dead must be named’ or else we lose our sense of shared humanity in the present?38

In this journey from proprietorial to custodial rights, from the ethics of conviction to an ethics of responsibility, and to provide a framework for answering the rhetorical questions posed above, history is important. And central to that history are the individual and collective lives of the real people whose usually hidden stories lie behind medical progress and medical scandal. They are an intrinsic aspect of a medical mosaic, too often massaged or airbrushed in a history of the body, because it seemed harder to make sense of the sheer scale of the numbers involved and their human complexities. Engaging the public today involves co-creating a more complete historical picture.

Book Themes

Against this backdrop, the primary purpose of this book is to ask what have often been uncomfortable questions about the human material harvested for research and teaching in the past. It has often been assumed (incorrectly) that the journey of such material could not be traced in the historical archives because once dead bodies and their parts had entered a modern medical research culture, their ‘human stories’ disappeared in the name of scientific ‘progress’. In fact, the chapters that follow are underpinned by a selection of representative case-studies focussing on Britain in the period 1945 to 2000. Through them, we can reconstruct, trace and analyse the multi-layered material pathways, networks and thresholds the dead passed through as their bodies were broken up in a complex and often secretive chain of supply. The overall aim is therefore to recover a more personalised history of the body at the end of life by blending the historical and ethical to touch on a number of themes that thread their way throughout Parts I and II. We will encounter, inter alia, notions of trust and expertise; the problem of piecemeal legislation; the ambiguities of consent and the ‘extra time’ of the dead that was created; the growth of the Information State and its data revolution; the ever-changing role of memory in culture; the shifting boundaries of life and death (both clinically and philosophically); the differential power relations inside the medical profession; and the nature and use of history itself in narratives of medical ‘progress’. In the process, the book moves from the local to the national and, in later chapters, to the international, highlighting the very deep roots of concerns over the use of the dead which we casually associate with the late twentieth century.

Part II presents the bulk of the new research material, raising fundamental historical questions about: the working practices of the medical sciences; the actors, disputes and concealments involved; the issues surrounding organ donation; how a range of professionals inside dissection rooms, Coronial courts, pathology premises and hospital facilities often approached their workflows in an ahistorical way; the temporal agendas set by holding on to research material as long as possible; the extent to which post-war medical research demanded a greater breaking up of the body compared to the past; and the ways that the medical profession engaged in acts of spin-doctoring at difficult moments in its contemporary history. Along the way, elements of actor network theory are utilised (an approach discussed in Chapter 1). This is because the dead passed through the hands and knowledge of a range of actors, including hospital porters, undertakers, ambulance drivers, coroners, local government officials registering death certificates, as well as those cremating clinical waste, not all of whom are currently understood as agents of biomedicine. The chapters also invite readers of this book to make unanticipated connections from core questions of body ethics to, for instance: smog, air pollution, networks between institutions and the deceased and the cultural importance of female bodies to dissection. These perspectives are balanced by taking into account that medical scientists are complex actors in their own right too, shaped by social, cultural, political, economic and administrative circumstances, that are sometimes in their control, and sometimes not. In other words, this book is all about the messy business of human research material and the messy inside stories of its conduct in the modern era.

In this context, three research objectives frame Chapters 4 to 6. The first is to investigate how the dead passed along a complex chain of material supply in twentieth-century medicine and what happened at each research stage, highlighting why those post-mortem journeys still matter for the living, because they fundamentally eroded trust in medicine in a way that continues to shape public debates. We thus begin in Chapter 4 with a refined case-study analysis of the human material that was acquired or donated to the dissection room of St Bartholomew’s Hospital in London from the 1930s to the 1970s. Since Victorian times, it has been the fourth largest teaching facility in Britain. Never-before-seen data on dissections and their human stories reconnect us to hidden histories of the dead generated on the premises of this iconic place to train in medicine, and wider historical lessons in an era when biomedicine moved centre-stage in the global community.

Second, we then take a renewed look at broken-up bodies and the muddled bureaucracy that processed them. This human material was normally either dispatched using a bequest form from the mid-1950s, or, more usually, acquired from a care home or hospice because the person died without close relatives in the modern era and was not always subject to the same rigorous audit procedures. What tended to happen to these body stories is that they arrived in a dissection room or research facility with a patient case note and then clinical mentalities took over. In the course of this, little consideration was given to the fact that processes of informed consent (by hospitals, coroners, pathologists and transplant teams) were not as transparent as they should have been; some parts of the body had been donated explicitly (on kidney donor cards) and others not (such as the heart). Effectively, the ‘gift’ became piecemeal, even before the organ transplantation, dissection or further research study got under way. Frequently, bureaucracy de-identified and therefore abridged the ‘gift exchange’. Human connections were thus consigned to the cul-de-sac of history. This is a physical place (real, rather than imagined) inside medical research processes where the human subjects of medical ‘progress’ often got parked out of sight of the general public. The majority were labelled as retentions and refrigerated for a much longer period of time than the public generally realised, sometimes up to twenty-five years. This is not to argue that these retentions were necessarily an inconvenient truth, a professional embarrassment or part of a conspiracy theory with Big Pharma. Rather, retentions reflected the fact that the promise of ‘progress’ and a consequent augmentation of medico-legal professional status and authority proved very difficult to deliver unless it involved little public consultation in an era of democracy.
Thus Chapter 5 analyses questions of the ‘extra time’ for the retention of bodies and body parts that was created inside the working practices of coroners, questions which are only drawn out through detailed consideration of organ donation controversies. A lack of visibility of these body parts was often the human price of a narrative of ‘progress’ and that invisibility tended to disguise the end of the process of use, and larger ethical questions of dignity in death. Likewise, a publicity-shy research climate created many missed research opportunities; frequently, coroners’ autopsies got delayed, imprecise paperwork was commonplace at post-mortems and few thought the bureaucratic system was working efficiently. As we will see in Chapter 5, frustrated families complained about poor communication levels between the police, coroners and grieving relatives, factors that would later influence the political reach of HTA2004. Paradoxically, the medical sciences, by not putting their ethics in order sooner, propped up a supply system of the dead that was not working for everyone involved on the inside, and thus recent legislation, instead of mitigating the mistakes of this recent past, regulated much more extensively. In so doing, serendipity – the opportunity costs of potential future medical breakthroughs – took second place to the need for an overhaul of informed consent. Hidden histories of the dead therefore proved to be tactical and not strategic in the modern era for the medical research community.

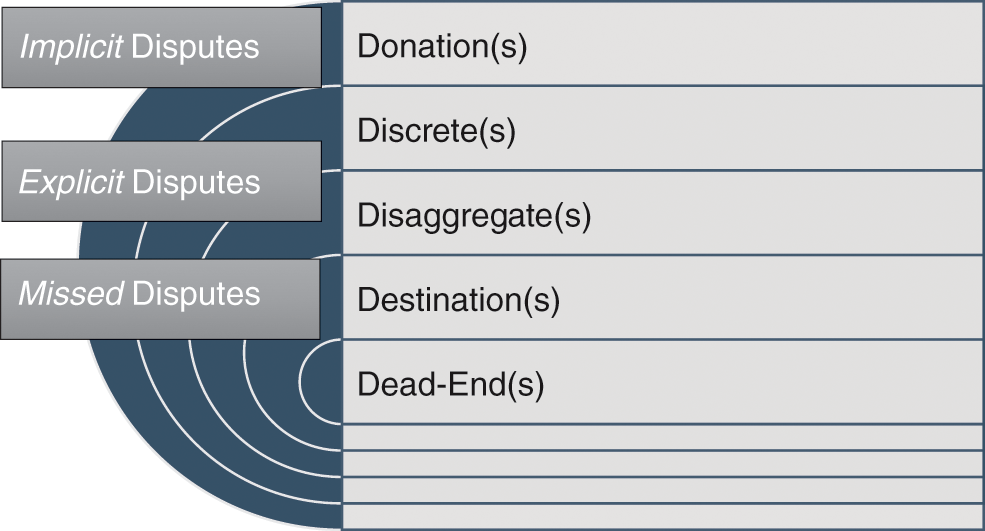

Finally, the book culminates by examining the complex ways that bodies could be disputed, and how the body itself was in dispute with the best intentions of new medical research after 1945. It focusses specifically on the work of pathologists in the modern era and their extensive powers of retention and further research. Unquestionably, many patients have benefitted from brain banking and the expansion of the science of neurology, a central thematic focus of Chapter 6. Yet, this innovative work was often conducted behind the closed doors of research facilities that did not see the need for better public engagement, until recently. As we shall see, that proved to be a costly error too, both for levels of professional trust in pathologists and better public understanding of what patients could expect of medicine in painful end-of-life situations. For many patients, meanwhile, the side-effects of drug development for brain conditions have sometimes been downplayed with detrimental outcomes for their sense of well-being. Quality-of-life ‘gains’ did contrast with the claims of ‘progress’ that underpinned a furtive research climate, and this resulted in a public stand-off once NHS scandals about brain retention started to emerge in 1999. On the one hand, in an ageing population research into degenerative diseases had and has a powerful role to play in global medicine. On the other hand, medicine still needs to learn much more about the complex and interconnected relationship between brain, emotion and memory formation as lived experiences. Few in the medical professions appreciated that missed body disputes – misinformation by doctors about lengthy retentions of human material – could create a countermovement that disputed medicine’s best intentions. Disputes about the body can go both ways – forwards and backwards – grateful and resentful – accepting and questioning – and it is this Janus-like approach that the book in part recounts.

To enter into this closed world of medico-legal actors and their support staff without setting their working-lives in context would be to misunderstand this fascinating and fast-moving modern medical research culture from 1945 in Britain. In Part I, therefore, Chapter 1 outlines the key historical debates there have been about this complex medical community of competing interest groups and their focus on the need to obtain more human research material. It concentrates on the main gaps in our historical knowledge about their working-lives. To fully appreciate that backdrop, Chapter 2 reviews the broad ethical and legal frameworks that regulated the use of the dead for research purposes locally, nationally and internationally. In this way, Chapter 3 illustrates, with a selection of representative human stories, the main cultural trends and threads of the central argument of the book that will be developed in Part II. We end this Introduction, therefore, with a thought-provoking encounter on the BBC imagined for us by Christopher Hitchens – talented journalist, public intellectual and writer, science champion, prominent atheist and cancer sufferer. He reminded his worldwide audience in Mortality (2012) why hidden histories of the dead matter to us all in a global community. His body had disputed chemotherapy’s ‘kill or cure venom’ that made him ‘a passive patient in a fight he did not pick’ with cancer. He disputed the ‘battle’ he was expected to wage when the disease was battling him, and praised the promise of precision medicine to retrieve out of the cul-de-sac of history, lost or neglected parts of this dreaded human experience, to be fused with new knowledge and creative-thinking.39 He hoped that superstitions surrounding cancer (what he called ‘its maladies of the mind and body’) would eventually ‘yield to reason and science’ not just in the laboratory but by co-creating with patients, both the living and the dead. For Hitchens died on 15 December 2011. 
The final deadline that he met was to sequence his genome. It remains deposited for posterity at the American National Institutes of Health. He pushed past the dead-end one last time, into scientific eternity – Eram quod eros quod sum – I am what you are; you will be what I am.40

Introduction

In January 2001, the famous English sportsman Randolph Adolphus Turpin was elected into America’s International Boxing Hall of Fame. The celebration marked fifty years since he had defeated Sugar Ray Robinson to win the world middle-weight boxing title in 1951.1 Older fans of boxing appreciated that Turpin would not be present at the US inauguration. He had committed suicide aged just 38, in 1966. Few, however, knew that the fatal decision to end his life had caused considerable controversy in British medical circles. His boxer’s brain became the subject of professional debates and medical research disputes between a coroner, pathologist, senior neurologists and heart specialists, as well as his family and the popular press. In 1966, the tragic events were opened up to public enquiry and exposed medico-legal tensions about who owned a body and its parts in death. In neglected archives, forgotten medical stories like that of Turpin reveal narratives of the dead that often question the global picture of a medico-scientific consensus which argued that the accumulation, deidentification and retention of human material was necessary for ‘progress’. We rediscover, instead, faces, people, families and communities whose loved ones became the unacknowledged bedrock of modern British medical research. These missing persons relocated in the historical record exemplify that medical breakthroughs could have been part of an important and ongoing public engagement campaign in a biomedical age.

On Friday 22 July 1966, the lead sports writer of the Daily Mail featured the sad death of Turpin. The ex-boxer ‘shot himself with a .22 pistol in an attic bedroom over his wife’s Leamington Spa café on May 17’.2 The case looked like a straightforward suicide, but was to prove to be more complicated and controversial. Turpin died ‘after wounding his daughter, Carmen, aged two’ (although critically injured, she survived the violent attack by her father). At the Inquest, medical evidence established how: ‘Turpin fired at himself twice. The first bullet lodged against his skull but was not fatal. The second passed through his heart.’ The coroner, however, came in for considerable criticism in the press about his conclusions. It was noted that ‘Dr. H. Stephens Tibbits did not call for the brain tests that could have decided if brain damage caused by Turpin’s 24 years of boxing (including his amateur days) might have contributed to his state of mind on the day he died’. The pathologist who conducted the post-mortem on behalf of the Coronial hearing expressed the prevailing medical view that: ‘An examination by a neuropathologist using a fine microscope could have disclosed any tell-tale symptoms of brain damage such as a boxer might suffer.’ In particular, more medical research would have pinpointed ‘traces of haemorrhage in the tiny blood vessels of his brain’. But Dr Barrowcliff (pathologist) was not permitted to proceed because Dr Tibbits (coroner) would not authorise him to do so. The pathologist regretted that: ‘There was a certain amount of urgency involved here’ because of the fame of the suicide victim ‘to which academic interest took second place’. The press thus noted: that ‘the opportunity had been missed to carry out this study was received with dismay from a physician concerned with the Royal College of Physicians Committee on Boxing’. 
Its ‘eight leading specialists on the brain, heart and eyes’ were very disappointed that the pursuit of medical research that was in the public interest had been overridden by a coroner’s exclusive powers over the dead. The family meanwhile were relieved to have been consulted at all, since it was not a legal requirement at the time. They were anxious that the Coronial hearing should take into account Turpin’s suicide note. His last words, in fact, revealed disagreement between medical personnel, the family and suicide victim about the cause of death and therefore the potential of his brain for further research. To engage with this sort of hidden history of the dead and its body parts dispute, which is normally neglected in the literature, we need to trace this human story in greater archival depth.

Thus, Turpin left a handwritten note which stated that the Inland Revenue were chasing him for a large unpaid tax bill. He claimed this was levied on money he had not actually earned, and this was the chief cause of his death – ‘Naturally they will say the balance of my mind was disturbed but it is not’, he wrote; ‘I have had to carry the can.’3 Money troubles since his retirement from boxing in 1958 certainly seemed to have mounted. Four years previously the Daily Mail had reported on a bankruptcy hearing which established that ‘Turpin who earned £150,000 from his boxing career, now tussles for £25 a bout as a wrestler’.4 At a tax hearing at Warwick it was reported that: ‘His main creditor is the Inland Revenue. It claims £17,126 tax for boxing earnings between 1949 and 1958.’ He still owed ‘£15,225’ and could only offer to pay back the tax bill ‘at £2 per week’ – a repayment schedule which would take ‘153 years’. Turpin had earned about £750 in 1961–2, but paid back a loan to a friend of £450 and £300 to his wife in cash, rather than the taxman. He was essentially broke and a broken man. The press, however, did not let the matter of his perilous financial situation or mental health condition rest. And because they did not, we can retrace the human circumstances of a controversial Coronial case concerning his valuable brain material: an approach this book will be following in subsequent chapters. For the aim is to uncover the sorts of human faces that were subsumed inside modern British medical research cultures.

In a hard-hitting editorial, the Daily Mail insisted that: ‘two questions must be answered about Randolph Turpin’s wretched life whilst boxing – Was he the lingering victim of punch drunkenness? What happened to the £585,439 paid to see his four title fights?’ Here was a ‘back-street kid who was a wealthy champion at 23, bankrupt at 34, and demented and dead at 38’.5 His ‘first marriage broke up, there were stories of assaults all pointing to a diminishing sense of social responsibility. A second marriage was to bring him happiness but his career… never recovered’. The newspaper asked why his family GP was not called as a medical witness at the Inquest. When interviewed by the press, the family doctor said that although ‘I do not like using the phrase, I would say that Turpin was punch drunk. He was not the sort of man to worry about financial matters or about people who had let him down. In my opinion boxing was responsible for his death.’ It was revealed that Turpin was ‘part deaf from a childhood swimming accident’ and he became ‘increasingly deaf through the years’. The GP, however, believed his hearing impairment had not impacted on either his physical balance or the balance of his mind. His elder brother and a family friend, nevertheless, contradicted that statement, telling the press that Turpin had ‘eye trouble’ and ‘double vision’ from his boxing days. He often felt dizzy and disorientated. The difficulty was that only Turpin’s 4-year-old daughter, Charmaine, and his youngest child, Carmen, aged 17 months (she sustained ‘bullet wounds in her head and chest’6) really knew what happened at the suicidal shooting. 
They were too young and traumatised to give evidence in the coroner’s court.7 In the opinion of Chief Detective-Inspector Frederick Bunting, head of Warwickshire CID, it was simply a family tragedy.8 Turpin had risen from childhood poverty and fought against racial discrimination (his father was from British Guiana and died after being gassed in WWI; his mother, almost blind, brought up five children on an army pension of just 27s per week, living in a single room).9 Sadly, ‘the money came too quickly’ and his ‘personality did not match his ring skill’, according to Bunting. Even so, by the close of the case what was noteworthy from a medico-legal perspective were the overarching powers of the coroner once the corpse came into his official purview. That evidence hinted at a hinterland of medical science research that seldom came into public view.

It seems clear that the pathologist commissioned to do Turpin’s post-mortem was prepared to apply pressure to obtain more human material for research purposes. Here was a fit young male body from an ethnic-minority background that could provide valuable anatomical teaching and research material. This perspective about the utility of the body and its parts was shared by the Royal College of Physicians, who wanted to better understand the impact of boxing on the brain. This public interest line of argument was also highlighted in the medical press, notably the Lancet. The family, meanwhile, were understandably concerned with questions of dignity in death. Their priority was to keep Turpin’s body intact as much as possible. Yet, what material journeys really happened in death were never recounted in the Coronial court. For, once the Inquest verdict of ‘death by suicide’ was reached, there was no need for any further public accountability. The pathologist in court did confirm that he examined the brain; he said he wanted to do further research, but tellingly he stated that he did not proceed ‘at that point’. Crucially, however, he did not elaborate on what would happen beyond ‘that point’ to the retained brain once the coroner’s case was completed in court.

As all good historians know, what is not said is often as significant as what is. Today historians know to double-check on stories of safe storage by tracing what really happened to valuable human material once the public work of a coroner or pathologist was complete. The material reality was that Turpin’s brain was refrigerated, and it could technically be retained for many years. Whilst it was not subdivided in the immediate weeks and months after death, the fact of its retention meant that in subsequent years it could still enter a research culture as a brain slice once the publicity had died down. As we shall see, particularly in Chapter 6, this was a common occurrence from the 1960s onwards. At the time, it was normal for family and friends to trust a medico-legal system that could be misleading about the extra time of the dead it created with human research material. This neglected perspective therefore requires framing in the historiography dealing with bodies, body donations and the harvesting of human material for medical research purposes, and it is this task that informs the rest of the chapter.

The Long View

Historical studies of the dead, anatomisation and the use of bodies for research processes have become increasingly numerous since the early 2000s.10 Adopting theories and methodological approaches drawn from cultural studies,11 ethnography,12 social history, sociology, anthropology and intellectual history, writers have given us an increasingly rich understanding of cultures of death, the engagement of the medical professions with the dead body and the wider culture of body ethics. It is unfeasible (and not desirable) here to give a rendering of the breadth of this field given its locus at the intersection of so many disciplines. To do so would over-burden the reader with a cumbersome and time-consuming literature review. Imagine entering an academic library and realising that the set reading for this topic covered three floors of books, articles and associated reading material. It could make even the most enthusiastic student of the dead feel defeated. Two features of that literature, however, are important for the framing of this book.

First, we have become increasingly aware that medical ‘advances’ were intricately tied up with the power of the state and medicine over the bodies of the poor, the marginal and so-called ‘ordinary’ people. This partly involved the strategic alignment of medicine with the expansion of asylums, mental hospitals, prisons and workhouses.13 But it also went much further. Renewed interest in ‘irregular’ practitioners and their practices in Europe and its colonies highlighted how medical power and professionalisation were inexorably and explicitly linked to the extension of authority over the sick, dying and dead bodies of ‘ordinary’ people.14 More than this, the development of subaltern studies on the one hand and a ‘history from below’ movement on the other hand has increasingly suggested the vital importance for anatomists, medical researchers and other professionals involved in the process of death, of gaining and retaining control of the bodies of the very poorest and least powerful segments of national populations.15 A second feature of the literature has been a challenge to the sense and ethics of medical ‘progress’, notably by historians of the body who have been diligent in searching out the complex and fractured stories of the ‘ordinary’ people whose lives and deaths stand behind ‘great men’ and ‘great advances’. In this endeavour they have, inch by inch, begun to reconstruct a medico-scientific mindset that had been a mixture of caring and careless, clinical and inexact, dignified and disingenuous, elitist and evasive. In this space, ethical dilemmas and mistakes about medicine’s cultural impact, such as those highlighted in the Turpin case with which this chapter opened, were multiple. 
Exploring these mistakes and dilemmas – to some extent explicable but nonetheless fundamental for our understanding of questions of power, authority and professionalisation – is, historians have increasingly seen, much more important than modern ‘presentist’ views of medicine would have us believe.16

These are some of the imperatives for the rest of Parts I and II of this book. The remainder of this first chapter develops some of these historiographical perspectives. It does so by focussing on how trends in the literature interacted with social policy issues in the modern world. What is presented is not therefore a traditional historiographical dissection of the minutiae of academic debates of interest to a select few, but one that concentrates on the contemporary impact of archival work by historians as a collective. For that is where the main and important gap exists in the historical literature – we in general know some aspects of this medical past – but we need to know much more about its human interactions. Before that, however, we must engage with the question of definitions. Thus, around 1970 a number of articles appeared in the medical press about ‘spare-part surgery’ (today called organ transplantation). ‘Live donors’ and ‘donated’ cadavers sourced across the NHS in England will be our focus in this book too. To avoid confusion, we will be referring to this supply system as a combination of ‘body donations’ (willingly done) and ‘mechanisms of body supply’ (often involuntary). The former were bequeathed before death by altruistic individuals; the latter were usually acquired without consent. They entered research cultures that divided up the whole body for teaching, transplant and associated medical research purposes. This material process reflected the point at which the disassembling of identity took place (anatomical, Coronial, neurological and in pathology) into pathways and procedures, which we will be reconstructing. In other words, ‘pioneer operations’ in transplantation surgery soon ‘caught unawares the medical, legal, ethical and social issues’ which seemed to the media to urgently require public consultation in Britain.17 As one contemporary leading legal expert explained:

This is a new area of medical endeavour; its consequences are still so speculative that nobody can claim an Olympian detachment from them. Those who work outside the field do not yet know enough about it to form rational and objective conclusions. Paradoxically, those who work in the thick of it … know too much and are too committed to their own projects to offer impartial counsel to the public, who are the ultimate judges of the value of spare-part surgery.18

Other legal correspondents pointed out that since time was of the essence when someone died, temporal issues were bound to cause a great many practical problems:

For a few minutes after death cellular metabolism continues throughout the majority of the body cell mass. Certain tissues are suitable for removal only during this brief interval, although improvements in storage and preservation may permit a short delay in actual implantation in the recipient. Cadaver tissues are divided into two groups according to the speed with which they must be salvaged. First, there are ‘critical’ tissues, such as the kidney and liver, which must be removed from the deceased within a matter of thirty to forty-five minutes after death. On the other hand, certain ‘noncritical’ tissues may be removed more at leisure. Skin may be removed within twelve hours from time of death. The cornea may be taken at any time within six hours. The fact is, however, that in all cases action must be taken promptly to make use in a living recipient of the parts of a non-living donor, and this gives rise to legal problems. There is but little time to negotiate with surviving relatives, and waiting for the probate of the will is out of the question.19

Transplant surgeons today and anatomists over the past fifty years shared an ethical dilemma – how to get hold of human research material fast before it decayed too much for re-use. It was this common medico-legal scenario that scholars were about to rediscover in the historical record of the hidden histories of the body just as the transplantation era opened.

Ruth Richardson’s distinguished book, Death, Dissection and the Destitute, was first published in 1987. It pioneered hidden histories of disputed bodies.20 In it, she identified the significance of the Anatomy Act of 1832 (hereafter AA1832) in Britain, noting that the poorest by virtue of pauperism had become the staple of the Victorian dissection table. As Richardson pointed out, that human contribution to the history of medical science had been vital but hidden from public view. Those in economic destitution, needing welfare, owed a healthcare debt to society in death according to the New Poor Law (1834). Having identified this class injustice, more substantive detailed archive work was required to appreciate its cultural dimensions, but it would take another twenty-five years for the next generation of researchers to trace what exactly happened to those dying below the critical threshold of relative to absolute poverty.21 The author of this book (and of three previous ones) has been at the vanguard of aligning such historical research with contemporary social policy issues in the medical humanities.

Once that research was under way, it anticipated several high-profile human material scandals in the NHS. These included the retention and storage of children’s organs at Alder Hey Children’s Hospital, the clinical audit of the practice of Dr Harold Shipman, and the response to the inquiry into the children’s heart surgery service at Bristol Royal Infirmary. Such scandals brought to the public’s attention a lack of informed consent, lax procedures in death certification, inadequate post-mortems and substandard human tissue retention paperwork, almost all of which depended upon bureaucracy developed from Victorian times. Eventually, these controversies would culminate in public pressure for the passing of HTA2004 to ensure that a proper system of informed consent repealed the various Anatomy Acts of the nineteenth and twentieth centuries, as we will go on to see in Chapter 2. Recent legislation likewise provided for the setting up of a Human Tissue Authority in 2005 to license medical research and its teaching practices in human anatomy, and more broadly regulate the ethical boundaries of biomedicine. As the Introduction suggested, it seemed that finally the secrets of the past were now being placed on open access in the public domain. Or were they?

Today, studies of the cultural history of anatomy and the business of acquiring the dead for research purposes – and it has always been a commercial transaction of some description with remarkable historical longevity – have been the focus of renewed scholarly endeavours that are now pan-European and postcolonial, and encompass neglected areas of the global South.22 In part, what prompted this genre of global studies was an increasing focus on today’s illegal trade in organs and body parts that proliferates in the poorest parts of the world. The most recent literatures on this subject highlight remarkable echoes with the increasingly rich historical record. Scott Carney, for instance, has investigated how the social inequalities of the transplantation era in a global marketplace are prolific because of e-medical tourism. In The Red Market (the term for the sale of blood products, bone, skulls and organs), Carney explains that on the Internet in 2011 his body was, and is, worth $200,000 to body-brokers that operate behind an antivirus firewall to protect them against international law.23 He could also sell what these e-traders term ‘black gold’ – waste products like human hair or teeth – less dangerous to his well-being to extract for sale but still intrinsic to his sense of identity and mental health. Carney calculates that the commodification of human hair is a $900 billion worldwide business. The sacred (hair bought at Hindu temples and shrines) has become the profane (wigs, hair extensions and so on) whether it involves ‘black gold’ or ‘Red Market’ commodities, in which Carney’s original phrasing (quoted in a New York Times book review) describes:

an impoverished Indian refugee camp for survivors of the 2004 tsunami that was known as Kidneyvakkam, or Kidneyville, because so many people there had sold their kidneys to organ brokers in efforts to raise desperately needed funds. ‘Brokers,’ he writes, ‘routinely quote a high payout – as much as $3,000 for the operation – but usually only dole out a fraction of the offered price once the person has gone through it. Everyone here knows that it is a scam. Still the women reason that a rip-off is better than nothing at all.’ For these people, he adds, selling organs ‘sometimes feels like their only option in hard times’; poor people around the world, in his words, ‘often view their organs as a critical social safety net’.24

Having observed this body-part brokering often during his investigative journalism on location across the developing world, Carney raises a pivotal ethical question. Surely, he asks, in the medical record-keeping the term ‘organ donor’ in such circumstances is simply a good cover story for criminal activity? When the poorest are exploited for their body parts, eyes, hair and human tissues – dead or alive – the brokers that do this turn the gift of goodwill implied in the phrase ‘organ donor’ into something far more sinister, the ‘organ vendor’. This perspective, as Carney himself acknowledges, is deeply rooted in medical history.

In the past, the removal of an organ or body part from a dissected body involved the immediate loss of a personal history. Harvesting was generally hurried and the paperwork done quickly. A tick box exercise was the usual method within hours of death. Recycling human identity involved medical bureaucracy and confidential paperwork. This mode of discourse mattered. Clinical mentalities soon took over and this lesson from the past has considerable resonance in the present. Thus, by the time that the transplant surgeon talks to the potential recipient of a body donation ‘gift’, involving a solid organ like the heart, the human transaction can become (and often became) a euphemism. Importantly, that language shift, explains Carney, has created a linguistic register for unscrupulous body traders too. Thus, when a transplant surgeon typically says to a patient today ‘you need a kidney’ – what they should be saying is ‘you need someone else’s kidney’. Even though each body part has a personal profile, the language of ‘donation’ generally discards it in the desire to anonymise the ‘gift’. Yet, Carney argues, just because a person is de-identified does not mean that their organ has to lose its hidden history too. It can be summarised: male, 24, car crash victim, carried a donor card, liked sports – female, 35, washerwoman, Bangladeshi, 3 children, healthy, endemic poverty. It might be upsetting on a post-mortem passport to know about the human details, disturbing the organ recipient’s mental position after transplant surgery, but modern medical ethics needs to be balanced by declaring the ‘gift’ from the dead to the living too. Instead, medical science has tended to have a fixed mentality about the superior contribution of bio-commons to its research endeavours.

Historians of the body who have worked on the stories of the poorest in the past, to learn their historical lessons for the future, argue that it would be a more honest transaction to know their human details, either post-mortem or post-operative. Speaking about the importance of the ‘gift relationship’ without including its human face amounts to false history, according to Carney and others. In this, he reflects a growing body of literature on medical tourism, which challenges the prevailing view that medical science’s neglected hidden histories do not matter compared to larger systemic questions of social, medical and life-course inequalities for the living. Instead, for Carney and his fellow scholars, the hidden histories of ‘body donations’ were a dangerous road to travel without public accountability in the material journeys of human beings in Britain after WWII. They created a furtive research climate that others could then exploit. Effectively, unintended consequences have meant that body-brokers do buy abroad, do import those organs and do pass them off as ‘body donations’ to patients often so desperate for a transplant that medical ignorance is the by-product of this ‘spare-part’ trade. Just as the dead on a class basis in the past lost their human faces, so today the vulnerable are exploited:

Eventually, Red Markets have the nasty social side effect of moving flesh upward – never downward – through social classes. Even without a criminal element, unrestricted free markets act like vampires, sapping the health and strength from ghettos of poor donors and funneling their parts to the wealthy.25

Thus, we are in a modern sense outsourcing human misery in medicine to the poorest communities in India, Africa and China, in exactly the same way that medical science once outsourced its body supply needs in the past to places of high social deprivation across Britain, America and Australia, as well as European cities like Brussels and Vienna.26 The dead (in the past), the living-dead (in the recent past) and those living (today) are part of a chain of commodification over many centuries. In other words, what medical science is reluctant to acknowledge and which historians have been highlighting for thirty years is that a wide variety of hidden histories of the body have been shaped by the ‘tyranny of the gift’, as much as altruism, and continue to be so.27

Unsurprisingly, then, the complexities surrounding this ‘gift relationship’ are an important frame for this book.28 Margaret Lock, for instance, has explored in Twice Dead: Organ Transplants and the Reinvention of Death (2002) ‘the Christian tradition of charity [which] has facilitated a willingness to donate organs to strangers’ via a medical profession which ironically generally takes a secular view of the ‘donated body’.29 One reason, she notes, that public confidence broke down in the donation process was that medical science did not review ‘ontologies of death’ and their meaning in popular culture. Instead, the emphasis was placed on giving without a balancing mechanism in medical ethics that ‘invites an examination of the ways in which contemporary society produces and sustains a discourse and practices that permit us to be thinkers at the end-of-life’ and, for the purpose of this book, what we do with the dead-end of life too.30 Lock helpfully elaborates:

Even when the technologies and scientific knowledge that enable these innovations [like transplant surgery] are virtually the same, they produce different effects in different settings. Clearly, death is not a self-evident phenomenon. The margins between life and death are socially and culturally constructed, mobile, multiple, and open to dispute and reformulation. … We may joke about being brain-dead but many of us do not have much idea of what is implicated in the clinical situation. … We are scrutinising extraordinary activities: death-defying technologies, in which the creation of meaning out of destruction produces new forms of human affiliation. These are profoundly emotional matters. … Competing discourse and rhetoric on the public domain in turn influences the way in which brain death is debated, institutionalised, managed and modified in clinical settings.31

Thus, for a generation that donated their bodies after WWII, questions of reciprocity were often raised in the press but seldom resolved inside the medical profession by co-creating medical ethics with the general public. There remained more continuity than discontinuity in the history of body supply, whether for dissection supply or transplant surgery, as we shall see in Part II. The reach of this research culture hence remains overlooked in ways that this book maps for the first time. Meanwhile, along this historical road, as Donna Dickenson highlights, often ‘the language of the gift relationship was used to camouflage … exploitation’. This is the common situation today when a patient consents to their human tissue donation, but it is recycled for commercial gain into data-generation. For the donor is seldom part of that medical choice, nor do they share directly in the knowledge or profits generated.32 In other words: ‘Researchers, biotechnology companies and funding bodies certainly don’t think the gift relationship is irrelevant: they do their very best to promote donors’ belief in it, although it is a one-way gift-relationship.’33 Even though these complex debates about what can, and what should, go for further medical research and training today can seem to be so fast moving that the past is another country, they still merit more historical attention. Consequently, the historical work that Richardson pioneered was a catalyst, stimulating a burgeoning field of medical humanities study, and one with considerable relevance for contemporary social policy trends.

How then do the hidden histories of this book relate to what is happening today in a biotech age? The answer lies in the immediate aftermath of WWII when medical schools started to reform how they acquired bodies for dissection and what they intended to do with them. Seldom do those procedures and precedents feature in the historical literature. This author studied in depth older legislation like the Murder Act (running from 1752 to 1832) and the first Anatomy Act (covering 1832–1929) in two previous books. Even so, few studies move forward in time by maintaining those links to the past that continue to have meaning in the post-1945 era in the way that this study does.34 That anomaly is important because it limits our historical appreciation of medical ethics. It likewise adds to the problem of how science relates its current standards to the recent past. Kwame Anthony Appiah (philosopher, cultural theorist and novelist), delivering the Reith Lectures for the BBC, thus reminds us: ‘Although our ancestors are powerful in shaping our attitudes to the past’ – and we need to always be mindful of this – we equally ‘should always be in active dialogue with the past’ – to stay engaged with what we have done – and why.35 Indeed, as academic research has shown in the past decade, the policing of the boundaries of medical ethics that involve the sorts of body disputes which are fundamental to us as a society also involves the maintenance of long-term confidence and public trust that have been placed in the medical sciences. This still requires vigilance, and in this sense the investigation and production of a seamless historical timeframe is vital. Such a process demands that we engage in an overview of the various threshold points that created – and create – hidden histories in the first place.

This is the subject of the next section, but since hidden histories of the body in the post-war period – stories like that of Randolph Turpin – are the product of, reflect and embody the powerful reach of intricate networks of power, influence and control, it is first necessary to engage briefly with the field of actor-network studies. Helpfully, Bruno Latour wrote in the 1980s that everything in the world exists in a constantly shifting network of relationships.36 The human actors involved operate together with varying degrees of agency and autonomy. Retracing and reconstructing these actor networks therefore involves engaged research and engagement with research, argue Michel Callon, John Law and Arie Rip.37 This approach to historical studies can enhance our collective understanding of how confidence and public trust change over time, as well as illuminate mistrust in the medical sciences. Latour argues we thus first need to ‘describe’ the network of actors involved in a given situation. Only then can we investigate the ‘social forces acting’ that shape the matrix of those involved. Latour along with Michel Callon hence prioritised the need to map the dynamic interactions of science and technology since these disciplines have come to such prominence in Western society. How the sociology of science operates in the modern world was likewise an extension of their work. Actor network theory and its study are therefore essentially ‘science in action’ and are one of the foundational premises of the case studies in Part II of this book.

Latour pioneered this novel approach because he recognised that science needed help to rebuild its reputation and regain its authority in the modern period, at a time when the ethical basis of so much medical research, and its claims to be in the public good, became controversial in the global community. In 1999, John Law and John Hassard outlined a further development of actor network theory, arguing that if it was to become a genuine framework for transdisciplinary studies then it had to have five basic characteristics:

It does not explore why or how a network takes the form it does.

It does explore the relational ties of the network – its methods and how to do something within its world.

It is interested in how common activities, habits and procedures sustain themselves.

It is adamantly empirical – the focus is how the network of relationships performed – because without performance the network dissolves.

It is concerned with what conflicts are in the network, as well as consensus, since this is part of the performative social elements.38

Michael Lee Scott’s 2006 work further refined this model.39 He pointed out that those who defend the achievements of science and its research cultures too often treat its performance like a car. As long as the car travels, they do not question the performance of the results, the function of its individual components or its destination. Only when science stumbles or breaks down is its research apparatus investigated. When society treats science like a well-performing car, ‘the effect is known as punctualisation’. We need medical mistakes and/or a breakdown of public confidence, argues Scott, to ‘punctuate’ our apathy about the human costs of the medical sciences to society as a whole. In other words, belief in science and rationalism is logical, but human beings are emotional and experiential too. If science has encapsulated our cultural imaginations for good healthcare reasons, we still need to keep checking that its medical performance delivers for everybody and is ethical. This notion of ‘encapsulation’, Scott explains, is important for understanding how the research cultures of the medical sciences really work. A useful analogy is computer programming. It is common for programmers to adopt a ‘language of mechanism that restricts access to some of the object’s component’. In other words, when members of the general public turn a computer on, most are concerned only that it works today, in the same way that car-owners are when they turn the ignition key in the morning to go to work. Even so, those simple actions hand over a considerable part of human agency to new technology. On the computer, we do not see the language of algorithms (the mechanisms of the system) that have authority over us and conceal their real-time operation. Science operates in an equivalent way to computer programmes, according to Scott, because it has hidden and privileged research objectives, written into its code of conduct and a complex, interrelated and often hidden set of actors. This book takes its lead from this latest conceptual thinking in actor network studies, but it also takes those methods in a novel research direction. We begin by remodelling the sorts of research threshold points created inside the system of so-called body bequests and what these ‘donations’ meant for the way that the medical sciences conducted themselves, networked and performed their research expertise in post-war Britain.

Remapping Disputed Bodies – Missing Persons’ Reports

The quotation ‘volenti non fit iniuria’ – no wrong is done to one who is willing – encapsulates modern attitudes towards ethical conduct in the dissection room, transplant operation theatre and more widely towards the use of human tissue and body parts for research purposes.40 In practice, however, things are rarely this simple. Bronwyn Parry, a cultural geographer, has described this defensive position as follows:

New biotechnologies enable us, in other words, to extract genetic or biochemical material from living organisms, to process it in some way – by replicating, modifying, or transforming it – and in so doing, to produce from it further combinations of that information that might themselves prove to be commodifiable and marketable.41