When I started my career in materials research in the late 1980s, publishing a research paper was a completely different experience compared to today. While the manuscript was typed using a word processor on a computer (the manuscript for my thesis was actually typed on a typewriter!), graphs were drawn by hand, photographs were developed in a dark room, and big packages with manuscripts were sent by post to the journals. Submitting a paper involved a great deal of work and took much more time.

The journal in which to publish a paper was chosen for its prestige and reputation, not for its impact factor (IF). Prestige and IF have diverged over time: journals that were once considered prestigious are now seen as less important because they do not have large IFs. After a manuscript was submitted, it was sent directly to referees and was not screened by editors beforehand. The hard and time-consuming preparation of a manuscript led to greater care and quality, and to far fewer submissions.

In the materials field, Italy was very provincial at that time, and my mentors discouraged me from submitting papers to the most prestigious journals. They argued that papers from Italy were usually rejected unfairly, while papers from important international professors were easily accepted even when weak. A rejection was a serious issue at that time, both for the time lost and for the injured pride. But I was not convinced by this argument, and when I thought I had a very good paper, I was willing to submit it to important journals. It took a lot of time and discussion to convince my professor to submit a paper to the Journal of the American Ceramic Society, but when I finally did, it was accepted and published (Ref. 1: Gusmano, Montesperelli, Traversa, and Mattogno).

Regarding another paper (Ref. 2: Arpaia, Cigna, Simoncelli, and Traversa), Professor Mario Arpaia, who sadly passed away prematurely many years ago, told me that it was published only thanks to my “capa tosta” (stubbornness). I submitted another paper to Corrosion against the opinions of my seniors, and six months later, I received a small card with the response: Accept as is! (Ref. 3: Zanoni, Gusmano, Montesperelli, and Traversa.) I understood that if you had a very good paper, you had an honest chance of having it published, even if you were Italian. The comments that I received from the reviewers were, in general, tough but useful, thoughtful, and constructive; the final result was an improved manuscript. The main task of the editors then was to accept papers, not to reject them, so there was the possibility of improving the manuscript, and minor mistakes could be addressed with the help of reviewers.

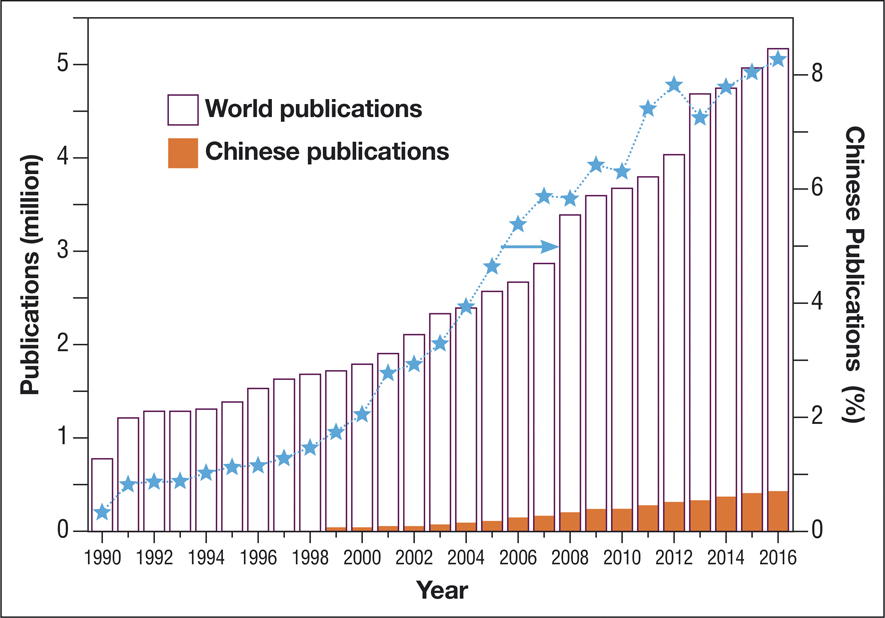

This landscape is now significantly altered, probably forever, for two major reasons, the dramatic increase in the number of submissions and the pressure to publish in journals with a high IF, and one minor reason, rapid publication. The accompanying figure shows the number of papers published worldwide and indexed in the Web of Science from 1990 to 2016. Even considering that not all of the manuscripts published in 1990 were indexed, the increase in the number of publications is remarkable: more than sixfold over the time span considered, topping 5 million in 2016. These are published papers. With a conservative estimate of a 50% rejection rate, this means that more than 10 million manuscripts were submitted for publication in 2016, a flood by any estimate.
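The estimate above is simple arithmetic, and it can be written out as a rough sketch. The symbols are introduced here only for illustration: $P$ is the number of papers published in a year, $r$ is the fraction of submissions that are rejected, and $S$ is the number of submissions, with every submission counted once.

```latex
\[
P = (1 - r)\,S
\quad\Longrightarrow\quad
S = \frac{P}{1 - r} > \frac{5 \times 10^{6}}{1 - 0.5} = 10 \times 10^{6}
\]
```

The result is sensitive to the assumed rejection rate. As a purely hypothetical illustration, $r = 0.75$ with the same number of published papers would imply more than 20 million submissions.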

One of the causes of this flood is the increase in research budgets around the world, with many countries, such as those in the Middle East, making massive investments in research. China has also played a major role: its number of indexed papers grew by a factor of about 170 over the time span considered, reaching more than 8% of the world total in 2016. In 1990, its share was a mere 0.3%. One wonders whether such a large amount of published work really helps the progress of science, and especially of technology. Such a massive volume of information makes it difficult to keep up with the literature, even in one’s own field.

The substantial increase in the number of submissions has also led to drastic changes in journal policies. The most important one is that it is now more typical to reject papers than to accept them. Many journals have introduced a preliminary step of editorial evaluation, performed either by scientific editors or by professional editorial assistants, before manuscripts are sent out for review. It has now become important to evaluate the impact of a paper in terms of how many citations it is likely to receive.

Recently, a professional editorial assistant commented on one of my submissions that, although the work was novel, he felt it would not have sufficient impact. I am at a stage of my career where my h-index is 59 (69 on Google Scholar), and I am being told that I am not able to understand what impact my work may have. This is very depressing. The attitudes of scientific editors and peers are scary. They appear to be pushing people to work on select topics that are in vogue today (graphene, metal–organic frameworks, and hybrid perovskite solar cells are among the trends of the moment) if they want to publish in high-IF journals. This seems like an attempt to kill certain research lines in favor of others.

Another aspect that is affecting the quality of published research is the rush to publish. Everything has to be quick, including the reviewers’ work. So now a reviewer tries to find the weak point in a manuscript and dismiss the whole work on that basis, because the tendency of journals is to reject papers, not to accept them. The short time allotted reduces the thoughtfulness of the reviewers’ comments, so these comments are often inappropriate or not useful.

Figure. Number of total publications indexed in the Web of Science each year between 1990 and 2016, compared with the number of indexed publications having a Chinese affiliation. The data plotted with the star symbol show the percentage of Chinese publications with respect to the world total.

This brings us to the second major reason: the pressure to publish in high-IF journals, which comes from employing institutions and funding agencies. One gets employment, a higher salary, promotions, and additional research funds based on factors that are not necessarily intrinsic to the quality of the work performed. There is then an incentive to consider adjusting data and results, or performing little tricks here and there, to get a scoop. Sometimes someone is caught, sometimes with disproportionate punishment. But sometimes claims are made that lead to the creation of new companies, until it is discovered that the claims are unsupported, resulting in bankruptcy and layoffs.

What is the value of a paper published in a high-IF journal? What is the real economic return in terms of the creation of new technologies and new industries? It is not easy to make such an estimate, but I am pretty sure that this economic return is negligible for the majority of published papers. Employers and funding agencies need to explain why a high-IF publication is so important.

I discovered the existence of the Web of Science and Scopus in 2008, when I was hired by the National Institute for Materials Science (NIMS) in Tsukuba, Japan. Before then, I had no idea that the h-index existed. I thus started to play the game of high-IF journals because my employer requested it. Fortunately, the working conditions at NIMS were excellent, and I was successful without altering my research directions or my ethical convictions. But now I am starting to feel inadequate within this new system.

We are at a point where we as scientists need to think about and evaluate what we are doing. Most of all, we need to preserve the openness and freedom of scientific thinking and avoid the homogenization of research around a few given topics, which is a risk if the same trends continue into the future.