1 Introduction

Answer Set Programming (ASP; Lifschitz Reference Lifschitz2002) allows us to address knowledge-intense search and optimization problems in a greatly declarative way due to its integrated modeling, grounding, and solving workflow (Gebser and Schaub Reference Gebser and Schaub2016; Kaufmann et al. Reference Kaufmann, Leone, Perri and Schaub2016). Problems are modeled in a rule-based logical language featuring variables, function symbols, recursion, and aggregates, among others. Moreover, the underlying semantics allows us to express defaults and reachability in an easy way. A corresponding logic program is then turned into a propositional format by systematically replacing all variables by variable-free terms. This process is called grounding. Finally, the actual ASP solver takes the resulting propositional version of the original program and computes its answer sets.

Given that both grounding and solving constitute the computational cornerstones of ASP, it is surprising that the importance of grounding has somehow been eclipsed by that of solving. This is nicely reflected by the unbalanced number of implementations. With lparse (Syrjänen Reference Syrjänen2001b), (the grounder in) dlv (Faber et al. Reference Faber, Leone and Perri2012), and gringo (Gebser et al. Reference Gebser, Kaminski, König, Schaub and Notes2011), three grounder implementations face dozens of solver implementations, among them smodels (Simons et al. Reference Simons, Niemelä and Soininen2002), (the solver in) dlv (Leone et al. Reference Leone, Pfeifer, Faber, Eiter, Gottlob, Perri and Scarcello2006), assat (Lin and Zhao Reference Lin and Zhao2004), cmodels (Giunchiglia et al. Reference Giunchiglia, Lierler and Maratea2006), clasp (Gebser et al. Reference Gebser, Kaufmann and Schaub2012), wasp (Alviano et al. Reference Alviano, Faber and Gebser2015) just to name the major ones. What caused this imbalance? One reason may consist in the high expressiveness of ASP’s modeling language and the resulting algorithmic intricacy (Gebser et al. Reference Gebser, Kaminski, König, Schaub and Notes2011). Another may lie in the popular viewpoint that grounding amounts to database materialization, and thus that most fundamental research questions have been settled. And finally the semantic foundations of full-featured ASP modeling languages have been established only recently (Harrison et al. Reference Harrison, Lifschitz and Yang2014; Gebser et al. Reference Gebser, Harrison, Kaminski, Lifschitz and Schaub2015a), revealing the semantic gap to the just mentioned idealized understanding of grounding. In view of this, research on grounding focused on algorithm and system design (Faber et al. Reference Faber, Leone and Perri2012; Gebser et al. Reference Gebser, Kaminski, König, Schaub and Notes2011) and the characterization of language fragments guaranteeing finite propositional representations (Syrjänen Reference Syrjänen2001b; Gebser et al. Reference Gebser, Schaub and Thiele2007; Lierler and Lifschitz Reference Lierler and Lifschitz2009; Calimeri et al. Reference Calimeri, Cozza, Ianni and Leone2008).

As a consequence, the theoretical foundations of grounding are much less explored than those of solving. While there are several alternative ways to characterize the answer sets of a logic program (Lifschitz Reference Lifschitz2008), and thus the behavior of a solver, we still lack indepth formal characterizations of the input–output behavior of ASP grounders. Although we can describe the resulting propositional program up to semantic equivalence, we have no formal means to delineate the actual set of rules.

To this end, grounding involves some challenging intricacies. First of all, the entire set of systematically instantiated rules is infinite in the worst – yet not uncommon – case. For a simple example, consider the program:

This program induces an infinite set of variable-free terms, viz. a, f(a),

![]() $f(f(a)),\dots$

, that leads to an infinite propositional program by systematically replacing variable X by all these terms in the second rule, viz.

$f(f(a)),\dots$

, that leads to an infinite propositional program by systematically replacing variable X by all these terms in the second rule, viz.

On the other hand, modern grounders only produce the fact p(a) and no instances of the second rule, which is semantically equivalent to the infinite program. As well, ASP’s modeling language comprises (possibly recursive) aggregates, whose systematic grounding may be infinite in itself. To illustrate this, let us extend the above program with the rule

deriving q when the number of satisfied instances of p is one. Analogous to above, the systematic instantiation of the aggregate’s element results in an infinite set, viz.

Again, a grounder is able to reduce the rule in Equation (1) to the fact q since only p(a) is obtained in our example. That is, it detects that the set amounts to the singleton

![]() $\{a\mathrel{:}p(a)\}$

, which satisfies the aggregate. After removing the rule’s (satisfied) antecedent, it produces the fact q. In fact, a solver expects a finite set of propositional rules including aggregates over finitely many objects only. Hence, in practice, the characterization of the grounding result boils down to identifying a finite yet semantically equivalent set of rules (whenever possible). Finally, in practice, grounding involves simplifications whose application depends on the ordering of rules in the input. In fact, shuffling a list of propositional rules only affects the order in which a solver enumerates answer sets, whereas shuffling a logic program before grounding may lead to different though semantically equivalent sets of rules. To see this, consider the program:

$\{a\mathrel{:}p(a)\}$

, which satisfies the aggregate. After removing the rule’s (satisfied) antecedent, it produces the fact q. In fact, a solver expects a finite set of propositional rules including aggregates over finitely many objects only. Hence, in practice, the characterization of the grounding result boils down to identifying a finite yet semantically equivalent set of rules (whenever possible). Finally, in practice, grounding involves simplifications whose application depends on the ordering of rules in the input. In fact, shuffling a list of propositional rules only affects the order in which a solver enumerates answer sets, whereas shuffling a logic program before grounding may lead to different though semantically equivalent sets of rules. To see this, consider the program:

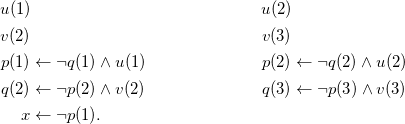

This program has two answer sets; both contain p(1) and q(3), while one contains q(2) and the other p(2). Systematically grounding the program yields the obvious four rules. However, depending upon the order, in which the rules are passed to a grounder, it already produces either the fact p(1) or q(3) via simplification. Clearly, all three programs are distinct but semantically equivalent in sharing the above two answer sets.

Our elaboration of the foundations of ASP grounding rests upon the semantics of ASP’s modeling language (Harrison et al. Reference Harrison, Lifschitz and Yang2014; Gebser et al. Reference Gebser, Harrison, Kaminski, Lifschitz and Schaub2015a), which captures the two aforementioned sources of infinity by associating non-ground logic programs with infinitary propositional formulas (Truszczyński Reference Truszczyński2012). Our main result shows that the stable models of a non-ground input program coincide with the ones of the ground output program returned by our grounding algorithm upon termination. In formal terms, this means that the stable models of the infinitary formula associated with the input program coincide with the ones of the resulting ground program. Clearly, the resulting program must be finite and consist of finitary subformulas only. A major part of our work is thus dedicated to equivalence preserving transformations between ground programs. In more detail, we introduce a formal characterization of grounding algorithms in terms of (fixed point) operators. A major role is played by specific well-founded operators whose associated models provide semantic guidance for delineating the result of grounding. More precisely, we show how to obtain a finitary propositional formula capturing a logic program whenever the corresponding well-founded model is finite, and notably how this transfers to building a finite propositional program from an input program during grounding. The two key instruments accomplishing this are dedicated forms of program simplification and aggregate translation, each addressing one of the two sources of infinity in the above example. In practice, however, all these concepts are subject to approximation, which leads to the order-dependence observed in the last example.

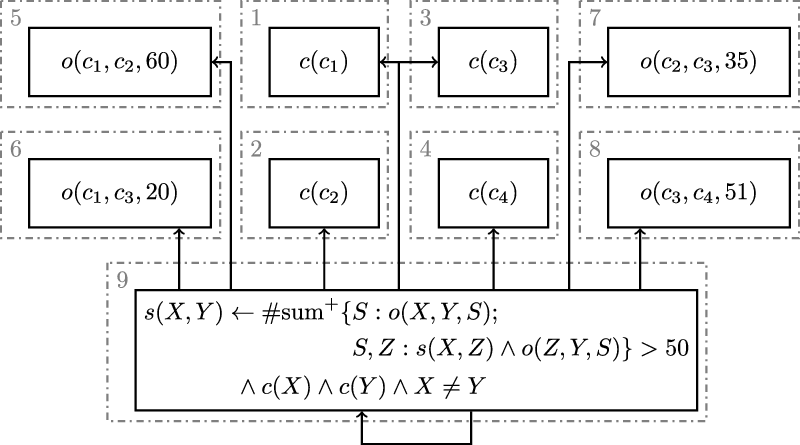

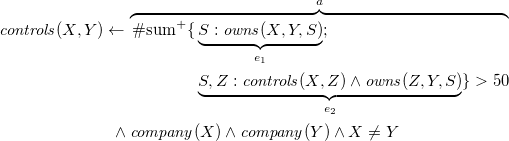

We address an expressive class of logic programs that incorporates recursive aggregates and thus amounts to the scope of existing ASP modeling languages (Gebser et al. Reference Gebser, Kaminski, Kaufmann, Lindauer, Ostrowski, Romero, Schaub and Thiele2015b). This is accompanied with an algorithmic framework detailing the grounding of recursive aggregates. The given grounding algorithms correspond essentially to the ones used in the ASP grounder gringo (Gebser et al. Reference Gebser, Kaminski, König, Schaub and Notes2011). In this way, our framework provides a formal characterization of one of the most widespread grounding systems. In fact, modern grounders like (the one in) dlv (Faber et al. Reference Faber, Leone and Perri2012) or gringo (Gebser et al. Reference Gebser, Kaminski, König, Schaub and Notes2011) are based on database evaluation techniques (Ullman Reference Ullman1988; Abiteboul et al. Reference Abiteboul, Hull and Vianu1995). The instantiation of a program is seen as an iterative bottom-up process starting from the program’s facts while being guided by the accumulation of variable-free atoms possibly derivable from the rules seen so far. During this process, a ground rule is produced if its positive body atoms belong to the accumulated atoms, in which case its head atom is added as well. This process is repeated until no further such atoms can be added. From an algorithmic perspective, we show how a grounding framework (relying upon database evaluation techniques) can be extended to incorporate recursive aggregates.

Our paper is organized as follows.

Section 2 lays the basic foundations of our approach. We start in Section 2.1 by recalling definitions of (monotonic) operators on lattices; they constitute the basic building blocks of our characterization of grounding algorithms. We then review infinitary formulas along with their stable and well-founded semantics in Section 2.2, 2.3 and 2.4 respectively. In this context, we explore several operators and define a class of infinitary logic programs that allows us to capture full-featured ASP languages with (recursive) aggregates. Interestingly, we have to resort to concepts borrowed from id-logic (Bruynooghe et al. Reference Bruynooghe, Denecker and Truszczyński2016; Truszczyński Reference Truszczyński2012) to obtain monotonic operators that are indispensable for capturing iterative algorithms. Notably, the id-well-founded model can be used for approximating regular stable models. Finally, we define in Section 2.5 our concept of program simplification and elaborate upon its semantic properties. The importance of program simplification can be read off two salient properties. First, it results in a finite program whenever the interpretation used for simplification is finite. And second, it preserves all stable models when simplified with the id-well-founded model of the program.

Section 3 is dedicated to the formal foundations of component-wise grounding. As mentioned, each rule is instantiated in the context of all atoms being possibly derivable up to this point. In addition, grounding has to take subsequent atom definitions into account. To this end, we extend well-known operators and resulting semantic concepts with contextual information, usually captured by two- and four-valued interpretations, respectively, and elaborate upon their formal properties that are relevant to grounding. In turn, we generalize the contextual operators and semantic concepts to sequences of programs in order to reflect component-wise grounding. The major emerging concept is essentially a well-founded model for program sequences that takes backward and forward contextual information into account. We can then iteratively compute this model to approximate the well-founded model of the entire program. This model-theoretic concept can be used for governing an ideal grounding process.

Section 4 turns to logic programs with variables and aggregates. We align the semantics of such aggregate programs with the one of Ferraris (Reference Ferraris2011) but consider infinitary formulas (Harrison et al. Reference Harrison, Lifschitz and Yang2014). In view of grounding aggregates, however, we introduce an alternative translation of aggregates that is strongly equivalent to that of Ferraris but provides more precise well-founded models. In turn, we refine this translation to be bound by an interpretation so that it produces finitary formulas whenever this interpretation is finite. Together, the program simplification introduced in Section 2.5 and aggregate translation provide the basis for turning programs with aggregates into semantically equivalent finite programs with finitary subformulas.

Section 5 further refines our semantic approach to reflect actual grounding processes. To this end, we define the concept of an instantiation sequence based on rule dependencies. We then use the contextual operators of Section 3 to define approximate models of instantiation sequences. While approximate models are in general less precise than well-founded ones, they are better suited for on-the-fly grounding along an instantiation sequence. Nonetheless, they are strong enough to allow for completely evaluating stratified programs.

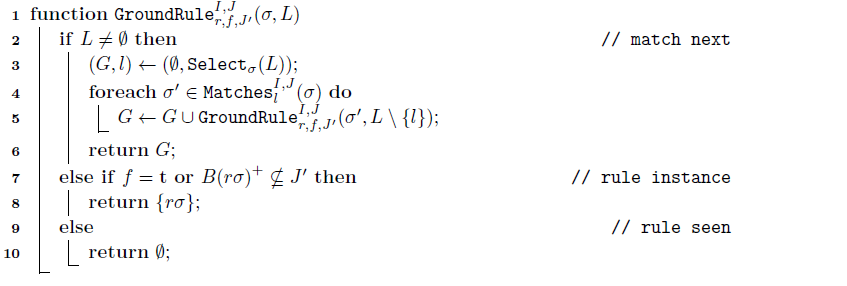

Section 6 lays out the basic algorithms for grounding rules, components, and entire programs and characterizes their output in terms of the semantic concepts developed in the previous sections. Of particular interest is the treatment of aggregates, which are decomposed into dedicated normal rules before grounding, and reassembled afterward. This allows us to ground rules with aggregates by means of grounding algorithms for normal rules. Finally, we show that our grounding algorithm guarantees that an obtained finite ground program is equivalent to the original non-ground program.

The previous sections focus on the theoretical and algorithmic cornerstones of grounding. Section 7 refines these concepts by further detailing aggregate propagation, algorithm specifics, and the treatment of language constructs from gringo’s input language.

We relate our contributions to the state of the art in Section 8 and summarize it in Section 9.

Although the developed approach is implemented in gringo series 4 and 5, their high degree of sophistication make it hard to retrace the algorithms from Section 6. Hence, to ease comprehensibility, we have moreover implemented the presented approach in

![]() $\mu$

-gringo

Footnote 1

in a transparent way and equipped it with means for retracing the developed concepts during grounding. This can thus be seen as the practical counterpart to the formal elaboration given below. Also, this system may enable some readers to construct and to experiment with own grounder extensions.

$\mu$

-gringo

Footnote 1

in a transparent way and equipped it with means for retracing the developed concepts during grounding. This can thus be seen as the practical counterpart to the formal elaboration given below. Also, this system may enable some readers to construct and to experiment with own grounder extensions.

This paper draws on material presented during an invited talk at the third workshop on grounding, transforming, and modularizing theories with variables (Gebser et al. Reference Gebser, Kaminski and Schaub2015c).

2 Foundations

2.1 Operators on lattices

This section recalls basic concepts on operators on complete lattices.

A complete lattice is a partially ordered set

![]() $(L,\leq)$

in which every subset

$(L,\leq)$

in which every subset

![]() $S \subseteq L$

has a greatest lower bound and a least upper bound in

$S \subseteq L$

has a greatest lower bound and a least upper bound in

![]() $(L,\leq)$

.

$(L,\leq)$

.

An operator O on lattice

![]() $(L,\leq)$

is a function from L to L. It is monotone if

$(L,\leq)$

is a function from L to L. It is monotone if

![]() $x \leq y$

implies

$x \leq y$

implies

![]() ${{O}(x)} \leq {{O}(y)}$

for each

${{O}(x)} \leq {{O}(y)}$

for each

![]() $x,y \in L$

; and it is antimonotone if

$x,y \in L$

; and it is antimonotone if

![]() $x \leq y$

implies

$x \leq y$

implies

![]() ${{O}(y)} \leq {{O}(x)}$

for each

${{O}(y)} \leq {{O}(x)}$

for each

![]() $x,y \in L$

.

$x,y \in L$

.

Let O be an operator on lattice

![]() $(L,\leq)$

. A prefixed point of O is an

$(L,\leq)$

. A prefixed point of O is an

![]() $x \in L$

such that

$x \in L$

such that

![]() ${{O}(x)} \leq x$

. A postfixed point of O is an

${{O}(x)} \leq x$

. A postfixed point of O is an

![]() $x \in L$

such that

$x \in L$

such that

![]() $x \leq {{O}(x)}$

. A fixed point of O is an

$x \leq {{O}(x)}$

. A fixed point of O is an

![]() $x \in L$

such that

$x \in L$

such that

![]() $x = {{O}(x)}$

, that is, it is both a prefixed and a postfixed point.

$x = {{O}(x)}$

, that is, it is both a prefixed and a postfixed point.

Theorem 1. (Knaster-Tarski; Tarski Reference Tarski1955) Let O be a monotone operator on complete lattice

![]() $(L,\leq)$

. Then, we have the following properties:

$(L,\leq)$

. Then, we have the following properties:

-

(a) Operator O has a least fixed and prefixed point which are identical.

-

(b) Operator O has a greatest fixed and postfixed point which are identical.

-

(c) The fixed points of O form a complete lattice.

2.2 Formulas and interpretations

We begin with a propositional signature

![]() ${\Sigma}$

consisting of a set of atoms. Following Truszczyński (Reference Truszczyński2012), we define the sets

${\Sigma}$

consisting of a set of atoms. Following Truszczyński (Reference Truszczyński2012), we define the sets

![]() ${\mathcal{F}}_0,{\mathcal{F}}_1, \ldots$

of formulas as follows:

${\mathcal{F}}_0,{\mathcal{F}}_1, \ldots$

of formulas as follows:

-

${\mathcal{F}}_0$

is the set of all propositional atoms in

${\mathcal{F}}_0$

is the set of all propositional atoms in

${\Sigma}$

,

${\Sigma}$

, -

${\mathcal{F}}_{i+1}$

is the set of all elements of

${\mathcal{F}}_{i+1}$

is the set of all elements of

${\mathcal{F}}_i$

, all expressions

${\mathcal{F}}_i$

, all expressions

${\mathcal{H}}^\wedge$

and

${\mathcal{H}}^\wedge$

and

${\mathcal{H}}^\vee$

with

${\mathcal{H}}^\vee$

with

${\mathcal{H}} \subseteq {\mathcal{F}}_i$

, and all expressions

${\mathcal{H}} \subseteq {\mathcal{F}}_i$

, and all expressions

$F \rightarrow G$

with

$F \rightarrow G$

with

$F,G\in{\mathcal{F}}_i$

.

$F,G\in{\mathcal{F}}_i$

.

The set

![]() ${\mathcal{F}} = \bigcup_{i=0}^\infty{\mathcal{F}}_i$

contains all (infinitary propositional) formulas over

${\mathcal{F}} = \bigcup_{i=0}^\infty{\mathcal{F}}_i$

contains all (infinitary propositional) formulas over

![]() ${\Sigma}$

.

${\Sigma}$

.

In the following, we use the shortcuts

-

$\top = \emptyset^\wedge$

and

$\top = \emptyset^\wedge$

and

$\bot = \emptyset^\vee$

,

$\bot = \emptyset^\vee$

, -

${\neg} F = F \rightarrow \bot$

where F is a formula, and

${\neg} F = F \rightarrow \bot$

where F is a formula, and -

$F \wedge G = {\{ F, G \}}^\wedge$

and

$F \wedge G = {\{ F, G \}}^\wedge$

and

$F \vee G = {\{ F, G \}}^\vee$

where F and G are formulas.

$F \vee G = {\{ F, G \}}^\vee$

where F and G are formulas.

We say that a formula is finitary, if it has a finite number of subformulas.

An occurrence of a subformula in a formula is called positive, if the number of implications containing that occurrence in the antecedent is even, and strictly positive if that number is zero; if that number is odd the occurrence is negative. The sets

![]() ${{F}^+}$

and

${{F}^+}$

and

![]() ${{F}^-}$

gather all atoms occurring positively or negatively in formula F, respectively; if applied to a set of formulas, both expressions stand for the union of the respective atoms in the formulas. Also, we define

${{F}^-}$

gather all atoms occurring positively or negatively in formula F, respectively; if applied to a set of formulas, both expressions stand for the union of the respective atoms in the formulas. Also, we define

![]() ${{F}^\pm}={{F}^+}\cup{{F}^-}$

as the set of all atoms occurring in F.

${{F}^\pm}={{F}^+}\cup{{F}^-}$

as the set of all atoms occurring in F.

A two-valued interpretation over signature

![]() ${\Sigma}$

is a set I of propositional atoms such that

${\Sigma}$

is a set I of propositional atoms such that

![]() $I \subseteq {\Sigma}$

. Atoms in an interpretation I are considered true and atoms in

$I \subseteq {\Sigma}$

. Atoms in an interpretation I are considered true and atoms in

![]() ${\Sigma} \setminus I$

as false. The set of all interpretations together with the

${\Sigma} \setminus I$

as false. The set of all interpretations together with the

![]() $\subseteq$

relation forms a complete lattice.

$\subseteq$

relation forms a complete lattice.

The satisfaction relation between interpretations and formulas is defined as follows:

-

$I \models a$

for atoms a if

$I \models a$

for atoms a if

$a \in I$

,

$a \in I$

, -

$I \models {\mathcal{H}}^\wedge$

if

$I \models {\mathcal{H}}^\wedge$

if

$I \models F$

for all

$I \models F$

for all

$F \in {\mathcal{H}}$

,

$F \in {\mathcal{H}}$

, -

$I \models {\mathcal{H}}^\vee$

if

$I \models {\mathcal{H}}^\vee$

if

$I \models F$

for some

$I \models F$

for some

$F \in {\mathcal{H}}$

, and

$F \in {\mathcal{H}}$

, and -

$I \models F \rightarrow G$

if

$I \models F \rightarrow G$

if

$I \not\models F$

or

$I \not\models F$

or

$I \models G$

.

$I \models G$

.

An interpretation I is a model of a set

![]() ${\mathcal{H}}$

of formulas, written

${\mathcal{H}}$

of formulas, written

![]() $I \models {\mathcal{H}}$

, if it satisfies each formula in the set.

$I \models {\mathcal{H}}$

, if it satisfies each formula in the set.

In the following, all atoms, formulas, and interpretations operate on the same (implicit) signature, unless mentioned otherwise.

2.3 Logic programs and stable models

Our terminology in this section keeps following the one of Truszczyński (Reference Truszczyński2012).

The reduct

![]() ${F^{I}}$

of a formula F w.r.t. an interpretation I is defined as:

${F^{I}}$

of a formula F w.r.t. an interpretation I is defined as:

-

$\bot$

if

$\bot$

if

$I \not\models F$

,

$I \not\models F$

, -

a if

$I \models F$

and

$I \models F$

and

$F = a\in{\mathcal{F}}_0$

,

$F = a\in{\mathcal{F}}_0$

, -

${\{ {G^{I}} \mid G \in {\mathcal{H}}\}}^\wedge$

if

${\{ {G^{I}} \mid G \in {\mathcal{H}}\}}^\wedge$

if

$I \models F$

and

$I \models F$

and

$F = {\mathcal{H}}^\wedge$

,

$F = {\mathcal{H}}^\wedge$

, -

${\{ {G^{I}} \mid G \in {\mathcal{H}}\}}^\vee$

if

${\{ {G^{I}} \mid G \in {\mathcal{H}}\}}^\vee$

if

$I \models F$

and

$I \models F$

and

$F = {\mathcal{H}}^\vee$

, and

$F = {\mathcal{H}}^\vee$

, and -

${G^{I}} \rightarrow {H^{I}}$

if

${G^{I}} \rightarrow {H^{I}}$

if

$I \models F$

and

$I \models F$

and

$F = G \rightarrow H$

.

$F = G \rightarrow H$

.

An interpretation I is a stable model of a formula F if it is among the (set inclusion) minimal models of

![]() ${F^{I}}$

.

${F^{I}}$

.

Note that the reduct removes (among other unsatisfied subformulas) all occurrences of atoms that are false in I. Thus, the satisfiability of the reduct does not depend on such atoms, and all minimal models of

![]() ${F^{I}}$

are subsets of I. Hence, if I is a stable model of F, then it is the only minimal model of

${F^{I}}$

are subsets of I. Hence, if I is a stable model of F, then it is the only minimal model of

![]() ${F^{I}}$

.

${F^{I}}$

.

Sets

![]() $\mathcal{H}_1$

and

$\mathcal{H}_1$

and

![]() $\mathcal{H}_2$

of infinitary formulas are equivalent if they have the same stable models and classically equivalent if they have the same models; they are strongly equivalent if, for any set

$\mathcal{H}_2$

of infinitary formulas are equivalent if they have the same stable models and classically equivalent if they have the same models; they are strongly equivalent if, for any set

![]() $\mathcal{H}$

of infinitary formulas,

$\mathcal{H}$

of infinitary formulas,

![]() $\mathcal{H}_1\cup\mathcal{H}$

and

$\mathcal{H}_1\cup\mathcal{H}$

and

![]() $\mathcal{H}_2\cup\mathcal{H}$

are equivalent. As shown by Harrison et al. (Reference Harrison, Lifschitz, Pearce and Valverde2017), this also allows for replacing a part of any formula with a strongly equivalent formula without changing the set of stable models.

$\mathcal{H}_2\cup\mathcal{H}$

are equivalent. As shown by Harrison et al. (Reference Harrison, Lifschitz, Pearce and Valverde2017), this also allows for replacing a part of any formula with a strongly equivalent formula without changing the set of stable models.

In the following, we consider implications with atoms as consequent and formulas as antecedent. As common in logic programming, they are referred to as rules, heads, and bodies, respectively, and denoted by reversing the implication symbol. More precisely, an

![]() ${\mathcal{F}}$

-program is set of rules of form

${\mathcal{F}}$

-program is set of rules of form

![]() $h \leftarrow F$

where

$h \leftarrow F$

where

![]() $h \in {\mathcal{F}}_0$

and

$h \in {\mathcal{F}}_0$

and

![]() $F\in{\mathcal{F}}$

. We use

$F\in{\mathcal{F}}$

. We use

![]() ${H(h \leftarrow F)} = h$

to refer to rule heads and

${H(h \leftarrow F)} = h$

to refer to rule heads and

![]() ${B(h \leftarrow F)} = F$

to refer to rule bodies. We extend this by letting

${B(h \leftarrow F)} = F$

to refer to rule bodies. We extend this by letting

![]() ${H(P)}\ = \{ {H(r)} \mid r \in P \}$

and

${H(P)}\ = \{ {H(r)} \mid r \in P \}$

and

![]() ${B(P)}\ = \{ {B(r)} \mid r \in P \}$

for any program P.

${B(P)}\ = \{ {B(r)} \mid r \in P \}$

for any program P.

An interpretation I is a model of an

![]() ${\mathcal{F}}$

-program P, written

${\mathcal{F}}$

-program P, written

![]() $I \models P$

, if

$I \models P$

, if

![]() $I \models {B(r)} \rightarrow {H(r)}$

for all

$I \models {B(r)} \rightarrow {H(r)}$

for all

![]() $r \in P$

. The latter is also written as

$r \in P$

. The latter is also written as

![]() $I \models r$

. We define the reduct of P w.r.t. I as

$I \models r$

. We define the reduct of P w.r.t. I as

![]() ${P^{I}} = \{ {r^{I}} \mid r \in P \}$

where

${P^{I}} = \{ {r^{I}} \mid r \in P \}$

where

![]() ${r^{I}} = {H(r)} \leftarrow {{B(r)}^{I}}$

. As above, an interpretation I is a stable model of P if I is among the minimal models of

${r^{I}} = {H(r)} \leftarrow {{B(r)}^{I}}$

. As above, an interpretation I is a stable model of P if I is among the minimal models of

![]() ${P^{I}}$

. Just like the original definition of Gelfond and Lifschitz (Reference Gelfond and Lifschitz1988), the reduct of such programs leaves rule heads intact and only reduces rule bodies. (This feature fits well with the various operators defined in the sequel.)

${P^{I}}$

. Just like the original definition of Gelfond and Lifschitz (Reference Gelfond and Lifschitz1988), the reduct of such programs leaves rule heads intact and only reduces rule bodies. (This feature fits well with the various operators defined in the sequel.)

This program-oriented reduct yields the same stable models as obtained by applying the full reduct to the corresponding infinitary formula.

Proposition 2 Let P be an

![]() ${\mathcal{F}}$

-program.

${\mathcal{F}}$

-program.

Then, the stable models of formula

![]() ${\{ {B(r)} \rightarrow {H(r)} \mid r \in P \}}^\wedge$

are the same as the stable models of program P.

${\{ {B(r)} \rightarrow {H(r)} \mid r \in P \}}^\wedge$

are the same as the stable models of program P.

For programs, Truszczyński (Reference Truszczyński2012) introduces in an alternative reduct, replacing each negatively occurring atom with

![]() $\bot$

, if it is falsified, and with

$\bot$

, if it is falsified, and with

![]() $\top$

, otherwise. More precisely, the so-called id-reduct

$\top$

, otherwise. More precisely, the so-called id-reduct

![]() ${F_{I}}$

of a formula F w.r.t. an interpretation I is defined as

${F_{I}}$

of a formula F w.r.t. an interpretation I is defined as

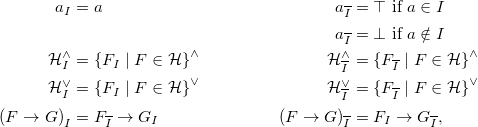

\begin{align*}{a_{I}} & = a &{a_{\overline{I}}} & = \top \text{ if } a \in I \\& &{a_{\overline{I}}} & = \bot \text{ if } a \notin I \\{{\mathcal{H}}^{\wedge}_{I}} & = {\{ {F_{I}} \mid F \in {\mathcal{H}} \}}^\wedge &{{\mathcal{H}}^{\wedge}_{\overline{I}}} & = {\{ {F_{\overline{I}}} \mid F \in {\mathcal{H}} \}}^\wedge \\{{\mathcal{H}}^{\vee}_{I}} & = {\{ {F_{I}} \mid F \in {\mathcal{H}} \}}^\vee &{{\mathcal{H}}^{\vee}_{\overline{I}}} & = {\{ {F_{\overline{I}}} \mid F \in {\mathcal{H}} \}}^\vee \\{{(F \rightarrow G)}_{I}} & = {F_{\overline{I}}} \rightarrow {G_{I}} &{{(F \rightarrow G)}_{\overline{I}}} & = {F_{I}} \rightarrow {G_{\overline{I}}},\end{align*}

\begin{align*}{a_{I}} & = a &{a_{\overline{I}}} & = \top \text{ if } a \in I \\& &{a_{\overline{I}}} & = \bot \text{ if } a \notin I \\{{\mathcal{H}}^{\wedge}_{I}} & = {\{ {F_{I}} \mid F \in {\mathcal{H}} \}}^\wedge &{{\mathcal{H}}^{\wedge}_{\overline{I}}} & = {\{ {F_{\overline{I}}} \mid F \in {\mathcal{H}} \}}^\wedge \\{{\mathcal{H}}^{\vee}_{I}} & = {\{ {F_{I}} \mid F \in {\mathcal{H}} \}}^\vee &{{\mathcal{H}}^{\vee}_{\overline{I}}} & = {\{ {F_{\overline{I}}} \mid F \in {\mathcal{H}} \}}^\vee \\{{(F \rightarrow G)}_{I}} & = {F_{\overline{I}}} \rightarrow {G_{I}} &{{(F \rightarrow G)}_{\overline{I}}} & = {F_{I}} \rightarrow {G_{\overline{I}}},\end{align*}

where a is an atom,

![]() ${\mathcal{H}}$

a set of formulas, and F and G are formulas.

${\mathcal{H}}$

a set of formulas, and F and G are formulas.

The id-reduct of an

![]() ${\mathcal{F}}$

-program P w.r.t. an interpretation I is

${\mathcal{F}}$

-program P w.r.t. an interpretation I is

![]() ${P_{I}} = \{ {r_{I}} \mid r \in P \}$

where

${P_{I}} = \{ {r_{I}} \mid r \in P \}$

where

![]() ${r_{I}} = {H(r)} \leftarrow {{B(r)}_{I}}$

. As with

${r_{I}} = {H(r)} \leftarrow {{B(r)}_{I}}$

. As with

![]() ${r^{I}}$

, the transformation of r into

${r^{I}}$

, the transformation of r into

![]() ${r_{I}}$

leaves the head of r unaffected.

${r_{I}}$

leaves the head of r unaffected.

Example 1 Consider the program containing the single rule

We get the following reduced programs w.r.t. interpretations

![]() $\emptyset$

and

$\emptyset$

and

![]() $\{p\}$

:

$\{p\}$

:

Note that both reducts leave the rule’s head intact.

Extending the definition of positive occurrences, we define a formula as (strictly) positive if all its atoms occur (strictly) positively in the formula. We define an

![]() ${\mathcal{F}}$

-program as (strictly) positive if all its rule bodies are (strictly) positive.

${\mathcal{F}}$

-program as (strictly) positive if all its rule bodies are (strictly) positive.

For example, the program in Example 1 is positive but not strictly positive because the only body atom p appears in the scope of two antecedents within the rule body

![]() ${\neg} {\neg} p$

.

${\neg} {\neg} p$

.

As put forward by van Emden and Kowalski (Reference van Emden and Kowalski1976), we may associate with each program P its one-step provability operator

![]() ${{T}_{P}}$

, defined for any interpretation X as

${{T}_{P}}$

, defined for any interpretation X as

Proposition 3 (Truszczyński Reference Truszczyński2012) Let P be a positive

![]() ${\mathcal{F}}$

-program.

${\mathcal{F}}$

-program.

Then, the operator

![]() ${{T}_{P}}$

is monotone.

${{T}_{P}}$

is monotone.

Fixed points of

![]() ${{T}_{P}}$

are models of P guaranteeing that each contained atom is supported by some rule in P; prefixed points of

${{T}_{P}}$

are models of P guaranteeing that each contained atom is supported by some rule in P; prefixed points of

![]() ${{T}_{P}}$

correspond to the models of P. According to Theorem 1(a), the

${{T}_{P}}$

correspond to the models of P. According to Theorem 1(a), the

![]() ${{T}_{P}}$

operator has a least fixed point for positive

${{T}_{P}}$

operator has a least fixed point for positive

![]() ${\mathcal{F}}$

-programs. We refer to this fixed point as the least model of P and write it as

${\mathcal{F}}$

-programs. We refer to this fixed point as the least model of P and write it as

![]() ${\mathit{LM}(P)}$

.

${\mathit{LM}(P)}$

.

Observing that the id-reduct replaces all negative occurrences of atoms, any id-reduct

![]() ${P_{I}}$

of a program w.r.t. an interpretation I is positive and thus possesses a least model

${P_{I}}$

of a program w.r.t. an interpretation I is positive and thus possesses a least model

![]() ${\mathit{LM}({P_{I}})}$

. This gives rise to the following definition of a stable operator (Truszczyński Reference Truszczyński2012): Given an

${\mathit{LM}({P_{I}})}$

. This gives rise to the following definition of a stable operator (Truszczyński Reference Truszczyński2012): Given an

![]() ${\mathcal{F}}$

-program P, its id-stable operator is defined for any interpretation I as

${\mathcal{F}}$

-program P, its id-stable operator is defined for any interpretation I as

The fixed points of

![]() ${{S}_{P}}$

are the id-stable models of P.

${{S}_{P}}$

are the id-stable models of P.

Note that neither the program reduct

![]() ${P^{I}}$

nor the formula reduct

${P^{I}}$

nor the formula reduct

![]() ${F^{I}}$

guarantee (least) models. Also, stable models and id-stable models do not coincide in general.

${F^{I}}$

guarantee (least) models. Also, stable models and id-stable models do not coincide in general.

Example 2 Reconsider the program from Example 1, comprising rule

This program has the two stable models

![]() $\emptyset$

and

$\emptyset$

and

![]() $\{p\}$

, but the empty model is the only id-stable model.

$\{p\}$

, but the empty model is the only id-stable model.

Proposition 4 (Truszczyński Reference Truszczyński2012) Let P be an

![]() ${\mathcal{F}}$

-program.

${\mathcal{F}}$

-program.

Then, the id-stable operator

![]() ${{S}_{P}}$

is antimonotone.

${{S}_{P}}$

is antimonotone.

No analogous antimonotone operator is obtainable for

![]() ${\mathcal{F}}$

-programs by using the program reduct

${\mathcal{F}}$

-programs by using the program reduct

![]() ${P^{I}}$

(and for general theories with the formula reduct

${P^{I}}$

(and for general theories with the formula reduct

![]() ${F^{I}}$

). To see this, reconsider Example 2 along with its two stable models

${F^{I}}$

). To see this, reconsider Example 2 along with its two stable models

![]() $\emptyset$

and

$\emptyset$

and

![]() $\{p\}$

. Given that both had to be fixed points of such an operator, it would behave monotonically on

$\{p\}$

. Given that both had to be fixed points of such an operator, it would behave monotonically on

![]() $\emptyset$

and

$\emptyset$

and

![]() $\{p\}$

.

$\{p\}$

.

In view of this, we henceforth consider exclusively id-stable operators and drop the prefix “id”. However, we keep the distinction between stable and id-stable models.

Truszczyński (Reference Truszczyński2012) identifies in a class of programs for which stable models and id-stable models coincide. The set

![]() ${\mathcal{N}}$

consists of all formulas F such that any implication in F has

${\mathcal{N}}$

consists of all formulas F such that any implication in F has

![]() $\bot$

as consequent and no occurrences of implications in its antecedent. An

$\bot$

as consequent and no occurrences of implications in its antecedent. An

![]() ${\mathcal{N}}$

-program consists of rules of form

${\mathcal{N}}$

-program consists of rules of form

![]() $ h \leftarrow F $

where

$ h \leftarrow F $

where

![]() $h \in {\mathcal{F}}_0$

and

$h \in {\mathcal{F}}_0$

and

![]() $F \in {\mathcal{N}}$

.

$F \in {\mathcal{N}}$

.

Proposition 5 (Truszczyński Reference Truszczyński2012) Let P be an

![]() ${\mathcal{N}}$

-program.

${\mathcal{N}}$

-program.

Then, the stable and id-stable models of P coincide.

Note that a positive

![]() ${\mathcal{N}}$

-program is also strictly positive.

${\mathcal{N}}$

-program is also strictly positive.

2.4 Well-founded models

Our terminology in this section follows the one of Truszczyński (Reference Truszczyński, Kifer and Liu2018) and traces back to the early work of Belnap (Reference Belnap1977) and Fitting (Reference Fitting2002). Footnote 2

We deal with pairs of sets and extend the basic set relations and operations accordingly. Given sets I’, I, J’, J, and X, we define:

-

$(I',J') \mathrel{\bar{\prec}} (I,J)$

if

$(I',J') \mathrel{\bar{\prec}} (I,J)$

if

$I' \prec I$

and

$I' \prec I$

and

$J' \prec J$

for

$J' \prec J$

for

$(\bar{\prec}, \prec) \in \{ (\sqsubset, \subset), (\sqsubseteq, \subseteq) \}$

$(\bar{\prec}, \prec) \in \{ (\sqsubset, \subset), (\sqsubseteq, \subseteq) \}$

-

$(I',J') \mathrel{\bar{\circ}} (I,J) = (I' \circ I, J' \circ J)$

for

$(I',J') \mathrel{\bar{\circ}} (I,J) = (I' \circ I, J' \circ J)$

for

$(\bar{\circ}, \circ) \in \{ (\sqcup, \cup), (\sqcap, \cap), (\smallsetminus,\setminus) \}$

$(\bar{\circ}, \circ) \in \{ (\sqcup, \cup), (\sqcap, \cap), (\smallsetminus,\setminus) \}$

-

$(I,J) \mathrel{\bar{\circ}} X = (I,J) \mathrel{\bar{\circ}} (X,X)$

for

$(I,J) \mathrel{\bar{\circ}} X = (I,J) \mathrel{\bar{\circ}} (X,X)$

for

$\bar{\circ} \in \{ \sqcup, \sqcap, \smallsetminus \}$

$\bar{\circ} \in \{ \sqcup, \sqcap, \smallsetminus \}$

A four-valued interpretation over signature

![]() ${\Sigma}$

is represented by a pair

${\Sigma}$

is represented by a pair

![]() $(I,J) \sqsubseteq ({\Sigma}, {\Sigma})$

where I stands for certain and J for possible atoms. Intuitively, an atom that is

$(I,J) \sqsubseteq ({\Sigma}, {\Sigma})$

where I stands for certain and J for possible atoms. Intuitively, an atom that is

-

certain and possible is true,

-

certain but not possible is inconsistent,

-

not certain but possible is unknown, and

-

not certain and not possible is false.

A four-valued interpretation (I’,J’) is more precise than a four-valued interpretation (I,J), written

![]() $(I,J) \leq_p (I',J')$

, if

$(I,J) \leq_p (I',J')$

, if

![]() $I \subseteq I'$

and

$I \subseteq I'$

and

![]() $J' \subseteq J$

. The precision ordering also has an intuitive reading: the more atoms are certain or the fewer atoms are possible, the more precise is an interpretation. The least precise four-valued interpretation over

$J' \subseteq J$

. The precision ordering also has an intuitive reading: the more atoms are certain or the fewer atoms are possible, the more precise is an interpretation. The least precise four-valued interpretation over

![]() ${\Sigma}$

is

${\Sigma}$

is

![]() $(\emptyset,{\Sigma})$

. As with two-valued interpretations, the set of all four-valued interpretations over a signature

$(\emptyset,{\Sigma})$

. As with two-valued interpretations, the set of all four-valued interpretations over a signature

![]() ${\Sigma}$

together with the relation

${\Sigma}$

together with the relation

![]() $\leq_p$

forms a complete lattice. A four-valued interpretation is called inconsistent if it contains an inconsistent atom; otherwise, it is called consistent. It is total whenever it makes all atoms either true or false. Finally, (I,J) is called finite whenever both I and J are finite.

$\leq_p$

forms a complete lattice. A four-valued interpretation is called inconsistent if it contains an inconsistent atom; otherwise, it is called consistent. It is total whenever it makes all atoms either true or false. Finally, (I,J) is called finite whenever both I and J are finite.

Following Truszczyński (Reference Truszczyński, Kifer and Liu2018), we define the id-well-founded operator of an

![]() ${\mathcal{F}}$

-program P for any four-valued interpretation (I,J) as

${\mathcal{F}}$

-program P for any four-valued interpretation (I,J) as

This operator is monotone w.r.t. the precision ordering

![]() $\leq_p$

. Hence, by Theorem 1(b),

$\leq_p$

. Hence, by Theorem 1(b),

![]() ${{W}_{P}}$

has a least fixed point, which defines the id-well-founded model of P, also written as

${{W}_{P}}$

has a least fixed point, which defines the id-well-founded model of P, also written as

![]() ${\mathit{WM}(P)}$

. In what follows, we drop the prefix “id” and simply refer to the id-well-founded model of a program as its well-founded model. (We keep the distinction between stable and id-stable models.)

${\mathit{WM}(P)}$

. In what follows, we drop the prefix “id” and simply refer to the id-well-founded model of a program as its well-founded model. (We keep the distinction between stable and id-stable models.)

Any well-founded model (I,J) of an

![]() ${\mathcal{F}}$

-program P satisfies

${\mathcal{F}}$

-program P satisfies

![]() $I \subseteq J$

.

$I \subseteq J$

.

Lemma 6 Let P be an

![]() ${\mathcal{F}}$

-Program.

${\mathcal{F}}$

-Program.

Then, the well-founded model

![]() ${\mathit{WM}(P)}$

of P is consistent.

${\mathit{WM}(P)}$

of P is consistent.

Example 3 Consider program

![]() ${{P}_{3}}$

consisting of the following rules:

${{P}_{3}}$

consisting of the following rules:

\begin{align*}a & \\b & \leftarrow a \\c & \leftarrow {\neg} b \\d & \leftarrow c \\e & \leftarrow {\neg} d.\end{align*}

\begin{align*}a & \\b & \leftarrow a \\c & \leftarrow {\neg} b \\d & \leftarrow c \\e & \leftarrow {\neg} d.\end{align*}

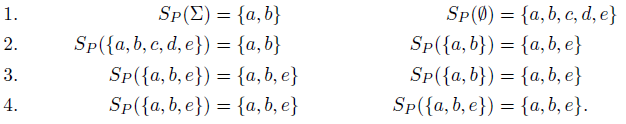

We compute the well-founded model of

![]() ${{P}_{3}} $

in four iterations starting from

${{P}_{3}} $

in four iterations starting from

![]() $(\emptyset,{\Sigma})$

:

$(\emptyset,{\Sigma})$

:

The left and right column reflect the certain and possible atoms computed at each iteration, respectively. We reach a fixed point at Step 4. Accordingly, the well-founded model of

![]() ${{P}_{3}}$

is

${{P}_{3}}$

is

![]() $(\{a, b, e\}, \{a, b, e\})$

.

$(\{a, b, e\}, \{a, b, e\})$

.

Unlike general

![]() ${\mathcal{F}}$

-programs, the class of

${\mathcal{F}}$

-programs, the class of

![]() ${\mathcal{N}}$

-programs warrants the same stable and id-stable models for each of its programs. Unfortunately,

${\mathcal{N}}$

-programs warrants the same stable and id-stable models for each of its programs. Unfortunately,

![]() ${\mathcal{N}}$

-programs are too restricted for our purpose (for instance, for capturing aggregates in rule bodies

Footnote 3

). To this end, we define a more general class of programs and refer to them as

${\mathcal{N}}$

-programs are too restricted for our purpose (for instance, for capturing aggregates in rule bodies

Footnote 3

). To this end, we define a more general class of programs and refer to them as

![]() ${\mathcal{R}}$

-programs. Although id-stable models of

${\mathcal{R}}$

-programs. Although id-stable models of

![]() ${\mathcal{R}}$

-programs may differ from their stable models (see below), their well-founded models encompass both stable and id-stable models. Thus, well-founded models can be used for characterizing stable model-preserving program transformations. In fact, we see in Section 2.5 that the restriction of

${\mathcal{R}}$

-programs may differ from their stable models (see below), their well-founded models encompass both stable and id-stable models. Thus, well-founded models can be used for characterizing stable model-preserving program transformations. In fact, we see in Section 2.5 that the restriction of

![]() ${\mathcal{F}}$

- to

${\mathcal{F}}$

- to

![]() ${\mathcal{R}}$

-programs allows us to provide tighter semantic characterizations of program simplifications.e define

${\mathcal{R}}$

-programs allows us to provide tighter semantic characterizations of program simplifications.e define

![]() ${\mathcal{R}}$

to be the set of all formulas F such that implications in F have no further occurrences of implications in their antecedents. Then, an

${\mathcal{R}}$

to be the set of all formulas F such that implications in F have no further occurrences of implications in their antecedents. Then, an

![]() ${\mathcal{R}}$

-program consists of rules of form

${\mathcal{R}}$

-program consists of rules of form

![]() $h \leftarrow F$

where

$h \leftarrow F$

where

![]() $h \in {\mathcal{F}}_0$

and

$h \in {\mathcal{F}}_0$

and

![]() $F \in {\mathcal{R}}$

. As with

$F \in {\mathcal{R}}$

. As with

![]() ${\mathcal{N}}$

-programs, a positive

${\mathcal{N}}$

-programs, a positive

![]() ${\mathcal{R}}$

-program is also strictly positive.

${\mathcal{R}}$

-program is also strictly positive.

Our next result shows that (id-)well-founded models can be used for approximating (regular) stable models of

![]() ${\mathcal{R}}$

-programs.

${\mathcal{R}}$

-programs.

Theorem 7 Let P be an

![]() ${\mathcal{R}}$

-program and (I,J) be the well-founded model of P.

${\mathcal{R}}$

-program and (I,J) be the well-founded model of P.

If X is a stable model of P, then

![]() $I \subseteq X \subseteq J$

.

$I \subseteq X \subseteq J$

.

Example 4 Consider the

![]() ${\mathcal{R}}$

-program

${\mathcal{R}}$

-program

![]() ${{P}_{4}}$

:

Footnote 4

${{P}_{4}}$

:

Footnote 4

Observe that

![]() $\{a, b, c\}$

is the only stable model of

$\{a, b, c\}$

is the only stable model of

![]() ${{P}_{4}}$

, the program does not have any id-stable models, and the well-founded model of

${{P}_{4}}$

, the program does not have any id-stable models, and the well-founded model of

![]() ${{P}_{4}}$

is

${{P}_{4}}$

is

![]() $(\emptyset,\{a,b,c\})$

. In accordance with Theorem 7, the stable model of

$(\emptyset,\{a,b,c\})$

. In accordance with Theorem 7, the stable model of

![]() ${{P}_{4}}$

is enclosed in the well-founded model.

${{P}_{4}}$

is enclosed in the well-founded model.

Note that the id-reduct handles

![]() $b \rightarrow a$

the same way as

$b \rightarrow a$

the same way as

![]() ${\neg} b \vee a$

. In fact, the program obtained by replacing

${\neg} b \vee a$

. In fact, the program obtained by replacing

with

is an

![]() ${\mathcal{N}}$

-program and has neither stable nor id-stable models.

${\mathcal{N}}$

-program and has neither stable nor id-stable models.

Further, note that the program in Example 2 is not an

![]() ${\mathcal{R}}$

-program, whereas the one in Example 3 is an

${\mathcal{R}}$

-program, whereas the one in Example 3 is an

![]() ${\mathcal{R}}$

-program.

${\mathcal{R}}$

-program.

2.5 Program simplification

In this section, we define a concept of program simplification relative to a four-valued interpretation and show how its result can be characterized by the semantic means from above. This concept has two important properties. First, it results in a finite program whenever the interpretation used for simplification is finite. And second, it preserves all (regular) stable models of

![]() ${\mathcal{R}}$

-programs when simplified with their well-founded models.

${\mathcal{R}}$

-programs when simplified with their well-founded models.

Definition 1 Let P be an

![]() ${\mathcal{F}}$

-program, and (I,J) be a four-valued interpretation.

${\mathcal{F}}$

-program, and (I,J) be a four-valued interpretation.

We define the simplification of P w.r.t. (I,J) as

For simplicity, we drop parentheses and we write

![]() ${P^{I,J}}$

instead of

${P^{I,J}}$

instead of

![]() ${P^{(I,J)}}$

whenever clear from context.

${P^{(I,J)}}$

whenever clear from context.

The program simplification

![]() ${P^{I,J}}$

acts as a filter eliminating inapplicable rules that fail to satisfy the condition

${P^{I,J}}$

acts as a filter eliminating inapplicable rules that fail to satisfy the condition

![]() $J \models {{B(r)}_{I}}$

. That is, first, all negatively occurring atoms in B(r) are evaluated w.r.t. the certain atoms in I and replaced accordingly by

$J \models {{B(r)}_{I}}$

. That is, first, all negatively occurring atoms in B(r) are evaluated w.r.t. the certain atoms in I and replaced accordingly by

![]() $\bot$

and

$\bot$

and

![]() $\top$

, respectively. Then, it is checked whether the reduced body

$\top$

, respectively. Then, it is checked whether the reduced body

![]() ${{B(r)}_{I}}$

is satisfiable by the possible atoms in J. Only in this case, the rule is kept in

${{B(r)}_{I}}$

is satisfiable by the possible atoms in J. Only in this case, the rule is kept in

![]() $P^{I,J}$

. No simplifications are applied to the remaining rules. This is illustrated in Example 5 below.

$P^{I,J}$

. No simplifications are applied to the remaining rules. This is illustrated in Example 5 below.

Note that

![]() ${P^{I,J}}$

is finite whenever (I,J) is finite.

${P^{I,J}}$

is finite whenever (I,J) is finite.

Observe that for an

![]() ${\mathcal{F}}$

-program P the head atoms in

${\mathcal{F}}$

-program P the head atoms in

![]() ${{P^{I,J}}}$

correspond to the result of applying the provability operator of program

${{P^{I,J}}}$

correspond to the result of applying the provability operator of program

![]() ${P_{I}}$

to the possible atoms in J, that is,

${P_{I}}$

to the possible atoms in J, that is,

![]() ${H({P^{I,J}})} = {{{T}_{{P_{I}}}}(J)}$

.

${H({P^{I,J}})} = {{{T}_{{P_{I}}}}(J)}$

.

Our next result shows that programs simplified with their well-founded model maintain this model.

Theorem 8 Let P be an

![]() ${\mathcal{F}}$

-program and (I,J) be the well-founded model of P.

${\mathcal{F}}$

-program and (I,J) be the well-founded model of P.

Then, P and

![]() ${P^{I,J}}$

have the same well-founded model.

${P^{I,J}}$

have the same well-founded model.

Example 5 In Example 3, we computed the well-founded model

![]() $(\{a, b, e\}, \{a, b, e\})$

of

$(\{a, b, e\}, \{a, b, e\})$

of

![]() ${{P}_{3}}$

. With this, we obtain the simplified program

${{P}_{3}}$

. With this, we obtain the simplified program

![]() ${{P}_{3}'} = {{{P}_{3}}^{\{a, b, e\}, \{a, b, e\}}}$

after dropping

${{P}_{3}'} = {{{P}_{3}}^{\{a, b, e\}, \{a, b, e\}}}$

after dropping

![]() $c \leftarrow {\neg} b$

and

$c \leftarrow {\neg} b$

and

![]() $d \leftarrow c$

:

$d \leftarrow c$

:

\begin{align*}a & \\b & \leftarrow a \\e & \leftarrow {\neg} d.\end{align*}

\begin{align*}a & \\b & \leftarrow a \\e & \leftarrow {\neg} d.\end{align*}

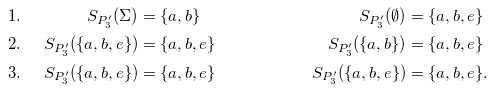

Next, we check that the well-founded model of

![]() ${{P}_{3}'}$

corresponds to the well-founded model of

${{P}_{3}'}$

corresponds to the well-founded model of

![]() ${{P}_{3}}$

:

${{P}_{3}}$

:

We observe that it takes two applications of the well-founded operator to obtain the well-founded model. This could be reduced to one step if atoms false in the well-founded model would be removed from the negative bodies by the program simplification. Keeping them is a design decision with the goal to simplify notation in the following.

The next series of results further elaborates on semantic invariants guaranteed by our concept of program simplification. The first result shows that it preserves all stable models between the sets used for simplification.

Theorem 9 Let P be an

![]() ${\mathcal{F}}$

-program, and I, J, and X be two-valued interpretations.

${\mathcal{F}}$

-program, and I, J, and X be two-valued interpretations.

If

![]() $I \subseteq X \subseteq J$

, then X is a stable model of P iff X is a stable model of

$I \subseteq X \subseteq J$

, then X is a stable model of P iff X is a stable model of

![]() ${P^{I,J}}$

.

${P^{I,J}}$

.

As a consequence, we obtain that

![]() ${\mathcal{R}}$

-programs simplified with their well-founded model also maintain stable models.

${\mathcal{R}}$

-programs simplified with their well-founded model also maintain stable models.

Corollary 10 Let P be an

![]() ${\mathcal{R}}$

-program and (I,J) be the well-founded model of P.

${\mathcal{R}}$

-program and (I,J) be the well-founded model of P.

Then, P and

![]() ${P^{I,J}}$

have the same stable models.

${P^{I,J}}$

have the same stable models.

For instance, the

![]() ${\mathcal{R}}$

-program in Example 3 and its simplification in Example 5 have the same stable model. Unlike this, the program from Example 2 consisting of rule

${\mathcal{R}}$

-program in Example 3 and its simplification in Example 5 have the same stable model. Unlike this, the program from Example 2 consisting of rule

![]() $p \leftarrow {\neg} {\neg} p$

induces two stable models, while its simplification w.r.t. its well-founded model

$p \leftarrow {\neg} {\neg} p$

induces two stable models, while its simplification w.r.t. its well-founded model

![]() $(\emptyset,\emptyset)$

yields an empty program admitting the empty stable model only.

$(\emptyset,\emptyset)$

yields an empty program admitting the empty stable model only.

Note that given an

![]() ${\mathcal{R}}$

-program with a finite well-founded model, we obtain a semantically equivalent finite program via simplification. As detailed in the following sections, grounding algorithms only compute approximations of the well-founded model. However, as long as the approximation is finite, we still obtain semantically equivalent finite programs. This is made precise by the next two results showing that any program between the original and its simplification relative to its well-founded model preserves the well-founded model, and that this extends to all stable models for

${\mathcal{R}}$

-program with a finite well-founded model, we obtain a semantically equivalent finite program via simplification. As detailed in the following sections, grounding algorithms only compute approximations of the well-founded model. However, as long as the approximation is finite, we still obtain semantically equivalent finite programs. This is made precise by the next two results showing that any program between the original and its simplification relative to its well-founded model preserves the well-founded model, and that this extends to all stable models for

![]() ${\mathcal{R}}$

-programs.

${\mathcal{R}}$

-programs.

Theorem 11 Let P and Q be

![]() ${\mathcal{F}}$

-programs, and (I,J) be the well-founded model of P.

${\mathcal{F}}$

-programs, and (I,J) be the well-founded model of P.

If

![]() ${P^{I,J}} \subseteq Q \subseteq P$

, then P and Q have the same well-founded models.

${P^{I,J}} \subseteq Q \subseteq P$

, then P and Q have the same well-founded models.

Corollary 12 Let P and Q be

![]() ${\mathcal{R}}$

-programs, and (I,J) be the well-founded model of P.

${\mathcal{R}}$

-programs, and (I,J) be the well-founded model of P.

If

![]() ${P^{I,J}} \subseteq Q \subseteq P$

, then P and Q are equivalent.

${P^{I,J}} \subseteq Q \subseteq P$

, then P and Q are equivalent.

3 Splitting

One of the first steps during grounding is to group rules into components suitable for successive instantiation. This amounts to splitting a logic program into a sequence of subprograms. The rules in each such component are then instantiated with respect to the atoms possibly derivable from previous components, starting with some component consisting of facts only. In other words, grounding is always performed relative to a set of atoms that provide a context. Moreover, atoms found to be true or false can be used for on-the-fly simplifications.

Accordingly, this section parallels the above presentation by extending the respective formal concepts with contextual information provided by atoms in a two- and four-valued setting. We then assemble the resulting concepts to enable their consecutive application to sequences of subprograms. Interestingly, the resulting notion of splitting allows for more fine-grained splitting than the traditional concept (Lifschitz and Turner Reference Lifschitz and Turner1994) since it allows us to partition rules in an arbitrary way. In view of grounding, we show that once a program is split into a sequence of programs, we can iteratively compute an approximation of the well-founded model by considering in turn each element in the sequence.

In what follows, we append letter “C” to names of interpretations having a contextual nature.

To begin with, we extend the one-step provability operator accordingly.

Definition 2 Let P be an

![]() ${\mathcal{F}}$

-program and

${\mathcal{F}}$

-program and

![]() ${\mathit{I\!{C}}}$

be a two-valued interpretation.

${\mathit{I\!{C}}}$

be a two-valued interpretation.

For any two-valued interpretation I, we define the one-step provability operator of P relative to

![]() ${\mathit{I\!{C}}}$

as

${\mathit{I\!{C}}}$

as

A prefixed point of

![]() ${{T}_{P}^{{\mathit{I\!{C}}}}}$

is a also a prefixed point of

${{T}_{P}^{{\mathit{I\!{C}}}}}$

is a also a prefixed point of

![]() ${{T}_{P}}$

. Thus, each prefixed point of

${{T}_{P}}$

. Thus, each prefixed point of

![]() ${{T}_{P}^{{\mathit{I\!{C}}}}}$

is a model of P but not vice versa.

${{T}_{P}^{{\mathit{I\!{C}}}}}$

is a model of P but not vice versa.

To see this, consider program

![]() $P=\{a \leftarrow b\}$

. We have

$P=\{a \leftarrow b\}$

. We have

![]() ${{{T}_{P}}(\emptyset)} = \emptyset$

and

${{{T}_{P}}(\emptyset)} = \emptyset$

and

![]() ${{{T}_{P}^{\{b\}}}(\emptyset)} = \{a\}$

. Hence,

${{{T}_{P}^{\{b\}}}(\emptyset)} = \{a\}$

. Hence,

![]() $\emptyset$

is a (pre)fixed point of

$\emptyset$

is a (pre)fixed point of

![]() ${{T}_{P}}$

but not of

${{T}_{P}}$

but not of

![]() ${{T}_{P}^{\{b\}}}$

since

${{T}_{P}^{\{b\}}}$

since

![]() $\{a\} \not\subseteq \emptyset$

. The set

$\{a\} \not\subseteq \emptyset$

. The set

![]() $\{a\}$

is a prefixed point of both operators.

$\{a\}$

is a prefixed point of both operators.

Proposition 13 Let P be a positive program, and

![]() ${\mathit{I\!{C}}}$

and J be two valued interpretations.

${\mathit{I\!{C}}}$

and J be two valued interpretations.

Then, the operators

![]() ${{T}_{P}^{{\mathit{I\!{C}}}}}$

and

${{T}_{P}^{{\mathit{I\!{C}}}}}$

and

![]() ${{{T}_{P}^{\,\boldsymbol{\cdot}}}(J)}$

are both monotone.

${{{T}_{P}^{\,\boldsymbol{\cdot}}}(J)}$

are both monotone.

We use Theorems 1 and 13 to define a contextual stable operator.

Definition 3 Let P be an

![]() ${\mathcal{F}}$

-program and

${\mathcal{F}}$

-program and

![]() ${\mathit{I\!{C}}}$

be a two-valued interpretation.

${\mathit{I\!{C}}}$

be a two-valued interpretation.

For any two-valued interpretation J, we define the stable operator relative to

![]() ${\mathit{I\!{C}}}$

, written

${\mathit{I\!{C}}}$

, written

![]() ${{{S}_{P}^{{\mathit{I\!{C}}}}}(J)}$

, as the least fixed point of

${{{S}_{P}^{{\mathit{I\!{C}}}}}(J)}$

, as the least fixed point of

![]() ${{T}_{{P_{J}}}^{{\mathit{I\!{C}}}}}$

.

${{T}_{{P_{J}}}^{{\mathit{I\!{C}}}}}$

.

While the operator is antimonotone w.r.t. its argument J, it is monotone regarding its parameter

![]() ${\mathit{I\!{C}}}$

.

${\mathit{I\!{C}}}$

.

Proposition 14 Let P be an

![]() ${\mathcal{F}}$

-program, and

${\mathcal{F}}$

-program, and

![]() ${\mathit{I\!{C}}}$

and J be two-valued interpretations.

${\mathit{I\!{C}}}$

and J be two-valued interpretations.

Then, the operators

![]() ${{S}_{P}^{{\mathit{I\!{C}}}}}$

and

${{S}_{P}^{{\mathit{I\!{C}}}}}$

and

![]() ${{{S}_{P}^{\,\boldsymbol{\cdot}}}(J)}$

are antimonotone and monotone, respectively.

${{{S}_{P}^{\,\boldsymbol{\cdot}}}(J)}$

are antimonotone and monotone, respectively.

By building on the relative stable operator, we next define its well-founded counterpart. Unlike above, the context is now captured by a four-valued interpretation.

Definition 4 Let P be an

![]() ${\mathcal{F}}$

-program and

${\mathcal{F}}$

-program and

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation.

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation.

For any four-valued interpretation (I,J), we define the well-founded operator relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

as

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

as

As above, we drop parentheses and simply write

![]() ${{W}_{P}^{I,J}}$

instead of

${{W}_{P}^{I,J}}$

instead of

![]() ${{W}_{P}^{(I,J)}}$

. Also, we keep refraining from prepending the prefix “id” to the well-founded operator along with all concepts derived from it below.

${{W}_{P}^{(I,J)}}$

. Also, we keep refraining from prepending the prefix “id” to the well-founded operator along with all concepts derived from it below.

Unlike the stable operator, the relative well-founded one is monotone on both its argument and parameter.

Proposition 15 Let P be an

![]() ${\mathcal{F}}$

-program, and (I,J) and

${\mathcal{F}}$

-program, and (I,J) and

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be four-valued interpretations.

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be four-valued interpretations.

Then, the operators

![]() ${{W}_{P}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}}$

and

${{W}_{P}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}}$

and

![]() ${{{W}_{P}^{\,\boldsymbol{\cdot}}}(I,J)}$

are both monotone w.r.t. the precision ordering.

${{{W}_{P}^{\,\boldsymbol{\cdot}}}(I,J)}$

are both monotone w.r.t. the precision ordering.

From Theorems 1 and 15, we get that the relative well-founded operator has a least fixed point.

Definition 5 Let P be an

![]() ${\mathcal{F}}$

-program and

${\mathcal{F}}$

-program and

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation.

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation.

We define the well-founded model of P relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

, written

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

, written

![]() ${\mathit{WM}^{({\mathit{I\!{C}}},{\mathit{J\!{C}}})}(P)}$

, as the least fixed point of

${\mathit{WM}^{({\mathit{I\!{C}}},{\mathit{J\!{C}}})}(P)}$

, as the least fixed point of

![]() ${{W}_{P}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}}$

.

${{W}_{P}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}}$

.

Whenever clear from context, we keep dropping parentheses and simply write

![]() ${\mathit{WM}^{I,J}(P)}$

instead of

${\mathit{WM}^{I,J}(P)}$

instead of

![]() ${\mathit{WM}^{(I,J)}(P)}$

.

${\mathit{WM}^{(I,J)}(P)}$

.

In what follows, we use the relativized concepts defined above to delineate the semantics and resulting simplifications of the sequence of subprograms resulting from a grounder’s decomposition of the original program. For simplicity, we first present a theorem capturing the composition under the well-founded operation, before we give the general case involving a sequence of programs.

Just like suffix C, we use the suffix E (and similarly letter E further below) to indicate atoms whose defining rules are yet to come.

As in traditional splitting, we begin by differentiating a bottom and a top program. In addition to the input atoms (I,J) and context atoms in

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

, we moreover distinguish a set of external atoms,

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

, we moreover distinguish a set of external atoms,

![]() $({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

, which occur in the bottom program but are defined in the top program. Accordingly, the bottom program has to be evaluated relative to

$({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

, which occur in the bottom program but are defined in the top program. Accordingly, the bottom program has to be evaluated relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

(and not just

$({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

(and not just

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

as above) to consider what could be derived by the top program. Also, observe that our notion of splitting aims at computing well-founded models rather than stable models.

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

as above) to consider what could be derived by the top program. Also, observe that our notion of splitting aims at computing well-founded models rather than stable models.

Theorem 16 Let

![]() ${\mathit{P\!{B}}}$

and

${\mathit{P\!{B}}}$

and

![]() ${\mathit{P\!{T}}}$

be

${\mathit{P\!{T}}}$

be

![]() ${\mathcal{F}}$

-programs,

${\mathcal{F}}$

-programs,

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation,

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

be a four-valued interpretation,

![]() $(I,J) = {\mathit{WM}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}({\mathit{P\!{B}}} \cup {\mathit{P\!{T}}})}$

,

$(I,J) = {\mathit{WM}^{{\mathit{I\!{C}}},{\mathit{J\!{C}}}}({\mathit{P\!{B}}} \cup {\mathit{P\!{T}}})}$

,

![]() $({\mathit{I\!{E}}},{\mathit{J\!{E}}}) = (I, J) \sqcap ({{{B({\mathit{P\!{B}}})}}^\pm} \cap {H({\mathit{P\!{T}}})})$

,

$({\mathit{I\!{E}}},{\mathit{J\!{E}}}) = (I, J) \sqcap ({{{B({\mathit{P\!{B}}})}}^\pm} \cap {H({\mathit{P\!{T}}})})$

,

![]() $({\mathit{I\!{B}}},{\mathit{J\!{B}}}) = {\mathit{WM}^{({\mathit{I\!{C}}}, {\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})}({\mathit{P\!{B}}})}$

, and

$({\mathit{I\!{B}}},{\mathit{J\!{B}}}) = {\mathit{WM}^{({\mathit{I\!{C}}}, {\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})}({\mathit{P\!{B}}})}$

, and

![]() $({{\mathit{I\!{T}}}},{{\mathit{J\!{T}}}}) = {\mathit{WM}^{({\mathit{I\!{C}}}, {\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})}({\mathit{P\!{T}}})}$

.

$({{\mathit{I\!{T}}}},{{\mathit{J\!{T}}}}) = {\mathit{WM}^{({\mathit{I\!{C}}}, {\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})}({\mathit{P\!{T}}})}$

.

Then, we have

![]() $(I,J) = ({\mathit{I\!{B}}},{\mathit{J\!{B}}}) \sqcup ({{\mathit{I\!{T}}}},{{\mathit{J\!{T}}}})$

.

$(I,J) = ({\mathit{I\!{B}}},{\mathit{J\!{B}}}) \sqcup ({{\mathit{I\!{T}}}},{{\mathit{J\!{T}}}})$

.

Partially expanding the statements of the two previous result nicely reflects the decomposition of the application of the well-founded founded model of a program:

Note that the formulation of the theorem forms the external interpretation

![]() $({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

, by selecting atoms from the overarching well-founded model (I,J). This warrants the correspondence of the overall interpretations to the union of the bottom and top well-founded model. This global approach is dropped below (after the next example) and leads to less precise composed models.

$({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

, by selecting atoms from the overarching well-founded model (I,J). This warrants the correspondence of the overall interpretations to the union of the bottom and top well-founded model. This global approach is dropped below (after the next example) and leads to less precise composed models.

Example 6 Let us illustrate the above approach via the following program:

The well-founded model of this program relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})=(\emptyset, \emptyset)$

is

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})=(\emptyset, \emptyset)$

is

First, we partition the four rules of the program into

![]() ${\mathit{P\!{B}}}$

and

${\mathit{P\!{B}}}$

and

![]() ${\mathit{P\!{T}}}$

as given above. We get

${\mathit{P\!{T}}}$

as given above. We get

![]() $({\mathit{I\!{E}}},{\mathit{J\!{E}}})= (\emptyset,\emptyset)$

since

$({\mathit{I\!{E}}},{\mathit{J\!{E}}})= (\emptyset,\emptyset)$

since

![]() ${{{B({\mathit{P\!{B}}})}}^\pm} \cap {H({\mathit{P\!{T}}})}=\emptyset$

. Let us evaluate

${{{B({\mathit{P\!{B}}})}}^\pm} \cap {H({\mathit{P\!{T}}})}=\emptyset$

. Let us evaluate

![]() ${\mathit{P\!{B}}}$

before

${\mathit{P\!{B}}}$

before

![]() ${\mathit{P\!{T}}}$

. The well-founded model of

${\mathit{P\!{T}}}$

. The well-founded model of

![]() ${\mathit{P\!{B}}}$

relative to

${\mathit{P\!{B}}}$

relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

is

$({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

is

With this, we calculate the well-founded model of

![]() ${\mathit{P\!{T}}}$

relative to

${\mathit{P\!{T}}}$

relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})$

:

$({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})$

:

We see that the union of

![]() $({\mathit{I\!{B}}},{\mathit{J\!{B}}}) \sqcup ({\mathit{I\!{T}}},{\mathit{J\!{T}}})$

is the same as the well-founded model of

$({\mathit{I\!{B}}},{\mathit{J\!{B}}}) \sqcup ({\mathit{I\!{T}}},{\mathit{J\!{T}}})$

is the same as the well-founded model of

![]() ${\mathit{P\!{B}}} \cup {\mathit{P\!{T}}}$

relative to

${\mathit{P\!{B}}} \cup {\mathit{P\!{T}}}$

relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

.

$({\mathit{I\!{C}}},{\mathit{J\!{C}}})$

.

This corresponds to standard splitting in the sense that

![]() $\{a,b\}$

is a splitting set for

$\{a,b\}$

is a splitting set for

![]() ${\mathit{P\!{B}}}\cup {\mathit{P\!{T}}}$

and

${\mathit{P\!{B}}}\cup {\mathit{P\!{T}}}$

and

![]() ${\mathit{P\!{B}}}$

is the “bottom” and

${\mathit{P\!{B}}}$

is the “bottom” and

![]() ${\mathit{P\!{T}}}$

is the “top” (Lifschitz and Turner Reference Lifschitz and Turner1994).

${\mathit{P\!{T}}}$

is the “top” (Lifschitz and Turner Reference Lifschitz and Turner1994).

Example 7 For a complement, let us reverse the roles of programs

![]() ${\mathit{P\!{B}}}$

and

${\mathit{P\!{B}}}$

and

![]() ${\mathit{P\!{T}}}$

in Example 6. Unlike above, body atoms in

${\mathit{P\!{T}}}$

in Example 6. Unlike above, body atoms in

![]() ${\mathit{P\!{B}}}$

now occur in rule heads of

${\mathit{P\!{B}}}$

now occur in rule heads of

![]() ${\mathit{P\!{T}}}$

, that is,

${\mathit{P\!{T}}}$

, that is,

![]() ${{{B({\mathit{P\!{B}}})}}^\pm} \cap {{H({\mathit{P\!{T}}})}} = \{a,b\}$

. We thus get

${{{B({\mathit{P\!{B}}})}}^\pm} \cap {{H({\mathit{P\!{T}}})}} = \{a,b\}$

. We thus get

![]() $({\mathit{I\!{E}}},{\mathit{J\!{E}}})= (\{a,b\}, \{a, b\})$

. The well-founded model of

$({\mathit{I\!{E}}},{\mathit{J\!{E}}})= (\{a,b\}, \{a, b\})$

. The well-founded model of

![]() ${\mathit{P\!{B}}}$

relative to

${\mathit{P\!{B}}}$

relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

is

$({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{E}}},{\mathit{J\!{E}}})$

is

And the well-founded model of

![]() ${\mathit{P\!{T}}}$

relative to

${\mathit{P\!{T}}}$

relative to

![]() $({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})$

is

$({\mathit{I\!{C}}},{\mathit{J\!{C}}}) \sqcup ({\mathit{I\!{B}}},{\mathit{J\!{B}}})$

is

Again, we see that the union of both models is identical to (I,J).

This decomposition has no direct correspondence to standard splitting (Lifschitz and Turner Reference Lifschitz and Turner1994) since there is no splitting set.

Next, we generalize the previous results from two programs to sequences of programs. For this, we let

![]() $\mathbb{I}$

be a well-ordered index set and direct our attention to sequences

$\mathbb{I}$

be a well-ordered index set and direct our attention to sequences

![]() ${({{P}_{i}})}_{i \in \mathbb{I}}$

of

${({{P}_{i}})}_{i \in \mathbb{I}}$

of

![]() ${\mathcal{F}}$

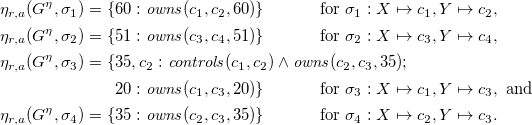

-programs.