I Introduction

Ours is an era of technological innovation. Our law, then, must be tailored to the ever-changing conditions of life. Unsurprisingly, the problem has not escaped the attention of academics. We do not propose to develop a new theory of regulation here. Instead, we discuss an institutional problem: when some new technology emerges, should it be regulated by judges or by legislators?

It is commonly thought that regulation should begin with an attempt to adapt existing laws and conditions to the specific problems posed by some innovation.Footnote 1 Gregory Mandel identified three caveats to that principle.Footnote 2 First, the decision maker must consider the possibility that pre-existing legal categories are inapplicable to the innovation in question. Second, legal actors should not be blindsided by the novelty of any one technology. Third, it is inevitable that at least some of the legal issues that a technology poses are unforeseeable at the time of its inception. The primary concern of the present paper is with the third of Mandel’s propositions. More specifically, we are concerned here with the cost of information to legislators and judges: if the judiciary can inform themselves more easily about some innovation, then they should regulate it, and vice versa.Footnote 3

Section II advances our theoretical propositions. We distinguish between risky and uncertain technologies. A risky technology poses an obvious risk, and the problem before the regulator is one of comparing cost and benefit. Critical to that exercise is the acquisition of information. We argue that the judiciary, which acquires information gratis from litigants, is better suited to the regulation of risky technologies. Uncertain technologies, on the other hand, can be harmful in ways which cannot be foreseen at the time of the technological innovation. Cost and benefit are incalculable; regulation must instead be based on subjective preferences about the degree of uncertainty that society should tolerate. Legislative law-making is designed with a view to aggregating subjective preferences. Accordingly, uncertain technologies should be regulated through statute.

Section III applies our analysis to self-driving cars. That is an example of a risky technology: the type of physical harm that self-driving cars can cause is easily identifiable in the short term. What is unknown is the probability with which accidents will occur. The judiciary perform better at acquiring this kind of information, a proposition to whose veracity the history of automotive regulation readily attests. Once a stable body of regulation emerges, considerations of taxonomy and accessibility might mean that codification becomes desirable. However, in the immediate aftermath of innovation, precedent is better.

Section IV discusses an uncertain technology, here 3D printing. We do not know what kinds of harm 3D printing might cause to our security – we are not able to predict all of its possible uses, let alone those uses’ impact or their desirability. Accordingly, in deciding how to regulate 3D printers, we must decide how much uncertainty we are willing to bear. That is a question of subjective preference. At present, attempts at regulation have almost universally originated from legislatures. The courts, on the other hand, have been too slow to react: doctrinal muddles, such as the tension between regulation and the First Amendment, hamper any meaningful attempt at a judicial assessment of 3D printing. Statute is superior. Section V concludes.

II Theoretical propositions

1 Assumptions

We do not develop a novel theory of the courts, the legislature, or technological progress. Our argument instead proceeds from assumptions. The credibility of our conclusions can accordingly never exceed that of the assumptions. For this reason, we propose to lay them bare at the outset.

a Facts

The first assumption that we make is that it is cheaper for the courts to inform themselves about facts than it is for legislatures. The assumption, of course, is not novel – it has its origins in the Austrian literature and has gone on to become a staple in the “efficiency of the common law” literature.Footnote 4 To forestall epistemological complications,Footnote 5 we must define facts. By that term, we mean to denote information about elements of material reality that are immediately observable: the speed of the wind on Tuesday, the distance between Rotterdam and Amsterdam, and such like.

The stock of factual information at humanity’s disposal is vast, but widely dispersed.Footnote 6 Individual citizens observe a very small fraction of reality. The production of regulation begins with funnelling information from individuals to a centralised lawmaking body, be it judicial or legislative. The literature notes that the courts command an advantage.Footnote 7 Why so? Every time a case comes to trial, litigants transmit information to the judge. Litigants do that because the courts offer them a valuable service in the form of dispute resolution. The same is not true of legislatures. Most citizens do not volunteer their private observations to Parliament.Footnote 8 Those who do are usually interest groups, whose representations are often skewed. Therefore, we may say that the courts acquire information about facts at a lower cost than Parliament.

b Prognoses

The second assumption that we make is that it is cheaper for the legislature to make prognoses. Why so? Prognoses, in general, entail the production of new information. For example, we may say that a 5% increase in the price of cigarettes will produce a 10% drop in lung-cancer mortality. That information can only be produced, never observed. Accurate prognoses are costly to produce. Furthermore, the prognosticator can seldom capture more than a fraction of the social benefit of her work.Footnote 9 Suppose that scientists do establish the link between cigarette prices and lung-cancer mortality. Their prognoses can then be used by the government in setting excise duties. The resultant regulation would reduce cancer mortality across society. However, the scientists who produced the initial prognosis are unable to charge those who, without the price hike, would develop cancer. As a result, we may expect fewer members of society to invest their resources, material or intellectual, into the production of prognoses.Footnote 10 Absent some inducement, prognoses will be underproduced.Footnote 11

In litigation, parties’ willingness to pay for prognoses is tied to stakes. Stakes in individual cases are usually considerably lower than the social benefits of regulation.Footnote 12 Suppose, for example, that an emphysema victim is suing a tobacco company for £50,000. The victim can win if she persuades the courts to change the law to extend liability for emphysema to cigarette manufacturers. It would clearly be useful to the victim if she could demonstrate that a 5% increase in the price of cigarettes would cause a 10% drop in the lung-cancer mortality rate. The regulation would also produce considerable benefits for society. However, if the cost of the study is £100,000, the victim will not pay for it: her private return would be negative. Since the courts have neither the legal nor the fiscal power to commission studies on their own accord, they can never acquire the necessary information.Footnote 13 Parliament, conversely, can simply retain experts and pay them out of the public purse. Prognoses, then, are more accessible to parliaments than to courts.
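The arithmetic of the example can be set out as follows. This is an illustrative sketch only: the symbols $s$ (the amount at stake), $c$ (the cost of the study) and $B$ (the social benefit of the resulting regulation) are introduced here for convenience, and the assumption that the study would guarantee victory is deliberately generous to the claimant.

$$
s - c = \pounds 50{,}000 - \pounds 100{,}000 = -\pounds 50{,}000 < 0
$$

On these assumptions, the study is socially worthwhile whenever $B > c$, yet a litigant will commission it only if $s > c$. Since stakes are usually considerably lower than social benefits, prognoses for which $B > c > s$ will tend to go unproduced in litigation.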

c Preferences

Regulation is not based purely on facts and prognoses: the regulator must also choose between competing visions of society. As Tallachini – among others – has highlighted, normative considerations shape the interpretation of scientific facts under uncertainty.Footnote 14 All propositions of scientific fact are uncertain to some degree.Footnote 15 Uncertainty, then, is ineliminable. Propositions of scientific fact can only be put to use in lawmaking if their interpretation is framed by norms and ideologies. Accordingly, the lawmaker, if tasked with regulating discoveries, must choose between competing ideologies.Footnote 16 The third assumption that we make is that the legislature can make that choice at a lower cost than the judiciary.

In modern polities, the lawmaker’s personal preferences are not generally thought sufficient to ground regulatory choices. That Theresa May prefers dancing to sports would not, it is thought, be an acceptable reason to substitute Physical Education with Choreography on the state-school curriculum. Lawmakers must aggregate social preferences. In that respect, the judiciary is vastly inferior to Parliament. Judges, who are usually unelected, are reluctant to align themselves publicly with any ideology.Footnote 17 Parliament, on the other hand, is designed to aggregate preferences. The legislature, then, enjoys a comparative advantage in preference aggregation.

d Applicative certainty

The fourth assumption that we make is that judge-made law is more specific than parliament-made law. The point is well established in the literature. Thus, Kaplow points out that, under precedent, standards are converted into rules.Footnote 18 As a general legal command is applied to concrete facts, the command itself becomes more specific.

e Hierarchic certainty

The fifth – and last – assumption that we make is that Parliament’s regulatory output is better structured.Footnote 19 Codes, being more abstract than court judgments, tend to treat problems of delineation and taxonomy robustly. Anglo-Saxon litigators usually fight over distinctions between cases, not over problems of construction. There are comparatively fewer boundary disputes in civilian litigation. Counsels’ energies are instead directed at the interpretation of words in statutes. Therefore, codified law is on the whole more structured than uncodified law.

2 The regulation of disruptive technology in the short term

Now that these assumptions are in place, it is possible to examine the relative institutional competence of the courts and the legislature in relation to disruptive technologies. When we speak of disruptive technology here, we speak of a specific capitalistic incident of scientific progress. Under ordinary market conditions, companies use scientific progress to improve their products’ existing attributes: processing units become more compact, cars become quicker, screens become brighter, and so on. Technologies like these are important, but they are not disruptive. Sometimes, a company will use technology to introduce new attributes to an existing product: video becomes streamable, phones access the internet, and such like. These technologies are disruptive: they alter the market’s competitive dimensions, as well as the settled behaviours of users.Footnote 20 It is this latter type of innovation that concerns us here. In line with juridico-economic orthodoxy, we assume that regulation is precautionary:Footnote 21 its purpose is to minimise the possibility of social harm while maximising social welfare. The simplest case concerns disruptive technologies which pose risks, in the actuarial sense of that word: there is a contingency, or a set of tractable contingencies, which may materialise with a certain probability. The informational problem before the regulator is to determine those probabilities.

a Risk

We give a worked example of a risky technology later. It is easier to begin with an ordinary instance of technological change. Suppose that some new type of car engine is developed which permits carmakers to reduce production costs. There is a certain probability that the new engines will explode mid-journey. The regulatory problem is very simple: does the reduction in production costs match the cost of potential accidents?Footnote 22 To devise a solution, the regulator need only come up with a law such that if the decrease in production costs is lower than the increase in accident costs, the engines will not be rolled out to market.Footnote 23
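The rule can be stated compactly. What follows is a stylised sketch under simplifying assumptions, not a definitive model: the symbols are introduced purely for illustration, and accident costs are assumed to be adequately summarised by their expected value. Let $\Delta C$ denote the saving in production costs per engine, $p$ the probability that an engine explodes, and $H$ the cost of an explosion.

$$
\text{permit roll-out} \iff \Delta C \ge p \cdot H
$$

On this formulation, the informational task lies in estimating $p$ and $H$; the question of institutional competence is which body can do so more cheaply.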

One way to achieve this would be to commission experts in automotive technology to calculate risk. The courts would be ill-suited to solving the problem in this way: they would have to wait for a private party to commission the study and transmit its content to them. That would only happen if a claim arises somewhere in the polity where the amount at stake exceeds the cost of the study. Parliament is not constrained in this way. It can order the study whenever it likes.

Another solution would be to permit the manufacturer to roll out the engine, and then introduce regulation if and when the need arises. In that case, the courts will – over time – accumulate a lot of information about accidents. There being no regulation on the point, the law will be uncertain. This, in turn, will impel parties to litigate more.Footnote 24 As they litigate, the courts will discover the causes, magnitude, and consequences of engine malfunction. The courts will receive that information at no cost. Parliament, conversely, would only be able to acquire it by paying someone to observe accidents and write reports. The cost would likely be high. Certainly, it would be positive. Therefore, the courts have an advantage.

It emerges, then, that in ex ante regulation, Parliament has an advantage, and in ex post regulation, the courts do. Whether ex ante or ex post regulation is to be preferred is a matter of context.Footnote 25 It may, however, be said that under the capitalist mode of social organisation, innovation is generally favoured,Footnote 26 so much so that most innovations do not require prior approval.Footnote 27 In most instances, therefore, we will regulate a disruptive technology only once we discover that it is risky. Ex post regulation is the default. The courts outperform the legislature in ex post regulation. Therefore, it is best to leave the regulation of risky disruptive technologies to the courts.

b Uncertainty

Not all regulatory problems are reducible to actuarial probability. In the simple hypothesis discussed above, the problem is one of converting “known unknowns” into “known knowns”. But new technologies are also sometimes uncertain in the Knightian sense.Footnote 28 They have unintended consequences. Those consequences, by definition, are unpredictable. They are “unknown unknowns”.

When the Curies discovered radium in 1898, nobody could have anticipated the nuclear bomb. It would be absurd to claim that regulators in 1898 should have calculated the risk of radium being used for slaughter. Likewise, ARPANET – an early precursor of the internet – became operational in 1969. Congress did not immediately pass laws to curb the digital circulation of child pornography. In both cases, the eventualities in question – the nuclear bomb and online child pornography – were wholly unforeseeable. It is only with hindsight that we can tell how the innovations in question would come to demand regulatory attention.Footnote 29

Sometimes, the type of harm that an invention might cause is unknowable. In such instances, the theoretical sketch developed in the preceding section is of no application. Instead, the question is how much uncertainty we – as a society – are willing to tolerate. Humans are uncertainty averse. Individuals and societies, however, differ in their degree of uncertainty aversion.Footnote 30 For example, many are willing to tolerate uncertain genetic experiments. However, few would tolerate the uncertainty caused by experiments on living human beings.

There is no optimal degree of uncertainty aversion. When it comes to “blind spots”, the desirability of forbearance can only be determined with hindsight. Uncertainty aversion is analogous to ideology. Statements about uncertainty, like “oughts”, are unprovable. Uncertainty aversion is purely a matter of subjective preference.Footnote 31

It was seen earlier that the courts are not suited to the aggregation of ideological preferences.Footnote 32 The same is true of their ability to aggregate uncertainty-related preferences. Any judge who expresses a view that a technology is too uncertain – and ought to be banned – would be open to criticism on accountability grounds. For this reason, judicial responses to uncertain technologies are likely to be anaemic. There is no reason to suspect that the legislature will exhibit a systematic tendency to dodge the problems of uncertain technology. If anything, uncertain technologies are a potentially useful source of political capital for supporters and detractors alike.Footnote 33 The legislature, then, is better suited to the regulation of uncertain technologies.

c The distinction between risk and uncertainty

The exposition thus far proceeds on the somewhat delusive assumption that it is easy, or at any rate unproblematic, to distinguish risk and uncertainty.Footnote 34 Very often, advances in the socio-scientific understanding of some issue result in the conversion of uncertainty into risk.Footnote 35 For example, when ARPANET was first developed, the social impact of the technology was uncertain because the social repercussions of information technology were understood poorly, if at all.Footnote 36 As the internet evolved, its more nefarious uses grew apparent. We may nowadays speak of child pornography as a risk. We are familiar with the problem of digital child pornography, we monitor its incidence and we have government agencies dedicated to reducing it. In the framework of our analysis, the internet has become less uncertain and more risky. The process can also run in reverse: a risky technology can become uncertain. For example, radium was likely initially conceived as risky. Once its usefulness in warfare – itself a highly uncertain process – became apparent, the technology became uncertain. It is not at all straightforward to categorise technologies one way or the other; a risky technology can turn out to be uncertain and an uncertain technology may turn out to be risky.

Evidently, this poses a problem: at the time at which a new technology appears, we have no simple way of telling whether it is risky or uncertain. The problem, at the epistemological level, is this: uncertainty, as seen, entails “unknown unknowns” – there are threats of whose existence we are unaware. The more “unknown unknowns” there are, the more uncertain a given technology. But we cannot ever count or measure “unknown unknowns”. The best that we can do is speculate in a rough and intuitive manner.

This has two implications for our analysis. First, it raises an institutional issue. When a new technology emerges, who should decide whether it is risky or uncertain? It is thought that this authority should always rest with the legislature. Neither judges nor parliamentarians may claim to be better at identifying “unknown unknowns”. However, the two differ in their aptitude for identifying the degree of uncertainty aversion that society prefers. Uncertainty aversion is quasi-ideological in nature, in that it is subjective and unable to be corroborated. It follows, then, that under our framework, the legislature should decide whether inventions are uncertain or risky. It should deal with uncertain inventions itself and delegate risky ones to the courts. Fortunately, this is readily borne out in practice – in all jurisdictions that we know of, the courts cannot normally make new rules in areas already covered by statute. Therefore, if Parliament – in line with our model – decides to regulate a technology because it is uncertain, the courts will, absent some constitutional constraint, be unable to hinder the process. Conversely, if Parliament adjudges a certain invention risky, it can delegate it to the courts through its own inaction. Since the courts cannot refuse to decide cases, they cannot – under a system based on precedentFootnote 37 – refuse to produce regulation, either.

Second, and less optimistically, it is inevitable that some inventions will be miscategorised. Parliament will sometimes deem a risky technology uncertain and regulate it itself. The courts will sometimes regulate uncertain technology because the legislature has failed to act, erroneously believing the invention to be risky. Does this mean that our analysis is flawed? We think not. If the categorisation is central to the cost-effective regulation of disruptive technology – as we believe it is – then the rate of error under our paradigm would be lower than the rate of error in an institutional framework which is unguided by any paradigm, which is what we have in operation today. Accordingly, we remain convinced that our paradigm represents an improvement on the one currently in place.

3 Disruptive technology in the long term

The foregoing concerns the regulator’s immediate response to innovations. In practice, regulation is updated periodically.Footnote 38 The process often continues long after the technology has ceased to be new or disruptive. This may have implications for the relative regulatory competence of judges and parliamentarians. For instance, we said that it is better for risky technologies to be regulated by the courts. This is true in the short term, but it may be that there is a point in time at which it becomes better to switch from precedent to statute. Conversely, statute is more suited to uncertain technologies. However, there might come a point in time at which it would be better for the legislature to cede competence to the courts.

It was said earlier that courts produce more specific laws, but legislatures perform better at structuring bodies of law. Specific laws are more desirable, since they enable private parties to plan their affairs better. However, even if the law is perfectly specific, it might become difficult to predict which rule will apply to which case – a problem of hierarchic uncertainty. If such hierarchic disputes are widespread, the law becomes uncertain. Now, some areas of life require few rules. Vehicular speed is regulated in a very straightforward way. The rules are simple, like “do not drive at more than 50 km/h in cities”. They are few in number: “the speed limit is 50 km/h in cities, 90 km/h outside of cities, and 120 km/h on highways”. Other spheres of life are complex and require sophisticated regulation. For instance, we are not able to describe the regulation of surgical procedures, or the conduct of war, in the same way as we describe the speed limit.Footnote 39

As legal complexity increases, so does the potential for overlap between the rules that constitute some corpus of law.Footnote 40 We assume here that statute is better structured. Therefore, once a law becomes sufficiently complex, it is best to reduce overlap through codification. Codification entails a loss of specificity, since it prevents the judiciary from tailoring regulation contextually. However, provided that the level of overlap is high, the gain in hierarchic certainty will outweigh the increase in applicative uncertainty. In the long term, precedent is desirable for simple areas of law and statute for complex ones.
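The trade-off can be expressed as a simple condition. This is a hedged illustration rather than a measurable test: the symbols are hypothetical, and it is assumed that the two kinds of certainty can be compared on a common scale. Let $o$ denote the level of overlap between rules, $G_h(o)$ the gain in hierarchic certainty from codification, which is assumed to increase with $o$, and $L_a$ the loss of applicative certainty that codification entails.

$$
\text{codify} \iff G_h(o) > L_a
$$

If $G_h$ rises with overlap while $L_a$ stays roughly constant, there is some threshold level of overlap beyond which statute becomes preferable to precedent.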

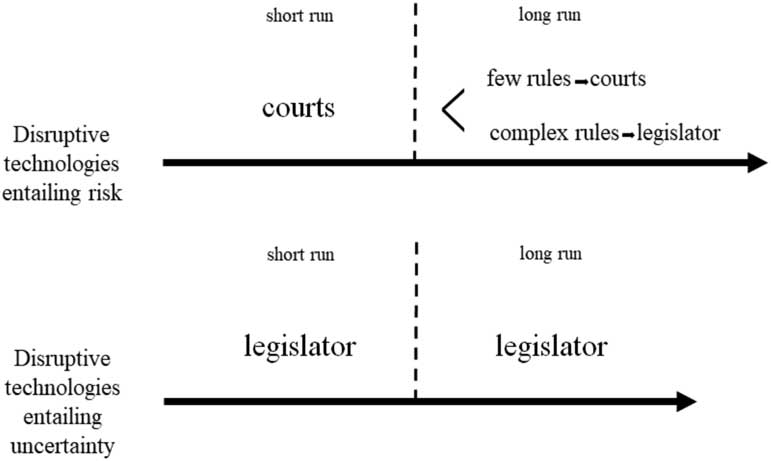

How does this impact the analysis developed in the first part? First, a risky disruptive innovation is better suited to precedent. However, if regulation under precedent produces many rules, then codification becomes desirable. If, conversely, the judges devise a simple, efficacious regulatory scheme, then precedent remains optimal in the long term. Second, it was seen that an uncertain disruptive innovation is best regulated through statute. If the statute turns out to be simple, it is best to leave its specific applications to the courts in perpetuity. This would ensure that the law becomes as specific as possible. If, on the other hand, the statute turns out complex, then its development must remain the responsibility of the legislature.

Figure 1 Theoretical arguments illustrated

4 Summary

We have made two theoretical arguments. First, in the short term, disruptive technologies that entail risk ought to be regulated by the courts. Disruptive technologies that entail uncertainty ought to be regulated by the legislature. The latter proposition holds true in both the long and the short term. So long as uncertainty remains unconverted to risk, the courts will be ineffective regulators. Second, in the long term, the courts should regulate risky technologies if the regulatory framework comprises a few simple rules. The legislature should control fields whose regulation comprises many complex rules. We readily concede that these are very abstract points which do not easily fit current patterns of innovation or regulation. For this reason, we now propose to examine their application in two contexts: self-driving cars and 3D printing.

III Self-driving cars

A self-driving car is a self-operating vehicle equipped with a self-learning artificial intelligence (AI) system. In our framework, self-driving cars exemplify “simple” risky technologies.Footnote 41 When we say risk here, we mean not the risks that inhere in any technological project – such as the likelihood of its failure to achieve its stated objectives – but rather the perils that might flow from the technology being rolled out to market without any regulatory controls. Collision is the risk which – naturally – dominates any discussion of self-driving cars. Accordingly, the problem before the regulator is not one of identifying the possible sources of harm from the adoption of the new technology. The sources are by and large known; regulatory choices instead turn on assigning a probability to incidents.

There are many classifications of self-driving cars. Some focus on the levels of human interaction with automation.Footnote 42 Others focus on the types of control that the driver retains over the car.Footnote 43 The industry, in turn,Footnote 44 defines several levels of autonomy ranging from no autonomy to full automation. We treat self-driving cars here as fully automated vehicles. Such a vehicle is entirely self-contained: it is powered by computers, interfaces, software and sensors capable of acquiring, and consequently using, the information relevant to driving.Footnote 45 The technology is expected to reduce the number of accidents on the roads. However, it is also risky: there remains a positive probability of accidents, whether the car kills a pedestrian or crashes into another vehicle.

In the context of the present submission, the question is this: who should regulate liability for the “misbehaviours” of self-driving vehicles? The prevention of collisions is a key concern in the development of self-driving cars.Footnote 46 Under our framework, the courts should command a considerable advantage. This is so because the problem is one of “simple” risk. In substantiating that argument, we begin with an overview of the history of car-accident regulation. Thereafter, we will return to self-driving cars.

The safety issues that arise as between self-driving and non-self-driving cars are analogous to those that arose between motor cars and horses. The US case law from the early twentieth century recognised a motor car as falling under the general concept of a means of conveyance. The legal treatment of motor cars was not unlike that of horses and street cars.Footnote 47 That a car could potentially frighten horses was not sufficient to render it a public nuisance.Footnote 48 Additionally, the courts expressed their belief that they would be able to acquire the knowledge allowing them to regulate automobile safety.Footnote 49 By and large, they succeeded. One example is the Stop, Look and Listen Rule. The standard of care for a driver was the same as that for a horseman: they had to look and listen while passing railroad crossings. Since the number of accidents kept on growing, the courts adapted the law to reflect the technological shift to cars: stopping became mandatory.Footnote 50

The appearance of automobiles prompted an increase in litigation. That increase, in turn, resulted in the courts acquiring a large volume of information about automotive transport. The acquisition of information corresponded to an incremental increase in the courts’ regulatory output, so that, by the end, the case law established a framework for the regulation of automobiles.

Over time, the regulation of cars grew more complex. The courts do not excel at regulating complex areas of law in the long term: hierarchic overlap is inevitable. At that point, the legislator set rules on traffic communication and speed limits.Footnote 51 The legislature also intervened in the torts regime. The case law revealed that the classical rules of negligence were often inadequate.Footnote 52 Therefore, the statutes refined the common law in areas such as contributory negligence.Footnote 53

On the whole, we may say that the appearance of cars was not met with any specific regulations: rules were adopted if and when they became necessary. Initially, the task was undertaken by the courts, which were informationally superior to the legislature – they heard more cases. Over time, it became apparent that cars would require relatively complex regulation. This, in turn, led to the legislative takeover: traffic laws, statutory torts and such like superseded the general law of negligence as the main source of automotive regulation.

The regulation of self-driving cars will probably follow the same pattern.Footnote 54 The approaches under discussion would remedy the harm caused by a self-driving car through the general tort of negligence or, alternatively, through statutory torts of product liability, or other duties of care involving negligence-based and strict liability. Whichever approach is eventually chosen, all are based on classical legal concepts, which the judiciary know well. Therefore, the answer to the question “who should regulate?” is this: in the short term, the judiciary should regulate self-driving cars, because of the courts’ informational advantages. In the long term, it may transpire that self-driving cars require complex regulation. If that turns out to be correct, then there will come a point at which the legislature should take over from the courts.

In the first instance, the courts can regulate self-driving cars through the tort of negligence. The application of negligence-based liability to accidents caused by self-driving cars would hinge on the definition of “due care”. The threshold for a self-driving car driving on the streets will probably be extremely high.Footnote 55 Applying negligence to machines is problematic because the standard was developed for humans and also because machines cannot compensate their victims.Footnote 56 Since there is no human driver, the courts would have to ask whether the self-driving car performed as it should have.Footnote 57 Unless the liability can be directly attributed to a person steering the car, for example through the navigation system, the question will always be whether the car was manufactured and designed with due care or, failing that, whether the owner or manufacturer – qua principal – exerted due care.

Liability could be imposed under the product liability regime. That is so because self-driving cars exhibit similarities to other products. They may have flaws and defects. Alternatively, it may be said that cars can learn to make decisions. Therefore, the manufacturer of the car, or its owner, might be assumed to control the car. Accordingly, the manufacturer (if it supplies the car with AI) would need to prove that it exerted due care in detecting and disclosing flaws.Footnote 58

Alternatively, there is an obvious analogy with vicarious liability. Should manufacturers observe dangerous behaviour, they must take precautionary action. Thus, there would be negligence-based liability for damage caused by the self-driving car where it can be shown that the car had a propensity to cause a particular type of harm or injury and that the manufacturer or user were aware of that dangerous propensity. The liability of the manufacturer or the owner would be strict if the self-driving car were found to be an agent for its principal.Footnote 59

In this context, a few observations regarding the application of the existing rules have been made. We do not exhaust them here. As far as negligence-based liability in the manufacture of software is concerned, defects might be difficult to prove. First, the plaintiff would need to prove the error in the software algorithm.Footnote 60 Second, it could be difficult to show that a defect was present when the car left the production facility.Footnote 61 Under a system of strict liability, it might be difficult to pinpoint the specific defect which caused an accident. Suppose a self-driving car decides, on its own, that it is better to hit a pedestrian than to cause a chain crash. The circumstances would not logically suggest that there was a defect.Footnote 62 In the end, the car chooses the lesser evil. Additionally, a lack of accident evidence might render foreseeability unprovable. Furthermore, it might be difficult to show that there was an alternative design.Footnote 63 In consequence, the injured party could be left without a remedy. As far as liability in the principal-agent relationship is concerned, it can be questioned whether a car can have a proclivity to cause a particular type of harm, or whether the user was aware of that proclivity. It might be expected that manufacturers and users will seldom anticipate danger. For the same reason, it could also be difficult to say when a machine is under somebody’s control or acting within the course of some form of employment. In consequence, the owner of the car or the manufacturer might be able to escape liability. This will force the regulator to choose between different approaches to balance risks.Footnote 64

In light of the foregoing, legislators have enacted or proposed solutions. Most focus on manufacturers, who are the cheapest cost-avoiders. For that reason, some suggest that the manufacturer should bear full strict liability.Footnote 65 Alternatively, there is a proposal to hold the manufacturer liable in proportion to the learning ability of the AI system. In other words, the longer a manufacturer supplies the car with the information that makes it self-driving, the greater the manufacturer’s responsibility.Footnote 66 Another alternative suggests that strict liability should be limited to the value of the robot’s portfolio, which is like a special fund or stock.Footnote 67 The State of Michigan, for example, introduced a law limiting the liability of the manufacturer if a third party alters the software.Footnote 68 Other proposals mention obligatory insurance,Footnote 69 including insurance against damage rather than liability,Footnote 70 or adapting the concept of negligence to changing circumstances.Footnote 71 There are also proposals to employ limited liability companies for the operation of autonomous systems.Footnote 72 These solutions concern legislative initiatives that aim to correct the shortcomings of the current system.Footnote 73 If that approach is preferred, then regulation will be predominantly statutory.

To sum up, a few observations can be made. First, the risks of automotive innovations are generally appraised after a product has been brought to market. In regulating risky technologies, the starting point should be the existing body of law and judges’ ability to acquire information about the operation of the new technology and the necessary regulatory adaptations. Second, the legislator should step in to correct the system once lacunae appear. Therefore, the general regulation of automotive risks should – for now – be entrusted to the judiciary. Past experience and current legislative proposals indicate that, once the field matures, it will become sufficiently complex to warrant a switch to statute. Accordingly, the legislature is presently confining itself to limited legal commands, leaving information about the specific workings of the AI system to be acquired incrementally by the judiciary.

IV 3D printing

3D printing is an innovation with disruptive potential. A 3D printer can produce anything, from ceramics to human cells. All that is necessary is a computer and a CAD file. The implications of 3D printing are not fully understood.Footnote 74 Sometimes, the consequences are obvious (ceramics). Sometimes, they are unpredictable (guns). Since 3D printing is an invention with many unforeseeable applications, we classify the technology as “uncertain”.Footnote 75

Because 3D printing is a facilitative technology, we cannot easily anticipate the totality of its applications. To maintain tractability, we focus on security risks. For instance, is it possible for an ordinary person to manufacture a gun, such as a revolver, an assault rifle, or even a grenade, in the privacy of their own home? If so, 3D printing would thwart gun regulation.Footnote 76 We cannot assign a numerical probability to that risk. Nor can we claim that this exhausts the kinds of harm that 3D printing may generate. The Peace Research Institute, for instance, notes that 3D printing will also permit Western military technology to be copied cheaply by enemy militants. Similarly, it is not possible to rule out the use of 3D printing to create larger weapons, such as drones or even rocket launchers.Footnote 77 3D printing, then, is uncertain. The uncertainty is not unipolar: 3D printing may also, plausibly, usher in a new Industrial Revolution.Footnote 78

There is thus a commonality between this example and the one developed in the preceding section: the task before the regulator is to measure the expected value of 3D printing to the economy against the expected costs of the increased incidence of security incidents. There is, however, a difference, since the long-term social benefits of 3D printing and the likelihood of security incidents are incalculable. The question, then, is one of uncertainty aversion: how much innovation are we willing to tolerate if there is an unknown probability of it being used to perpetrate atrocities? As we argued earlier, the courts are ill-suited to this kind of decision.Footnote 79 Gun control is ideologically charged. The judiciary are reluctant to voice ideological opinions. Legislatures, conversely, aggregate ideological preferences. Accordingly, it is best for uncertain technologies – of which 3D printing is one – to be regulated by statute.

3D printing opens new avenues of weapon production. There is a legitimate social interest in controlling weapon production. For instance, the manufacture of a working 3D-printed gun that is undetectable by standard metal detectors has repeatedly caused alarm.Footnote 80 The existing gun licensing framework is based on the imposition of controls on one component, the weapon’s frame. Frames can be 3D printed: forgery becomes costless. It might be necessary to introduce a licensing scheme which focuses on some other, non-3D-printable component.Footnote 81 It is far from easy to see how this can be done without wide-scale disruptions to the existing enforcement framework.

Representative Steve Israel proposed to amend the renewed 1988 Undetectable Firearms Act to criminalise firearms whose production is undetectable.Footnote 82 The need for regulation manifested powerfully when Cody Wilson published software that enabled users to 3D-print a plastic gun. Anyone could now manufacture firearms easily and at relatively low cost.Footnote 83

3D printing technology has sparked a review of gun-control laws. The current regulatory framework was devised at a time when few individuals could produce weapons.Footnote 84 This led the legislature to focus on possession and distribution. Only a few laws regulate 3D firearms. Certain agencies have tried to advance preemptive regulation.Footnote 85 For instance, the City Council of Philadelphia adopted an ordinance banning the use of 3D printing for manufacturing arms: “[n]o person shall use a three-dimensional printer to create any firearm, or any piece or part thereof, unless such person possesses a license to manufacture firearms under Federal Law, 18 U.S.C. § 923(a)”.Footnote 86 Similarly, the US State Department took immediate action when Wilson posted the Liberator’s blueprint on the internet.Footnote 87

In line with our prediction, the immediate regulatory response to the new technology has come from the legislature. Gun control is a rich seam of political capital. The judiciary, on the other hand, struggle to aggregate ideological and uncertainty-related preferences about guns. Any attempt at judicial regulation would require a balance to be struck between free speech, public safety, and the right to bear arms. The judicial branch is – understandably – reluctant to intervene.Footnote 88

We said earlier that complex regulation usually becomes hierarchically uncertain if left in the hands of the judiciary.Footnote 89 Firearms regulation is no exception: the principal doctrinal challenge to regulating 3D weapons emanates from case law. Legislation regulating 3D printed guns may infringe on the First and Second Amendments to the US Constitution. The First Amendment relates to the freedom of speech, which is interpreted broadly.Footnote 90 The First Amendment covers digital blueprints and CAD files. Yet, the level of protection that CAD files enjoy depends on how the courts characterise them.Footnote 91 For example, if the courts classify CAD files as pure speech, then they will be afforded the highest level of protection.Footnote 92 Clarity, however, is difficult to attain: the law will remain unsettled until such time as the legislature decides to act and a challenge is put before the Supreme Court.

The Second Amendment can potentially cover printed guns. In District of Columbia v Heller,Footnote 93 the Court interpreted the right to possess arms narrowly: it only covered weapons which are “typically possessed by law-abiding citizens”.Footnote 94 It follows that only those firearms that are popular in the market, and not the “dangerous and unusual”Footnote 95 ones, are protected under the Second Amendment. The US District Court for the District of Maryland has concretely applied this line of reasoning, the so-called “in common use” test, to assault-style long guns.Footnote 96

It seems that 3D printed weapons will pass the “in common use” test, and so attract Second Amendment protection, only if a significant section of the population is familiar with this type of arm. Because of this, a regulation restricting, or even banning, the production of 3D printed guns can only be adopted in the infancy of the technology, before such weapons become common. Yet, one may also construe “dangerous and unusual” to refer to design rather than mode of production. Should this turn out to be the case, all regulation is foreclosed.

It is evident that the courts – whatever their informational advantages – are not well-suited to the regulation of an uncertain technology. To the judiciary, a personal right to manufacture weaponry is “constitutional terra nova”.Footnote 97 As any student of common law legal history will attest, the rapid production of concrete regulatory solutions to ideologically contested social problems is not the judiciary’s forte. In fact, under the American constitutional framework, the regulatory process has been impeded by the judiciary. Being unable to aggregate preferences about uncertainty, the courts will doubtless be forced to decide cases on the basis of first principles – the right to bear arms, freedom of speech, and suchlike. Those principles, though no doubt important to Americans, will also operate to obscure the central regulatory issue, which is the desirability of 3D gun printing.

V Conclusion

The time is opportune to conclude. We began by drawing a distinction between technologies that are risky and technologies that are uncertain. Risky technologies are best regulated by the judiciary. This is so because litigation is the most cost-effective method of funnelling information from litigants to the lawmaker. Uncertain technologies are different, in that regulatory choices have to be made on the basis of subjective preference rather than objective facts. The legislature, which is designed with a view to aggregating social preferences, is superior to the courts. We also drew a distinction between the short and the long term. In the short term, the main regulatory problem is the acquisition of information. In the long term, attention shifts to problems of hierarchy, overlap, and taxonomy. Accordingly, the more complex an area of law becomes, the likelier it is that codification – with its superior structure – will become desirable.

We then moved to apply the theory to self-driving cars. Self-driving cars are risky: there is a positive probability that they will injure or kill humans. The history of automotive regulation shows that, in the short term, the courts are well-suited to solving regulatory challenges. As the law matures, judge-made rules begin to overlap, prompting a shift to statute. It is likely that self-driving cars will follow the same pattern: at first, the courts will command an advantage due to their informational superiority. Once a stable body of legal principles emerges, codification will be preferable.

We also examined the applications of the theory to an uncertain technology, 3D printing. The set of potential risks that 3D printers pose is open-ended. It is not possible to ascertain the actuarial probability that harm will occur. Accordingly, the problem is one of aggregating social preferences about uncertainty. Regulation in the field is nascent. In line with our predictions, the more promising regulatory initiatives originate from legislative bodies. The courts, stuck between entrenched constitutional doctrines and the need to regulate harm, have been slow to respond. Evidently, the legislature commands an advantage.

These examples, of course, are neither conclusive nor exhaustive. Much remains to be done. We hope, however, that our theoretical framework – schematic as it is for now – represents a small advance in our understanding of the institutional dynamics of technological regulation.