Introduction

Although multiple authors have cited the importance of evaluation within the conservation sector (e.g. Sutherland et al., 2004; Ferraro & Pattanayak, 2006; Brooks et al., 2009), implementation of project monitoring and evaluation has been far from ubiquitous. Evaluation is essential to provide insight into existing activities, help inform future decisions and programme design, and demonstrate success to other professionals and funding bodies (Hatry et al., 1999; Blann & Light, 2000). Particularly in the conservation sector, where both time and finances are often limited, organizations cannot afford to waste either of these resources on ineffective activities. Evaluation can help to direct programmes towards approaches with proven success (Stem et al., 2005).

Training is one activity that benefits from evaluation. The ability of conservationists to share their knowledge, expertise and experience effectively with others is imperative to the discipline's success. Training may be regarded as a form of capacity building, which has been called for in nearly every sector of conservation (OECD, 2006; Rodriguez et al., 2006; Clubbe, 2013). Training can help practitioners to be more effective, increasing their overall impact and improving conservation success by disseminating new techniques and skills (Fien et al., 2001).

There is an underlying assumption that training is inherently a good thing that generates benefits at individual, organizational and/or systemic levels. However, despite the importance of evaluating the impacts of training in conservation, such evaluations have been relatively scarce (Rajeev et al., 2009). By measuring impacts, more effective and tailored courses can be designed and implemented (Morrison et al., 2013). The few published evaluations have generally focused on formal academic programmes (Muir & Schwartz, 2009) or on environmental education (Thomson et al., 2010; Rakotomamonjy et al., 2015).

The paucity of impact studies is also attributable in part to the challenging and nuanced nature of training outcomes; attributing change to one intervention or training course is difficult and sometimes impossible (James, 2001). Both counterfactuals and control groups are challenging, as no two organizations or individuals are identical (James, 2009). Measurement is difficult, as some impacts cannot be quantified (Hailey et al., 2005), and concerns over exposing shortcomings or failures may prevent organizations from undertaking evaluations (Redford & Taber, 2000; Fien et al., 2001). Additionally, because capacity is not static, the timing of the evaluation after the training intervention can affect the results. Individuals learn and retain information at different rates, positions within organizations may contribute to individual capacity, and external conditions may affect capacity independently of an intervention (James, 2009; Simister & Smith, 2010).

Arguably, however, the greatest challenge to understanding the effects of training is a lack of clarity regarding why it is being done in the first place (James, 2009; Manolis et al., 2009). Ideally, intended outcomes should be specified before training activities are undertaken, using an explicit theory of change. Training providers and capacity development organizations alike often lack a clear statement of how their activities will influence recipients (Simister & Smith, 2010), and without this, evaluation can become time-consuming, expensive and ineffective. Success must be defined before it can be measured.

We evaluated the impact of the long-term training programme of Durrell Wildlife Conservation Trust through a case study of the Durrell Conservation Academy's activities in Mauritius. The island of Mauritius, in the Indian Ocean, has a number of threatened and endemic species living in highly degraded habitat, with only 2% of the original forest cover remaining. With limited opportunities for conservation training available locally, either Mauritians must go abroad to receive training, or external organizations must provide training on the island. Durrell, an international NGO, has been training Mauritians since 1977, offering courses of 1 week to 3 months’ duration (Durrell Wildlife Conservation Trust, 2015), and in 2013 it established a permanent international training base in Mauritius to make training more accessible for Mauritian conservationists.

Durrell is a partner of the Mauritian Wildlife Foundation, which was created in the late 1970s and now has 21 projects on the island of Mauritius as well as on the island of Rodrigues and several smaller islands (Mauritian Wildlife Foundation, 2015). Many of Durrell's Mauritian trainees are employees of the Mauritian Wildlife Foundation, but the Trust has also trained individuals from the private and government sectors. Additionally, local and overseas PhD students have conducted research in partnership with both organizations, and hundreds of volunteers have participated in conservation work. However, we focus only on formal courses offered by Durrell, because of the similarities with other training programmes within conservation, the extensive training database, and the facility to compare courses taken, and their effects.

Our objectives were to evaluate (1) how Durrell's training for Mauritian conservationists has changed over time, (2) what Durrell and its trainees intend to achieve through the training programmes, (3) how these intentions have changed over time, (4) how successful Durrell has been in achieving the intended outcomes, and (5) the extent to which participants' intended outcomes have been achieved; (6) we also synthesized general recommendations for training within the conservation sector.

Methods

Given the complex and interacting nature of training impacts, we used both qualitative and quantitative methods, as recommended in both the development and conservation sectors (Stem et al., 2005; Simister & Smith, 2010; Wheeldon, 2010). We used a questionnaire and semi-structured interviews to examine the aims of a training programme and to record impact. Both grounded theory and Most Significant Change were used to design and analyse the interviews. Grounded theory is a qualitative method for analysing interviews and texts, in which coding is used to gather and analyse data systematically (Bernard, 2011), and has been used successfully in a variety of disciplines (Bhandari et al., 2003; Pitney & Ehlers, 2004; Schenk et al., 2007), including to analyse the impact of mentorship on university students and to understand the mentoring process (Pitney & Ehlers, 2004). However, its use in conservation is limited. We utilized grounded theory because there were no specific expectations prior to the research, and thus themes, and eventually a model, could arise from the interviews through coding.

Most Significant Change is a method focused on collecting stories, and is particularly useful when impacts are hard to predict and measure. It is an iterative process, whereby stories of change or impact are collected from individuals receiving a capacity building intervention. These stories are chosen not to be representative but with the intention of seeking out interesting or unusual stories, both positive and negative (Davies & Dart, 2005; Simister & Smith, 2010). Most Significant Change was first utilized in the conservation sector in 2008 in an effort to assess the impacts of livelihood-based interventions holistically (Wilder & Walpole, 2008).

Prior to data collection, protocols were reviewed and approved through the formal ethics review procedure of Imperial College London's MSc in conservation science. We conducted semi-structured interviews with current and former Durrell staff in the UK and with staff and current training participants in Mauritius. We used these interviews to inform the development of a questionnaire, which was emailed to course participants. Self-assessment was utilized throughout the study, as it reflects how recipients view the intervention (Hailey et al., 2005). This increases stakeholder buy-in and makes evaluation a more participatory process (James, 2001). However, there are limitations to self-assessment: information will be inherently biased and subjective, and there is no external reference point (Hailey et al., 2005).

Interviews

Individuals who had influenced Durrell's training academy were referred to as planners. We interviewed them to understand how Durrell's mission in training has evolved, and how they expected training to effect change. Both Mauritian and non-Mauritian individuals who had completed Durrell courses in Mauritius, and Mauritians who had completed a course at Durrell's headquarters in Jersey, UK, were referred to as participants. They were interviewed to examine why they attended the courses, what they expected, how satisfied they were, and what their perceptions were of lasting impact from their training. Interviewees were informed about the purpose of the research and gave their consent to be interviewed. Interviews were conducted anonymously and confidentially.

We used the interviews with planners to generate an implied theory of change using grounded theory, depicting how planners thought training would effect change. We utilized Most Significant Change in participant interviews to seek out unique stories of change that communicated impacts that are difficult to measure. Following the Most Significant Change approach, we chose stories we found to be particularly illuminating rather than representative (Davies & Dart, 2005). The choice of stories was also intended to highlight the breadth of training impacts and to illustrate both positive and negative impacts.

Questionnaire

Following the interviews we developed a questionnaire to gather evidence for the links in the implied theory of change (Supplementary Material 1). We used Qualtrics (Qualtrics, Provo, USA) to administer the questionnaire. The online questionnaire was piloted on MSc students at Imperial College London, refined to better represent the Mauritian context, and then re-piloted on post-graduate diploma students in Mauritius. Respondents were informed of the aim of the research and assured anonymity and confidentiality. They were free to withdraw from the survey at any time.

Survey respondents who had taken more than one course answered questions for each course. Based on the themes that emerged in the implied theory of change and participant interviews, the questionnaire explored six elements of training that were considered to be important by both planners and participants: perception of control, career effects, work environment, networking, practical skills, and theory (Table 1).

Table 1 An overview of the main topics addressed in the questionnaire administered to conservation practitioners who had received training from Durrell Wildlife Conservation Trust in Mauritius.

We aggregated the responses to the relevant questions to produce a score for each category (Table 1). Cronbach's α test was used to determine the reliability of this aggregation in terms of the consistency of responses to various elements of the score; the higher the α value, the more reliable the scale, with a value of 1 indicating that all elements making up the score show the same results, and a value of 0 indicating they are all different (de Vaus, 2013). Career effects had an α of 0.85, practical skills 0.83, perception of control 0.83, and work environment 0.81. These high α values verified that aggregation was appropriate (Bernard, 2011), and therefore we calculated scores for each participant by coding questions on a Likert scale of 1–5 and then summing the responses. All questions were weighted equally. Scores for the networking category were calculated by summing the number of students and staff the respondent was still in contact with.
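The aggregation described above can be illustrated with a minimal Python sketch. It uses the standard formula for Cronbach's α, α = k/(k − 1) × (1 − Σ item variances / variance of summed scores), and sums equally weighted Likert responses into a category score. The function names and the example responses are hypothetical and are not taken from the study; the software used to compute α is not stated in the text, so this is an illustration under those assumptions rather than the procedure actually followed.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    `responses` is a list of equal-length lists: one inner list per
    respondent, one Likert-coded value (1-5) per question.
    """
    k = len(responses[0])  # number of questions in the category
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two questions and two respondents")
    # Sample variance of each question across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def category_score(row):
    """Equally weighted category score: the sum of the Likert responses."""
    return sum(row)


# Hypothetical responses to a four-question category (not study data).
example = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
alpha = cronbach_alpha(example)
scores = [category_score(row) for row in example]
```

Summing is only meaningful when the questions in a category move together, which is why the reliability check precedes the scoring step.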

To determine the validity of the implied theory of change we tested the connections between elements for significant relationships. We expected that elements that were connected in the implied theory of change would have a significant positive relationship between their scores. Spearman's rank correlation coefficient was used to determine if there were any significant associations between respondents’ scores (Table 2; see Supplementary Table S1 for correlation coefficients and P values). Summary statistics were calculated with Excel (Microsoft, Redmond, USA), and Spearman's rank correlation coefficient was computed in R v. 3.0.1 (R Development Core Team, 2016).
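As an illustration of the correlation step, the following Python sketch computes Spearman's rank correlation coefficient as the Pearson correlation of average ranks, which handles the tied scores that summed Likert responses often produce. The analysis in the study was run in R; this sketch is a hypothetical equivalent, its function names and example scores are assumptions, and it does not compute P values.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation applied to average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical category scores for six respondents (not study data).
perception_of_control = [12, 18, 15, 20, 9, 15]
work_environment = [10, 16, 14, 19, 8, 13]
rho = spearman_rho(perception_of_control, work_environment)
```

A rank-based coefficient suits summed Likert scores because it assumes only that the scores can be ordered, not that they are normally distributed or evenly spaced.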

Table 2 Significant and non-significant correlations between scores from the questionnaire (Spearman's rank correlation), where 0 indicates non-significant correlation at P > 0.05.

* P < 0.05; ** P < 0.01; *** P < 0.001

Data collection

Ten planners were interviewed and asked about their recollections of the aims of the training programme during their involvement, from 1961 to 2015. Sixteen participants, 13 of whom were Mauritian, were interviewed, having participated in courses during 1977–2014. We sent 98 questionnaires by email to Mauritian nationals who had participated in one or more Durrell courses during 1977–2014 in Jersey or elsewhere, and people of other nationalities who had participated in Durrell courses in Mauritius during 1991–2014. Of these, 54 individuals (55%) returned completed questionnaires, providing 75 individual course assessments; 57% of respondents were Mauritian and 43% were international.

Results

Did trainees get what they wanted?

Trainees were asked what they had hoped to gain from their course, and whether they perceived they had attained that. The majority of respondents achieved what they had hoped to, except in terms of preparation for a new role: 53% of respondents who hoped to improve their career prospects did not feel their course had prepared them for a new role. Overall, however, respondents perceived that they were getting what they wanted (Fig. 1).

Fig. 1 The number of trainees who desired a particular outcome from a given course, and the percentage who did and did not perceive they had achieved their desired outcome in terms of various elements of capacity building (n = 75 trainees).

A Mauritian participant explained why they felt a 10-day course focused on island species conservation was so beneficial for networking:

Probably I wouldn't at that time have thought about that, but you have participants from different places, like governments… you get ideas and things together, and there's so many sides you can get from others because everybody will kind of share things from what they're experiencing.

Perception of control can generally be defined as a trainee's belief that they can effect change in their professional lives. One participant described their experience on the 3-month Durrell Endangered Species Management course in Jersey, which they attended early in their conservation career:

For me that was the turning point…That was the real spark. It was there that I kind of fully comprehended what I was doing…And also, you come from a country like Mauritius, you think you are one of those isolated islands you know that nobody knows of …and you kind of say, well, what we're doing here is not isolated, it's actually quite important, and people recognize it, the work that we do …I really felt I could do more. I should do more.

Another participant described their improved confidence in communication:

I was very afraid, talking in front of people, doing a presentation, I was so petrified, and then going there I did several presentations, I was more confident…When somebody's coming here I can talk to him…have better communication. And also when there is a new staff coming here I know how to talk to [them], if there's something wrong, I know…how to say it is wrong. Not, ‘It is wrong!’ No, I know how to talk about that.

One participant described why they attended the endangered species management course:

I [didn't] want to end up in conservation stuck at the same place because of a qualification that prevented progress…I didn't have any qualification in conservation.

Another participant described their disappointment in career progression following the course:

After I came back, I was hoping that I would be promoted or I would change projects or something, but nothing happened. I was quite disappointed. Because for me, it was like I had achieved something … and I thought I would be, like have some more responsibility, doing something different or apply what I've learned, but nothing happened. I was very disappointed.

The first Durrell trainee described his career path following training in Jersey:

So that's how in 1976 they advertised—the government of Mauritius—advertised the scholarship for Wildlife Preservation Trust at the zoo. At that time I was teaching, I was an education officer, teaching biology up to high school certificate level… and I stayed, you know, almost 10 months in Jersey…The ministry created what they called a conservation unit [in 1990]. Established a conservation unit, just administratively, and I was asked to lead that… This is when I proposed the creation of the National Park… in June 1994 the park was created, was officially established, and I was appointed in August 1994 as the first director of National Parks…I'm happy that I've had a good career, thanks to the initial training I got.

Validity of the implied theory of change

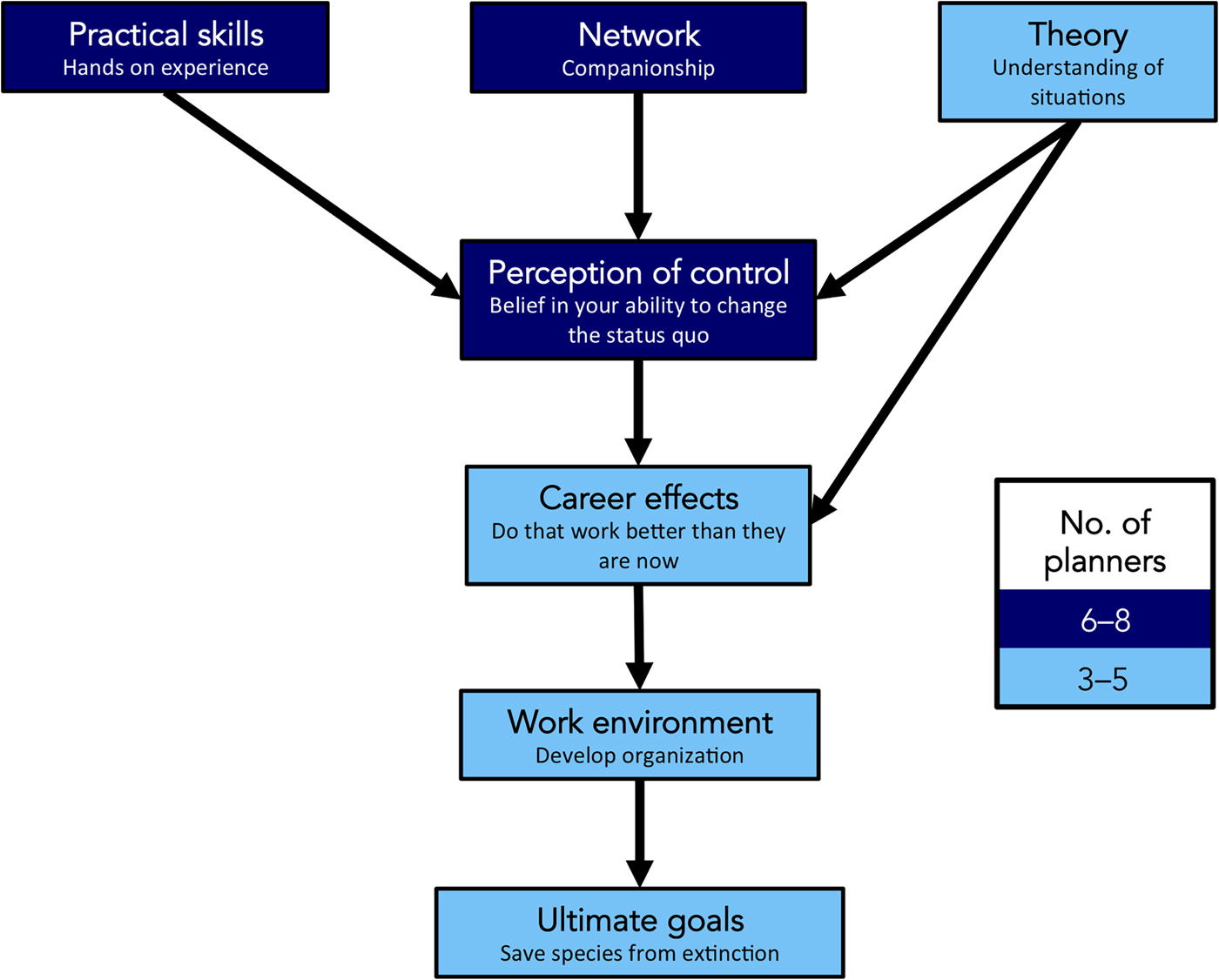

No explicit theory of change was found in Durrell's internal literature, so the implied theory of change was constructed from interviews with planners. We found that planners believed if trainees were taught practical skills and theory, and were exposed to networking with other students, this would lead to an increased belief that they could effect change (perception of control), which would lead to them performing their jobs better and moving to new roles, affecting the overall performance of their organization and ultimately saving species from extinction. The core of the implied theory of change, centring on personal effects that lead to external change, has been a part of the ethos of the training programme consistently since the 1970s (Fig. 2).

Fig. 2 Visual representation of the implied theory of change. The shading indicates how many planners mentioned each element (n = 10).

Based on the results from the questionnaire and participant interviews, the validity of the implied theory of change was tested, both for individual elements and the linkages between elements (Fig. 3). Both perception of control and work environment scores had significantly positive correlations with practical skills and career effects, and with each other (Table 2). Neither theory nor networking scores were significantly correlated with any other score.

Fig. 3 Implied theory of change (ITOC), with each box representing the degree to which a particular aspect was enhanced by training, according to questionnaire data, and arrows indicating which connections between aspects are quantitatively supported (based on the results in Table 2).

All elements of the implied theory of change were positive, showing that a majority of respondents perceived an increase in their capacity. The connection between the theory and any other section was not supported, however, nor was the connection between networking and perception of control.

Discussion

Our findings add to the available knowledge regarding the intentions behind, and the perceived impacts of, a long-term conservation training programme. The intentions of both Durrell and trainees changed little over time, although they broadened to some extent (objectives 1 and 3). Both Durrell and trainees desired practical skills, theory, improved perception of control, improved career prospects, and to influence work environments (objective 2), and both achieved their intended outcomes of training overall, with the exception of preparation for new roles (objectives 4 and 5).

Implied theory of change

Although the implied theory of change has been relatively consistent over time, a limitation must be acknowledged: a planner may have mentioned several topics, but their personal emphasis is not depicted. Also, the theory is based on hindsight, and planners' perceptions may have shifted over time. The implied theory of change may therefore appear to have been more consistent over time than it was in reality. The lack of contemporaneous documentation means that it is not possible to validate the planners’ impressions about what their aims were at the time.

Respondents’ positive evaluations of the impact of training affirm that in general the individual sections of the implied theory of change are logical, achievable and potentially replicable by other organizations. Despite not having a theory of change beforehand, as suggested by Simister & Smith (2010), Durrell was still successful overall in achieving its desired impacts. This is encouraging, as many organizations have undertaken capacity building activities without an expressed theory of change (James, 2009; Manolis et al., 2009). Our findings show that it is possible to construct a theory of change post hoc for use in monitoring and evaluation.

The lack of a significant association between a respondent's gain in practical skills and being more effective in their job, despite both of these having significant correlations with perception of control, implies that practical skills must feed into perception of control before effectiveness at work will be influenced, as implied by the theory of change. This highlights the importance of perception of control as a critical step in maximizing the impact of training.

The concept that improved theory leads to more effective individuals is not supported by our findings. In interviews many participants explained that the courses did not necessarily change their actions but helped them understand the theory behind them. Theory may be a key aspect of training but to believe it is changing performance at work appears to be misleading. Similarly, networking may be an intended outcome but it should not be expected to influence other effects of the course. Despite many interviewed participants mentioning that they enjoyed meeting the other course participants, there was no significant correlation between networking scores and any other element, and therefore our findings do not support the implication that networking leads to greater collaboration (Morrison et al., 2013). However, it is possible that networking has affected participants in other ways, such as exposing them to new ideas, cultures and contexts. It should also be noted that networking was not a taught aspect of courses; planners hoped that networking would happen organically, but teaching trainees how to network effectively could change these results. Networking is more complex than simply counting the number of individuals trainees are still in contact with, and a more nuanced investigation of this may be beneficial in the future.

Career effects and work environment

Of the specific increases in capacity desired by trainees (Fig. 1), the highest level of dissatisfaction was with career progression; 53% of trainees who desired improved career prospects felt the course did not prepare them for a new role. However, whether this may be considered a failure or not depends on the training provider's emphasis, priorities and expectations. In some cases a trainee may feel more capable, have more skills and better knowledge of theory, and be better at their job, but the opportunity to progress within their organization or preferred country may not be available. Additionally, it is assumed that desiring greater career prospects would indicate a desire for a new role but this may not be true for all participants, as some may feel that although their position has not changed their prospects have improved. We recommend further investigation of satisfaction with career prospects.

Given the significant interactions between work environment scores and perception of control, career effects and practical skills, the importance of the environment in influencing the impact of training warrants consideration, as has already been suggested (Barrett et al., 2001; OECD, 2006). In a positive environment the effects of training may be realized through increased interest among managers and colleagues, and opportunities to use skills gained from the course. If the work environment is negative it tends to dampen the effects of training without being influenced itself. However, work environments, despite their importance, are often beyond the scope and control of a training course. We recommend that investigating ways to prepare individuals for returning to negative work environments be considered, as this will affect nearly all other impacts of the course.

Both career progression and work environment highlight the tension between training focused on the individual and training focused on the organization. If training is intended primarily to influence an organization, the organization should be willing to support trainees upon their return, and trainees should be poised to influence their organization. Individuals on the cusp of career advancement could be identified and trained. However, if individuals are the primary focus of training, then training providers need to devise methods of supporting the individual, who may be returning to a negative or difficult work environment but who, with proper support, may have potential for effecting change either in their current organization or in another organization. Whether the focus is on individuals or organizations will influence course design, and monitoring and evaluation, and ideally this should be specified prior to training.

Impact on Durrell Conservation Academy

Drawing on many of the findings of this research, in particular the impact of training on perceptions of control, Durrell has developed a forward-looking theory of change that will be used to inform the training developed and how it is monitored and evaluated. This study has also been an impetus for a programme to develop training impact indicators to add to the existing Durrell Index, which is currently focused on species conservation impacts. These indicators and the rationale for them will be published online, to contribute to the broader understanding of impact measurement within the conservation training sector.

Methods employed in the study

The mixed-methods approach we used to evaluate training in conservation was relatively quick and inexpensive, although not without limitations. The self-assessment of participants is inherently biased and difficult to triangulate, and with little evidence to corroborate what planners recall about their intentions we cannot know if our reconstructed theory of change is accurate. In the future, collecting data from the colleagues of training participants may add robustness and provide further insights into how training affected their capacity. However, the mix of questionnaires and interviews with both providers and recipients of training facilitated evaluation of Durrell's perceived intentions both statistically and through stories, and ultimately gave direction and focus to the training programme. We believe this combination of methods is replicable for evaluating other capacity-building programmes.

We have shown that even when conservation activities are already underway it is still possible to construct a theory of change to guide monitoring and evaluation. Our findings highlight the importance of the work environment and the way this interacts with other course effects. Training providers must consider how to address this or they risk wasting their resources. The effect on trainees' perception of control is an integral part of the impact of training. Skills and theory alone do not suffice. Motivation and confidence are vital, and training can instil in conservation practitioners a belief that they can make a difference. As the sector becomes more challenging, it becomes increasingly important that conservationists believe they can have a positive impact on the world around them.

Acknowledgements

We thank the Mauritian Wildlife Foundation, Wildlife Preservation Canada and the government of Mauritius for their support and direction, and the study participants for their insights and engagement.

Author contributions

BS, JC and EJMG designed the study. BS conducted the interviews and the data analysis, and JC and EJMG provided supervision, guidance and support.

Biographical sketches

Brittany Sawrey is interested in how leadership affects conservation outcomes, and the intersection of conservation and religious organizations. Jamie Copsey has been working in the field of biodiversity conservation training and education for over 17 years. His current research interests concern human motivations for natural resource use, conservation management and leadership and how we understand and measure capacity-building efforts. E. J. Milner-Gulland is the Tasso Leventis Professor of Biodiversity and leads the Interdisciplinary Centre for Conservation Science at Oxford University (http://www.iccs.org.uk).