Industrial and organizational (I-O) psychology is a field that is broadly concerned with improving organizations and the lives of people who work in organizations (The Society for Industrial and Organizational Psychology, 2019). To serve these goals, the work of I-O psychology fundamentally revolves around the science and practice of organizational interventions (Muchinsky & Howes, 2019), including studying, developing, implementing, testing, and refining organizational interventions, as well as the theories that these interventions are based upon. We define organizational intervention as any planned change in policies, programs, procedures, or systems intended to shift employee attitudes or behaviors in organizations (Porras & Silvers, 1991). In I-O psychology, the focus on organizational interventions cuts across industries. Whether one is an external consultant responsible for implementing an off-the-shelf selection battery, an internal practitioner concerned with developing new safety training resources, or an academic conducting a meta-analysis on the relationship between work–family policies and employee well-being, all of these I-O professionals rely on the science and practice of organizational interventions to execute their work.

By developing and implementing organizational interventions, and the theories that support these interventions, I-O psychologists have demonstrated a range of benefits for workers and organizations over the last century (Katzell & Austin, 1992). Consider, for example, the unique benefits that interventions such as intelligence testing, goal-setting techniques, and flexible work-arrangement policies often yield for organizations and individuals. At the same time, each of these interventions has in some instances been associated with noteworthy side effects, such as increased instances of unethical behavior, illegal discrimination against minority groups, and reduced career opportunities for individuals. In the present effort, we call attention to the issue of side effects more broadly as a widespread, pernicious, and poorly understood phenomenon in the field of I-O psychology that deserves more of our attention.

Although I-O psychologists make up a small minority of psychologists, the field has a disproportionate and growing influence on the daily lives of millions of people (U.S. Department of Labor, 2019). For example, I-O psychologists may be found in the HR departments of virtually every Fortune 500 company and the federal government—working as internal or external consultants—or conducting research in universities or institutes. When one considers that large organizations employ approximately one half of the U.S. labor force and that I-O psychologists in all likelihood helped design the organizational interventions that support the selection, development, and management of those employees, it becomes clear that even side effects that are low in probability or magnitude of harm have the potential to negatively affect a large number of people.

Of course, we are not the first to call attention to the unintended consequences of acting on social systems (Merton, 1936), or more specifically the side effects that are associated with psychological research and interventions (Argyris, 1968). In this paper, we use the term side effects to describe adverse, unintended events that individuals or groups experience in association with the implementation of organizational interventions. An adverse event is an undesired change in some secondary criterion or criteria. Secondary criteria can range from “hard” (i.e., more visible) outcomes including behavior, performance, and organizational metrics to “soft” (i.e., less visible) outcomes like attitudes, cognition, affect, and health. Adverse events differ from cases in which an intervention fails to produce the intended change in the primary criterion or criteria of interest (e.g., a safety training program that fails to improve safety communication), which occur frequently (LaClair & Rao, 2002). We simply refer to such an event as a failed intervention. Although a failed intervention may be considered an unintended consequence, we do not consider it a side effect. Put differently, if taking a painkiller fails to eliminate a headache, we would not conclude that the continuing headache is a side effect of the drug.
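
The distinction between a failed intervention and a side effect can be made concrete with a minimal sketch. The function name `classify_outcome` and its two arguments are hypothetical labels introduced here for illustration; they are not terms from the cited literature, and the sketch assumes that any worsening of a secondary criterion was unintended.

```python
def classify_outcome(primary_improved: bool, secondary_worsened: bool) -> set:
    """Label an intervention outcome using the definitions given in the text.

    primary_improved: did the primary criterion change as intended?
    secondary_worsened: did some secondary criterion change in an
        undesired (and, by assumption, unintended) direction?
    """
    labels = set()
    if not primary_improved:
        # No intended change in the primary criterion: a failed intervention.
        labels.add("failed intervention")
    if secondary_worsened:
        # Undesired change in a secondary criterion: a side effect.
        labels.add("side effect")
    return labels


# The painkiller analogy: the headache (primary criterion) persists,
# and nothing else gets worse, so the outcome is a failure, not a side effect.
painkiller_case = classify_outcome(primary_improved=False, secondary_worsened=False)
```

The two labels are independent under these definitions: an intervention can succeed on its primary criterion and still carry a side effect, or fail and carry one as well.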

This perspective piece takes a three-part approach to examining the issue of side effects that are associated with organizational interventions. First, drawing on published research and historical cases, we highlight noteworthy examples of organizational interventions in which side effects have been studied or discussed. Second, we identify a few sociocultural forces that may be contributing to the inadequate attention devoted to the study of side effects. Third, we propose recommendations for the future study, monitoring, and advertisement of side effects. In offering this perspective, we hope to stimulate conversations within I-O psychology about appropriate strategies for studying, preventing, and educating others about intervention side effects.

Examples

One of the first issues to emerge when considering the side effects that are associated with organizational interventions is that of practical significance. How frequently do such side effects emerge, and are these effects strong enough to elicit genuine concern? To satisfactorily answer these questions, we need a large and representative data set or, better yet, multiple data sets bearing on particular types of organizational interventions. With a few exceptions discussed here, such data sets do not yet exist. Thus, to demonstrate the practical significance of developing a deeper understanding of side effects, we review a handful of noteworthy examples in the published literature that represent a range of organizational interventions as a proof of concept. In particular, we discuss the side effects that are associated with eight types of organizational interventions that were either once popular or continue to be popular today. Due to their popularity, many of these interventions have been widely implemented, suggesting that even if the base rate of some side effects is low, a sizable share of the total working population may have incurred their consequences.

Scientific management

Scientific management was introduced in the early 20th century by Frederick Taylor and later refined and applied by Lillian and Frank Gilbreth and others. Although I-O psychology was not formally recognized as a field at this time, our histories count Taylor and the Gilbreths as pioneers whose work on scientific management paved the way for the formal emergence of the field (for a review of early figures in I-O psychology, see van de Water, 1997). Scientific management does not refer to a specific organizational intervention but rather to a set of principles that have informed organizational interventions. For example, scientific management principles prescribe the timing of tasks to eliminate waste as well as incentive systems in which workers are paid according to the amount produced (Derksen, 2014). Proponents of scientific management claimed that these principles, when applied systematically, could be expected to boost worker productivity and pay without significantly raising costs, delivering a win–win situation for all parties (Taylor, 1911).

However, scientific management has now come to serve as a historic example of intervention side effects in organizations. In particular, it is important to consider the unintended consequences to physical and mental health when a person is asked to repeat the same mundane physical motion hundreds or thousands of times per day (Parker, 2003), which is exactly the type of task that resulted from the application of scientific management principles to industrial work. Of course, these unintended consequences did not go unaddressed by all proponents of scientific management. To their credit, the Gilbreths considered the long-term safety and health of workers as a criterion at least as important as short-term gains in productivity, leading to the design of tasks that could be repeated efficiently with lower probability of injury. In a now famous case, the Gilbreths redesigned the work of bricklayers by elevating the height of the brick stand. This small change allowed workers to lay more bricks in a day while minimizing back injuries that were common in the job at the time.

Thus, through systematic study, the Gilbreths demonstrated that some of the side effects of scientific management could be circumvented. Nevertheless, not all workers were fortunate enough to experience interventions designed by such careful hands. We are not aware of any data from this period reflecting the total number of organizations that implemented scientific management principles, nor are we aware of data that clearly demonstrate the frequency or intensity of side effects that are associated with scientific management interventions. However, public concern over such side effects was strong enough to provoke Congress to form a committee to investigate the issue in 1914. The findings of the investigation, which involved observations of workers and managers at 30 field sites spanning multiple companies (see Hoxie, 1916), led to recommendations to ban specific time-keeping practices that were considered too harmful to worker health and well-being.

Intelligence testing

As work has become increasingly fast paced and complex, advancing our understanding of intelligence is becoming more important to organizational success (Scherbaum et al., 2012). Intelligence is a multifaceted construct that evolved out of many research traditions with different definitions and foci. Consequently, the methods that are used to operationalize intelligence take many forms. In this discussion, we only focus on traditional assessments of g, or general mental ability. Although this approach to intelligence testing has been criticized on a number of fronts (e.g., Schneider & Newman, 2015), we focus on the use of g because such tests continue to be used frequently in employee selection systems. Scores from general mental ability tests commonly represent “developed abilities and are a function of innate talent, learned knowledge and skills, and environmental factors that influence knowledge and skill acquisition, such as prior educational opportunities” (Kuncel & Hezlett, 2010, p. 339).

The popularity of intelligence testing interventions in organizations has largely been driven by the success of general mental ability tests in predicting employee performance (Scherbaum et al., 2012). In their landmark meta-analysis, Schmidt and Hunter (1998) demonstrated the criterion-related validity of g scores across a variety of job types and industries. Based on these results and later work (e.g., Bertua et al., 2005; Richardson et al., 2012; Schmidt & Hunter, 2004), it is now well accepted that intelligence testing is one of the most powerful tools available to organizations for predicting individual performance across educational and work domains.

Despite the benefits of intelligence testing, perhaps no intervention in I-O psychology has generated more debate (Murphy et al., 2003). Much of this debate focuses on the unintended social consequences of using intelligence test scores to inform real-world decisions (Messick, 1995). It is now well established, for example, that average test scores associated with traditional intelligence tests tend to be lower for some minority groups (Hough et al., 2001; Roth et al., 2001). Thus, on average, organizations that used these tests to select applicants increased their odds of discriminating against minority group members (Pyburn et al., 2008). When one considers that the majority of Fortune 500 companies, as well as most institutions of higher education, now use some form of cognitive ability testing to inform applicant selection decisions, the potential for side effects stemming from this one type of organizational intervention is noteworthy.
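
The step from a difference in average scores to a difference in selection outcomes can be illustrated with a short calculation. The sketch below is only an illustration under stated assumptions: scores in each applicant group are treated as normally distributed with equal spread, everyone faces the same cutoff, and the specific values (a gap of half a standard deviation and a cutoff half a standard deviation above the higher mean) are hypothetical and not drawn from the studies cited above.

```python
from statistics import NormalDist


def selection_rate(group_mean: float, cutoff: float, sd: float = 1.0) -> float:
    """Share of a group scoring at or above a common cutoff.

    Assumes scores within the group are normally distributed.
    """
    return 1.0 - NormalDist(mu=group_mean, sigma=sd).cdf(cutoff)


# Hypothetical values, in standard-deviation units.
higher_mean = 0.0
lower_mean = -0.5   # assumed gap between group averages
cutoff = 0.5        # one cutoff applied to every applicant

rate_higher = selection_rate(higher_mean, cutoff)
rate_lower = selection_rate(lower_mean, cutoff)
```

Under these assumptions `rate_lower` is smaller than `rate_higher`, and raising the cutoff widens the relative gap, which is why a single cutoff applied to groups with different averages yields different selection rates even when the same rule is used for everyone.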

A number of approaches have been proposed with the goal of mitigating the side effects of traditional intelligence tests (e.g., score banding, alternative measurement methods). Some of these approaches have demonstrated partial success (Ployhart & Holtz, 2008), but none have been found to eliminate all concerns of bias. The impressive volume of research by I-O psychologists on the unintended consequences of intelligence testing demonstrates that this is one particular domain where side effects have been studied and monitored closely.

Forced ranking

Forced ranking is a performance management intervention that grew in popularity during the final two decades of the 20th century but is subject to mixed reception today. During its heyday, it was estimated that over 60% of the Fortune 500 used the practice in some form (Grote, 2005). In a forced ranking system, all employees within the organization are rank ordered (i.e., compared with one another), typically based on performance ratings from supervisors, in order to identify the lowest and highest performing employees (Wiese & Buckley, 1998). Next, managers use this information to reward a certain percentage of performers at the top (e.g., bonuses, promotions) and to remove a percentage of employees at the bottom of the distribution (e.g., termination). Such a process may be repeated on an annual basis or less frequently to “clean house” and start fresh with promising new talent. The logic underlying forced ranking is straightforward: by rewarding the strongest and eliminating the weakest performers, the talent pool will get stronger over time, boosting organizational productivity (Scullen et al., 2005).
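
The procedure just described can be sketched in a few lines. This is a minimal illustration, not a description of any particular organization's system: the function name `forced_ranking`, the 20% and 10% shares, and the example ratings are all hypothetical, since the text specifies only that "a certain percentage" is rewarded or removed.

```python
def forced_ranking(ratings, top_share=0.2, bottom_share=0.1):
    """Split employees into rewarded and removed groups by rank order.

    ratings: dict mapping employee label -> supervisor rating (higher is better).
    top_share, bottom_share: hypothetical fractions of the workforce to
        reward and to remove; real systems choose their own values.
    """
    # Rank from highest to lowest rating; tied ratings keep dictionary order.
    ranked = sorted(ratings, key=ratings.get, reverse=True)
    n_top = int(len(ranked) * top_share)
    n_bottom = int(len(ranked) * bottom_share)
    rewarded = ranked[:n_top]
    removed = ranked[len(ranked) - n_bottom:]
    return rewarded, removed


# A hypothetical team in which every rating is high on a 5-point scale.
team = {"A": 4.9, "B": 4.8, "C": 4.8, "D": 4.7, "E": 4.7,
        "F": 4.6, "G": 4.6, "H": 4.6, "I": 4.5, "J": 4.5}
rewarded, removed = forced_ranking(team)
```

Because the split depends only on rank, someone is always placed in the bottom group regardless of absolute performance, and ties are broken arbitrarily (here, by the order in which employees are listed).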

Although forced ranking systems have been shown to improve organizational efficiency in some instances, many organizations have also experienced noteworthy side effects associated with their implementation (Blume et al., 2009). For example, Dominick (2009) noted that the use of forced ranking systems is negatively related to employee morale and positively related to reports of inaccuracy and unfairness in performance reviews. In addition, the use of such systems has also been found to increase the probability of adverse impact against ethnic minorities (Giumetti et al., 2015). Furthermore, having a forced ranking system is positively related to the number of lawsuits brought against organizations by their employees relating to promotion, compensation, or termination decisions (Adler et al., 2016).

Although modern proponents of forced ranking systems might argue that side effects such as these only occur in instances where systems are poorly implemented, Bates (2003) proposed that even a well-designed forced ranking system can drive away good performers who fear unfair rankings or simply propel poor workers who are skilled at office politics to success. Clearly, although some organizations may benefit from temporary and carefully monitored periods of forced ranking interventions, for many organizations the side effects outweigh the advantages (Chattopadhayay & Ghosh, 2012). Although systematic data on the issue are lacking, the declining popularity of forced ranking systems over the last two decades is a testament to the concern by scholars and practitioners over potential side effects.

Goal setting

Goal setting refers to the process of specifying objectives related to performance on some task as well as planning the steps needed to facilitate execution. According to goal setting theory (Locke & Latham, 1990, 2002, 2006), goals have a higher probability of motivating task performance when they are perceived as specific, measurable, challenging, accepted, and tied to desired outcomes. A number of meta-analyses have established positive relationships between goal setting and task performance among individuals and groups (Kleingeld et al., 2011; Mento et al., 1987; Tubbs, 1986).

Nevertheless, goal setting is not always associated with positive outcomes. For example, Kleingeld et al.’s (2011) meta-analysis found that when groups are given goals focused on individual task performance, overall group performance tends to suffer. Further work by Ordóñez et al. (2009) discusses how goal setting can increase risky decision making and unethical behavior and decrease intrinsic motivation.

A case study in which goal setting appeared to contribute to undesirable outcomes can be observed in the 2016 Wells Fargo scandal, in which thousands of employees were terminated for opening over two million fake customer accounts. Following an independent investigation, the widespread fraud was attributed in part to the aggressive sales goals enforced by senior managers (Kouchaki, 2016). Although goal setting interventions can serve as powerful motivators of task performance, their ubiquitous presence in organizations, from the C-suite to the factory floor, suggests that goals are routinely set and then tied to incentive systems without considering potential side effects (Kerr, 1975). Although recent examples of research on the side effects of goal setting interventions are encouraging (e.g., Ordóñez et al., 2009), we still have much to learn about the contexts in which goals can help or backfire in organizations.

Charismatic leadership

By influencing followers toward goal achievement, leaders have an important influence on organizations (Kaiser et al., 2008). Charismatic leadership is a style of social influence characterized by inspiration, confidence, optimism, and the articulation of a future-oriented vision (Strange & Mumford, 2002). Since charismatic leadership emerged as a popular topic of study in the 1980s and 1990s, the literature has overwhelmingly emphasized its benefits (van Knippenberg & Sitkin, 2013). For example, a growing body of meta-analytic work demonstrates that charismatic leadership tends to be positively related to important outcomes like employee job satisfaction (DeGroot et al., 2000), motivation (Judge & Piccolo, 2004), performance (Wang et al., 2011), and innovation (Watts et al., 2020). Thus, it comes as no surprise that charismatic leadership interventions have become increasingly popular, such as assessing the charisma of managerial candidates or focusing on boosting charisma via leadership development programs (Antonakis et al., 2011).

Nevertheless, some researchers contend that there is a dark side to charismatic leadership that must be considered as well. Namely, there is a concern that the inspirational visions promoted by such leaders may in some instances lead to poor decision making (Conger, 1990; Fanelli et al., 2009), as well as increase follower dependence on the leader (Eisenbeiß & Boerner, 2013). Another concern is that charisma might serve as a tool by which personalized leaders (those focused on the achievement of personal goals at the expense of others’ interests) bring about darker visions (Howell & Avolio, 1992).

Some recent experimental work supports the conclusion that people exposed to models of personalized charismatic leadership experience noteworthy side effects such as impaired problem recognition (Watts et al., 2018). Further, historiometric work by O’Connor et al. (1995) has shown that the emergence of charismatic leaders with personalized motives tends to be associated with harmful outcomes for organizational members and society more broadly. Perhaps then, organizations should not be encouraged to hire, promote, and develop charismatic leaders as a blanket recommendation without first giving careful consideration to these leaders’ values and motives. In other words, charismatic leadership interventions may represent an example in which some types of side effects emerge upon interaction with specific individual differences.

Group cohesion

I-O psychologists have investigated a wide range of models for studying the group processes that facilitate and inhibit effectiveness (Driskell et al., 2018; Ilgen, 1999; Sundstrom et al., 1990). One such factor that has been studied extensively is cohesion, or the extent to which group members feel committed to one another as well as the task at hand (Goodman et al., 1987). The positive relationship between group cohesion and desired outcomes, such as group satisfaction and performance, has been demonstrated in meta-analyses spanning a variety of organizational contexts (e.g., Carron et al., 2002; Evans & Dion, 1991; Gully et al., 1995; Hülsheger et al., 2009). A number of popular interventions and training programs have emerged over the last few decades that are intended to boost group cohesion.

Nevertheless, there are good reasons to suspect this rosy portrait of group cohesion is overly simplistic. First, early research on the psychology of conformity suggested too much cohesion may inhibit group functioning. Groupthink refers to the tendency of members of a cohesive group to agree with one another rather than objectively appraise their current plan or decision (Janis, 1972). A number of adverse events have been attributed to groupthink, including widespread fraud at Enron (O’Connor, 2002) and the Challenger disaster (Moorhead et al., 1991). Although recommendations for reducing groupthink have been proposed (e.g., Miranda & Saunders, 1995; Turner & Pratkanis, 1994), it remains a pervasive problem that can inhibit decision making among highly cohesive groups.

Social network theories provide another lens for understanding the potential side effects of group cohesion. Members of highly cohesive groups tend to demonstrate dense networks; that is, they are groups characterized by many frequent and close interactions among members (Reagans & McEvily, 2003). Work by Wise (2014) and Lechner et al. (2010) demonstrates that moderate levels of group cohesion tend to facilitate performance within networks, but once cohesion reaches a certain threshold, performance begins to suffer. At this time, we are unaware of data bearing on the prevalence with which group cohesion interventions might result in such unintended consequences. As a precautionary measure, however, employees who are exposed to group cohesion interventions may benefit from education concerning the potential side effects of too much cohesion.

Bias training

As organizations increasingly prioritize diversity and inclusion, training interventions designed to increase employee awareness of biases, prejudices, stereotypes, and socially undesirable attitudes are growing in popularity (Grant & Sandberg, 2014). For example, in 2018 Starbucks made headlines when it closed all of its U.S. stores for a day to train employees about biases. These programs have good intentions. The rationale for such training is that increasing awareness of biases may help to improve decision making and make organizations more welcoming of diversity, both in terms of demographics and opinions.

Such training efforts can be successful at improving individual awareness of some types of biases, such as cognitive decision-making biases (Mulhern et al., 2019). However, a number of studies suggest that interventions focused on the reduction of stereotypes and implicit attitudes may be less effective (Legault et al., 2011). For example, Skorinko and Sinclair (2013) found that the technique of perspective taking, an approach that has been shown to reduce explicit expressions of stereotypes (Todd et al., 2011), may in some cases strengthen implicitly held stereotypes.

Similarly, interventions focused on reducing stereotypes about sexual harassment have in some cases been found to increase gendered beliefs (Tinkler et al., 2007). In other cases, such interventions have resulted in a decreased willingness to report instances of sexual harassment, decreased perceptions of harassment, and increased victim blaming (Bingham & Scherer, 2001). Such training programs are rapidly becoming ubiquitous in large organizations and are often required on an annual basis. Given the widespread prevalence of such training programs, more attention needs to be devoted to understanding the base rate of side effects that are associated with these programs, the longevity of these effects, and strategies for reaching the populations that are more resistant to change.

Flexible work policies

Flexible work policies refer to formal arrangements that organizations provide to their employees that are intended to increase employee control over when and where they work (Shockley & Allen, 2007). Examples of flexible work policies include compressed work weeks (e.g., working 10 hours a day for 4 days each week), working from a distance either full or part time, flexible scheduling, and special leaves of absence for personal or health reasons.

Flexible work policies are held to benefit organizations by improving employee health and well-being, reducing costs, and improving employees’ ability to balance work and family roles (Halpern, 2005). There is empirical support for some of these propositions. For example, a meta-analysis by Allen et al. (2013) showed that flexible work arrangements have a small, but negative, relationship with work-to-family conflict, suggesting that such policies may help some employees balance work and family responsibilities.

The research on flexible work policies also points to a number of unintended consequences, however. For example, employees who take a leave of absence to bond with a newborn, care for a parent, or recover from illness may encounter a number of problems when they return to work. Judiesch and Lyness (1999) found that managers who took a leave of absence, regardless of the reason for it, experienced less wage growth, fewer promotions, and lower performance ratings than those who did not. In a meta-analysis focused on the effects of telecommuting, Gajendran and Harrison (2007) found that employees who telecommute more than 2.5 days per week tended to report lower work–family conflict but worse relationships with coworkers. Although flexible work policies come in many forms, on the whole they are becoming increasingly prevalent. Despite their popularity, flexible work policies should not be viewed as universally beneficial for individual employees and organizations. Managers and employees should consider their particular job and organizational contexts (e.g., climate, type of work) prior to their implementation and use.

Why side effects get ignored

In reviewing the eight examples above, a noteworthy conclusion comes to the fore: When side effects are intentionally and systematically studied, we learn valuable insights that improve the success of organizational interventions while mitigating their unintended consequences. For some of these examples (e.g., traditional intelligence testing), I-O psychologists have played a critical role in shedding much light on side effects. However, in other domains (e.g., charismatic leadership), the science of side effects has, with a few exceptions, remained stagnant. We contend that, on the whole, the field of I-O psychology has not devoted the attention to side effects that this subject deserves. Indeed, we are often aware of potential side effects, but unlike primary effects, we rarely invest the time and resources needed to systematically understand them.

We suggest there are at least seven social forces that are to blame for this stagnation of knowledge. Four of these forces are internal to the I-O profession, meaning they stem from the complex nature of I-O work. We label these forces the challenge of causal attribution, simplification bias, theory mania, and divided loyalties. Three additional forces are external, meaning that they are a function of the unique organizational or market environments in which I-O psychologists operate. These forces include the marketing problem, lack of accountability, and resource constraints. Together, these forces may be inhibiting the field’s willingness to study intervention-associated side effects. By naming these forces, we hope at the very least to come to a better understanding of the complexity of I-O work and the context in which this work often unfolds. More importantly, understanding these forces may help us to develop strategies for identifying and managing potential side effects.

The challenge of causal attribution

First, the difficulty of drawing strong causal conclusions may discourage the study of intervention-associated side effects in organizations. Put differently, drawing causal conclusions about side effects (their magnitude, probability, and the specific conditions affecting these attributes) is no simple task. In the field of medicine, researchers are well aware of the challenges involved in determining whether some treatment contributed to an adverse event. In most cases, there is little talk of causation, only association. If an adverse event is reported by a patient or physician following the administration of some treatment, this event is recorded as a potential side effect. As the number of reports associated with some treatment increases, the researcher becomes more confident that the side effect may be caused by the treatment. However, in any nonexperimental or quasi-experimental design, the process of ruling out alternative explanations is fraught with challenges (Shadish et al., 2002). Adverse events may in fact be due to some third variable, such as differences in dosing or administration of the treatment, the simultaneous administration of other treatments, individual differences in the patient’s physiological makeup, differences in environmental factors (e.g., nutrition, exercise), or interactions among any number of these factors.

Establishing cause-and-effect relationships concerning the side effects of organizational interventions may be considered even more challenging, because the potential for extraneous influences is even greater. Organizational interventions occur in complex social systems with many individuals who influence one another in a myriad of ways. In addition, medical treatments tend to be standardized based on a set of preestablished guidelines and chemical formulas. The “formula” of an organizational intervention may vary considerably depending on the professional who designs or administers it as well as on the unique features of the intervention context. Thus, the complexity involved in establishing cause-and-effect relationships may discourage I-O psychologists from studying the side effects of organizational interventions.

Simplification bias

Second, simplification bias may be inhibiting the study of side effects in I-O psychology. Simplification bias refers to the human tendency to reduce the complexity of information in order to comprehend reality (Oreg & Bayazit, 2009). I-O psychology’s orientation toward simplification is probably one of necessity, stemming partly from the complexity involved in developing rigorous and useful scientific theories in uncontrolled and dynamic contexts (Popper, 1961) and also due to the challenge of translating research insights to managers who have oftentimes not been trained in the fundamentals of organizational research methods or statistics. Consider, for example, the question of whether an intelligence test demonstrates acceptable validity for use as an employee selection tool. I-O psychologists have been trained to think about such questions as a matter of degree, relying on a multifaceted collection of evidence (Messick, 1995). Nevertheless, managers ultimately require a black-and-white answer of yes or no.

Simplification bias may be inhibiting the study of side effects because of the added complexity involved in identifying side effects (see the challenge of causal attribution). Detecting side effects requires measuring changes across multiple criteria that may or may not appear immediately relevant to the primary criterion of interest. When measuring multiple criteria, research insights tend to become more complex. Thus, studying side effects may obscure what might otherwise be a straightforward interpretation about the value of some organizational intervention.

Further, as highlighted by the side effects associated with charismatic leadership and group cohesion, our failure to identify nonlinear trends may be limiting our understanding of the complexities of how side effects might operate. Understanding nonlinear relationships requires a commitment to complex thinking as well as an understanding of the alternative statistical procedures that are necessary to test these relationships. Thus, our pursuit of parsimony may be contributing to our lack of established knowledge in the domain of side effects.
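As a minimal illustration of what testing a nonlinear trend can involve, one common option is to add a squared term to a regression model. The symbols below are illustrative assumptions rather than notation drawn from a specific study: $X$ is the level of the predictor (e.g., group cohesion), $Y$ is an outcome of interest, and $e$ is an error term.

$$Y = b_0 + b_1 X + b_2 X^2 + e$$

When $b_2 \neq 0$, the fitted curve changes direction at $X^{*} = -b_1 / (2 b_2)$. A negative $b_2$ with $X^{*}$ inside the observed range of $X$ would be consistent with an inverted-U pattern, in which benefits level off and then reverse at high levels of the predictor. A purely linear model, by construction, cannot reveal such a pattern.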

Theory mania

Third, I-O psychology’s theory mania (its obsessive focus on deductive research) may be in conflict with the inductive approach required to study side effects. Although many top journals in the field have begun to institute policies that encourage inductive discovery, the norm of constraining research according to a set of a priori hypotheses remains the dominant focus of most published research in I-O psychology (Antonakis, 2017; Cortina et al., 2017; Spector, 2017). Thus, researchers who hope to publish in these visible outlets are better off focusing their efforts on deductive approaches to research and ignoring research on side effects.

The goal of studying side effects is to establish practical and reliable information about the frequency and severity of intervention-associated adverse events. Estimates of the frequency and severity of side effects are based entirely on observations and reports, not theory. Theory may have a role to play yet in explaining why particular side effects occur under certain organizational conditions. But knowledge in this area is not advanced enough to consider why at this point, only when and how much. Inductive discovery must be valued more highly if I-O researchers are going to invest resources toward the study of side effects.

Divided loyalties

Fourth, side effects associated with organizational interventions may be understudied by I-O psychologists due to divided loyalties inherent in much I-O work. Here we define divided loyalties as the condition of being responsible for serving two or more stakeholders who may or may not have conflicting interests. In this case, stakeholders commonly include oneself, coworkers, shareholders, the law, the field, and internal or external clients. Although financial conflicts of interest tend to receive the most attention in the media, nonfinancial conflicts emerging from competing values or objectives within a profession deserve even more attention because these types of conflicts reflect ambiguous and oftentimes unstated tensions that can covertly inhibit decision making (Lefkowitz, 2017; Thompson, 1993).

Such conflicts are particularly problematic for I-O psychologists (Anderson et al., 2001). Indeed, The Society for Industrial and Organizational Psychology’s mission signals a tension that has plagued I-O psychologists since the earliest days of the field: we are simultaneously tasked with improving organizations and improving the lives of individuals embedded within these organizations (Lefkowitz, 2017). We are human engineers concerned with demonstrating our business value to the firm, and we are psychologists who are deeply concerned with protecting the rights and well-being of individual employees.

The issue of divided loyalties emerges in a variety of forms and is likely inhibiting the study of side effects across practice and academe. In practice, I-O professionals are oftentimes tasked with championing the value of organizational interventions to managers and clients, and at the same time they may be responsible for evaluating the efficacy of these interventions. In such situations, information about potential side effects may be intentionally hidden or glossed over in order to focus on the more affirmative results. Perhaps a more likely scenario, however, is that in such situations data bearing on potential side effects are not collected at all. Discovering side effects and educating others about them may be seen as a service to science, but for many, serving science does not pay the bills.

Divided loyalties may also be inhibiting the study of side effects in academia, particularly in the form of power dynamics. Senior academics may be motivated to defend the value of organizational interventions and theories they have spent their careers developing, even in the face of newly emerging evidence of side effects that should also be considered. Junior academics may shy away from investigating side effects associated with established theories, methods, or interventions to pursue research directions perceived as more novel or to avoid offending senior colleagues who control access to coveted positions, publications, and awards.

The marketing problem

Fifth, the side effects of organizational interventions may be understudied as a result of I-O psychology’s marketing problem, the first of three external forces we discuss that emerge from the organizational or market environment in which I-O psychologists operate. The marketing problem refers to the lack of awareness among the general public about what I-O psychology is and, consequently, a lack of awareness about the value that I-O psychologists are uniquely qualified to provide (Gasser et al., 2004). In this context, any knowledge of side effects may be viewed as a potential threat to the popularity of organizational interventions that I-O psychologists are hired to design and implement. By investigating potential intervention side effects, one risks learning about problems that might otherwise have remained hidden, problems that could interfere with the marketing of professional services.

These perceived threats due to marketing concerns are not limited to practitioners. Academics who are interested in promoting particular theories, methods, and interventions may also be tempted to ignore side effects due to fear that negative information might detract from the perceived effectiveness of their work. We suggest such fears are shortsighted and could be exacerbating I-O psychology’s marketing problem. Ironically, by ignoring side effects, we may be undermining, rather than enhancing, the perceived value of I-O psychology’s products and services. In medicine, patients have come to expect that all treatments have side effects. In fact, patients would be skeptical if a doctor or commercial failed to mention side effects. Such a patient might wonder, “Does this treatment do anything at all?” Thus, an unintended and happy consequence of advertising side effects is that it may help to signal the potential efficacy of organizational interventions.

Lack of accountability

Sixth, there is relatively little accountability for ensuring the effectiveness of organizational interventions in practice and even less accountability for demonstrating that the benefits of some intervention outweigh the risks. In the United States, every new drug or medical treatment must undergo multiple stages of clinical trials prior to marketing and distribution. There are no such requirements for I-O psychologists, nor are there government agencies that are devoted to the regulation of organizational interventions. Although I-O psychologists tend to hold master’s or doctoral degrees, no such degree, license, or certification is legally required to write “prescriptions” for organizational interventions in most states (Howard & Lowman, 1985). Unlike the fields of medicine or clinical psychology, barriers to entry for becoming a “management consultant” are quite low or in some cases nonexistent. Here we are not equating I-O consultants with management consultants. Rather, we are suggesting that many I-O consultants are indeed engaged in activities similar to those of management consultants (e.g., executive coaching) and that the majority of the public would not know the difference (see the marketing problem).

Although the qualifications of the person providing organizational interventions are largely unregulated, an important caveat is that some consequences of organizational interventions are regulated. For example, in the United States there are legal standards in place to protect individuals from discrimination, including unintentional discrimination (e.g., adverse impact), that is associated with organizational interventions. The side effects of organizational interventions, however, may extend far beyond illegal discrimination. The general lack of accountability may create an environment in which the detection of side effects is viewed as an unnecessary burden on managers, consultants, and researchers.

Resource constraints

Seventh, the side effects that are associated with organizational interventions may be understudied simply because organizations are unwilling to invest the resources that are needed to detect them. For example, evaluations of organizational interventions are frequently skipped in the interest of saving money (Steele et al., 2016). Even if an evaluation of some intervention is conducted, researchers have rarely been trained to consider potential side effects when selecting criteria. Additionally, depending on an I-O psychologist’s specific organization or academic department, access to resources, incentives, and opportunities for conducting and publishing research on side effects can vary widely.

Resource constraints might also open the door to other social forces that inhibit the study of side effects. For example, empirical reports that present a clean and simple picture of effects (see simplification bias) tend to be perceived by reviewers as making a stronger contribution to theory (see theory mania). As a result, these more straightforward pieces have a higher probability of being published. For academics who are on the tenure clock, focusing their limited resources on executing research studies that make clear contributions to the literature is unlikely to promote the discovery of side effects—although such behavior is rational.

In sum, these seven complex social forces may be inhibiting the study of side effects in I-O psychology. When interpreting this summary of forces, one should not assume that each force operates independently. The forces may affect one another or interact to further influence the likelihood that side effects are studied. For example, when divided loyalties are salient, tendencies toward simplification bias might be further amplified in the interest of ignoring information that reflects negatively on the value of some intervention. As another example, when tendencies toward theory mania are combined with a small number of “premier” publication targets (as is the case in many top-ranked management departments), these conditions may increase the tendency to only present results for criteria that are prespecified in extant theories. Such conditions are, unfortunately, not conducive to the study of intervention side effects.

A way forward

It is our view that the science and practice of I-O psychology will benefit from investing more attention toward understanding intervention-associated side effects. Next, we suggest six strategies for advancing knowledge in this domain. Although such strategies are unlikely to solve all of the issues emerging from the complex social forces described previously, the adoption of some of these strategies may help to produce a “mental shift” in how we think about, study, discuss, and monitor side effects as a field.

Ask a (slightly) different question

First, the study and management of side effects might be improved by reframing the research questions, or problem statements, that are used to plan for organizational interventions. One of the most common research question formats that guides intervention research is: “Does [intervention] accomplish [intended result]?” To begin anticipating the side effects associated with organizational interventions, we need only to tweak that question to something like: “If we do [intervention] to accomplish [intended result], it might also affect [unintended result].”Footnote 1

Such reframing of the research question encourages forecasting, that is, the mental simulation of downstream consequences that may be associated with different action possibilities (Byrne et al., 2010). Forecasting may serve two important functions for identifying side effects. The first is that forecasting stimulates the generation of novel ideas about a range of potential side effects. The second function served by forecasting is that of informing idea selection: identifying which potential side effects are worth pursuing. Although established data on side effects will not be available on many interventions, adopting such thinking may encourage practitioners and academics to start asking for and collecting these data.

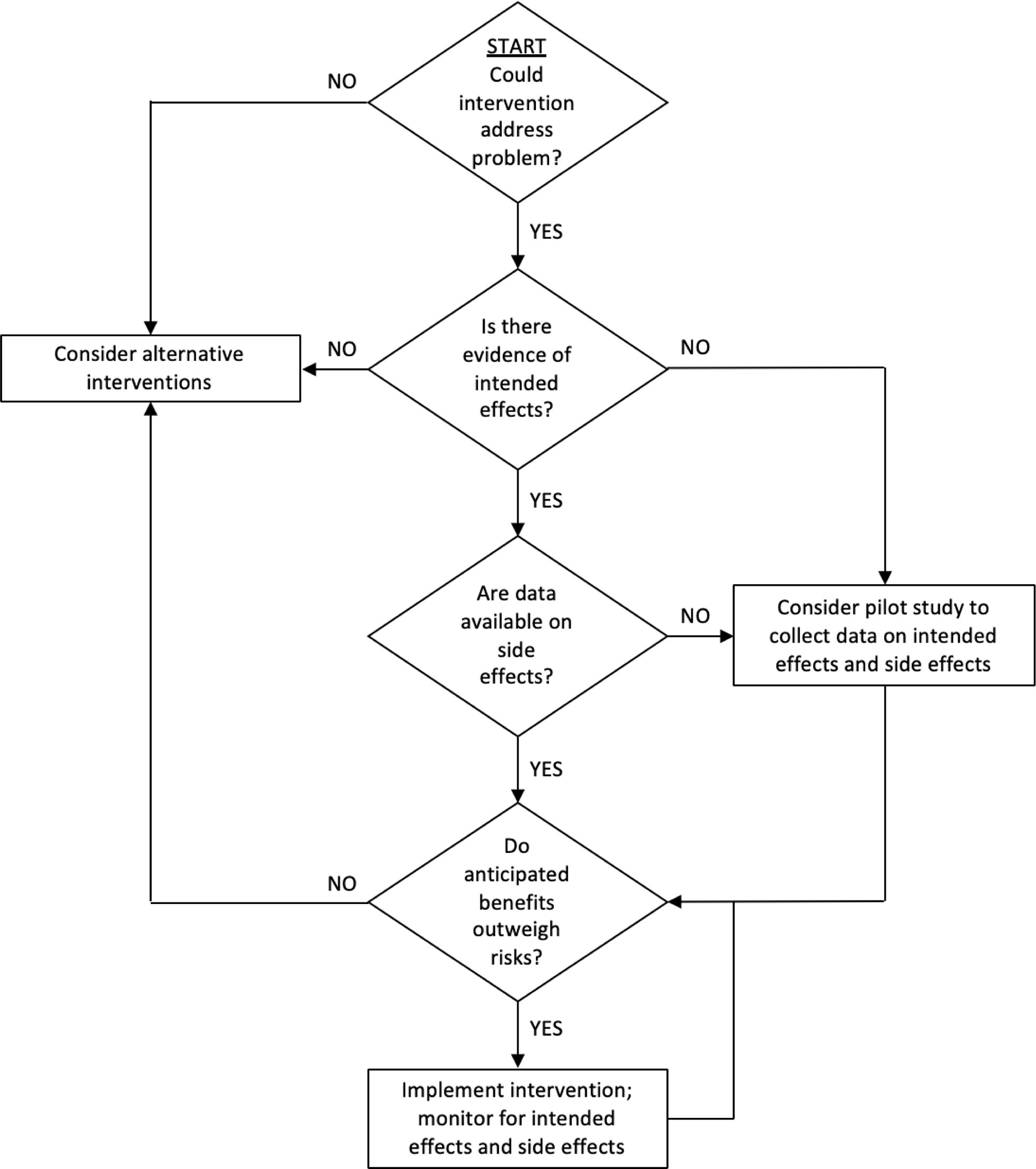

Figure 1 provides a flow chart that illustrates what such a decision sequence might look like if I-O psychologists were to intentionally integrate the consideration of side effects into the planning phases of their research on organizational interventions. In this figure, the diamonds represent decision points and the boxes represent actions from the perspective of the researcher or practitioner. Without intentionally considering the possibility of side effects during the planning stages of interventions, researchers are limited to identifying side effects post hoc—another important strategy for learning about side effects described next.

Figure 1. Accounting for side effects when planning organizational interventions

Develop reporting systems

Once suspected side effects have occurred, some evaluative infrastructure is required to report these adverse events. The Food and Drug Administration (FDA) provides a database (i.e., registry) for storing and monitoring the reports of adverse events associated with medical treatments. Health care providers and consumers can report adverse events that they identify directly to the FDA (e.g., MedWatch Voluntary Reporting Form) or to the manufacturer of the drug, who is then required to report this information to the FDA. Medical researchers are developing sophisticated models and methods for mining large quantities of adverse event reports to discover previously unadvertised side effects as well as harmful interactions among treatments (e.g., Henriksson et al., 2015).

We are not the first to note the value that such an aggregation tool could provide in the organizational sciences (Bosco et al., 2015; Johnson et al., 2010). Such a database could be sponsored and hosted by professional organizations (e.g., Society for Industrial and Organizational Psychology, Academy of Management, Society for HR Management) to encourage broad participation and allow for some standardization in reporting. Of course, many hurdles must be overcome to support the utility of such a database, such as ensuring confidentiality of reports and coming to a consensus on the specific information that should be collected about each event (Macrae, 2016).

Another major challenge in developing any useful database of adverse events is capturing information about not only the organizational intervention and its associated outcomes but also the numerous decisions surrounding how the intervention was implemented. Such information is critical for identifying whether the intervention itself is resulting in side effects or whether unique characteristics of the implementation process or context are responsible for the emergence of side effects. These challenges in data collection aside, some information is better than no information. Such a database could serve as a catalyst for much transparent and collective discovery about intervention-associated side effects in organizations.
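To make the data-collection challenge concrete, the following is a minimal sketch of what a single report in such a database might record. The field names and the helper function are hypothetical and chosen only for illustration; consensus on what should actually be collected has yet to be reached.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AdverseEventReport:
    """One report of a suspected side effect (hypothetical schema)."""
    intervention: str                 # e.g., "goal-setting program"
    observed_event: str               # the suspected side effect
    date_observed: date
    # Decisions about how the intervention was implemented.
    implementation_notes: list[str] = field(default_factory=list)
    # Features of the setting, e.g., {"industry": "retail"}.
    context: dict[str, str] = field(default_factory=dict)
    # Only the reporter's role is stored, to help protect confidentiality.
    reporter_role: str = "unspecified"


def count_reports_by_intervention(reports: list[AdverseEventReport]) -> dict[str, int]:
    """Tally how many reports have been filed for each intervention."""
    counts: dict[str, int] = {}
    for report in reports:
        counts[report.intervention] = counts.get(report.intervention, 0) + 1
    return counts
```

Keeping implementation notes and context alongside the event itself is what would later allow an analyst to ask whether a side effect follows the intervention or the way it was carried out.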

Incentivize research

Providing incentives is another strategy for motivating research on intervention side effects. For example, journal editors can encourage research on side effects in particular topic areas via special issues that are devoted to the subject. Along these lines, new journals might be established that are broadly devoted to the discovery of side effects in the organizational sciences. Such a journal could serve as a natural home for inductive research on side effects that might not align with the deductive orientations of most top journals in the field. This new journal could also serve as a home for practitioner-driven, quasi-experimental research that tends to be underrepresented in most top journals (Grant & Wall, 2009).

Consulting firms might also contribute to research on side effects by marketing the benefits of such discovery to clients. After all, not all unintended consequences are negative (Merton, 1936). Moreover, demonstrating an ongoing concern for protecting clients from potential side effects sends a number of positive signals about a service provider. Such practices can signal openness, transparency, and trust, the elements needed to build lasting client relationships. Potential strategies for collecting information about side effects include administering a battery of qualitative and quantitative postintervention measures (e.g., out-take forms), using process evaluation measures (Nielsen & Randall, 2013), and providing contact information for collecting reports of adverse events.

Establish effect-size guidelines

In the field of medicine, some drugs require prescriptions, whereas others are administered over the counter. This decision is enforced by the FDA based on a number of factors, including the frequency and severity of side effects associated with the drug as well as the potential for abuse. A similar model might be used to classify higher risk and lower risk organizational interventions.

The dimensions of frequency, intensity, and confidence can be mapped for each organizational intervention to classify its “risk level.” Frequency refers to the proportion of individuals or groups who report a particular side effect within a defined window of time following an organizational intervention. Intensity refers to the severity of the intervention-associated side effects. Finally, confidence refers to the strength of evidence available that supports the conclusion that a side effect is associated with a particular intervention. Confidence is stronger when studies that identify side effects employ more rigorous designs and when many studies are available that converge on similar conclusions.

Once an organizational intervention has become classified as higher risk, it becomes increasingly important to advertise these risks to potential consumers. In this case, consumers refer to managers and employees in client organizations. A similar system may be useful for interpreting the risk and likelihood of success of organizational interventions. Research is needed to understand the usefulness of this approach in an organizational context and to establish potential cutoffs for frequency, intensity, and confidence needed to classify an organizational intervention as higher or lower risk. Such information would clearly be useful to practitioners and researchers during the planning stages of organizational interventions, particularly when making decisions that involve weighing anticipated benefits with risks (see Figure 1).
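As a rough sketch of how the three dimensions could be combined into a classification, consider the example below. Everything in it is an assumption made for illustration: the 0-to-1 scales, the cutoff values, the decision rule, and the names `SideEffectEvidence` and `classify_risk`. Actual cutoffs would need to be established through the research called for above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SideEffectEvidence:
    """Evidence summary for one side effect of one intervention (hypothetical)."""
    frequency: float   # proportion of individuals or groups reporting the effect
    intensity: float   # severity, on an assumed 0-to-1 scale
    confidence: float  # strength of evidence, on an assumed 0-to-1 scale


# Placeholder cutoffs for illustration only; not empirically derived.
FREQUENCY_CUTOFF = 0.10
INTENSITY_CUTOFF = 0.50
CONFIDENCE_CUTOFF = 0.60


def classify_risk(evidence: SideEffectEvidence) -> str:
    """Return 'higher risk', 'lower risk', or 'insufficient evidence'."""
    for value in (evidence.frequency, evidence.intensity, evidence.confidence):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all dimensions must lie between 0 and 1")
    # Without enough confidence in the evidence, no risk label is assigned.
    if evidence.confidence < CONFIDENCE_CUTOFF:
        return "insufficient evidence"
    # Either a frequent or a severe side effect is enough to flag higher risk.
    if evidence.frequency >= FREQUENCY_CUTOFF or evidence.intensity >= INTENSITY_CUTOFF:
        return "higher risk"
    return "lower risk"
```

Treating low confidence as its own category, rather than as lower risk, reflects one possible design choice: an absence of strong evidence is not the same as evidence of safety.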

Advertise known side effects

In medicine, every drug comes with a warning label. Any form of advertising is legally required to list the side effects associated with the drug. The goal of advertising side effects is to educate consumers about the known risks associated with organizational interventions. Advertising allows these consumers to make informed decisions about whether the benefits outweigh the costs, as well as consider a range of strategies for mitigating or monitoring side effects as they emerge.

One approach to advertising side effects is to present aggregated information about side effects on a publicly accessible website. WebMD is one such website where patients and health care professionals can look up the side effects that are associated with any common medical treatment. What if there were a WebMD of available organizational interventions where managers, human resources professionals, and organizational consultants could research the side effects that are associated with an intervention prior to recommendation or approval? Of course, all tools have the potential to be misused (e.g., greater potential for misdiagnoses by nonexperts referencing WebMD). Nevertheless, once we are able to reliably assess the side effects that are associated with organizational interventions, it will become increasingly important to advertise these potential risks to consumers.

Educate future researchers

For broad changes such as these to occur within the field of I-O psychology, it is important to educate future organizational researchers in the concepts, methods, and norms that support these changes. Education about side effects can be integrated into special topics courses that tend to focus on organizational interventions (e.g., training and development, personnel selection, motivation, leadership, groups and teams, occupational health psychology) as well as research methods courses that are staples within all graduate programs in the field.

Along these lines, developing a robust understanding of moderators (what they are, and how they set boundary conditions for interpreting cause-and-effect relationships) is critical to advancing and interpreting knowledge on side effects.Footnote 2 Although some side effects may emerge as main effects of organizational interventions, others may only appear under specific conditions that are associated with a particular organizational intervention such as how the intervention was implemented, the climate in which implementation occurred, or the unique characteristics of the individuals or groups that were targeted (e.g., Wolfson et al., 2019). To improve awareness about side effects, graduate students in I-O psychology can benefit from being taught not only what moderator effects are but also how to explain these effects intuitively to lay audiences. In sum, learning to anticipate, monitor, and report side effects, as well as identify the conditions in which these effects are most likely to occur, could be considered a fundamental skill for future practitioners and academics alike.
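For readers less familiar with moderation, a minimal sketch is the standard regression model with an interaction term. The notation is assumed for illustration: $X$ indicates the intervention, $M$ is a candidate moderator (e.g., implementation climate), $Y$ is a potential side effect, and $e$ is an error term.

$$Y = b_0 + b_1 X + b_2 M + b_3 X M + e$$

Under this model, the effect of the intervention on the side effect is $b_1 + b_3 M$, which depends on the level of the moderator. A side effect that is negligible at one level of $M$ may therefore be substantial at another, which is the sense in which moderators set boundary conditions.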

Conclusion

The arguments we put forth here should be interpreted in the context of two limitations. First, the empirical literature on intervention-associated side effects in organizations is sparse for many topic areas. As a result, we summarize in narrative form, rather than quantify, a handful of examples pertaining to intervention-associated side effects in organizations. One of our initial goals when writing this paper was to identify a taxonomy of the types of side effects that are associated with organizational interventions. However, we found that the current state of knowledge on the topic is simply not advanced enough to put forth such a taxonomy. We need future research that establishes major types of side effects, their frequency and severity, and the conditions under which these unintended consequences are most likely to emerge.

Second, this paper is a perspective piece, meaning that we are arguing for a particular set of opinions based on our observations of the literature as well as our personal experiences in conducting research and implementing organizational interventions. Although we have heard firsthand from senior academics and practitioners that these ideas warrant greater discussion in the field, it is unclear how widely shared this perspective may be. Future research that surveys professionals who study, design, and implement organizational interventions concerning their views about side effects may prove fruitful for understanding broader opinions on the topic.

Bearing these limitations in mind, the arguments presented here lead to some noteworthy conclusions. Given I-O psychology’s broad influence, it is no longer acceptable to ignore the side effects of organizational interventions. Perhaps not all, but certainly a great many, side effects may be reliably measured and predicted. As Robert Merton (1936) proposed nearly a century ago, unintended consequences need not remain unanticipated.

The benefits of advancing our knowledge of side effects are clear. By developing more accurate expectations about when and why side effects are associated with organizational interventions, we can introduce more sophisticated interventions that eliminate, reduce, compensate for, or at the very least monitor potential negative outcomes while boosting the probability of positive outcomes. In addition, these procedures may result in the detection of unintended positive outcomes associated with organizational interventions.

The road will not be easy. A number of social forces appear to be working against the collective discovery of side effects. Nevertheless, a number of efforts have already proven successful, allowing for some optimism. In conclusion, we believe that adopting a more intentional and systematic approach to studying, monitoring, and advertising side effects is vital to the future of I-O psychology as well as the well-being of the organizations and individuals that I-O psychologists serve. We hope this piece helps to stimulate productive discussion within the field along these lines.