1 Introduction

Modelling plasma dynamics typically involves operating with classical fields on fine grids. This requires dealing with large amounts of data, especially in kinetic models, which are notorious for being computationally expensive. Quantum computing (QC) has the potential to significantly speed up kinetic simulations by leveraging quantum superposition and entanglement (see Nielsen & Chuang Reference Nielsen and Chuang2010). However, quantum speedup is possible only if the depth of a quantum circuit modelling the plasma dynamics scales advantageously (polylogarithmically) with the system size (number of grid cells). Achieving such efficient encoding is challenging and remains an open problem for most plasma systems of practical interest.

Here, we explore the possibility of an efficient quantum algorithm for modelling of linear oscillations and waves in a Vlasov plasma (see Stix Reference Stix1992). Previous works in this area focused on modelling either spatially monochromatic or conservative waves within initial-value problems (see Engel, Smith & Parker Reference Engel, Smith and Parker2019; Ameri et al. Reference Ameri, Ye, Cappellaro, Krovi and Loureiro2023; Toyoizumi, Yamamoto & Hoshino Reference Toyoizumi, Yamamoto and Hoshino2023). However, typical practical applications (for example, for magnetic confinement fusion) require modelling of inhomogeneous dissipative waves within boundary-value problems, which require different approaches. Here, we consider a minimal problem of this kind to develop a prototype algorithm that would be potentially extendable to such practical applications.

Specifically, we assume a one-dimensional collisionless Maxwellian electron plasma governed by the linearized Vlasov–Ampère system. Added to this system is a spatially localized external current (antenna) that drives plasma oscillations at a fixed frequency $\omega$![]() . This source produces evanescent waves if $\omega$

. This source produces evanescent waves if $\omega$![]() is smaller than the plasma frequency $\omega _p$

is smaller than the plasma frequency $\omega _p$![]() . For $\omega > \omega _p$

. For $\omega > \omega _p$![]() , it produces Langmuir waves, which propagate away from the source while experiencing Landau damping along the way. Outgoing boundary conditions are adopted to introduce irreversible dissipation. This relatively simple system captures paradigmatic dynamics typical for linear kinetic plasma problems and, thus, can serve as a testbed for developing more general algorithms of practical interest. We start by showing how this system can be cast in the form of a linear vector equationFootnote 1

, it produces Langmuir waves, which propagate away from the source while experiencing Landau damping along the way. Outgoing boundary conditions are adopted to introduce irreversible dissipation. This relatively simple system captures paradigmatic dynamics typical for linear kinetic plasma problems and, thus, can serve as a testbed for developing more general algorithms of practical interest. We start by showing how this system can be cast in the form of a linear vector equationFootnote 1

where $\boldsymbol {A}$![]() is a (non-Hermitian) square matrix, ${\boldsymbol{\psi} }$

is a (non-Hermitian) square matrix, ${\boldsymbol{\psi} }$![]() is a vector that represents the dynamical variables (the electric field and the distribution function) on a grid and $\boldsymbol {b}$

is a vector that represents the dynamical variables (the electric field and the distribution function) on a grid and $\boldsymbol {b}$![]() describes the antenna. There is a variety of quantum algorithms that can solve equations like (1.1), for example, the Harrow–Hassidim–Lloyd algorithms (see Harrow, Hassidim & Lloyd Reference Harrow, Hassidim and Lloyd2009), the Ambainis algorithm (see Ambainis Reference Ambainis2012), the algorithms inspired by the adiabatic QC (see Costa et al. Reference Costa, An, Sanders, Su, Babbush and Berry2021; Jennings et al. Reference Jennings, Lostaglio, Pallister, Sornborger and Subaşı2023) and solvers based on the quantum signal processing (QSP) (see Low & Chuang Reference Low and Chuang2017, Reference Low and Chuang2019; Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021), to name a few. Here, we propose to use a method based on the QSP, specifically, the quantum singular value transformation (QSVT) (see Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021), because it is known to scale near optimally with the condition number of $\boldsymbol {A}$

describes the antenna. There is a variety of quantum algorithms that can solve equations like (1.1), for example, the Harrow–Hassidim–Lloyd algorithms (see Harrow, Hassidim & Lloyd Reference Harrow, Hassidim and Lloyd2009), the Ambainis algorithm (see Ambainis Reference Ambainis2012), the algorithms inspired by the adiabatic QC (see Costa et al. Reference Costa, An, Sanders, Su, Babbush and Berry2021; Jennings et al. Reference Jennings, Lostaglio, Pallister, Sornborger and Subaşı2023) and solvers based on the quantum signal processing (QSP) (see Low & Chuang Reference Low and Chuang2017, Reference Low and Chuang2019; Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021), to name a few. Here, we propose to use a method based on the QSP, specifically, the quantum singular value transformation (QSVT) (see Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021), because it is known to scale near optimally with the condition number of $\boldsymbol {A}$![]() and the desired precision.

and the desired precision.

Specifically, this paper focuses on the problem that one unavoidably has to overcome when applying the QSVT to kinetic plasma simulations. This problem is how to encode the corresponding large-dimensional matrix $\boldsymbol {A}$![]() into a quantum circuit. A direct encoding of this matrix is prohibitively inefficient. Various methods for encoding matrices into quantum circuits were developed recently (see Clader et al. Reference Clader, Dalzell, Stamatopoulos, Salton, Berta and Zeng2022; Camps et al. Reference Camps, Lin, Beeumen and Yang2023; Zhang & Yuan Reference Zhang and Yuan2023; Kuklinski & Rempfer Reference Kuklinski and Rempfer2024; Lapworth Reference Lapworth2024; Liu et al. Reference Liu, Du, Lin, Vary and Yang2024; Sünderhauf, Campbell & Camps Reference Sünderhauf, Campbell and Camps2024). We propose how to make it more efficiently by compressing the content of $\boldsymbol {A}$

into a quantum circuit. A direct encoding of this matrix is prohibitively inefficient. Various methods for encoding matrices into quantum circuits were developed recently (see Clader et al. Reference Clader, Dalzell, Stamatopoulos, Salton, Berta and Zeng2022; Camps et al. Reference Camps, Lin, Beeumen and Yang2023; Zhang & Yuan Reference Zhang and Yuan2023; Kuklinski & Rempfer Reference Kuklinski and Rempfer2024; Lapworth Reference Lapworth2024; Liu et al. Reference Liu, Du, Lin, Vary and Yang2024; Sünderhauf, Campbell & Camps Reference Sünderhauf, Campbell and Camps2024). We propose how to make it more efficiently by compressing the content of $\boldsymbol {A}$![]() at encoding. The same technique can be applied in modelling kinetic or fluid plasma and electromagnetic waves in higher-dimensional problems, so our results can be used as a stepping stone towards developing more practical algorithms in the future. The presentation of the rest of the algorithm and (emulation of) quantum simulations are left to future work.

at encoding. The same technique can be applied in modelling kinetic or fluid plasma and electromagnetic waves in higher-dimensional problems, so our results can be used as a stepping stone towards developing more practical algorithms in the future. The presentation of the rest of the algorithm and (emulation of) quantum simulations are left to future work.

Our paper is organized as follows. In § 2 we present the main equations of the electrostatic kinetic plasma problem. In § 3 we present the numerical discretization of this model. In § 4 we cast the discretized equations into the form (1.1). In § 5 we present classical numerical simulations of the resulting system, which can be used for benchmarking quantum algorithms. In § 6 we present the general strategy for encoding the matrix $\boldsymbol {A}$![]() into a quantum circuit. In § 7 we explicitly construct the corresponding oracle and discuss how it scales with the parameters of the problem. In § 8 we present a schematic circuit of the quantum algorithm based on this oracle. In § 9 we present our main conclusions.

into a quantum circuit. In § 7 we explicitly construct the corresponding oracle and discuss how it scales with the parameters of the problem. In § 8 we present a schematic circuit of the quantum algorithm based on this oracle. In § 9 we present our main conclusions.

2 Model

2.1 One-dimensional Vlasov–Ampère system

Electrostatic oscillations of a one-dimensional electron plasma can be described by the Vlasov–Ampère system

where $E(t, x)$![]() is the electric field, $f(t, x, v)$

is the electric field, $f(t, x, v)$![]() is the electron probability distribution, $t$

is the electron probability distribution, $t$![]() is time, $x$

is time, $x$![]() is the coordinate in the physical space, $v$

is the coordinate in the physical space, $v$![]() is the coordinate in the velocity space, $e > 0$

is the coordinate in the velocity space, $e > 0$![]() is the elementary charge and $m_e$

is the elementary charge and $m_e$![]() is the electron mass. We have also introduced a fixed source term $\mathcal {F} = \mathcal {F}(x, v)$

is the electron mass. We have also introduced a fixed source term $\mathcal {F} = \mathcal {F}(x, v)$![]() (balanced by particle losses through the plasma boundaries), which is explained below. The term $j^{(S)}$

(balanced by particle losses through the plasma boundaries), which is explained below. The term $j^{(S)}$![]() represents a prescribed source current that drives plasma oscillations. The corresponding source charge density $\rho ^{(S)}$

represents a prescribed source current that drives plasma oscillations. The corresponding source charge density $\rho ^{(S)}$![]() can be inferred from $j^{(S)}$

can be inferred from $j^{(S)}$![]() using the charge conservation law

using the charge conservation law

Let us split the electron distribution into the background distribution $F$![]() and a perturbation $g$

and a perturbation $g$![]() :

:

We assume that $g$![]() is small, so the system can be linearized in $g$

is small, so the system can be linearized in $g$![]() . Also, because we assume a neutral plasma, the system does not have a background electric field, so the stationary background distribution satisfies $v\partial _x F = \mathcal {F}$

. Also, because we assume a neutral plasma, the system does not have a background electric field, so the stationary background distribution satisfies $v\partial _x F = \mathcal {F}$![]() . Provided that $\mathcal {F}$

. Provided that $\mathcal {F}$![]() depends on $x$

depends on $x$![]() , $F$

, $F$![]() can be spatially inhomogeneous. At the same time, since $\mathcal {F}$

can be spatially inhomogeneous. At the same time, since $\mathcal {F}$![]() is fixed, it does not enter the equation for $g$

is fixed, it does not enter the equation for $g$![]() . This leads to the following linearized equations:Footnote 2

. This leads to the following linearized equations:Footnote 2

Here, $v$![]() is normalized to $v_{\rm th}$

is normalized to $v_{\rm th}$![]() , $x$

, $x$![]() is normalized to the electron Debye length $\lambda _D$

is normalized to the electron Debye length $\lambda _D$![]() and the time is normalized to the inverse electron plasma frequency $\omega _{p}^{-1}$

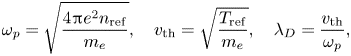

and the time is normalized to the inverse electron plasma frequency $\omega _{p}^{-1}$![]() , where

, where

where $n_{\rm ref}$![]() and $T_{\rm ref}$

and $T_{\rm ref}$![]() are some fixed values of the electron density and temperature, respectively. The distribution functions $g$

are some fixed values of the electron density and temperature, respectively. The distribution functions $g$![]() and $F$

and $F$![]() are normalized to $n_{\rm ref}/v_{\rm th}$

are normalized to $n_{\rm ref}/v_{\rm th}$![]() , and $E$

, and $E$![]() is normalized to $T_{\rm ref}/(e\lambda _D)$

is normalized to $T_{\rm ref}/(e\lambda _D)$![]() . We also assume that $F$

. We also assume that $F$![]() is Maxwellian, i.e.

is Maxwellian, i.e.

where the background density $n$![]() and temperature $T$

and temperature $T$![]() are normalized to $n_{\rm ref}$

are normalized to $n_{\rm ref}$![]() and $T_{\rm ref}$

and $T_{\rm ref}$![]() , respectively. An analytical description of the system (2.4) is presented in Appendix A.

, respectively. An analytical description of the system (2.4) is presented in Appendix A.

2.2 Boundary-value problem

To reformulate (2.4) as a boundary-value problem, we consider a source oscillating at a constant real frequency $\omega _0$![]() :

:

Assuming $g, E \propto \exp (-\mathrm {i}\omega _0 t)$![]() , one can recast (2.4) as

, one can recast (2.4) as

where $H = F/T$![]() and the variables $g$

and the variables $g$![]() and $E$

and $E$![]() are now considered as the corresponding time-independent complex amplitudes. For definiteness, we impose a localized current source:

are now considered as the corresponding time-independent complex amplitudes. For definiteness, we impose a localized current source:

Then, by (2.2), the corresponding charge density is that of an oscillating dipole:

We will be interested in the spatial distribution of the electric field $E(x)$![]() driven by the source $j^{(S)}$

driven by the source $j^{(S)}$![]() and undergoing linear Landau damping caused by interaction of this field with the distribution perturbation $g$

and undergoing linear Landau damping caused by interaction of this field with the distribution perturbation $g$![]() .

.

To avoid numerical artifacts and keep the grid resolution reasonably low, we impose an artificial diffusivity $\eta$![]() in the velocity space by modifying (2.8a) as

in the velocity space by modifying (2.8a) as

This allows us to reduce the grid resolution in both velocity and real space.

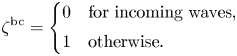

To avoid numerical errors caused by the waves reflected from spatial boundaries, we impose outgoing (non-reflecting) boundary conditions on both edges (see Thompson Reference Thompson1987). The resulting system is

where

In our case of a one-dimensional system, the incoming waves correspond to $v < 0$![]() at the right spatial boundary of the simulation box and $v > 0$

at the right spatial boundary of the simulation box and $v > 0$![]() at the left spatial boundary.

at the left spatial boundary.

3 Discretization

To discretize (2.12), we introduce the spatial grid

and the velocity grid

Here, $q_x = N_x-1$![]() and $q_v = N_v-1$

and $q_v = N_v-1$![]() , where

, where

For convenience, we also introduce the integer $M_v = 2^{n_v-1}$![]() . The first $M_v$

. The first $M_v$![]() points on the velocity grid, $k=[0,M_v)$

points on the velocity grid, $k=[0,M_v)$![]() , correspond to $v_k < 0$

, correspond to $v_k < 0$![]() , and the last $M_v$

, and the last $M_v$![]() points, $k=[M_v,N_v)$

points, $k=[M_v,N_v)$![]() , correspond to $v_k > 0$

, correspond to $v_k > 0$![]() . The notation $[k_1,k_2)$

. The notation $[k_1,k_2)$![]() , where $k_1$

, where $k_1$![]() and $k_2$

and $k_2$![]() are integers, denotes the set of all integers from $k_1$

are integers, denotes the set of all integers from $k_1$![]() to $k_2$

to $k_2$![]() , including $k_1$

, including $k_1$![]() but excluding $k_2$

but excluding $k_2$![]() . Similarly, the notation $[k_1,k_2]$

. Similarly, the notation $[k_1,k_2]$![]() denotes the set of integers from $k_1$

denotes the set of integers from $k_1$![]() to $k_2$

to $k_2$![]() , including both $k_1$

, including both $k_1$![]() and $k_2$

and $k_2$![]() . Also, throughout this paper, the discretized version of any given function $y(x,v)$

. Also, throughout this paper, the discretized version of any given function $y(x,v)$![]() is denoted as $y_{j,k}$

is denoted as $y_{j,k}$![]() , where the first subindex is the spatial-grid index and the second subindex is the velocity-grid index

, where the first subindex is the spatial-grid index and the second subindex is the velocity-grid index

The integral in the velocity space is computed by using the corresponding Riemann sum, $\int y(v)\,{\rm d} v = \sum _k y(v_k)\Delta v$![]() . To remove $\Delta v$

. To remove $\Delta v$![]() from discretized equations, we renormalize the distribution functions as

from discretized equations, we renormalize the distribution functions as

In real space, we use the central finite difference scheme

where $\sigma = (2h)^{-1}$![]() , $j = [1,N_x-2]$

, $j = [1,N_x-2]$![]() , $k=[0,N_v)$

, $k=[0,N_v)$![]() and the expressions for the derivatives at the boundaries are obtained by considering the Lagrange interpolating polynomial of the second order. (Instead of using the diffusivity $\eta$

and the expressions for the derivatives at the boundaries are obtained by considering the Lagrange interpolating polynomial of the second order. (Instead of using the diffusivity $\eta$![]() introduced in (2.11) to smooth high-frequency oscillations in phase space, it might be possible to apply the upwinding difference scheme that is often used for the discretization of convective equations; see, for example, Brio & Wu Reference Brio and Wu1988.) Similarly, the second derivative with respect to velocity is discretized as

introduced in (2.11) to smooth high-frequency oscillations in phase space, it might be possible to apply the upwinding difference scheme that is often used for the discretization of convective equations; see, for example, Brio & Wu Reference Brio and Wu1988.) Similarly, the second derivative with respect to velocity is discretized as

where $\beta = \Delta v^{-2}$![]() , $j = [0,N_x)$

, $j = [0,N_x)$![]() , $k=[1,N_v-2]$

, $k=[1,N_v-2]$![]() and the derivatives at the boundaries are obtained by considering the Lagrange polynomial of the third order.

and the derivatives at the boundaries are obtained by considering the Lagrange polynomial of the third order.

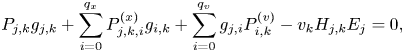

After the discretization, the Vlasov equation (2.12a) becomes

where $j = [0,N_x)$![]() and $k=[0,N_v)$

and $k=[0,N_v)$![]() . The function $P_{j,k}$

. The function $P_{j,k}$![]() is given by

is given by

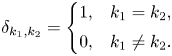

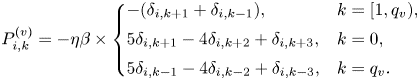

where $\delta _{k_1,k_2}$![]() is the Kronecker delta:

is the Kronecker delta:

The function $p^{\rm sign}_k = 1 - 2(\delta _{k,0}+\delta _{k,q_v})$![]() appears because the discretization (3.6) in velocity results in different signs in front of the diagonal element $g_{j,k}$

appears because the discretization (3.6) in velocity results in different signs in front of the diagonal element $g_{j,k}$![]() for bulk and boundary velocity elements. The coefficient $(\delta _{j,0}-\delta _{j,q_x})$

for bulk and boundary velocity elements. The coefficient $(\delta _{j,0}-\delta _{j,q_x})$![]() is necessary to take into account the different signs that appear due to the discretization in space (3.5) at bulk and boundary points. The function $\zeta ^{\rm bc}_{j,k}$

is necessary to take into account the different signs that appear due to the discretization in space (3.5) at bulk and boundary points. The function $\zeta ^{\rm bc}_{j,k}$![]() is the discretized version of the function (2.13) responsible for the outgoing boundary conditions:

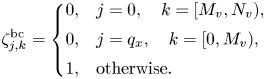

is the discretized version of the function (2.13) responsible for the outgoing boundary conditions:

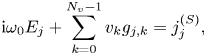

The function $P^{(x)}_{j,k,i}$![]() varies for bulk and boundary spatial points according to (3.5):

varies for bulk and boundary spatial points according to (3.5):

The function $P^{(v)}_{i,k}$![]() varies for bulk and boundary velocity points according to (3.6):

varies for bulk and boundary velocity points according to (3.6):

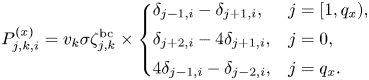

Finally, Ampère's law (2.12b) is recasted as

where $j=[0,N_x)$![]() .

.

4 Matrix representation

After the discretization, (3.7) and (3.13) can be converted into the form of (1.1) as follows.

4.1 The vector ${\psi }$

First of all, to construct (1.1), one needs to encode $g_{j,k}$![]() and $E_j$

and $E_j$![]() into ${\boldsymbol{\psi} }$

into ${\boldsymbol{\psi} }$![]() . Since one needs to store $N_f = 2$

. Since one needs to store $N_f = 2$![]() fields on a $N_x\times N_v$

fields on a $N_x\times N_v$![]() phase space, the size of ${\boldsymbol{\psi} }$

phase space, the size of ${\boldsymbol{\psi} }$![]() should be $N_{\rm tot} = N_f N_{xv}$

should be $N_{\rm tot} = N_f N_{xv}$![]() , where $N_{xv} = N_x N_v$

, where $N_{xv} = N_x N_v$![]() , and this vector can be saved by using $1+n_x+n_v$

, and this vector can be saved by using $1+n_x+n_v$![]() qubits, where $n_x$

qubits, where $n_x$![]() and $n_v$

and $n_v$![]() have been introduced in (3.3a,b). Within the vector ${\boldsymbol{\psi} }$

have been introduced in (3.3a,b). Within the vector ${\boldsymbol{\psi} }$![]() , the fields are arranged in the following way:

, the fields are arranged in the following way:

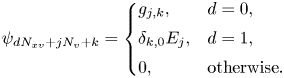

Here $\psi _{d N_{xv} + j N_v + k}$![]() are the elements of ${\boldsymbol{\psi} }$

are the elements of ${\boldsymbol{\psi} }$![]() with $j = [0, N_x)$

with $j = [0, N_x)$![]() , $k = [0,N_v)$

, $k = [0,N_v)$![]() and $d = [0, N_f)$

and $d = [0, N_f)$![]() . Since the electric field does not depend on velocity, the second half of ${\boldsymbol{\psi} }$

. Since the electric field does not depend on velocity, the second half of ${\boldsymbol{\psi} }$![]() is filled with $E_j$

is filled with $E_j$![]() only if the velocity index $k$

only if the velocity index $k$![]() is equal to zero.

is equal to zero.

To address each field, $g_{j,k}$![]() or $E_j$

or $E_j$![]() , we introduce the register $r_f$

, we introduce the register $r_f$![]() with one qubit. The zero state $\mid {0}\rangle_{r_f}$

with one qubit. The zero state $\mid {0}\rangle_{r_f}$![]() corresponds to addressing the plasma distribution function, and the unit state $\mid {1}\rangle_{r_f}$

corresponds to addressing the plasma distribution function, and the unit state $\mid {1}\rangle_{r_f}$![]() flags the electric field. We also use additional two registers, denoted $r_x$

flags the electric field. We also use additional two registers, denoted $r_x$![]() and $r_v$

and $r_v$![]() , with $n_x$

, with $n_x$![]() and $n_v$

and $n_v$![]() qubits, respectively, to specify the fields’ position in the real and velocity spaces, correspondingly. Then, one can express the vector ${\boldsymbol{\psi} }$

qubits, respectively, to specify the fields’ position in the real and velocity spaces, correspondingly. Then, one can express the vector ${\boldsymbol{\psi} }$![]() as

as

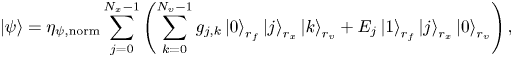

where $\eta _{\psi, {\rm norm}}$![]() is the normalization factor used to ensure that $\langle \psi |\psi \rangle = 1$

is the normalization factor used to ensure that $\langle \psi |\psi \rangle = 1$![]() . (The assumed notation is such that the least significant qubit is the rightmost qubit. In quantum circuits, the least significant qubit is the lowest one.) Notably, this encoding can be called half-analogue, since the electric field and the electron distribution function are encoded into the amplitudes of the quantum state, which is a continuous variable. However, positions in phase space are discretized and digital since they are encoded into bitstrings of the quantum states.

. (The assumed notation is such that the least significant qubit is the rightmost qubit. In quantum circuits, the least significant qubit is the lowest one.) Notably, this encoding can be called half-analogue, since the electric field and the electron distribution function are encoded into the amplitudes of the quantum state, which is a continuous variable. However, positions in phase space are discretized and digital since they are encoded into bitstrings of the quantum states.

4.2 The source term

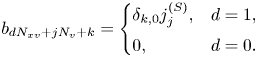

Corresponding to (4.1), the discretized version of the vector $\boldsymbol {b}$![]() in (1.1) is as follows:

in (1.1) is as follows:

Note that all elements in the first half of $\boldsymbol {b}$![]() are zero, and, in its second half, only every $N_v$

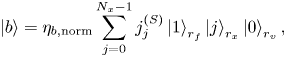

are zero, and, in its second half, only every $N_v$![]() th element is non-zero. In the ‘ket’ notation, this can be written as

th element is non-zero. In the ‘ket’ notation, this can be written as

where $\eta _{b,{\rm norm}}$![]() is the normalization factor used to ensure that $\langle b|b \rangle = 1$

is the normalization factor used to ensure that $\langle b|b \rangle = 1$![]() .

.

4.3 The matrix ${A}$

The corresponding $N_{\rm tot}\times N_{\rm tot}$![]() matrix $\boldsymbol {A}$

matrix $\boldsymbol {A}$![]() is represented as

is represented as

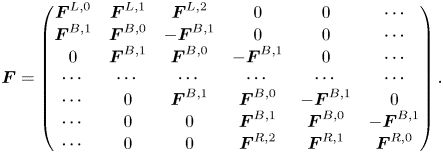

where $\boldsymbol {F}$![]() , $\boldsymbol {C}^E$

, $\boldsymbol {C}^E$![]() , $\boldsymbol {C}^f$

, $\boldsymbol {C}^f$![]() and $\boldsymbol {S}$

and $\boldsymbol {S}$![]() are $N_{xv}\times N_{xv}$

are $N_{xv}\times N_{xv}$![]() submatrices. A schematic structure of this matrix is shown in figure 1. The submatrix $\boldsymbol {F}$

submatrices. A schematic structure of this matrix is shown in figure 1. The submatrix $\boldsymbol {F}$![]() encodes the coefficients in front of $g$

encodes the coefficients in front of $g$![]() in the Vlasov equation (3.7) and is given by

in the Vlasov equation (3.7) and is given by

This submatrix consists of $N_x^2$![]() blocks, whose elements are mostly zeros. Each block is of size $N_v\times N_v$

blocks, whose elements are mostly zeros. Each block is of size $N_v\times N_v$![]() , and the position of each block's elements is determined by the row index $k_r = [0, N_v)$

, and the position of each block's elements is determined by the row index $k_r = [0, N_v)$![]() and the column index $k_c = [0, N_v)$

and the column index $k_c = [0, N_v)$![]() . The discretization at the left spatial boundary is described by the blocks $\boldsymbol {F}^{L,0}$

. The discretization at the left spatial boundary is described by the blocks $\boldsymbol {F}^{L,0}$![]() , $\boldsymbol {F}^{L,1}$

, $\boldsymbol {F}^{L,1}$![]() and $\boldsymbol {F}^{L,2}$

and $\boldsymbol {F}^{L,2}$![]() . The discretization at the right spatial boundary is described by the blocks $\boldsymbol {F}^{R,0}$

. The discretization at the right spatial boundary is described by the blocks $\boldsymbol {F}^{R,0}$![]() , $\boldsymbol {F}^{R,1}$

, $\boldsymbol {F}^{R,1}$![]() and $\boldsymbol {F}^{R,2}$

and $\boldsymbol {F}^{R,2}$![]() . The discretization at the bulk spatial points is described by the blocks $\boldsymbol {F}^{B,0}$

. The discretization at the bulk spatial points is described by the blocks $\boldsymbol {F}^{B,0}$![]() and $\boldsymbol {F}^{B,1}$

and $\boldsymbol {F}^{B,1}$![]() . Using (3.8), (3.11) and (3.12), one obtains the following expressions for the elements in each block in (4.6) at the left spatial boundary:

. Using (3.8), (3.11) and (3.12), one obtains the following expressions for the elements in each block in (4.6) at the left spatial boundary:

At the right spatial boundary,

and at bulk spatial points,

We denote the part of the submatrix $\boldsymbol {F}$![]() that depends on $v$

that depends on $v$![]() as $\tilde {\boldsymbol {F}}$

as $\tilde {\boldsymbol {F}}$![]() . The matrix elements of $\tilde {\boldsymbol {F}}$

. The matrix elements of $\tilde {\boldsymbol {F}}$![]() are indicated in figure 1 with red. The part of $\boldsymbol {F}$

are indicated in figure 1 with red. The part of $\boldsymbol {F}$![]() that does not depend on $v$

that does not depend on $v$![]() is denoted as $\hat {\boldsymbol {F}}$

is denoted as $\hat {\boldsymbol {F}}$![]() and shown in blue in figure 1 within the submatrix $\boldsymbol {F}$

and shown in blue in figure 1 within the submatrix $\boldsymbol {F}$![]() .

.

The matrix $\boldsymbol {C}^E$![]() is block diagonal and encodes the coefficients in front of $E$

is block diagonal and encodes the coefficients in front of $E$![]() in the Vlasov equation (3.7):

in the Vlasov equation (3.7):

Here $j_r,j_c = [0, N_x)$![]() and $k_r, k_c = [0,N_v)$

and $k_r, k_c = [0,N_v)$![]() . In this submatrix the first column in each diagonal block of size $N_v\times N_v$

. In this submatrix the first column in each diagonal block of size $N_v\times N_v$![]() is non-sparse whilst all other columns are filled with zeros. The Kronecker delta $\delta _{k_c,0}$

is non-sparse whilst all other columns are filled with zeros. The Kronecker delta $\delta _{k_c,0}$![]() appears because of the chosen encoding of the electric field into the state vector ${\boldsymbol{\psi} }$

appears because of the chosen encoding of the electric field into the state vector ${\boldsymbol{\psi} }$![]() according to (4.1).

according to (4.1).

The submatrix $\boldsymbol {C}^f$![]() is also block diagonal and encodes the coefficients in front of $g$

is also block diagonal and encodes the coefficients in front of $g$![]() in (3.13):

in (3.13):

Here $j_r,j_c = [0, N_x)$![]() and $k_r, k_c = [0,N_v)$

and $k_r, k_c = [0,N_v)$![]() . The first row in each block of size $N_v\times N_v$

. The first row in each block of size $N_v\times N_v$![]() is non-sparse due to the sum in (3.13).

is non-sparse due to the sum in (3.13).

Finally, the matrix $\boldsymbol {S}$![]() is diagonal and encodes the coefficients in front of $E$

is diagonal and encodes the coefficients in front of $E$![]() in (3.13):

in (3.13):

Here $j_r,j_c = [0, N_x)$![]() and $k_r, k_c = [0,N_v)$

and $k_r, k_c = [0,N_v)$![]() .

.

5 Classical simulations

To test our discretization scheme, we performed classical simulations for homogeneous plasma ($n = T = 1$![]() ), which facilitates comparison with the analytic theory described in Appendix A. In our simulations, the phase space is described by $x_{\rm max} = 100, n_x = 9$

), which facilitates comparison with the analytic theory described in Appendix A. In our simulations, the phase space is described by $x_{\rm max} = 100, n_x = 9$![]() and $v_{\rm max} = 4, n_v = 8$

and $v_{\rm max} = 4, n_v = 8$![]() . The source in the form (2.9) is placed at $x_0 = 50$

. The source in the form (2.9) is placed at $x_0 = 50$![]() with $\varDelta _S = 1.0$

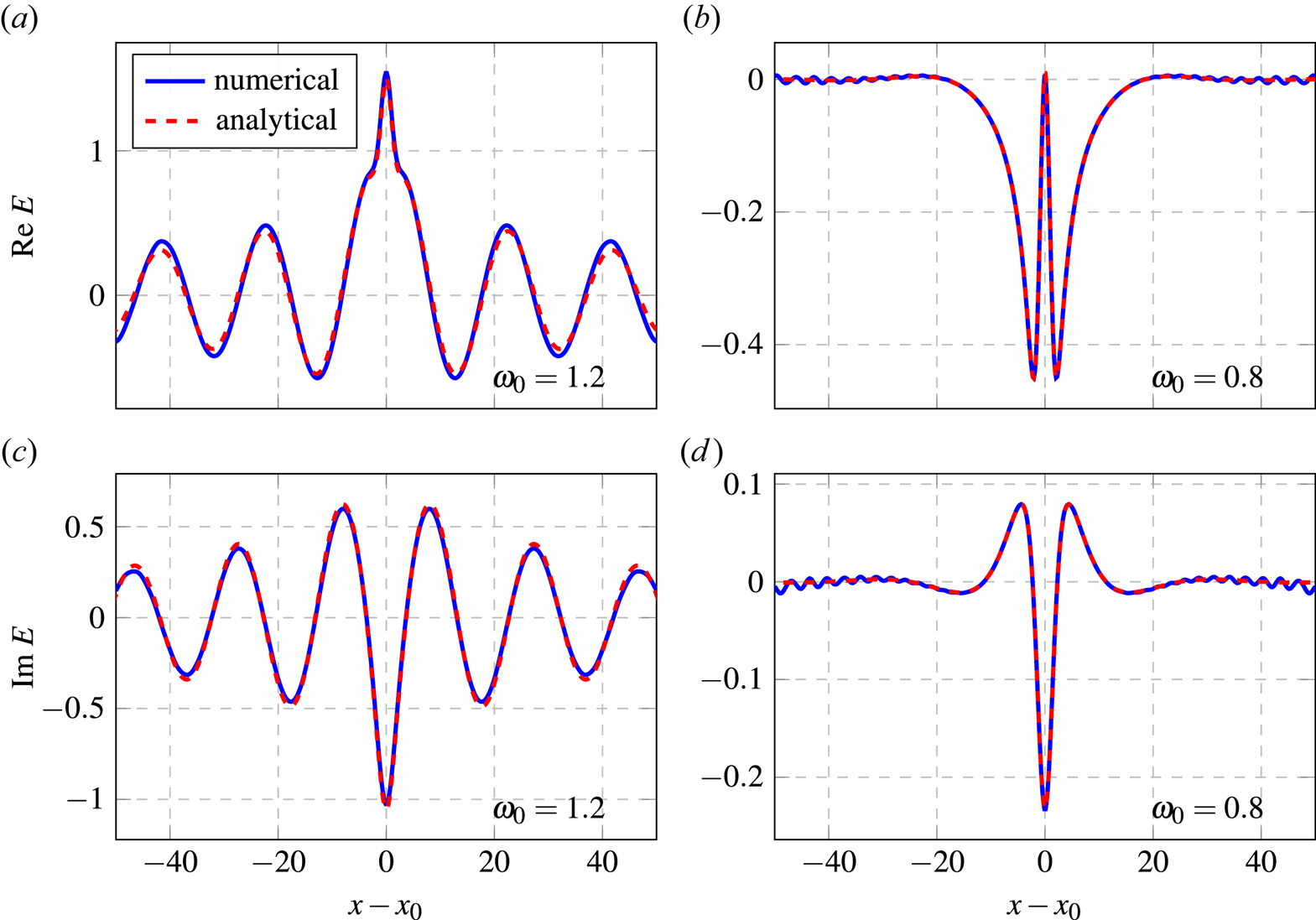

with $\varDelta _S = 1.0$![]() . We consider two cases (figure 2): (a) $\omega _0 = 1.2$

. We consider two cases (figure 2): (a) $\omega _0 = 1.2$![]() , which corresponds to the case when the source frequency exceeds the plasma frequency; and (b) $\omega _0 = 0.8$

, which corresponds to the case when the source frequency exceeds the plasma frequency; and (b) $\omega _0 = 0.8$![]() , which corresponds to the case when the plasma frequency exceeds the source frequency. The numerical calculations were performed by inverting the matrix (4.5) using the sparse-QR-factorization-based method provided in CUDA toolkit cuSOLVER (see Novikau Reference Novikau2024a; cuSolver 2024).

, which corresponds to the case when the plasma frequency exceeds the source frequency. The numerical calculations were performed by inverting the matrix (4.5) using the sparse-QR-factorization-based method provided in CUDA toolkit cuSOLVER (see Novikau Reference Novikau2024a; cuSolver 2024).

Figure 2. Plots showing the spatial distribution of the electric field computed numerically (blue) and analytically (red) using (A17). (a,c) Plots of ${\rm Re}\,E$![]() and ${\rm Im}\,E$

and ${\rm Im}\,E$![]() , respectively, for $\omega _0 = 1.20$

, respectively, for $\omega _0 = 1.20$![]() . One can see Langmuir waves propagating away from the source (located at $x = x_0$

. One can see Langmuir waves propagating away from the source (located at $x = x_0$![]() ) and experiencing weak Landau damping. (b,d) Plots of ${\rm Re}\,E$

) and experiencing weak Landau damping. (b,d) Plots of ${\rm Re}\,E$![]() and ${\rm Im}\,E$

and ${\rm Im}\,E$![]() , respectively, for $\omega _0 = 0.8$

, respectively, for $\omega _0 = 0.8$![]() . One can see Debye shielding of the source charge. In both cases, $n_x = 9$

. One can see Debye shielding of the source charge. In both cases, $n_x = 9$![]() , $n_v = 8$

, $n_v = 8$![]() and $\eta = 0$

and $\eta = 0$![]() .

.

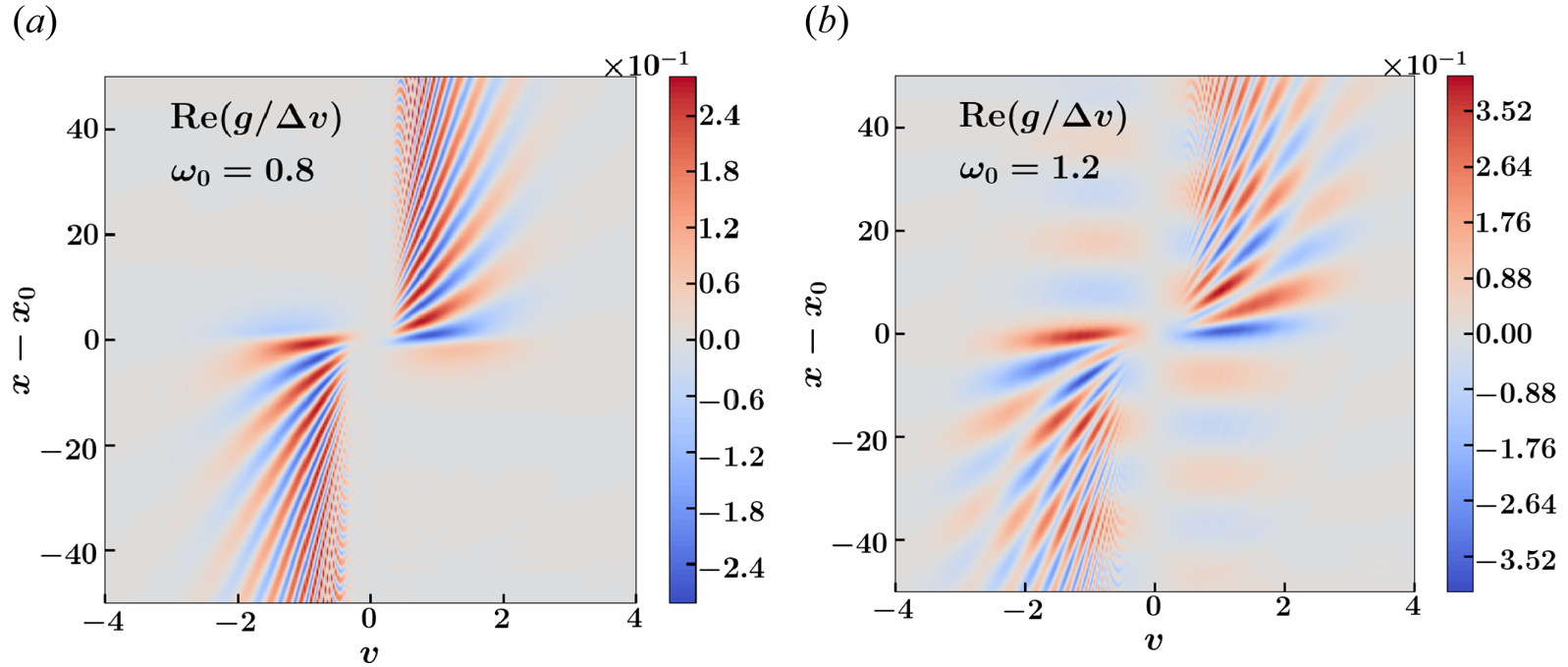

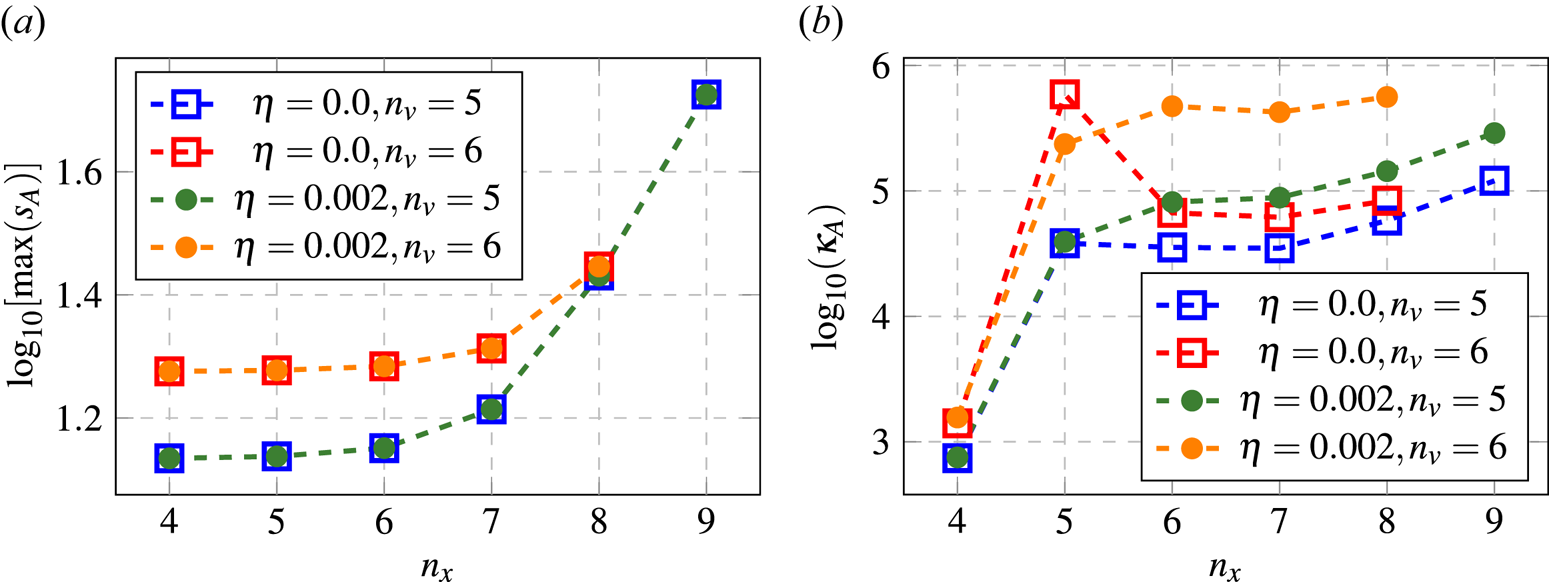

In the case with $\omega _0 = 1.2$![]() , the source launches Langmuir waves propagating outward and gradually dissipating via Landau damping. The outgoing boundary conditions allow the propagating wave to leave the simulated box with negligible reflection. In the case with $\omega _0 = 0.8$

, the source launches Langmuir waves propagating outward and gradually dissipating via Landau damping. The outgoing boundary conditions allow the propagating wave to leave the simulated box with negligible reflection. In the case with $\omega _0 = 0.8$![]() , the plasma shields the electric field, which penetrates plasma roughly up to a Debye length. Due to the high resolution in both real and velocity space, the model remains stable and does not generate visible numerical artifacts (figure 3). Artifacts become noticeable at lower resolution (figures 4 and 5) but can be suppressed by introducing artificial diffusivity $\eta$

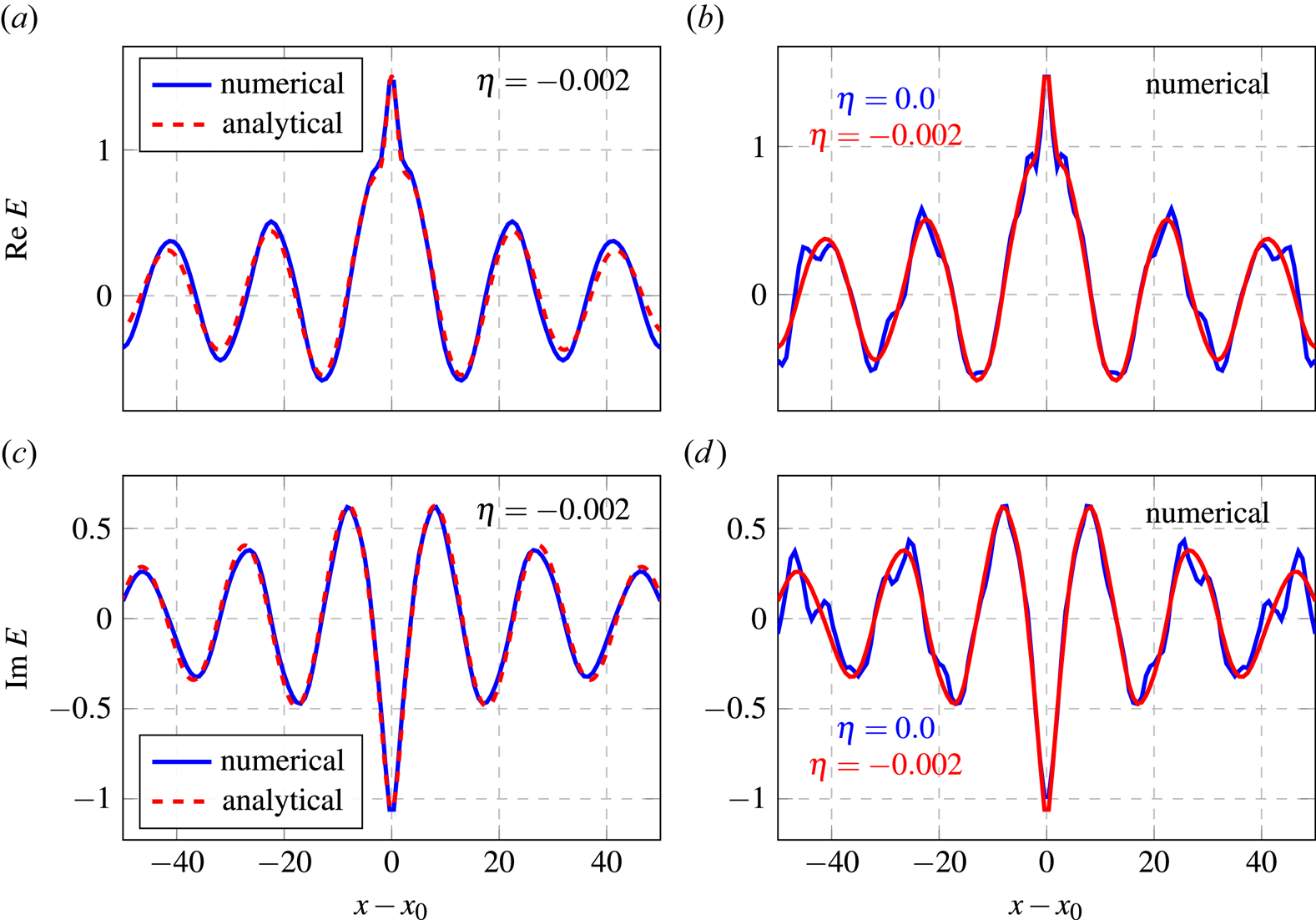

, the plasma shields the electric field, which penetrates plasma roughly up to a Debye length. Due to the high resolution in both real and velocity space, the model remains stable and does not generate visible numerical artifacts (figure 3). Artifacts become noticeable at lower resolution (figures 4 and 5) but can be suppressed by introducing artificial diffusivity $\eta$![]() in velocity space (2.11). Such simulations are demonstrated in figures 4 and 5 for $n_x = 7, n_v = 5$

in velocity space (2.11). Such simulations are demonstrated in figures 4 and 5 for $n_x = 7, n_v = 5$![]() and $\eta = 0.002$

and $\eta = 0.002$![]() . As seen in figure 4, the results are in good agreement with the analytical solution. The introduction of the diffusivity does not change the spectral norm of the matrix $\boldsymbol {A}$

. As seen in figure 4, the results are in good agreement with the analytical solution. The introduction of the diffusivity does not change the spectral norm of the matrix $\boldsymbol {A}$![]() (figure 6a), which is always much higher than unity. However, keep in mind that the diffusivity complicates block encoding (BE) (§ 6.4) and somewhat increases the condition number $\kappa _A$

(figure 6a), which is always much higher than unity. However, keep in mind that the diffusivity complicates block encoding (BE) (§ 6.4) and somewhat increases the condition number $\kappa _A$![]() of $\boldsymbol {A}$

of $\boldsymbol {A}$![]() (figure 6b). The condition number grows with both $n_v$

(figure 6b). The condition number grows with both $n_v$![]() and $n_x$

and $n_x$![]() (except in cases with poor spatial resolution). In particular, if one takes $n_x = 7$

(except in cases with poor spatial resolution). In particular, if one takes $n_x = 7$![]() , $n_v = 5$

, $n_v = 5$![]() and $\eta = 0.002$

and $\eta = 0.002$![]() , as in the simulations above, then $\kappa _A = 8.844\times 10^4$

, as in the simulations above, then $\kappa _A = 8.844\times 10^4$![]() (i.e. $\log _{10}\kappa _A = 4.95$

(i.e. $\log _{10}\kappa _A = 4.95$![]() ). Without the diffusivity, and with the same phase-space resolution, the condition number is $\kappa _A = 3.489\times 10^4$

). Without the diffusivity, and with the same phase-space resolution, the condition number is $\kappa _A = 3.489\times 10^4$![]() (i.e. $\log _{10}\kappa _A = 4.54$

(i.e. $\log _{10}\kappa _A = 4.54$![]() ).

).

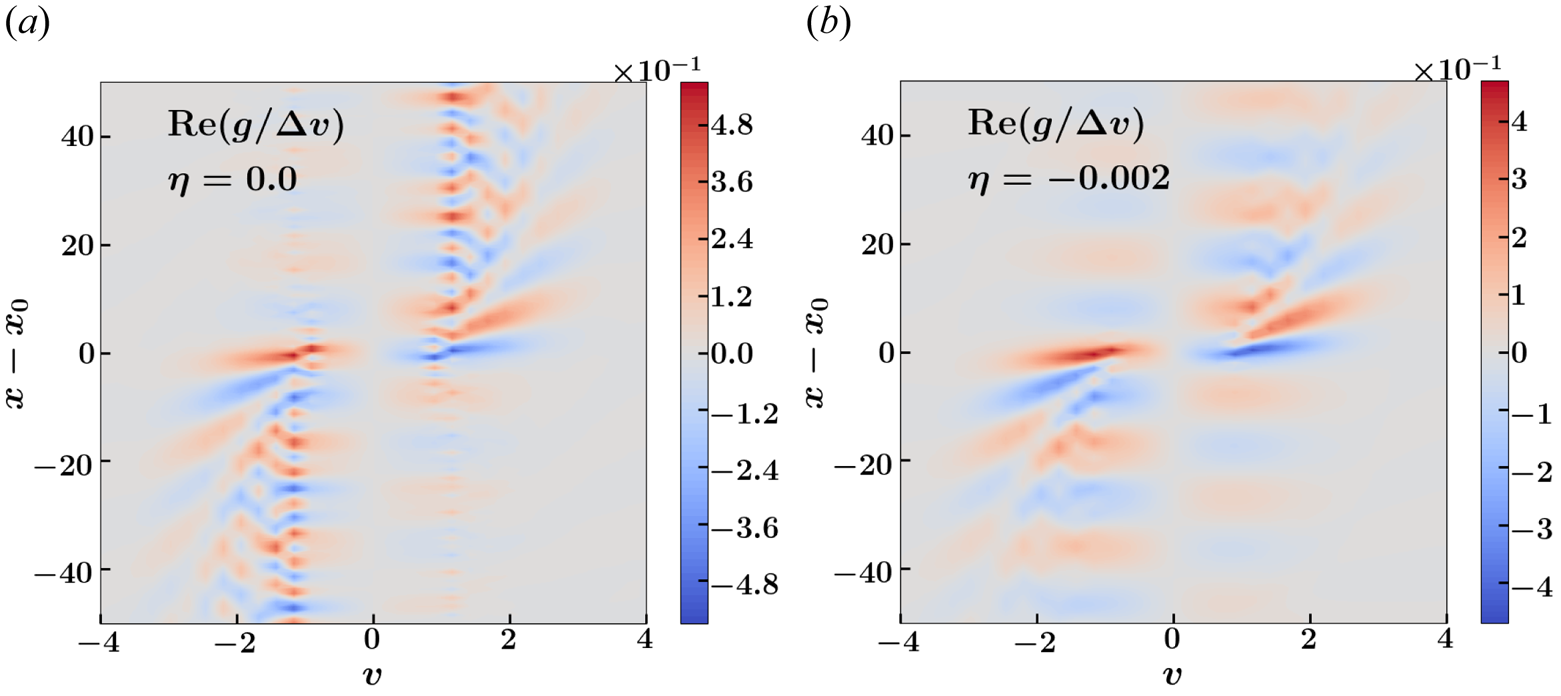

Figure 3. Plots showing the real component of the plasma distribution function, in units $\Delta v$![]() , computed numerically with $n_x = 9$

, computed numerically with $n_x = 9$![]() , $n_v = 8$

, $n_v = 8$![]() and $\eta = 0.0$

and $\eta = 0.0$![]() . Results are shown for (a) $\omega _0 = 0.8$

. Results are shown for (a) $\omega _0 = 0.8$![]() and (b) $\omega _0 = 1.2$

and (b) $\omega _0 = 1.2$![]() .

.

Figure 4. Plots showing the spatial distribution of the electric field for $\omega _0 = 1.2$![]() , $n_x = 7$

, $n_x = 7$![]() , and $n_v = 5$

, and $n_v = 5$![]() . (a,c) Results from the numerical (blue) and analytical (red) computations with the diffusivity $\eta = 0.002$

. (a,c) Results from the numerical (blue) and analytical (red) computations with the diffusivity $\eta = 0.002$![]() . (b,d) Results from the numerical computations with (red) and without (blue) diffusivity.

. (b,d) Results from the numerical computations with (red) and without (blue) diffusivity.

Figure 5. Plots showing the real component of the plasma distribution function, in units $\Delta v$![]() , computed numerically for the cases with $\omega _0=1.2, n_x = 7$

, computed numerically for the cases with $\omega _0=1.2, n_x = 7$![]() and $n_v = 5$

and $n_v = 5$![]() . Results are shown for (a) $\eta = 0.0$

. Results are shown for (a) $\eta = 0.0$![]() and (b) $\eta = 0.002$

and (b) $\eta = 0.002$![]() .

.

Figure 6. Plots showing the dependence of the maximum singular value (a) and the matrix condition number (b) of the matrix $\boldsymbol {A}$![]() on the size of the spatial grid for various $\eta$

on the size of the spatial grid for various $\eta$![]() and $n_v$

and $n_v$![]() . The values are computed numerically (Novikau Reference Novikau2024b).

. The values are computed numerically (Novikau Reference Novikau2024b).

6 Encoding the equations into a quantum circuit

6.1 Initialization

To encode the right-hand-side vector (4.4) into a quantum circuit, one can use the fact that the shape of the source current (2.9) is Gaussian. As shown in Novikau, Dodin & Startsev (Reference Novikau, Dodin and Startsev2023), Kane, Gomes & Kreshchuk (Reference Kane, Gomes and Kreshchuk2023) and Hariprakash et al. (Reference Hariprakash, Modi, Kreshchuk, Kane and Bauer2023), one can encode this function by using either QSVT (see Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021) or the so-called quantum eigenvalue transformation of unitaries (QETU) (see Dong, Lin & Tong Reference Dong, Lin and Tong2022), where the scaling of the resulting circuit is $\mathcal {O}(n_v \log _2(\varepsilon ^{-1}_{\rm qsvt}))$![]() and $\varepsilon _{\rm qsvt}$

and $\varepsilon _{\rm qsvt}$![]() is the desired absolute error in the QSVT approximation of the Gaussian. However, one should keep in mind that the success probability of the initialization circuit depends on the Gaussian width. To increase this probability, amplitude amplification can be used (see Brassard et al. Reference Brassard, Høyer, Mosca and Tapp2002). After the initialization, the required initial state $\mid {b}\rangle$

is the desired absolute error in the QSVT approximation of the Gaussian. However, one should keep in mind that the success probability of the initialization circuit depends on the Gaussian width. To increase this probability, amplitude amplification can be used (see Brassard et al. Reference Brassard, Høyer, Mosca and Tapp2002). After the initialization, the required initial state $\mid {b}\rangle$![]() is usually entangled with the zero state of the ancillae used for the QSVT/QETU procedure and for the amplitude amplification. Thus, the subsequent QSVT circuit computing the inverse matrix $\boldsymbol {A}^{-1}$

is usually entangled with the zero state of the ancillae used for the QSVT/QETU procedure and for the amplitude amplification. Thus, the subsequent QSVT circuit computing the inverse matrix $\boldsymbol {A}^{-1}$![]() should be controlled by this zero state to guarantee that $\boldsymbol {A}^{-1}$

should be controlled by this zero state to guarantee that $\boldsymbol {A}^{-1}$![]() acts on the state $\mid {b}\rangle$

acts on the state $\mid {b}\rangle$![]() .

.

6.2 Block encoding: basic idea

To solve (1.1) with the matrix (4.5) and the source (4.4) on a digital quantum computer, one can use the QSVT, which approximates the inverse matrix $\boldsymbol {A}^{-1}$![]() with an odd polynomial of the matrix singular values. (The mathematical foundations of the QSVT are described, for example, in Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021; Lin Reference Lin2022.) The QSVT returns a quantum state $\mid {\psi _{\rm qsvt}}\rangle$

with an odd polynomial of the matrix singular values. (The mathematical foundations of the QSVT are described, for example, in Gilyén et al. Reference Gilyén, Su, Low and Wiebe2019; Martyn et al. Reference Martyn, Rossi, Tan and Chuang2021; Lin Reference Lin2022.) The QSVT returns a quantum state $\mid {\psi _{\rm qsvt}}\rangle$![]() whose projection on zero ancillae is proportional to the solution ${\boldsymbol{\psi} }$

whose projection on zero ancillae is proportional to the solution ${\boldsymbol{\psi} }$![]() of (1.1):

of (1.1):

Here $\phi _{\rm glob}$![]() is an unknown global angle and the scalar parameter $\kappa _{\rm qsvt}$

is an unknown global angle and the scalar parameter $\kappa _{\rm qsvt}$![]() is of the order of the condition number of the matrix $\boldsymbol {A}$

is of the order of the condition number of the matrix $\boldsymbol {A}$![]() .

.

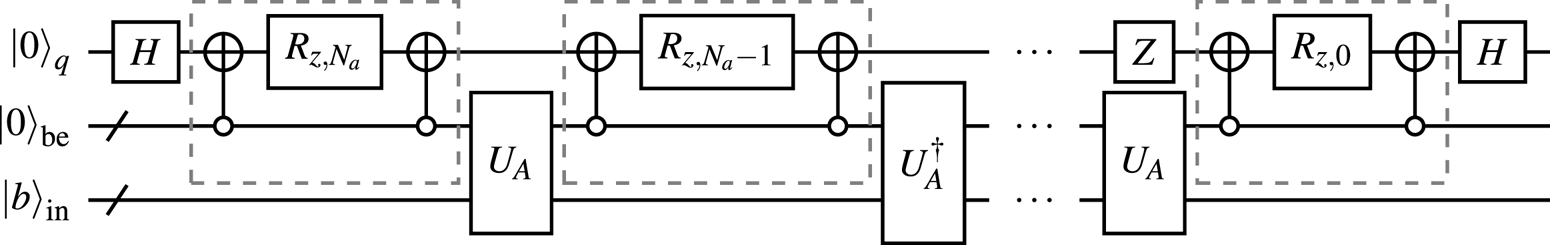

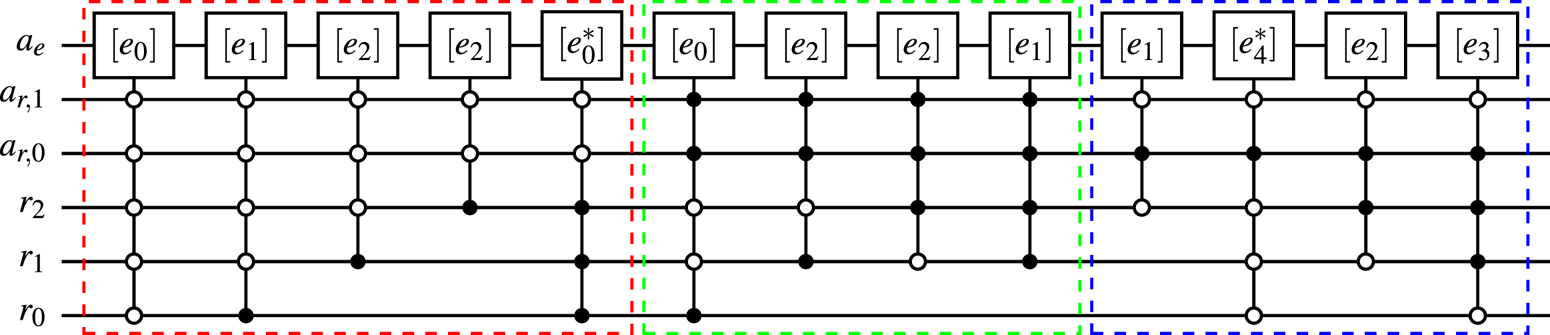

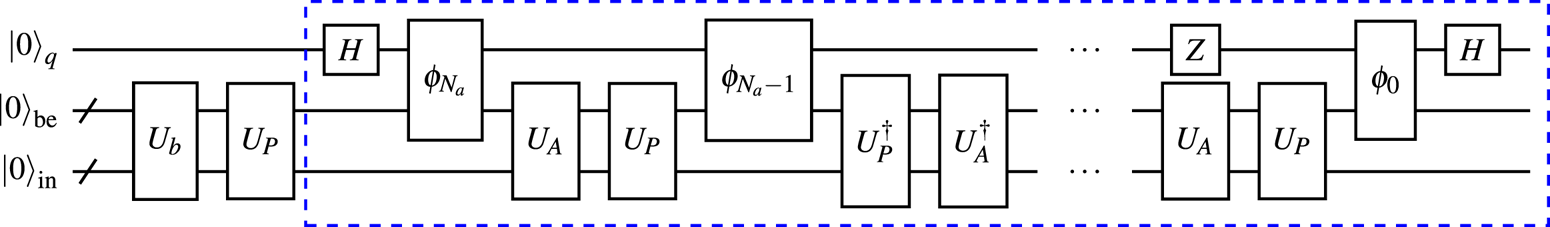

A typical QSVT circuit is shown in figure 7, where the angles $\phi _i$![]() are computed classically. These angles serve as the parameters that specify the function computed by the QSVT circuit. In our case, the function is the inverse function. (More details about the computation of the QSVT angles can be found in Dong et al. Reference Dong, Meng, Whaley and Lin2021; Ying Reference Ying2022). The subcircuit $U_A$

are computed classically. These angles serve as the parameters that specify the function computed by the QSVT circuit. In our case, the function is the inverse function. (More details about the computation of the QSVT angles can be found in Dong et al. Reference Dong, Meng, Whaley and Lin2021; Ying Reference Ying2022). The subcircuit $U_A$![]() is the so-called BE oracle with the following matrix representation:

is the so-called BE oracle with the following matrix representation:

Here $\boldsymbol {U}_A$![]() is a unitary matrix. (The dots correspond to submatrices that keep $\boldsymbol {U}_A$

is a unitary matrix. (The dots correspond to submatrices that keep $\boldsymbol {U}_A$![]() unitary but otherwise are unimportant.) This unitary encodes the matrix $\boldsymbol {A}$

unitary but otherwise are unimportant.) This unitary encodes the matrix $\boldsymbol {A}$![]() as a sub-block that is accessed by setting the ancilla register ‘be’ to zero:

as a sub-block that is accessed by setting the ancilla register ‘be’ to zero:

The QSVT addresses the BE oracle $\mathcal {O}(\kappa _{\rm qsvt}\log _2(\varepsilon ^{-1}_{\rm qsvt}))$![]() times to approximate the inverse matrix $\boldsymbol {A}^{-1}$

times to approximate the inverse matrix $\boldsymbol {A}^{-1}$![]() . The efficient implementation of the BE oracle is the key to a potential quantum speedup that might be provided by the QSVT. As discussed in Novikau et al. (Reference Novikau, Dodin and Startsev2023), the QSVT can provide a polynomial speedup for two- and higher-dimensional classical wave systems if the quantum circuit of the BE oracle scales polylogarithmically or better with the size of $\boldsymbol {A}$

. The efficient implementation of the BE oracle is the key to a potential quantum speedup that might be provided by the QSVT. As discussed in Novikau et al. (Reference Novikau, Dodin and Startsev2023), the QSVT can provide a polynomial speedup for two- and higher-dimensional classical wave systems if the quantum circuit of the BE oracle scales polylogarithmically or better with the size of $\boldsymbol {A}$![]() .

.

Figure 7. The QSVT circuit encoding a real polynomial of order $N_a$![]() , where $N_a$

, where $N_a$![]() is odd, by using $N_a + 1$

is odd, by using $N_a + 1$![]() angles $\phi _k$

angles $\phi _k$![]() pre-computed classically. The gates denoted as $R_{z,k}$

pre-computed classically. The gates denoted as $R_{z,k}$![]() represent the rotations $R_z(2\phi _k)$

represent the rotations $R_z(2\phi _k)$![]() . For an even $N_a$

. For an even $N_a$![]() , the gate $Z$

, the gate $Z$![]() should be removed and the rightmost BE oracle $U_A$

should be removed and the rightmost BE oracle $U_A$![]() should be replaced with its Hermitian adjoint version $U_A^{\dagger}$

should be replaced with its Hermitian adjoint version $U_A^{\dagger}$![]() .

.

To block encode a non-Hermitian matrix such as $\boldsymbol {A}$![]() , one can first extend it to a Hermitian one as

, one can first extend it to a Hermitian one as

and then use the technique from Berry & Childs (Reference Berry and Childs2012). However, this will require at least two additional ancilla qubits for the extension (6.4). Another option is to decompose $\boldsymbol {A}$![]() into two Hermitian matrices, i.e.

into two Hermitian matrices, i.e.

where $\boldsymbol {A}_h = (\boldsymbol {A} + \boldsymbol {A}^{\dagger} )/2$![]() and $\boldsymbol {A}_a = (\boldsymbol {A} - \boldsymbol {A}^{\dagger} )/(2\mathrm {i})$

and $\boldsymbol {A}_a = (\boldsymbol {A} - \boldsymbol {A}^{\dagger} )/(2\mathrm {i})$![]() . The sum (6.5) can be computed by using the circuit of linear combination of unitaries, which requires an additional ancilla. Also note that the matrices $\boldsymbol {A}_a$

. The sum (6.5) can be computed by using the circuit of linear combination of unitaries, which requires an additional ancilla. Also note that the matrices $\boldsymbol {A}_a$![]() and $\boldsymbol {A}_h$

and $\boldsymbol {A}_h$![]() , although being Hermitian, still have a non-trivial structure. Thus, for this method, it is necessary to block encode two separate matrices, which may double the depth of the BE oracle.

, although being Hermitian, still have a non-trivial structure. Thus, for this method, it is necessary to block encode two separate matrices, which may double the depth of the BE oracle.

To reduce the number of ancillae and avoid encoding two matrices instead of one, we propose to encode the non-Hermitian $\boldsymbol {A}$![]() directly, without invoking the extension (6.4) or the splitting (6.5). This technique was already used in Novikau et al. (Reference Novikau, Dodin and Startsev2023). Although the direct encoding requires ad hoc construction of some parts of the BE oracle, this approach leads to a more compact BE circuit.

directly, without invoking the extension (6.4) or the splitting (6.5). This technique was already used in Novikau et al. (Reference Novikau, Dodin and Startsev2023). Although the direct encoding requires ad hoc construction of some parts of the BE oracle, this approach leads to a more compact BE circuit.

To encode $\boldsymbol {A}$![]() into the unitary $\boldsymbol {U}_A$

into the unitary $\boldsymbol {U}_A$![]() , one needs to normalize $\boldsymbol {A}$

, one needs to normalize $\boldsymbol {A}$![]() such that $\varsigma ||\boldsymbol {A}||_{\rm max} \leq 1$

such that $\varsigma ||\boldsymbol {A}||_{\rm max} \leq 1$![]() , where

, where

and $\varsigma$![]() is related to the matrix non-sparsity as will be explained later (see (6.14)). Here, by the term ‘non-sparsity’, we understand the maximum number of non-zero elements in a matrix row or column.Footnote 3 Hence, $\boldsymbol {A}$

is related to the matrix non-sparsity as will be explained later (see (6.14)). Here, by the term ‘non-sparsity’, we understand the maximum number of non-zero elements in a matrix row or column.Footnote 3 Hence, $\boldsymbol {A}$![]() should be normalized as

should be normalized as

The general structure of a BE oracle implementing the direct encoding of a non-Hermitian matrix is

where the oracles $O_F$![]() , $O^{F}_{F,{\rm corr}}$

, $O^{F}_{F,{\rm corr}}$![]() , $O_M$

, $O_M$![]() , $O^{B}_{F,{\rm corr}}$

, $O^{B}_{F,{\rm corr}}$![]() and $O^{\dagger} _F$

and $O^{\dagger} _F$![]() encode the positions of non-zero matrix elements in $\boldsymbol {A}$

encode the positions of non-zero matrix elements in $\boldsymbol {A}$![]() , and $O_H$

, and $O_H$![]() encodes the values of these elements. The upper superscripts ‘F’ and ‘B’ stand for ‘forward’ and ‘backward’ action. The oracle $U_A$

encodes the values of these elements. The upper superscripts ‘F’ and ‘B’ stand for ‘forward’ and ‘backward’ action. The oracle $U_A$![]() can be constructed by using quantum gates acting on a single qubit but controlled by multiple qubits. Here, such gates are called single-target multicontrolled (STMC) gates (§ 6.3).

can be constructed by using quantum gates acting on a single qubit but controlled by multiple qubits. Here, such gates are called single-target multicontrolled (STMC) gates (§ 6.3).

Equation (6.8) is based on the BE technique from Berry & Childs (Reference Berry and Childs2012) with the only difference that the oracles $O^{F}_{F,{\rm corr}}$![]() and $O^{B}_{F,{\rm corr}}$

and $O^{B}_{F,{\rm corr}}$![]() are introduced to take into account the non-Hermiticity of $\boldsymbol {A}$

are introduced to take into account the non-Hermiticity of $\boldsymbol {A}$![]() and are computed ad hoc by varying STMC gates. Basically, the oracles $O_F$

and are computed ad hoc by varying STMC gates. Basically, the oracles $O_F$![]() , $O_M$

, $O_M$![]() and $O^{\dagger} _F$

and $O^{\dagger} _F$![]() create a structure of a preliminary Hermitian matrix that is as close as possible to the target non-Hermitian matrix $\boldsymbol {A}$

create a structure of a preliminary Hermitian matrix that is as close as possible to the target non-Hermitian matrix $\boldsymbol {A}$![]() . The structure is then corrected by the oracles $O^{F}_{F,{\rm corr}}$

. The structure is then corrected by the oracles $O^{F}_{F,{\rm corr}}$![]() and $O^{B}_{F,{\rm corr}}$

and $O^{B}_{F,{\rm corr}}$![]() constructed by varying their circuits to encode the structure of $\boldsymbol {A}$

constructed by varying their circuits to encode the structure of $\boldsymbol {A}$![]() .

.

We consider separately the action of the oracle

where the oracle $O_H$![]() is not included, and the oracles $O^{F}_F$

is not included, and the oracles $O^{F}_F$![]() and $O^{B}_F$

and $O^{B}_F$![]() are introduced to simplify notations. The matrix representation of the oracle $U_D$

are introduced to simplify notations. The matrix representation of the oracle $U_D$![]() projected onto zero ancillae is denoted as $\boldsymbol {D}^A$

projected onto zero ancillae is denoted as $\boldsymbol {D}^A$![]() . The matrix $\boldsymbol {D}^A$

. The matrix $\boldsymbol {D}^A$![]() has non-zero elements at the same positions as those of the non-zero elements of $\boldsymbol {A}$

has non-zero elements at the same positions as those of the non-zero elements of $\boldsymbol {A}$![]() .

.

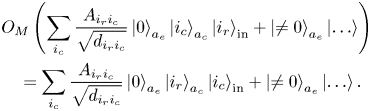

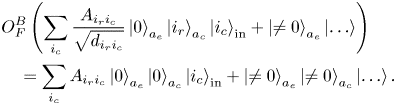

We use the input register ‘in’ to encode a row index $i_r$![]() and the ancilla register $a_c$

and the ancilla register $a_c$![]() to perform intermediate computations. Then,

to perform intermediate computations. Then,

where $i_{c}$![]() are the column indices of all non-zero elements of $\boldsymbol {A}$

are the column indices of all non-zero elements of $\boldsymbol {A}$![]() at the row $i_r$

at the row $i_r$![]() ; $N_{c,i_r}$

; $N_{c,i_r}$![]() is the number of these elements at $i_r$

is the number of these elements at $i_r$![]() ; $d_{i_r i_{c}} \leq 1$

; $d_{i_r i_{c}} \leq 1$![]() are the matrix elements of $\boldsymbol {D}^A$

are the matrix elements of $\boldsymbol {D}^A$![]() . The number $N_{c,i_r}$

. The number $N_{c,i_r}$![]() is less or equal to the non-sparsity of $\boldsymbol {A}$

is less or equal to the non-sparsity of $\boldsymbol {A}$![]() and can be different at different $i_c$

and can be different at different $i_c$![]() . The elements $d_{i_r i_{c}}$

. The elements $d_{i_r i_{c}}$![]() are usually different powers of the factor $2^{-1/2}$

are usually different powers of the factor $2^{-1/2}$![]() that appears due to the usage of multiple Hadamard gates $H$

that appears due to the usage of multiple Hadamard gates $H$![]() .

.

The oracle $O_H$![]() , which enters $U_A$

, which enters $U_A$![]() but is not a part of $U_D$

but is not a part of $U_D$![]() , takes the matrix $\boldsymbol {D}^A$

, takes the matrix $\boldsymbol {D}^A$![]() and modifies its elements to form $\boldsymbol {A}$

and modifies its elements to form $\boldsymbol {A}$![]() . Usually, $O_H$

. Usually, $O_H$![]() acts on an extra ancilla $a_e$

acts on an extra ancilla $a_e$![]() that is not used by $U_D$

that is not used by $U_D$![]() . Due to that, we can formally consider $U_D$

. Due to that, we can formally consider $U_D$![]() separately from $O_H$

separately from $O_H$![]() . The action of $O_H$

. The action of $O_H$![]() can be considered as the mapping

can be considered as the mapping

where $A_{i_r i_c}$![]() are elements of the matrix $\boldsymbol {A}$

are elements of the matrix $\boldsymbol {A}$![]() after the normalization (6.7). For instance, to encode a real-value element $A_{i_r i_c}$

after the normalization (6.7). For instance, to encode a real-value element $A_{i_r i_c}$![]() , one can use the rotation gate $R_y(\theta )$

, one can use the rotation gate $R_y(\theta )$![]() :

:

The factor $d_{i_r i_c}$![]() appears from the action of the oracle $U_D$

appears from the action of the oracle $U_D$![]() (6.10). Our goal is to have $A_{i_r i_c} = \cos (\theta /2)d_{i_r i_c}$

(6.10). Our goal is to have $A_{i_r i_c} = \cos (\theta /2)d_{i_r i_c}$![]() . Thus,

. Thus,

The fact that $d_{i_r i_c} \leq 1$![]() is the reason why it is necessary to include $\varsigma$

is the reason why it is necessary to include $\varsigma$![]() into the normalization (6.7). From this, we conclude that

into the normalization (6.7). From this, we conclude that

The oracle $O_H$![]() usually consists of STMC rotations gates $R_x$

usually consists of STMC rotations gates $R_x$![]() , $R_y$

, $R_y$![]() , $R_z$

, $R_z$![]() and $R_c$

and $R_c$![]() (C1). The first two are used to encode imaginary and real values, correspondingly. The third one can be used to change the sign of a value or to turn a real value into an imaginary one if necessary, and vice versa. The gate $R_c$

(C1). The first two are used to encode imaginary and real values, correspondingly. The third one can be used to change the sign of a value or to turn a real value into an imaginary one if necessary, and vice versa. The gate $R_c$![]() is used to encode complex values.

is used to encode complex values.

Now, let us specify the action of different parts of $U_A$![]() . The oracles $O^{F}_{F,{\rm corr}} O_F$

. The oracles $O^{F}_{F,{\rm corr}} O_F$![]() encode column indices into the ancilla register $a_c$

encode column indices into the ancilla register $a_c$![]() :

:

The oracle $O_H$![]() uses the row index from the state register ‘in’ and the column indices from the ancilla register $a_c$

uses the row index from the state register ‘in’ and the column indices from the ancilla register $a_c$![]() to determine which element should be computed and then encodes it into the state amplitude:

to determine which element should be computed and then encodes it into the state amplitude:

After that, the oracle $O_M$![]() transfers the column indices from $a_c$

transfers the column indices from $a_c$![]() to the input register:

to the input register:

Finally, the oracles $O^{\dagger} _F O^{B}_{F,{\rm corr}}$![]() entangle the states encoding the column indices in the input register with the zero state in the ancilla register:

entangle the states encoding the column indices in the input register with the zero state in the ancilla register:

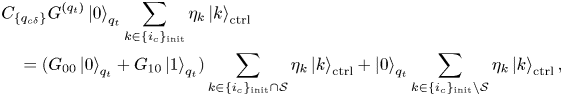

6.3 Single-target multicontrolled gates

If one has a single-target gate $G$![]() , whose matrix representation is

, whose matrix representation is

then the corresponding STMC gate $C_{\{q_{c\delta }\}}G^{(q_t)}$![]() is defined as the gate $G$

is defined as the gate $G$![]() acting on the target qubit $q_t$

acting on the target qubit $q_t$![]() and controlled by a set of qubits $\{q_{c\delta }\}$

and controlled by a set of qubits $\{q_{c\delta }\}$![]() . If $q_{c\delta }$

. If $q_{c\delta }$![]() is a control qubit then the gate $G$

is a control qubit then the gate $G$![]() is triggered if and only if $\mid {\delta }\rangle_{q_{c\delta }}$

is triggered if and only if $\mid {\delta }\rangle_{q_{c\delta }}$![]() , where $\delta = 0$

, where $\delta = 0$![]() or $1$

or $1$![]() . If the gate $G$

. If the gate $G$![]() acting on a quantum state vector ${\boldsymbol{\psi} }$

acting on a quantum state vector ${\boldsymbol{\psi} }$![]() of $n$

of $n$![]() qubits is controlled by the qubit $q_{c\delta } \in [0,n)$

qubits is controlled by the qubit $q_{c\delta } \in [0,n)$![]() , then only the state vector's elements with the indices $\{i_e\}_{q_{c\delta }}$

, then only the state vector's elements with the indices $\{i_e\}_{q_{c\delta }}$![]() can be modified by the gate $G$

can be modified by the gate $G$![]() :

:

For instance, for $q_{c1} = 0$![]() , every second element of ${\boldsymbol{\psi} }$

, every second element of ${\boldsymbol{\psi} }$![]() can be modified by the gate $G$

can be modified by the gate $G$![]() . Then, the STMC gate $C_{\{q_{c\delta }\}}G^{(q_t)}$

. Then, the STMC gate $C_{\{q_{c\delta }\}}G^{(q_t)}$![]() can modify only the elements from the set

can modify only the elements from the set

where $\bigcap$![]() is the intersection operator. The most common case is when $q_t$

is the intersection operator. The most common case is when $q_t$![]() is a more significant qubit than the control ones, and the initial state of $q_t$

is a more significant qubit than the control ones, and the initial state of $q_t$![]() is the zero state. In this case, the action of $C_{\{q_{c\delta }\}}G^{(q_t)}$

is the zero state. In this case, the action of $C_{\{q_{c\delta }\}}G^{(q_t)}$![]() can be described as

can be described as

where $\eta _{k}$![]() are the complex amplitudes of the initial state of the control register.

are the complex amplitudes of the initial state of the control register.

6.4 General algorithm for the BE

To construct the BE oracle $U_A$![]() , we use the following general procedure.

, we use the following general procedure.

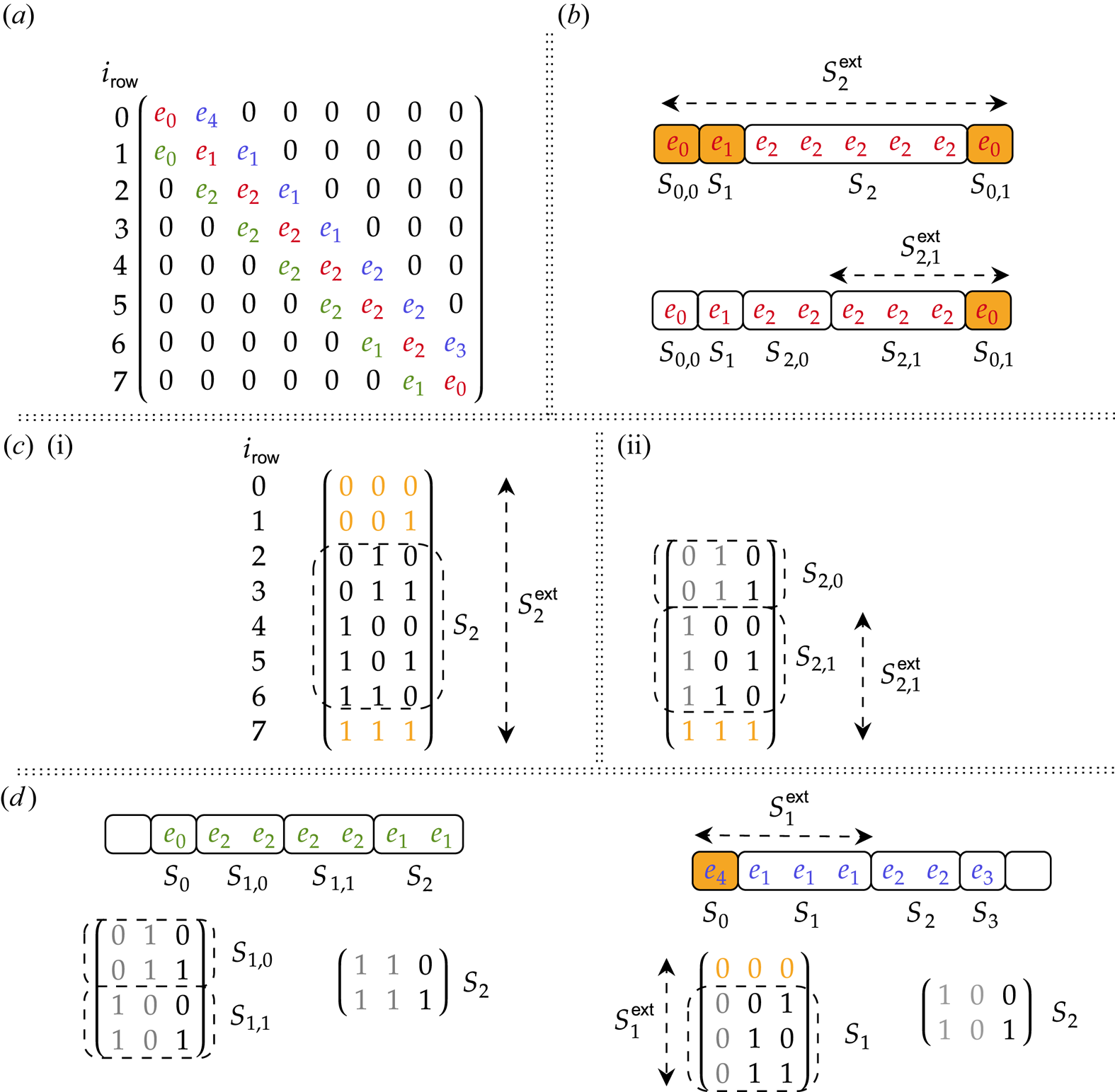

(i) Introduce ancilla qubits necessary for intermediate computations in the oracle $U_A$

(§ 6.5).

(§ 6.5).(ii) Assume that the bitstring of the qubits of the input register (also called the state register) encodes a row index of the matrix $\boldsymbol {A}$

.

.(iii) Using STMC gates, construct the oracle $U_D$

following the idea presented in (6.10) to encode column indices as a superposition of bitstrings in the state register (§ 6.6).

following the idea presented in (6.10) to encode column indices as a superposition of bitstrings in the state register (§ 6.6).(iv) Compute the matrix $\boldsymbol {D}^A$

or derive it using several matrices $\boldsymbol {D}^A$

or derive it using several matrices $\boldsymbol {D}^A$ constructed for matrices $\boldsymbol {A}$

constructed for matrices $\boldsymbol {A}$ of small sizes (§ 7.3).

of small sizes (§ 7.3).(v) Normalize the matrix $\boldsymbol {A}$

according to (6.7) using the non-sparsity-related parameter (6.14).

according to (6.7) using the non-sparsity-related parameter (6.14).(vi) Using STMC rotation gates, construct the oracle $O_H$

to perform the transformation (6.11) (§ 7).

to perform the transformation (6.11) (§ 7).

Once the circuit for the oracle $U_A$![]() is constructed using STMC gates, one can transpile the circuit into a chosen universal set of elementary gates. Standard decomposition methods require at least $\mathcal {O}(n)$

is constructed using STMC gates, one can transpile the circuit into a chosen universal set of elementary gates. Standard decomposition methods require at least $\mathcal {O}(n)$![]() of basic gates (see Barenco et al. Reference Barenco, Bennett, Cleve, DiVincenzo, Margolus, Shor, Sleator, Smolin and Weinfurter1995), where $n$

of basic gates (see Barenco et al. Reference Barenco, Bennett, Cleve, DiVincenzo, Margolus, Shor, Sleator, Smolin and Weinfurter1995), where $n$![]() is the number of controlling qubits in an STMC gate. Yet, it was recently shown (see Claudon et al. Reference Claudon, Zylberman, Feniou, Debbasch, Peruzzo and Piquemal2023) that it is possible to decompose an arbitrary STMC gate into a circuit with $\mathcal {O}(\log _2(n)^{\log _2(12)}\log _2(1/\epsilon _{\rm STMC}))$

is the number of controlling qubits in an STMC gate. Yet, it was recently shown (see Claudon et al. Reference Claudon, Zylberman, Feniou, Debbasch, Peruzzo and Piquemal2023) that it is possible to decompose an arbitrary STMC gate into a circuit with $\mathcal {O}(\log _2(n)^{\log _2(12)}\log _2(1/\epsilon _{\rm STMC}))$![]() depth where $\epsilon _{\rm STMC}$

depth where $\epsilon _{\rm STMC}$![]() is the allowed absolute error in the approximation of the STMC gate. In our assessment of the BE oracle's scaling below, we assume that the corresponding circuit comprises STMC gates not decomposed into elementary gates.

is the allowed absolute error in the approximation of the STMC gate. In our assessment of the BE oracle's scaling below, we assume that the corresponding circuit comprises STMC gates not decomposed into elementary gates.

6.5 Ancilla qubits for the BE

The first step to construct a circuit of the BE oracle is to introduce ancilla qubits and assign the meaning to their bitstrings. As seen from figure 1, the matrix $\boldsymbol {A}$![]() is divided into four submatrices. To address each submatrix, we introduce the ancilla qubit $a_f$

is divided into four submatrices. To address each submatrix, we introduce the ancilla qubit $a_f$![]() . In combination with the register $r_f$

. In combination with the register $r_f$![]() introduced in (4.2), the register $a_f$

introduced in (4.2), the register $a_f$![]() allows addressing each submatrix in the following way:

allows addressing each submatrix in the following way:

If $i_r$![]() and $i_c$

and $i_c$![]() are row and column indices of $\boldsymbol {A}$

are row and column indices of $\boldsymbol {A}$![]() , respectively, such that

, respectively, such that

with integers $i_{fr}, i_{fc} = [0,1]$![]() , $i_{xr}, i_{xc} = [0, N_x)$

, $i_{xr}, i_{xc} = [0, N_x)$![]() and $i_{vr}, i_{vc} = [0, N_v)$

and $i_{vr}, i_{vc} = [0, N_v)$![]() , then the ancilla $a_f$

, then the ancilla $a_f$![]() encodes the integer $i_{fc}$

encodes the integer $i_{fc}$![]() .

.

According to figure 1, each submatrix is split into $N_x^2$![]() blocks of size $N_v\times N_v$

blocks of size $N_v\times N_v$![]() each. Within each submatrix, only the diagonal and a few off-diagonal (in the submatrix $\boldsymbol {F}$

each. Within each submatrix, only the diagonal and a few off-diagonal (in the submatrix $\boldsymbol {F}$![]() ) blocks contain non-zero matrix elements. To address these blocks, we introduce the ancilla register $a_{xr}$

) blocks contain non-zero matrix elements. To address these blocks, we introduce the ancilla register $a_{xr}$![]() with three qubits. This register stores the relative positions of blocks with respect to the main diagonal within each submatrix. The states $\mid {000}\rangle_{a_{xr}}$

with three qubits. This register stores the relative positions of blocks with respect to the main diagonal within each submatrix. The states $\mid {000}\rangle_{a_{xr}}$![]() and $\mid {100}\rangle_{a_{xr}}$

and $\mid {100}\rangle_{a_{xr}}$![]() correspond to the blocks on the main diagonal of a submatrix. The states $\mid {001}\rangle_{a_{xr}}$

correspond to the blocks on the main diagonal of a submatrix. The states $\mid {001}\rangle_{a_{xr}}$![]() and $\mid {010}\rangle_{a_{xr}}$

and $\mid {010}\rangle_{a_{xr}}$![]() correspond to the off-diagonal blocks shifted by one and two blocks to the right, respectively. The states $\mid {101}\rangle_{a_{xr}}$

correspond to the off-diagonal blocks shifted by one and two blocks to the right, respectively. The states $\mid {101}\rangle_{a_{xr}}$![]() and $\mid {110}\rangle_{a_{xr}}$

and $\mid {110}\rangle_{a_{xr}}$![]() correspond to the off-diagonal blocks shifted by one and two blocks to the left, respectively. Using the same notation as in (6.24), the meaning of the register $a_{xr}$

correspond to the off-diagonal blocks shifted by one and two blocks to the left, respectively. Using the same notation as in (6.24), the meaning of the register $a_{xr}$![]() can be described schematically as follows:

can be described schematically as follows:

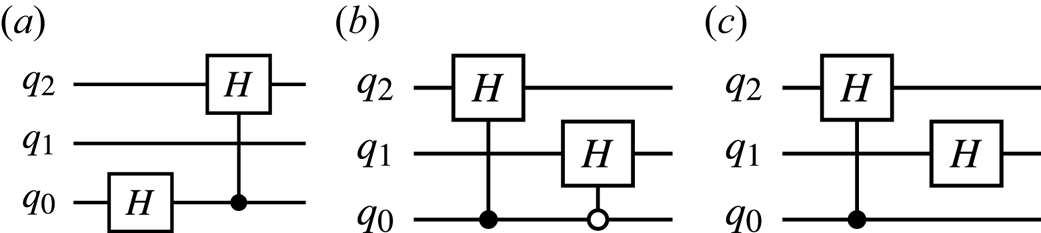

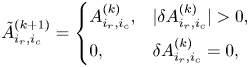

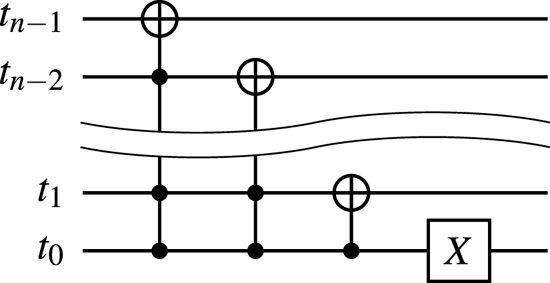

To encode the above bitstrings, one can use the circuits shown in figures 8(a) and 8(b).

Figure 8. (a) The circuit encoding the superposition of states $\mid {000}\rangle_q$![]() , $\mid {001}\rangle_q$

, $\mid {001}\rangle_q$![]() and $\mid {101}\rangle_q$

and $\mid {101}\rangle_q$![]() for a given input zero state. (b) The circuit encoding the superposition of states $\mid {000}\rangle_q$

for a given input zero state. (b) The circuit encoding the superposition of states $\mid {000}\rangle_q$![]() , $\mid {001}\rangle_q$

, $\mid {001}\rangle_q$![]() and $\mid {010}\rangle_q$

and $\mid {010}\rangle_q$![]() for a given input state produced by the circuit 8(a). To encode the superposition of states $\mid {100}\rangle_q$

for a given input state produced by the circuit 8(a). To encode the superposition of states $\mid {100}\rangle_q$![]() , $\mid {101}\rangle_q$

, $\mid {101}\rangle_q$![]() and $\mid {110}\rangle_q$

and $\mid {110}\rangle_q$![]() , one uses the same circuit except that an additional $X$

, one uses the same circuit except that an additional $X$![]() gate is applied to the qubit $q_2$

gate is applied to the qubit $q_2$![]() in the end. (c) The circuit encoding the superposition of states $\mid {000}\rangle_q$

in the end. (c) The circuit encoding the superposition of states $\mid {000}\rangle_q$![]() , $\mid {001}\rangle_q$

, $\mid {001}\rangle_q$![]() , $\mid {010}\rangle_q$

, $\mid {010}\rangle_q$![]() and $\mid {011}\rangle_q$

and $\mid {011}\rangle_q$![]() for a given input state produced by the circuit 8(a). To encode the superposition of states $\mid {100}\rangle_q$

for a given input state produced by the circuit 8(a). To encode the superposition of states $\mid {100}\rangle_q$![]() , $\mid {101}\rangle_q$

, $\mid {101}\rangle_q$![]() , $\mid {110}\rangle_q$

, $\mid {110}\rangle_q$![]() and $\mid {111}\rangle_q$

and $\mid {111}\rangle_q$![]() , one uses the same circuit except that an additional $X$

, one uses the same circuit except that an additional $X$![]() gate is applied to the qubit $q_2$

gate is applied to the qubit $q_2$![]() in the end.

in the end.

In the submatrix $\boldsymbol {C}^f$![]() , some of the blocks have rows that contain $N_v$

, some of the blocks have rows that contain $N_v$![]() non-zero elements. To address these elements, we introduce the ancilla register $a_v$

non-zero elements. To address these elements, we introduce the ancilla register $a_v$![]() with $n_v$

with $n_v$![]() qubits. This register stores the integer $i_{vc}$

qubits. This register stores the integer $i_{vc}$![]() (6.24) when the elements of $\boldsymbol {C}^f$

(6.24) when the elements of $\boldsymbol {C}^f$![]() and $\boldsymbol {C}^E$

and $\boldsymbol {C}^E$![]() are addressed; otherwise, the register is not used. For instance, to address the non-zero elements in the submatrix $\boldsymbol {C}^f$

are addressed; otherwise, the register is not used. For instance, to address the non-zero elements in the submatrix $\boldsymbol {C}^f$![]() , one uses the following encoding:

, one uses the following encoding:

As regards to $\boldsymbol {C}^E$![]() , since all its non-zero elements have $i_{vc} = 0$

, since all its non-zero elements have $i_{vc} = 0$![]() , one keeps the register $a_v$

, one keeps the register $a_v$![]() in the zero state when $\mid {1}\rangle_{a_f}\mid {0}\rangle_{r_f}$

in the zero state when $\mid {1}\rangle_{a_f}\mid {0}\rangle_{r_f}$![]() .

.

The ancilla register $a_{vr}$![]() with three qubits is introduced to encode positions of the matrix elements within the non-zero blocks of the submatrix $\boldsymbol {F}$

with three qubits is introduced to encode positions of the matrix elements within the non-zero blocks of the submatrix $\boldsymbol {F}$![]() . In particular, the states $\mid {000}\rangle_{a_{vr}}$

. In particular, the states $\mid {000}\rangle_{a_{vr}}$![]() and $\mid {100}\rangle_{a_{vr}}$

and $\mid {100}\rangle_{a_{vr}}$![]() correspond to the elements on the main diagonal of a block. The states $\mid {001}\rangle_{a_{vr}}$

correspond to the elements on the main diagonal of a block. The states $\mid {001}\rangle_{a_{vr}}$![]() , $\mid {010}\rangle_{a_{vr}}$

, $\mid {010}\rangle_{a_{vr}}$![]() and $\mid {011}\rangle_{a_{vr}}$

and $\mid {011}\rangle_{a_{vr}}$![]() correspond to the elements shifted by one, two and three cells, respectively, to the right from the diagonal. The states $\mid {101}\rangle_{a_{vr}}$

correspond to the elements shifted by one, two and three cells, respectively, to the right from the diagonal. The states $\mid {101}\rangle_{a_{vr}}$![]() , $\mid {110}\rangle_{a_{vr}}$

, $\mid {110}\rangle_{a_{vr}}$![]() and $\mid {111}\rangle_{a_{vr}}$

and $\mid {111}\rangle_{a_{vr}}$![]() correspond to the elements shifted by one, two and three cells, respectively, to the left from the diagonal. Using the notations from (6.24), the meaning of the register $a_{vr}$

correspond to the elements shifted by one, two and three cells, respectively, to the left from the diagonal. Using the notations from (6.24), the meaning of the register $a_{vr}$![]() can be described as

can be described as

To encode the above bitstrings, one can use the circuits shown in figures 8(b) and 8(c).

Also, we introduce the ancilla $a_e$![]() whose zero-state's amplitude will encode the complex value of a given matrix element.

whose zero-state's amplitude will encode the complex value of a given matrix element.

6.6 Constructing the oracle ${U_D}$

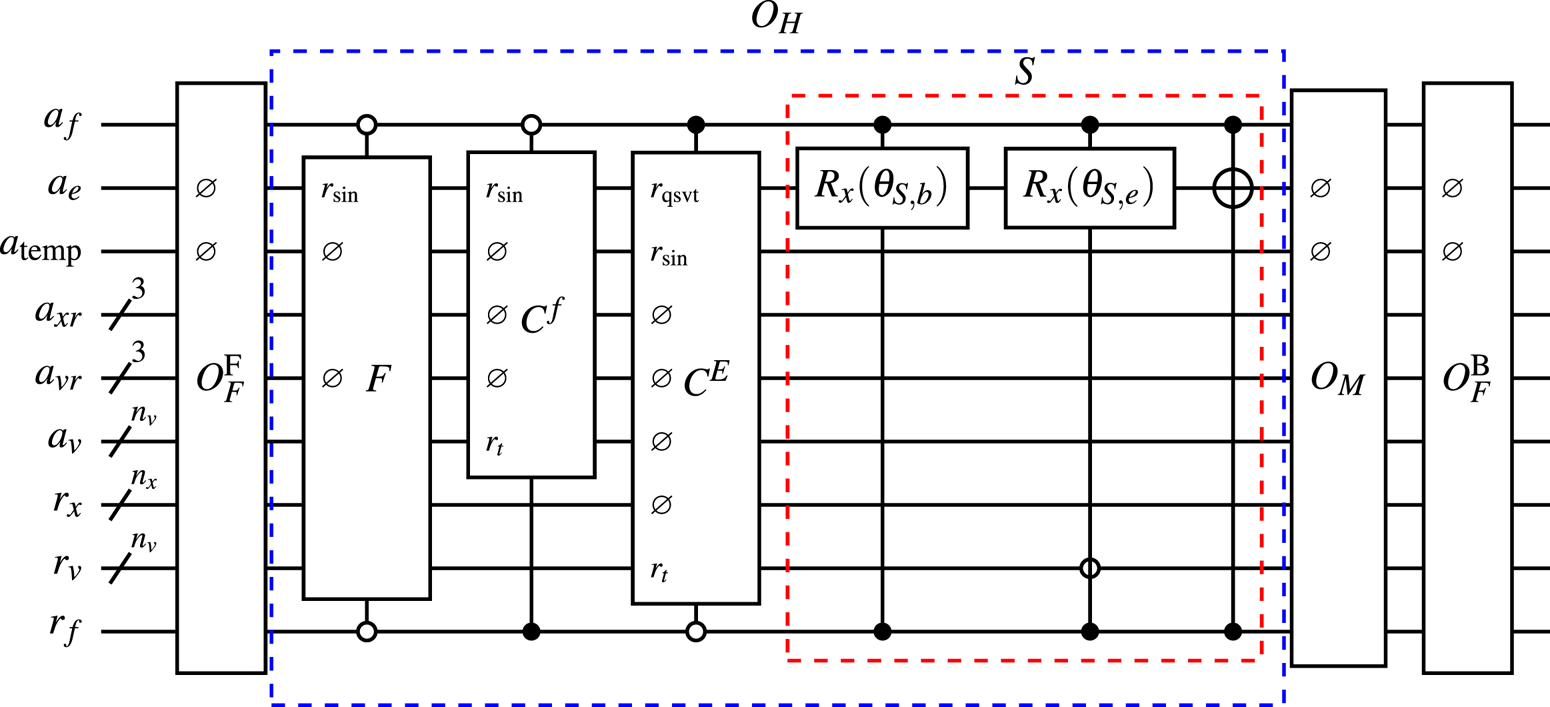

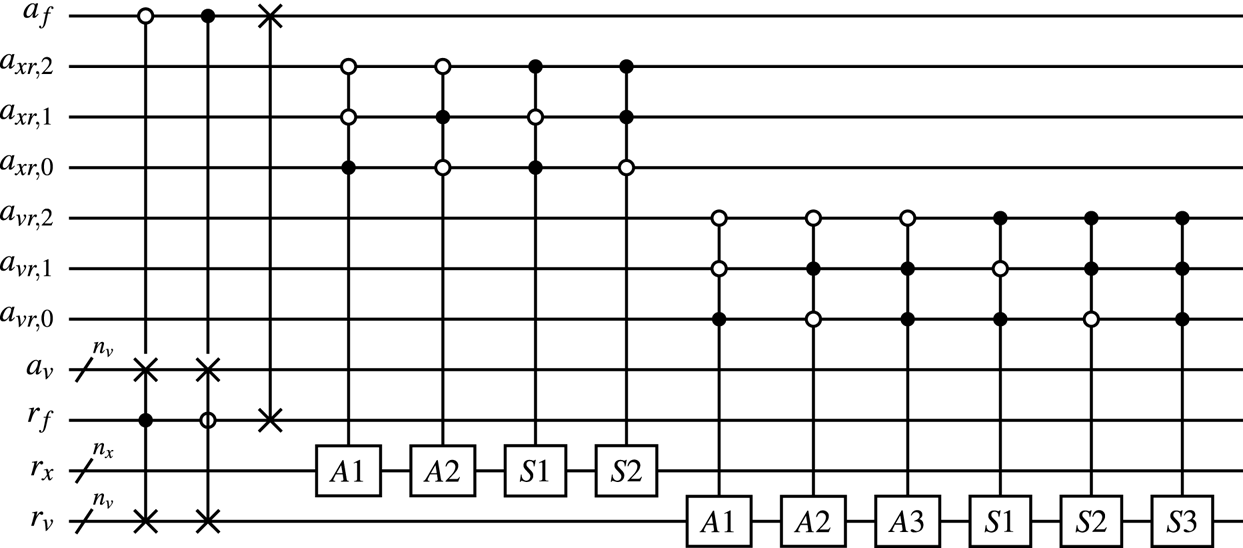

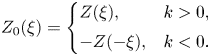

A schematic of the BE oracle $U_A$![]() is shown in figure 9, where the dashed blue box is the oracle $O_H$

is shown in figure 9, where the dashed blue box is the oracle $O_H$![]() described in § 7, and the rest of the boxes are components of the oracle $U_D$

described in § 7, and the rest of the boxes are components of the oracle $U_D$![]() .

.

Figure 9. The circuit representation of the BE oracle $U_A$![]() . The oracle $O_M$

. The oracle $O_M$![]() is shown in figure 11. The oracles $F$

is shown in figure 11. The oracles $F$![]() , $C^f$

, $C^f$![]() , $C^E$

, $C^E$![]() and $S$

and $S$![]() encode the corresponding submatrices introduced in (4.5), and are described in § 7. Here, the symbol $\varnothing$

encode the corresponding submatrices introduced in (4.5), and are described in § 7. Here, the symbol $\varnothing$![]() means that the gate does not use the corresponding qubit. The qubit $r_{\rm sin}$

means that the gate does not use the corresponding qubit. The qubit $r_{\rm sin}$![]() shown in some oracles indicates that the corresponding oracle includes the circuit shown in figure 15. The qubit $r_{\rm qsvt}$

shown in some oracles indicates that the corresponding oracle includes the circuit shown in figure 15. The qubit $r_{\rm qsvt}$![]() indicates that the oracle $C^E$

indicates that the oracle $C^E$![]() is constructed using QSVT. The positions of the qubits $r_f$

is constructed using QSVT. The positions of the qubits $r_f$![]() and $a_f$

and $a_f$![]() are changed for the sake of clarity.

are changed for the sake of clarity.

The oracle $U_D$![]() is built ad hoc manually by considering its suboracles separately. The suboracle $O_F^{F} = O^{F}_{F,{\rm corr}} O_F$

is built ad hoc manually by considering its suboracles separately. The suboracle $O_F^{F} = O^{F}_{F,{\rm corr}} O_F$![]() is constructed based on (6.15), where the ‘in’ register includes the registers $r_v$

is constructed based on (6.15), where the ‘in’ register includes the registers $r_v$![]() , $r_x$

, $r_x$![]() and $r_f$

and $r_f$![]() described in § 4.1. The ancilla registers $a_v$

described in § 4.1. The ancilla registers $a_v$![]() , $a_{vr}$

, $a_{vr}$![]() , $a_{xr}$

, $a_{xr}$![]() and $a_f$

and $a_f$![]() described in § 6.5 represent the $a_c$

described in § 6.5 represent the $a_c$![]() register in (6.15). For instance, the part of the circuit $O_F$

register in (6.15). For instance, the part of the circuit $O_F$![]() encoding the ancilla $a_f$

encoding the ancilla $a_f$![]() (6.23) is shown in figure 10, where the first Hadamard gate creates the address to the submatrices $\boldsymbol {F}$

(6.23) is shown in figure 10, where the first Hadamard gate creates the address to the submatrices $\boldsymbol {F}$![]() and $\boldsymbol {C}^E$

and $\boldsymbol {C}^E$![]() , and the Pauli $X$

, and the Pauli $X$![]() gate generates the address to the elements of the submatrix $\boldsymbol {S}$

gate generates the address to the elements of the submatrix $\boldsymbol {S}$![]() with $i_{vr} > 0$

with $i_{vr} > 0$![]() (using the notation from (6.24)). The last Hadamard gate produces the addresses to $\boldsymbol {C}_f$

(using the notation from (6.24)). The last Hadamard gate produces the addresses to $\boldsymbol {C}_f$![]() and $\boldsymbol {S}$

and $\boldsymbol {S}$![]() at $i_{vr} = 0$

at $i_{vr} = 0$![]() .

.

The implementation of the suboracle $O_F^{B} = O^{\dagger} _F O^{B}_{F,{\rm corr}}$![]() is done based on (6.18). Its correcting part $O^{B}_{F,{\rm corr}}$

is done based on (6.18). Its correcting part $O^{B}_{F,{\rm corr}}$![]() is implemented ad hoc by varying positions and control nodes of STMC gates.

is implemented ad hoc by varying positions and control nodes of STMC gates.

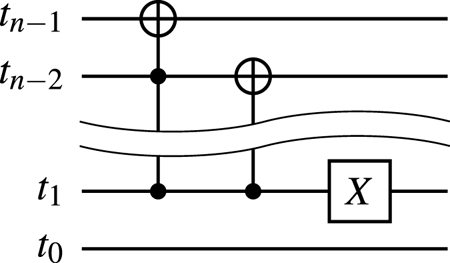

The suboracle $O_M$![]() that performs the mapping (6.17) uses the SWAP gates to exchange the states in the registers $a_f$

that performs the mapping (6.17) uses the SWAP gates to exchange the states in the registers $a_f$![]() and $a_v$

and $a_v$![]() with the states in the registers $r_f$

with the states in the registers $r_f$![]() and $r_v$

and $r_v$![]() , respectively, as shown in figure 11. To encode absolute column indices into the input registers using the states of the registers $a_{xr}$

, respectively, as shown in figure 11. To encode absolute column indices into the input registers using the states of the registers $a_{xr}$![]() and $a_{vr}$

and $a_{vr}$![]() , one should follow the rules (6.25) and (6.27) and apply the quantum adders and subtractors described in Appendix C. The important feature of the oracle $O_M$

, one should follow the rules (6.25) and (6.27) and apply the quantum adders and subtractors described in Appendix C. The important feature of the oracle $O_M$![]() is that the number of arithmetic operators in it does not depend on $N_x$

is that the number of arithmetic operators in it does not depend on $N_x$![]() or $N_v$

or $N_v$![]() . Since the circuits of the arithmetic operators scale as $\mathcal {O}(n_x)$

. Since the circuits of the arithmetic operators scale as $\mathcal {O}(n_x)$![]() or $\mathcal {O}(n_v)$

or $\mathcal {O}(n_v)$![]() depending on the target register of the operator, the scaling of $O_M$

depending on the target register of the operator, the scaling of $O_M$![]() is $\mathcal {O}(n_x + n_v)$

is $\mathcal {O}(n_x + n_v)$![]() . The full circuit of $U_D$

. The full circuit of $U_D$![]() can be found in Novikau (Reference Novikau2024b). The modelling of the circuit is done using the QuCF framework (see Novikau Reference Novikau2024c), which is based on the QuEST toolkit (see Jones et al. Reference Jones, Brown, Bush and Benjamin2019).

can be found in Novikau (Reference Novikau2024b). The modelling of the circuit is done using the QuCF framework (see Novikau Reference Novikau2024c), which is based on the QuEST toolkit (see Jones et al. Reference Jones, Brown, Bush and Benjamin2019).

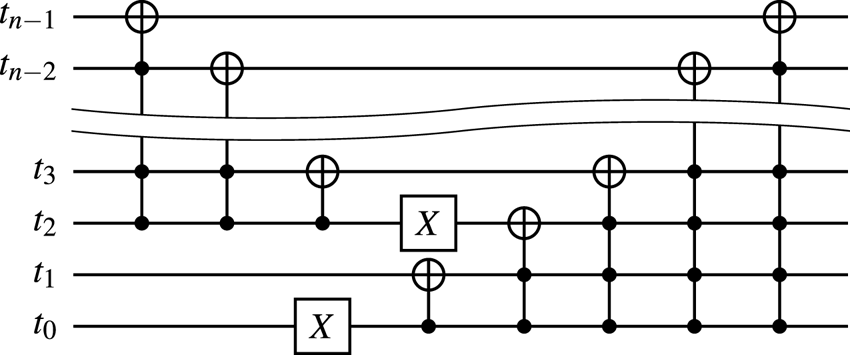

Figure 11. The circuit of the oracle $O_M$![]() . The adders $A1$

. The adders $A1$![]() –$A3$

–$A3$![]() and the subtractors $S1$

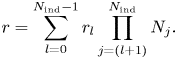

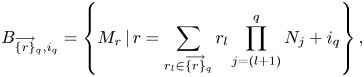

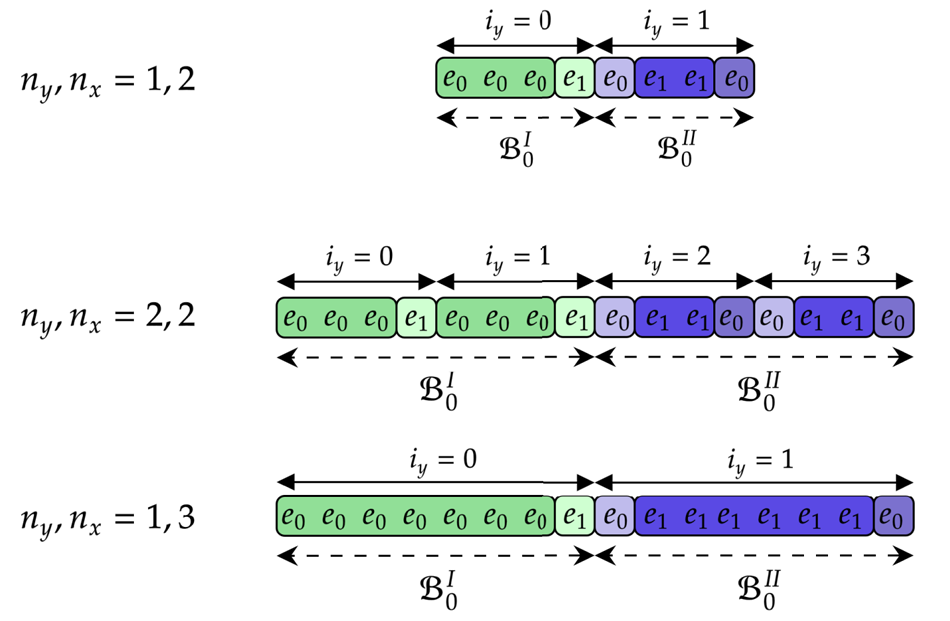

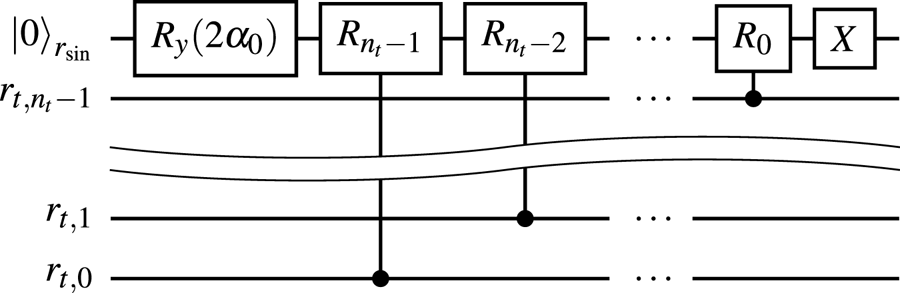

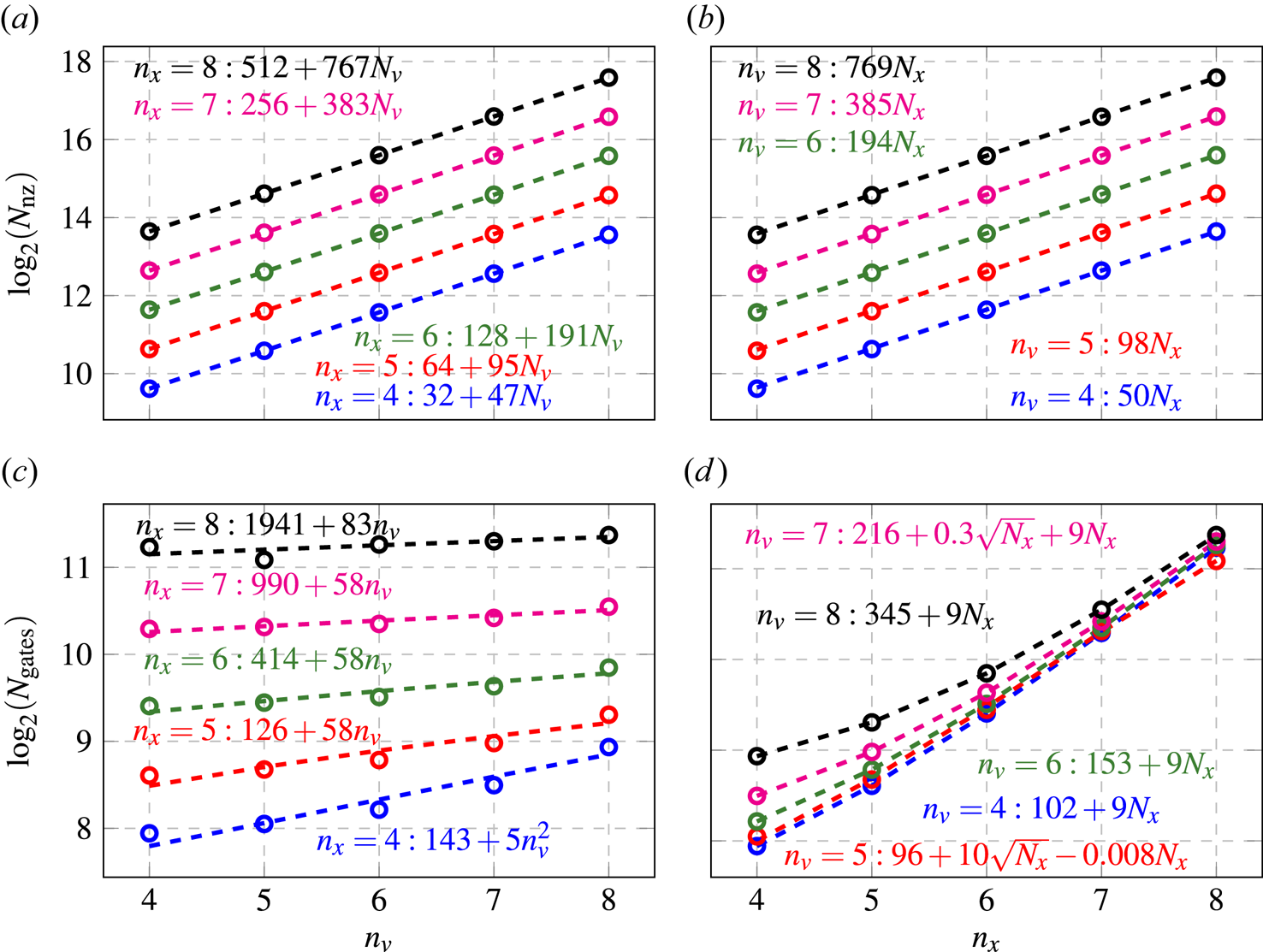

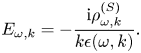

and the subtractors $S1$![]() –$S3$