Bio-inspired variable-stiffness flaps for hybrid flow control, tuned via reinforcement learning

Published online by Cambridge University Press: 05 February 2023

Abstract

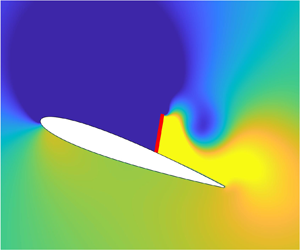

A bio-inspired, passively deployable flap attached to an airfoil by a torsional spring of fixed stiffness can provide significant lift improvements at post-stall angles of attack. In this work, we describe a hybrid active–passive variant of this purely passive flow control paradigm, in which the stiffness of the hinge is actively varied in time to yield passive fluid–structure interaction of greater aerodynamic benefit than in the fixed-stiffness case. This hybrid strategy could potentially be implemented using variable-stiffness actuators at lower cost than actively prescribing the flap motion. The hinge stiffness is varied by a closed-loop feedback controller trained with reinforcement learning. A physics-based penalty and a long–short-term training strategy are introduced to enable fast training of the hybrid controller. The hybrid controller is shown to provide lift improvements as high as 136 % and 85 % with respect to the flapless airfoil and the best fixed-stiffness case, respectively. These lift improvements arise from large-amplitude flap oscillations as the stiffness varies over four orders of magnitude. The interplay of these oscillations with the flow is analysed in detail.
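To make the idea of a hinge whose stiffness is set by feedback more concrete, the following is a minimal sketch and not the solver or controller used in the paper. It assumes the flap angle obeys a damped torsional-oscillator equation, I·β'' + c·β' + k(t)·β = M(t), where the fluid moment M(t) is simply prescribed rather than computed from a flow simulation. The function names, the parameter values and the switching rule `toggle_policy` are all hypothetical; in particular, `toggle_policy` is a hand-written placeholder standing in for a trained reinforcement-learning policy. Only the four-orders-of-magnitude stiffness range is taken from the text above.

```python
import math

# Hypothetical stiffness bounds spanning four orders of magnitude.
K_MIN = 1e-4
K_MAX = 1.0


def simulate_flap(stiffness_policy, moment, inertia=1.0, damping=0.1,
                  dt=1e-3, steps=5000, beta0=0.0, omega0=0.0):
    """Integrate I*beta'' + c*beta' + k*beta = M(t) with semi-implicit Euler.

    stiffness_policy(t, beta, omega) returns the hinge stiffness k at each step,
    so k may depend on time and on the current flap state (closed-loop feedback).
    moment(t) is a prescribed stand-in for the fluid moment on the flap.
    Returns a list of (t, beta, k) tuples.
    """
    beta, omega = beta0, omega0
    history = []
    for n in range(steps):
        t = n * dt
        k = stiffness_policy(t, beta, omega)
        accel = (moment(t) - damping * omega - k * beta) / inertia
        omega += accel * dt   # update angular velocity first
        beta += omega * dt    # then the angle, using the new velocity
        history.append((t, beta, k))
    return history


def toggle_policy(t, beta, omega):
    """Placeholder feedback rule (NOT a trained policy): stiff while the flap
    opens (omega > 0), soft otherwise."""
    return K_MAX if omega > 0.0 else K_MIN


if __name__ == "__main__":
    forcing = lambda t: 0.5 * math.sin(t)  # hypothetical periodic moment
    fixed = simulate_flap(lambda t, b, w: K_MAX, forcing)
    hybrid = simulate_flap(toggle_policy, forcing)
    print("max |beta|, fixed stiffness:   ", max(abs(b) for _, b, _ in fixed))
    print("max |beta|, variable stiffness:", max(abs(b) for _, b, _ in hybrid))
```

In a reinforcement-learning setting, the role of `toggle_policy` would be played by a learned mapping from sensor observations to a stiffness value, and the prescribed moment would be replaced by the moment returned by a coupled flow solver.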

- Type: JFM Rapids
- Copyright: © The Author(s), 2023. Published by Cambridge University Press
