1. Introduction

In this article we develop and apply an adjoint-based approach for controlling incompressible two-phase flows with sharp interfaces governed by the Stokes equations. The main model we consider is that of

\begin{equation} \left.\begin{array}{c@{}} \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm \\ \boldsymbol{\nabla} \boldsymbol{\cdot} \sigma_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm \end{array}\right\}, \end{equation}

where a discontinuity in traction forms at the interface due to a (possibly non-constant) surface tension.

While a significant simplification of many practical flows, this model shares important features with more complex multiphase flows, such as discontinuous flow quantities (here pressure and velocity gradients) and the presence of higher-order surface properties such as curvature. Understanding these features and their effect on optimization problems permits optimization of more realistic fluids at higher Reynolds numbers. Common extensions to this model are important to many engineering and industrial applications, such as the interactions of water and oil in reservoirs (see Pau et al. 2009), air and fuel in combustion engines (see Desjardins, Moureau & Pitsch 2008) and water and air in sprinklers (see Hua et al. 2002). Developing a robust and flexible control and optimization framework will enable improved efficiency in these systems.

The optimal control of single-phase fluid problems has been extensively studied, starting with the pioneering work of Pironneau (1974) and Jameson (1988) in the context of aerodynamic design. However, there are only a few contributions to two-phase flows. Extending single-phase results to two-phase flows has generally been difficult due to the inclusion of the geometry as a problem unknown and the ensuing discontinuities in flow quantities. In the sharp interface limit, the fluid–fluid interface acts similarly to a domain boundary and, thus, the optimal control of two-phase flows shares many features with shape optimization. Therefore, the shape calculus for shape optimization developed in Delfour & Zolésio (2001), and later extended to time-dependent domains in Moubachir & Zolésio (2006), plays a central role. To efficiently perform optimal control of partial-differential-equation-constrained systems, the preferred approach is generally the adjoint method based on Lagrange multiplier theory. A major downside of the method is that it is purely formal, in that it assumes the required differentiability of the problem variables, so care must be taken when applying it directly to problems with discontinuous solutions. In the case of incompressible two-phase flows with a sharp interface, a commonly used formulation is the so-called one-fluid model (see Prosperetti & Tryggvason 2009). In this model, the velocity and pressure are considered as variables over the whole domain, e.g.

\begin{equation} \left.\begin{array}{c@{}} \boldsymbol{u} \triangleq \alpha \boldsymbol{u}_+ + (1 - \alpha) \boldsymbol{u}_-, \\ p\triangleq \alpha p_+ + (1 - \alpha) p_-, \end{array}\right\} \end{equation}

where $\alpha$ is an indicator function. However, recent work on similar systems with discontinuous coefficients, such as Pantz (2005) for a heat equation and Allaire, Jouve & van Goethem (2011) for linear elasticity, has shown that the bulk variables are not differentiable with respect to the domain, i.e. not shape differentiable. The present manuscript considers the restrictions $(\boldsymbol{u}_\pm, p_\pm)$ separately and develops an adjoint method for the coupled system, instead of a single-equation system like the one-fluid model.

The novelty of our contribution lies in extending and verifying the methodology proposed by Pantz (2005) to models similar to the above surface tension-driven two-phase flow. In particular, we derive the adjoint equations and adjoint-based gradient for static and quasi-static two-phase Stokes systems, which have been successfully used in many studies of droplet stability and breakup (see Stone & Leal 1989; Pozrikidis 1990; Hou, Lowengrub & Shelley 1994; Stone 1994; Lac & Homsy 2007; Ojala & Tornberg 2015) and provide a practical set-up for advancing the control of multiphase flows. Furthermore, the use of shape calculus allows for deriving an adjoint-based gradient independent of the representation of the interface. From the continuous adjoint perspective, the smoothness of the resulting flow and control parameters is also very important. For example, smooth solutions to the Stokes problem require a smooth curvature, but this is not a priori guaranteed by the optimization procedure. For the forward problem, several time-varying interfacial models have been recently studied in Pruss & Simonett (2016) and shown to be well-posed, paving the way to similar results for the adjoint problem. The smoothness requirement on the interface and curvature also poses problems numerically. For example, the widely used volume of fluid (known as VOF) method requires substantial effort to provide an accurate representation of the curvature (see Popinet 2009). Our numerical discretization relies on boundary element methods (see Pozrikidis 1992) to provide accurate representations of all quantities of interest. In particular, since the interface is expressed explicitly, we can compute accurate geometric quantities. The use of boundary element methods in shape optimization problems also has a long history, as found in classic monographs such as Aliabadi (2002) and in recent applications from Alouges, Desimone & Heltai (2011), Bonnet, Liu & Veerapaneni (2020) and others.

The current work can also provide a starting point for the adjoint-based optimization of higher Reynolds number multiphase flows governed by the incompressible Navier–Stokes equations. However, the flow nonlinearity and interface breakup will require changes to the formulation. Nonetheless, the present work can be viewed as a verification formulation as well as a predictive approach for droplets in the Stokes regime. Note that optimal control of multiphase Navier–Stokes systems has been approached in the past with various models. For example, in Feppon et al. (2019) the authors derive a coupled thermal fluid-structure model and in Deng, Liu & Wu (2017) and Garcke et al. (2018) a phase-field model was used to describe the interface. Phase-field models have seen the most development in this area, due in part to the fact that solutions are rapidly varying but continuous and allow for a standard approach through adjoint-based methods. In contrast, the current method requires the more complex shape calculus to handle the sharp discontinuities explicitly, but the resulting gradients are not rapidly varying and the optimization is shown to be well behaved. Other multiphase applications include the optimal control of a two-phase Stefan problem with level set methods in Bernauer & Herzog (2011) and of free-surface Stokes problems in Repke, Marheineke & Pinnau (2011), Palacios, Alonso & Jameson (2012) and Sellier (2016). To the authors’ knowledge, no previous work has incorporated sharp interfaces and surface tension in a fluid optimal control problem.

The paper is organized as follows. We begin by introducing the required notation, fluid model and notions of boundary perturbations in § 2. In § 3, we define the optimization problems of interest and derive the adjoint equations and the gradients required for the optimization using gradient descent-type methods. The discretization details are presented in § 4. The discretization and numerical results are focused on a single droplet under axisymmetric assumptions. The presented results are then numerically verified in § 5 for several test problems. Finally, concluding remarks are given in § 6.

2. Preliminaries

2.1. Two-phase Stokes system

We are interested in the quasi-static evolution of multiple clean droplets in an incompressible flow. The droplets experience no phase change, but are driven by a constant surface tension at the fluid–fluid interface. Such a system is governed by the two-phase Stokes equations, which have been described in detail in classic monographs (see Ladyzhenskaya 1963; Pozrikidis 1992; Temam 2001). The notation and main auxiliary results used below are presented in appendix A.

We consider a three-dimensional domain $\varOmega_- \subset \mathbb{R}^d$, $d = 3$, and its complement $\varOmega_+ = \mathbb{R}^d \setminus \bar{\varOmega}_-$ to denote the union of the droplets’ interiors and the ambient fluid, respectively. The boundary between the two domains is $\varSigma \triangleq \bar{\varOmega}_- \cap \bar{\varOmega}_+$. In the following, we assume $\varSigma$ is a finite set of disjoint, closed, bounded and orientable surfaces. Furthermore, we require that $\varSigma$ is of class $\mathcal{C}^2$ to allow for the definition of a unique curvature at every point.

The droplets and the ambient fluid have dynamic viscosities $\mu_-$ and $\mu_+$, respectively. The flow in each phase is described by the velocity fields $\boldsymbol{u}_\pm : \varOmega_\pm \to \mathbb{R}^d$ and the pressures $p_\pm : \varOmega_\pm \to \mathbb{R}$. We also define the Cauchy stress tensor and the rate-of-strain tensor by

\begin{equation} \left.\begin{array}{c@{}} \sigma_\pm \triangleq -p_\pm {\boldsymbol{\mathsf{I}}} + 2 \mu_\pm \varepsilon_\pm, \\ \varepsilon_\pm \triangleq \tfrac{1}{2} (\boldsymbol{\nabla} \boldsymbol{u}_\pm + (\boldsymbol{\nabla} \boldsymbol{u}_\pm)^T). \end{array}\right\} \end{equation}

Following these definitions, the static incompressible two-phase Stokes equations are given by

\begin{equation} \left.\begin{array}{c@{}} \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm, \\ \boldsymbol{\nabla} \boldsymbol{\cdot} \sigma_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm, \\ \boldsymbol{u}_+ = \boldsymbol{u}^\infty, \quad \|\boldsymbol{x}\| \to \infty. \end{array}\right\} \end{equation}

The boundary conditions at the interface between the fluids are given as a set of jump conditions on $\varSigma$. Namely, the velocity must satisfy a no-slip condition and the surface stresses are related to the additive curvature of the interface by the Young–Laplace law. We write

\begin{equation} \left.\begin{array}{c@{}} {[\![ \boldsymbol{u} ]\!]} = 0, \\ {[\![\, \boldsymbol{f} ]\!]} = \gamma \kappa \boldsymbol{n},\end{array}\right\} \end{equation}


where $\boldsymbol{f}_\pm \triangleq \boldsymbol{n} \boldsymbol{\cdot} \sigma_\pm$ is the surface traction, $\gamma$ is the surface tension coefficient and $\boldsymbol{n}$ is the exterior normal vector to $\varOmega_-$. The jump operator is defined as $[\![\, f ]\!] \triangleq f_+(\boldsymbol{x}_+) - f_-(\boldsymbol{x}_-)$, where

It is convenient to non-dimensionalize the equations to reduce the number of parameters in the problem. We choose the following non-dimensionalizations, common in the literature:

where $U$ is a characteristic velocity magnitude and $R$ is a characteristic droplet radius. The resulting non-dimensional parameters governing the systems are the viscosity ratio $\lambda$ and the capillary number $\textit{Ca}$, defined as

In the remainder, we will continue by using the non-dimensional equations. We will write the non-dimensional stress jump condition (2.3) as follows:

In order to evolve the shape of the droplets in time, we use a quasi-static approach in which we first compute the velocity using (2.2) and then displace the interface using an ordinary differential equation. The evolution of the interface is described by

where $\boldsymbol{X}$ is a parameterization of the interface and $\boldsymbol{V}$ is a given source term. Typical examples of the source term are

where $\boldsymbol{w}$ can be chosen to be $\boldsymbol{0}$, $\boldsymbol{u}$ or a different tangential correction to $\boldsymbol{u}$. The choice of $\boldsymbol{w}$ is left open, since only the normal component of the velocity field governs the deformation of the interface. The tangential component can be artificially imposed for numerical reasons, e.g. to cluster points in regions of high curvature; for example, see the works of Hou et al. (1994) and Ojala & Tornberg (2015).
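The split of the source term into a normal part fixed by the flow and a free tangential part can be illustrated with a short sketch. It assumes the form $\boldsymbol{V} = (\boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n}) \boldsymbol{n} + ({\boldsymbol{\mathsf{I}}} - \boldsymbol{n} \otimes \boldsymbol{n}) \boldsymbol{w}$, which is consistent with the choices of $\boldsymbol{w}$ listed above but is an assumption rather than a formula taken from the text. The helper names `interface_velocity` and `euler_step`, the forward Euler update and the sample values are likewise hypothetical.

```python
def dot(a, b):
    """Euclidean inner product of two vectors given as lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def interface_velocity(u, n, w):
    """Assumed source term V = (u.n) n + (I - n n^T) w at one interface point.

    u: fluid velocity at the point, n: unit normal, w: tangential correction.
    """
    un = dot(u, n)
    wn = dot(w, n)
    # Normal part comes from u only; w contributes its tangential projection.
    return [un * ni + (wi - wn * ni) for ni, wi in zip(n, w)]


def euler_step(X, V, dt):
    """One forward Euler step of dX/dt = V for a list of interface points."""
    return [[xi + dt * vi for xi, vi in zip(x, v)] for x, v in zip(X, V)]


# Hypothetical sample data: a point with unit normal along z.
n = [0.0, 0.0, 1.0]
u = [0.3, -0.2, 0.5]

V_normal = interface_velocity(u, n, [0.0, 0.0, 0.0])  # w = 0
V_full = interface_velocity(u, n, u)                  # w = u recovers V = u
V_other = interface_velocity(u, n, [1.0, 2.0, 7.0])   # arbitrary w

X_new = euler_step([[0.0, 0.0, 1.0]], [V_normal], dt=0.1)
```

In this sketch all three choices of $\boldsymbol{w}$ give the same normal component $\boldsymbol{V} \boldsymbol{\cdot} \boldsymbol{n} = \boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n}$, so they move the points differently along the interface without changing its shape to first order.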

2.2. Shape derivatives

Interfacial control in multiphase flows with sharp interfaces is inherently a form of shape optimization. With this in mind, we will review some of the concepts behind shape optimization and perturbations with respect to the domain. We consider here Hadamard's method for boundary variations, which has been detailed rigorously in works such as Moubachir & Zolésio (2006), Allaire & Schoenauer (2007), Delfour & Zolésio (2001) and, from the view of differential geometry, in Walker (2015).

The method is based on defining so-called perturbations of the identity by

where $\mathrm{Id}$ is the identity mapping, $\tilde{\boldsymbol{X}} \in W^{2, \infty}(\mathbb{R}^d; \mathbb{R}^d)$ is a perturbation and $\epsilon > 0$ is a real constant. If $\epsilon$ is sufficiently small, then $\boldsymbol{X}_\epsilon$ is a diffeomorphism on $\mathbb{R}^d$ (Allaire & Schoenauer 2007, lemma 6.13). As such, by Hadamard's method we can identify every admissible shape $\varOmega_\epsilon$ with a perturbation $\boldsymbol{X}_\epsilon$. Therefore, the method allows, among other things, defining differentiation of shapes by differentiation in the Banach space $W^{2, \infty}(\mathbb{R}^d; \mathbb{R}^d)$ in terms of well-known concepts, such as the Gâteaux and Fréchet derivatives.

In fact, using this simple definition, we can define the Fréchet derivatives of relevant functionals of the type

\begin{equation} \left.\begin{array}{c@{}} \displaystyle\mathcal{J}_1(\varOmega) \triangleq \int_{\varOmega} f(\boldsymbol{x}; \varOmega)\, \textrm{d} V, \\ \displaystyle\mathcal{J}_2(\varSigma) \triangleq \int_{\varSigma} f(\boldsymbol{x}; \varOmega) \,{\rm d}S, \\ \displaystyle\mathcal{J}_3(\varSigma) \triangleq \int_{\varSigma} g(\boldsymbol{x}; \varSigma) \,{\rm d}S. \end{array}\right\} \end{equation}

We refer to Allaire & Schoenauer (2007) and Walker (2015) for proofs of these results and additional shape derivatives of quantities of interest, such as the normal and the additive curvature. The lemma below reproduces the main derivatives of functionals used in the current work.

Lemma 2.1 (Walker 2015, lemma 5.7)

Let $\tilde{\boldsymbol{X}} \in W^{2, \infty}(\mathbb{R}^d; \mathbb{R}^d)$ be a sufficiently small perturbation, $\varOmega \subset \mathbb{R}^d$ and $\varSigma \subset \mathbb{R}^d$ a $d - 1$ manifold without boundary. Then, we have that the shape derivatives of the functionals (2.11) are

\begin{equation} \left.\begin{array}{c@{}} \displaystyle D \mathcal{J}_1[\tilde{\boldsymbol{X}}] = \int_\varOmega f'[\tilde{\boldsymbol{X}}] + \int_{\partial \varOmega} f \tilde{\boldsymbol{X}} \boldsymbol{\cdot} \boldsymbol{n} \, {\rm d} S, \\ \displaystyle D \mathcal{J}_2[\tilde{\boldsymbol{X}}] = \int_\varSigma f'[\tilde{\boldsymbol{X}}] + \int_{\varSigma} (\boldsymbol{\nabla} f \boldsymbol{\cdot} \boldsymbol{n} + \kappa f) \tilde{\boldsymbol{X}} \boldsymbol{\cdot} \boldsymbol{n} \, {\rm d} S, \\ \displaystyle D \mathcal{J}_3[\tilde{\boldsymbol{X}}] = \int_\varSigma g'[\tilde{\boldsymbol{X}}] + \int_{\varSigma} \kappa g \tilde{\boldsymbol{X}} \boldsymbol{\cdot} \boldsymbol{n} \, {\rm d} S. \end{array}\right\} \end{equation}

Extensions to moving domains $\varOmega(t)$ and $\varSigma(t)$ are presented in Moubachir & Zolésio (2006). An important result in shape optimization is the Hadamard–Zolésio structure theorem from Delfour & Zolésio (2001, theorem 3.6, chapter 9), which guarantees that we can always express the first-order shape derivative of functionals (2.11) as boundary integrals that only depend on the normal component of $\tilde{\boldsymbol{X}}$. Restricting the shape gradient to the boundary of a domain $\varOmega$ is of special interest to us, since it greatly simplifies the numerical aspects. In particular, it allows restricting the whole problem to a problem on the interface $\varSigma$ between the droplets and the surrounding fluid.
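As a sanity check of lemma 2.1, the first and third formulas can be compared against finite differences on a sphere, where everything is known in closed form. The sketch below takes $f = g = 1$, so that $f' = g' = 0$, $\mathcal{J}_1$ is the volume and $\mathcal{J}_3$ is the surface area, and uses a perturbation with $\tilde{\boldsymbol{X}} \boldsymbol{\cdot} \boldsymbol{n} = 1$ on $\varSigma$, i.e. a uniform change in radius. It assumes the sign convention in which a sphere of radius $r$ with exterior normal has additive curvature $\kappa = 2 / r$; the function names and the value of $r$ are illustrative only.

```python
import math


def sphere_volume(r):
    """J_1 with f = 1: volume of a ball of radius r."""
    return 4.0 / 3.0 * math.pi * r**3


def sphere_area(r):
    """J_3 with g = 1: surface area of a sphere of radius r."""
    return 4.0 * math.pi * r**2


def central_difference(func, r, eps=1e-5):
    """Second-order finite difference in the radius (perturbation X~ . n = 1)."""
    return (func(r + eps) - func(r - eps)) / (2.0 * eps)


r = 1.3
kappa = 2.0 / r  # additive curvature of the sphere (assumed sign convention)

# Boundary-integral expressions from the lemma with X~ . n = 1 on the sphere.
dJ1_lemma = sphere_area(r)          # int_Sigma f (X~ . n) dS with f = 1
dJ3_lemma = kappa * sphere_area(r)  # int_Sigma kappa g (X~ . n) dS with g = 1

# Finite-difference approximations of the same derivatives.
dJ1_fd = central_difference(sphere_volume, r)
dJ3_fd = central_difference(sphere_area, r)

print(abs(dJ1_lemma - dJ1_fd), abs(dJ3_lemma - dJ3_fd))
```

Both differences should be at the level of the finite-difference error, which illustrates that only the normal component of the perturbation enters the derivative.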

Finally, we will informally consider the shape differentiability of surface functionals that depend on the problem variables $p_\pm$, $\boldsymbol{u}_\pm$ and $\boldsymbol{X}$. The main issue, also discussed in Pantz (2005) and Allaire et al. (2011), is that $\boldsymbol{u}$ as a function on $\varOmega$ is not shape differentiable, but $\boldsymbol{u}_\pm$ as restrictions to $\varOmega_\pm$ are shape differentiable.

Recall that, for $\textit{Ca} < \infty$, there is always a non-zero jump in pressure

and the following jumps in the components of the velocity gradient

where $\boldsymbol{t}^\alpha$, for $\alpha \in \{1, \ldots, d - 1\}$, is an orthonormal basis for the tangent space to $\varSigma$. Similar jump relations can be derived for the rate-of-strain tensor. With this in mind, we consider the general functional

We can see from lemma 2.1 that the integrand must be differentiable up to the boundary to allow defining $\boldsymbol{\nabla} j \boldsymbol{\cdot} \boldsymbol{n}$. In the following, we will restrict to differentiable functionals of the type

i.e. that only depend on the normal component of the velocity field and the surface parameterization, both of which have continuous normal gradients. In the case of volume integrals, we would not need further restrictions on the cost functionals we consider.

3. Optimization problem

We now define two constrained optimization problems that we will attempt to characterize. In particular, we will use the Lagrange multiplier theory to determine adjoint equations and derive an expression for first-order variations of the cost functionals of interest.
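The mechanics of an adjoint-based gradient can be seen on a small finite-dimensional analogue. The sketch below is not the two-phase Stokes problem: it assumes a hypothetical linear constraint $A y - c\, b = 0$ with a scalar control $c$ and a tracking cost $\tfrac{1}{2} \|y - y_d\|^2$, and all names and values are made up for illustration. With the Lagrangian $\mathcal{L} = \tfrac{1}{2} \|y - y_d\|^2 + z^T (A y - c\, b)$, stationarity with respect to $y$ gives the adjoint equation $A^T z = -(y - y_d)$, and the gradient with respect to the control is $-z^T b$.

```python
def solve_2x2(M, rhs):
    """Solve a 2x2 linear system M x = rhs by Cramer's rule."""
    (m11, m12), (m21, m22) = M
    det = m11 * m22 - m12 * m21
    return [(m22 * rhs[0] - m12 * rhs[1]) / det,
            (m11 * rhs[1] - m21 * rhs[0]) / det]


def transpose(M):
    """Transpose of a 2x2 matrix stored as nested lists."""
    return [[M[0][0], M[1][0]], [M[0][1], M[1][1]]]


# Hypothetical problem data.
A = [[2.0, 1.0], [0.5, 3.0]]
b = [1.0, -1.0]
y_d = [0.4, 0.1]


def state(c):
    """State equation: solve A y = c b for the state y."""
    return solve_2x2(A, [c * b[0], c * b[1]])


def cost(c):
    """Reduced cost: tracking functional evaluated at the state y(c)."""
    y = state(c)
    return 0.5 * sum((yi - ydi) ** 2 for yi, ydi in zip(y, y_d))


def adjoint_gradient(c):
    """Gradient of the reduced cost via one adjoint solve."""
    y = state(c)
    # Adjoint equation: A^T z = -(y - y_d).
    z = solve_2x2(transpose(A), [-(yi - ydi) for yi, ydi in zip(y, y_d)])
    # Derivative of the Lagrangian with respect to the control c.
    return -sum(zi * bi for zi, bi in zip(z, b))


c0 = 0.7
eps = 1e-6
fd_gradient = (cost(c0 + eps) - cost(c0 - eps)) / (2.0 * eps)

print(adjoint_gradient(c0), fd_gradient)
```

The adjoint gradient costs one extra linear solve regardless of the number of control variables, which is the main reason the approach is preferred over finite differences when the control is a whole interface.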

Firstly, we define a classic shape optimization problem

\[ \left.\begin{array}{c@{}} \displaystyle \min_{\boldsymbol{X} \in \mathcal{U}_1} \mathscr{J}_1, \\ \mathscr{E}_1(\boldsymbol{u}_k, p_k, \boldsymbol{X}) = 0, \end{array}\right\} \]

where $\mathcal{U}_1$ is the set of all admissible parameterizations of interfaces $\varSigma \subset \mathbb{R}^d$ and $\mathscr{E}_1$ corresponds to the constraints (2.2) and (2.3). In general, we require that the fluid–fluid interfaces remain of class $\mathcal{C}^2$.

Remark 3.1 Note that the parameterizations of a surface $\varSigma$ are not unique. To rigorously define the set $\mathcal{U}_1$, we would be required to define an appropriate notion of equivalence classes (Delfour & Zolésio 2001).

Secondly, we consider the optimization with respect to a parameter of the problem, namely the capillary number $\textit{Ca}$. This problem is defined by

\[ \left.\begin{array}{c@{}} \displaystyle \min_{\textit{Ca} \in \mathcal{U}_2} \mathscr{J}_2, \\ \mathscr{E}_2(\boldsymbol{u}_k, p_k, \boldsymbol{X}, \textit{Ca}) = 0, \end{array}\right\} \]

where $\mathcal{U}_2 \triangleq \mathbb{R}_+$ and $\mathscr{E}_2$ encodes the constraints (2.2), (2.3) and (2.8). At least conceptually, we can see that the second problem is a superset of the first one, since it simply includes an additional constraint. For this reason, we will focus our attention on the first problem, with extensions to the second problem as necessary.

The two optimization problems use tracking-type cost functionals restricted to the interface $\varSigma$. They are

\begin{equation} \left.\begin{array}{c@{}} \displaystyle\mathscr{J}_1(\boldsymbol{X}) \triangleq \mathcal{J}_1(\boldsymbol{X}, \boldsymbol{u}(\boldsymbol{X})) = \tfrac{1}{2} \int_\varSigma (\boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n} - u_d)^2 \, {\rm d} S, \\ \displaystyle\mathscr{J}_2(\textit{Ca}) \triangleq \mathcal{J}_2(\textit{Ca}, \boldsymbol{X}(\textit{Ca})) = \tfrac{1}{2} \int_\varSigma \|\boldsymbol{X}(T) - \boldsymbol{X}_d\|^2 \, {\rm d} S, \end{array}\right\} \end{equation}


where $u_d$ and $\boldsymbol{X}_d$ represent a desired surface normal velocity field and geometry. We differentiate between $\mathscr{J}_k$, which is a function of the control only, and $\mathcal{J}_k$, which is a function of the control and the state variables (as required). The equivalence between the two, through the corresponding constraints $\mathscr{E}_k$, is given by the implicit function theorem under some assumptions. The main theorem that is used in the theory of equality constrained optimization is stated below.

Theorem 3.2 Let $\mathscr{J}: X \to \mathbb{R}$ be a functional and $\mathscr{E}: X \to Z$ be a set of constraints, where both $X$ and $Z$ are Banach spaces. Let $x_0$ be a local extremum of $\mathscr{J}$ subject to the constraints $\mathscr{E}(x) = 0$ and a regular point of $\mathscr{E}$. If both $\mathscr{J}$ and $\mathscr{E}$ are continuously Fréchet differentiable in a neighbourhood $U$ of $x_0$, then there exists a point $z_0^* \in Z^*$, such that the Lagrangian functional

is stationary at $(z^*_0, x_0)$, i.e. the Fréchet derivative satisfies

A proof of the Lagrange multiplier theorem above can be found in Luenberger (1997). For a control problem, we have that the variable $x \triangleq (y, u)$, where $y$ are the state variables and $u$ is the control. In this case, the statement that $(x_0, z^*_0)$ is a stationary point of the Lagrangian $\mathscr{L}$ can be split into the following equations:

\begin{equation} \left.\begin{array}{c@{}} \dfrac{\partial \mathscr{L}}{\partial z^*} = 0 \implies \mathscr{E}(x) = 0, \quad \text{(state equations)} \\ \dfrac{\partial \mathscr{L}}{\partial y} = 0 \implies \left(\dfrac{\partial \mathscr{E}}{\partial y} \right)^*[z^*] = \dfrac{\partial \mathcal{J}}{\partial y}, \quad \text{(adjoint equations)} \\ \dfrac{\partial \mathscr{L}}{\partial u} = 0 \implies \dfrac{\mathrm{d} \mathscr{J}}{\mathrm{d} u} = \dfrac{\partial \mathcal{J}}{\partial u} - \left(\dfrac{\partial \mathscr{E}}{\partial u} \right)^*[z^*] = 0, \quad \text{(optimality condition)} \end{array}\right\} \end{equation}

which are valid for all directions $h$ in the necessary spaces. In the following we will show the specific Lagrangian functionals for our problems of interest and the equations satisfied by the adjoint variables.
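The state/adjoint/optimality split can be illustrated on a finite-dimensional toy problem. The sketch below is not part of the formulation in this paper: it assumes a linear constraint $\mathscr{E}(y, u) = A y - B u = 0$ with hypothetical matrices `A` and `B`, a tracking cost $\tfrac{1}{2}\|y - y_d\|^2$, and the sign convention in which the adjoint equation reads $(\partial \mathscr{E} / \partial y)^*[z^*] = \partial \mathcal{J} / \partial y$. It compares the adjoint gradient with central finite differences.

```python
import numpy as np

def solve_state(A, B, u):
    """State equation E(y, u) = A y - B u = 0."""
    return np.linalg.solve(A, B @ u)

def cost(y, y_d):
    """Tracking-type cost J(y) = 1/2 ||y - y_d||^2."""
    return 0.5 * float(np.sum((y - y_d) ** 2))

def adjoint_gradient(A, B, u, y_d):
    """Gradient of the reduced cost from one state solve and one adjoint solve."""
    y = solve_state(A, B, u)
    # Adjoint equation: (dE/dy)^T z = dJ/dy, i.e. A^T z = y - y_d.
    z = np.linalg.solve(A.T, y - y_d)
    # Optimality condition: the cost has no explicit u-dependence and
    # dE/du = -B, so the reduced gradient is -(dE/du)^T z = B^T z.
    return B.T @ z

def finite_difference_gradient(A, B, u, y_d, eps=1.0e-6):
    """Reference gradient by central differences (two state solves per entry)."""
    g = np.zeros_like(u)
    for i in range(u.size):
        du = np.zeros_like(u)
        du[i] = eps
        jp = cost(solve_state(A, B, u + du), y_d)
        jm = cost(solve_state(A, B, u - du), y_d)
        g[i] = (jp - jm) / (2.0 * eps)
    return g

# Hypothetical toy data: a well-conditioned operator and random control/target.
rng = np.random.default_rng(0)
n, m = 6, 3
A = np.eye(n) + 0.1 * rng.standard_normal((n, n))
B = rng.standard_normal((n, m))
u = rng.standard_normal(m)
y_d = rng.standard_normal(n)

g_adj = adjoint_gradient(A, B, u, y_d)
g_fd = finite_difference_gradient(A, B, u, y_d)
print("max difference:", np.max(np.abs(g_adj - g_fd)))
```

The adjoint gradient needs two linear solves regardless of the number of controls, whereas the finite-difference reference needs two per control entry; this is the usual motivation for the adjoint approach.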

Remark. Rigorous proofs of existence and uniqueness of solutions to (P1) and (P2) are beyond the scope of this work. We refer to the seminal work of Ladyzhenskaya (1963) on smooth domains for results related to the static problem (2.2) and (2.3). Extensions to the unsteady problem can be found in Denisova & Solonnikov (1991) and subsequent work. More recently, such problems have been investigated in Kunisch & Lu (2011) and Pruss & Simonett (2016).

3.1. Static problem

We start by looking at the optimization problem (P1) with the static constraints (2.2) and (2.3). The resulting Lagrangian can be written as

where $\boldsymbol{z}^*$ are the adjoint variables and $\boldsymbol{w}_\pm \triangleq (\boldsymbol{u}_\pm, p_\pm)$ are the state variables on $\varOmega_\pm$, such that $\boldsymbol{w} \triangleq (\boldsymbol{w}_+, \boldsymbol{w}_-)$. Expanding the constraints, we write

\begin{align} \mathscr{L}_1 &\triangleq \mathscr{J}_1 + \int_{\varOmega_+} \boldsymbol{\nabla} \boldsymbol{u}^*_+ \boldsymbol{\cdot} \sigma_+ \, \textrm{d} V - \int_{\varOmega_+} p^*_+ (\boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_+)\, \textrm{d} V + \frac{1}{\textit{Ca}} \int_\varSigma \kappa \langle \boldsymbol{u}^* \rangle \boldsymbol{\cdot} \boldsymbol{n} \, {\rm d} S \nonumber\\ &\quad + \int_{\varOmega_-} \lambda \boldsymbol{\nabla} \boldsymbol{u}^*_- \boldsymbol{\cdot} \sigma_- \, \textrm{d} V - \int_{\varOmega_-} \lambda p^*_- (\boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_-)\, \textrm{d} V \nonumber\\ &\quad + \int_\varSigma \langle \,\boldsymbol{f} \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{u}^* ]\!] \, {\rm d} S + \int_\varSigma \langle \,\boldsymbol{f}^* \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{u} ]\!] \, {\rm d} S, \end{align}


where $\langle \,f \rangle_\lambda$ denotes a weighted average, defined as

The weak form of the Stokes equations, (2.2), can be obtained by integration by parts and then applying the following equality:

Remark 3.3 The main subtlety in our derivation comes from the choice in constructing the Lagrangian. In classic single-phase works, such as Bewley, Moin & Temam (2001) and Wei & Freund (2006), the strong form of the equations is used with an integral over the whole domain. In the context of multiphase flows, such an approach would be possible using the one-fluid model, where $(\boldsymbol{u}, p)$ are considered as bulk variables with the jump conditions carried over as singular source terms. However, such a choice results in an incorrect shape derivative. As discussed in Pantz (2005), the reason for this is that the state variables are not shape differentiable over $\varOmega$.

Therefore, equations with discontinuous coefficients and, in particular, multiphase flows must be treated with care. The correct construction involves considering the restrictions to $\varOmega_\pm$, i.e. $(\boldsymbol{u}_\pm, p_\pm)$ as above, and enforcing values that are well-defined on the interface. For example, an alternative expansion of

would lead to ill-defined results. The choice of a weak form in (3.6) is not formally required, but allows us to directly apply the results from lemma 2.1 and is consistent with the methods used in the shape optimization community, e.g. in Allaire & Schoenauer (2007).

We start by stating the equations satisfied by the adjoint variables in the following result.

Lemma 3.4 At a stationary point of the Lagrangian (3.6), the adjoint variables $\boldsymbol{w}^*_\pm$ satisfy the following equations:

\begin{equation} \left.\begin{array}{c@{}} \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}^*_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm, \\ \boldsymbol{\nabla} \boldsymbol{\cdot} \sigma^*_\pm = 0, \quad \boldsymbol{x} \in \varOmega_\pm, \\ \boldsymbol{u}^*_+ = 0, \quad \|\boldsymbol{x}\| \to \infty, \end{array}\right\} \end{equation}

with the jump conditions

\begin{equation} \left.\begin{array}{c@{}} {[\![ \boldsymbol{u}^* ]\!]} = 0, \\ {[\![\, \boldsymbol{f}^* ]\!]} _\lambda = \boldsymbol{\nabla}_{\boldsymbol{u}} \mathcal{J}_1. \end{array}\right\} \end{equation}

The adjoint Cauchy stress tensor, rate-of-strain tensor and traction vector are in complete analogy to the definitions of the primal Stokes problem given in § 2. For the cost functional (3.1), we have that
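As a brief worked check, assuming that $u_d$ is prescribed data independent of the state and that the gradient is identified through the $L^2(\varSigma)$ inner product, differentiating $\mathcal{J}_1$ from (3.1) in the direction of a velocity perturbation $\tilde{\boldsymbol{u}}$ gives

\begin{equation} \dfrac{\partial \mathcal{J}_1}{\partial \boldsymbol{u}} [\tilde{\boldsymbol{u}}] = \int_\varSigma (\boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n} - u_d) \, \boldsymbol{n} \boldsymbol{\cdot} \tilde{\boldsymbol{u}} \, {\rm d} S \quad \implies \quad \boldsymbol{\nabla}_{\boldsymbol{u}} \mathcal{J}_1 = (\boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n} - u_d) \boldsymbol{n}, \end{equation}

so that, under these assumptions, the jump in the adjoint traction is directed along the normal and is proportional to the local tracking error.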

Proof. The adjoint equations are easily obtained by differentiating the Lagrangian with respect to the state variables $\boldsymbol{w}_\pm$. We have that

\begin{align} \dfrac{\partial \mathscr{L}_1}{\partial \boldsymbol{w}} [\tilde{\boldsymbol{w}}] &= \dfrac{\partial \mathcal{J}_1}{\partial \boldsymbol{w}} [\tilde{\boldsymbol{w}}] + \int_{\varOmega_+} \boldsymbol{\nabla} \boldsymbol{u}^*_+ \boldsymbol{\cdot} \tilde{\sigma}_+ \, \textrm{d} V - \int_{\varOmega_+} p^*_+ (\boldsymbol{\nabla} \boldsymbol{\cdot} \tilde{\boldsymbol{u}}_+)\, \textrm{d} V \nonumber\\ &\quad + \int_{\varOmega_-} \lambda \boldsymbol{\nabla} \boldsymbol{u}^*_- \boldsymbol{\cdot} \tilde{\sigma}_- \, \textrm{d} V - \int_{\varOmega_-} \lambda p^*_- (\boldsymbol{\nabla} \boldsymbol{\cdot} \tilde{\boldsymbol{u}}_-)\, \textrm{d} V + \int_\varSigma \langle \,\tilde{\boldsymbol{f}} \rangle_\lambda \boldsymbol{\cdot} [\![ \boldsymbol{u}^* ]\!] \, {\rm d} S. \end{align}

For $k \in \{+, -\}$, we have that

by algebraic manipulations. This is the weak formulation of the adjoint equations from lemma 3.4 with test functions $(\tilde{\boldsymbol{u}}_\pm, \tilde{p}_\pm)$.

We are now in a position to give the definition of the shape derivative of the cost functional from (P1). It is stated as follows.

Theorem 3.5 We assume that $(\boldsymbol{u}_\pm, p_\pm)$ and $\mathscr{J}_1$ are shape differentiable. Then, the shape gradient of the cost functional (P1) is

where

\begin{equation} \left.\begin{array}{c@{}} \mathcal{C}^*[\boldsymbol{w}^*; \boldsymbol{X}] \triangleq \dfrac{1}{\textit{Ca}} ( \kappa^*[\boldsymbol{u}^* \boldsymbol{\cdot} \boldsymbol{n}] + n^*[\kappa \boldsymbol{u}^* \boldsymbol{\cdot} \boldsymbol{t}] + \kappa^2 \boldsymbol{u}^* \boldsymbol{\cdot} \boldsymbol{n} + \kappa (\boldsymbol{n} \boldsymbol{\cdot} \boldsymbol{\nabla} \boldsymbol{u}^*) \boldsymbol{\cdot} \boldsymbol{n} ), \\ \mathcal{S}^*[\boldsymbol{w}^*; \boldsymbol{X}, \boldsymbol{w}] \triangleq \langle \,\boldsymbol{f} \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{n} \boldsymbol{\cdot} \boldsymbol{\nabla} \boldsymbol{u}^* ]\!] + \langle \,\boldsymbol{f}^* \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{n} \boldsymbol{\cdot} \boldsymbol{\nabla} \boldsymbol{u} ]\!] - 2 [\![ \varepsilon^* \boldsymbol{\cdot} \varepsilon ]\!] _\lambda. \end{array}\right\} \end{equation}

For the cost functional (3.1), we have that

where $j \triangleq \boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{n} - u_d$. The operators $\kappa^*$ and $n^*$ correspond to the adjoint operators of the Eulerian shape derivative of the additive curvature and normal vector, respectively. They are defined in appendix B.

Proof. A detailed derivation of the shape gradient is given in appendix C. We note that both the derivation of the shape gradient in theorem 3.5 and the adjoint equations in lemma 3.4 are purely formal; they assume that the state variables have shape derivatives of the required regularity.

3.2. Quasi-static problem

The extension from (P1) to the unsteady problem (P2) is straightforward from the point of view of the Lagrangian formalism. However, introducing the unsteady element complicates the theoretical analysis of the problem, since it is not clear if the solution will maintain the required smoothness. Similar issues appear at a numerical level, as we will see in subsequent sections.

Furthermore, the shape derivative formalism from § 2.2 needs to be extended. Intuitively, we now want to perturb the time-dependent domains $\varOmega_\pm(t)$. In the $(d + 1)$-dimensional $(t, \boldsymbol{x})$ space, one can think of a fixed domain $\varOmega$ as a cylinder, while a moving domain $\varOmega(t)$ will be a general tubular manifold. Domain perturbations of this kind were made rigorous in Moubachir & Zolésio (2006), to which we refer for additional details.

As before, the Lagrangian functional associated with (P2) is given by

or, explicitly,

\begin{align} \mathscr{L}_2 &\triangleq \mathscr{J}_2 + \int_0^T \int_{\varOmega_+(t)} \boldsymbol{\nabla} \boldsymbol{u}^*_+ \boldsymbol{\cdot} \sigma_+ \, \textrm{d} V \, \textrm{d} t - \int_0^T \int_{\varOmega_+(t)} p^*_+ (\boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_+)\, \textrm{d} V \, \textrm{d} t \nonumber\\ &\quad + \int_0^T \int_{\varOmega_-(t)} \lambda \boldsymbol{\nabla} \boldsymbol{u}^*_- \boldsymbol{\cdot} \sigma_- \, \textrm{d} V \, \textrm{d} t - \int_0^T \int_{\varOmega_-(t)} \lambda p^*_- (\boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}_-)\, \textrm{d} V \, \textrm{d} t \nonumber\\ &\quad + \frac{1}{\textit{Ca}} \int_0^T \int_{\varSigma(t)} \kappa \boldsymbol{u}^* \boldsymbol{\cdot} \boldsymbol{n} \, {\rm d} S \, \textrm{d} t \nonumber\\ &\quad + \int_0^T \int_{\varSigma(t)} \langle \,\boldsymbol{f} \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{u}^* ]\!] \, {\rm d} S \, \textrm{d} t + \int_0^T \int_{\varSigma(t)} \langle \,\boldsymbol{f}^* \rangle _\lambda \boldsymbol{\cdot} [\![ \boldsymbol{u} ]\!] \, {\rm d} S \, \textrm{d} t \nonumber\\ &\quad - \int_0^T \int_{\varSigma(t)} \boldsymbol{X}^* \boldsymbol{\cdot} (\boldsymbol{X}_t - \boldsymbol{V}(\boldsymbol{u}, \boldsymbol{X})) \,{\rm d}S \, \textrm{d} t \nonumber\\ & \quad -\int_{\varSigma(0)} \boldsymbol{X}^*_0 \boldsymbol{\cdot} (\boldsymbol{X}(0) - \boldsymbol{X}_0) \,{\rm d}S. \end{align}

Lemma 3.6 We assume that $\boldsymbol{w}_\pm$ and $\mathscr{J}_2$ are shape differentiable. Furthermore, the source term $\boldsymbol{V}$ in (2.8) is also assumed to be shape differentiable. Then, at a stationary point of the Lagrangian (3.19), the adjoint variables $\boldsymbol{w}^*_\pm$ satisfy the Stokes equations (2.2) with the jump conditions

\begin{equation} \left.\begin{array}{c@{}} {[\![ \boldsymbol{u}^* ]\!]} = 0, \\ \displaystyle {[\![\, \boldsymbol{f}^* ]\!]} _\lambda = \boldsymbol{\nabla} _{\boldsymbol{u}} \mathcal{J}_2 + \left(\dfrac{\partial \boldsymbol{V}}{\partial \boldsymbol{u}} \right)^*[\boldsymbol{X}^*], \end{array}\right\} \end{equation}


where $\boldsymbol{X}^*$ is given by lemma 3.7. In the case of the cost functional from (3.1), we have that

Also, using the forcing term described in (2.9), we have that

Proof. The proof is completely equivalent to that of lemma 3.4.

Lemma 3.7 Under the assumptions of lemma 3.6, we have that at a stationary point of the Lagrangian (3.19), the adjoint variable $\boldsymbol{X}^*$ satisfies the following evolution equation:

\begin{equation} \left.\begin{array}{c@{}} \begin{aligned} -\boldsymbol{X}^*_t & = \{\boldsymbol{\nabla} _{\boldsymbol{X}} \mathcal{J}_2 + (\boldsymbol{\nabla} _\varSigma \boldsymbol{\cdot} \boldsymbol{V}) (\boldsymbol{X}^* \boldsymbol{\cdot} \boldsymbol{n}) + \mathcal{V}^*[\boldsymbol{X}^*; \boldsymbol{X}, \boldsymbol{w}] \\ & \quad + \mathcal{C}^*[\boldsymbol{w}^*; \boldsymbol{X}] + \mathcal{S}^*[\boldsymbol{w}^*; \boldsymbol{X}, \boldsymbol{w}]\} \boldsymbol{n}, \end{aligned}\\ \boldsymbol{X}^*(T) = \boldsymbol{\nabla} _{\boldsymbol{X}(T)} \mathcal{J}_2, \end{array}\right\} \end{equation}


where $\mathcal{C}^*$ and $\mathcal{S}^*$ are defined in theorem 3.5 and $\mathcal{V}^*$ is the adjoint operator corresponding to (2.8). In the case of the cost functional (3.1), we have that

Similarly, for the choice of forcing term described in (2.9), we have that

Proof. The derivation of the above evolution equation is presented in appendix C. Determining the shape gradient of the cost functional (3.1) is a direct application of lemma 2.1.

Theorem 3.8 The gradient of the cost functional for (P2) is given by

Proof. The proof of theorem 3.8 follows from the definition of the Lagrangian given in (3.19). Note that the gradient is simply a classic derivative in $\mathbb{R}$ in this case.

This completes the statement of the two optimization problems we have proposed. In both cases, we have access to first-order variations that can be used as part of gradient descent algorithms to perform the optimization.
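To make the use of first-order information concrete, the following sketch runs gradient descent on a scalar model problem. It is only loosely analogous to (P2) and is not the method of this paper: the ODE $x' = -c x$ stands in for the interface evolution, the scalar `c` plays the role of the control, the cost tracks the final state, and the gradient comes from the discrete adjoint of a forward Euler scheme. The step size, initial guess and target are arbitrary choices.

```python
import math

def forward(c, x0=1.0, T=1.0, n_steps=200):
    """Forward Euler for x' = -c x; returns the trajectory and the time step."""
    dt = T / n_steps
    xs = [x0]
    for _ in range(n_steps):
        xs.append(xs[-1] * (1.0 - dt * c))
    return xs, dt

def cost(c, x_d):
    """Tracking cost on the final state, J(c) = 1/2 (x(T) - x_d)^2."""
    xs, _ = forward(c)
    return 0.5 * (xs[-1] - x_d) ** 2

def gradient(c, x_d):
    """Discrete adjoint of the Euler scheme: one forward and one backward sweep."""
    xs, dt = forward(c)
    lam = xs[-1] - x_d                  # terminal condition for the adjoint
    grad = 0.0
    for n in range(len(xs) - 2, -1, -1):
        grad += lam * (-dt * xs[n])     # explicit dependence of step n on c
        lam *= 1.0 - dt * c             # propagate the adjoint backwards in time
    return grad

x_d = math.exp(-2.0)    # target final state, reachable with c close to 2
c, step = 0.5, 5.0      # initial guess and fixed step size
history = [cost(c, x_d)]
for _ in range(50):
    c -= step * gradient(c, x_d)
    history.append(cost(c, x_d))
print(f"c = {c:.4f}, cost = {history[-1]:.3e}")
```

As in lemma 3.7, the adjoint variable is initialized from the cost at the final time and integrated backwards, so the forward trajectory has to be stored or recomputed.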

4. Numerical methods

In this section, we will detail the numerical methods used to solve the Stokes system (2.2) and (2.3) and the adjoint Stokes system described in lemma 3.4. Since the Stokes operator is self-adjoint, the adjoint system has the same form as the forward one, and a single solver suffices for both. We will also look into discretizations of the shape gradient in theorem 3.5, the interface evolution equation (2.8) and the corresponding adjoint evolution equation from lemma 3.7. The numerical methods described here have been implemented in an open-source code that can be found in Fikl (2020).

4.1. Boundary integral equations

An efficient method of solving the Stokes equation is based on boundary integral equations (Pozrikidis 1992). In our case, this is particularly useful because the entire problem reduces to the interface if the cost functional is expressed on $\varSigma$ alone, as is the case for the choices presented in (3.1).

Boundary integral methods are based on Green's function fundamental solutions to the Stokes problem. Using the fundamental solutions as kernels in a linear integral operator, there are many possible representations of the two-phase Stokes problem. A common representation is based on the Lorentz reciprocal theorem and has been used in studies of interfacial dynamics (see Pozrikidis 1990; Stone 1994; Lac & Homsy 2007). However, this representation is less suitable for our case, since the pressure and stress kernels are hypersingular. Hypersingular integrals are generally harder to analyse and accurately evaluate numerically.

A second representation for two-phase flows with a stress discontinuity has been discussed in Pozrikidis (1990) and is described in detail in Pozrikidis (1992, chapter 5.3). It is a so-called single-layer potential representation, with the velocity field described by, for $\boldsymbol{x} \notin \varSigma$,

where $\boldsymbol{q}: \varSigma \to \mathbb{R}^d$ and ${\mathsf{G}}_{ij}$ are usually referred to as the density and the Stokeslet (4.4), respectively. This representation is complemented by definitions of the pressure and stress, for $\boldsymbol{x} \notin \varSigma$,

where both have been appropriately non-dimensionalized, as described in § 2.1. The usual summation convention over repeated indices has been used in the definitions. The fundamental solutions for the three main variables in Stokes flow are given by

\begin{equation} \left.\begin{array}{c@{}} p_i(\boldsymbol{r}) \triangleq 2\dfrac{r_i}{r^3}, \\ {\mathsf{G}}_{ij}(\boldsymbol{r}) \triangleq \dfrac{\delta_{ij}}{r} + \dfrac{r_i r_j}{r^3}, \\ {\mathsf{T}}_{ijk}(\boldsymbol{r}) \triangleq -6 \dfrac{r_i r_j r_k}{r^5}, \end{array}\right\} \end{equation}

for $i, j, k \in \{1, \ldots, d\}$, where $\boldsymbol{r} \triangleq \boldsymbol{x} - \boldsymbol{y}$ and $r \triangleq \|\boldsymbol{r}\|$. To solve for the density we use the provided boundary and jump conditions, which, however, require limits as $\boldsymbol{x} \to \varSigma$ of the layer potentials above. These limits are generally not trivial, since the kernels in question are all singular at $\varSigma$. The jumps in the velocity, pressure and traction are well known and given in Pozrikidis (1992, § 4.1). Using the surface limits of the traction and the jump (2.3), we obtain the following equation (Pozrikidis 1992, (5.3.9)):

For non-homogeneous far-field boundary conditions, additional terms can be added, as described in Pozrikidis (1992).
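For reference, the free-space kernels that enter the layer potentials of this section can be evaluated pointwise as in the sketch below. It assumes the standard three-dimensional forms, with $r^3$ in the denominators of the pressure kernel and of the dyadic part of the Stokeslet and $r^5$ in the stresslet; the function name `stokes_kernels` is hypothetical and unrelated to the authors' code.

```python
import numpy as np

def stokes_kernels(x, y):
    """Free-space pressure, Stokeslet and stresslet kernels in three dimensions."""
    r_vec = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    r = np.linalg.norm(r_vec)
    if r == 0.0:
        raise ValueError("kernels are singular at x == y")
    p = 2.0 * r_vec / r**3                                      # p_i
    G = np.eye(3) / r + np.outer(r_vec, r_vec) / r**3           # G_ij
    T = -6.0 * np.einsum("i,j,k->ijk", r_vec, r_vec, r_vec) / r**5  # T_ijk
    return p, G, T

target = [1.0, 2.0, 0.5]
source = [0.0, 0.0, 0.0]
p, G, T = stokes_kernels(target, source)
r = np.linalg.norm(np.subtract(target, source))
print("r * trace(G) =", np.trace(G) * r)
```

Two quick sanity checks follow from these formulas: the Stokeslet is symmetric with trace $4 / r$, and contracting the first two indices of the stresslet gives $-3 p_k$.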

Equation (4.5) is a Fredholm integral equation of the second kind. The linear operator acting on the density $\boldsymbol{q}$ is generally well-conditioned. In fact, it matches the conditioning of the underlying physical problem, which is well-conditioned for $0 < \lambda < \infty$. This allows for efficient solution by classic iterative linear solvers such as GMRES.
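The benign conditioning of second-kind equations can be seen on a one-dimensional model. The sketch below is an assumption-laden illustration rather than a discretization of (4.5): it uses a Nyström method with Gauss-Legendre quadrature on $[0, 1]$, a smooth model kernel $K(x, y) = x y$ in place of the singular Stokes kernels, and a right-hand side chosen so that $q(x) = x$ is the exact solution. A dense solve is used for brevity; a Krylov method such as GMRES would take its place in a matrix-free setting.

```python
import numpy as np

def nystrom_system(n):
    """Discretize q(x) + int_0^1 x y q(y) dy = f(x) with n Gauss-Legendre nodes."""
    t, w = np.polynomial.legendre.leggauss(n)   # nodes and weights on [-1, 1]
    x = 0.5 * (t + 1.0)                         # map the nodes to [0, 1]
    w = 0.5 * w                                 # rescale the weights accordingly
    K = np.outer(x, x)                          # model kernel K(x, y) = x y
    A = np.eye(n) + K * w[np.newaxis, :]        # identity plus discretized integral
    f = 4.0 * x / 3.0                           # chosen so that q(x) = x solves it
    return x, A, f

for n in (8, 32, 128):
    x, A, f = nystrom_system(n)
    q = np.linalg.solve(A, f)
    err = np.max(np.abs(q - x))
    print(f"n = {n:4d}  cond(A) = {np.linalg.cond(A):.3f}  max error = {err:.2e}")
```

The condition number stays bounded as the mesh is refined, which is the property that keeps iteration counts of Krylov solvers roughly independent of the resolution for equations of this type.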

The layer potentials presented so far are sufficient to solve the forward Stokes equations (2.2), (2.3) and (2.8). However, in the case of the adjoint equations, we further require knowledge of the normal and tangential velocity gradients. They can be obtained by differentiating the velocity (4.1) and are given, for $\boldsymbol{x} \notin \varSigma$, by

The kernel for the velocity gradient is given by

The surface limits of the new layer-potential operators are not standard, but they can be easily derived from the main variables and the definition of the stress tensor (2.1).

Theorem 4.1 The jump conditions for the normal and tangential velocity gradient layer potentials, defined in (4.6)–(4.7), are given by

\begin{equation} \left.\begin{array}{c@{}} \displaystyle\lim_{\epsilon \to 0^+} \mathcal{U}_i[\boldsymbol{q}](\boldsymbol{x} \pm \epsilon \boldsymbol{n}) = \pm 4 {\rm \pi}(q_i - (q_j n_j) n_i) + \mathrm{p.v.} \int_\varSigma n_k(\boldsymbol{x}) {\mathsf{U}}_{ijk}(\boldsymbol{x}, \boldsymbol{y}) q_j(\,\boldsymbol{y}) \,{\rm d}S, \\ \displaystyle\lim_{\epsilon \to 0^+} \mathcal{V}^\alpha_i[\boldsymbol{q}](\boldsymbol{x} \pm \epsilon \boldsymbol{n}) = \mathrm{p.v.} \int_\varSigma t^\alpha_k(\boldsymbol{x}) {\mathsf{U}}_{ijk}(\boldsymbol{x}, \boldsymbol{y}) q_j(\,\boldsymbol{y}) \,{\rm d}S, \end{array}\right\} \end{equation}


where $\mathrm {p.v.}$ denotes the Cauchy principal value interpretation of the singular integral.

We must also obtain formulae for the stress and strain-rate tensors that appear in theorem 3.5. The stress tensor can most easily be obtained algebraically from the traction, the pressure and the components of the velocity gradient from (4.6) and (4.7), by means of (2.1). Then, the strain-rate tensor can be directly obtained from (2.1). Explicit formulae for the stress components in both two and three dimensions are given in Aliabadi (2002, § 2.5.1).

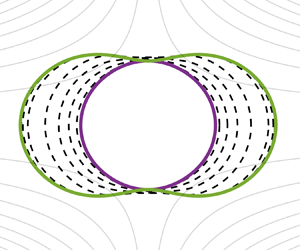

Finally, for the numerical implementation, we will make an axisymmetric assumption on the problem set-up, with coordinates $\boldsymbol {x} = (x, \rho , \phi )$ (see figure 1). With this assumption, we must also change the meaning of the fundamental solutions we have used thus far. For example, in the three-dimensional case, ${\mathsf{G}}_{ij}$ represents the interactions between a source point $\boldsymbol {y}$ and a target point $\boldsymbol {x}$ of strength $\boldsymbol {q}$. However, in the axisymmetric setting, we must consider the interactions between a target point $\boldsymbol {x}$ and a ring of source points $\boldsymbol {y}$ for fixed $(x_0, \rho _0)$. This is achieved by analytically integrating the kernels in the azimuthal direction $\phi$, with the aid of elliptic integrals. Several axisymmetric kernels have been derived in Pozrikidis (1992). We give in appendix D a short description of all the kernels required in this work.
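To illustrate the azimuthal integration, the following minimal sketch treats only the simplest building block, the scalar kernel $1/|\boldsymbol{x} - \boldsymbol{y}|$, and not the Stokes kernels of appendix D. For a target at $(x, \rho, 0)$ and a ring of sources at $(x_0, \rho_0, \phi)$, the integral over $\phi$ reduces to $4 K(m) / \sqrt{(x - x_0)^2 + (\rho + \rho_0)^2}$, with $m = 4 \rho \rho_0 / ((x - x_0)^2 + (\rho + \rho_0)^2)$ and $K$ the complete elliptic integral of the first kind. The function names are hypothetical, and $K$ is evaluated here by the arithmetic-geometric mean, which is an assumption of this sketch rather than the method used in this work.

```python
import numpy as np

def ellipk_agm(m, tol=1e-15):
    """Complete elliptic integral of the first kind K(m), with parameter m = k^2,
    using K(m) = pi / (2 * AGM(1, sqrt(1 - m)))."""
    a, b = 1.0, np.sqrt(1.0 - m)
    for _ in range(60):  # the AGM iteration converges quadratically
        if abs(a - b) <= tol * a:
            break
        a, b = 0.5 * (a + b), np.sqrt(a * b)
    return np.pi / (2.0 * a)

def ring_inverse_distance(x, rho, x0, rho0):
    """Closed-form azimuthal integral of 1/|x - y| over the source ring (x0, rho0).
    The surface Jacobian rho0 is not included."""
    s = (x - x0) ** 2 + (rho + rho0) ** 2
    m = 4.0 * rho * rho0 / s
    return 4.0 * ellipk_agm(m) / np.sqrt(s)

def ring_inverse_distance_quadrature(x, rho, x0, rho0, n=2000):
    """Reference value: periodic trapezoidal rule in the azimuthal angle."""
    phi = 2.0 * np.pi * np.arange(n) / n
    r = np.sqrt((x - x0) ** 2 + rho ** 2 + rho0 ** 2
                - 2.0 * rho * rho0 * np.cos(phi))
    return 2.0 * np.pi / n * np.sum(1.0 / r)
```

The closed form is only valid away from the ring itself, since $K(m)$ diverges logarithmically as $m \to 1$, which is the origin of the $\log$-type singularity of the axisymmetric kernels.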

Figure 1. Droplet in axisymmetric coordinates $(x, \rho , \phi )$.

4.2. Boundary element methods

The numerical discretization of the layer potentials from § 4.1 has been performed using a standard collocation method (also known as a boundary element method), similar to the methods described in Kress (1989, chapter 13.3). We will only present the details for a single droplet, as seen in figure 1. In an axisymmetric setting, all the integrals over the surface $\varSigma$ can be reduced to integrals over the top half-plane and a one-dimensional interface $\varGamma \triangleq \{\boldsymbol {x} \in \varSigma : \phi = 0\}$. We refer to Kress (1989), Aliabadi (2002) or Pozrikidis (1992) for additional details on the construction of one-dimensional collocation methods and only briefly describe the chosen notation for this work.

We define a uniform one-dimensional computational grid with parameter $\xi \in [0, 1/2]$ discretized into $M$ elements $\varGamma _m \triangleq [\xi _m, \xi _{m + 1})$, for $m \in \{0, \ldots , M\}$. On each element $\varGamma _m$, we define a set of $N + 1$ uniformly distributed collocation points $\xi _{m, n}$, for a total of $N_c \triangleq (M \times N) + 1$ unique points. For a high-order finite element type discretization, we use the standard nodal Lagrange basis functions $\phi _n$. Finally, we use the standard Gauss–Legendre quadrature rule on regular elements and specialized methods for elements containing singularities.
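The grid described above can be sketched as follows. This is a minimal illustration, not the implementation used in this work: the function names are hypothetical, elements are indexed from $0$ to $M - 1$ in the code, and the Lagrange basis is evaluated on a reference element $[0, 1]$, which is an assumption made here for simplicity.

```python
import numpy as np

def collocation_grid(M, N):
    """Uniform grid on xi in [0, 1/2] with M elements and N + 1 uniformly
    spaced collocation points per element (shared endpoints are duplicated)."""
    edges = np.linspace(0.0, 0.5, M + 1)
    local = np.linspace(0.0, 1.0, N + 1)
    # xi[m, n] is the n-th collocation point of element m
    xi = edges[:-1, None] + (edges[1:, None] - edges[:-1, None]) * local[None, :]
    # drop the repeated right endpoints, then append the final point
    unique = np.concatenate([xi[:, :-1].ravel(), xi[-1:, -1]])
    return xi, unique

def lagrange_basis(nodes, t):
    """Nodal Lagrange basis on the given nodes, evaluated at the points t.
    Returns an array phi with phi[n, i] = phi_n(t[i])."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    phi = np.ones((len(nodes), t.size))
    for n, xn in enumerate(nodes):
        for j, xj in enumerate(nodes):
            if j != n:
                phi[n] *= (t - xj) / (xn - xj)
    return phi
```

For example, `collocation_grid(4, 3)` stores `4 * (3 + 1)` element-local points, of which `4 * 3 + 1` are unique, matching the count $N_c = (M \times N) + 1$.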

4.3. Singularity handling and singular quadrature rules

The main difficulty in implementing a boundary element method lies in the handling of the singularities for each kernel. As seen in the previous sections, the adjoint problem requires all the quantities present in the Stokes problem, with several different singularity types. The surface limit of the velocity layer potential (4.1) is weakly singular, with a $\log$-type singularity, and the remaining layer potentials, e.g. (4.3), are defined by means of the Cauchy principal value and are strongly singular, with an $r^{-1}$-type singularity.

There are multiple methods used to handle singular integrals, such as changes of variables, singularity subtraction, regularization or generalized quadrature rules (see Aliabadi 2002, chapter 11). We focus on making use of generalized quadrature rules and analytical techniques for accurate numerical integration. For all singularity types we rely on an element subdivision method, as seen in figure 2.

Figure 2. (a) Collocation points (black circles) on two adjacent elements and (b) element subdivisions and quadrature points (grey circles) for a singularity at point $\boldsymbol {x}_i$.

This method proceeds by isolating the element containing the currently evaluated target point $\boldsymbol {x}_i$ and splitting it into two elements with specially constructed quadrature rules on each. This reduces the problem to that of endpoint singularities and minimizes the number of required quadrature rules. This is important because, in the case of generalized quadrature rules, their construction depends on the location of the singularity, e.g. Carley (2006) and Kolm & Rokhlin (2001). There are two special cases that need to be handled: at element endpoints, the two existing adjacent elements serve as the subdivision, and at the two poles ($\rho = 0$), only the interior element is used, for a one-sided evaluation.

4.3.1. Singularity subtraction

The pressure kernel (4.2), the traction kernel (4.3) and the normal velocity gradient kernel (4.6) can all be handled by the method of singularity subtraction. The singularity subtraction for the traction is described in Pozrikidis (1990). In the case of the pressure kernel, we make use of the well-known identities

where $\varepsilon _{ijk}$ is the Levi-Civita symbol forming the cross product. We can express the pressure layer potential (4.2), for $\boldsymbol {x} \in \varSigma$, as

The new kernel $\tilde {p}_j$ is given by

The axisymmetric form of this kernel can be found in appendix D. Finally, the layer-potential representation of the normal velocity gradient (4.6) can be desingularized by noting that

and using the formulae derived for the pressure and traction layer potentials. Since the integrals are no longer singular, we use the standard Gauss–Legendre quadrature rules on each subdivision. The subdivisions are still required in this case, even though the singularity is removed, because the resulting integrand is generally not smooth (only continuous) and can degrade the accuracy of the solution.
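The need for subdivisions on continuous but non-smooth integrands can be seen in a small sketch. The integrand $|\xi - s|$ below is a hypothetical stand-in with a kink at the target point $s$, not one of the desingularized kernels, and the function names are likewise illustrative.

```python
import numpy as np

def gauss_legendre(f, a, b, order):
    """Gauss-Legendre quadrature of f on [a, b] with the given number of nodes."""
    t, w = np.polynomial.legendre.leggauss(order)
    x = 0.5 * (b - a) * t + 0.5 * (b + a)  # map nodes from [-1, 1] to [a, b]
    return 0.5 * (b - a) * np.sum(w * f(x))

def gauss_legendre_subdivided(f, a, b, s, order):
    """Split [a, b] at the target point s and integrate each part separately."""
    return gauss_legendre(f, a, s, order) + gauss_legendre(f, s, b, order)

s = 0.3
kinked = lambda x: np.abs(x - s)       # continuous, but not differentiable at s
exact = 0.5 * s ** 2 + 0.5 * (1.0 - s) ** 2

error_whole = abs(gauss_legendre(kinked, 0.0, 1.0, 4) - exact)
error_split = abs(gauss_legendre_subdivided(kinked, 0.0, 1.0, s, 4) - exact)
```

With the split at $s$, each sub-integrand is a polynomial and the rule is exact up to rounding, whereas the rule applied to the whole element loses its high-order convergence at the kink.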

4.3.2. Weakly singular integrals

For the weakly singular integrals in our problem, such as the velocity single-layer potential representation (4.1), we make use of the known Alpert quadrature rules. Alpert quadrature rules are a set of corrected trapezoidal rules that achieve high accuracy for $\log$ singularities. These quadrature rules can be constructed for endpoint singularities, as described in Alpert (1999), and require no additional changes to the kernels.

4.3.3. Strongly singular integrals

The remaining layer potential representation that requires special handling is that of the tangential velocity gradient, (4.7). This kernel is strongly singular, and no singularity subtraction is known for it. Therefore, we require a set of generalized quadrature rules for Cauchy principal value integrals. We have made use of the quadrature rules described in Carley (2006), based on the work of Kolm & Rokhlin (2001).

However, we cannot make use of the subdivision method in this case. Applying the quadrature rules relies on a change of variables from the reference element $[-1, 1]$ to the surface element, which cannot be naively applied for strongly singular integrals with endpoint singularities (Monegato 1994). Instead, we construct unique quadrature rules for each interior collocation point in the reference element. As before, the element endpoints are handled by considering the union of the adjacent elements with a singularity at the origin $\xi = 0$. The two poles need to be handled separately, since they are by definition one-sided and do not allow a change of variables. Therefore, we are forced to construct quadrature rules directly on the elements $\varGamma _1$ and $\varGamma _M$. This is done by extending the results from Carley (2006) to arbitrary $[a, b]$ intervals. Unlike the previous cases, we require $N+1$ unique quadrature rules to handle the tangential velocity gradient layer potential (4.7).

4.4. Geometry representation

The geometry is represented spectrally by Fourier modes, for high accuracy. Together with the discretization of the layer potentials from § 4.2, the result is a superparametric collocation method.

To compute the tangent vector, the normal vector and the curvature, we make use of the fast Fourier transform. The fast Fourier transform is computed on the uniform grid formed by the collocation points, to avoid issues with non-uniform spacing. Since we only have access to the top half-plane, we mirror the geometry to form a closed curve on which the Fourier transform can be directly applied. Finally, all the quantities are interpolated to the required quadrature points using the Fourier coefficients computed at the uniform collocation points.
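The mirroring and spectral differentiation can be sketched as below. This is a minimal illustration under stated assumptions: the function names are hypothetical, the curve is sampled at uniform $\xi \in [0, 1/2]$ with both poles included, the curvature computed is the planar curvature of the generating curve only (not the full curvature of the axisymmetric surface), and the final interpolation to quadrature points is omitted.

```python
import numpy as np

def mirror_half_curve(x, rho):
    """Reflect the top half-plane curve about the axis rho = 0 to form a closed
    curve. Both poles are kept once, so the result has 2 * (len(x) - 1) points."""
    x_full = np.concatenate([x, x[-2:0:-1]])
    rho_full = np.concatenate([rho, -rho[-2:0:-1]])
    return x_full, rho_full

def spectral_derivative(f):
    """Derivative with respect to xi of a function with period 1, sampled uniformly."""
    n = f.size
    ik = 2j * np.pi * np.fft.fftfreq(n, d=1.0 / n)
    if n % 2 == 0:
        ik[n // 2] = 0.0  # drop the Nyquist mode for an odd-order derivative
    return np.real(np.fft.ifft(ik * np.fft.fft(f)))

def curve_geometry(x, rho):
    """Unit tangent, unit normal and planar curvature at the original points."""
    xf, rf = mirror_half_curve(x, rho)
    dx, dr = spectral_derivative(xf), spectral_derivative(rf)
    ddx, ddr = spectral_derivative(dx), spectral_derivative(dr)
    speed = np.sqrt(dx ** 2 + dr ** 2)
    tangent = np.stack([dx, dr]) / speed
    normal = np.stack([dr, -dx]) / speed  # outward for counter-clockwise curves
    kappa = (dx * ddr - dr * ddx) / speed ** 3
    n_half = x.size
    return tangent[:, :n_half], normal[:, :n_half], kappa[:n_half]
```

For a half circle of radius $R$, sampled as $x = R \cos(2 {\rm \pi} \xi)$ and $\rho = R \sin(2 {\rm \pi} \xi)$, this sketch should recover a constant curvature $1/R$ up to rounding, since the mirrored curve contains a single Fourier mode.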

4.5. Evolution equation

The interface evolution (2.8) is integrated using the classic third-order strong-stability-preserving Runge–Kutta method