1. Introduction

The concept of almost convergence was first introduced by Lorentz [Reference Lorentz6] in 1948 to study divergent sequences, namely to understand what happens when all the Banach limits of a sequence are equal. In [Reference Lorentz6], Lorentz defined almost convergence by the equality of all the Banach limits and showed that this definition is equivalent to the one given below. Lorentz [Reference Lorentz6] also studied the relationship between almost convergence (in other words, summation by the method F) and matrix methods, and found that most of the commonly used matrix methods contain the method F. We refer to [Reference Lorentz6] for details.

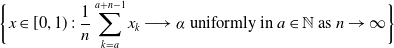

Definition 1.1.

A bounded sequence $ \{x_{k}\}_{k=1}^{\infty} $ is called almost convergent to a number $ t\in\mathbb{R} $ if

\begin{align*} \frac{1}{n}\sum_{k=a}^{a+n-1}x_{k}\longrightarrow t\end{align*}

uniformly in $ a\in \mathbb{N} $ as $ n\rightarrow\infty $. We write this as $ \{x_{k}\}_{k=1}^{\infty}\in \mathbf{AC}(t) $.
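Definition 1.1 concerns all windows at once, so no finite computation can verify it. A truncated check still makes the uniformity in $a$ concrete. The Python sketch below computes the worst deviation of a length-$n$ window average from $t$ over all windows inside a finite prefix. The names `window_sums`, `window_deviation` and `sparse_blocks` are our own and hypothetical.

The example sequence has ones exactly at $2^{i}\leq k<2^{i}+i$. Its Cesàro means tend to 0, but it contains windows of every length that consist only of ones, so it is not almost convergent.

```python
from fractions import Fraction


def window_sums(x, n):
    """Sums x_a + ... + x_{a+n-1} for every window of length n inside the list x
    (x[0] plays the role of x_1)."""
    s = sum(x[:n])
    sums = [s]
    for a in range(1, len(x) - n + 1):
        s += x[a + n - 1] - x[a - 1]  # slide the window one step to the right
        sums.append(s)
    return sums


def window_deviation(x, t, n):
    """max over windows a of |(1/n) * window sum - t|, computed exactly."""
    sums = window_sums(x, n)
    # |s/n - t| is convex in s, so the maximum is attained at the smallest or largest sum.
    return max(abs(Fraction(min(sums), n) - t), abs(Fraction(max(sums), n) - t))


def sparse_blocks(length):
    """Hypothetical example: x_k = 1 exactly when 2^i <= k < 2^i + i for some i >= 1."""
    x = [0] * length
    i = 1
    while 2 ** i <= length:
        for k in range(2 ** i, min(2 ** i + i, length + 1)):
            x[k - 1] = 1
        i += 1
    return x


x = sparse_blocks(2 ** 16)
print(Fraction(sum(x), len(x)))    # Cesaro mean of the prefix (a = 1): 121/65536
print(window_deviation(x, 0, 12))  # some window of length 12 consists of ones: 1
```

Taking only $a=1$ would see the small Cesàro mean. Maximizing over $a$ exposes the long runs of ones, which is exactly what uniformity in $a$ rules out.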

It is easy to see that taking $ a=1 $ gives exactly Cesàro summation. Hence a sequence that is almost convergent to $t$ must be Cesàro convergent to $t$. Borel [Reference Borel2] proved the classical result that the set of numbers in [0, 1] whose binary digit sequences are Cesàro convergent to $\frac{1}{2}$ has full Lebesgue measure. That is,

\begin{align*} \mathscr{L}\bigg\{x\in[0,1]\;:\;\lim_{n\rightarrow\infty}\frac{1}{n}\sum_{k=1}^{n}x_{k}=\frac{1}{2}\bigg\}=1\textrm{,}\end{align*}

where $ \mathscr{L} $ denotes the Lebesgue measure and $ x=(x_{1}x_{2}\ldots)_{2} $ denotes the binary expansion of $ x\in[0,1] $. Besicovitch [Reference Besicovitch1] showed that for all $ 0\leq\alpha\leq 1 $, the set

\begin{align*} F_{\alpha}\;:\!=\;\bigg\{x\in [0,1)\;:\;\lim_{n\rightarrow\infty}\frac{1}{n}\sum_{k=1}^{n}x_{k}=\alpha\bigg\}\end{align*}

has Hausdorff dimension $ -\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha) $. Besicovitch's result has also been extended to other matrix summation methods [Reference Usachev8]. For further research on almost convergence and its applications, see [Reference Esi and Necdet4, Reference Mohiuddine7, Reference Usachev8] and the references therein.
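The dimension formula above is the binary entropy function. The small Python helper below evaluates it with the usual convention $0\log_{2}0=0$, which covers the endpoints $\alpha=0$ and $\alpha=1$. The name `binary_entropy` is a hypothetical choice.

```python
import math


def binary_entropy(alpha):
    """-alpha*log2(alpha) - (1-alpha)*log2(1-alpha), with 0*log2(0) = 0."""
    if not 0 <= alpha <= 1:
        raise ValueError("alpha must lie in [0, 1]")
    # Terms with p == 0 are skipped, which implements the convention 0*log2(0) = 0.
    return sum(-p * math.log2(p) for p in (alpha, 1 - alpha) if p > 0)


print(binary_entropy(0.5))   # 1.0
print(binary_entropy(0.25))  # approximately 0.8113
```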

Almost convergence implies Cesàro convergence, and every convergent (equivalently, Cauchy) sequence is almost convergent. Besides these, there are many other senses of convergence, and for each of them one can study the Hausdorff dimension of sets analogous to $ F_{\alpha} $. In this paper, we consider numbers whose binary digit sequences are almost convergent. Connor [Reference Connor3] proved that for almost every number in [0, 1], the binary digit sequence is not almost convergent. In 2022, Usachev [Reference Usachev8] showed that for rational $ \alpha $, the Hausdorff dimension of the set

\begin{align*} G_{\alpha} &\;:\!=\;\bigg\{x\in [0,1)\;:\;\frac{1}{n}\sum_{k=a}^{a+n-1}x_{k}\longrightarrow\alpha\textrm{ uniformly in }a\in\mathbb{N}\textrm{ as }n\rightarrow\infty\bigg\}\\[5pt] {}&\;=\bigg\{x\in [0,1)\;:\;\{x_{k}\}_{k=1}^{\infty}\in \mathbf{AC}(\alpha)\bigg\}\end{align*}

is also $ -\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha) $, but left open the case when $ \alpha $ is irrational. Usachev's proof relies heavily on the rationality of $ \alpha $, while our method applies to every $ \alpha $.

Theorem 1.2.

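For all $ 0\leq\alpha\leq 1 $, we have

\begin{align*} \dim_{\textrm{H}}\!G_{\alpha}=-\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha)\textrm{,}\end{align*}

with the convention $0\log_{2}0=0$.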

We refer to Lorentz [Reference Lorentz6] for the definition of a strongly regular matrix method.

Definition 1.3.

A matrix method of summation is a mapping on the space of all sequences $ \{x_{k}\}_{k=1}^{\infty} $ generated by a matrix $ A=\{a_{nk}\}_{n,k=1}^{\infty} $, given by

\begin{align*} \{x_{k}\}_{k=1}^{\infty}\longmapsto\bigg\{\sum_{k=1}^{\infty}a_{nk}x_{k}\bigg\}_{n=1}^{\infty}\textrm{.}\end{align*}

We call it strongly regular if

Corollary 3.5 in [Reference Usachev8] remains true for irrational $ \alpha $. That is,

Corollary 1.4.

Let $ A=\{a_{nk}\}_{n,k=1}^{\infty} $ be a strongly regular matrix method which is weaker than (or consistent with) the Cesàro method, and let $ 0\leq\alpha\leq1 $. The Hausdorff dimension of the set

is $ -\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha) $.

The proof of this corollary is identical to the one in [Reference Usachev8].

2. Preliminaries

In this section, we recall the definition of Hausdorff dimension and the mass distribution principle, which will be used later.

Definition 2.1. [Reference Falconer5] Given a set $ E\subset\mathbb{R}^{n} $, its $s$-dimensional Hausdorff measure is

\begin{align*} \mathcal{H}^{s}(E)=\lim_{\delta\rightarrow 0}\inf\bigg\{\sum_{i=1}^{\infty}|O_{i}|^{s}\;:\;E\subset\bigcup_{i=1}^{\infty}O_{i}\textrm{, }|O_{i}|\leq\delta\textrm{ for all }i\bigg\}\textrm{,}\end{align*}

where $ \{O_{i}\}_{i=1}^{\infty} $ is an open cover of $E$, and $ |\cdot| $ denotes the diameter. The Hausdorff dimension of $E$ is

\begin{align*} \dim_{\textrm{H}}\!E=\inf\big\{s\geq 0\;:\;\mathcal{H}^{s}(E)=0\big\}\textrm{.}\end{align*}

Theorem 2.2. (Mass distribution principle) [Reference Falconer5] Let $E$ be a set, and let $ \mu $ be a Borel measure supported on $E$ with $\mu(E)>0$. If for some $ s\geq 0 $,

\begin{align*} \liminf_{r\rightarrow 0}\frac{\log_{2}\mu(B(x,r))}{\log_{2}r}\geq s\end{align*}

holds for all $x\in E$, then $\dim_{\textrm{H}}\!E\geq s$.

Finally, we fix some notation. For any $ x\in[0,1) $, let

\begin{align*} x=\sum_{k=1}^{\infty}\frac{x_{k}}{2^{k}}\textrm{,}\quad x_{k}\in\{0,1\}\textrm{,}\end{align*}

be the binary expansion of $x$. We write $ x=(x_{1},x_{2},\ldots)_{2} $. For any $ n\geq 1 $ and a finite block $ (\varepsilon_{1}\varepsilon_{2}\ldots\varepsilon_{n}) $ with $ \varepsilon_{i}\in\{0,1\} $ for all $ 1\leq i\leq n $, we write

which is the set of points whose binary expansions begin with the digits $ \varepsilon_{1},\varepsilon_{2},\ldots,\varepsilon_{n} $. Recall that

\begin{align*} F_{\alpha}=\bigg\{x\in [0,1)\;:\;\lim_{n\rightarrow\infty}\frac{1}{n}\sum_{k=1}^{n}x_{k}=\alpha\bigg\}\end{align*}

is the set of numbers whose binary digit sequences are Cesàro convergent to $ \alpha $, and

\begin{align*} G_{\alpha}=\bigg\{x\in [0,1)\;:\;\{x_{k}\}_{k=1}^{\infty}\in \mathbf{AC}(\alpha)\bigg\}\textrm{.}\end{align*}

The upper bound for the Hausdorff dimension of $G_{\alpha}$ is immediate. Since almost convergence implies Cesàro convergence, $ G_{\alpha} $ is a subset of $ F_{\alpha} $, so by Besicovitch's result [Reference Besicovitch1],

\begin{align*} \dim_{\textrm{H}}\!G_{\alpha}\leq\dim_{\textrm{H}}\!F_{\alpha}=-\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha)\textrm{.}\end{align*}

Thus, we need only focus on the lower bound for the Hausdorff dimension of $ G_{\alpha} $.

3. Proof of Theorem 1.2

The lower bound for the Hausdorff dimension of $ G_{\alpha} $ is obtained by a classical method:

- (1) Construct a Cantor subset of $ G_{\alpha} $, denoted by $ E_{m,\alpha} $, for each sufficiently large $ m\in\mathbb{N} $;
- (2) Define a probability measure supported on $ E_{m,\alpha} $;
- (3) Use the mass distribution principle to find a lower bound for the Hausdorff dimension of $ E_{m,\alpha} $.

We define the set $ E_{m,\alpha} $ as follows:

\begin{align*}\textrm{For every }m\in \mathbb{N}\textrm{, }E_{m,\alpha}\;:\!=\;\bigg\{x\in [0,1)\;:\;\sum_{k=(j-1)m+1}^{jm}x_{k}=[m\alpha]+\xi_{j} \textrm{ for all }j\in\mathbb{N}\bigg\}\textrm{,}\end{align*}

where $ [m\alpha] $ denotes the largest integer less than or equal to $ m\alpha $, and $ \{\xi_{j}\}_{j\in\mathbb{N}} $ is defined as follows. First, let $ \xi_{1}=0 $, and let $ \xi_{2} $ be the integer such that $ 2[m\alpha]+\xi_{1}+\xi_{2}=[2m\alpha] $, so $ \xi_{2} $ is 0 or 1. Then we define $ \xi_{j} $ inductively: $ \xi_{j} $ is the integer satisfying

\begin{align*} j[m\alpha]+\sum_{i=1}^{j}\xi_{i}=[jm\alpha]\textrm{.}\end{align*}

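By a simple calculation (subtracting the identity for $j-1$ from the identity for $j$), we have

\begin{align*} \xi_{j}=[jm\alpha]-[(j-1)m\alpha]-[m\alpha]=\big[j\{m\alpha\}\big]-\big[(j-1)\{m\alpha\}\big]\textrm{,}\end{align*}

where $ \{m\alpha\}=m\alpha-[m\alpha] $. The second equality uses $[jm\alpha]=j[m\alpha]+[j\{m\alpha\}]$. Therefore, $ \xi_{j} $ is one of $ -1 $, 0, 1, 2. In fact, since $0\leq\{m\alpha\}<1$, the two floors differ by at most 1, so $\xi_{j}\in\{0,1\}$.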
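The integers $\xi_{j}$ are determined by the partial sums $[jm\alpha]$, so they can be generated directly. The Python sketch below does this, and the hypothetical function name `xi_sequence` is our own. It assumes $\alpha$ is passed as an exact `fractions.Fraction`, because floating-point floors of $jm\alpha$ can be off by one near integers. For irrational $\alpha$ one would have to accept that risk or use higher precision.

```python
import math
from fractions import Fraction


def xi_sequence(m, alpha, J):
    """Return [xi_1, ..., xi_J] defined by j*[m*alpha] + xi_1 + ... + xi_j = [j*m*alpha].

    alpha should be an int or a fractions.Fraction so that every floor is exact."""
    base = math.floor(m * alpha)         # [m*alpha]
    xs, prev = [], 0                     # prev holds [(j-1)*m*alpha]
    for j in range(1, J + 1):
        cur = math.floor(j * m * alpha)  # [j*m*alpha]
        xs.append(cur - prev - base)     # xi_j = [jm alpha] - [(j-1)m alpha] - [m alpha]
        prev = cur
    return xs


print(xi_sequence(10, Fraction(1, 3), 6))  # [0, 0, 1, 0, 0, 1]
```

For $m=10$ and $\alpha=\frac{1}{3}$ we have $\{m\alpha\}=\frac{1}{3}$, so $\xi_{j}=1$ exactly when $j$ is a multiple of 3.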

In other words, $ E_{m,\alpha} $ is the set of numbers whose binary digit sequences satisfy two conditions. First, for all $ j\in\mathbb{N} $, the first $jm$ digits contain exactly $ [jm\alpha] $ ones. Second, if we cut the sequence into blocks of length $m$, the $j$-th block contains exactly $ [m\alpha]+\xi_{j} $ ones. Apart from this, there is no restriction on the positions of the ones.
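A point of $E_{m,\alpha}$ is specified by choosing, independently for each block, which positions carry the prescribed ones. The Python sketch below generates the first $J$ blocks of one such point at random. The name `sample_prefix` and the uniform shuffle are illustrative assumptions, since the construction itself puts no distribution on the positions.

```python
import math
import random
from fractions import Fraction


def sample_prefix(m, alpha, J, rng=random):
    """First J*m binary digits of one point of E_{m,alpha}.

    Block j (of length m) receives [j*m*alpha] - [(j-1)*m*alpha] = [m*alpha] + xi_j ones,
    placed at uniformly random positions; the uniform choice is only for illustration."""
    digits, prev = [], 0
    for j in range(1, J + 1):
        cur = math.floor(j * m * alpha)  # ones required among the first j*m digits
        ones = cur - prev
        block = [1] * ones + [0] * (m - ones)
        rng.shuffle(block)               # any arrangement of the ones is allowed
        digits.extend(block)
        prev = cur
    return digits


x = sample_prefix(12, Fraction(2, 5), 5, random.Random(0))
print(len(x), sum(x))  # 60 24
```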

We check that $ E_{m,\alpha} $ is indeed a subset of $ G_{\alpha} $ when $m$ is sufficiently large.

Lemma 3.1.

Let $ 0<\alpha\leq 1 $ and $ m>\left[\frac{100}{\alpha}\right]+100 $, or let $ \alpha=0 $ and $ m\in\mathbb{N} $. Then for any $ x\in E_{m,\alpha} $, we have $ \{x_{k}\}_{k=1}^{\infty}\in \mathbf{AC}(\alpha) $, that is, $ E_{m,\alpha}\subset G_{\alpha} $.

Proof. First, for $ \alpha=0 $, $ E_{m,0} $ contains the single point 0, so it is a subset of $ G_{0} $. Second, for $ 0<\alpha\leq1 $, we see that $ [m\alpha] $ cannot be 0 for $m$ large. Fix $a\in\mathbb{N}$ and $n\in\mathbb{N}$. For any $ x\in E_{m,\alpha} $, let $ j_{1} $ be the largest integer $j\geq 0$ such that $ jm<a $, and let $ j_{2} $ be the smallest $j$ such that $ jm\geq a+n-1 $. Then, on the one hand,

\begin{equation} \sum_{k=a}^{a+n-1}x_{k}\leq\sum_{k=j_{1}m+1}^{j_{2}m}x_{k}\leq \big[j_{2}m\alpha\big]-\big[j_{1}m\alpha\big] \leq(j_{2}-j_{1})m\alpha+1\textrm{.} \end{equation}


On the other hand,

\begin{equation} \sum_{k=a}^{a+n-1}x_{k}\geq\sum_{k=(j_{1}+1)m+1}^{(j_{2}-1)m}x_{k}\geq\big[(j_{2}-1)m\alpha\big] -\big[(j_{1}+1)m\alpha\big] \geq(j_{2}-j_{1})m\alpha-2m\alpha-1\textrm{.} \end{equation}


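Furthermore, since $j_{1}m<a\leq(j_{1}+1)m$ and $(j_{2}-1)m<a+n-1\leq j_{2}m$, we have

\begin{equation} (j_{2}-j_{1}-2)m<n-1<(j_{2}-j_{1})m\textrm{.} \end{equation}

Combining (3.1), (3.2) and (3.3), it follows that

\begin{equation} \alpha-\frac{(2m+1)\alpha+1}{n}<\frac{1}{n}\sum_{k=a}^{a+n-1}x_{k}<\alpha+\frac{(2m+1)\alpha+1}{n}\textrm{.} \end{equation}

Since the leftmost and rightmost terms in (3.4) do not depend on $a$, the convergence is uniform in $a$ as $n$ tends to infinity. This shows that $ E_{m,\alpha} $ is a subset of $ G_{\alpha} $.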
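The uniform estimate of Lemma 3.1 can be checked numerically. Combining (3.1) and (3.2) with $j_{1}m<a\leq(j_{1}+1)m$ and $(j_{2}-1)m<a+n-1\leq j_{2}m$ yields, for every $a$,

$\big|\frac{1}{n}\sum_{k=a}^{a+n-1}x_{k}-\alpha\big|<\frac{(2m+1)\alpha+1}{n}$.

The Python sketch below samples a finite prefix of a point of $E_{m,\alpha}$ and compares the worst window deviation with this bound. It only inspects windows inside the prefix. The helper names `sample_prefix` and `worst_window_deviation` are hypothetical.

```python
import math
import random
from fractions import Fraction


def sample_prefix(m, alpha, J, rng):
    """J blocks of a point of E_{m,alpha}; block j holds [jm alpha] - [(j-1)m alpha] ones."""
    digits, prev = [], 0
    for j in range(1, J + 1):
        cur = math.floor(j * m * alpha)
        block = [1] * (cur - prev) + [0] * (m - cur + prev)
        rng.shuffle(block)
        digits.extend(block)
        prev = cur
    return digits


def worst_window_deviation(x, alpha, n):
    """max over windows inside x of |(1/n) * (x_a + ... + x_{a+n-1}) - alpha|, exactly."""
    s = sum(x[:n])
    lo = hi = s
    for a in range(1, len(x) - n + 1):
        s += x[a + n - 1] - x[a - 1]
        lo, hi = min(lo, s), max(hi, s)
    return max(abs(Fraction(lo, n) - alpha), abs(Fraction(hi, n) - alpha))


m, alpha = 20, Fraction(3, 7)
x = sample_prefix(m, alpha, 200, random.Random(0))
for n in (10, 100, 1000):
    bound = ((2 * m + 1) * alpha + 1) / n  # a-independent bound derived from (3.1) and (3.2)
    print(n, float(worst_window_deviation(x, alpha, n)), float(bound))
```

The bound is crude for small $n$ (it exceeds 1 when $n=10$), but it decays like $\frac{1}{n}$ independently of the starting position $a$. That independence is the point of the lemma.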

Next, we analyze the Cantor structure of $ E_{m,\alpha} $ in detail. Here, we assume that $ 0<\alpha\leq 1 $ and that $m$ is sufficiently large. Denote by $ U_{j} $ the collection of blocks of length $m$ which contain exactly $ [m\alpha]+\xi_{j} $ ones, $ j=1,2,\ldots $, that is,

\begin{align*} U_{j}=\bigg\{(\varepsilon_{1}\varepsilon_{2}\ldots\varepsilon_{m})\in\{0,1\}^{m}\;:\;\sum_{i=1}^{m}\varepsilon_{i}=[m\alpha]+\xi_{j}\bigg\}\textrm{.}\end{align*}

Then, for each $j$, the collection $ U_{j} $ contains $ D_{j}=\textrm{C}_{m}^{[m\alpha]+\xi_{j}} $ elements, where $ \textrm{C}_{n}^{k} $ denotes the binomial coefficient. So the first level of the Cantor structure of $ E_{m,\alpha} $ is

The second level is

and by induction, the level-$j$ of the Cantor structure of $ E_{m,\alpha} $ is

Then, we have that

Now we compute a lower bound for the Hausdorff dimension of $ E_{m,\alpha} $. Since this involves binomial coefficients, we first estimate them using Stirling's formula.
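Two counts from the construction can be confirmed by brute force for tiny $m$. The first is $D_{j}=\textrm{C}_{m}^{[m\alpha]+\xi_{j}}$. The second is that the words of length $jm$ whose $k$-th block lies in $U_{k}$ number $D_{1}D_{2}\cdots D_{j}$. The Python sketch below enumerates all binary words of length $Jm$, so it is only meant for very small parameters. The names `block_ones` and `count_words` are hypothetical.

```python
import itertools
import math
from fractions import Fraction


def block_ones(m, alpha, J):
    """Prescribed numbers of ones [m*alpha] + xi_j = [j m alpha] - [(j-1) m alpha], j = 1..J."""
    return [math.floor(j * m * alpha) - math.floor((j - 1) * m * alpha)
            for j in range(1, J + 1)]


def count_words(m, alpha, J):
    """Brute force: binary words of length J*m whose j-th block has the prescribed ones."""
    ones = block_ones(m, alpha, J)
    return sum(
        all(sum(w[(j - 1) * m:j * m]) == ones[j - 1] for j in range(1, J + 1))
        for w in itertools.product((0, 1), repeat=J * m)
    )


m, alpha, J = 4, Fraction(3, 8), 3
D = [math.comb(m, d) for d in block_ones(m, alpha, J)]
print(D, math.prod(D), count_words(m, alpha, J))  # [4, 6, 4] 96 96
```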

Lemma 3.2.

Let $ \{d_{m}\}_{m\geq 1} $ be a sequence of integers with $ d_{m}\leq m $ for all $ m\geq 1 $ and

Then

Proof. Write $ m^{\prime}=d_{m} $. By Stirling's formula,

\begin{align*} \textrm{C}_{m}^{m^{\prime}} {}&=\frac{m\textrm{!}}{m^{\prime}\textrm{!}(m-m^{\prime})\textrm{!}}\\[5pt] {}&=\frac{\sqrt{2\pi m}(\frac{m}{\textrm{e}})^{m}\textrm{e}^{O(\frac{1}{m})}}{\sqrt{2\pi m^{\prime}}(\frac{m^{\prime}}{\textrm{e}})^{m^{\prime}}\textrm{e}^{O(\frac{1}{m^{\prime}})}\sqrt{2\pi (m-m^{\prime})}(\frac{m-m^{\prime}}{\textrm{e}})^{m-m^{\prime}}\textrm{e}^{O(\frac{1}{m-m^{\prime}})}}\\[5pt] {}&=\left(\frac{m}{m-m^{\prime}}\right)^{m}\left(\frac{m-m^{\prime}}{m^{\prime}}\right)^{m^{\prime}}O\!\left(m^{-\frac{1}{2}}\right)\textrm{.} \end{align*}

So we have

\begin{align*} \limsup_{m\rightarrow\infty}\frac{1}{m}\log_{2}\textrm{C}_{m}^{m^{\prime}} {}&=\limsup_{m\rightarrow\infty}\bigg(\!\log_{2}\frac{1}{1-\frac{m^{\prime}}{m}}+\frac{m^{\prime}}{m}\log_{2}\frac{1-\frac{m^{\prime}}{m}}{\frac{m^{\prime}}{m}}+O\!\left(\frac{\log_{2}m}{m}\right)\!\!\bigg)\\[5pt] {}&=\log_{2}\frac{1}{1-\alpha}+\alpha\log_{2}\frac{1-\alpha}{\alpha}\\[5pt] {}&=-\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha)\textrm{.} \end{align*}

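Lemma 3.2 can be illustrated numerically. With $d_{m}=[m\alpha]$, the quantity $\frac{1}{m}\log_{2}\textrm{C}_{m}^{d_{m}}$ approaches the entropy value, with an error of order $\frac{\log_{2}m}{m}$ as in the Stirling estimate. The Python sketch below shows this. The irrational example value $\alpha=\sqrt{2}-1$ and the helper names are our own choices.

```python
import math


def normalized_log_binomial(m, d):
    """(1/m) * log2 C(m, d); math.log2 accepts the exact (possibly huge) integer."""
    return math.log2(math.comb(m, d)) / m


def binary_entropy(alpha):
    """-alpha*log2(alpha) - (1-alpha)*log2(1-alpha), with 0*log2(0) = 0."""
    return sum(-p * math.log2(p) for p in (alpha, 1 - alpha) if p > 0)


alpha = math.sqrt(2) - 1       # an irrational example value
for m in (10, 100, 1000, 10000):
    d = math.floor(m * alpha)  # d_m = [m*alpha], so d_m / m -> alpha
    print(m, normalized_log_binomial(m, d), binary_entropy(alpha))
```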

Lemma 3.3.

For any $ 0<\alpha\leq 1 $ and $ m>[\frac{100}{\alpha}]+100 $, we have

\begin{align*} \dim_{\textrm{H}}\!E_{m,\alpha}\geq\liminf_{j\rightarrow\infty}\frac{\log_{2}\prod_{k=1}^{j}D_{k}}{jm}\textrm{.}\end{align*}

Proof. Define a measure $ \mu $ supported on $ E_{m,\alpha} $ as follows. Let $ \mu ([0,1))=1 $. For all $ j>0 $ and every element $ I\in\mathscr{S}_{j} $, set

\begin{align*} \mu(I)=\bigg(\prod_{k=1}^{j}D_{k}\bigg)^{-1}\textrm{,}\end{align*}

and set $ \mu(E)=0 $ for every $E$ with $ E\cap S_{j}=\emptyset $. Then one can check that the set function $\mu$ satisfies Kolmogorov's consistency condition: for any $j\ge 1$ and $I\in \mathscr{S}_j$,

\begin{align*} \mu(I)=\sum_{J\in\mathscr{S}_{j+1},\,J\subset I}\mu(J)\textrm{,}\end{align*}

since each $I\in\mathscr{S}_{j}$ contains exactly $D_{j+1}$ elements of $\mathscr{S}_{j+1}$.

Thus, it can be extended to a mass distribution supported on $E_{m,\alpha}$ [Reference Falconer5]. So for any ball $B(x, r)$ centered at $ x\in[0,1) $ with radius $ r<1 $, there is an integer $j$ such that $ 2^{-(j+1)m}\leq r\lt 2^{-jm} $.

The ball is an interval of length less than $2\cdot 2^{-jm}$, so it meets at most three consecutive dyadic intervals of length $2^{-jm}$. Among any three consecutive such intervals, two adjacent ones have first $jm$ digits that differ only in the last digit. These contain different numbers of ones, so they cannot both belong to $\mathscr{S}_{j}$. Hence the ball intersects at most 2 elements of $\mathscr{S}_{j}$, so $\mu(B(x,r))\leq 2\big(\prod_{k=1}^{j}D_{k}\big)^{-1}$, and we have

\begin{align*} \frac{\log_{2}\mu(B(x,r))}{\log_{2}r} {}&\geq\frac{\log_{2}\!(2\cdot(\prod_{k=1}^{j}D_{k})^{-1})}{\log_{2}r} \\[5pt] &= \frac{-\log_{2}\prod_{k=1}^{j}D_{k}}{\log_{2}r}+O\!\left(\frac{1}{\log_{2}r}\right)\\[5pt] {}&\geq \frac{-\log_{2}\prod_{k=1}^{j}D_{k}}{-(j+1)m}+O\!\left(\frac{1}{\log_{2}r}\right)\textrm{.} \end{align*}

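Thus

\begin{align*} \liminf_{r\rightarrow 0}\frac{\log_{2}\mu(B(x,r))}{\log_{2}r}\geq\liminf_{j\rightarrow\infty}\frac{\log_{2}\prod_{k=1}^{j}D_{k}}{(j+1)m}=\liminf_{j\rightarrow\infty}\frac{\log_{2}\prod_{k=1}^{j}D_{k}}{jm}\textrm{,}\end{align*}

and the lemma follows from the mass distribution principle.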
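The right-hand side of Lemma 3.3 is a liminf over $j$, which a computation can only approximate at a finite $j$. The Python sketch below evaluates $\frac{1}{jm}\log_{2}\prod_{k=1}^{j}D_{k}$ for a fixed $j$ and several $m$. As $m$ grows, the values should approach $-\alpha\log_{2}\alpha-(1-\alpha)\log_{2}(1-\alpha)$ from below, which is how the proof of Theorem 1.2 uses the lemma. The function name `level_ratio` and the parameter choices are hypothetical.

```python
import math
from fractions import Fraction


def level_ratio(m, alpha, j):
    """log2(D_1 * ... * D_j) / (j*m) with D_k = C(m, [k m alpha] - [(k-1) m alpha])."""
    total, prev = 0.0, 0
    for k in range(1, j + 1):
        cur = math.floor(k * m * alpha)
        total += math.log2(math.comb(m, cur - prev))  # log2 D_k
        prev = cur
    return total / (j * m)


alpha = Fraction(1, 3)
h = -(1 / 3) * math.log2(1 / 3) - (2 / 3) * math.log2(2 / 3)
for m in (30, 300, 3000):
    print(m, level_ratio(m, alpha, 20), h)
```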

Remark 1.

For the case $ \alpha=0 $ and $ m\in\mathbb{N} $, the result in Lemma 3.3 is trivial, since $ E_{m,0} $ is a single point and $ D_{j}=1 $ for all $ j\in\mathbb{N} $.

Proof. (Proof of Theorem 1.2) Since Lemmas 3.1 and 3.3 hold for all sufficiently large $m$, we can take the limit supremum of $ \dim_{\textrm{H}}\!E_{m,\alpha} $ as $ m\rightarrow\infty $. Let $ \xi_{(m,\alpha)} $ be a number in $ \{-1,0,1,2\} $ such that $ \textrm{C}_{m}^{[m\alpha]+\xi_{(m,\alpha)}} $ is the smallest among

\begin{align*} \textrm{C}_{m}^{[m\alpha]-1}\textrm{,}\quad\textrm{C}_{m}^{[m\alpha]}\textrm{,}\quad\textrm{C}_{m}^{[m\alpha]+1}\textrm{,}\quad\textrm{C}_{m}^{[m\alpha]+2}\textrm{,}\end{align*}

and take $ m^{\prime}=[m\alpha]+\xi_{(m,\alpha)} $. Then, we have

\begin{align*} \dim_{\textrm{H}}\!G_{\alpha} {}&\geq\limsup_{m\rightarrow\infty}\dim_{\textrm{H}}\!E_{m,\alpha}\\[5pt] {}&=\limsup_{m\rightarrow\infty}\liminf_{j\rightarrow\infty}\frac{\log_{2}\prod_{k=1}^{j}D_{k}}{jm}\\ &\geq\limsup_{m\rightarrow\infty}\liminf_{j\rightarrow\infty}\frac{\log_{2}\prod_{k=1}^{j}\textrm{C}_{m}^{[m\alpha]+\xi_{(m,\alpha)}}}{jm}\\{} {}&=\limsup_{m\rightarrow\infty}\frac{1}{m}\log_{2}\textrm{C}_{m}^{m^{\prime}}\\&=-\alpha\log_{2}\alpha-(1-\alpha)\log_{2}\!(1-\alpha) \end{align*}


where the last equality follows from Lemma 3.2, since $|m^{\prime}-m\alpha|\leq 2$ implies $ \lim_{m\rightarrow\infty}\frac{m^{\prime}}{m}=\alpha $.

Usachev [Reference Usachev8] also proposed a possible approach to the case when $ \alpha $ is irrational. Take a sequence of rationals $ \{\frac{p_{i}}{q_{i}} \}_{i=1}^{\infty}$ converging to $ \alpha $, and construct a set of numbers whose binary expansions satisfy the following requirement: among the positions in

the binary digit sequences have exactly $ p_{i}m $ ones. Although we do have

for every positive integer $a$, the convergence may fail to be uniform, as mentioned in [Reference Usachev8]. This happens, for instance, when in every block of length $ q_{i}m $ all the ones appear first and the zeros follow.

To ensure that the convergence is uniform, it is necessary to impose suitable restrictions on the distribution of the ones, for example, requiring that zeros and ones appear regularly. Here, we cut the sequence into blocks of the same length, and the numbers of ones in different blocks differ only slightly. This avoids the problem caused by $ q_{i} $ going to infinity and ones being separated from zeros.
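The failure of uniformity described above can be reproduced with a toy sequence. The Python sketch below is a simplified, hypothetical illustration, not the exact construction of [Reference Usachev8]. Blocks of increasing length each start with a proportion $\alpha$ of ones, followed by zeros. The overall proportion of ones is $\alpha$, yet for every window length $n$ there are windows made entirely of ones, so the supremum over $a$ of the window averages does not tend to $\alpha$.

```python
import math
from fractions import Fraction


def ones_first_blocks(alpha, lengths):
    """Toy sequence: a block of length L holds [alpha*L] ones followed by zeros."""
    x = []
    for L in lengths:
        ones = math.floor(alpha * L)
        x.extend([1] * ones + [0] * (L - ones))
    return x


def max_window_average(x, n):
    """Largest average of n consecutive terms of x."""
    s = best = sum(x[:n])
    for a in range(1, len(x) - n + 1):
        s += x[a + n - 1] - x[a - 1]
        best = max(best, s)
    return best / n


alpha = Fraction(3, 10)
x = ones_first_blocks(alpha, [10 * i for i in range(1, 200)])
print(sum(x) / len(x))                                     # 0.3, the proportion of ones
print([max_window_average(x, n) for n in (10, 100, 500)])  # [1.0, 1.0, 1.0]
```

In the construction of $E_{m,\alpha}$, by contrast, the block length $m$ is fixed. Runs of ones therefore cannot grow with the block length in the same way.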