Introduction

Learning with virtual reality (VR) has several benefits, such as improved motivation (Freina & Ott, 2015), the ability to experience scenarios that are otherwise infeasible, expensive, or dangerous in reality (Scavarelli et al., 2021), and enhanced learning through sensory cues (Psotka, 1995). VR can be divided into desktop virtual reality (DVR, delivered on a desktop screen) and immersive virtual reality (IVR, delivered through a head-mounted display). However, it remains largely unclear which type of VR best supports learning (Richards & Taylor, 2015; Selzer et al., 2019), particularly with respect to learning retention. Our experiment addresses this research gap by comparing the effectiveness of DVR and IVR training on learning retention over time for a procedural skill.

Methods

Hypothesis development

In their meta-analysis, Wu et al. (2020) report that immediate learning was higher for IVR than for DVR groups. Since initial learning predicts future performance (Arthur et al., 1998; Kamuche & Ledman, 2005), it is expected that:

H1. The number of errors in the physical assembly in the retention test is lower for participants trained with IVR than those trained with DVR.

H2. Time to completion (TTC) of the physical assembly in the retention test is lower for participants trained with IVR than those trained with DVR.

Exceeding goals leads to satisfaction (Kernan & Lord, 1991), and since H1 and H2 hypothesize that the IVR group performs better, it is predicted that:

H3. Participants trained with IVR rate affective learning outcomes higher than those trained with DVR after the retention test as measured by satisfaction.

The same argument applies to self-efficacy, as Moores and Chang (2009) found that performance correlates positively with self-efficacy. Both should therefore be higher for IVR:

H4. Participants trained with IVR rate affective learning outcomes higher than those trained with DVR after the retention test as measured by self-efficacy.

Several prior studies report higher motivation when learning with IVR rather than DVR (Makransky et al., 2019; Mouatt et al., 2020). Since Huang et al. (2021) show that motivation from a VR session can be sustained, it is hypothesized that:

H5. Participants trained with IVR rate affective learning outcomes higher than those trained with DVR after the retention test as measured by motivation.

Research approach

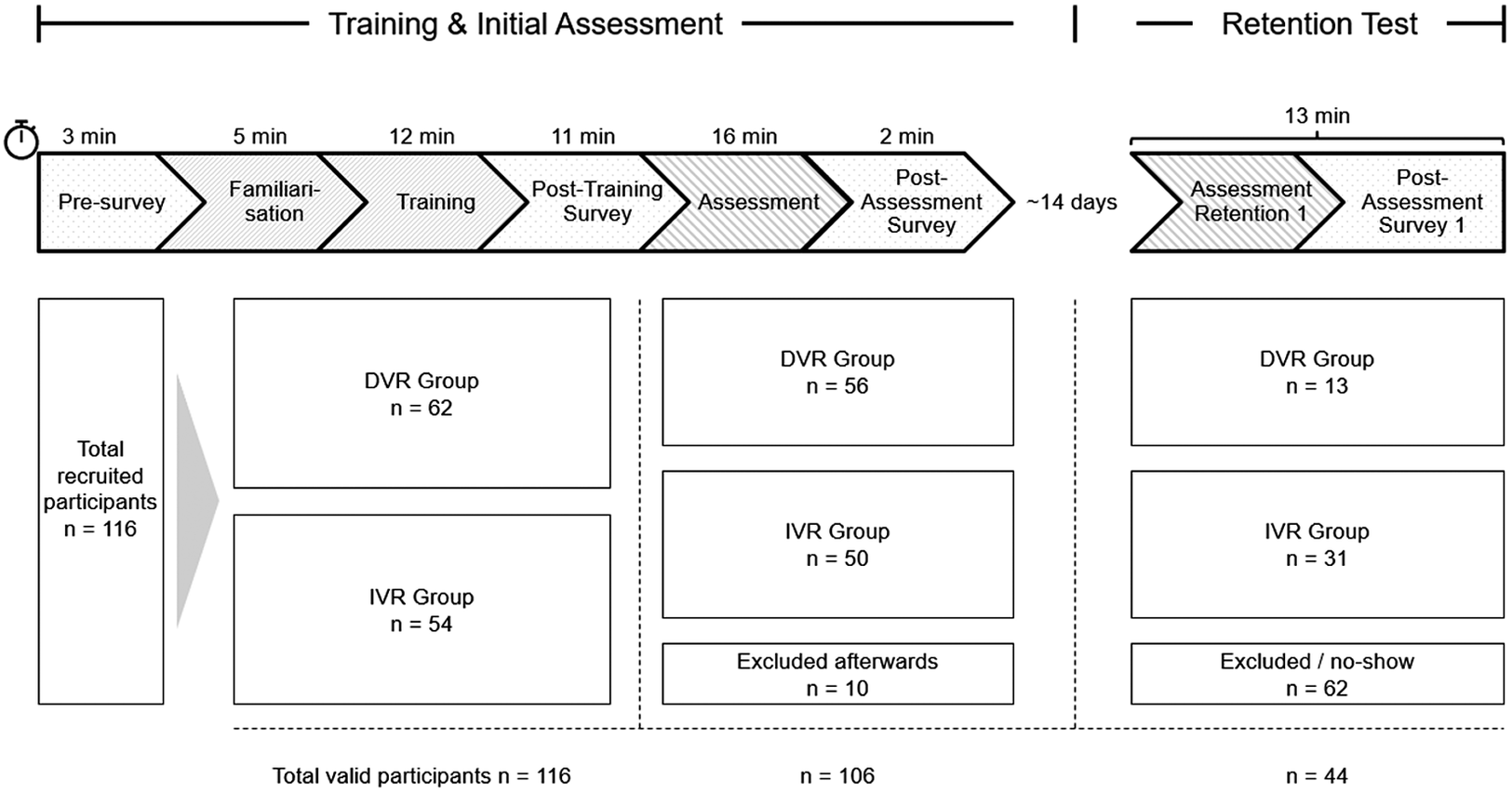

To test the hypotheses outlined above, a between-subjects experiment was conducted. A total of 116 participants were recruited, of whom n = 44 successfully completed the entire experiment. The sample in the retention test is predominantly male (DVR: 92.31%, IVR: 96.97%), as the population of students and staff in engineering (the context of our study) is also mostly male. The median age was 21 years in the DVR group and 19 years in the IVR group. All participants had normal or corrected-to-normal vision. The groups were also very similar in assembly experience, with 30.77% in the DVR group and 27.27% in the IVR group reporting no assembly experience. None of the participants had previously performed the assembly task used in the experiment.

The research design was similar to the one used by Bohné et al. (2021). Participants were randomly split into two groups and trained in the execution of an unfamiliar industrial assembly task using either DVR or IVR. The research design is shown in Figure 1. The experiment received ethical approval from the University of Cambridge.

Figure 1. Training procedure and participant flow through the experiment phases.

While the DVR training was delivered on a standard laptop computer with an external computer mouse, the IVR training was delivered using an Oculus Quest 2 headset. Both groups received the same training in the same VR environment (Figure 2). The VR environment was an advanced version of the environment used by Bohné et al. (2021). It included a familiarization phase before the main training to account for the pretraining principle (Mayer, 2017).

Figure 2. Screenshots from the virtual workstation with instructions on the board behind.

After the training, participants assembled the physical components in a real-world context. A researcher observed the physical assembly and recorded the performance. The recorded metrics included the time taken to fully assemble the component (TTC) and the number of mistakes made. The latter was determined using a standardized error counting sheet. Participants could ask for a hint at any time, but each hint was counted as an error. The assessment procedure was repeated 14 days (±2 days) after the initial training to determine the degree of retention for both groups (Figure 3).
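The scoring rule and the retention window described above can be illustrated with a minimal sketch. The function names, argument names, and the dates in the usage note are hypothetical, and the sketch assumes that hints are simply added to the mistakes recorded on the error counting sheet; it is not the instrument used in the experiment.

```python
from datetime import date


def total_errors(observed_mistakes: int, hints_requested: int) -> int:
    """Return the error count for one assembly attempt.

    Assumption: every requested hint adds exactly one error to the
    mistakes recorded on the error counting sheet.
    """
    return observed_mistakes + hints_requested


def in_retention_window(
    training_day: date,
    retest_day: date,
    target_days: int = 14,
    tolerance_days: int = 2,
) -> bool:
    """Check whether the retest falls within target_days ± tolerance_days."""
    elapsed = (retest_day - training_day).days
    return abs(elapsed - target_days) <= tolerance_days
```

For example, a participant with two observed mistakes and one hint would be scored with three errors, and a retest 16 days after training would still fall inside the 14 ± 2 day window, whereas a retest after 17 days would not.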

Figure 3. Layout of the physical workstation used for assessment.

Results

For data cleaning, outliers with a z-score larger than three were excluded (Osborne & Overbay, 2004). The questionnaires included attention check items to detect careless responses. If a participant failed an attention check, that participant’s data were excluded. If data points for a participant were missing, the data were likewise excluded. Normality of the data was assessed with Shapiro–Wilk tests, and Levene tests were used to assess homoscedasticity. If the data were homoscedastic and normally distributed for all groups, ANOVAs were used to compare the groups; otherwise, Wilcoxon rank-sum tests were used. A 5% significance level was used, as recommended by Christensen et al. (2013).
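The cleaning and test-selection rules in this paragraph can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis script: the function names are hypothetical, the z-score is taken as an absolute value and computed with the sample standard deviation, and the p-values of the assumption checks are passed in rather than computed here.

```python
from statistics import mean, stdev


def drop_outliers(values, z_max=3.0):
    """Return the values whose absolute z-score does not exceed z_max.

    Assumptions: absolute z-scores, and the sample standard deviation
    (n - 1 in the denominator); the text specifies neither.
    """
    center = mean(values)
    spread = stdev(values)
    if spread == 0:
        # All values are identical, so none can be an outlier.
        return list(values)
    return [v for v in values if abs(v - center) / spread <= z_max]


def choose_test(normality_p_values, equal_variance_p_value, alpha=0.05):
    """Pick the group comparison according to the rule described above.

    normality_p_values: one p-value per group from a normality check.
    equal_variance_p_value: p-value from a homoscedasticity check.
    An assumption is treated as met when its p-value exceeds alpha.
    """
    all_normal = all(p > alpha for p in normality_p_values)
    homoscedastic = equal_variance_p_value > alpha
    if all_normal and homoscedastic:
        return "anova"
    return "wilcoxon_rank_sum"
```

Note that a z-score cutoff of three can only exclude a value when the group is large enough: with the sample standard deviation, the largest attainable absolute z-score in a group of n values is (n - 1)/√n, which exceeds three only for n ≥ 11.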

Table 1 summarizes the results of the hypothesis tests and the descriptive indicators. All tests are nonsignificant. Descriptively, however, all indicators point to better performance of the IVR group: the number of mistakes and TTC are lower, and the affective factors were rated higher by the IVR group.

Table 1. Summary of the experiment results

Note. A cross indicates that the associated hypothesis is rejected. The number of mistakes was counted manually during the physical assembly. TTC is in seconds. Satisfaction, self-efficacy, and motivation were each assessed with multiple 7-point Likert questions.

Abbreviations: DVR, desktop virtual reality; IVR, immersive virtual reality; TTC, time to completion.

Discussion

The hypotheses that the IVR group would perform significantly better in the retention test than the DVR group, as measured by the number of mistakes and TTC, were based on a meta-analysis by Wu et al. (2020). They reported a small effect size in favor of IVR over DVR groups directly after training. However, studies comparing learning retention after DVR and IVR training are inconclusive: Buttussi and Chittaro (2018) and Lu et al. (2022) found nonsignificant differences, Smith et al. (2018) reported mixed results, and Alrehaili and Al Osman (2019) found DVR to be the slightly better medium. Since the differences in the objective factors for learning retention are nonsignificant, goal attainment should be similar in both groups. The nonsignificant difference in satisfaction in the retention test is thus expected (Kernan & Lord, 1991). The groups’ nonsignificant differences in self-efficacy are in line with Moores and Chang’s (2009) finding, as there are nonsignificant differences in prior performance. The hypothesis that motivation would be higher in the retention test was more exploratory, since little evidence on this relationship has been published so far.

Our experimental results can help guide further research. Our study differs from previous and related work because we included retention and directly compared IVR and DVR environments. In contrast to prior studies, participants in our experiment were also assessed using a physical assembly and were thus tested for their procedural and conceptual knowledge, rather than only for their conceptual knowledge with simple online tests. Furthermore, we ensured that the DVR and IVR training environments were as similar as possible, differing only in their human–computer interaction method.

A limitation of our study is the relatively small sample size in the retention test. Only 44 participants took part in the retention test, compared to 106 valid data points for the initial assessment. This discrepancy was the result of the Covid-19 pandemic in the UK at the time of the experiment, as many participants had to quarantine during the retention test or were not available for the additional assessment. Additionally, as the population in the training center was predominantly male, the same applies to our sample (3 women and 103 men in the initial test). Results might differ for a more balanced sample.

Three further areas of research are proposed. First, future experiments should replicate the study according to the original plan, with several retention tests and sufficient participants in each of them, as retention has rarely been analyzed in the literature. Second, the transferability of the results should be investigated by running the experiment with different training content to test whether the findings hold outside an industrial training context. Third, retention after VR training should be compared with retention after learning with other media such as paper or videos.

Conclusion

The results of our experiment suggest that retention performance does not differ significantly after learning with DVR or IVR and that these technologies could be used interchangeably. This applies both when measuring performance with performance indicators such as TTC and number of errors, and with affective indicators such as satisfaction, self-efficacy, and motivation. Because of the Covid-19 pandemic, the sample in our study was smaller than planned, which might explain the nonsignificant results. The choice of medium (DVR or IVR) should take into account the results of this study as well as factors that were not examined here, including cost, software development complexity, the appropriateness of a task for DVR or IVR, and the infrastructure required for DVR or IVR training.

Acknowledgments

The authors would like to thank Helen Dudfield, Mayowa Olonilua, and Thomas Govan for their advice in planning the experiment, and Athena Hughes, James Tombling, Julius Bock, Kim Ruf, Konrad Ciezarek, Siemens Congleton, and MakeUK for supporting the experiment.

Open peer review

To view the open peer review materials for this article, please visit http://doi.org/10.1017/exp.2022.28.

Data availability statement

The data that support the findings of this study are available upon request from the corresponding author.

Authorship contributions

A.F., L.P., P.Z., and T.B. conceived and designed the study. P.Z. adapted the Virtual Reality application to the requirements of the experiment. A.F. and L.P. conducted the data gathering. A.F. and P.Z. analyzed the data. A.F., L.P., P.Z., and T.B. wrote the article.

Funding statement

This work was supported by the Defence Science and Technology Laboratory (Dstl) under its Serapis framework. Permission to Publish was granted by Dstl in May 2022.

Conflict of interest

The authors have no conflicts of interest to declare.

Comments

Comments to the Author: In this paper, the authors present their work in comparing 3D immersive HMD against 2D desktop-based display for learning retention of an assembly task. Overall, the paper is well-written and presents the work in a succinct manner. The paper also cites relevant papers from the literature. Below is another paper that could be used in the discussion section, as it is closely related to their work.

Lu et al. Effect of display platforms on spatial knowledge acquisition and engagement: an evaluation with 3D geometry visualizations. J Vis (2022). https://doi.org/10.1007/s12650-022-00889-w.

Another limitation to be pointed out is the male-dominated population, in addition to the small sample size due to pandemic restrictions. As such, it is unclear if the same effects would be found if there is a more balanced population ratio.

Also, I wonder if the authors interviewed (even briefly) the participants after the experiment. If they did, it would be useful to add some of this analysis.