1. Introduction

With the exponential growth in the amount of available textual resources, the organization, categorization, and summarization of these data present a challenge, the extent of which becomes even more apparent when one considers that a majority of these resources do not contain any adequate meta information. Manual categorization and tagging of documents is infeasible due to the large amount of data; therefore, the development of algorithms capable of tackling these tasks automatically and efficiently has become a necessity (Firoozeh et al. 2020).

One of the crucial tasks for the organization of textual resources is keyword identification, which deals with the automatic extraction of words that represent crucial semantic aspects of the text and summarize its content. The first automated solutions to keyword extraction were proposed more than a decade ago (Witten et al. 1999; Mihalcea and Tarau 2004), and the task is currently gaining traction again, with several new algorithms proposed in recent years. Novel unsupervised approaches, such as RaKUn (Škrlj, Repar, and Pollak 2019) and YAKE (Campos et al. 2018), work fairly well and have some advantages over supervised approaches, as they are language and genre independent, do not require any training, and are computationally undemanding. On the other hand, they also have a couple of crucial deficiencies:

• Term frequency–inverse document frequency (TfIdf) and graph-based features, such as PageRank, used by these systems to detect the importance of each word in the document, are based only on simple statistics like word occurrence and co-occurrence, and are therefore unable to grasp the entire semantic information of the text.

• Since these systems cannot be trained, they cannot be adapted to the specifics of the syntax, semantics, content, genre, and keyword assignment regime of a specific text (e.g., variance in the number of keywords).

These deficiencies result in much worse performance when compared to the state-of-the-art supervised algorithms (see Table 2), which have direct access to the gold-standard keyword set for each text during the training phase, enabling more efficient adaptation. The most recent supervised neural algorithms (Chen et al. 2018; Meng et al. 2019; Yuan et al. 2020) therefore achieve excellent performance under satisfactory training conditions and can model semantic relations much more efficiently than algorithms based on simpler word frequency statistics. On the other hand, these algorithms are resource demanding, require vast amounts of domain-specific data for training, and therefore cannot be used in domains and languages that lack manually labeled resources of sufficient size.

In this research, we propose the Transformer-Based Neural Tagger for Keyword IDentification (TNT-KID), which is capable of overcoming the aforementioned deficiencies of supervised and unsupervised approaches. We show that, while requiring only a fraction of the manually labeled data required by other neural approaches, the proposed approach achieves performance comparable to the state-of-the-art supervised approaches on test sets for which a lot of manually labeled training data are available. On the other hand, if training data sufficiently similar to the test data are scarce, our model outperforms state-of-the-art approaches by a large margin. This is achieved by leveraging the transfer learning technique, where a keyword tagger is first trained in an unsupervised way as a language model on a large corpus and then fine-tuned on a (usually) small corpus with manually labeled keywords. By conducting experiments on two different domains, computer science articles and news, we show that language model pretraining allows the algorithm to successfully adapt to a specific domain and grasp the semantic information of the text, which drastically reduces the amount of labeled data needed for training the keyword detector.

The transfer learning technique (Peters et al. 2018; Howard and Ruder 2018), which has recently become a well-established procedure in the field of natural language processing (NLP), in a large majority of cases relies on very large unlabeled textual resources used for language model pretraining. For example, the well-known English BERT model (Devlin et al. 2019) was pretrained on the Google Books Corpus (Goldberg and Orwant 2013) (800 million tokens) and Wikipedia (2500 million tokens). In contrast, we show that smaller unlabeled domain-specific corpora (87 million tokens for the computer science domain and 232 million tokens for the news domain) can be successfully used for unsupervised pretraining, which makes the proposed approach easily transferable to languages with fewer textual resources and also makes training more feasible in terms of the time and computing resources available.

Unlike most other proposed state-of-the-art neural keyword extractors (Meng et al. 2017, 2019; Chen et al. 2018; Ye and Wang 2018; Yuan et al. 2020), we do not employ recurrent neural networks but instead opt for a transformer architecture (Vaswani et al. 2017), which has not been widely employed for the task at hand. In fact, the study by Sahrawat et al. (2020) is the only study we are aware of that employs transformers for the keyword extraction task. Another difference between our approach and the most recent state-of-the-art approaches from the related work is the task formulation. While Meng et al. (2017, 2019) and Yuan et al. (2020) formulate keyword extraction as a sequence-to-sequence generation task, where the classifier is trained to generate an output sequence of keyword tokens step by step according to the input sequence and the previously generated output tokens, we formulate keyword extraction as a sequence labeling task, similarly to Gollapalli, Li, and Yang (2017), Luan, Ostendorf, and Hajishirzi (2017), and Sahrawat et al. (2020).

Besides presenting a novel keyword extraction procedure, the study also offers an extensive error analysis, in which the visualization of transformer attention heads is used to gain insights into the inner workings of the model and in which we pinpoint key factors responsible for the differences in performance between TNT-KID and other state-of-the-art approaches. Finally, this study also offers a systematic evaluation of several building blocks and techniques used in a keyword extraction workflow in the form of an ablation study. Besides determining the extent to which transfer learning affects the performance of the keyword extractor, we also compare two different pretraining objectives, autoregressive language modeling and masked language modeling (Devlin et al. 2019), and measure the influence of transformer architecture adaptations, the choice of input encoding scheme, and the addition of part-of-speech (POS) information on the performance of the model.

The paper is structured as follows. Section 2 addresses the related work on keyword identification and covers several supervised and unsupervised approaches to the task at hand. Section 3 describes the methodology of our approach, while in Section 4 we present the datasets, conducted experiments and results. Section 5 covers error analysis, Section 6 presents the conducted ablation study, while the conclusions and directions for further work are addressed in Section 7.

2. Related work

This section gives an overview of selected methods for keyword extraction, supervised in Section 2.1 and unsupervised in Section 2.2. The related work focuses on the newest keyword extraction methods; for a more comprehensive survey of slightly older methods, we refer the reader to Hasan and Ng (2014).

2.1 Supervised keyword extraction methods

Traditional supervised approaches to keyword extraction considered the task as a two-step process (the same is true for unsupervised approaches). First, a number of syntactic and lexical features are used to extract keyword candidates from the text. Second, the extracted candidates are ranked according to different heuristics and the top n candidates are selected as keywords (Yuan et al. 2020). One of the first supervised approaches to keyword extraction was proposed by Witten et al. (1999), whose algorithm, named KEA, uses only TfIdf and the term’s position in the text as features for term identification. These features are fed to a Naive Bayes classifier, which is used to determine for each word or phrase in the text whether it is a keyword or not. Medelyan, Frank, and Witten (2009) built on the KEA approach and proposed the Maui algorithm, which also relies on the Naive Bayes classifier for candidate selection but employs additional semantic features, such as node degree, which quantifies the semantic relatedness of a candidate to other candidates, and Wikipedia-based keyphraseness, which is the likelihood of a phrase being a link in Wikipedia.
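
The two features used by KEA can be illustrated with a minimal sketch. The function names, the toy corpus, and the unsmoothed TfIdf variant below are assumptions made for illustration only; they are not taken from the KEA implementation, and the Naive Bayes classification step is omitted.

```python
import math

def tfidf(term, doc_tokens, corpus):
    """TfIdf of `term` in one document, given a corpus of tokenized documents."""
    tf = doc_tokens.count(term) / len(doc_tokens)
    df = sum(1 for d in corpus if term in d)
    return tf * math.log(len(corpus) / df) if df else 0.0

def first_position(term, doc_tokens):
    """Relative position of the first occurrence (0.0 = start of the text)."""
    return doc_tokens.index(term) / len(doc_tokens)

# Toy corpus (hypothetical data, for illustration only).
corpus = [
    "keyword extraction with neural networks".split(),
    "neural networks for image classification".split(),
    "graph based ranking of words".split(),
]
doc = corpus[0]

# One (TfIdf, first position) feature pair per candidate term.
features = {t: (tfidf(t, doc, corpus), first_position(t, doc)) for t in set(doc)}
```

In this toy setting, a term that occurs in fewer documents of the corpus receives a higher TfIdf value than an equally frequent term that occurs in more of them.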

The study by Wang, Peng, and Hu (2006) was one of the first to apply a feedforward neural network classifier to the task at hand. This approach still relied on manual feature engineering and features such as TfIdf and the appearance of the keyphrase candidate in the title or heading of the given document. On the other hand, Villmow, Wrzalik, and Krechel (2018) applied a Siamese Long Short-Term Memory (LSTM) network for keyword extraction, which no longer required manual engineering of statistical features.

A more recent supervised approach is the so-called sequence labeling approach to keyword extraction by Gollapalli et al. (2017), where the idea is to train a keyword tagger using token-based linguistic, syntactic, and structural features. The approach relies on a trained Conditional Random Field (CRF) tagger, and the authors demonstrated that this approach is capable of working on par with slightly older state-of-the-art systems that rely on information from Wikipedia and citation networks, even if only within-document features are used. In another sequence labeling approach, proposed by Luan et al. (2017), a sophisticated neural network is built by combining an input layer comprising a concatenation of word, character, and part-of-speech embeddings, a bidirectional Long Short-Term Memory (BiLSTM) layer, and a CRF tagging layer. They also propose a new semi-supervised graph-based training regime for training the network.

Some of the most recent state-of-the-art approaches to keyword detection consider the problem as a sequence-to-sequence generation task. The first study leveraging this tactic was conducted by Meng et al. (2017), who employed a generative model for keyword prediction with a recurrent encoder–decoder framework with an attention mechanism capable of detecting keywords in the input text sequence and also potentially finding keywords that do not appear in the text. Since finding absent keywords involves the very hard problem of finding a correct class in a set of usually thousands of unbalanced classes, their model also employs a copying mechanism (Gu et al. 2016) based on positional information, in order to allow the model to find important keywords present in the text, which is a much easier problem.

The approach was further improved by Chen et al. (2018), who proposed additional mechanisms that handle repetitions and increase keyphrase diversity. In their system, named CorrRNN, the so-called coverage vector is employed to check whether a word in the document has been summarized by previous keyphrases. In addition, before the generation of each new keyphrase, preceding phrases are taken into account to eliminate the generation of duplicate phrases.

Another improvement was proposed by Ye and Wang (2018), who tried to reduce the amount of data needed for successful training of the model proposed by Meng et al. (2017). They propose a semi-supervised keyphrase generation method (in Section 4, we refer to this model as Semi-supervised CopyRNN), which, besides the labeled samples, also leverages unlabeled samples that are labeled in advance with synthetic keyphrases obtained with unsupervised keyword extraction methods or by employing a self-learning algorithm. The novel keyword extraction approach proposed by Wang et al. (2018) also tries to reduce the amount of labeled data needed. The employed Topic-Based Adversarial Neural Network (TANN) is capable of leveraging the unlabeled data in the target domain and also data from the resource-rich source domain for keyword extraction in the target domain. They propose a special topic correlation layer, in order to incorporate the global topic information into the document representation, and a set of domain-invariant features, which allow the transfer from the source to the target domain by adversarial training on the topic-based representations.

The study by Meng et al. (2019) tried to improve the approach proposed in their previous study (Meng et al. 2017) by investigating different ways in which the target keywords can be fed to a classifier during the training phase. While the original system used the so-called one-to-one approach, where a training example consists of an input text and a single keyword, the improved model employs a one-to-seq approach, where an input text is matched with a concatenated sequence made of all the keywords for a specific text. The study also shows that the order of the keywords in the text matters. The best-performing model from Meng et al. (2019), named CopyRNN, is used in our experiments for the comparison with the state of the art (see Section 4). The one-to-seq approach was further improved by Yuan et al. (2020), who incorporated two diversity mechanisms into the model. The mechanisms (called semantic coverage and orthogonal regularization) constrain the overall inner representation of a generated keyword sequence to be semantically similar to the overall meaning of the source text and therefore force the model to produce diverse keywords. The resulting model leveraging these mechanisms has been named CatSeqD and is also used in our experiments for the comparison between TNT-KID and the state of the art.
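
The difference between the one-to-one and one-to-seq formulations lies in how training pairs are built from a document and its gold-standard keywords. The following is a minimal sketch of that data preparation step only; the function names and the `<sep>` delimiter are hypothetical and are not taken from the cited systems.

```python
def one_to_one(text, keywords):
    """One training pair per (text, keyword) combination."""
    return [(text, kw) for kw in keywords]

def one_to_seq(text, keywords, sep=" <sep> "):
    """A single training pair whose target concatenates all keywords."""
    return [(text, sep.join(keywords))]

# Hypothetical example document and keywords.
text = "a transformer tagger for keyword identification"
keywords = ["transformer", "keyword identification"]

pairs_one_to_one = one_to_one(text, keywords)
pairs_one_to_seq = one_to_seq(text, keywords)
```

Because the one-to-seq target is a single concatenated sequence, the order in which the keywords are joined becomes part of what the model has to learn.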

A further improvement of the generative approach towards keyword detection was proposed by Chan et al. (2019), who integrated a reinforcement learning (RL) objective into the keyphrase generation approach proposed by Yuan et al. (2020). This is done by introducing an adaptive reward function that encourages the model to generate a sufficient number of accurate keyphrases. They also propose a new Wikipedia-based evaluation method that can more robustly evaluate the quality of the predicted keyphrases by also considering name variations of the ground truth keyphrases.

We are aware of one study that tackled keyword detection with transformers. Sahrawat et al. (2020) fed contextual embeddings generated using several transformer and recurrent architectures (BERT (Devlin et al. 2019), RoBERTa (Liu et al. 2019), GPT-2 (Radford et al. 2019), ELMo (Peters et al. 2018), etc.) into two distinct neural architectures, a bidirectional Long Short-Term Memory network (BiLSTM) and a BiLSTM network with an additional conditional random fields layer (BiLSTM-CRF). As in Gollapalli et al. (2017), they formulate keyword extraction as a sequence labeling task, in which each word in the document is assigned one of three possible labels:

$k_b$ denotes that the word is the first word in a keyphrase, $k_i$ means that the word is inside a keyphrase, and $k_o$ indicates that the word is not part of a keyphrase.
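
The labeling scheme can be sketched as a simple conversion from a tokenized document and its gold-standard keyphrases to one label per token. The function name and the exact-match strategy are assumptions made for illustration; overlapping keyphrases simply overwrite earlier labels in this simplified version.

```python
def tag_keyphrases(tokens, keyphrases):
    """Assign k_b / k_i / k_o labels to tokens, given tokenized keyphrases."""
    labels = ["k_o"] * len(tokens)
    for phrase in keyphrases:
        n = len(phrase)
        if n == 0:
            continue  # ignore empty phrases
        for start in range(len(tokens) - n + 1):
            if tokens[start:start + n] == phrase:
                labels[start] = "k_b"            # first word of the keyphrase
                for i in range(start + 1, start + n):
                    labels[i] = "k_i"            # word inside the keyphrase
    return labels

tokens = "we study neural keyword extraction for news".split()
labels = tag_keyphrases(tokens, [["keyword", "extraction"], ["news"]])
```

Here the tokens "keyword" and "news" open a keyphrase, "extraction" continues one, and all remaining tokens fall outside any keyphrase.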

The study shows that contextual embeddings generated by transformer architectures generally perform better than static embeddings (e.g., FastText embeddings; Bojanowski et al. 2017) and that, among them, BERT showcases the best performance. Since all of the keyword detection experiments are conducted on scientific articles, they also test SciBERT (Beltagy, Lo, and Cohan 2019), a version of BERT pretrained on a large multi-domain corpus of scientific publications containing 1.14M papers sampled from Semantic Scholar. They observe that this genre-specific pretraining on texts of the same genre as the texts in the keyword datasets slightly improves the performance of the model. They also report significant gains in performance when the BiLSTM-CRF architecture is used instead of BiLSTM.

The neural sequence-to-sequence models are capable of outperforming all older supervised and unsupervised models by a large margin, but they require very large training corpora with tens of thousands of documents for successful training. This means that their use is limited to languages (and genres) for which large corpora with manually labeled keywords exist. On the other hand, the study by Sahrawat et al. (2020) indicates that the employment of contextual embeddings reduces the need for a large dataset with manually labeled keywords. These models can therefore be deployed directly on smaller datasets by leveraging semantic information already encoded in contextual embeddings.

2.2 Unsupervised keyword extraction methods

The previous section discussed recently emerged methods for keyword extraction that operate in a supervised learning setting and can be data-intensive and time-consuming. Unsupervised keyword detectors can tackle these two problems, yet at the cost of reduced overall performance.

Unsupervised approaches need no training and can be applied directly without relying on a gold-standard document collection. In general, they can be divided into four main categories, namely statistical, graph-based, embedding-based, and language model-based methods:

• Statistical methods, such as KP-MINER (El-Beltagy and Rafea 2009), RAKE (Rose et al. 2010), and YAKE (Campos et al. 2018), use statistical characteristics of the texts to capture keywords. An extensive survey of these methods is presented in the study by Merrouni, Frikh, and Ouhbi (2020).

• Graph-based methods, such as TextRank (Mihalcea and Tarau 2004), SingleRank (Wan and Xiao 2008) and its extension ExpandRank (Wan and Xiao 2008), TopicRank (Bougouin, Boudin, and Daille 2013), Topical PageRank (Sterckx et al. 2015), KeyCluster (Liu et al. 2009), and RaKUn (Škrlj et al. 2019), build graphs to rank words based on their position in the graph. A survey by Merrouni et al. (2020) also offers good coverage of graph-based algorithms.

• Embedding-based methods, such as the methods proposed by Wang, Liu, and McDonald (2015a), Key2Vec (Mahata et al. 2018), and EmbedRank (Bennani-Smires et al. 2018), employ semantic information from distributed word and sentence representations (i.e., embeddings) for keyword extraction. These methods are covered in more detail in the survey by Papagiannopoulou and Tsoumakas (2020).

• Language model-based methods, such as the ones proposed by Tomokiyo and Hurst (2003) and Liu et al. (2011), on the other hand, use language model-derived statistics to extract keywords from text. The methods are well covered in surveys by Papagiannopoulou and Tsoumakas (2020) and Çano and Bojar (2019).

Among the statistical approaches, the state-of-the-art keyword extraction algorithm is YAKE (Campos et al. 2018). It defines a set of features capturing keyword characteristics, which are heuristically combined to assign a single score to every keyword. These features include casing, position, frequency, relatedness to context, and dispersion of a specific term. Another recent statistical method, proposed by Won, Martins, and Raimundo (2019), shows that it is possible to build a very competitive keyword extractor by using morpho-syntactic patterns for the extraction of candidate keyphrases and afterward employing simple textual statistical features (e.g., term frequency, inverse document frequency, position measures, etc.) to calculate ranking scores for each candidate.

One of the first graph-based methods for keyword detection is TextRank (Mihalcea and Tarau 2004), which first extracts a lexical graph from text documents and then leverages Google’s PageRank algorithm to rank vertices in the graph according to their importance inside the graph. This approach was somewhat upgraded by TopicRank (Bougouin et al. 2013), where candidate keywords are additionally clustered into topics and used as vertices in the graph. Keywords are detected by selecting a candidate from each of the top-ranked topics. Another method that employs PageRank is PositionRank (Florescu and Caragea 2017). Here, a word-level graph that incorporates positional information about each word occurrence is constructed. One of the most recent graph-based keyword detectors is RaKUn (Škrlj et al. 2019), which employs several new techniques for graph construction and vertex ranking. First, the initial lexical graph is expanded and adapted with the introduction of meta-vertices, that is, aggregates of existing vertices. Second, for keyword detection and ranking, a graph-theoretic load centrality measure is used along with the implemented graph redundancy filters.
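
The general idea behind TextRank-style ranking, building a lexical co-occurrence graph and scoring its vertices with PageRank, can be sketched as follows. This is a simplified illustration under stated assumptions, not a reimplementation of any of the cited systems: the window size, the damping factor, the normalized form of the PageRank update, and the absence of any part-of-speech filtering are all choices made here for brevity.

```python
from collections import defaultdict

def cooccurrence_graph(tokens, window=2):
    """Undirected graph linking words that co-occur within `window` tokens."""
    graph = defaultdict(set)
    for i, word in enumerate(tokens):
        for other in tokens[i + 1:i + window]:
            if other != word:
                graph[word].add(other)
                graph[other].add(word)
    return graph

def pagerank(graph, damping=0.85, iterations=50):
    """Plain power-iteration PageRank on an undirected graph."""
    n = len(graph)
    scores = {node: 1.0 / n for node in graph}
    for _ in range(iterations):
        scores = {
            node: (1 - damping) / n
            + damping * sum(scores[nb] / len(graph[nb]) for nb in graph[node])
            for node in graph
        }
    return scores

tokens = "graph ranking uses graph structure and graph centrality".split()
scores = pagerank(cooccurrence_graph(tokens))
top_word = max(scores, key=scores.get)
```

In this toy example, the word with the most distinct neighbors in the co-occurrence graph receives the highest score.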

Besides employing PageRank on a document’s words and phrases, there are other options for building a graph. For example, in the CommunityCluster method proposed by Grineva, Grinev, and Lizorkin (2009), a single document is represented as a graph of semantic relations between terms that appear in that document. On the other hand, the CiteTextRank approach (Gollapalli and Caragea 2014), used for the extraction of keywords from scientific articles, leverages additional contextual information derived from a citation network in which a specific document is referenced. Finally, the SGRank (Danesh, Sumner, and Martin 2015) and KeyphraseDS (Yang et al. 2017) methods belong to a family of so-called hybrid statistical graph algorithms. SGRank ranks candidate keywords extracted from the text according to a set of statistical heuristics (position of the first occurrence, term length, etc.), and the produced ranking is fed into a graph-based algorithm, which conducts the final ranking. In the KeyphraseDS approach, keyword extraction consists of three steps: candidates are first extracted with a CRF model and a keyphrase graph is constructed from the candidates; spectral clustering, which takes into consideration knowledge and topic-based semantic relatedness, is conducted on the graph; and final candidates are selected through an integer linear programming (ILP) procedure, which considers the semantic relevance and diversity of each candidate.

The first keyword extraction method that employed embeddings was proposed by Wang et al. (2015a). Here, a word graph is created, in which the edges have weights based on word co-occurrence and the Euclidean distance between word embeddings. A weighted PageRank algorithm is used to rank the words. This method is further improved in Wang, Liu, and McDonald (2015b), where a personalized weighted PageRank is employed together with pretrained word embeddings. Mahata et al. (2018) suggested a further improvement to the approach by introducing domain-specific embeddings, which are trained on multiword candidate phrases extracted from corpus documents. Cosine distance is used to measure the distance between embeddings and a directed graph is constructed, in which candidate keyphrases are represented as vertices. The final ranking is derived by using a theme-weighted PageRank algorithm (Langville and Meyer 2004).

An intriguing embedding-based solution was proposed by Papagiannopoulou and Tsoumakas (2018). Their Reference Vector Algorithm (RVA) for keyword extraction employs so-called local word embeddings, which are embeddings trained on the single document from which keywords need to be extracted.

Yet another state-of-the-art embedding-based keyword extraction method is EmbedRank (Bennani-Smires et al. 2018). In the first step, candidate keyphrases are extracted according to a part-of-speech (POS)-based pattern (phrases consisting of zero or more adjectives followed by one or more nouns). Sent2Vec embeddings (Pagliardini, Gupta, and Jaggi 2018) are used for the representation of candidate phrases and documents in the same vector space. Each phrase is ranked according to the cosine distance between the candidate phrase and the embedding of the document in which it appears.
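
The ranking step of such an embedding-based extractor can be sketched with toy vectors. The vectors below are made up for illustration and do not come from Sent2Vec or any other embedding model, and the function names are hypothetical. Sorting by decreasing cosine similarity is equivalent to sorting by increasing cosine distance.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank_candidates(doc_vec, candidate_vecs):
    """Sort candidate phrases by similarity to the document embedding."""
    return sorted(
        candidate_vecs,
        key=lambda phrase: cosine_similarity(candidate_vecs[phrase], doc_vec),
        reverse=True,
    )

# Toy embeddings (hypothetical values, for illustration only).
doc_vec = [0.9, 0.1, 0.3]
candidate_vecs = {
    "keyword extraction": [0.8, 0.2, 0.3],
    "weather": [0.0, 1.0, 0.1],
    "neural tagger": [0.7, 0.0, 0.6],
}
ranking = rank_candidates(doc_vec, candidate_vecs)
```

Candidates whose vectors point in nearly the same direction as the document vector end up at the top of the ranking.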

Language model-based keyword extraction algorithms are less common than other approaches. Tomokiyo and Hurst (2003) extracted keyphrases by employing several unigram and n-gram language models and by measuring the KL divergence (Vidyasagar 2010) between them. Two features are used in the system: phraseness, which measures if a given word sequence is a phrase, and informativeness, which measures how well a specific keyphrase captures the most important ideas in the document. Another interesting approach is the one proposed by Liu et al. (2011), which relies on the idea that keyphrasing can be considered as a type of translation, in which documents are translated into the language of keyphrases. Statistical machine translation word alignment techniques are used for the calculation of matching probabilities between words in the documents and keyphrases.
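
To give an intuition for how divergence between language models can yield phraseness and informativeness scores, the sketch below computes the contribution of a single bigram to the KL divergence between simple count-based models. It is only one plausible reading of the idea and not the procedure of the cited study: the foreground/background split, the maximum-likelihood estimates, and the add-one smoothing of the background model are all assumptions made here.

```python
import math
from collections import Counter

def pointwise_kl(p, q):
    """Contribution of a single event to KL(p || q); zero if p is zero."""
    return p * math.log(p / q) if p > 0 else 0.0

def bigram_scores(phrase, fg_tokens, bg_tokens):
    """Return (phraseness, informativeness) for a bigram given as a tuple."""
    w1, w2 = phrase
    fg_uni = Counter(fg_tokens)
    fg_bi = Counter(zip(fg_tokens, fg_tokens[1:]))
    bg_bi = Counter(zip(bg_tokens, bg_tokens[1:]))
    n_fg, n_bg = len(fg_tokens), len(bg_tokens)

    # Bigram probability in the foreground (document-side) text.
    p_bigram = fg_bi[phrase] / (n_fg - 1)
    # Probability of the same words under an independent unigram model.
    p_independent = (fg_uni[w1] / n_fg) * (fg_uni[w2] / n_fg)
    # Add-one smoothed bigram probability in the background text.
    p_background = (bg_bi[phrase] + 1) / (n_bg - 1 + len(bg_bi) + 1)

    phraseness = pointwise_kl(p_bigram, p_independent)
    informativeness = pointwise_kl(p_bigram, p_background)
    return phraseness, informativeness

# Toy foreground and background texts (hypothetical data).
fg = "keyword extraction is hard keyword extraction is useful".split()
bg = "the weather is nice and the weather is warm".split()
phraseness, informativeness = bigram_scores(("keyword", "extraction"), fg, bg)
```

A bigram that occurs more often than its words would by chance gets a positive phraseness score, and one that is more frequent in the foreground than in the background gets a positive informativeness score.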

3. Methodology

This section presents the methodology of our approach. Section 3.1 presents the architecture of the neural model, Section 3.2 covers the transfer learning techniques used, Section 3.3 explains how the final fine-tuning phase of the keyword detection workflow is conducted, and Section 4.3 covers evaluation of the model.

3.1 Architecture

The model follows the architectural design of the original transformer encoder (Vaswani et al. 2017) and is shown in Figure 1(a). As in the GPT-2 architecture (Radford et al. 2019), the encoder consists of a normalization layer that is followed by a multi-head attention mechanism. A residual connection is employed around the attention mechanism, which is followed by another layer normalization. This is followed by the fully connected feedforward and dropout layers, around which another residual connection is employed.
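
The ordering of the sublayers described above can be summarized in a short structural sketch. It shows only the placement of the normalization layers and residual connections; the attention and feedforward sublayers are passed in as placeholder callables, the learned gain and bias of layer normalization are omitted, and all names are hypothetical rather than taken from the TNT-KID implementation.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance."""
    out = []
    for vec in x:
        mean = sum(vec) / len(vec)
        var = sum((v - mean) ** 2 for v in vec) / len(vec)
        out.append([(v - mean) / math.sqrt(var + eps) for v in vec])
    return out

def add(x, y):
    """Element-wise sum of two matrices, used for residual connections."""
    return [[a + b for a, b in zip(u, v)] for u, v in zip(x, y)]

def encoder_block(x, attention, feedforward, dropout=lambda h: h):
    # Normalization followed by attention, with a residual around attention.
    x = add(x, attention(layer_norm(x)))
    # Second normalization, then feedforward and dropout, with another residual.
    return add(x, dropout(feedforward(layer_norm(x))))
```

With sublayers that output zeros, the block reduces to the identity, which makes the role of the two residual connections easy to check.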

Figure 1. TNT-KID’s architecture overview. (a) Model architecture. (b) The attention mechanism.

For two distinct training phases, language model pretraining and fine-tuning, two distinct “heads” are added on top of the encoder, which is identical for both phases and therefore allows for the transfer of weights from the pretraining phase to the fine-tuning phase. The language model head predicts the probability for each word in the vocabulary that it appears at a specific position in the sequence and consists of a dropout layer and a feedforward layer, which returns the output matrix of size $\textrm{SL} * |V|$, where $\textrm{SL}$ stands for sequence length (i.e., a number of words in the input text) and $|V|$ stands for the vocabulary size. This is followed by the adaptive softmax layer (Grave et al. 2017) (see description below).

During fine-tuning, the language model head is replaced with a token classification head, in which we apply ReLU nonlinearity and dropout to the encoder output, and then feed the output to the feedforward classification layer, which returns the output matrix of size $\textrm{SL} * \textrm{NC}$, where $\textrm{NC}$ stands for the number of classes (in our case 2, since we model keyword extraction as a binary classification task, see Section 3.3 for more details). Finally, a softmax layer is added in order to obtain probabilities for each class.

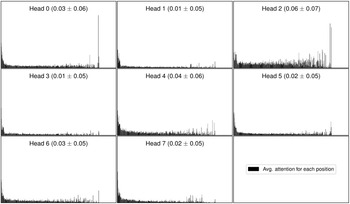

We also propose some significant modifications of the original GPT-2 architecture. First, we propose a re-parametrization of the attention mechanism (see Figure 1(b)). In the original transformer architecture, positional embedding is simply summed to the input embedding and fed to the encoder. While this allows the model to learn to attend by relative positions, the positional information is nevertheless fed to the attention mechanism in an indirect aggregated manner. On the other hand, we propose to feed the positional encoding to the attention mechanism directly, since we hypothesize that this would not only allow modeling of the relative positions between tokens but would also allow the model to better distinguish between the positional and semantic/grammatical information and therefore make it possible to assign attention to some tokens purely on the basis of their position in the text. The reason behind this modification is connected with the hypothesis that token position is especially important in the keyword identification task and with this re-parametrization the model would be capable of directly modeling the importance of relation between each token and each position. Note that we use relative positional embeddings for representing the positional information, same as in Dai et al. (Reference Dai, Yang, Yang, Carbonell, Le and Salakhutdinov2019), where the main idea is to only encode the relative positional information in the hidden states instead of the absolute.

Standard scaled dot-product attention (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017) requires three inputs: the query, key, and value matrix representations of the embedded input sequence and its positional information (i.e., element-wise addition of input embeddings and positional embeddings). The idea is to obtain attention scores (in the shape of an attention matrix) for each relation between tokens inside these inputs by first multiplying the query (Q) and transposed key (K) matrix representations, applying scaling and softmax functions, and finally multiplying the resulting normalized matrix with the value (V) matrix, or more formally,

\[ \textrm{Attention}(Q,K,V) = \textrm{softmax} \bigg ( \frac{QK^T}{\sqrt{d_k}} \bigg ) V \]

where $d_k$ represents the scaling factor, usually corresponding to the first dimension of the key matrix. On the other hand, we propose to add an additional positional input representation matrix $K_{\textrm{position}}$ and model attention with the following equation:

\[ \textrm{Attention}(Q,K,V, K_{\rm position}) = \textrm{softmax} \bigg ( \frac{QK^T + QK_{\rm position}^T}{\sqrt{d_k}} \bigg ) V \]
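The re-parametrized attention above can be illustrated with a minimal pure-Python sketch. It is not the TNT-KID implementation: the function names are hypothetical, matrices are plain nested lists, $d_k$ is taken to be the length of a key vector, and the positional keys are simplified to one vector per key position (a relative-position scheme as in Dai et al. would need additional index shifting).

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul_transposed(a, b):
    """Return a @ b^T for two row-major matrices given as nested lists."""
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b] for row_a in a]


def attention_with_position(q, k, v, k_position):
    """softmax((Q K^T + Q K_position^T) / sqrt(d_k)) V, plus the attention weights."""
    scale = math.sqrt(len(k[0]))  # assumption: d_k is the key vector length
    content = matmul_transposed(q, k)            # Q K^T
    position = matmul_transposed(q, k_position)  # Q K_position^T
    weights = [
        softmax([(c + p) / scale for c, p in zip(c_row, p_row)])
        for c_row, p_row in zip(content, position)
    ]
    output = [
        [sum(w * v_row[col] for w, v_row in zip(w_row, v)) for col in range(len(v[0]))]
        for w_row in weights
    ]
    return output, weights
```

With an all-zero `k_position` the function reduces to standard scaled dot-product attention, which makes the effect of the additional positional term easy to inspect in isolation.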

Second, besides the text input, we also experiment with the additional part-of-speech (POS) tag sequence as an input. This sequence is first embedded and then added to the word embedding matrix. Note that this additional input is optional and is not included in the model for which the results are presented in Section 4.4 due to marginal effect on the performance of the model in the proposed experimental setting (see Section 6).

The third modification involves replacing the standard input embedding layer and softmax function with adaptive input representations (Baevski and Auli Reference Baevski and Auli2019) and an adaptive softmax (Grave, Joulin, Cissé, Grangier and Jégou Reference Grave, Joulin, Cissé, Grangier and Jégou2017). While the modifications presented above affect both training phases (i.e., the language model pretraining and the token classification fine-tuning), the third modification only affects the language model pretraining (see Section 3.2). The main idea is to exploit the unbalanced word distribution to form word clusters containing words with similar appearance probabilities. The entire vocabulary is split into a smaller cluster containing words that appear most frequently, a second (usually slightly bigger) cluster that contains words that appear less frequently, and a third (and optionally a fourth) cluster that contains all the other words that appear rarely in the corpus. During language model training, instead of predicting an entire vocabulary distribution at each time step, the model first tries to predict the cluster in which a target word appears and after that predicts a vocabulary distribution just for the words in that cluster. Since in a large majority of cases the target word belongs to the smallest cluster containing the most frequent words, the model in most cases only needs to generate a probability distribution for less than a tenth of the vocabulary, which drastically reduces the memory requirements and time complexity of the model at the expense of a marginal drop in performance.

We experiment with two tokenization schemes, word tokenization and Sentencepiece (Kudo and Richardson Reference Kudo and Richardson2018) byte pair encoding (see Section 4 for details) and for these two schemes, we employ two distinct cluster distributions due to differences in vocabulary size. When word tokenization is employed, the vocabulary size tends to be bigger (e.g., reaching up to 600,000 tokens in our experiments on the news corpora), therefore in this setting, we employ four clusters, first one containing 20,000 most frequent words, second one containing 20,000 semi-frequent words, third one containing 160,000 less frequent words, and the fourth cluster containing the remaining least frequent words in the vocabulary.Footnote b When byte pair encoding is employed, the vocabulary is notably smaller (i.e., containing about 32,000 tokens in all experiments) and the clustering procedure is strictly speaking no longer necessary. Nevertheless, since the initial experiments showed that the performance of the model does not worsen if the majority of byte pair tokens is kept in the first cluster, we still employ the clustering procedure in order to reduce the time complexity of the model, but nevertheless adapt the cluster distribution. We only apply three clusters: the first one contains 20,000 most frequent byte pairs, same as when word tokenization is employed; the second cluster is reduced to contain only 10,000 semi-frequent byte pairs; the third cluster contains only about 2000 least frequent byte pairs.
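The two cluster distributions described above can be expressed as cumulative cutoffs over the frequency-ranked vocabulary. The following sketch is illustrative only: the helper names are hypothetical, and the vocabulary sizes of 600,000 and 32,000 are the approximate figures quoted in the text, not exact values.

```python
from bisect import bisect_right

# Cumulative cutoffs over tokens sorted from most to least frequent.
WORD_CUTOFFS = [20_000, 40_000, 200_000]  # four clusters
BPE_CUTOFFS = [20_000, 30_000]            # three clusters


def cluster_sizes(vocab_size, cutoffs):
    """Number of tokens that fall into each cluster."""
    bounds = [0] + list(cutoffs) + [vocab_size]
    return [high - low for low, high in zip(bounds, bounds[1:])]


def cluster_of(rank, cutoffs):
    """Cluster index for a token with the given 0-based frequency rank."""
    return bisect_right(cutoffs, rank)


print(cluster_sizes(600_000, WORD_CUTOFFS))  # [20000, 20000, 160000, 400000]
print(cluster_sizes(32_000, BPE_CUTOFFS))    # [20000, 10000, 2000]
```

Under this layout, any token whose rank falls below the first cutoff is resolved within the first cluster alone, which is where the reduction in computation comes from.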

We also present a modification that only affects the fine-tuning token classification phase (see Section 3.3). During this phase, a two-layer randomly initialized encoder, consisting of dropout and two bidirectional Long Short-Term Memory (BiLSTM) layers, is added (with element-wise summation) to the output of the transformer encoder. The initial motivation behind this adaptation comes from findings in the related work, which suggest that recurrent layers are quite successful at modeling positional importance of tokens in the keyword detection task (Meng et al. Reference Meng, Zhao, Han, He, Brusilovsky and Chi2017; Yuan et al. Reference Yuan, Wang, Meng, Thaker, Brusilovsky, He and Trischler2020), and from the study of Sahrawat et al. (Reference Sahrawat, Mahata, Kulkarni, Zhang, Gosangi, Stent, Sharma, Kumar, Shah and Zimmermann2020), who also reported good results when a BiLSTM classifier and contextual embeddings generated by transformer architectures were employed for keyword detection. Also, the results of the initial experiments suggested that some performance gains can in fact be achieved by employing this modification.

In terms of computational complexity, a self-attention layer complexity is $ \mathcal{O}(n^2 * d)$ and the complexity of the recurrent layer is $ \mathcal{O}(n * d^2)$, where n is the sequence length and d is the embedding size (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017). This means that the complexity of the transformer model with an additional BiLSTM encoder is therefore $ \mathcal{O}(n^2 * d^2)$. In terms of the number of operations required, the standard TNT-KID model encoder employs the sequence size of 512, embedding size of 512 and 8 attention layers, resulting in altogether $512^2 * 512 * 8 = 1,073,741,824$ operations. By adding the recurrent encoder with two recurrent bidirectional layers (which is the same as adding four recurrent layers, since each bidirectional layer contains two unidirectional LSTM layers), the number of operations increases by $512 * 512^2 * 4 = 536,870,912$. In practice, this means that the model with the additional recurrent encoder conducts token classification roughly 50% slower than the model without the encoder. Note that this addition does not affect the language model pretraining, which tends to be the more time demanding task due to larger corpora involved.
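The operation counts quoted above can be reproduced with a few lines of arithmetic. The function names are hypothetical and the counts follow the simplified per-layer estimates used in the text, not a measured FLOP count.

```python
def attention_ops(n, d, layers):
    """Simplified estimate: n^2 * d operations per self-attention layer."""
    return n ** 2 * d * layers


def recurrent_ops(n, d, layers):
    """Simplified estimate: n * d^2 operations per unidirectional recurrent layer."""
    return n * d ** 2 * layers


base = attention_ops(n=512, d=512, layers=8)
# Two bidirectional layers are counted as four unidirectional LSTM layers.
extra = recurrent_ops(n=512, d=512, layers=4)

print(f"{base:,}")   # 1,073,741,824
print(f"{extra:,}")  # 536,870,912
print(extra / base)  # 0.5
```

The ratio of 0.5 corresponds to the roughly 50% slowdown reported for token classification with the additional recurrent encoder.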

Finally, we also experiment with employing a BiLSTM-CRF classification head on top of the transformer encoder, same as in the approach proposed by Sahrawat et al. (Reference Sahrawat, Mahata, Kulkarni, Zhang, Gosangi, Stent, Sharma, Kumar, Shah and Zimmermann2020) (see Section 6 for more details about the results of this experiment). For this experiment, during the fine-tuning token classification phase, the token classification head described above is replaced with a BiLSTM-CRF classification head proposed by Sahrawat et al. (Reference Sahrawat, Mahata, Kulkarni, Zhang, Gosangi, Stent, Sharma, Kumar, Shah and Zimmermann2020), containing one BiLSTM layer and a CRF (Lafferty, McCallum, and Pereira Reference Lafferty, McCallum and Pereira2001) layer.Footnote c Outputs of the BiLSTM $f=\{f_1,...,f_n\}$ are fed as inputs to a CRF layer, which returns the output score s(f,y) for each possible label sequence according to the following equation:

\[ s(f,y) = \sum_{t=1}^{n} \big ( \tau_{y_{t-1},y_t} + f_{t,y_t} \big ) \]

$\tau_{y_{t-1},y_t}$ is a transition matrix representing the transition score from class $y_{t-1}$ to $y_t$. The final probability of each label sequence score is generated by exponentiating the scores and normalizing over all possible output label sequences:

\[ p(y|f) = \frac{\exp(s(f,y))}{\sum_{y'} \exp(s(f,y'))} \]

To find the optimal sequence of labels efficiently, the CRF layer uses the Viterbi algorithm (Forney Reference Forney1973).
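A compact sketch of Viterbi decoding over per-token label scores and a transition matrix is given below. It illustrates the general algorithm rather than the CRF layer used in the experiments: the function name and the input layout (nested lists) are assumptions, and no separate start-transition score is modeled.

```python
def viterbi(emissions, transitions):
    """Return the highest-scoring label sequence and its score.

    emissions[t][y]   : score of label y at position t (e.g., BiLSTM output)
    transitions[a][b] : score of moving from label a to label b
    """
    num_labels = len(emissions[0])
    scores = list(emissions[0])  # best score of any path ending in each label
    backpointers = []
    for emission in emissions[1:]:
        step_scores, step_back = [], []
        for curr in range(num_labels):
            best_prev = max(
                range(num_labels),
                key=lambda prev: scores[prev] + transitions[prev][curr],
            )
            step_scores.append(
                scores[best_prev] + transitions[best_prev][curr] + emission[curr]
            )
            step_back.append(best_prev)
        scores = step_scores
        backpointers.append(step_back)
    # Follow the backpointers from the best final label to recover the path.
    last = max(range(num_labels), key=lambda label: scores[label])
    best_score = scores[last]
    path = [last]
    for step_back in reversed(backpointers):
        last = step_back[last]
        path.append(last)
    path.reverse()
    return path, best_score
```

Dynamic programming keeps the cost linear in the sequence length and quadratic in the number of labels, instead of enumerating every possible label sequence.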

3.2 Transfer learning

Our approach relies on a transfer learning technique (Howard and Ruder Reference Howard and Ruder2018; Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019), where a neural model is first pretrained as a language model on a large corpus. This model is then fine-tuned for each specific keyword detection task on each specific manually labeled corpus by adding and training the token classification head described in the previous section. With this approach, the syntactic and semantic knowledge of the pretrained language model is transferred and leveraged in the keyword detection task, improving the detection on datasets that are too small for the successful semantic and syntactic generalization of the neural model.

In the transfer learning scenario, two distinct pretraining objectives can be considered. The first is autoregressive language modeling, where the task can be formally defined as predicting a probability distribution of words from the fixed size vocabulary V, for word $w_{t}$, given the historical sequence $w_{1:t-1} = [w_1,...,w_{t-1}]$. This pretraining regime was used in the GPT-2 model (Radford et al. Reference Radford, Wu, Child, Luan, Amodei and Sutskever2019) that we modified. Since in the standard transformer architecture self-attention is applied to an entire surrounding context of a specific word (i.e., the words that appear after a specific word in each input sequence are also used in the self-attention calculation), we employ obfuscation masking to the right context of each word when the autoregressive language model objective is used, in order to restrict the information only to the prior words in the sentence (plus the word itself) and prevent target leakage (see Radford et al. (Reference Radford, Wu, Child, Luan, Amodei and Sutskever2019) for details on the masking procedure).
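The masking of the right context can be sketched as a lower-triangular mask applied to the attention scores before the softmax. This is a generic illustration with hypothetical helper names, not the masking code of GPT-2 or TNT-KID.

```python
import math


def causal_mask(sequence_length):
    """mask[row][col] is True when position `row` may attend to position `col`."""
    return [[col <= row for col in range(sequence_length)] for row in range(sequence_length)]


def masked_softmax(scores, mask):
    """Row-wise softmax in which masked-out positions receive zero weight."""
    weights = []
    for score_row, mask_row in zip(scores, mask):
        kept = [s if keep else float("-inf") for s, keep in zip(score_row, mask_row)]
        top = max(kept)  # finite, since every position may attend to itself
        exps = [math.exp(s - top) for s in kept]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


uniform_scores = [[0.0] * 3 for _ in range(3)]
print(masked_softmax(uniform_scores, causal_mask(3)))
# [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.333..., 0.333..., 0.333...]]
```

Each row distributes attention only over the current and earlier positions, so the prediction for a word cannot draw on the words that follow it.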

Another option is a masked language modeling objective, first proposed by Devlin et al. (Reference Devlin, Chang, Lee and Toutanova2019). Here, a percentage of words from the input sequence is masked in advance, and the objective is to predict these masked words from an unmasked context. This allows the model to leverage both left and right context, or more formally, the token $w_{t}$ is also determined by the sequence of tokens $w_{t+1:n} = [w_{t+1},...,w_{n}]$. We follow the masking procedure described in the original paper by Devlin et al. (Reference Devlin, Chang, Lee and Toutanova2019), where 15% of words are randomly designated as targets for prediction, out of which 80% are replaced by a masked token ($< {mask}> $), 10% are replaced by a random word and 10% remain intact.
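The 15% / 80-10-10 masking procedure can be sketched as follows. The function and constant names are hypothetical, selection is done independently per token (so the share of targets is 15% only in expectation), and the random replacement is drawn uniformly from a supplied vocabulary, which is an assumption.

```python
import random

MASK_TOKEN = "<mask>"


def mask_tokens(tokens, vocabulary, rng, target_rate=0.15):
    """Return the corrupted sequence and a {position: original token} map of targets."""
    corrupted = list(tokens)
    targets = {}
    for position, token in enumerate(tokens):
        if rng.random() >= target_rate:
            continue  # not selected as a prediction target
        targets[position] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[position] = MASK_TOKEN              # 80%: mask token
        elif roll < 0.9:
            corrupted[position] = rng.choice(vocabulary)  # 10%: random word
        # remaining 10%: the token is left intact but is still a target
    return corrupted, targets


rng = random.Random(0)
tokens = "the advantage of this is to introduce distributed interactions".split()
corrupted, targets = mask_tokens(tokens, vocabulary=tokens, rng=rng)
```

Passing an explicit `random.Random` instance keeps the corruption reproducible across runs.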

The final output of the model is a softmax probability distribution calculated over the entire vocabulary, containing the predicted probabilities of appearance (P) for each word given its left (and in case of the masked language modeling objective also right) context. Training, therefore, consists of the minimization of the negative log loss (NLL) on the batches of training corpus word sequences by backpropagation through time:

While the masked language modeling objective might outperform autoregressive language modeling objective in a setting where a large pretraining corpus is available (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019) due to the inclusion of the right context, these two training objectives have at least to our knowledge never been compared in a setting where only a relatively small domain-specific corpus is available for the pretraining phase. For more details about the performance comparison of these two pretraining objectives, see Section 6.

3.3 Keyword identification

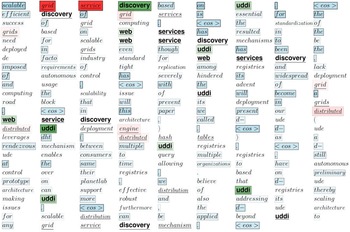

Since each word in the sequence can either be a keyword (or at least part of the keyphrase) or not, the keyword tagging task can be modeled as a binary classification task, where the model is trained to predict if a word in the sequence is a keyword or not.Footnote d Figure 2 shows an example of how an input text is first transformed into a numerical sequence that is used as an input of the model, which is then trained to produce a sequence of zeroes and ones, where the positions of ones indicate the positions of keywords in the input text.

Figure 2. Encoding of the input text “The advantage of this is to introduce distributed interactions between the UDDI clients.” with keywords distributed interactions and UDDI. In the first step, the text is converted into a numerical sequence, which is used as an input to the model. The model is trained to convert this numerical sequence into a sequence of zeroes and ones, where the ones indicate the position of a keyword.

Since a large majority of words in the sequence are not keywords, the usage of a standard NLL function (see Equation (1)), which would simply calculate a sum of log probabilities that a word is either a keyword or not for every input word sequence, would badly affect the recall of the model since the majority negative class would prevail. To solve this problem and maximize the recall of the system, we propose a custom classification loss function, where probabilities for each word in the sequence are first aggregated into two distinct sets, one for each class. For example, text “The advantage of this is to introduce distributed interactions between the UDDI clients.” in Figure 2 would be split into two sets, the first one containing probabilities for all the words in the input example which are not keywords (The, advantage, of, this, is, to, introduce, between, the, clients, .), and the other containing probabilities for all the words in the input example that are keywords or part of keyphrases (distributed, interactions, UDDI). Two NLLs are calculated, one for each probability set, and both are normalized with the size of the set. Finally, the NLLs are summed. More formally, the loss is computed as follows. Let $W = \{w_i\}_{i = 1}^{n}$ represent an enumerated sequence of tokens for which predictions are obtained. Let $p_i$ represent the predicted probabilities for the ith token that it either belongs or does not belong to the ground truth class. The $o_i$ represents the output weight vector of the neural network for token i and j indexes the classes (two in our case as the word can be a keyword or not). Predictions are in this work obtained via a log-softmax transform (lst), defined as follows (for the ith token):

\[ p_i = \textrm{lst}(o_i) = \log \frac{\exp(o_i)}{\sum_{j} \exp(o_{i,j})} \]

The loss function is composed of two main parts. Let $K_+ \subseteq W$ represent tokens that are keywords and $K_- \subseteq W$ the set of tokens that are not keywords. Note that $|K_- \cup K_+| = n$, that is, the two sets cover all considered tokens for which predictions are obtained. During loss computation, only the probabilities of the ground truth class are considered. We mark them with $p_i^+$ or $p_i^-$. Then the loss is computed as

\[ L_+(p) = -\frac{1}{|K_+|} \sum_{w_i \in K_+} p_i^+ \quad \textrm{and} \quad L_-(p) = -\frac{1}{|K_-|} \sum_{w_i \in K_-} p_i^- \]

The final loss is computed as

\[ \textrm{Loss} = L_+(p) + L_-(p) \]

Note that even though all predictions are given as an argument, the two parts of the loss address different token indices (i).
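The class-wise normalized loss can be sketched in plain Python as follows. The function names are hypothetical, labels are assumed to be 1 for keyword tokens and 0 otherwise, and skipping an empty class (instead of dividing by zero) is an assumption not stated in the text.

```python
import math


def log_softmax(logits):
    """Log-softmax over the class scores of a single token."""
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(x - top) for x in logits))
    return [x - log_norm for x in logits]


def balanced_nll(logits_per_token, labels):
    """Sum of two NLLs, one per class, each normalized by the size of its class."""
    keyword_log_probs, other_log_probs = [], []
    for logits, label in zip(logits_per_token, labels):
        # Only the log-probability of the ground truth class is used.
        log_prob = log_softmax(logits)[label]
        (keyword_log_probs if label == 1 else other_log_probs).append(log_prob)
    loss = 0.0
    for group in (keyword_log_probs, other_log_probs):
        if group:  # assumption: a class with no tokens contributes nothing
            loss -= sum(group) / len(group)
    return loss
```

Because each part is averaged over its own set, a single misclassified keyword weighs as much as the whole non-keyword set taken together, which is what pushes the model toward higher recall.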

In order to produce the final set of keywords for each document, tagged words are extracted from the text and duplicates are removed. Note that a sequence of ones is always interpreted as a multiword keyphrase and not as a combination of single-word keywords (e.g., distributed interactions from Figure 2 is considered as a single multiword keyphrase and not as two distinct one-word keywords). After that, the following filtering is conducted:

-

• If a keyphrase is longer than four words, it is discarded.

-

• Keywords containing punctuation (with the exception of dashes and apostrophes) are removed.

-

• The detected keyphrases are ranked and arranged according to the softmax probability assigned by the model in a descending order.
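The extraction and filtering steps above can be sketched as one function. The names are hypothetical, and two details are assumptions that the text does not specify: a keyphrase is scored with the mean probability of its tokens, and a duplicate keeps its highest score.

```python
import string

# Punctuation that disqualifies a keyphrase (dashes and apostrophes are allowed).
FORBIDDEN = set(string.punctuation) - {"-", "'"}


def extract_keyphrases(tokens, labels, probabilities, max_words=4):
    """Group runs of 1-labels into keyphrases, deduplicate, filter, and rank."""
    phrases = {}
    words, probs = [], []
    # A trailing sentinel with label 0 flushes a phrase that ends at the last token.
    for token, label, prob in zip(tokens + [""], labels + [0], probabilities + [0.0]):
        if label == 1:
            words.append(token)
            probs.append(prob)
            continue
        if words:
            phrase = " ".join(words)
            score = sum(probs) / len(probs)  # assumption: mean token probability
            phrases[phrase] = max(score, phrases.get(phrase, 0.0))
            words, probs = [], []
    kept = [
        (phrase, score)
        for phrase, score in phrases.items()
        if len(phrase.split()) <= max_words
        and not any(char in FORBIDDEN for char in phrase)
    ]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [phrase for phrase, _ in kept]


tokens = "the advantage of this is to introduce distributed interactions between the uddi clients .".split()
labels = [0] * 7 + [1, 1] + [0, 0] + [1] + [0, 0]
probabilities = [0.1] * 7 + [0.9, 0.9] + [0.1, 0.1] + [0.7] + [0.1, 0.1]
print(extract_keyphrases(tokens, labels, probabilities))
# ['distributed interactions', 'uddi']
```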

4. Experiments

We first present the datasets used in the experiments. This is followed by the experimental design, evaluation, and the results achieved by TNT-KID in comparison to the state of the art.

4.1 Keyword extraction datasets

Experiments were conducted on eight datasets from two distinct genres, scientific papers about computer science and news. The following datasets from the computer science domain are used:

-

• KP20k (Meng et al. Reference Meng, Zhao, Han, He, Brusilovsky and Chi2017): This dataset contains titles, abstracts, and keyphrases of 570,000 scientific articles from the field of computer science. The dataset is split into train set (530,000), validation set (20,000), and test set (20,000).

-

• Inspec (Hulth Reference Hulth2003): The dataset contains 2000 abstracts of scientific journal papers in computer science collected between 1998 and 2002. Two sets of keywords are assigned to each document, the controlled keywords that appear in the Inspec thesaurus, and the uncontrolled keywords, which are assigned by the editors. Only uncontrolled keywords are used in the evaluation, same as by Meng et al. (Reference Meng, Zhao, Han, He, Brusilovsky and Chi2017), and the dataset is split into 500 test papers and 1500 train papers.

-

• Krapivin (Krapivin, Autaeu, and Marchese Reference Krapivin, Autaeu and Marchese2009): This dataset contains 2304 full scientific papers from computer science domain published by ACM between 2003 and 2005 with author-assigned keyphrases. Four-hundred and sixty papers from the dataset are used as a test set and the others are used for training. Only titles and abstracts are used in our experiments.

-

• NUS (Nguyen and Kan Reference Nguyen and Kan2007): The dataset contains titles and abstracts of 211 scientific conference papers from the computer science domain and contains a set of keywords assigned by student volunteers and a set of author-assigned keywords, which are both used in evaluation.

-

• SemEval (Kim et al. Reference Kim, Medelyan, Kan and Baldwin2010): The dataset used in the SemEval-2010 Task 5, Automatic Keyphrase Extraction from Scientific Articles, contains 244 articles from the computer science domain collected from the ACM Digital Library. One hundred articles are used for testing and the rest are used for training. Again, only titles and abstracts are used in our experiments; the article’s content was discarded.

From the news domain, three datasets with manually labeled gold-standard keywords are used:

-

• KPTimes (Gallina, Boudin, and Daille Reference Gallina, Boudin and Daille2019): The corpus contains 279,923 news articles containing editor-assigned keywords that were collected by crawling New York Times news website.Footnote e After that, the dataset was randomly divided into training (92.8%), development (3.6%) and test (3.6%) sets.

-

• JPTimes (Gallina et al. Reference Gallina, Boudin and Daille2019): Similar to KPTimes, the corpus was collected by crawling the Japan Times online news portal.Footnote f The corpus only contains 10,000 English news articles and is used in our experiments as a test set for the classifiers trained on the KPTimes dataset.

-

• DUC (Wan and Xiao Reference Wan and Xiao2008): The dataset consists of 308 English news articles and contains 2488 hand-labeled keyphrases.

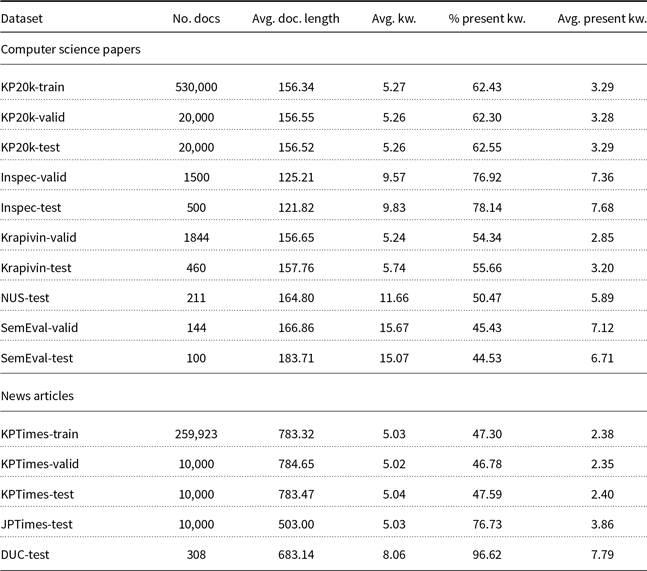

The statistics about the datasets that are used for training and testing of our models are presented in Table 1. Note that there is a big variation in dataset sizes in terms of the number of documents (column No. docs), and in the average number of keywords (column Avg. kw.) and present keywords per document (column Avg. present kw.), ranging from 2.35 present keywords per document in KPTimes-valid to 7.79 in DUC-test.

4.2 Experimental design

We conducted experiments on the datasets described in Section 4.1. First, we lowercased and tokenized all datasets. We experimented with two tokenization schemes, word tokenization and Sentencepiece (Kudo and Richardson Reference Kudo and Richardson2018) byte pair encoding (see Section 6 for more details on how these two tokenization schemes affect the overall performance). During both tokenization schemes, a special $< {eos}> $ token is used to indicate the end of each sentence. For the best-performing model, for which the results are presented in Section 4.4, byte pair encoding was used. For generating the additional POS tag sequence input described in Section 3.1, which was not used in the best-performing model, Averaged Perceptron Tagger from the NLTK library (Bird and Loper Reference Bird and Loper2004) was used. The neural architecture was implemented in PyTorch (Paszke et al. Reference Paszke, Gross, Massa, Lerer, Bradbury, Chanan, Killeen, Lin, Gimelshein, Antiga, Desmaison, Kopf, Yang, DeVito, Raison, Tejani, Chilamkurthy, Steiner, Fang, Bai and Chintala2019).

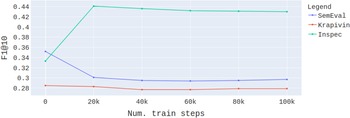

In the pretraining phase, two language models were trained for up to 10 epochs, one on the concatenation of all the texts from the computer science domain and the other on the concatenation of all the texts from the news domain. Overall the language model train set for computer science domain contained around 87 million tokens and the news train set about 232 million tokens. These small sizes of the language model train sets enable relatively fast training and smaller model sizes (in terms of number of parameters) due to the reduced vocabulary.

Table 1. Datasets used for empirical evaluation of keyword extraction algorithms. No.docs stands for number of documents, Avg. doc. length stands for average document length in the corpus (in terms of number of words, that is, we split the text by white space), Avg. kw. stands for average number of keywords per document in the corpus, % present kw. stands for the percentage of keywords that appear in the corpus (i.e., percentage of document’s keywords that appear in the text of the document), and Avg. present kw. stands for the average number of keywords per document that actually appear in the text of the specific document

After the pretraining phase, the trained language models were fine-tuned on each dataset’s validation sets (see Table 1), which were randomly split into 80% of documents used for fine-tuning and 20% of documents used for hyperparameter optimization and test set model selection. The documents containing more than 512 tokens are truncated. Next, the documents are sorted according to the token length and split into batches. The documents in each batch are padded with a special $ <{pad}>$ token to the length of the longest document in the batch. Each model was fine-tuned for a maximum of 10 epochs and after each epoch, the trained model was tested on the documents chosen for hyperparameter optimization and test set model selection. The model that showed the best performance (in terms of F1@10 score) was used for keyword detection on the test set. All combinations of the following hyperparameter values were tested before choosing the best combination, which is written in bold in the list below and on average worked best for all the datasets in both domainsFootnote g:

-

• Learning rates: 0.00005, 0.0001, 0.0003, 0.0005, 0.001.

-

• Embedding size: 256, 512.

-

• Number of attention heads: 4, 8, 12.

-

• Sequence length: 128, 256, 512.

-

• Number of attention layers: 4, 8, 12.
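The exhaustive search over the values listed above can be enumerated with `itertools.product`. This is a sketch only: the dictionary keys and the `evaluate` callback (which would fine-tune a model and return its F1@10 on the held-out 20% of documents) are hypothetical.

```python
from itertools import product

GRID = {
    "learning_rate": [0.00005, 0.0001, 0.0003, 0.0005, 0.001],
    "embedding_size": [256, 512],
    "attention_heads": [4, 8, 12],
    "sequence_length": [128, 256, 512],
    "attention_layers": [4, 8, 12],
}


def configurations(grid):
    """Every combination of the listed hyperparameter values."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*grid.values())]


def select_best(configs, evaluate):
    """Return the configuration with the highest score under `evaluate`."""
    return max(configs, key=evaluate)


print(len(configurations(GRID)))  # 270
```

With five learning rates, two embedding sizes and three values for each of the remaining hyperparameters, the grid contains 270 combinations per dataset.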

Note that in our experiments, we use the same splits as in related work (Meng et al. Reference Meng, Yuan, Wang, Brusilovsky, Trischler and He2019; Meng et al. Reference Meng, Zhao, Han, He, Brusilovsky and Chi2017; Gallina et al. Reference Gallina, Boudin and Daille2019) for all datasets with predefined splits (i.e., all datasets with train and validation sets, see Table 1). The exceptions are NUS, DUC and JPTimes datasets with no available predefined validation-test splits. For NUS and DUC, 10-fold cross-validation is used and the model used for keyword detection on the JPTimes-test dataset was fine-tuned on the KPTimes-valid dataset. Another thing to consider is that in the related work by Yuan et al. (Reference Yuan, Wang, Meng, Thaker, Brusilovsky, He and Trischler2020), Meng et al. (Reference Meng, Zhao, Han, He, Brusilovsky and Chi2017), Gallina et al. (Reference Gallina, Boudin and Daille2019), Chen et al. (Reference Chen, Zhang, Wu, Yan and Li2018) and Ye and Wang (Reference Ye and Wang2018), to which we are comparing, large datasets KP20k-train and KPTimes-train with 530,000 documents and 260,000 documents, respectively, are used for the classification model training and these trained models are applied on all test sets from the matching domain. On the other hand, we do not train our classification models on these two large train sets but instead use smaller KPTimes-valid and KP20k-valid datasets for training, since we argue that, due to language model pretraining, fine-tuning the model on a relatively small labeled dataset is sufficient for the model to achieve competitive performance. We do however conduct the language model pretraining on the concatenation of all the texts from the computer science domain and the news domain as explained above, and these two corpora also contain texts from KPTimes-train and KP20k-train datasets.

4.3 Evaluation

To assess the performance of the model, we measure the F1@k score, the harmonic mean of Precision@k and Recall@k.

In a ranking task, we are interested in precision at rank k. This means that only the keywords ranked equal to or better than k are considered and the rest are disregarded. Precision@k is the ratio of the number of correct keywords returned by the system to the number of all keywords returned by the system,Footnote h or more formally:

$$\text{Precision@}k = \frac{\text{number of correct keywords returned @}k}{\text{number of keywords returned}}$$

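Recall@k is the ratio of the number of correct keywords returned by the system and ranked equal to or better than k to the number of correct ground truth keywords:

$$\text{Recall@}k = \frac{\text{number of correct keywords returned @}k}{\text{number of correct ground truth keywords}}$$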

Due to the high variance in the number of ground truth keywords, this type of recall becomes problematic if k is smaller than the number of ground truth keywords, since it then becomes impossible for the system to achieve perfect recall. A similar problem arises for Precision@k if the number of keywords in the gold standard is lower than k and the number of returned keywords is fixed at k. We discuss how this affects different keyword detection systems in Section 7.

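Finally, we formally define F1@k as the harmonic mean of Precision@k and Recall@k:

$$\text{F1@}k = 2 \cdot \frac{\text{Precision@}k \cdot \text{Recall@}k}{\text{Precision@}k + \text{Recall@}k}$$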
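As a minimal sketch of how these three quantities can be computed for a single document, consider the function below. The function name and the toy keywords are hypothetical, and the sketch assumes that the ranked prediction list contains no duplicates and that the precision denominator is the number of keywords actually returned within the top k.

```python
def precision_recall_f1_at_k(predicted, gold, k):
    """Per-document Precision@k, Recall@k and F1@k.

    `predicted` is a ranked list (best first) without duplicates,
    `gold` is the collection of ground truth keywords.
    """
    top_k = predicted[:k]
    gold_set = set(gold)
    correct = sum(1 for keyword in top_k if keyword in gold_set)
    precision = correct / len(top_k) if top_k else 0.0
    recall = correct / len(gold_set) if gold_set else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


predicted = ["keyword extraction", "transformer", "language model", "graph", "tagging"]
gold = ["keyword extraction", "language model", "sequence labeling"]

p5, r5, f5 = precision_recall_f1_at_k(predicted, gold, k=5)  # 0.4, 2/3, 0.5
p2, r2, f2 = precision_recall_f1_at_k(predicted, gold, k=2)  # 0.5, 1/3, 0.4
```

With k=2 and three gold keywords, recall cannot exceed 2/3 regardless of the quality of the ranking, which is the ceiling effect described above.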

In order to compare the results of our approach to other state-of-the-art approaches, we use the same evaluation methodology as Yuan et al. (2020) and Meng et al. (2019), and measure F1@k with k being either 5 or 10. Note that F1@k is calculated as the harmonic mean of macro-averaged precision and recall, meaning that the precision and recall scores for each document are averaged and the F1 score is calculated from these averages. As in the related work, lowercasing and stemming are performed on both the gold-standard and the predicted keywords (keyphrases) during the evaluation, and a predicted keyword is considered correct only if the stemmed and lowercased forms of the predicted and gold-standard keywords match exactly (i.e., partial matches are considered incorrect). Only keywords that appear in the text of the documents (present keywords)Footnote i were used as a gold standard, and the documents containing no present keywords were removed, in order to make the results of the conducted experiments comparable with the reported results from the related work.
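The evaluation protocol described in this paragraph can be sketched as follows. This is an illustration under stated assumptions rather than the evaluation script itself: `naive_stem` is a deliberately crude placeholder (the actual stemmer is not specified here), the regular-expression tokenization and the way present keywords are detected are assumptions, and all function names are hypothetical.

```python
import re


def naive_stem(token):
    """Placeholder stemmer (strips a trailing 's'); NOT the stemmer used in the paper."""
    return token[:-1] if len(token) > 3 and token.endswith("s") else token


def normalize(text, stem=naive_stem):
    """Lowercase, tokenize on word characters, and stem every token."""
    return " ".join(stem(token) for token in re.findall(r"\w+", text.lower()))


def macro_f1_at_k(documents, k, stem=naive_stem):
    """documents: iterable of (text, ranked_predictions, gold_keywords) triples."""
    precisions, recalls = [], []
    for text, predicted, gold in documents:
        padded_text = f" {normalize(text, stem)} "
        normalized_gold = (normalize(keyword, stem) for keyword in gold)
        # Keep only present keywords, i.e., those found in the document text.
        present_gold = {g for g in normalized_gold if g and f" {g} " in padded_text}
        if not present_gold:
            continue  # documents without present keywords are removed
        # Deduplicate predictions after normalization, preserving rank order.
        ranked = list(dict.fromkeys(normalize(p, stem) for p in predicted))
        top_k = ranked[:k]
        correct = sum(1 for p in top_k if p in present_gold)  # exact match only
        precisions.append(correct / len(top_k) if top_k else 0.0)
        recalls.append(correct / len(present_gold))
    if not precisions:
        return 0.0, 0.0, 0.0
    macro_p = sum(precisions) / len(precisions)
    macro_r = sum(recalls) / len(recalls)
    denominator = macro_p + macro_r
    f1 = 2 * macro_p * macro_r / denominator if denominator else 0.0
    return macro_p, macro_r, f1


toy_corpus = [
    ("Neural networks improve keyword extraction.",
     ["Keyword Extraction", "neural network", "graphs"],
     ["neural networks", "keyword extraction", "summarization"]),
    ("A short note.", ["note"], ["deep learning"]),  # no present keywords: skipped
    ("Graph ranking of words", ["ranking"], ["graph ranking"]),  # partial match: incorrect
]
macro_p, macro_r, macro_f1 = macro_f1_at_k(toy_corpus, k=5)
```

Note that F1 is computed once from the two macro-averages instead of being averaged over per-document F1 scores, which generally yields a different number.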

As is pointed out in the study by Gallina, Boudin, and Daille (2020), evaluation and comparison of keyphrase extraction algorithms is not a trivial task, since keyphrase extraction models in different studies are evaluated under different, not directly comparable experimental setups. To make the comparison fair, they recommend testing the models on the same datasets, using identical gold-standard keyword sets, and employing the same preprocessing techniques and parameter settings. We follow these guidelines strictly when it comes to the use of identical datasets and gold-standard keyword sets, but deviate from them somewhat when it comes to employing identical preprocessing techniques and parameter settings for different approaches. Since all unsupervised approaches operate on a set of keyphrase candidates extracted from the input document, Gallina et al. (2020) argue that the extraction of these candidates and other parameters should be identical for a fair comparison between algorithms (e.g., they select sequences of adjacent nouns with one or more preceding adjectives, of length up to five words, as keyword candidates). We, on the other hand, are more interested in comparing keyword extraction approaches rather than algorithms alone, and argue that the distinct keyword candidate extraction techniques are inseparable from the overall approach and should be optimized for each distinct algorithm. Therefore, we employ the original preprocessing proposed by the authors for each specific unsupervised approach and apply the hyperparameters recommended by the authors. For the supervised approaches, we again employ the preprocessing and parameter settings recommended by the authors (e.g., we employ the word tokenization proposed by the authors of the systems for CopyRNN and CatSeqD, and employ the GPT-specific byte pair tokenizer for the GPT-2 and GPT-2 + BiLSTM-CRF approaches).

Instead of reimplementing each specific keyword extraction approach, we report results from the original studies whenever possible, that is, whenever the original results were reported for the same datasets, gold-standard keyword sets, and evaluation criteria, in order to avoid any possibly biased decisions (e.g., the choice of hyperparameter settings not clearly defined in the original paper) and reimplementation mistakes. Reimplementation results are reported only for datasets missing from the original studies and for algorithms with publicly available code and clear usage instructions. If that was not the case, or if we were not able to obtain the source code from the original authors, reimplementation was not attempted, since it is in most cases almost impossible to reimplement an algorithm accurately just by following the description in the paper (Repar, Martinc, and Pollak 2019).

4.4 Keyword extraction results and comparison to the state of the art

In Table 2, we present the results achieved by TNT-KID and a number of algorithms from the related work on the datasets presented in Table 1. Note that the TfIdf, TextRank, YAKE, RaKUn, Key2Vec, and EmbedRank algorithms are unsupervised and do not require any training. KEA, Maui, GPT-2, GPT-2 + BiLSTM-CRF, and TNT-KID were trained on a different validation set for each of the datasets, whereas CopyRNN and CatSeqD were trained on the large KP20k-train dataset for keyword detection in the computer science domain and on the KPTimes-train dataset for keyword detection in the news domain, since they require a large train set for competitive performance. For two other CopyRNN variants, CorrRNN and Semi-supervised CopyRNN, we only report the results on science datasets published in Chen et al. (2018) and Ye and Wang (2018), respectively, since the code for these two systems is not publicly available. The published results for CorrRNN were obtained by training the model on the KP20k-train dataset. Semi-supervised CopyRNN, on the other hand, was trained on 40,000 labeled documents from the KP20k-train dataset and 400,000 documents without labels from the same dataset.

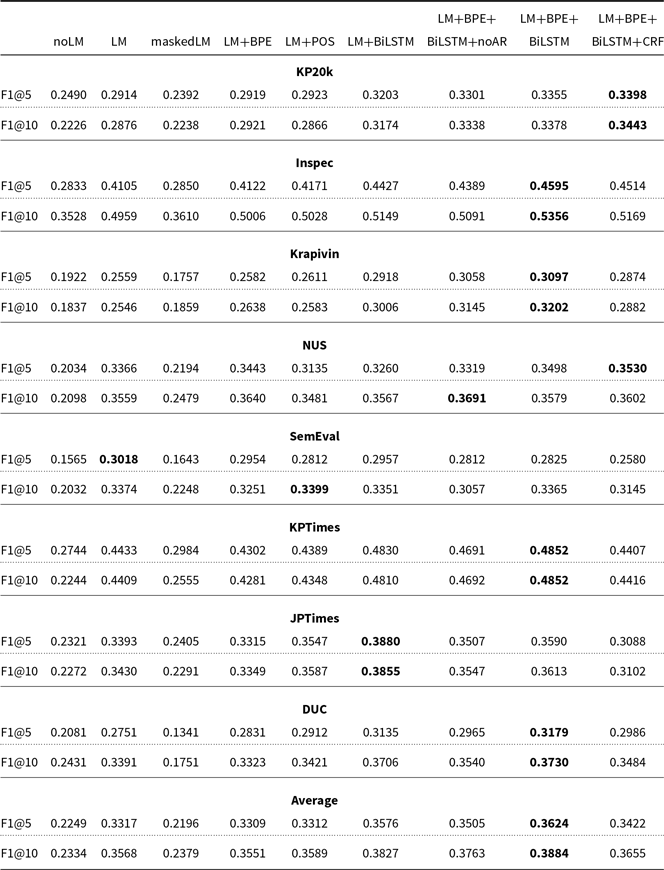

Table 2. Empirical evaluation of state-of-the-art keyword extractors. Results marked with * were obtained by our implementation or reimplementation of the algorithm and results without * were reported in the related work

For RaKUn (Škrlj et al. 2019) and YAKE (Campos et al. 2020), we report results for default hyperparameter settings, since the authors of both RaKUn and YAKE claim that a single hyperparameter set can offer sufficient performance across multiple datasets. We used the authors' official GitHub implementationsFootnote j in the experiments. For Key2Vec (Mahata et al. 2018), we employ the GitHub implementation of the algorithmFootnote k to generate results for all datasets, since the results in the original study are not comparable due to a different set of keywords being used (i.e., the keywords are not limited to only the ones that appear in the text). Since the published code does not contain a script for training domain-specific embeddings on multiword candidate phrases, GloVe embeddings (Pennington, Socher, and Manning 2014) with the dimension of 50 are used instead.Footnote l The EmbedRank results in the original study (Bennani-Smires et al. 2018) are also not comparable (again, the keywords in the study are not limited to only the ones that appear in the text); therefore, we once again use the official GitHub implementationFootnote m of the approach to generate results for all datasets and employ the recommended Sent2Vec embeddings (Pagliardini et al. 2018) trained on English Wikipedia with the dimension of 700.

For KEA and Maui, we do not conduct additional testing on corpora for which results are not available in the related work (the KPTimes, JPTimes, and DUC corpora) due to the poor performance of the algorithms on all the corpora for which results are available. Finally, for TfIdf and TextRank, we report results from the related work where available (Yuan et al. 2020) and use the implementation of the algorithms from the Python Keyphrase Extraction (PKE) libraryFootnote n to generate the unavailable results. As for RaKUn and YAKE, default hyperparameters are used.

For KEA, Maui, CopyRNN, and CatSeqD, we report results for the computer science domain published in Yuan et al. (2020), and for the news domain we report results for CopyRNN published in Gallina et al. (2019). The results that were not reported in the related work are the results for CatSeqD on KPTimes, JPTimes, and DUC, since this model was originally not tested on these three datasets, and the F1@5 results for CopyRNN on KPTimes and JPTimes. Again, the authors' official GitHub implementationsFootnote o were used for training and testing of both models. The models were trained and tested on the large KPTimes-train dataset with the help of a script supplied by the authors of the papers. The same hyperparameters that were used for KP20k training in the original papers (Meng et al. 2019; Yuan et al. 2020) were used.

We also report results for the unmodified pretrained GPT-2 (Radford et al. 2019) model with a standard feedforward token classification head, and for a pretrained GPT-2 with a BiLSTM-CRF token classification head, as proposed in Sahrawat et al. (2020) and described in Section 3.1.Footnote p Note that the pretrained GPT-2 model with a BiLSTM-CRF token classification head in this experiment does not conduct binary classification, but rather employs the sequence labeling procedure from Sahrawat et al. (2020) described in Section 2, which assigns words in the text sequence to three classes. For both GPT-2 models, we apply the same fine-tuning regime as for TNT-KID, that is, we fine-tune the models for up to 10 epochs on each dataset's validation set (see Table 1), which was randomly split into 80% of documents used for training and 20% of documents used for selecting the model to be applied to the test set. The model that showed the best performance on this 20% of documents (in terms of F1@10 score) was used for keyword detection on the test set. We use the default hyperparameters (i.e., sequence length of 512, embedding size of 768, learning rate of 0.00003, 12 attention heads, and a batch size of 8) for both models and the original GPT-2 tokenization regime.
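The fine-tuning and model selection regime described above can be sketched as a simple loop. This is a minimal sketch and not the training code used in the experiments: `train_one_epoch` and `f1_at_10` are hypothetical callables standing in for the actual training and evaluation routines, and the shuffling seed is an assumption.

```python
import random


def fine_tune_with_selection(documents, train_one_epoch, f1_at_10,
                             max_epochs=10, train_fraction=0.8, seed=0):
    """Fine-tune for up to `max_epochs` and keep the best checkpoint.

    train_one_epoch(train_docs, epoch) -> model snapshot (hypothetical callable)
    f1_at_10(snapshot, selection_docs) -> float          (hypothetical callable)
    """
    shuffled = list(documents)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    train_docs, selection_docs = shuffled[:cut], shuffled[cut:]

    best_score, best_snapshot = float("-inf"), None
    for epoch in range(1, max_epochs + 1):
        snapshot = train_one_epoch(train_docs, epoch)
        score = f1_at_10(snapshot, selection_docs)
        if score > best_score:  # keep the earliest epoch on ties
            best_score, best_snapshot = score, snapshot
    return best_snapshot, best_score


# Toy usage: the "snapshot" is just the epoch number, with made-up scores.
toy_scores = {1: 0.10, 2: 0.30, 3: 0.50, 4: 0.40, 5: 0.50,
              6: 0.20, 7: 0.20, 8: 0.10, 9: 0.10, 10: 0.10}
split_sizes = []


def toy_train(train_docs, epoch):
    split_sizes.append(len(train_docs))
    return epoch


def toy_eval(snapshot, selection_docs):
    return toy_scores[snapshot]


best_snapshot, best_score = fine_tune_with_selection(list(range(10)), toy_train, toy_eval)
```

The selected snapshot is the one that would then be applied to the held-out test set.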

Overall, supervised neural network approaches drastically outperform all other approaches. Among them, TNT-KID performs the best on four datasets in terms of F1@10. On the other four datasets, it is outperformed by CatSeqD (on NUS and SemEval) or GPT-2 + BiLSTM-CRF (on JPTimes and DUC). CatSeqD also performs competitively on the KP20k, Krapivin, and KPTimes datasets, but is outperformed by a large margin on three other datasets by both GPT-2 + BiLSTM-CRF and TNT-KID. To be more specific, in terms of F1@10, TNT-KID outperforms CatSeqD by about 20 percentage points on the Inspec dataset, by about 30 percentage points on the DUC dataset, and by about 12 percentage points on JPTimes.

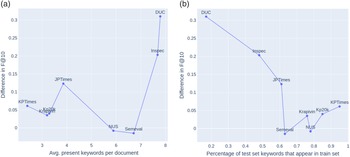

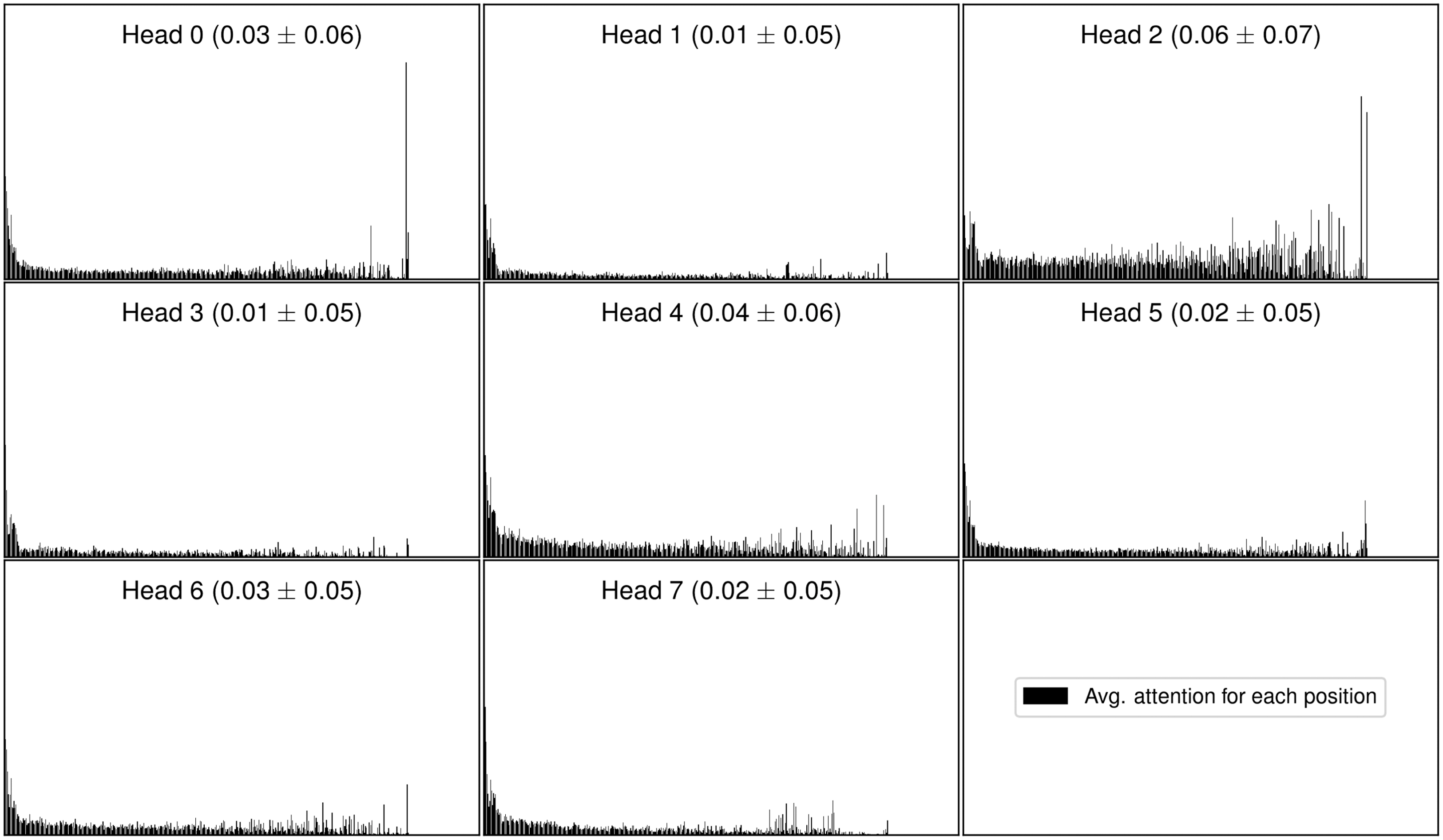

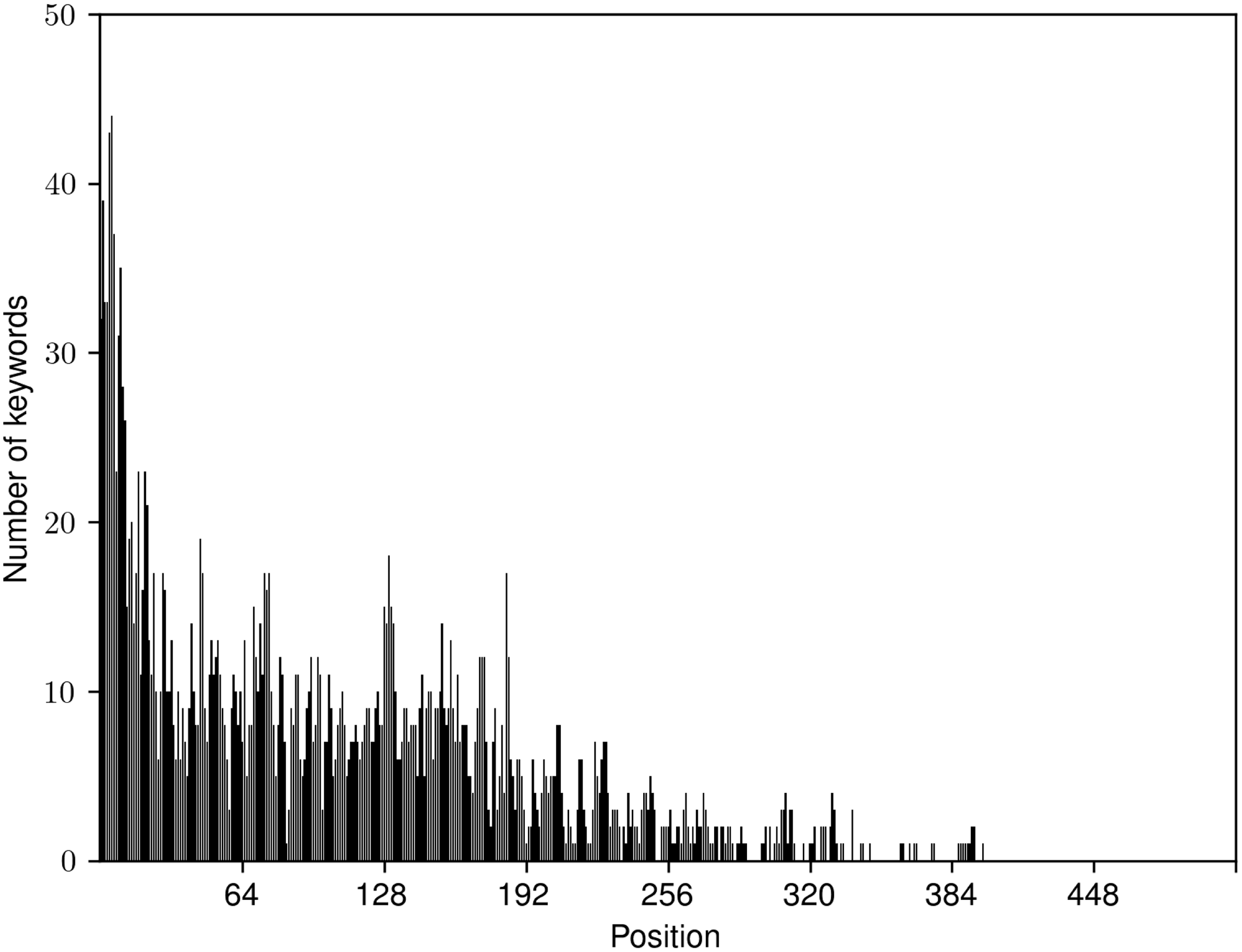

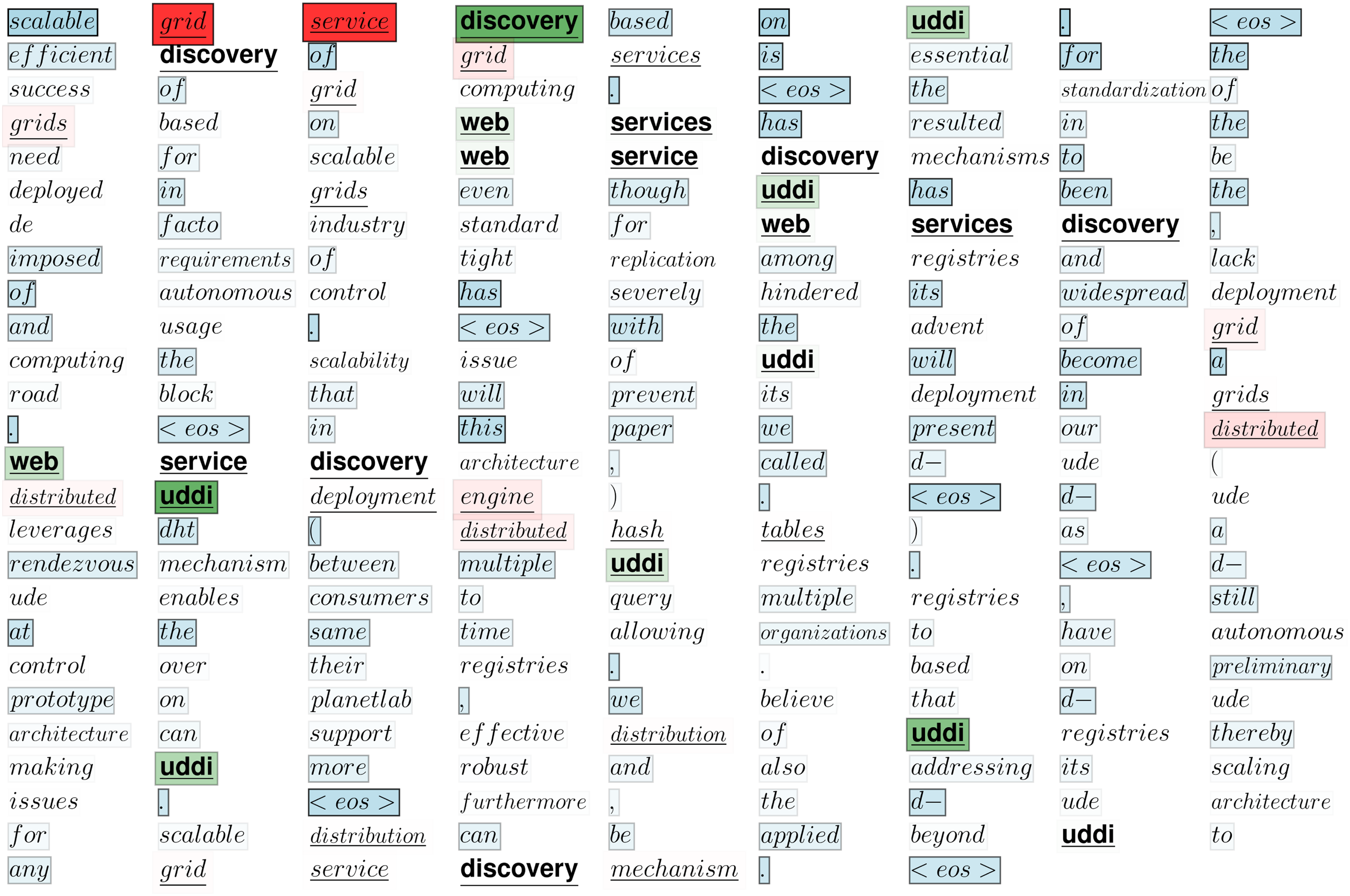

The results of CopyRNN, Semi-supervised CopyRNN, and CorrRNN are in the large majority of cases very consistent with those of CatSeqD. For example, CopyRNN performs slightly better than CatSeqD on DUC and JPTimes, and slightly worse on the other six datasets. Semi-supervised CopyRNN performs slightly worse than CopyRNN, according to both criteria, on the majority of datasets for which the results are available. CorrRNN, on the other hand, slightly outperforms CopyRNN, according to both criteria, on two out of the three datasets for which the results are available, but is nevertheless still outperformed by CatSeqD on both of these datasets.