1. Introduction

The problem of reconstructing missing information, due to measurement constraints and lack of spatial/temporal resolution, is ubiquitous in almost all important applications of turbulence to laboratory experiments, geophysics, meteorology and oceanography (Le Dimet & Talagrand Reference Le Dimet and Talagrand1986; Bell et al. Reference Bell, Lefebvre, Le Traon, Smith and Wilmer-Becker2009; Torn & Hakim Reference Torn and Hakim2009; Krysta et al. Reference Krysta, Blayo, Cosme and Verron2011; Asch, Bocquet & Nodet Reference Asch, Bocquet and Nodet2016). For example, satellite imagery often suffers from missing data due to dead pixels and thick cloud cover (Shen et al. Reference Shen, Li, Cheng, Zeng, Yang, Li and Zhang2015; Zhang et al. Reference Zhang, Yuan, Zeng, Li and Wei2018; Militino, Ugarte & Montesino Reference Militino, Ugarte and Montesino2019; Storer et al. Reference Storer, Buzzicotti, Khatri, Griffies and Aluie2022). In particle tracking velocimetry (PTV) experiments (Dabiri & Pecora Reference Dabiri and Pecora2020), spatial gaps naturally occur due to the use of a small number of seeded particles. Additionally, in particle image velocimetry (PIV) experiments, missing information can arise due to out-of-pair particles, object shadows or light reflection issues (Garcia Reference Garcia2011; Wang et al. Reference Wang, Gao, Wang, Wei, Li and Wang2016; Wen et al. Reference Wen, Li, Peng, Zhou and Liu2019). Similarly, in many instances, the experimental probes are limited to assessing only a subset of the relevant fields, asking for a careful a priori engineering of the most relevant features to be tracked. Recently, many data-driven machine learning tools have been proposed to fulfil some of the previous tasks. Research using these black-box tools is at its infancy and we lack systematic quantitative benchmarks for paradigmatic high-quality and high-quantity multi-scale complex datasets, a mandatory step to making them useful for the fluid-dynamics community. In this paper, we perform a systematic quantitative comparison among three data-driven methods (no information on the underlying equations) to reconstruct highly complex two-dimensional (2-D) fields from a typical geophysical set-up, such as that of rotating turbulence. The first two methods are connected with a linear model reduction, the so-called proper orthogonal decomposition (POD) and the third is based on a fully nonlinear convolutional neural network (CNN) embedded in a framework of a generative adversarial network (GAN) (Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014; Deng et al. Reference Deng, He, Liu and Kim2019; Subramaniam et al. Reference Subramaniam, Wong, Borker, Nimmagadda and Lele2020; Buzzicotti et al. Reference Buzzicotti, Bonaccorso, Di Leoni and Biferale2021; Guastoni et al. Reference Guastoni, Güemes, Ianiro, Discetti, Schlatter, Azizpour and Vinuesa2021; Kim et al. Reference Kim, Kim, Won and Lee2021; Buzzicotti & Bonaccorso Reference Buzzicotti and Bonaccorso2022; Yousif et al. Reference Yousif, Yu, Hoyas, Vinuesa and Lim2022). Proper orthogonal decomposition is widely used for pattern recognition (Sirovich & Kirby Reference Sirovich and Kirby1987; Fukunaga Reference Fukunaga2013), optimization (Singh et al. Reference Singh, Myatt, Addington, Banda and Hall2001) and data assimilation (Romain, Chatellier & David Reference Romain, Chatellier and David2014; Suzuki Reference Suzuki2014). To repair the missing data in a gappy field, Everson & Sirovich (Reference Everson and Sirovich1995) proposed gappy POD (GPOD), where coefficients are optimized according to the measured data outside the gap. By introducing some modifications to GPOD, Venturi & Karniadakis (Reference Venturi and Karniadakis2004) improved its robustness and made it reach the maximum possible resolution at a given level of spatio-temporal gappiness. Gunes, Sirisup & Karniadakis (Reference Gunes, Sirisup and Karniadakis2006) showed that GPOD reconstruction outperforms the Kriging interpolation (Oliver & Webster Reference Oliver and Webster1990; Stein Reference Stein1999; Gunes & Rist Reference Gunes and Rist2008). However, GPOD is essentially a linear interpolation and thus is in trouble when dealing with complex multi-scale and non-Gaussian flows as the ones typical of fully developed turbulence (Alexakis & Biferale Reference Alexakis and Biferale2018) and/or large missing areas (Li et al. Reference Li, Buzzicotti, Biferale, Wan and Chen2021). Extended POD (EPOD) was first used in Maurel, Borée & Lumley (Reference Maurel, Borée and Lumley2001) on the PIV data of a turbulent internal engine flow, where the POD analysis is conducted in a sub-domain spanning only the central rotating region but the preferred directions of the jet–vortex interaction can be clearly identified. Borée (Reference Borée2003) generalized the EPOD and reported that EPOD can be applied to study the correlation of any physical quantity in any domain with the projection of any measured quantity on its POD modes in the measurement domain. EPOD has many applications of flow sensing, where flow predictions are made based on remote probes (Tinney, Ukeiley & Glauser Reference Tinney, Ukeiley and Glauser2008; Hosseini, Martinuzzi & Noack Reference Hosseini, Martinuzzi and Noack2016; Discetti et al. Reference Discetti, Bellani, Örlü, Serpieri, Vila, Raiola, Zheng, Mascotelli, Talamelli and Ianiro2019). For example, using EPOD as a reference of their CNN models, Guastoni et al. (Reference Guastoni, Güemes, Ianiro, Discetti, Schlatter, Azizpour and Vinuesa2021) predicted the 2-D velocity-fluctuation fields at different wall-normal locations from the wall-shear-stress components and the wall pressure in a turbulent open-channel flow. EPOD also provides a linear relation between the input and output fields.

In recent years, CNNs have made a great success in computer vision tasks (Niu & Suen Reference Niu and Suen2012; Russakovsky et al. Reference Russakovsky2015; He et al. Reference He, Zhang, Ren and Sun2016) because of their powerful ability of handling nonlinearities (Hornik Reference Hornik1991; Kreinovich Reference Kreinovich1991; Baral, Fuentes & Kreinovich Reference Baral, Fuentes and Kreinovich2018). In fluid mechanics, CNN has also been shown as a encouraging technique for data prediction/reconstruction (Fukami, Fukagata & Taira Reference Fukami, Fukagata and Taira2019; Güemes, Discetti & Ianiro Reference Güemes, Discetti and Ianiro2019; Kim & Lee Reference Kim and Lee2020; Li et al. Reference Li, Buzzicotti, Biferale and Bonaccorso2023). Many researchers devote time to the super-resolution task, where CNNs are used to reconstruct high-resolution data from low-resolution data of laminar and turbulent flows (Liu et al. Reference Liu, Tang, Huang and Lu2020; Subramaniam et al. Reference Subramaniam, Wong, Borker, Nimmagadda and Lele2020; Fukami, Fukagata & Taira Reference Fukami, Fukagata and Taira2021; Kim et al. Reference Kim, Kim, Won and Lee2021). In the scenario where a large gap exists, missing both large- and small-scale features, Buzzicotti et al. (Reference Buzzicotti, Bonaccorso, Di Leoni and Biferale2021) reconstructed for the first time a set of 2-D damaged snapshots of three-dimensional (3-D) rotating turbulence with GAN. Recent works show that CNN or GAN is also feasible to reconstruct the 3-D velocity fields with 2-D observations (Matsuo et al. Reference Matsuo, Nakamura, Morimoto, Fukami and Fukagata2021; Yousif et al. Reference Yousif, Yu, Hoyas, Vinuesa and Lim2022). GAN consists of two CNNs, a generator and a discriminator. Previous preliminary research indicates that the introduction of discriminator significantly improves the high-order statistics of the prediction (Deng et al. Reference Deng, He, Liu and Kim2019; Subramaniam et al. Reference Subramaniam, Wong, Borker, Nimmagadda and Lele2020; Buzzicotti et al. Reference Buzzicotti, Bonaccorso, Di Leoni and Biferale2021; Güemes et al. Reference Güemes, Discetti, Ianiro, Sirmacek, Azizpour and Vinuesa2021; Kim et al. Reference Kim, Kim, Won and Lee2021). Different from the previous work (Buzzicotti et al. Reference Buzzicotti, Bonaccorso, Di Leoni and Biferale2021), here we attack the problem of data reconstruction with GAN by changing the ratio between the input measurements and the missing information. Furthermore, we present a first systematic assessment of the nonlinear vs linear reconstruction methods, by showing also results using two different POD-based methods. We discuss and present novel results concerning both point-based and statistical metrics. Moreover, the dependency of GAN properties on the adversarial ratio is also systematically studied. The adversarial ratio gauges the relative importance of the discriminator in comparison with the generator throughout the training process.

Two factors make the reconstruction difficult. First, turbulent flows have a large number of active degrees of freedom which grows with the turbulent intensity, typically parameterized by the Reynolds number. The second factor is the spatio-temporal gappiness, which depends on the area and geometry of the missing region. In the current work we conduct a first systematic comparative study between GPOD, EPOD and GAN on the reconstruction of turbulence in the presence of rotation, which is a paradigmatic system with both coherent vortices at large scales and strong non-Gaussian and intermittent fluctuations at small scales (Alexakis & Biferale Reference Alexakis and Biferale2018; Buzzicotti, Clark Di Leoni & Biferale Reference Buzzicotti, Clark Di Leoni and Biferale2018; Di Leoni et al. Reference Di Leoni, Alexakis, Biferale and Buzzicotti2020).

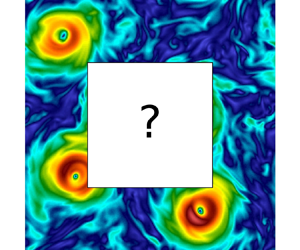

Figure 1 displays some examples of the reconstruction task in this work. The aim is to fill the gap region with data close to the ground truth (figure 1c,f). A second long term goal would also be to systematically perform features ranking, which is understanding the quality of the supplied information on the basis of its performance in the reconstruction goal. The latter is connected to the sacred grail of turbulence: identifying the master degrees of freedom driving turbulent flow, connected also to control problems (Choi, Moin & Kim Reference Choi, Moin and Kim1994; Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Gad-el Hak & Tsai Reference Gad-el Hak and Tsai2006; Brunton & Noack Reference Brunton and Noack2015; Fahland et al. Reference Fahland, Stroh, Frohnapfel, Atzori, Vinuesa, Schlatter and Gatti2021). The study presented in this work is a first step towards a quantitative assessment of the tools that can be employed to ask and answer this kind of question. In order to focus on two paradigmatic realistic set-ups, we study two gap geometries, a central square gap (figure 1a,d) and random gappiness (figure 1b,e). The latter is related to practical applications such as PTV and PIV. The gap area is also varied from a small to an extremely large proportion up to the limit where only one thin layer is supplied at the border, a seemingly impossible reconstruction task, for evaluation of the three methods in different situations. In a recent work, Clark Di Leoni et al. (Reference Clark Di Leoni, Agarwal, Zaki, Meneveau and Katz2022) used physics-informed neural networks for reconstruction with sparse and noisy particle tracks obtained experimentally. As in practice the measurements are always noisy or filtered, we also investigate the robustness of the EPOD and GAN reconstruction methods.

Figure 1. Examples of the reconstruction task on 2-D slices of 3-D turbulent rotating flows, where the colour code is proportional to the velocity module. Two gap geometries are considered: (a,d) a central square gap and (b,e) random gappiness. Gaps in each row have the same area and the corresponding ground truth is shown in (c,f). We denote ![]() $G$ as the gap region and

$G$ as the gap region and ![]() $S=I\setminus G$ as the known region, where

$S=I\setminus G$ as the known region, where ![]() $I$ is the entire 2-D image.

$I$ is the entire 2-D image.

The paper is organized as follows. Section 2.1 describes the dataset and the reconstruction problem set-up. The GPOD, EPOD and GAN-based reconstruction methods are introduced in §§ 2.2, 2.3 and 2.4, respectively. In § 3, the performances of POD- and GAN-based methods in turbulence reconstruction are systematically compared when there is one central square gap of different sizes. We address the dependency on the adversarial ratio for the GAN-based reconstruction in § 4 and show results for random gappiness from GPOD, EPOD and GAN in § 5. The robustness of EPOD and GAN to measurement noise and the computational cost of all methods are discussed in § 6. Finally, conclusions of the work are presented in § 7.

2. Methodology

2.1. Dataset and reconstruction problem set-up

For the evaluation of different reconstruction tools, we use a dataset from the TURB-Rot (Biferale et al. Reference Biferale, Bonaccorso, Buzzicotti and di Leoni2020) open database. The dataset used in this study is generated from a direct numerical simulation of the Navier–Stokes equations for homogeneous incompressible flow in the presence of rotation with periodic boundary conditions (Godeferd & Moisy Reference Godeferd and Moisy2015; Pouquet et al. Reference Pouquet, Rosenberg, Marino and Herbert2018; Seshasayanan & Alexakis Reference Seshasayanan and Alexakis2018; van Kan & Alexakis Reference van Kan and Alexakis2020; Yokoyama & Takaoka Reference Yokoyama and Takaoka2021). In a rotating frame of reference, both Coriolis and centripetal accelerations must be taken into account. However, the centrifugal force can be expressed as the gradient of the centrifugal potential and included in the pressure gradient term. In this way, the resulting equations will explicitly show only the presence of the Coriolis force, while the centripetal term is absorbed into a modified pressure (Cohen & Kundu Reference Cohen and Kundu2004). The simulation is performed using a fully dealiased parallel pseudo-spectral code in a 3-D (![]() $x_1$-

$x_1$-![]() $x_2$-

$x_2$-![]() $x_3$) periodic domain of size

$x_3$) periodic domain of size ![]() $[0,2{\rm \pi} )^3$ with 256 grid points in each direction, as shown in the inset of figure 2(b). Denoting

$[0,2{\rm \pi} )^3$ with 256 grid points in each direction, as shown in the inset of figure 2(b). Denoting ![]() $l_0=2{\rm \pi}$ as the domain size, the Fourier spectral wavenumber is

$l_0=2{\rm \pi}$ as the domain size, the Fourier spectral wavenumber is ![]() $\boldsymbol {k}=(k_1,k_2,k_3)$, where

$\boldsymbol {k}=(k_1,k_2,k_3)$, where ![]() $k_1=2n_1{\rm \pi} /l_0$ (

$k_1=2n_1{\rm \pi} /l_0$ (![]() $n_1\in \mathbb {Z}$) and one can similarly obtain

$n_1\in \mathbb {Z}$) and one can similarly obtain ![]() $k_2$ and

$k_2$ and ![]() $k_3$. The governing equations can be written as

$k_3$. The governing equations can be written as

where ![]() $\boldsymbol {u}$ is the incompressible velocity,

$\boldsymbol {u}$ is the incompressible velocity, ![]() $\boldsymbol {\varOmega }=\varOmega \hat {\boldsymbol {x}}_3$ is the system rotation vector,

$\boldsymbol {\varOmega }=\varOmega \hat {\boldsymbol {x}}_3$ is the system rotation vector, ![]() $\tilde {p}=p+\frac {1}{2}\rho \lVert \boldsymbol {\varOmega }\times \boldsymbol {x}\rVert ^2$ is the pressure in an inertial frame modified by a centrifugal term,

$\tilde {p}=p+\frac {1}{2}\rho \lVert \boldsymbol {\varOmega }\times \boldsymbol {x}\rVert ^2$ is the pressure in an inertial frame modified by a centrifugal term, ![]() $\nu$ is the kinematic viscosity and

$\nu$ is the kinematic viscosity and ![]() $\boldsymbol {f}$ is an external forcing mechanism at scales around

$\boldsymbol {f}$ is an external forcing mechanism at scales around ![]() $k_f=4$ via a second-order Ornstein–Uhlenbeck process (Sawford Reference Sawford1991; Buzzicotti et al. Reference Buzzicotti, Bhatnagar, Biferale, Lanotte and Ray2016). Figure 2(a) plots the energy evolution with time of the whole simulation. The energy spectrum

$k_f=4$ via a second-order Ornstein–Uhlenbeck process (Sawford Reference Sawford1991; Buzzicotti et al. Reference Buzzicotti, Bhatnagar, Biferale, Lanotte and Ray2016). Figure 2(a) plots the energy evolution with time of the whole simulation. The energy spectrum ![]() $E(k)=\frac {1}{2}\sum _{k\le \lVert \boldsymbol {k}\rVert < k+1}\lVert \hat {\boldsymbol {u}}(\boldsymbol {k})\rVert ^2$ averaged over time is shown in figure 2(b), where the grey area indicates the forcing wavenumbers. To enlarge the inertial range between

$E(k)=\frac {1}{2}\sum _{k\le \lVert \boldsymbol {k}\rVert < k+1}\lVert \hat {\boldsymbol {u}}(\boldsymbol {k})\rVert ^2$ averaged over time is shown in figure 2(b), where the grey area indicates the forcing wavenumbers. To enlarge the inertial range between ![]() $k_f$ and the Kolmogorov dissipative wavenumber,

$k_f$ and the Kolmogorov dissipative wavenumber, ![]() $k_\eta =32$, which is picked as the scale where

$k_\eta =32$, which is picked as the scale where ![]() $E(k)$ starts to decay exponentially, the viscous term

$E(k)$ starts to decay exponentially, the viscous term ![]() $\nu \nabla ^2\boldsymbol {u}$ in (2.1) is replaced with a hyperviscous term

$\nu \nabla ^2\boldsymbol {u}$ in (2.1) is replaced with a hyperviscous term ![]() $\nu _h\nabla ^4\boldsymbol {u}$ (Haugen & Brandenburg Reference Haugen and Brandenburg2004; Frisch et al. Reference Frisch, Kurien, Pandit, Pauls, Ray, Wirth and Zhu2008). We define an effective Reynolds number as

$\nu _h\nabla ^4\boldsymbol {u}$ (Haugen & Brandenburg Reference Haugen and Brandenburg2004; Frisch et al. Reference Frisch, Kurien, Pandit, Pauls, Ray, Wirth and Zhu2008). We define an effective Reynolds number as ![]() $Re_{eff}=(k_0/k_\eta )^{-3/4}\approx 13.45$, with the smallest wavenumber

$Re_{eff}=(k_0/k_\eta )^{-3/4}\approx 13.45$, with the smallest wavenumber ![]() $k_0=1$. A linear friction term

$k_0=1$. A linear friction term ![]() $-\beta \boldsymbol {u}$ acting only on wavenumbers

$-\beta \boldsymbol {u}$ acting only on wavenumbers ![]() $\lVert \boldsymbol {k}\rVert \le 2$ is also used in right-hand side of (2.1) to prevent a large-scale condensation (Alexakis & Biferale Reference Alexakis and Biferale2018). As shown in figure 2(a), the flow reaches a stationary state with a Rossby number

$\lVert \boldsymbol {k}\rVert \le 2$ is also used in right-hand side of (2.1) to prevent a large-scale condensation (Alexakis & Biferale Reference Alexakis and Biferale2018). As shown in figure 2(a), the flow reaches a stationary state with a Rossby number ![]() $Ro=\mathcal {E}^{1/2}/(\varOmega /k_f)\approx 0.1$, where

$Ro=\mathcal {E}^{1/2}/(\varOmega /k_f)\approx 0.1$, where ![]() $\mathcal {E}$ is the kinetic energy. The integral length scale is

$\mathcal {E}$ is the kinetic energy. The integral length scale is ![]() $L=\mathcal {E}/\int kE(k)\,\mathrm {d}k\sim 0.15l_0$ and the integral time scale is

$L=\mathcal {E}/\int kE(k)\,\mathrm {d}k\sim 0.15l_0$ and the integral time scale is ![]() $T=L/\mathcal {E}^{1/2}\approx 0.185$. Readers can refer to Biferale et al. (Reference Biferale, Bonaccorso, Buzzicotti and di Leoni2020) for more details on the simulation.

$T=L/\mathcal {E}^{1/2}\approx 0.185$. Readers can refer to Biferale et al. (Reference Biferale, Bonaccorso, Buzzicotti and di Leoni2020) for more details on the simulation.

Figure 2. (a) Energy evolution of the turbulent flow, where we also show the sampling time periods for the training/validation and testing data. (b) The averaged energy spectrum. The grey area indicates the forcing wavenumbers, and ![]() $k_\eta$ is the Kolmogorov dissipative wavenumber where

$k_\eta$ is the Kolmogorov dissipative wavenumber where ![]() $E(k)$ starts to decay exponentially. The inset shows an instantaneous visualization of the velocity module with the frame of reference for the simulation.

$E(k)$ starts to decay exponentially. The inset shows an instantaneous visualization of the velocity module with the frame of reference for the simulation.

The dataset is extracted from the above simulation as follows. First, we sampled 600 snapshots of the whole 3-D velocity field from time ![]() $t=276$ up to

$t=276$ up to ![]() $t=876$ for training and validation, and we sampled 160 3-D snapshots from

$t=876$ for training and validation, and we sampled 160 3-D snapshots from ![]() $t=1516$ to

$t=1516$ to ![]() $t=1676$ for testing, as shown in figure 2(a). A sampling interval

$t=1676$ for testing, as shown in figure 2(a). A sampling interval ![]() ${\rm \Delta} t_s\approx 5.41T$ is used to decrease correlations in time between two successive snapshots.

${\rm \Delta} t_s\approx 5.41T$ is used to decrease correlations in time between two successive snapshots.

To reduce the amount of data to be analysed, the resolution of sampled fields is downsized from ![]() $256^3$ to

$256^3$ to ![]() $64^3$ by a spectral low-pass filter

$64^3$ by a spectral low-pass filter

where the cutoff is the Kolmogorov dissipative wavenumber such as to only eliminate the fully dissipative degrees of freedom where the flow becomes smooth (Frisch Reference Frisch1995). Therefore, there is no loss of data complexity in this procedure and it also indicates that the measurement resolution corresponds to ![]() $k_\eta$.

$k_\eta$.

For each downsized 3-D field, we selected 16 horizontal (![]() $x_1$–

$x_1$–![]() $x_2$) planes at different

$x_2$) planes at different ![]() $x_3$, each of which can be augmented to 11 (for training and validation) or 8 (for testing) different ones by randomly shifting it along both the

$x_3$, each of which can be augmented to 11 (for training and validation) or 8 (for testing) different ones by randomly shifting it along both the ![]() $x_1$ and

$x_1$ and ![]() $x_2$ directions using periodic boundary conditions (Biferale et al. Reference Biferale, Bonaccorso, Buzzicotti and di Leoni2020). Therefore, a total of 176 or 128 planes can be obtained at each instant of time.

$x_2$ directions using periodic boundary conditions (Biferale et al. Reference Biferale, Bonaccorso, Buzzicotti and di Leoni2020). Therefore, a total of 176 or 128 planes can be obtained at each instant of time.

Finally, the 105 600 planes sampled at early times are randomly shuffled and used to constitute the train/validation split: 84 480 (80 %)/10 560 (10 %), which is used for the training process, while the other 20 480 planes sampled at later times are used for the testing process.

The parameters of the dataset are summarized in table 1. In the present study, we only reconstruct the velocity module, ![]() $u=\lVert \boldsymbol {u}\rVert$, which is always positive. Note that we restrict our study to 2-D horizontal slices in order to make contact with geophysical observation, although GPOD, EPOD and GAN are feasible to use with 3-D data.

$u=\lVert \boldsymbol {u}\rVert$, which is always positive. Note that we restrict our study to 2-D horizontal slices in order to make contact with geophysical observation, although GPOD, EPOD and GAN are feasible to use with 3-D data.

Table 1. Description of the dataset used for the evaluation of reconstruction methods. Here, ![]() $N_{x_1}$ and

$N_{x_1}$ and ![]() $N_{x_2}$ indicate the resolution of the horizontal plane. The number of fields for training/validation/testing is denoted as

$N_{x_2}$ indicate the resolution of the horizontal plane. The number of fields for training/validation/testing is denoted as ![]() $N_{train}$/

$N_{train}$/![]() $N_{valid}$/

$N_{valid}$/![]() $N_{test}$. The sampling time periods for training/validation and testing are

$N_{test}$. The sampling time periods for training/validation and testing are ![]() $T_{train}$ and

$T_{train}$ and ![]() $T_{test}$, respectively.

$T_{test}$, respectively.

We next describe the reconstruction problem set-up. Figure 3 presents an example of a gappy field, where ![]() $I$,

$I$, ![]() $G$ and

$G$ and ![]() $S$ represent the whole region, the gap region and the known region, respectively. Given the damaged area

$S$ represent the whole region, the gap region and the known region, respectively. Given the damaged area ![]() $A_G$, we can define the gap size as

$A_G$, we can define the gap size as ![]() $l=\sqrt {A_G}$. As shown in figure 1, two gap geometries are considered: (i) a square gap located at the centre and (ii) random gappiness which spreads over the whole region. Once the positions in

$l=\sqrt {A_G}$. As shown in figure 1, two gap geometries are considered: (i) a square gap located at the centre and (ii) random gappiness which spreads over the whole region. Once the positions in ![]() $G$ are determined,

$G$ are determined, ![]() $G$ is fixed for all planes over the training and the testing processes. Note that the GAN-based reconstruction can also handle the case where

$G$ is fixed for all planes over the training and the testing processes. Note that the GAN-based reconstruction can also handle the case where ![]() $G$ is randomly changed for different planes (not shown). For a field

$G$ is randomly changed for different planes (not shown). For a field ![]() $u(\boldsymbol {x})$ defined on

$u(\boldsymbol {x})$ defined on ![]() $I$, we define the supplied measurements in

$I$, we define the supplied measurements in ![]() $S$ as

$S$ as ![]() $u_S(\boldsymbol {x})=u(\boldsymbol {x})$ (with

$u_S(\boldsymbol {x})=u(\boldsymbol {x})$ (with ![]() $\boldsymbol {x}\in S$), and the ground truth or the predicted field in

$\boldsymbol {x}\in S$), and the ground truth or the predicted field in ![]() $G$, as

$G$, as ![]() $u_G^{(t)}(\boldsymbol {x})=u(\boldsymbol {x})$ or

$u_G^{(t)}(\boldsymbol {x})=u(\boldsymbol {x})$ or ![]() $u_G^{(p)}(\boldsymbol {x})$ (with

$u_G^{(p)}(\boldsymbol {x})$ (with ![]() $\boldsymbol {x}\in G$). The reconstruction models are ‘learned’ with the training data defined on the whole region

$\boldsymbol {x}\in G$). The reconstruction models are ‘learned’ with the training data defined on the whole region ![]() $I$. Once the training process completed, one can evaluate the models by comparing the prediction and the ground truth in

$I$. Once the training process completed, one can evaluate the models by comparing the prediction and the ground truth in ![]() $G$ over the test dataset.

$G$ over the test dataset.

Figure 3. Schematic diagram of a gappy field.

2.2. The GPOD reconstruction

This section briefly presents the procedure of GPOD. The first step is to conduct POD analysis with the training data on the whole region ![]() $I$, namely solving the eigenvalue problem

$I$, namely solving the eigenvalue problem

where

is the correlation matrix, given ![]() $\langle {\cdot }\rangle$ as the average over the training dataset. We denote

$\langle {\cdot }\rangle$ as the average over the training dataset. We denote ![]() $\lambda _n$ as the eigenvalues and

$\lambda _n$ as the eigenvalues and ![]() $\psi _n(\boldsymbol {x})$ as the POD eigenmodes, where

$\psi _n(\boldsymbol {x})$ as the POD eigenmodes, where ![]() $n=1,\ldots,N_I$ and

$n=1,\ldots,N_I$ and ![]() $N_I=N_{x_1}\times N_{x_2}$ is the number of points in

$N_I=N_{x_1}\times N_{x_2}$ is the number of points in ![]() $I$. For the homogeneous periodic flow considered in this study, it can be demonstrated that the POD modes correspond to Fourier modes, and their spectra are identical (Holmes et al. Reference Holmes, Lumley, Berkooz and Rowley2012). In all POD analyses of the present study, the mean of

$I$. For the homogeneous periodic flow considered in this study, it can be demonstrated that the POD modes correspond to Fourier modes, and their spectra are identical (Holmes et al. Reference Holmes, Lumley, Berkooz and Rowley2012). In all POD analyses of the present study, the mean of ![]() $u(\boldsymbol {x})$ is not removed. Any realization of the field can be decomposed as

$u(\boldsymbol {x})$ is not removed. Any realization of the field can be decomposed as

\begin{equation} u(\boldsymbol{x})=\sum_{n=1}^{N_I}a_n\psi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in I), \end{equation}

\begin{equation} u(\boldsymbol{x})=\sum_{n=1}^{N_I}a_n\psi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in I), \end{equation}with the POD coefficients

In the case when we have data only in ![]() $S$, the relation (2.6) cannot be used and one can adopt the dimension reduction by keeping only the first

$S$, the relation (2.6) cannot be used and one can adopt the dimension reduction by keeping only the first ![]() $N'$ POD modes and minimizing the distance between the measurements and the linear POD decomposition (Everson & Sirovich Reference Everson and Sirovich1995)

$N'$ POD modes and minimizing the distance between the measurements and the linear POD decomposition (Everson & Sirovich Reference Everson and Sirovich1995)

\begin{equation} \tilde{E} = \int_S \left| u_S(\boldsymbol{x}) - \sum_{n=1}^{N'} a_{n}^{(p)}\psi_n(\boldsymbol{x}) \right|^2 \, \mathrm{d}\kern 0.06em \boldsymbol{x}, \end{equation}

\begin{equation} \tilde{E} = \int_S \left| u_S(\boldsymbol{x}) - \sum_{n=1}^{N'} a_{n}^{(p)}\psi_n(\boldsymbol{x}) \right|^2 \, \mathrm{d}\kern 0.06em \boldsymbol{x}, \end{equation}

to obtain the predicted coefficients ![]() $\{a_{n}^{(p)}\}_{n=1}^{N'}$. Then the GPOD prediction can be given as

$\{a_{n}^{(p)}\}_{n=1}^{N'}$. Then the GPOD prediction can be given as

\begin{equation} u_G^{(p)}(\boldsymbol{x})=\sum_{n=1}^{N'}a_{n}^{(p)}\psi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in G). \end{equation}

\begin{equation} u_G^{(p)}(\boldsymbol{x})=\sum_{n=1}^{N'}a_{n}^{(p)}\psi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in G). \end{equation}

We optimize the value of ![]() $N'$ during the training phase by requiring a minimum mean

$N'$ during the training phase by requiring a minimum mean ![]() $L_2$ distance with the ground truth in the gap

$L_2$ distance with the ground truth in the gap

Table 2 summarizes the optimal ![]() $N'$ used in this study. An analysis of reconstruction error for different

$N'$ used in this study. An analysis of reconstruction error for different ![]() $N'$ is conducted in Appendix A. Let us notice that there also exists a different approach to select, frame by frame, a subset of POD modes to be used in the GPOD approach, based on Lasso, a regression analysis that performs mode selection with regularization (Tibshirani Reference Tibshirani1996). Results using this second approach do not show any significant improvement in a typical case of our study (see Appendix B).

$N'$ is conducted in Appendix A. Let us notice that there also exists a different approach to select, frame by frame, a subset of POD modes to be used in the GPOD approach, based on Lasso, a regression analysis that performs mode selection with regularization (Tibshirani Reference Tibshirani1996). Results using this second approach do not show any significant improvement in a typical case of our study (see Appendix B).

Table 2. Summary of the optimal ![]() $N'$ for the square gap (s.g.) and random gappiness (r.g.) with different sizes.

$N'$ for the square gap (s.g.) and random gappiness (r.g.) with different sizes.

2.3. The EPOD reconstruction

To use EPOD for flow reconstruction, we first compute the correlation matrix

and solve the eigenvalue problem

to obtain the eigenvalues ![]() $\sigma _n$ and the POD eigenmodes

$\sigma _n$ and the POD eigenmodes ![]() $\phi _n(\boldsymbol {x})$, where

$\phi _n(\boldsymbol {x})$, where ![]() $n=1,\ldots,N_S$ and

$n=1,\ldots,N_S$ and ![]() $N_S$ equals to the number of points in

$N_S$ equals to the number of points in ![]() $S$. We remark that

$S$. We remark that ![]() $\phi _n(\boldsymbol {x})$ are not Fourier modes, as the presence of the internal gap breaks the homogeneity. Any realization of the measured field in

$\phi _n(\boldsymbol {x})$ are not Fourier modes, as the presence of the internal gap breaks the homogeneity. Any realization of the measured field in ![]() $S$ can be decomposed as

$S$ can be decomposed as

\begin{equation} u_S(\boldsymbol{x})=\sum_{n=1}^{N_S}b_n\phi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in S), \end{equation}

\begin{equation} u_S(\boldsymbol{x})=\sum_{n=1}^{N_S}b_n\phi_n(\boldsymbol{x})\quad(\boldsymbol{x}\in S), \end{equation}

where the ![]() $n$th POD coefficient is obtained from

$n$th POD coefficient is obtained from

Furthermore, with (2.12) and an important property (Borée Reference Borée2003), ![]() $\langle b_nb_p\rangle =\sigma _n\delta _{np}$, one can derive the following identity:

$\langle b_nb_p\rangle =\sigma _n\delta _{np}$, one can derive the following identity:

Here, we reiterate that ![]() $\langle {\cdot }\rangle$ denotes the average over the training dataset. Specifically,

$\langle {\cdot }\rangle$ denotes the average over the training dataset. Specifically, ![]() $\langle b_n u_S\rangle$ can be interpreted as

$\langle b_n u_S\rangle$ can be interpreted as ![]() $(1/N_{train})\sum _{c=1}^{N_{train}}b_n^{(c)}u_S^{(c)}$, where the superscript

$(1/N_{train})\sum _{c=1}^{N_{train}}b_n^{(c)}u_S^{(c)}$, where the superscript ![]() $c$ represents the index of a particular snapshot. The extended POD mode is defined by replacing

$c$ represents the index of a particular snapshot. The extended POD mode is defined by replacing ![]() $u_S(\boldsymbol {x})$ with the field to be predicted

$u_S(\boldsymbol {x})$ with the field to be predicted ![]() $u_G^{(t)}(\boldsymbol {x})$ in (2.14)

$u_G^{(t)}(\boldsymbol {x})$ in (2.14)

Once we have obtained the set of EPOD modes (2.15) in the training process we can start the reconstruction of a test data with the measurement ![]() $u_S(\boldsymbol {x})$ from calculating the POD coefficients (2.13) and the prediction in

$u_S(\boldsymbol {x})$ from calculating the POD coefficients (2.13) and the prediction in ![]() $G$ can be obtained as the correlated part with

$G$ can be obtained as the correlated part with ![]() $u_S(\boldsymbol {x})$ (Borée Reference Borée2003)

$u_S(\boldsymbol {x})$ (Borée Reference Borée2003)

\begin{equation} u_G^{(p)}(\boldsymbol{x})=\sum_{n=1}^{N_S}b_n\phi_n^E(\boldsymbol{x})\quad(\boldsymbol{x}\in G). \end{equation}

\begin{equation} u_G^{(p)}(\boldsymbol{x})=\sum_{n=1}^{N_S}b_n\phi_n^E(\boldsymbol{x})\quad(\boldsymbol{x}\in G). \end{equation}2.4. The GAN-based reconstruction with context encoders

In a previous work, Buzzicotti et al. (Reference Buzzicotti, Bonaccorso, Di Leoni and Biferale2021) used a context encoder (Pathak et al. Reference Pathak, Krahenbuhl, Donahue, Darrell and Efros2016) embedded in GAN to generate missing data for the case where the total gap size is fixed, but with different spatial distributions. To generalize the previous approach to study gaps of different geometries and sizes, we extend previous GAN architecture by adding one layer at the start, two layers at the end of the generator and one layer at the start of the discriminator, as shown in figure 4. The generator is a functional ![]() $GEN({\cdot })$ first taking the damaged ‘context’,

$GEN({\cdot })$ first taking the damaged ‘context’, ![]() $u_S(\boldsymbol {x})$, to produce a latent feature representation with an encoder, and second with a decoder to predict the missing data,

$u_S(\boldsymbol {x})$, to produce a latent feature representation with an encoder, and second with a decoder to predict the missing data, ![]() $u_G^{(p)}(\boldsymbol {x})=GEN(u_S(\boldsymbol {x}))$. The latent feature represents the output vector of the encoder with

$u_G^{(p)}(\boldsymbol {x})=GEN(u_S(\boldsymbol {x}))$. The latent feature represents the output vector of the encoder with ![]() $4096$ neurons in figure 4, extracted from the input with the convolutions and nonlinear activations. To constrain the predicted velocity module being positive, a rectified linear unit (ReLU) activation function is adopted at the last layer of generator. The discriminator acts as a ‘referee’ functional

$4096$ neurons in figure 4, extracted from the input with the convolutions and nonlinear activations. To constrain the predicted velocity module being positive, a rectified linear unit (ReLU) activation function is adopted at the last layer of generator. The discriminator acts as a ‘referee’ functional ![]() $D({\cdot })$, which takes either

$D({\cdot })$, which takes either ![]() $u_G^{(t)}(\boldsymbol {x})$ or

$u_G^{(t)}(\boldsymbol {x})$ or ![]() $u_G^{(p)}(\boldsymbol {x})$ and outputs the probability that the provided input (

$u_G^{(p)}(\boldsymbol {x})$ and outputs the probability that the provided input (![]() $u_G^{(t)}$ or

$u_G^{(t)}$ or ![]() $u_G^{(p)}$) belongs to the real turbulent ensemble. The generator is trained to minimize the following loss function:

$u_G^{(p)}$) belongs to the real turbulent ensemble. The generator is trained to minimize the following loss function:

where the ![]() $L_2$ loss

$L_2$ loss

is the mean squared error (MSE) between the prediction and the ground truth. It is important to stress that, contrary to the GPOD case, the supervised ![]() $L_2$ loss is calculated only inside the gap region

$L_2$ loss is calculated only inside the gap region ![]() $G$. The hyper-parameter

$G$. The hyper-parameter ![]() $\lambda _{adv}$ is called the adversarial ratio and the adversarial loss is

$\lambda _{adv}$ is called the adversarial ratio and the adversarial loss is

\begin{align} \mathcal{L}_{adv}&=\langle\log(1-D(u_G^{(p)}))\rangle= \int p(u_S)\log[1-D(GEN(u_S))]\,\mathrm{d}u_S \nonumber\\ &=\int p_p(u_G)\log(1-D(u_G))\,\mathrm{d}u_G, \end{align}

\begin{align} \mathcal{L}_{adv}&=\langle\log(1-D(u_G^{(p)}))\rangle= \int p(u_S)\log[1-D(GEN(u_S))]\,\mathrm{d}u_S \nonumber\\ &=\int p_p(u_G)\log(1-D(u_G))\,\mathrm{d}u_G, \end{align}

where ![]() $p(u_S)$ is the probability distribution of the field in

$p(u_S)$ is the probability distribution of the field in ![]() $S$ over the training dataset and

$S$ over the training dataset and ![]() $p_p(u_G)$ is the probability distribution of the predicted field in

$p_p(u_G)$ is the probability distribution of the predicted field in ![]() $G$ given by the generator. At the same time, the discriminator is trained to maximize the cross-entropy based on its classification prediction for both real and predicted samples

$G$ given by the generator. At the same time, the discriminator is trained to maximize the cross-entropy based on its classification prediction for both real and predicted samples

\begin{align} \mathcal{L}_{DIS}&=\langle\log(D(u_G^{(t)}))\rangle+\langle\log(1-D(u_G^{(p)}))\rangle \nonumber\\ &=\int[p_t(u_G)\log(D(u_G))+p_p(u_G)\log(1-D(u_G))]\,\mathrm{d}u_G, \end{align}

\begin{align} \mathcal{L}_{DIS}&=\langle\log(D(u_G^{(t)}))\rangle+\langle\log(1-D(u_G^{(p)}))\rangle \nonumber\\ &=\int[p_t(u_G)\log(D(u_G))+p_p(u_G)\log(1-D(u_G))]\,\mathrm{d}u_G, \end{align}

where ![]() $p_t(u_G)$ is the probability distribution of the ground truth,

$p_t(u_G)$ is the probability distribution of the ground truth, ![]() $u_G^{(t)}(\boldsymbol {x})$. Goodfellow et al. (Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) further showed that the adversarial training between generator and discriminator with

$u_G^{(t)}(\boldsymbol {x})$. Goodfellow et al. (Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) further showed that the adversarial training between generator and discriminator with ![]() $\lambda _{adv}=1$ in (2.17) minimizes the Jensen–Shannon (JS) divergence between the real and the predicted distributions,

$\lambda _{adv}=1$ in (2.17) minimizes the Jensen–Shannon (JS) divergence between the real and the predicted distributions, ![]() ${\rm JSD}(p_t\parallel p_p)$. Refer to (3.6) for the definition of the JS divergence. Therefore, the adversarial loss helps the generator to produce predictions that are statistically similar to real turbulent configurations. It is important to stress that the adversarial ratio

${\rm JSD}(p_t\parallel p_p)$. Refer to (3.6) for the definition of the JS divergence. Therefore, the adversarial loss helps the generator to produce predictions that are statistically similar to real turbulent configurations. It is important to stress that the adversarial ratio ![]() $\lambda _{adv}$, which controls the weighted summation of

$\lambda _{adv}$, which controls the weighted summation of ![]() $\mathcal {L}_{MSE}$ and

$\mathcal {L}_{MSE}$ and ![]() $\mathcal {L}_{adv}$, is tuned to reach a balance between the MSE and turbulent statistics of the reconstruction (see § 4). More details about the GAN are discussed in Appendix C, including the architecture, hyper-parameters and the training schedule.

$\mathcal {L}_{adv}$, is tuned to reach a balance between the MSE and turbulent statistics of the reconstruction (see § 4). More details about the GAN are discussed in Appendix C, including the architecture, hyper-parameters and the training schedule.

Figure 4. Architecture of generator and discriminator network for flow reconstruction with a square gap. The kernel size ![]() $k$ and the corresponding stride

$k$ and the corresponding stride ![]() $s$ are determined based on the gap size

$s$ are determined based on the gap size ![]() $l$. Similar architecture holds for random gappiness as well.

$l$. Similar architecture holds for random gappiness as well.

3. Comparison between POD- and GAN-based reconstructions

To conduct a systematic comparison between POD- and GAN-based reconstructions, we start by studying the case with a central square gap of various sizes (see figure 1). All reconstruction methods are first evaluated with the predicted velocity module itself, which is dominated by the large-scale coherent structures. The predictions are further assessed from a multi-scale perspective, with the help of the gradient of the predicted velocity module, spectral properties and multi-scale flatness. Finally, the performance on predicting extreme events is studied for all methods.

3.1. Large-scale information

In this section, the predicted velocity module in the missing region is quantitatively evaluated. First we consider the reconstruction error and define the normalized MSE in the gap as

where ![]() $\langle {\cdot }\rangle$ represents hereafter the average over the test data. The normalization factor is defined as

$\langle {\cdot }\rangle$ represents hereafter the average over the test data. The normalization factor is defined as

where

\begin{equation} \sigma_G^{(p)} = \left\langle \frac{1}{A_G} \int_G |u_G^{(p)}|^2(\boldsymbol{x}) \, \mathrm{d}\kern 0.06em \boldsymbol{x} \right\rangle^{1/2}, \end{equation}

\begin{equation} \sigma_G^{(p)} = \left\langle \frac{1}{A_G} \int_G |u_G^{(p)}|^2(\boldsymbol{x}) \, \mathrm{d}\kern 0.06em \boldsymbol{x} \right\rangle^{1/2}, \end{equation}

and ![]() $\sigma _G^{(t)}$ is defined similarly. With the specific form of

$\sigma _G^{(t)}$ is defined similarly. With the specific form of ![]() $E_{u_G}$, predictions with too small or too large energy will give a large MSE. To provide a baseline for MSE, a set of predictions can be made by randomly sampling the missing field from the true turbulent data. In other words, the baseline comes from uncorrelated predictions that are statistically consistent with the ground truth. The baseline value is around 0.54, see Appendix D. Figure 5 shows the

$E_{u_G}$, predictions with too small or too large energy will give a large MSE. To provide a baseline for MSE, a set of predictions can be made by randomly sampling the missing field from the true turbulent data. In other words, the baseline comes from uncorrelated predictions that are statistically consistent with the ground truth. The baseline value is around 0.54, see Appendix D. Figure 5 shows the ![]() $\mathrm {MSE}(u_G)$ from GPOD, EPOD and GAN reconstructions in a square gap with different sizes. The MSE is first calculated over data batches of size 128 (batch size used for GAN training), then the same calculation is repeated over 160 different batches, from which we calculate the MSE mean and its range of variation. The EPOD and GAN reconstructions provide similar MSEs except at the largest gap size, where GAN has a little bit larger MSE than EPOD. Besides, both EPOD and GAN have smaller MSEs than GPOD for all gap sizes. Figure 6 shows the probability density function (p.d.f.) of the spatially averaged

$\mathrm {MSE}(u_G)$ from GPOD, EPOD and GAN reconstructions in a square gap with different sizes. The MSE is first calculated over data batches of size 128 (batch size used for GAN training), then the same calculation is repeated over 160 different batches, from which we calculate the MSE mean and its range of variation. The EPOD and GAN reconstructions provide similar MSEs except at the largest gap size, where GAN has a little bit larger MSE than EPOD. Besides, both EPOD and GAN have smaller MSEs than GPOD for all gap sizes. Figure 6 shows the probability density function (p.d.f.) of the spatially averaged ![]() $L_2$ error in the missing region for one flow configuration

$L_2$ error in the missing region for one flow configuration

Figure 5. The MSE of the reconstructed velocity module from GPOD, EPOD and GAN in a square gap with different sizes. The abscissa is normalized by the domain size ![]() $l_0$. Red horizontal line represents the uncorrelated baseline.

$l_0$. Red horizontal line represents the uncorrelated baseline.

Figure 6. The p.d.f.s of the spatially averaged ![]() $L_2$ error over different flow configurations obtained from GPOD, EPOD and GAN for a square gap with sizes

$L_2$ error over different flow configurations obtained from GPOD, EPOD and GAN for a square gap with sizes ![]() $l/l_0=24/64$,

$l/l_0=24/64$, ![]() $40/64$ and

$40/64$ and ![]() $62/64$.

$62/64$.

where

is the normalized point-wise ![]() $L_2$ error. The p.d.f.s are shown for three different gap sizes

$L_2$ error. The p.d.f.s are shown for three different gap sizes ![]() $l/l_0=24/64$,

$l/l_0=24/64$, ![]() $40/64$ and

$40/64$ and ![]() $62/64$. Clearly, the p.d.f.s concentrating on regions of smaller

$62/64$. Clearly, the p.d.f.s concentrating on regions of smaller ![]() $\bar {\varDelta }_{u_G}$ correspond to the cases with smaller MSEs in figure 5. To further study the performance of the three tools, we plot the averaged point-wise

$\bar {\varDelta }_{u_G}$ correspond to the cases with smaller MSEs in figure 5. To further study the performance of the three tools, we plot the averaged point-wise ![]() $L_2$ error,

$L_2$ error, ![]() $\langle \varDelta _{u_G}(\boldsymbol {x})\rangle$, for a square gap of size

$\langle \varDelta _{u_G}(\boldsymbol {x})\rangle$, for a square gap of size ![]() $l/l_0=40/64$ in figure 7. It shows that GPOD produces large

$l/l_0=40/64$ in figure 7. It shows that GPOD produces large ![]() $\langle \varDelta _{u_G}\rangle$ all over the gap, while EPOD and GAN behave quite better, especially for the edge region. Moreover, GAN generates smaller

$\langle \varDelta _{u_G}\rangle$ all over the gap, while EPOD and GAN behave quite better, especially for the edge region. Moreover, GAN generates smaller ![]() $\langle \varDelta _{u_G}\rangle$ than EPOD in the inner area (figure 7b,c). However, the

$\langle \varDelta _{u_G}\rangle$ than EPOD in the inner area (figure 7b,c). However, the ![]() $L_2$ error is naturally dominated by the more energetic structures (the ones found at large scales in our turbulent flows) and does not provide an informative evaluation of the predicted fields at multiple scales, which is also important for assessing the reconstruction tools for the turbulent data. Indeed, from figure 8 it is possible to see in a glimpse that the POD- and GAN-based reconstructions have completely different multi-scale statistics which are not captured by the MSE. Figure 8 shows predictions of an instantaneous velocity module field based on GPOD, EPOD and GAN methods compared with the ground truth solution. For all three gap sizes

$L_2$ error is naturally dominated by the more energetic structures (the ones found at large scales in our turbulent flows) and does not provide an informative evaluation of the predicted fields at multiple scales, which is also important for assessing the reconstruction tools for the turbulent data. Indeed, from figure 8 it is possible to see in a glimpse that the POD- and GAN-based reconstructions have completely different multi-scale statistics which are not captured by the MSE. Figure 8 shows predictions of an instantaneous velocity module field based on GPOD, EPOD and GAN methods compared with the ground truth solution. For all three gap sizes ![]() $l/l_0=24/64$,

$l/l_0=24/64$, ![]() $40/64$ and

$40/64$ and ![]() $62/64$, GAN produces realistic reconstructions while GPOD and EPOD only generates blurry predictions. Besides, there are also obvious discontinuities between the supplied measurements and the GPOD predictions of the missing part. This is clearly due to the fact that the number of POD modes

$62/64$, GAN produces realistic reconstructions while GPOD and EPOD only generates blurry predictions. Besides, there are also obvious discontinuities between the supplied measurements and the GPOD predictions of the missing part. This is clearly due to the fact that the number of POD modes ![]() $N'$ used for prediction in (2.8) is limited, as there are only

$N'$ used for prediction in (2.8) is limited, as there are only ![]() $N_S$ measured points available in (2.7) for each damaged data (thus

$N_S$ measured points available in (2.7) for each damaged data (thus ![]() $N'< N_S$). Moreover, minimizing the

$N'< N_S$). Moreover, minimizing the ![]() $L_2$ distance from ground truth in (2.9) results in solutions with almost the correct energy contents but without the complex multi-scale properties. Unlike GPOD using global basis defined on the whole region

$L_2$ distance from ground truth in (2.9) results in solutions with almost the correct energy contents but without the complex multi-scale properties. Unlike GPOD using global basis defined on the whole region ![]() $I$, EPOD gives better results by considering the correlation between fields defined on two smaller regions,

$I$, EPOD gives better results by considering the correlation between fields defined on two smaller regions, ![]() $S$ and

$S$ and ![]() $G$. In this way the prediction (2.16) has the degrees of freedom equal to

$G$. In this way the prediction (2.16) has the degrees of freedom equal to ![]() $N_S$, which are larger than those for GPOD. Therefore, EPOD can predict the large-scale coherent structures but is still limited in generating correct multi-scale properties. Specifically, when the gap size is extremely large,

$N_S$, which are larger than those for GPOD. Therefore, EPOD can predict the large-scale coherent structures but is still limited in generating correct multi-scale properties. Specifically, when the gap size is extremely large, ![]() $N_S$ is very small thus both GPOD and EPOD have small degrees of freedom to make realistic predictions.

$N_S$ is very small thus both GPOD and EPOD have small degrees of freedom to make realistic predictions.

Figure 7. Averaged point-wise ![]() $L_2$ error obtained from (a) GPOD, (b) EPOD and (c) GAN for a square gap of size

$L_2$ error obtained from (a) GPOD, (b) EPOD and (c) GAN for a square gap of size ![]() $l/l_0=40/64$. (d) Profiles of

$l/l_0=40/64$. (d) Profiles of ![]() $\langle \varDelta _{u_G}\rangle$ along the red diagonal line shown in (a), parameterized by

$\langle \varDelta _{u_G}\rangle$ along the red diagonal line shown in (a), parameterized by ![]() $x_d$.

$x_d$.

Figure 8. Reconstruction of an instantaneous field (velocity module) by the different tools for a square gap of sizes ![]() $l/l_0=24/64$ (a),

$l/l_0=24/64$ (a), ![]() $40/64$ (b) and

$40/64$ (b) and ![]() $62/64$ (c). The damaged fields are shown in the first column, while the second to fourth columns show the reconstructed fields obtained from GPOD, EPOD and GAN. The ground truth is shown in the fifth column.

$62/64$ (c). The damaged fields are shown in the first column, while the second to fourth columns show the reconstructed fields obtained from GPOD, EPOD and GAN. The ground truth is shown in the fifth column.

To quantify the statistical similarity between the predictions and the ground truth, we can study the JS divergence, ![]() $\mathrm {JSD}(u_G)=\mathrm {JSD}(\mathrm {p.d.f.}(u_G^{(t)})\parallel \mathrm {p.d.f.}(u_G^{(p)}))$, defined on the distribution of the velocity amplitude at one point, which is a marginal distribution of the whole p.d.f. of the real or predicted fields inside the gap,

$\mathrm {JSD}(u_G)=\mathrm {JSD}(\mathrm {p.d.f.}(u_G^{(t)})\parallel \mathrm {p.d.f.}(u_G^{(p)}))$, defined on the distribution of the velocity amplitude at one point, which is a marginal distribution of the whole p.d.f. of the real or predicted fields inside the gap, ![]() $p_t$ or

$p_t$ or ![]() $p_p$. For distributions

$p_p$. For distributions ![]() $P$ and

$P$ and ![]() $Q$ of a continuous random variable

$Q$ of a continuous random variable ![]() $x$, the JS divergence is a measure of their similarity

$x$, the JS divergence is a measure of their similarity

where ![]() $M=\frac {1}{2}(P+Q)$ and

$M=\frac {1}{2}(P+Q)$ and

is the Kullback–Leibler divergence. A small JS divergence indicates that the two probability distributions are close and vice versa. We use the base 2 logarithm and thus ![]() $0\le \mathrm {JSD}(P\parallel Q)\le 1$, with

$0\le \mathrm {JSD}(P\parallel Q)\le 1$, with ![]() $\mathrm {JSD}(P\parallel Q)=0$ if and only if

$\mathrm {JSD}(P\parallel Q)=0$ if and only if ![]() $P=Q$. Similar to the MSE, the JS divergence is calculated using batches of data and 10 different batches are used to obtain its mean and range of variation. The batch size used to evaluate the JS divergence is now set at 2048, which is larger than that used for the MSE, in order to improve the estimation of the probability distributions. Figure 9 shows

$P=Q$. Similar to the MSE, the JS divergence is calculated using batches of data and 10 different batches are used to obtain its mean and range of variation. The batch size used to evaluate the JS divergence is now set at 2048, which is larger than that used for the MSE, in order to improve the estimation of the probability distributions. Figure 9 shows ![]() $\mathrm {JSD}(u_G)$ for the three reconstruction tools. We have found that GAN gives smaller

$\mathrm {JSD}(u_G)$ for the three reconstruction tools. We have found that GAN gives smaller ![]() $\mathrm {JSD}(u_G)$ than GPOD and EPOD by an order of magnitude over almost the full range of gap sizes, indicating that the p.d.f. of GAN prediction has a better correspondence to the ground truth. This is further shown in figure 10, where we present the p.d.f.s of the predicted velocity module for different gap sizes compared with that of the original data. Besides the imprecise p.d.f. shapes of GPOD and EPOD, we note that they are also predicting some negative values, which is unphysical for a velocity module. This problem is avoided in the GAN reconstruction, as a ReLU activation function has been used in the last layer of the generator.

$\mathrm {JSD}(u_G)$ than GPOD and EPOD by an order of magnitude over almost the full range of gap sizes, indicating that the p.d.f. of GAN prediction has a better correspondence to the ground truth. This is further shown in figure 10, where we present the p.d.f.s of the predicted velocity module for different gap sizes compared with that of the original data. Besides the imprecise p.d.f. shapes of GPOD and EPOD, we note that they are also predicting some negative values, which is unphysical for a velocity module. This problem is avoided in the GAN reconstruction, as a ReLU activation function has been used in the last layer of the generator.

Figure 9. The JS divergence between p.d.f.s of the velocity module inside the missing region from the original data and the predictions obtained from GPOD, EPOD and GAN for a square gap with different sizes.

Figure 10. The p.d.f.s of the velocity module in the missing region obtained from GPOD, EPOD and GAN for a square gap with different sizes. The p.d.f. of the original data over the whole region is plotted for reference and ![]() $\sigma (u)$ is the standard deviation of the original data.

$\sigma (u)$ is the standard deviation of the original data.

3.2. Multi-scale information

This section reports a quantitative analysis of the multi-scale information reconstructed by the three methods. We first study the gradient of the predicted velocity module in the missing region, ![]() $ {\partial } u_G/ {\partial } x_1$. Figure 11 plots

$ {\partial } u_G/ {\partial } x_1$. Figure 11 plots ![]() $\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$, which is similarly defined as (3.1), and we can see that all methods produce

$\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$, which is similarly defined as (3.1), and we can see that all methods produce ![]() $\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$ with values much larger than those of

$\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$ with values much larger than those of ![]() $\mathrm {MSE}(u_G)$. Moreover, GAN shows similar errors to GPOD at the largest gap size and to EPOD at small gap sizes. However, the MSE itself is not enough for a comprehensive evaluation of the reconstruction. This can be easily understood again by looking at the gradient of different reconstructions shown in figure 12. It is obvious that GAN predictions are much more ‘realistic’ than those of GPOD and EPOD, although their values of

$\mathrm {MSE}(u_G)$. Moreover, GAN shows similar errors to GPOD at the largest gap size and to EPOD at small gap sizes. However, the MSE itself is not enough for a comprehensive evaluation of the reconstruction. This can be easily understood again by looking at the gradient of different reconstructions shown in figure 12. It is obvious that GAN predictions are much more ‘realistic’ than those of GPOD and EPOD, although their values of ![]() $\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$ are close. Indeed, if both fields are highly fluctuating, even a small spatial shift between the reconstruction and the true solution would result in a significantly larger MSE. This is exactly the case of GAN predictions, where we can see that they have obvious correlations with the ground truth but the MSE is large because of its sensitivity to small spatial shifting. On the other hand, the GPOD or EPOD solutions are inaccurate, having too small spatial fluctuations even with a similar MSE when compared with the GAN. As done above for the velocity amplitude, we further quantify the quality of the reconstruction by looking at the JS divergence between the two p.d.f.s in figure 13. For other metrics to assess the quality of the predictions see, e.g. Wang et al. (Reference Wang, Bovik, Sheikh and Simoncelli2004); Wang & Simoncelli (Reference Wang and Simoncelli2005) and Li et al. (Reference Li, Buzzicotti, Biferale and Bonaccorso2023). Figure 13 confirms that GAN is able to well predict the p.d.f. of

$\mathrm {MSE}( {\partial } u_G/ {\partial } x_1)$ are close. Indeed, if both fields are highly fluctuating, even a small spatial shift between the reconstruction and the true solution would result in a significantly larger MSE. This is exactly the case of GAN predictions, where we can see that they have obvious correlations with the ground truth but the MSE is large because of its sensitivity to small spatial shifting. On the other hand, the GPOD or EPOD solutions are inaccurate, having too small spatial fluctuations even with a similar MSE when compared with the GAN. As done above for the velocity amplitude, we further quantify the quality of the reconstruction by looking at the JS divergence between the two p.d.f.s in figure 13. For other metrics to assess the quality of the predictions see, e.g. Wang et al. (Reference Wang, Bovik, Sheikh and Simoncelli2004); Wang & Simoncelli (Reference Wang and Simoncelli2005) and Li et al. (Reference Li, Buzzicotti, Biferale and Bonaccorso2023). Figure 13 confirms that GAN is able to well predict the p.d.f. of ![]() $ {\partial } u_G/ {\partial } x_1$ while GPOD and EPOD do not have this ability. Moreover, GPOD produces comparable

$ {\partial } u_G/ {\partial } x_1$ while GPOD and EPOD do not have this ability. Moreover, GPOD produces comparable ![]() $\mathrm {JSD}( {\partial } u_G/ {\partial } x_1)$ to EPOD. The above conclusions are further supported in figure 14, which shows p.d.f.s of

$\mathrm {JSD}( {\partial } u_G/ {\partial } x_1)$ to EPOD. The above conclusions are further supported in figure 14, which shows p.d.f.s of ![]() $ {\partial } u_G/ {\partial } x_1$ from the predictions and the ground truth. We next compare the scale-by-scale energy budget of the original and reconstructed solutions in figure 15, with the help of the energy spectrum defined over the whole region

$ {\partial } u_G/ {\partial } x_1$ from the predictions and the ground truth. We next compare the scale-by-scale energy budget of the original and reconstructed solutions in figure 15, with the help of the energy spectrum defined over the whole region

Figure 11. The MSE of the gradient of the reconstructed velocity module from GPOD, EPOD and GAN in a square gap with different sizes. Red horizontal line represents the uncorrelated baseline.

Figure 12. The gradient of the velocity module fields shown in figure 8. The first column shows the damaged fields with a square gap of sizes ![]() $l/l_0=24/64$ (a),

$l/l_0=24/64$ (a), ![]() $40/64$ (b) and

$40/64$ (b) and ![]() $62/64$ (c). Note that, for the maximum gap size,

$62/64$ (c). Note that, for the maximum gap size, ![]() $l/l_0=62/64$, we have only one velocity layer on the vertical borders where we do not supply any information on the gradient. The second to fifth columns plot the gradient fields obtained from GPOD, EPOD, GAN and the ground truth.

$l/l_0=62/64$, we have only one velocity layer on the vertical borders where we do not supply any information on the gradient. The second to fifth columns plot the gradient fields obtained from GPOD, EPOD, GAN and the ground truth.

Figure 13. The JS divergence between p.d.f.s of the gradient of the reconstructed velocity module inside the missing region from the original data and the predictions obtained from GPOD, EPOD and GAN for a square gap with different sizes.

Figure 14. The p.d.f.s of the gradient of the reconstructed velocity module in the missing region obtained from GPOD, EPOD and GAN for a square gap with different sizes. The p.d.f. of the original data over the whole region is plotted for reference and ![]() $\sigma ( {\partial } u/ {\partial } x_1)$ is the standard deviation of the original data.

$\sigma ( {\partial } u/ {\partial } x_1)$ is the standard deviation of the original data.

Figure 15. Energy spectra of the original velocity module and the reconstructions obtained from GPOD, EPOD and GAN for a square gap of different sizes (a–c). The corresponding ![]() $E(k)/E^{(t)}(k)$ is shown in (d–f), where

$E(k)/E^{(t)}(k)$ is shown in (d–f), where ![]() $E(k)$ and

$E(k)$ and ![]() $E^{(t)}(k)$ are the spectra of the reconstructions and the ground truth, respectively.

$E^{(t)}(k)$ are the spectra of the reconstructions and the ground truth, respectively.

\begin{equation} E(k)=\sum_{k\le\lVert \boldsymbol{k}\rVert< k+1}\frac{1}{2}\langle\hat{u}(\boldsymbol{k})\hat{u}^\ast(\boldsymbol{k})\rangle, \end{equation}

\begin{equation} E(k)=\sum_{k\le\lVert \boldsymbol{k}\rVert< k+1}\frac{1}{2}\langle\hat{u}(\boldsymbol{k})\hat{u}^\ast(\boldsymbol{k})\rangle, \end{equation}

where ![]() $\boldsymbol {k}=(k_1, k_2)$ is the wavenumber,

$\boldsymbol {k}=(k_1, k_2)$ is the wavenumber, ![]() $\hat {u}(\boldsymbol {k})$ is the Fourier transform of velocity module and

$\hat {u}(\boldsymbol {k})$ is the Fourier transform of velocity module and ![]() $\hat {u}^\ast (\boldsymbol {k})$ is its complex conjugate. To highlight the reconstruction performance as a function of the wavenumber, we also show the ratio between the reconstructed and the original spectra,

$\hat {u}^\ast (\boldsymbol {k})$ is its complex conjugate. To highlight the reconstruction performance as a function of the wavenumber, we also show the ratio between the reconstructed and the original spectra, ![]() $E(k)/E^{(t)}(k)$ for the three different gap sizes in the second row of figure 15. Figure 16 plots the flatness of the reconstructed fields,

$E(k)/E^{(t)}(k)$ for the three different gap sizes in the second row of figure 15. Figure 16 plots the flatness of the reconstructed fields,

where ![]() $\delta _r u=u(\boldsymbol {x}+\boldsymbol {r})-u(\boldsymbol {x})$ and

$\delta _r u=u(\boldsymbol {x}+\boldsymbol {r})-u(\boldsymbol {x})$ and ![]() $\boldsymbol {r}=(r, 0)$. The angle bracket in (3.9) represents the average over the test dataset and over

$\boldsymbol {r}=(r, 0)$. The angle bracket in (3.9) represents the average over the test dataset and over ![]() $\boldsymbol {x}$, for which

$\boldsymbol {x}$, for which ![]() $\boldsymbol {x}$ or

$\boldsymbol {x}$ or ![]() $\boldsymbol {x}+\boldsymbol {r}$ fall in the gap. The flatness of the ground truth calculated over the whole region is also shown for reference. We remark that the flatness is used to characterize the intermittency in the turbulence community (see Frisch Reference Frisch1995). It is determined by the two-point p.d.f.s,

$\boldsymbol {x}+\boldsymbol {r}$ fall in the gap. The flatness of the ground truth calculated over the whole region is also shown for reference. We remark that the flatness is used to characterize the intermittency in the turbulence community (see Frisch Reference Frisch1995). It is determined by the two-point p.d.f.s, ![]() $\mathrm {p.d.f.}(\delta _ru)$, connected to the distribution of the whole real or generated fields inside the gap,

$\mathrm {p.d.f.}(\delta _ru)$, connected to the distribution of the whole real or generated fields inside the gap, ![]() $p_t$ or

$p_t$ or ![]() $p_p$. Figures 15 and 16 show that GAN performs well to reproduce the multi-scale statistical properties, except at small scales for large gap sizes. However, GPOD and EPOD can only predict a good energy spectrum for the small gap size

$p_p$. Figures 15 and 16 show that GAN performs well to reproduce the multi-scale statistical properties, except at small scales for large gap sizes. However, GPOD and EPOD can only predict a good energy spectrum for the small gap size ![]() $l/l_0=24/64$ but fail at all scales for both the energy spectrum and flatness at gap sizes

$l/l_0=24/64$ but fail at all scales for both the energy spectrum and flatness at gap sizes ![]() $l/l_0=40/64$ and

$l/l_0=40/64$ and ![]() $62/60$.

$62/60$.

Figure 16. The flatness of the original field and the reconstructions obtained from GPOD, EPOD and GAN for a square gap of different sizes.

3.3. Extreme events

In this section, we focus on the ability of the different methods to reconstruct extreme events inside the gap for each frame. In figure 17 we present the scatter plots of the largest values of velocity module or its gradient measured in the gap region from the original data and the predicted fields generated by GPOD, EPOD or GAN. On top of each panel we report the scatter plot correlation index, defined as

where ![]() $|\sin \theta |=\lVert \boldsymbol {U}\times \boldsymbol {e}\rVert /\lVert \boldsymbol {U}\rVert$ with

$|\sin \theta |=\lVert \boldsymbol {U}\times \boldsymbol {e}\rVert /\lVert \boldsymbol {U}\rVert$ with ![]() $\theta$ as the angle between the unit vector

$\theta$ as the angle between the unit vector ![]() $\boldsymbol {e}=(1/\sqrt {2},1/\sqrt {2})$ and

$\boldsymbol {e}=(1/\sqrt {2},1/\sqrt {2})$ and ![]() $\boldsymbol {U}=(\max (u_G^{(p)}),\max (u_G^{(t)}))$. The vector

$\boldsymbol {U}=(\max (u_G^{(p)}),\max (u_G^{(t)}))$. The vector ![]() $\boldsymbol {U}$ for

$\boldsymbol {U}$ for ![]() $ {\partial } u_G/ {\partial } x_1$ can be similarly defined. It is obvious that

$ {\partial } u_G/ {\partial } x_1$ can be similarly defined. It is obvious that ![]() $c\in [0,1]$ and

$c\in [0,1]$ and ![]() $c=1$ corresponds to a perfect prediction in terms of the extreme events. Figure 17 shows that, for both extreme values of the velocity module and its gradient, GAN is the least biased while the other two methods tend to underestimate them.

$c=1$ corresponds to a perfect prediction in terms of the extreme events. Figure 17 shows that, for both extreme values of the velocity module and its gradient, GAN is the least biased while the other two methods tend to underestimate them.

Figure 17. Scatter plots of the maximum values of velocity module (a–c) and its gradient (d–f) in the missing region obtained from the original data and the one produced by GPOD, EPOD or GAN for a square gap of size ![]() $l/l_0=40/64$. Colours are proportional to the density of points in the scatter plot. The correlation indices are shown on top of each panel.

$l/l_0=40/64$. Colours are proportional to the density of points in the scatter plot. The correlation indices are shown on top of each panel.

4. Dependency of GAN-based reconstruction on the adversarial ratio

As shown by the previous results, GAN is certainly superior regarding metrics evaluated in this study. This supremacy is given by the fact that, with the nonlinear CNN structure of the generator, GAN optimizes the point-wise ![]() $L_2$ loss and minimizes the JS divergence between the probability distributions of the real and generated fields with the help of the adversarial discriminator (see § 2.4). To study the effects of the balancing between the above two objectives on reconstruction quality, we have performed a systematic scanning of the GAN performances for changing adversarial ratio

$L_2$ loss and minimizes the JS divergence between the probability distributions of the real and generated fields with the help of the adversarial discriminator (see § 2.4). To study the effects of the balancing between the above two objectives on reconstruction quality, we have performed a systematic scanning of the GAN performances for changing adversarial ratio ![]() $\lambda _{adv}$, the hyper-parameter controlling the relative importance of

$\lambda _{adv}$, the hyper-parameter controlling the relative importance of ![]() $L_2$ loss and adversarial loss of the generator, as shown in (2.17). We consider a central square gap of size

$L_2$ loss and adversarial loss of the generator, as shown in (2.17). We consider a central square gap of size ![]() $l/l_0=40/64$ and train the GAN with different adversarial ratios, where

$l/l_0=40/64$ and train the GAN with different adversarial ratios, where ![]() $\lambda _{adv}=10^{-4}$,

$\lambda _{adv}=10^{-4}$, ![]() $10^{-3}$,

$10^{-3}$, ![]() $10^{-2}$ and

$10^{-2}$ and ![]() $10^{-1}$. Table 3 shows the values of

$10^{-1}$. Table 3 shows the values of ![]() $\mathrm {MSE}(u_G)$ and

$\mathrm {MSE}(u_G)$ and ![]() $\mathrm {JSD}(u_G)$ obtained at different adversarial ratios.

$\mathrm {JSD}(u_G)$ obtained at different adversarial ratios.

Table 3. The MSE and the JS divergence between p.d.f.s for the original and generated velocity module inside the missing region, obtained from GAN with different adversarial ratios for a square gap of size ![]() $l/l_0=40/64$. The results for GPOD and EPOD are provided as well for comparison. The MSE and JS divergence are computed over different test batches, specifically of sizes 128 and 2048, respectively. From these computations, we obtain both the mean values and the error bounds.

$l/l_0=40/64$. The results for GPOD and EPOD are provided as well for comparison. The MSE and JS divergence are computed over different test batches, specifically of sizes 128 and 2048, respectively. From these computations, we obtain both the mean values and the error bounds.

It is obvious that the adversarial ratio controls the balance between the point-wise reconstruction error and the predicted turbulent statistics. As the adversarial ratio increases, the MSE increases while the JS divergence decreases. p.d.f.s of the predicted velocity module from GANs with different adversarial ratios are compared with that of the original data in figure 18, which shows that the predicted p.d.f. gets closer to the original one with a larger adversarial ratio. The above results clearly show that there exists an optimal adversarial ratio to satisfy the multi-objective requirements of having a small ![]() $L_2$ distance and a realistic p.d.f.. In the limit of vanishing

$L_2$ distance and a realistic p.d.f.. In the limit of vanishing ![]() $\lambda _{adv}$, the GAN outperforms GPOD and EPOD in terms of MSE, but falls behind them concerning JS divergence (table 3).

$\lambda _{adv}$, the GAN outperforms GPOD and EPOD in terms of MSE, but falls behind them concerning JS divergence (table 3).

Figure 18. The p.d.f.s of the reconstructed velocity module inside the gap region, which is obtained from GAN with different adversarial ratios, for a square gap of size ![]() $l/l_0=40/64$.

$l/l_0=40/64$.

5. Dependency on gap geometry: random gappiness

Things change when looking at a completely different topology of the damages. Here, we study case (ii) in § 2.1, where position points are removed randomly in the original domain ![]() $I$, without any spatial correlations. Because the random gappiness is easier for interpolation than a square gap of the same size, all reconstruction methods show good and comparable results in terms of the MSE, the JS divergence and p.d.f.s for velocity module (figures 19 and 20). For almost all damaged densities, POD- and GAN-based methods give small values of

$I$, without any spatial correlations. Because the random gappiness is easier for interpolation than a square gap of the same size, all reconstruction methods show good and comparable results in terms of the MSE, the JS divergence and p.d.f.s for velocity module (figures 19 and 20). For almost all damaged densities, POD- and GAN-based methods give small values of ![]() $\mathrm {MSE}(u_G)$ and

$\mathrm {MSE}(u_G)$ and ![]() $\mathrm {JSD}(u_G)$. However, when the total damaged region area is extremely large, GPOD and EPOD are not able to reconstruct the field at large wavenumbers while GAN still works well because of the adversarial training, as shown by the energy spectra in figure 21. Figure 22 shows the reconstruction of the velocity module and the corresponding gradient fields for random gappiness with two extremely large sizes. It is obvious that GPOD and EPOD only predict the large-scale structure while GAN generates reconstructions with multi-scale information. To make a comparison between the random gappiness case and the super-resolution task, we can compare the effect of random gappiness of sizes

$\mathrm {JSD}(u_G)$. However, when the total damaged region area is extremely large, GPOD and EPOD are not able to reconstruct the field at large wavenumbers while GAN still works well because of the adversarial training, as shown by the energy spectra in figure 21. Figure 22 shows the reconstruction of the velocity module and the corresponding gradient fields for random gappiness with two extremely large sizes. It is obvious that GPOD and EPOD only predict the large-scale structure while GAN generates reconstructions with multi-scale information. To make a comparison between the random gappiness case and the super-resolution task, we can compare the effect of random gappiness of sizes ![]() $l/l_0=60/64$ and

$l/l_0=60/64$ and ![]() $62/64$, similar to a downsampling of the original field by approximately a factor of

$62/64$, similar to a downsampling of the original field by approximately a factor of ![]() $3$ and

$3$ and ![]() $4$ for each spatial direction, respectively.

$4$ for each spatial direction, respectively.

Figure 19. The MSE (a) and the JS divergence (b) between p.d.f.s for the original and generated velocity module inside the missing region, obtained from GPOD, EPOD and GAN for random gappiness with different sizes.

Figure 20. The p.d.f.s of the velocity module in the missing region obtained from GPOD, EPOD and GAN for random gappiness with different sizes. The p.d.f. of the original data over the whole region is plotted for reference and ![]() $\sigma (u)$ is the standard deviation of the original data.

$\sigma (u)$ is the standard deviation of the original data.

Figure 21. Energy spectra of the original velocity module and the reconstructions obtained from GPOD, EPOD and GAN for random gappiness with different sizes (a–c). The corresponding ![]() $E(k)/E^{(t)}(k)$ is shown in (d–f), where

$E(k)/E^{(t)}(k)$ is shown in (d–f), where ![]() $E(k)$ and

$E(k)$ and ![]() $E^{(t)}(k)$ are the spectra of the reconstructions and the ground truth, respectively. The vertical dashed and dash-dot lines respectively indicate the wavenumbers corresponding to the data resolutions

$E^{(t)}(k)$ are the spectra of the reconstructions and the ground truth, respectively. The vertical dashed and dash-dot lines respectively indicate the wavenumbers corresponding to the data resolutions ![]() $l/l_0=60/64$ and

$l/l_0=60/64$ and ![]() $62/64$. These wavenumbers are calculated as

$62/64$. These wavenumbers are calculated as ![]() $k_\eta /d$, where

$k_\eta /d$, where ![]() $d$ is the corresponding downsampling rate.

$d$ is the corresponding downsampling rate.

Figure 22. Reconstruction of an instantaneous field (velocity module) by the different tools for random gappiness of sizes ![]() $l/l_0=60/64$ (a) and