1 Introduction

A fundamental result in the theory of linear recurrences due to Pólya [Reference PólyaPó21] asserts that if $\{f(n)\}$ is a sequence satisfying a linear recurrence and taking all of its values in the set $G\cup \{0\}$ for some finitely generated multiplicative subgroup G of $\mathbb {Q}^*$, then the generating series of $f(n)$ is a finite sum of series of the form $gx^i/(1-g'x^n)$ with $g,g'\in G$ and $i,n$ natural numbers along with a finite set of monomials with coefficients in G; moreover, these series can be chosen to have disjoint supports. In particular, Pólya’s theorem gives a complete characterization of sequences $\{f(n)\}\subseteq \mathbb {Q}^*$ that have the property that both $\{f(n)\}$ and $\{1/f(n)\}$ satisfy a nontrivial linear recurrence.
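To make the shape in Pólya's theorem concrete, here is a small Python sketch built on an example of our own choosing (it is not taken from the source). The series $1/(1-2x^2) + x/(1-3x^2)$ is a sum, with disjoint supports, of two series of the form $gx^i/(1-g'x^n)$ with $g, g' \in G = \langle 2, 3 \rangle$; its coefficients are $f(2k) = 2^k$ and $f(2k+1) = 3^k$. The code checks on an initial segment that $f$ satisfies the recurrence read off from the denominator $(1-2x^2)(1-3x^2) = 1 - 5x^2 + 6x^4$, and that $1/f$ satisfies the one coming from $(1-x^2/2)(1-x^2/3)$. The helper names are hypothetical.

```python
from fractions import Fraction

def f(n):
    """Coefficient of x^n in 1/(1 - 2x^2) + x/(1 - 3x^2)."""
    k, parity = divmod(n, 2)
    return 2**k if parity == 0 else 3**k

def satisfies_recurrence(seq, c2, c4):
    """Check seq[n + 4] == c2 * seq[n + 2] + c4 * seq[n] for every available n."""
    return all(seq[n + 4] == c2 * seq[n + 2] + c4 * seq[n] for n in range(len(seq) - 4))

N = 40
seq = [f(n) for n in range(N)]
inv = [Fraction(1, v) for v in seq]  # the Hadamard inverse 1/f(n), computed exactly

# From 1 - 5x^2 + 6x^4: f(n + 4) = 5 f(n + 2) - 6 f(n).
print(satisfies_recurrence(seq, 5, -6))                            # True
# From 1 - (5/6)x^2 + (1/6)x^4: (1/f)(n + 4) = (5/6)(1/f)(n + 2) - (1/6)(1/f)(n).
print(satisfies_recurrence(inv, Fraction(5, 6), Fraction(-1, 6)))  # True
```

Checking finitely many terms only illustrates the statement; the recurrences themselves follow from the denominators.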

Pólya’s result was later extended to number fields by Benzaghou [Reference BenzaghouBen70] and then by Bézivin [Reference BézivinBé87] to all fields (even those of positive characteristic). A noncommutative multivariate version was recently proved by the authors [Reference Bell and SmertnigBS21]; the noncommutative variant can be interpreted as a structural description of unambiguous weighted finite automata over a field.

The generating function of a sequence satisfying a linear recurrence is the power series in some variable x of a rational function about

![]() $x=0$

. When one adopts this point of view, it is natural to ask whether such results can be extended to D-finite (or differentiably finite) power series. We recall that if K is a field of characteristic zero, then a univariate power series

$x=0$

. When one adopts this point of view, it is natural to ask whether such results can be extended to D-finite (or differentiably finite) power series. We recall that if K is a field of characteristic zero, then a univariate power series

![]() $F(x) =\sum f(n)x^n\in K[[x]]$

is D

-finite if

$F(x) =\sum f(n)x^n\in K[[x]]$

is D

-finite if

![]() $F(x)$

satisfies a nontrivial homogeneous linear differential equation with polynomial coefficients:

$F(x)$

satisfies a nontrivial homogeneous linear differential equation with polynomial coefficients:

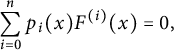

$$ \begin{align*}\sum_{i=0}^n p_i(x) F^{(i)}(x)=0,\end{align*} $$

$$ \begin{align*}\sum_{i=0}^n p_i(x) F^{(i)}(x)=0,\end{align*} $$

with

![]() $p_0(x),\ldots ,p_n(x)\in K[x]$

.

$p_0(x),\ldots ,p_n(x)\in K[x]$

.

Univariate D-finite series were introduced by Stanley [Reference StanleySta80], and the class of D-finite power series is closed under many operations, such as taking K-linear combinations, products, sections, diagonals, Hadamard products, and derivatives, and it contains a multitude of classical generating functions arising from enumerative combinatorics [Reference StanleySta99, Chapter 6]. In particular, algebraic series and their diagonals are D-finite, and a power series F is D-finite if and only if its sequence of coefficients satisfies certain recursions with polynomial coefficients [Reference LipshitzLip89, Theorem 3.7].
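As a small illustration of the last sentence (the example is ours), the Catalan generating function $C(x)$ is algebraic, being the power series root of $C = 1 + xC^2$, hence D-finite, and its coefficients satisfy the recursion $(n+2)\,c(n+1) = 2(2n+1)\,c(n)$ with polynomial coefficients. The sketch below computes the coefficients directly from the algebraic equation and checks that recursion on a finite range; it verifies, but does not prove, the recursion. The function names are hypothetical.

```python
def catalan_from_algebraic(N):
    """Coefficients c(0), ..., c(N-1) of the power series solution of C = 1 + x*C^2."""
    c = [1]
    for n in range(1, N):
        # The coefficient of x^n in x*C^2 is the convolution sum over i + j = n - 1.
        c.append(sum(c[i] * c[n - 1 - i] for i in range(n)))
    return c

def satisfies_p_recursion(c):
    """Check the polynomial-coefficient recursion (n + 2) c(n+1) = 2(2n + 1) c(n)."""
    return all((n + 2) * c[n + 1] == 2 * (2 * n + 1) * c[n] for n in range(len(c) - 1))

coeffs = catalan_from_algebraic(15)
print(coeffs[:8])                     # [1, 1, 2, 5, 14, 42, 132, 429]
print(satisfies_p_recursion(coeffs))  # True
```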

Bézivin gave a sweeping extension of Pólya’s result: if $F(x)$ is a univariate D-finite series over a field K of characteristic $0$ and there exist a fixed $r\ge 1$ and a fixed finitely generated subgroup G of $K^*$ such that every coefficient of $F(x)$ can be written as a sum of at most r elements of G, then $F(x)$ is in fact a rational power series and has only simple poles [Reference BézivinBé86].

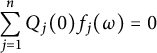

A natural, and thus far unexplored, direction in which to extend the results of Pólya and Bézivin is to consider multivariate analogues. A multivariate variant of D-finite series was given by Lipshitz [Reference LipshitzLip89]. Here, one has a field K of characteristic zero and declares that a formal multivariate series $F \in K[[{\boldsymbol x}]] = K[[x_1,\ldots,x_d]]$ is D-finite if all the partial derivatives $(\partial /\partial x_1)^{e_1} \cdots (\partial /\partial x_d)^{e_d}F$ for $e_1$, $\ldots \,$, $e_d \ge 0$ are contained in a finite-dimensional vector space over the rational function field $K({\boldsymbol x})$. Equivalently, for each $i \in [1,d]$, the series F satisfies a linear partial differential equation of the form

$$ \begin{align*}\sum_{j=0}^{n} P_{i,j}({\boldsymbol x}) \frac{\partial^j F}{\partial x_i^j} = 0,\end{align*} $$

with polynomials $P_{i,0}$, $P_{i,1}$, $\ldots \,$, $P_{i,n} \in K[{\boldsymbol x}]$, at least one of which is nonzero.

In fact, many interesting classical Diophantine questions can be expressed in terms of questions about coefficients of multivariate rational power series and multivariate D-finite series. To give one example, Catalan’s conjecture (now a theorem due to Mihăilescu [Reference MihăilescuMih04]) implies that the only solutions in natural numbers to the equation $3^n=2^m+1$ are given by $(n,m)=(1,1)$ and $(2,3)$. This is equivalent to the statement that the bivariate rational power series

$$ \begin{align*}\sum_{n,m \ge 0} (3^n - 2^m - 1)\, x_1^n x_2^m = \frac{1}{(1-3x_1)(1-x_2)} - \frac{1}{(1-x_1)(1-2x_2)} - \frac{1}{(1-x_1)(1-x_2)}\end{align*} $$

has nonzero coefficients except for the coefficients of $x_1^2x_2^3$ and $x_1x_2$. On the other hand, it is typically much more difficult to obtain results about multivariate rational functions and multivariate D-finite series, for several reasons. In the case of univariate rational series, the coefficients have a nice closed form and there is a strong structural description of the set of zero coefficients due to Skolem, Mahler, and Lech (see [Reference Everest, van der Poorten, Shparlinski and WardEvdPSW03, Chapter 2.1]). Similarly, for D-finite series, there are many strong results concerning the asymptotics of their coefficients (see [Reference Flajolet and SedgewickFS09], in particular Chapters VII.9 and VIII.7), and one can often make use of these results when considering problems in the univariate case. Straightforward attempts at extending these approaches to higher dimensions typically fail or become too unwieldy. For this reason, new ideas are often important in obtaining multivariate extensions.
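Returning to the Catalan example: the relevant coefficient of $x_1^n x_2^m$ is $3^n - 2^m - 1$, which vanishes exactly at the solutions of $3^n = 2^m + 1$. A brute-force search over a finite box, as in the sketch below (helper name ours), recovers the two vanishing coefficients. It is only a sanity check; it says nothing about coefficients outside the box, which is precisely where the deep result is needed.

```python
def zero_coefficients(bound):
    """Exponents (n, m) in [0, bound)^2 at which the coefficient 3^n - 2^m - 1 vanishes."""
    return [(n, m) for n in range(bound) for m in range(bound) if 3**n - 2**m - 1 == 0]

print(zero_coefficients(60))  # [(1, 1), (2, 3)]
```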

It is therefore of considerable interest to understand multivariate D-finite series, although doing so often presents additional technical difficulties. In this paper, we consider structural results for multivariate series that are implied by the additional restrictions on the coefficients of a D-finite series that were imposed by Bézivin and Pólya in the univariate case. For a field K of characteristic zero and a multiplicative subgroup $G \le K^*$, we set

$$ \begin{align*}G_0 = G \cup \{0\},\end{align*} $$

and write

$$ \begin{align*}rG_0 = \{\, g_1 + \cdots + g_r : g_1,\ldots,g_r \in G_0 \,\}\end{align*} $$

for the r-fold sumset of $G_0$.

In view of Bézivin’s and Pólya’s results, we give the following definitions.

Definition 1.1 A power series $F = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[[{\boldsymbol x}]]$ is:

• a Bézivin series if there exist a finitely generated subgroup $G \le K^*$ and $r \in \mathbb {N}$ such that $f(\boldsymbol n) \in rG_0$ for all $\boldsymbol n \in \mathbb {N}^d$;

• a Pólya series if there exists a finitely generated subgroup $G \le K^*$ such that $f(\boldsymbol n) \in G_0$ for all $\boldsymbol n \in \mathbb {N}^d$.

The terminology of Pólya series is standard; we have chosen to name Bézivin series based on Bézivin’s results characterizing this type of series in the univariate case [Reference BézivinBé86, Reference BézivinBé87].

In this paper, we completely characterize multivariate D-finite Bézivin and Pólya series. As an immediate consequence, we obtain a characterization of D-finite series whose Hadamard (sub)inverse is also D-finite. The proofs make repeated use of unit equations and results from the theory of semilinear sets.

To state our main result, we recall the notion of semilinear subsets of $\mathbb {N}^d$. A subset $\mathcal {S} \subseteq \mathbb {N}^d$ is simple linear if it is of the form $\mathcal {S} = \boldsymbol a_0 + \boldsymbol a_1\mathbb {N} + \cdots + \boldsymbol a_s \mathbb {N}$ with ($\mathbb {Z}$-)linearly independent $\boldsymbol a_1$, $\ldots \,$, $\boldsymbol a_s \in \mathbb {N}^d$. The terminology comes from the theory of semilinear sets (see Section 2.1). The following is our main result.

Theorem 1.2 Let K be a field of characteristic zero, let $d \ge 0$, and let

$$ \begin{align*}F = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[[{\boldsymbol x}]] = K[[x_1,\ldots,x_d]]\end{align*} $$

be a Bézivin series, with all coefficients of F contained in $rG_0$ for some finitely generated subgroup $G \le K^*$ and $r \in \mathbb {N}$. Then the following statements are equivalent.

-

(a) F is D-finite.

-

(b) F is rational.

-

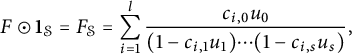

(c) F is a (finite) sum of skew-geometric series with coefficients in G, that is, rational functions of the form

$$\begin{align*}\frac{g_0u_0}{(1-g_1 u_1)\cdots (1-g_l u_l)}, \end{align*}$$

where $u_0$, $u_1$, $\ldots \,$, $u_l$ are monomials in $x_1$, $\ldots \,$, $x_d$ such that $u_1$, $\ldots \,$, $u_l$ are algebraically independent, and $g_0$, $g_1$, $\ldots \,$, $g_l \in G$.

(d) As in (c), but in addition, the sum may be taken in such a way that for any two summands, the supports are either identical or disjoint. Moreover, every $\boldsymbol n \in \mathbb {N}^d$ is contained in the support of at most r summands.

(e) There exists a partition of $\mathbb {N}^d$ into finitely many simple linear sets such that on each such set $\mathcal {S}=\boldsymbol a_0 + \boldsymbol a_1 \mathbb {N} + \cdots + \boldsymbol a_s \mathbb {N}$, with $\boldsymbol a_1$, $\ldots \,$, $\boldsymbol a_s$ linearly independent, we have

$$\begin{align*}f(\boldsymbol a_0 + m_1 \boldsymbol a_1 + \cdots + m_s \boldsymbol a_s) = \sum_{i=1}^l g_{i,0} g_{i,1}^{m_1} \cdots g_{i,s}^{m_s} \qquad\text{for}\ (m_1,\ldots,m_s) \in \mathbb{N}^s, \end{align*}$$

where $0 \le l \le r$ and $g_{i,j} \in G$ for $i \in [1,l]$ and $j \in [0,s]$.
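The passage between (c) and (e) can be seen on a single skew-geometric series. Writing $u_j = {\boldsymbol x}^{\boldsymbol a_j}$, the series $g_0u_0/((1-g_1u_1)\cdots(1-g_lu_l))$ expands as $\sum_{\boldsymbol m \in \mathbb{N}^l} g_0 g_1^{m_1}\cdots g_l^{m_l}\, {\boldsymbol x}^{\boldsymbol a_0 + m_1\boldsymbol a_1 + \cdots + m_l\boldsymbol a_l}$. Algebraic independence of the monomials $u_1, \ldots, u_l$ amounts to linear independence of their exponent vectors, so distinct $\boldsymbol m$ land on distinct exponents and the support is the simple linear set $\boldsymbol a_0 + \boldsymbol a_1\mathbb{N} + \cdots + \boldsymbol a_l\mathbb{N}$. The sketch below (hypothetical helper name; the example series $3x_1/((1-2x_1x_2)(1-5x_2^2))$ is ours) expands such a series inside a finite box and raises an error if two index vectors collide.

```python
from itertools import product

def expand_skew_geometric(g, exps, box):
    """Truncated expansion of g[0]*u_0 / ((1 - g[1]*u_1) ... (1 - g[l]*u_l)).

    exps[j] is the exponent vector a_j of the monomial u_j = x^(a_j). Returns a dict
    {exponent: coefficient} for all exponents with every coordinate < box; exponents
    outside the support are absent. Assumes a_1, ..., a_l are nonzero vectors.
    """
    a0, periods = exps[0], exps[1:]
    coeffs = {}
    # Each nonzero a_j has a coordinate >= 1, so m_j < box for exponents inside the box.
    for ms in product(range(box), repeat=len(periods)):
        n = tuple(a0[k] + sum(m * a[k] for m, a in zip(ms, periods)) for k in range(len(a0)))
        if any(x >= box for x in n):
            continue
        if n in coeffs:
            # Two different (m_1, ..., m_l) hit the same exponent: the a_j are dependent.
            raise ValueError("exponent vectors of u_1, ..., u_l are linearly dependent")
        c = g[0]
        for m, gj in zip(ms, g[1:]):
            c *= gj ** m
        coeffs[n] = c
    return coeffs

# 3*x1 / ((1 - 2*x1*x2) * (1 - 5*x2^2)): coefficient 3 * 2^m1 * 5^m2 at (1 + m1, m1 + 2*m2).
F = expand_skew_geometric([3, 2, 5], [(1, 0), (1, 1), (0, 2)], box=6)
print(F[(2, 1)], F[(1, 2)], F[(3, 4)])  # 6 15 60
print((2, 2) in F)                       # False
```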

The description of D-finite Pólya series, that is, the case $r=1$ of the previous theorem, deserves separate mention, although it follows readily from the more general result on Bézivin series. Following terminology from formal language theory, a sum $F_1 + \cdots + F_n$ of power series $F_1$, $\ldots \,$, $F_n$ is unambiguous if the support of $F_i$ is disjoint from the support of $F_j$ for $i \ne j$ (see Definition 3.16).

Theorem 1.3 Let K be a field of characteristic zero, let $d \ge 0$, and let

$$ \begin{align*}F = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[[{\boldsymbol x}]] = K[[x_1,\ldots,x_d]]\end{align*} $$

be a Pólya series with coefficients contained in $G_0$ for some finitely generated subgroup $G \le K^*$. Then the following statements are equivalent.

(a) F is D-finite.

(b) F is rational.

(c) F is a (finite) unambiguous sum of skew-geometric series with coefficients in G.

(d) The support of F can be partitioned into finitely many simple linear sets such that on each such set $\mathcal {S}=\boldsymbol a_0 + \boldsymbol a_1 \mathbb {N} + \cdots + \boldsymbol a_s \mathbb {N}$, with $\boldsymbol a_1$, $\ldots \,$, $\boldsymbol a_s$ linearly independent, we have

$$\begin{align*}f(\boldsymbol a_0 + m_1 \boldsymbol a_1 + \cdots + m_s \boldsymbol a_s) = g_{0} g_{1}^{m_1} \cdots g_{s}^{m_s} \qquad\text{for}\ (m_1,\ldots,m_s) \in \mathbb{N}^s, \end{align*}$$

with $g_{j} \in G$ for $j \in [0,s]$.

For a power series $F = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[[{\boldsymbol x}]]$, let

$$ \begin{align*}F^\dagger = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n)^\dagger {\boldsymbol x}^{\boldsymbol n},\end{align*} $$

where $f(\boldsymbol n)^{\dagger } = f(\boldsymbol n)^{-1}$ if $f(\boldsymbol n)$ is nonzero and $f(\boldsymbol n)^\dagger = 0$ otherwise. The series $F^\dagger$ is the Hadamard subinverse of F.
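Coefficientwise, $F^\dagger$ is easy to compute on a truncation. The sketch below (our own helper, with a truncated series stored as a dict from exponent tuples to exact rationals) applies $f(\boldsymbol n) \mapsto f(\boldsymbol n)^\dagger$. With the illustrative inputs chosen here, $F = 1/(1-2x)$ yields the truncation of $1/(1-x/2)$, while the zero coefficients of $x^2/(1-x^2)$ stay zero.

```python
from fractions import Fraction

def hadamard_subinverse(coeffs):
    """Return {n: f(n)^dagger}: invert nonzero coefficients and keep zero coefficients zero."""
    return {n: (Fraction(1) / c if c != 0 else Fraction(0)) for n, c in coeffs.items()}

# Truncations (exponents 0..5) of F = 1/(1 - 2x) and of G = x^2/(1 - x^2).
F = {(n,): Fraction(2) ** n for n in range(6)}
G = {(n,): Fraction(1 if n >= 2 and n % 2 == 0 else 0) for n in range(6)}
print([str(v) for v in hadamard_subinverse(F).values()])  # ['1', '1/2', '1/4', '1/8', '1/16', '1/32']
print([str(v) for v in hadamard_subinverse(G).values()])  # ['0', '0', '1', '0', '1', '0']
```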

We call a power series finitary if its coefficients are contained in a finitely generated $\mathbb {Z}$-subalgebra of K. The set of finitary power series is trivially closed under K-linear combinations, products, sections, diagonals, Hadamard products, and derivatives. Therefore, the set of finitary D-finite series is closed under the same operations. Algebraic series as well as their diagonals and sections are finitary D-finite (see Lemma 7.1).

Corollary 1.4 Let $F \in K[[{\boldsymbol x}]]$ be finitary D-finite. Then $F^\dagger$ is finitary if and only if F satisfies the equivalent conditions of Theorem 1.3 for some finitely generated subgroup $G \le K^*$. In particular, if F and $F^\dagger$ are both finitary D-finite, then they are in fact unambiguous sums of skew-geometric series.
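A simple example of our own shows what happens outside the Pólya case. Take $K = \mathbb{Q}$ and $F = 1/(1-x)^2 = \sum_n (n+1)x^n$. This series is rational, hence finitary D-finite, but it is not a Pólya series, since its coefficients involve every prime. Its subinverse $F^\dagger = \sum_n x^n/(n+1)$ is not finitary: a finitely generated $\mathbb{Z}$-subalgebra of $\mathbb{Q}$ is contained in $\mathbb{Z}[1/N]$ for some integer $N$, so it inverts only finitely many primes, whereas every prime occurs in a denominator of $F^\dagger$. The sketch below (hypothetical helper names) lists the primes appearing in the first few denominators.

```python
from fractions import Fraction

def prime_factors(n):
    """Set of prime divisors of an integer n >= 1, by trial division."""
    primes, p = set(), 2
    while p * p <= n:
        while n % p == 0:
            primes.add(p)
            n //= p
        p += 1
    if n > 1:
        primes.add(n)
    return primes

# Coefficients 1/(n + 1) of F^dagger for F = 1/(1 - x)^2.
dagger = [Fraction(1, n + 1) for n in range(30)]
denominator_primes = set().union(*(prime_factors(c.denominator) for c in dagger))
print(sorted(denominator_primes))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```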

1.1 Notation

Throughout the paper, we fix a field K of characteristic $0$. When considering a Bézivin series $F = \sum_{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[[{\boldsymbol x}]]$, we will always tacitly assume that $G \le K^*$ denotes a finitely generated subgroup, and $r \ge 1$ denotes a positive integer, such that every coefficient of F is contained in $rG_0$.

2 Preliminaries

For $a$, $b \in \mathbb {Z}$, let $[a,b] = \{\, x \in \mathbb {Z} : a \le x \le b \,\}$. Let $\mathbb {N} = \{0,1,2,\ldots \}$ and $\mathbb {N}_{\ge k} = \{\, x \in \mathbb {N} : x \ge k \,\}$ for $k \in \mathbb {N}$.

2.1 Semilinear sets

We summarize a few results from the theory of semilinear sets, and refer to [Reference SakarovitchSak09, Chapter II.7.3] and [Reference D’Alessandro, Intrigila and VarricchioDIV12] for more details.

Definition 2.1 Let $d \ge 1$. A subset $\mathcal {A} \subseteq \mathbb {N}^d$ is:

• linear if there exist $\boldsymbol a$, $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l \in \mathbb {N}^d$ such that $\mathcal {A} = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$;

• semilinear if $\mathcal {A}$ is a finite union of linear sets;

• simple linear if there exist $\boldsymbol a$, $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l \in \mathbb {N}^d$ such that $\mathcal {A} = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$ and $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$ are linearly independent over $\mathbb {Z}$.

Whenever we consider a representation of a simple linear set of the form $\boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$, we shall tacitly assume that the vectors $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$ are taken to be linearly independent.
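For small examples, both conditions in Definition 2.1 can be checked mechanically. The sketch below (helper names are ours) tests $\mathbb{Z}$-linear independence of $\boldsymbol b_1, \ldots, \boldsymbol b_l$ by computing the rank over $\mathbb{Q}$ with exact Gaussian elimination (for integer vectors, $\mathbb{Z}$- and $\mathbb{Q}$-linear independence coincide), and tests membership in $\boldsymbol a + \boldsymbol b_1\mathbb{N} + \cdots + \boldsymbol b_l\mathbb{N}$ by brute force. The brute force is bounded because each $\boldsymbol b_i$ is assumed nonzero in $\mathbb{N}^d$, so every multiplier is at most the largest entry of $\boldsymbol n - \boldsymbol a$; it is exponential in $l$ and only meant for illustration.

```python
from fractions import Fraction
from itertools import product

def rank(vectors):
    """Rank over Q of a list of integer vectors, by Gaussian elimination with exact fractions."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def is_simple_linear_presentation(periods):
    """True if the period vectors b_1, ..., b_l are linearly independent."""
    return rank(periods) == len(periods)

def in_linear_set(n, a, periods):
    """Test n in a + b_1 N + ... + b_l N by bounded brute force (each b_i nonzero in N^d)."""
    diff = [x - y for x, y in zip(n, a)]
    if any(x < 0 for x in diff):
        return False
    bound = max(diff, default=0)
    for ms in product(range(bound + 1), repeat=len(periods)):
        point = [sum(m * b[k] for m, b in zip(ms, periods)) for k in range(len(n))]
        if point == diff:
            return True
    return False

print(is_simple_linear_presentation([(2, 0), (1, 1)]))  # True
print(in_linear_set((4, 1), (1, 0), [(2, 0), (1, 1)]))  # True: (1,0) + (2,0) + (1,1)
print(in_linear_set((2, 0), (1, 0), [(2, 0), (1, 1)]))  # False
```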

We make some observations on the uniqueness of the presentation of a linear set $\mathcal {A}$. Suppose $\mathcal {A} = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$ with $\boldsymbol a$, $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$ as above. The element $\boldsymbol a$ is uniquely determined by $\mathcal {A}$, as it is the minimum of $\mathcal {A}$ in the coordinatewise partial order on $\mathbb {N}^d$. Therefore, the associated monoid $\mathcal {A} - \boldsymbol a \subseteq \mathbb {N}^d$ is also uniquely determined by $\mathcal {A}$. The set $\{\boldsymbol b_1,\ldots , \boldsymbol b_l\}$ must contain every atom of $\mathcal {A} - \boldsymbol a$, that is, every nonzero element that cannot be written as a sum of two nonzero elements of $\mathcal {A} - \boldsymbol a$. If l is taken minimal, then $\{\boldsymbol b_1,\ldots ,\boldsymbol b_l\}$ is equal to the set of atoms of $\mathcal {A} - \boldsymbol a$, and is therefore unique. In particular, if $\mathcal {A}$ is simple linear and $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$ are linearly independent, then the representation is unique (up to the order of $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$).
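The recipe above can be tried out on a finite window. The sketch below (hypothetical helper names; brute force over a box $[0,B)^d$, so only suitable for small examples) lists the points of $\mathcal{A} = \boldsymbol a + \boldsymbol b_1\mathbb{N} + \cdots + \boldsymbol b_l\mathbb{N}$ in the box, recovers $\boldsymbol a$ as the coordinatewise minimum, and computes the atoms of $\mathcal{A} - \boldsymbol a$ in the box. The atom test is exact inside the box, because any decomposition $\boldsymbol u = \boldsymbol v + \boldsymbol w$ has $\boldsymbol v, \boldsymbol w \le \boldsymbol u$ coordinatewise. With the redundant periods $(1,0), (0,1), (1,1)$, only the atoms $(1,0)$ and $(0,1)$ survive, matching the minimal presentation.

```python
from itertools import product

def elements_in_box(a, periods, box):
    """Points of a + b_1 N + ... + b_l N with all coordinates < box (each b_j nonzero in N^d)."""
    pts = set()
    for ms in product(range(box), repeat=len(periods)):
        p = tuple(a[k] + sum(m * b[k] for m, b in zip(ms, periods)) for k in range(len(a)))
        if all(x < box for x in p):
            pts.add(p)
    return pts

def minimum_and_atoms(a, periods, box):
    """Recover the base point (coordinatewise minimum) and the atoms of A - a inside the box."""
    pts = elements_in_box(a, periods, box)
    if not pts:
        raise ValueError("box too small: it contains no point of the linear set")
    base = tuple(min(p[k] for p in pts) for k in range(len(a)))
    monoid = {tuple(x - y for x, y in zip(p, base)) for p in pts}
    nonzero = monoid - {tuple(0 for _ in base)}
    # u is an atom unless u = v + w with v, w nonzero elements of the monoid.
    atoms = {u for u in nonzero
             if not any(tuple(x - y for x, y in zip(u, v)) in nonzero for v in nonzero)}
    return base, atoms

base, atoms = minimum_and_atoms((1, 2), [(1, 0), (0, 1), (1, 1)], box=6)
print(base, sorted(atoms))  # (1, 2) [(0, 1), (1, 0)]
```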

If $\mathcal {A}$ and $\mathcal {A}'$ are two linear sets with the same associated monoid, then there exist $\boldsymbol a$, $\boldsymbol a'$, $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l \in \mathbb {N}^d$ with $\mathcal {A} = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$ and $\mathcal {A}'= \boldsymbol a' + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_l \mathbb {N}$. By choosing l minimal, the choice of $\boldsymbol b_1$, $\ldots \,$, $\boldsymbol b_l$ is again unique (up to order).

The semilinear subsets of $\mathbb {N}^d$ are precisely the sets definable in the Presburger arithmetic of $\mathbb {N}$, by a theorem of Ginsburg and Spanier [Reference Ginsburg and SpanierGS66]. We shall make use of the following fundamental (but nontrivial) facts.

Proposition 2.2 The semilinear subsets of $\mathbb {N}^d$ form a Boolean algebra under set-theoretic intersection and union. In particular, finite unions and finite intersections of semilinear sets, as well as complements of semilinear sets, are again semilinear.

Proof By [Reference SakarovitchSak09, Proposition II.7.15], a subset of $\mathbb {N}^d$ is semilinear if and only if it is rational. By [Reference SakarovitchSak09, Theorem II.7.3], the rational subsets of $\mathbb {N}^d$ form a Boolean algebra.

One can show that every semilinear set is a finite union of simple linear sets. A stronger and much deeper result, which has been shown by Eilenberg and Schützenberger [Reference Eilenberg and SchützenbergerES69] and independently by Ito [Reference ItoIto69], is the following.

Proposition 2.3 Every semilinear set is a finite disjoint union of simple linear sets.

Proof For the proof of Ito, see [Reference ItoIto69, Theorem 2]. Alternatively, one may apply the more general [Reference Eilenberg and SchützenbergerES69, Unambiguity Theorem] of Eilenberg and Schützenberger to the monoid $\mathbb {N}^d$. This second proof is also contained in the book of Sakarovitch, and one obtains the claim as follows: let $S \subseteq \mathbb {N}^d$ be semilinear. Then S is a rational subset of $\mathbb {N}^d$ (see [Reference SakarovitchSak09, Proposition II.7.5] and the discussion preceding it). By [Reference SakarovitchSak09, Theorem II.7.4] or [Reference Eilenberg and SchützenbergerES69, Unambiguity Theorem], every rational subset of $\mathbb {N}^d$ is unambiguous. Again by [Reference SakarovitchSak09, Proposition II.7.15], every unambiguous rational subset of $\mathbb {N}^d$ is a finite disjoint union of simple linear sets.
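A one-dimensional example of our own illustrates Proposition 2.3. The semilinear set $2\mathbb{N} \cup 3\mathbb{N} \subseteq \mathbb{N}$ is a union of two simple linear sets that overlap in $6\mathbb{N}$; it is also the disjoint union $6\mathbb{N} \sqcup (2 + 6\mathbb{N}) \sqcup (3 + 6\mathbb{N}) \sqcup (4 + 6\mathbb{N})$ of simple linear sets, since $n$ is even or divisible by $3$ exactly when $n \bmod 6 \in \{0,2,3,4\}$. The sketch checks, on an initial segment only, that the pieces are pairwise disjoint and that both descriptions agree.

```python
def simple_linear_1d(a0, period, bound):
    """Elements of a0 + period*N below bound (period > 0)."""
    return set(range(a0, bound, period))

BOUND = 200
union = simple_linear_1d(0, 2, BOUND) | simple_linear_1d(0, 3, BOUND)
pieces = [simple_linear_1d(r, 6, BOUND) for r in (0, 2, 3, 4)]

disjoint = all(pieces[i].isdisjoint(pieces[j])
               for i in range(len(pieces)) for j in range(i + 1, len(pieces)))
print(disjoint, set().union(*pieces) == union)  # True True
```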

2.2 Unit equations

Unit equations play a central role in our proofs. We recall the fundamental finiteness result. For number fields, it was proved independently by Evertse [Reference EvertseEve84] and van der Poorten and Schlickewei [Reference van der Poorten and SchlickeweivdPS82]; the extension to arbitrary fields appears in [Reference van der Poorten and SchlickeweivdPS91]. We refer to [Reference Evertse and GyőryEG15, Chapter 6] or [Reference Bombieri and GublerBG06, Theorem 7.4.1] for more details.

Let G be a finitely generated subgroup of the multiplicative group $K^*$ of the field K. It is important here that $\operatorname {\mathrm {char}} K=0$. Let $m \ge 1$. A solution $(x_1,\ldots ,x_m) \in K^{m}$ to an equation of the form

$$ \begin{align*}X_1 + \cdots + X_m = 0 \tag{2.1}\end{align*} $$

is nondegenerate if $\sum _{i \in I} x_i \ne 0$ for every $\emptyset \subsetneq I \subsetneq [1,m]$. In particular, if $m \ge 2$, then all $x_i$ of a nondegenerate solution are nonzero.
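The definition of a nondegenerate solution translates directly into a finite check over all proper nonempty index sets $I$. The sketch below (our own helper names; exponential in $m$, so only meant for small examples) uses exact rational arithmetic. Note that for $m = 1$ the single solution $x_1 = 0$ is nondegenerate, since there is no proper nonempty $I$.

```python
from fractions import Fraction
from itertools import combinations

def is_nondegenerate(xs):
    """True if sum(xs) == 0 and no subsum over a proper nonempty index set vanishes."""
    m = len(xs)
    if sum(xs) != 0:
        return False
    return all(sum(xs[i] for i in I) != 0
               for k in range(1, m) for I in combinations(range(m), k))

print(is_nondegenerate([Fraction(1), Fraction(1), Fraction(-2)]))                # True
print(is_nondegenerate([Fraction(2), Fraction(-2), Fraction(1), Fraction(-1)]))  # False
```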

Proposition 2.4 (Evertse; van der Poorten and Schlickewei)

There exist only finitely many projective points $(x_1: \cdots : x_m)$ with coordinates $x_1$, $\ldots \,$, $x_m \in G$ such that $(x_1,\ldots ,x_m)$ is a nondegenerate solution to the unit equation (2.1).
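The nondegeneracy hypothesis in Proposition 2.4 cannot simply be dropped. As a small example of our own, take $K = \mathbb{Q}$, $G = \langle -1, 2 \rangle$ and $m = 4$. Then

$$ \begin{align*} 2^k + (-2^k) + 1 + (-1) = 0 \qquad \text{for all}\ k \in \mathbb{N}, \end{align*} $$

so $X_1 + X_2 + X_3 + X_4 = 0$ has the pairwise distinct projective solutions $(2^k : -2^k : 1 : -1)$ in $G$ (the ratio $x_1/x_3 = 2^k$ differs for each $k$). All of them are degenerate, since $x_1 + x_2 = 0$. Splitting into the subequations $X_1 + X_2 = 0$ and $X_3 + X_4 = 0$, each of these has the single nondegenerate projective solution $(1 : -1)$, in line with Proposition 2.4.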

It is easily seen that there can be infinitely many degenerate solutions (even when considered as projective points), but one can recursively apply the theorem to subequations. In particular, we will commonly use an argument of the following type.

Let $\Omega $ be some index set, and let $g_1$, $\ldots \,$, $g_m \colon \Omega \to G_0$ be maps such that

$$ \begin{align*}g_1(\omega) + \cdots + g_m(\omega) = 0 \qquad \text{for all}\ \omega \in \Omega.\end{align*} $$

For every partition $\mathcal {P} = \{I_1,\ldots ,I_t\}$ of the set $[1,m]$, let $\Omega _{\mathcal {P}} \subseteq \Omega $ consist of all $\omega $ such that for all $j \in [1,t]$, the tuple $(g_i(\omega ))_{i \in I_j}$ is a nondegenerate solution of the unit equation $\sum _{i \in I_j} X_i = 0$. Since every solution of a unit equation can be partitioned into nondegenerate solutions in at least one way, we obtain $\Omega = \bigcup _{\mathcal {P}} \Omega _{\mathcal {P}}$, where the union runs over all partitions of $[1,m]$.

Since there are only finitely many partitions of $[1,m]$, one can often deal with each $\Omega _{\mathcal {P}}$ separately. Alternatively, the pigeonhole principle reduces the problem to one $\Omega _{\mathcal {P}}$ having some desirable property. For example, if $\Omega $ is infinite, then at least one $\Omega _{\mathcal {P}}$ is infinite. Similarly, if $\Omega = \mathbb {N}^d$, then not all $\Omega _{\mathcal {P}}$ can be contained in semilinear sets of rank at most $d-1$, because $\mathbb {N}^d$ cannot be covered by finitely many semilinear sets of rank $\le d-1$.
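The following minimal Python sketch makes this bookkeeping concrete. For one fixed $\omega$, it lists every partition $\mathcal P$ of the index set with $\omega \in \Omega_{\mathcal P}$. The helper names are hypothetical and not from the paper. The sketch assumes that the values $g_i(\omega)$ are given as exact rationals, here taken from the subgroup of $\mathbb Q^*$ generated by $-1$ and $2$. Coordinates are indexed from $0$ rather than $1$.

```python
from fractions import Fraction
from itertools import combinations

def set_partitions(items):
    """Yield every partition of the list `items` into nonempty blocks, each exactly once."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into one of the existing blocks ...
        for k in range(len(partition)):
            yield partition[:k] + [[first] + partition[k]] + partition[k + 1:]
        # ... or into a new singleton block.
        yield [[first]] + partition

def is_nondegenerate(values):
    """Nonzero entries summing to 0 such that no nonempty proper subsum vanishes."""
    if any(v == 0 for v in values) or sum(values) != 0:
        return False
    for r in range(1, len(values)):
        if any(sum(sub) == 0 for sub in combinations(values, r)):
            return False
    return True

def admissible_partitions(g):
    """All partitions P of {0, ..., m-1} such that the tuple g lies in Omega_P."""
    found = []
    for partition in set_partitions(list(range(len(g)))):
        if all(is_nondegenerate([g[i] for i in block]) for block in partition):
            found.append(sorted(sorted(block) for block in partition))
    return found

# One omega, modeled by (g_1(omega), ..., g_4(omega)) = (1, -1, 2, -2).
print(admissible_partitions([Fraction(1), Fraction(-1), Fraction(2), Fraction(-2)]))
# [[[0, 1], [2, 3]]]
```

The number of partitions of $[1,m]$ is the Bell number $B_m$, so this brute-force enumeration is only meant for small m. It illustrates that every solution lies in at least one $\Omega_{\mathcal P}$.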

2.3 Hahn series

We recall that an abelian group G is totally ordered as a group if G is equipped with a total order $\le $ with the property that $a+c\le b+c$ whenever $a,b, c\in G$ are such that $a\le b$. For the group $H=\mathbb {Q}^d$, we give H a total ordering that is compatible with the group structure. We first pick positive real numbers $\epsilon _1, \ldots, \epsilon _d$ that are linearly independent over $\mathbb {Q}$, and then declare that $(a_1,\ldots , a_d)\le (b_1,\ldots ,b_d)$ if and only if

$$\begin{align*}\sum_{i=1}^d a_i \epsilon_i \le \sum_{i=1}^d b_i \epsilon_i. \end{align*}$$
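For $d=2$, this order can be computed exactly. The sketch below assumes the hypothetical choice $\epsilon_1 = 1$ and $\epsilon_2 = \sqrt 2$, which are positive and linearly independent over $\mathbb Q$. For rational p and q, the sign of $p + q\sqrt 2$ is decided by comparing $p^2$ with $2q^2$, so no floating-point approximation is involved. The helper names are illustrative only.

```python
from fractions import Fraction

def sign_p_plus_q_sqrt2(p, q):
    """Exact sign of p + q*sqrt(2) for rationals p and q."""
    if q == 0:
        return (p > 0) - (p < 0)
    if p == 0 or (p > 0) == (q > 0):
        return 1 if q > 0 else -1
    # p and q have opposite signs: |p| > |q|*sqrt(2) iff p^2 > 2*q^2
    # (equality is impossible because sqrt(2) is irrational).
    return (1 if p > 0 else -1) if p * p > 2 * q * q else (1 if q > 0 else -1)

def compare(a, b):
    """Return -1, 0, 1 according as a < b, a == b, a > b in Q^2 with eps = (1, sqrt 2)."""
    return sign_p_plus_q_sqrt2(Fraction(a[0]) - Fraction(b[0]),
                               Fraction(a[1]) - Fraction(b[1]))

print(compare((1, 0), (0, 1)))    # -1, since 1 < sqrt(2)
print(compare((3, -2), (0, 0)))   # 1, since 3 - 2*sqrt(2) > 0
```

Because 1 and $\sqrt 2$ are linearly independent over $\mathbb Q$, `compare` returns 0 only for equal vectors. This is what makes the order total rather than a preorder.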

Lemma 2.5 The following hold for the above order:

• $\mathbb {N}^d$ is a well-ordered subset of $\mathbb {Q}^d$.

• If $(a_1,\ldots , a_d)< (b_1,\ldots ,b_d)$ and if $(c_1,\ldots ,c_d)\in \mathbb {Q}^d$, then there is some $N>0$ such that $n(b_1,\ldots ,b_d)> n(a_1,\ldots ,a_d)+(c_1,\ldots ,c_d)$ whenever $n\ge N$.

Proof Let S be a nonempty subset of $\mathbb {N}^d$. Pick $(a_1,\ldots ,a_d)\in S$, and let $u = \sum _{i=1}^d a_i \epsilon _i$. Suppose $(b_1,\ldots , b_d)\in S$ is less than $(a_1,\ldots ,a_d)$. All $b_j$ are nonnegative and all $\epsilon _j$ are positive, so $b_i \epsilon _i \le \sum _{j=1}^d b_j \epsilon _j < u$, and hence $b_i \le u/\epsilon _i$ for $i \in [1,d]$. Thus, only finitely many elements of S are less than $(a_1,\ldots ,a_d)$, and so S has a smallest element. Next, if $(a_1,\ldots , a_d)< (b_1,\ldots ,b_d)$, then $\theta = \sum _{i=1}^d (b_i - a_i)\epsilon _i > 0$. Then $n\theta> \sum _{i=1}^d c_i \epsilon _i$ for all n sufficiently large, and this is exactly the inequality $n(b_1,\ldots ,b_d)> n(a_1,\ldots ,a_d)+(c_1,\ldots ,c_d)$.

The second property is equivalent to $\mathbb {Q}^d$ with the given order being archimedean.
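Under the same assumed choice $\epsilon = (1, \sqrt 2)$, both parts of Lemma 2.5 can be checked by brute force in a small case. In this sketch (hypothetical helper names), `elements_below` uses the bound from the proof in the cruder integer form $b_i \le a_1 + 2a_2$. The function `archimedean_threshold` finds the least N by increasing n until $n\theta > \sum_i c_i \epsilon_i$.

```python
from fractions import Fraction

def sign_p_plus_q_sqrt2(p, q):
    """Exact sign of p + q*sqrt(2) for rationals p and q."""
    if q == 0:
        return (p > 0) - (p < 0)
    if p == 0 or (p > 0) == (q > 0):
        return 1 if q > 0 else -1
    return (1 if p > 0 else -1) if p * p > 2 * q * q else (1 if q > 0 else -1)

def compare(a, b):
    """Compare a, b in Q^2 under the order induced by eps = (1, sqrt 2)."""
    return sign_p_plus_q_sqrt2(Fraction(a[0]) - Fraction(b[0]),
                               Fraction(a[1]) - Fraction(b[1]))

def elements_below(a):
    """All b in N^2 with b < a, for a in N^2; a finite set, as in the proof."""
    # b_i <= b_1 + b_2*sqrt(2) < a_1 + a_2*sqrt(2) <= a_1 + 2*a_2
    bound = a[0] + 2 * a[1]
    return [(b1, b2) for b1 in range(bound + 1) for b2 in range(bound + 1)
            if compare((b1, b2), a) < 0]

def archimedean_threshold(a, b, c):
    """Least N >= 1 with n*b > n*a + c for all n >= N, assuming a < b."""
    assert compare(a, b) < 0
    n = 1
    # theta = (b - a).eps > 0, so n*theta - c.eps increases with n:
    # the first n that works also works for every larger n.
    while compare(tuple(n * bi for bi in b),
                  tuple(n * ai + ci for ai, ci in zip(a, c))) <= 0:
        n += 1
    return n

print(elements_below((1, 1)))                          # [(0, 0), (0, 1), (1, 0), (2, 0)]
print(archimedean_threshold((0, 0), (1, 0), (0, 3)))   # 5, since 4 < 3*sqrt(2) < 5
```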

If G is a totally ordered abelian group, we can define the ring of Hahn power series

$$\begin{align*}K(\mkern -3mu( {\boldsymbol x}^G)\mkern -3mu) = \Big\{\, \sum_{g\in G} a_g {\boldsymbol x}^g : a_g \in K \text{ and } \{\, g \in G : a_g \ne 0 \,\} \text{ is well-ordered} \,\Big\}. \end{align*}$$

Then $K(\mkern -3mu( {\boldsymbol x}^G)\mkern -3mu)$, together with the obvious operations, is a ring. For $F = \sum _{g\in G} a_g {\boldsymbol x}^g \in K(\mkern -3mu( {\boldsymbol x}^G)\mkern -3mu)$, one defines $\operatorname{supp}(F) = \{\, {\boldsymbol x}^g : a_g \ne 0 \,\}$ to be the support of F. There is a valuation $\mathsf v \colon K(\mkern -3mu( {\boldsymbol x}^G )\mkern -3mu) \to G \cup \{\infty \}$, defined as follows. If $F \ne 0$, one sets $\mathsf v(F)=g$, where ${\boldsymbol x}^g$ is the minimal monomial in the support of F, with monomials compared via their exponents. Finally, one sets $\mathsf v(0)=\infty $.
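Hahn series with infinite support cannot be stored directly. The valuation is still easy to illustrate on series with finite support, which always belong to $K(\mkern -3mu( {\boldsymbol x}^H)\mkern -3mu)$. The sketch below makes several assumptions:

- $d = 2$ and rational coefficients;
- the order given by $\epsilon = (1, \sqrt 2)$, as before;
- a series is a dictionary from exponent vectors to coefficients, and `None` stands in for $\infty$.

The helper names are hypothetical. The sketch also checks $\mathsf v(FG) = \mathsf v(F) + \mathsf v(G)$ on one example.

```python
from fractions import Fraction
from functools import cmp_to_key

def sign_p_plus_q_sqrt2(p, q):
    """Exact sign of p + q*sqrt(2) for rationals p and q."""
    if q == 0:
        return (p > 0) - (p < 0)
    if p == 0 or (p > 0) == (q > 0):
        return 1 if q > 0 else -1
    return (1 if p > 0 else -1) if p * p > 2 * q * q else (1 if q > 0 else -1)

def compare(g, h):
    """Compare exponent vectors g, h in Q^2 under the order with eps = (1, sqrt 2)."""
    return sign_p_plus_q_sqrt2(Fraction(g[0]) - Fraction(h[0]),
                               Fraction(g[1]) - Fraction(h[1]))

def valuation(F):
    """v(F) for a finitely supported series F = {exponent: coefficient}; None means infinity."""
    support = [g for g, coeff in F.items() if coeff != 0]
    if not support:
        return None
    return min(support, key=cmp_to_key(compare))   # exponent of the minimal monomial

def multiply(F, G):
    """Product of two finitely supported series: exponents add, coefficients multiply."""
    H = {}
    for g, a in F.items():
        for h, b in G.items():
            e = (g[0] + h[0], g[1] + h[1])
            H[e] = H.get(e, 0) + a * b
    return {e: c for e, c in H.items() if c != 0}

F = {(1, 0): 1, (0, 1): 1}                 # x_1 + x_2
G = {(Fraction(-1, 2), 0): 1, (1, 1): 3}   # x_1^(-1/2) + 3 x_1 x_2
# v(F) = (1, 0), v(G) = (-1/2, 0), and v(FG) = v(F) + v(G) = (1/2, 0)
print(valuation(F), valuation(G), valuation(multiply(F, G)))
```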

For $F_1 = \sum _{g\in G} a_g {\boldsymbol x}^g$ and $F_2 = \sum _{g \in G} b_g {\boldsymbol x}^g \in K(\mkern -3mu( {\boldsymbol x}^G )\mkern -3mu)$, the Hadamard product is defined as

$$\begin{align*}F_1 \odot F_2 = \sum_{g \in G} a_g b_g {\boldsymbol x}^g. \end{align*}$$

If K is algebraically closed and G is divisible, then $K(\mkern -3mu( {\boldsymbol x}^G)\mkern -3mu)$ is an algebraically closed field. In particular, if we use the order given above for $H=\mathbb {Q}^d$ and K is algebraically closed, then $K(\mkern -3mu( {\boldsymbol x}^H)\mkern -3mu)$ is algebraically closed. We make the identification $x_i = {\boldsymbol x}^{\boldsymbol e_i}$, where $\boldsymbol e_i=(0,\ldots ,1,\ldots ,0)$ has a $1$ in the ith coordinate and zeros in all other coordinates. Every subset of $\mathbb {N}^d$ is well-ordered by Lemma 2.5, so the formal power series ring $K[\mkern -3mu[ x_1,\ldots ,x_d ]\mkern -3mu]$ is then a subalgebra of $K(\mkern -3mu( {\boldsymbol x}^H)\mkern -3mu)$.

We will find it convenient to write ${\boldsymbol x}^{(a_1,\ldots ,a_d)} =x_1^{a_1}\cdots x_d^{a_d}$ and to write $K(\mkern -3mu( x_1^{\mathbb {Q}},\ldots ,x_{d}^{\mathbb {Q}} )\mkern -3mu)$ for $K(\mkern -3mu( {\boldsymbol x}^H)\mkern -3mu)$. In the other direction, we abbreviate the power series ring as $K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu] = K[\mkern -3mu[ x_1,\ldots ,x_d ]\mkern -3mu]$. These conventions are consistent with the usual multi-index notation. Accordingly, for $\boldsymbol a= (a_1,\ldots ,a_d)$ and $\boldsymbol b = (b_1,\ldots ,b_d) \in \mathbb {Q}^d$, we write $\boldsymbol a + \boldsymbol b = (a_1+b_1, \ldots , a_d + b_d)$ and $\boldsymbol a \boldsymbol b = (a_1b_1, \ldots , a_d b_d)$. If $\boldsymbol a \in \mathbb {Z}^d$ and ${\boldsymbol \lambda }=(\lambda _1,\ldots ,\lambda _d)$ with $\lambda _i \in K(\mkern -3mu( \boldsymbol x^{H} )\mkern -3mu)$, we also write ${\boldsymbol \lambda }^{\boldsymbol a}= \prod _{i=1}^d \lambda _i^{a_i}$. We will usually only use this notation when each $\lambda _i \in K^*$ or each $\lambda _i$ is a monomial.

The set of [rational] Bézivin series is not closed under products or differentiation. However, it is closed under Hadamard products. Moreover, it forms a $K[{\boldsymbol x}]$-submodule of $K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$, as the following easy lemma shows.

Lemma 2.6 Let $F \in K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$ be a Bézivin series with coefficients in $rG_0$. If $P \in K[\boldsymbol x]$ is a polynomial with s terms in its support, then there exists a set $B \subseteq K$ of cardinality $rs$ such that

In particular, the series $PF$ is a Bézivin series with coefficients in $rsG_0'$ for a suitable finitely generated subgroup $G' \le K^*$.
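The contrast between Hadamard products and ordinary products is already visible for $d=1$ and $r=1$. The sketch below works with truncated coefficient lists and hypothetical helper names:

- The Hadamard product of $1/(1-2x)$ and $1/(1-3x)$ has coefficients $6^n$, which stay in the group generated by $2$ and $3$.
- The ordinary product of $1/(1-x)$ with itself has coefficients $n+1$. These have arbitrarily large prime factors, so they do not all lie in $G_0$ for any finitely generated $G \le \mathbb Q^*$.

```python
def hadamard(f, g):
    """Coefficientwise (Hadamard) product of two truncated series."""
    return [a * b for a, b in zip(f, g)]

def cauchy(f, g):
    """Ordinary (Cauchy) product of two truncated series, kept to the common length."""
    n = min(len(f), len(g))
    return [sum(f[i] * g[k - i] for i in range(k + 1)) for k in range(n)]

def prime_factors(n):
    """Set of prime divisors of a positive integer n, by trial division."""
    primes, p = set(), 2
    while p * p <= n:
        while n % p == 0:
            primes.add(p)
            n //= p
        p += 1
    if n > 1:
        primes.add(n)
    return primes

N = 12
f = [2 ** n for n in range(N)]   # coefficients of 1/(1 - 2x)
g = [3 ** n for n in range(N)]   # coefficients of 1/(1 - 3x)
ones = [1] * N                   # coefficients of 1/(1 - x)

print(hadamard(f, g)[:5])        # [1, 6, 36, 216, 1296]
print(cauchy(ones, ones)[:5])    # [1, 2, 3, 4, 5]
print(prime_factors(cauchy(ones, ones)[10]))   # {11}: a prime outside {2, 3}
```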

We will need to understand the factorization of polynomials of the form $1-c{\boldsymbol x}^{\boldsymbol e}$ with $\boldsymbol e \in \mathbb {N}^d$.

Lemma 2.7 Let K be algebraically closed, and let $\boldsymbol e = (e_1,\ldots ,e_d) \in \mathbb {Z}^d \setminus \{\boldsymbol 0\}$. In the factorial domain $K[{\boldsymbol x}^{\pm 1}]=K[x_1^{\pm 1},\ldots ,x_d^{\pm 1}]$, the Laurent polynomial $Q = 1 - c{\boldsymbol x}^{\boldsymbol e}$ with $c \in K^*$ factors into irreducibles as

$$\begin{align*}Q = \prod_{j=1}^t (1 - \zeta^j b {\boldsymbol x}^{\boldsymbol e/t}), \end{align*}$$

where $t=\gcd (e_1,\ldots ,e_d)$, $\zeta \in K^*$ is a primitive t-th root of unity, and $b^t=c$. In particular, the Laurent polynomial Q is irreducible if and only if $\gcd (e_1,\ldots ,e_d) = 1$.

Proof The proof follows an argument of Ostrowski [Ost76, Theorem IX]. Since $(e_1/t, \ldots , e_d/t)$ is a unimodular row, there exists a matrix $A=(a_{i,j})_{i,j \in [1,d]} \in \operatorname {\mathrm {GL}}_d(\mathbb {Z})$ whose first row is $(e_1/t, \ldots , e_d/t)$. This matrix A induces a ring automorphism $\varphi $ of the Laurent polynomial ring $K[{\boldsymbol x}^{\pm 1}]$ with $\varphi (x_i)=\prod _{j=1}^d x_j^{a_{i,j}}$. Then $\varphi ^{-1}(Q) = 1-cx_1^{t}$. Since $K[x_1^{\pm 1}]$ is divisor-closed in $K[{\boldsymbol x}^{\pm 1}]$, the problem reduces to factoring the univariate Laurent polynomial $1-cx_1^{t}$ in $K[x_1^{\pm 1}]$. The ring $K[x_1^{\pm 1}]$ is obtained from the factorial domain $K[x_1]$ by inverting the prime element $x_1$, and clearly $x_1$ is not a factor of $1-cx_1^t$ in $K[x_1]$. Hence it suffices to consider the factorization of $1-cx_1^t$ in $K[x_1]$. Here the result is clear: $1-cx_1^t = \prod _{j=1}^t (1-\zeta ^j b x_1)$ is a product of linear factors. Applying $\varphi $, which maps $x_1$ to ${\boldsymbol x}^{\boldsymbol e/t}$, gives the claimed factorization of Q.
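Both steps of Lemma 2.7 can be sanity-checked numerically over $K=\mathbb C$. The sketch below (hypothetical helper names) does two things:

1. It evaluates $Q$ and the claimed product at a sample point.
2. For $d=2$ only, an assumption made to keep the code short, it completes the unimodular row $(e_1/t, e_2/t)$ to a matrix in $\mathrm{GL}_2(\mathbb Z)$ via the extended Euclidean algorithm, so that $\varphi(x_1^t) = {\boldsymbol x}^{\boldsymbol e}$.

A numerical check of this kind does not prove that the factors are irreducible.

```python
import cmath
from functools import reduce
from math import gcd

def check_factorization(c, e, point):
    """Return t = gcd(e) and |Q - prod_j (1 - zeta^j b x^(e/t))| at a sample point."""
    t = reduce(gcd, (abs(ei) for ei in e))
    zeta = cmath.exp(2j * cmath.pi / t)   # a primitive t-th root of unity in C
    b = complex(c) ** (1 / t)             # some t-th root of c
    u = 1
    for xi, ei in zip(point, e):
        u *= xi ** (ei // t)              # value of the monomial x^(e/t)
    q = 1 - c * u ** t                    # value of Q = 1 - c x^e
    prod = 1
    for j in range(1, t + 1):
        prod *= 1 - zeta ** j * b * u
    return t, abs(q - prod)

def ext_gcd(a, b):
    """Return (g, p, q) with p*a + q*b == g == gcd(a, b) >= 0."""
    if b == 0:
        return abs(a), (1 if a >= 0 else -1), 0
    g, p, q = ext_gcd(b, a % b)
    return g, q, p - (a // b) * q

def complete_row(e1, e2):
    """For (e1, e2) != (0, 0): t = gcd and A in GL_2(Z) with first row (e1/t, e2/t)."""
    t = gcd(e1, e2)
    r1, r2 = e1 // t, e2 // t             # a unimodular row
    _, p, q = ext_gcd(r1, r2)             # p*r1 + q*r2 == 1
    return t, [[r1, r2], [-q, p]]         # det = r1*p + r2*q = 1

def apply_phi(A, k):
    """Exponent vector of phi(x^k), where phi(x_i) = prod_j x_j^(a_ij)."""
    return tuple(sum(k[i] * A[i][j] for i in range(2)) for j in range(2))

t, err = check_factorization(5, (4, 6), (0.7 + 0.2j, 1.1 - 0.3j))
print(t, err < 1e-9)            # 2 True
t, A = complete_row(4, 6)
print(A, apply_phi(A, (t, 0)))  # [[2, 3], [-1, -1]] (4, 6), i.e. phi(x_1^2) = x^(4, 6)
```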

3 Rational series with polynomial–exponential coefficients

In this section, we consider rational series whose denominator is a product of elements of the form $1-cu$ with $c \in K^*$ and $u \in K[{\boldsymbol x}]$ a nonconstant monomial. This will come in handy in the later sections, as it will turn out that rational Bézivin series are always of this form. For the class of rational series under investigation here, it is possible to give a fairly explicit structural description of their coefficient sequences: they are piecewise polynomial–exponential on simple linear subsets of $\mathbb {N}^d$.

Definition 3.1 Let $f \colon \mathbb {N}^d \to K$ be a sequence.

• The sequence f is piecewise polynomial–exponential on simple linear subsets of $\mathbb {N}^d$ if there exists a partition of $\mathbb {N}^d$ into simple linear sets $\mathcal {S}_1, \ldots, \mathcal {S}_m$ with the following property. For each $i \in [1,m]$, there exist $k_i \in \mathbb {N}$, polynomials $A_{i,1}, \ldots, A_{i,k_i} \in K[{\boldsymbol x}]$, and ${\boldsymbol \alpha }_{i,1}, \ldots, {\boldsymbol \alpha }_{i,k_i} \in (K^*)^d$ such that

$$\begin{align*}f({\boldsymbol n}) = \sum_{j = 1}^{k_i} A_{i,j}(\boldsymbol n) {\boldsymbol\alpha}_{i,j}^{\boldsymbol n} \qquad\text{for}\ {\boldsymbol n} \in \mathcal{S}_i. \end{align*}$$

• The sequence f is piecewise polynomial on simple linear subsets of $\mathbb {N}^d$ if one can moreover take ${\boldsymbol \alpha }_{i,j}=(1,\ldots ,1)$ for all $i \in [1,m]$ and $j \in [1,k_i]$.

• The sequence f is piecewise exponential on simple linear subsets of $\mathbb {N}^d$ if one can take the polynomials $A_{i,j}$ to be constant for all $i \in [1,m]$ and $j \in [1,k_i]$.

Note that in the piecewise exponential case, sums of exponentials ($k_i> 1$) are allowed. There is no restriction on the ranks of the simple linear sets: the rank of $\mathcal {S}_i$ may be smaller than d, and it need not be the same for $\mathcal {S}_1, \ldots, \mathcal {S}_m$. Each of these three types of representation is trivially preserved under refinements of the partition; in particular, such representations are not unique. It is not hard to see that every series $F = \sum _{\boldsymbol n \in \mathbb {N}^d} f(\boldsymbol n) {\boldsymbol x}^{\boldsymbol n} \in K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$ whose coefficient sequence is piecewise polynomial–exponential on simple linear subsets of $\mathbb {N}^d$ is rational (see Corollary 3.12). We give an easy example to illustrate the definition.

Example 3.2 Consider the sequence $f \colon \mathbb {N}^2 \to \mathbb {Q}$ defined by

$$\begin{align*}\begin{aligned} \sum_{m,n =0}^\infty f(m,n)x^my^n &= \frac{1}{1-2xy} + \frac{1}{1-3xy} + \frac{y}{(1-3xy)^2(1-5y)} + \frac{x}{(1-xy)(1-x)} \\ &= \sum_{k=0}^\infty (2^k+3^k)x^ky^k + \sum_{k,l=0}^\infty (k+1)3^k5^lx^ky^{k+l+1} + \sum_{k,l=0}^\infty x^{k+l+1}y^{l}. \end{aligned} \end{align*}$$

Then

$$\begin{align*}f(m,n) = \begin{cases} 2^m + 3^m, & \text{if}\ m=n, \\ \frac{1}{5} (m+1)(\frac{3}{5})^m 5^n, & \text{if}\ m < n, \\ 1, & \text{if}\ m>n. \end{cases} \end{align*}$$

Since

$$\begin{align*}\begin{aligned} \{\, (n,n) \in \mathbb{N}^2 : n \in \mathbb{N} \,\} &= (1,1)\mathbb{N},\\ \{\, (m,n) \in \mathbb{N}^2 : m < n \,\} &= (0,1) + (1,1)\mathbb{N} + (0,1)\mathbb{N}, \text{ and }\\ \{\, (m,n) \in \mathbb{N}^2 : m> n \,\} &= (1,0) + (1,1)\mathbb{N} + (1,0)\mathbb{N} \end{aligned} \end{align*}$$

are simple linear sets that partition $\mathbb {N}^2$, the sequence f is piecewise polynomial–exponential on simple linear subsets of $\mathbb {N}^2$. However, f is neither piecewise polynomial nor piecewise exponential on simple linear subsets of $\mathbb {N}^2$. The coefficient sequence of $\frac {1}{1-2xy} + \frac {1}{1-3xy}$ is piecewise exponential on simple linear subsets of $\mathbb {N}^2$. The coefficient sequence of $\frac {x}{(1-xy)(1-x)}$ is piecewise polynomial on simple linear subsets of $\mathbb {N}^2$.
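The computations in Example 3.2 can be double-checked by expanding the four rational functions as truncated power series. This sketch uses exact arithmetic via `fractions.Fraction`, and the truncation order N is an arbitrary choice. It compares the coefficients with the piecewise formula for f and verifies that the three simple linear sets partition the box $[0,N)^2$. All helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

N = 8  # truncation: only monomials x^m y^n with m < N and n < N are kept

def mul(f, g):
    """Product of truncated bivariate series stored as dicts (m, n) -> coefficient."""
    h = {}
    for (m1, n1), a in f.items():
        for (m2, n2), b in g.items():
            m, n = m1 + m2, n1 + n2
            if m < N and n < N:
                h[(m, n)] = h.get((m, n), 0) + a * b
    return h

def add(*fs):
    """Sum of truncated bivariate series."""
    h = {}
    for f in fs:
        for key, c in f.items():
            h[key] = h.get(key, 0) + c
    return h

def geometric(c, dm, dn):
    """Truncation of 1/(1 - c x^dm y^dn), assuming (dm, dn) != (0, 0)."""
    f, k = {}, 0
    while k * dm < N and k * dn < N:
        f[(k * dm, k * dn)] = Fraction(c) ** k
        k += 1
    return f

series = add(
    geometric(2, 1, 1),
    geometric(3, 1, 1),
    mul({(0, 1): 1}, mul(mul(geometric(3, 1, 1), geometric(3, 1, 1)), geometric(5, 0, 1))),
    mul({(1, 0): 1}, mul(geometric(1, 1, 1), geometric(1, 1, 0))),
)

def f(m, n):
    """The piecewise formula stated in Example 3.2."""
    if m == n:
        return 2 ** m + 3 ** m
    if m < n:
        return Fraction(1, 5) * (m + 1) * Fraction(3, 5) ** m * 5 ** n
    return 1

def linear_set(a, gens):
    """Points of a + gens[0]*N + ... + gens[-1]*N that lie in the box [0, N)^2."""
    pts = set()
    for coeffs in product(range(N), repeat=len(gens)):
        p = (a[0] + sum(c * g[0] for c, g in zip(coeffs, gens)),
             a[1] + sum(c * g[1] for c, g in zip(coeffs, gens)))
        if p[0] < N and p[1] < N:
            pts.add(p)
    return pts

diagonal = linear_set((0, 0), [(1, 1)])
above = linear_set((0, 1), [(1, 1), (0, 1)])
below = linear_set((1, 0), [(1, 1), (1, 0)])

coeffs_match = all(series.get((m, n), 0) == f(m, n) for m in range(N) for n in range(N))
is_partition = (diagonal | above | below == set(product(range(N), repeat=2))
                and not (diagonal & above) and not (diagonal & below) and not (above & below))
print(coeffs_match, is_partition)   # True True
```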

The following basic properties hold.

Lemma 3.3 Let $\mathcal {PE}$, $\mathcal {P}$, and $\mathcal E$ denote, respectively, the sets of power series in $K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$ whose coefficient sequences are piecewise polynomial–exponential [polynomial, exponential] on simple linear subsets of $\mathbb {N}^d$.

(1) Each of $\mathcal {PE}$, $\mathcal {P}$, and $\mathcal E$ is a $K[\boldsymbol x]$-submodule of $K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$ and is closed under Hadamard products.

(2) The sets $\mathcal {PE}$ and $\mathcal {P}$ are also closed under products and partial derivatives. In particular, $\mathcal {PE}$ and $\mathcal {P}$ form subalgebras of $K[\mkern -3mu[ {\boldsymbol x} ]\mkern -3mu]$.

Proof

(1) Let F and G be series whose coefficient sequences are [polynomial–exponential, polynomial, exponential] on simple linear subsets. It is clear that for every monomial u and every scalar $\lambda \in K$, the series $\lambda uF$ is again of the same form. Thus, it suffices to show that the same is true for $F+G$ and for the Hadamard product $F \odot G$. If $\mathcal {S}$ and $\mathcal {T} \subseteq \mathbb {N}^d$ are simple linear subsets, then the intersection $\mathcal {S} \cap \mathcal {T}$ is semilinear. By Proposition 2.3, it can therefore be represented as a finite disjoint union of simple linear sets. Thus, in representations of the coefficient sequences of F and G as in Definition 3.1, we may pass to a common refinement and assume that the simple linear sets coincide for the two series. Then the claims about $F+G$ and $F \odot G$ are immediate. Indeed, on a fixed simple linear set, sums and products of polynomial–exponential [polynomial, exponential] functions are again polynomial–exponential [polynomial, exponential].

(2) This can again be computed on each simple linear set. Alternatively, it will follow from Theorem 3.10 and Corollary 3.13.
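A simple instance of the product closure in (2): multiplying e copies of $(1-x)^{-1}$ gives $(1-x)^{-e}$. Its n-th coefficient is $\binom{n+e-1}{e-1}$, a polynomial in n of degree $e-1$, so the product lies in $\mathcal P \subseteq \mathcal{PE}$. The sketch below (hypothetical helper name) computes this product by repeated partial sums and compares it with the binomial formula.

```python
from math import comb

def inverse_power_coeffs(e, n_terms):
    """First n_terms coefficients of (1 - x)^(-e), e >= 1, as an e-fold Cauchy product."""
    coeffs = [1] * n_terms              # coefficients of 1/(1 - x)
    for _ in range(e - 1):
        # Multiplying by 1/(1 - x) replaces a coefficient sequence by its partial sums.
        running, new = 0, []
        for c in coeffs:
            running += c
            new.append(running)
        coeffs = new
    return coeffs

print(inverse_power_coeffs(3, 6))                                       # [1, 3, 6, 10, 15, 21]
print(inverse_power_coeffs(3, 6) == [comb(n + 2, 2) for n in range(6)])  # True
```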

The set $\mathcal E$ is not closed under products or derivatives. Indeed, $(1-x)^{-1} \in \mathcal E$, but for $e \ge 2$ the series $(1-x)^{-e} = \sum _{n \ge 0} \binom{n+e-1}{e-1} x^n$ is piecewise polynomial–exponential but not piecewise exponential on simple linear subsets of $\mathbb {N}$. Note that $(1-x)^{-2}$ is both the square and the derivative of $(1-x)^{-1}$. We recall some easy facts about the algebraic independence of monomials.

Lemma 3.4 Let $\boldsymbol e_1, \ldots, \boldsymbol e_n \in \mathbb {N}^d$, let $c_1, \ldots, c_n \in K^*$, and let $m_1, \ldots, m_n \in \mathbb {N}_{\ge 1}$. The following statements are equivalent.

(a) The vectors $\boldsymbol e_1, \ldots, \boldsymbol e_n$ are linearly independent over $\mathbb {Z}$.

(b) The monomials ${\boldsymbol x}^{\boldsymbol e_1}, \ldots, {\boldsymbol x}^{\boldsymbol e_n}$ generate a free abelian subgroup of rank n of the unit group of $K[{\boldsymbol x}^{\pm 1}]$.

(c) The monomials ${\boldsymbol x}^{\boldsymbol e_1}, \ldots, {\boldsymbol x}^{\boldsymbol e_n}$ are algebraically independent over K.

(d) The polynomials

$$\begin{align*}(1 - c_1 {\boldsymbol x}^{\boldsymbol e_1})^{m_1}, \ldots, (1 - c_n {\boldsymbol x}^{\boldsymbol e_n})^{m_n} \end{align*}$$

are algebraically independent over K.

Proof

(a) $\,\Leftrightarrow \,$ (b) Clear.

(a) $\,\Leftrightarrow \,$ (c) Consider the family $({{\boldsymbol x}}^{\boldsymbol e_1 k_1 + \cdots + \boldsymbol e_n k_n})_{k_1,\ldots ,k_n \in \mathbb {N}}$ of monomials. The monomials in this family are pairwise distinct, and hence linearly independent over K, if and only if $\boldsymbol e_1, \ldots, \boldsymbol e_n$ are linearly independent. This family consists exactly of the monomials in ${\boldsymbol x}^{\boldsymbol e_1}, \ldots , {\boldsymbol x}^{\boldsymbol e_n}$. Hence its linear independence is equivalent to the algebraic independence of ${\boldsymbol x}^{\boldsymbol e_1}, \ldots , {\boldsymbol x}^{\boldsymbol e_n}$.

(c) $\,\Leftrightarrow \,$ (d) Since $c_1, \ldots, c_n \ne 0$, the substitution $y_i \mapsto 1 - c_i y_i$ defines a K-algebra automorphism of $K[y_1,\ldots ,y_n]$. Let $0 \ne P \in K[y_1,\ldots ,y_n]$. Then $P(1 - c_1 y_1, \ldots , 1 - c_n y_n)$ is again nonzero. Moreover, P vanishes on $(1 - c_1{\boldsymbol x}^{\boldsymbol e_1}, \ldots , 1-c_n{\boldsymbol x}^{\boldsymbol e_n})$ if and only if $P(1 - c_1 y_1, \ldots , 1 - c_n y_n)$ vanishes on $({\boldsymbol x}^{\boldsymbol e_1}, \ldots , {\boldsymbol x}^{\boldsymbol e_n})$. Hence, ${\boldsymbol x}^{\boldsymbol e_1}, \ldots, {\boldsymbol x}^{\boldsymbol e_n}$ are algebraically independent if and only if the polynomials $1 - c_1 {\boldsymbol x}^{\boldsymbol e_1}, \ldots, 1 - c_n {\boldsymbol x}^{\boldsymbol e_n}$ are algebraically independent.

Now, for any choice of polynomials $f_1, \ldots, f_n$, the field $K(f_1,\ldots ,f_n)$ is an algebraic extension of $K(f_1^{m_1},\ldots ,f_n^{m_n})$. Therefore the two fields have the same transcendence degree over K. Moreover, n elements are algebraically independent over K if and only if the field they generate has transcendence degree n over K. Thus, $1 - c_1 {\boldsymbol x}^{\boldsymbol e_1}, \ldots, 1 - c_n {\boldsymbol x}^{\boldsymbol e_n}$ are algebraically independent if and only if $(1 - c_1 {\boldsymbol x}^{\boldsymbol e_1})^{m_1}, \ldots, (1 - c_n {\boldsymbol x}^{\boldsymbol e_n})^{m_n}$ are algebraically independent.
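By the equivalence (a) $\Leftrightarrow$ (c), deciding whether monomials are algebraically independent reduces to a rank computation on their exponent vectors. For integer vectors, linear independence over $\mathbb Z$ is the same as linear independence over $\mathbb Q$. The sketch below (hypothetical helper names) therefore runs Gaussian elimination with exact rationals.

```python
from fractions import Fraction

def rank(vectors):
    """Rank over Q of a list of integer vectors, by Gaussian elimination with exact arithmetic."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def monomials_independent(exponents):
    """Condition (a): the exponent vectors e_1, ..., e_n are linearly independent."""
    return rank(exponents) == len(exponents)

print(monomials_independent([(1, 0), (0, 1)]))   # True: x and y are algebraically independent
print(monomials_independent([(1, 1), (2, 2)]))   # False: (xy)^2 = x^2 y^2
```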

Looking once more at Definition 3.1, in a sequence with piecewise polynomial-exponential coefficients on simple linear sets, for each simple linear set

![]() $\mathcal {S}_i$

, we have

$\mathcal {S}_i$

, we have

$f(\boldsymbol n) = \sum _{j = 1}^{k_i} A_{i,j}(\boldsymbol n) {\boldsymbol \alpha }_{i,j}^{\boldsymbol n}$ for $\boldsymbol n \in \mathcal {S}_i$. We can represent $\mathcal {S}_i$ as $\mathcal {S}_i = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_s \mathbb {N}$ with suitable $\boldsymbol a, \boldsymbol b_1, \ldots, \boldsymbol b_s \in \mathbb {N}^d$, where $\boldsymbol b_1, \ldots, \boldsymbol b_s$ are linearly independent. On $\mathcal {S}_i$, we can therefore also consider representations of the form $f(\boldsymbol n) = \sum _{j=1}^l B_{i,j}(\boldsymbol m) \boldsymbol \beta _{i,j}^{\boldsymbol m}$, where $\boldsymbol m=(m_1,\ldots ,m_s)$ with $\boldsymbol n = \boldsymbol a + m_1 \boldsymbol b_1 + \cdots + m_s \boldsymbol b_s$, and $B_{i,1}, \ldots, B_{i,l} \in K[y_1,\ldots ,y_s]$ and $\boldsymbol \beta _{i,1}, \ldots, \boldsymbol \beta _{i,l} \in (K^*)^s$. One easily sees how the two notions relate.
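The relation between the two kinds of representation can be checked numerically. The sketch below is our own illustration, not the authors' code. It rests on three assumptions:

- $K = \mathbb{Q}$, with exact arithmetic via `fractions.Fraction`.
- Each polynomial is a plain Python callable rather than a symbolic object.
- The helper names (`mono`, `point`, `to_m_form`, `eval_n_form`, `eval_m_form`) and the example $f$, $\boldsymbol a$, $\boldsymbol b_1$ are hypothetical.

The conversion uses $B_j(\boldsymbol m) = \boldsymbol\alpha_j^{\boldsymbol a} A_j(\boldsymbol a + m_1\boldsymbol b_1 + \cdots + m_s\boldsymbol b_s)$ and $\boldsymbol\beta_j = (\boldsymbol\alpha_j^{\boldsymbol b_1}, \ldots, \boldsymbol\alpha_j^{\boldsymbol b_s})$.

```python
from fractions import Fraction


def mono(alpha, n):
    """Multi-index power alpha^n = alpha_1^{n_1} * ... * alpha_k^{n_k}."""
    r = Fraction(1)
    for ak, nk in zip(alpha, n):
        r *= Fraction(ak) ** nk
    return r


def point(a, bs, m):
    """The point a + m_1 b_1 + ... + m_s b_s of N^d."""
    return tuple(a[k] + sum(mi * b[k] for mi, b in zip(m, bs)) for k in range(len(a)))


def to_m_form(terms, a, bs):
    """Turn [(A_j, alpha_j)] (a representation in n) into [(B_j, beta_j)] (in m).

    B_j(m) = alpha_j^a * A_j(a + sum_i m_i b_i) and beta_j = (alpha_j^{b_1}, ..., alpha_j^{b_s}).
    """
    out = []
    for A, alpha in terms:
        beta = tuple(mono(alpha, b) for b in bs)
        # Default arguments bind this term's A and alpha into the closure.
        B = lambda m, A=A, alpha=alpha: mono(alpha, a) * A(point(a, bs, m))
        out.append((B, beta))
    return out


def eval_n_form(terms, n):
    """Evaluate sum_j A_j(n) alpha_j^n."""
    return sum((A(n) * mono(alpha, n) for A, alpha in terms), Fraction(0))


def eval_m_form(terms, m):
    """Evaluate sum_j B_j(m) beta_j^m."""
    return sum((B(m) * mono(beta, m) for B, beta in terms), Fraction(0))


# Hypothetical example with d = 2, s = 1, S = (1, 0) + (1, 2)N and
# f(n) = (n_1 + n_2) 2^{n_1} 3^{n_2} + 5 (1/2)^{n_2}.
a, bs = (1, 0), [(1, 2)]
terms = [
    (lambda n: Fraction(n[0] + n[1]), (2, 3)),
    (lambda n: Fraction(5), (1, Fraction(1, 2))),
]
m_terms = to_m_form(terms, a, bs)
for m1 in range(6):
    assert eval_n_form(terms, point(a, bs, (m1,))) == eval_m_form(m_terms, (m1,))
```

In this example the first term becomes $2(1 + 3m)\cdot 18^m$ and the second becomes $5 \cdot (1/4)^m$. Because $B_j$ is only a callable, the sketch checks values and does not expose the coefficients of $B_j$.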

Lemma 3.5 Let $f\colon \mathbb {N}^d \to K$, and let $\mathcal {S} = \boldsymbol a + \boldsymbol b_1 \mathbb {N} + \cdots + \boldsymbol b_s \mathbb {N}$ be simple linear. Consider the following statements.

- (a) There exist polynomials $A_1, \ldots, A_l \in K[\boldsymbol x]$ and ${\boldsymbol \alpha }_1, \ldots, {\boldsymbol \alpha }_l \in (K^*)^d$ such that

  $$\begin{align*}f(\boldsymbol n) = \sum_{j=1}^l A_j(\boldsymbol n) {\boldsymbol\alpha}_j^{\boldsymbol n} \qquad\text{for}\ {\boldsymbol n} \in \mathcal{S}. \end{align*}$$

- (b) There exist polynomials $B_1, \ldots, B_l \in K[y_1,\ldots ,y_s]$ and ${\boldsymbol \beta }_1, \ldots, {\boldsymbol \beta }_l \in (K^*)^s$ such that

  $$\begin{align*}f(\boldsymbol a + \boldsymbol b_1 m_1 + \cdots + \boldsymbol b_s m_s) = \sum_{j=1}^l B_j(\boldsymbol m) {\boldsymbol\beta}_j^{\boldsymbol m} \qquad\text{for}\ \boldsymbol m=(m_1,\ldots,m_s) \in \mathbb{N}^s. \end{align*}$$

Then (a) $\,\Rightarrow \,$ (b). If K is algebraically closed, then also (b) $\,\Rightarrow \,$ (a).

Proof

(a) $\,\Rightarrow \,$ (b). By straightforward substitution.
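For the reader's convenience, here is the substitution written out. This is our expansion, not text from the original proof. It uses the multi-index convention $\boldsymbol\alpha^{\boldsymbol n} = \alpha_1^{n_1}\cdots\alpha_d^{n_d}$ for $\boldsymbol\alpha = (\alpha_1,\ldots,\alpha_d)$, together with the identities $\boldsymbol\alpha^{\boldsymbol n + \boldsymbol n'} = \boldsymbol\alpha^{\boldsymbol n}\boldsymbol\alpha^{\boldsymbol n'}$ and $\boldsymbol\alpha^{m\boldsymbol b} = (\boldsymbol\alpha^{\boldsymbol b})^m$. For $\boldsymbol m = (m_1,\ldots,m_s) \in \mathbb{N}^s$,

$$\begin{align*}f(\boldsymbol a + \boldsymbol b_1 m_1 + \cdots + \boldsymbol b_s m_s) = \sum_{j=1}^l A_j(\boldsymbol a + \boldsymbol b_1 m_1 + \cdots + \boldsymbol b_s m_s)\, {\boldsymbol\alpha}_j^{\boldsymbol a} \prod_{i=1}^s \bigl({\boldsymbol\alpha}_j^{\boldsymbol b_i}\bigr)^{m_i}. \end{align*}$$

One may therefore take $B_j(y_1,\ldots,y_s) = {\boldsymbol\alpha}_j^{\boldsymbol a} A_j(\boldsymbol a + \boldsymbol b_1 y_1 + \cdots + \boldsymbol b_s y_s)$. This is a polynomial because $A_j$ is a polynomial and its argument is affine in $y_1,\ldots,y_s$. One may also take ${\boldsymbol\beta}_j = ({\boldsymbol\alpha}_j^{\boldsymbol b_1}, \ldots, {\boldsymbol\alpha}_j^{\boldsymbol b_s})$, which lies in $(K^*)^s$ since ${\boldsymbol\alpha}_j \in (K^*)^d$.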

(b) $\,\Rightarrow \,$ (a). Let $\boldsymbol n=(n_1,\ldots ,n_d) \in \mathcal {S}$. Since $\boldsymbol b_1, \ldots, \boldsymbol b_s$ are linearly independent, there exist unique $m_1, \ldots, m_s \in \mathbb {N}$ with $\boldsymbol n = \boldsymbol a + \boldsymbol b_1 m_1 + \cdots + \boldsymbol b_s m_s$. Solving this linear system over $\mathbb {Q}$, we find $N \in \mathbb {N}_{\ge 1}$ and, for every $i \in [1,s]$ and $j \in [1,d]$, integers $p_i$ and $q_{i,j}$ such that

$$\begin{align*}m_i = p_i/N + \sum_{j=1}^d n_j q_{i,j}/N \end{align*}$$

for all $\boldsymbol n=(n_1,\ldots ,n_d) \in \mathcal {S}$ and $\boldsymbol m=(m_1,\ldots ,m_s) \in \mathbb {N}^s$ with $\boldsymbol n = \boldsymbol a + \boldsymbol b_1 m_1 + \cdots + \boldsymbol b_s m_s$.
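The step "solving this linear system over $\mathbb{Q}$" can be made concrete. The sketch below is our own illustration and assumes Python 3.9+ for `math.lcm`. It works as follows:

1. Greedily pick $s$ coordinates on which the vectors $\boldsymbol b_1, \ldots, \boldsymbol b_s$ are independent.
2. Invert the corresponding $s \times s$ integer submatrix over $\mathbb{Q}$ with exact fractions.
3. Take $N$ as the least common multiple of the denominators.

The names `rank`, `invert` and `coordinate_formula` are hypothetical. The integers $N$, $p_i$, $q_{i,j}$ are not unique. Setting $q_{i,j} = 0$ for the unused coordinates $j$ is just one convenient choice.

```python
from fractions import Fraction
from math import lcm


def rank(rows):
    """Rank over Q of a list of integer row vectors (Gaussian elimination)."""
    A = [[Fraction(x) for x in r] for r in rows]
    rk = 0
    ncols = len(A[0]) if A else 0
    for c in range(ncols):
        piv = next((r for r in range(rk, len(A)) if A[r][c] != 0), None)
        if piv is None:
            continue
        A[rk], A[piv] = A[piv], A[rk]
        for r in range(len(A)):
            if r != rk and A[r][c] != 0:
                f = A[r][c] / A[rk][c]
                A[r] = [x - f * y for x, y in zip(A[r], A[rk])]
        rk += 1
    return rk


def invert(M):
    """Inverse over Q of an invertible square matrix (Gauss-Jordan elimination)."""
    k = len(M)
    A = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(k)]
         for i, row in enumerate(M)]
    for c in range(k):
        piv = next(r for r in range(c, k) if A[r][c] != 0)  # exists since M is invertible
        A[c], A[piv] = A[piv], A[c]
        inv = 1 / A[c][c]
        A[c] = [x * inv for x in A[c]]
        for r in range(k):
            if r != c and A[r][c] != 0:
                f = A[r][c]
                A[r] = [x - f * y for x, y in zip(A[r], A[c])]
    return [row[k:] for row in A]


def coordinate_formula(a, bs):
    """Return (N, p, q) with m_i = (p_i + sum_j n_j q[i][j]) / N whenever n = a + sum_i m_i b_i."""
    d, s = len(a), len(bs)
    rows = [[b[j] for b in bs] for j in range(d)]  # row j of the d x s matrix (b_1 | ... | b_s)
    J = []  # coordinates on which b_1, ..., b_s stay independent
    for j in range(d):
        if rank([rows[t] for t in J + [j]]) == len(J) + 1:
            J.append(j)
    if len(J) != s:
        raise ValueError("b_1, ..., b_s are not linearly independent")
    C = invert([rows[j] for j in J])  # m = C (n_J - a_J)
    N = lcm(*(x.denominator for row in C for x in row))
    q = [[0] * d for _ in range(s)]
    for i in range(s):
        for col, j in enumerate(J):
            q[i][j] = int(N * C[i][col])
    p = [-sum(q[i][j] * a[j] for j in range(d)) for i in range(s)]
    return N, p, q


# Hypothetical example: a = (1, 2), b_1 = (2, 1), b_2 = (1, 3).
a, bs = (1, 2), [(2, 1), (1, 3)]
N, p, q = coordinate_formula(a, bs)
for m in [(0, 0), (2, 1), (3, 5)]:
    n = [a[j] + sum(mi * b[j] for mi, b in zip(m, bs)) for j in range(len(a))]
    recovered = [Fraction(p[i] + sum(n[j] * q[i][j] for j in range(len(a))), N)
                 for i in range(len(bs))]
    assert recovered == list(m)
```

In this example the submatrix has determinant $5$. The sketch returns $N = 5$, $p = (-1, -3)$ and $q = \begin{pmatrix} 3 & -1 \\ -1 & 2 \end{pmatrix}$.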

Let $\nu \in [1,l]$. Write ${\boldsymbol \beta }_\nu =(\beta _{\nu ,1},\ldots ,\beta _{\nu ,s})$ and, using that K is algebraically closed, pick $\gamma _{\nu ,i} \in K^*$ with $\gamma _{\nu ,i}^N = \beta _{\nu ,i}$ for $i \in [1,s]$. Set