Precision psychiatry seeks to provide the right treatment to the right patient at the right time, using algorithms to predict disease trajectory or treatment response. Although promising for improving mental healthcare and intervention efficacy, reliance on predicted outcomes over current presentations raises potential hazards. As an example, justice is one of the four major principles of bioethics (the other three being beneficence, non-maleficence and autonomy) and it refers to a fair and equitable treatment of patients and distribution of resources.Reference Beauchamp and Childress1 Algorithmic fairness (also referred to as fairness in this paper) is a principle linked to justice in bioethics and it can be operationalised by a number of different metrics.Reference Verma and Rubin2 A prediction algorithm can be considered fair for a demographic attribute if the predictions do not systematically disadvantage any subgroups regarding the respective demographic attribute (including but not limited to gender, education, age, race, ethnicity and socioeconomic status). Evidence so far shows that biases in medical algorithms can lead to underdiagnosis and disparate allocation of health resources in different subpopulations.Reference Seyyed-Kalantari, Zhang, McDermott, Chen and Ghassemi3,Reference Obermeyer, Powers, Vogeli and Mullainathan4 To prevent the emergence, perpetuation or reinforcement of health disparities through the use of prediction models, fairness considerations should be incorporated into their development and implementation.

Furthermore, most medical decisions are made by clinicians alone, who can also be subject to biases.Reference Schwartz and Blankenship5,Reference Anglin and Malaspina6 When assessing fairness of prediction models, measuring biases in clinicians’ judgements can serve as an important benchmark against which model fairness can be more pragmatically evaluated. For the ethical and fair implementation of precision psychiatry approaches, a comprehensive understanding of clinical decision-making including all agents involved in the process is crucial.

Psychosis risk states and prediction models

Psychotic disorders remain one of the most substantial contributors to global burden of disease.Reference Vos, Lim, Abbafati, Abbas, Abbasi and Abbasifard7 Unfortunately, existing treatment options do not sufficiently alleviate clinical symptoms once psychosis is fully developed.Reference Catalan, Richter, Salazar de Pablo, Vaquerizo-Serrano, Mancebo and Pedruzo8 Thus, research efforts have focused on an indicated preventive approach to identify and treat people at clinical high risk (CHR) for psychosis and to avoid a deleterious disease course.Reference Oliver, Reilly, Baccaredda Boy, Petros, Davies and Borgwardt9,Reference Salazar de Pablo, Radua, Pereira, Bonoldi, Arienti and Besana10 Robust evidence suggests that individuals at CHR have a probability of approximately 25% of developing psychosis within 2 to 3 years.Reference Salazar de Pablo, Radua, Pereira, Bonoldi, Arienti and Besana10 Moreover, they often already suffer from or develop psychiatric disorders other than psychosis,Reference Beck, Andreou, Studerus, Heitz, Ittig and Leanza11 experience cognitive impairmentReference Catalan, Salazar de Pablo, Aymerich, Damiani, Sordi and Radua12 or retain CHR symptoms.Reference Beck, Andreou, Studerus, Heitz, Ittig and Leanza11,Reference Addington, Cornblatt, Cadenhead, Cannon, McGlashan and Perkins13 Thus, individuals at CHR are a heterogeneous population regarding their disease trajectory.Reference Salazar de Pablo, Besana, Arienti, Catalan, Vaquerizo-Serrano and Cabras14

A multitude of models for prediction of psychosis in CHR populations have been developed in recent years.Reference Sanfelici, Dwyer, Antonucci and Koutsouleris15 Moreover, recent studies have explored the potential of machine learning to predict further important clinical end-points for people on the psychosis spectrum, such as the development of poor psychosocial functioning.Reference Kambeitz-Ilankovic, Vinogradov, Wenzel, Fisher, Haas and Betz16,Reference Koutsouleris, Kambeitz-Ilankovic, Ruhrmann, Rosen, Ruef and Dwyer17 To use such models in clinical practice, it is of utmost importance to ensure both high robustness and generalisability – a prerequisite that has only recently started to be addressed.Reference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18,Reference Koutsouleris, Worthington, Dwyer, Kambeitz-Ilankovic, Sanfelici and Fusar-Poli19 An equally important prerequisite for the deployment of prediction algorithms in clinical settings is their compliance with ethical principles. Although previous work has focused on the use of algorithms in clinical practice on a theoretical level, addressing issues such as trust,Reference Gille, Jobin and Ienca20 privacy,Reference Jacobson, Bentley, Walton, Wang, Fortgang and Millner21 transparencyReference Jacobson, Bentley, Walton, Wang, Fortgang and Millner21,Reference Starke, Schmidt, De Clercq and Elger22 and fairness,Reference Xu, Xiao, Wang, Ning, Shenkman and Bian23 the field so far lacks empirical investigations into the ethics of prediction algorithms.

In this study, we made a detailed investigation of published algorithms which were generated to predict clinical outcomes related to psychosis in individuals at CHR for psychosis or with recent-onset depression – of which 11 were developed to predict transition to psychosis and two to predict psychosocial outcome. The predictions of these algorithms were investigated with respect to multiple fairness criteria and compared with predictions of clinicians.

Method

Using data from the PRONIA cohort (https://cordis.europa.eu/project/id/602152), we evaluated the fairness of psychosis prediction models published in peer-reviewed journals. PRONIA (Prognostic Tools for Early Psychosis Management) is an EU-funded, naturalistic, multisite study conducted in research sites across Europe and Australia. Over 1700 participants (patients at CHR for psychosis, with recent-onset psychosis or with recent-onset depression and healthy controls) completed a comprehensive clinical assessment and neuropsychological testing, provided blood markers and underwent a multimodal neuroimaging protocol. All participants were followed up for 18 months to assess multiple outcome parameters. Further demographic and clinical characteristics of the sample have been previously published.Reference Koutsouleris, Kambeitz-Ilankovic, Ruhrmann, Rosen, Ruef and Dwyer17,Reference Koutsouleris, Dwyer, Degenhardt, Maj, Urquijo-Castro and Sanfelici24 Written informed consent was obtained from all participants. The study was registered with the German Clinical Trials Register (DRKS00005042) and approved by the local research ethics committees in each location.

We investigated algorithms for the prediction of two clinically highly relevant outcomes: transition to psychosis and development of poor psychosocial functioning. In a first step, previously published clinical prediction models for the transition to psychosis were tested in the PRONIA data-set as an independent test sample and predictions were evaluated with respect to fairness criteria explained below. In this analysis, we focused on a subset of six predictive models with an area under the curve (AUC) of 65% or higher based on a previous analysisReference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 and also included a model that was validated independently.Reference Koutsouleris, Worthington, Dwyer, Kambeitz-Ilankovic, Sanfelici and Fusar-Poli19,Reference Cannon, Yu, Addington, Bearden, Cadenhead and Cornblatt25 In addition, we investigated fairness in prediction models for transition to psychosis based on further data modalities (clinical/neurocognitive, neuroimaging and genetic data as well as their combination) developed in the PRONIA study using data relating to CHR for psychosis and recent-onset depression.Reference Koutsouleris, Dwyer, Degenhardt, Maj, Urquijo-Castro and Sanfelici24 In a second step, we analysed predictive models of social and role functioning outcomes in individuals at CHR for psychosis or with recent-onset depression, as published by Koutsouleris et al.Reference Koutsouleris, Kambeitz-Ilankovic, Ruhrmann, Rosen, Ruef and Dwyer17 Social and role functioning were measured using the Global Functioning: Social Scale and the Global Functioning: Role Scale;Reference Cornblatt, Auther, Niendam, Smith, Zinberg and Bearden26 for the analyses in our study, scores >7 points were taken as indicators of good functioning, whereas ≤7 points indicated poor functioning (7 points indicating mild impairment in social or role functioning, 8 points indicating good social or role functioning: for further details see the Supplementary material, available online at https://dx.doi.org/10.1192/bjp.2023.141).Reference Cornblatt, Auther, Niendam, Smith, Zinberg and Bearden26,Reference Carrión, McLaughlin, Goldberg, Auther, Olsen and Olvet27 In all analyses, we used the performance of clinical raters as a pragmatic benchmark against which the relative benefits and hazards of model bias can be assessed.

Fairness was investigated with respect to two relevant sensitive attributes: gender and educational attainment. Education was binarised to higher and lower educational level using the median of years of education in the sample as a cut-off. For each prediction model, we calculated the accuracy, balanced accuracy, true positive rate, true negative rate, positive predictive value and negative predictive value for all sensitive attributes. Owing to the small number of participants of non-European ethnicity in the PRONIA sample, analysing fairness for the sensitive attributes of race and ethnicity was not possible.
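The subgroup computation described above can be illustrated with a minimal sketch. It is written in Python for illustration only (the analyses in this study were run in R), and the function names `binarise_by_median` and `subgroup_metrics` are hypothetical. The sketch assumes binary labels with 1 as the positive class and assigns participants at exactly the median to the lower group; the handling of median ties in the study is not stated, so that choice is an assumption.

```python
import numpy as np


def binarise_by_median(years_of_education):
    """Return True for 'higher' and False for 'lower' educational level.

    Assumption: values equal to the sample median fall in the lower group.
    """
    years = np.asarray(years_of_education, dtype=float)
    return years > np.median(years)


def subgroup_metrics(y_true, y_pred):
    """Performance metrics for one subgroup from binary outcomes and predictions."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)

    # Cells of the confusion matrix.
    tp = int(np.sum(y_true & y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))

    def ratio(numerator, denominator):
        # Undefined rates are reported as NaN instead of raising an error.
        return numerator / denominator if denominator > 0 else float("nan")

    tpr = ratio(tp, tp + fn)
    tnr = ratio(tn, tn + fp)
    return {
        "ACC": ratio(tp + tn, tp + tn + fp + fn),
        "BAC": (tpr + tnr) / 2,  # balanced accuracy
        "TPR": tpr,
        "TNR": tnr,
        "PPV": ratio(tp, tp + fp),
        "NPV": ratio(tn, tn + fn),
    }
```

In use, `subgroup_metrics` would be called once per subgroup, for example on the rows where `binarise_by_median` returns True and again on the rows where it returns False.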

We chose four fairness metrics that are relevant in the context of outcome prediction in people at CHR for psychosis. For each sensitive attribute, we tested whether all subgroups exhibit: (a) equal balanced accuracy (accuracy equality), (b) equal positive predictive value (predictive parity), (c) equal false-positive rate (false-positive error rate (FPER) balance) and (d) equal false-negative rate (false-negative error rate (FNER) balance).Reference Verma and Rubin2 Owing to class imbalance in outcome variables (transition to psychosis, poor functional outcome), we used balanced accuracy as a measure of accuracy equality. The performance measures (accuracy, true-positive rate (TPR), false-positive rate (FPR), false-negative rate (FNR), positive predictive value (PPV)) of one group were taken as the reference values of the respective demographic attributes. The fairness metrics were calculated as the ratio of each attribute's group metric to the reference group metric. Therefore, a value of 1 indicates absolute fairness, and the more a value deviates from 1, the more pronounced the disparities. Values between 0.8 and 1.25, indicating that a subgroup's metric values were at least 80% of the subgroup with the highest metric values, were considered as fulfilling the fairness criteria according to the so-called four-fifths rule.Reference Bobko, Roth and Martocchio28
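As an illustration of the ratio-based criteria above, the following Python sketch computes the four fairness ratios against a reference group and checks them against the 0.8 to 1.25 range. The helper names (`fairness_ratio`, `within_four_fifths`, `fairness_report`) and the dictionary layout are assumptions made for this example, not the code used in the study. The false-positive and false-negative rates are taken as inputs and can be derived as FPR = 1 − TNR and FNR = 1 − TPR.

```python
import math


def fairness_ratio(group_value, reference_value):
    """Ratio of a subgroup's metric to the reference group's metric.

    Returns NaN when the ratio is undefined, e.g. when the reference
    group has a rate of 0 (no false negatives in the reference group).
    """
    if math.isnan(group_value) or math.isnan(reference_value) or reference_value == 0:
        return float("nan")
    return group_value / reference_value


def within_four_fifths(ratio, lower=0.8, upper=1.25):
    """True if the ratio lies in the permissible range of the four-fifths rule."""
    return (not math.isnan(ratio)) and lower <= ratio <= upper


def fairness_report(group, reference):
    """Map each fairness criterion to (ratio, fulfils four-fifths rule).

    `group` and `reference` are dicts with the keys BAC, PPV, FPR and FNR.
    """
    criteria = {
        "accuracy_equality": "BAC",
        "predictive_parity": "PPV",
        "FPER_balance": "FPR",
        "FNER_balance": "FNR",
    }
    report = {}
    for criterion, metric in criteria.items():
        ratio = fairness_ratio(group[metric], reference[metric])
        report[criterion] = (ratio, within_four_fifths(ratio))
    return report
```

For example, false-positive rates of 27.1% in one subgroup and 14.3% in the reference group would give an FPER ratio of about 1.9, which lies outside the permissible range.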

We used permutation testing to assess whether prediction models showed statistically significant deviations from fair predictions.Reference Ojala and Garriga29 Fairness metrics were computed in 10 000 samples with randomly permuted sensitive attributes to create a null distribution. Separate permutation tests were performed for each fairness criterion. Throughout the study, Bonferroni-corrected P-values below 0.05 were considered significant.
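A permutation test of this kind could be sketched as follows. This is a Python illustration under stated assumptions, not the R code used in the study: the function names are hypothetical, FPER balance is used as the example criterion, and the test is taken to be two-sided on the absolute log ratio, because the exact test statistic is not specified in the text. Bonferroni correction is not included.

```python
import numpy as np


def fpr(y_true, y_pred):
    """False-positive rate; NaN if the subgroup contains no negatives."""
    negatives = ~y_true
    if not negatives.any():
        return np.nan
    return np.sum(y_pred & negatives) / np.sum(negatives)


def fpr_ratio(y_true, y_pred, is_reference):
    """FPER balance: FPR of the non-reference group over FPR of the reference group."""
    ref = fpr(y_true[is_reference], y_pred[is_reference])
    grp = fpr(y_true[~is_reference], y_pred[~is_reference])
    return grp / ref if ref > 0 else np.nan


def permutation_p_value(y_true, y_pred, is_reference, metric_ratio,
                        n_permutations=10_000, seed=0):
    """P-value for the deviation of a fairness ratio from 1.

    The sensitive attribute is shuffled while outcomes and predictions
    stay fixed, which gives the null distribution of the ratio.
    """
    rng = np.random.default_rng(seed)
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    is_reference = np.asarray(is_reference, dtype=bool)

    observed = metric_ratio(y_true, y_pred, is_reference)
    if np.isnan(observed):
        return float("nan")

    null = np.array([
        metric_ratio(y_true, y_pred, rng.permutation(is_reference))
        for _ in range(n_permutations)
    ])
    null = null[np.isfinite(null)]  # drop permutations with an undefined ratio

    # Assumption: two-sided test on |log ratio|; a ratio of 0 counts as extreme.
    with np.errstate(divide="ignore"):
        extreme = np.abs(np.log(null)) >= np.abs(np.log(observed))
    return float((1 + extreme.sum()) / (1 + null.size))
```

Adding 1 to both numerator and denominator keeps the estimated P-value above zero, so with 10 000 permutations the smallest attainable value is roughly 0.0001.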

All statistical analyses were performed in R (R-Studio Version 1.3.1093, R Version 4.0.4, for Windows).

Results

Table 1 summarises key characteristics of the PRONIA sample used for the analyses. Table 2 lists all performance and fairness metrics for all models and sensitive attributes. There were no significant differences in psychosocial outcome or rates of transition to psychosis between male and female participants. Transition rates in participants with higher and lower educational level at baseline did not significantly differ either. However, participants with lower educational level showed a significantly higher rate of poor outcome in psychosocial functioning (CHR sample: poor role functioning in 54% of participants with higher educational level versus 75% in those with lower educational level (χ2 = 7.737, P = 0.003); poor social functioning in 45% of participants with higher educational level versus 69% in those with lower educational level (χ2 = 10.316, P < 0.001)).

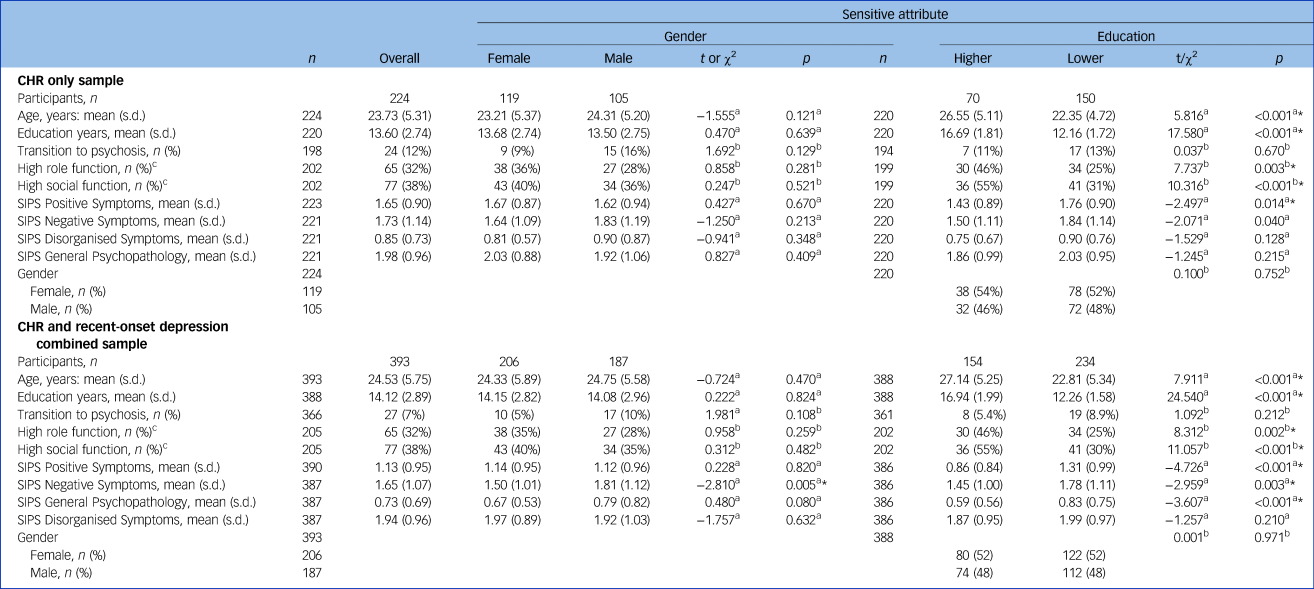

Table 1 Key characteristics of the study sample

CHR, clinical high risk for psychosis; SIPS, Structured Interview for Psychosis-Risk Syndromes.

a. Welch two-sample t-test.

b. Pearson's χ2-test.

c. Global Functioning: Social Scale and Global Functioning: Role Scale; cut-off: 7.

* Significance level p < 0.05.

Table 2 Performance metrics and fairness indices of psychosis transition and functional outcome prediction algorithms

TP, true positive; TN, true negative; FP, false positive; FN, false negative; ACC, accuracy; BAC, balanced accuracy; TPR, true-positive rate; TNR, true-negative rate; PPV, positive predictive value; NPV, negative predictive value; FPER, false-positive error rate; FNER, false-negative error rate; CHR, clinical high risk for psychosis; PRS, polygenic risk score; MRI, magnetic resonance imaging; NAPLS, North American Prodrome Longitudinal Study risk calculator.

* Bonferroni corrected P < 0.05. All fairness metrics are stated as metric value (P-value).

The investigated algorithms were often not within the predefined permissible fairness range for the sensitive attributes of gender (27 out of 64 criteria) and education (34 out of 64 criteria). For the four fairness criteria that were analysed according to the two sensitive attributes on 13 algorithms and clinicians’ predictions, statistically significant fairness violations emerged for FPER in only three models and FNER in only one model. These significant deviations all emerged for the sensitive attribute education and consistently involved assigning more favourable outcomes to participants with higher educational level; we did not find any statistically significant fairness violations for gender.

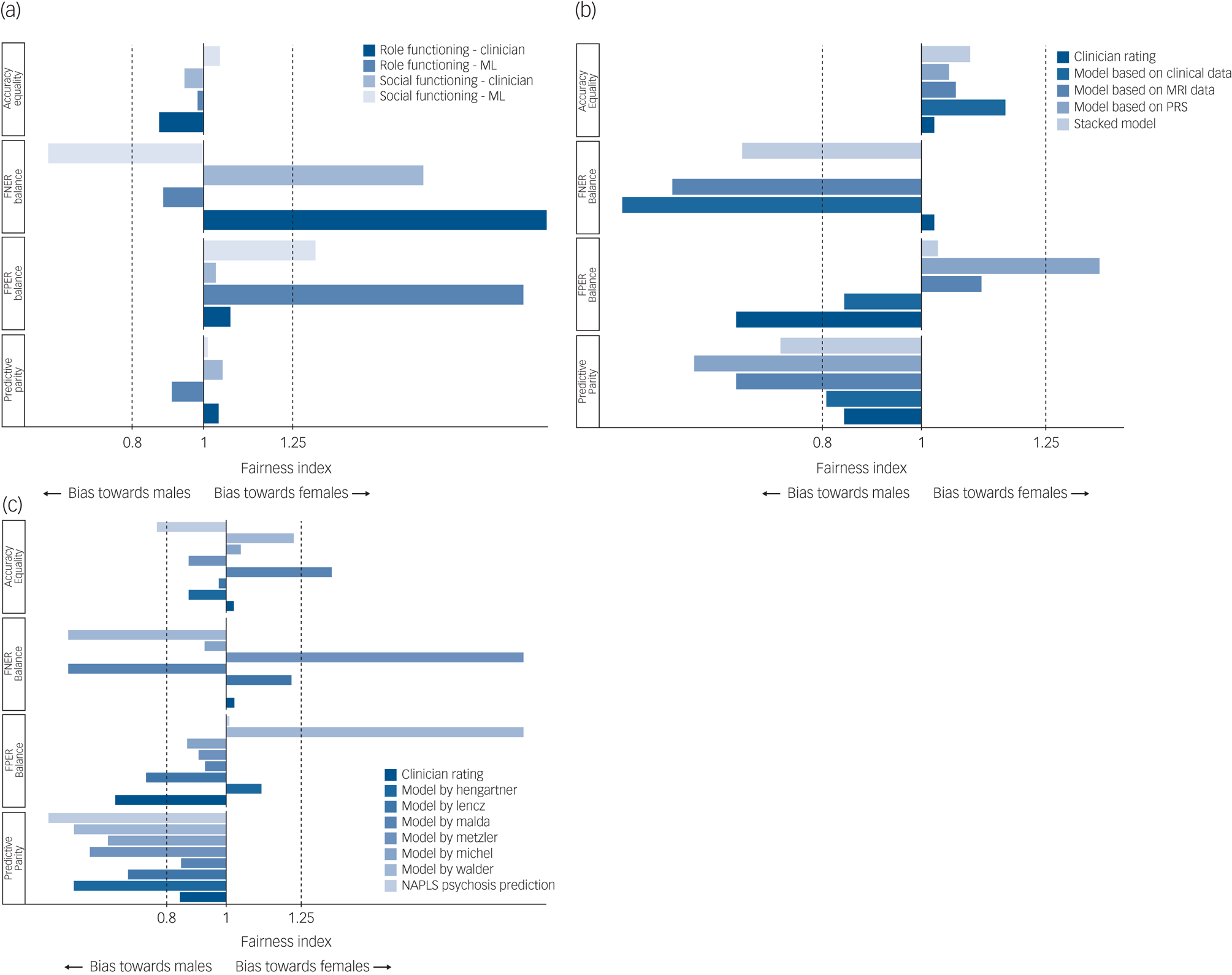

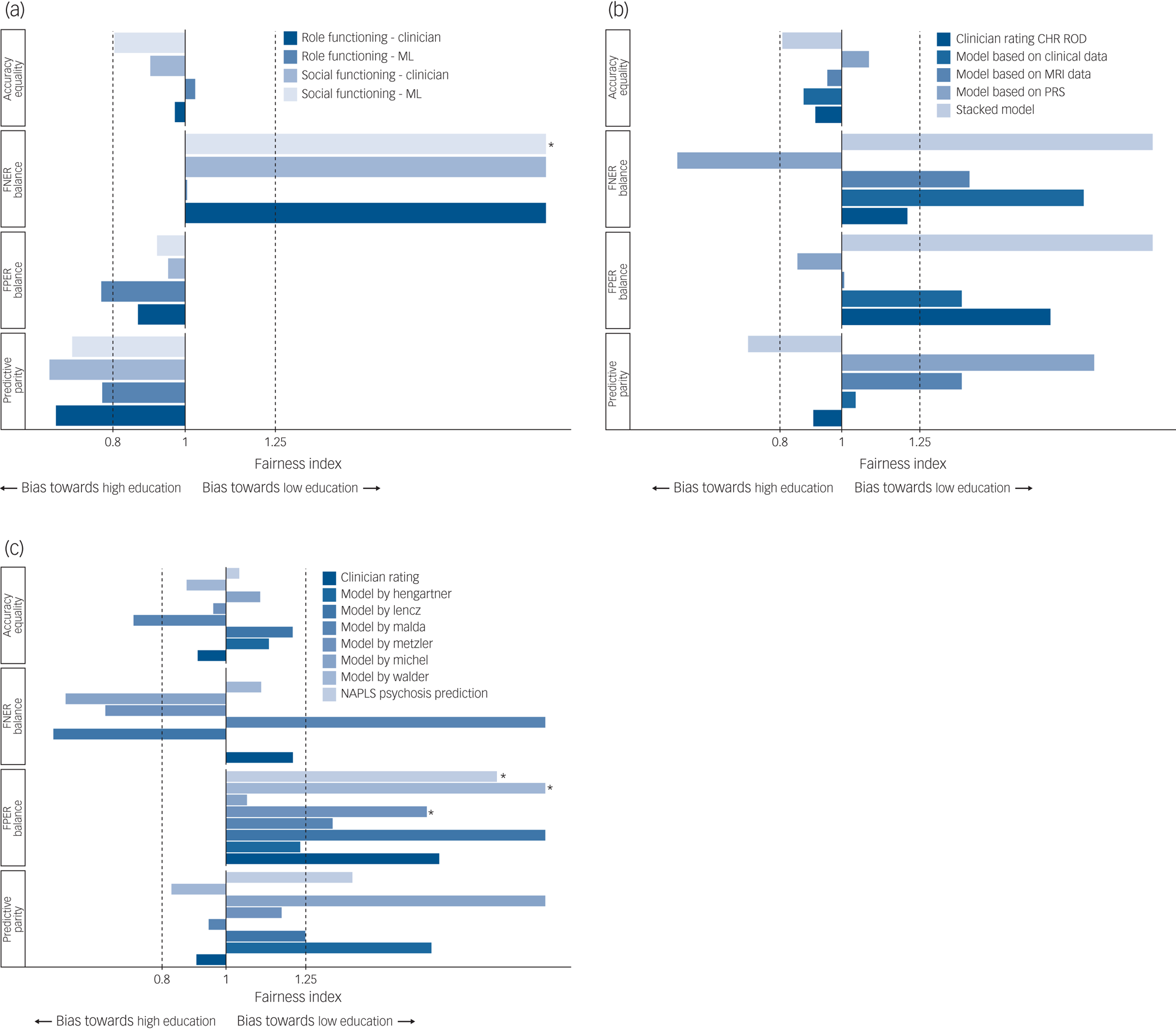

All performance and fairness metrics are presented in Table 2. Figure 1 depicts fairness metrics for the sensitive attribute gender, Fig. 2 for the sensitive attribute education.

Fig. 1 Fairness of prediction models validated on PRONIA data for the sensitive attribute ‘gender’, with males as the reference group.

(a) The fairness of predictions of functional outcome. (b) and (c) The fairness of predictions of transition to psychosis. The continuous line at x = 1 shows absolute fairness and the dashed lines at x = 0.8 and x = 1.25 cover the permissible fairness range according to the four-fifths rule. Values higher than 2 were replaced with x = 2 in the figures. The false-negative error rate (FNER) balance could not be calculated for the model by Hengartner, the North American Prodrome Longitudinal Study (NAPLS) risk calculator and polygenic risk score (PRS) model because there were 0 false negatives in the reference group. ML, machine learning; MRI, magnetic resonance imaging.

Fig. 2 Fairness of prediction models validated on PRONIA data for the sensitive attribute ‘education’, binarised high/low, with participants with a higher educational level as the reference group.

(a) The fairness of predictions of functional outcome. (b) and (c) The fairness of predictions of transition to psychosis. The continuous line at x = 1 shows absolute fairness and the dotted lines at x = 0.8 and at x = 1.25 cover the permissible fairness range according to the four-fifths rule. Values higher than 2 were replaced with x = 2 in the figures. The false-negative error rate (FNER) balance could not be calculated for the model by Hengartner and the North American Prodrome Longitudinal Study (NAPLS) risk calculator as there were 0 false negatives in the reference group. *Bonferroni corrected P < 0.05. ML, machine learning; CHR, clinical high risk for psychosis; ROD, recent-onset depression; MRI, magnetic resonance imaging.

Fairness of models for the prediction of transition to psychosis

In a first analysis, we investigated six previously published prediction models for the transition to psychosis that showed the highest accuracy when applied to the PRONIA data-set as an independent test sampleReference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 and the North American Prodrome Longitudinal Study (NAPLS) risk calculator, which has been validated for the prediction of transition to psychosis in the PRONIA data-set.Reference Koutsouleris, Worthington, Dwyer, Kambeitz-Ilankovic, Sanfelici and Fusar-Poli19,Reference Cannon, Yu, Addington, Bearden, Cadenhead and Cornblatt25 Features used in prediction models can be found in Supplementary Table 1. Five out of seven examined models fulfilled the criterion of accuracy equality for both gender and education, with accuracy equality ratios in the permissible range and no significant deviations of accuracy in any of the sensitive attributes. The four-fifths rule was violated by the prediction model of Malda et al (2019),Reference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 which had a higher accuracy for females and participants with higher educational level, and by the NAPLS risk calculator, which had a higher accuracy for males. On predictive parity, we observed a systematic deviation in all seven prediction algorithms and clinicians’ predictions towards higher positive predictive values for males, although none reached statistical significance. 
All prediction models, including clinicians’ predictions, showed a higher false-positive rate for participants with lower educational level, with three models showing statistically significant deviations (model by Metzler:Reference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 FPER = 1.630, P = 0.003; model by Walder:Reference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 FPER = 2.410, P = 0.004; NAPLS risk calculator:Reference Cannon, Yu, Addington, Bearden, Cadenhead and Cornblatt25 FPER = 1.850, P < 0.001).

Overall, there was no systematic difference in bias between clinicians and algorithms.

In a second analysis, we investigated multimodal models for the prediction of transition to psychosis based on clinical, neuroimaging and genetic data and a stacked model based on the listed data modalities, which were previously developed in the PRONIA data-set. All four models fulfilled accuracy equality for both sensitive attributes, with a slight systematic deviation for higher balanced accuracies for females, whereas the positive predictive value was higher for males in all models and in clinicians’ predictions. In comparison, clinicians’ ratings fulfilled accuracy equality, FNER balance and predictive parity, while violating FPER balance, with higher false-positive rates in males.

For education, all machine learning models and the clinicians’ ratings fulfilled accuracy equality. Positive predictive value was lower for participants with higher educational level in clinical/neuropsychological, neuroimaging- and genetics-based models, whereas it was higher for those with higher educational level in the stacked model.

Fairness of models for the prediction of psychosocial functioning

In a third analysis, we investigated fairness criteria in a model for the prediction of psychosocial outcomes which was previously developed in the PRONIA data-set.Reference Koutsouleris, Kambeitz-Ilankovic, Ruhrmann, Rosen, Ruef and Dwyer17 There was no violation of accuracy equality for any of the tested sensitive attributes. Clinicians assigned more favourable outcomes to males, whereas machine learning-based predictions fulfilled three of four fairness criteria, violating only predictive equality. False-positive rates were higher for females (role functioning: FPR = 27.1% for females versus 14.3% for males; social functioning: FPR = 29.2% for females versus 22.2% for males).

For education, neither the clinicians’ ratings nor the machine learning models fulfilled the four-fifths rule for all fairness criteria. Both clinicians’ and machine learning predictions had a higher positive predictive value for participants with higher educational level for both role and social functioning. False-negative rates were higher for participants with lower educational level, indicating that the rate of those who were incorrectly assigned as poor outcome was higher in people with lower educational level (social functioning machine learning model: FNER = 7.030, P = 0.006). Algorithmic predictions were not more systematically biased than clinicians’ predictions.

Discussion

In the present study, we empirically investigated algorithmic fairness on a range of models for the prediction of outcome in people at clinical high risk for psychosis. A substantial number of investigated algorithms were not within the predefined permissible fairness range for the sensitive attributes of gender and years of education.

Educational bias in models

Overall, apart from algorithms based on neuroimaging data and polygenic risk score (PRS), there was a general tendency to predict more favourable outcomes for participants with higher educational level at baseline. In the majority of examined prediction models, participants with lower educational level were more often falsely predicted to have a transition to psychosis or a poor functional outcome than those with more education: in 6 out of 11 prediction models for transition to psychosis, both accuracy and balanced accuracy were higher for participants with higher educational level, whereas with the exception of the PRS-based prediction model, all prediction models for transition to psychosis had a higher FPR in participants with lower educational level. Clinicians similarly had a higher rate of false positives for participants with lower educational level. Given that the analysed models were masked to years of education, one explanation for these findings could be that they included features directly or indirectly associated with education years. 
Supporting this hypothesis, the three significantly unfair models included education-related features: the model by Metzler et al included verbal IQ,Reference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 the model by Walder et al included scholastic adjustment in childhood and functioningReference Rosen, Betz, Schultze-Lutter, Chisholm, Haidl and Kambeitz-Ilankovic18 and the NAPLS risk calculator included scores from two neuropsychological tests (the Hopkins Verbal Learning Test – Revised total raw score, testing verbal learning and memory, and the Brief Assessment of Cognition in Schizophrenia symbol coding raw score, testing processing speed).Reference Cannon, Yu, Addington, Bearden, Cadenhead and Cornblatt25 Furthermore, the models based on polygenic risk score and neuroimaging data – two data modalities that are likely to be less associated with education years than neuropsychological data – were the only models without a bias towards participants with higher educational level: higher positive predictive values in participants with lower educational level were revealed in both the PRS and magnetic resonance imaging (MRI) models, and the PRS model was the only model with higher false-negative and false-positive rates as well as lower accuracy for participants with higher educational level. Another hypothetical explanation for the discrepancies in prognostic performance due to educational level is that medical research does not sufficiently represent people with lower educational level and the consequent lack of sufficient data leads to worse algorithmic performance.

Similar to algorithmic educational bias, clinicians also assigned more favourable outcomes to participants with higher educational level. Clinicians’ educational bias is a phenomenon that has not been explored in this context before. There are studies showing bias in clinicians’ behaviour in medicine, with the focus so far being on factors such as race, ethnicity, gender, age and weight (bias against obese patients).Reference Chapman, Kaatz and Carnes30 Specifically, in psychiatry, research on bias-related misdiagnosis shows that previous experiences and certain prototypes might bias psychiatrists’ clinical decision-making while simultaneously being a source of expertise.Reference Adeponle, Groleau and Kirmayer31 In psychiatry, bias-related misdiagnoses have been examined for race,Reference Adeponle, Groleau and Kirmayer31 sexual orientationReference Barr, Roberts and Thakkar32 and ethnicity,Reference Anglin and Malaspina6 although education-related bias has not been explored to date. A bias associating more favourable outcomes to patients with higher educational level could be caused by confirmation bias of clinicians: because educational attainment is one of the most relevant environmental risk factors for psychosis,Reference Dickson, Hedges, Ma, Cullen, MacCabe and Kempton33 clinicians might be more likely to associate higher educational level with lower risks of transition to psychosis or poor psychosocial outcome. To the best of our knowledge, our study is the first to show a tendency towards educational bias in psychiatry.

Gender-related bias

In all prediction models for transition to psychosis, positive predictive values were consistently higher for males, and in 9 out of 11 models for transition to psychosis, the deviation from absolute fairness in positive predictive value transgressed the predefined permissible range of fairness. The clinicians’ predictions also had a higher positive predictive value for males, remaining in the permissible fairness range. Furthermore, clinicians more often falsely predicted poor functional outcome for females, whereas machine learning models more often falsely predicted good functional outcome for females. The male and female patients in the PRONIA data-set did not significantly differ in their key characteristics, including age, years of education and risk symptoms on the Structured Interview for Psychosis-Risk Syndromes (SIPS).

Clinician versus algorithmic bias

Our results suggest that algorithmic predictions were not systematically more biased than clinicians’ predictions. Of 11 algorithms that predicted transition to psychosis, only one (Walder) was less fair than clinicians’ predictions on all fairness criteria for the sensitive attribute gender, and only one (the stacked model) was less fair on all fairness criteria for the sensitive attribute education. Although clinicians were less fair regarding false-positive predictions for the sensitive attribute gender, they made fairer predictions from an overall accuracy point of view. Similarly, although clinicians were fairer regarding false-negative predictions for the sensitive attribute education, 6 out of 11 prediction models were fairer on overall accuracy. Thus, our data did not show any sign of generalised systematic bias of algorithms in comparison with clinicians’ predictions.

Algorithmic fairness is a novel field of research in medicine and, so far, investigations of fairness of algorithmic predictions without benchmarking them to standard procedures found biased decisions disadvantageous to specific populations characterised by race,Reference Obermeyer, Powers, Vogeli and Mullainathan4,Reference Guo, Lee, Kassamali, Mita and Nambudiri34 genderReference Barr, Roberts and Thakkar32 and age.Reference Abbasi-Sureshjani, Raumanns, Michels, Schouten, Cheplygina, Cardoso, Van Nguyen, Heller, Abreu, Isgum and Silva35 However, they did not quantify the amount of clinician bias: thus, it was not possible to conclude whether the prediction models led to more or less fairness compared with standard procedures. By comparing algorithmic and clinicians’ predictions, we provide a framework for weighing the additional harm that can be caused by employing algorithms in predictive medicine. Based on our findings that the examined algorithms did not show stronger bias compared with clinicians’ predictions, their clinical use might not pose an ethical conflict from a fairness perspective. We underline here that comparing an algorithm with the current standard (i.e. clinicians’ judgements) might serve as a pragmatic benchmark for assessing the ethical permissibility of an algorithm in terms of fairness.

Fairness metrics and their relevance

Our study shows that the degree of fairness differs depending on the fairness metric. The algorithms we analysed all showed comparable and little bias in accuracy equality; however, their degree of fairness varied more in FPER and FNER balance. The priority that should be given to a fairness metric would depend on the predictive task at hand. In our study, we focused on the prediction of transition to psychosis, in which case a non-recognition (false-negative prediction) would have different consequences than overdiagnosis (false-positive prediction). Non-recognition would possibly deprive the mispredicted individual of necessary preventive measures that could delay the onset, speed up the diagnosis and ameliorate the course of disease. In this context, a higher educational level could present a double-edged sword, as it is associated with higher health literacy,Reference Stormacq, Van den Broucke and Wosinski36 better health outcomesReference Kunst, Bos, Lahelma, Bartley, Lissau and Regidor37 and might be a protective factor against psychiatric disorders;Reference Erickson, El-Gabalawy, Palitsky, Patten, Mackenzie and Stein38 but at the same time, a bias associating more favourable outcomes with patients with higher educational level could lead to a higher risk of non-recognition of CHR states and to lack/delay of necessary preventive health measures. On the other hand, higher rates of overdiagnosis in patients with lower educational level could reinforce stigma against these patients, could harm them by overtreatment with medications with substantial side-effects (e.g. antipsychotics) but might simultaneously lead to better preventive care through more frequent follow-up visits and early recognition of psychosis symptoms.

Strengths

To the best of our knowledge, this study is the first to examine bias in psychosis prediction and the first to compare predictive bias between clinicians and algorithms in medicine. By testing the fairness of various predictive algorithms based on different data modalities, we provide evidence for a pattern of educational bias in psychosis prediction. None of the models we analysed included education years and only one model contained gender as a model feature (model by Malda et al); therefore, the investigated models mostly did not directly include the sensitive attributes they were analysed for, allowing an assessment of inherent biases incorporated in the models. Moreover, by analysing models that were developed using other data-sets than PRONIA, we could also assess bias independent from training data-sets, allowing a more realistic assessment of model fairness. The educational bias we detected was consistent in most models. By analysing models based on different data modalities, such as genetic, imaging and clinical data, we assessed whether model bias depends on data modalities used in model development and showed that models based on genetic and imaging data are less susceptible to educational bias. In addition, our study is the first in the literature to find educational bias in psychosis prediction.

Limitations

Our study has several limitations. First, the ethnic and racial homogeneity of our sample, which consisted mostly of White European patients, limits the generalisability of our findings. Fairness analyses need to be replicated in more heterogeneous samples to test for ethnic and racial bias, especially since previous work hints at racial and ethnic bias in psychiatric diseases.Reference Anglin and Malaspina6,Reference Adeponle, Groleau and Kirmayer31 Second, our work compares bias in algorithms with bias in clinicians’ predictions but cannot address whether these biases are compounded in algorithm-supported decision-making, in which predictions are made neither by a clinician nor by an algorithm alone but algorithms are used to support clinicians’ decisions. Third, the follow-up period of the patients in this study was limited to 18 months. Longer follow-up could change the performance metrics of the presented models for transition to psychosis, as the number of patients developing psychosis will probably be higher over a longer period. Fourth, although we found evidence for an educational bias, educational status in our study was based on years of education and not on the type and level of education. However, even in a sample with a relatively high overall educational status, we found a clear tendency to predict more favourable outcomes for those with more years of education. Fifth, in the absence of a consensus, we applied the four-fifths rule as an orientation for a permissible fairness range. However, we note that this range is arbitrary and can be adapted according to the fairness question at hand. Sixth, we analysed the algorithms according to the categories and cut-offs of the original studies, since changing categories or shifting cut-offs would require training the algorithms anew, which would result in new algorithms. Thus, although our research focused on assessing the fairness of existing algorithms and addressed the deployment phase of algorithms, future research should also investigate the development phase and control for the effects of changing labels or categories and of shifting cut-offs. Finally, although this study reveals a pattern of educational bias, it remains unclear whether the observed educational bias would translate to a disadvantage in mental healthcare for patients with higher educational level at clinical high risk for psychosis. Psychosis prediction algorithms are not widely used in clinical psychiatry, and investigating the consequences of such bias requires data from clinical settings in which predictive algorithms are employed. Such data would also allow researchers to determine whether there are compounding effects of clinicians’ interaction with a biased algorithm.
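
For illustration, the four-fifths rule mentioned above can be sketched in a few lines of Python. This is a minimal sketch and not the analysis code of this study: the function names, the symmetric permissible range of 0.8 to 1/0.8 and the toy predictions are assumptions made for the example, and the rule is applied here to the rate of favourable predictions in two subgroups.

```python
from typing import Sequence


def positive_rate(predictions: Sequence[int]) -> float:
    """Share of predictions equal to 1 (here: the favourable outcome)."""
    if not predictions:
        raise ValueError("group has no predictions")
    return sum(predictions) / len(predictions)


def disparate_impact_ratio(group_a: Sequence[int], group_b: Sequence[int]) -> float:
    """Ratio of favourable-prediction rates, group_a relative to group_b."""
    rate_b = positive_rate(group_b)
    if rate_b == 0:
        raise ValueError("reference group has no favourable predictions")
    return positive_rate(group_a) / rate_b


def within_four_fifths(ratio: float, lower: float = 0.8) -> bool:
    """True if the ratio lies in the symmetric range [lower, 1 / lower].

    The symmetric upper bound is an assumption of this sketch; the range
    can be adapted to the fairness question at hand.
    """
    return lower <= ratio <= 1 / lower


# Hypothetical toy predictions (1 = favourable predicted outcome); not study data.
fewer_years = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]  # 3 of 10 favourable
more_years = [1, 1, 0, 1, 0, 1, 0, 1, 0, 0]   # 5 of 10 favourable

ratio = disparate_impact_ratio(fewer_years, more_years)
print(round(ratio, 2), within_four_fifths(ratio))
```

In this toy example the ratio falls below 0.8, so the predictions would be flagged as outside the permissible range for the subgroup with fewer years of education. Choosing a different `lower` bound changes which ratios are flagged, which is why the range should be justified for each fairness question.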

Implications

Even though educational status was not directly included as a predictive feature in the algorithms we evaluated, we found a consistent pattern of educational bias. This highlights that dissecting biases in algorithms requires comprehensive analyses and may not always be straightforward, since fairness violations might be present for demographic attributes that are not included in the algorithmic decision-making process.

As predictive algorithms gain importance in medical practice, it is essential to ensure their compliance with ethical principles. The accuracy of clinical decisions based on predictions can only be tested at a later point, by which time the disparate allocation of resources based on algorithmic decision-making might already have resulted in disparities in healthcare, putting certain demographic groups at higher risk or depriving them of necessary preventive measures. Thus, fairness as a core principle of bioethics should be incorporated in the development of precision medicine approaches to avoid the emergence, perpetuation and reinforcement of health disparities.

Supplementary material

Supplementary material is available online at https://doi.org/10.1192/bjp.2023.141.

Data availability

Supplementary findings supporting this study are available on request from the corresponding author. The data are not publicly available owing to Institutional Review Board restrictions, since the participants did not consent to their data being publicly available. Code used for the analysis will be made available at https://github.com/deryasahinmd.

Author contributions

D.Ş.: conceptualisation, methodology, investigation, software, analysis, writing (original draft), visualisation, funding acquisition; L.K.-I., S.W., D.D., R.U., R.S., S.B., P.B., E.M., S.R., F.S.-L., R.L., A.B., C.P., N.K.: data acquisition, investigation, resources, writing (review and editing); J.K.: data acquisition, investigation, resources, conceptualisation, methodology, software, analysis, supervision, writing (review and editing).

Funding

This work was funded by a Gerok grant awarded to D.Ş. by the Medical Faculty of the University of Cologne with support from the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG). PRONIA is a Collaborative Project funded by the European Union under the 7th Framework Programme under grant agreement no. 602152.

Declaration of interest

L.K.-I., F.S.-L. and R.U. are members of the BJPsych editorial board and did not take part in the review or decision-making process of this paper.
