1. Introduction

In this work, we assess the potential of deep neural networks (DNNs, see LeCun, Bengio & Hinton 2015) to perform non-intrusive sensing, which consists of using measurable quantities in a fluid flow to reconstruct its behaviour at another location in the domain, or to predict its future dynamics, without using probes that affect the flow itself. For instance, it is possible to accurately measure time-resolved quantities at the wall, such as the wall-shear stress or the pressure, and then correlate these measurements with the flow farther away. A reliable flow estimation is typically a prerequisite for closed-loop control applications, where the actuation is applied with the aim of suppressing the effect of certain structures in the flow (Choi, Moin & Kim 1994). In order to effectively perform closed-loop control, it is necessary to monitor the instantaneous state of the flow so as to devise the best way to affect it. However, characterizing the flow state can be extremely challenging, particularly at very high Reynolds numbers, where the near-wall structures become progressively smaller.

Before the appearance of DNNs, flow-field predictions were performed mainly through linear methods. Among them, the linear stochastic estimation (LSE) introduced by Adrian (1988) stands out. Recently, Suzuki & Hasegawa (2017) and Encinar & Jiménez (2019) have used LSE to reconstruct the velocity field on a wall-parallel plane in a turbulent channel flow employing wall measurements. The latter study showed that LSE can only reconstruct the large wall-attached eddies in the outer part of the logarithmic region. An extension of the LSE method in the spectral domain (Tinney et al. 2006) was shown to be more suitable for noisy predictions in turbulent flows. More recently, Baars & Tinney (2014) proposed a method based on proper orthogonal decomposition (POD) to improve the spectral-LSE approach. Borée (2003) reported the possibility of projecting a synchronized field on the POD temporal modes of another quantity; this method is known as extended POD (EPOD). The correlation matrix between the temporal POD coefficients of two given quantities can be used to predict one based on the other. Borée (2003) also proved EPOD to be equivalent to LSE when all modes are retained in the reconstruction. Using remote probes, EPOD has been used to provide predictions in turbulent jets (Tinney, Ukeiley & Glauser 2008), channel flows (Discetti, Raiola & Ianiro 2018), pipe flows (Discetti et al. 2019) and flows around wall-mounted obstacles (Bourgeois, Noack & Martinuzzi 2013; Hosseini, Martinuzzi & Noack 2016).
Note, however, that in the latter work quadratic terms are included in the model of the POD-coefficient dynamics, hinting at the need for nonlinear estimation even for a relatively simple, predominantly oscillatory flow. In this regard, Sasaki et al. (2019) recently assessed the capabilities of both linear and nonlinear transfer functions with single and multiple inputs to provide turbulent-flow predictions. They documented a significant improvement in the predictions when the transfer functions were designed to account for nonlinear interactions between the inputs and the flow field. The improved prediction capabilities of nonlinear methods over linear ones were also reported by Mokhasi, Rempfer & Kandala (2009) and Nair & Goza (2020).
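The equivalence between EPOD and LSE noted above can be checked numerically. The Python/NumPy sketch below is a minimal illustration, not the implementation used in this work: it assumes mean-subtracted snapshot matrices with one time instant per row, computes the POD of the wall data through a singular value decomposition, projects the flow onto the wall temporal modes (the extended modes) and compares the resulting estimate with a least-squares LSE estimate. The function names `epod_fit`, `epod_predict` and `lse_predict` are hypothetical.

```python
import numpy as np


def epod_fit(wall, flow, n_modes=None):
    """Extended POD from mean-subtracted training snapshots (one time instant per row).

    wall: (n_t, n_w) wall quantities; flow: (n_t, n_f) velocity fluctuations.
    """
    # POD of the wall data: wall = psi_w @ diag(sigma_w) @ phi_w
    psi_w, sigma_w, phi_w = np.linalg.svd(wall, full_matrices=False)
    if n_modes is not None:
        psi_w, sigma_w, phi_w = psi_w[:, :n_modes], sigma_w[:n_modes], phi_w[:n_modes]
    # Extended modes: projection of the flow field onto each wall temporal mode
    extended_modes = psi_w.T @ flow
    return sigma_w, phi_w, extended_modes


def epod_predict(model, wall_new):
    sigma_w, phi_w, extended_modes = model
    # Temporal coefficients of the new wall snapshots in the training wall POD basis
    psi_w_new = (wall_new @ phi_w.T) / sigma_w
    return psi_w_new @ extended_modes


def lse_predict(wall, flow, wall_new):
    # Linear stochastic estimation: least-squares linear map from wall to flow
    coef, *_ = np.linalg.lstsq(wall, flow, rcond=None)
    return wall_new @ coef


# Synthetic, linearly related "wall" and "flow" data (illustrative only)
rng = np.random.default_rng(0)
wall = rng.standard_normal((500, 20))
flow = wall @ rng.standard_normal((20, 30)) + 0.1 * rng.standard_normal((500, 30))
wall_new = rng.standard_normal((50, 20))

full = epod_predict(epod_fit(wall, flow), wall_new)             # all wall modes retained
truncated = epod_predict(epod_fit(wall, flow, 5), wall_new)     # five wall modes only
print(np.allclose(full, lse_predict(wall, flow, wall_new)))     # True
```

With all wall modes retained, the EPOD estimate reduces to the pseudo-inverse solution of the least-squares problem, which is why it coincides with LSE; retaining fewer modes (as in `truncated`) discards the least energetic input content, and the two estimates then differ.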

DNNs are nonlinear models that have found application in many research areas (Jean et al. 2016; De Fauw et al. 2018; Norouzzadeh et al. 2018; Ham, Kim & Luo 2019; Udrescu & Tegmark 2020; Vinuesa et al. 2020). Due to their potential applications in flow modelling, identification of turbulence features and flow control, DNNs have recently received extensive attention in the fluid-mechanics research community (Kutz 2017; Jiménez 2018; Duraisamy, Iaccarino & Xiao 2019; Brunton, Noack & Koumoutsakos 2020). Here, we provide a brief overview of recent neural-network applications in fluid mechanics, before returning to the flow-estimation problem investigated in this work. In the case of turbulence modelling, DNNs have been reported to improve the results of Reynolds-averaged Navier–Stokes models (Ling, Kurzawski & Templeton 2016; Wu, Xiao & Paterson 2018) and large-eddy simulations (Beck, Flad & Munz 2019; Lapeyre et al. 2019; Maulik et al. 2019). There are also a number of ongoing efforts towards including the constraints from the Navier–Stokes equations into prediction models through the so-called physics-informed neural networks (Wang, Wu & Xiao 2017; Raissi, Perdikaris & Karniadakis 2019).
Furthermore, several artificial-intelligence-based solutions have been proposed to perform optimal control on different types of flows, such as the wake behind one or more cylinders (Rabault et al. 2019; Raibaudo et al. 2020). Other promising applications of machine learning to fluid mechanics include the generation of inflow conditions (Fukami et al. 2019b) and the extraction of flow patterns (Raissi, Yazdani & Karniadakis 2020).

DNNs have also been used for the temporal prediction of dynamical systems. As an example, Srinivasan et al. (2019) compared the capabilities of the multi-layer perceptron (MLP, also known as a fully connected neural network) and several long short-term memory (LSTM) networks to predict the coefficients of a low-order model for near-wall turbulence (Moehlis, Faisst & Eckhardt 2004). While the most relevant flow features are captured by both architectures, the LSTM network outperformed the MLP in terms of its ability to predict turbulence statistics and the dynamics of the flow. This work was extended by Eivazi et al. (2021), where the LSTM network was compared with a Koopman-based framework that accounts for nonlinearities through external forcing. Although both approaches provide accurate predictions of the dynamical evolution of the system, the latter outperforms the LSTM in terms of the time and data required for training. Similar temporal predictions of the near-wall model (Moehlis et al. 2004) were conducted by Pandey, Schumacher & Sreenivasan (2020) using echo state networks. Moreover, nonlinear autoregressive exogenous networks have been used by Lozano-Durán, Bae & Encinar (2020) to exploit the relation between the temporal dynamics of the Fourier coefficients of a minimal turbulent channel flow. Their results showed accurate predictions of the bursting events in the logarithmic layer from buffer-region data. Other related work, in the context of control of the chaotic Kuramoto–Sivashinsky system, was recently conducted by Bucci et al. (2019).
Note, however, that the use of temporal sequences implies a high computational cost to generate well-resolved temporal data. Furthermore, longer sequences lead to higher memory requirements in order to predict the future behaviour. For these reasons, several neural-network-based models that learn spatial relations have been proposed in the literature. Convolutional neural networks (CNNs) have become increasingly popular in recent years owing to their ability to exploit the hierarchical structure of their input (Fukushima 1980, 1988; LeCun et al. 1989; LeCun et al. 1998). For instance, Fukami, Fukagata & Taira (2019a, 2021) and Güemes et al. (2021) have shown that flow fields from the laminar wake of a cylinder and from a turbulent channel can be reconstructed from extremely coarse data with remarkable success. CNNs have also been used to investigate the dynamical features of the flow without a priori knowledge, as shown by Jagodinski, Zhu & Verma (2020).

Neural networks are mathematical models that exhibit very appealing properties, such as being universal approximators (Hornik, Stinchcombe & White 1989). This means that they can potentially approximate any continuous function to arbitrary accuracy with an adequate parametrization, even though there is no guarantee that the values of the parameters can be inferred from data sampled from the original function. Nonetheless, neural-network parameters are typically tuned through data-driven training, and as such neural networks have been compared and used together with other data-driven methods. For instance, the relationship between POD (see Lumley 1967) and the MLP is well documented in the literature (Bourlard & Kamp 1988; Baldi & Hornik 1989). These works showed that an MLP with a single hidden layer is equivalent to POD if a linear activation function is used. One of the early applications to a fluid-dynamics dataset was proposed by Milano & Koumoutsakos (2002), who compared the results of POD-based neural networks with linear and nonlinear functions for the prediction of near-wall velocity fields, showing that nonlinear POD has significantly better predictive capabilities. More recently, the emergence of autoencoder architectures has motivated a renewed interest in the application of neural networks for dimensionality reduction. Hinton & Salakhutdinov (2006) proposed the use of deep autoencoders to obtain a low-order representation of high-dimensional data, showing that this approach is able to retain more information than POD. It is interesting to note that this work avoids the inherent difficulty of optimizing the weights of deep autoencoders by pretraining each layer as a restricted Boltzmann machine.
Murata, Fukami & Fukagata (2020) used an autoencoder with convolutional layers to obtain a low-order representation of the flow around a cylinder. Their results suggest that CNN autoencoders with linear activation functions reproduce the same dimensionality reduction as POD, while the use of nonlinear activation functions improves the reconstruction performance. On a related note, flow reconstruction based on shallow neural networks was studied by Erichson et al. (2020) in several fluid-mechanics examples.
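The equivalence between a single-hidden-layer network with linear activations and POD (Bourlard & Kamp 1988; Baldi & Hornik 1989) can be illustrated with a small experiment. The Python/NumPy sketch below is only an illustration under stated assumptions: synthetic data with a clear energy gap after the second mode, full-batch gradient descent with a hand-picked learning rate, and hypothetical helper names. A linear autoencoder with two hidden units is trained on the data, and its reconstruction error is compared with that of the two-mode POD (truncated SVD), which is optimal among rank-2 linear reconstructions.

```python
import numpy as np


def train_linear_autoencoder(x, k, lr=5e-3, n_iter=20000, seed=0):
    """Fit x ~ (x @ enc) @ dec (linear activations, k hidden units)
    by full-batch gradient descent on the mean squared reconstruction error."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    enc = 0.1 * rng.standard_normal((d, k))
    dec = 0.1 * rng.standard_normal((k, d))
    for _ in range(n_iter):
        z = x @ enc              # hidden-layer activations
        r = z @ dec - x          # reconstruction residual
        grad_dec = (2.0 / n) * z.T @ r
        grad_enc = (2.0 / n) * x.T @ r @ dec.T
        enc -= lr * grad_enc
        dec -= lr * grad_dec
    return enc, dec


def mse(x, x_hat):
    return np.sum((x - x_hat) ** 2) / x.shape[0]


# Synthetic snapshots with prescribed singular values (energy gap after mode 2)
rng = np.random.default_rng(1)
n, d, k = 400, 6, 2
q1, _ = np.linalg.qr(rng.standard_normal((n, d)))
q2, _ = np.linalg.qr(rng.standard_normal((d, d)))
s = np.sqrt(n) * np.array([3.0, 2.0, 0.3, 0.2, 0.1, 0.05])
x = (q1 * s) @ q2.T

# Two-mode POD reconstruction (truncated SVD)
u, sv, vt = np.linalg.svd(x, full_matrices=False)
x_pod = (u[:, :k] * sv[:k]) @ vt[:k]

enc, dec = train_linear_autoencoder(x, k)
x_ae = x @ enc @ dec
print(f"POD error: {mse(x, x_pod):.4f}, linear autoencoder error: {mse(x, x_ae):.4f}")
```

The trained linear autoencoder cannot beat the truncated POD, and after training it essentially reaches the same error, because its hidden layer ends up spanning the subspace of the two leading POD modes (although the learned weights are generally a rotated, non-orthogonal basis of that subspace).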

This work is not the first in which neural networks are used to perform non-intrusive sensing in wall-bounded flows: in a seminal study over 20 years ago, Lee et al. (1997) tested a two-layer neural network (with a single nonlinear activation function) to predict the wall actuation, based on the wall-shear-stress components, in order to reduce the drag at the wall. Guzmán Iñigo, Sipp & Schmid (2014) predicted the velocity field in a transitional boundary-layer flow using wall-shear measurements, by projecting the velocity fields on a POD basis and using a dynamic observer to predict the dynamics of the flow. More recently, Kim & Lee (2020) used the two wall-shear-stress components to predict the instantaneous wall-normal heat flux with satisfactory results. The same wall information was used by Guastoni et al. (2020) to predict the instantaneous streamwise flow fields at several wall-normal positions using convolutional networks. Their results show that these neural networks provide better predictions than linear methods (see below) in terms of instantaneous predictions and second-order statistics. Those predictions were, however, limited to one velocity component and to a low Reynolds number. In this work, all the velocity components are predicted and a higher $Re$ is investigated as well. Furthermore, in the work by Güemes, Discetti & Ianiro (2019), the information of the most energetic scales was encoded into a POD basis, and a CNN was used to predict that information at different wall-normal locations from streamwise wall-shear-stress measurements. Their results demonstrated that CNNs can significantly outperform linear methods in the prediction of POD time coefficients for low-order reconstruction of the velocity fields.
Their convolutional network predicted only one POD temporal coefficient at a time, whereas in this work all the coefficients are computed simultaneously thanks to an improved network architecture.


DNNs perform best when training and test data are taken from the same distribution, i.e. for the same flow and at the same Reynolds number in our case. However, in a real-world application the flow conditions will be continuously varying and/or it might be infeasible to perform a full training at exactly the same conditions. If the flow behaviour at the Reynolds number of interest is roughly the same as at the training Reynolds number, and if the neural network can successfully approximate it, then the model should be able to perform consistently across the different Reynolds numbers, as shown in active-flow-control applications by Tang et al. (2020). In order to improve the performance at a different Reynolds number, it would then be possible to exploit an initial training at a certain flow condition and to transfer this knowledge to another one. Such knowledge transfer could significantly reduce the amount of data needed for training and improve the applicability of the network in industrial settings. Transfer learning (Pan & Yang 2009) is a suitable learning framework to address this issue, and it is discussed in detail below.

The methods proposed by Guastoni et al. (2020) and Güemes et al. (2019), henceforth referred to as fully convolutional network (FCN) and FCN-POD, respectively, are extended in the present study. Both models are able to provide a nonlinear characterization of the relation between wall features and the flow on wall-parallel planes. The purpose of this work is to provide a detailed comparison of these two nonlinear methods regarding their capabilities in predicting turbulent flow fields from wall information. Their improvement over linear methods is measured using EPOD as a reference. Furthermore, transfer learning is applied to the FCN approach in order to evaluate to what extent a network trained at one Reynolds number can be used at a different one.

The remainder of this article is organized as follows. Section 2.1 describes the numerical databases used for training and testing the neural networks, and § 2.2 provides a brief comparison between the considered models. EPOD is discussed in § 2.3, while the FCN and FCN-POD methods are further detailed in § 2.4 and § 2.5, respectively. Results from the considered prediction methods are presented and compared in § 3, including instantaneous fields in § 3.1, second-order statistics in § 3.2 and spectra in § 3.3. Furthermore, an assessment of transfer learning between different Reynolds numbers is presented in § 4. Finally, the main conclusions of the work are presented in § 5. Two appendices provide additional information on the training of the neural-network-based models and on the predicted instantaneous flow fields.

2. Methodology

2.1. Datasets

All the DNN variants in this study have been trained using data generated from direct numerical simulations (DNS) of a turbulent open-channel flow. Periodic boundary conditions are imposed in the $x$- and $z$-directions (the streamwise and spanwise coordinates, respectively), and a no-slip condition is applied at the lower boundary ($y=0$, where $y$ is the wall-normal coordinate). Unlike in a standard channel-flow simulation, a symmetry condition is imposed at the upper boundary. In standard channel flows, the wall-attached coherent structures may extend beyond the channel centreline, thus affecting the other wall (Lozano-Durán, Flores & Jiménez 2012). In open-channel flows, on the other hand, there is no upper wall. This makes the simulation more suitable for investigating the extent to which the neural networks are able to learn the dynamics of near-wall turbulence, since the interaction of the large scales with both walls is not present.

The simulation is performed using the pseudo-spectral code SIMSON (Chevalier et al. 2007) with constant mass flow rate, in a domain $\varOmega = L_x \times L_y \times L_z = 4{\rm \pi} h \times h \times 2{\rm \pi} h$ (where $h$ is the channel height), as shown in figure 1. Two friction Reynolds numbers $Re_{\tau}$ (based on $h$ and the friction velocity $u_{\tau}=\sqrt{\tau_w/\rho}$, where $\tau_w$ is the wall-shear stress and $\rho$ is the fluid density) are considered, as summarized in table 1. The flow field is represented with $N_y$ Chebyshev modes in the wall-normal direction and with $N_x$ and $N_z$ Fourier modes in the streamwise and spanwise directions, respectively. The instantaneous fields are obtained at constant time intervals from the time-advancing scheme, which is a second-order Crank–Nicolson scheme for the linear terms and a third-order Runge–Kutta method for the nonlinear terms. Dealiasing using the standard 3/2 rule is employed in the wall-parallel Fourier directions. The velocity fields used as ground truth for training and testing are sampled at the following inner-scaled wall-normal coordinates: $y^{+}=15,\,30,\,50$ and $100$. Note that ‘+’ denotes viscous scaling, i.e. in terms of the friction velocity $u_{\tau}$ or the viscous length $\ell^{*}=\nu/u_{\tau}$ (where $\nu$ is the fluid kinematic viscosity). A dataset is defined as a collection of samples, each consisting of the shear-stress and pressure fields at the wall as inputs, along with the corresponding velocity fields at the target wall-normal locations as outputs. The training/validation dataset at $Re_{\tau} = 180$ consists of 50 400 instantaneous fields, with a sampling period of $\Delta t^+_{s} = 5.08$. The sampling period at $Re_{\tau} = 550$ is set to $\Delta t^+_{s} = 1.49$ and the training/validation dataset includes 19 920 fields. The resolution in viscous units of the individual fields is the same in both $Re_{\tau}$ datasets. This is necessary to represent all the flow features; however, it also implies that the number of Fourier modes in the wall-parallel directions is higher at $Re_{\tau}=550$, as shown in table 1, even though the domain $\varOmega$ is the same. A higher number of modes corresponds to a higher number of spatial locations at which the different quantities are sampled from the DNS, and this partially compensates for the lower number of fields, since the two proposed neural-network-based methods act locally on the input data, as will be shown in § 2.2.
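As a quick illustration of the viscous scaling, the short Python sketch below converts the sampling planes $y^{+}=15,\,30,\,50$ and $100$ into outer units, using $y/h = y^{+}/Re_{\tau}$, and evaluates the wall-parallel domain size in viscous units for both $Re_{\tau}$. Since the resolution in viscous units is the same in both datasets, the number of Fourier modes per wall-parallel direction grows approximately in proportion to $Re_{\tau}$; the exact mode counts are those of table 1 and are not reproduced here. The helper names are hypothetical.

```python
import math

RE_TAU = (180, 550)           # friction Reynolds numbers of the two datasets
Y_PLUS = (15, 30, 50, 100)    # inner-scaled wall-normal sampling planes


def outer_position(y_plus, re_tau):
    """y/h from y+ = y u_tau / nu and Re_tau = u_tau h / nu."""
    return y_plus / re_tau


def viscous_domain(re_tau):
    """Wall-parallel domain size (L_x+, L_z+) for L_x = 4 pi h and L_z = 2 pi h."""
    return 4 * math.pi * re_tau, 2 * math.pi * re_tau


for re_tau in RE_TAU:
    y_outer = [round(outer_position(yp, re_tau), 3) for yp in Y_PLUS]
    lx_plus, lz_plus = viscous_domain(re_tau)
    print(f"Re_tau = {re_tau}: y/h = {y_outer}, Lx+ = {lx_plus:.0f}, Lz+ = {lz_plus:.0f}")

# Same viscous resolution: grid points per wall-parallel plane scale roughly as Re_tau^2
points_ratio = (RE_TAU[1] / RE_TAU[0]) ** 2
print(f"Approximate ratio of points per plane (550 vs 180): {points_ratio:.1f}")
```

For example, the plane $y^{+}=100$ lies at $y/h \approx 0.56$ at $Re_{\tau}=180$ but at $y/h \approx 0.18$ at $Re_{\tau}=550$, and each wall-parallel plane at $Re_{\tau}=550$ contains roughly nine times as many sampling points.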

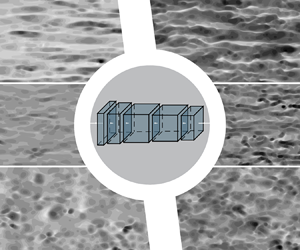

Figure 1. Computational domain and frame of reference for the DNS of the turbulent open channel considered in this study.

Table 1. Description of the DNS datasets used for computing the EPOD and training/testing the CNN-based models. The number of fields refers to the number of training and validation fields.

In both $Re_{\tau}$ cases, the dataset is split into training and validation sets with a ratio of $4\,{:}\,1$. The training and validation sets are obtained from a group of randomly initialized simulations. With the number of samples chosen for our investigation at either $Re_{\tau}$, there is one simulation whose samples are used both for training and for validation. Note that there is no temporal overlap between the two datasets, as the first samples from the ‘shared’ simulation are sequentially added to the training set until the required number of training samples is reached; the remaining samples are then added to the validation set. This is done to reduce the correlation between the two datasets. Using separate groups of simulations, or adding a time separation between the last sample of the training set and the first sample of the validation set, would have further reduced the correlation; this was not enforced, since the validation error is only used during training to tune the network hyperparameters and to avoid overfitting the training dataset. If the training provides satisfactory results, it is common practice to use both the training and validation sets to train the final models, which allows them to make use of all the available data to improve their performance; this was not done in our case. The validation error may differ significantly from the one computed during testing, hence all the comparisons in this work are based on the error computed on the test dataset. The test data used to assess the performance of the trained models were obtained from additional simulations. This was done at both $Re_{\tau} = 180$ and $Re_{\tau} = 550$ to ensure that the training and test datasets are completely uncorrelated, both in space and time. Test samples were taken from simulations initialized with a random seed different from those of the training-data simulations. The correlation between training simulations, and between training and test simulations, was checked with the cross-correlations $\rho_{ij}(h)$, defined as (Makarashvili et al. 2017)

\begin{equation} \rho_{ij}(h) = \frac{\gamma_{ij}(h)}{\sqrt{\gamma_{ii}(0)\,\gamma_{jj}(0)}}, \end{equation}

where $h$ is the time lag and $\gamma_{ij}(h)=\mathbb{E}[(x_{i,t+h}-\mu_i)(x_{j,t}-\mu_j)]$ are the cross-covariances. Here $i$ and $j$ refer to the two simulations, $\mu_i$ and $\mu_j$ are the corresponding mean values, and $x$ is the considered quantity, in this case one of the velocity components at a given wall-normal location. By computing $\rho_{ij}(0)$, it was possible to verify that the simulations had run sufficiently long to ensure that they were overall statistically uncorrelated before the sampling to build the datasets started.
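The cross-correlation check can be sketched in Python/NumPy as follows. This is a minimal illustration, assuming the standard normalization of the cross-covariance by the zero-lag auto-covariances, and assuming that $x$ is a scalar time series (for instance, one velocity component at a single grid point of a given plane) sampled at the same instants in simulations $i$ and $j$, with $\mathbb{E}$ replaced by a sample average over the overlapping instants; averaging over the wall-parallel plane as well would be a straightforward extension. The function name is hypothetical.

```python
import numpy as np


def cross_correlation(x_i, x_j, lag=0):
    """Sample estimate of rho_ij(lag) = gamma_ij(lag) / sqrt(gamma_ii(0) gamma_jj(0)),
    with gamma_ij(lag) = E[(x_i[t + lag] - mu_i) (x_j[t] - mu_j)] and lag >= 0."""
    x_i = np.asarray(x_i, dtype=float)
    x_j = np.asarray(x_j, dtype=float)
    if x_i.shape != x_j.shape or not 0 <= lag < len(x_i):
        raise ValueError("series must have equal length and 0 <= lag < length")
    d_i = x_i - x_i.mean()
    d_j = x_j - x_j.mean()
    n = len(d_i) - lag
    gamma_ij = np.mean(d_i[lag:] * d_j[:n])          # cross-covariance at this lag
    return gamma_ij / np.sqrt(np.mean(d_i**2) * np.mean(d_j**2))


rng = np.random.default_rng(0)
# Two independent series: rho_ij(0) should be close to zero
a = rng.standard_normal(100_000)
b = rng.standard_normal(100_000)
print(f"independent: rho(0) = {cross_correlation(a, b):.4f}")
# A series and a copy of it shifted by 5 samples: rho_ij(5) should be close to one
base = rng.standard_normal(2_005)
x_i, x_j = base[:2_000], base[5:]
print(f"shifted copy: rho(5) = {cross_correlation(x_i, x_j, lag=5):.4f}")
```

In the setting described above, a value of $\rho_{ij}(0)$ close to zero between two simulations indicates that they have decorrelated from each other before sampling begins.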

The size of the test dataset (more than 3000 fields for both $Re_{\tau}$) is sufficient to achieve convergence of the turbulence statistics of the predicted flow, which are then compared with the reference values from the DNS.
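The sequential train/validation split described earlier in this subsection can be sketched as follows. This is a hypothetical helper written for illustration, not the data pipeline used in this work; it assumes that each simulation is given as a time-ordered list of samples and that the number of training samples is fixed by the $4\,{:}\,1$ ratio.

```python
def sequential_split(simulations, train_fraction=0.8):
    """Split time-ordered samples from several simulations into training and
    validation sets. Simulations are consumed in order; the first samples of
    the 'shared' simulation complete the training set, and its remaining
    samples, together with any later simulation, form the validation set."""
    n_total = sum(len(sim) for sim in simulations)
    n_train = round(train_fraction * n_total)
    train, validation = [], []
    for sim in simulations:
        for sample in sim:
            (train if len(train) < n_train else validation).append(sample)
    return train, validation


# Three hypothetical simulations with 10 time-ordered samples each,
# each sample labelled as (simulation index, time index)
sims = [[(s, t) for t in range(10)] for s in range(3)]
train, validation = sequential_split(sims)
print(len(train), len(validation))   # 24 6
print(train[-1], validation[0])      # (2, 3) (2, 4)
```

Applied to the dataset sizes reported above, a $4\,{:}\,1$ ratio corresponds to 40 320 training and 10 080 validation fields at $Re_{\tau}=180$, and to 15 936 and 3984 fields, respectively, at $Re_{\tau}=550$.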

2.2. Summary of the methods

In this study we consider three different data-driven methods to estimate the instantaneous two-dimensional fields of the velocity fluctuations at a given wall-normal distance. The velocity-fluctuation fields are obtained from the sampled DNS fields by subtracting the mean flow, averaged in time and in the two spatially homogeneous directions. Two models (FCN and FCN-POD) are introduced and compared with the EPOD method, which is used as a baseline model highlighting the capabilities (and limitations) of linear estimation. Despite using the same input data and predicting the same output quantities, the three models leverage different tools to extract the information from the inputs and estimate the outputs. Here, we briefly review the differences and similarities between the models; each method is described in detail in the dedicated sections.

The three methods mainly differ in the use of POD (see Lumley 1967) on the input and output data, and in the presence of a neural network in the prediction model. These differences are summarized in table 2, but it is important to note that the way these mathematical tools are used can differ substantially from one method to another, as detailed later. EPOD and FCN-POD use POD, which allows us to filter out the noise content represented by small and uncorrelated scales, taking advantage of the energy optimality of POD modes. Additionally, POD separates the spatial and temporal dynamics.
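To make the filtering argument concrete, the Python/NumPy sketch below computes a snapshot POD through the singular value decomposition, so that each snapshot is written as a sum of temporal coefficients times singular values times spatial modes, and truncates it to the most energetic modes. It is a minimal illustration on synthetic data (a rank-4 coherent signal plus small uncorrelated noise), not the decomposition pipeline of this work; the helper names are hypothetical.

```python
import numpy as np


def pod(snapshots):
    """Snapshot POD of a (n_t, n_x) array of fields (in practice, velocity
    fluctuations), one field per row.

    Returns temporal coefficients psi (n_t, r), singular values sigma (r,)
    and spatial modes phi (r, n_x), with snapshots = psi @ diag(sigma) @ phi.
    """
    psi, sigma, phi = np.linalg.svd(snapshots, full_matrices=False)
    return psi, sigma, phi


def reconstruct(psi, sigma, phi, n_modes):
    """Truncated reconstruction from the n_modes most energetic modes."""
    return (psi[:, :n_modes] * sigma[:n_modes]) @ phi[:n_modes]


rng = np.random.default_rng(0)
n_t, n_x = 200, 50
x = np.linspace(0.0, 2.0 * np.pi, n_x)
t = np.linspace(0.0, 10.0, n_t)[:, None]
# Two coherent travelling-wave components (rank 4) plus small uncorrelated noise
clean = np.sin(x - t) + 0.5 * np.cos(2.0 * x + 0.3 * t)
noisy = clean + 0.05 * rng.standard_normal((n_t, n_x))

psi, sigma, phi = pod(noisy)
energy_fraction = sigma**2 / np.sum(sigma**2)
filtered = reconstruct(psi, sigma, phi, n_modes=4)

print("energy fraction of the first 4 modes:", energy_fraction[:4].sum().round(4))
print("error of noisy data   :", np.linalg.norm(noisy - clean).round(3))
print("error after truncation:", np.linalg.norm(filtered - clean).round(3))
```

Because the coherent content is concentrated in a few energetic modes while the uncorrelated noise is spread over all of them, discarding the low-energy modes removes most of the noise at little cost to the signal.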

Table 2. Summary of the properties from each method.

EPOD decomposes both the input and the output: the flow field at a certain wall-normal distance is reconstructed as a linear combination of orthogonal modes $\boldsymbol {\phi }_i(\boldsymbol {x})$,

\begin{equation} \boldsymbol{u}(\boldsymbol{x},t) \approx \sum^{N_m}_{i=1}\psi_i(t)\sigma_i\boldsymbol{\phi}_i(\boldsymbol{x}), \end{equation}

where $N_m$ is the total number of POD modes, $\psi _i(t)$ is the temporal POD coefficient corresponding to mode $i$ and $\sigma _i$ is the square root of its energy contribution. While the orthogonal modes are computed from a POD of the training dataset, the temporal coefficients are estimated by decomposing the input wall quantities using POD and assuming a linear correlation between the known temporal modes of the wall quantities $\psi _{w,i}(t)$ and the temporal modes $\psi _i(t)$ of the output flow field of the test dataset. Further details are provided in § 2.3.

On the other hand, FCN-POD applies POD only to the output flow fields, using the spatial modes from the training set and predicting the corresponding temporal coefficients with a neural network. This is a development of the model used by Güemes et al. (Reference Güemes, Discetti and Ianiro2019). Note, however, that in that study the domain employed and reconstructed provided a compact POD eigenspectrum, whereas here the larger domain in the streamwise and spanwise directions spreads the energy content over a wider set of POD modes. This makes the prediction of the temporal coefficients more difficult, especially for those associated with the least energetic modes. To address this issue, the large instantaneous flow fields were subdivided into $N_{s_x}\times N_{s_z}$ smaller regions (henceforth referred to as subdomains). The neural network predicts the temporal coefficients for all subdomains at the same time.
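The truncated POD expansion above can be illustrated with a short NumPy sketch. It assumes that the snapshots are stored as the rows of a matrix and computes the POD with an economy-size SVD, so that $\sigma_i^2$ is proportional to the energy of mode $i$. The function names `pod` and `reconstruct` and the synthetic low-rank data are hypothetical and serve only to show how a few energetic modes can represent most of a dataset.

```python
import numpy as np


def pod(snapshots):
    """Economy-size POD of a snapshot matrix whose rows are snapshots.

    Returns temporal modes psi (N_t x r), singular values sigma (r,) and
    spatial modes phi (r x N_p) with snapshots == psi @ diag(sigma) @ phi.
    """
    psi, sigma, phi = np.linalg.svd(snapshots, full_matrices=False)
    return psi, sigma, phi


def reconstruct(psi, sigma, phi, n_modes):
    """Truncated expansion u(x, t) ~ sum_i psi_i(t) sigma_i phi_i(x)."""
    return (psi[:, :n_modes] * sigma[:n_modes]) @ phi[:n_modes, :]


# Illustrative data: a rank-3 signal plus weak noise (not DNS data).
rng = np.random.default_rng(0)
n_t, n_p = 200, 64
signal = rng.standard_normal((n_t, 3)) @ rng.standard_normal((3, n_p))
U = signal + 0.01 * rng.standard_normal((n_t, n_p))

psi, sigma, phi = pod(U)
energy_fraction = sigma**2 / np.sum(sigma**2)
U3 = reconstruct(psi, sigma, phi, n_modes=3)
rel_err = np.linalg.norm(U - U3) / np.linalg.norm(U)
print(f"energy in first 3 modes: {energy_fraction[:3].sum():.4f}")
print(f"relative reconstruction error with 3 modes: {rel_err:.4f}")
```

Keeping all modes recovers the snapshot matrix exactly; truncation discards the weak, uncorrelated content, which is the filtering property of POD mentioned earlier.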

The FCN and FCN-POD models take as inputs the instantaneous two-dimensional fields of the streamwise and spanwise wall-shear-stress components and of the wall pressure. In the physical-space representation of these fields, the presence of coherent features motivates the use of convolutional layers in our neural-network models to process the information. In these layers, the inputs are processed simultaneously, hence a convolution in three dimensions is performed, defined as

\begin{equation} \boldsymbol{F}(i,j,k)=\sum_{l}\sum_{m}\sum_{n}\boldsymbol{I}(i+l,j+m,k+n)\,\boldsymbol{K}(l,m,n), \end{equation}

following Goodfellow, Bengio & Courville (Reference Goodfellow, Bengio and Courville2016), where $\boldsymbol {I} \in \mathbb {R}^{d_1 \times d_2 \times d_3}$ is the input, $\boldsymbol {K} \in \mathbb {R}^{k_1 \times k_2 \times k_3}$ is the so-called kernel (or filter) containing the learnable parameters of the layer and the transformed output $\boldsymbol {F}$ is the feature map. Note that we consider $d_3 = k_3$, meaning that the resulting feature map is two-dimensional. Multiple feature maps can be stacked and fed as input to another convolutional layer. This allows the next layer to combine the features individually identified in each feature map, enabling progressively deeper convolutional networks to identify larger and more complex features. Note that a convolutional layer can be rewritten as a matrix multiplication, hence it is mathematically equivalent to a fully connected layer whose weight matrix is sparse and shares the kernel weights across positions (Ma & Lu Reference Ma and Lu2017). If we assume that the input features are spatially localized, using a convolutional layer allows translational equivariance to be enforced in both periodic directions. Furthermore, since $k_i \ll d_i\ \forall i\neq 3$, the use of kernels greatly reduces the number of parameters that need to be learned during training (in comparison with fully connected MLP networks).
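To make the operation described above concrete, the following NumPy sketch evaluates $\boldsymbol{F}(i,j,k)=\sum_{l,m,n}\boldsymbol{I}(i+l,j+m,k+n)\boldsymbol{K}(l,m,n)$ by direct summation (assumed here to be the definition, in the cross-correlation form used by most deep-learning libraries) with $d_3 = k_3$, and then builds the equivalent fully connected weight matrix, whose rows all reuse the same $k_1 k_2 k_3$ kernel weights at shifted positions. The array sizes and the loop-based implementation are illustrative choices made for clarity rather than speed.

```python
import numpy as np


def conv3d_valid(inp, kernel):
    """F(i, j, k) = sum_{l,m,n} I(i+l, j+m, k+n) K(l, m, n), 'valid' positions only."""
    d1, d2, d3 = inp.shape
    k1, k2, k3 = kernel.shape
    out = np.zeros((d1 - k1 + 1, d2 - k2 + 1, d3 - k3 + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                window = inp[i:i + k1, j:j + k2, k:k + k3]
                out[i, j, k] = np.sum(window * kernel)
    return out


def conv_weight_matrix(input_shape, kernel):
    """Dense matrix W with W @ inp.ravel() equal to the 2-D feature map (d3 == k3).

    Every row holds the same kernel weights placed at a shifted window, i.e. the
    sparse, weight-sharing fully connected layer equivalent to the convolution.
    """
    d1, d2, d3 = input_shape
    k1, k2, k3 = kernel.shape
    assert d3 == k3, "this sketch assumes the kernel spans all input channels"
    o1, o2 = d1 - k1 + 1, d2 - k2 + 1
    W = np.zeros((o1 * o2, d1 * d2 * d3))
    for i in range(o1):
        for j in range(o2):
            row = np.zeros(input_shape)
            row[i:i + k1, j:j + k2, :] = kernel
            W[i * o2 + j] = row.ravel()
    return W


rng = np.random.default_rng(1)
inp = rng.standard_normal((16, 16, 3))    # e.g. tau_x, tau_z and p_w as channels
kernel = rng.standard_normal((3, 3, 3))   # learnable weights of one filter
F = conv3d_valid(inp, kernel)
print(F.shape)                            # (14, 14, 1)
W = conv_weight_matrix(inp.shape, kernel)
print(np.allclose(W @ inp.ravel(), F[:, :, 0].ravel()))
print(kernel.size, W.size)                # shared weights vs dense matrix entries
```

In this toy case the dense matrix `W` has 150 528 entries but only 27 distinct values, which illustrates the parameter saving obtained with kernels.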

Both neural-network-based models use fully convolutional networks (hence FCN in their names). This architecture is similar to, but conceptually different from, CNNs, which typically have several convolutional layers followed by one or more fully connected layers (the building blocks of MLP networks). In CNNs, the localized information processed by the individual convolutional kernels is combined to obtain a global prediction, whereas FCNs contain only convolutional layers, and the network architecture is based on the assumption that the relation between input and output variables is spatially localized. The input region from which a single point of the output can draw information is called the receptive field, and it can be computed from the network architecture, as described by Dumoulin & Visin (Reference Dumoulin and Visin2016). In the FCN model, the instantaneous two-dimensional velocity fluctuations are directly predicted from the input fields by the fully convolutional neural network. Additional details are provided in § 2.4. On the other hand, the neural network in the FCN-POD model predicts the temporal coefficients for each of the $N_{s_x}\times N_{s_z}$ subdomains at once, as described in § 2.5.
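For a stack of convolutional layers, the receptive field along one direction can be obtained with a standard recursion: each layer with kernel size $k$ enlarges it by $(k-1)$ times the product of the strides of the preceding layers. The sketch below implements this recursion. The layer list in the usage line is hypothetical: one $5\times 5$ layer followed by five $3\times 3$ layers with unit stride is merely one configuration consistent with the kernel sizes listed for figure 2 and with the $15\times 15$ receptive field quoted in § 2.4.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field (grid points along one direction) of stacked conv layers.

    Uses r = 1 + sum_i (k_i - 1) * prod_{j < i} s_j, i.e. each layer widens the
    field by (k_i - 1) input-grid steps scaled by the accumulated stride.
    """
    if strides is None:
        strides = [1] * len(kernel_sizes)
    if len(strides) != len(kernel_sizes):
        raise ValueError("kernel_sizes and strides must have the same length")
    field, jump = 1, 1
    for k, s in zip(kernel_sizes, strides):
        field += (k - 1) * jump
        jump *= s
    return field


# Hypothetical layer stack: one 5x5 layer followed by five 3x3 layers, stride 1.
print(receptive_field([5, 3, 3, 3, 3, 3]))       # 15
# With a stride of 2 in the first layer, the field grows faster.
print(receptive_field([3, 3], strides=[2, 1]))   # 7
```

Pooling layers can be included in the same list, since they also have a window size and a stride.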

2.3. Extended POD

EPOD provides a linear relation between input and output, and it is the reference method for all the following comparisons. In this way, it is possible to assess the improvement in prediction obtained with nonlinear, neural-network-based methods in the context of wall-bounded turbulence. The wall quantities at each instant can be rearranged as a row vector and used to assemble a snapshot matrix

\begin{equation} \boldsymbol{\mathsf{W}}=\begin{bmatrix} \left.\dfrac{\partial u}{\partial y}\right|_{x_1}^{t_1} & \cdots & \left.\dfrac{\partial u}{\partial y}\right|_{x_{N_p}}^{t_1} & \left.\dfrac{\partial w}{\partial y}\right|_{x_1}^{t_1} & \cdots & \left.\dfrac{\partial w}{\partial y}\right|_{x_{N_p}}^{t_1} & p_{x_1}^{t_1} & \cdots & p_{x_{N_p}}^{t_1}\\ \vdots & \ddots & \vdots & \vdots & \ddots & \vdots & \vdots & \ddots & \vdots \\ \left.\dfrac{\partial u}{\partial y}\right|_{x_1}^{t_{N_t}} & \cdots & \left.\dfrac{\partial u}{\partial y}\right|_{x_{N_p}}^{t_{N_t}} & \left.\dfrac{\partial w}{\partial y}\right|_{x_1}^{t_{N_t}} & \cdots & \left.\dfrac{\partial w}{\partial y}\right|_{x_{N_p}}^{t_{N_t}} & p_{x_1}^{t_{N_t}} & \cdots & p_{x_{N_p}}^{t_{N_t}} \end{bmatrix}. \end{equation}


Here, $N_t$ refers to the total number of snapshots (equal to the number of instantaneous flow fields $N_f$ for the EPOD) and $N_p$ refers to the total number of grid points in one field. This formulation applies to regular grids; for non-regular grids, the snapshot matrix should be adapted to take into account the area associated with each grid point. Using the method proposed by Sirovich (Reference Sirovich1987), it is possible to decompose this matrix into POD modes as

\begin{equation} \boldsymbol{\mathsf{W}}=\boldsymbol{\varPsi}_w\boldsymbol{\varSigma}_w\boldsymbol{\varPhi}_w, \end{equation}

with $\boldsymbol {\varPsi }_w \in \mathbb {R}^{N_f\times N_f}$ and $\boldsymbol {\varPhi }_w \in \mathbb {R}^{N_f\times 3N_p}$ being the temporal and spatial mode matrices, respectively, and $\boldsymbol {\varSigma }_w \in \mathbb {R}^{N_f\times N_f}$ being a diagonal matrix containing the singular values. Note that we perform an economy-size decomposition, such that the size of the square matrix $\boldsymbol {\varSigma }_{w}$ is $\min (N_f, 3N_p)$. The EPOD modes (Borée Reference Borée2003), corresponding to the projection of the flow field on the temporal basis of the wall quantities, are defined as

where $\boldsymbol{\mathsf{U}}$ is the snapshot matrix in which the instantaneous velocity fluctuations in the three directions are rearranged as

\begin{equation} \boldsymbol{\mathsf{U}}=\begin{bmatrix} u_{x_1}^{t_1} & \cdots & u_{x_{N_p}}^{t_1} & v_{x_1}^{t_1} & \cdots & v_{x_{N_p}}^{t_1} & w_{x_1}^{t_1} & \cdots & w_{x_{N_p}}^{t_1}\\ \vdots & \ddots & \vdots & \vdots & \ddots & \vdots & \vdots & \ddots & \vdots \\ u_{x_1}^{t_{N_t}} & \cdots & u_{x_{N_p}}^{t_{N_t}} & v_{x_1}^{t_{N_t}} & \cdots & v_{x_{N_p}}^{t_{N_t}} & w_{x_1}^{t_{N_t}} & \cdots & w_{x_{N_p}}^{t_{N_t}} \end{bmatrix}. \end{equation}

If the dataset is sufficiently large to reach statistical convergence, the matrix $\boldsymbol{\mathsf{L}}$ describes the relationship between the temporal POD coefficients of a certain distribution of wall features and those of the corresponding flow field. Once the temporal correlation matrix is known, an out-of-sample flow field $\boldsymbol {u}$ can be reconstructed using $\boldsymbol{\mathsf{L}}$ and the instantaneous realization of wall features as follows:

where $\boldsymbol {\psi }_{w}$ is the vector containing the temporal coefficients of the wall fields used for prediction. Note that $\boldsymbol {\psi }_{w}$ is retrieved by projecting the out-of-sample wall field $\boldsymbol {w}$ on the POD basis: $\boldsymbol {\psi }_{w}=\boldsymbol {w}\boldsymbol {\varPhi }_{w}^T \boldsymbol {\varSigma }_{w}^{-1}$, where $\boldsymbol {\varPhi }_{w}^T \boldsymbol {\varSigma }_{w}^{-1}$ is readily available from the training dataset.
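A compact NumPy sketch of an EPOD estimator follows. Following the usual extended-POD construction, it assumes that the EPOD matrix is the projection of the training flow-field snapshots on the temporal wall modes, $\boldsymbol{\mathsf{L}}=\boldsymbol{\varPsi}_w^T\boldsymbol{\mathsf{U}}$, and reconstructs out-of-sample fields as $\boldsymbol{u}=\boldsymbol{\psi}_w\boldsymbol{\mathsf{L}}$, with $\boldsymbol{\psi}_w=\boldsymbol{w}\boldsymbol{\varPhi}_w^T\boldsymbol{\varSigma}_w^{-1}$ as stated above. The function names and the synthetic, exactly linear data are illustrative assumptions.

```python
import numpy as np


def epod_fit(W, U):
    """Fit a linear EPOD estimator from training snapshot matrices (rows = snapshots).

    W: wall quantities, shape (N_f, 3 N_p); U: velocity fluctuations, (N_f, n_out).
    Assumes L = Psi_w^T U, i.e. U projected on the temporal wall modes.
    """
    psi_w, sigma_w, phi_w = np.linalg.svd(W, full_matrices=False)
    L = psi_w.T @ U
    proj = phi_w.T / sigma_w   # Phi_w^T Sigma_w^{-1}, stored from the training set
    return proj, L


def epod_predict(w, proj, L):
    """psi_w = w Phi_w^T Sigma_w^{-1}, then u = psi_w L (w may hold several rows)."""
    psi_w = w @ proj
    return psi_w @ L


# Synthetic example in which the flow field is an exact linear map of the wall data.
rng = np.random.default_rng(2)
n_f, n_wall, n_flow = 500, 12, 9
A = rng.standard_normal((n_wall, n_flow))
W_train = rng.standard_normal((n_f, n_wall))
U_train = W_train @ A

proj, L = epod_fit(W_train, U_train)
w_new = rng.standard_normal((4, n_wall))   # four out-of-sample wall "fields"
u_pred = epod_predict(w_new, proj, L)
print(np.allclose(u_pred, w_new @ A))
```

In this toy case $N_f$ exceeds the number of wall values, so the estimator recovers the linear map exactly. When $N_f < 3N_p$, only the component of $\boldsymbol{w}$ lying in the span of the training wall fields contributes to the prediction.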

An important remark is that, due to the homogeneity in the streamwise and spanwise directions and the very large number of fields for the lower Reynolds number $Re_{\tau } = 180$, the wall-based matrix $\boldsymbol {\varSigma }_{w}$ is ill conditioned. In fact, due to the correlation between subsequent time-resolved snapshots, the rank of $\boldsymbol {\varSigma }_{w}$ is smaller than $N_f$, which is the number of snapshots (here smaller than $N_p$). Whether a matrix is well or ill conditioned is determined by its condition number: a large condition number indicates an ill-conditioned matrix, while a small one indicates a well-conditioned matrix. Defining the condition number as $\kappa (\varSigma _w)={\max (\varSigma _w)}/{\min (\varSigma _w)}$, the large difference between the singular values of the first and the last POD modes leads to an ill-conditioned matrix, which is difficult to invert numerically. To address this issue, a reduced-order representation of the matrix $\boldsymbol {\varSigma }_w$ is employed, using a number of modes equal to the rank of the matrix. The energy contribution of the excluded modes is zero, and thus the predictions are numerically equivalent to a full-rank estimation. In contrast, for $Re_{\tau } = 550$ the wall singular-value matrix $\boldsymbol {\varSigma }_{w}$ has a lower condition number, possibly because of the smaller number of snapshots used in this case. As a result, it is not necessary to resort to a reduced-order representation in order to invert $\boldsymbol {\varSigma }_{w}$.

Even when $\boldsymbol {\varSigma }_w$ has rank equal to $N_f$, it might be convenient to truncate the matrix $\boldsymbol{\mathsf{L}}$ (Discetti et al. Reference Discetti, Raiola and Ianiro2018). Decomposing the flow quantities as $\boldsymbol{\mathsf{U}}=\boldsymbol {\varPsi }\boldsymbol {\varSigma }\boldsymbol {\varPhi }$, similarly to what is done for the wall quantities in (2.5), it can be observed that the product of the two matrices $\boldsymbol {\varPsi }\boldsymbol {\varPsi }_{w}^{T}$ in (2.6) returns a unitary-norm matrix with rank equal to that of $\boldsymbol {\varPsi }$ and $\boldsymbol {\varPsi }_{w}^{T}$, which are bases in the $\mathbb {R}^{N_f}$ vector space. As a consequence, a $j$th wall mode that is uncorrelated with every field mode would not result in a corresponding null row or column. To ensure the removal of the uncorrelated content from the matrix $\boldsymbol {\varPsi }\boldsymbol {\varPsi }_{w}^{T}$, Discetti et al. (Reference Discetti, Raiola and Ianiro2018) proposed to set to zero all the entries of the matrix with absolute values smaller than a threshold proportional to the matrix standard deviation. This operation reduces the expressivity of the EPOD, since it reduces the number of modes that can be used to represent the output fields. On the other hand, for the predictions in this work, we have found a mean-squared error reduction of approximately 10 percentage points with respect to the standard EPOD procedure. Nevertheless, since the EPOD is used here as a benchmark for the performance of linear methods with respect to the FCN-based approaches proposed herein, the filtered EPOD by Discetti et al. (Reference Discetti, Raiola and Ianiro2018) is not included in this comparison for brevity.
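The two numerical remedies discussed above, rank truncation of $\boldsymbol{\varSigma}_w$ and the thresholding of the temporal-mode correlation matrix proposed by Discetti et al. (2018), can be sketched as follows. The relative tolerance `rtol` used to decide the numerical rank and the threshold constant `alpha` are hypothetical parameters. The correlation matrix is written here as $\boldsymbol{\varPsi}_w^T\boldsymbol{\varPsi}$ (rows indexed by wall modes), an assumed convention that may be the transpose of the one used in the text.

```python
import numpy as np


def truncated_wall_pod(W, rtol=1e-10):
    """SVD of W keeping only singular values above rtol * sigma_max.

    Returns Phi_w^T Sigma_w^{-1} and Psi_w restricted to the numerical rank,
    together with that rank and the condition number of the full Sigma_w.
    """
    psi_w, sigma_w, phi_w = np.linalg.svd(W, full_matrices=False)
    cond = sigma_w[0] / sigma_w[-1] if sigma_w[-1] > 0 else np.inf
    rank = int(np.sum(sigma_w > rtol * sigma_w[0]))
    psi_w, sigma_w, phi_w = psi_w[:, :rank], sigma_w[:rank], phi_w[:rank]
    return phi_w.T / sigma_w, psi_w, rank, cond


def filtered_epod_matrix(psi_w, U, alpha=1.0):
    """L = C Sigma Phi with small entries of C = Psi_w^T Psi set to zero.

    Entries with |C| < alpha * std(C) are treated as uncorrelated content;
    alpha = 0 disables the filter and gives back L = Psi_w^T U.
    """
    psi, sigma, phi = np.linalg.svd(U, full_matrices=False)
    C = psi_w.T @ psi
    C = np.where(np.abs(C) < alpha * C.std(), 0.0, C)
    return C @ (sigma[:, None] * phi)


rng = np.random.default_rng(3)
basis = rng.standard_normal((5, 20))        # wall data spanned by 5 patterns
W_train = rng.standard_normal((100, 5)) @ basis
A = rng.standard_normal((20, 6))
U_train = W_train @ A

proj, psi_w, rank, cond = truncated_wall_pod(W_train)
print(rank, f"{cond:.1e}")                  # rank 5 and a huge condition number
L = filtered_epod_matrix(psi_w, U_train, alpha=0.0)
w_new = rng.standard_normal((3, 5)) @ basis
print(np.allclose(w_new @ proj @ L, w_new @ A))
```

Discarding the numerically null modes leaves the prediction unchanged for wall fields in the span of the training data, which mirrors the statement that the reduced-order representation is numerically equivalent to a full-rank estimation.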

2.4. Fully convolutional neural-network predictions

Fully convolutional networks are commonly used in applications where the input and output domains have structural similarities. Image segmentation (Long, Shelhamer & Darrell Reference Long, Shelhamer and Darrell2015) is one such case, since the output has the same spatial dimensions as the input, as in our predictions of two-dimensional flow fields. The inputs of the network are the wall-shear-stress components in the streamwise and spanwise directions, as well as the pressure at the wall. Each input of the network is normalized using its mean and standard deviation, as computed from the training/validation set, and the same mean and standard-deviation values are applied to the test-dataset inputs when computing the predictions. The outputs are the instantaneous velocity fluctuations, denoted as $u$, $v$ and $w$ (corresponding to the streamwise, wall-normal and spanwise velocities, respectively), at a given distance from the wall. Note that the predictions refer to the same time instant as the input fields. In our previous work (Guastoni et al. Reference Guastoni, Encinar, Schlatter, Azizpour and Vinuesa2020), a similar FCN was used to predict the streamwise component of instantaneous flow fields. In the present study the predictions are extended to the wall-normal and spanwise components; however, the back-propagation algorithm used to train the networks works best when the prediction errors of the three outputs (i.e. the three velocity components) have similar magnitudes. Thus, the fluctuations are scaled as follows:

where $rms$ refers to root-mean-square (r.m.s.) quantities. During inference (i.e. when the predictions are computed from the inputs in the test dataset), the outputs of the network are rescaled back to their original magnitude. The network is trained to minimize the following loss function:

\begin{equation} \mathcal{L}_{{FCN}}(\boldsymbol{\hat{u}}_{FCN};\boldsymbol{\hat{u}}_{DNS})=\frac{1}{N_x N_z} \sum_{i=1}^{N_x} \sum_{j=1}^{N_z} \left | \boldsymbol{\hat{u}}_{FCN}(i,j) - \boldsymbol{\hat{u}}_{DNS}(i,j)\right |^{2}, \end{equation}


which is the mean-squared error (MSE) between the instantaneous prediction $\boldsymbol {\hat {u}}_{FCN}$ and the reference velocity fluctuations $\boldsymbol {\hat {u}}_{DNS}$ computed by the DNS, scaled in the same way as the network outputs. Training details are further discussed in Appendix A. The FCN architecture is shown in figure 2. Each convolution operation (except for the last one) is followed by batch normalization (Ioffe & Szegedy Reference Ioffe and Szegedy2015) and a rectified-linear-unit (ReLU, see Nair & Hinton Reference Nair and Hinton2010) activation function.
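The input normalization, output scaling and loss described above can be sketched in NumPy as follows. The sketch assumes, purely for illustration, one simple scaling in which each velocity component is divided by its own r.m.s. value from the training set; this choice is hypothetical and not necessarily the scaling used by the authors. The array layout `(N_f, N_x, N_z, 3)` and all function names are likewise assumptions. The loss follows the MSE definition above, with $|\cdot|^2$ taken as the squared Euclidean norm over the three velocity components.

```python
import numpy as np


def fit_input_stats(train_inputs):
    """Mean and standard deviation of each input field (tau_x, tau_z, p_w).

    train_inputs: array of shape (N_f, N_x, N_z, 3) from the training/validation set.
    """
    mean = train_inputs.mean(axis=(0, 1, 2))
    std = train_inputs.std(axis=(0, 1, 2))
    return mean, std


def normalize_inputs(inputs, mean, std):
    """Apply the training statistics, also to test inputs at inference time."""
    return (inputs - mean) / std


def scale_outputs(vel, rms):
    """Hypothetical scaling: each of u, v, w divided by its own r.m.s. value."""
    return vel / rms


def unscale_outputs(vel_scaled, rms):
    """Map network outputs back to their original magnitude during inference."""
    return vel_scaled * rms


def fcn_loss(pred, ref):
    """Squared Euclidean error over (u, v, w), averaged over grid points and samples."""
    return np.mean(np.sum((pred - ref) ** 2, axis=-1))


rng = np.random.default_rng(4)
wall = rng.normal(loc=[0.5, 0.0, 0.1], scale=[0.3, 0.2, 0.05], size=(8, 32, 32, 3))
mean, std = fit_input_stats(wall)
wall_n = normalize_inputs(wall, mean, std)

vel = rng.normal(scale=[2.0, 0.7, 0.9], size=(8, 32, 32, 3))
rms = np.sqrt(np.mean(vel**2, axis=(0, 1, 2)))
vel_s = scale_outputs(vel, rms)
print(np.sqrt(np.mean(vel_s**2, axis=(0, 1, 2))))   # 1 for each component, up to round-off
print(fcn_loss(vel_s + 0.1, vel_s))                  # approximately 0.03
```

After scaling, a uniform error of 0.1 in every component contributes equally to the loss, which is the balance between the three outputs that the scaling is meant to achieve.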

Figure 2. Schematic representation of the considered FCN architecture. The input fields are on the left (from top to bottom: streamwise wall-shear stress, spanwise wall-shear stress and wall pressure) and the outputs are on the right (from top to bottom: streamwise, wall-normal and spanwise fluctuations). The numbers indicate the number of kernels applied to each of the layers. The kernels (not represented in the figure) have size $(5\times 5)$ in the first convolutional layer and $(3\times 3)$ in the subsequent layers. A darker colour corresponds to a higher value of the represented quantity.

The chosen inputs and outputs allow the FCN to learn only the spatial relation between the quantities at the wall and the fluctuations farther away from it. Predictions in time could also be considered: in that case, convolutional neural networks could be used by treating time as an additional spatial coordinate (van den Oord et al. Reference van den Oord, Dieleman, Zen, Simonyan, Vinyals, Graves, Kalchbrenner, Senior and Kavukcuoglu2016), or recurrent networks, which are specifically designed to learn temporal sequences, could be employed, as we have recently done with LSTM networks (Guastoni et al. Reference Guastoni, Srinivasan, Azizpour, Schlatter and Vinuesa2019; Srinivasan et al. Reference Srinivasan, Guastoni, Azizpour, Schlatter and Vinuesa2019). In both cases, the need for multiple samples in time makes the model less flexible than one that relies only on spatial correlations, both during training and testing. These models usually assume a constant sampling time for the data sequence, which might be difficult to enforce, for example if the fields are taken from a numerical simulation with an adaptive time step. Moreover, during inference, models that work with sequences require input fields at different times to perform the prediction, whereas a single input sample is sufficient for the FCN to predict the output.

Input and output fields of the FCN model are obtained from a simulation with periodic boundary conditions in the streamwise and spanwise directions. Periodicity constraints could be added to the loss function; however, periodicity would then only be satisfied in a least-squares sense, and for this reason this approach is not used in the present study. Instead, in our implementation we strictly enforce periodicity in both wall-parallel directions by leveraging the fact that the convolutional-network output is deterministic and influenced only by the local information in the receptive field. In other words, if the network receives a certain local input, the local output value will always be the same, regardless of its position within the input field. In order to have the same values on both edges of the domain, the input fields are padded in the periodic directions, i.e. they are extended on both ends using the values from the opposite side of the fields. The padding procedure is exemplified in figure 3, which highlights how the information in the receptive field at the two ends of the domain is indeed the same. The receptive field for this architecture is $15 \times 15$ points, hence 16 points are added to each field in both the streamwise and spanwise directions. Note that this padding results in a network output that is slightly larger than the velocity fields from the DNS, and therefore the network output is cropped to match the size of the reference flow fields. The padding involves a computational overhead due to the increased size of the input fields; however, the number of padded points depends on the architecture and not on the input size, meaning that the input is $\approx 17\,\%$ bigger with a $192 \times 192$ field resolution (at $Re_{\tau } = 180$), but only $\approx 6\,\%$ bigger when the fields have a size of $512 \times 512$ (at $Re_{\tau } = 550$).
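The periodic padding and cropping can be checked with a small NumPy experiment. Here a single "valid" cross-correlation with a $15\times 15$ kernel stands in for the network (an assumption: any operator whose output depends only on a $15\times 15$ window behaves the same way), the 16 added points are assumed to be split evenly as 8 per side, padding uses `np.pad` with `mode="wrap"`, and the cropped result is compared with the same operator applied directly on the periodic field. The last line reproduces the quoted overheads from the field sizes.

```python
import numpy as np


def periodic_pad(field, pad):
    """Extend a 2-D field by `pad` points per side, copying values from the opposite edge."""
    return np.pad(field, pad, mode="wrap")


def local_op_valid(field, kernel):
    """'Valid' 2-D cross-correlation: each output point sees only a k x k input window."""
    k = kernel.shape[0]
    n1, n2 = field.shape[0] - k + 1, field.shape[1] - k + 1
    out = np.empty((n1, n2))
    for i in range(n1):
        for j in range(n2):
            out[i, j] = np.sum(field[i:i + k, j:j + k] * kernel)
    return out


def local_op_periodic(field, kernel):
    """Reference: the same centred window evaluated on the periodic field via np.roll."""
    k = kernel.shape[0]
    c = k // 2
    out = np.zeros_like(field)
    for l in range(k):
        for m in range(k):
            out += kernel[l, m] * np.roll(field, shift=(c - l, c - m), axis=(0, 1))
    return out


def padding_overhead(n, added=16):
    """Relative increase in the number of input points for an n x n field."""
    return ((n + added) / n) ** 2 - 1


rng = np.random.default_rng(5)
field = rng.standard_normal((32, 32))      # stand-in for a wall-shear-stress field
kernel = rng.standard_normal((15, 15))     # 15 x 15 receptive field
out = local_op_valid(periodic_pad(field, 8), kernel)   # shape (34, 34)
out = out[1:-1, 1:-1]                      # crop back to the original 32 x 32 size
print(np.allclose(out, local_op_periodic(field, kernel)))
print(f"{padding_overhead(192):.1%}, {padding_overhead(512):.1%}")   # 17.4%, 6.3%
```

Because the padding width is fixed by the receptive field, its relative cost shrinks as the field resolution grows.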

Figure 3. Example of padding of the input streamwise wall-shear-stress field. The solid blue line indicates the size of the original field. The orange boxes show the information available to the FCN for the reconstruction of a single point of the output fields, at both edges of the domain. A darker colour corresponds to a higher value of the represented quantity.

2.5. POD-based predictions with convolutional neural networks

The FCN-POD model builds upon the previous work by Güemes et al. (2019). In the present work, a fully convolutional network is used instead of the CNN employed in that study. The network takes as inputs the wall-shear-stress and wall-pressure fields sampled from the DNS and predicts the temporal coefficients of the POD modes in each of the $N_{s_x}\times N_{s_z}$ subdomains into which the output velocity-fluctuation field is divided. The advantage of this approach over decomposing the full field directly is that, in these subdomains, the first POD modes contain a very large fraction of the total energy. This is a direct consequence of the fact that the energy of the structures larger than the subdomain is captured by the first POD mode (Liu, Adrian & Hanratty 2001; Wu 2014). As a result, fewer modes are needed in each subdomain to reconstruct a large fraction of the total energy, as shown in figure 5(a,c); had the entire field been decomposed at once, the same amount of energy would have been spread over a larger number of modes. The number of subdomains in each homogeneous direction, as well as the other parameters of the POD for the two $Re_{\tau }$ cases, are reported in table 3. Further details on the choice of the subdomains are provided at the end of this subsection.
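As a minimal sketch of the subdomain division (not the authors' code), the NumPy function below splits a velocity-fluctuation field into $N_{s_x}\times N_{s_z}$ non-overlapping tiles and flattens each tile into a row of length $3N_p$. It assumes the field is stored as an array of shape (3, $N_x$, $N_z$) holding $u$, $v$ and $w$ on a wall-parallel plane and that the grid is divisible by the number of subdomains; the function name and the grid size in the example are hypothetical.

```python
import numpy as np


def split_into_subdomains(field: np.ndarray, n_sx: int, n_sz: int) -> np.ndarray:
    """Split a (3, N_x, N_z) fluctuation field into n_sx x n_sz non-overlapping tiles.

    Returns an array of shape (n_sx, n_sz, 3 * N_p), where N_p = (N_x // n_sx) * (N_z // n_sz)
    is the number of grid points per subdomain; each tile is flattened component by component.
    """
    n_comp, n_x, n_z = field.shape
    if n_x % n_sx or n_z % n_sz:
        raise ValueError("grid size must be divisible by the number of subdomains")
    px, pz = n_x // n_sx, n_z // n_sz
    # (3, n_sx, px, n_sz, pz) -> (n_sx, n_sz, 3, px, pz): gather the points of each tile
    tiles = field.reshape(n_comp, n_sx, px, n_sz, pz).transpose(1, 3, 0, 2, 4)
    return tiles.reshape(n_sx, n_sz, n_comp * px * pz)


# Hypothetical grid: 192 x 192 points split into 12 x 12 subdomains
field = np.random.default_rng(0).standard_normal((3, 192, 192))
tiles = split_into_subdomains(field, 12, 12)
print(tiles.shape)  # (12, 12, 768): 16 x 16 points per tile, three components
```

Each row produced in this way is one snapshot of the POD described next, which is why the same spatial modes are shared by all subdomains.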

Table 3. Description of the POD settings for the subdomain decomposition. Note that only the training set has been used to compute the POD modes.

Similarly to what was done for EPOD in § 2.3, the snapshot matrix $\boldsymbol{\mathsf{U}}$ is assembled. The most important difference is that in this case the total number of snapshots $N_t$ is equal to the number of instantaneous flow fields $N_f$ times the number of subdomains in each flow field ($N_t = N_{s_x}\times N_{s_z}\times N_f$), and $N_p$ refers to the total number of grid points in one subdomain. This organization of the snapshots implies that the spatial modes are assumed to be the same for every subdomain. These spatial POD modes can be computed by solving the eigenvalue problem of the spatial correlation matrix $\boldsymbol{\mathsf{C}}$ as follows:

\begin{equation} \boldsymbol{\mathsf{C}} = \boldsymbol{\mathsf{U}}^{\mathrm{T}}\boldsymbol{\mathsf{U}} = \boldsymbol{\varPhi}^{\mathrm{T}}\boldsymbol{\varLambda}\boldsymbol{\varPhi}, \end{equation}

where $\boldsymbol{\varPhi}$ is a matrix whose rows contain the spatial POD modes, while $\boldsymbol{\varLambda}$ is a diagonal matrix with elements $\lambda_i=\sigma_i^2$, which represent the variance content of each mode. The POD temporal coefficients $\psi_i(t)$ are obtained by projecting the flow fields on the spatial POD modes computed with (2.11).
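The link between the eigendecomposition of $\boldsymbol{\mathsf{C}}$ and the singular values of the snapshot matrix can be checked numerically with a short NumPy sketch. It assumes that $\boldsymbol{\mathsf{U}}$ stores one snapshot (a flattened subdomain with its three velocity components) per row and that $\boldsymbol{\mathsf{C}} = \boldsymbol{\mathsf{U}}^{\mathrm{T}}\boldsymbol{\mathsf{U}}$ without normalization, so that $\lambda_i=\sigma_i^2$; random numbers stand in for the DNS data and all sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (not those of table 3)
n_f, n_sx, n_sz = 20, 4, 4      # flow fields and subdomains per homogeneous direction
n_p = 5 * 5                     # grid points per subdomain
n_t = n_sx * n_sz * n_f         # every subdomain of every field is one snapshot

# Snapshot matrix: one row per (field, subdomain) pair, 3 * N_p columns (u, v, w);
# random values stand in for the velocity fluctuations
U = rng.standard_normal((n_t, 3 * n_p))

# Eigendecomposition of the spatial correlation matrix, C = Phi^T Lambda Phi
C = U.T @ U
lam, vecs = np.linalg.eigh(C)            # eigh returns eigenvalues in ascending order
order = np.argsort(lam)[::-1]            # sort modes by decreasing energy
lam, Phi = lam[order], vecs[:, order].T  # rows of Phi are the spatial POD modes

# Singular values of the economy-size SVD of U give the same energies
sigma = np.linalg.svd(U, compute_uv=False)

# Temporal POD coefficients: projection of every snapshot on the spatial modes
psi = U @ Phi.T                          # shape (N_t, N_m) with N_m = 3 * N_p

print(Phi.shape, psi.shape)              # (75, 75) (320, 75)
```

With all $N_m$ modes retained, multiplying the coefficients back by the modes recovers the snapshots exactly.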

The economy-size decomposition just described returns $N_m=3N_p$ POD modes, i.e. one per velocity component and grid point of the subdomain. Hence, the flow field in each subdomain is represented exactly by the $N_m$ modes. The FCN-POD, on the other hand, only predicts the temporal coefficients of a limited number of modes, so each turbulent-flow field is encoded as a tensor of size $N_{s_x}\times N_{s_z}\times N_r$, where $N_{r}< N_{m}$ is the number of POD modes predicted in each subdomain, as illustrated in figure 4. This tensor contains a set of $N_r$ temporal POD coefficients for each subdomain into which the output flow field is divided. Although the spatial modes are the same for all the subdomains, the temporal coefficients can take different values in the individual subregions, so as to provide a faithful local reconstruction of the flow. In this regard, it is important to note that the temporal coefficients of all the subdomains are predicted together, and each subdomain receives information from the surrounding ones through the convolution operations. This is a significant difference from the implementation in Güemes et al. (2019), where subdomains were not required because a smaller flow field was considered. Furthermore, in that work a separate network was used to predict each mode, while in this study a single network predicts the full set of POD coefficients.
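Encoding one field into the $N_{s_x}\times N_{s_z}\times N_r$ target tensor amounts to projecting every subdomain on the first $N_r$ spatial modes. The sketch below assumes the modes are stored as rows of an orthonormal matrix sorted by decreasing energy, and picks $N_r$ as the smallest number of modes reaching a prescribed energy fraction; the helper names, the $90\,\%$ threshold and the synthetic data are illustrative assumptions. With random data the energy is spread almost evenly over the modes, unlike the compact spectra of figure 5, so the resulting $N_r$ is not representative of the DNS.

```python
import numpy as np


def modes_for_energy(lam: np.ndarray, fraction: float) -> int:
    """Smallest number of leading modes whose eigenvalues reach `fraction` of the total."""
    cumulative = np.cumsum(lam) / np.sum(lam)
    return int(min(np.searchsorted(cumulative, fraction) + 1, lam.size))


def encode_target(tiles: np.ndarray, phi: np.ndarray, n_r: int) -> np.ndarray:
    """Project each subdomain of one field on the first n_r spatial modes.

    tiles: (N_sx, N_sz, 3 * N_p) flattened subdomains of one field
    phi:   (N_m, 3 * N_p) orthonormal spatial modes (rows), sorted by energy
    returns the (N_sx, N_sz, n_r) tensor of temporal POD coefficients
    """
    return tiles @ phi[:n_r].T


rng = np.random.default_rng(1)
n_sx, n_sz, n_p = 12, 12, 8 * 8                  # illustrative sizes
U_train = rng.standard_normal((1000, 3 * n_p))   # stand-in for the training snapshots
_, sigma, phi = np.linalg.svd(U_train, full_matrices=False)
n_r = modes_for_energy(sigma**2, 0.9)

tiles = rng.standard_normal((n_sx, n_sz, 3 * n_p))  # one synthetic output field
target = encode_target(tiles, phi, n_r)
print(target.shape == (n_sx, n_sz, n_r))            # True
```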

Figure 4. Schematic representation of the encoding of turbulent-flow fields into target tensors of the network, containing the temporal POD coefficients. When $Re_\tau =180$, the flow field is divided into $12\times 12$ subdomains in the streamwise and spanwise directions, respectively; a three-dimensional tensor is built, where the first two dimensions correspond to the streamwise and spanwise position of the subdomain, while the third one accounts for the number of POD modes to be predicted.

The FCN-POD architecture considered here blends the FCN shown in figure 2 and the network used by Güemes et al. (2019) (see figure 1 in that work). As in the FCN approach, each convolution operation (except for the last one) is followed by batch normalization (Ioffe & Szegedy 2015) and a ReLU (Nair & Hinton 2010) activation function, and each activation function is followed by a max-pooling layer. Unlike in the FCN approach, here and in the EPOD approach the output velocity components were not scaled before the decomposition, so as to preserve the physical encoding based on the turbulent kinetic energy (TKE) of the flow. Scaling the velocity components would change their relative contributions to the energy norm, and the modes would then be sorted according to a norm other than the TKE. The network is trained to minimize the loss function

\begin{equation} \mathcal{L}_{{FCN\text{-}POD}}(\psi_{POD};\psi_{DNS})=\frac{1}{N_{s_x} N_{s_z} N_r} \sum_{i=1}^{N_{s_x}} \sum_{j=1}^{N_{s_z}} \sum_{k=1}^{N_r} \left | \psi_{POD}(i,j,k) - \psi_{DNS}(i,j,k)\right |^{2}, \end{equation}

which is the MSE between the predicted and the actual POD temporal coefficients of the DNS data. As for the FCN, the predictions refer to the same time instant as the input fields, hence the network does not require knowledge of the input at previous time steps. Parameter settings for training are detailed in Appendix A.
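For reference, the loss above can be written in a few lines of NumPy. This is a sketch of the formula only (the function name is hypothetical); in a deep-learning framework the same quantity would typically also be averaged over the samples of a mini-batch.

```python
import numpy as np


def fcn_pod_loss(psi_pred: np.ndarray, psi_dns: np.ndarray) -> float:
    """Mean squared error over subdomains (i, j) and retained modes k.

    Both arrays have shape (N_sx, N_sz, N_r): predicted and DNS temporal POD coefficients.
    """
    if psi_pred.shape != psi_dns.shape:
        raise ValueError("prediction and target must have the same (N_sx, N_sz, N_r) shape")
    n_sx, n_sz, n_r = psi_dns.shape
    squared_error = np.abs(psi_pred - psi_dns) ** 2
    return float(squared_error.sum() / (n_sx * n_sz * n_r))


# Illustrative sizes: 12 x 12 subdomains, 64 retained modes
target = np.zeros((12, 12, 64))
prediction = np.full((12, 12, 64), 0.5)
print(fcn_pod_loss(prediction, target))  # 0.25
```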

During inference, the velocity-fluctuation fields need to be reconstructed from the POD temporal coefficients. For each subdomain, the $N_r$ predicted POD temporal coefficients are used to reconstruct the local fluctuation field within the subdomain. This is done with the orthonormal spatial modes computed from the training data, also at test time. The underlying assumption is ergodicity, i.e. the training and test datasets share the same statistical features and, consequently, the same spatial modes. This requires a sufficiently large training dataset to ensure the convergence of the spatial modes, which is generally tied to the convergence of second-order statistics. Once the velocity-fluctuation fields are reconstructed within each subdomain, they are assembled together to provide the full-domain prediction. Note that this tiling does not guarantee smoothness across the edges of the subdomains, because of the finite number of modes used to reconstruct the flow and because of the prediction error in the temporal coefficients.
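The reconstruction and tiling steps can be sketched as follows, assuming that the tiles are flattened component by component and that the spatial modes are stored as rows of an orthonormal matrix computed from the training data. The function name, sizes and synthetic modes are hypothetical; as noted above, nothing in this assembly enforces continuity across subdomain edges.

```python
import numpy as np


def reconstruct_field(psi: np.ndarray, phi: np.ndarray, n_x: int, n_z: int) -> np.ndarray:
    """Rebuild a (3, n_x, n_z) fluctuation field from (N_sx, N_sz, N_r) coefficients.

    phi holds the training-set spatial modes as rows, shape (N_m, 3 * N_p); only the
    first N_r rows are used, so the result is a truncated reconstruction.
    """
    n_sx, n_sz, n_r = psi.shape
    px, pz = n_x // n_sx, n_z // n_sz
    tiles = (psi @ phi[:n_r]).reshape(n_sx, n_sz, 3, px, pz)  # local field per subdomain
    # Tile the subdomains back: (N_sx, N_sz, 3, px, pz) -> (3, N_sx, px, N_sz, pz) -> (3, n_x, n_z)
    return tiles.transpose(2, 0, 3, 1, 4).reshape(3, n_x, n_z)


rng = np.random.default_rng(2)
n_x, n_z, n_sx, n_sz = 32, 32, 4, 4                     # illustrative sizes
n_modes = 3 * (n_x // n_sx) * (n_z // n_sz)             # N_m = 3 * N_p
phi = np.linalg.qr(rng.standard_normal((n_modes, n_modes)))[0].T  # stand-in orthonormal modes
psi = rng.standard_normal((n_sx, n_sz, 10))             # e.g. predicted coefficients, N_r = 10
field = reconstruct_field(psi, phi, n_x, n_z)
print(field.shape)                                      # (3, 32, 32)
```

Because the basis is orthonormal, the squared error of a truncated reconstruction of a DNS field equals the energy of the discarded coefficients in each subdomain.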

As mentioned earlier, dividing the output velocity-fluctuation fields into subdomains allows us to represent the flow in these subregions with a compact orthogonal basis, as shown in figure 5(a,c). The energy distributions for the two $Re_{\tau }$ cases are very similar; only at $y^+=15$ does the energy distribution become slightly more compact for the low-$Re_{\tau }$ case. The size of the subdomains in the streamwise and spanwise directions is roughly $h\times 0.5h$ for the $Re_{\tau }=180$ case, and $0.4h\times 0.2h$ at $Re_{\tau }=550$. These are smaller than the domain considered in the previous work by Güemes et al. (2019), which had a size of $h\times h$. The size of the subdomains results from a compromise between the capability of the network to reconstruct the majority of the energy content of the flow and the compression of the information. In particular, it is important to reconstruct a significant portion of the total energy with a reduced number of modes. As shown in figure 5(b,d), the first 64 POD modes account for $90\,\%$ of the total energy at $Re_{\tau }=180$, while 128 modes are needed at $Re_{\tau }=550$ to retain a similar amount of energy. Therefore our predictions are truncated at