INTRODUCTION

How learning and instruction might be optimized remains an important question for second language acquisition (SLA) research, with clear implications for classroom practice (Rogers & Leow, 2020; Suzuki et al., 2019, 2020). One area that has received considerable attention within cognitive psychology is how the distribution of practice influences the quantity and quality of learning. Distribution of practice, also referred to as input spacing, concerns whether and how learning is spread over multiple learning episodes. Massed practice refers to conditions in which learning is concentrated into a single, uninterrupted training session, whereas distributed or spaced practice refers to learning that is spread over two or more training episodes. The term spacing effect refers to the finding that distributed practice leads to better learning than massed practice, an advantage that is particularly evident on delayed tests (Rogers, 2017a; Rohrer, 2015). The term lag effect refers to the impact of spacing gaps of different lengths, such as a one-day versus a one-week gap between training sessions. Distributed practice effect is used as a blanket term for both spacing and lag effects (Cepeda et al., 2006; Rogers, 2017a).

A growing body of SLA research has examined distribution of practice effects on the learning of second language (L2) grammar (e.g., Bird, 2010; Kasprowicz et al., 2019; Rogers, 2015; Suzuki, 2017; Suzuki & DeKeyser, 2017). These studies have been motivated in part by the question of how far findings from cognitive psychology generalize to SLA, in particular research on the relationship between the time separating practice sessions (intersession interval, or ISI) and the time between the final practice session and testing (retention interval, or RI). For example, an influential study by Cepeda et al. (2008) comprehensively mapped optimal ISI/RI ratios for the learning of trivia facts. Its results indicated that the optimal spacing between training sessions depends on when the knowledge will later be used (i.e., when it will be tested), and that the optimal ISI is approximately 10 to 30% of the RI (Rohrer & Pashler, 2007).

With regard to the learning of L2 vocabulary, the focus of the present investigation, a number of studies have examined the effects of input spacing with adult populations (e.g., Cepeda et al., 2009; Küpper-Tetzel & Erdfelder, 2012; Nakata, 2015; Nakata & Suzuki, 2019; Pavlik & Anderson, 2005). While these studies have generally found advantages for more distributed conditions, studies examining distributed practice effects with nonadult populations, that is, young learners in authentic contexts, have returned results that depart from this pattern: either no difference between the spacing conditions (e.g., Küpper-Tetzel et al., 2014) or greater learning for more intensive conditions (e.g., Rogers & Cheung, 2018).

Given that these results contradict the findings of a wealth of laboratory-based studies in cognitive psychology, it is prudent to replicate these studies to establish the external validity of their findings (Porte & McManus, 2018; Rogers & Révész, 2020). This study is a conceptual replication of a recent study (Rogers & Cheung, 2018) that examined distributed practice effects with young learners under ecologically valid learning conditions. The aim of the present replication is to examine the degree to which the results of Rogers and Cheung (2018) generalize to a different teaching and learning context, with the broader goal of establishing whether input spacing is a viable method within an authentic teaching and learning environment. In the following sections, we first review the motivation for Rogers and Cheung’s (2018) original study. We then provide a rationale for the present conceptual replication before describing the present study.

MOTIVATION FOR THE ORIGINAL STUDY

The motivation for Rogers and Cheung’s (2018) original study was rooted in questions about the generalizability of previous input spacing research to authentic SLA teaching and learning contexts. Although distribution of practice effects are frequently cited as one of the most widely researched and robust findings in the educational sciences, it has been argued that only a handful of these studies are “educationally relevant” (Rohrer, 2015). As such, Rogers and Cheung (2018) contend that the foundation for claims about the benefits of distributing practice over longer periods is far less stable than is typically assumed.

A second criticism leveled by Rogers and Cheung (2018) concerns the ecological validity of previous, “educationally relevant” research. Specifically, Rogers and Cheung argue that previous studies, including classroom-based studies, have strived for high degrees of internal validity in their experimental designs at the cost of external and ecological validity. To elaborate, internal validity relates to experimental control and refers to the level of certainty that the results of the experiment can be attributed to the experimental treatment, that is, that the observed changes in the dependent variable are a result of the independent variable. Any factor that allows for an alternative interpretation of the findings represents a threat to internal validity (Shadish et al., 2002). External validity refers to the degree to which results hold true outside of the particular study, that is, the generalizability of the findings. External validity is best established through replication (Porte & McManus, 2018; Shadish et al., 2002). It is widely acknowledged that there is a constant tension between internal and external validity in experimental research (e.g., Hulstijn, 1997; Rogers & Révész, 2020). Closely related to external validity is ecological validity, which concerns the “ecology” of a particular context. A study can claim ecological validity if its experimental conditions resemble the context to which it aims to generalize. Ecological validity in this sense refers not only to the location in which the experiment takes place but also to the interrelations among all aspects of the setting (e.g., Van Lier, 2010).

Rogers and Cheung argue that many studies that meet Rohrer’s criteria of educational relevance (e.g., Küpper-Tetzel et al., 2014) have overemphasized internal validity in their experimental designs at the cost of external and ecological validity. A high level of experimental control is not a problem in itself, and tightly controlled laboratory studies have contributed greatly to our understanding of SLA (see, e.g., Hulstijn, 1997). The issue is that much previous research claiming ecological validity has adopted a narrow interpretation of the construct, operationalizing it as referring only to the location in which the study takes place. Although these studies took place in classrooms, they imposed experimental conditions that would not normally be present in a classroom environment. At best, this has meant that the experimental conditions were not validated against the learning environment in which the study took place (Rogers & Révész, 2020). At worst, the studies imposed artificial conditions that do not reflect an authentic learning environment, such as not allowing participants to take notes (e.g., Küpper-Tetzel et al., 2014) or forbidding participants from engaging in specific cognitive strategies during instruction (e.g., Pavlik & Anderson, 2005).

To address these issues, Rogers and Cheung set out to examine whether the effects of input spacing extend to an authentic teaching and learning environment. Using a within-participants experimental design, Rogers and Cheung examined the learning of L2 vocabulary, specifically 20 English adjectives for describing people, across four classrooms in a primary school in Hong Kong. The innovative aspect of Rogers and Cheung’s (2018) study was that the teachers were asked to teach the target L2 lexical items as they normally would, on the condition that each teacher was consistent in their approach across the training episodes. Vocabulary was learned under two spacing conditions: a spaced-short condition with a 1-day ISI (i.e., gap between training sessions) and a spaced-long condition with an 8-day ISI. Learning was assessed after a 28-day delay (RI) using a multiple-choice test that asked the learners to circle the picture that most closely matched the meaning of the target item. The research team carried out classroom observations over the course of the experiment to examine how the teachers taught the material across the training conditions.

The lesson observations indicated that the four teachers largely adopted similar approaches: first presenting the material to the students, then emphasizing the pronunciation and spelling of the target items, followed by choral drilling and an additional form-focused practice activity, for example, completing crossword puzzles. The delayed posttest showed minimal learning gains across the four classrooms: roughly 10% improvement across all target items. This minimal learning might be explained by a lack of transfer appropriateness between the training conditions, which emphasized the pronunciation of items, and the testing condition, which reflected the degree to which participants could recognize the orthography of target items. Despite the minimal learning, participants learned the spaced-short items at significantly higher rates than the spaced-long items, contrary to the predictions of theoretical models of lag effects.

JUSTIFICATION FOR REPLICATION

There are several theoretical, pedagogical, and methodological reasons to replicate Rogers and Cheung’s (2018) study. On a theoretical level, it is important to investigate lag effects in L2 learning because they are closely linked with the benefits of repetition, review, and practice. Such benefits find support in skill-based theories of SLA (e.g., DeKeyser, 2015), in which deliberate practice can aid the transition from declarative/explicit knowledge to procedural knowledge. Lag effects have also been identified as an area of research with useful pedagogical implications because they may help bring about “desirable difficulties” in learning. Desirable difficulties arise from conditions that “trigger the encoding and retrieval processes that support learning, comprehension, and remembering” (Bjork & Bjork, 2011, p. 58). In other words, making retrieval effortful, for example by spacing practice over longer periods, strengthens long-term memory and so may lead to more efficient and effective L2 practice (Suzuki et al., 2019; see Lightbown, 2008; Rogers & Leow, 2020; Suzuki et al., 2019, 2020, for discussions of desirable difficulties in L2 learning).

Further, as noted, only a limited number of ecologically valid studies have examined the effects of the distribution of practice in any domain of learning, and additional research is needed before claims about its pedagogical applications can be justified. Fewer studies still have examined the effects of input spacing on the learning of L2 vocabulary in authentic teaching and learning contexts with nonadult learners. By carrying out such a replication, the present study responds to wider calls for more ecologically valid research with nontraditional populations within SLA (Kasprowicz & Marsden, 2018; Lightbown & Spada, 2019; Rogers & Révész, 2020; Spada, 2005, 2015).

This replication study also addresses some of the limitations of Rogers and Cheung’s (2018) study. First, while one of the strengths of Rogers and Cheung’s study is its ecologically valid research design, the study lacked the experimental control found in laboratory and cognitively oriented classroom-based research. A replication is therefore needed to help avoid “leaps of logic” in extrapolating research findings from one context to another (Hatch, 1979; Spada, 2015) and to establish that the results are real rather than an artefact of the environment in which the study took place. A replication would also help build a body of evidence on the generalizability of distribution of practice effects in SLA, given that “extrapolating from one ‘controlled’ experimental study … may not be as great as extrapolating from several ‘less controlled’ classroom studies (i.e., with intact classes) that report similar findings in distinctive settings” (Spada, 2005, p. 334). In other words, a replication would provide evidence as to the applicability of input spacing in authentic learning environments.

A second limitation of Rogers and Cheung’s (2018) study that the present study sets out to address is the low level of learning demonstrated by the learners. Theoretical accounts of the benefits of distributed practice often include some role for retrieval (Toppino & Gerbier, 2014), and if little is learned during initial study, there is little to retrieve at review. In the case of Rogers and Cheung (2018), it could therefore be argued that the low levels of learning masked any benefits of spaced practice. Accordingly, we set out to better align the materials used during training and testing, with a view to using materials that are both transfer-appropriate and valid with regard to the teaching and learning context in which this study is set.

PRESENT STUDY

Like Rogers and Cheung (2018), the present study adopted a within-participants experimental design (Rogers & Révész, 2020) to examine the impact of different spacing schedules on the learning of L2 vocabulary in a classroom setting. Table 1 summarizes the methodological features of Rogers and Cheung (2018) and the present study.

TABLE 1. Comparison of methodological features of Rogers and Cheung (2018) and the present study

PARTICIPANTS

An a priori power analysis was carried out using G*Power 3.1 (Faul et al., 2009). Assuming a medium-sized effect (f = .25) in a 2 × 2 mixed (within-between) ANOVA design, the analysis indicated that a minimum of 54 participants was required to achieve a power of .95 at an alpha level of .05.
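The sample-size figure above can be approximated with a short script. The Python sketch below computes power for the within-between interaction of a repeated-measures ANOVA from the noncentral F distribution, using the noncentrality parameter λ = f² · N · m · ε / (1 − ρ), which is, as far as we understand, the model G*Power applies to this design. The correlation among repeated measures (ρ = .5), the nonsphericity correction (ε = 1), and the choice of two groups and two measurements are assumptions (G*Power's defaults), because the settings used in the study are not reported; the function names are hypothetical.

```python
from scipy import stats


def interaction_power(n_total, f=0.25, alpha=0.05, k_groups=2,
                      m_measures=2, rho=0.5, eps=1.0):
    """Power of the within-between interaction F test (assumed G*Power model)."""
    # Noncentrality parameter for the within-between interaction.
    lam = f ** 2 * n_total * m_measures * eps / (1 - rho)
    df1 = (k_groups - 1) * (m_measures - 1) * eps
    df2 = (n_total - k_groups) * (m_measures - 1) * eps
    # Critical F under the null hypothesis, then the probability that the
    # noncentral F statistic exceeds it.
    f_crit = stats.f.ppf(1 - alpha, df1, df2)
    return stats.ncf.sf(f_crit, df1, df2, lam)


def required_n(target_power=0.95, k_groups=2, **kwargs):
    """Smallest total N (equal group sizes) that reaches the target power."""
    n = 2 * k_groups
    while interaction_power(n, k_groups=k_groups, **kwargs) < target_power:
        n += k_groups
    return n


print(required_n())  # smallest total sample size meeting power .95
```

Under these assumptions the search steps through total sample sizes in increments of the number of groups, so that group sizes stay equal.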

The participants recruited for this study were 87 children (L1 Cantonese, aged 8–9) in Primary Grade 4 at a primary school in a low socioeconomic area of Hong Kong. The participants were drawn from three intact classes. Each class was taught by a different instructor (three instructors in total), each with multiple years of experience teaching in the local context. The children had been studying English for nearly four years, having begun learning English in kindergarten as part of the Hong Kong Primary Curriculum. The children were aware that they were taking part in an experimental study, and informed consent was collected from their parents, teachers, and the school administration before the study began. Data were excluded for participants whose guardians did not complete and return the consent forms and for participants who missed one or more testing and/or training sessions, resulting in a final sample of 66 participants.

MATERIALS

All materials for this study were chosen and/or designed based on considerations of their appropriateness within the local teaching and learning context, with input from the stakeholders involved in the project, that is, teachers and other school representatives.

Twenty English words were selected for this project from the content vocabulary in the students’ course book. These 20 words comprised a mix of word classes: prepositions, nouns (e.g., body parts and food), and action verbs. To help control for item frequency effects, the words were divided into two lists of 10 words each, balanced as far as possible for lexical rarity (Cobb, 2016), word class, and, in the case of verbs, regular versus irregular forms (see Table 2).

TABLE 2. Lexical rarity and word groups of target items included in the study

Note: K1 words in normal font, K2 words in bold, off-list words in italics.

TRAINING AND TESTING MATERIALS

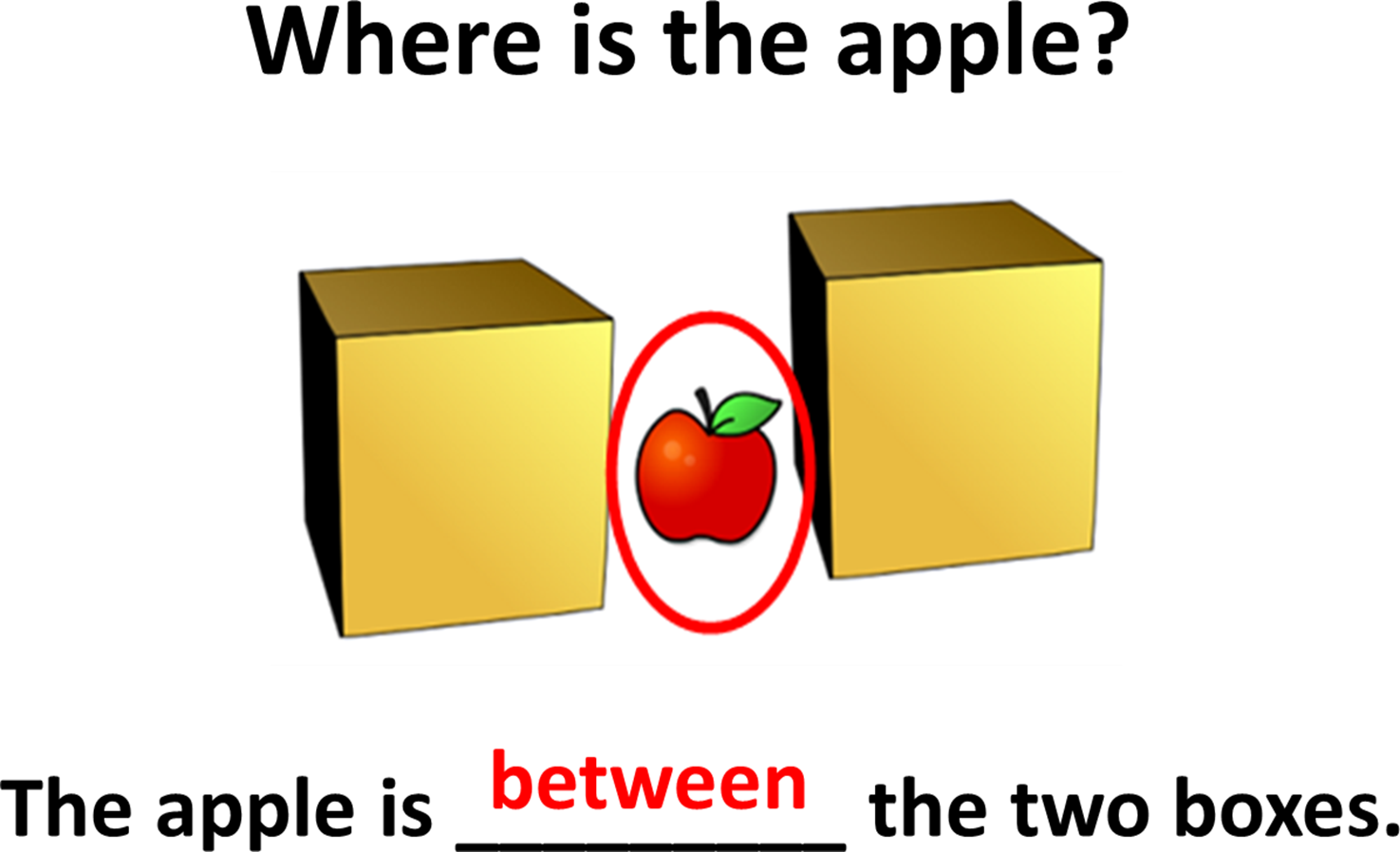

Two PowerPoint presentations were created for the teachers to use when presenting the target items: one for List A and one for List B. Each slide was animated to present information incrementally. A completed slide can be seen in Figure 1.

FIGURE 1. An example slide from PowerPoint presentations used in the training phase of the experiment.

In addition, crossword puzzles were used in both the training and testing phases of this study. These puzzles were created using a free online crossword puzzle generator (see Footnote 1). Four crossword puzzles were created for each of the two word lists (eight unique puzzles in total: four for List A and four for List B). Half of the puzzles were used in the testing phase and half in the training phase. The clues were identical across all puzzles, as they were taken from the course materials; only the order of the items and the layout of the grid differed across versions. An example puzzle is available as a supplementary file.

The materials for the testing phase were four crossword puzzles designed with the same considerations in mind as those used during the training phase. Two of these puzzles (one for List A and one for List B) were administered as the pretest, and the other two (one for List A and one for List B) as the posttest. We chose to use two separate 10-item puzzles rather than combining the two lists into a single 20-item puzzle for theoretical and practical reasons. Theoretically, this made the testing conditions more closely match the training conditions, which should in principle lead to better transfer of learning (e.g., Lightbown, 2008). Practically, the teachers in the study felt that a 20-item crossword puzzle would be too difficult to manage and that two shorter puzzles would be more appropriate for the students.

Only one posttest was administered, to help control for testing effects as a potential confounding variable (Rogers & Cheung, 2018; Suzuki, 2017). The internal reliability of the two test versions (α = .86 and .95) was acceptable according to Plonsky and Derrick’s (2016) guidelines. The entire experiment, from prebriefing to postbriefing sessions, took 9 weeks in total and comprised a prebriefing session, three training sessions, and two testing sessions (a pretest and a 28-day delayed posttest). The temporal distribution of the target vocabulary items was manipulated within participants and was identical to the spacing conditions adopted in Rogers and Cheung’s (2018) study. The 20 target items were first divided into two lists of 10 items each. One list was first studied in Training Session 1 (henceforth spaced-long items), and the other was first studied in Training Session 2 (henceforth spaced-short items). The order in which the lists were studied was counterbalanced across classes. Both lists were reviewed in Training Session 3. This resulted in an 8-day ISI for items studied in Session 1 and reviewed in Session 3, and a 1-day ISI for items studied in Session 2 and reviewed in Session 3.

The procedural timeline for the experiment is shown in Table 3. None of the target items had previously been taught by the instructors in the course. A member of the research team led a prebriefing session with the teachers, during which the consent forms were distributed and the experimental procedures and protocols were discussed. Pretests were administered in class the following week. Two weeks later, all three classes took part in Training Session 1, in which the 10 spaced-long items were introduced and taught using the prescribed materials. The assignment of word lists to the spaced-long condition was counterbalanced across the three classes: Classes A and C studied one list as spaced-long items, and Class B studied the other. One week later, the 10 spaced-short items were introduced and taught using the prescribed materials in Training Session 2. The following day, Training Session 3 took place, in which all 20 target items were reviewed. The procedure for each training session was agreed upon through collaborative discussion among the school principal, the participating teachers, and the research team. Each training session began with a PowerPoint presentation that the teachers used to introduce the target items, continued with a crossword puzzle to practice the target items, and concluded with feedback from the teachers. Training Sessions 1 and 2 each took approximately 15 minutes. Training Session 3 took approximately 25 minutes because it covered twice as many target items.
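The way the counterbalanced schedule yields the two ISIs can be made explicit with a small data structure. The Python sketch below records each training session as a day offset from Training Session 1 (Session 2 one week later, Session 3 the following day) and derives each list's ISI per class. Labeling the list shared by Classes A and C as "List A" is a hypothetical choice, since the text does not say which list each class studied first; the function names are also illustrative.

```python
# Day offsets relative to Training Session 1, read off the timeline:
# Session 2 one week later, Session 3 the following day.
SESSION_DAY = {1: 0, 2: 7, 3: 8}

# List first studied in Session 1 (i.e., the spaced-long list) for each class.
# Classes A and C shared one list and Class B had the other; calling the
# shared list "List A" is a hypothetical labeling.
SPACED_LONG_LIST = {"Class A": "List A", "Class B": "List B", "Class C": "List A"}


def schedule_for(class_name):
    """Map each word list to (first-study session, review session) for a class."""
    long_list = SPACED_LONG_LIST[class_name]
    short_list = "List B" if long_list == "List A" else "List A"
    return {long_list: (1, 3), short_list: (2, 3)}


def isi_days(first_session, review_session):
    """Intersession interval: days between first study and review."""
    return SESSION_DAY[review_session] - SESSION_DAY[first_session]


for class_name in SPACED_LONG_LIST:
    for word_list, (first, review) in sorted(schedule_for(class_name).items()):
        condition = "spaced-long" if first == 1 else "spaced-short"
        print(f"{class_name}, {word_list}: {condition}, "
              f"ISI = {isi_days(first, review)} days")
```

Whichever list a class studies first, the spaced-long list always receives the 8-day ISI and the spaced-short list the 1-day ISI, which is what makes the design within participants.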

TABLE 3. Experimental design

The surprise posttest was administered 28 days after Training Session 3 (i.e., the review session), resulting in ISI/RI ratios of 3.6% (1/28) and 28.6% (8/28) for the spaced-short and spaced-long items, respectively. The timing of the posttest was identical to that of Rogers and Cheung’s (2018) original study, which was chosen to provide the optimal ISI/RI ratio for the spaced-long condition according to Cepeda et al.’s (2008) model. Following the posttest, the teachers were interviewed to discuss the results and the degree (if any) to which they had deviated from the agreed-upon experimental procedure.
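The ISI/RI ratios follow directly from the schedule: each ISI is divided by the 28-day RI. The short Python sketch below computes both ratios and checks them against the approximate 10 to 30% band cited in the introduction. Treating that band as a hard cutoff is a simplifying assumption for illustration, and the function names are hypothetical.

```python
RI_DAYS = 28  # delay between Training Session 3 (review) and the posttest


def isi_ri_ratio(isi_days, ri_days=RI_DAYS):
    """ISI expressed as a proportion of the retention interval."""
    return isi_days / ri_days


def in_optimal_band(ratio, low=0.10, high=0.30):
    """Heuristic check against the approximate 10-30% band (assumed cutoff)."""
    return low <= ratio <= high


for label, isi in [("spaced-short", 1), ("spaced-long", 8)]:
    ratio = isi_ri_ratio(isi)
    print(f"{label}: ISI/RI = {ratio:.1%}, within band: {in_optimal_band(ratio)}")
```

Only the spaced-long condition falls inside the band, which is the sense in which the 28-day RI was chosen to favor that condition.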

SCORING AND STATISTICAL ANALYSES

Each crossword puzzle consisted of 10 items. Two dichotomous scoring methods, strict and lenient, were used to score the data, and separate analyses were run for each. Under strict scoring, an item was scored 1 if the participant provided the correct spelling of the target item and 0 otherwise; that is, any spelling mistake, no matter how minor, resulted in the item being scored as incorrect. Under lenient scoring, 1 point was awarded for either a correct spelling or an incorrect spelling that did not impede the completion of the crossword puzzle, and 0 points were awarded when the student could not provide the missing word. Approximately 25% of the scripts were independently double-marked by members of the research team. A reliability analysis showed a high level of agreement between the markers, r(70) = .992, p < .001 (see Plonsky & Derrick, 2016, for a discussion of reliability standards in SLA research). Visual inspection revealed that the data were skewed. Shapiro–Wilk tests confirmed nonnormal distributions for both pretest (W = .946, p = .006) and posttest scores (W = .799, p < .001) under strict scoring; under lenient scoring, pretest scores were normally distributed (W = .968, p = .088), whereas posttest scores were not (W = .729, p < .001).
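The two scoring rules can be sketched as simple functions. Strict scoring follows the text directly: only an exact spelling earns a point (ignoring letter case and surrounding spaces is an assumption). Lenient scoring depended on markers judging whether a misspelling impeded completion of the puzzle; the Python sketch below replaces that judgment with a hypothetical proxy, crediting any non-empty answer that still fits the word's slot in the grid (the same number of letters). All function names and example responses are illustrative, not study data.

```python
def score_strict(response, target):
    """1 for an exact spelling match, else 0 (case and outer spaces ignored)."""
    return int(response.strip().lower() == target.strip().lower())


def score_lenient(response, target):
    """Hypothetical proxy for lenient scoring: credit any non-empty answer that
    still fits the target's slot in the grid, i.e., has the same letter count."""
    answer = response.strip().lower()
    return int(bool(answer) and len(answer) == len(target.strip()))


def score_puzzle(responses, targets, scorer):
    """Total score for one puzzle under the given scoring rule."""
    return sum(scorer(r, t) for r, t in zip(responses, targets))


# Illustrative responses for two items (not study data).
targets = ["roared", "poured"]
responses = ["raored", "poured"]
print(score_puzzle(responses, targets, score_strict))   # strict total
print(score_puzzle(responses, targets, score_lenient))  # lenient total
```

Here the misspelling "raored" loses the point under strict scoring but keeps it under the lenient proxy, because it still fills the six-letter slot.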

Two statistical approaches were used to analyze the data. First, given the nonnormal distributions in three of the four data sets, nonparametric procedures, specifically Mann–Whitney U tests and Wilcoxon signed-rank tests, were carried out using SPSS V25. We chose these tests because they were identical to those run in Rogers and Cheung’s (2018) study, allowing for greater comparability. The alpha level for these tests was set at .05. Effect sizes were calculated as Pearson’s correlation coefficient r. To interpret effect sizes, we adopted Plonsky and Oswald’s (2014) field-specific benchmarks of r = .25, .40, and .60 for small, medium, and large effects, respectively.
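For rank-based tests, r is commonly obtained from the standardized test statistic as r = |Z| / √N. The text does not state which N was used; taking N = 132 observations (66 participants × 2 time points) is our assumption, and it reproduces, for example, the reported r = .60 for Z = −6.863. The Python sketch below computes r this way and classifies it with the benchmarks above; the function names are hypothetical.

```python
import math


def r_from_z(z, n_obs):
    """Effect size r = |Z| / sqrt(N) for a rank-based test statistic."""
    return abs(z) / math.sqrt(n_obs)


def label_effect(r):
    """Classify |r| with Plonsky and Oswald's (2014) benchmarks."""
    r = abs(r)
    if r >= 0.60:
        return "large"
    if r >= 0.40:
        return "medium"
    if r >= 0.25:
        return "small"
    return "below small"


# Assumes N = 132 observations (66 participants x 2 time points).
r = round(r_from_z(-6.863, 132), 2)
print(r, label_effect(r))
```

Rounding to two decimals before classifying mirrors how effect sizes are usually reported; an unrounded value just under a benchmark would otherwise fall into the lower category.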

In addition to the nonparametric tests, the data were analyzed using a series of logit mixed-effects models (Linck & Cunnings, 2015), fitted with the lme4 package in R (Bates et al., 2015). Distribution (spaced-short vs. spaced-long), time (pretest vs. posttest), and class (Class A vs. Class B vs. Class C) were included as fixed effects, with crossed random effects for participants and items. The models were built incrementally using maximum likelihood estimation (Cunnings, 2012; Linck & Cunnings, 2015; see also Rogers, 2017b; Suzuki & Sunada, 2019, for similar approaches). First, a null model was fitted with only random intercepts for participants and items. Fixed effects were then added incrementally, and each model was compared against the null model using likelihood-ratio tests via the anova() function in R; random slopes were added in the same way. If the model including a given fixed effect fit significantly better than the null model, the fixed effect was interpreted as having a significant relationship with the dependent variable and was retained in subsequent models. If the comparison was nonsignificant, the fixed effect was interpreted as having no significant relationship and was excluded from subsequent models. The best-fitting model was then examined to determine which fixed effects, if any, reached statistical significance.
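For nested models fitted by maximum likelihood, the comparison reported by R's anova() is a likelihood-ratio test: the chi-square statistic is twice the difference in log-likelihoods, with degrees of freedom equal to the number of added parameters. The Python sketch below reproduces that arithmetic with scipy; the log-likelihood values are made up for illustration and are not from this study, and the function name is hypothetical. Adding class (three levels) as a fixed effect would add two parameters, so df_diff would be 2.

```python
from scipy import stats


def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Chi-square test comparing nested models fitted by maximum likelihood."""
    chisq = 2 * (loglik_full - loglik_reduced)
    p = stats.chi2.sf(chisq, df_diff)
    return chisq, p


# Made-up log-likelihoods for a null model and a model adding time (1 parameter).
null_ll = -1450.0
with_time_ll = -1400.0
chisq, p = likelihood_ratio_test(null_ll, with_time_ll, df_diff=1)
keep_time = p < 0.05  # retain the fixed effect if the fit improves significantly
print(f"chi2(1) = {chisq:.1f}, p = {p:.3g}, retain time: {keep_time}")
```

A small log-likelihood gain, such as 0.5 for one added parameter, gives chi2(1) = 1.0 and p ≈ .32, in which case the term would be dropped under the procedure described above.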

RESULTS

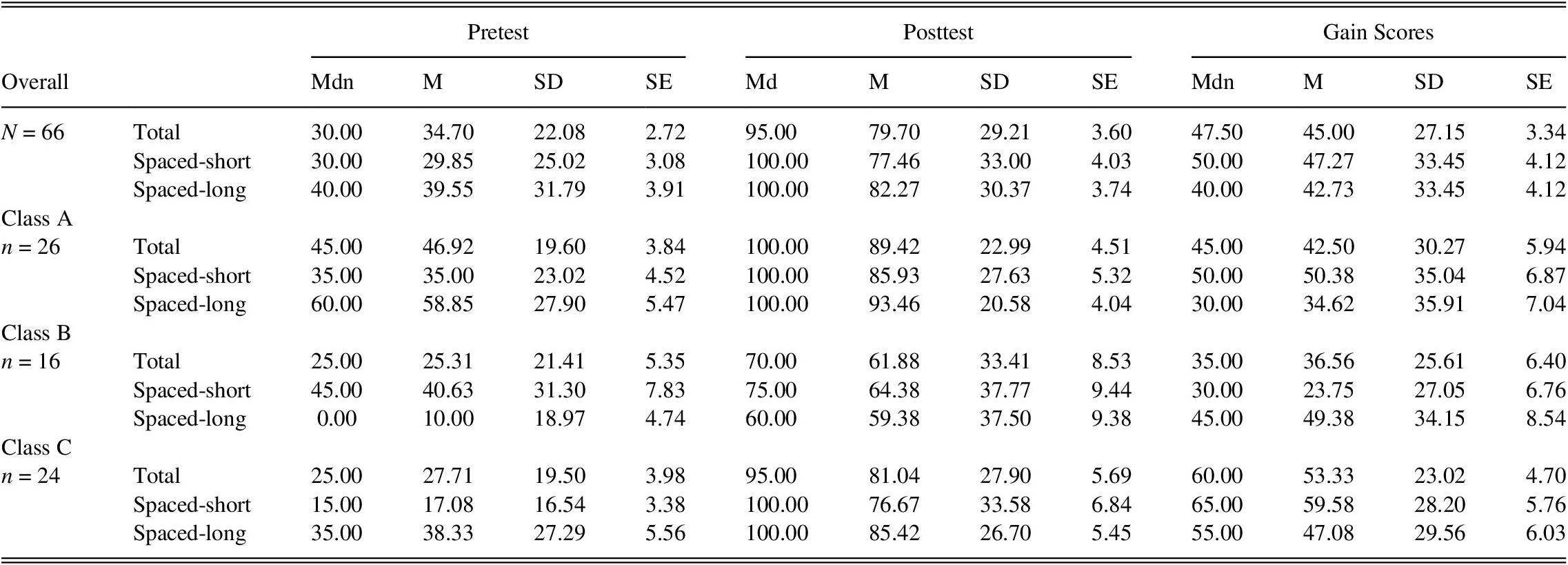

Descriptive statistics for pretest and posttest performance were generated for the three classes and further broken down by spaced-short and spaced-long items. These results are presented in Table 4 for strict scoring and Table 5 for lenient scoring. First, we examined total learning across all participants and item types using a Wilcoxon signed-rank test. This analysis revealed a significant pretest-to-posttest difference with a large effect under strict scoring (Z = −6.863, p < .001, r = .60) and similar results under lenient scoring (Z = −6.768, p < .001, r = .59).

TABLE 4. Descriptive statistics of performance on pretest, posttest, and gain scores (%) under strict scoring

TABLE 5. Descriptive statistics of performance on pretest, posttest, and gain scores (%) under lenient scoring

Similar analyses were run for spaced-short and spaced-long items separately. These analyses showed significant gains for both spaced-short (Z = −6.646, p < .001, r = .56) and spaced-long items (Z = −6.566, p < .001, r = .57) under strict scoring, and for both spaced-short (Z = −6.257, p < .001, r = .54) and spaced-long items (Z = −6.320, p < .001, r = .55) under lenient scoring, all of which are medium-sized effects. Finally, to compare spaced-short and spaced-long items, pretest-to-posttest gain scores were calculated and compared using Mann–Whitney U tests. These tests showed no significant difference between spaced-short and spaced-long items under either strict (U = 2,149.5, p = .90, r = .01) or lenient scoring (U = 1,979, p = .36, r = .08), with effect sizes well below the .25 benchmark for a small effect.

As noted, in addition to the nonparametric tests, a series of logit mixed-effects analyses was carried out. If a fixed effect reaches significance within such a model, that effect accounts for a significant amount of variance in the data. For example, a significant fixed effect of distribution would indicate a significant difference in accuracy between its levels, that is, spaced-short versus spaced-long items. In addition to main effects, the models can reveal significant interactions between fixed effects. For example, a significant interaction between distribution and time would indicate that the change from pretest to posttest differed between spaced-short and spaced-long items.
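Because these are logit models, fixed-effect estimates are on the log-odds scale. The Python sketch below uses entirely hypothetical coefficients (not those reported in Table 6) and an assumed treatment coding (pretest = 0, posttest = 1; spaced-long = 0, spaced-short = 1) to show how estimates translate into predicted accuracy and what a time × distribution interaction would mean. For simplicity it ignores the random effects, so predictions refer to a participant and item whose random effects are zero.

```python
import math


def inv_logit(x):
    """Convert log-odds to a probability."""
    return 1 / (1 + math.exp(-x))


# Hypothetical log-odds coefficients (not from this study).
INTERCEPT = -1.5      # pretest, spaced-long items
B_TIME = 2.5          # change from pretest to posttest
B_DIST = 0.1          # spaced-short vs. spaced-long at pretest
B_TIME_X_DIST = 0.0   # extra pre-post change for spaced-short items


def predicted_accuracy(time, distribution):
    """Predicted probability of a correct answer for one cell of the design."""
    eta = (INTERCEPT + B_TIME * time + B_DIST * distribution
           + B_TIME_X_DIST * time * distribution)
    return inv_logit(eta)


for time in (0, 1):
    for dist in (0, 1):
        print(time, dist, round(predicted_accuracy(time, dist), 3))
```

With the interaction coefficient at zero, the pretest-to-posttest change in log-odds is identical for both item types; a nonzero value would make the gain differ between spaced-short and spaced-long items, which is what a significant distribution × time interaction would signal.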

The results of these models corroborated the nonparametric tests: a significant effect was found for time, indicating that participants’ performance improved significantly from pretest to posttest. Most importantly, the fixed effect of distribution and its interactions with group and time were all nonsignificant, indicating that spaced-short and spaced-long items did not differ significantly in pretest or posttest scores, or across the groups (i.e., classes) that took part in the study. The results of the best-fitting models for strict and lenient scoring are presented in Table 6.

TABLE 6. Results of best-fitting models of logit mixed-effects models for strict and lenient scoring

Note: Time = pretest vs. posttest; distribution = spaced-short vs. spaced-long; group = Class A vs. Class B vs. Class C. * denotes interaction. Model formula: accuracy ~ time*group*distribution + (time*distribution | participant) + (group | item), glmerControl(optimizer = "bobyqa"), family = binomial.

QUALITATIVE RESULTS

The main objective of the postexperiment interviews was to collect information from the teachers as to whether and to what degree they deviated from the experimental procedure and to confirm that they maintained consistency across the different training sessions during the experiment.

Overall, all three teachers reported that they were consistent in their approaches across the training stages of the experiment and utilized the experimental materials as intended. Teacher A reported playing a miming game with students after presenting the PowerPoint and prior to asking students to complete the practice crossword puzzles during the training sessions. While going through the PowerPoint, the teacher also highlighted the past tense forms (e.g., sat, roared, walked) of the verbs in the study, by explicitly pointing these out to the students during the presentation. Teacher B reported that they did not deviate from the procedure and went through the PowerPoint with students, asking them to remember the words, prior to completing the practice crossword puzzles. Teacher C reported following the experimental procedure but also devised an additional activity where they showed the pictures one by one to the students and asked the students to spell the words to their partner. During the presentation and feedback, they reported highlighting the spelling of words that they perceived to be difficult for the students, such as poured and roared. All teachers reported checking the answers to the crossword puzzles with the students during the training and review sessions by eliciting the answers from the students and showing the students the correct answer on the overhead projector.

DISCUSSION

This study examined optimum learning schedules for L2 vocabulary in child classrooms under ecologically justified learning conditions. Participants learned the target vocabulary items in two learning sessions following either a spaced-short (1-day ISI) or a spaced-long (8-day ISI) condition. Learning was measured through a 4-week delayed posttest, which consisted of crossword puzzles that were transfer-appropriate to the training conditions of the study. The results indicated medium to high learning gains across all target items, with no significant differences between the two spacing conditions. These results are in line with existing research on lag effects in child L2 classrooms (Kasprowicz et al., 2019; Küpper-Tetzel et al., 2014; Rogers & Cheung, 2018; Serrano & Huang, 2018), which has found no advantage for spaced-long over spaced-short conditions. Taken as a whole, these results add to growing evidence that lag effects, in particular the optimum ISI/RI ratios reported in the cognitive psychology literature, might not translate directly to conditions with lower levels of experimental control.
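The two spacing conditions can be located relative to the 10 to 30% ISI/RI heuristic from the cognitive psychology literature with simple arithmetic. The sketch below assumes that the 4-week RI corresponds to 28 days measured from the final training session; the function name is illustrative.

```python
def isi_ri_ratio(isi_days: float, ri_days: float) -> float:
    """Return the intersession interval as a proportion of the retention interval."""
    return isi_days / ri_days


# Assumption: 4-week delayed posttest = 28 days after the final training session
RI_DAYS = 28

for label, isi in [("spaced-short", 1), ("spaced-long", 8)]:
    ratio = isi_ri_ratio(isi, RI_DAYS)
    # 10-30% heuristic for the optimal ISI (Rohrer & Pashler, 2007)
    in_band = 0.10 <= ratio <= 0.30
    print(f"{label}: ISI/RI = {ratio:.1%}, within 10-30% band: {in_band}")
```

Under this assumption, only the spaced-long condition (8/28, about 28.6%) falls within the band, whereas the spaced-short condition (1/28, about 3.6%) falls below it. The framework would therefore predict an advantage for the spaced-long condition, which the present results did not show.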

Across the SLA literature, the empirical evidence for the advantages of distributed practice is mixed. Some studies have provided support for the ISI/RI ratio framework proposed within the cognitive psychology literature (Cepeda et al., 2008) when examining the learning of isolated L2 grammatical structures (Bird, 2010; Rogers, 2015). Other studies, however, have returned conflicting results, showing either advantages for more intensive conditions or similar amounts of learning across conditions (Suzuki, 2017; Suzuki & DeKeyser, 2017). Studies that have compared more intensive and more extensive learning schedules at the program level have likewise reported either advantages for more intensive conditions or similar results across conditions (e.g., Collins & White, 2011; Serrano, 2011; Serrano & Muñoz, 2007). Taken together with the results of the present study and the four child L2 classroom-based studies cited above, there does not at present appear to be a clear advantage for longer spacing conditions in the extant SLA literature.

There are a number of plausible explanations for this trend. The first, as has been suggested in the literature (e.g., Rogers, 2017a; Serrano, 2012), is that the optimum ISI/RI ratios of Cepeda et al. (2008) are not directly applicable to SLA, because SLA is arguably more complex than the learning of trivia facts on which Cepeda et al.'s (2008) model is based (see Serrano, 2012, for a discussion). This interpretation remains speculative, because the majority of SLA studies to date, including the present study, have justified the timing of their delayed posttests in light of Cepeda et al.'s (2008) model, with mixed results. A systematic exploration of the learning of SLA content over a much wider range of ratios would provide the evidence needed to test this interpretation. In this regard, future SLA research might begin by establishing whether ISI/RI ratios apply to the learning of SLA content under controlled laboratory conditions, and then explore the degree to which the findings generalize to authentic teaching and learning contexts. A second possibility lies in methodological differences between SLA studies and those in cognitive psychology. One difference, as noted in the preceding text, is that studies in cognitive psychology typically manipulate the timing of posttests between participants, whereas SLA studies tend to do so within participants, which creates a potential confound due to repeated retrieval opportunities. A final possibility is that the benefits of distributed practice have been overstated in the literature and that previous findings are not robust in the face of the increased variability present in authentic classroom environments. Such variability is evident in the current study, as reflected in the large standard deviations within each group. Regardless of the explanation, further research, both laboratory- and classroom-based, is needed to substantiate any claims about the benefits of longer spacing conditions for SLA and, in particular, instructed SLA.

A further point of discussion concerns the conceptualization of spacing within the broader SLA and cognitive psychology literature. As noted, studies of spacing operationalize retention in terms of the interval between the final training session and the test, that is, the RI. However, as a reviewer pointed out, the interval between the initial training session and the test is also pedagogically relevant, in that instructors and students may be concerned with how much vocabulary is retained over long periods following initial exposure, as well as following later review. Future SLA research might explore total retention time (i.e., the time from initial exposure to testing) as a potential moderator of spacing effects.
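Holding the RI constant across conditions means that total retention time differs between them. The sketch below assumes that the two training sessions fall on day 0 and day ISI and that the posttest follows the second session after a 28-day RI; the helper name is hypothetical.

```python
def total_retention_days(isi_days: int, ri_days: int) -> int:
    """Days from the first training session to the test, assuming the sessions
    fall on day 0 and day ISI and the test follows the last session by RI days."""
    return isi_days + ri_days


# Assumption: 4-week RI = 28 days, measured from the final training session
RI_DAYS = 28

for label, isi in [("spaced-short", 1), ("spaced-long", 8)]:
    total = total_retention_days(isi, RI_DAYS)
    print(f"{label}: RI = {RI_DAYS} days, total retention time = {total} days")
```

Under these assumptions, spaced-long learners are tested 36 days after initial exposure and spaced-short learners after 29 days, so the conditions differ by 7 days in total retention time even though the RI is matched.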

It is also important to highlight some of the methodological limitations of the present study. Unlike the previous research on which the current experiment is based (Rogers & Cheung, 2018), we were unable to carry out lesson observations, for practical reasons and because of agreements with the teachers and other stakeholders in the host school. We therefore relied on postexperiment interviews with the teachers to collect data on their fidelity to the experimental protocols. Although the teachers reported using the materials as intended, and we have no reason to believe otherwise, we acknowledge that we do not have direct evidence of the teachers' classroom practice throughout this study, and their responses may have been influenced by, for example, social desirability bias. Two further limitations concern measurement. First, because the same clues were included across the crossword puzzles used throughout the experiment, the results might have been influenced by a testing effect. Second, the outcome measure used in the current study, crossword puzzles, captures only one aspect of vocabulary knowledge, namely form recall (Schmitt, 2010). Future research would benefit from using multiple measures of vocabulary knowledge, which would provide a more complete picture of lexical development (Webb, 2005).

A final point concerns ecological validity. As noted, some previous quasi-experimental research on distribution of practice has taken a narrow view of ecological validity, operationalizing it as any experimental study that takes place in a classroom setting, regardless of the level of experimental control and the authenticity of the experimental manipulations. To make claims about the degree to which research findings generalize to authentic learning environments, in SLA or otherwise, interventions need to be tested empirically within authentic environments with higher degrees of ecological validity. Researchers might do so by validating and justifying their experimental interventions and instruments with respect to the context to which they aim to generalize (Lightbown & Spada, 2019; Rogers & Révész, 2020). Such studies might be expected to yield correspondingly smaller effect sizes. However, if the effects of an intervention cannot be observed in a quasi-experimental study whose manipulations are ecologically valid, we question whether any effects would be seen when the intervention is implemented “at the chalkface,” that is, in real teaching and learning environments.

CONCLUSION

By way of conclusion, we would like to comment briefly on the practical implications of this research. It is unlikely that anyone, whether researcher or practitioner, would question that review and revision are beneficial for learning. At a theoretical level, such benefits find support in, for example, skill-based theories of language learning (e.g., DeKeyser, 2017), in which repeated practice is necessary for proceduralization and automatization to take place. The question posed by studies of distribution of practice effects is therefore not whether, but when and how often, review should take place to optimize learning (Suzuki et al., 2019). With regard to how often, there is some unsurprising evidence that “more is better” (Bahrick et al., 1993). With regard to when, a review close to the assessment is likely to be most beneficial to students' performance on that assessment (Cepeda et al., 2008). For the long-term retention of L2 vocabulary studied by young learners as part of normal classroom instruction, the available evidence indicates that the timing of the review does not significantly influence learning or retention. In other words, the practical takeaway for language teachers from this growing body of research is that, within the range of intervals studied so far, it does not appear to matter when L2 vocabulary is reviewed as part of classroom instruction, as long as it is reviewed.

SUPPLEMENTARY MATERIALS

To view supplementary material for this article, please visit https://doi.org/10.1017/S0272263120000236.