1. Introduction

Real business cycle (RBC) models and their modern versions in dynamic stochastic general equilibrium (DSGE) models have enjoyed a great deal of success since they were first introduced to the literature by Kydland and Prescott (Reference Kydland and Prescott1982). These models are the standard framework used by academics and policymakers to understand economic fluctuations and analyze the effects of monetary and fiscal policies on the macroeconomy. They typically feature rational optimizing agents and various sources of random disturbances to preferences, technology, government purchases, monetary policy rules, and/or international trade. Two notable disturbances, that are now considered standard, are shocks to neutral technology (NT) and shocks to investment-specific technology (IST). NT shocks make both labor and existing capital more productive. On the other hand, IST shocks have no impact on the productivity of old capital goods, but they make new capital goods more productive and less expensive.

Nonetheless, the modeling of these two types of technology in many DSGE models often varies greatly from a stationary process to an integrated smooth trend process, with each choice having different implications for the variations in economic activity and the analysis of macro variables. In this paper, we revisit the question of the specification of NT and IST via the lens of an unobserved components (UCs) model of the total factor productivity (TFP) and the relative price of investment (RPI). Specifically, the approach allows us to decompose a series into two unobserved components: one UC that reflects permanent or trend movements in the series, and the other that captures transitory movements in the series. In addition, based on the stock of evidence from Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011), Benati (Reference Benati2013), Basu et al. (Reference Basu, Fernald and Oulton2003), Chen and Wemy (Reference Chen and Wemy2015), and other studies that appear to indicate that the two series are related in the long run, we specify that RPI and TFP share a common unobserved trend component. We label this component as general purpose technology (GPT), and we argue that it reflects spillover effects from innovations in information and communication technologies to aggregate productivity. As demonstrated in other studies, we contend that such an analysis of the time series properties of TFP and RPI must be the foundation for the choice of the stochastic processes of NT and IST in DSGE models.

The UC framework offers ample flexibility and greater benefits. First, our framework nests all competing theories of the univariate and bivariate properties of RPI and TFP. In fact, the framework incorporates Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011)’s result of co-integration and Benati (Reference Benati2013)’s findings of the long-run positive comovement as special cases. In that sense, we are able to evaluate the validity of all the proposed specifications of NT and IST in the DSGE literature. Furthermore, the UC structure yields a quantitative estimate, in addition to the qualitative measure in Benati (Reference Benati2013), of potential scale differences between the trends of RPI and TFP. Last, but not least, it is grounded on economic theory. In particular, we demonstrate that our UC framework may be derived from the neoclassical growth model used by studies in the growth accounting literature, for example, Greenwood et al. (Reference Greenwood, Hercowitz and Huffman1997a, Reference Greenwood, Hercowitz and Per1997b), Oulton (Reference Oulton2007), and Greenwood and Krusell (Reference Greenwood and Krusell2007), to investigate the contribution of embodiment in the growth of aggregate productivity. As such, we may easily interpret the idiosyncratic UC of RPI and TFP, and their potential interaction from the lenses of a well-defined economic structure.

Using the time series of the logarithm of (the inverse of) RPI and TFP from

![]() $1959.II$

to

$1959.II$

to

![]() $2019.II$

in the USA, we estimate our UC model through Markov chain Monte Carlo methods developed by Chan and Jeliazkov (Reference Chan and Jeliazkov2009) and Grant and Chan (Reference Grant and Chan2017). A novel feature of this approach is that it builds upon the band and sparse matrix algorithms for state space models, which are shown to be more efficient than the conventional Kalman filter-based algorithms. Two points emerge from our estimation results.

$2019.II$

in the USA, we estimate our UC model through Markov chain Monte Carlo methods developed by Chan and Jeliazkov (Reference Chan and Jeliazkov2009) and Grant and Chan (Reference Grant and Chan2017). A novel feature of this approach is that it builds upon the band and sparse matrix algorithms for state space models, which are shown to be more efficient than the conventional Kalman filter-based algorithms. Two points emerge from our estimation results.

First, our findings indicate that the idiosyncratic trend component in RPI and TFP is better captured by a differenced first-order autoregressive, ARIMA(1,1,0), process; a result which suggests that NT and IST should each be modeled as following an ARIMA(1,1,0) process. While several papers like Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b), Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011), and Kaihatsu and Kurozumi (Reference Kaihatsu and Kurozumi2014) adopt an ARIMA(1,1,0) specification, other notable studies such as Smets and Wouters (Reference Smets and Wouters2007) and Fisher (Reference Fisher2006) impose either a trend stationary ARMA(1,0) process or an integrated ARIMA(0,1,0) process, respectively. Through our exploration of the literature, we find that this disconnected practice is complicated by two important facts. The first fact is that it is not easy to establish whether highly persistent macro data are trend stationary or difference stationary in finite sample. Since the models are expected to fit the data along this dimension, researchers typically take a stand on the specification of the trend in DSGE models by arbitrarily building into these models stationary or nonstationary components of NT and IST. Then, the series are transformed accordingly in the same fashion prior to the estimation of the parameters of the models. However, several studies demonstrate that this approach may create various issues such as generating spurious cycles and correlations in the filtered series, and/or altering the persistence and the volatility of the original series. The second fact, which is closely related to the first, is that DSGE models must rely on impulse dynamics to match the periodicity of output as such models have weak amplification mechanisms. In DSGE models, periodicity is typically measured by the autocorrelation function of output. Many studies document that output growth is positively correlated over short horizons. Consequently, if technology follows a differenced first-order autoregressive, ARIMA(1,1,0), process, the model will approximately mimic the dynamics of output growth in the data. Therefore, our results serve to reduce idiosyncratic trend misspecification which may lead to erroneous conclusions about the dynamics of macroeconomic variables.

The second point is that while the idiosyncratic trend component in each (the inverse of RPI and TFP) is not common to both series, it appears that the variables share a common stochastic trend component which captures a positive long-run covariation between the series. We argue that the common stochastic trend component is a reflection of GPT progress from innovations in information and communications technologies. In fact, using industry-level and aggregate-level data, several studies, such as Cummins and Violante (Reference Cummins and Violante2002), Basu et al. (Reference Basu, Fernald and Oulton2003), and Jorgenson et al. (Reference Jorgenson, Ho, Samuels and Stiroh2007), document that improvements in information communication technologies contributed to productivity growth in the 1990s and the 2000s in essentially every industry in the USA. Through our results, we are also able to confirm that changes in the trend of RPI have lasting impact on the long-run developments of TFP. In addition, errors associated with the misspecification of common trend between NT and IST may bias conclusions about the source of business cycle fluctuations in macroeconomic variables. For instance, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) shows that when NT and IST are co-integrated, then the shocks to the common stochastic trend become the major source of fluctuations of output, investment, and hours. This challenges results in several studies which demonstrate that exogenous disturbances in either NT or IST, may individually, account for the majority of the business cycle variability in economic variables. Therefore, our results suggest that researchers might need to modify DSGE models to consider the possibility of the existence of this long-run relationship observed in the data and its potential effect on fluctuations.

2. The model

Fundamentally, there are multiple potential representations of the relationship between the trend components and the transitory components in RPI and TFP. We adopt the UC approach which stipulates that RPI and TFP can each be represented as the sum of a permanent component, an idiosyncratic component, and a transitory component in the following fashion:

where

![]() $z_{t}$

is the logarithm of RPI,

$z_{t}$

is the logarithm of RPI,

![]() $x_{t}$

is the logarithm of TFP,

$x_{t}$

is the logarithm of TFP,

![]() $\tau _{t}$

is the common trend component in RPI and TFP,

$\tau _{t}$

is the common trend component in RPI and TFP,

![]() $\tau _{x,t}$

is the idiosyncratic trend component in TFP, and

$\tau _{x,t}$

is the idiosyncratic trend component in TFP, and

![]() $c_{z,t}$

and

$c_{z,t}$

and

![]() $c_{x,t}$

are the corresponding idiosyncratic transitory components. The parameter

$c_{x,t}$

are the corresponding idiosyncratic transitory components. The parameter

![]() $\gamma$

captures the relationship between the trends in RPI and TFP.

$\gamma$

captures the relationship between the trends in RPI and TFP.

The first differences of the trend components are modeled as following stationary processes:

where

![]() $\eta _t\sim \mathcal{N}(0,\sigma _{\eta }^2)$

and

$\eta _t\sim \mathcal{N}(0,\sigma _{\eta }^2)$

and

![]() $\eta _{x,t}\sim \mathcal{N}(0,\sigma _{\eta _x}^2)$

are independent of each other at all leads and lags,

$\eta _{x,t}\sim \mathcal{N}(0,\sigma _{\eta _x}^2)$

are independent of each other at all leads and lags,

![]() $1(A)$

is the indicator function for the event A, and

$1(A)$

is the indicator function for the event A, and

![]() $T_{B}$

is the index corresponding to the time of the break at

$T_{B}$

is the index corresponding to the time of the break at

![]() $1982.I$

.

$1982.I$

.

Finally, following Morley et al. (Reference Morley, Nelson and Zivot2003) and Grant and Chan (Reference Grant and Chan2017), the transitory components are assumed to follow AR(2) processes:

where

![]() $\epsilon _{z,t}\sim \mathcal{N}(0,\sigma _{z}^2)$

,

$\epsilon _{z,t}\sim \mathcal{N}(0,\sigma _{z}^2)$

,

![]() $\epsilon _{x,t}\sim \mathcal{N}(0,\sigma _{x}^2)$

, and

$\epsilon _{x,t}\sim \mathcal{N}(0,\sigma _{x}^2)$

, and

![]() $corr(\varepsilon _{z,t},\varepsilon _{x,t}) = 0$

.Footnote

1

$corr(\varepsilon _{z,t},\varepsilon _{x,t}) = 0$

.Footnote

1

The presence of the common stochastic trend component in RPI and TFP captures the argument that innovations in information technologies are GPT, and they have been the main driver of the trend in RPI and a major source of the growth in productivity in the USA. Simply put, GPT can be defined as a new method that leads to fundamental changes in the production process of industries using it, and it is important enough to have a protracted aggregate impact on the economy. As discussed extensively in Jovanovic and Rousseau (Reference Jovanovic and Rousseau Peter2005), electrification and information technology (IT) are probably the most recent GPTs so far. In fact, using industry-level data, Cummins and Violante (Reference Cummins and Violante2002) and Basu et al. (Reference Basu, Fernald and Oulton2003) find that improvements in information communication technologies contributed to productivity growth in the 1990s in essentially every industry in the USA. Moreover, Jorgenson et al. (Reference Jorgenson, Ho, Samuels and Stiroh2007) show that much of the TFP gain in the USA in the 2000s originated in industries that are the most intensive users of IT. Specifically, the authors look at the contribution to the growth rate of value-added and aggregate TFP in the USA in 85 industries. They find that the four IT-producing industries (computer and office equipment, communication equipment, electronic components, and computer services) accounted for nearly all of the acceleration of aggregate TFP in 1995–2000. Furthermore, IT-using industries, which engaged in great IT investment in the period 1995–2000, picked up the momentum and contributed almost half of the aggregate acceleration in 2000–2005. Overall, the authors assert that IT-related industries made significant contributions to the growth rate of TFP in the period 1960–2005. Similarly, Gordon (Reference Gordon1990) and Cummins and Violante (Reference Cummins and Violante2002) have argued that technological progress in areas such as equipment and software have contributed to a faster rate of decline in RPI, a fact that has also been documented in Fisher (Reference Fisher2006) and Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b).

A complementary interpretation of the framework originates from the growth accounting literature associated with the relative importance of embodiment in the growth of technology. In particular, Greenwood et al. (Reference Greenwood, Hercowitz and Huffman1997a, Reference Greenwood, Hercowitz and Per1997b), Greenwood and Krusell (Reference Greenwood and Krusell2007), and Oulton (Reference Oulton2007) show that the nonstationary component in TFP is a combination of the trend in NT and the trend in IST. In that case, we may interpret the common component,

![]() $\tau _{t}$

, as the trend in IST,

$\tau _{t}$

, as the trend in IST,

![]() $\tau _{x,t}$

as the trend in NT, and the parameter

$\tau _{x,t}$

as the trend in NT, and the parameter

![]() $\gamma$

as the current price share of investment in the value of output. In Appendix A.1, we show that our UC framework may be derived from a simple neoclassical growth model.

$\gamma$

as the current price share of investment in the value of output. In Appendix A.1, we show that our UC framework may be derived from a simple neoclassical growth model.

Furthermore, this UC framework offers more flexibility as it nests all the univariate and bivariate specifications of NT and IST in the literature. Specifically, let us consider the following cases:

-

1. If

$\gamma = 0$

, then trends in RPI and TFP are independent of each other, and this amounts to the specifications adopted in Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b) and Kaihatsu and Kurozumi (Reference Kaihatsu and Kurozumi2014).

$\gamma = 0$

, then trends in RPI and TFP are independent of each other, and this amounts to the specifications adopted in Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b) and Kaihatsu and Kurozumi (Reference Kaihatsu and Kurozumi2014). -

2. If

$\gamma = 0$

,

$\gamma = 0$

,

$\varphi _{\mu } = 0$

, and

$\varphi _{\mu } = 0$

, and

$\varphi _{\mu _{x}} = 0$

, then the trend components follow a random walk plus drift, and the resulting specification is equivalent to the assumptions found in Fisher (Reference Fisher2006).

$\varphi _{\mu _{x}} = 0$

, then the trend components follow a random walk plus drift, and the resulting specification is equivalent to the assumptions found in Fisher (Reference Fisher2006). -

3. If we pre-multiply equation (1) by

$\gamma$

, and subtract the result from equation (2), we obtain(7)where

$\gamma$

, and subtract the result from equation (2), we obtain(7)where \begin{equation} x_{t} - \gamma z_{t} = \tau _{x,t} + c_{xz,t} \end{equation}

\begin{equation} x_{t} - \gamma z_{t} = \tau _{x,t} + c_{xz,t} \end{equation}

$c_{xz,t} = c_{x,t} - \gamma c_{z,t}$

.

$c_{xz,t} = c_{x,t} - \gamma c_{z,t}$

.-

(a) If

$\gamma \neq 0$

,

$\gamma \neq 0$

,

$\varphi _{\mu _{x}} = 0$

, and

$\varphi _{\mu _{x}} = 0$

, and

$\sigma _{\eta _{x}} = 0$

, then RPI and TFP are co-integrated, with co-integrating vector

$\sigma _{\eta _{x}} = 0$

, then RPI and TFP are co-integrated, with co-integrating vector

$(-\gamma, 1)$

, as argued in Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011).

$(-\gamma, 1)$

, as argued in Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011). -

(b) If, in addition,

$\zeta _{x} \neq 0$

, then

$\zeta _{x} \neq 0$

, then

$\tau _{x,t}$

is a linear deterministic trend, and RPI and TFP are co-integrated around a linear deterministic trend.

$\tau _{x,t}$

is a linear deterministic trend, and RPI and TFP are co-integrated around a linear deterministic trend. -

(c) If, otherwise,

$\zeta _{x} = 0$

, the RPI and TFP are co-integrated around a constant term.

$\zeta _{x} = 0$

, the RPI and TFP are co-integrated around a constant term.

-

-

4. If

$\gamma \gt 0$

(

$\gamma \gt 0$

(

$\gamma \lt 0$

), then the common trend component has a positive effect on both RPI and TFP (a positive effect on RPI and a negative effect on TFP), which would imply a positive (negative) covariation between the two series. This is essentially the argument in Benati (Reference Benati2013).

$\gamma \lt 0$

), then the common trend component has a positive effect on both RPI and TFP (a positive effect on RPI and a negative effect on TFP), which would imply a positive (negative) covariation between the two series. This is essentially the argument in Benati (Reference Benati2013). -

5. If

$\varphi _{\mu } = 1$

and

$\varphi _{\mu } = 1$

and

$\varphi _{\mu _{x}} = 1$

. This gives rise to the following smooth evolving processes for the trend:(8)

$\varphi _{\mu _{x}} = 1$

. This gives rise to the following smooth evolving processes for the trend:(8) \begin{equation} \Delta \tau _t = \zeta _1 1(t\lt T_{B}) + \zeta _2 1(t\geq T_{B}) + \Delta \tau _{t-1} + \eta _t, \end{equation}

(9)

\begin{equation} \Delta \tau _t = \zeta _1 1(t\lt T_{B}) + \zeta _2 1(t\geq T_{B}) + \Delta \tau _{t-1} + \eta _t, \end{equation}

(9) \begin{equation} \Delta \tau _{x,t} = \zeta _{x,1}1(t\lt T_{B}) + \zeta _{x,2}1(t\geq t_0) + \Delta \tau _{x,t-1} +\eta _{x,t}. \end{equation}

\begin{equation} \Delta \tau _{x,t} = \zeta _{x,1}1(t\lt T_{B}) + \zeta _{x,2}1(t\geq t_0) + \Delta \tau _{x,t-1} +\eta _{x,t}. \end{equation}

Through a model comparison exercise, we are able to properly assess the validity of each of these competing assumptions and their implications for estimation and inference in order to shed light on the appropriate representation between NT and IST.

3. Bayesian estimation

In this section, we provide the details of the priors and outline the Bayesian estimation of the unobserved components model in equations (1)–(6). In particular, we highlight how the model can be estimated efficiently using band matrix algorithms instead of conventional Kalman filter-based methods.

We assume proper but relatively noninformative priors for the model parameters

![]() $\gamma$

,

$\gamma$

,

![]() $\boldsymbol \phi =(\phi _{z,1},\phi _{z,2},\phi _{x,1},\phi _{x,2} )^{\prime }, \boldsymbol \varphi =(\varphi _{\mu },\varphi _{\mu _x})^{\prime }, \boldsymbol \zeta = (\zeta _1,\zeta _2,\zeta _{x,1},\zeta _{x,2})^{\prime }$

,

$\boldsymbol \phi =(\phi _{z,1},\phi _{z,2},\phi _{x,1},\phi _{x,2} )^{\prime }, \boldsymbol \varphi =(\varphi _{\mu },\varphi _{\mu _x})^{\prime }, \boldsymbol \zeta = (\zeta _1,\zeta _2,\zeta _{x,1},\zeta _{x,2})^{\prime }$

,

![]() $\boldsymbol \sigma ^2 = (\sigma _{\eta }^2, \sigma _{\eta _x}^2, \sigma _{z}^2, \sigma _{x}^2)^{\prime }$

, and

$\boldsymbol \sigma ^2 = (\sigma _{\eta }^2, \sigma _{\eta _x}^2, \sigma _{z}^2, \sigma _{x}^2)^{\prime }$

, and

![]() $\boldsymbol \tau _0$

. In particular, we adopt a normal prior for

$\boldsymbol \tau _0$

. In particular, we adopt a normal prior for

![]() $\gamma$

:

$\gamma$

:

![]() $\gamma \sim \mathcal{N}(\gamma _{0}, V_{\gamma })$

with

$\gamma \sim \mathcal{N}(\gamma _{0}, V_{\gamma })$

with

![]() $\gamma _{0} = 0$

and

$\gamma _{0} = 0$

and

![]() $V_{\gamma }=1$

. These values imply a weakly informative prior centered at 0. Moreover, we assume independent priors for

$V_{\gamma }=1$

. These values imply a weakly informative prior centered at 0. Moreover, we assume independent priors for

![]() $\boldsymbol \phi$

,

$\boldsymbol \phi$

,

![]() $\boldsymbol \varphi$

,

$\boldsymbol \varphi$

,

![]() $\boldsymbol \zeta$

and

$\boldsymbol \zeta$

and

![]() $\boldsymbol \tau _0$

:

$\boldsymbol \tau _0$

:

where

![]() $\textbf{R}$

denotes the stationarity region. The prior on the AR coefficients

$\textbf{R}$

denotes the stationarity region. The prior on the AR coefficients

![]() $\boldsymbol \phi$

affects how persistent the cyclical components are. We assume relatively large prior variances,

$\boldsymbol \phi$

affects how persistent the cyclical components are. We assume relatively large prior variances,

![]() $\textbf{V}_{\boldsymbol \phi } = \textbf{I}_4$

, so that a priori

$\textbf{V}_{\boldsymbol \phi } = \textbf{I}_4$

, so that a priori

![]() $\boldsymbol \phi$

can take on a wide range of values. The prior mean is assumed to be

$\boldsymbol \phi$

can take on a wide range of values. The prior mean is assumed to be

![]() $\boldsymbol \phi _0= (1.3, -0.7, 1.3, -0.7)^{\prime }$

, which implies that each of the two AR(2) processes has two complex roots, and they are relatively persistent. Similarly, for the prior on

$\boldsymbol \phi _0= (1.3, -0.7, 1.3, -0.7)^{\prime }$

, which implies that each of the two AR(2) processes has two complex roots, and they are relatively persistent. Similarly, for the prior on

![]() $\boldsymbol \varphi$

, we set

$\boldsymbol \varphi$

, we set

![]() $\textbf{V}_{\boldsymbol \varphi } = \textbf{I}_2$

with prior mean

$\textbf{V}_{\boldsymbol \varphi } = \textbf{I}_2$

with prior mean

![]() $\boldsymbol \varphi _0= (0.9, 0.9)^{\prime }$

, which implies that the two AR(1) processes are fairly persistent. Next, we assume that the priors on

$\boldsymbol \varphi _0= (0.9, 0.9)^{\prime }$

, which implies that the two AR(1) processes are fairly persistent. Next, we assume that the priors on

![]() $ \sigma _{z}^2$

and

$ \sigma _{z}^2$

and

![]() $\sigma _{x}^2$

are inverse-gamma:

$\sigma _{x}^2$

are inverse-gamma:

We set

![]() $\nu _{z} = \nu _{x} = 4$

,

$\nu _{z} = \nu _{x} = 4$

,

![]() $S_{z} = 6\times 10^{-5}$

, and

$S_{z} = 6\times 10^{-5}$

, and

![]() $S_{x} = 3\times 10^{-5}$

. These values imply prior means of

$S_{x} = 3\times 10^{-5}$

. These values imply prior means of

![]() $\sigma _{z}^2$

and

$\sigma _{z}^2$

and

![]() $\sigma _{x}^2$

to be, respectively,

$\sigma _{x}^2$

to be, respectively,

![]() $2\times 10^{-5}$

and

$2\times 10^{-5}$

and

![]() $S_{x} = 10^{-5}$

.

$S_{x} = 10^{-5}$

.

For

![]() $\sigma _{\eta }^2$

and

$\sigma _{\eta }^2$

and

![]() $\sigma _{\eta _x}^2$

, the error variances in the state equations (3) and (4), we follow the suggestion of Frühwirth-Schnatter and Wagner (Reference Frühwirth-Schnatter and Wagner2010) to use normal priors centered at 0 on the standard deviations

$\sigma _{\eta _x}^2$

, the error variances in the state equations (3) and (4), we follow the suggestion of Frühwirth-Schnatter and Wagner (Reference Frühwirth-Schnatter and Wagner2010) to use normal priors centered at 0 on the standard deviations

![]() $\sigma _{\eta }$

and

$\sigma _{\eta }$

and

![]() $\sigma _{\eta _x}$

. Compared to the conventional inverse-gamma prior, a normal prior centered at 0 has the advantage of not distorting the likelihood when the true value of the error variance is close to zero. In our implementation, we use the fact that a normal prior

$\sigma _{\eta _x}$

. Compared to the conventional inverse-gamma prior, a normal prior centered at 0 has the advantage of not distorting the likelihood when the true value of the error variance is close to zero. In our implementation, we use the fact that a normal prior

![]() $\sigma _{\eta }\sim \mathcal{N}(0,V_{\sigma _{\eta }})$

on the standard deviation implies gamma prior on the error variance

$\sigma _{\eta }\sim \mathcal{N}(0,V_{\sigma _{\eta }})$

on the standard deviation implies gamma prior on the error variance

![]() $\sigma _{\eta }^2$

:

$\sigma _{\eta }^2$

:

![]() $\sigma _{\eta }^2 \sim \mathcal{G}(1/2,1/(2V_{\sigma _{\eta }}))$

, where

$\sigma _{\eta }^2 \sim \mathcal{G}(1/2,1/(2V_{\sigma _{\eta }}))$

, where

![]() $\mathcal{G}(a, b)$

denotes the gamma distribution with mean

$\mathcal{G}(a, b)$

denotes the gamma distribution with mean

![]() $a/b$

. Similarly, we assume

$a/b$

. Similarly, we assume

![]() $\sigma _{\eta _x}^2 \sim \mathcal{G}(1/2,1/(2V_{\sigma _{\eta _x}}))$

. We set

$\sigma _{\eta _x}^2 \sim \mathcal{G}(1/2,1/(2V_{\sigma _{\eta _x}}))$

. We set

![]() $V_{\sigma _{\eta }} = 5\times 10^{-6}$

and

$V_{\sigma _{\eta }} = 5\times 10^{-6}$

and

![]() $V_{\sigma _{\eta _x}} = 5\times 10^{-5}.$

$V_{\sigma _{\eta _x}} = 5\times 10^{-5}.$

Next, we outline the posterior simulator to estimate the model in equations (1)–(6) with the priors described above. To that end, let

![]() $\boldsymbol \tau = (\tau _1,\tau _{x,1},\tau _2,\tau _{x,2},\ldots$

,

$\boldsymbol \tau = (\tau _1,\tau _{x,1},\tau _2,\tau _{x,2},\ldots$

,

![]() $\tau _T,\tau _{x,T})^{\prime }$

and

$\tau _T,\tau _{x,T})^{\prime }$

and

![]() $\textbf{y}=(z_1,x_1,\ldots, z_T,x_T)^{\prime }$

. Then, posterior draws can be obtained by sequentially sampling from the following conditional distributions:

$\textbf{y}=(z_1,x_1,\ldots, z_T,x_T)^{\prime }$

. Then, posterior draws can be obtained by sequentially sampling from the following conditional distributions:

-

1.

$p(\boldsymbol \tau,\gamma \,|\, \textbf{y}, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0) = p(\gamma \,|\, \textbf{y}, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0) p(\boldsymbol \tau \,|\, \textbf{y}, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

;

$p(\boldsymbol \tau,\gamma \,|\, \textbf{y}, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0) = p(\gamma \,|\, \textbf{y}, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0) p(\boldsymbol \tau \,|\, \textbf{y}, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

; -

2.

$p(\boldsymbol \phi \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

;

$p(\boldsymbol \phi \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \varphi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

; -

3.

$p(\boldsymbol \varphi \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

;

$p(\boldsymbol \varphi \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \zeta, \boldsymbol \sigma ^2,\boldsymbol \tau _0)$

; -

4.

$p(\boldsymbol \sigma ^2 \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta,\boldsymbol \tau _0)$

;

$p(\boldsymbol \sigma ^2 \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \zeta,\boldsymbol \tau _0)$

; -

5.

$p(\boldsymbol \zeta,\boldsymbol \tau _0 \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \sigma ^2)$

.

$p(\boldsymbol \zeta,\boldsymbol \tau _0 \,|\, \textbf{y}, \boldsymbol \tau, \gamma, \boldsymbol \phi, \boldsymbol \varphi, \boldsymbol \sigma ^2)$

.

We refer the readers to Appendix A.5 for implementation details of the posterior sampler.

4. Empirical results

In this section, we report parameter estimates of the bivariate unobserved components model defined in equations (1)–(6). The dataset consists of the time series of the logarithm of (the inverse of) RPI and TFP from

![]() $1959.II$

to

$1959.II$

to

![]() $2019.II$

. RPI is computed as the investment deflator divided by the consumption deflator, and it is easily accessible from the Federal Reserve Economic Database (FRED). While the complete description of the deflators along with the accompanying details of the computation of the series are found in DiCecio (Reference DiCecio2009), it is worth emphasizing that the investment deflator corresponds to the quality-adjusted investment deflator calculated following the approaches in Gordon (Reference Gordon1990), Cummins and Violante (Reference Cummins and Violante2002), and Fisher (Reference Fisher2006). On the other hand, we compute TFP based on the aggregate TFP growth, which is measured as the growth rate of the business sector TFP corrected for capital utilization. The capital utilization-adjusted aggregate TFP growth series is produced by Fernald (Reference Fernald2014) and is widely regarded as the best available measure of NT.Footnote

2

$2019.II$

. RPI is computed as the investment deflator divided by the consumption deflator, and it is easily accessible from the Federal Reserve Economic Database (FRED). While the complete description of the deflators along with the accompanying details of the computation of the series are found in DiCecio (Reference DiCecio2009), it is worth emphasizing that the investment deflator corresponds to the quality-adjusted investment deflator calculated following the approaches in Gordon (Reference Gordon1990), Cummins and Violante (Reference Cummins and Violante2002), and Fisher (Reference Fisher2006). On the other hand, we compute TFP based on the aggregate TFP growth, which is measured as the growth rate of the business sector TFP corrected for capital utilization. The capital utilization-adjusted aggregate TFP growth series is produced by Fernald (Reference Fernald2014) and is widely regarded as the best available measure of NT.Footnote

2

First, we perform statistical break tests to verify, as has been established in the empirical literature, that RPI experienced a break in its trend around early

![]() $1982$

. Following the recommendations in Bai and Perron (Reference Bai and Perron2003), we find a break date at

$1982$

. Following the recommendations in Bai and Perron (Reference Bai and Perron2003), we find a break date at

![]() $1982.I$

in the mean of the log difference of RPI as documented in Fisher (Reference Fisher2006), Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b), and Benati (Reference Benati2013). In addition, a consequence of our specification is that RPI and TFP must share a common structural break. Consequently, we follow the methodology outlined in Qu and Perron (Reference Qu and Perron2007) to test whether or not the trends in the series are orthogonal.Footnote

3

The estimation results suggest that RPI and TFP might share a common structural break at

$1982.I$

in the mean of the log difference of RPI as documented in Fisher (Reference Fisher2006), Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b), and Benati (Reference Benati2013). In addition, a consequence of our specification is that RPI and TFP must share a common structural break. Consequently, we follow the methodology outlined in Qu and Perron (Reference Qu and Perron2007) to test whether or not the trends in the series are orthogonal.Footnote

3

The estimation results suggest that RPI and TFP might share a common structural break at

![]() $1980.I$

. This break date falls within the confidence interval of the estimated break date between the same two time series,

$1980.I$

. This break date falls within the confidence interval of the estimated break date between the same two time series,

![]() $[1973.I,1982.III]$

, documented in Benati (Reference Benati2013). Therefore, the evidence from structural break tests does not rule out the presence of a single break at

$[1973.I,1982.III]$

, documented in Benati (Reference Benati2013). Therefore, the evidence from structural break tests does not rule out the presence of a single break at

![]() $1982.I$

in the common stochastic trend component of RPI and TFP.

$1982.I$

in the common stochastic trend component of RPI and TFP.

To jumpstart the discussion of the estimation results, we provide a graphical representation of the fit of our model as illustrated by the fitted values of the two series in Figure 1. It is clear from the graph that the bivariate unobserved components model is able to fit both series well with fairly narrow credible intervals.

Figure 1. Fitted values of

![]() $\widehat{z}_t = \tau _t$

and

$\widehat{z}_t = \tau _t$

and

![]() $\widehat{x}_t = \gamma \tau _t + \tau _{x,t}$

. The shaded region represents the 5-th and 95-th percentiles.

$\widehat{x}_t = \gamma \tau _t + \tau _{x,t}$

. The shaded region represents the 5-th and 95-th percentiles.

We report the estimates of model parameters in Table 1 and organize the discussion of our findings around the following two points: (1) the within-series relationship and (2) the cross-series relationship. At the same time, we elaborate on the econometric ramifications of these two points on the analysis of DSGE models.

Table 1. Posterior means, standard deviations, and 95% credible intervals of model parameters

4.1. The Within-Series Relationship in RPI and TFP

The within-series relationship is concerned with the relative importance of the permanent component and the transitory component in (the inverse of) RPI and TFP. This relationship is captured via the estimated values of (i) the drift parameters,

![]() $\zeta$

and

$\zeta$

and

![]() $\zeta _{x}$

, (ii) the autoregressive parameters of the permanent components in RPI and TFP,

$\zeta _{x}$

, (ii) the autoregressive parameters of the permanent components in RPI and TFP,

![]() $\varphi _{\mu }$

and

$\varphi _{\mu }$

and

![]() $\varphi _{\mu _x}$

, (iii) the autoregressive parameters of the transitory components in RPI and TFP,

$\varphi _{\mu _x}$

, (iii) the autoregressive parameters of the transitory components in RPI and TFP,

![]() $\phi _{z,1}$

,

$\phi _{z,1}$

,

![]() $\phi _{z,2}$

,

$\phi _{z,2}$

,

![]() $\phi _{x,1}$

, and

$\phi _{x,1}$

, and

![]() $\phi _{x,2}$

, (iv) and the standard deviations of the permanent and transitory components,

$\phi _{x,2}$

, (iv) and the standard deviations of the permanent and transitory components,

![]() $\sigma _{\eta }$

,

$\sigma _{\eta }$

,

![]() $\sigma _{\eta _{x}}$

,

$\sigma _{\eta _{x}}$

,

![]() $\sigma _{z}$

, and

$\sigma _{z}$

, and

![]() $\sigma _{x}$

.

$\sigma _{x}$

.

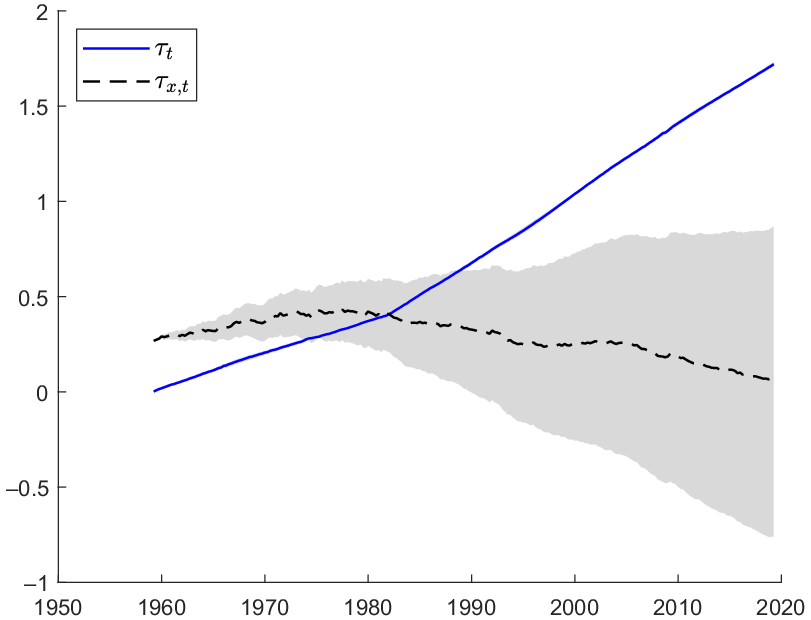

First, it is evident from Figure 2 that the estimated trend in RPI,

![]() $\tau _t$

, is strongly trending upward, especially after the break at

$\tau _t$

, is strongly trending upward, especially after the break at

![]() $1982.I$

. In fact, the growth rate of

$1982.I$

. In fact, the growth rate of

![]() $\tau _t$

has more than doubled after

$\tau _t$

has more than doubled after

![]() $1982.I$

: as reported in Table 1, the posterior means of

$1982.I$

: as reported in Table 1, the posterior means of

![]() $\zeta _{1}$

and

$\zeta _{1}$

and

![]() $\zeta _{2}$

are 0.004 and 0.009, respectively. This is consistent with the narrative that the decline in the mean growth of RPI has accelerated in the period after

$\zeta _{2}$

are 0.004 and 0.009, respectively. This is consistent with the narrative that the decline in the mean growth of RPI has accelerated in the period after

![]() $1982.I$

, and this acceleration has been facilitated by the rapid price decline in information processing equipment and software. In contrast, the estimated trend

$1982.I$

, and this acceleration has been facilitated by the rapid price decline in information processing equipment and software. In contrast, the estimated trend

![]() $\tau _{x,t}$

shows gradual decline after

$\tau _{x,t}$

shows gradual decline after

![]() $1982.I$

—its growth rate decreases from 0.001 before the break to

$1982.I$

—its growth rate decreases from 0.001 before the break to

![]() $- 0.003$

after the break, although both figures are not statistically different from zero. Overall, it appears that trends in both series are captured completely by the stochastic trend component in RPI, and this piece of evidence provides additional support that GPT might be an important driver of the growth in TFP in the USA.

$- 0.003$

after the break, although both figures are not statistically different from zero. Overall, it appears that trends in both series are captured completely by the stochastic trend component in RPI, and this piece of evidence provides additional support that GPT might be an important driver of the growth in TFP in the USA.

Figure 2. Posterior means of

![]() $\tau _t$

and

$\tau _t$

and

![]() $\tau _{x,t}$

. The shaded region represents the 16-th and 84-th percentiles.

$\tau _{x,t}$

. The shaded region represents the 16-th and 84-th percentiles.

Moving on to the estimated autoregressive parameters of the permanent component,

![]() $\widehat{\varphi }_{\mu } = 0.101$

and

$\widehat{\varphi }_{\mu } = 0.101$

and

![]() $\widehat{\varphi }_{\mu _x} = -0.051$

, we note that growths in RPI and TFP do not appear to be as serially correlated as reported in the empirical literature. For instance, Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b) report a posterior median value of 0.287 for the investment-specific technological process and 0.163 for NT. With regard to the AR(2) processes that describe the transitory components in RPI and TFP, the estimated autoregressive parameters indicate that these components are relatively more persistent than the growth components of the series.

$\widehat{\varphi }_{\mu _x} = -0.051$

, we note that growths in RPI and TFP do not appear to be as serially correlated as reported in the empirical literature. For instance, Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a, Reference Justiniano, Primiceri and Tambalotti2011b) report a posterior median value of 0.287 for the investment-specific technological process and 0.163 for NT. With regard to the AR(2) processes that describe the transitory components in RPI and TFP, the estimated autoregressive parameters indicate that these components are relatively more persistent than the growth components of the series.

Furthermore, the estimated values of the variance of the innovations lead to some interesting observations. The variance of the idiosyncratic growth rate in TFP,

![]() $\sigma _{\eta _x}^2 = 5.17\times 10^{-5}$

, is larger than its counterpart for the transitory component,

$\sigma _{\eta _x}^2 = 5.17\times 10^{-5}$

, is larger than its counterpart for the transitory component,

![]() $\sigma _{x}^2 = 7.76\times 10^{-6}$

. On the other hand, the variance of the growth rate in RPI,

$\sigma _{x}^2 = 7.76\times 10^{-6}$

. On the other hand, the variance of the growth rate in RPI,

![]() $\sigma _{\eta }^2 = 5.51\times 10^{-6}$

, is smaller than the variance of its transitory component,

$\sigma _{\eta }^2 = 5.51\times 10^{-6}$

, is smaller than the variance of its transitory component,

![]() $\sigma _{z}^2 = 1.79\times 10^{-5}$

.

$\sigma _{z}^2 = 1.79\times 10^{-5}$

.

Overall, these results seem to indicate that (i) RPI and TFP appear to follow an ARIMA(1,1,0) process, (ii) RPI and TFP growths are only weakly serially correlated, (iii) transitory components in RPI and TFP are relatively more persistent than the growth components on these series, (iv) TFP growth shocks generate more variability than shocks to the growth rate of RPI, and (v) shocks to the transitory component of RPI are more volatile than shocks to the growth rate of RPI.

Now, we discuss the implications of these findings on the analysis of business cycle models. First, information about the process underlying RPI and TFP helps to reduce errors associated with the specification of idiosyncratic trends in DSGE models. Macro variables such as output are highly persistent. To capture this feature, researchers must typically take a stand on the specification of the trend in DSGE models. Since output typically inherits the trend properties of TFP and/or RPI, having accurate information about the trend properties of TFP and/or RPI should allow the researcher to minimize the possibility of trend misspecification which in turn has significant consequences on estimation and inference. Specifically, DSGE models are typically built to explain the cyclical movements in the data. Therefore, preliminary data transformation, in the form of removing secular trend variations, are oftentimes required before the estimation of the structural parameters. One alternative entails arbitrarily building into the model a noncyclical component of NT and/or IST and filtering the raw data using the model-based specification. Therefore, trend misspecifications may lead to inappropriate filtering approaches and erroneous conclusions. In fact, Singleton (Reference Singleton1988) documents that inadequate filtering generally leads to inconsistent estimates of the parameters. Similarly, Canova (Reference Canova2014) recently demonstrates that the posterior distribution of the structural parameters vary greatly with the preliminary transformations (linear detrending, Hodrick and Prescott filtering, growth rate filtering, and band-pass filtering) used to remove secular trends. Consequently, this translates into significant differences in the impulse and propagation of shocks. Furthermore, Cogley and Nason (Reference Cogley and Nason1995b) show that it is hard to interpret results from filtered data as facts or artifacts about business cycles as filtering may generate spurious cycles in difference stationary and trend stationary processes. For example, the authors find that a model may exhibit business cycle periodicity and comovement in filtered data even when such phenomena are not present in the data.

Second, information about the process underlying RPI and TFP improves our understanding of the analysis of macro dynamics. Researchers typically analyze the dynamics of output and other macro variables along three dimensions over short horizons: the periodicity of output, comovement of macro variables with respect to output, and the relative volatility of macro variables. Specifically, in macro models, periodicity is usually measured by the autocorrelation function of output, and Cogley and Nason (Reference Cogley and Nason1993) and Cogley and Nason (Reference Cogley and Nason1995a) show that the periodicity of output is essentially determined by impulse dynamics. Several studies document that output growth is positively correlated over short horizons, and standard macro models struggle to generate this pattern. Consequently, in models where technology followed an ARIMA (1,1,0) process, output growth would inherit the AR(1) structure of TFP growth, and the model would be able to match the periodicity of output in the data. This point is particularly important as it relates to the responses of macro variables to exogenous shocks. Cogley and Nason (Reference Cogley and Nason1995a) highlight that macro variables, and output especially, contain a trend-reverting component that has a hump-shaped impulse response function. Since the response of output to technology shock, for instance, pretty much matches the response of technology itself, Cogley and Nason (Reference Cogley and Nason1995a) argue that exogenous shocks must produce this hump-shaped pattern for the model to match the facts about autocorrelated output growth. Ultimately, if the goal of the researcher is to match the periodicity of output in a way that the model also matches the time series characteristics of the impulse dynamics, then the choice of the trend is paramount in the specification of the model.

In addition, information about the volatilities and persistence of the processes underlying technology shocks may strengthen our inference on the relative importance of these shocks on fluctuations. As demonstrated in Canova (Reference Canova2014), the estimates of structural parameters depend on nuisance features such as the persistence and the volatility of the shocks, and misspecification of these nuisance features generates biased estimates of impact and persistence coefficients and leads to incorrect conclusions about the relative importance of the shocks.

4.2 The Cross-Series Relationship between (the Inverse of) RPI and TFP

We can evaluate the cross-series relationship through the estimated value of the parameter that captures the extent of the relationship between the trends in RPI and TFP, namely,

![]() $\gamma$

.

$\gamma$

.

First, the positive value of

![]() $\widehat{\gamma }$

implies a positive long-run co-variation between (the inverse of) RPI and TFP as established in Benati (Reference Benati2013). In addition, the estimated value of the parameter

$\widehat{\gamma }$

implies a positive long-run co-variation between (the inverse of) RPI and TFP as established in Benati (Reference Benati2013). In addition, the estimated value of the parameter

![]() $\gamma$

, (

$\gamma$

, (

![]() $\widehat{\gamma } = 0.478$

), is significantly different from zero and quite large. This result supplements the qualitative findings by providing a quantitative measure of scale differences in the trends of RPI and TFP. From a econometric point of view, we may interpret

$\widehat{\gamma } = 0.478$

), is significantly different from zero and quite large. This result supplements the qualitative findings by providing a quantitative measure of scale differences in the trends of RPI and TFP. From a econometric point of view, we may interpret

![]() $\widehat{\gamma }$

as the elasticity of TFP to IST changes. In that case, we may assert that, for the time period

$\widehat{\gamma }$

as the elasticity of TFP to IST changes. In that case, we may assert that, for the time period

![]() $1959.II$

to

$1959.II$

to

![]() $2019.II$

considered in this study, a 1% change in IST progress leads to a 0.478% increase in aggregate TFP. These observations are consistent with the view that permanent changes in RPI, which may reflect improvements in information communication technologies, might be representation of innovations in GPT. In fact, using industry-level data, Cummins and Violante (Reference Cummins and Violante2002) and Basu et al. (Reference Basu, Fernald and Oulton2003) find that improvements in information communication technologies contributed to productivity growth in the 1990s in essentially every industry in the USA. Moreover, Jorgenson et al. (Reference Jorgenson, Ho, Samuels and Stiroh2007) show that much of the TFP gain in the USA in the 2000s originated in industries that are the most intensive users of IT.

$2019.II$

considered in this study, a 1% change in IST progress leads to a 0.478% increase in aggregate TFP. These observations are consistent with the view that permanent changes in RPI, which may reflect improvements in information communication technologies, might be representation of innovations in GPT. In fact, using industry-level data, Cummins and Violante (Reference Cummins and Violante2002) and Basu et al. (Reference Basu, Fernald and Oulton2003) find that improvements in information communication technologies contributed to productivity growth in the 1990s in essentially every industry in the USA. Moreover, Jorgenson et al. (Reference Jorgenson, Ho, Samuels and Stiroh2007) show that much of the TFP gain in the USA in the 2000s originated in industries that are the most intensive users of IT.

In addition, this finding contributes to the debate about the specification of common trends and the source of business cycle fluctuations in DSGE models. In particular, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) and Benati (Reference Benati2013) explore the relationship between RPI and TFP through the lenses of statistical tests of units root and co-integration, and the potential implications of such relationship on the role of technology shocks in economic fluctuations. Using quarterly US data over the period from

![]() $1948.I$

to

$1948.I$

to

![]() $2006.IV$

, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) find that RPI and TFP contain a nonstationary stochastic component which is common to both series. In other words, TFP and RPI are co-integrated, which implies that NT and IST should be modeled as containing a common stochastic trend. Therefore, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) estimate a DSGE model that imposes this result and identify a new source of business cycle fluctuations: shocks to the common stochastic trend in neutral and investment-specific productivity. They find that the shocks play a sizable role in driving business cycle fluctuations as they explain three-fourth of the variances of output and investment growth and about one-third of the predicted variances of consumption growth and hours worked. If such results were validated, they would reshape the common approach of focusing on the importance of either NT or IST distinctively.

$2006.IV$

, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) find that RPI and TFP contain a nonstationary stochastic component which is common to both series. In other words, TFP and RPI are co-integrated, which implies that NT and IST should be modeled as containing a common stochastic trend. Therefore, Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) estimate a DSGE model that imposes this result and identify a new source of business cycle fluctuations: shocks to the common stochastic trend in neutral and investment-specific productivity. They find that the shocks play a sizable role in driving business cycle fluctuations as they explain three-fourth of the variances of output and investment growth and about one-third of the predicted variances of consumption growth and hours worked. If such results were validated, they would reshape the common approach of focusing on the importance of either NT or IST distinctively.

However, Benati (Reference Benati2013) expresses some doubt about the co-integration results in Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011). He claims that TFP and RPI are most likely not co-integrated, and he traces the origin of this finding of co-integration to the use of an inconsistent criteria for lag order selection in the Johansen procedure. When he uses the Schwartz information criterion (SIC) and Hannah–Quinn (HQ) criterion, the Johansen test points to no co-integration. Yet, he establishes that although the two series may not be co-integrated, they may still share a common stochastic nonstationary component. Using an approach proposed by Cochrane and Sbordone (Reference Cochrane and Sbordone1988) that searches for a statistically significant extent of co-variation between the two series’ long-horizon differences, Benati (Reference Benati2013) suggests that the evidence from his analysis points toward a common

![]() $I(1)$

component that induces a positive co-variation between TFP and RPI at long horizons. Then, he uses such restrictions in a vector autoregression (VAR) to identify common RPI and TFP component shocks and finds that the shocks play a sizable role in the fluctuations of TFP, RPI, and output: about 30% of the variability of RPI and TFP and 28% of the variability in output.

$I(1)$

component that induces a positive co-variation between TFP and RPI at long horizons. Then, he uses such restrictions in a vector autoregression (VAR) to identify common RPI and TFP component shocks and finds that the shocks play a sizable role in the fluctuations of TFP, RPI, and output: about 30% of the variability of RPI and TFP and 28% of the variability in output.

First, the results from our analysis complements the investigation in Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) and Benati (Reference Benati2013). The relatively large estimate of

![]() $\gamma$

validates the notion that NT and IST should not be modeled in DSGE models as emanating from orthogonal processes. While the two studies may disagree about the exact nature of the relationship between NT and IST, both share the common view that their underlying processes are not independent of each other as shown by the results herein, and disturbances to the process that joins them play a significant role in fluctuations of economic activity. This indicates, obviously, that a proper accounting, based on the time series properties of RPI and TFP, of the joint specification of NT and IST is necessary to address succinctly questions of economic fluctuations as common trend misspecification provide inexact conclusions about the role of shocks in economic fluctuations. For instance, papers that have approached the question about the source of fluctuations from the perspective of general equilibrium models have generated diverging results, partly because of the specification of NT and IST. In Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a), investment(-specific) shocks account for most of the business cycle variations in output, hours, and investment (at least 50%), while NT shocks play a minimal role. On the hand, the findings in Smets and Wouters (Reference Smets and Wouters2007) flip the script: the authors find that NT (and wage mark up) shocks account for most of the output variations, while investment(-specific) shocks play no role. Nonetheless, these two studies share the common feature that the processes underlying NT and IST are orthogonal to each other. As we discussed above, when Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) impose a common trend specification between the two types of technology, neither shocks matter for fluctuations: a new shock, the common stochastic trend shocks, emerges as the main source of business cycle fluctuations. Our framework offers a structural way to assimilate the common trend specification in DSGE models and evaluate the effects of such specifications in fluctuations. The typical approach to trend specification in DSGE models is to build a noncyclical component into the model via unit roots in NT and/or IST and filtering the raw data using the model-based transformation. However, this practice produces, in addition to inexact results about sources of fluctuations, counterfactual trend implications because they incorporate balanced growth path restrictions that are to some extent violated in the data. Therefore, Ferroni (Reference Ferroni2011) and Canova (Reference Canova2014) recommend an alternative one-step estimation approach that allows to specify a reduced-form representation of the trend component, which is ultimately combined to the DSGE model for estimation. Our results about the time series characteristics of RPI and TFP provide some guidance on the specification of such reduced-form models. Fernández-Villaverde et al. (Reference Fernández-Villaverde, Rubio-Ramirez and Schorfheide2016) recommend this alternative approach as one of the most desirable and promising approach to modeling trends in DSDE models. Furthermore, our framework distances itself from statistical tests and their associated issues of lag order and low power as the debate about the long-run relationship between RPI and TFP has thus far depended on the selection of lag order in the Johansen’s test. Also, our framework incorporates Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011)’s result of co-integration and Benati (Reference Benati2013)’s finding of co-variation as special cases.

$\gamma$

validates the notion that NT and IST should not be modeled in DSGE models as emanating from orthogonal processes. While the two studies may disagree about the exact nature of the relationship between NT and IST, both share the common view that their underlying processes are not independent of each other as shown by the results herein, and disturbances to the process that joins them play a significant role in fluctuations of economic activity. This indicates, obviously, that a proper accounting, based on the time series properties of RPI and TFP, of the joint specification of NT and IST is necessary to address succinctly questions of economic fluctuations as common trend misspecification provide inexact conclusions about the role of shocks in economic fluctuations. For instance, papers that have approached the question about the source of fluctuations from the perspective of general equilibrium models have generated diverging results, partly because of the specification of NT and IST. In Justiniano et al. (Reference Justiniano, Primiceri and Tambalotti2011a), investment(-specific) shocks account for most of the business cycle variations in output, hours, and investment (at least 50%), while NT shocks play a minimal role. On the hand, the findings in Smets and Wouters (Reference Smets and Wouters2007) flip the script: the authors find that NT (and wage mark up) shocks account for most of the output variations, while investment(-specific) shocks play no role. Nonetheless, these two studies share the common feature that the processes underlying NT and IST are orthogonal to each other. As we discussed above, when Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) impose a common trend specification between the two types of technology, neither shocks matter for fluctuations: a new shock, the common stochastic trend shocks, emerges as the main source of business cycle fluctuations. Our framework offers a structural way to assimilate the common trend specification in DSGE models and evaluate the effects of such specifications in fluctuations. The typical approach to trend specification in DSGE models is to build a noncyclical component into the model via unit roots in NT and/or IST and filtering the raw data using the model-based transformation. However, this practice produces, in addition to inexact results about sources of fluctuations, counterfactual trend implications because they incorporate balanced growth path restrictions that are to some extent violated in the data. Therefore, Ferroni (Reference Ferroni2011) and Canova (Reference Canova2014) recommend an alternative one-step estimation approach that allows to specify a reduced-form representation of the trend component, which is ultimately combined to the DSGE model for estimation. Our results about the time series characteristics of RPI and TFP provide some guidance on the specification of such reduced-form models. Fernández-Villaverde et al. (Reference Fernández-Villaverde, Rubio-Ramirez and Schorfheide2016) recommend this alternative approach as one of the most desirable and promising approach to modeling trends in DSDE models. Furthermore, our framework distances itself from statistical tests and their associated issues of lag order and low power as the debate about the long-run relationship between RPI and TFP has thus far depended on the selection of lag order in the Johansen’s test. Also, our framework incorporates Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011)’s result of co-integration and Benati (Reference Benati2013)’s finding of co-variation as special cases.

Finally, our findings about the fact that TFP may contain two nonstationary components contributes to the VAR literature about the identification of technology shocks and their role in business cycle fluctuations. Specifically, using a bivariate system consisting of the log difference of labor productivity and hours worked, Gali (Reference Gali1999) assesses the role of technology in generating fluctuations by identifying a (neutral) technology shock under the restriction (which could be derived from most standard RBC models) that only such shocks may have a permanent effect on the log level of labor productivity. An (implicit) underlying assumption of this long-run restriction is that the unit root in labor productivity is driven exclusively by (neutral) technology. Fisher (Reference Fisher2006) extended Gali (Reference Gali1999)’s framework to show that IST shocks may also have a permanent effect on the log level of labor productivity, and they play an significant role in generating fluctuations in economic variables. While both authors consider labor productivity as the key variable to their identification process, their argument may easily be applied to the case when we consider TFP instead. In Appendix A.1, we demonstrate that if output is measured in consumption units, only NT affects TFP permanently. However, when output is tabulated according to the Divisia index, both NT and IST permanently affect TFP. Therefore, our UC framework provides a theoretical structure to derive sensible and equally valid long-run restrictions about RPI and TFP, in the spirit of Gali (Reference Gali1999) and Fisher (Reference Fisher2006), to identify NT and IST shocks in a VAR framework.

5. Model comparison

In this section, we explore the fit of our bivariate unobserved components model defined in equations (1)–(6) to alternative restricted specifications that encompass the various assumptions in the theoretical and empirical literature. Therefore, our model constitutes a complete laboratory that may be used to evaluate the plausibility of competing specifications and their probable ramifications for business cycle analysis.

We adopt the Bayesian model comparison framework to compare various specifications via the Bayes factor. More specifically, suppose we wish to compare model

![]() $M_0$

against model

$M_0$

against model

![]() $M_1$

. Each model

$M_1$

. Each model

![]() $M_i, i=0,1,$

is formally defined by a likelihood function

$M_i, i=0,1,$

is formally defined by a likelihood function

![]() $p(\textbf{y}\,|\, \boldsymbol \theta _{i}, M_{i})$

and a prior distribution on the model-specific parameter vector

$p(\textbf{y}\,|\, \boldsymbol \theta _{i}, M_{i})$

and a prior distribution on the model-specific parameter vector

![]() $\boldsymbol \theta _{i}$

denoted by

$\boldsymbol \theta _{i}$

denoted by

![]() $p(\boldsymbol \theta _i \,|\, M_i)$

. Then, the Bayes factor in favor of

$p(\boldsymbol \theta _i \,|\, M_i)$

. Then, the Bayes factor in favor of

![]() $M_0$

against

$M_0$

against

![]() $M_1$

is defined as:

$M_1$

is defined as:

where

![]() $ p(\textbf{y}\,|\, M_{i}) = \int p(\textbf{y}\,|\, \boldsymbol \theta _{i}, M_{i}) p(\boldsymbol \theta _{i}\,|\, M_{i})\mathrm{d}\boldsymbol \theta _{i}$

is the marginal likelihood under model

$ p(\textbf{y}\,|\, M_{i}) = \int p(\textbf{y}\,|\, \boldsymbol \theta _{i}, M_{i}) p(\boldsymbol \theta _{i}\,|\, M_{i})\mathrm{d}\boldsymbol \theta _{i}$

is the marginal likelihood under model

![]() $M_i$

,

$M_i$

,

![]() $i=0,1$

. Note that the marginal likelihood is the marginal data density (unconditional on the prior distribution) implied by model

$i=0,1$

. Note that the marginal likelihood is the marginal data density (unconditional on the prior distribution) implied by model

![]() $M_i$

evaluated at the observed data

$M_i$

evaluated at the observed data

![]() $\textbf{y}$

. Since the marginal likelihood can be interpreted as a joint density forecast evaluated at the observed data, it has a built-in penalty for model complexity. If the observed data are likely under the model, the associated marginal likelihood would be “large” and vice versa. It follows that

$\textbf{y}$

. Since the marginal likelihood can be interpreted as a joint density forecast evaluated at the observed data, it has a built-in penalty for model complexity. If the observed data are likely under the model, the associated marginal likelihood would be “large” and vice versa. It follows that

![]() $\mathrm{BF}_{01}\gt 1$

indicates evidence in favor of model

$\mathrm{BF}_{01}\gt 1$

indicates evidence in favor of model

![]() $M_0$

against

$M_0$

against

![]() $M_1$

, and the weight of evidence is proportional to the value of the Bayes factor. For a textbook treatment of the Bayes factor and the computation of the marginal likelihood, see Chan et al. (Reference Chan, Koop, Poirier and Tobias2019).

$M_1$

, and the weight of evidence is proportional to the value of the Bayes factor. For a textbook treatment of the Bayes factor and the computation of the marginal likelihood, see Chan et al. (Reference Chan, Koop, Poirier and Tobias2019).

5.1. Testing

$\gamma = 0$

$\gamma = 0$

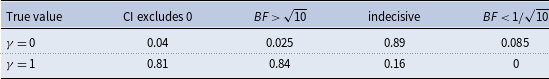

In the first modified model, we impose the restriction that

![]() $\gamma = 0$

, which essentially amounts to testing the GPT theory. If the restricted model were preferred over the unrestricted model, then the trends in RPI and TFP would be orthogonal, and the traditional approach of specifying NT and IST would be well founded.

$\gamma = 0$

, which essentially amounts to testing the GPT theory. If the restricted model were preferred over the unrestricted model, then the trends in RPI and TFP would be orthogonal, and the traditional approach of specifying NT and IST would be well founded.

The posterior mean of

![]() $\gamma$

is estimated to be about

$\gamma$

is estimated to be about

![]() $0.48$

, and the posterior standard deviation is 0.55. Most of the mass of the posterior distribution is on positive values—the posterior probability that

$0.48$

, and the posterior standard deviation is 0.55. Most of the mass of the posterior distribution is on positive values—the posterior probability that

![]() $\gamma \gt 0$

is 0.88. To formally test if

$\gamma \gt 0$

is 0.88. To formally test if

![]() $\gamma =0$

, we compute the Bayes factor in favor of the baseline model in equations (1)–(6) against the unrestricted version with

$\gamma =0$

, we compute the Bayes factor in favor of the baseline model in equations (1)–(6) against the unrestricted version with

![]() $\gamma =0$

imposed. In this case, the Bayes factor can be obtained by using the Savage–Dickey density ratio

$\gamma =0$

imposed. In this case, the Bayes factor can be obtained by using the Savage–Dickey density ratio

![]() $p(\gamma =0)/p(\gamma =0\,|\, \textbf{y})$

.Footnote

4

$p(\gamma =0)/p(\gamma =0\,|\, \textbf{y})$

.Footnote

4

The Bayes factor in favor of the baseline model is about 1.1, suggesting that even though there is some evidence against the hypothesis

![]() $\gamma =0$

, the evidence is not strong. To better understand this result, we plot the prior and posterior distributions of

$\gamma =0$

, the evidence is not strong. To better understand this result, we plot the prior and posterior distributions of

![]() $\gamma$

in Figure 3. As is clear from the figure, the prior and posterior densities at

$\gamma$

in Figure 3. As is clear from the figure, the prior and posterior densities at

![]() $\gamma =0$

have similar values. However, it is also apparent that the data move the prior distribution to the right, making larger values of

$\gamma =0$

have similar values. However, it is also apparent that the data move the prior distribution to the right, making larger values of

![]() $\gamma$

more likely under the posterior distribution. Hence, there seems to be some support for the hypothesis that

$\gamma$

more likely under the posterior distribution. Hence, there seems to be some support for the hypothesis that

![]() $\gamma \gt 0$

. More importantly, this result confirms the fact that

$\gamma \gt 0$

. More importantly, this result confirms the fact that

![]() $\gamma \neq 0$

, and the approach of modeling NT and IST as following independent processes is clearly not supported by the data. As we amply discussed in Section 4.2, such common trend misspecification yields incorrect restrictions about balanced growth restrictions and invalid conclusions about the main sources of business cycle fluctuations. Therefore, this suggest that business cycle researchers need to modify the specification of DSGE models to consider the possibility of the existence of this long-run joint relationship observed in the data and its potential effect on fluctuations.

$\gamma \neq 0$

, and the approach of modeling NT and IST as following independent processes is clearly not supported by the data. As we amply discussed in Section 4.2, such common trend misspecification yields incorrect restrictions about balanced growth restrictions and invalid conclusions about the main sources of business cycle fluctuations. Therefore, this suggest that business cycle researchers need to modify the specification of DSGE models to consider the possibility of the existence of this long-run joint relationship observed in the data and its potential effect on fluctuations.

Figure 3. Prior and posterior distributions of

![]() $\gamma$

.

$\gamma$

.

5.2. Testing

$\varphi _\mu = \varphi _{\mu _x} =0$

$\varphi _\mu = \varphi _{\mu _x} =0$

Next, we test the joint hypothesis that

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

. In this case, the restricted model would allow the trend component to follow a random walk plus drift process such that the growths in RPI and TFP are constant. This would capture the assumptions in Fisher (Reference Fisher2006).

$\varphi _\mu = \varphi _{\mu _x} =0$

. In this case, the restricted model would allow the trend component to follow a random walk plus drift process such that the growths in RPI and TFP are constant. This would capture the assumptions in Fisher (Reference Fisher2006).

Again the Bayes factor in favor of the baseline model against the restricted model with

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

imposed can be obtained by computing the Savage–Dickey density ratio

$\varphi _\mu = \varphi _{\mu _x} =0$

imposed can be obtained by computing the Savage–Dickey density ratio

![]() $p(\varphi _\mu = 0, \varphi _{\mu _x} =0)/ p(\varphi _\mu = 0, \varphi _{\mu _x} = 0\,|\, \textbf{y})$

. The Bayes factor in favor of the restricted model with

$p(\varphi _\mu = 0, \varphi _{\mu _x} =0)/ p(\varphi _\mu = 0, \varphi _{\mu _x} = 0\,|\, \textbf{y})$

. The Bayes factor in favor of the restricted model with

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

is about 14. This indicates that there is strong evidence in favor of the hypothesis

$\varphi _\mu = \varphi _{\mu _x} =0$

is about 14. This indicates that there is strong evidence in favor of the hypothesis

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

. This is consistent with the estimation results reported in Table 1—the estimates of

$\varphi _\mu = \varphi _{\mu _x} =0$

. This is consistent with the estimation results reported in Table 1—the estimates of

![]() $\varphi _\mu$

and

$\varphi _\mu$

and

![]() $ \varphi _{\mu _x}$

are both small in magnitude with relatively large posterior standard deviations. This is an example where the Bayes factor favors a simpler, more restrictive model.

$ \varphi _{\mu _x}$

are both small in magnitude with relatively large posterior standard deviations. This is an example where the Bayes factor favors a simpler, more restrictive model.

Despite restricting

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

, this restricted model is able to fit the RPI and TFP series very well, as shown in Figure 4. This suggests that an ARIMA(0,1,0) specification for NT and IST would also be a viable alternative in DSGE models. Nonetheless, an ARIMA(0,1,0) specification, which implies a constant growth rate, would be incapable of generating the positive autocorrelated AR(1) observed in output growth. As we mentioned in Section 4.1, Cogley and Nason (Reference Cogley and Nason1995a) show that output dynamics are essentially determined by impulse dynamics. Therefore, DSGE models would be able to match the periodicity of output if the process of technology is simulated to have a positively autocorrelated growth rate. In addition, Cogley and Nason (Reference Cogley and Nason1995a) demonstrate that an ARIMA(1,1,0) only would be needed to produce a hump-shaped response of output in order for models to match these facts about autocorrelated output growth.

$\varphi _\mu = \varphi _{\mu _x} =0$

, this restricted model is able to fit the RPI and TFP series very well, as shown in Figure 4. This suggests that an ARIMA(0,1,0) specification for NT and IST would also be a viable alternative in DSGE models. Nonetheless, an ARIMA(0,1,0) specification, which implies a constant growth rate, would be incapable of generating the positive autocorrelated AR(1) observed in output growth. As we mentioned in Section 4.1, Cogley and Nason (Reference Cogley and Nason1995a) show that output dynamics are essentially determined by impulse dynamics. Therefore, DSGE models would be able to match the periodicity of output if the process of technology is simulated to have a positively autocorrelated growth rate. In addition, Cogley and Nason (Reference Cogley and Nason1995a) demonstrate that an ARIMA(1,1,0) only would be needed to produce a hump-shaped response of output in order for models to match these facts about autocorrelated output growth.

Figure 4. Fitted values of

![]() $\widehat{z}_t = \tau _t$

and

$\widehat{z}_t = \tau _t$

and

![]() $\,\widehat{x}_t = \gamma \tau _t + \tau _{x,t}$

of the restricted model with

$\,\widehat{x}_t = \gamma \tau _t + \tau _{x,t}$

of the restricted model with

![]() $\varphi _\mu = \varphi _{\mu _x} =0$

. The shaded region represents the 5-th and 95-th percentiles.

$\varphi _\mu = \varphi _{\mu _x} =0$

. The shaded region represents the 5-th and 95-th percentiles.

5.3. Testing

$\varphi _{\mu _x} = \sigma _{\eta _x}^2=0$

$\varphi _{\mu _x} = \sigma _{\eta _x}^2=0$

Now, we test the joint hypothesis that

![]() $\varphi _{\mu _x} = \sigma _{\eta _x}^2=0$

. This restriction goes to the heart of the debate between Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011) and Benati (Reference Benati2013). If the restricted model held true, then RPI and TFP would be co-integrated as argued by Schmitt-Grohé and Uribè (Reference Schmitt-Grohé and Uribè2011). On the other hand, if the restrictions were rejected, we would end with a situation where RPI and TFP are not co-integrated, but still share a common component as shown in Benati (Reference Benati2013).

$\varphi _{\mu _x} = \sigma _{\eta _x}^2=0$