1 Introduction

Explainability of an Artificial Intelligence (AI) system (i.e., tracking the steps that lead to a decision) was an easy task in the early stages of AI, as the majority of systems were logic-based. For this reason, it was easy to make their decisions transparent by providing explanations and, therefore, to gain the trust of their users. This changed in the last 20 yr, when data-driven methods started to evolve and became part of most AI systems, giving them computational capabilities and learning skills that cannot easily be achieved by means of logic languages alone. Eventually, the steadily increasing complexity of AI methods resulted in more opaque systems.

Therefore, a new research field called eXplainable Artificial Intelligence (XAI) emerged, with the aim of making AI systems more explainable. Figure 1 (taken from the study of Adadi & Berrada Reference Adadi and Berrada2018) shows the Google searches that contain the keyword XAI; these searches have been steadily increasing since mid-2016, indicating a growing interest in explaining the decisions of AI systems. Capturing an accurate definition of what can be considered an explanation is quite challenging, as discussed in Miller (Reference Miller2019). Among many definitions, some commonly accepted ones are:

• An explanation is an assignment of causal responsibility (Josephson & Josephson Reference Josephson and Josephson1996).

• An explanation is both a process and a product, that is, it is both the process of answering a Why? question and the result of that process (Lombrozo Reference Lombrozo2006).

• An explanation is a process of finding meaning or creating shared meaning (Malle Reference Malle2006).

Figure 1. Google searches with XAI (Adadi & Berrada Reference Adadi and Berrada2018)

Providing explanations for an AI system serves two purposes: the first is to gain trust in a system or convince a user, and the second is to help scientists understand how a data-driven model reaches a decision. The first case has many real-world implementations that explain the decision of a system to the user. A significant amount of work can be found in the fields of Medical Informatics (Holzinger et al. Reference Holzinger, Malle, Kieseberg, Roth, Müller, Reihs and Zatloukal2017; Tjoa & Guan Reference Tjoa and Guan2019; Lamy et al. Reference Lamy, Sekar, Guezennec, Bouaud and Séroussi2019), Legal Informatics (Waltl & Vogl Reference Waltl and Vogl2018; Deeks Reference Deeks2019), Military Defense Systems (Core et al. Reference Core, Lane, Van Lent, Solomon, Gomboc and Carpenter2005; Core et al. Reference Core, Lane, Van Lent, Gomboc, Solomon and Rosenberg2006; Madhikermi et al. Reference Madhikermi, Malhi and Främling2019; Keneni et al. Reference Keneni, Kaur, Al Bataineh, Devabhaktuni, Javaid, Zaientz and Marinier2019), and Robotic Platforms (Sheh Reference Sheh2017; Anjomshoae et al. Reference Anjomshoae, Najjar, Calvaresi and Främling2019). In the second case, studies try to enhance the transparency of the data-driven model (Bonacina Reference Bonacina2017; Yang & Shafto Reference Yang and Shafto2017; Gunning & Aha Reference Gunning and Aha2019; Samek & Müller Reference Samek and Müller2019; Fernandez et al. Reference Fernandez, Herrera, Cordon, del Jesus and Marcelloni2019); in some cases, visualizations are also used (Choo & Liu Reference Choo and Liu2018).

In the last decade, Argumentation has had a significant impact on XAI. Argumentation has strong Explainability capabilities, as it can translate the decision of an AI system into an argumentation procedure that shows, step by step, how the system reaches a result. Every Argumentation Framework (AF) is based upon well-defined mathematical models, whose basic definitions are close to Set Theory, extended with additional relations between the elements. The advantage of Argumentation for XAI is that a set of possible decisions can be mapped to a graphical representation with predefined attack relations, which subsequently lead to the winning decision and show the steps that were followed in order to reach it.

This study provides an overview of the combined topics of Argumentation and XAI. We present a survey that describes how Argumentation enables XAI in AI systems. Argumentation combined with XAI is a wide research field, but our intention is to include the most representative relevant studies. We classify studies based on the type of problem they solve, such as Decision-Making, Justification of an opinion, and Dialogues in Human–System and System–System scenarios, and show how Argumentation enables XAI when solving these problems. Then, we describe how Argumentation has helped in providing explainable systems in the application domains of Medical Informatics, Law Informatics, Robotics, the Semantic Web (SW), Security, and some other general purpose systems. Moreover, we turn to the field of Machine Learning (ML) and address how transparency can be achieved with the use of an AF. The contributions of our study are the following:

1. We present an extensive literature review of how Argumentation enables XAI.

2. We show how Argumentation enables XAI, for solving problems in Decision-Making, Justification, and Dialogues.

3. We present how Argumentation has helped build explainable systems in the application domains of Medical Informatics, Law, the SW, Security, and Robotics.

4. We show how Argumentation can become the missing link between ML and XAI.

The remainder of this study is organized as follows. Section 2 presents the motivation and the contributions of this survey. Section 3 discusses related works and describes other surveys related to Argumentation or XAI. Section 4 contains the background needed for the terms in the subsequent sections. In Section 5, we present how Argumentation enables Explainability in Decision-Making, Justification, and Dialogues. Moreover, we present how agents can use Argumentation to enhance their Explainability skills and what principles they must follow, in order not to be considered biased. Subsequently, Section 6 shows how Argumentation helped build explainable systems in various application fields. Section 7 elaborates on studies that combine Argumentation and ML, in order to show how Argumentation can become the missing link between ML and XAI. Finally, we discuss our findings and conclude in Section 8.

2 Motivation

Argumentation Theory is developing into one of the main reasoning methods for capturing Explainability in AI. The quantity of theoretical studies that use Argumentation to enable Explainability, as well as the plurality of explainable systems presented in this survey that use Argumentation to provide explanations in so many application fields, are proof of the importance of Argumentation Theory in XAI. Nevertheless, previous surveys on Argumentation either do not point out its Explainability capabilities or present them only for a specific domain (see Section 3). Therefore, we believe that the literature needs a survey on the combined topic of Argumentation and XAI, covering various problem types and application domains.

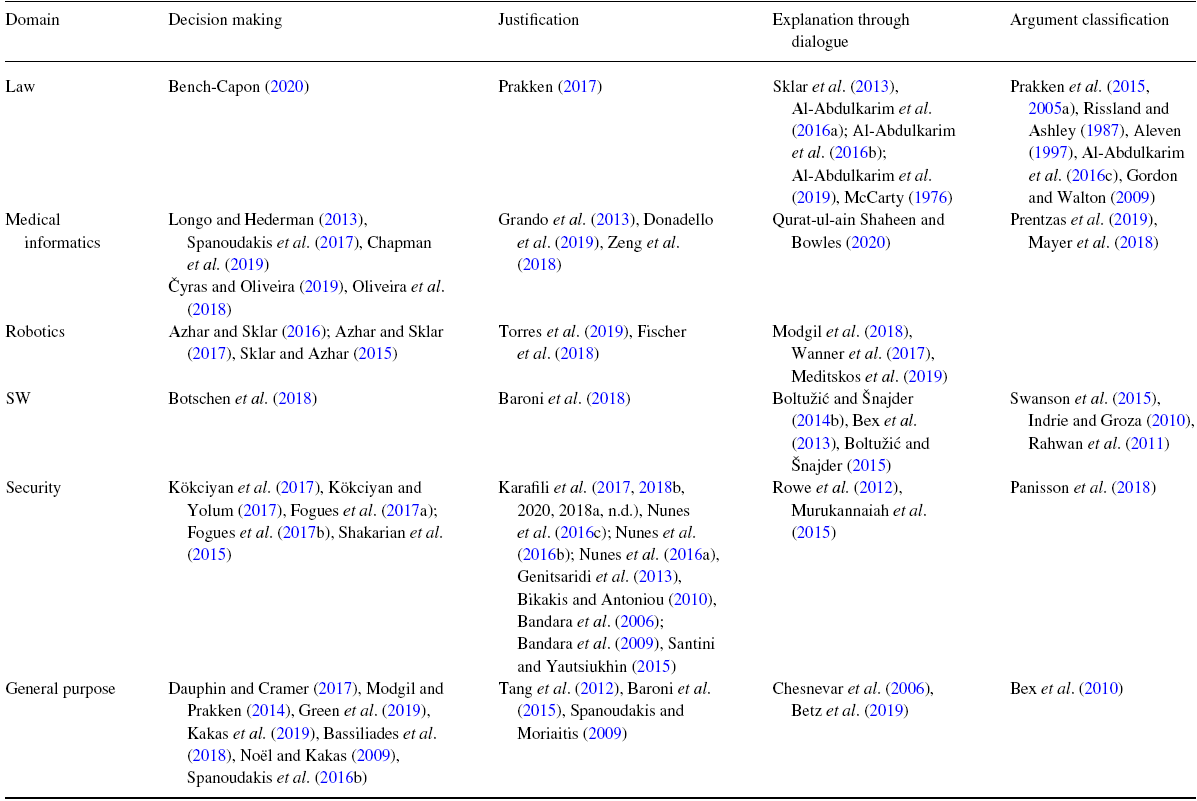

First, we want to present an extensive literature overview of how Argumentation enables XAI. For this reason, we classify studies based on the most important practical problem types that Argumentation solves, such as decision-making, justification of an opinion, and explanation through dialogues. Our goal is to show how Argumentation enables XAI in order to solve such problems. We believe that such a classification is more interesting for the reader who tries to locate which research studies are related to the solution of specific problem types.

Second, we want to point out the capabilities of Argumentation in building explainable systems. Any AI system that chooses Argumentation as the reasoning mechanism for explaining its decisions can gain strong Explainability capabilities and provide explanations that are closer to the human way of thinking. Hence, using Argumentation to provide explanations makes an AI system friendlier and more trustworthy to the user. Our goal is to show that the use of Argumentation for building explainable systems is not restricted to one application domain. Therefore, we present an overview of studies in many domains, such as Medical Informatics, Law, the SW, Security, Robotics, and some general purpose systems.

Finally, our intention is to connect ML, the field that brought XAI to the surface, with Argumentation. Our review of studies that combine Argumentation with ML to explain the decisions of data-driven models revealed how closely related the two fields are. For this reason, we want to show that Argumentation can act as a link between ML and XAI.

3 Related work

In this section, we present surveys related to Argumentation or XAI. Our intention is to help the reader gain a broad view of the fields of Argumentation and XAI and become aware of their various forms, capabilities, and implementations. One could read the survey of Modgil et al. (Reference Modgil, Toni, Bex, Bratko, Chesñevar, Dvořák, Falappa, Fan, Gaggl, García, González, Gordon, Leite, Možina, Reed, Simari, Szeider, Torroni and Woltran2013) to understand the uses of Argumentation, Baroni et al. (Reference Baroni, Caminada and Giacomin2011) to understand how Abstract AFs and their semantics are defined, Amgoud et al. (Reference Amgoud, Cayrol, Lagasquie-Schiex and Livet2008) to understand how Bipolar AFs are defined, Doutre and Mailly (Reference Doutre and Mailly2018) to understand how Argumentation Theory dynamically enforces a set of constraints, and Bonzon et al. (Reference Bonzon, Delobelle, Konieczny and Maudet2016) to see how sets of arguments can be compared. Moreover, most studies in this section indicate what is missing in Argumentation Theory or XAI in general and how the gaps should be filled.

Argumentation is becoming one of the main mechanisms for explaining the decisions of AI systems. Therefore, understanding how an argument is deemed acceptable within an AF is crucial. An interesting study for understanding such notions is presented in Cohen et al. (Reference Cohen, Gottifredi, García and Simari2014), where the authors survey support relations between arguments. The authors discuss the advantages and disadvantages of deductive support, necessity support, evidential support, and backing. Deductive support captures the following intuition: if an argument $\mathcal{A}$ supports an argument $\mathcal{B}$, then the acceptance of $\mathcal{A}$ implies the acceptance of $\mathcal{B}$ and, as a consequence, the non-acceptance of $\mathcal{B}$ implies the non-acceptance of $\mathcal{A}$. Necessity support imposes the following constraint: if argument $\mathcal{A}$ supports argument $\mathcal{B}$, then $\mathcal{A}$ is necessary for $\mathcal{B}$. Hence, the acceptance of $\mathcal{B}$ implies the acceptance of $\mathcal{A}$ (conversely, the non-acceptance of $\mathcal{A}$ implies the non-acceptance of $\mathcal{B}$). Evidential support states that arguments are accepted if they have a support that makes them acceptable to the other participants in a conversation. Backing provides support to the claim of an argument. The authors showed that each type of support imposes different constraints on the acceptability of arguments.
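Deductive and necessity support are duals of each other, and a small check makes this visible. The following sketch is our own illustration, not code from Cohen et al.: a support relation is assumed to be a list of hypothetical (supporter, supported) pairs, and an acceptance assignment is simply the set of accepted arguments.

```python
# Illustrative checks for two support constraints; names and data layout are
# assumptions made for this sketch.
from typing import Iterable, Set, Tuple

Support = Tuple[str, str]  # (A, B) means "argument A supports argument B"


def respects_deductive(support: Iterable[Support], accepted: Set[str]) -> bool:
    """Deductive support: accepting A forces accepting B
    (equivalently, rejecting B forces rejecting A)."""
    return all(b in accepted for (a, b) in support if a in accepted)


def respects_necessity(support: Iterable[Support], accepted: Set[str]) -> bool:
    """Necessity support: accepting B requires accepting A
    (equivalently, rejecting A forces rejecting B)."""
    return all(a in accepted for (a, b) in support if b in accepted)


if __name__ == "__main__":
    support = [("A", "B")]
    for accepted in [set(), {"A"}, {"B"}, {"A", "B"}]:
        print(sorted(accepted),
              respects_deductive(support, accepted),
              respects_necessity(support, accepted))
```

With the single support ("A", "B"), accepting only A violates the deductive constraint but satisfies the necessity constraint, while accepting only B does the opposite.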

An important survey on solving reasoning problems in an AF is that of Charwat et al. (Reference Charwat, Dvořák, Gaggl, Wallner and Woltran2015), where the authors present the different techniques for implementing reasoning in an AF. The authors group the techniques into two classes. The first class consists of reduction-based techniques, where the argumentation problem is transformed into another problem: a satisfiability problem in propositional logic (Biere et al. Reference Biere, Heule and van Maaren2009), a constraint-satisfaction problem (Dechter & Cohen Reference Dechter and Cohen2003), or an Answer Set Programming (ASP) problem (Fitting Reference Fitting1992; Lifschitz Reference Lifschitz2019). Reduction-based techniques have the following advantages: (i) they directly benefit from newer versions of the underlying solvers and (ii) they can be easily adapted to specific requirements that an AF may need to obey. The other class, called direct approaches, refers to systems and methods that implement AF reasoning from scratch, thus allowing algorithms to realize argumentation-specific optimizations.
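To make the reduction-based idea concrete, the sketch below encodes the stable semantics of an abstract AF (defined in Section 4) as propositional clauses, using a common encoding: one Boolean variable per argument, one clause per attack for conflict-freeness, and one clause per argument requiring that it is either accepted or attacked by an accepted argument. This is an illustration under our own assumptions, not the method of Charwat et al.; the function names are hypothetical, and `brute_force_sat` merely stands in for an off-the-shelf SAT solver, which a real reduction-based system would call instead.

```python
# Sketch of a reduction-based approach: encode stable extensions as CNF and
# pass the clauses to a (stand-in) SAT solver. All names are hypothetical.
from itertools import product
from typing import Dict, Iterator, List, Set, Tuple

Literal = Tuple[str, bool]  # ("a", True) is x_a, ("a", False) is not x_a
Clause = List[Literal]      # a disjunction of literals


def stable_cnf(args: Set[str], attacks: Set[Tuple[str, str]]) -> List[Clause]:
    clauses: List[Clause] = []
    for (b, a) in attacks:
        # conflict-freeness: not (x_a and x_b) whenever b attacks a
        clauses.append([(a, False), (b, False)])
    for a in args:
        # x_a, or x_b for some attacker b of a: every rejected argument is attacked
        clauses.append([(a, True)] + [(b, True) for (b, t) in attacks if t == a])
    return clauses


def brute_force_sat(variables: List[str],
                    clauses: List[Clause]) -> Iterator[Dict[str, bool]]:
    """Stand-in for a real SAT solver: enumerate all satisfying assignments."""
    for values in product([False, True], repeat=len(variables)):
        model = dict(zip(variables, values))
        if all(any(model[v] == pol for (v, pol) in clause) for clause in clauses):
            yield model


def stable_extensions(args: Set[str],
                      attacks: Set[Tuple[str, str]]) -> List[frozenset]:
    variables = sorted(args)
    return [frozenset(v for v in variables if model[v])
            for model in brute_force_sat(variables, stable_cnf(args, attacks))]


if __name__ == "__main__":
    args = {"a", "b", "c"}
    attacks = {("a", "b"), ("b", "a"), ("b", "c")}
    print(stable_extensions(args, attacks))
```

Because the argumentation-specific knowledge lives entirely in `stable_cnf`, swapping the enumerator for a faster solver changes nothing else, which is the first advantage of reduction-based techniques mentioned above.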

Argumentation and ML are combined in Longo (Reference Longo2016) and Cocarascu and Toni (Reference Cocarascu and Toni2016). Longo (Reference Longo2016) argues that any AF should be divided into sub-components, to make the training of ML classifiers easier when they are asked to build an AF from a set of arguments and the relations between them. He considers that there should be five different classifiers: one for understanding the internal structure of arguments, one for defining conflicts between arguments, one for evaluating conflicts and defining valid attacks, one for determining the dialectical status of arguments, and a last one for accruing acceptable arguments. Accordingly, his survey collects, for each component, the ML classifiers that have been built for it, the studies that have defined a formalization of its elements, and the studies that provide training data. Nevertheless, he notes that there is a lack of data to train a classifier for each sub-component. On the other hand, Cocarascu and Toni (Reference Cocarascu and Toni2016) analyze implementations of Argumentation in ML, categorizing them by the data-driven model they augment, the arguments, the AF, and the semantics they deploy. They also present real-life ML and Reinforcement Learning systems that are aided by Argumentation in the scope of Explainability. Kakas and Michael (Reference Kakas and Michael2020), in their survey, discuss Abduction and Argumentation, which are presented as two different forms of reasoning that can play a fundamental role in ML. More specifically, the authors elaborate on how reasoning with Abduction and Argumentation can provide a natural-fitting mechanism for the development of explainable ML models.

Two similar surveys are Moens (Reference Moens2018) and Lippi and Torroni (Reference Lippi and Torroni2016), where the authors discuss Argumentation Mining (AM) from free text and from spoken dialogues. AM is an advanced form of Natural Language Processing (NLP). In order to identify an argument in a piece of text or speech, a classifier must first understand the whole content of the conversation, its topic, and the specific key phrases that may indicate whether an argument exists. These steps facilitate the identification of an argument in a sentence or dialogue. Further analysis is necessary to clarify what kind of argument has been identified (e.g., opposing or defending). Both surveys identify two key problems for AM systems: (i) they cannot support a multi-iteration argumentation procedure, since it is hard for them to extract arguments from a long argumentation dialogue, and (ii) there is a lack of data to train argument annotators, apart from some notable efforts such as The Debater (Footnote 1), Debatepedia (Footnote 2), Idebate (Footnote 3), VBATES (Footnote 4), and ProCon (Footnote 5). Moreover, Moens (Reference Moens2018) discusses studies in which facial expressions are also inferred through a vision module to better understand the form of the argument. Another survey on AM is that of Lawrence and Reed (Reference Lawrence and Reed2020).

Finally, the explanation of Case-Based Reasoning (CBR) systems is explored in the survey of Sørmo et al. (Reference Sørmo, Cassens and Aamodt2005). Even though the authors do not include Argumentation in their survey, CBR works similarly to Case-Based Argumentation, so the survey offers useful information about Explainability in CBR. Moreover, the authors extend the study of Wick and Thompson (Reference Wick and Thompson1992), which presents reasoning methods that take into consideration the desires of both the user and the system, in order to define explanation pipelines. The pipelines capture the different methods that can help a system reach a decision:

1. Transparency: Explain How the System Reached the Answer.

2. Justification: Explain Why the Answer is a Good Answer.

3. Relevance: Explain Why a Question Asked is Relevant.

4. Conceptualization: Clarify the Meaning of Concepts.

5. Learning: Teach the user about the domain to state the question better.

On the other hand, the literature of XAI surveys is also rich. A smooth introduction to XAI is the paper of Miller (Reference Miller2019), where the author reviews relevant papers from philosophy, cognitive psychology, and social psychology, and argues that XAI can benefit from existing models of how people define, generate, select, present, and evaluate explanations. The paper draws the following conclusions: (1) Why? questions are contrastive; (2) explanations are selected in a biased manner; (3) explanations are social; and (4) probabilities are not as important as causal links. As an extension of these ideas, we can see the extensive survey of Atkinson et al. (Reference Atkinson, Bench-Capon and Bollegala2020) on the topic of Explainability in AI and Law.

Fundamentally, XAI is a field that came to the surface when AI systems moved from logic-based to data-driven models with learning capabilities. This can be seen in the survey of Adadi and Berrada (Reference Adadi and Berrada2018), where the authors show how XAI methods have developed during the last 20 yr. Naturally, data-driven models increased the complexity of tracking the steps that lead to a decision, and such models came to be regarded as 'black boxes'. For this reason, many studies have tried to make data-driven models more explainable, especially in the last decade. Several similar surveys describe the state-of-the-art Explainability methods for the decisions of various data-driven models: Možina et al. (Reference Možina, Žabkar and Bratko2007), Došilović et al. (Reference Došilović, Brčić and Hlupić2018), Schoenborn and Althoff (Reference Schoenborn and Althoff2019), Guidotti et al. (Reference Guidotti, Monreale, Ruggieri, Turini, Giannotti and Pedreschi2018), and Collenette et al. (Reference Collenette, Atkinson and Bench-Capon2020). Nevertheless, many AI systems still have not reached the desired transparency in the way they reach their decisions. A survey of open challenges in XAI can be found in the study of Das and Rad (Reference Das and Rad2020).

Deep learning is the area of ML whose decisions are the hardest to explain. Even though many methods have been developed to achieve the desired level of transparency, many challenges in this area remain open. A survey that gathers methods for explaining the decisions of deep learning models, together with the open challenges, can be found in Carvalho et al. (Reference Carvalho, Pereira and Cardoso2019). In this scope, there are also more practical surveys on the Explainability of decision-making for data-driven models in Medical Informatics (Tjoa & Guan Reference Tjoa and Guan2019; Pocevičiūtė et al. Reference Pocevičiūtė, Eilertsen and Lundström2020) and in Cognitive Robotic Systems (Anjomshoae et al. Reference Anjomshoae, Najjar, Calvaresi and Främling2019). These studies show how data-driven models explain their decisions, for instance in Medical Informatics when recommending a treatment, making a diagnosis (with an expert making the final call), or analyzing images to classify an illness, such as using magnetic resonance images to determine whether a person has some type of cancer.

A theoretical perspective on why an explanation is desired for the decisions of an AI system can be found in the study of Koshiyama et al. (Reference Koshiyama, Kazim and Engin2019), where the authors argue that a user has the right to know every decision that may change her life. Hence, they gather AI systems that offer some method of providing explanations for their decisions and interact with human users. This view is in line with the European Union, which has defined new regulations on this specific topic (Regulation Reference Regulation2016). Another human-centric XAI survey is that of Páez (Reference Páez2019), where the author presents the different types of acceptable explanations of a decision based on cognitive psychology and groups AI systems according to the type of explanation they provide. Moreover, the author revisits this grouping based on the form of understanding (e.g., direct or indirect) that the AI systems offer to a human.

4 Background

The theoretical models of Argumentation acquired a more practical character in 1958 through Toulmin's book The Uses of Argument (Toulmin Reference Toulmin1958), where he presented how Argumentation can be used to solve everyday problems. Yet, the high computational complexity limited the applicability of AFs to real-world problems. Fortunately, during the last 20 yr, Argumentation Theory was brought back to the surface, new books were introduced (Walton Reference Walton2005; Besnard & Hunter Reference Besnard and Hunter2008), and mathematical formalizations were defined. The Deductive Argumentation Framework (DAF) (Besnard & Hunter Reference Besnard and Hunter2001) is the first mathematical formalization describing an AF and its non-monotonic reasoning capabilities.

In this section, we give the basic definitions of an Abstract Argumentation Framework (AAF) (Dung Reference Dung1995; Dung & Son Reference Dung and Son1995) and introduce several extensions of the AAF. More specifically, we discuss the Structured Argumentation Framework (SAF) (Dung Reference Dung2016), the Label-Based Argumentation Framework (LBAF) (Caminada Reference Caminada2008), the Bipolar Argumentation Framework (BAF) (Cayrol & Lagasquie-Schiex Reference Cayrol and Lagasquie-Schiex2005), the Quantitative Bipolar Argumentation Framework (QBAF) (Baroni et al. Reference Baroni, Rago and Toni2018), and the Probabilistic Bipolar Argumentation Framework (PBAF) (Fazzinga et al. Reference Fazzinga, Flesca and Furfaro2018). All these frameworks are used in studies mentioned in the following sections; to avoid excessive detail, we give the full definitions of the AAF and, for each variant, only mention the dimension along which it extends the AAF.

Deductive Argumentation Framework: DAF is defined only on first-order logic rules and terms, and all the aforementioned frameworks are built upon it. DAF considers an argument as a set of formulae that support a claim, where the formulae are drawn from a knowledge base $\Delta$ expressed in a propositional (or other) logical language. Thus, arguments in DAF are represented as $\langle \Gamma, \phi \rangle$, where $\Gamma \subseteq \Delta$ is the set of formulae, called the support of the argument, which help establish the claim $\phi$. The following properties hold: (i) $\Gamma \subseteq \Delta$, (ii) $\Gamma \vdash \phi$, (iii) $\Gamma \nvdash \perp$, and (iv) $\Gamma$ is minimal with respect to set inclusion among the sets for which (i), (ii), and (iii) hold. Furthermore, there exist two types of attack, undercut and rebut.

1. For any propositions $\phi$ and $\psi$, $\phi$ attacks $\psi$ when $\phi \equiv \neg \psi$ (the symbol $\neg$ denotes strong negation).

2. Rebut: $\langle \Gamma_1, \phi_1 \rangle$ rebuts $\langle \Gamma_2, \phi_2 \rangle$, if $\phi_1$ attacks $\phi_2$.

3. Undercut: $\langle \Gamma_1, \phi_1 \rangle$ undercuts $\langle \Gamma_2, \phi_2 \rangle$, if $\phi_1$ attacks some $\psi \in \Gamma_2$.

4. $\langle \Gamma_1, \phi_1 \rangle$ attacks $\langle \Gamma_2, \phi_2 \rangle$, if it rebuts or undercuts it.
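The DAF conditions and attack relations can be prototyped for a small propositional vocabulary with brute-force truth tables. In the following sketch, which is our own illustration rather than Besnard and Hunter's formalization, formulae are modelled as Python predicates over truth assignments, $\vdash$ is classical entailment checked over all assignments of a hypothetical atom list `ATOMS`, the equivalence $\phi \equiv \neg \psi$ is checked semantically, and membership of $\Gamma$ in $\Delta$ is checked by formula name only. All names are hypothetical.

```python
# Truth-table sketch of DAF arguments and attacks (illustrative assumptions only).
from itertools import combinations, product
from typing import Callable, Dict, Iterator, List, NamedTuple

Assignment = Dict[str, bool]
Formula = Callable[[Assignment], bool]
ATOMS = ["rain", "wet", "slippery"]  # hypothetical propositional vocabulary


def assignments(atoms: List[str] = ATOMS) -> Iterator[Assignment]:
    for values in product([False, True], repeat=len(atoms)):
        yield dict(zip(atoms, values))


def entails(gamma: List[Formula], phi: Formula) -> bool:
    # Gamma |- phi: phi holds in every assignment that satisfies all of Gamma
    return all(phi(v) for v in assignments() if all(g(v) for g in gamma))


def consistent(gamma: List[Formula]) -> bool:
    # Gamma does not entail bottom: some assignment satisfies all of Gamma
    return any(all(g(v) for g in gamma) for v in assignments())


class Argument(NamedTuple):
    support: Dict[str, Formula]  # Gamma, keyed by formula name
    claim: Formula               # phi


def is_argument(arg: Argument, kb: Dict[str, Formula]) -> bool:
    gamma = list(arg.support.values())
    if not set(arg.support) <= set(kb):      # (i) Gamma drawn from Delta (by name)
        return False
    if not entails(gamma, arg.claim):        # (ii)
        return False
    if not consistent(gamma):                # (iii)
        return False
    # (iv) minimality: proper subsets of a consistent set stay consistent,
    # so only entailment needs to be re-checked
    return not any(entails(list(sub), arg.claim)
                   for k in range(len(gamma)) for sub in combinations(gamma, k))


def prop_attacks(phi: Formula, psi: Formula) -> bool:
    # phi attacks psi when phi is logically equivalent to not-psi
    return all(phi(v) == (not psi(v)) for v in assignments())


def rebuts(a: Argument, b: Argument) -> bool:
    return prop_attacks(a.claim, b.claim)


def undercuts(a: Argument, b: Argument) -> bool:
    return any(prop_attacks(a.claim, psi) for psi in b.support.values())


def attacks(a: Argument, b: Argument) -> bool:
    return rebuts(a, b) or undercuts(a, b)


if __name__ == "__main__":
    KB = {  # hypothetical knowledge base Delta
        "rain": lambda v: v["rain"],
        "rain->wet": lambda v: (not v["rain"]) or v["wet"],
        "dry": lambda v: not v["wet"],
    }
    a_wet = Argument({k: KB[k] for k in ("rain", "rain->wet")}, lambda v: v["wet"])
    a_dry = Argument({"dry": KB["dry"]}, lambda v: not v["wet"])
    print(is_argument(a_wet, KB), is_argument(a_dry, KB))  # True True
    print(rebuts(a_dry, a_wet), undercuts(a_dry, a_wet))   # True False
```

Exhaustive truth tables grow exponentially with the number of atoms, so this is only meant for checking small textbook examples by hand.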

Abstract Argumentation Framework: An AAF is a pair $\left(\mathcal{A}, \mathcal{R}\right)$, where $\mathcal{A}$ is a set of arguments and $\mathcal{R} \subseteq \mathcal{A} \times \mathcal{A}$ is a set of attacks, such that for all $a, b \in \mathcal{A}$, the relation $\left(a, b\right) \in \mathcal{R}$ means that a attacks b (similarly, $\left(b, a\right) \in \mathcal{R}$ means that b attacks a). Let $S \subseteq \mathcal{A}$; we call:

1. S conflict-free, if $\forall a, b \in S$, $(a,b) \notin \mathcal{R}$.

2. S defends an argument $a \in \mathcal{A}$, if $\forall b \in \mathcal{A}$ such that $\left(b, a\right) \in \mathcal{R}$, $\exists c \in S$ such that $\left(c, b\right) \in \mathcal{R}$.

3. S admissible, if S is conflict-free and $\forall a \in S$, $\forall b \in \mathcal{A}$ such that $(b, a) \in \mathcal{R}$, $\exists c \in S$ such that $\left(c,b\right) \in \mathcal{R}$, that is, S defends each of its members.
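These three properties translate directly into set checks. The minimal sketch below assumes that $\mathcal{A}$ is a Python set of strings and $\mathcal{R}$ a set of (attacker, target) pairs; this representation and the function names are our own.

```python
# Direct set-based checks for the AAF properties above (illustrative names).
from typing import Set, Tuple

Attack = Tuple[str, str]  # (attacker, target), i.e. (a, b) in R


def conflict_free(S: Set[str], R: Set[Attack]) -> bool:
    # no a, b in S with (a, b) in R
    return not any((a, b) in R for a in S for b in S)


def defends(S: Set[str], a: str, A: Set[str], R: Set[Attack]) -> bool:
    # every attacker b of a is itself attacked by some c in S
    return all(any((c, b) in R for c in S) for b in A if (b, a) in R)


def admissible(S: Set[str], A: Set[str], R: Set[Attack]) -> bool:
    # conflict-free and defends each of its own members
    return conflict_free(S, R) and all(defends(S, a, A, R) for a in S)


if __name__ == "__main__":
    A = {"a", "b", "c"}
    R = {("a", "b"), ("b", "c")}          # a attacks b, b attacks c
    print(admissible({"a", "c"}, A, R))   # True: a counter-attacks b
    print(admissible({"c"}, A, R))        # False: nothing in {c} attacks b
```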

Next, the semantics of an AAF are given by specific subsets of arguments (extensions), which are defined from the aforementioned properties. But first, we need to introduce the function $\mathcal{F}:2^{\mathcal{A}}\to2^{\mathcal{A}}$ such that, for $S \subseteq \mathcal{A}$, $\mathcal{F}\left( S\right)= \{ a \mid \textit{a is defended by }S\}$. A fixpoint of $\mathcal{F}$ is a set S for which the input of the function is identical to its output, that is, $\mathcal{F}\left( S\right) = S$.
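As a worked illustration of $\mathcal{F}$, consider a small hypothetical framework with $\mathcal{A} = \{a, b, c\}$ and $\mathcal{R} = \{(a, b), (b, c)\}$, in which a attacks b and b attacks c. Applying $\mathcal{F}$ repeatedly, starting from the empty set, gives:

$$
\begin{aligned}
\mathcal{F}(\emptyset) &= \{a\} && \text{(only } a \text{ has no attackers)}\\
\mathcal{F}(\{a\}) &= \{a, c\} && \text{(} a \text{ attacks } b \text{, the only attacker of } c\text{)}\\
\mathcal{F}(\{a, c\}) &= \{a, c\} && \text{(the input equals the output)}
\end{aligned}
$$

so $\{a, c\}$ is a fixpoint of $\mathcal{F}$ for this framework.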

1. Stable: Let $S \subseteq \mathcal{A}$; S is a stable extension of $\left(\mathcal{A}, \mathcal{R}\right)$ iff S is conflict-free and $\forall a \in \mathcal{A}\setminus S$, $\exists b \in S$ such that $(b,a) \in \mathcal{R}$.

2. Preferred: Let $S \subseteq \mathcal{A}$; S is a preferred extension of $\left(\mathcal{A}, \mathcal{R}\right)$ iff S is maximal with respect to set inclusion among the admissible sets of $\left(\mathcal{A}, \mathcal{R}\right)$.

3. Complete: Let $S \subseteq \mathcal{A}$; S is a complete extension of $\left(\mathcal{A}, \mathcal{R}\right)$ iff S is a conflict-free fixpoint of $\mathcal{F}$.

4. Grounded: Let $S \subseteq \mathcal{A}$; S is the grounded extension of $\left(\mathcal{A}, \mathcal{R}\right)$ iff S is the minimal fixpoint of $\mathcal{F}$.
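The four semantics can be checked mechanically on small frameworks. The following sketch is a naive, exponential-time enumeration that follows the definitions above literally; it is not an efficient solver (the reduction-based and direct approaches discussed in Section 3 exist precisely to avoid this enumeration), and all function names are hypothetical. `grounded` iterates $\mathcal{F}$ from the empty set, which reaches the least fixpoint on finite frameworks because $\mathcal{F}$ is monotone.

```python
# Naive enumeration of AAF extensions, following the definitions literally.
from itertools import combinations
from typing import FrozenSet, Iterator, List, Set, Tuple

Attack = Tuple[str, str]  # (attacker, target)


def conflict_free(S, R: Set[Attack]) -> bool:
    return not any((a, b) in R for a in S for b in S)


def defends(S, a: str, A, R: Set[Attack]) -> bool:
    # every attacker b of a is attacked by some member of S
    return all(any((c, b) in R for c in S) for b in A if (b, a) in R)


def F(S, A, R: Set[Attack]) -> FrozenSet[str]:
    # characteristic function: the arguments defended by S
    return frozenset(a for a in A if defends(S, a, A, R))


def admissible(S, A, R: Set[Attack]) -> bool:
    return conflict_free(S, R) and all(defends(S, a, A, R) for a in S)


def subsets(A) -> Iterator[FrozenSet[str]]:
    items = sorted(A)
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            yield frozenset(combo)


def stable_exts(A, R) -> List[FrozenSet[str]]:
    return [S for S in subsets(A) if conflict_free(S, R)
            and all(any((b, a) in R for b in S) for a in set(A) - S)]


def preferred_exts(A, R) -> List[FrozenSet[str]]:
    adm = [S for S in subsets(A) if admissible(S, A, R)]
    return [S for S in adm if not any(S < T for T in adm)]  # maximal by inclusion


def complete_exts(A, R) -> List[FrozenSet[str]]:
    return [S for S in subsets(A) if conflict_free(S, R) and F(S, A, R) == S]


def grounded(A, R) -> FrozenSet[str]:
    S: FrozenSet[str] = frozenset()
    while True:
        nxt = F(S, A, R)
        if nxt == S:
            return S
        S = nxt
```

On the hypothetical framework $\mathcal{A} = \{a, b, c\}$, $\mathcal{R} = \{(a, b), (b, a), (b, c)\}$, the grounded extension is empty, while the preferred and stable extensions are $\{b\}$ and $\{a, c\}$; the complete extensions are these two plus the empty set. This illustrates that the grounded semantics is more cautious than the preferred and stable semantics.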

Structured Argumentation Framework: SAF represents arguments in the form of logical rules (Rule (1)), and it introduces preference constraints between arguments. First, we need to define some new concepts. A theory is a pair $\left(\mathcal{T}, \mathcal{P} \right)$ whose sentences are formulae in the background monotonic logic $\left(L,\vdash \right)$ of the form $L \leftarrow L_1,\ldots, L_n$, where $L, L_1,\ldots, L_n$ are ground literals. Here, $\mathcal{T}$ is a set of ground literals, and $\mathcal{P}$ is a set of rules that follow the general form $label: claim \leftarrow premise$, that is,

$$label: a \leftarrow b_1,\ldots,b_n \qquad (1)$$

for $n \in \mathbb{N}$ and $a, b_1,\ldots,b_n \in \mathcal{T}$.

Rule (1) is understood as if the facts

![]() $b_1,\ldots,b_n$

are true, then its claim a is true, otherwise if any of the facts is false, the claim is false. Additionally, if we have two rules r,r’ similar to Rule (1), we define the preference of r over r’ by prefer(r,r’). The arguments in SAF have the same format similar to Rule (1). Therefore, when we say we prefer an argument a over b, we mean the relation prefer(a,b). If the preference rules are removed from the framework, then it is also called Assumption-Based Argumentation Framework (ABA) (Dung et al. Reference Dung, Kowalski and Toni2009), where a set of assumptions (i.e. body of rule) support a claim (i.e. head of rule). Additionally, ABA can tackle incomplete information because if we do not have all the literals from the body of a rule, we can make some assumptions, to fill the missing information.

$b_1,\ldots,b_n$

are true, then its claim a is true, otherwise if any of the facts is false, the claim is false. Additionally, if we have two rules r,r’ similar to Rule (1), we define the preference of r over r’ by prefer(r,r’). The arguments in SAF have the same format similar to Rule (1). Therefore, when we say we prefer an argument a over b, we mean the relation prefer(a,b). If the preference rules are removed from the framework, then it is also called Assumption-Based Argumentation Framework (ABA) (Dung et al. Reference Dung, Kowalski and Toni2009), where a set of assumptions (i.e. body of rule) support a claim (i.e. head of rule). Additionally, ABA can tackle incomplete information because if we do not have all the literals from the body of a rule, we can make some assumptions, to fill the missing information.
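A minimal Python sketch of rules in the form of Rule (1) together with a prefer relation. It assumes that literals are strings, that conflicts arise only between a literal and its negation (written with a leading "-"), and that rules fire in a single step without chaining. The names `Rule` and `resolve`, and the bird/penguin example, are illustrative and not taken from the cited work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    label: str
    claim: str              # ground literal; a leading "-" marks negation
    premise: tuple = ()     # b_1, ..., b_n


def negate(lit):
    return lit[1:] if lit.startswith("-") else "-" + lit


def applicable(rules, facts):
    """Rules whose premises b_1, ..., b_n all hold in `facts`."""
    return [r for r in rules if all(b in facts for b in r.premise)]


def resolve(rules, facts, prefer):
    """Claims of applicable rules not defeated by a preferred conflicting rule.

    `prefer` is a set of (label, label) pairs standing for prefer(r, r')."""
    app = applicable(rules, facts)
    return {r.claim for r in app
            if not any(o.claim == negate(r.claim) and (o.label, r.label) in prefer
                       for o in app)}
```

With r1: flies ← bird, r2: -flies ← penguin, the facts bird and penguin, and prefer(r2, r1), only -flies survives. Without the preference, both conflicting claims remain, which is the situation the preference constraints of SAF are meant to resolve.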

Label-Based Argumentation Framework: LBAF is a framework in which each argument is characterized by a label that defines its acceptability. Briefly:

-

1. an argument is labeled in if all of its attackers are labeled out; such an argument is called acceptable.

-

2. an argument is labeled out if at least one of its attackers is labeled in.

-

3. an argument is labeled undec when it can be labeled neither in nor out.

Keeping this in mind, let $\left( \mathcal{A}, \mathcal{R} \right)$ be an LBAF; the framework can then be represented through a labelling function $\mathcal{L}:\mathcal{A} \to \{{\rm in},{\rm out},\textrm{undec}\}$. The function $\mathcal{L}$ must be total, i.e., every argument receives a label ($\forall a \in \mathcal{A}, \exists \mathcal{L}(a) \in \{\textrm{in},\textrm{out},\textrm{undec}\}$).
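The three labelling rules can be applied repeatedly until nothing changes, and the arguments left unlabelled are then undec. The Python sketch below does exactly this, which yields the grounded labelling; choosing this particular labelling is our assumption, since other labellings (e.g., preferred ones) also satisfy the three rules. The returned dictionary is total, as required of $\mathcal{L}$; the function name is hypothetical.

```python
def grounded_labelling(args, attacks):
    """Grounded labelling: a total function from arguments to {'in', 'out', 'undec'}."""
    attackers = {a: {x for (x, y) in attacks if y == a} for a in args}
    label = {}
    changed = True
    while changed:
        changed = False
        for a in sorted(args):
            if a in label:
                continue
            if all(label.get(b) == "out" for b in attackers[a]):
                label[a] = "in"       # every attacker is out (or there is none)
                changed = True
            elif any(label.get(b) == "in" for b in attackers[a]):
                label[a] = "out"      # some attacker is in
                changed = True
    # Arguments that never became 'in' or 'out' are 'undec'.
    return {a: label.get(a, "undec") for a in args}
```

In the chain where a attacks b and b attacks c, a is in (it has no attackers), b is out, and c is in. In a mutual attack between a and b, no rule ever applies, so both remain undec.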

Bipolar Argumentation Framework: A BAF is a triplet $\left(\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-} \right)$, where, as before, $\mathcal{A}$ is a set of arguments, $\mathcal{R}^{+} \subseteq \mathcal{A}\times \mathcal{A}$ is a binary relation called the support relation, and $\mathcal{R}^{-} \subseteq \mathcal{A}\times \mathcal{A}$ is a binary relation called the attack relation. Therefore, for all $a,b \in \mathcal{A}$, if $\left(a, b\right) \in \mathcal{R}^{+}$, we say that argument a supports argument b; similarly, if $\left(a, b\right) \in \mathcal{R}^{-}$, we say that argument a attacks argument b.
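A BAF can be stored directly as its three components. The Python sketch below uses a hypothetical class name `BAF`, checks that both relations are subsets of $\mathcal{A}\times\mathcal{A}$, and exposes the supporters and attackers of an argument.

```python
from dataclasses import dataclass, field


@dataclass
class BAF:
    """A bipolar AF (A, R+, R-); relations are sets of (source, target) pairs."""
    args: set
    support: set = field(default_factory=set)   # R+, a subset of A x A
    attack: set = field(default_factory=set)    # R-, a subset of A x A

    def __post_init__(self):
        for (a, b) in self.support | self.attack:
            if a not in self.args or b not in self.args:
                raise ValueError(f"({a}, {b}) is not in A x A")

    def supporters(self, b):
        return {a for (a, x) in self.support if x == b}

    def attackers(self, b):
        return {a for (a, x) in self.attack if x == b}
```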

Quantitative Bipolar Argumentation Framework: QBAF is an extension of BAF and is a 5-tuple $\left(\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-}, \tau, \sigma \right)$, where $\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-}$ are the same as in BAF, and $\tau: \mathcal{A} \to \mathcal{K}$ is a base score function. The function $\tau$ gives initial values to the arguments from a preordered set of numerical values $\mathcal{K}$, meaning that $\mathcal{K}$ is equipped with a relation $<$ such that $\forall a, b \in \mathcal{K}$, if $a < b$, then $b \nless a$. Another important component of QBAF is the strength of an argument, which is defined by a total function $\sigma:\mathcal{A} \to \mathcal{K}$.
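The definition leaves open how $\sigma$ is computed from $\tau$. As one concrete illustration, not the method of any particular study surveyed here, the following Python sketch implements the DF-QuAD gradual semantics, assuming $\mathcal{K} = [0,1]$ and an acyclic QBAF (cycles would make the recursion loop forever). The function name `dfquad` and the dictionary encoding of $\tau$ are our own.

```python
def dfquad(args, support, attack, base):
    """Strength function sigma: A -> [0, 1] under DF-QuAD, for an acyclic QBAF."""
    memo = {}

    def aggregate(values):
        # F(v_1, ..., v_n) = 1 - prod(1 - v_i); evaluates to 0 for no children.
        prod = 1.0
        for v in values:
            prod *= 1.0 - v
        return 1.0 - prod

    def sigma(a):
        if a not in memo:
            va = aggregate(sigma(x) for (x, y) in attack if y == a)
            vs = aggregate(sigma(x) for (x, y) in support if y == a)
            v0 = base[a]                      # tau(a), the base score
            if va >= vs:
                memo[a] = v0 - v0 * (va - vs)
            else:
                memo[a] = v0 + (1.0 - v0) * (vs - va)
        return memo[a]

    return {a: sigma(a) for a in args}
```

For instance, with $\tau(a) = 0.5$, an attacker of strength 0.8 and a supporter of strength 0.4, the attack dominates and $\sigma(a) = 0.5 - 0.5 \cdot 0.4 = 0.3$.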

Probabilistic Bipolar Argumentation Framework: PBAF is the last AF we will mention and is also an extension of BAF. A PBAF is a quadruple $(\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-}, \mathcal{P})$, where $\left(\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-} \right)$ is a BAF and $\mathcal{P}$ is a probability distribution over the set $PD = \{(\mathcal{A'}, \mathcal{R'}^{+}, \mathcal{R'}^{-} )\mid\mathcal{A'} \subseteq \mathcal{A} \wedge \mathcal{R'}^{-} \subseteq (\mathcal{A'} \times \mathcal{A'})\cap \mathcal{R}^{-} \wedge \mathcal{R'}^{+} \subseteq (\mathcal{A'} \times \mathcal{A'}) \cap \mathcal{R}^{+} \}$. The elements of $PD\left( (\mathcal{A}, \mathcal{R}^{+}, \mathcal{R}^{-}, \mathcal{P}) \right)$, called possible BAFs, are the possible scenarios that may occur; each scenario is itself a BAF, and $\mathcal{P}$ assigns a probability to it.
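The set PD grows exponentially with $|\mathcal{A}|$, so explicit enumeration is only feasible for small frameworks. The Python sketch below enumerates part of PD under two assumptions of ours: each argument is present independently with a given probability, and a possible BAF keeps every support and attack whose endpoints are both present (PD itself also allows dropping relations). Questions about the PBAF then become sums of $\mathcal{P}$ over the possible BAFs in which an event holds. Function names are hypothetical.

```python
from itertools import combinations


def possible_bafs(args, support, attack, p_arg):
    """Induced sub-BAFs (A', R'+, R'-) paired with their probability.

    Assumption: argument a is present independently with probability p_arg[a]."""
    worlds = []
    order = sorted(args)
    for r in range(len(order) + 1):
        for present in combinations(order, r):
            A1 = set(present)
            prob = 1.0
            for a in order:
                prob *= p_arg[a] if a in A1 else 1.0 - p_arg[a]
            R1_plus = {(x, y) for (x, y) in support if x in A1 and y in A1}
            R1_minus = {(x, y) for (x, y) in attack if x in A1 and y in A1}
            worlds.append(((frozenset(A1), frozenset(R1_plus), frozenset(R1_minus)), prob))
    return worlds


def probability(worlds, event):
    """Sum of P over the possible BAFs in which `event` holds."""
    return sum(p for (baf, p) in worlds if event(baf))
```

For example, if a is certain, b attacks a, and b is present with probability 0.3, the probability that a is present and unattacked is 0.7.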

5 Argumentation and Explainability

Explainability serves a much bigger goal than the desire of computer scientists to make their systems more transparent and understandable. Apart from justifying the decisions of an AI system, Explainability is also a mandatory mechanism for any AI system that takes decisions affecting a person's life (Core et al. Reference Core, Lane, Van Lent, Gomboc, Solomon and Rosenberg2006), for example, making an automated charge to our credit card for a TV show we subscribe to, or booking a doctor's appointment that we asked our personal AI assistant to make last month. The European Union has defined regulations that oblige systems with such characteristics to provide explanations for their decisions (Regulation Reference Regulation2016). Therefore, in this section, we describe how Argumentation enables Explainability. Explaining a decision through argumentation did not emerge only in recent years; it has been part of Argumentation Theory since its beginning (Pavese Reference Pavese2019).

We divide this section according to the most important baselines that provide explanations through Argumentation: Decision-Making, Justification, and Dialogues. Moreover, we present some basic studies that establish the field of XAI through Argumentation. But first, we offer a literature review on how agents can use Argumentation and on the Ethics that Argumentation should follow. We consider these two subsections important in order to show how XAI can be implemented in an agent through Argumentation, and which principles the agent must follow to be considered unbiased.

5.1 Agents and argumentation

Single-agent systems (SAS) and multi-agent systems (MAS) can be built upon various forms of logic, such as first-order logic (Smullyan Reference Smullyan1995), description logic (Nute Reference Nute2001), propositional logic (Buvac & Mason Reference Buvac and Mason1993), and ASP (Fitting Reference Fitting1992; Lifschitz Reference Lifschitz2019). The book of Wooldridge (Reference Wooldridge2009) describes the connections of SAS and MAS with first-order logic and other forms of logic, and also offers a general introduction to Agents Theory.

We now describe the most important studies that combine Argumentation Theory and Agents Theory, in either SAS or MAS. One of the first studies to address this issue was that of Kakas and Moraitis (Reference Kakas and Moraitis2003, Reference Kakas and Moraitis2006), in which the authors presented an AF to support the decisions of an agent. They consider a dynamic framework where the strength of arguments is defined by the context and the desires of the agent. The agents in this framework also use abduction: when faced with incomplete information, they can make hypotheses based on assumptions. Another important aspect is the personality of an agent. Based on definitions from cognitive psychology, the authors give each agent its own beliefs and desires, translated into the AF as preference rules. For instance, consider the two arguments $a$ = `I will go to football after work' and $b$ = `Bad news, we need to work overtime today to finish the project'. These two arguments are clearly in conflict; thus, we need a meta-argument preference hierarchy. If we additionally know that argument b is stated by the employer and argument a by the employee, then prefer(b,a) holds. Implementations of such theoretical frameworks can be found in Panisson et al. (Reference Panisson, Meneguzzi, Vieira and Bordini2014) and Panisson and Bordini (Reference Panisson and Bordini2016), where the authors use the AgentSpeak programming language to create agents that argue over a set of specific beliefs and desires using description logic, as well as in Spanoudakis et al. (Reference Spanoudakis, Kakas and Moraitis2016b), where agents argue about a power-saving optimization problem between different stakeholders.
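The preference in this example can be sketched in Python as a ranking over the sources of arguments. The ranking `RANK` and the helper names are hypothetical; in the cited frameworks, such preferences are expressed as priority rules inside the AF rather than as an external table.

```python
# Hypothetical ranking of sources: a higher number means a more authoritative source.
RANK = {"employee": 1, "employer": 2}


def prefer(x, y, source):
    """prefer(x, y) holds when the source of x outranks the source of y."""
    return RANK[source[x]] > RANK[source[y]]


def winner(x, y, source):
    """Resolve a conflict between x and y; None means neither is preferred."""
    if prefer(x, y, source):
        return x
    if prefer(y, x, source):
        return y
    return None
```

With a stated by the employee and b by the employer, `winner("a", "b", ...)` returns b, mirroring prefer(b,a).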

Next, Amgoud and Serrurier provide two studies (Amgoud & Serrurier Reference Amgoud and Serrurier2007, Reference Amgoud and Serrurier2008) in which agents are able to argue about and explain classifications. They consider a set of examples $\mathcal{X}$, a set of classes $\mathcal{C}$, and a set of hypotheses $\mathcal{H}$ equipped with a preorder $\leq$ that defines which hypothesis is stronger. Then, an example $x \in \mathcal{X}$ is classified in a class $c \in \mathcal{C}$ by the hypothesis $h \in \mathcal{H}$, and an argument for this statement is formalized as $a = \left(h, x ,c\right)$. Other hypotheses may classify the same example into other classes; thus, once all the arguments are created, an AAF is generated.
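A Python sketch of how such an AAF could be generated, assuming each hypothesis is a function from examples to classes and the preorder $\leq$ is encoded by numeric ranks. The attack condition used here (an argument attacks another one about the same example with a different class when its hypothesis is at least as strong) is our simplification and not necessarily the exact definition of Amgoud and Serrurier; the function name is hypothetical.

```python
def build_af(hypotheses, examples, rank):
    """hypotheses: name -> function mapping an example to a class;
    rank: name -> number encoding the preorder on H (higher means stronger)."""
    args = {(h, x, clf(x)) for h, clf in hypotheses.items() for x in examples}
    # a attacks b when both classify the same example into different classes
    # and the hypothesis of a is at least as strong as that of b.
    attacks = {(a, b) for a in args for b in args
               if a[1] == b[1] and a[2] != b[2] and rank[a[0]] >= rank[b[0]]}
    return args, attacks
```

With two threshold classifiers that disagree only on the example 4, the only attack is from the stronger hypothesis's argument about 4 to the weaker one's.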

An important requirement for agents that use AFs is the capability of understanding natural language and conversing with humans (Kemke Reference Kemke2006; Liu et al. Reference Liu, Eshghi, Swietojanski and Rieser2019). Understanding natural language and having a predefined conversation protocol ease the exchange of arguments (Willmott et al. Reference Willmott, Vreeswijk, Chesnevar, South, McGinnis, Modgil, Rahwan, Reed and Simari2006; Panisson et al. Reference Panisson, Meneguzzi, Vieira and Bordini2015) and allow the agents to perform negotiations (Pilotti et al. Reference Pilotti, Casali and Chesñevar2015), to be more persuasive (Black & Atkinson Reference Black and Atkinson2011; Rosenfeld & Kraus Reference Rosenfeld and Kraus2016b), and to explain in more detail how they reached a decision (Laird & Nielsen Reference Laird and Nielsen1994).

The recent study of Ciatto et al. (Reference Ciatto, Calvaresi, Schumacher and Omicini2015) proposes an AAF for an MAS focusing on the notions of Explainability and Interpretation. The authors define interpreting an object O (i.e., interacting with an object by performing an action) through a function I that gives a score in [0,1] reflecting how interpretable the object O is to the agent when the agent wants to perform an action. The authors view an explanation as the procedure of finding a more interpretable object $x^{\prime}$ from a less interpretable object x. Thus, when a model $M:\mathcal{X}\to \mathcal{Y}$ maps an input set of objects $\mathcal{X}$ to an output set of actions $\mathcal{Y}$, the explanation procedure tries to construct a more interpretable model M’. An AAF can then use this procedure to explain why a set of objects is considered more interpretable.

5.2 Argumentation and ethics

To be trustworthy when it argues about a topic, an agent needs to be unbiased. For an agent to be considered unbiased, it must not support only its personal interest in the argumentation dialogue with other agent(s). Personal interests are usually supported through fallacies, such as exaggerations of the truth, or through unethical or fake facts. For this reason, ethics and argumentation are closely related to the problem of tackling biased agents (Correia Reference Correia2012). Moreover, knowing the ethics that an agent follows when it argues is crucial, as it enhances Explainability by allowing the opposing party to see how the agent supports its personal interests and to construct accurate counterarguments.

The combination of ethics and argumentation to construct unbiased agents that achieve argumentational integrity (Schreier et al. Reference Schreier, Groeben and Christmann1995) is mostly used for persuading the opposing agent(s) (Ripley Reference Ripley2005). Ethics in argumentation is also applied in legal cases to conduct fair trials (Czubaroff Reference Czubaroff2007) and in medical cases to protect patients' privacy (Labrie & Schulz Reference Labrie and Schulz2014).

Ethics in argumentation is very important in decision-making problems where the participants have conflicting interests. Especially in scenarios where maintaining proper relations between the participants is mandatory, Ethics in Argumentation becomes a necessity. Mosca et al. (Reference Mosca, Sarkadi, Such and McBurney2020) propose a model in which an agent acts as a supervisor in decision-making problems with conflicting interests about sharing content online. The agent takes into consideration the personal utility and the moral values of each participant and justifies a decision. Langley (Reference Langley2019) presents a similar study for more generic scenarios.

E-Democracy is an evolving area of interest for governments wishing to engage with citizens through new technologies. The goal of e-Democracy is to motivate young people to become active members of the community by voting on decisions for their community through web applications, and to argue if they disagree with a decision that is at stake. Ethics is clearly an important aspect of the argumentation dialogues in an e-Democracy application. Citizens and the government should not be biased only in favor of their own personal interests but should act in the interest of the community. More specifically, citizens should consider whether a personal request negatively affects the other members of the community, while the government should consider whether the decision it proposes indeed has positive results for the community or only benefits the popularity of its members. Cartwright and Atkinson (Reference Cartwright and Atkinson2009) and Wyner et al. (Reference Wyner, Atkinson and Bench-Capon2012b) present many web applications for e-Democracy, while in Atkinson et al. (Reference Atkinson, Bench-Capon and McBurney2005a), e-Democracy is used to justify the proposal of an action.

The idea of e-Democracy goes one step further in Wyner et al. (Reference Wyner, Atkinson and Bench-Capon2012a), where the authors propose a model to critique citizens' proposals. The authors use the Action-based Alternating Transition System (AATS) (Wooldridge & Van Der Hoek Reference Wooldridge and Van Der Hoek2005) to accept or reject the justification of a proposal and automatically provide a critique of the proposal using practical reasoning. The critique takes the form of critical questions, which are instantiated through the AATS. Similarly, Atkinson et al. (Reference Atkinson, Bench-Capon, Cartwright and Wyner2011) use AFs with values to critique citizens' proposals, and Wardeh et al. (Reference Wardeh, Wyner, Atkinson and Bench-Capon2013) provide web-based tools for this task.

5.3 XAI through Argumentation

In this section, we present some important studies supporting the conclusion that Argumentation Theory is one of the most suitable models for providing explanations in AI systems.

The studies of Fan and Toni are very important in this field, as they provide a methodology for computing explanations in AAF (Fan & Toni Reference Fan and Toni2014) and ABA (Fan & Toni Reference Fan and Toni2015a). In the former, the authors define the notion of explanation as follows: given an argument A defended by a set of arguments S, A is called the topic of S and S is an explanation of A. They then call a compact explanation of A a smallest such S with respect to the subset relation and, conversely, a verbose explanation of A a largest such S with respect to the subset relation. Moreover, based on the notion of Dispute Trees, they provide another method of explanation, with respect to the acceptability semantics of Dispute Trees. A Dispute Tree is defined as follows: (i) the root is the topic of discussion, (ii) each node at odd depth is an opponent, (iii) each node at even depth is a proponent, and (iv) no argument labels both an opponent node and a proponent node. Admissible Dispute Trees are then those in which every path from the root to a leaf has even length, and their root argument is called acceptable. Furthermore, a Dispute Forest is the set of all Admissible Dispute Trees; it therefore contains all the explanations of why an argument A is acceptable. In the subsequent study (Fan & Toni Reference Fan and Toni2015a), the authors extend these definitions to ABA.
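A brute-force Python sketch of compact and verbose explanations. It rests on an assumption of ours: "S defends A" is read as "S is an admissible set containing A". Compact explanations are then the subset-minimal such sets and verbose explanations the subset-maximal ones. All names are hypothetical, and the enumeration is exponential.

```python
from itertools import combinations


def _defended(S, args, attacks):
    """Arguments whose every attacker is attacked by some member of S."""
    return {a for a in args
            if all(any((s, b) in attacks for s in S)
                   for (b, t) in attacks if t == a)}


def explanations(topic, args, attacks):
    """All admissible sets that contain `topic` (toy sizes only)."""
    result = []
    others = sorted(args - {topic})
    for r in range(len(others) + 1):
        for extra in combinations(others, r):
            S = {topic, *extra}
            conflict_free = not any((x, y) in attacks for x in S for y in S)
            if conflict_free and S <= _defended(S, args, attacks):
                result.append(S)
    return result


def compact(expls):
    return [S for S in expls if not any(T < S for T in expls)]


def verbose(expls):
    return [S for S in expls if not any(S < T for T in expls)]
```

If b attacks a, c attacks b, and d is an unrelated unattacked argument, the compact explanation of a is {a, c} and the verbose one is {a, c, d}; d adds nothing to the defence of a.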

Next, two studies present a formalization of how to model Explainability in an AF, in the context of resolving scientific debates. In Šešelja and Straßer (2013), the authors formalize an explanation as an extra relation and an extra set in the AF. More specifically, given an AF $\left(\mathcal{A}, \mathcal{R} \right)$, an explainable AF is a 5-tuple $\left(\mathcal{A},\mathcal{X}, \mathcal{R},\mathcal{R'},\tilde{\mathcal{R}} \right)$ such that $\mathcal{X}$ is the set of topics that receive arguments from $\mathcal{A}$ as explanations, the relation $\mathcal{R'}$ states that an argument $a \in \mathcal{A}$ is part of the explanation of an element $x \in \mathcal{X}$, and finally $\tilde{\mathcal{R}}$ states that some arguments may not occur together in an explanation for a topic because their co-occurrence brings inconsistencies. Therefore, an explanation is a set $\mathcal{E}$ that contains the arguments from $\mathcal{A}$ that are connected through $\mathcal{R'}$ with an element $x \in \mathcal{X}$ and no two of which are related by $\tilde{\mathcal{R}}$. The formalization of Sakama (Reference Sakama2018) follows the same principles. These formalizations were used for Abduction in Argumentation Theory, to model the criticism inherent to scientific debates in terms of counterarguments, to model alternative explanations, and to evaluate or compare the explanatory features of scientific debates.
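One possible reading of this definition in Python, assuming $\mathcal{R'}$ and $\tilde{\mathcal{R}}$ are given as sets of pairs and $\tilde{\mathcal{R}}$ is treated as symmetric: the explanations of a topic x are the subset-maximal sets of arguments related to x through $\mathcal{R'}$ that contain no incompatible pair. The function name is hypothetical, and the search is brute force.

```python
from itertools import combinations


def explanations_for(topic, explains, incompatible):
    """explains: set of (a, x) pairs (R'); incompatible: set of (a, b) pairs (R~)."""
    cands = sorted(a for (a, x) in explains if x == topic)

    def compatible(S):
        return not any((a, b) in incompatible or (b, a) in incompatible
                       for a, b in combinations(S, 2))

    ok = [set(S) for r in range(len(cands) + 1)
          for S in combinations(cands, r) if compatible(S)]
    return [S for S in ok if not any(S < T for T in ok)]
```

If a1, a2, and a3 all explain x but a1 and a2 are incompatible, the two alternative explanations are {a1, a3} and {a2, a3}, which matches the idea of modeling alternative explanations in a debate.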

Case-Based Reasoning (CBR) is one of the most commonly used methods for explaining the decisions of an AI system, and many studies combine CBR with Argumentation to provide explanations. In Čyras et al. (Reference Čyras, Satoh and Toni2016a) and Čyras et al. (Reference Čyras, Satoh and Toni2016b), the authors use CBR to classify arguments into a set of possible options; when new information is inserted into the Knowledge Base (KB), the class of an argument may change.

Imagine we have the options $O_1$ = book this hotel and $O_2$ = do not book this hotel, and our criteria are that the hotel should be close to the city center and cheap.

We find that hotel H is close to the city center, so we have an argument for booking it. However, when we look at the price, we see that it is too expensive for us, which gives a new argument for not booking hotel H.
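The hotel example can be sketched in Python to show how a new fact in the KB flips the selected option. The feature names and the decision rule (book only when some argument supports booking and none opposes it) are our assumptions, not the CBR method of Čyras et al.

```python
def hotel_arguments(kb):
    """Pro and con arguments for booking, built from whatever the KB currently knows."""
    pros, cons = [], []
    if kb.get("close_to_center") is True:
        pros.append("close to the city center")
    elif kb.get("close_to_center") is False:
        cons.append("far from the city center")
    if kb.get("cheap") is True:
        pros.append("cheap")
    elif kb.get("cheap") is False:
        cons.append("too expensive")
    return pros, cons


def choose(kb):
    """O1 only if some argument supports booking and none opposes it; otherwise O2."""
    pros, cons = hotel_arguments(kb)
    return "O1" if pros and not cons else "O2"
```

Knowing only that H is close to the center yields O1; adding the fact that H is not cheap introduces the con argument "too expensive" and the choice becomes O2.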

This method is close to explanation through dialogues, where each step adds new information to the KB; accordingly, the authors also illustrate their framework with Dispute Trees. Another study that uses CBR and Argumentation is Čyras et al. (Reference Čyras, Birch, Guo, Toni, Dulay, Turvey, Greenberg and Hapuarachchi2019), where the authors give a framework that explains, based on a set of features, why some legislation passes and some does not. They use the following features: (i) the type, i.e., whether the legislation is proposed by the Government, the Private Sector, etc.; (ii) the starting House of Parliament (it is a UK study; thus, the authors consider the House of Lords and the House of Commons); (iii) the number of legislations that are proposed; (iv) the ballot number; and (v) the type of committee. Another CBR model that classifies arguments based on precedents and features, for legal cases, is presented in Bex et al. (Reference Bex, Bench-Capon and Verheij2011). The authors use a framework that takes into consideration information from the KB in order to classify the argument. More specifically, given a case in which a person stole some money under specific circumstances, the system must classify the argument as to whether the defendant is guilty or not. The system searches for similar cases in its KB to make an inference based on important features such as the type of crime, the details of the legal case, the age of the defendant, and whether the defendant was the moral instigator.

Explaining query failure using arguments is studied in Arioua et al. (Reference Arioua, Tamani, Croitoru and Buche2014). More specifically, the authors address Boolean query failures in the presence of knowledge inconsistencies, such as missing and conflicting information within an ontological KB. The framework supports a dialectical interaction between the user and the reasoner, and it can construct arguments about the query from the information in the ontology. The user can request explanations of why a query Q holds or not and can ask follow-up questions about the explanation provided by the framework.

5.4 Decision-making with argumentation

Argumentation is closely related to Decision-Making. In fact, it has been stated that Argumentation was proposed in order to facilitate Decision-Making (Mercier & Sperber Reference Mercier and Sperber2011). Argumentation contributes to Decision-Making in many ways, the most important being supporting or opposing a decision, reasoning about a decision, handling KBs with uncertainty, and making recommendations.

The problem of selecting the best decision from a variety of choices is perhaps the most popular among studies that combine Argumentation and Decision-Making. In Amgoud and Prade (Reference Amgoud and Prade2009), the authors present the first AAF for Decision-Making used by MAS. They propose a two-step method for mapping a decision problem into an AAF. First, the authors consider beliefs and opinions as arguments. Second, pairs of options are compared using decision principles. The decision principles are: (i) Unipolar principles, which refer only to the arguments attacking an option or only to those defending it; (ii) Bipolar principles, which take both into consideration; and (iii) Non-Polar principles, in which, given a specific choice (the opinion), an aggregation occurs such that the pro and con arguments disappear in a meta-argument reconsideration of the AAF. Moreover, the authors test their framework under optimistic and pessimistic decision criteria (i.e., criteria under which one decision may be more or less desirable than another) and under uncertainty. Decision-Making under uncertainty is also addressed in Amgoud and Prade (Reference Amgoud and Prade2006), where the authors tackle uncertainty over some decisions by comparing alternative solutions; pessimistic and optimistic criteria are also part of that study.
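To illustrate how the three kinds of principles can disagree, the following Python sketch applies simple counting and weighting rules of our own to given pro and con arguments for each option. These are simplified instances chosen for illustration, not the exact principles defined by Amgoud and Prade; the function names and weights are hypothetical.

```python
def unipolar(d1, d2, pros):
    """Unipolar (pros only): prefer d1 if it has more supporting arguments."""
    return len(pros[d1]) > len(pros[d2])


def bipolar(d1, d2, pros, cons):
    """Bipolar: d1 has at least as many pros and at most as many cons, one strictly."""
    p1, p2 = len(pros[d1]), len(pros[d2])
    c1, c2 = len(cons[d1]), len(cons[d2])
    return p1 >= p2 and c1 <= c2 and (p1 > p2 or c1 < c2)


def non_polar(d1, d2, pros, cons, weight):
    """Non-polar: fold pros and cons into one meta-score per option, then compare."""
    def score(d):
        return sum(weight[a] for a in pros[d]) - sum(weight[a] for a in cons[d])
    return score(d1) > score(d2)
```

With two pros and one con for d1 and a single pro for d2, the unipolar rule prefers d1, the bipolar rule finds the two options incomparable, and, with a heavy enough weight on the con, the non-polar rule prefers d2.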

In Zhong et al. (Reference Zhong, Fan, Toni and Luo2014), the authors define an AF that uses information from the KB to make a decision based on similar past decisions. The framework first parses the text of the argument and extracts the most important features (nouns, verbs). It then compares them with the decisions in the KB and returns the most similar decision with respect to the number of common features. The framework can back up its decision with arguments on how similar the two cases are, and it reuses arguments that were stated for the similar case in the KB. On the other hand, in Zeng et al. (Reference Zeng, Miao, Leung and Chin2018), the authors use a Decision Graph with Context (DGC) to capture the context in order to support a decision. A DGC is a graph where decisions are represented as nodes and the interactions between them (attacking and supporting) as edges. The authors map the DGC to an ABA by considering decisions as arguments and the interactions between them as attack and support relations. Then, if a decision is accepted in the ABA, it is considered a good decision.
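A rough Python sketch of retrieval by common features. As an assumption, feature extraction is approximated by lowercase tokens minus a small stop-word list, rather than the noun and verb extraction described above; the KB format and function names are hypothetical.

```python
def features(text, stopwords=frozenset({"the", "a", "an", "to", "of", "and", "is", "in"})):
    """Crude stand-in for noun/verb extraction: lowercase tokens minus stop words."""
    return {w.strip(".,;:!?").lower() for w in text.split()} - stopwords - {""}


def most_similar(query, kb):
    """Return the stored case sharing the most features with `query`,
    together with the shared features, which can back up the decision."""
    q = features(query)
    best = max(kb, key=lambda case: len(q & features(case["text"])))
    return best, q & features(best["text"])
```

The shared features returned alongside the case play the role of the arguments "on how similar the two cases are".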

Decision-Making and Argumentation are also used to support and explain the results of recommendation systems (Friedrich & Zanker Reference Friedrich and Zanker2011; Rago et al. Reference Rago, Cocarascu and Toni2018). Recommendation systems with Argumentation resemble feature selection combined with user evaluation of features. A recommendation system is a 6-tuple $\left(\mathcal{M}, \mathcal{AT}, \mathcal{T}, \mathcal{L}, \mathcal{U}, \mathcal{R}\right)$ such that:

-

1. $\mathcal{M}$ is a finite set of items.

-

2. $\mathcal{AT}$ is a finite, non-empty set of attributes for the items.

-

3. $\mathcal{T}$ is a set of types for the attributes.

-

4. The sets $\mathcal{M}$ and $\mathcal{AT}$ are disjoint.

-

5. $\mathcal{X}= \mathcal{M} \cup \mathcal{AT}$.

-

6. $\mathcal{L}\subseteq \left(\mathcal{M}\times \mathcal{AT}\right)$ is a symmetrical binary relation.

-

7. $\mathcal{U}$ is a finite, non-empty set of users.

-

8. $\mathcal{R}:\mathcal{U}\times\mathcal{X}\to [-1,1]$ is a partial function of ratings.

Mapping the recommendation system to an AF is done after the ratings have been given by a variety of users. The arguments are the items in $\mathcal{M}$, while the positive and negative ratings of the attributes related to an item in $\mathcal{M}$ act as supports and attacks, respectively.
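A minimal sketch of this mapping: each attribute linked to an item through $\mathcal{L}$ becomes a support for that item if its ratings are positive and an attack if they are negative. Averaging the ratings of all users is an assumption made here for illustration (Rago et al. use their own argumentation semantics to evaluate the resulting framework); the items, attributes, users, and ratings below are hypothetical.

```python
from statistics import mean
from typing import Dict, Set, Tuple

Edge = Tuple[str, str]  # (attribute, item)


def ratings_to_bipolar_af(
    links: Set[Tuple[str, str]],
    ratings: Dict[Tuple[str, str], float],
) -> Tuple[Set[Edge], Set[Edge]]:
    """links: the relation L as (item, attribute) pairs.
    ratings: R restricted to attributes, (user, attribute) -> rating in [-1, 1].
    Returns (supports, attacks) from attributes to the items they describe."""
    supports: Set[Edge] = set()
    attacks: Set[Edge] = set()
    for item, attr in links:
        scores = [r for (_, a), r in ratings.items() if a == attr]
        if not scores:
            continue  # nobody rated this attribute: no edge
        avg = mean(scores)  # assumed aggregation over users
        if avg > 0:
            supports.add((attr, item))
        elif avg < 0:
            attacks.add((attr, item))
    return supports, attacks


links = {("film1", "actorA"), ("film1", "horror"), ("film2", "actorA")}
ratings = {("u1", "actorA"): 0.8, ("u2", "actorA"): 0.4, ("u1", "horror"): -0.6}
supports, attacks = ratings_to_bipolar_af(links, ratings)
print(sorted(supports), sorted(attacks))
```

The supports and attacks on an item are then directly readable as an explanation: "film1 is recommended because of actorA, despite the horror genre".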

Decision-Making for MAS in an ABA is presented in Fan et al. (Reference Fan, Toni, Mocanu and Williams2014). The authors consider that agents can have different goals and that decisions hold attributes related to the goal of each agent. In their approach, the best decision corresponds to an acceptable argument in the joint decision framework of two different agents. Moreover, the authors define trust between agents, meaning that the arguments of an agent are considered stronger than those of others within a given scenario.

5.5 Justification through argumentation

Justification is a form of explaining an argument in order to make it more convincing and persuade the opposing participant(s). Justification supports an argument by means of background knowledge, defending arguments from the AF, and external knowledge. One important study in this field is Čyras et al. (Reference Čyras, Fan, Schulz and Toni2017), where, with the help of ABA and Dispute Trees, the authors show how easily the acceptability (or non-acceptability) of an argument can be justified simply by reasoning over the Dispute Tree. Similarly, Schulz and Toni (Reference Schulz and Toni2016) provide two methods of justifying a claim that is part of an ASP, both relying on the correspondence between ASP and stable extensions of an ABA. The first method relies on Attack Trees. An Attack Tree for an argument A has A as its root, and the children of every node are exactly the arguments that attack it; the tree is built by iteratively expanding each node with its attackers. An Attack Tree is constructed for a stable extension of an ABA and uses admissible fragments of the ASP. If the literals that form the argument are part of the fragment, then the argument is justified. The second justification method relies on the more typical approach of checking whether an Admissible Dispute Tree exists for the argument.
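The Attack Tree structure described above can be sketched on an abstract framework given as a map from each argument to its attackers. This is an illustration only: it works at the level of abstract arguments rather than ABA or ASP, and cutting a branch when an argument repeats on its own path is an assumption added so that cyclic frameworks produce a finite tree.

```python
from typing import Dict, List, Set, Tuple

Tree = Dict[str, List["Tree"]]


def attack_tree(arg: str, attackers: Dict[str, Set[str]], path: Tuple[str, ...] = ()) -> Tree:
    """Root the tree at `arg`; the children of each node are exactly its attackers.
    Assumption: a branch stops when an argument repeats on its own path,
    so cyclic frameworks yield a finite tree."""
    path = path + (arg,)
    children: List[Tree] = []
    for att in sorted(attackers.get(arg, set())):
        if att in path:
            children.append({att: []})  # cycle: do not expand again
        else:
            children.append(attack_tree(att, attackers, path))
    return {arg: children}


chain = attack_tree("a", {"a": {"b"}, "b": {"c"}})
cycle = attack_tree("x", {"x": {"y"}, "y": {"x"}})
print(chain)  # {'a': [{'b': [{'c': []}]}]}
print(cycle)  # {'x': [{'y': [{'x': []}]}]}
```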

Preference rules are commonly used to justify the acceptability of an argument, as in Melo et al. (Reference Melo, Panisson and Bordini2016) and Cerutti et al. (Reference Cerutti, Giacomin and Vallati2019). The acceptability of an argument is easily explained through preference rules, because preference rules are a sequence of preferences between logic rules. Melo et al. (Reference Melo, Panisson and Bordini2016) present preferences over arguments formed from information of different external sources by computing the degree of trust each agent has for a source. The authors define the trust of a source $\phi$ as a function $tr(\phi) \in [0,1]$. Given an argument A with supporting set $S = \{\phi_1,\ldots,\phi_n\}$ from different external sources, the trust of the argument is given by Equation (2), where $\otimes$ is a generic operator (i.e., it could be any operator suited to the characteristics of the problem at hand).

$$tr(A) = tr(\phi_1) \otimes tr(\phi_2) \otimes \cdots \otimes tr(\phi_n) \tag{2}$$
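A minimal sketch of this trust computation, assuming Equation (2) folds the source trusts as $tr(A) = tr(\phi_1) \otimes \cdots \otimes tr(\phi_n)$. Taking $\otimes$ to be `min` or multiplication are two hypothetical choices, and the source names and trust values are invented for the example.

```python
import operator
from functools import reduce
from typing import Callable, Dict, Sequence


def argument_trust(support: Sequence[str], tr: Dict[str, float],
                   combine: Callable[[float, float], float]) -> float:
    """Fold the trust values tr(phi_i) of the supporting sources with a binary operator.
    `support` must be non-empty, since reduce is called without an initial value."""
    return reduce(combine, (tr[phi] for phi in support))


tr = {"bbc": 0.9, "blog": 0.5}  # hypothetical source trust values in [0, 1]
support = ["bbc", "blog"]
weakest_link = argument_trust(support, tr, min)      # an argument is as trusted as its weakest source
product = argument_trust(support, tr, operator.mul)  # treats trust values like independent probabilities
print(weakest_link, product)
```

`min` never lets a long chain of sources lower the trust below its weakest member, whereas the product decreases with every additional source; which behaviour is appropriate depends on the problem, as the generic $\otimes$ suggests.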

Moreover, the authors consider two types of agent behavior: (i) credulous agents trust only the most trustworthy source (the one with the highest tr score), and (ii) skeptical agents consider all the sources from which they received information. Their study builds on Tang et al. (Reference Tang, Sklar and Parsons2012), where the authors also define the trust of arguments in MAS. Cerutti et al. (Reference Cerutti, Giacomin and Vallati2019) designed the ArgSemSAT system, which returns the complete labelings of an AAF and is commonly used to justify the acceptability of an argument. ArgSemSAT is based on satisfiability (SAT) solvers, and its main innovations are: (i) it finds a global labeling encoding that better describes the acceptability of an argument, (ii) it shows that computing the stable extensions first can optimize the computation of the preferred extensions, and (iii) it optimizes the labeling procedure and the computation of extensions of an AAF with the help of SAT solvers and domain-specific knowledge.
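To make "complete labeling" concrete, the sketch below enumerates the complete labelings of a small AAF by brute force over all $3^n$ assignments: an argument is labeled in iff all its attackers are out, out iff some attacker is in, and undec otherwise. This is deliberately naive and exponential; it is not how ArgSemSAT works, which encodes the same conditions as SAT problems.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

Labeling = Dict[str, str]


def _legal(a: str, lab: Labeling, attackers: Dict[str, Set[str]]) -> bool:
    all_out = all(lab[b] == "out" for b in attackers[a])
    some_in = any(lab[b] == "in" for b in attackers[a])
    if lab[a] == "in":
        return all_out                  # in is legal iff every attacker is out
    if lab[a] == "out":
        return some_in                  # out is legal iff some attacker is in
    return not all_out and not some_in  # undec is legal otherwise


def complete_labelings(args: List[str], attacks: Set[Tuple[str, str]]) -> List[Labeling]:
    """Brute force over all 3^n labelings; exponential, for illustration only."""
    attackers = {a: {x for (x, y) in attacks if y == a} for a in args}
    result = []
    for labels in product(("in", "out", "undec"), repeat=len(args)):
        lab = dict(zip(args, labels))
        if all(_legal(a, lab, attackers) for a in args):
            result.append(lab)
    return result


labs = complete_labelings(["a", "b"], {("a", "b"), ("b", "a")})
for lab in labs:
    print(lab)
```

For two mutually attacking arguments this yields three complete labelings (a in, b in, or both undec), and each label can be read off as a justification of why an argument is or is not accepted.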

Justification for Argument-Based Classification is the topic of Thimm and Kersting (Reference Thimm and Kersting2017). The authors propose a method for justifying the classification of a specific argument based on the features that it possesses. For instance, X should be classified as a penguin because it has the features black, bird, not(fly), and eatsfish. An advantage of classification based on features is that it makes explanation straightforward.
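The penguin example can be illustrated with a toy rule-based classifier that returns its class together with the features that justify it. This is a hypothetical sketch, not Thimm and Kersting's method: each applicable rule is treated as an argument for its class, and preferring the most specific rule (the one with the most premises) is an assumed conflict-resolution policy.

```python
from typing import FrozenSet, List, Optional, Tuple

Rule = Tuple[str, FrozenSet[str]]  # (class label, features that must all be present)


def classify_with_justification(
    features: FrozenSet[str], rules: List[Rule]
) -> Optional[Tuple[str, List[str]]]:
    """Every rule whose premises hold is a candidate argument for its class.
    Assumed conflict resolution: prefer the most specific rule (most premises)."""
    applicable = [(label, prem) for label, prem in rules if prem <= features]
    if not applicable:
        return None
    label, premises = max(applicable, key=lambda r: len(r[1]))
    return label, sorted(premises)  # the premises are the justification


rules: List[Rule] = [
    ("bird", frozenset({"bird"})),
    ("penguin", frozenset({"black", "bird", "not(fly)", "eatsfish"})),
]
x = frozenset({"black", "bird", "not(fly)", "eatsfish"})
result = classify_with_justification(x, rules)
print(result)  # ('penguin', ['bird', 'black', 'eatsfish', 'not(fly)'])
```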

One common method to justify an argument is to add values to the AF. In practical reasoning, there are situations where we cannot conclusively say that either party is right or wrong. The role of Argumentation in such cases is to persuade the opposing party rather than to lead to mutually satisfactory compromises. Therefore, the values added to an AF are social values, and whether an attack of one argument on another succeeds depends on the comparative strength of the values assigned to the arguments. For example, consider the argument A1 from BBC, and the argument A2 from Fox News.

Obviously, these two arguments are in conflict; yet, we wish to reach some conclusion about the weather, even an uncertain one, in order to plan a road trip. In this case, adding social values to the AF resolves the problem. For instance, a naive way is to define a partial order based on an assignment of trustworthiness: if we trust information arriving from BBC more than from Fox News, we can use this order to reach a conclusion. Another way is to seek a third opinion and consider valid the argument that is supported by two sources. These ideas were implemented in an AF by Bench-Capon (Reference Bench-Capon2003a; Reference Bench-Capon2002), where the author extends an AAF by adding values (AFV). Subsequently, AFVs were used to solve legal conflicts (Bench-Capon Reference Bench-Capon2003b; Bench-Capon et al. Reference Bench-Capon, Atkinson and Chorley2005), to infer inconsistency between preferences of arguments (Amgoud & Cayrol Reference Amgoud and Cayrol2002a), and to produce acceptable arguments (Amgoud & Cayrol Reference Amgoud and Cayrol2002b).
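The trustworthiness-based resolution of the weather conflict can be sketched with the usual value-based defeat rule: an attack succeeds unless the audience strictly prefers the value of the attacked argument to the value of the attacker. The sketch then computes the grounded extension of the resulting defeats. The value labels `trust_BBC` and `trust_FoxNews` and the preference set are hypothetical stand-ins for the ordering described above.

```python
from typing import Dict, Set, Tuple

Pair = Tuple[str, str]


def vaf_defeats(attacks: Set[Pair], value: Dict[str, str], prefers: Set[Pair]) -> Set[Pair]:
    """An attack (x, y) succeeds as a defeat unless the audience strictly prefers
    value[y] to value[x]; prefers contains (v, w) meaning v is preferred to w."""
    return {(x, y) for (x, y) in attacks if (value[y], value[x]) not in prefers}


def grounded_extension(args: Set[str], defeats: Set[Pair]) -> Set[str]:
    """Least fixed point of F(S) = {a | every defeater of a is defeated by some member of S}."""
    ext: Set[str] = set()
    while True:
        nxt = {a for a in args
               if all(any((s, b) in defeats for s in ext)
                      for (b, t) in defeats if t == a)}
        if nxt == ext:
            return ext
        ext = nxt


args = {"A1", "A2"}
attacks = {("A1", "A2"), ("A2", "A1")}               # the two weather reports conflict
value = {"A1": "trust_BBC", "A2": "trust_FoxNews"}   # hypothetical value labels
no_pref = grounded_extension(args, vaf_defeats(attacks, value, set()))
bbc_first = grounded_extension(args, vaf_defeats(attacks, value, {("trust_BBC", "trust_FoxNews")}))
print(no_pref, bbc_first)  # set() {'A1'}
```

Without a value ordering the mutual attack leaves nothing skeptically accepted; once the audience ranks BBC above Fox News, only the attack from A1 succeeds and A1 is accepted.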

The notions of AFVs were extended by Modgil in Hierarchical Argumentation Frameworks (Modgil Reference Modgil2006a, b, Reference Modgil2009). Intuitively, given a set of values $\{a_1,\ldots,a_n\}$, an extended AAF (i.e., one in which both attack and defence relations exist) is created for each value, yielding $((\mathcal{A}_1,\mathcal{R}_1),\ldots,(\mathcal{A}_n,\mathcal{R}_n))$. For $i \in \{1,\ldots,n\}$, $\mathcal{A}_i$ contains the arguments whose value is $a_i$, and $\mathcal{R}_i$ contains the attacks related to the arguments of $\mathcal{A}_i$. This mapping helped to accommodate arguments that define preferences between other arguments, thus incorporating meta-level argumentation-based reasoning about preferences at the object level. Additionally, the studies of Coste-Marquis et al. (Reference Coste-Marquis, Konieczny, Marquis and Ouali2012a, b) and Bistarelli et al. (Reference Bistarelli, Pirolandi and Santini2009) show more precisely how social values are translated into numerical values in a single AAF, extending the studies of Dunne et al. (Reference Dunne, Hunter, McBurney, Parsons and Wooldridge2009) and Dunne et al. (Reference Dunne, Hunter, McBurney, Parsons and Wooldridge2011).

AFVs contributed significantly to practical reasoning, that is, reasoning about which action is best for an agent to perform in a particular scenario. The authors in Atkinson and Bench-Capon (Reference Atkinson and Bench-Capon2007b) justify the choice of an action through an argumentation scheme that is subject to a set of critical questions. To give the argumentation scheme and the critical questions correct interpretations, the authors use the Action-Based Alternating Transition System as the basis of their definitions. AFVs contribute to the justification of an action by showing how preferences based on specific values emerge through practical reasoning. The authors use values in the argumentation scheme to denote some descriptive social attitude or interest that an agent (or a group of agents) wishes to hold. Moreover, the values provide an explanation for moving from one state to another after an action is performed. Therefore, values in this argumentation scheme have a qualitative rather than a quantitative meaning. Two extensions of this study are Atkinson and Bench-Capon (Reference Atkinson and Bench-Capon2007a) and Atkinson and Bench-Capon (Reference Atkinson and Bench-Capon2018). In the former, the agent must take into account the actions of another agent when it wants to perform an action, while in the latter, the agent must take into account the actions of all the agents that exist in a framework. An implementation of this argumentation scheme for formalizing the audit dialogue in which companies justify their compliance decisions to regulators can be found in Burgemeestre et al. (Reference Burgemeestre, Hulstijn and Tan2011).
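The value-based practical reasoning described above can be sketched as: in the current state, each available action leads to a new state, and each value promoted by that transition yields an argument "perform the action, because it promotes this value"; an audience's value ranking then selects the action. This is a hypothetical simplification, not Atkinson and Bench-Capon's formalization: the transition system is a single-agent, deterministic dictionary standing in for an Action-Based Alternating Transition System, critical questions are omitted, and the states, actions, and values (`speed`, `health`) are invented.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

State = FrozenSet[str]                      # propositions true in a state
ValueTest = Callable[[State, State], bool]  # does moving q -> q2 promote the value?


def practical_arguments(q: State, transitions: Dict[Tuple[State, str], State],
                        promotes: Dict[str, ValueTest]) -> List[Tuple[str, State, str]]:
    """Instantiate 'in q, perform a, reaching q2, which promotes v' for every
    action available in q and every value the transition promotes."""
    args = []
    for (state, action), q2 in transitions.items():
        if state != q:
            continue
        for v, test in promotes.items():
            if test(q, q2):
                args.append((action, q2, v))
    return args


def choose_action(args: List[Tuple[str, State, str]], audience: List[str]) -> Tuple[str, str]:
    """Pick the argument whose value the audience ranks highest (index 0 = most preferred)."""
    action, _, v = min(args, key=lambda arg: audience.index(arg[2]))
    return action, v


q0: State = frozenset({"at_home"})
transitions = {
    (q0, "drive"): frozenset({"at_work", "car_used"}),
    (q0, "walk"): frozenset({"at_work", "exercised"}),
}
promotes: Dict[str, ValueTest] = {
    "speed": lambda q, q2: "car_used" in q2,
    "health": lambda q, q2: "exercised" in q2 and "exercised" not in q,
}
args = practical_arguments(q0, transitions, promotes)
print(choose_action(args, ["health", "speed"]))  # ('walk', 'health')
print(choose_action(args, ["speed", "health"]))  # ('drive', 'speed')
```

The chosen action comes with its justification, the value it promotes, which is the qualitative role of values in the scheme: two audiences facing the same state can rationally choose different actions.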

5.6 Dialogues and argumentation for XAI

Explaining an opinion by developing an argumentation dialogue has its roots in Argumentation Dialogue Games (ADG) (Levin & Moore Reference Levin and Moore1977), which existed long before Dung presented the AAF (Dung Reference Dung1995). These dialogues occur between two parties who argue about the tenability of one or more claims or arguments, each trying to persuade the other participant to adopt their point of view. Hence, such dialogues are also called persuasion dialogues. Dung’s AAF (Dung Reference Dung1995) advanced the area of ADG and helped many researchers implement the notions of ADG in an AF (Hage et al. Reference Hage, Leenes and Lodder1993; Bench-Capon Reference Bench-Capon1998; Bench-Capon et al. Reference Bench-Capon, Geldard and Leng2000).