1 Introduction

The rapid growth of large-scale data is driving demand for efficient processing of the data to obtain valuable knowledge. Typical instances of large-scale data are large graphs such as social networks, road networks, and consumer purchase histories. Since such large graphs are becoming more and more prevalent, highly efficient large-graph processing is becoming more and more important. A quite natural solution for dealing with large graphs is to use parallel processing. However, developing efficient parallel programs is not an easy task, because subtle programming mistakes lead to fatal errors such as deadlock and to nondeterministic results.

From the programmer’s point of view, there are various models and approaches to the parallel processing of large graphs, including the MapReduce model (Bu et al., 2012), the matrix model (Kang et al., 2011, 2012), the data parallelism programming model with a domain-specific language (Hong et al., 2012; Nguyen et al., 2013), and the vertex-centric model (Malewicz et al., 2010; McCune et al., 2015). The vertex-centric model is particularly promising for avoiding mistakes in parallel programming. It has been intensively studied and has served as the basis for a number of practically useful graph processing systems (McCune et al., 2015; Khan, 2017; Liu & Khan, 2018; Song et al., 2018; Zhuo et al., 2020). We thus focus on the vertex-centric model in this article.

In vertex-centric graph processing, all vertices in a graph are distributed among computational nodes that iteratively execute a series of computations in parallel. The computations consist of communication with other vertices, aggregation of vertex values as needed, and calculation of their respective values. Communication is typically between adjacent vertices; a vertex accepts messages from incoming edges as input and sends the results of its calculations to other vertices along outgoing edges.

Several vertex-centric graph processing frameworks have been proposed, including Pregel (Malewicz et al., 2010; McCune et al., 2015), Giraph (footnote 1), GraphLab (Low et al., 2012), GPS (Salihoglu & Widom, 2013), GraphX (Gonzalez et al., 2014), Pregel+ (Yan et al., 2014b), and Gluon (Dathathri et al., 2018). Although they release the programmer from the difficulties of parallel programming for large-graph processing to some extent, there still exists a big gap between writing a natural, intuitive, and concise program and writing an efficient program. As discussed in Section 2, a naturally written vertex-centric program tends to have inefficiency problems. To improve efficiency, the programmer must describe explicit and sometimes complex controls over communications, execution states, and terminations. However, writing these controls is not only an error-prone task but also a heavy burden on the programmer.

In this article, we present a functional domain-specific language (DSL) called Fregel for vertex-centric graph processing and describe its model, design, and implementation.

Fregel has two notable features. First, it supports declarative description of vertex computation in functional style without any complex controls over communications, execution states, and terminations. This enables the programmer to write a vertex computation in a natural and intuitive manner. Second, the compiler translates a Fregel program into code runnable in the Giraph or Pregel+ framework. The compiler inserts optimized code fragments into programs generated for these frameworks that perform the complex controls, thereby improving processing efficiency.

Our technical contributions can be summarized as follows:

- We abstract and formalize synchronous vertex-centric computation as a second-order function that captures the higher-level computation behavior using recursive execution corresponding to dynamic programming on a graph. In contrast to the traditional vertex-centric computation model, which pushes (sends) information from a vertex to other vertices, our model is pull-based (or peek-based) in the sense that a vertex “peeks” on neighboring vertices to get information necessary for computation.

- We present Fregel, a functional DSL for declarative-style programming on large graphs that is based on the pulling-style vertex-centric model. It abstracts communication and aggregation by using comprehensions. Fregel encourages concise, compositional-style programming on large graphs by providing four second-order functions on graphs. Fregel is purely functional without any side effects. This functional nature enables various transformations and optimizations during the compilation process. As Fregel is a subset of Haskell, Haskell tools can be used to test and debug Fregel programs. The Haskell code of the Fregel interpreter in which Fregel programs can be executed is presented in Section 5. Though sequential, this interpreter is useful for checking Fregel programs.

- We show that a Fregel program can be compiled into a program for two vertex-centric frameworks through an intermediate representation (IR) that is independent of the target framework. We also present optimization methods for automatically removing inefficiencies from Fregel programs. The key idea is to use modern constraint solvers to identify inefficiencies. The declarative nature of Fregel programs enables such optimization problems to be directly reduced to constraint-solving problems. Fregel’s optimizing compilation frees programmers from problematic programming burdens. Experimental results demonstrated that the compiled code can be executed with reasonable and promising performance.

Fregel currently has three limitations compared with the existing Pregel-like frameworks Giraph and Pregel+. First, the target graph must be a static one that does not change shape or edge weights during execution. Second, a vertex can communicate only with adjacent vertices. Third, each vertex handles only fixed-size data. These limitations mean that algorithms that change the topology of the target graph, update edge weights, or use a variable-length data structure in each vertex cannot be described in Fregel. Removing these limitations by addressing the need to handle dynamism, for example, changing graph shapes and handling variable-length data on each vertex, is left for future work.

The remainder of this article is structured as follows. We start in Section 2 by explaining vertex-centric graph processing and describing its problems. In Section 3, we present our functional vertex-centric graph processing model. On the basis of this functional model, Section 4 describes the design of Fregel with its language constructs and presents many programming examples. In Section 5, we present an interpretive implementation of Fregel in Haskell. In Section 6, we present a detailed implementation of the Fregel compiler, which translates a given Fregel program into Giraph or Pregel+ code. Section 7 discusses optimization methods that remove inefficiencies in the compiled code. Section 8 presents the results of a wide-range evaluation using various programs for both Giraph and Pregel+. Related work is discussed in Section 9, and Section 10 concludes with a summary of the key points, concluding remarks, and mention of future work.

This article revises, expands, and synthesizes materials presented at the 21st ACM SIGPLAN International Conference on Functional Programming (ICFP 2016) (Emoto et al., 2016) and the 14th International Symposium on Functional and Logic Programming (FLOPS 2018) (Morihata et al., 2018). New materials include many practical program examples of Fregel, a redesign and implementation of the Fregel compiler that can generate both Giraph and Pregel+ code, and a wide-range evaluation of the Fregel system in terms of performance and memory usage on both Giraph and Pregel+.

2 Vertex-centric graph processing

Vertex-centric computation became widely used following the emergence of the Pregel framework (Malewicz et al., 2010). Pregel enables synchronous computation on the basis of the bulk synchronous parallel (BSP) model (Valiant, 1990) and supports procedural-style programming. Hereafter, we use “Pregel” both as the name of the framework and as the name of the BSP-based vertex-centric computation model.

2.1 Overview of vertex-centric graph processing

We explain vertex-centric computation by using Pregel for procedural-style programming through several small examples.

In Pregel, the vertices distributed on computational nodes iteratively execute one unit of their respective computation, a superstep, in parallel, followed by a global barrier synchronization. A superstep is defined as a common user-defined compute function that consists of communication between vertices, aggregation of values on all active vertices, and calculation of a value on each vertex. Since the programmer cannot specify the delivery order of messages, operations on delivered messages are implicitly assumed to be commutative and associative. After execution of the compute function by all vertices, global barrier synchronization is performed. This synchronization ensures the delivery of communication and aggregation messages. Messages sent to other vertices in a superstep are received by the destination vertices in the next superstep. Thus, only deadlock-free programs can be described.

As an example, let us consider a simple problem of marking all vertices of a graph reachable from the source vertex, for which the identifier is one. We call it the all-reachability problem hereafter.

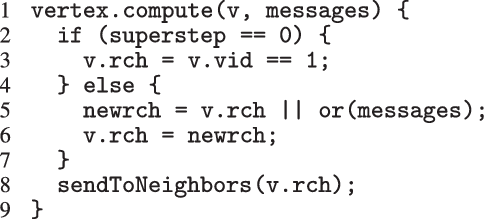

We start with a naive definition of the compute function, which is presented in Figure 1. Here, vertex.compute represents a compute function that is repeatedly executed on each vertex. Its first argument, v, is a vertex that executes this compute function, and its second argument, messages, is a list of delivered messages sent to v in the previous superstep. superstep is a global variable that holds the number of the current superstep, which begins from 0. The compute function is incomplete in the sense that its iterative computation never terminates. Nevertheless, it suffices for the explanation of vertex-centric computation. Termination control is discussed in Section 2.2.

Fig. 1. Incomplete and naive Pregel-like code for all-reachability problem.

Every vertex has a Boolean member variable rch that holds the marking information, that is, whether the vertex is judged to be reachable at the current superstep. The compute function accepts a vertex and its received messages as input. At the first superstep, only the source vertex, for which the identifier is one, is marked true, and the other vertices are marked false. Then each vertex sends its marking information to its neighboring vertices. At every superstep other than the first, each vertex combines its incoming messages by “or”ing them, which means that the vertex checks whether any message contains true. Finally, it “or”s the result with the current rch value, stores the result as the new marking information, and sends it to its neighboring vertices.
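The behavior described above can be sketched as a small sequential simulation in Haskell. This is only an illustration, not the code of Figure 1: the names `compute`, `step`, and `runNaive`, the list-based message representation, and the five-vertex edge list (chosen to agree with the graph g defined in Section 3.1) are assumptions introduced here. Because the naive program never terminates, the sketch runs a fixed number of supersteps.

```haskell
type VertexId = Int

-- Messages are (destination, payload) pairs delivered in the next superstep.
type Message = (VertexId, Bool)

-- Directed edges (source, destination) of an assumed five-vertex example graph.
edges :: [(VertexId, VertexId)]
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (5, 4)]

vertexIds :: [VertexId]
vertexIds = [1 .. 5]

-- One message per outgoing edge of v.
sendToNeighbors :: VertexId -> Bool -> [Message]
sendToNeighbors v x = [ (dst, x) | (src, dst) <- edges, src == v ]

-- Naive compute function for a single vertex: returns the new rch value
-- and the messages to deliver in the next superstep.
compute :: Int -> VertexId -> Bool -> [Bool] -> (Bool, [Message])
compute superstep v rch messages = (rch', sendToNeighbors v rch')
  where
    rch' | superstep == 0 = v == 1            -- only the source is marked
         | otherwise      = rch || or messages

-- One global superstep over all vertices, followed by message delivery.
step :: Int -> ([(VertexId, Bool)], [Message]) -> ([(VertexId, Bool)], [Message])
step s (state, inbox) = (state', concat outs)
  where
    results = [ (v, compute s v rch [ m | (d, m) <- inbox, d == v ])
              | (v, rch) <- state ]
    state'  = [ (v, r) | (v, (r, _)) <- results ]
    outs    = [ ms | (_, (_, ms)) <- results ]

-- Run the first n supersteps (numbered from 0) and return the rch values.
runNaive :: Int -> [(VertexId, Bool)]
runNaive n = fst (foldl (flip step) (initial, []) [0 .. n - 1])
  where initial = [ (v, False) | v <- vertexIds ]
```

Under these assumptions, `runNaive 3` marks vertices 1 to 4 and leaves vertex 5 unmarked. Note that every vertex sends a message along every outgoing edge in every superstep, including messages carrying false.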

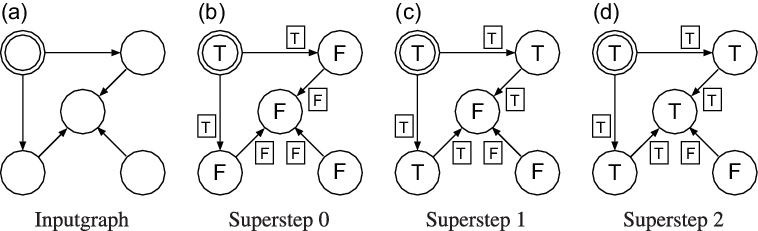

Figure 2 demonstrates how three supersteps are used to mark all reachable vertices for an input graph with five vertices. The T and F in the figure stand for true and false, respectively, and the double circle indicates the starting vertex.

Fig. 2. First three supersteps of the naive program for all-reachability problem.

Though the definition of the compute function is quite simple and easy to understand, the compute function has three apparent inefficiency problems in addition to the non-termination problem.

1. A vertex need not send false to its neighboring vertices, because false never switches a neighbor’s rch value to true.

2. A vertex need not send true more than once, because sending it only once suffices for marking its neighbors as true.

3. It is not necessary to process all vertices at every superstep except the first one. Only those that receive messages from neighbors need to be processed.

The compute function also has two potential inefficiency problems.

4. Global barrier synchronization after every superstep might increase overhead. Though Pregel uses synchronous execution, iteration of the compute function could be performed asynchronously without global barrier synchronization.

5. Though the compute function is executed independently by every vertex, a set of vertices placed on the same computational node could cooperate for better performance in the computation of vertex values in the set.

The last two inefficiencies have already been recognized, and mechanisms have been proposed to remove them (Gonzalez et al., 2012; Yan et al., 2014a).

2.2 Inactivating vertices

To address the apparent inefficiencies, Pregel and many Pregel-like frameworks such as Giraph and Pregel+ introduced an “active” property for each vertex. During iterative execution of the compute function, each vertex is either active or inactive. Initially, all vertices are active. If nothing needs to be done on a vertex, the vertex can become inactive explicitly by voting to halt, which means inactivating itself. At each superstep, only active vertices take part in the calculation of the compute function. An inactive vertex becomes active again when it is sent a message from another vertex. The entire iterative processing for a graph terminates when all vertices have become inactive and no unreceived messages remain. Thus, vertex inactivation is used to control program termination.

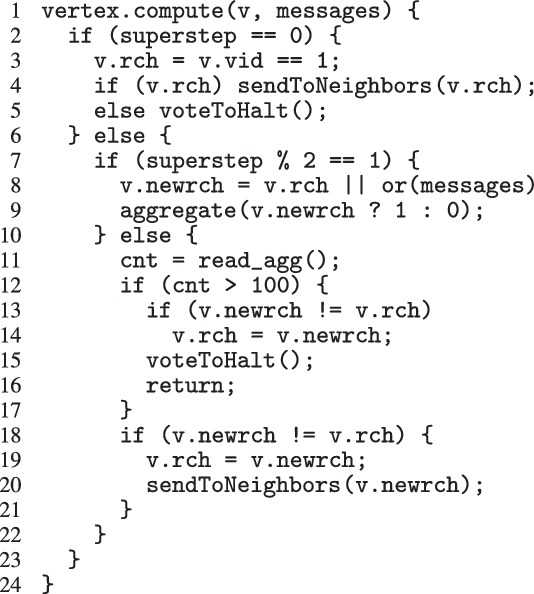

Figure 3 presents Pregel code for the all-reachability problem that remedies the apparent inefficiencies and also terminates when the rch values on all vertices no longer change.

Fig. 3. Improved Pregel-like code for all-reachability problem.

At the first superstep, only the source vertex is marked true, and it sends its rch value to its neighbors. Then all vertices inactivate themselves by voting to halt. At the second and subsequent supersteps, only those vertices that have messages reactivate, receive the messages, and calculate their newrch values. If newrch and the current rch are not the same, the vertex updates its rch value and sends it to its neighboring vertices. Then, all vertices inactivate again by voting to halt. If newrch and the current rch are the same on all vertices, they inactivate simultaneously, and the iterative computation of the compute function terminates.
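The control flow just described can be sketched as a sequential Haskell simulation. This is not the code of Figure 3: the function names `superstep0`, `superstepN`, and `runImproved`, the message representation, and the example edge list are assumptions made for illustration. A vertex with an empty inbox is treated as inactive, and the run ends when no messages are in flight.

```haskell
type VertexId = Int
type Message  = (VertexId, Bool)   -- (destination, payload)

-- Directed edges (source, destination) of an assumed five-vertex example graph.
edges :: [(VertexId, VertexId)]
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (5, 4)]

sendToNeighbors :: VertexId -> Bool -> [Message]
sendToNeighbors v x = [ (dst, x) | (src, dst) <- edges, src == v ]

-- Superstep 0: only the source (identifier 1) is marked and sends its value.
-- Every vertex then votes to halt.
superstep0 :: [VertexId] -> ([(VertexId, Bool)], [Message])
superstep0 vs = ([ (v, v == 1) | v <- vs ], sendToNeighbors 1 True)

-- Later supersteps: a vertex runs only if it has received messages, and it
-- sends only when its rch value changes. Every vertex votes to halt afterwards.
superstepN :: ([(VertexId, Bool)], [Message]) -> ([(VertexId, Bool)], [Message])
superstepN (state, inbox) = (map fst results, concatMap snd results)
  where
    results = [ computeOne v rch [ m | (d, m) <- inbox, d == v ]
              | (v, rch) <- state ]
    computeOne v rch [] = ((v, rch), [])          -- inactive: nothing to do
    computeOne v rch msgs
      | newrch /= rch = ((v, newrch), sendToNeighbors v newrch)
      | otherwise     = ((v, rch), [])
      where newrch = rch || or msgs

-- Iterate until no messages remain. Returns the final rch values and the
-- number of supersteps executed.
runImproved :: [VertexId] -> ([(VertexId, Bool)], Int)
runImproved vs = go 1 (superstep0 vs)
  where
    go n (state, [])    = (state, n)
    go n (state, inbox) = go (n + 1) (superstepN (state, inbox))
```

On the assumed graph, `runImproved [1 .. 5]` stops after three supersteps with vertices 1 to 4 marked. Only true values are ever sent, and each vertex sends at most once, which addresses the first three inefficiencies.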

As can be seen from the code in Figure 3, to remove the apparent inefficiencies, a compute function based on the Pregel model describes communications and termination control explicitly. This makes defining compute functions unintuitive and difficult.

When aggregations are necessary, the situation becomes worse. For example, suppose that we want to mark the reachable vertices and stop when we have a sufficient number (N) of them. For simplicity, we assume that there are more than 100 reachable vertices in the target graph. We call this problem, in which $N = 100$, the 100-reachability problem. At each superstep, the compute function needs to count the number of currently reachable vertices to determine whether it should continue or halt. To enable acquiring such global information, Pregel supports a mechanism called aggregation, which collects data from all active vertices and aggregates them by using a specified operation such as sum or max. Each vertex can use the aggregation result in the next superstep. By using aggregation to count the number of vertices that are marked true, we can solve the 100-reachability problem, as shown in the vertex program in Figure 4.

Fig. 4. Pregel-like code for 100-reachability problem.

Note that aggregation should be done before the check for the number of reachable vertices. This order is guaranteed by using the odd supersteps to compute aggregation and the even supersteps to check the number. The programmer must explicitly assign states to supersteps so that different supersteps behave differently. The value of newrch is set in an odd superstep and read in the next even superstep. Since the extent of a local variable is one execution of the compute function in a superstep, newrch has to be changed from a local variable in Figure 3 to a member variable of a vertex in Figure 4.

Only active vertices participate in the aggregation, because inactive vertices do not execute the compute function. Thus, vertices marked true should not inactivate, that is, should not vote to halt, in order to determine the precise number of reachable vertices. This subtle control of inactivation is error-prone no matter how careful the programmer is.

The program for the 100-reachability problem shows that explicit state controls and subtle termination controls make the program difficult to describe and understand.
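For contrast, the intent of the N-reachability computation can be written as a short sequential Haskell sketch once the explicit state and inactivation controls are set aside. This is not the code of Figure 4: it collapses the odd and even supersteps into one logical round, and the names `aggregateCount`, `propagate`, and `runNReach`, the example edge list, and the extra fixpoint check (added so that the sketch also terminates when fewer than N vertices are reachable) are assumptions introduced here.

```haskell
type VertexId = Int

-- Directed edges (source, destination) of an assumed five-vertex example graph.
edges :: [(VertexId, VertexId)]
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (5, 4)]

-- Sum aggregation: the number of vertices currently marked true.
aggregateCount :: [(VertexId, Bool)] -> Int
aggregateCount state = length [ () | (_, True) <- state ]

-- One propagation round: a vertex becomes marked if any in-neighbor is marked.
propagate :: [(VertexId, Bool)] -> [(VertexId, Bool)]
propagate state =
  [ (v, rch || or [ marked src | (src, dst) <- edges, dst == v ])
  | (v, rch) <- state ]
  where marked u = lookup u state == Just True

-- Stop as soon as at least n vertices are marked, or when nothing changes.
runNReach :: Int -> [VertexId] -> [(VertexId, Bool)]
runNReach n vs = go [ (v, v == 1) | v <- vs ]
  where
    go state
      | aggregateCount state >= n = state
      | next == state             = state
      | otherwise                 = go next
      where next = propagate state
```

With the threshold set to 3 on the assumed graph, `runNReach 3 [1 .. 5]` stops after one round, with vertices 1 to 3 marked and vertex 4 still unmarked.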

2.3 Asynchronous execution

For the fourth potential inefficiency, asynchronous execution, in which vertex computations are processed without global barrier synchronization, can be considered instead of synchronous execution. Removing barriers could improve the efficiency of the vertex-centric computation. For the all-reachability problem, both synchronous and asynchronous executions lead to the same solution. Generally speaking, however, the two execution styles do not always yield the same result; this depends on the algorithm. In addition, even if they yield the same result, which of the two execution styles is more efficient depends on the situation.

Some vertex-centric frameworks, for example, GiraphAsync (Liu et al., 2016), use asynchronous execution. There are also frameworks that support both synchronous and asynchronous executions, such as GraphLab (Low et al., 2012), GRACE (Wang et al., 2013), and PowerSwitch (Xie et al., 2015).

2.4 Grouping related vertices

To cope with the fifth potential inefficiency, placing a group of related vertices on the same computational node and executing all vertex computations in the group as a single unit of processing could improve efficiency. This means enlarging the processing unit from a single vertex to a set of vertices. Many frameworks have been developed on the basis of this idea. For example, NScale (Quamar et al., 2014), Giraph++ (Tian et al., 2013), and GoFFish (Simmhan et al., 2014) are based on subgraph-centric computation, and Blogel (Yan et al., 2014a) is based on block-centric computation. Again, which of the two computation styles, vertex-centric or group-based, is more efficient depends on the program.

2.5 Fregel’s approach

Fregel enables the programmer to write vertex-centric programs declaratively, without the complex controls described in Section 2.2, and automatically eliminates the apparent inefficiencies of naturally described programs. Since explicit, complex, and imperative controls over communications, terminations, and so forth are removed from a program, the vertex computation becomes a functional description that “peeks” at neighboring vertices to obtain the information necessary for computation.

To solve the all-reachability problem in Fregel, the programmer writes a natural functional program that corresponds to the Pregel program presented in Figure 1 with a separately specified termination condition. Depending on the compilation options specified by the programmer, the Fregel compiler applies optimizations for reducing inefficiencies in the program and generates a program that can run in a procedural vertex-centric graph processing framework.

As a solution for the fourth potential inefficiency, we propose a method for removing the barrier synchronization and thereby enabling asynchronous execution. This optimization also enables removing the fifth potential inefficiency. In asynchronous execution, the order of processing vertices does not matter; therefore, a group of related vertices can be processed independently from other groups of vertices. To improve the efficiency of processing vertices in a group, we propose introducing priorities for processing vertices.

3 Functional model for synchronous vertex-centric computation

We first modeled the synchronous vertex-centric computation as a higher-order function. Then, on the basis of this model, we designed Fregel, a functional DSL. In this section, we introduce our functional model by using Haskell notation. The Fregel language will be described in Section 4.

In the original Pregel, data communication is viewed as explicit pushing in which a vertex sends data to another vertex, typically to its adjacent vertex along an outgoing edge. Thus, a Pregel program describes data exchange between two vertices explicitly, for example, by using sendToNeighbors in Figure 1, which results in a program with an imperative form. Since our aim is to create a functional model of vertex-centric computation, the explicit-pushing style, which has a high affinity with imperative programs, is inappropriate.

We thus designed our functional model so that data communication is viewed as implicit pulling in which a vertex pulls (or “peeks at”) data in an adjacent vertex connected by an incoming edge. The iterative computation at each vertex is defined in terms of a function, and its return value, that is, the result of a single repetition, is implicitly sent to the adjacent vertices. Every adjacent vertex also implicitly receives the communicated value via an argument of the function.

3.1 Definition of datatypes

First, we define the datatypes needed for our functional model. Let $\mathit{Graph}~a~b$ be the directed graph type, where a is the vertex value type and b is the edge weight type. The vertices have type $\mathit{Vertex}~a~b$, and the edges have type $\mathit{Edge}~a~b$. A vertex of type $\mathit{Vertex}~a~b$ has a unique vertex identifier (a positive integer value), a value of type a, and a list of incoming edges of type $[\,\mathit{Edge}~a~b\,]$. An edge of type $\mathit{Edge}~a~b$ is a pair of the edge weight of type b and the source vertex of this edge. $\mathit{Graph}~a~b$ is a list of all vertices, each of which has the type $\mathit{Vertex}~a~b$.

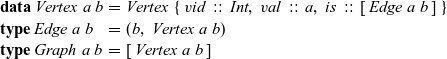

The definitions of these datatypes are as follows, where $\mathit{vid}$, $\mathit{val}$, and $\mathit{is}$ are the identifier, value, and incoming edges of the vertex, respectively:

\begin{equation*}
\begin{array}{lcl}
\textbf{data}~\mathit{Vertex}~a~b & = & \mathit{Vertex}~\{~\mathit{vid}~::~\mathit{Int},~\mathit{val}~::~a,~\mathit{is}~::~[\,\mathit{Edge}~a~b\,]~\} \\
\textbf{type}~\mathit{Edge}~a~b & = & (b,~\mathit{Vertex}~a~b) \\
\textbf{type}~\mathit{Graph}~a~b & = & [\,\mathit{Vertex}~a~b\,]
\end{array}
\end{equation*}

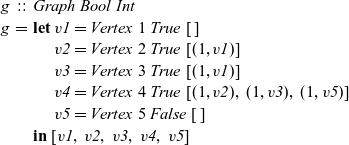

For simplicity, we assume that continuous identifiers starting from one are assigned to vertices and that all vertices in a list representing a graph are ordered by their vertex identifiers. As an example, the graph in Figure 2(d) can be defined by the following data structure, where $\mathit{v1}$, $\mathit{v2}$, $\mathit{v3}$, $\mathit{v4}$, and $\mathit{v5}$ are the upper-left, upper-right, lower-left, middle, and lower-right vertices, respectively. We assume that all edges have weight 1:

\begin{equation*}
\begin{array}{lcl}
g & :: & \mathit{Graph}~\mathit{Bool}~\mathit{Int} \\
g & = & \textbf{let}~\mathit{v1} = \mathit{Vertex}~1~\mathit{True}~[\,] \\
 & & \phantom{\textbf{let}}~\mathit{v2} = \mathit{Vertex}~2~\mathit{True}~[(1, \mathit{v1})] \\
 & & \phantom{\textbf{let}}~\mathit{v3} = \mathit{Vertex}~3~\mathit{True}~[(1, \mathit{v1})] \\
 & & \phantom{\textbf{let}}~\mathit{v4} = \mathit{Vertex}~4~\mathit{True}~[(1,\mathit{v2}),~(1,\mathit{v3}),~(1,\mathit{v5})] \\
 & & \phantom{\textbf{let}}~\mathit{v5} = \mathit{Vertex}~5~\mathit{False}~[\,] \\
 & & \textbf{in}~[\mathit{v1},~\mathit{v2},~\mathit{v3},~\mathit{v4},~\mathit{v5}]
\end{array}
\end{equation*}
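The datatype definitions and the example graph above transcribe directly into plain Haskell source. The sketch below does so and adds one hypothetical helper, `inNeighbors`, which is not part of the article and only illustrates how a vertex reaches its in-neighbors through its incoming edges.

```haskell
-- A vertex carries its identifier, its value, and its incoming edges.
data Vertex a b = Vertex { vid :: Int, val :: a, is :: [Edge a b] }

-- An edge is a pair of its weight and its source vertex.
type Edge a b = (b, Vertex a b)

-- A graph is the list of all its vertices, ordered by identifier.
type Graph a b = [Vertex a b]

-- The example graph: all edges have weight 1.
g :: Graph Bool Int
g = let v1 = Vertex 1 True  []
        v2 = Vertex 2 True  [(1, v1)]
        v3 = Vertex 3 True  [(1, v1)]
        v4 = Vertex 4 True  [(1, v2), (1, v3), (1, v5)]
        v5 = Vertex 5 False []
    in  [v1, v2, v3, v4, v5]

-- Hypothetical helper: identifiers of the vertices a vertex can "peek" at.
inNeighbors :: Vertex a b -> [Int]
inNeighbors v = [ vid u | (_, u) <- is v ]
```

Because each edge stores its source vertex rather than its destination, following `is` walks the graph against the direction of the edges, which is what the pull-based view of communication requires.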

3.2 Description of our model

In synchronous vertex-centric parallel computation, each vertex periodically and synchronously performs the following processing steps, which collectively we call a logical superstep, or LSS for short.

1. Each vertex receives the data computed in the previous LSS from the adjacent vertices connected by incoming edges.

2. In accordance with the problem to be solved, the vertex performs its respective computation using the received data, the data it computed in the previous LSS, and the weights of the incoming edges. If necessary, the vertex acquires global information using aggregation during computation.

3. The vertex sends the result of the computation to all adjacent vertices along its outgoing edges. The adjacent vertices receive the data in the next LSS.

These three processing steps are performed in each LSS. An LSS represents a semantically connected sequence of actions at each vertex. Each vertex repeatedly executes this “sequence of actions.” An LSS is “logical” in the sense that it might contain aggregation and thus might take more than one Pregel superstep. We represent an LSS as a single function and call it an LSS function. As explained earlier, an LSS function does not explicitly describe sending and receiving data between a vertex and the adjacent vertices.

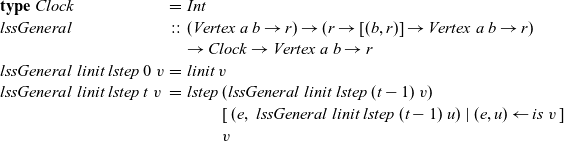

The arguments given to an LSS function are an integer value called the clock and the vertex on which the LSS function is repeatedly performed. A clock represents the number of iterations of the LSS function. Note that the result of an LSS function may have a type different from that of the vertex value. Thus, the type of an LSS function is $\mathit{Int}\rightarrow \mathit{Vertex}~a~b \rightarrow r$, where a is the vertex value type and r is the result type.

We express the LSS function using two functions. One is an initialization function, which defines the behavior when the clock is 0, and the other is a step function, which defines the behavior when the clock is greater than 0. Let t be a clock value. The initialization function takes as its argument a vertex and returns the result for

![]() ${t = 0}$

. Thus, its type is

${t = 0}$

. Thus, its type is

![]() ${\mathit{Vertex}~a~b \rightarrow r}$

. The step function takes three arguments: the result for the vertex at the previous clock, a list of pairs, each of which is composed by the weight of an incoming edge and the result of the adjacent vertex connected by the edge at the previous clock, and the vertex itself. Thus, its type is

${\mathit{Vertex}~a~b \rightarrow r}$

. The step function takes three arguments: the result for the vertex at the previous clock, a list of pairs, each of which is composed by the weight of an incoming edge and the result of the adjacent vertex connected by the edge at the previous clock, and the vertex itself. Thus, its type is

![]() ${r \rightarrow [(b,r)] \rightarrow \mathit{Vertex}~a~b \rightarrow r}$

. On the basis of these two functions, a general form of the LSS function is defined in terms of

${r \rightarrow [(b,r)] \rightarrow \mathit{Vertex}~a~b \rightarrow r}$

. On the basis of these two functions, a general form of the LSS function is defined in terms of

![]() ${\mathit{lssGeneral}}$

, which can be defined as a fold-like second-order function as follows:

${\mathit{lssGeneral}}$

, which can be defined as a fold-like second-order function as follows:

\begin{equation*}\begin{array}{lcl}\textbf{type}~Clock &=& \mathit{Int} \\\mathit{lssGeneral} &::& (\mathit{Vertex}~a~b \rightarrow r) \rightarrow (r \rightarrow [(b,r)] \rightarrow \mathit{Vertex}~a~b \rightarrow r) \\ & & \rightarrow Clock \rightarrow \mathit{Vertex}~a~b \rightarrow r \\lssGeneral~linit~lstep~0~v &=& linit~v \\lssGeneral~linit~lstep~t~v &=& lstep~(\mathit{lssGeneral}~linit~lstep~(t-1)~v) \\ & & \phantom{lstep}~ [\>{(e,~\mathit{lssGeneral}~linit~lstep~(t-1)~u)}~|~{(e,u)} \leftarrow {\mathit{is}~v}{}\,] \\ & & \phantom{lstep}~v\end{array}\end{equation*}

An LSS function, lss, for a specific problem is defined by giving appropriate initialization and step functions, ${\mathit{ainit}}$ and ${\mathit{astep}}$, as actual arguments to ${\mathit{lssGeneral}}$, that is, ${\mathit{lss}~=~\mathit{lssGeneral}~\mathit{ainit}~\mathit{astep}}$.

Let ${g = [\,v_1,~v_2,~v_3,~\ldots\,]}$ be the target graph of type ${\mathit{Graph}~a~b}$ of the computation, where we assume that the identifier of ${v_k}$ is k. The list of computation results of LSS function ${\mathit{lss}}$ on all vertices in the graph at clock t is ${[\,\mathit{lss}~t~v_1,~\mathit{lss}~t~v_2,~\mathit{lss}~t~v_3,~\ldots\,] :: [\,r\,]}$. Further, let ${g_t}$ be a graph constructed from the results of ${\mathit{lss}}$ on all vertices at clock t, that is, ${g_t = \mathit{makeGraph}~g~[\,\mathit{lss}~t~v_1,~\mathit{lss}~t~v_2,~\mathit{lss}~t~v_3,~\ldots\,]}$. Here, ${\mathit{makeGraph}~g~[\,r_1,~r_2,~\ldots\,]}$ returns a graph with the same shape as g for which the i-th vertex has the value ${r_i}$ and the edges have the same weights as those in g:

\begin{equation*}\begin{array}{lcl}\mathit{makeGraph} &::& \mathit{Graph}~a~b \rightarrow [\,r\,] \rightarrow \mathit{Graph}~r~b\\\mathit{makeGraph}~g~xs &=& newg\\ & & \textbf{where}~newg~=\\ & & \phantom{\textbf{where}~}\>[\>\mathit{Vertex}~k~(xs~!!~(k-1))\\ & & \phantom{\textbf{where}~\>[\>\mathit{Vertex}~} [\>{(e,~newg~!!~(k'-1))}~|~{(e,~\mathit{Vertex}~k'~\_~\_)} \leftarrow {es}{}\,]\\ & & \phantom{\textbf{where}~\>[\>}~|~\mathit{Vertex}~k~\_~es \leftarrow g\>]\end{array}\end{equation*}
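The following sketch transcribes makeGraph into plain Haskell to show how the new graph refers to itself: the edges of each new vertex point into newg, so the cyclic shape is rebuilt lazily. The Vertex representation is again an assumption, and g, val, inVals, and labelled are hypothetical helpers used only for illustration.

```haskell
-- Assumed representation: identifier, value, incoming edges.
data Vertex a b = Vertex Int a [(b, Vertex a b)]
type Graph a b = [Vertex a b]

makeGraph :: Graph a b -> [r] -> Graph r b
makeGraph g xs = newg
  where
    -- Each rebuilt edge points at a vertex of newg itself (identifier k'
    -- sits at position k' - 1), which relies on lazy evaluation.
    newg = [ Vertex k (xs !! (k - 1))
                    [ (e, newg !! (k' - 1)) | (e, Vertex k' _ _) <- es ]
           | Vertex k _ es <- g ]

-- Hypothetical input: the cycle 1 -> 2 -> 3 -> 1 with weights 6, 7, and 5.
g :: Graph () Int
g = [v1, v2, v3]
  where v1 = Vertex 1 () [(5, v3)]
        v2 = Vertex 2 () [(6, v1)]
        v3 = Vertex 3 () [(7, v2)]

val :: Vertex a b -> a
val (Vertex _ x _) = x

-- Weights of the incoming edges paired with the values at their sources.
inVals :: Vertex a b -> [(b, a)]
inVals (Vertex _ _ es) = [ (e, val u) | (e, u) <- es ]

labelled :: Graph String Int
labelled = makeGraph g ["a", "b", "c"]
```

In this sketch, the first vertex of labelled carries "a", and its single incoming edge keeps weight 5 while now leading to the relabelled vertex 3, whose value is "c".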

Then the infinite stream (list) of graphs

![]() ${[\,g_0,~g_1,~g_2,~\ldots\,]}$

represents infinite iterations of LSS function

${[\,g_0,~g_1,~g_2,~\ldots\,]}$

represents infinite iterations of LSS function

![]() ${\mathit{lss}}$

. This infinite stream can be produced by using the higher-order function

${\mathit{lss}}$

. This infinite stream can be produced by using the higher-order function

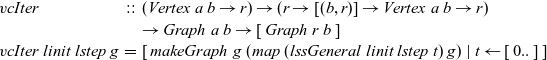

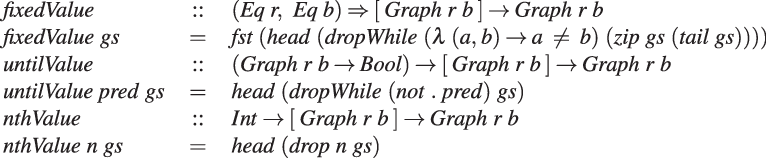

![]() ${\mathit{vcIter}}$

, which takes as its arguments initialization and step functions and a target graph represented by a list of vertices:

${\mathit{vcIter}}$

, which takes as its arguments initialization and step functions and a target graph represented by a list of vertices:

\begin{equation*}\begin{array}{lcl}\mathit{vcIter} &::& (\mathit{Vertex}~a~b \rightarrow r) \rightarrow (r \rightarrow [(b,r)] \rightarrow \mathit{Vertex}~a~b \rightarrow r) \\ & & \rightarrow \mathit{Graph}~a~b \rightarrow [\,\mathit{Graph}~r~b\,] \\\mathit{vcIter}~linit~lstep~g &=& [\>{\mathit{makeGraph}~g~(map~(\mathit{lssGeneral}~linit~lstep~t)~g)}~|~{t} \leftarrow {[\,0..\,]}{}\,]\end{array}\end{equation*}

Though ${\mathit{vcIter}}$ produces an infinite stream of graphs, we want to terminate its computation at an appropriate clock and return the graph at this clock as the final result. We can give a termination condition to the infinite sequence from outside and obtain the desired result by using ${\mathit{term}~(\mathit{vcIter}~linit~lstep~g)}$, where ${\mathit{term}}$ selects the desired final result from the sequence of graphs to terminate the computation.

Figure 5 presents example termination functions. A typical termination point is when the computation falls into a steady state, after which graphs in the infinite list never change. The termination function fixedValue returns the graph of the steady state of a given infinite list. Another termination point is when a graph in the stream comes to satisfy a specified condition. We can use the higher-order termination function untilValue for this case. It takes a predicate function specifying the desired condition and returns the first graph that satisfies this predicate from a given infinite stream. Finally, nthValue retrieves the graph at a given clock.

Fig. 5. Termination functions.
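Since the figure itself is not reproduced here, the following is only a sketch of how the three termination functions described above might be written. It is stated over streams of arbitrary values rather than graphs, and the definitions are assumptions that may differ from those in Figure 5. The stream clocked is a hypothetical stand-in for a stream of graphs.

```haskell
-- First element that equals its successor, i.e. the steady state.
fixedValue :: Eq a => [a] -> a
fixedValue (x : y : rest)
  | x == y    = x
  | otherwise = fixedValue (y : rest)
fixedValue _ = error "fixedValue: stream ended before reaching a steady state"

-- First element that satisfies the given predicate.
untilValue :: (a -> Bool) -> [a] -> a
untilValue p = head . filter p

-- Element at clock n, where clock 0 is the head of the stream.
nthValue :: Int -> [a] -> a
nthValue n xs = xs !! n

-- Hypothetical stream 0, 3, 6, 9, 10, 10, ... that saturates at 10.
clocked :: [Int]
clocked = iterate (\x -> min 10 (x + 3)) 0
```

On this stream, fixedValue selects 10, untilValue (> 5) selects 6, and nthValue 2 selects 6. For streams of graphs, the equality test would have to compare vertex values only, because structural equality on a cyclic graph representation need not terminate.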

We define ${\mathit{vcModel}}$ as the composition of a termination function and ${\mathit{vcIter}}$. We regard the function ${\mathit{vcModel}}$ as representing functional vertex-centric graph processing:

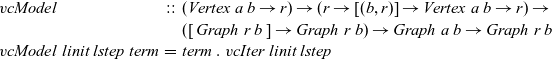

\begin{equation*}\begin{array}{lcl}\mathit{vcModel} &::& (\mathit{Vertex}~a~b \rightarrow r) \rightarrow (r \rightarrow [(b,r)] \rightarrow \mathit{Vertex}~a~b \rightarrow r) \rightarrow \\ & & ([\,\mathit{Graph}~r~b\,] \rightarrow \mathit{Graph}~r~b) \rightarrow \mathit{Graph}~a~b \rightarrow \mathit{Graph}~r~b \\\mathit{vcModel}~linit~lstep~\mathit{term} &=& \mathit{term}~.~\mathit{vcIter}~linit~lstep\end{array}\end{equation*}

An LSS function defined in terms of ${\mathit{lssGeneral}}$ has a recursive form on the basis of the structure of the input graph. Although a graph has a recursive structure, a recursive call of an LSS function does not cause an infinite recursion, because a recursive call always uses the prior clock, that is, ${t-1}$.

3.3 Simple example

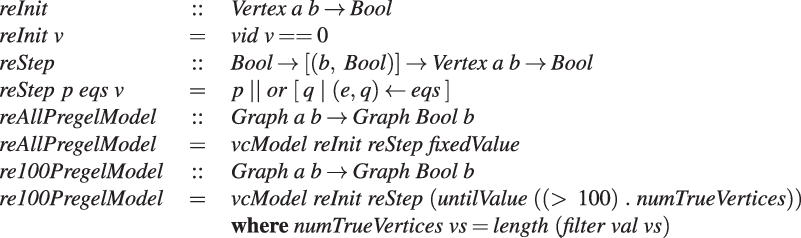

Figure 6 presents the formulation of the reachability problems on the basis of the proposed functional model, where reAllPregelModel is for the all-reachability problem and ${\mathit{re100PregelModel}}$ is for the 100-reachability problem. Variable numTrueVertices is the number of vertices with a value of True for the target graph. The only difference between these two formulations is the termination condition; the all-reachability problem formulation uses fixedValue, while the 100-reachability problem one uses untilValue. Note that the LSS function characterized by reInit and reStep has no description for the aggregation that appears in the original Pregel code (Figure 4).

Fig. 6. Formulation of reachability problems in our model.
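To make the formulation concrete, the sketch below assembles the definitions of this section into one self-contained Haskell module. The names reInit, reStep, numTrueVertices, and reAllPregelModel follow the text, but their bodies, the Vertex representation, and the graph-level fixedValue and untilValue are assumptions rather than the contents of Figure 6. The function reAtLeastPregelModel is a hypothetical generalization of the 100-reachability formulation to a threshold n, and sample is a hypothetical input.

```haskell
data Vertex a b = Vertex Int a [(b, Vertex a b)]
type Graph a b = [Vertex a b]

vid :: Vertex a b -> Int
vid (Vertex k _ _) = k

val :: Vertex a b -> a
val (Vertex _ x _) = x

is :: Vertex a b -> [(b, Vertex a b)]
is (Vertex _ _ es) = es

lssGeneral :: (Vertex a b -> r) -> (r -> [(b, r)] -> Vertex a b -> r)
           -> Int -> Vertex a b -> r
lssGeneral linit _     0 v = linit v
lssGeneral linit lstep t v =
  lstep (lssGeneral linit lstep (t - 1) v)
        [ (e, lssGeneral linit lstep (t - 1) u) | (e, u) <- is v ]
        v

makeGraph :: Graph a b -> [r] -> Graph r b
makeGraph g xs = newg
  where
    newg = [ Vertex k (xs !! (k - 1))
                    [ (e, newg !! (k' - 1)) | (e, Vertex k' _ _) <- es ]
           | Vertex k _ es <- g ]

vcIter :: (Vertex a b -> r) -> (r -> [(b, r)] -> Vertex a b -> r)
       -> Graph a b -> [Graph r b]
vcIter linit lstep g =
  [ makeGraph g (map (lssGeneral linit lstep t) g) | t <- [0 ..] ]

vcModel :: (Vertex a b -> r) -> (r -> [(b, r)] -> Vertex a b -> r)
        -> ([Graph r b] -> Graph r b) -> Graph a b -> Graph r b
vcModel linit lstep term = term . vcIter linit lstep

-- Assumed termination functions; graphs are compared by vertex values only.
fixedValue :: Eq r => [Graph r b] -> Graph r b
fixedValue (g0 : g1 : gs)
  | map val g0 == map val g1 = g0
  | otherwise                = fixedValue (g1 : gs)
fixedValue _ = error "fixedValue: stream ended"

untilValue :: (Graph r b -> Bool) -> [Graph r b] -> Graph r b
untilValue p = head . filter p

reInit :: Vertex a b -> Bool
reInit v = vid v == 1

reStep :: Bool -> [(b, Bool)] -> Vertex a b -> Bool
reStep old ins _ = old || or (map snd ins)

numTrueVertices :: Graph Bool b -> Int
numTrueVertices = length . filter val

reAllPregelModel :: Graph a b -> Graph Bool b
reAllPregelModel = vcModel reInit reStep fixedValue

reAtLeastPregelModel :: Int -> Graph a b -> Graph Bool b
reAtLeastPregelModel n =
  vcModel reInit reStep (untilValue ((>= n) . numTrueVertices))

-- Hypothetical input: the chain 1 -> 2 -> 3 plus an isolated vertex 4.
sample :: Graph () ()
sample = [v1, v2, v3, v4]
  where v1 = Vertex 1 () []
        v2 = Vertex 2 () [((), v1)]
        v3 = Vertex 3 () [((), v2)]
        v4 = Vertex 4 () []
```

With this input, reAllPregelModel marks vertices 1 to 3 as reachable and leaves vertex 4 unreachable, while reAtLeastPregelModel 2 stops at clock 1, when only vertices 1 and 2 are marked. Only the termination argument differs between the two, and neither reInit nor reStep mentions aggregation.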

3.4 Limitations of our model

Our model suffers from the following limitations:

- Data can be exchanged only between adjacent vertices.
- A vertex cannot change the shape of the graph or the weight of an edge.

In the Pregel model, a vertex can send data to a vertex other than the adjacent ones as long as it can specify the destination vertex. In our model, unless global aggregation is used, data can be exchanged only between adjacent vertices directly connected by a directed edge. A vertex-centric graph processing model with this limitation, which is sometimes called the GAS (gather-apply-scatter) model, has been used by many researchers (Gonzalez et al. Reference Gonzalez, Low, Gu, Bickson and Guestrin2012; Bae & Howe Reference Bae and Howe2015; Sengupta et al., Reference Sengupta, Song, Agarwal and Schwan2015).

Furthermore, in our model, computation on a vertex cannot change the shape of the graph or the weight of an edge. This limitation makes it impossible to represent some algorithms, including those based on the pointer jumping technique. However, even under this additional limitation, many practical graph algorithms can be described. The Fregel language inherits these limitations because it was designed on the basis of our model. As mentioned in Section 1, removing these limitations from the Fregel language is left for future work.

3.5 Features of our model

Our model has four notable features.

First, our model is purely functional; computation that is periodically and synchronously performed at every vertex is defined as an LSS function that has no side effects and has the form of a structural recursion on the graph structure. The recursive execution of such an LSS function can be regarded as dynamic programming on the graph on the basis of memoization.

Second, an LSS function does not have explicit descriptions for sending or receiving data between adjacent vertices. Instead, it uses recursive calls of the LSS function for adjacent vertices, which can be regarded as an implicit pulling style of communication.

Third, an LSS function enables the programmer to describe a series of processing steps as a whole, whereas in the original Pregel model the same steps could be forcibly divided into small supersteps by the barrier synchronization of the BSP model.

Fourth, the entire computation for a graph is represented as an infinite list of resultant graphs in ascending clock time order. The LSS function has no description for the termination of the computation. Instead, termination is described by a function that appropriately chooses the desired result from an infinite list.
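The dynamic-programming reading mentioned in the first feature can be sketched as follows. Instead of re-evaluating recursive calls, each clock's results are kept in a table and the next table is computed from it, with every vertex pulling the previous results of its in-neighbors. This is an illustrative simplification, not the model's definition: the graph is represented by in-edge lists over vertex identifiers, the step function omits its vertex argument, and the names InEdges, stepAll, tables, cycle3, and reachTables are hypothetical.

```haskell
-- Incoming edges of vertex k are stored at position k - 1;
-- each edge is (weight, identifier of the source vertex).
type InEdges b = [[(b, Int)]]

-- One clock tick: every vertex combines its own previous result with the
-- previous results of its in-neighbors, looked up in the table prev.
stepAll :: (r -> [(b, r)] -> r) -> InEdges b -> [r] -> [r]
stepAll lstep ins prev =
  [ lstep old [ (e, prev !! (u - 1)) | (e, u) <- es ]
  | (old, es) <- zip prev ins ]

-- Stream of result tables in ascending clock order.
tables :: (Int -> r) -> (r -> [(b, r)] -> r) -> InEdges b -> [[r]]
tables linit lstep ins =
  iterate (stepAll lstep ins) (map linit [1 .. length ins])

-- Hypothetical input: the directed cycle 1 -> 2 -> 3 -> 1.
cycle3 :: InEdges ()
cycle3 = [[((), 3)], [((), 1)], [((), 2)]]

-- Reachability from vertex 1, one table per clock.
reachTables :: [[Bool]]
reachTables = tables (== 1) (\old xs -> old || or (map snd xs)) cycle3
```

Each table is computed once from its predecessor, so the work per clock is proportional to the number of edges, and a termination function can then select the desired table from the stream.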

4 Fregel functional domain-specific language

Fregel is a functional DSL for declarative-style programming on large-scale graphs that uses computation based on ${\mathit{vcModel}}$ (defined in Section 3). A Fregel program can be run on Haskell interpreters like GHCi, because Fregel’s syntax follows that of Haskell. This ability is useful for testing and debugging a Fregel program. After testing and debugging, the Fregel program can be compiled into a program for a Pregel-like framework such as Giraph and Pregel+.

4.1 Main features of Fregel

Fregel captures data access, data aggregation, and data communication in a functional manner and supports concise ways of writing various graph computations in a compositional manner through the use of four second-order functions. Fregel has three main features.

First, Fregel abstracts access to vertex data by using three tables indexed by vertices. The prev table is used to access vertex data (i.e., results of recursive calls of the step function) at the previous clock. The curr table is used to access vertex data at the current clock. These two tables explicitly implement the memoization of calculated values. The third table, val, is used to access vertex initial values, that is, the values placed on vertices when the computation started. An index given to a table is neither the identifier of a vertex nor the position of a vertex in a list of incoming edges but rather is a vertex itself. This enables the programmer to write in a more “direct” style for data accesses.

Second, Fregel abstracts aggregation and communication by using a comprehension with a specific generator. Aggregation is described by a comprehension for which the generator is the entire graph (list of all vertices), while communication with adjacent vertices is described by a comprehension for which the generator is the list of adjacent vertices.

Third, Fregel is equipped with four second-order functions for graphs, which we call second-order graph functions. A Fregel program can use these functions multiple times. Function fregel corresponds to functional model ${\mathit{vcModel}}$ defined in Section 3. Function gzip pairs values for the corresponding vertices in two graphs of the same shape, and gmap applies a given function to every vertex. Function giter abstracts iterative computation.

In the following sections, we first introduce the core part of the Fregel language constructs and then explain Fregel programming by using some specific examples.

4.2 Fregel language constructs

A vertex in the functional model described in Section 3.1 has a list of adjacent vertices connected by incoming edges. However, some graph algorithms use edges for the reverse direction. For example, the min-label algorithm (Yan et al. Reference Yan, Cheng, Xing, Lu, Ng and Bu2014b) for calculating strongly connected components of a given graph, which is described in Section 4.6, needs backward propagation in which a vertex sends messages toward its neighbors connected by its incoming edges. In our implicit pulling style of communications, this means that a vertex needs to peek at data in an adjacent vertex connected by an outgoing edge. Thus, though different from the functional model, we decided to let every vertex have two lists of edges: one contains incoming edges in the original graph and the other contains incoming edges in the reversed (transposed) graph. An incoming edge in the reversed graph is an edge produced by reversing an outgoing edge in the original graph. This makes it easier for the programmer to write programs in which part of the computation needs to be carried out on the reversed graph. Hereafter, a “reversed edge” means an edge in the latter list.

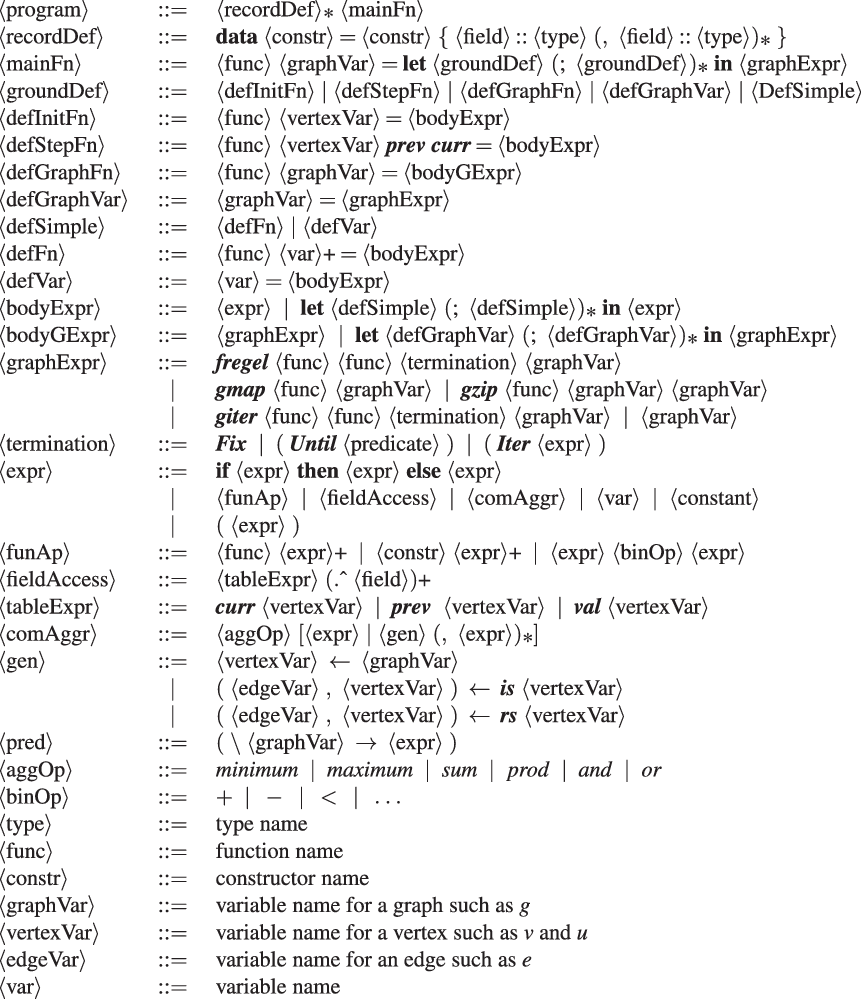

Figure 7 presents the syntax of Fregel. Other than the normal reserved words in bold font, the tokens in bold-slant font are important reserved words like identifier names and data constructor names in Fregel. Program examples of Fregel can be found from Sections 4.3 to 4.6. Please refer to these examples as needed.

Fig. 7. Core part of Fregel syntax.

A Fregel program defines the main function, $\langle{{mainFn}}\rangle$, which takes a single input graph and returns a resultant graph. In the program body, the resultant graph is specified by a graph expression, $\langle{{graphExpr}}\rangle$, which can construct a graph using the four second-order graph functions.

Second-order graph function fregel, which is probably the most frequently used function by the programmer, corresponds to ${\mathit{vcModel}}$ and defines the iterative behavior of an LSS. As described above, it is abstracted as two functions: the initialization function (the first argument) and the step function (the second argument), which is repeatedly executed.

The initialization function of fregel is the same as that of ${\mathit{vcModel}}$. It takes a vertex of type ${\mathit{Vertex}~a~b}$ as its only argument and returns an initial value of type r for the iteration carried out by the step function. On the other hand, a step function of fregel is slightly different.

- First, the step function of ${\mathit{vcModel}}$ executed on every vertex is passed its own result and those of adjacent vertices at the previous clock, together with the weights of incoming edges, through its arguments. In contrast, the step function of fregel takes a prev table from which the results of every vertex at the previous clock can be obtained. Edge weights are not explicitly passed to the step function. They can be obtained by using a comprehension for which the generator is the list of adjacent vertices.

- Second, fregel’s step function takes another table called curr, which holds the results at the current clock for the cases in which these values are necessary for computing the results for the current LSS. We show an example of using the curr table in Section 4.5.

- Third, while the termination judgment of ${\mathit{vcModel}}$ is made using a function that chooses a desired graph from a stream of graphs, that of fregel is not a function.

Since the initialization and step functions return multiple values in many cases, the programmer must often define a record, $\langle{{recordDef}}\rangle$, for them before the main function and let each vertex hold the record data. Fregel provides a concise way to access a record field by using the field selection operator denoted by $.\wedge$, which resembles the ones in Pascal and C.

Second-order graph function giter iterates a specified computation on a graph. Similar to fregel, it takes two functions: the initialization function iinit as its first argument and the iteration function iiter as its second argument. Let a and b be the vertex value type and edge weight type in the input graph, respectively, and let r be the vertex value type in the output graph. The following iterative computation is performed by giter, where g is the input graph:

First, before entering the iteration, iinit is applied to every vertex in input graph g to produce the initial graph ${g_0}$ of the iteration. Then iiter is repeatedly called to produce successive graphs, ${g_1}, \ldots, {g_n}$. The iteration terminates when the termination condition given as the third argument of giter is satisfied. The Haskell definition of giter in the Fregel interpreter, which may help the reader understand the behavior of giter, is presented in Section 5. Different from fregel’s step function, giter’s iteration function, iiter, takes a graph and returns the next graph, possibly by using second-order graph functions. Since giter is used for repeating fregel, gmap, etc., it takes only a graph. Section 4.6 presents an example of using giter.

The termination condition, $\langle{{termination}}\rangle$, is specified for the third argument of fregel and giter. This is not a function like fixedValue in the functional model, but data represented by a data constructor like Fix, Until, or Iter, where Fix means a steady state, Until means a termination condition specified by a predicate function, and Iter specifies the number of iterations to perform.

The expressions in Fregel are standard expressions in Haskell, field access expressions on a vertex ($\langle{fieldAccess}\rangle$), and aggregation expressions ($\langle{comAggr}\rangle$), each of which applies a combining function to a comprehension with specific generators. There are three generators in Fregel: (1) a graph variable to generate all vertices in a graph, (2) ${\boldsymbol{is}~v}$ where v is a vertex variable to generate all pairs of v’s adjacent vertices connected by incoming edges and the edge weights, and (3) ${\boldsymbol{rs}~v}$ where v is a vertex variable to generate all pairs of v’s adjacent vertices connected by reversed edges and the edge weights. A combining function is one of the six standard functions that have both commutative and associative properties, such as minimum.

Though Fregel is syntactically a subset of Haskell, Fregel has the following restrictions:

- Recursive definitions are not allowed in a let expression. This means that the programmer can define neither (mutually) recursive functions nor variables with circular dependencies.

- Lists and functions cannot be used as values except for functions given as arguments to second-order graph functions.

- A user-defined record has to be non-recursive.

- Data obtained from the curr table must already be determined.

Due to these restrictions, circular dependent values cannot appear in a Fregel program. Thus, Fregel programs do not rely on laziness. In fact, the Fregel compiler compiles a Fregel program into a Java or C++ program that computes non-circular dependent values one by one without the need for lazy evaluation.

4.3 Examples: reachability problems

Our first example Fregel program is one for solving the all-reachability problem (Figure 8(a)). Since the LSS for this problem calculates a Boolean value indicating whether each vertex is currently reachable or not, we define a record RVal that contains only this Boolean value at the rch field in this record.

Fig. 8. Fregel programs for solving reachability problems.

Function reAll, the main part of the program, defines the initialization and step functions. The initialization function, reInit, returns an RVal record in which the rch field is True only if the vertex is the starting point (vertex identifier is one). The vertex identifier can be obtained by using a special predefined function, vid. The step function, reStep, collects data at the previous clock from every adjacent vertex connected by an incoming edge. This is done by using the syntax of comprehension, in which the generator is ${\boldsymbol{is}~v}$. For every adjacent vertex u, this program obtains the result at the previous clock by using prev u and accesses its rch field. Then, reStep combines the results of all adjacent vertices by using the ${\mathit{or}}$ function and returns the disjunction of the combined value and its respective rch value at the previous clock.

In reAll, reInit and reStep are given to the fregel function. Its third argument, Fix, specifies the termination condition, and the fourth argument is the input graph.

Figure 8(b) presents a Fregel program for solving the 100-reachability problem. This program is the same as that in Figure 8(a) except for the termination condition. The termination condition in this program uses Until, which corresponds to untilValue in our functional model. Until takes a function that defines the condition. This function gathers the number of currently reachable vertices by aggregation. Fregel’s aggregation takes the form of a comprehension for which the generator is the input graph, that is, a list of all vertices.

Note that both the initialization and step functions are common to both reAll and ${\mathit{re100}}$. The only difference between them is the termination condition: reAll specifies Fix and ${\mathit{re100}}$ specifies Until. The common step function describes only how to calculate the value of interest (whether or not each vertex is reachable). A description related to termination is not included in the definition of the step function. Instead, it is specified as the third argument of fregel. This is in sharp contrast to the programs in the original Pregel (Figures 3 and 4), in which each vertex’s transition to the inactive state is explicitly described in the compute function.
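The programs described above can be imitated in plain Haskell as a rough sketch. This is not the Fregel library: fregelSketch is a hypothetical stand-in that returns only the list of vertex results, omits the curr table, and lets Until inspect the list of results instead of aggregating over a graph. The Vertex representation, the definition of the field selection operator, and the bodies of reInit and reStep are likewise assumptions based on the prose. The function reAtLeast is a hypothetical generalization of re100 to a threshold n.

```haskell
-- Assumed vertex representation with accessors named as in the text.
data Vertex a b = Vertex { vid :: Int, val :: a, is :: [(b, Vertex a b)] }
type Graph a b = [Vertex a b]

-- Termination is data, not a function.
data Termination r = Fix | Until ([r] -> Bool) | Iter Int

-- Field selection, written as: record .^ field.
(.^) :: r -> (r -> f) -> f
x .^ field = field x

-- Hypothetical stand-in for fregel. The prev table is modelled as a
-- function indexed by a vertex itself.
fregelSketch :: Eq r
             => (Vertex a b -> r)
             -> (Vertex a b -> (Vertex a b -> r) -> r)
             -> Termination r -> Graph a b -> [r]
fregelSketch finit fstep term g = pick term (iterate next (map finit g))
  where
    next rs = [ fstep v (\u -> rs !! (vid u - 1)) | v <- g ]
    pick Fix (x : y : rest)
      | x == y    = x
      | otherwise = pick Fix (y : rest)
    pick (Until p) xs = head (filter p xs)
    pick (Iter n)  xs = xs !! n
    pick Fix       _  = error "fregelSketch: finite stream"

data RVal = RVal { rch :: Bool } deriving (Eq, Show)

reInit :: Vertex a b -> RVal
reInit v = RVal (vid v == 1)

reStep :: Vertex a b -> (Vertex a b -> RVal) -> RVal
reStep v prev =
  let newrch = or [ prev u .^ rch | (_, u) <- is v ]
  in  RVal (prev v .^ rch || newrch)

reAll :: Graph a b -> [RVal]
reAll = fregelSketch reInit reStep Fix

reAtLeast :: Int -> Graph a b -> [RVal]
reAtLeast n =
  fregelSketch reInit reStep (Until (\rs -> length (filter rch rs) >= n))

-- Hypothetical input: the chain 1 -> 2 -> 3 plus an isolated vertex 4.
sample :: Graph () ()
sample = [v1, v2, v3, v4]
  where v1 = Vertex 1 () []
        v2 = Vertex 2 () [((), v1)]
        v3 = Vertex 3 () [((), v2)]
        v4 = Vertex 4 () []
```

As in the text, reAll and reAtLeast share reInit and reStep and differ only in the termination data passed as the third argument.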

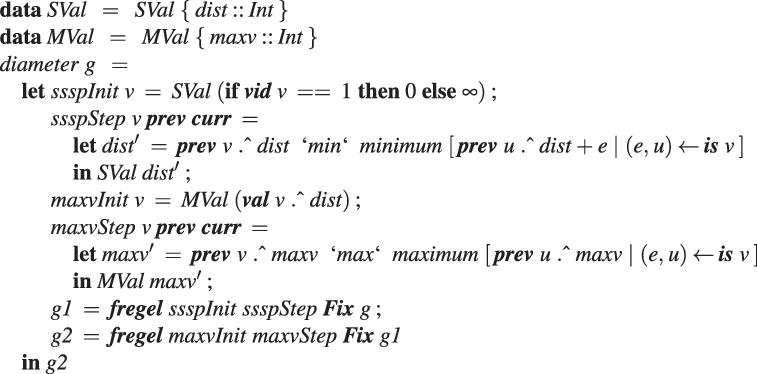

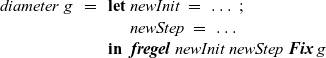

4.4 Example: calculating diameter

The next example calculates the diameter of a graph whose endpoints include the vertex with identifier one. This example sequentially calls two fregel functions, each of which is similar to the reachability computation. The input is assumed to be a connected undirected graph. In Fregel, an undirected edge between two vertices ${v_1}$ and ${v_2}$ is represented by two directed edges: one from ${v_1}$ to ${v_2}$ and the other from ${v_2}$ to ${v_1}$.

The first call uses values on edges to find the shortest path length from the source vertex (vertex identifier one) to every vertex. This is known as the single-source shortest path problem. The second one finds the maximum value of the shortest path lengths of all vertices.

Figure 9 presents the program. The LSS for the first fregel calculates the tentative shortest path length to every vertex from the source vertex, so record ${\mathit{{SVal}}}$ consists of an integer field ${\mathit{{dist}}}$. The step function ssspStep of the first fregel uses the edge weights, that is, the first component e of the pair generated in the comprehension, to update the tentative shortest path for a vertex. It takes the minimum sum of the tentative shortest path of every neighbor vertex (${\boldsymbol{prev}~{u}~.\wedge~{dist}}$) and the edge length (e) from the neighbor vertex.

Fig. 9. Fregel program for calculating diameter.

In the second fregel, every vertex holds the tentative maximum value in the record MVal among the values transmitted to the vertex so far. In its step function, maxvStep, every vertex receives the tentative maximum values of the adjacent vertices connected by incoming edges, calculates the maximum of the received values and its previous tentative value, and updates the tentative value.

The output graph of the first fregel, ${\mathit{g1}}$, is input to the second fregel, and its resultant graph is the final answer, in which every vertex has the value of the diameter.
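The two-phase structure described above can be sketched in plain Haskell. This is only an illustration under stated assumptions, not the program in Figure 9: the `Graph` type (a map from each vertex to its incoming `(weight, source)` pairs), the helpers `undirected` and `fixpoint`, the `infinity` sentinel, and the driver `diameterFrom` are hypothetical names, and an ordinary map `prev` stands in for Fregel's prev table. The sketch also assumes that each vertex keeps its own previous value when taking the minimum or maximum.

```haskell
import qualified Data.Map.Strict as M

type Vertex = Int

-- Hypothetical simplified graph: for every vertex, its incoming edges
-- as (weight, source) pairs. This is not the Fregel graph type.
type Graph = M.Map Vertex [(Int, Vertex)]

-- Represent each undirected edge by two directed edges.
undirected :: [(Vertex, Vertex, Int)] -> Graph
undirected es = M.fromListWith (++)
  (concat [ [(a, [(w, b)]), (b, [(w, a)])] | (a, b, w) <- es ])

-- Repeat a synchronous step until nothing changes (a steady state).
fixpoint :: Eq a => (a -> a) -> a -> a
fixpoint f x = let x' = f x in if x' == x then x else fixpoint f x'

-- Assumed sentinel for "not reached yet"; halved to avoid overflow on addition.
infinity :: Int
infinity = maxBound `div` 2

-- Phase 1: tentative shortest path length from the source vertex.
ssspStep :: Graph -> M.Map Vertex Int -> M.Map Vertex Int
ssspStep g prev = M.mapWithKey step g
  where
    step v ins = minimum (prev M.! v : [ prev M.! u + e | (e, u) <- ins ])

-- Phase 2: propagate the maximum of the phase-1 values.
maxvStep :: Graph -> M.Map Vertex Int -> M.Map Vertex Int
maxvStep g prev = M.mapWithKey step g
  where
    step v ins = maximum (prev M.! v : [ prev M.! u | (_, u) <- ins ])

-- Run phase 1 to a steady state, then feed its result (g1) to phase 2.
diameterFrom :: Vertex -> Graph -> M.Map Vertex Int
diameterFrom src g = fixpoint (maxvStep g) g1
  where
    initial = M.mapWithKey (\v _ -> if v == src then 0 else infinity) g
    g1      = fixpoint (ssspStep g) initial
```

For a triangle with weights 3 (between vertices 1 and 2), 4 (between 2 and 3), and 10 (between 1 and 3), the shortest path lengths from vertex 1 are 0, 3, and 7, so every vertex ends up holding 7.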

4.5 Example: reachability with ranking

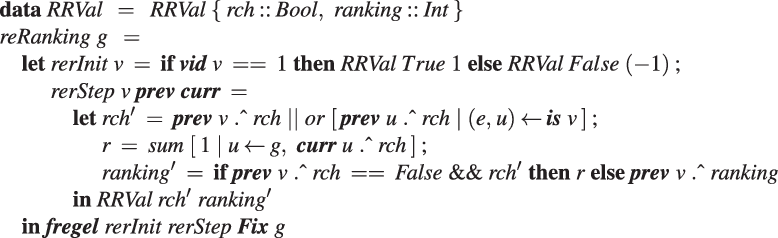

Next, we present an example of using the curr table. The reachability with ranking problem is essentially the same as the all-reachability problem except that it also determines the ranking of every reachable vertex, where ranking r means that the number of steps to the reachable vertex is ranked in the top r among all vertices. A Fregel program for solving this problem is presented in Figure 10.

Fig. 10. Fregel program for solving reachability with ranking problem.

We define a record RRVal with two fields: rch (which is the same as that in RVal in the other reachability problems) and ranking. For the source vertex, the initialization function, rerInit, returns an RRVal record in which the rch and ranking fields are True and 1, respectively. For every other vertex, it returns an RRVal record in which rch is False and ranking is $-1$, which means that the ranking is undetermined. The step function, rerStep, calculates the new rch field value in the same manner as for the other reachability problems. In addition, it calculates the number of reachable vertices at the current LSS by using the global aggregation, for which the generator is the entire graph with the ${\mathit{sum}}$ operator. To do this, it filters out the vertices that have not been reached yet. Writing this aggregation as:

is incorrect because rch’ is not a local variable on a remote vertex u but rather a local variable on the vertex v that is executing rerStep. To enable v to refer to the rch’ value of the current LSS on a remote vertex u, it is necessary for u to store the value in an RRVal structure by returning an RRVal containing the current rch’ as the result of rerStep. Vertex v can then access the value by ${\boldsymbol{curr}~{u}~.\wedge~{rch}}$.
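The difference between reading a local variable and reading the curr table can be illustrated with a plain Haskell sketch. It is not the program in Figure 10 and says nothing about how the Fregel compiler realizes curr. The `Graph` type (incoming neighbors per vertex), the driver `reachRanking`, and the use of a lazily defined map `curr` as a stand-in for the curr table are all assumptions made for illustration. The names RRVal, rch, ranking, rerInit, and rerStep follow the text, but their signatures here are hypothetical.

```haskell
import qualified Data.Map as M

type Vertex = Int

-- Hypothetical simplified graph: the incoming neighbours of every vertex.
type Graph = M.Map Vertex [Vertex]

data RRVal = RRVal { rch :: Bool, ranking :: Int } deriving (Eq, Show)

rerInit :: Vertex -> Vertex -> RRVal
rerInit src v = if v == src then RRVal True 1 else RRVal False (-1)

-- One LSS. The table 'curr' holds the results of this very step; lazy
-- evaluation lets the aggregation read the rch field that every vertex
-- stores in its returned record, which only depends on 'prev'.
rerStep :: Graph -> M.Map Vertex RRVal -> M.Map Vertex RRVal
rerStep g prev = curr
  where
    curr = M.mapWithKey step g
    step v ins = RRVal rch' ranking'
      where
        old  = prev M.! v
        rch' = rch old || or [ rch (prev M.! u) | u <- ins ]
        ranking'
          | ranking old < 0 && rch' = reached   -- ranking fixed when first reached
          | otherwise               = ranking old
    -- Global aggregation over the current table, not over a local rch'.
    reached :: Int
    reached = sum [ 1 | u <- M.keys g, rch (curr M.! u) ]

-- Hypothetical driver: iterate rerStep until a steady state.
reachRanking :: Vertex -> Graph -> M.Map Vertex RRVal
reachRanking src g = go (M.mapWithKey (\v _ -> rerInit src v) g)
  where
    go t = let t' = rerStep g t in if t' == t then t else go t'
```

In this sketch, the aggregation counts the vertices whose stored rch field is True in the current step, which mirrors the role of ${\boldsymbol{curr}~{u}~.\wedge~{rch}}$ in the text.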

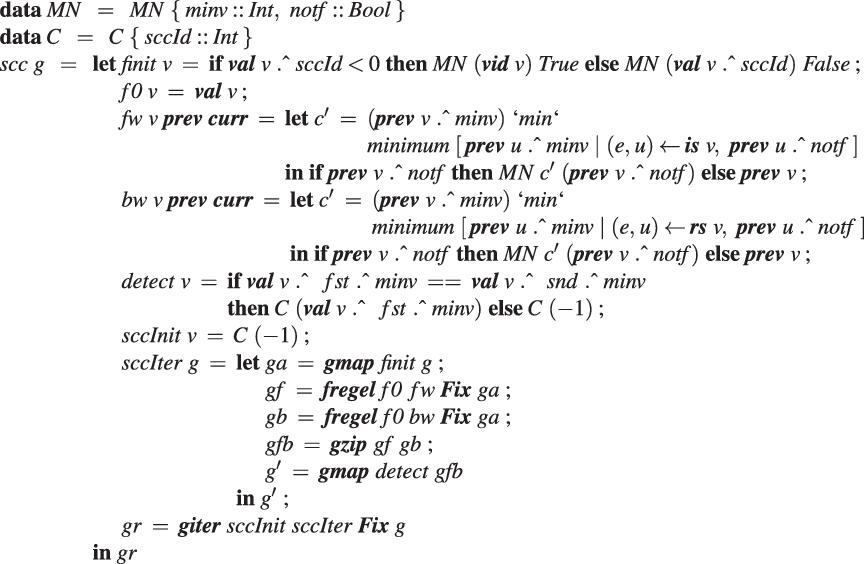

4.6 Example: strongly connected components

As an example of a more complex combination of second-order graph functions, Figure 11 presents a Fregel program for solving the strongly connected components problem. The output of this program is a directed graph with the same shape as the input graph; the value on each vertex is the identifier of the component, that is, the minimum of the vertex identifiers in the component to which it belongs.

Fig. 11. Fregel program for solving strongly connected components problem.

This program is based on the min-label algorithm (Yan et al. Reference Yan, Cheng, Xing, Lu, Ng and Bu2014b). It repeats four operations until every vertex belongs to a component.

- (1) Initialization: Every vertex for which a component has not yet been found sets the notf flag value. This means that the vertex must participate in the following computation.
- (2) Forward propagation: Each notf vertex first sets its minv value as its identifier. Then it repeatedly calculates the minimum value of its (previous) minv value and the minv values of the adjacent vertices connected by incoming edges. This is repeated until the computation falls into a steady state.
- (3) Backward propagation: This is the same as forward propagation except that the direction of minv propagation is reversed; each ${\mathit{{notf}}}$ vertex updates its minv value through the reversed edges.
- (4) Component detection: Each ${\mathit{{notf}}}$ vertex judges whether the results (identifiers) of forward propagation and backward propagation are the same. If they are, the vertex belongs to the component represented by the identifier.

The program in Figure 11 has a nested iterative structure.

The outer iteration in terms of giter repeatedly performs the above operations for the remaining subgraph until no vertices remain. In this outer loop, each vertex has a record C that has only the sccId field. This field has the identifier of the component, which is the minimum identifier of the vertices in the component, or $-1$ if the component has not been found yet.

In the processing of operations (1)–(4), each vertex has a record MN with two fields. The minv field holds the minimum of the propagated values, and the ${\mathit{{notf}}}$ field holds the flag value explained above. The initialization uses gmap to create a graph ${\mathit{ga}}$. There are two inner iterations by the fregel function: one performs forward propagation and the other performs backward propagation. Both take the same graph created in the initialization. Their results, ${\mathit{gf}}$ and gb, are combined by using gzip and passed to component detection, which is simply defined by gmap.
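The nested structure of operations (1)–(4) can be sketched sequentially in plain Haskell. This is a simplified illustration of the min-label idea under stated assumptions, not the Fregel program in Figure 11: the `Graph` record, `fixpoint`, `propagate`, `oneRound`, and `scc` are hypothetical names, vertex identifiers are assumed to be non-negative so that $-1$ can mark an undetermined component, and the edge lists are scanned naively for brevity.

```haskell
import qualified Data.Map as M

type Vertex = Int

-- Hypothetical simplified graph: a vertex list and a list of directed edges.
data Graph = Graph { vertices :: [Vertex], edges :: [(Vertex, Vertex)] }

-- Repeat a synchronous step until a steady state is reached.
fixpoint :: Eq a => (a -> a) -> a -> a
fixpoint f x = let x' = f x in if x' == x then x else fixpoint f x'

-- Propagate minimum labels along the given edges, restricted to the
-- vertices that still have no component (the notf vertices).
propagate :: [Vertex] -> [(Vertex, Vertex)] -> M.Map Vertex Vertex
propagate active es = fixpoint step (M.fromList [ (v, v) | v <- active ])
  where
    step prev = M.mapWithKey
      (\v m -> minimum (m : [ prev M.! u | (u, w) <- es, w == v, M.member u prev ]))
      prev

-- Outer loop: repeat operations (1)-(4) until every vertex has a component.
scc :: Graph -> M.Map Vertex Vertex
scc g = fixpoint oneRound (M.fromList [ (v, -1) | v <- vertices g ])
  where
    oneRound sccId = M.mapWithKey detect sccId
      where
        active = [ v | (v, c) <- M.toList sccId, c < 0 ]            -- (1)
        fwd    = propagate active (edges g)                         -- (2)
        bwd    = propagate active [ (w, u) | (u, w) <- edges g ]    -- (3)
        detect v c                                                  -- (4)
          | c < 0 && fwd M.! v == bwd M.! v = fwd M.! v
          | otherwise                       = c
```

In each round, the active vertex with the smallest identifier receives its own identifier from both propagations, so at least one component is found per round and the outer loop reaches a steady state.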

The four second-order graph functions provided by Fregel abstract computations on graphs and thereby enable the programmer to write a program as a combination of these functions. This functional style of programming makes it easier for the programmer to develop a complicated program, like one for solving the strongly connected components problem.

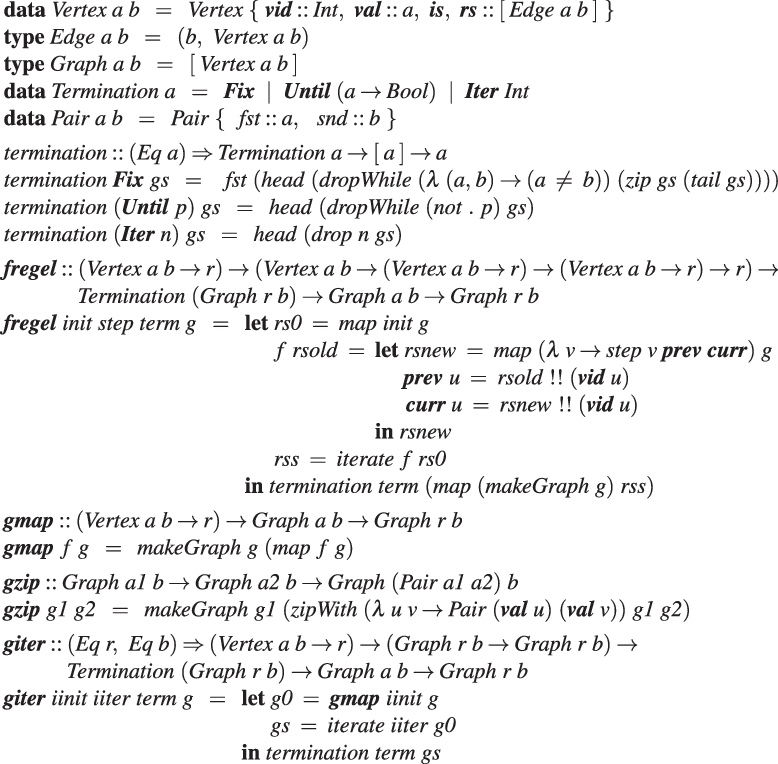

5 Fregel interpreter

As stated at the beginning of Section 4, a Fregel program can be run as a Haskell program. We implemented the Fregel interpreter as a Haskell library. Although this Haskell implementation is used only in the testing and debugging phases during the development of Fregel programs, we describe it here to help the reader understand the behavior of Fregel programs.

Figure 12 shows the core part of the implementation. The datatypes for the graphs are the same as those described in Section 3.1 except that each vertex has a list of reversed edges in its record under the field name ${\boldsymbol{rs}}$. The termination point is defined by the ${\mathit{termination}}$ type. It has three data constructors: Fix means a steady state, Until means a termination condition specified by a predicate function, and Iter specifies the number of LSS iterations to perform. Function ${\mathit{termination}}$ applies a given termination point to an infinite list of graphs.
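A minimal sketch of how a termination point might select the final element of an infinite list is given below. The constructor names follow the text, but the exact types in Figure 12 may differ. In particular, the type parameter, the `Eq` constraint used to detect a steady state, and the reading of `Iter n` as "take the element at index n" are assumptions made for illustration.

```haskell
-- Hypothetical rendering of the three termination points.
data Termination a
  = Fix                -- stop at a steady state
  | Until (a -> Bool)  -- stop when the predicate holds
  | Iter Int           -- stop after a fixed number of iterations

-- Pick the final element from a (possibly infinite) list of states.
termination :: Eq a => Termination a -> [a] -> a
termination Fix xs = go xs
  where
    -- Return the first element that equals its successor.
    go (x : rest@(y : _))
      | x == y    = x
      | otherwise = go rest
    go [x] = x
    go []  = error "termination: empty list"
termination (Until p) xs = case filter p xs of
  (x : _) -> x
  []      -> error "termination: predicate never holds on a finite list"
termination (Iter n) xs = xs !! n
```

Because the list is consumed lazily, only as many elements are computed as are needed to reach the selected one.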

Fig. 12. Haskell implementation of Fregel.

The second-order graph function fregel takes as its arguments an initialization function, a step function, a termination point, and an input graph and returns the resultant graph of its computation. As explained in Section 4.2, the definition of fregel here differs somewhat from that of ${\mathit{vcModel}}$, because it has to implement the memorization mechanism. It does this by using two lists of computation results for all vertices, which are accessed via the vertex identifiers.

Function gmap applies a given function to every vertex in the target graph and returns a new graph with the same shape in which each vertex has the application result. This is simply defined in terms of ${\mathit{makeGraph}}$, for which the definition was presented in Section 3.

Function gzip is given two graphs of the same shape and returns a graph in which each vertex has a pair of values that correspond to those of the vertices of the two graphs. A pair is defined by the ${\mathit{Pair}}$ type with ${\mathit{\_fst}}$ and ${\mathit{\_snd}}$ fields. This function can also be defined in terms of ${\mathit{makeGraph}}$.
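The behavior of gmap and gzip can be sketched on a deliberately simplified graph representation. The `Graph` record below (a list of identifier and value pairs plus a fixed edge list) is a hypothetical stand-in for the datatypes of Section 3.1, and the sketch builds the result directly instead of going through ${\mathit{makeGraph}}$. It also assumes that the function given to `gmap` receives the vertex identifier and value, and that both arguments of `gzip` list their vertices in the same order.

```haskell
-- Hypothetical simplified graph: vertex values plus a fixed edge list.
data Graph a = Graph { values :: [(Int, a)], edges :: [(Int, Int)] }
  deriving (Eq, Show)

-- Pair type with the field names used in the text.
data Pair a b = Pair { _fst :: a, _snd :: b } deriving (Eq, Show)

-- Apply a function to every vertex; the shape (edge list) is unchanged.
gmap :: (Int -> a -> b) -> Graph a -> Graph b
gmap f g = Graph [ (v, f v x) | (v, x) <- values g ] (edges g)

-- Combine two graphs of the same shape vertex by vertex.
gzip :: Graph a -> Graph b -> Graph (Pair a b)
gzip g1 g2 = Graph (zipWith pair (values g1) (values g2)) (edges g1)
  where
    pair (v, x) (_, y) = (v, Pair x y)
```

In both functions only the vertex values change, which is why the edge list of the (first) input graph is reused as is.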

Function giter is given four arguments: iinit, iiter, ${\mathit{term}}$, and an input graph. It first applies ${\boldsymbol{gmap}~iinit}$ to the input graph and then repeatedly applies iiter to the result to produce a list of graphs. Finally, it uses ${\mathit{term}}$ to terminate the iteration and obtain the final result. It can be defined by using a standard function, ${\mathit{iterate}}$.
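The description of giter translates almost directly into a one-line definition based on `iterate`. The sketch below is an illustration under assumptions rather than the code in Figure 12: the graph is reduced to a list of identifier and value pairs, and the `Termination` type and `termination` function are simplified, hypothetical versions of the ones described earlier in this section.

```haskell
-- Hypothetical simplified termination points.
data Termination a = Fix | Until (a -> Bool) | Iter Int

-- Hypothetical graph: just the list of (vertex identifier, value) pairs.
type Graph a = [(Int, a)]

gmap :: (Int -> a -> b) -> Graph a -> Graph b
gmap f g = [ (v, f v x) | (v, x) <- g ]

-- Select the final state from the list produced by 'iterate'.
termination :: Eq a => Termination a -> [a] -> a
termination Fix (x : rest@(y : _))
  | x == y    = x
  | otherwise = termination Fix rest
termination Fix [x]       = x
termination Fix []        = error "termination: empty list"
termination (Until p) xs  = case filter p xs of
  (x : _) -> x
  []      -> error "termination: predicate never holds on a finite list"
termination (Iter n) xs   = xs !! n

-- Initialize with gmap, iterate iiter, and cut the list with term.
giter :: Eq b
      => (Int -> a -> b) -> (Graph b -> Graph b)
      -> Termination (Graph b) -> Graph a -> Graph b
giter iinit iiter term g = termination term (iterate iiter (gmap iinit g))
```

Since `iterate` builds the list lazily, the iteration body runs only until the termination point selects a graph.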

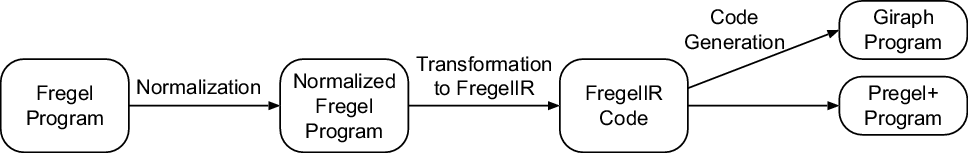

6 Fregel compiler

This section describes the basic compilation flow of Fregel programs. Optimizations for coping with the apparent inefficiency problems described in Section 2.2 are presented in Section 7.

6.1 Overview of Fregel compiler

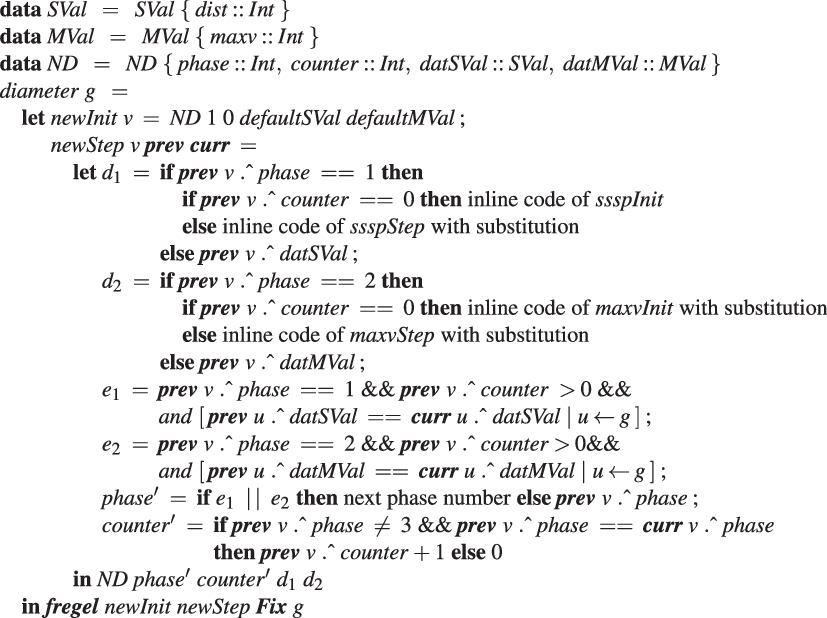

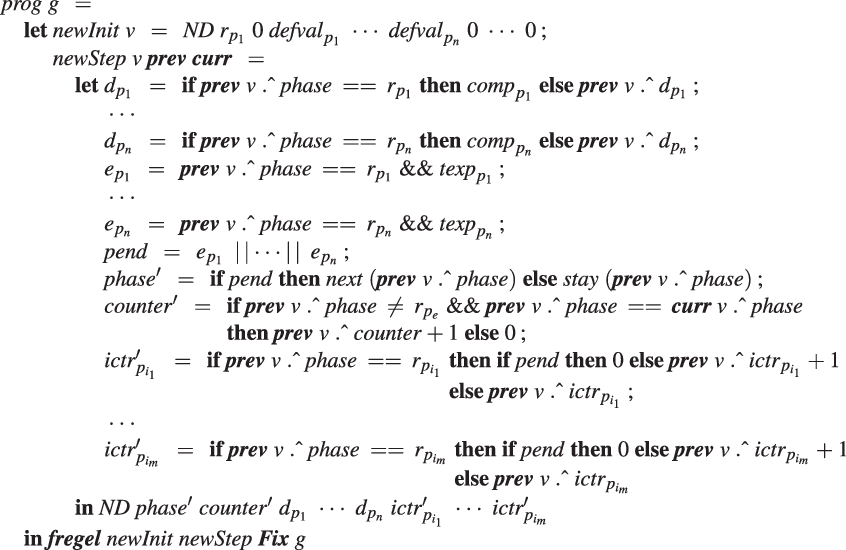

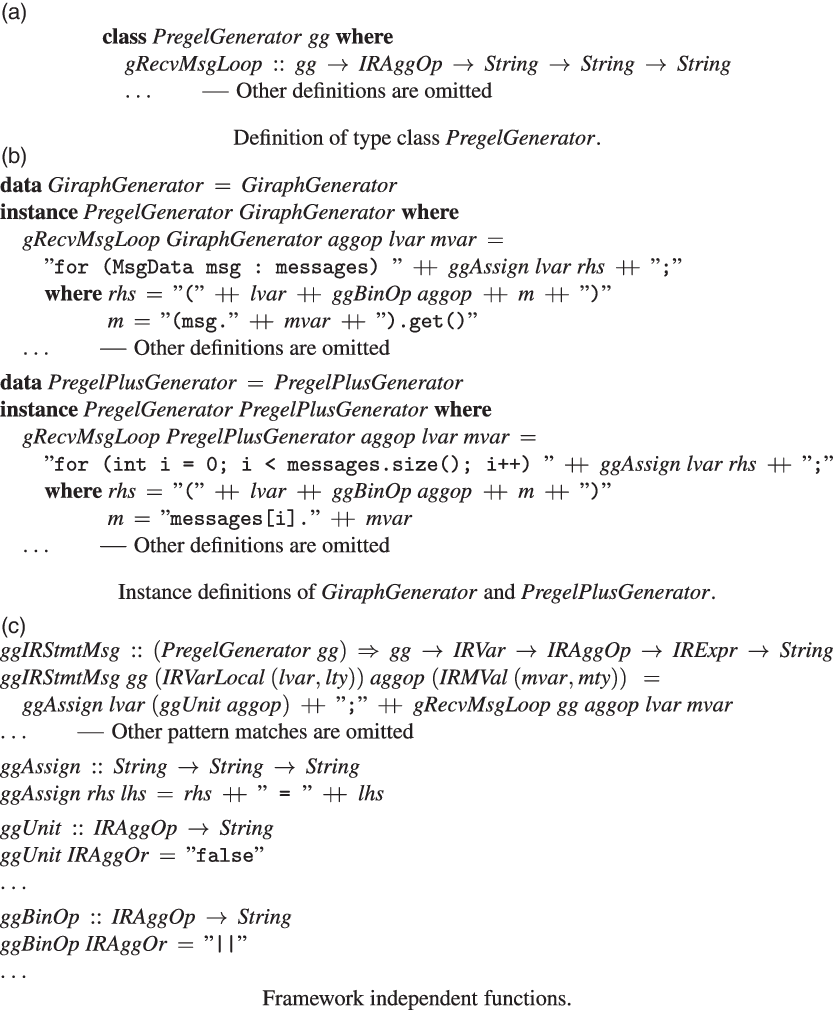

The Fregel compiler is a source-to-source translator from a Fregel program to a program for a Pregel-like framework for vertex-centric graph processing. Currently, our target frameworks are Giraph, for which the programs are in Java, and Pregel+, for which the programs are in C++. The Fregel compiler is implemented in Haskell. Figure 13 presents the compilation flow of a Fregel program.

Fig. 13. Compilation flow of Fregel program.