1. Introduction

Turbulent flows are ubiquitous in our daily life and determine the performances and the energy efficiencies of various thermo-fluids devices (Brunton & Noack Reference Brunton and Noack2015). In most engineering flows, turbulence is bounded by a solid surface, and their interaction plays a crucial role in generation and maintenance of near-wall turbulence, and associated momentum and heat transport between fluid and solid. Even over simple geometries such as a smooth flat wall, however, turbulence exhibits complex behaviour due to its nonlinear and multiscale nature, so that prediction and control of turbulent flow remain challenging.

In this study, we consider the control of a fully developed turbulent channel flow, which is one of the canonical flow configurations. Since near-wall turbulence is responsible for the increase in wall skin friction, a tremendous amount of effort has been devoted to reducing the skin friction drag. In general, flow control strategies can be categorized into passive and active schemes (Gad-el Hak Reference Gad-el Hak1996). The passive scheme does not require power input for control, and its typical example is a riblet surface (Dean & Bhushan Reference Dean and Bhushan2010). In contrast, the active scheme requires additional power input for control, and it can be further classified into predetermined and feedback controls. The former applies a control input with a predetermined spatio-temporal distribution regardless of an instantaneous flow state. This makes a control system simple since no sensing of a flow field is required. Despite its simplicity, it is known that the predetermined control achieves relatively high drag reduction rates, and various control modes, such as spanwise wall oscillation (Jung, Mangiavacchi & Akhavan Reference Jung, Mangiavacchi and Akhavan1992; Quadrio & Ricco Reference Quadrio and Ricco2004), streamwise travelling wave of wall blowing and suction (Min et al. Reference Min, Kang, Speyer and Kim2006; Lieu, Marref & Jovanović Reference Lieu, Marref and Jovanović2010; Mamori, Iwamoto & Murata Reference Mamori, Iwamoto and Murata2014), and uniform wall blowing (Sumitani & Kasagi Reference Sumitani and Kasagi1995; Kametani & Fukagata Reference Kametani and Fukagata2011), have been proposed.

In contrast, the feedback control determines a control input based on a sensor signal obtained from an instantaneous flow field, therefore it enables a more flexible control. Meanwhile, due to the large degrees of freedom of the flow state and also the control input, optimizing a feedback control law is quite challenging. Therefore, existing control strategies have often been developed based on researchers’ physical insights. A typical example of a feedback control is the so-called opposition control (Choi, Moin & Kim Reference Choi, Moin and Kim1994; Hammond, Bewley & Moin Reference Hammond, Bewley and Moin1998; Chung & Talha Reference Chung and Talha2011), where local wall blowing and suction is applied so as to cancel the wall-normal velocity fluctuation at a certain height from the wall. The sensing plane is called a detection plane, and its optimal height has been reported as ![]() $y^+=15$ in a wall unit (Hammond et al. Reference Hammond, Bewley and Moin1998). The relationship between the wall-normal velocity on the detection plane and the control input has been assumed commonly to be linear a priori, and its optimal weight coefficient was found to be approximately unity (Choi et al. Reference Choi, Moin and Kim1994; Chung & Talha Reference Chung and Talha2011). It should be noted that optimization of these parameters in the control algorithm has mostly been done through trial and error, and such an approach is quite inefficient even for a simple control algorithm where the relationship between the sensor signal and the control input is assumed to be linear.

$y^+=15$ in a wall unit (Hammond et al. Reference Hammond, Bewley and Moin1998). The relationship between the wall-normal velocity on the detection plane and the control input has been assumed commonly to be linear a priori, and its optimal weight coefficient was found to be approximately unity (Choi et al. Reference Choi, Moin and Kim1994; Chung & Talha Reference Chung and Talha2011). It should be noted that optimization of these parameters in the control algorithm has mostly been done through trial and error, and such an approach is quite inefficient even for a simple control algorithm where the relationship between the sensor signal and the control input is assumed to be linear.

There also exists another approach to develop efficient feedback control laws. Optimal control theory is a powerful tool to optimize a control input with large degrees of freedom by explicitly leveraging mathematical models of a flow system such as Navier–Stokes equations and mass conservation. Specifically, the spatio-temporal distribution of a control input is determined so as to minimize a prescribed cost functional. The cost functional can be defined within a certain time horizon, so that the future flow dynamics is taken into consideration in the optimization procedures. Optimal control theory was applied successfully to a low-Reynolds-number turbulent channel flow by Bewley, Moin & Temam (Reference Bewley, Moin and Temam2001), and it was demonstrated that the flow can be relaminarized. One of the major drawbacks in optimal control theory is that it requires expensive iterations of forward and adjoint simulations within the time horizon in order to determine the optimal control input. By assuming a vanishingly small time horizon, suboptimal control theory (Lee, Kim & Choi Reference Lee, Kim and Choi1998; Hasegawa & Kasagi Reference Hasegawa and Kasagi2011) provides an analytical expression of the control input without solving adjoint equations, but its control performance is not as high as that achieved by optimal control theory, suggesting the importance of considering future flow dynamics in determining the control input. Another issue is that there exists a severe limitation in the length of the time horizon employed in optimal control theory due to inherent instability of adjoint equations (Wang, Hu & Blonigan Reference Wang, Hu and Blonigan2014). Specifically, the maximum time horizon is approximately 100 in a wall unit (Bewley et al. Reference Bewley, Moin and Temam2001; Yamamoto, Hasegawa & Kasagi Reference Yamamoto, Hasegawa and Kasagi2013), which is quite short considering the time scale of wall turbulence. In particular, this limitation becomes critical at higher Reynolds numbers where large-scale structures play important roles in the dynamics of wall turbulence (Kim & Adrian Reference Kim and Adrian1999).

In recent years, much attention has been paid to reinforcement learning as a new framework for developing efficient control strategies in various fields, such as robot control (Kober, Bagnell & Peters Reference Kober, Bagnell and Peters2013) and games (Silver et al. Reference Silver2016). In reinforcement learning, an agent decides its action based on a current state. As a consequence, the agent receives a reward from an environment. By repeating this interaction with the environment, the agent learns an efficient policy, which dictates the relationship between the state and the action, so as to maximize the total expected future reward. In this way, the policy can be optimized from a long-term perspective. In addition, by combining reinforcement learning and deep neural networks, deep reinforcement learning (Sutton & Barto Reference Sutton and Barto2018) can deal naturally with a complex nonlinear relationship between sensor signals and a control input. We note that there already exist several studies applying machine learning techniques for reducing skin friction drag in a turbulent channel flow. For example, Lee et al. (Reference Lee, Kim, Babcock and Goodman1997) first applied neural networks to design a controller to suppress a certain physical quantity of interest in the short term, i.e. after one time step. More recently, Han & Huang (Reference Han and Huang2020) and Park & Choi (Reference Park and Choi2020) applied convolutional neural networks to predict the wall-normal velocity fluctuation at the detection plane to reproduce the opposition control (Choi et al. Reference Choi, Moin and Kim1994) based on wall measurements only. However, the reinforcement learning distinguishes itself from those other machine learning techniques in the sense that it provides a framework to develop novel control strategies that are effective in the long term. It is therefore no surprise that reinforcement learning is gaining more and more attention for its applications to fluid mechanics. Recent attempts and achievements are summarized in several comprehensive review articles (Rabault et al. Reference Rabault, Ren, Zhang, Tang and Xu2020; Garnier et al. Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021).

Previous studies cover a variety of purposes, such as drag reduction (Koizumi, Tsutsumi & Shima Reference Koizumi, Tsutsumi and Shima2018; Rabault et al. Reference Rabault, Kuchta, Jensen, Reglade and Cerardi2019; Rabault & Kuhnle Reference Rabault and Kuhnle2019; Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020; Tang et al. Reference Tang, Rabault, Kuhnle, Wang and Wang2020; Tokarev, Palkin & Mullyadzhanov Reference Tokarev, Palkin and Mullyadzhanov2020; Xu et al. Reference Xu, Zhang, Deng and Rabault2020; Ghraieb et al. Reference Ghraieb, Viquerat, Larcher, Meliga and Hachem2021; Paris, Beneddine & Dandois Reference Paris, Beneddine and Dandois2021; Ren, Rabault & Tang Reference Ren, Rabault and Tang2021), control of heat transfer (Beintema et al. Reference Beintema, Corbetta, Biferale and Toschi2020; Hachem et al. Reference Hachem, Ghraieb, Viquerat, Larcher and Meliga2021), optimization of microfluidics (Dressler et al. Reference Dressler, Howes, Choo and de Mello2018; Lee et al. Reference Lee, Balu, Stoecklein, Ganapathysubramanian and Sarkar2021), optimization of artificial swimmers (Novati et al. Reference Novati, Verma, Alexeev, Rossinelli, Van Rees and Koumoutsakos2018; Verma, Novati & Koumoutsakos Reference Verma, Novati and Koumoutsakos2018; Yan et al. Reference Yan, Chang, Tian, Wang, Zhang and Liu2020; Zhu et al. Reference Zhu, Tian, Young, Liao and Lai2021) and shape optimization (Yan et al. Reference Yan, Zhu, Kuang and Wang2019; Li, Zhang & Chen Reference Li, Zhang and Chen2021; Viquerat et al. Reference Viquerat, Rabault, Kuhnle, Ghraieb, Larcher and Hachem2021; Qin et al. Reference Qin, Wang, Wang, Wang, Sun and Zhong2021). In terms of drag reduction considered in the present study, Rabault et al. (Reference Rabault, Kuchta, Jensen, Reglade and Cerardi2019) considered control of a two-dimensional flow around a cylinder at a low Reynolds number. They assumed wall blowing and suction from two local slits over the cylinder, and demonstrated that a control policy obtained by reinforcement learning achieves 8 % drag reduction. Tang et al. (Reference Tang, Rabault, Kuhnle, Wang and Wang2020) discussed Reynolds number effects on the control performance at different Reynolds numbers, namely 100, 200, 300 and 400, and also showed the possibility of applying the obtained control policy to unseen Reynolds numbers. Paris et al. (Reference Paris, Beneddine and Dandois2021) optimized the arrangement of sensors employed for controlling two-dimensional laminar flow behind a cylinder. Their sparsity-seeking algorithm allows us to reduce a number of sensors down to five without sacrificing the control performance. Ghraieb et al. (Reference Ghraieb, Viquerat, Larcher, Meliga and Hachem2021) proposed a degenerated version of reinforcement learning so that it does not require the information of the state as an input. This allows us to find effective open-loop control policies for both laminar and turbulent flows around an aerofoil and a cylinder. Fan et al. (Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020) demonstrated experimentally that reinforcement learning can find effective rotation modes of small cylinders around a primal stationary cylinder for its drag reduction. As shown above, most previous studies consider relatively simple flow fields such as a two-dimensional laminar flow around a blunt object. Also, their control inputs are wall blowing and suction from slots at two or four prescribed locations, or rotation/vibrations of one or two cylinders, so that the degrees of freedom for a control input are commonly limited. Therefore, it remains an open question whether reinforcement learning can be applicable to turbulence control with a control input having large degrees of freedom.

To the best of the authors’ knowledge, this is the first study applying reinforcement learning to control of a fully developed turbulent channel flow for reducing skin friction drag. As is often the case with wall turbulence control, we consider wall blowing and suction as a control input, which is defined at each computational grid point on the wall. This makes the degrees of freedom of the control input quite large (![]() $O(10^4)$), compared with those assumed in the existing applications of reinforcement learning. This paper is organized as follows. After introducing our problem setting in § 2, we explain the framework of the present reinforcement learning in detail in § 3. Then we present new control policies obtained in the present study, and their control results in § 4. In § 5, we discuss further how the unique features of the present control policies lead to high control performances. Finally, we summarize the present study in § 6.

$O(10^4)$), compared with those assumed in the existing applications of reinforcement learning. This paper is organized as follows. After introducing our problem setting in § 2, we explain the framework of the present reinforcement learning in detail in § 3. Then we present new control policies obtained in the present study, and their control results in § 4. In § 5, we discuss further how the unique features of the present control policies lead to high control performances. Finally, we summarize the present study in § 6.

2. Problem setting

2.1. Governing equations and boundary conditions

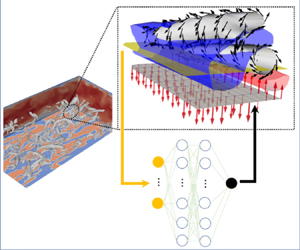

We consider a fully developed turbulent channel flow with wall blowing and suction as a control input, as shown in figure 1. The coordinate systems are set so that ![]() $x$,

$x$, ![]() $y$ and

$y$ and ![]() $z$ correspond to the streamwise, wall-normal and spanwise directions, respectively. The corresponding velocity components are denoted by

$z$ correspond to the streamwise, wall-normal and spanwise directions, respectively. The corresponding velocity components are denoted by ![]() $u$,

$u$, ![]() $v$ and

$v$ and ![]() $w$. Time is expressed by

$w$. Time is expressed by ![]() $t$. The origin of the coordinates is placed on the bottom wall as shown in figure 1. Unless stated otherwise, we consider only the bottom half of the channel due to the symmetry of the system. The governing equations of the fluid flow are the following incompressible Navier–Stokes and continuity equations:

$t$. The origin of the coordinates is placed on the bottom wall as shown in figure 1. Unless stated otherwise, we consider only the bottom half of the channel due to the symmetry of the system. The governing equations of the fluid flow are the following incompressible Navier–Stokes and continuity equations:

where ![]() $p$ is the static pressure. Throughout this paper, all variables without a superscript are non-dimensionalized by the channel half-width

$p$ is the static pressure. Throughout this paper, all variables without a superscript are non-dimensionalized by the channel half-width ![]() $h^*$ and the bulk mean velocity

$h^*$ and the bulk mean velocity ![]() $U^*_{b}$, while a variable with an asterisk indicates a dimensional value. A constant flow rate condition is imposed, so that the bulk Reynolds number is

$U^*_{b}$, while a variable with an asterisk indicates a dimensional value. A constant flow rate condition is imposed, so that the bulk Reynolds number is ![]() $Re_{b} \equiv {2U^*_{b} h^*}/{\nu ^*} = 4646.72$, where

$Re_{b} \equiv {2U^*_{b} h^*}/{\nu ^*} = 4646.72$, where ![]() $\nu ^*$ is the kinematic viscosity of the fluid. The corresponding friction Reynolds number in the uncontrolled flow is

$\nu ^*$ is the kinematic viscosity of the fluid. The corresponding friction Reynolds number in the uncontrolled flow is ![]() $Re_{\tau } \equiv {u^*_{\tau } h^*}/{\nu ^*} \approx 150$. Here, the friction velocity is defined as

$Re_{\tau } \equiv {u^*_{\tau } h^*}/{\nu ^*} \approx 150$. Here, the friction velocity is defined as ![]() $u^*_{\tau } = \sqrt {\underline {\tau _w^*} / \rho ^*}$, where

$u^*_{\tau } = \sqrt {\underline {\tau _w^*} / \rho ^*}$, where ![]() $\rho ^*$ is the fluid density, and

$\rho ^*$ is the fluid density, and ![]() $\underline {\tau _w^*}$ is the space–time average of the wall friction.

$\underline {\tau _w^*}$ is the space–time average of the wall friction.

Figure 1. Schematic of the computational domain and coordinate system.

Periodic boundary conditions are imposed in the streamwise and spanwise directions. As for the wall-normal direction, we impose no-slip conditions for the tangential velocity components on the wall, while wall blowing and suction with zero-net-mass flux is applied as a control input:

Here, ![]() $\phi (x, z, t)$ indicates the space–time distribution of wall blowing and suction at the bottom wall (

$\phi (x, z, t)$ indicates the space–time distribution of wall blowing and suction at the bottom wall (![]() $y = 0$), and

$y = 0$), and ![]() $\delta _{ij}$ is the Kronecker delta. Wall blowing and suction is also imposed at the top wall, and its space–time distribution is determined based on the same control policy as that used for the bottom wall, so that the resulting flow is always statistically symmetric with respect to the channel centre. The objective of the present study is to find an effective strategy to determine the distributions of

$\delta _{ij}$ is the Kronecker delta. Wall blowing and suction is also imposed at the top wall, and its space–time distribution is determined based on the same control policy as that used for the bottom wall, so that the resulting flow is always statistically symmetric with respect to the channel centre. The objective of the present study is to find an effective strategy to determine the distributions of ![]() $\phi (x, z, t)$ for drag reduction.

$\phi (x, z, t)$ for drag reduction.

In reinforcement learning, a control policy (control law) is learned on a trial-and-error basis requiring a large number of simulations. In order to reduce the computational cost for the training, we introduce the minimal channel (Jiménez & Moin Reference Jiménez and Moin1991), which has the minimum domain size to maintain turbulence. Accordingly, the streamwise, wall-normal and spanwise domain sizes are set to be ![]() $(L_{x}, L_{y}, L_{z}) = (2.67, 2.0, 0.8)$. Once a control policy is obtained in the minimal channel, it is assessed in a larger domain with

$(L_{x}, L_{y}, L_{z}) = (2.67, 2.0, 0.8)$. Once a control policy is obtained in the minimal channel, it is assessed in a larger domain with ![]() $(L_{x}, L_{y}, L_{z}) = (2.5 {\rm \pi}, 2.0, {\rm \pi})$. Hereafter, the latter larger domain is referred to as a full channel.

$(L_{x}, L_{y}, L_{z}) = (2.5 {\rm \pi}, 2.0, {\rm \pi})$. Hereafter, the latter larger domain is referred to as a full channel.

2.2. Numerical methodologies

The governing equations (2.1) and (2.2) are discretized in space by a pseudo-spectral method (Boyd Reference Boyd2001). Specifically, Fourier expansions are adopted in the streamwise and spanwise directions, while Chebyshev polynomials are used in the wall-normal direction. For the minimal channel, the number of modes used in each direction is ![]() $(N_{x}, N_{y}, N_{z}) = (16, 65, 16)$, whilst they are set to be

$(N_{x}, N_{y}, N_{z}) = (16, 65, 16)$, whilst they are set to be ![]() $(N_{x}, N_{y}, N_{z}) = (64, 65, 64)$ for the full channel. The

$(N_{x}, N_{y}, N_{z}) = (64, 65, 64)$ for the full channel. The ![]() $3/2$ rule is applied to eliminate aliasing errors, and therefore the number of grid points in the physical space is 1.5 times the number of modes employed in each direction.

$3/2$ rule is applied to eliminate aliasing errors, and therefore the number of grid points in the physical space is 1.5 times the number of modes employed in each direction.

As for the time advancement, a fractional step method (Kim & Moin Reference Kim and Moin1985) is applied to decouple the pressure term from (2.1). The second-order Adams–Bashforth method is used for the advection term. For viscous terms, we employ the Euler implicit method, since the Crank–Nicolson method sometimes leads to numerical instability due to its slightly narrower stability region (Kajishima & Taira Reference Kajishima and Taira2016). This is reasonable considering that the reinforcement learning is a trial-and-error process, which can lead to unstable control policies, especially during the early stage of learning. Hence it is more advantageous to prioritize stability over accuracy during the training, and then to verify the resulting control performances by the obtained control policies with higher-order schemes afterwards. Indeed, in the present study, a time-advancement scheme hardly affects the evaluation of the skin friction drag for a given control policy, as shown in Appendix D, since we commonly use a relatively small time step in both training and evaluation phases.

Specifically, the time step is set to be ![]() $\Delta t^{+}=0.06$ and

$\Delta t^{+}=0.06$ and ![]() $0.03$ for the minimal and full channels, respectively. The superscript

$0.03$ for the minimal and full channels, respectively. The superscript ![]() $+$ denotes a quantity scaled by the viscous scale in the uncontrolled flow throughout this paper. The above setting of the time step ensures that the Courant number is less than unity even with wall blowing and suction. The present numerical scheme has already been validated and applied successfully to control and estimation problems in previous studies (Yamamoto et al. Reference Yamamoto, Hasegawa and Kasagi2013; Suzuki & Hasegawa Reference Suzuki and Hasegawa2017).

$+$ denotes a quantity scaled by the viscous scale in the uncontrolled flow throughout this paper. The above setting of the time step ensures that the Courant number is less than unity even with wall blowing and suction. The present numerical scheme has already been validated and applied successfully to control and estimation problems in previous studies (Yamamoto et al. Reference Yamamoto, Hasegawa and Kasagi2013; Suzuki & Hasegawa Reference Suzuki and Hasegawa2017).

3. Reinforcement learning

3.1. Outline

Reinforcement learning is a problem where an agent (learner) learns the optimal policy that maximizes a long-term total reward through trial and error. Specifically, an agent receives a state ![]() $s$ from an environment (control target) and decides an action

$s$ from an environment (control target) and decides an action ![]() $a$ based on a policy

$a$ based on a policy ![]() $\mu (a|s)$. By executing the action against the environment, the state changes from

$\mu (a|s)$. By executing the action against the environment, the state changes from ![]() $s$ to

$s$ to ![]() $s'$, and a resulting instantaneous reward

$s'$, and a resulting instantaneous reward ![]() $r$ is obtained. Then

$r$ is obtained. Then ![]() $s'$ and

$s'$ and ![]() $r$ are fed back to the agent and the policy is updated. With the new policy, the next action

$r$ are fed back to the agent and the policy is updated. With the new policy, the next action ![]() $a'$ under the new state

$a'$ under the new state ![]() $s'$ is determined. By repeating the above interaction with the environment, the agent learns the optimal policy. If the next state

$s'$ is determined. By repeating the above interaction with the environment, the agent learns the optimal policy. If the next state ![]() $s'$ and the instantaneous reward

$s'$ and the instantaneous reward ![]() $r$ depend only on the previous state

$r$ depend only on the previous state ![]() $s$ and the action

$s$ and the action ![]() $a$, then this process is called the Markov decision process, which is the basis of the reinforcement learning (Sutton & Barto Reference Sutton and Barto2018).

$a$, then this process is called the Markov decision process, which is the basis of the reinforcement learning (Sutton & Barto Reference Sutton and Barto2018).

In the current flow control problem, the environment is a fully developed turbulent channel flow, whereas the state is sensing a signal from the instantaneous flow field, and the action corresponds to the control input, i.e. wall blowing and suction. The instantaneous reward ![]() $r(t)$ is the friction coefficient

$r(t)$ is the friction coefficient ![]() $C_f(t)$ with a negative sign, since the reward is defined to be maximized, while the wall friction should be minimized in the present study. Specifically, it is defined as

$C_f(t)$ with a negative sign, since the reward is defined to be maximized, while the wall friction should be minimized in the present study. Specifically, it is defined as

where

Here, ![]() $\overline {\tau _{w}}$ is the spatial mean of the wall shear stress over the entire wall, and therefore both

$\overline {\tau _{w}}$ is the spatial mean of the wall shear stress over the entire wall, and therefore both ![]() $r$ and

$r$ and ![]() $C_f$ are functions of time as written explicitly in (3.1) and (3.2). It should be noted that there is no unique way to define the reward. For example, the inverse of the friction coefficient, i.e.

$C_f$ are functions of time as written explicitly in (3.1) and (3.2). It should be noted that there is no unique way to define the reward. For example, the inverse of the friction coefficient, i.e. ![]() $r(t)=1/C_f(t)$, could be another choice. The major difference from the present choice, (3.1), is that the reward increases more rapidly when

$r(t)=1/C_f(t)$, could be another choice. The major difference from the present choice, (3.1), is that the reward increases more rapidly when ![]() $C_f$ becomes smaller. It was confirmed, however, that there is no significant difference in the final outcome. More detailed comparisons in the resulting control policies and their performances between the two rewards can be found in Appendix A.

$C_f$ becomes smaller. It was confirmed, however, that there is no significant difference in the final outcome. More detailed comparisons in the resulting control policies and their performances between the two rewards can be found in Appendix A.

Our objective is to find an efficient control policy that describes the relationship between the flow state and the action for maximizing the future total reward. In the present study, we use the deep deterministic policy gradient (DDPG) algorithm (Lillicrap et al. Reference Lillicrap, Hunt, Pritzel, Heess, Erez, Tassa, Silver and Wierstra2016), which is a framework to optimize a deterministic policy. Specifically, this algorithm consists of two neural networks, called an actor and a critic, as shown in figure 2. The input of the actor is the state ![]() $s$, while its output is the action

$s$, while its output is the action ![]() $a$. Therefore, the actor dictates a control policy

$a$. Therefore, the actor dictates a control policy ![]() $\mu (a|s)$, and it has to be optimized. For this purpose, another network, i.e. a critic, is introduced. The inputs of the critic are the current state

$\mu (a|s)$, and it has to be optimized. For this purpose, another network, i.e. a critic, is introduced. The inputs of the critic are the current state ![]() $s$ and the action

$s$ and the action ![]() $a$. The critic outputs the estimation of an action value function

$a$. The critic outputs the estimation of an action value function ![]() $Q^{\mu } (s,a)$, i.e. the expected total future reward when a certain action

$Q^{\mu } (s,a)$, i.e. the expected total future reward when a certain action ![]() $a$ is taken under a certain state

$a$ is taken under a certain state ![]() $s$. It should be noted that during the training, although the instantaneous reward, i.e. instantaneous wall friction, is obtained at every time step from simulation, we generally do not know

$s$. It should be noted that during the training, although the instantaneous reward, i.e. instantaneous wall friction, is obtained at every time step from simulation, we generally do not know ![]() $Q^{\mu } (s,a)$, since it is determined by the equilibrium state after the current control policy

$Q^{\mu } (s,a)$, since it is determined by the equilibrium state after the current control policy ![]() $\mu$ is applied continuously to the flow field. The role of the critic network is to estimate

$\mu$ is applied continuously to the flow field. The role of the critic network is to estimate ![]() $Q^{\mu } (s,a)$ from past states, actions and resulting rewards.

$Q^{\mu } (s,a)$ from past states, actions and resulting rewards.

Figure 2. Schematic diagram of the DDPG algorithm.

As for training the networks, the actor is first trained so as to maximize the expected total reward ![]() $Q^{\mu } (s,a)$ while fixing the critic network. Then the critic is optimized so that the resulting

$Q^{\mu } (s,a)$ while fixing the critic network. Then the critic is optimized so that the resulting ![]() $Q^{\mu } (s,a)$ minimizes the following squared residual of the Bellman equation:

$Q^{\mu } (s,a)$ minimizes the following squared residual of the Bellman equation:

As shown in figure 2, the two networks are coupled and trained alternatively, so that both of them will be optimized after a number of trials. Here, ![]() $\gamma$ is the time discount rate. If it is set to be small, then the agent searches for a control policy yielding a short-term benefit. In contrast, when

$\gamma$ is the time discount rate. If it is set to be small, then the agent searches for a control policy yielding a short-term benefit. In contrast, when ![]() $\gamma$ approaches unity, the policy is optimized from a longer-term perspective. Meanwhile, it is also known that when it is set too large, the agent tends to select no action to avoid failure, i.e. drag increase. In this study,

$\gamma$ approaches unity, the policy is optimized from a longer-term perspective. Meanwhile, it is also known that when it is set too large, the agent tends to select no action to avoid failure, i.e. drag increase. In this study, ![]() $\gamma$ is set to be

$\gamma$ is set to be ![]() $0.99$, which is the same as the value used commonly in previous studies (Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020; Paris et al. Reference Paris, Beneddine and Dandois2021).

$0.99$, which is the same as the value used commonly in previous studies (Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020; Paris et al. Reference Paris, Beneddine and Dandois2021).

3.2. State, action and network setting

Ideally, the velocity field throughout the entire domain should be defined as the state, and wall blowing and suction imposed at each grid point should be considered as the action. In such a case, however, the degrees of freedom of the state and the action become quite large, so that network training will be difficult. Meanwhile, considering the homogeneity of the current flow configuration in the streamwise and spanwise directions, wall blowing and suction could be decided based on the local information of the flow field. For example, the opposition control (Choi et al. Reference Choi, Moin and Kim1994), which is one of the well-known control strategies, applies local wall blowing and suction so as to cancel the wall-normal velocity fluctuation above the wall. Belus et al. (Reference Belus, Rabault, Viquerat, Che, Hachem and Reglade2019) also introduce the idea of the translational invariance to the control of a one-dimensional falling liquid film based on reinforcement learning. They demonstrate that exploiting the locality of the flow system effectively accelerates network training. Hence, in the present study, we also assume that a local control input can be decided based solely on the velocity information above the location where the control is applied. Specifically, we set the detection plane height to ![]() $y_d^+ = 15$, which is found to be optimal for the opposition control in previous studies (Hammond et al. Reference Hammond, Bewley and Moin1998; Chung & Talha Reference Chung and Talha2011). We note that we have conducted additional configuration where the state is defined as the velocity information at multiple locations above the wall. It was found that the resultant control performance is not improved significantly from that obtained in the present configuration with a single sensing location, and the largest weight was confirmed at approximately

$y_d^+ = 15$, which is found to be optimal for the opposition control in previous studies (Hammond et al. Reference Hammond, Bewley and Moin1998; Chung & Talha Reference Chung and Talha2011). We note that we have conducted additional configuration where the state is defined as the velocity information at multiple locations above the wall. It was found that the resultant control performance is not improved significantly from that obtained in the present configuration with a single sensing location, and the largest weight was confirmed at approximately ![]() $y^+ = 15$ (see Appendix B). Hence the present study focuses on a control with the single detection plane located at

$y^+ = 15$ (see Appendix B). Hence the present study focuses on a control with the single detection plane located at ![]() $y^+_d = 15$ from the wall.

$y^+_d = 15$ from the wall.

As a first step, we consider the simplest linear actor, defined as

Here, the prime indicates the deviation from the spatial mean, so that ![]() $v' = v - \bar {v}$. Throughout this study, the velocity fluctuation used in the state is defined as the deviation from its spatial mean in the

$v' = v - \bar {v}$. Throughout this study, the velocity fluctuation used in the state is defined as the deviation from its spatial mean in the ![]() $x$ and

$x$ and ![]() $z$ directions at each instant, and

$z$ directions at each instant, and ![]() $\alpha$ and

$\alpha$ and ![]() $\beta$ are constants to be optimized. In order to enhance the robustness of the training, a random noise

$\beta$ are constants to be optimized. In order to enhance the robustness of the training, a random noise ![]() $N$ with zero mean and standard deviation

$N$ with zero mean and standard deviation ![]() $0.1$ in a wall unit is added. Throughout the present study, the same magnitude of

$0.1$ in a wall unit is added. Throughout the present study, the same magnitude of ![]() $N$ is used in all the cases. In the present flow configuration, where periodicity is imposed in the streamwise and spanwise directions,

$N$ is used in all the cases. In the present flow configuration, where periodicity is imposed in the streamwise and spanwise directions, ![]() $\bar {v}$ is null, and therefore

$\bar {v}$ is null, and therefore ![]() $v' = v$. We also note that the same values of

$v' = v$. We also note that the same values of ![]() $\alpha$ and

$\alpha$ and ![]() $\beta$ are used for all locations on the wall. In addition, a net mass flux from each wall is assumed to be zero, so that

$\beta$ are used for all locations on the wall. In addition, a net mass flux from each wall is assumed to be zero, so that ![]() $\beta$ is zero. Eventually, the above problem reduces to optimizing the single parameter

$\beta$ is zero. Eventually, the above problem reduces to optimizing the single parameter ![]() $\alpha$ in the actor. This configuration will be referred to as Case Li00, as shown in table 1. For this control algorithm (3.4), the previous study (Chung & Talha Reference Chung and Talha2011) reported that the optimal value of

$\alpha$ in the actor. This configuration will be referred to as Case Li00, as shown in table 1. For this control algorithm (3.4), the previous study (Chung & Talha Reference Chung and Talha2011) reported that the optimal value of ![]() $\alpha$ is approximately unity. The purpose of revisiting this configuration is to assess whether the present reinforcement learning can reproduce the opposition control, find the optimal value of

$\alpha$ is approximately unity. The purpose of revisiting this configuration is to assess whether the present reinforcement learning can reproduce the opposition control, find the optimal value of ![]() $\alpha$, and achieve a drag reduction similar to that reported in the previous study. We also note that the output of the actor is clipped to

$\alpha$, and achieve a drag reduction similar to that reported in the previous study. We also note that the output of the actor is clipped to ![]() $-1 \leq \phi ^{+} \leq 1$ before applying it to the flow simulation in order to avoid a large magnitude of the control input.

$-1 \leq \phi ^{+} \leq 1$ before applying it to the flow simulation in order to avoid a large magnitude of the control input.

Table 1. Considered cases with the corresponding state, numbers of layers and nodes, an activation function, and the weight coefficient ![]() $d$ for the control cost.

$d$ for the control cost.

Considering that the skin friction drag is related directly to the Reynolds shear stress ![]() $-\overline {u'v'}$ (Fukagata, Iwamoto & Kasagi Reference Fukagata, Iwamoto and Kasagi2002), the streamwise velocity fluctuation

$-\overline {u'v'}$ (Fukagata, Iwamoto & Kasagi Reference Fukagata, Iwamoto and Kasagi2002), the streamwise velocity fluctuation ![]() $u'$ would also be worth considering in addition to

$u'$ would also be worth considering in addition to ![]() $v'$. Hence, for the rest of the cases shown in table 1, both

$v'$. Hence, for the rest of the cases shown in table 1, both ![]() $u'$ and

$u'$ and ![]() $v'$ at

$v'$ at ![]() $y^+_d = 15$ are considered as the state. The actor network has 1 layer and 8 nodes, as shown in figure 3(a). We have changed the size of the actor network and found that further increases in the numbers of layers and nodes do not improve the resultant control performance (see Appendix C). The mathematical expression of the present actor network is

$y^+_d = 15$ are considered as the state. The actor network has 1 layer and 8 nodes, as shown in figure 3(a). We have changed the size of the actor network and found that further increases in the numbers of layers and nodes do not improve the resultant control performance (see Appendix C). The mathematical expression of the present actor network is

where ![]() $\boldsymbol {\alpha }_{11}$,

$\boldsymbol {\alpha }_{11}$, ![]() $\boldsymbol {\alpha }_{12}$,

$\boldsymbol {\alpha }_{12}$, ![]() $\boldsymbol {\beta }_1$ and

$\boldsymbol {\beta }_1$ and ![]() $\boldsymbol {\alpha }_2$ are vectors having the same dimension as the number of the nodes, while

$\boldsymbol {\alpha }_2$ are vectors having the same dimension as the number of the nodes, while ![]() $\beta _2$ is a scalar quantity. As for the activation function

$\beta _2$ is a scalar quantity. As for the activation function ![]() $\sigma$, we consider rectified linear unit (ReLU), sigmoid, leaky ReLU and hyperbolic tangent, which are referred to respectively as Cases R18, S18, LR18 and T18 as listed in table 1. The last two digits in each case name represent the numbers of layers and nodes employed in the actor. We also note that a hyperbolic tangent is used for the activation function of the output layer in order to map the range of the control input into

$\sigma$, we consider rectified linear unit (ReLU), sigmoid, leaky ReLU and hyperbolic tangent, which are referred to respectively as Cases R18, S18, LR18 and T18 as listed in table 1. The last two digits in each case name represent the numbers of layers and nodes employed in the actor. We also note that a hyperbolic tangent is used for the activation function of the output layer in order to map the range of the control input into ![]() $\| \phi ^+ \| < 1.0$.

$\| \phi ^+ \| < 1.0$.

Figure 3. Network structures of (a) the actor and (b) the critic.

The network structure of the critic is shown schematically in figure 3(b). It consists of two layers with 8 and 16 nodes for the first and second layers for the state, and another one-layer network with 16 nodes for the action. Then the two networks are integrated by an additional two layers with 64 nodes, and the final output is the action value function ![]() $Q^{\mu } (s,a)$. ReLU is used for the activation function.

$Q^{\mu } (s,a)$. ReLU is used for the activation function.

In order to take into account the cost for applying the control, we extend the reward as

The second term of (3.6) represents the cost of control, and ![]() $d$ is a weight coefficient that determines the balance between the wall friction and the cost of applying the control. In the present study,

$d$ is a weight coefficient that determines the balance between the wall friction and the cost of applying the control. In the present study, ![]() $d$ is changed systematically from 0 to 0.1, which cases are referred to as R18, R18D1, R18D2 and R18D3 (see table 1).

$d$ is changed systematically from 0 to 0.1, which cases are referred to as R18, R18D1, R18D2 and R18D3 (see table 1).

We note that the current reward ((3.1) or (3.6)) is defined based on the global quantities, which are averaged in the homogeneous directions ![]() $x$ and

$x$ and ![]() $z$, whereas the control policy is defined locally as (3.5). Another option would be to define the reward locally as well. Belus et al. (Reference Belus, Rabault, Viquerat, Che, Hachem and Reglade2019) assessed carefully these two possibilities and concluded that to define both the control policy and the reward locally is more effective in training the network for their one-dimensional liquid film problem. In the present problem, however, we found that the training becomes unstable when the local reward is used. The reason for the instability is unclear, but we speculate as follows. In the case of wall turbulence, it is not difficult to achieve local drag reduction by applying strong wall blowing. However, it is highly possible that this will cause large drag increase afterwards (downstream). Therefore, using a local reward may not be effective for evaluating the global drag reduction effect in the present case. Consequently, the reward is defined globally throughout this study. It would also be interesting to include the spanwise velocity fluctuation

$z$, whereas the control policy is defined locally as (3.5). Another option would be to define the reward locally as well. Belus et al. (Reference Belus, Rabault, Viquerat, Che, Hachem and Reglade2019) assessed carefully these two possibilities and concluded that to define both the control policy and the reward locally is more effective in training the network for their one-dimensional liquid film problem. In the present problem, however, we found that the training becomes unstable when the local reward is used. The reason for the instability is unclear, but we speculate as follows. In the case of wall turbulence, it is not difficult to achieve local drag reduction by applying strong wall blowing. However, it is highly possible that this will cause large drag increase afterwards (downstream). Therefore, using a local reward may not be effective for evaluating the global drag reduction effect in the present case. Consequently, the reward is defined globally throughout this study. It would also be interesting to include the spanwise velocity fluctuation ![]() $w'$ to the state. However, we found that it does not contribute to further improvement of the resultant policy (not shown here). It is in contrast to Choi et al. (Reference Choi, Moin and Kim1994), where it is shown that the opposition control based on

$w'$ to the state. However, we found that it does not contribute to further improvement of the resultant policy (not shown here). It is in contrast to Choi et al. (Reference Choi, Moin and Kim1994), where it is shown that the opposition control based on ![]() $w'$ is most effective in reducing the skin friction drag. In their case, however, the control input is also a spanwise velocity on the wall, while the present study considers wall blowing and suction as a control input. Hence which quantities should be included in the state could depend on flow and control configurations.

$w'$ is most effective in reducing the skin friction drag. In their case, however, the control input is also a spanwise velocity on the wall, while the present study considers wall blowing and suction as a control input. Hence which quantities should be included in the state could depend on flow and control configurations.

3.3. Learning procedures

Figure 4 shows the general outline of the present learning procedures. The two networks, i.e. actor and critic, are trained in parallel with flow simulation within a fixed time interval, which is called an episode. In the present study, the episode duration is set to be ![]() $T^+ = 600$, and the flow simulation is repeated within the same interval, i.e.

$T^+ = 600$, and the flow simulation is repeated within the same interval, i.e. ![]() $t\in [0, T]$. In each episode, the flow simulation is started from the identical initial field at

$t\in [0, T]$. In each episode, the flow simulation is started from the identical initial field at ![]() $t = 0$, which is a fully developed uncontrolled flow. For

$t = 0$, which is a fully developed uncontrolled flow. For ![]() $t > 0$, the control input

$t > 0$, the control input ![]() $\phi$ is applied from the two walls in accordance with the control policy

$\phi$ is applied from the two walls in accordance with the control policy ![]() $\mu (a|s)$. We set the episode duration as

$\mu (a|s)$. We set the episode duration as ![]() $T^+ = 600$, so that the period covers the entire process in which the initial uncontrolled flow transits to another fully developed flow with the applied control. If the episode length is too short, then the flow does not converge to a fully developed state, so that the obtained policy is effective for only the initial transient after the onset of the control. Meanwhile, if the episode length becomes longer, then the obtained policy is more biased to the fully developed state under the control, and therefore might not be effective for the initial transient. According to our experience, the episode duration should be determined so that it covers the entire procedures for the initial uncontrolled flow to converge to another fully developed state after the onset of a control. Of course, the transient period should generally depend on a control policy and also a flow condition, therefore the optimal episode duration has to be found by trial and error. The number of training episodes is set to be

$T^+ = 600$, so that the period covers the entire process in which the initial uncontrolled flow transits to another fully developed flow with the applied control. If the episode length is too short, then the flow does not converge to a fully developed state, so that the obtained policy is effective for only the initial transient after the onset of the control. Meanwhile, if the episode length becomes longer, then the obtained policy is more biased to the fully developed state under the control, and therefore might not be effective for the initial transient. According to our experience, the episode duration should be determined so that it covers the entire procedures for the initial uncontrolled flow to converge to another fully developed state after the onset of a control. Of course, the transient period should generally depend on a control policy and also a flow condition, therefore the optimal episode duration has to be found by trial and error. The number of training episodes is set to be ![]() $100$. We tested additional training with different initial conditions and also with twice the number of episodes in several cases, and confirmed that the resulting control policies presented in this study are hardly affected by them. We also note that in the present study, the control policy generally converges and exhibits similar features in the last 10–20 episodes within the total 100 episodes.

$100$. We tested additional training with different initial conditions and also with twice the number of episodes in several cases, and confirmed that the resulting control policies presented in this study are hardly affected by them. We also note that in the present study, the control policy generally converges and exhibits similar features in the last 10–20 episodes within the total 100 episodes.

Figure 4. Schematic of the learning process.

Within each episode, the agent interacts consecutively with the flow by applying a control, and receives the instantaneous reward (3.1) or (3.6). Based on each interaction, the networks of the actor and the critic are updated. The Adam optimizer is used for both the networks, whereas the learning rate is set to be ![]() $0.001$ and

$0.001$ and ![]() $0.002$ for the actor and the critic, respectively. The buffer size is set to be 5 000 000, while the batch size is 64. In the present study, the networks are trained every short interval

$0.002$ for the actor and the critic, respectively. The buffer size is set to be 5 000 000, while the batch size is 64. In the present study, the networks are trained every short interval ![]() $\Delta t^+_{update} = 0.6$. Accordingly, the control input is also recalculated from the updated policy at the same time interval. Within the time interval, the control input is interpolated linearly (see figure 4). Ideally, a smaller time interval is better, since there will be more chances to update the networks. Meanwhile, it is known that a short training interval often causes numerical instability (Rabault et al. Reference Rabault, Kuchta, Jensen, Reglade and Cerardi2019; Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020). Our preliminary simulation results indicate that

$\Delta t^+_{update} = 0.6$. Accordingly, the control input is also recalculated from the updated policy at the same time interval. Within the time interval, the control input is interpolated linearly (see figure 4). Ideally, a smaller time interval is better, since there will be more chances to update the networks. Meanwhile, it is known that a short training interval often causes numerical instability (Rabault et al. Reference Rabault, Kuchta, Jensen, Reglade and Cerardi2019; Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020). Our preliminary simulation results indicate that ![]() $\Delta t^+_{update} = 0.6$ leads to the best control performance.

$\Delta t^+_{update} = 0.6$ leads to the best control performance.

The detailed numerical conditions of the present flow simulations, and also the network configurations used in the present reinforcement learning, are summarized in tables 2 and 3, respectively. The wall clock time needed for the training of ![]() $100$ episodes, i.e. for running direct numerical simulations of

$100$ episodes, i.e. for running direct numerical simulations of ![]() $100$ cases within

$100$ cases within ![]() $t^+ = 600$ in the minimal channel, with a single core of Intel Xeon Gold 6132 (2.6 GHz) is approximately one day. Most computational costs are for performing flow simulations, and the other costs such as updating the network parameters are quite minor. We also note that in the present study, the training of the networks is always conducted in the minimal channel, so that we do not apply transfer learning, where the network is first trained in the minimal channel or in the fully channel with a coarser mesh, and then fine tuning is performed in the full channel with a higher resolution. A few trials suggest that training in the full-size channel makes the training procedures much slower and sometimes unsuccessful, whereas successful cases result in policies similar to those obtained in the minimal channel. Hence the present reinforcement learning can successfully extract essential features of the effective control policies from the minimal channel.

$t^+ = 600$ in the minimal channel, with a single core of Intel Xeon Gold 6132 (2.6 GHz) is approximately one day. Most computational costs are for performing flow simulations, and the other costs such as updating the network parameters are quite minor. We also note that in the present study, the training of the networks is always conducted in the minimal channel, so that we do not apply transfer learning, where the network is first trained in the minimal channel or in the fully channel with a coarser mesh, and then fine tuning is performed in the full channel with a higher resolution. A few trials suggest that training in the full-size channel makes the training procedures much slower and sometimes unsuccessful, whereas successful cases result in policies similar to those obtained in the minimal channel. Hence the present reinforcement learning can successfully extract essential features of the effective control policies from the minimal channel.

Table 2. Numerical conditions in the present flow simulations.

Table 3. Parameters in the present reinforcement learning for Case R18.

4. Results of reinforcement learning

4.1. Linear policy: revisiting the opposition control

As a first step, we consider Case Li00, where only the wall-normal velocity fluctuation ![]() $v'$ at the detection plane at

$v'$ at the detection plane at ![]() $y^+_d = 15$ is used as a state, and the policy dictating the relationship between the state and the control input is linear, as described in (3.4). The time traces of the instantaneous

$y^+_d = 15$ is used as a state, and the policy dictating the relationship between the state and the control input is linear, as described in (3.4). The time traces of the instantaneous ![]() $C_f$ for different episodes are shown in figure 5. The line colour changes from green to blue as the number of episodes increases. For comparison, we also plot the temporal evolution of

$C_f$ for different episodes are shown in figure 5. The line colour changes from green to blue as the number of episodes increases. For comparison, we also plot the temporal evolution of ![]() $C_f$ for the uncontrolled and opposition control cases, with black and red lines, respectively. It can be seen that

$C_f$ for the uncontrolled and opposition control cases, with black and red lines, respectively. It can be seen that ![]() $C_f$ is reduced successfully as the training proceeds, and eventually converges to a value similar to that obtained by the opposition control.

$C_f$ is reduced successfully as the training proceeds, and eventually converges to a value similar to that obtained by the opposition control.

Figure 5. Temporal evolution of ![]() $C_{f}$ obtained in each episode for Case Li00. With increasing episode number, the line colour changes from green to blue. Black and red lines correspond to uncontrolled and opposition control cases.

$C_{f}$ obtained in each episode for Case Li00. With increasing episode number, the line colour changes from green to blue. Black and red lines correspond to uncontrolled and opposition control cases.

In figure 6, the time average ![]() $\langle C_f \rangle$ of the instantaneous friction coefficient is shown, where the bracket

$\langle C_f \rangle$ of the instantaneous friction coefficient is shown, where the bracket ![]() $\langle {\cdot } \rangle$ indicates the time average within the final period

$\langle {\cdot } \rangle$ indicates the time average within the final period ![]() $500 \leq t^{+} \leq 600$ in each episode. It can be seen that

$500 \leq t^{+} \leq 600$ in each episode. It can be seen that ![]() $\langle {C_{f}}\rangle$ decreases for the first ten episodes, then converges to the value obtained by the opposition control. Figure 7 shows the policy, i.e. the control input versus the state, obtained at the end of each episode. The line colour changes from green to blue with increasing episode number. As described by (3.4), the relationship between the state

$\langle {C_{f}}\rangle$ decreases for the first ten episodes, then converges to the value obtained by the opposition control. Figure 7 shows the policy, i.e. the control input versus the state, obtained at the end of each episode. The line colour changes from green to blue with increasing episode number. As described by (3.4), the relationship between the state ![]() $v'$ and the control input

$v'$ and the control input ![]() $\phi$ is linear, and the maximum absolute value of

$\phi$ is linear, and the maximum absolute value of ![]() $\phi ^+$ is clipped to unity. The red line corresponds to the case

$\phi ^+$ is clipped to unity. The red line corresponds to the case ![]() $(\alpha, \beta ) = (-1.0, 0)$ in (3.4), which was found to be optimal for the opposition control in Chung & Talha (Reference Chung and Talha2011). It can be seen that the present policy reproduces the opposition control with the optimal values

$(\alpha, \beta ) = (-1.0, 0)$ in (3.4), which was found to be optimal for the opposition control in Chung & Talha (Reference Chung and Talha2011). It can be seen that the present policy reproduces the opposition control with the optimal values ![]() $(\alpha, \beta ) = (-1.0, 0)$ quite well, while the present policy has a slightly steeper slope. This is probably attributed to the fact that the magnitude of

$(\alpha, \beta ) = (-1.0, 0)$ quite well, while the present policy has a slightly steeper slope. This is probably attributed to the fact that the magnitude of ![]() $\phi$ is clipped in the present policy. From the above results, we validate that the present reinforcement learning successfully finds the optimal linear control policy that has been reported in the previous studies (Choi et al. Reference Choi, Moin and Kim1994; Chung & Talha Reference Chung and Talha2011).

$\phi$ is clipped in the present policy. From the above results, we validate that the present reinforcement learning successfully finds the optimal linear control policy that has been reported in the previous studies (Choi et al. Reference Choi, Moin and Kim1994; Chung & Talha Reference Chung and Talha2011).

Figure 6. Time average of the friction coefficient ![]() $\langle C_{f}\rangle$ at the final period

$\langle C_{f}\rangle$ at the final period ![]() $500 \leq t^{+} \leq 600$ in each episode. Blue indicates Case Li00; thick black indicates uncontrolled; dashed black indicates opposition control; thin black indicates laminar.

$500 \leq t^{+} \leq 600$ in each episode. Blue indicates Case Li00; thick black indicates uncontrolled; dashed black indicates opposition control; thin black indicates laminar.

Figure 7. Obtained policy in Case Li00 at the end of each episode. With increasing episode number, the line colour changes from green to blue. The red line represents the opposition control where ![]() $(w, b) = (-1.0, 0)$.

$(w, b) = (-1.0, 0)$.

4.2. Nonlinear control policies

In this subsection, we present the results obtained by nonlinear policies, where a hidden layer and a nonlinear activation function are added to the actor network as listed in table 1.

4.2.1. Obtained policies

Figure 8 shows ![]() $\langle {C_{f}}\rangle$ as a function of the episode number for Cases R18, S18, LR18 and T18 using different activation functions. For all the cases,

$\langle {C_{f}}\rangle$ as a function of the episode number for Cases R18, S18, LR18 and T18 using different activation functions. For all the cases, ![]() $\langle {C_{f}}\rangle$ reduces from the uncontrolled value with increasing episode number, and eventually converges to a value similar to or even smaller than that achieved by the opposition control. In particular, higher drag reduction rates than that of the opposition control can be confirmed clearly in Cases R18, LR18 and S18.

$\langle {C_{f}}\rangle$ reduces from the uncontrolled value with increasing episode number, and eventually converges to a value similar to or even smaller than that achieved by the opposition control. In particular, higher drag reduction rates than that of the opposition control can be confirmed clearly in Cases R18, LR18 and S18.

Figure 8. Plots of ![]() $\langle C_{f} \rangle$ versus episode number for different policies obtained in the present reinforcement learning. Blue indicates Case R18; yellow indicates Case S18; green indicates Case LR18; red indicates Case T18.

$\langle C_{f} \rangle$ versus episode number for different policies obtained in the present reinforcement learning. Blue indicates Case R18; yellow indicates Case S18; green indicates Case LR18; red indicates Case T18.

The policy obtained at the best episode where the maximum drag reduction rate is achieved in each case is shown in figures 9(b–e), where the control input ![]() $\phi$ is plotted as a function of the state

$\phi$ is plotted as a function of the state ![]() $(u', v')$ at

$(u', v')$ at ![]() $y_d^+ = 15$. Red and blue correspond to wall blowing and suction, respectively. For reference, we also plot the policy of the opposition control defined by (3.4) with

$y_d^+ = 15$. Red and blue correspond to wall blowing and suction, respectively. For reference, we also plot the policy of the opposition control defined by (3.4) with ![]() $(\alpha, \beta ) = (-1.0, 0)$ in figure 9(a). In this case, the control input depends on only

$(\alpha, \beta ) = (-1.0, 0)$ in figure 9(a). In this case, the control input depends on only ![]() $v'$, so that the colour contours are horizontal, and the control input

$v'$, so that the colour contours are horizontal, and the control input ![]() $\phi$ depends linearly on the state

$\phi$ depends linearly on the state ![]() $v'$.

$v'$.

Figure 9. Control input as a function of the flow state at ![]() $y^+_d = 15$: (a) opposition control; (b) Case R18; (c) Case S18; (d) Case LR18; (e) Case T18; (f) joint p.d.f. of

$y^+_d = 15$: (a) opposition control; (b) Case R18; (c) Case S18; (d) Case LR18; (e) Case T18; (f) joint p.d.f. of ![]() $u'$ and

$u'$ and ![]() $v'$ at

$v'$ at ![]() $y^+ = 15$ in the uncontrolled flow.

$y^+ = 15$ in the uncontrolled flow.

In contrast, the present nonlinear control policies shown in figures 9(b–e) obviously depend on not only ![]() $v'$, but also

$v'$, but also ![]() $u'$. In addition, the control input switches rapidly between wall blowing and suction, depending drastically on the state, i.e.

$u'$. In addition, the control input switches rapidly between wall blowing and suction, depending drastically on the state, i.e. ![]() $u'$ and

$u'$ and ![]() $v'$ at

$v'$ at ![]() $y^+_d = 15$. Specifically, for Cases R18 and T18 shown in figures 9(b) and 9(e), respectively, the boundary between wall blowing and suction is inclined, so that wall blowing is applied when a high-speed fluid (

$y^+_d = 15$. Specifically, for Cases R18 and T18 shown in figures 9(b) and 9(e), respectively, the boundary between wall blowing and suction is inclined, so that wall blowing is applied when a high-speed fluid (![]() $u' > 0$) approaches the wall (

$u' > 0$) approaches the wall (![]() $v' < 0$), while wall suction is applied for upwelling (

$v' < 0$), while wall suction is applied for upwelling (![]() $v' >0$) of low-momentum fluid (

$v' >0$) of low-momentum fluid (![]() $u' < 0$). On the other hand, for Cases S18 and LR18 shown in figures 9(c) and 9(d), respectively, the boundary between wall blowing and suction is almost vertical, so that the control input depends mostly on the streamwise velocity fluctuation

$u' < 0$). On the other hand, for Cases S18 and LR18 shown in figures 9(c) and 9(d), respectively, the boundary between wall blowing and suction is almost vertical, so that the control input depends mostly on the streamwise velocity fluctuation ![]() $u'$ only. It should be emphasized that such complex nonlinear relationships between the state and the control input can be obtained first by introducing the neural network for the actor. The joint probability density function (p.d.f.) of

$u'$ only. It should be emphasized that such complex nonlinear relationships between the state and the control input can be obtained first by introducing the neural network for the actor. The joint probability density function (p.d.f.) of ![]() $u'$ and

$u'$ and ![]() $v'$ at

$v'$ at ![]() $y^+_d = 15$ for the uncontrolled flow is plotted in figure 9(f). It can be confirmed that the joint p.d.f. fits roughly in the plot range, and the boundaries between wall blowing and suction obtained in all the cases cross the central part of the joint p.d.f.

$y^+_d = 15$ for the uncontrolled flow is plotted in figure 9(f). It can be confirmed that the joint p.d.f. fits roughly in the plot range, and the boundaries between wall blowing and suction obtained in all the cases cross the central part of the joint p.d.f.

4.2.2. Control performances of obtained policies

As mentioned in § 3.3, the present control policies are obtained through iterative training within the fixed episode period ![]() $T^+ = 600$. In order to evaluate their control performances, here we note that the exploration noise

$T^+ = 600$. In order to evaluate their control performances, here we note that the exploration noise ![]() $N$ in the control policy (3.5) is introduced only during the training process, while it is hereafter turned off in the evaluation of the obtained policies. The time evolutions of the instantaneous

$N$ in the control policy (3.5) is introduced only during the training process, while it is hereafter turned off in the evaluation of the obtained policies. The time evolutions of the instantaneous ![]() $C_f$ for the obtained policies are shown in figure 10. It can be seen that all the nonlinear policies obtained in the present study achieve drag reduction rates higher than that achieved by the opposition control. In particular, relaminarization can be confirmed in Cases R18 and T18. However, it should be noted that these policies may not always be optimal, since the control performance of each policy could depend on an initial condition, especially for the minimal channel considered here. Indeed, when we apply the present policies to another initial condition, the resultant drag reduction rates are commonly larger than that obtained by the opposition control, while the relaminarization is not always confirmed (not shown here). Due to the small domain size of the minimal channel, the turbulent flow becomes intermittent even in the uncontrolled flow (Jiménez & Moin Reference Jiménez and Moin1991), therefore it is difficult to distinguish whether relaminarization is caused by the applied control or the intermittency of the flow.

$C_f$ for the obtained policies are shown in figure 10. It can be seen that all the nonlinear policies obtained in the present study achieve drag reduction rates higher than that achieved by the opposition control. In particular, relaminarization can be confirmed in Cases R18 and T18. However, it should be noted that these policies may not always be optimal, since the control performance of each policy could depend on an initial condition, especially for the minimal channel considered here. Indeed, when we apply the present policies to another initial condition, the resultant drag reduction rates are commonly larger than that obtained by the opposition control, while the relaminarization is not always confirmed (not shown here). Due to the small domain size of the minimal channel, the turbulent flow becomes intermittent even in the uncontrolled flow (Jiménez & Moin Reference Jiménez and Moin1991), therefore it is difficult to distinguish whether relaminarization is caused by the applied control or the intermittency of the flow.

Figure 10. Time evolutions of ![]() $C_f$ obtained with different policies in the minimal channel.

$C_f$ obtained with different policies in the minimal channel.

In order to evaluate the control performances of the obtained policies, we apply them to the full channel. The results are shown in figure 11. Although relaminarization is no longer achieved in the full channel, it can be seen that the present control policies still outperform the opposition control. We regard the initial period ![]() $T+ = 3000$ after the onset of the control shown in figure 11 as a transient period. Then the skin friction drag is further averaged over another period

$T+ = 3000$ after the onset of the control shown in figure 11 as a transient period. Then the skin friction drag is further averaged over another period ![]() $T+ = 4000$ to obtain the value at an equilibrium state. Throughout this study, the same criterion is used for the evaluation of the skin friction drag in the full channel. The resulting drag reduction rates achieved by Cases R18, S18, LR18 and T18 are respectively 31 %, 35 %, 35 % and 27 %, while that of the opposition control remains 23 %.

$T+ = 4000$ to obtain the value at an equilibrium state. Throughout this study, the same criterion is used for the evaluation of the skin friction drag in the full channel. The resulting drag reduction rates achieved by Cases R18, S18, LR18 and T18 are respectively 31 %, 35 %, 35 % and 27 %, while that of the opposition control remains 23 %.

Figure 11. Time evolutions of ![]() $C_f$ obtained with different policies in the full channel.

$C_f$ obtained with different policies in the full channel.

In summary, it is demonstrated that the control policies obtained in the minimal channel still work in the full channel, and the present reinforcement learning successfully finds control policies more efficient than the existing opposition control. We also note that Cases R18, S18 and LR18 lead to similar drag reduction rates, so we do not make particular statements about which case is the best. Rather, we consider that the common features found from these obtained policies shown in figures 9(b–e) – such as the strong dependency of the control input on both ![]() $u'$ and

$u'$ and ![]() $v'$, and the rapid switch from wall blowing and suction – are more important. In the following, taking the optimal policy obtained in Case R18 as the default, we investigate further how each feature contributes to the resulting drag reduction effects.

$v'$, and the rapid switch from wall blowing and suction – are more important. In the following, taking the optimal policy obtained in Case R18 as the default, we investigate further how each feature contributes to the resulting drag reduction effects.

Before closing this subsection, we also briefly address the generality of the present results. The nonlinear policies shown in figures 9(b–e) commonly exhibit a rapid switch between wall blowing and suction, which may cause numerical oscillations and affect the resultant control performances, especially when a pseudo-spectral method is used. Therefore, we have also assessed the obtained policies in the same flow configurations with another code based on a finite difference method. We found that the resultant drag reduction rates are hardly affected by changing the numerical scheme. The detailed comparisons between the two numerical schemes are summarized in Appendix D.

4.3. Effects of the control cost

Here, we assess the impacts of the weight ![]() $d$ for the control cost in the reward (3.6) by comparing Cases R18, R18D1, R18D2 and R18D3. Figure 12 shows the average drag reduction rates during the final 20 episodes after the flow reaches an equilibrium state for each case in the minimal channel. Specifically, 38 %, 38 %, 10 % and 7 % of drag reduction are obtained in Cases R18, R18D1, R18D2 and R18D3, respectively. We also note that these values change to 31 %, 23 %, 7 % and 14 % in the full channel, respectively. From the above results, it can be confirmed that the resulting drag reduction rate decreases with increasing the weight

$d$ for the control cost in the reward (3.6) by comparing Cases R18, R18D1, R18D2 and R18D3. Figure 12 shows the average drag reduction rates during the final 20 episodes after the flow reaches an equilibrium state for each case in the minimal channel. Specifically, 38 %, 38 %, 10 % and 7 % of drag reduction are obtained in Cases R18, R18D1, R18D2 and R18D3, respectively. We also note that these values change to 31 %, 23 %, 7 % and 14 % in the full channel, respectively. From the above results, it can be confirmed that the resulting drag reduction rate decreases with increasing the weight ![]() $d$ for the control cost. This suggests that the control cost is properly reflected in the learning process of the present reinforcement learning.

$d$ for the control cost. This suggests that the control cost is properly reflected in the learning process of the present reinforcement learning.

Figure 12. Drag reduction rates averaged over the final 20 episodes after the flow reaches an equilibrium state for the minimal channel in Cases R18, R18D1, R18D2 and R18D3. The dashed line corresponds to the drag reduction rate of the opposition control.

The obtained policy at the final episode in each case is shown in figures 13(b–e) together with that of the opposition control in figure 13(a). Specifically, in Case R18D1, where ![]() $d$ is relatively small, the obtained policy shown in figure 13(c) is similar to that in Case R18 shown in figure 13(b), where no control cost is taken into account. It should also be noted, however, that the control input in Case R18D1 almost vanishes in the central region of figure 13(c). This indicates that when the cost for the control is relatively small, the obtained policy avoids applying the control when the streamwise and wall-normal velocity fluctuations are relatively small. This is reasonable, since larger velocity fluctuations should have larger contributions to the momentum transfer in the near-wall region. When the cost for the control becomes larger in Cases R18D2 and R18D3, it can be seen that the obtained control policies shown in figures 13(d,e) tend to be similar to the opposition control shown in figure 13(a). From these results, the opposition control can be considered optimal when the weight for the cost of the control becomes large.

$d$ is relatively small, the obtained policy shown in figure 13(c) is similar to that in Case R18 shown in figure 13(b), where no control cost is taken into account. It should also be noted, however, that the control input in Case R18D1 almost vanishes in the central region of figure 13(c). This indicates that when the cost for the control is relatively small, the obtained policy avoids applying the control when the streamwise and wall-normal velocity fluctuations are relatively small. This is reasonable, since larger velocity fluctuations should have larger contributions to the momentum transfer in the near-wall region. When the cost for the control becomes larger in Cases R18D2 and R18D3, it can be seen that the obtained control policies shown in figures 13(d,e) tend to be similar to the opposition control shown in figure 13(a). From these results, the opposition control can be considered optimal when the weight for the cost of the control becomes large.

Figure 13. Control input as a function of the flow state at ![]() $y^+_d = 15$: (a) opposition control; (b) Case R18; (c) Case R18D1; (d) Case R18D2; (e) Case R18D3.

$y^+_d = 15$: (a) opposition control; (b) Case R18; (c) Case R18D1; (d) Case R18D2; (e) Case R18D3.

Before closing this section, we summarize the power consumptions for applying the controls in Cases R18, R18D1, R18D2 and R18D3. The conservative estimate of the control power input for applying wall blowing and suction at the bottom wall can be given by the formula (Hasegawa & Kasagi Reference Hasegawa and Kasagi2011)

where the bracket indicates the average in the ![]() $x$ and

$x$ and ![]() $z$ directions, and also time.

$z$ directions, and also time. ![]() $v_w\ (= \phi )$ denotes the wall blowing and suction at the bottom wall, and

$v_w\ (= \phi )$ denotes the wall blowing and suction at the bottom wall, and ![]() $p_w$ is the wall pressure. Since the energy recovery from the flow is unrealistic, we introduce switching functions

$p_w$ is the wall pressure. Since the energy recovery from the flow is unrealistic, we introduce switching functions ![]() $S_1$ and

$S_1$ and ![]() $S_2$ to make sure that a local negative value is discarded. Namely,

$S_2$ to make sure that a local negative value is discarded. Namely, ![]() $S_1=1$ when

$S_1=1$ when ![]() $p_w v_w>0$, and

$p_w v_w>0$, and ![]() $S_1=0$ when

$S_1=0$ when ![]() $p_w v_w\le 0$. Similarly,

$p_w v_w\le 0$. Similarly, ![]() $S_2=1$ when

$S_2=1$ when ![]() $v_w>0$, while

$v_w>0$, while ![]() $S_2=0$ when

$S_2=0$ when ![]() $v_w\le 0$. As a result, it is found that the ratios of the control power input to the pumping power for driving the uncontrolled flow are 0.53 %, 0.30 %, 0.24 % and 0.14 % for Cases R18, R18D1, R18D2 and R18D3, respectively. These values are approximately two orders of magnitude smaller than the obtained drag reduction rates reported above. Hence the power consumptions for applying the present controls are negligible.

$v_w\le 0$. As a result, it is found that the ratios of the control power input to the pumping power for driving the uncontrolled flow are 0.53 %, 0.30 %, 0.24 % and 0.14 % for Cases R18, R18D1, R18D2 and R18D3, respectively. These values are approximately two orders of magnitude smaller than the obtained drag reduction rates reported above. Hence the power consumptions for applying the present controls are negligible.

5. Feature analyses of obtained policies and control inputs

5.1. Effects of the rate of change from wall blowing to suction