1. Introduction

1.1. History and motivation

The Sandwich Conjecture of Kim and Vu [Reference Kim and Vu6] claims that if $d \gg \log n$, then for some sequences $p_1 = p_1(n) \sim d/n$ and $p_2 = p_2(n) \sim d/n$ there is a joint distribution of a random d-regular graph $\mathbb{R}(n,d)$ and two binomial random graphs $\mathbb{G}(n,p_1)$ and $\mathbb{G}(n,p_2)$ such that with probability tending to 1

\begin{equation*} \mathbb{G}(n,p_1) \subseteq \mathbb{R}(n,d) \subseteq \mathbb{G}(n,p_2). \end{equation*}

If true, the Sandwich Conjecture would essentially reduce the study of any monotone graph property of the random graph $\mathbb{R}(n,d)$ in the regime $d \gg \log n$ to the more manageable $\mathbb{G}(n,p)$.

For $\log n \ll d \ll n^{1/3}(\log n)^{-2}$, Kim and Vu proved the embedding $\mathbb{G}(n,p_1) \subseteq \mathbb{R}(n,d)$ as well as an imperfect embedding $\mathbb{R}(n,d) \setminus H \subseteq \mathbb{G}(n,p_2)$, where H is a relatively sparse subgraph of $\mathbb{R}(n,d)$. In [Reference Dudek, Frieze, Ruciński and Šileikis2] the lower embedding was extended to $d \ll n$ (and, in fact, to uniform hypergraph counterparts of the models $\mathbb{G}(n,p)$ and $\mathbb{R}(n,d)$). Recently, Gao, Isaev and McKay [Reference Gao, Isaev and McKay4] confirmed the conjecture for $d \gg n/\sqrt{\log n}$ ([Reference Gao, Isaev and McKay4] is the first paper to give the (perfect) embedding $\mathbb{R}(n,d) \subseteq \mathbb{G}(n,p_2)$ for some range of d) and, subsequently, Gao [Reference Gao3] substantially extended this range to $d=\Omega(\log^7n)$.

Initially motivated by a paper of Perarnau and Petridis [Reference Perarnau and Petridis12] (see Section 9), we consider sandwiching for bipartite graphs, in which the natural counterparts of $\mathbb{G}(n,p)$ and $\mathbb{R}(n,d)$ are random subgraphs of the complete bipartite graph ${K_{n_1,n_2}}$ rather than of $K_n$.

1.2. New results

We consider three models of random subgraphs of ${K_{n_1,n_2}}$, the complete bipartite graph with bipartition $(V_1, V_2)$, where $|V_1| = n_1, |V_2| = n_2$. Given an integer $m \in [0, n_1n_2]$, let ${\mathbb{G}(n_1,n_2,m)}$ be an m-edge subgraph of ${K_{n_1,n_2}}$ chosen uniformly at random (the bipartite Erdős–Rényi model). Given a number $p \in [0,1]$, let ${\mathbb{G}(n_1,n_2,p)}$ be the binomial bipartite random graph in which each edge of ${K_{n_1,n_2}}$ is included independently with probability p. Note that in the latter model, $pn_{3-i}$ is the expected degree of each vertex in $V_i$, $i=1,2$. If, in addition,

\begin{equation*} d_1 \,{:\!=}\, pn_2 \quad \text{and} \quad d_2 \,{:\!=}\, pn_1 \end{equation*}

are integers (we shall always make this implicit assumption), we let ${{\mathcal{R}}(n_1,n_2,p)}$ be the class of subgraphs of ${K_{n_1,n_2}}$ such that every $v \in V_i$ has degree $d_i$, for $i = 1, 2$ (it is an easy exercise to show that ${{\mathcal{R}}(n_1,n_2,p)}$ is non-empty). We call such graphs p-biregular. Let $\mathbb{R}(n_1,n_2,p)$ be a random graph chosen uniformly from ${{\mathcal{R}}(n_1,n_2,p)}$.
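The text leaves the non-emptiness of ${{\mathcal{R}}(n_1,n_2,p)}$ as an exercise. One possible explicit construction (our illustration, not necessarily the one the authors have in mind) joins each $u \in V_1$ to $d_1$ cyclically consecutive vertices of $V_2$: the blocks concatenate to the $n_1d_1 = n_2d_2$ consecutive residues modulo $n_2$, so each $v \in V_2$ is hit exactly $d_2$ times. The Python sketch below uses the hypothetical helpers `biregular_edges` and `is_p_biregular`, labels $V_1 = \{0,\dots,n_1-1\}$ and $V_2 = \{0,\dots,n_2-1\}$, and takes p as an exact `Fraction` so that the integrality of $d_1, d_2$ can be checked.

```python
from collections import Counter
from fractions import Fraction


def biregular_edges(n1: int, n2: int, p: Fraction) -> set:
    """Hypothetical helper: one explicit p-biregular subgraph of K_{n1,n2}.

    Edges are pairs (u, v) with u in V_1 = {0..n1-1} and v in V_2 = {0..n2-1}.
    Vertex u is joined to the d1 cyclically consecutive vertices
    u*d1, u*d1 + 1, ..., u*d1 + d1 - 1 (mod n2).
    """
    d1, d2 = p * n2, p * n1
    if not (0 <= p <= 1) or d1.denominator != 1 or d2.denominator != 1:
        raise ValueError("need 0 <= p <= 1 with p*n2 and p*n1 integers")
    d1 = int(d1)
    # The blocks list the residues 0, 1, ..., n1*d1 - 1 (mod n2) and n1*d1 = n2*d2,
    # so every v in V_2 is covered exactly d2 times; d1 <= n2 rules out repeated edges.
    return {(u, (u * d1 + k) % n2) for u in range(n1) for k in range(d1)}


def is_p_biregular(edges, n1: int, n2: int, p: Fraction) -> bool:
    """Check that every u in V_1 has degree p*n2 and every v in V_2 has degree p*n1."""
    deg1 = Counter(u for u, _ in edges)
    deg2 = Counter(v for _, v in edges)
    return (all(deg1[u] == p * n2 for u in range(n1))
            and all(deg2[v] == p * n1 for v in range(n2)))


H = biregular_edges(4, 6, Fraction(1, 2))   # d1 = 3, d2 = 2
print(len(H), is_p_biregular(H, 4, 6, Fraction(1, 2)))
```

For $n_1 = 4$, $n_2 = 6$ and $p = 1/2$ this prints `12 True`: a graph with $pN = 12$ edges, degree 3 on $V_1$ and degree 2 on $V_2$.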

In this paper we establish an embedding of $\mathbb{G}(n_1,n_2,m)$ into $\mathbb{R}(n_1,n_2,p)$. This easily implies an embedding of ${\mathbb{G}(n_1,n_2,p)}$ into $\mathbb{R}(n_1,n_2,p)$. Moreover, by taking complements, our result translates immediately into the opposite embedding of $\mathbb{R}(n_1,n_2,p)$ into $\mathbb{G}(n_1,n_2,m)$ (and thus into ${\mathbb{G}(n_1,n_2,p)}$). This idea was first used in [Reference Gao, Isaev and McKay4] to prove $\mathbb{R}(n,d) \subseteq \mathbb{G}(n,p)$ for $p \gg \frac{1}{\sqrt{\log n}}$. In particular, in the balanced case ($n_1=n_2\,{:\!=}\,n$), we prove this opposite embedding for $p \gg \left( \log^3 n / n \right)^{1/4}$.
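One standard way to see why an embedding of $\mathbb{G}(n_1,n_2,m)$ yields one of the binomial model (a sketch of the usual argument, not a quotation of the authors' proof): generate both graphs from a single uniformly random ordering of the edges of ${K_{n_1,n_2}}$, taking the first m edges for $\mathbb{G}(n_1,n_2,m)$ and the first $M \sim \mathrm{Bin}(N,p')$ edges, with M drawn independently of the ordering, for $\mathbb{G}(n_1,n_2,p')$. Then $\mathbb{G}(n_1,n_2,p') \subseteq \mathbb{G}(n_1,n_2,m)$ unless $M > m$, and the Chernoff bound $\mathbb{P}(X \ge \mu + t) \le \exp\left(-\frac{t^2}{2(\mu + t/3)}\right)$ for a binomial X with mean $\mu$ controls this event; with $p' = (1-2\gamma)p$ and $m = \lceil(1-\gamma)pN\rceil$ as in Theorem 2 one has $t = m - p'N \ge \gamma p N$. The helper names below are hypothetical.

```python
import math
import random


def coupled_binomial_and_uniform(n1, n2, p_prime, m, rng):
    """Draw G(n1,n2,p') and G(n1,n2,m) from one uniformly random ordering of E(K_{n1,n2}).

    G(n1,n2,p') is the first M edges with M ~ Bin(N, p') drawn independently of the
    ordering; G(n1,n2,m) is the first m edges. Hence the former lies inside the latter iff M <= m.
    """
    edges = [(u, v) for u in range(n1) for v in range(n2)]
    rng.shuffle(edges)                                        # uniform random ordering
    M = sum(rng.random() < p_prime for _ in range(len(edges)))  # M ~ Bin(N, p')
    return set(edges[:M]), set(edges[:m])


def chernoff_upper_tail(N, p_prime, m):
    """Bound exp(-t^2 / (2(mu + t/3))) on P(Bin(N, p') >= mu + t), mu = N p', t = m - mu > 0."""
    mu = N * p_prime
    t = m - mu
    if t <= 0:
        raise ValueError("need m > N p'")
    return math.exp(-t * t / (2 * (mu + t / 3)))


rng = random.Random(2024)
G_bin, G_uni = coupled_binomial_and_uniform(10, 12, 0.3, 45, rng)
print(len(G_bin), len(G_bin) > 45 or G_bin <= G_uni)
print(chernoff_upper_tail(120, 0.3, 45))
```

Gluing this coupling to a coupling of $\mathbb{G}(n_1,n_2,m)$ and $\mathbb{R}(n_1,n_2,p)$ gives the binomial embedding, at the cost of the tail probability.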

The proof is far from a straightforward adaptation of the proof in [Reference Dudek, Frieze, Ruciński and Šileikis2]. What the two proofs have in common is that the edges of $\mathbb{R}(n_1,n_2,p)$ are revealed in a random order, giving a graph process which turns out to be, most of the time, similar to the basic Erdős–Rényi process that generates ${\mathbb{G}(n_1,n_2,m)}$. The rest of the current proof differs in that it avoids the configuration model. Instead, we focus on showing that both $\mathbb{R}(n_1,n_2,p)$ and its random t-edge subgraphs are pseudorandom. We achieve this via the switching method (when $\min \left\{ p, 1-p \right\}$ is small) and otherwise via the asymptotic enumeration of bipartite graphs with a given degree sequence established in [Reference Canfield, Greenhill and McKay1] (see Theorem 5).

In addition, for $p > 0.49$, we rely on a non-probabilistic result about the existence of alternating cycles in 2-edge-coloured pseudorandom graphs (Lemma 20), which might be of independent interest.

Throughout the paper we assume that the underlying complete bipartite graph ${K_{n_1,n_2}}$ grows on both sides, that is, $\min\{n_1,n_2\} \to \infty$, and any parameters (e.g., p, m), events, random variables, etc., are allowed to depend on $(n_1,n_2)$. In most cases we will leave the dependence on $(n_1,n_2)$ implicit, with all limits and asymptotic notation such as $O, \Omega, \sim$ taken with respect to $\min\{n_1,n_2\} \to \infty$. We say that an event $\mathcal E = \mathcal E(n_1,n_2)$ holds asymptotically almost surely (a.a.s.) if $\mathbb{P}\left(\mathcal E\right) \to 1$.

Our main results are Theorem 2 below and its immediate consequence, Corollary 3. For a gentle start, we first state an abridged version of both in the balanced case $n_1 = n_2 = n$. Note that $pn^2$ is the number of edges in $\mathbb{R}(n,n,p)$.

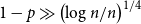

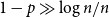

Theorem 1. If

![]() $p\gg\frac{\log n}{n}$

and

$p\gg\frac{\log n}{n}$

and

![]() $1 - p \gg \left( \frac{\log n}{n} \right)^{1/4}$

, then for some

$1 - p \gg \left( \frac{\log n}{n} \right)^{1/4}$

, then for some

![]() $m \sim pn^2$

there is a joint distribution of random graphs

$m \sim pn^2$

there is a joint distribution of random graphs

![]() $\mathbb{G}(n,n,m)$

and

$\mathbb{G}(n,n,m)$

and

![]() $\mathbb{R}(n,n,p)$

such that

$\mathbb{R}(n,n,p)$

such that

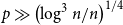

If

![]() $p\gg\left(\frac{\log^3 n}{n}\right)^{1/4}$

, then for some

$p\gg\left(\frac{\log^3 n}{n}\right)^{1/4}$

, then for some

![]() $m \sim pn^2$

there is a joint distribution of random graphs

$m \sim pn^2$

there is a joint distribution of random graphs

![]() $\mathbb{G}(n,n,m)$

and

$\mathbb{G}(n,n,m)$

and

![]() $\mathbb{R}(n,n,p)$

such that

$\mathbb{R}(n,n,p)$

such that

Moreover, in (1) and (2) one can replace

![]() $\mathbb{G}(n,n,m)$

by the binomial random graph

$\mathbb{G}(n,n,m)$

by the binomial random graph

![]() $\mathbb{G}(n,n,p')$

, for some

$\mathbb{G}(n,n,p')$

, for some

![]() $p' \sim p$

.

$p' \sim p$

.

The condition $p \gg (\log n)/n$ is necessary for (1) to hold with $m \sim pn^2$ (see Remark 26), since otherwise the maximum degree of $\mathbb{G}(n,n,m)$ is no longer $pn(1+o(1))$ a.a.s. We believe that the maximum degree is, roughly speaking, the only obstacle to embedding $\mathbb{G}(n,n,m)$ (or $\mathbb{G}(n,n,p')$) into $\mathbb{R}(n,n,p)$; see Conjecture 1 in Section 10.

We further write $N \,{:\!=}\, n_1n_2$, $q \,{:\!=}\, 1 - p$, $\hat n \,{:\!=}\, \min \left\{ n_1, n_2 \right\}$, $\hat p \,{:\!=}\, \min \{p, q\}$, and let

\begin{equation} \mathbb{I} \,{:\!=}\,\mathbb{I}(n_1,n_2,p)= \begin{cases} 1, & \hat p < 2\dfrac{n_1n_2^{-1} + n_1^{-1}n_2}{\log N}\\ \\[-8pt] 0, & \hat p \ge 2\dfrac{n_1n_2^{-1} + n_1^{-1}n_2}{\log N}. \end{cases}\end{equation}

Note that $\mathbb{I}=0$ entails that the vertex classes are rather balanced: the ratio of their sizes cannot exceed $\tfrac 1 4 \log N$. Moreover, $\mathbb{I}=0$ implies that $\hat p \ge 4/\log N$.
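Both observations follow from (3) in one line each. The following derivation spells this out, with the auxiliary notation $x \,{:\!=}\, n_1/n_2$ (introduced only here) and using $\hat p \le 1/2$ and $x + x^{-1} \ge 2$.

```latex
\begin{align*}
\mathbb{I} = 0 &\iff \hat p \ge \frac{2\,(x + x^{-1})}{\log N},
  \qquad\text{where } x \,{:\!=}\, n_1/n_2 \text{ and } \hat p \le \tfrac12; \\
\text{hence}\quad \max\{x, x^{-1}\} &\le x + x^{-1} \le \frac{\hat p \log N}{2} \le \frac{\log N}{4}, \\
\text{and}\quad \hat p &\ge \frac{2\,(x + x^{-1})}{\log N} \ge \frac{2 \cdot 2}{\log N} = \frac{4}{\log N}
  \qquad\text{since } x + x^{-1} \ge 2.
\end{align*}
```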

Theorem 2. For every constant $C > 0$, there is a constant $C^*$ such that whenever the parameter $p \in [0,1]$ satisfies

and

\begin{equation}1\ge\gamma \,{:\!=}\, \begin{cases} C^* \left( p^2 \mathbb{I} + \sqrt{\frac{\log N}{p\hat n }}\right), \quad & p \le 0.49 \\ \\[-8pt] C^* \left( q^{3/2}\mathbb{I} + \left(\frac{\log N}{\hat n} \right)^{1/4} + \frac1q\sqrt{\frac{\log N}{\hat n}} \log \frac{\hat n}{\log N}\right), \quad & p > 0.49, \end{cases}\end{equation}

there is, for $m \,{:\!=}\, \lceil(1-\gamma)pN\rceil$, a joint distribution of random graphs ${\mathbb{G}(n_1,n_2,m)}$ and $\mathbb{R}(n_1,n_2,p)$ such that

\begin{equation} \mathbb{P}\left( \mathbb{G}(n_1,n_2,m) \subseteq \mathbb{R}(n_1,n_2,p) \right) = 1 - O(N^{-C}). \tag{6} \end{equation}

If, in addition, $\gamma \le 1/2$, then for $p' \,{:\!=}\, (1 - 2\gamma)p$, there is a joint distribution of $\mathbb{G}(n_1,n_2,p')$ and $\mathbb{R}(n_1,n_2,p)$ such that

\begin{equation} \mathbb{P}\left( \mathbb{G}(n_1,n_2,p') \subseteq \mathbb{R}(n_1,n_2,p) \right) = 1 - O(N^{-C}). \tag{7} \end{equation}

By inflating $C^*$, the constant $0.49$ in (5) can be replaced by any constant smaller than $1/2$. It can be shown (see Remark 27 in Section 10) that if $p \le 1/4$ and $\gamma = \Theta\left( \sqrt{\frac{\log N}{p\hat n }} \right)$, then $\gamma$ has the optimal order of magnitude. For more remarks about the conditions of Theorem 2 and the role of the indicator $\mathbb{I}$, see Section 10.
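To get a feel for the size of $\gamma$, the Python sketch below evaluates (3) and (5) numerically and derives m and p' as in Theorem 2. The paper only asserts that some $C^*$ exists, so the argument `c_star` is an arbitrary stand-in, the function names are hypothetical, and floating-point rounding at the case boundaries is ignored.

```python
import math


def indicator(n1: int, n2: int, p: float) -> int:
    """The indicator I(n1, n2, p) of (3)."""
    N = n1 * n2
    p_hat = min(p, 1 - p)
    threshold = 2 * (n1 / n2 + n2 / n1) / math.log(N)
    return 1 if p_hat < threshold else 0


def gamma(n1: int, n2: int, p: float, c_star: float) -> float:
    """The quantity gamma of (5); c_star is a stand-in for the unspecified constant C^*.

    Assumes 0 < p < 1 (the second case divides by q = 1 - p).
    """
    N, n_hat, q = n1 * n2, min(n1, n2), 1 - p
    L = math.log(N)
    I = indicator(n1, n2, p)
    if p <= 0.49:
        return c_star * (p ** 2 * I + math.sqrt(L / (p * n_hat)))
    return c_star * (q ** 1.5 * I + (L / n_hat) ** 0.25
                     + math.sqrt(L / n_hat) * math.log(n_hat / L) / q)


def theorem2_parameters(n1: int, n2: int, p: float, c_star: float):
    """Return (gamma, m, p') as in Theorem 2; p' is None unless gamma <= 1/2."""
    g = gamma(n1, n2, p, c_star)
    if g > 1:
        raise ValueError("condition 1 >= gamma in (5) fails")
    m = math.ceil((1 - g) * p * n1 * n2)
    p_prime = (1 - 2 * g) * p if g <= 0.5 else None
    return g, m, p_prime


print(theorem2_parameters(10**6, 10**6, 0.1, c_star=1.0))
```

With `c_star = 1`, $n_1 = n_2 = 10^6$ and $p = 0.1$ one gets $\mathbb{I} = 1$ and $\gamma \approx 0.0266$ (the term $p^2\mathbb{I} = 0.01$ contributes noticeably), whereas for $n_1 = n_2 = 10^4$ the same p gives $\gamma \approx 0.146$.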

By taking the complements of $\mathbb{R}(n_1,n_2,p)$ and $\mathbb{G}(n_1,n_2,m)$ and swapping p and q, we immediately obtain the following consequence of Theorem 2, which provides the opposite embedding.

Corollary 3. For every constant $C > 0$ there is a constant $C^*$ such that whenever the parameter $p \in [0,1]$ satisfies

and

\begin{equation*} 1 \ge \bar\gamma\,{:\!=}\, \begin{cases} C^*\left( p^{3/2}\mathbb{I} + \left(\frac{\log N}{\hat n} \right)^{1/4} + \frac 1p\sqrt{\frac{\log N}{\hat n}} \log \frac{\hat n}{\log N}\right), \quad p < 0.51, \\ \\[-9pt] C^*\left( q^2 \mathbb{I} + \sqrt{\frac{\log N}{q\hat n}}\right), \quad p \ge 0.51, \end{cases} \end{equation*}

there is, for $\bar m \,{:\!=}\, \lfloor(p+\bar\gamma q)N\rfloor$, a joint distribution of random graphs $\mathbb{G}(n_1,n_2,\bar m)$ and $\mathbb{R}(n_1,n_2,p)$ such that

\begin{equation} \mathbb{P}\left( \mathbb{R}(n_1,n_2,p) \subseteq \mathbb{G}(n_1,n_2,\bar m) \right) = 1 - O(N^{-C}). \tag{9} \end{equation}

If, in addition, $\bar \gamma \le 1/2$, then for $p'' \,{:\!=}\, p + 2\bar{\gamma} q$ there is a joint distribution of $\mathbb{G}(n_1,n_2,p'')$ and $\mathbb{R}(n_1,n_2,p)$ such that

\begin{equation} \mathbb{P}\left( \mathbb{R}(n_1,n_2,p) \subseteq \mathbb{G}(n_1,n_2,p'') \right) = 1 - O(N^{-C}). \tag{10} \end{equation}

Proof. The assumptions of Corollary 3 yield the assumptions of Theorem 2 with $\gamma=\bar\gamma$ and with p and q swapped. Note also that

\begin{equation*} N - \bar m = N - \lfloor (p+\bar\gamma q)N \rfloor = \lceil (1-\bar\gamma)qN \rceil. \end{equation*}

Thus, by Theorem 2, with probability $1 - O(N^{-C})$ we have $\mathbb{G}(n_1,n_2,N - \bar m) \subseteq \mathbb{R}(n_1,n_2,q)$, which, by taking complements, translates into (9). Similarly,

\begin{equation*} 1 - p'' = q - 2\bar\gamma q = (1 - 2\bar\gamma)q. \end{equation*}

Thus, by Theorem 2, with probability $1 - O(N^{-C})$ we have $\mathbb{G}(n_1,n_2,1-p'') \subseteq \mathbb{R}(n_1,n_2,q)$, which, by taking complements, yields embedding (10).
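The proof rests on three elementary facts: complementation within ${K_{n_1,n_2}}$ reverses inclusion, turns a p-biregular graph into a q-biregular one, and turns a uniformly random $\bar m$-edge graph into a uniformly random $(N-\bar m)$-edge graph; the edge count then matches Theorem 2 via $N - \lfloor x\rfloor = \lceil N - x\rceil$. The Python sketch below (hypothetical helper names) checks the first two facts on a small $\tfrac13$-biregular graph in $K_{3,6}$, and the arithmetic identity with exact rationals.

```python
import math
from collections import Counter
from fractions import Fraction


def complement(edges, n1: int, n2: int) -> set:
    """Edge set of K_{n1,n2} minus `edges` (edges are pairs (u, v), u in V_1, v in V_2)."""
    return {(u, v) for u in range(n1) for v in range(n2)} - set(edges)


def degree_lists(edges, n1: int, n2: int):
    """Degrees of the vertices of V_1 and of V_2, in label order."""
    deg1 = Counter(u for u, _ in edges)
    deg2 = Counter(v for _, v in edges)
    return [deg1[u] for u in range(n1)], [deg2[v] for v in range(n2)]


def complement_edge_counts(N: int, p: Fraction, gamma_bar: Fraction):
    """Return (N - m_bar, ceil((1 - gamma_bar) q N)) where m_bar = floor((p + gamma_bar q) N)."""
    q = 1 - p
    m_bar = math.floor((p + gamma_bar * q) * N)
    return N - m_bar, math.ceil((1 - gamma_bar) * q * N)


# A (1/3)-biregular graph in K_{3,6}: degree 2 on V_1 and degree 1 on V_2.
n1, n2 = 3, 6
R = {(u, (2 * u + k) % n2) for u in range(n1) for k in range(2)}
G = {(0, 0), (2, 5)}                        # a subgraph of R
Rc, Gc = complement(R, n1, n2), complement(G, n1, n2)

print(degree_lists(Rc, n1, n2))             # ([4, 4, 4], [2, 2, 2, 2, 2, 2]): (2/3)-biregular
print(G <= R, Rc <= Gc)                     # True True: inclusion is reversed
print(complement_edge_counts(18, Fraction(1, 3), Fraction(1, 7)))  # (11, 11)
```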

Proof of Theorem 1. We apply Theorem 2 and Corollary 3 with $C=1$ and the corresponding $C^*$. Note that for $n_1=n_2=n$, the threshold on the right-hand side of (3) equals $4/\log N = 2/\log n$, so

\begin{equation} \mathbb{I} = 1 \quad \text{if and only if} \quad \hat p < \frac{2}{\log n}. \tag{11} \end{equation}

To prove (1), assume $p\gg\log n/n$ and $q \gg \left( \log n/n \right)^{1/4}$ and apply Theorem 2. Note that condition (4) holds and it is straightforward to check that, regardless of whether $p \le 0.49$ or $p > 0.49$, $\gamma \to 0$. In particular, $\gamma \le 1/2$ for n large enough. We conclude that, indeed, embedding (1) holds with $m = \lceil (1 - \gamma)p N \rceil \sim pn^2$ and that (1) still holds if we replace $\mathbb{G}(n,n,m)$ by $\mathbb{G}(n,n,p')$ with $p' = (1 - 2\gamma)p \sim p$.

For (2), first note that when $p \to 1$, embedding (2) holds trivially with $m = n^2$ (although a nontrivial embedding follows in this case under the additional assumption $q \gg \log n/n$). Hence, passing to a subsequence if necessary, we may further assume $q = \Omega(1)$ and $p\gg\left(\log^3 n / n\right)^{1/4}$, and apply Corollary 3. Note that condition (8) holds. It is routine to check, taking into account (11), that $\bar\gamma\le 1/2$ and, moreover, $\bar\gamma q = o(p)$. We conclude that (2) holds with $m = \lfloor(p + \bar\gamma q)N \rfloor \sim Np = n^2p$ and that (2) still holds if $\mathbb{G}(n,n,m)$ is replaced by $\mathbb{G}(n,n,p')$ with $p' = p + 2 \bar \gamma q \sim p$.
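The "straightforward" and "routine" checks in the proof above can be expanded as follows. This is our own write-up of those steps, valid for n large enough, using $\hat n = n$, $\log N = 2\log n$, (11), and $\log \frac{n}{2\log n} \le \log n$. For $p > 0.49$ in the first part, $\mathbb{I} = 1$ forces $\hat p = q$, since $\hat p = p > 0.49$ is not below $2/\log n$; in the second part, $q = \Omega(1)$ likewise forces $\hat p = p$ whenever $\mathbb{I} = 1$.

```latex
% Part (1): p >> (log n)/n and q >> (log n / n)^{1/4}; we show gamma -> 0.
\begin{align*}
p \le 0.49:&\quad \mathbb{I}=1 \overset{(11)}{\implies} p=\hat p<\tfrac{2}{\log n}
  \implies p^2\mathbb{I}\to 0, \qquad \sqrt{\tfrac{2\log n}{pn}}\to 0 \ \text{ since } pn \gg \log n;\\
p > 0.49:&\quad \mathbb{I}=1 \overset{(11)}{\implies} q=\hat p<\tfrac{2}{\log n}
  \implies q^{3/2}\mathbb{I}\to 0, \qquad \left(\tfrac{2\log n}{n}\right)^{1/4}\to 0,\\
&\quad \frac1q\sqrt{\tfrac{2\log n}{n}}\,\log\tfrac{n}{2\log n}
  \le \sqrt{2}\;\frac{(\log n/n)^{1/4}}{q}\cdot\frac{(\log n)^{5/4}}{n^{1/4}} \to 0 .
\end{align*}
% Part (2): q = Omega(1) and p >> (log^3 n / n)^{1/4}; we show bar-gamma q = o(p).
\begin{align*}
p \ge 0.51:&\quad \hat p = q = \Omega(1) \overset{(11)}{\implies} \mathbb{I}=0,
  \qquad \bar\gamma = C^*\sqrt{\tfrac{2\log n}{qn}} \to 0,
  \qquad \bar\gamma q \le \bar\gamma = o(1) = o(p);\\
p < 0.51:&\quad \frac{p^{3/2}\mathbb{I}}{p} = p^{1/2}\mathbb{I} \overset{(11)}{\le} \left(\tfrac{2}{\log n}\right)^{1/2} \to 0,
  \qquad \frac{(2\log n/n)^{1/4}}{p} \ll \frac{2^{1/4}}{(\log n)^{1/2}} \to 0,\\
&\quad \frac{1}{p^2}\sqrt{\tfrac{2\log n}{n}}\,\log\tfrac{n}{2\log n}
  \le \sqrt{2}\,\frac{(\log^3 n/n)^{1/2}}{p^2} \to 0,
  \qquad\text{so } \bar\gamma = o(p) \text{ and } \bar\gamma q \le \bar\gamma = o(p).
\end{align*}
% In both parts the relevant gamma tends to 0, so in particular it is at most 1/2 eventually.
```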

1.3. A note on the second version of the manuscript

This project was initially aimed at extending the result in [Reference Dudek, Frieze, Ruciński and Šileikis2] to bipartite graphs and was thus limited to the lower embedding $\mathbb{G}(n,n,p_1) \subseteq \mathbb{R}(n,n,p)$. While it was in progress, Gao, Isaev and McKay [Reference Gao, Isaev and McKay4] made progress on the Sandwich Conjecture by using the surprisingly fruitful idea of taking complements to obtain the upper embedding $\mathbb{R}(n,d) \subseteq \mathbb{G}(n,p_2)$ directly from the lower embedding $\mathbb{G}(n,p_1) \subseteq \mathbb{R}(n,d)$. We then decided to borrow this idea (but nothing else) and to strengthen some of our lemmas to obtain a significantly broader range of p for which the upper embedding (i.e. Corollary 3) holds. It turned out that our approach works for non-bipartite regular graphs, too. Therefore, prompted by the recent substantial progress of Gao [Reference Gao3] on the Sandwich Conjecture (which appeared on arXiv after the first version of this manuscript), in the current version of the manuscript we added Section 7, which outlines how to modify our proofs to obtain a corresponding sandwiching for non-bipartite graphs. This improves upon the results in [Reference Gao, Isaev and McKay4] (for regular graphs), but is now superseded by [Reference Gao3].

1.4. Organisation

In Section 2 we introduce the notation and tools used throughout the paper: the switching technique, probabilistic inequalities and an enumeration result for bipartite graphs with a given degree sequence. In Section 3 we state the key Lemma 6 and show how it implies Theorem 2.

In Section 4 we give a proof of Lemma 6 based on two technical lemmas: one about the concentration of a degree-related parameter (Lemma 9), the other (Lemma 10) facilitating the switching technique used in the proof of Lemma 6. Lemma 9 is proved in Section 5, after some auxiliary results establishing the concentration of degrees and co-degrees in $\mathbb{R}(n_1,n_2,p)$ as well as in its conditional versions. In Section 6, we present a proof of Lemma 10, preceded by a purely deterministic result about alternating cycles in 2-edge-coloured pseudorandom graphs. We defer some technical but straightforward results and their proofs (e.g., the proof of Claim 7) to Section 8. A flowchart of the results ultimately leading to the proof of Theorem 2 is presented in Figure 1.

Figure 1. The structure of the proof of Theorem 2. An arrow from statement A to statement B means that A is used in the proof of B. The numbers in brackets point to the section where a statement is formulated and where it is proved (unless the proof follows the statement immediately); external results instead carry an article reference in square brackets.

The contents of Section 7 have already been described (see Subsection 1.3 above). Section 9 contains an application of our main Theorem 2, which was part of the motivation for our research. In Section 10 we present some concluding remarks and our version of the (bipartite) sandwiching conjecture.

2. Preliminaries

2.1. Notation

Recall that

\begin{equation*} d_1 = pn_2 \quad \text{and} \quad d_2 = pn_1 \end{equation*}

are the degrees of vertices in a p-biregular graph, and thus the number of edges in any p-biregular graph $H \in {{\mathcal{R}}(n_1,n_2,p)}$ is

\begin{equation*} n_1d_1 = n_2d_2 = pN. \end{equation*}

Throughout the proofs we also use the shorthand notation

and $[n] \,{:\!=}\, \left\{ 1, \dots, n \right\}$. All logarithms appearing in this paper are natural.

By $\Gamma_{G}(v)$ we denote the set of neighbours of a vertex v in a graph G.

2.2. Switchings

The switching technique is used to compare the sizes of two classes of graphs, say ${\mathcal{R}}$ and ${\mathcal{R}}'$, by defining an auxiliary bipartite graph $B\,{:\!=}\,B({\mathcal{R}},{\mathcal{R}}')$, in which two graphs $H \in {\mathcal{R}}$ and $H' \in {\mathcal{R}}'$ are connected by an edge whenever H can be transformed into H′ by some operation (a forward switching) that deletes and/or creates some edges of H. By counting the edges of $B({\mathcal{R}},{\mathcal{R}}')$ in two ways, we see that

\begin{equation*} \sum_{H \in {\mathcal{R}}} \deg_B(H) = |E(B)| = \sum_{H' \in {\mathcal{R}}'} \deg_B(H'), \end{equation*}

which easily implies that

\begin{equation*} |{\mathcal{R}}| \min_{H \in {\mathcal{R}}} \deg_B(H) \le |{\mathcal{R}}'| \max_{H' \in {\mathcal{R}}'} \deg_B(H') \quad \text{and} \quad |{\mathcal{R}}'| \min_{H' \in {\mathcal{R}}'} \deg_B(H') \le |{\mathcal{R}}| \max_{H \in {\mathcal{R}}} \deg_B(H). \end{equation*}

The reverse operation, mapping $H' \in {\mathcal{R}}'$ to its neighbours in the graph B, is called a backward switching. Usually, one defines the forward switching in such a way that the backward switching can be easily described.
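The double counting can be checked by brute force on a toy instance. The Python sketch below (hypothetical helper names; exhaustive enumeration, so tiny cases only) takes ${\mathcal{R}}$ and ${\mathcal{R}}'$ to be the 2-regular subgraphs of $K_{4,4}$ (that is, of $\mathcal R(4,4,1/2)$) that do and do not contain a fixed edge e, uses as forward switching "swap edges and non-edges along a 4-cycle through e", and verifies $\sum_{H}\deg_B(H) = |E(B)| = \sum_{H'}\deg_B(H')$ together with the bounds $|{\mathcal{R}}|\min\deg_B \le |{\mathcal{R}}'|\max\deg_B$ and symmetrically.

```python
from collections import Counter
from itertools import combinations


def biregular_family(n1, n2, d1, d2):
    """All subgraphs of K_{n1,n2} with degree d1 on V_1 and d2 on V_2 (brute force, tiny cases only)."""
    K = [(u, v) for u in range(n1) for v in range(n2)]
    family = []
    for E in combinations(K, n1 * d1):
        deg1 = Counter(u for u, _ in E)
        deg2 = Counter(v for _, v in E)
        if all(deg1[u] == d1 for u in range(n1)) and all(deg2[v] == d2 for v in range(n2)):
            family.append(frozenset(E))
    return family


def is_four_cycle_through(S, e):
    """Four distinct edges spanning exactly two vertices on each side form a 4-cycle."""
    return (len(S) == 4 and e in S
            and len({u for u, _ in S}) == 2 and len({v for _, v in S}) == 2)


e = (0, 0)
family = biregular_family(4, 4, 2, 2)              # all of R(4, 4, 1/2)
R = [H for H in family if e in H]                  # graphs containing e
R_prime = [H for H in family if e not in H]        # graphs avoiding e
# Forward switching H -> H' = H ^ S for a 4-cycle S through e. As H and H' have the same
# degrees, every vertex of S meets one edge of H \ H' and one of H' \ H: S alternates.
B = [(H, Hp) for H in R for Hp in R_prime if is_four_cycle_through(H ^ Hp, e)]
deg = Counter(H for H, _ in B)
deg_prime = Counter(Hp for _, Hp in B)

# Double counting |E(B)| and the two inequalities it implies.
assert sum(deg[H] for H in R) == len(B) == sum(deg_prime[Hp] for Hp in R_prime)
assert len(R) * min(deg[H] for H in R) <= len(R_prime) * max(deg_prime[Hp] for Hp in R_prime)
assert len(R_prime) * min(deg_prime[Hp] for Hp in R_prime) <= len(R) * max(deg[H] for H in R)
print(len(family), len(R), len(R_prime), len(B))
```

Here $|{\mathcal{R}}| = |{\mathcal{R}}'| = 45$ (of the 90 graphs, each contains e with probability 1/2 by symmetry), so the bounds are far from tight; the point is only the mechanics.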

All switchings used in this paper follow the same pattern. For a fixed graph $G \subseteq K$ (possibly empty), where $K \,{:\!=}\, {K_{n_1,n_2}}$, the families ${\mathcal{R}}, {\mathcal{R}}'$ will be subsets of

\begin{equation*} {\mathcal{R}}_G \,{:\!=}\, \left\{ H \in {{\mathcal{R}}(n_1,n_2,p)} : G \subseteq H \right\}. \end{equation*}

Every $H \in {\mathcal{R}}_G$ will be interpreted as a blue-red colouring of the edges of $K\setminus G$: those in $H\setminus G$ will be coloured blue and those in $K \setminus H$ red. Given $H\in{\mathcal{R}}$, consider a subset S of the edges of $K\setminus G$ in which, for every vertex $v \in V_1 \cup V_2$, the blue degree equals the red degree, i.e., $\deg_{(H\setminus G)\cap S}(v)=\deg_{(K\setminus H)\cap S}(v)$. Then switching the colours within S produces another graph $H' \in {\mathcal{R}}_G$. Formally, E(H′) is the symmetric difference $E(H) \triangle S$. In each application of the switching technique, we will restrict the choices of S to make sure that $H' \in {\mathcal{R}}'$.
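A minimal Python sketch of a single switching, assuming edges are pairs $(u,v)$ with $u \in V_1$, $v \in V_2$ and graphs are frozensets of such pairs: `is_balanced` tests the blue/red degree condition on S, `switch` returns $E(H) \triangle S$, and `alternating_four_cycle` searches only for the simplest case, an alternating 4-cycle through a given edge. All three names are hypothetical, and the switchings in the paper also use longer alternating cycles.

```python
from collections import Counter


def is_balanced(H, S) -> bool:
    """At every vertex, the blue degree (edges of S in H) equals the red degree (edges of S not in H)."""
    blue, red = Counter(), Counter()
    for u, v in S:
        side = blue if (u, v) in H else red
        side[("V1", u)] += 1
        side[("V2", v)] += 1
    return blue == red


def switch(H, S, G=frozenset()):
    """Swap colours within S, i.e. return E(H) symmetric-difference S, after checking the rules."""
    if S & G:
        raise ValueError("S must consist of edges of K \\ G")
    if not is_balanced(H, S):
        raise ValueError("blue and red degrees differ somewhere in S")
    return H ^ S


def alternating_four_cycle(H, e, n1, n2, G=frozenset()):
    """Some alternating 4-cycle u-v-a-b in K \\ G through e = (u, v), or None (a tiny special case)."""
    u, v = e
    for a in range(n1):
        for b in range(n2):
            S = frozenset({(u, v), (a, v), (a, b), (u, b)})
            if a != u and b != v and not (S & G) and is_balanced(H, S):
                return S
    return None


# A 1/2-biregular graph in K_{4,4}: u is joined to 2u and 2u + 1 (mod 4).
H = frozenset((u, (2 * u + k) % 4) for u in range(4) for k in range(2))
e = (0, 0)
S = alternating_four_cycle(H, e, 4, 4)
H_new = switch(H, S)
print(sorted(S))        # [(0, 0), (0, 2), (1, 0), (1, 2)]
print(sorted(H_new))
```

The switched graph is again 2-regular on both sides, but no longer contains $e = (0,0)$; a set S with unequal blue and red degrees is rejected.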

As an elementary illustration of this technique, which nevertheless turns out to be useful in Section 6, we prove here the following result. A cycle in $K\setminus G$ is alternating if it is the union of a blue matching and a red matching. Note that the definition depends on H; we will omit mentioning this dependence, as H will always be clear from the context. Given $e \in K\setminus G$, set

\begin{equation*} {\mathcal{R}}_{G,e} \,{:\!=}\, \left\{ H \in {\mathcal{R}}_G : e \in H \right\} \quad \text{and} \quad {\mathcal{R}}_{G,\neg e} \,{:\!=}\, \left\{ H \in {\mathcal{R}}_G : e \notin H \right\}. \end{equation*}

Proposition 4. Let a graph $G\subseteq K$ be such that ${\mathcal{R}}_G\neq\emptyset$ and let $e\in K\setminus G$. Assume that for some number $D > 0$ and every $H\in {\mathcal{R}}_{G}$ the edge e is contained in an alternating cycle of length at most 2D. Then ${\mathcal{R}}_{G,\neg e}\neq\emptyset$, ${\mathcal{R}}_{G, e}\neq\emptyset$, and

Proof. Let $B = B({\mathcal{R}}_{G,e}, {\mathcal{R}}_{G,\neg e})$ be the switching graph corresponding to the following forward switching: choose an alternating cycle $S$ of length at most $2D$ containing $e$ and switch the colours of the edges within $S$. Note that the backward switching does precisely the same. By assumption, the minimum degree $\delta(B)$ is at least 1.

Since ${\mathcal{R}}_G={\mathcal{R}}_{G, e}\cup{\mathcal{R}}_{G, \neg e} \neq \emptyset$, one of the classes ${\mathcal{R}}_{G, e}$ and ${\mathcal{R}}_{G, \neg e}$ is non-empty and, in view of $\delta(B) \ge 1$, the other one is non-empty as well. For $\ell = 2, \dots, D$, the number of cycles of length $2\ell$ containing $e$ is, crudely, at most $n_1^{\ell - 1} n_2^{\ell - 1} = N^{\ell - 1}$, hence the maximum degree is

Thus, by (14), we obtain the claimed bounds.
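The crude count in the proof can be checked directly. The Python sketch below brute-forces the number of cycles of length $2\ell$, $\ell \ge 2$, through a fixed edge of $K_{n_1,n_2}$. The function name `count_cycles_through_edge` is ours and is only for illustration. Deleting the fixed edge turns each such cycle into a path between its endpoints. The exact count is therefore the product of falling factorials $(n_1-1)_{\ell-1}(n_2-1)_{\ell-1}$, which is indeed at most $N^{\ell-1}$.

```python
from math import perm


def count_cycles_through_edge(n1: int, n2: int, ell: int) -> int:
    """Brute-force count of cycles of length 2*ell (ell >= 2) in K_{n1,n2} containing e = a0 b0.

    Deleting e from such a cycle leaves a simple path from b0 to a0 with 2*ell - 1 edges,
    and this correspondence is a bijection, so we count those paths by depth-first search.
    """
    start, target = ("b", 0), ("a", 0)
    length = 2 * ell - 1

    def neighbours(v):
        side, _ = v
        if side == "a":
            return [("b", j) for j in range(n2)]
        return [("a", i) for i in range(n1)]

    def dfs(v, visited, steps):
        if steps == length:
            return 1 if v == target else 0
        total = 0
        for w in neighbours(v):
            # a simple path may not revisit vertices and may reach a0 only at its last step
            if w in visited or (w == target and steps + 1 < length):
                continue
            visited.add(w)
            total += dfs(w, visited, steps + 1)
            visited.remove(w)
        return total

    return dfs(start, {start}, 0)


n1, n2 = 4, 5
for ell in range(2, 5):
    exact = count_cycles_through_edge(n1, n2, ell)
    falling = perm(n1 - 1, ell - 1) * perm(n2 - 1, ell - 1)  # (n1-1)_{ell-1} (n2-1)_{ell-1}
    print(ell, exact, falling, (n1 * n2) ** (ell - 1))
```

The exhaustive search is only practical for very small $n_1, n_2$. It is meant as a check of the bound, not as part of the argument.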

2.3. Probabilistic inequalities

We first state a few basic concentration inequalities that we apply in our proofs. For a sum $X$ of independent Bernoulli (not necessarily identically distributed) random variables, writing $\mu = \operatorname{\mathbb{E}} X$, we have (see Theorem 2.8, (2.5), (2.6), and (2.11) in [Reference Janson, Łuczak and Rucinski5]) that

Let $\Gamma$ be a set of size $|\Gamma|=g$ and let $A \subseteq \Gamma$, $|A|=a \ge1$. For an integer $r \in [0,g]$, choose uniformly at random a subset $R \subseteq \Gamma$ of size $|R|=r$. The random variable $Y = |A \cap R|$ then has the hypergeometric distribution $\operatorname{Hyp}(g, a, r)$ with expectation $\mu \,{:\!=}\, \operatorname{\mathbb{E}} Y = ar/g$. By Theorem 2.10 in [Reference Janson, Łuczak and Rucinski5], inequalities (17) and (18) hold for $Y$, too.
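The hypergeometric facts above can be checked exactly for small parameters. Displays (17) and (18) are not reproduced in this text. The Python sketch below therefore assumes, as the citation of (2.5) and (2.6) in [Reference Janson, Łuczak and Rucinski5] suggests, that they are the standard tail bounds $\mathbb{P}(Y \ge \mu + t) \le \exp(-t^2/(2(\mu + t/3)))$ and $\mathbb{P}(Y \le \mu - t) \le \exp(-t^2/(2\mu))$. The helper names are hypothetical.

```python
import math


def hyp_pmf(g: int, a: int, r: int, k: int) -> float:
    """P(Y = k) for Y ~ Hyp(g, a, r), i.e. Y = |A cap R| with |Gamma| = g, |A| = a, |R| = r."""
    if k < 0 or k > a or r - k < 0 or r - k > g - a:
        return 0.0
    return math.comb(a, k) * math.comb(g - a, r - k) / math.comb(g, r)


def check_hypergeometric_tails(g: int, a: int, r: int) -> None:
    """Check E Y = ar/g and the (assumed) upper and lower tail bounds at every integer point."""
    pmf = [hyp_pmf(g, a, r, k) for k in range(r + 1)]
    mu = sum(k * q for k, q in enumerate(pmf))
    assert math.isclose(mu, a * r / g)
    for k in range(r + 1):
        if k > mu:  # upper tail P(Y >= mu + t) with t = k - mu
            t = k - mu
            assert sum(pmf[k:]) <= math.exp(-t * t / (2 * (mu + t / 3))) + 1e-12
        if k < mu:  # lower tail P(Y <= mu - t) with t = mu - k
            t = mu - k
            assert sum(pmf[: k + 1]) <= math.exp(-t * t / (2 * mu)) + 1e-12


check_hypergeometric_tails(g=60, a=20, r=15)
```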

Moreover, by Remark 2.6 in [Reference Janson, Łuczak and Rucinski5], inequalities (17) and (18) also hold for a random variable $Z$ which has the Poisson distribution $\operatorname{Po}(\mu)$ with expectation $\mu$. In this case, we also have the following simple fact. For $k\ge0$, set $q_k=\mathbb{P}\left(Z=k\right)$. Then $q_k/q_{k-1}=\mu/k$ for $k \ge 1$, and hence $k = \lfloor\mu\rfloor$ maximises $q_k$ (we say that such $k$ is a mode of $Z$). Since $\operatorname{Var} Z = \mu$, by Chebyshev’s inequality, $\mathbb{P}\left(|Z-\mu|<\sqrt{2\mu}\right)\ge1/2$. Moreover, the interval $(\mu - \sqrt{2 \mu}, \mu + \sqrt{2 \mu})$ has length $\sqrt{8\mu}$ and therefore contains at most $\lceil \sqrt{8\mu}\:\rceil$ integers, hence it follows that

\begin{equation*} q_{\lfloor\mu\rfloor} = \max_{k \ge 0} q_k \ge \frac{1}{2\lceil \sqrt{8\mu}\:\rceil}.\end{equation*}
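The Poisson facts above are easy to confirm numerically. The Python sketch below uses hypothetical helper names. It computes $q_k$ in log-space and checks the ratio identity $q_k/q_{k-1} = \mu/k$. It also checks that $\lfloor\mu\rfloor$ is a mode and that $q_{\lfloor\mu\rfloor} \ge 1/(2\lceil\sqrt{8\mu}\,\rceil)$ for a few values of $\mu$. It illustrates the statements and is not part of the argument.

```python
import math


def poisson_pmf(k: int, mu: float) -> float:
    """q_k = P(Z = k) for Z ~ Po(mu) with mu > 0, computed in log-space to avoid overflow."""
    return math.exp(-mu + k * math.log(mu) - math.lgamma(k + 1))


def mode_lower_bound(mu: float) -> float:
    """The Chebyshev-based lower bound 1 / (2 * ceil(sqrt(8 * mu))) on q at the mode."""
    return 1.0 / (2 * math.ceil(math.sqrt(8 * mu)))


def check_poisson_facts(mu: float) -> None:
    kmax = int(mu + 20 * math.sqrt(mu) + 20)  # q_k beyond kmax is negligibly small
    q = [poisson_pmf(k, mu) for k in range(kmax + 1)]
    # ratio identity q_k / q_{k-1} = mu / k
    for k in range(1, kmax + 1):
        assert math.isclose(q[k] / q[k - 1], mu / k, rel_tol=1e-9)
    # floor(mu) is a mode (there is a tie with floor(mu) - 1 when mu is an integer)
    mode = math.floor(mu)
    assert math.isclose(q[mode], max(q), rel_tol=1e-10)
    # the lower bound on the largest point probability
    assert q[mode] >= mode_lower_bound(mu)


for mu in (0.3, 1.0, 2.5, 7.0, 100.0):
    check_poisson_facts(mu)
```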

2.4. Asymptotic enumeration of dense bipartite graphs

To estimate co-degrees of $\mathbb{R}(n,n,p)$ we will use the following asymptotic formula by Canfield, Greenhill and McKay [Reference Canfield, Greenhill and McKay1]. We reformulate it slightly for our convenience.

Given two vectors ${\mathbf{d}}_1 = (d_{1,v}, v \in V_1)$ and ${\mathbf{d}}_2 = (d_{2,v}, v \in V_2)$ of positive integers such that $\sum_{v \in V_1} d_{1, v} = \sum_{v \in V_2} d_{2,v}$, let ${{\mathcal{R}}({\mathbf{d}}_1,{\mathbf{d}}_2)}$ be the class of bipartite graphs on $(V_1, V_2)$ with vertex degrees $\deg(v) = d_{i,v}$, $v \in V_i$, $i = 1,2$. Let $|V_i| = n_i$ and write $\bar d_i = {n_i}^{-1}\sum_{v \in V_i} d_{i,v}$, $D_i = \sum_{v \in V_i} (d_{i,v} - \bar d_i)^2$, $p = \bar d_1/n_2 = \bar d_2/n_1$, and $q = 1 - p$.

Theorem 5. ([Reference Canfield, Greenhill and McKay1]) Given any positive constants $a, b, C$ such that $a+b < 1/2$, there exists a constant $\epsilon > 0$ so that the following holds. Consider the set of degree sequences ${\mathbf{d}}_1,{\mathbf{d}}_2$ satisfying

(i) $\max_{v \in V_1}|d_{1,v} - \bar d_1| \le Cn_2^{1/2 + \epsilon}$ and $\max_{v \in V_2} |d_{2,v} - \bar d_2| \le Cn_1^{1/2 + \epsilon}$,

(ii) $\max \{n_1, n_2\} \le C(pq)^2(\min \left\{ n_1, n_2 \right\})^{1 + \epsilon}$,

(iii) $ \frac{(1 - 2p)^2}{4pq} \left( 1 + \frac{5n_1}{6n_2} + \frac{5n_2}{6n_1} \right) \le a \log \max \left\{ n_1, n_2 \right\}$.

If $\min\{n_1, n_2\} \to \infty$, then uniformly for all such ${\mathbf{d}}_1,{\mathbf{d}}_2$

\begin{align} |{\mathcal{R}}({\mathbf{d}}_1,{\mathbf{d}}_2)| &= \binom{n_1n_2}{p n_1n_2}^{-1} \prod_{v \in V_1}\binom {n_2}{d_{1,v}} \prod_{v \in V_2}\binom {n_1}{d_{2,v}} \times \nonumber\\&\quad \times \exp \left[ -\frac{1}{2}\left( 1- \frac{D_1}{pqn_1n_2} \right)\left(1- \frac{D_2}{pqn_1n_2} \right) + O\left((\max\left\{ n_1,n_2 \right\})^{-b}\right) \right],\end{align}

where the constants implicit in the error term may depend on $a,b,C$.

Note that condition (ii) of Theorem 5 implies the corresponding condition in [Reference Canfield, Greenhill and McKay1] after adjusting $\epsilon$. Also, the uniformity of the bound is not explicitly stated in [Reference Canfield, Greenhill and McKay1], but it follows by taking, for given $n_1, n_2$, the sequences ${\mathbf{d}}_1, {\mathbf{d}}_2$ with the worst error and applying the result in [Reference Canfield, Greenhill and McKay1].
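To get a feel for formula (20), one can compare its main term (the right-hand side without the $O(\cdot)$ error term) with exact counts for tiny regular degree sequences. The Python sketch below does this. It is only illustrative: Theorem 5 is an asymptotic statement, and its hypotheses are not meant to be checked at such small sizes. The function names are our own.

```python
import math
from itertools import combinations


def count_bipartite(d1, d2):
    """|R(d1, d2)| by brute force: 0-1 matrices with row sums d1 and column sums d2."""
    n2 = len(d2)

    def rec(i, remaining):
        if i == len(d1):
            return 1 if all(c == 0 for c in remaining) else 0
        total = 0
        for cols in combinations(range(n2), d1[i]):
            if all(remaining[c] > 0 for c in cols):
                for c in cols:
                    remaining[c] -= 1
                total += rec(i + 1, remaining)
                for c in cols:
                    remaining[c] += 1
        return total

    return rec(0, list(d2))


def cgm_main_term(d1, d2):
    """Right-hand side of (20) with the O(.) error term dropped (requires 0 < p < 1)."""
    n1, n2 = len(d1), len(d2)
    N, E = n1 * n2, sum(d1)
    assert E == sum(d2)
    p = E / N  # equals bar d_1 / n_2 = bar d_2 / n_1
    q = 1 - p
    D1 = sum((d - E / n1) ** 2 for d in d1)
    D2 = sum((d - E / n2) ** 2 for d in d2)
    log_value = (
        sum(math.log(math.comb(n2, d)) for d in d1)
        + sum(math.log(math.comb(n1, d)) for d in d2)
        - math.log(math.comb(N, E))  # binom(n1 n2, p n1 n2) = binom(N, E)
        - 0.5 * (1 - D1 / (p * q * N)) * (1 - D2 / (p * q * N))
    )
    return math.exp(log_value)


for n, d in [(4, 2), (5, 2)]:
    exact = count_bipartite([d] * n, [d] * n)
    approx = cgm_main_term([d] * n, [d] * n)
    print(n, d, exact, round(approx, 1), round(approx / exact, 3))
```

For $n_1 = n_2 = n \in \{4, 5\}$ and all degrees equal to 2, the brute-force counts are 90 and 2040. At these sizes the main term of (20) comes out somewhat smaller than the exact count.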

3. A crucial lemma

3.1. The set-up

Recall that $K = {K_{n_1,n_2}}$ has $N = n_1n_2$ edges. Consider a sequence of graphs $\mathbb{G}(t) \subseteq K$ for $t = 0, \dots , N$, where $\mathbb{G}(0)$ is empty and, for $t < N$, $\mathbb{G}(t + 1)$ is obtained from $\mathbb{G}(t)$ by adding an edge $\varepsilon_{t + 1}$ chosen from $K \setminus \mathbb{G}(t)$ uniformly at random, that is, for every graph $G \subseteq K$ of size $t$ and every edge $e \in K \setminus G$,

\begin{equation} \mathbb{P}\left({\varepsilon_{t+1} = e}\,|\,{\mathbb{G}(t) = G}\right) = \frac{1}{N-t}. \tag{21}\end{equation}

Of course, $(\varepsilon_1, \dots, \varepsilon_N)$ is just a uniformly random ordering of the edges of $K$.

Our approach to proving Theorem 2 is to represent the random regular graph $\mathbb{R}(n_1,n_2,p)$ as the outcome of a random process which behaves similarly to $(\mathbb{G}(t))_t$. Recalling that a $p$-biregular graph has $M = pN$ edges, let

be a uniformly random ordering of the edges of $\mathbb{R}(n_1,n_2,p)$. By taking the initial segments, we obtain a sequence of random graphs

For convenience, we shorten

Let us mention here that for a fixed $H \in {{\mathcal{R}}(n_1,n_2,p)}$, conditioning on $\mathbb{R} = H$, the edge set of the random subgraph $\mathbb{R}(t)$ is a uniformly random $t$-element subset of the edge set of $H$. This observation often leads to a hypergeometric distribution and will be used several times in our proofs.

We say that a graph $G$ with $t$ edges is admissible if the family ${\mathcal{R}}_G$ (see definition (15)) is non-empty, or, equivalently,

For an admissible graph $G$ with $t$ edges and any edge $e \in K \setminus G$, let

The conditional space underlying (22) can be described as first extending $G$ uniformly at random to an element of ${\mathcal{R}}_G$ and then randomly permuting the new $M-t$ edges.

The main idea behind the proof of Theorem 2 is that the conditional probabilities in (22) behave similarly to those in (21). Observe that $p_{t+1}(e,\mathbb{R}(t)) = \mathbb{P}\left({\eta_{t+1} = e}\,|\,{\mathbb{R}(t)}\right)$. Given a real number $\chi \ge 0$ and $t \in \{0, \dots, M - 1\}$, we define an $\mathbb{R}(t)$-measurable event

In the crucial lemma below, we are going to show that, for suitably chosen $\gamma_0, \gamma_1, \dots$, a.a.s. the events ${\mathcal{A}}(t,\gamma_t)$ occur simultaneously for all $t = 0,\dots,t_0-1$, where $t_0$ is quite close to $M$. Postponing the choice of $\gamma_t$ and $t_0$, we define the event

\begin{equation} {\mathcal{A}} \,{:\!=}\, \bigcap_{t = 0}^{ t_0 - 1}{\mathcal{A}}(t, \gamma_t).\end{equation}

Intuitively, the event ${\mathcal{A}}$ asserts that up to time $t_0$ the process $\mathbb{R}(t)$ stays ‘almost uniform’, which will enable us to embed ${\mathbb{G}(n_1,n_2,m)}$ into $\mathbb{R}(t_0)$.

To define the time $t_0$, it is convenient to parametrise time by the proportion of edges of $\mathbb{R}$ ‘not yet revealed’ after $t$ steps. For this, we define

Given a constant $C > 0$, we define (recalling the notation in (12)) the ‘final’ value $\tau_0$ of $\tau$ as

\begin{equation} \tau_0 \,{:\!=}\, \begin{cases} \dfrac{3 \cdot 3240^2(C + 4)\log N}{p \hat n}, \quad & p \le 0.49, \\ \\[-9pt] 700(3(C + 4))^{1/4} \left( q^{3/2}\mathbb{I} + \left(\frac{\log N}{\hat n}\right)^{1/4}\right), \quad & p > 0.49. \end{cases}\end{equation}

(Some of the constants appearing here and below are sharp or almost sharp, but others have room to spare as we round them up to the nearest ‘nice’ number.)

Consider the following assumptions on p (which we will later show to follow from the assumptions of Theorem 2):

and

At the end of this subsection we show that these three assumptions imply

so that

is a non-negative integer. Further, for $t = 0, \dots, M - 1$, define

\begin{equation} \gamma_t \,{:\!=}\, 1080 \hat p^2 \mathbb{I} + \begin{cases} 3240 \sqrt{\dfrac{2(C + 3)\log N}{\tau p \hat n}}, \quad & p \le 0.49, \\ \\[-9pt] 25{,}000 \sqrt{\dfrac{(C + 3)\log N}{ \tau^2q^2 \hat n}} , \quad & p > 0.49. \end{cases}\end{equation}

Taking (29) for granted, we now state our crucial lemma, which is proved in Section 4.

Lemma 6. For every constant $C > 0$, if assumptions (26), (27) and (28) hold, then

where the constant implicit in the O-term in (31) may also depend on C.

It remains to show (29). When $p \le 0.49$, inequality (29) is equivalent to (26). For $p > 0.49$, we have $q \le 51\hat p/49$, which together with assumptions (27) and (28) implies that

3.2. Proof of Theorem 2

From Lemma 6 we are going to deduce Theorem 2 using a coupling argument similar to the one employed by Dudek, Frieze, Ruciński and Šileikis [Reference Dudek, Frieze, Ruciński and Šileikis2], but with an extra tweak (inspired by Kim and Vu [Reference Kim and Vu6]): we let the parameters $\gamma_t$ of the Bernoulli random variables depend on $t$, which reduces the error $\gamma$ in (5).

It is easy to check that the assumptions of Lemma 6 follow from the assumptions of Theorem 2. Indeed, (28) coincides with assumption (4), while (26) and (27) follow from the assumption $\gamma \le 1$ (see (5)) for sufficiently large $C^*$.

Our aim is to couple $(\mathbb{G}(t))_t$ and $(\mathbb{R}(t))_t$ on the event ${\mathcal{A}}$ defined in (23). For this we will define a graph process $\mathbb{R}'(t) \,{:\!=}\, \{\eta^{\prime}_1, \dots, \eta^{\prime}_t\}$, $t = 0, \dots, t_0$, so that for every admissible graph $G$ with $t \in [0, M -1]$ edges and every $e \in K \setminus G$

where $p_{t+1}(e, G)$ was defined in (22). Note that $\mathbb{R}'(0)$ is the empty graph. Since the distribution of the process $(\mathbb{R}(t))_t$ is determined by the conditional probabilities (22), in view of (32) the distribution of $\mathbb{R}'(t_0)$ is the same as that of $\mathbb{R}(t_0)$, and therefore we will identify $\mathbb{R}'(t_0)$ with $\mathbb{R}(t_0)$. As the second step, we will show that a.a.s. ${\mathbb{G}(n_1,n_2,m)}$ can be sampled as a subgraph of $\mathbb{R}'(t_0) = \mathbb{R}(t_0)$.

Proceeding with the definition, set $\mathbb{R}'(0)$ to be the empty graph and define the graphs $\mathbb{R}'(t)$, $t = 1, \dots, t_0$, inductively as follows. Fix $t \in [0, t_0-1]$ and suppose that

have already been chosen. Our immediate goal is to select a random pair of edges $\varepsilon_{t+1}$ and $\eta_{t+1}^{\prime}$, distributed according to (21) and (32), respectively, in such a way that the event $\varepsilon_{t+1}\in \mathbb{R}'(t+1)$ is quite likely.

To this end, draw $\varepsilon_{t + 1}$ uniformly at random from $K \setminus G_t$ and, independently, generate a Bernoulli random variable $\xi_{t+1}$ with probability of success $1 - \gamma_t$ (which is in $[0,1]$ by (146)). If the event ${\mathcal{A}}(t, \gamma_t)$ has occurred, that is, if

then draw a random edge $\zeta_{t+1} \in K \setminus R_t$ according to the distribution

where the inequality holds by (33). Observe also that

so $\zeta_{t+1}$ has a properly defined distribution. Finally, fix an arbitrary bijection

between the sets of edges and define

\begin{equation*}\eta^{\prime}_{t+1} = \begin{cases}\varepsilon_{t+1}, &\text{ if } \xi_{t + 1} = 1, \varepsilon_{t+1} \in K \setminus R_t,\\ \\[-9pt] f_{R_t, G_t}(\varepsilon_{t+1}), &\text{ if } \xi_{t + 1} = 1, \varepsilon_{t+1} \in R_t,\\ \\[-9pt] \zeta_{t+1}, &\text{ if } \xi_{t + 1} = 0. \\\end{cases}\end{equation*}

On the other hand, if the event ${\mathcal{A}}(t, \gamma_t)$ has failed, then $\eta^{\prime}_{t+1}$ is sampled directly (without defining $\zeta_{t+1}$) according to the distribution (32). With this definition of $(\mathbb{R}'(t))_{t= 0}^{t_0}$, it is easy to check that (32) indeed holds for $\eta^{\prime}_{t+1}$ defined above. From now on we therefore drop the prime $^{\prime}$ and identify $\mathbb{R}'(t)$ with $\mathbb{R}(t)$, which is a subset of $\mathbb{R}(n_1,n_2,p)$.
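One step of this construction can be computed exactly with rational arithmetic, as in the Python sketch below. Several displays above are not reproduced, so the sketch rests on three assumptions. First, ${\mathcal{A}}(t,\gamma_t)$ amounts to $p_{t+1}(e,R_t) \ge (1-\gamma_t)/(N-t)$ for all $e \in K\setminus R_t$. Second, $\zeta_{t+1}$ has law $(p_{t+1}(e,R_t) - (1-\gamma_t)/(N-t))/\gamma_t$ and is drawn independently of $\varepsilon_{t+1}$. Third, the bijection $f_{R_t,G_t}$ maps $R_t\setminus G_t$ onto $G_t\setminus R_t$. Under these assumptions, the sketch shows that $\eta'_{t+1}$ has exactly the prescribed law, and that $\eta'_{t+1} = \varepsilon_{t+1}$ whenever $\xi_{t+1} = 1$ and $\varepsilon_{t+1} \notin R_t$. The function name and the toy instance are hypothetical.

```python
from fractions import Fraction


def coupled_step(K, G, R, p, gamma):
    """Exact law of eta and P(eta == eps) for one coupled step (a sketch under stated assumptions).

    K     : list of all edges, e.g. pairs (i, j);
    G, R  : sets of t edges, playing the roles of G_t and R_t;
    p     : dict mapping each edge of K \\ R to its target probability p_{t+1}(e, R_t);
    gamma : Fraction in [0, 1] with p[e] >= (1 - gamma) / (N - t) for every e in K \\ R.
    """
    N, t = len(K), len(G)
    base = (1 - gamma) / (N - t)
    assert all(p[e] >= base for e in p), "assumed lower bound on p fails"
    # law of zeta: the mass of p left over after the uniform part, renormalised by gamma
    zeta = {e: (p[e] - base) / gamma for e in p} if gamma > 0 else {}
    # an arbitrary bijection f from R \ G onto G \ R (both have t - |G & R| edges)
    f = dict(zip(sorted(R - G), sorted(G - R)))
    eta_law = {e: Fraction(0) for e in p}
    agree = Fraction(0)
    for eps in K:
        if eps in G:
            continue
        w = Fraction(1, N - t)  # P(eps_{t+1} = eps): uniform on K \ G
        # xi = 1 (probability 1 - gamma): keep eps, or redirect it via f if eps lies in R
        eta = eps if eps not in R else f[eps]
        eta_law[eta] += w * (1 - gamma)
        if eta == eps:
            agree += w * (1 - gamma)
        # xi = 0 (probability gamma): eta = zeta, drawn independently of eps
        for e, z in zeta.items():
            eta_law[e] += w * gamma * z
            if e == eps:
                agree += w * gamma * z
    return eta_law, agree


K = [(i, j) for i in range(2) for j in range(3)]  # a toy K_{2,3}, N = 6
G = {(0, 0), (0, 1)}                               # t = 2
R = {(0, 0), (1, 2)}
p = {(0, 1): Fraction(1, 5), (0, 2): Fraction(1, 5),
     (1, 0): Fraction(3, 10), (1, 1): Fraction(3, 10)}
eta_law, agree = coupled_step(K, G, R, p, Fraction(1, 5))
print(eta_law == p, agree)
```

In this toy instance the computed law of $\eta$ equals the prescribed $p$ exactly, and $\mathbb{P}(\eta = \varepsilon) = 13/20$.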

Most importantly, we conclude that, for $t = 0, \dots, t_0 - 1$,

In view of this, define

and recall that $m=\lceil (1-\gamma)M\rceil$. If $|S| \ge m$, define ${\mathbb{G}(n_1,n_2,m)}$ as, say, the graph formed by the edges indexed by the smallest $m$ elements of $S$ (note that since the vectors $(\xi_i)$ and $(\varepsilon_i)$ are independent, after conditioning on $S$ these $m$ edges are uniformly distributed). If $|S| < m$, then define ${\mathbb{G}(n_1,n_2,m)}$ as, say, the graph with edges $\{\varepsilon_1, \dots, \varepsilon_m\}$. Recalling the definition (23) of the event ${\mathcal{A}}$, by (34) we observe that ${\mathcal{A}}$ implies the inclusion $\left\{ \varepsilon_t\ \, :\, \ t \in S \right\} \subseteq \mathbb{R}(t_0) \subset \mathbb{R}(M) = \mathbb{R}(n_1,n_2,p)$. On the other hand, $|S| \ge m$ implies ${\mathbb{G}(n_1,n_2,m)} \subseteq \left\{ \varepsilon_t\ \, :\, \ t \in S \right\}$, so

Since, by Lemma 6, the event ${\mathcal{A}}$ holds with probability $1 - O(N^{-C})$, to complete the proof of (6) it suffices to show that also

For this we need the following claim, whose technical proof is deferred to Section 8.

Claim 7. We have

where

\begin{equation*} \theta \,{:\!=}\, 1080 \hat p^2 \mathbb{I} + \begin{cases} 6480\sqrt{\dfrac{2(C + 3)\log N}{p\hat n}}, &\quad p \le 0.49, \\ \\[-9pt] 6250\sqrt{\dfrac{(C + 3)\log N}{q^2\hat n } }\log \frac{\hat n}{\log N}, &\quad p > 0.49, \end{cases}\end{equation*}

and, with $\gamma$ as in (5),

Recalling that $t_0 = \lfloor (1 - \tau_0)M \rfloor$ and $m = \lceil(1-\gamma)M\rceil$, we have

\begin{equation} \begin{split} t_0 - \theta M - m &\ge (1-\tau_0)M - 1 - \theta M - (1-\gamma)M - 1 \\[3pt] &= (\gamma - \tau_0 - \theta)M - 2 \overset{\mbox{(36)}}{\ge} \sqrt{2C M \log N}. \end{split} \end{equation}

Since $|S|$ is a sum of independent Bernoulli random variables,

\begin{align*} \mathbb{P}\left(|S| < m\right) &= \mathbb{P}\left(|S| < \operatorname{\mathbb{E}}|S|- (\operatorname{\mathbb{E}}|S| - m) \right) \overset{\mbox{(35)}}{\le} \mathbb{P}\left(|S| < \operatorname{\mathbb{E}}|S| -(t_0 - \theta M - m)\right) \\ \fbox{(18), $\operatorname{\mathbb{E}} |S| \le M$}\quad &\overset{\mbox{}}{\le} \exp \left( -\frac{(t_0 - \theta M - m)^2}{2M} \right) \overset{\mbox{(38)}}{\le} \exp \left( - C \log N \right) = N^{-C},\end{align*}

which, as mentioned above, implies (6).

Finally, we prove (7) by coupling $\mathbb{G}(n_1, n_2, p')$ with $\mathbb{G}(n_1,n_2, m)$ so that the former is a subgraph of the latter with probability $1 - O(N^{-C})$. Denote by $X \,{:\!=}\, e(\mathbb{G}(n_1,n_2,p'))$ the number of edges of $\mathbb{G}(n_1,n_2, p')$. Whenever $X \le m$, choose $X$ edges of $\mathbb{G}(n_1,n_2,m)$ uniformly at random and declare them to be a copy of $\mathbb{G}(n_1,n_2,p')$. Otherwise, sample $\mathbb{G}(n_1,n_2,p')$ (given $X$) independently of $\mathbb{G}(n_1,n_2,m)$. Hence it remains to show that $\mathbb{P}\left(X > m\right) = O(N^{-C})$.
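The coupling just described can be checked exactly on a toy instance in which $K$ is replaced by $N$ labelled edges. The Python sketch below uses hypothetical names and enumerates all outcomes with rational arithmetic. In the case $X > m$, it reads "sample independently" as choosing a uniformly random $X$-subset of all edges. The sketch confirms that the inner graph has exactly the law of $\mathbb{G}(N,p')$, namely probability $p'^{|F|}(1-p')^{N-|F|}$ for each edge set $F$. It also confirms that the inner graph fails to lie inside the $m$-edge graph precisely on the event $X > m$.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def coupled_law(N, m, p_prime):
    """Exact law of the coupled G(N, p') and P(G(N, p') is not contained in G(N, m))."""
    edges = tuple(range(N))
    law, escape = {}, Fraction(0)
    for Gm in combinations(edges, m):  # G(N, m): each m-subset has probability 1 / C(N, m)
        for x in range(N + 1):
            # P(X = x) for X ~ Bin(N, p')
            px = comb(N, x) * p_prime**x * (1 - p_prime) ** (N - x)
            # X <= m: a uniform X-subset of G(N, m); otherwise a uniform X-subset of all edges
            pool = Gm if x <= m else edges
            for F in combinations(pool, x):
                w = Fraction(1, comb(N, m)) * px / comb(len(pool), x)
                key = frozenset(F)
                law[key] = law.get(key, 0) + w
                if not set(F) <= set(Gm):
                    escape += w
    return law, escape


N, m, p_prime = 5, 3, Fraction(1, 3)
law, escape = coupled_law(N, m, p_prime)
binomial_ok = all(
    prob == p_prime ** len(F) * (1 - p_prime) ** (N - len(F)) for F, prob in law.items()
)
tail = sum(comb(N, x) * p_prime**x * (1 - p_prime) ** (N - x) for x in range(m + 1, N + 1))
print(len(law), binomial_ok, escape == tail)
```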

Recalling that $m = \lceil (1-\gamma)pN\rceil$ and $p' = (1 - 2\gamma)p$, we have $m/N \ge (1 - \gamma)p = p' + \gamma p$. Since $X \sim \operatorname{Bin}(N,p')$, Chernoff’s bound (17) implies that

Choosing $C^*$ sufficiently large, the definition (5) implies $\gamma \ge \sqrt{\frac{2C\log N}{p N}}$ with a lot of room (to see this more easily in the case $p > 0.49$, note that (4) implies $\log(\hat n/\log N) \ge 1$, say). So (38) implies $\mathbb{P}\left(X > m\right) \le N^{-C}$.
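The step from Chernoff’s bound to $\mathbb{P}(X>m) \le N^{-C}$ can be spelled out as follows. This assumes that (17) is the upper-tail bound $\mathbb{P}(X \ge \mu + t) \le \exp(-t^2/(2(\mu+t/3)))$ of [Reference Janson, Łuczak and Rucinski5], applied with $\mu = p'N$ and $t = \gamma pN$. The display (38) itself is not reproduced here.

```latex
\begin{align*}
\mathbb{P}\left(X > m\right)
  &\le \mathbb{P}\left(X \ge p'N + \gamma pN\right)
   \le \exp\left(-\frac{(\gamma pN)^2}{2\left(p'N + \gamma pN/3\right)}\right) \\
  &\le \exp\left(-\frac{(\gamma pN)^2}{2pN}\right)
   = \exp\left(-\frac{\gamma^2 pN}{2}\right)
   \le \exp\left(-C\log N\right) = N^{-C}.
\end{align*}
```

The first step uses $m \ge (p' + \gamma p)N$. The third uses $p' + \gamma p/3 = (1 - 5\gamma/3)p \le p$. The last uses $\gamma^2 \ge 2C\log N/(pN)$.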

4. Proof of Lemma 6

4.1. Preparations

Recall our notation $K = {K_{n_1,n_2}}$ and ${\mathcal{R}}_G$ defined in (15). Fix an integer $t \in [0, t_0)$. For a graph $G$ with $t$ edges and $e, f \in K\setminus G$, define

Leaving aside a few technical steps, the essence of the proof of Lemma 6 is to show that the ratio ${|{\mathcal{R}}_{G,e,\neg f}|} / {|{\mathcal{R}}_{G,f,\neg e}|}$ is approximately 1 for any pair of edges $e,f$, where $G$ is a ‘typical’ instance of $\mathbb{R}(t)$.

Recalling our generic definition of switchings from Subsection 2.2, let us treat the graphs in ${\mathcal{R}} \,{:\!=}\, {\mathcal{R}}_{G,e,\neg f}$ and ${\mathcal{R}}' \,{:\!=}\, {\mathcal{R}}_{G,f,\neg e}$ as blue-red edge colourings of the graph $K \setminus G$. Recall that a path or a cycle in $K \setminus G$ is alternating if no two consecutive edges have the same colour.

When the edges $e, f$ are disjoint, we define the switching graph $B = B({\mathcal{R}},{\mathcal{R}}')$ by putting an edge between $H \in {\mathcal{R}}$ and $H' \in {\mathcal{R}}'$ whenever there is an alternating 6-cycle containing $e$ and $f$ in $H$ such that switching the colours in the cycle gives $H'$ (see Figure 2). If $e$ and $f$ share a vertex, we use alternating 4-cycles containing $e$ and $f$ instead of 6-cycles.

Figure 2. Switching between $H$ and $H'$ when $e$ and $f$ are disjoint: solid edges are in $H \setminus G$ (or $H' \setminus G$) and dashed ones in $K \setminus H$ (or $K \setminus H'$).
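The colour switch in the definition of $B$ can be sketched in a few lines of Python. This is an illustrative toy, not code from the paper. The instance ($K = K_{4,4}$, the 2-regular graph `H`, the two-edge graph `G` and the 6-cycle) and the helper names `degrees` and `switch` are hypothetical. The sketch checks three things: the cycle alternates, its blue edges avoid $G$, and swapping the colours preserves every vertex degree. Together these mean the result $H'$ lies in ${\mathcal{R}}'$.

```python
from collections import Counter

# Hypothetical toy instance: K = K_{4,4}; an edge (i, j) joins i in V1 to j in V2.
G = {(0, 0), (1, 3)}                      # the fixed graph G (t = 2 edges)
H = {(0, 0), (0, 2), (1, 1), (1, 3),      # a 2-regular bipartite graph containing G
     (2, 0), (2, 1), (3, 2), (3, 3)}
e, f = (1, 1), (2, 2)                     # e = v1 v2 is blue (in H), f = u1 u2 is red

def degrees(graph):
    """Degree of every vertex; parts are tagged so V1 and V2 labels do not clash."""
    deg = Counter()
    for i, j in graph:
        deg[("V1", i)] += 1
        deg[("V2", j)] += 1
    return deg

def switch(H, G, cycle):
    """Swap colours along an alternating cycle given as a list of edges in cyclic order.

    Blue edges are those of H not in G; red edges are those not in H.
    """
    n = len(cycle)
    # consecutive edges share an endpoint (same V1 vertex or same V2 vertex)
    assert all(cycle[k][0] == cycle[k - 1][0] or cycle[k][1] == cycle[k - 1][1] for k in range(n))
    colours = [edge in H for edge in cycle]
    assert all(colours[k] != colours[(k + 1) % n] for k in range(n))   # alternating
    assert not any(edge in G for edge in cycle if edge in H)            # blue edges avoid G
    return set(H) ^ set(cycle)            # blue edges leave, red edges enter

# Alternating 6-cycle v1 -e- v2 - x - u2 -f- u1 - y - v1 with x = 0 in V1, y = 0 in V2.
cycle = [(1, 1), (0, 1), (0, 2), (2, 2), (2, 0), (1, 0)]
H_prime = switch(H, G, cycle)

assert degrees(H_prime) == degrees(H)     # still 2-regular on both sides
assert G <= H_prime and f in H_prime and e not in H_prime
print(sorted(H_prime))
# [(0, 0), (0, 1), (1, 0), (1, 3), (2, 1), (2, 2), (3, 2), (3, 3)]
```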

It is easy to describe the vertex degrees in $B$. For distinct $u,v\in V_i$, let $\theta_{G,H}(u,v)$ be the number of (alternating) paths $uxv$ such that $ux$ is blue and $xv$ is red. Note that $\theta_{G,H}(u,v) = |\Gamma_{H\setminus G}(u)\cap\Gamma_{K\setminus H}(v)|$. Then, setting $f = u_1u_2$ and $e = v_1v_2$, where $u_i,v_i\in V_i$ for $i = 1,2$, we have

\begin{equation} \deg_B(H) = \prod_{i : u_i \neq v_i} \theta_{G,H}(u_i,v_i), \qquad H \in {\mathcal{R}},\end{equation}

and

\begin{equation} \deg_B(H') = \prod_{i : u_i \neq v_i} \theta_{G,H'}(v_i,u_i), \qquad H' \in {\mathcal{R}}'.\end{equation}

Note that equation (13) is equivalent to

\begin{equation} \frac{|{\mathcal{R}}|}{|{\mathcal{R}}'|} = \frac{\frac1{|{\mathcal{R}}'|}\sum_{H'\in{\mathcal{R}}'}\deg_B(H')}{\frac1{|{\mathcal{R}}|}\sum_{H\in{\mathcal{R}}}\deg_B(H)}.\end{equation}

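The displayed identity is pure double counting. Summing $\deg_B$ over either side of the bipartite graph $B$ counts each edge of $B$ exactly once, so the two sums agree. The minimal Python check below runs on an arbitrary random bipartite graph and confirms the resulting ratio identity with exact fractions. The labels and the edge probability $1/2$ are hypothetical, and the graph is not an actual switching graph.

```python
import random
from fractions import Fraction

# Hypothetical abstract example: R and R' are labelled sets and B is an
# arbitrary random bipartite graph between them (not a real switching graph).
random.seed(1)
R = [f"H{k}" for k in range(7)]
R_prime = [f"H'{k}" for k in range(5)]
B = {(h, hp) for h in R for hp in R_prime if random.random() < 0.5}

deg = {v: 0 for v in R + R_prime}
for h, hp in B:
    deg[h] += 1
    deg[hp] += 1

# Double counting: each side's degree sum counts every edge of B once.
sum_R = sum(deg[h] for h in R)
sum_R_prime = sum(deg[hp] for hp in R_prime)
assert sum_R == len(B) == sum_R_prime

if B:  # the ratio identity needs at least one edge
    avg_R = Fraction(sum_R, len(R))
    avg_R_prime = Fraction(sum_R_prime, len(R_prime))
    assert Fraction(len(R), len(R_prime)) == avg_R_prime / avg_R
    print(Fraction(len(R), len(R_prime)), avg_R_prime / avg_R)
```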

In view of (39) and (40), the denominator of the right-hand side above is the (conditional) expectation of the random variable $\prod_{i : u_i \neq v_i} \theta_{G,\mathbb{R}}(u_i,v_i)$, given that $\mathbb{R}$ contains $G$ and $e$, but not $f$ (and similarly for the numerator).
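For disjoint $e$ and $f$, consider the degree formula $\deg_B(H) = \theta_{G,H}(u_1,v_1)\,\theta_{G,H}(u_2,v_2)$ for $H \in {\mathcal{R}}$, together with its analogue with the arguments of $\theta$ reversed for $H' \in {\mathcal{R}}'$. Both can be checked by brute force on a toy instance. The sketch assumes a hypothetical instance ($K = K_{4,4}$, all degrees 2, a two-edge $G$) and uses hypothetical helpers `regular_graphs` and `theta`. It relies on the following fact. Two graphs with the same degree sequence that differ in exactly six edges differ by a single alternating 6-cycle, and that cycle then contains $e$ and $f$.

```python
from itertools import combinations, product

# Hypothetical toy instance: K = K_{4,4}, every vertex of degree D = 2.
# An edge (i, j) joins i in V1 to j in V2.
N1 = N2 = 4
D = 2
G = frozenset({(0, 0), (1, 3)})
e = (1, 1)                 # e = v1 v2
f = (2, 2)                 # f = u1 u2, disjoint from e
(v1, v2), (u1, u2) = e, f

def regular_graphs():
    """All bipartite graphs on V1 x V2 with every degree equal to D (brute force)."""
    options = list(combinations(range(N2), D))
    for rows in product(options, repeat=N1):
        cols = [sum(j in row for row in rows) for j in range(N2)]
        if all(c == D for c in cols):
            yield frozenset((i, j) for i, row in enumerate(rows) for j in row)

def theta(H, u, v, part):
    """theta_{G,H}(u, v): paths u x v with ux in H but not G (blue), xv not in H (red)."""
    if part == 1:  # u, v in V1; middle vertex x in V2
        return sum((u, x) in H and (u, x) not in G and (v, x) not in H for x in range(N2))
    # u, v in V2; middle vertex x in V1
    return sum((x, u) in H and (x, u) not in G and (x, v) not in H for x in range(N1))

graphs = [H for H in regular_graphs() if G <= H]
R = [H for H in graphs if e in H and f not in H]
R_prime = [H for H in graphs if f in H and e not in H]

# For disjoint e, f: H ~ H' in B iff they differ by one alternating 6-cycle,
# i.e. iff their symmetric difference has exactly 6 edges.
deg_B = {H: sum(len(H ^ Hp) == 6 for Hp in R_prime) for H in R}
deg_B_prime = {Hp: sum(len(H ^ Hp) == 6 for H in R) for Hp in R_prime}

for H in R:
    assert deg_B[H] == theta(H, u1, v1, 1) * theta(H, u2, v2, 2)
for Hp in R_prime:
    assert deg_B_prime[Hp] == theta(Hp, v1, u1, 1) * theta(Hp, v2, u2, 2)
print(len(R), len(R_prime), sum(deg_B.values()))
```

Enumerating all $6^4 = 1296$ row patterns is feasible only for tiny parts. The point is to test the counting argument, not to sample $\mathbb{R}$.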

To get an idea of how large that expectation could be, let us focus on one factor, say $\theta_{G,\mathbb{R}}(u_1,v_1)$, assuming $u_1 \neq v_1$. Clearly, the red degree of $v_1$ equals $|\Gamma_{K\setminus\mathbb{R}}(v_1)| = n_2 - d_1 = qn_2$. Since $|\Gamma_{\mathbb{R}}(v_1)| = pn_2$, viewing $\mathbb{R} \setminus G$ as a $\tau$-dense subgraph of $\mathbb{R}$ (see (24)), we expect the size $|\Gamma_{\mathbb{R}\setminus G}(u_1)|$ of the blue neighbourhood of $u_1$ to be approximately $\tau p n_2$. It is reasonable to expect that, for typical $G$ and $\mathbb{R}$, the red and blue neighbourhoods intersect proportionally, that is, on a set of size about $q \cdot \tau p \cdot n_2 = \tau qd_1$.
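The proportional-intersection heuristic can be made concrete under an idealisation. This is an assumption of the sketch, not a claim of the text. Suppose the blue neighbourhood of $u_1$ is a uniformly random subset of $V_2$ of size $\tau p n_2$, independent of the red neighbourhood of $v_1$, which has size $qn_2$. The intersection size is then hypergeometric with mean $qn_2 \cdot \tau p n_2 / n_2 = \tau q d_1$, using $d_1 = pn_2$. The parameter values below are hypothetical.

```python
import random

# Hypothetical parameters, chosen so that all neighbourhood sizes are integers.
n2, p, tau = 200, 0.3, 0.5
q = 1 - p
d1 = round(p * n2)               # |Gamma_R(v1)| = p n2
red_size = round(q * n2)         # red degree of v1: q n2
blue_size = round(tau * p * n2)  # idealised blue degree of u1: tau p n2

# Assumption of this sketch: the blue neighbourhood is a uniformly random
# blue_size-subset of V2, independent of the red neighbourhood.
exact_mean = red_size * blue_size / n2   # mean of the hypergeometric intersection

random.seed(0)
trials = 5000
total = 0
for _ in range(trials):
    red = set(random.sample(range(n2), red_size))
    blue = random.sample(range(n2), blue_size)
    total += sum(x in red for x in blue)
empirical_mean = total / trials

print(exact_mean, tau * q * d1, round(empirical_mean, 2))
```

The simulation only illustrates why the target value $\tau q d_1$ is natural. Real neighbourhoods in $\mathbb{R}$ are not independent, which is exactly why $\delta$-typicality has to be proved.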

Inspired by this heuristic, we say that, for $\delta > 0$, an admissible graph $G$ with $t$ edges is $\delta$-typical if

where the outer maximum is taken over distinct pairs of edges. (The bound $\tau^2 \delta$ has little intuitive meaning, but it is simple and sufficient for our purposes.)

Lemma 6 is a relatively easy consequence of the upcoming Lemma 8, which states that, for a suitably chosen function $\delta(t)$, it is very likely that the initial segments of $\mathbb{R}$ are $\delta(t)$-typical.

For each $t = 0, \dots, t_0 - 1$, define

\begin{equation} \delta(t) \,{:\!=}\, 120\hat p^2 \mathbb{I} + 360 \sqrt{\frac{(C + 3)\lambda(t)}{6\tau pq\hat n }} ,\end{equation}


where

\begin{equation} \lambda(t) \,{:\!=}\,\begin{cases} 6 \log N, \quad &p \le 0.49, \\[3pt] 6\log N + \dfrac{64\log N}{\tau p q}, \quad &p > 0.49.\end{cases}\end{equation}
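For numerical experiments, $\lambda(t)$ and $\delta(t)$ can be transcribed directly. In this sketch, $\hat p$, $\hat n$, $C$, $\tau$ and the indicator $\mathbb{I}$ are plain inputs, since they are fixed elsewhere in the paper. The sketch also assumes $q = 1 - p$. The function names and sample values are hypothetical.

```python
import math

def lam(N, p, tau):
    """lambda(t) as defined above; t enters only through the arguments passed in."""
    q = 1 - p                      # assumption: q = 1 - p
    log_N = math.log(N)
    if p <= 0.49:
        return 6 * log_N
    return 6 * log_N + 64 * log_N / (tau * p * q)

def delta(N, p, tau, n_hat, p_hat, C, indicator):
    """delta(t) = 120 p_hat^2 I + 360 sqrt((C + 3) lambda(t) / (6 tau p q n_hat)).

    `indicator` stands for the 0/1 quantity written as I in the text.
    """
    q = 1 - p
    fluctuation = 360 * math.sqrt((C + 3) * lam(N, p, tau) / (6 * tau * p * q * n_hat))
    return 120 * p_hat ** 2 * indicator + fluctuation

# Hypothetical parameter values, for illustration only.
for n_hat in (10**8, 10**10, 10**12):
    print(n_hat, delta(N=10**6, p=0.3, tau=0.5, n_hat=n_hat, p_hat=0.3, C=1, indicator=0))
```

With the indicator switched off, $\delta(t)$ decays like $\hat n^{-1/2}$. For $p > 0.49$ the extra term in $\lambda(t)$ enlarges it by a factor depending on $\tau p q$.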

For future reference, note that

Indeed, recalling (30), for $p\le0.49$,