76 results in 94Axx

Convergence rate of entropy-regularized multi-marginal optimal transport costs

- Journal: Canadian Journal of Mathematics, First View
- Published online by Cambridge University Press: 15 March 2024, pp. 1-21
- Article
- Open access

On the Ziv–Merhav theorem beyond Markovianity I

- Journal: Canadian Journal of Mathematics, First View
- Published online by Cambridge University Press: 07 March 2024, pp. 1-25
- Article

Unified bounds for the independence number of graphs

- Journal: Canadian Journal of Mathematics, First View
- Published online by Cambridge University Press: 11 December 2023, pp. 1-21
- Article

t-Design Curves and Mobile Sampling on the Sphere

- Journal: Forum of Mathematics, Sigma / Volume 11 / 2023
- Published online by Cambridge University Press: 23 November 2023, e105
- Article
- Open access

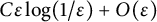

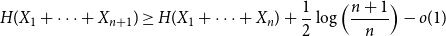

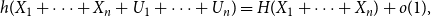

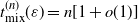

Approximate discrete entropy monotonicity for log-concave sums

- Journal: Combinatorics, Probability and Computing / Volume 33 / Issue 2 / March 2024
- Published online by Cambridge University Press: 13 November 2023, pp. 196-209
- Article

Markov capacity for factor codes with an unambiguous symbol

- Journal: Ergodic Theory and Dynamical Systems, First View
- Published online by Cambridge University Press: 07 November 2023, pp. 1-30
- Article
- Open access

The unified extropy and its versions in classical and Dempster–Shafer theories

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 23 October 2023, pp. 1-12
- Article

Encoding subshifts through sliding block codes

- Journal: Ergodic Theory and Dynamical Systems, First View
- Published online by Cambridge University Press: 03 August 2023, pp. 1-20
- Article
- Open access

Information-theoretic convergence of extreme values to the Gumbel distribution

- Journal: Journal of Applied Probability / Volume 61 / Issue 1 / March 2024
- Published online by Cambridge University Press: 21 June 2023, pp. 244-254
- Print publication: March 2024
- Article

On asymptotic fairness in voting with greedy sampling

- Journal: Advances in Applied Probability / Volume 55 / Issue 3 / September 2023
- Published online by Cambridge University Press: 10 May 2023, pp. 999-1032
- Print publication: September 2023
- Article

Costa’s concavity inequality for dependent variables based on the multivariate Gaussian copula

- Journal: Journal of Applied Probability / Volume 60 / Issue 4 / December 2023
- Published online by Cambridge University Press: 12 April 2023, pp. 1136-1156
- Print publication: December 2023
- Article

A new lifetime distribution by maximizing entropy: properties and applications

- Journal: Probability in the Engineering and Informational Sciences / Volume 38 / Issue 1 / January 2024
- Published online by Cambridge University Press: 28 February 2023, pp. 189-206
- Article
- Open access

Random feedback shift registers and the limit distribution for largest cycle lengths

- Journal: Combinatorics, Probability and Computing / Volume 32 / Issue 4 / July 2023
- Published online by Cambridge University Press: 14 February 2023, pp. 559-593
- Article
- Open access

Constant rank factorisations of smooth maps, with applications to sonar

- Journal: European Journal of Applied Mathematics / Volume 35 / Issue 1 / February 2024
- Published online by Cambridge University Press: 01 December 2022, pp. 1-39
- Article
- Open access

A negative binomial approximation in group testing

- Journal: Probability in the Engineering and Informational Sciences / Volume 37 / Issue 4 / October 2023
- Published online by Cambridge University Press: 28 October 2022, pp. 973-996
- Article

Fast mixing of a randomized shift-register Markov chain

- Journal: Journal of Applied Probability / Volume 60 / Issue 1 / March 2023
- Published online by Cambridge University Press: 02 September 2022, pp. 253-266
- Print publication: March 2023
- Article

Varentropy of doubly truncated random variable

- Journal: Probability in the Engineering and Informational Sciences / Volume 37 / Issue 3 / July 2023
- Published online by Cambridge University Press: 08 July 2022, pp. 852-871
- Article

UNIVERSAL CODING AND PREDICTION ON ERGODIC RANDOM POINTS

- Journal: Bulletin of Symbolic Logic / Volume 28 / Issue 3 / September 2022
- Published online by Cambridge University Press: 02 May 2022, pp. 387-412
- Print publication: September 2022
- Article
- Open access

Variational model with nonstandard growth conditions for restoration of satellite optical images using synthetic aperture radar

- Journal: European Journal of Applied Mathematics / Volume 34 / Issue 1 / February 2023
- Published online by Cambridge University Press: 11 March 2022, pp. 77-105
- Article

On reconsidering entropies and divergences and their cumulative counterparts: Csiszár's, DPD's and Fisher's type cumulative and survival measures

- Journal: Probability in the Engineering and Informational Sciences / Volume 37 / Issue 1 / January 2023
- Published online by Cambridge University Press: 21 February 2022, pp. 294-321
- Article
- Open access