The Principles of Deep Learning Theory

Book contents

- Frontmatter

- Contents

- Preface

- 0 Initialization

- 1 Pretraining

- 2 Neural Networks

- 3 Effective Theory of Deep Linear Networks at Initialization

- 4 RG Flow of Preactivations

- 5 Effective Theory of Preactivations at Initialization

- 6 Bayesian Learning

- 7 Gradient-Based Learning

- 8 RG Flow of the Neural Tangent Kernel

- 9 Effective Theory of the NTK at Initialization

- 10 Kernel Learning

- 11 Representation Learning

- ∞ The End of Training

- ε Epilogue: Model Complexity from the Macroscopic Perspective

- A Information in Deep Learning

- B Residual Learning

- References

- Index

References

Published online by Cambridge University Press: 05 May 2022

- Frontmatter

- Contents

- Preface

- 0 Initialization

- 1 Pretraining

- 2 Neural Networks

- 3 Effective Theory of Deep Linear Networks at Initialization

- 4 RG Flow of Preactivations

- 5 Effective Theory of Preactivations at Initialization

- 6 Bayesian Learning

- 7 Gradient-Based Learning

- 8 RG Flow of the Neural Tangent Kernel

- 9 Effective Theory of the NTK at Initialization

- 10 Kernel Learning

- 11 Representation Learning

- ∞ The End of Training

- ε Epilogue: Model Complexity from the Macroscopic Perspective

- A Information in Deep Learning

- B Residual Learning

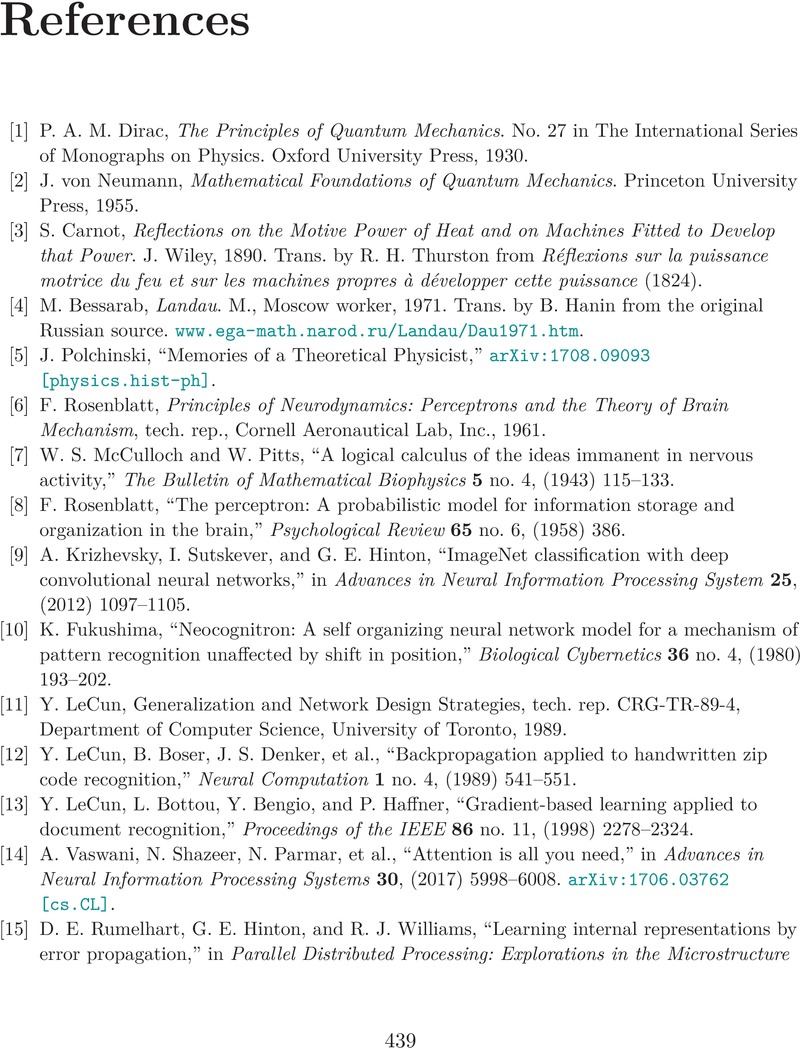

- References

- Index

- References

Summary

- Type: Chapter

- Information: The Principles of Deep Learning Theory: An Effective Theory Approach to Understanding Neural Networks, pp. 439-444. Publisher: Cambridge University Press. Print publication year: 2022.