1 INTRODUCTION

To many ancient astronomers and skywatchers, the night sky was an enormous, material sphere surrounding the Earth. Emerging from the natural philosophy of the pre-Socratics (c. 6th century BCE) was a description of stars attached to, and hence moving daily with, this physical celestial orb. Observing and explaining the motion of planets with respect to the fixed stars, or identifying transient ‘guest stars’, were fundamental steps on the road to modern astronomy, and both relied on methods to document and report celestial positions on a sphere.

Today, a spherical coordinate pair, such as Right Ascension (α) and Declination (δ), is still the most convenient way to catalogue the locations of celestial objects¹. An additional coordinate (distance, redshift, or velocity) can be encoded by mapping objects onto a set of concentric spheres with differing radii.

Unfortunately, spherical coordinates pose a direct challenge when producing static plots, maps, or charts for flat, two-dimensional (2D) images, the predominant medium for analysing, interpreting, documenting, and communicating scientific outcomes. Without a spherical surface to print on and distribute, any 2D projection of the sky requires a compromise in accuracy between the scale, area, and azimuth of plotted positions: not all three properties can be preserved simultaneously (Farmer 1938). A decision must therefore always be made (consciously or not) as to which subset of these properties is most important, and which will be shown in a distorted fashion.

This poses problems for two complementary phases of work: visual exploration, where discoveries are made by looking for relationships, patterns, or anomalies in data; and publication, where the results of an exploration are made available for scientific scrutiny, education, or public communication.

1.1. Visualising all-sky data

The conventional approach to displaying, presenting, or publishing data in spherical coordinates is to map them to a flat, 2D representation. A variety of projection techniques, developed mainly for building better 2D maps of the (almost) spherical Earth, have made their way into astronomy.

Computer-based plotting has vastly simplified the process of creating coordinate grids, or graticules, so that implementing different map projections for a particular data set is straightforward. Calabretta & Greisen (2002) provide a comprehensive overview of map projections for astronomy, including forward and inverse transformations between celestial and Cartesian coordinates.

A number of browser-based tools for navigating all-sky data sets exist. These include solutions that are

• mainly intended for education and outreach, such as Google Sky² (Connolly & Ornduff 2008) and WikiSky³;

• hybrid solutions merging a strong educational focus with direct links to the underlying data and publications, such as the WorldWide Telescope⁴ (Goodman 2012; Fay & Roberts 2016); and

• dedicated astronomical services, in particular Aladin Desktop⁵ and Aladin Lite⁶ (Boch & Fernique 2014).

Using imagery from a variety of multi-wavelength surveys and observations, and offering control over features such as grid lines, constellation maps, and queries for an object of interest (e.g. by name, position, or catalogue number), these solutions provide a powerful means to explore relationships between objects on the sky. However, they suffer from map projection effects on large scales, and are designed for viewing on flat, 2D displays.

The Java-based topcat package (Taylor 2005) provides a comprehensive set of visualisation and analysis tools for catalogue data, including an interactive, outside-looking-in, all-sky representation via the Spherical Polar Plot window. topcat supports a range of common astronomical data formats and integrates with Virtual Observatory services.

Kent (2017) provides a detailed discussion of the use of Blender⁷ and the Google Spatial Media module⁸ to produce navigable spherical panoramas from astrophysical data, including FITS-format images, planetary terrain data, and 3D catalogues. The resulting videos can be viewed interactively via YouTube⁹ using a compatible browser. A similar approach, using panoramic images generated with the splash visualisation tool for smoothed particle hydrodynamics data (Price 2007), was presented by Russell (2017).

1.2. Domes and head-mounted displays

The astronomy education world has had a solution to the problem of spherical coordinate systems for some time: the planetarium dome. Because a dome can dynamically represent 2π steradians of the sky, catalogues of objects can be shown at their correct locations and angular separations, without areal distortion in the coordinate system. For aesthetic purposes, individual objects can be displayed with an exaggerated local scale, appearing much larger on the planetarium sky than we would ever see them unaided.

Despite continuous improvements in digital full-dome projection techniques, few professional astronomers spend their day making discoveries by projecting their data onto a dome (see, for example, Teuben et al. 2001; Abbott et al. 2004; Fluke et al. 2006, for early work). The majority perform their day-to-day data exploration on desktop, notebook, or tablet screens, which subtend a much smaller solid angle.

The emergence of the consumer virtual reality (VR) head-mounted display (HMD) presents a low-cost alternative to these large-scale spaces. HMDs are ideal for providing an immersive, 4π steradian, all-sky representation, where the viewer can look anywhere: forwards, backwards, up, down, and side-to-side. In essence, they provide a virtual, portable planetarium dome.

Two broad classes of commodity HMDs exist:

1. Compute-based: In the first generation, the headset is connected to a computer via a cable, offering higher resolution and real-time graphics. This is often augmented with both basic orientation tracking via accelerometers and absolute position tracking within a limited region. Commercial options include the Oculus Rift¹⁰, HTC Vive¹¹, and Sony’s PlayStation VR¹². Increasingly, the computer, display system, and an outward-facing camera are combined into a single wearable device, offering an experience where the digital world and the real world merge. Often referred to as mixed reality (in contrast to the completely digital environment of VR), such commercial and developer products are marketed by Microsoft (HoloLens¹³) and Google (the standalone Daydream¹⁴).

2. Mobile-based: A smartphone is docked within a simple headset. For experiences more complex than viewing a static image or short animation, it may be necessary to stream content to the phone via Wi-Fi, which limits the frame rates and level of interactivity that can be achieved. Options here include Google Cardboard¹⁵ viewers and Samsung Gear VR¹⁶.

1.3. Our solution

In this paper, we present a workflow for experimentation with commodity VR HMDs, targeted at all-sky catalogue data in spherical coordinates. Our solution uses the free, open source s2plot programming library¹⁷ (Barnes et al. 2006; see also Appendix A) and the A-Frame WebVR framework initiated by MozillaVR¹⁸ (Section 2).

s2plot offers a simple but powerful application programming interface (API) for 3D visualisation that hides the underlying OpenGL¹⁹ function calls. The choice of display mode is a runtime decision, depending on where the code is deployed. s2plot is written in C, and its full functionality is most readily accessed from C/C++ programs.

A-Frame visualisations are generated with html statements, enriched by JavaScript functions, and can be viewed with a variety of commodity HMDs, including products from Oculus, HTC, Samsung, and Google.

The allskyVR system we present in this paper is a free, open source software solution for creating immersive, all-sky VR experiences. The distribution contains all of the relevant source code, scripts, and A-Frame assets. The software is available for download from

with online documentation at

The VR environment supports orientation-based navigation of the space, and a gaze-based menu system linked to a hierarchy of graphical entities. The resulting assets can be transferred to a web server for online accessibility, most readily via a smartphone and Google Cardboard viewer.

2 GENERATING ALL-SKY VIRTUAL REALITY ENVIRONMENTS

In this section, we demonstrate how the allskyVR distribution generates a VR experience for viewing with an HMD. With a wide variety of commodity HMDs available, we choose to focus on a straightforward approach to early adoption.

We use a viewing paradigm that maps the positions of objects around the viewer onto a virtual celestial sphere, with the added constraint that the viewer’s location is fixed at the sphere’s origin. This environment can be explored by looking in different directions, and is suitable for all HMDs that support head-orientation navigation²⁰. More complex, fully navigable experiences (e.g. using a hand-held controller or absolute position tracking) are left to others to investigate. However, we note that allowing the viewer to move away from the coordinate origin introduces new distortions, the very problem we are trying to avoid.

In this work, an all-sky data set comprises: a spherical coordinate pair, (α, δ), in decimal degrees; a radial coordinate, r, in arbitrary units (r = 1 for objects on the celestial sphere); an optional category index, which can be used to group objects with similar properties; and an optional per-object scaling factor. Colours are assigned to objects based on their category.
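A record holding the fields listed above might look like the following C sketch, together with a parser for one comma-separated line of the form ra,dec,r[,category[,scale]]. The struct, the column order, and the defaults (category 0, scale 1) are assumptions for illustration and are not taken from the allskyVR input format.

```c
#include <stdlib.h>

/* One catalogue entry (illustrative layout, not the allskyVR format). */
typedef struct {
    double ra_deg;   /* right ascension, decimal degrees */
    double dec_deg;  /* declination, decimal degrees */
    double r;        /* radial coordinate; 1 on the celestial sphere */
    int category;    /* optional category index (default 0) */
    double scale;    /* optional per-object scale factor (default 1) */
} SkyObject;

/* Parse "ra,dec,r[,category[,scale]]". Returns 1 on success and 0 if a
 * required field is missing or malformed. Optional fields keep their
 * defaults when absent. */
int parse_sky_object(const char *line, SkyObject *obj)
{
    const char *p = line;
    char *end;

    obj->category = 0;
    obj->scale = 1.0;

    obj->ra_deg = strtod(p, &end);
    if (end == p || *end != ',') return 0;
    p = end + 1;

    obj->dec_deg = strtod(p, &end);
    if (end == p || *end != ',') return 0;
    p = end + 1;

    obj->r = strtod(p, &end);
    if (end == p) return 0;
    if (*end != ',') return 1;            /* no optional fields */
    p = end + 1;

    obj->category = (int)strtol(p, &end, 10);
    if (end == p) return 0;
    if (*end != ',') return 1;            /* category only */
    p = end + 1;

    obj->scale = strtod(p, &end);
    return end != p;
}
```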

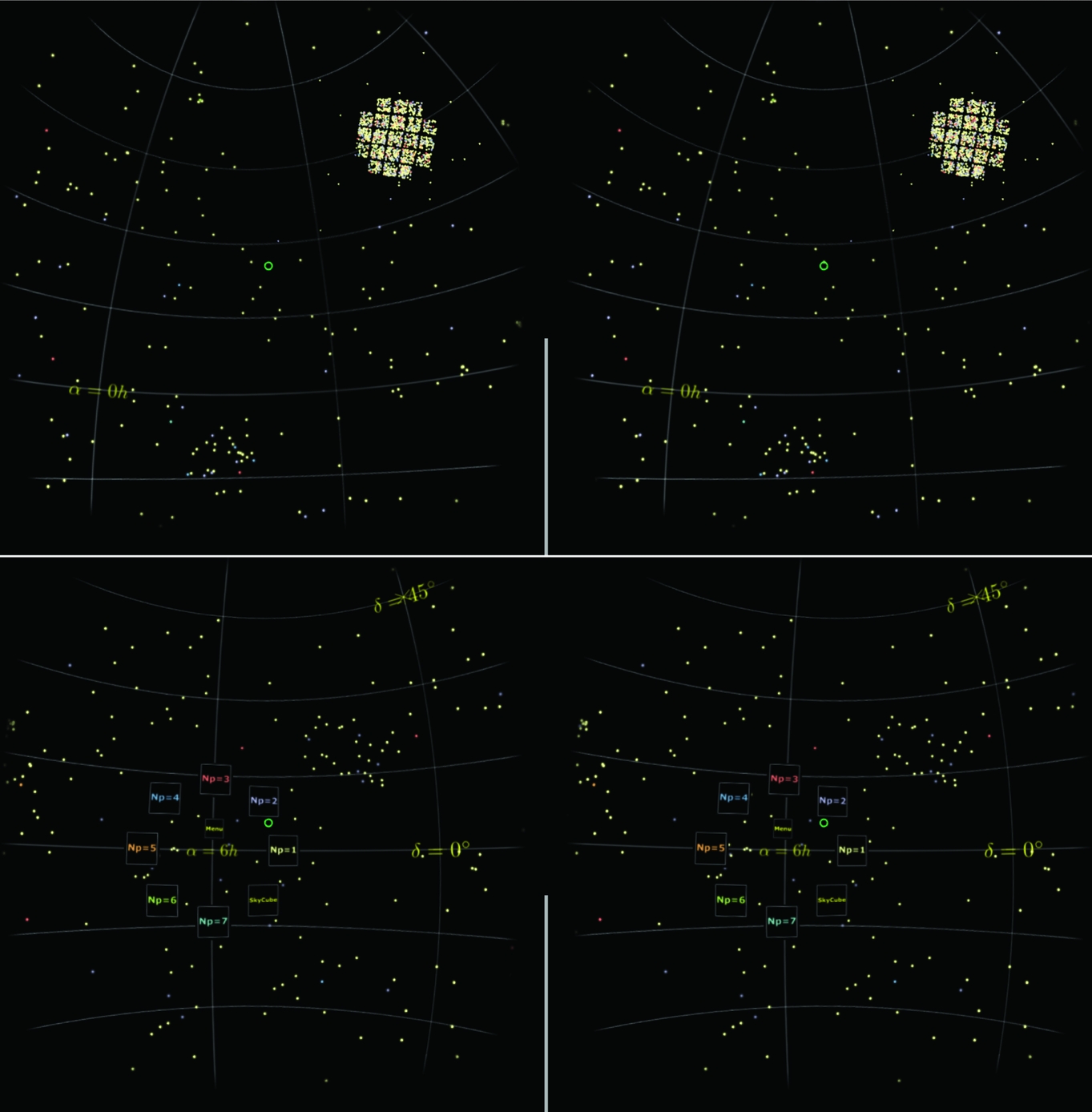

A ready-to-view astronomical example using our approach is included with the allskyVR distribution. Figure 1 contains two screenshots from this example, incorporating all of the features described in this section. The data set is from the Kepler space mission, showing the locations of confirmed exoplanetary systems. This data set is described in more detail in Appendix B.

Figure 1. Screenshots from an immersive, all-sky visualisation of confirmed exoplanetary systems using our s2plot to A-Frame export pathway. The data set from the Kepler space mission is described in more detail in Appendix B. Features in the virtual reality environment include mapping of individual objects to A-Frame entities (Section 2.3), a low-polygon count Sky Cube providing a reference grid (Section 2.4), and a gaze-based menu system (Section 2.5). These two screenshots were captured from a Samsung Galaxy S7 Edge mobile device showing the left and right image views that form the immersive environment when viewed from a compatible head-mounted display. The expanded menu system is visible in the lower panel. The vertical white line in the centre of each image is used to help with correct placement of the mobile device in a head-mounted display.

2.1. allskyVR

allskyVR supports two modes of operation: Quickstart and Customisable.

Quickstart mode uses a subset of the allskyVR distribution along with a set of assets we have generated in advance, thus reducing the barrier to experimentation and adoption. Some customisation is still possible, but only through direct modification of the html files or by supplying user-generated images for the menu system.

A format conversion from input data to A-Frame entities is performed, and a hierarchical framework and gaze-based menu system for selecting individual data categories is built. The output is a collection of html assets, images, and JavaScript, along with a pregenerated coordinate grid. This mode can be used without installing s2plot, and is best suited to catalogues with no more than a few thousand items.

Customisable mode requires installation of the s2plot programming library, third-party dependencies (see Appendix A), and the allskyVR distribution. In return, it offers more complete control over the appearance of graphical features such as category labels or the choice of colour maps.

Once a data file has been imported into a custom s2plot application, the key combination <shift>-v initiates the workflow that creates the immersive experience. The output comprises a complete set of assets: html source, JavaScript, and images.

The customisable mode provides functions to generate coordinate grids of constant right ascension and declination lines, with configurable labels and other annotations. Publication-quality axis labels and annotations are generated using either the FreeType font library²¹ or LaTeX-style mathematical statements.

For both modes, the resulting assets then need to be moved to a web server that can be accessed from a WebGL-compatible browser on a mobile- or compute-based HMD.

We now describe the technical solutions underpinning our approach.

2.2. WebVR and the A-Frame API

We choose to create immersive VR experiences using the WebVR A-Frame API, developed by Mozilla’s VR team. Using html statements, supported by JavaScript functions, VR environments can be built quickly in a text editor. The result can then be viewed in any HMD that supports browser-based VR, provided WebGL²² capabilities have been activated (usually selected in the browser preferences).

A-Frame uses an entity-component architecture: one or more abstract modules (components) can be attached to each element (entity) within a scene. The components can alter the way an entity appears (size, colour, and material), how it moves, or whether it reacts to other entities. The entities and their components are described using html statements with a syntax modelled on the CSS (Cascading Style Sheets) language²³. Standard A-Frame entities exist for a number of geometrical primitives, such as spheres (<a-sphere>), cylinders (<a-cylinder>), and text (<a-text>).

2.3. A-Frame geometrical primitives

In most graphics libraries, the locations of objects can be shown using three main geometrical primitives: points, spheres, or billboards. A billboard comprises a (typically low-resolution) texture mapped onto a rectangular polygon, and often provides the best visual quality. Billboards are continuously reoriented so that they always face the camera, which requires computation on every refresh cycle. The size and colour of all three primitives may be adjustable, along with the polygon resolution of the sphere.

Within an A-Frame scene, points are represented as single pixels, making them hard to see, so this option is not suitable. Additionally, there is no textured billboard in the A-Frame core, although some third-party components have been developed. Since spheres offer more control over appearance, particularly the polygon resolution and radius, we use them as the primary primitive for displaying individual objects in the quickstart mode of allskyVR. As we discuss in Section 2.4.2, it is possible to use other primitives in the customisable version, but at the cost of some level of interactivity.

For finer control of each entity’s appearance, we use the generic, blank <a-entity> element and attach a geometry component that sets the primitive type to sphere. The polygon count for each of the sphere primitives is controlled by the segmentsWidth and segmentsHeight parameters, allowing the user to select the trade-off between aesthetics (higher polygon count preferred) and performance (lower polygon count preferred).
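The segment trade-off can be estimated before building a scene. Assuming the usual latitude-longitude construction of a closed sphere, in which each of the W × H quads (W = segmentsWidth, H = segmentsHeight) is split into two triangles except in the two polar rings, where each quad collapses to a single triangle, a sphere has 2W(H − 1) triangles. The hypothetical helpers below apply that count to a whole catalogue.

```c
/* Triangle count of one closed UV sphere with w segments around the
 * equator and h segments from pole to pole: 2*w*(h - 2) triangles in
 * the interior rows plus w in each polar ring, i.e. 2*w*(h - 1). */
long sphere_triangles(long w, long h)
{
    return 2L * w * (h - 1);
}

/* Total triangles for n spheres sharing the same segment settings. */
long scene_triangles(long n, long w, long h)
{
    return n * sphere_triangles(w, h);
}
```

For example, 18 × 36 segments cost 1 260 triangles per object, against 80 for 8 × 6, so a catalogue of 2 000 objects drops from about 2.5 million to 160 000 triangles.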

Functionality in the allskyVR distribution performs the conversion of each object’s position, colour, and scale factor to an A-Frame entity declaration.

An example of the statements required to build a simple A-Frame scene is shown in Figure 2. Here, four red spheres are placed in front of the viewer in an environment surrounded by a dark blue spherical sky. The statements defining the primitives are enclosed within an <a-scene> hierarchy.

Figure 2. A simple A-Frame scene. Four red spheres are placed in front of the viewer, and the environment is surrounded by a dark blue spherical sky. The polygon count for each of the sphere primitives is controlled by the segmentsWidth and segmentsHeight parameters.
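The statements behind a scene like Figure 2 can be generated programmatically, which is essentially what a converter from catalogue data to A-Frame has to do. The C sketch below writes four red spheres, each a generic <a-entity> with a sphere geometry component and a material colour, plus a dark blue <a-sky>, all inside <a-scene>. The attribute layout, positions, and function names are our own assumptions rather than the output format of allskyVR, and a complete page would also need to load the A-Frame script.

```c
#include <stdio.h>
#include <string.h>

/* Append text to a NUL-terminated buffer of capacity cap.
 * Returns 0 (leaving buf unchanged) if it would not fit. */
static int append_text(char *buf, size_t cap, const char *text)
{
    size_t used = strlen(buf), n = strlen(text);
    if (used + n + 1 > cap) return 0;
    memcpy(buf + used, text, n + 1);   /* copy including the terminator */
    return 1;
}

/* Append one sphere declared as a generic <a-entity> with a geometry
 * component; segmentsWidth/segmentsHeight set its polygon resolution.
 * Returns 0 if the output was truncated. */
static int append_sphere(char *buf, size_t cap, double x, double y, double z,
                         const char *hex, double radius, int seg_w, int seg_h)
{
    size_t used = strlen(buf);
    int n = snprintf(buf + used, cap - used,
        "  <a-entity geometry=\"primitive: sphere; radius: %.3f; "
        "segmentsWidth: %d; segmentsHeight: %d\" "
        "material=\"color: %s\" position=\"%.3f %.3f %.3f\"></a-entity>\n",
        radius, seg_w, seg_h, hex, x, y, z);
    return n >= 0 && (size_t)n < cap - used;
}

/* Build a scene in the spirit of Figure 2: four red spheres in front of
 * the viewer (A-Frame's default camera looks along -z), inside a dark
 * blue sky. Positions, radius, colours and segments are illustrative. */
int build_demo_scene(char *buf, size_t cap)
{
    static const double xs[4] = { -1.5, -0.5, 0.5, 1.5 };
    int ok, i;

    if (cap == 0) return 0;
    buf[0] = '\0';
    ok = append_text(buf, cap, "<a-scene>\n");
    for (i = 0; i < 4 && ok; i++)
        ok = append_sphere(buf, cap, xs[i], 1.6, -4.0, "#FF0000", 0.25, 8, 6);
    return ok && append_text(buf, cap,
        "  <a-sky color=\"#000033\"></a-sky>\n</a-scene>\n");
}
```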

For use within an A-Frame scene, (α, δ, r) coordinates are converted to an (x, y, z) Cartesian triple; the category index is used to place each object into a user-selectable hierarchy; and the scaling factor controls the relative size of the geometrical primitive used to show the object’s location.

2.4. Adding a celestial coordinate system

Mapping coordinates and colours to a collection of low-polygon count spheres is a first step towards an immersive all-sky experience. However, without a visible coordinate grid for reference, it is more difficult to orient oneself within the VR environment.

Generating smooth line segments for constant lines of right ascension and declination can result in an unacceptably high polygon count. Moreover, as there is no line width option, it is necessary to use cylinders if the line thickness needs to be controlled. These effects can have a significant impact on the level of interactivity and responsiveness of the display to head movements. Instead of using line segments or cylinders, we use a pregenerated image of the coordinate system, which is wrapped around the viewer.

2.4.1. Image-based coordinate grids

The A-Frame <a-sky> entity maps an image with a 2:1 aspect ratio in equirectangular coordinates onto a sphere. While there is minimal distortion along the celestial equator, the pixel density changes rapidly across the image. Visually, this approach is unsatisfactory, particularly when looking towards the poles (as occurs, for example, with the YouTube spherical panorama movies of Kent 2017 and Russell 2017). Moreover, the <a-sky> entity is a tessellated sphere, which can increase the polygon count in the scene by a few thousand faces in order to achieve a sufficiently smooth surface.

To create a more complex, immersive all-sky experience for HMDs, without substantially increasing the polygon count, we use a technique introduced to computer graphics in the mid-1980s: perspective projection onto the interior six faces of a cube. We refer to this geometrical element as a Sky Cube.
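Perspective projection onto a cube can be expressed compactly: the face that a direction hits is set by its largest absolute component, and dividing the other two components by that value gives face coordinates in [−1, 1]. Because tan 45° = 1, each face covers exactly a 90° field of view. The C sketch below is a generic illustration using the face names visible in Figure 3; the per-face (u, v) orientation is an assumption, since cube-map conventions differ between graphics APIs.

```c
#include <math.h>

/* Sky Cube faces, named after the posx ... negz textures. */
typedef enum { POSX, NEGX, POSY, NEGY, POSZ, NEGZ } CubeFace;

/* Gnomonic projection of a non-zero direction (x, y, z) onto the cube.
 * The dominant axis picks the face; the remaining two components divided
 * by its magnitude give (u, v) in [-1, 1]. Which component becomes u or
 * v, and with what sign, is an illustrative choice. */
CubeFace cube_face(double x, double y, double z, double *u, double *v)
{
    double ax = fabs(x), ay = fabs(y), az = fabs(z);

    if (ax >= ay && ax >= az) {
        *u = y / ax; *v = z / ax;
        return x >= 0 ? POSX : NEGX;
    }
    if (ay >= az) {
        *u = x / ay; *v = z / ay;
        return y >= 0 ? POSY : NEGY;
    }
    *u = x / az; *v = y / az;
    return z >= 0 ? POSZ : NEGZ;
}
```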

The original approach, as described by Greene (1986), was proposed as a computationally efficient method for environment mapping. It allowed reflection and illumination effects from the environment surrounding a model to be included without the overheads of ray-tracing.

The primary limitation of the Sky Cube approach is that the viewer cannot navigate away from the origin: the projection is only correct when viewed from the same location at which it was generated. However, this is precisely the scenario we are trying to address. As soon as any physical navigation other than head-orientation is used, or objects are presented at different radial values, the resulting mapping again distorts area, angle, or separation.

In principle, the Sky Cube should be placed infinitely far from the viewer. In practice, a suitably large value for the cube dimensions is chosen, so as to be compatible with the limits of the graphics depth buffer.
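The constraint on cube size can be made explicit. For a cube of half-edge L centred on the viewer, the nearest points are the face centres at distance L and the farthest are the corners at L√3; the whole cube must lie between the camera's near and far clipping distances, and L must exceed the largest data radius so that objects are drawn in front of the grid. The check below is a hypothetical helper, and any clipping values passed to it are placeholders rather than A-Frame defaults.

```c
#include <math.h>

/* Returns 1 if a Sky Cube of half-edge `half`, centred on the viewer,
 * lies entirely between the near and far clipping planes and behind all
 * data points (radii up to r_max); returns 0 otherwise. */
int skycube_fits(double half, double r_max, double near_plane, double far_plane)
{
    double corner = half * sqrt(3.0);   /* distance to a cube corner */

    return r_max < half                 /* data sit inside the cube */
        && near_plane < half            /* face centres are not clipped */
        && corner < far_plane;          /* corners are not clipped */
}
```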

2.4.2. Building a Sky Cube with s2plot

We generate a Sky Cube by placing s2plot’s virtual camera at the origin of the celestial sphere. Using s2plot’s dynamic callback system, the view direction and camera up vector are modified on successive refresh cycles to produce six projections, each with a 90° field of view. The camera vectors are set using the ss2sc(...) function, and the camera aperture (field of view) is set using the ss2sca(...) function. A one-frame delay is required in the refresh cycle, as the tga export function ss2wtga(...) saves the previously generated frame.
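The capture sequence can be sketched independently of s2plot. The table lists one possible view direction and up vector per face (our assumption; s2plot's conventions and face order may differ), and capture_schedule simulates the one-frame delay: because the export on frame k saves the image drawn on frame k − 1, seven refresh cycles are needed to save six faces. In a real application, the camera update and export would be the ss2sc(...), ss2sca(...), and ss2wtga(...) calls inside the callback, which are not reproduced here.

```c
/* One Sky Cube view: texture name, view direction, and up vector. */
typedef struct {
    const char *name;   /* texture name, e.g. "posx" */
    double view[3];     /* unit view direction */
    double up[3];       /* camera up vector, perpendicular to view */
} FaceView;

/* Illustrative face table; each face uses a 90 deg field of view. */
static const FaceView FACES[6] = {
    { "posx", {  1,  0,  0 }, { 0, 1,  0 } },
    { "negx", { -1,  0,  0 }, { 0, 1,  0 } },
    { "posy", {  0,  1,  0 }, { 0, 0, -1 } },
    { "negy", {  0, -1,  0 }, { 0, 0,  1 } },
    { "posz", {  0,  0,  1 }, { 0, 1,  0 } },
    { "negz", {  0,  0, -1 }, { 0, 1,  0 } },
};

/* Simulate the refresh cycle: on each frame the export first saves the
 * image drawn on the previous frame, then the camera is set to the next
 * face, which is drawn at the end of the cycle. saved[i] receives the
 * face index written by the i-th save. Returns the frames used. */
int capture_schedule(int saved[6])
{
    int frame, nsaved = 0, drawn = -1;   /* -1: nothing drawn yet */

    for (frame = 0; nsaved < 6; frame++) {
        if (drawn >= 0) saved[nsaved++] = drawn;   /* previous frame */
        if (frame < 6) drawn = frame;              /* set camera to face */
    }
    return frame;
}
```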

To improve visual quality, texture-based axis labels and other annotations are generated using either LaTeX-style statements, with ss2ltt(...), or FreeType fonts, using the ss2ftt(...) function. The s2plot environment variables listed in Appendix A must be set in order for these two texture types to be used.

FreeType textures are preferred for text-only labels, as they provide a great deal of flexibility in the choice of font. LaTeX allows the standard set of mathematical symbols and typesetting commands (e.g. for superscripts and subscripts) to be used.

Due to the wide field of view of each face of the Sky Cube, we need to orient any textures towards the origin. This is achieved as follows:

1. Querying the camera’s up vector, u, and unit view direction vector, v, with ss2qc(...).

2. Calculating a local right vector for a texture centred at x_i via the cross product: r = u × x_i.

3. Calculating a new local up vector, perpendicular to both x_i and r: n = x_i × r.

The vectors r and n are converted to unit vectors and then used to determine the four vertices of a polygon onto which the relevant texture is mapped. Some user adjustment of the label content, font, and size may be required to ensure the best possible appearance of FreeType or LaTeX-based textures.

An advantage of using a Sky Cube is that additional geometrical primitives may be rendered into the six cube views. Along with axis labels, this might include additional annotations, background imagery (not supported in the initial release of allskyVR), or representations of object positions when there are too many items in a catalogue to display in real time.

A case where this might be relevant is when images of specific objects are to be ‘baked’ into the Sky Cube views. Here, for example, a package like Montage (Berriman & Good 2017) could be used to obtain a set of cut-out images, which are then used as individual billboard texture maps within a customised s2plot application. However, as billboards are continuously oriented towards the camera, some care in interpretation is required, as the images may not always preserve their correct spatial relationships on the sky once baked into the Sky Cube.

As this level of user-specific customisation will vary case-by-case, a sample workflow is included in Appendix B. A vanilla Sky Cube is included for the quickstart mode; alternative options can be downloaded from the allskyVR website.

2.5. Interaction

It is usually impractical to type commands on a keyboard while immersed in a virtual environment. Not all commercial HMDs, however, are equipped with a controller for navigation.

Smartphone-style VR systems, such as Google Cardboard, provide limited selection-based interaction through a button integrated into the HMD housing. The initial release of the Samsung Gear VR system provided a touch-pad on the side of the HMD, along with two buttons linked to menu actions.

While A-Frame provides a tracking interface to HTC Vive and Oculus controllers through the vive-controls and oculus-touch-controls components, we do not explore them further at this stage. Instead, for convenience, we provide an easily customisable gaze-based menu system, which allows the user to make hands-free selections of different parts of a scene. Our solution remixes some elements of the A-Frame 360° image gallery example²⁴.

Physical attributes (e.g. mass, magnitude, and morphology) can be expressed as categorical labels, which lend themselves to a hierarchy that can be implemented by nesting A-Frame entities. The menu system allows the visibility of named entities (and their children in the hierarchy) to be toggled. This is achieved with the JavaScript addEventListener(...) function, whose handler changes the visibility of the element through a call to setAttribute(...). The cursor (a green circle, whose default appearance can be modified in the html source) is drawn at the centre of the field of view.

As the viewer’s head orientation changes, the cursor can be brought into alignment with a textured menu element. Maintaining focus on this element causes a submenu to appear: selection from this new menu toggles the visibility of the categories and the Sky Cube. The default locations of the menu entities can be modified, which is useful if the textures obscure important parts of the data set. An optional format file can be specified at runtime, containing the category-based colours and the short text-only labels that will appear in the menu.

Code in the allskyVR distribution creates the A-Frame hierarchy and the textures for the menu system, and integrates the various assets into an output directory containing the html source file, images, and JavaScript.

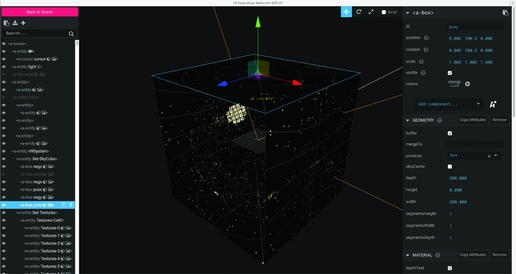

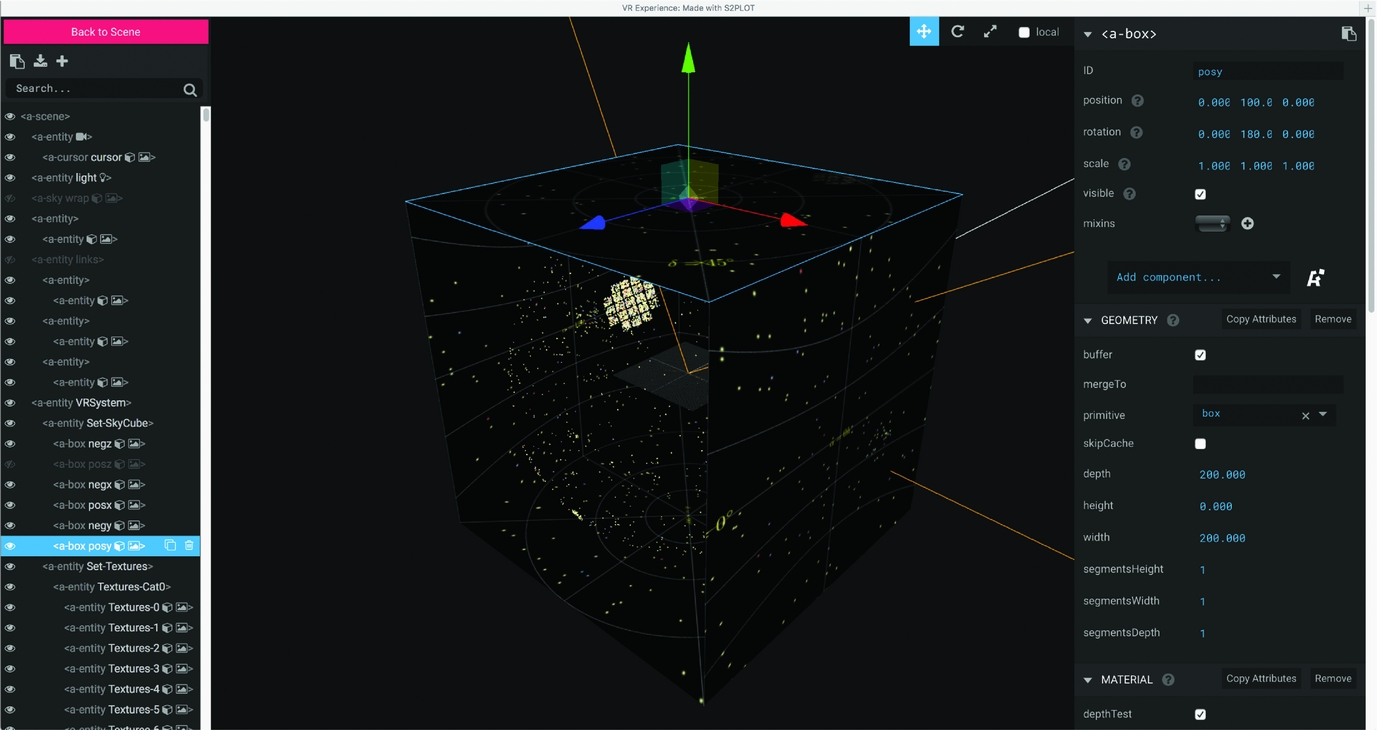

Additional customisation and interaction with the A-Frame entities can be performed using the A-Frame Inspector. The Inspector is accessed by pressing <Ctrl>-<Option>-I, but only when viewing outside of full-screen mode in a browser (Figure 3).

Figure 3. The A-Frame Inspector. This view is accessed by pressing <Ctrl>-<Option>-I when viewing outside of full-screen mode in a browser. The textures forming the Sky Cube are visible, one of which (posy) has been selected. The posz texture has been hidden by selecting the eye symbol from the hierarchy of entities on the left-hand side. This reveals the individual sphere entities inside the Sky Cube. Attributes can be modified for each entity using the options on the right-hand side. The data set from the Kepler space mission is described in more detail in Appendix B.

3 CONCLUDING REMARKS

Standard astronomy software has not yet caught up with the availability of HMDs. This makes experimentation, or wider-scale early adoption, difficult. Without an easy way to look at your all-sky data with an HMD, how can you objectively assess the level of insight or potential for discovery that could arise?

For highly customisable astronomy visualisation solutions, it is probably necessary to learn how to use vendor-specific Software Development Kits (SDKs; e.g. Schaaff et al. 2015), or to create new solutions based on game engines such as Unity²⁵ (Ferrand et al. 2016) or Unreal Engine²⁶.

Unity and Unreal Engine offer a powerful collection of primitives, management of dynamic timeline-based events, and support for HMDs and their corresponding interaction/motion detection solutions. However, they require some familiarity with concepts and approaches from computer generated imagery (CGI) modelling and animation.

The use of a hardware-specific SDK comes with a steep learning curve, and can lock a developer to a single vendor. While this might be appropriate for commercial products (VR games and other entertainment experiences), it is less suitable for a generic solution that can be adopted by researchers. Minor changes in any SDK can have a significant impact on development.

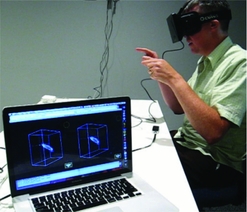

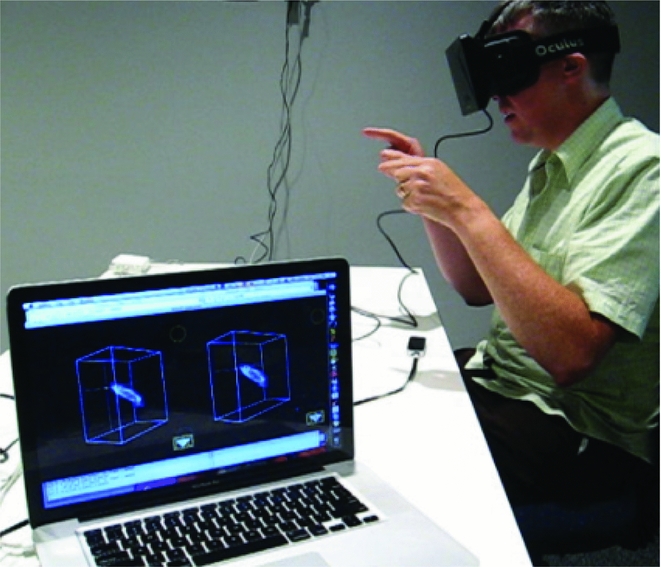

As an example, we performed some simple experiments with an Oculus Rift DK1 headset in 2015. Using s2plot and an incomplete integration with the Oculus API, it was possible to generate a usable virtual experience. In Figure 4, the image pair displayed in the HMD can be seen on the laptop screen in the lower left of the photograph, and the faint (yellow) circle shows the finger position detected by a Leap Motion²⁷ controller. No attempt was made to correct the significant chromatic distortions introduced by the DK1 lenses. Unfortunately, Oculus paused support for the macOS and Linux operating systems soon afterwards, owing to concerns about the minimum level of graphics performance required to power the Rift²⁸. Consequently, we abandoned this approach.

Figure 4. An early experiment with the Oculus Rift DK1 and a Leap Motion controller through a custom s2plot application. Note the image pair rendered on the laptop (lower left), but without any attempt to correct the lens-based distortions.

The allskyVR approach provides a pathway to early adoption of immersive VR for visual exploration and publication. It performs a limited number of tasks related to conversion from a simple input data format to a VR experience, where the only mode of navigation is based on view-direction. When an A-Frame environment is viewed with a compatible HMD, it is possible to explore spatial relationships between objects from a user-centred spherical coordinate system.

We recognise that every astronomer using data in spherical coordinate systems will have different requirements. In our solution, we provide two pathways to the adoption of HMDs: a quickstart solution, where conversion of data values to the relevant html statements can be achieved with any number of approaches, and a fully customisable solution for those who can see the benefit of using the s2plot programming library to gain more control over the visual outcome. As we have favoured functionality over efficiency in allskyVR, we welcome suggestions to improve the run-time performance if this becomes an issue for some users. Our own testing suggests that interactivity is maintained for data sets comprising a few thousand objects rendered as spheres; however, this is device- and screen-resolution-dependent.

In order to provide astronomers with opportunities to explore their all-sky data with an HMD, a simplified, vendor-agnostic process is required. A-Frame shows one simple way to present all-sky catalogues within a virtual environment, which can be viewed with a variety of HMDs. We look forward to seeing how astronomy-focused VR evolves, and where it finds its niche within the complementary phases of visual exploration and publication.

A THE s2plot LIBRARY AND DEPENDENCIES

s2plot (Barnes et al. 2006) is an advanced 3D graphics library built as a layer on top of OpenGL. This open source library is used most effectively within C/C++ programs. Its key features include a rich API and simple support for a variety of standard and advanced displays, including side-by-side, frame-sequential, and interlaced stereoscopic modes, and full or truncated fish-eye projections suitable for digital domes.

s2plot provides a variety of geometrical primitives ranging from points, lines, and spheres to isosurfaces and volume renderings, most of which can be created or displayed with a single function call. Labels or text annotations can be created using LaTeX and FreeType fonts. Geometrical primitives can either be created as static objects (these are created once, and are always displayed) or as dynamic objects (these are regenerated at each screen refresh).

Interactive inspection of 3D data sets is achieved with mouse and keyboard controls, with default behaviour for many key presses (auto-spin the camera, zoom in/out) and user customisation via a callback system.

To access the full functionality presented in this paper requires a working installation of the s2plot distribution (version 3.4 or higher), available from

along with the following additional items:

• The ImageMagick® tools²⁹: the convert utility is used throughout to make conversions between image formats supported by s2plot (tga) and LaTeX (png).

• The allskyVR bundle: comprising the C-language source code, header files, build scripts, and a directory containing pregenerated A-Frame assets.

The s2plot environment variables S2PLOT_IMPATH, S2PLOT_LATEXBIN, and S2PLOT_DVIPNGBIN must all be set as described in the ENVIRONMENT.TXT file included in the s2plot distribution. If FreeType textures are to be used for axis labels and other annotations (see Section 2.4.2), the FreeType libraries need to be installed and the S2FREETYPE environment variable set to yes. It may be necessary to modify the _DEFAULTFONT variables defined in allskyVR.h to better reflect a given system configuration or to manage a specific use case.
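Before running an export, it can help to confirm that these variables are present. The following is a minimal C sketch, not part of s2plot or allskyVR; the function names are hypothetical, and the check only confirms that each variable is set and non-empty, not that the paths it names are valid. ENVIRONMENT.TXT remains the authoritative description.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Variables that must be set, as listed in the text above. */
static const char *const S2PLOT_REQUIRED[] = {
    "S2PLOT_IMPATH", "S2PLOT_LATEXBIN", "S2PLOT_DVIPNGBIN"
};

/* Counts the variables in names[] that are unset or empty, reporting each. */
size_t count_unset(const char *const names[], size_t n)
{
    size_t missing = 0;
    for (size_t i = 0; i < n; i++) {
        const char *value = getenv(names[i]);
        if (value == NULL || value[0] == '\0') {
            fprintf(stderr, "warning: %s is not set\n", names[i]);
            missing++;
        }
    }
    return missing;
}

/* Returns 1 if S2FREETYPE is exactly "yes". */
int freetype_enabled(void)
{
    const char *value = getenv("S2FREETYPE");
    return value != NULL && strcmp(value, "yes") == 0;
}

/* Hypothetical pre-flight check: returns 1 if the environment looks usable.
 * Set want_freetype to 1 when FreeType labels will be used. */
int s2plot_environment_ok(int want_freetype)
{
    size_t n = sizeof S2PLOT_REQUIRED / sizeof S2PLOT_REQUIRED[0];
    int ok = count_unset(S2PLOT_REQUIRED, n) == 0;
    if (want_freetype && !freetype_enabled()) {
        fprintf(stderr, "warning: S2FREETYPE is not set to yes\n");
        ok = 0;
    }
    return ok;
}
```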

For VR export to work correctly, S2PLOT_WIDTH and S2PLOT_HEIGHT must be set to the same value. It is recommended that the largest possible square window is used for the best graphics quality.
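The square-window requirement can be checked in the same spirit. This sketch uses hypothetical helper names (it is not an allskyVR function) and accepts the two values only when both parse as the same positive integer:

```c
#include <stdio.h>
#include <stdlib.h>

/* Returns 1 if both strings parse to the same positive integer. */
int window_is_square(const char *width, const char *height)
{
    if (width == NULL || height == NULL) {
        return 0;                        /* variable not set */
    }
    char *end_w;
    char *end_h;
    long w = strtol(width, &end_w, 10);
    long h = strtol(height, &end_h, 10);
    if (*end_w != '\0' || *end_h != '\0') {
        return 0;                        /* non-numeric characters present */
    }
    return w > 0 && w == h;
}

/* Reads S2PLOT_WIDTH and S2PLOT_HEIGHT from the environment. */
int vr_window_ok(void)
{
    const char *w = getenv("S2PLOT_WIDTH");
    const char *h = getenv("S2PLOT_HEIGHT");
    if (!window_is_square(w, h)) {
        fprintf(stderr, "S2PLOT_WIDTH and S2PLOT_HEIGHT must be equal\n");
        return 0;
    }
    return 1;
}
```

An empty or unset variable is treated as a failure, as is a value such as 800px.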

B STAR SYSTEMS WITH CONFIRMED EXOPLANETS

In this Appendix, we provide a step-by-step guide to creating an immersive VR environment using allskyVR. As an example, we populate the celestial sphere with the locations of confirmed exoplanet systems.

The first step is to gather the data and convert it to an appropriate format. We access the NASA Exoplanet ArchiveFootnote 30 and view the table of confirmed planets. After selecting the α, δ, and number of planets (N_p) columns, we download the data set and save it in comma-separated values (csv) format. Some editing is required to remove duplicate items and the columns of sexagesimal-formatted coordinates. A new data column is created to hold the r_z coordinates, with all values set to 1 so that every system is placed on the same sphere.
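The paper does not say how the duplicates were removed, and a spreadsheet works equally well. As one possibility, the sketch below assumes that a multi-planet system appears on several rows of the confirmed-planets table with identical coordinates, and collapses rows with exactly matching (α, δ). The struct layout and function names are hypothetical.

```c
#include <stdlib.h>

/* One row of the simplified table (hypothetical layout). */
struct system_row {
    double ra_deg;   /* alpha, decimal degrees */
    double dec_deg;  /* delta, decimal degrees */
    int n_planets;   /* N_p */
};

/* Orders rows by (ra, dec) so that duplicates become adjacent. */
static int compare_rows(const void *pa, const void *pb)
{
    const struct system_row *a = pa;
    const struct system_row *b = pb;
    if (a->ra_deg != b->ra_deg) {
        return a->ra_deg < b->ra_deg ? -1 : 1;
    }
    if (a->dec_deg != b->dec_deg) {
        return a->dec_deg < b->dec_deg ? -1 : 1;
    }
    return 0;
}

/* Sorts rows in place and removes exact (ra, dec) duplicates, keeping one
 * row per position. Returns the number of unique rows now at the front. */
size_t dedupe_systems(struct system_row *rows, size_t n)
{
    if (n == 0) {
        return 0;
    }
    qsort(rows, n, sizeof rows[0], compare_rows);
    size_t out = 1;
    for (size_t i = 1; i < n; i++) {
        if (compare_rows(&rows[out - 1], &rows[i]) != 0) {
            rows[out++] = rows[i];
        }
    }
    return out;
}
```

Exact floating-point comparison only merges rows copied from the same host entry. If the coordinates for one host differ slightly between rows, matching on a host-name column would be more robust.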

The Kepler spacecraft’s primary mission footprint, which contributes a substantial number of exoplanets to the data set, requires some additional attention. A further column is created to contain the relative scaling sizes, S_g, for the geometrical primitives. Exoplanet systems with celestial coordinates in the range 18h ⩽ α ⩽ 22h (270° ⩽ α ⩽ 330° in decimal degrees) and +30° ⩽ δ ⩽ +60° are given a scale value of 1, while all other systems are given a scale value of 5. This makes isolated systems easier to see, while limiting over-crowding in the Kepler field. For other data sets, additional fine-tuning may be required to produce the most effective visualisation.
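The scale rule can be written as a small function. This is a sketch rather than the authors' procedure (the text does not say how the column was filled), and the function name is hypothetical. It assumes α is already in decimal degrees, so the 18h to 22h limits become 270° to 330° at 15° per hour of right ascension.

```c
/* Kepler footprint as defined in this appendix, in decimal degrees. */
#define HOURS_TO_DEG       15.0
#define KEPLER_RA_MIN_DEG  (18.0 * HOURS_TO_DEG)   /* 270 degrees */
#define KEPLER_RA_MAX_DEG  (22.0 * HOURS_TO_DEG)   /* 330 degrees */
#define KEPLER_DEC_MIN_DEG 30.0
#define KEPLER_DEC_MAX_DEG 60.0

#define SCALE_CROWDED  1.0   /* S_g inside the Kepler footprint */
#define SCALE_ISOLATED 5.0   /* S_g everywhere else */

/* Returns the relative glyph scale S_g for a system at (ra_deg, dec_deg).
 * The limits are inclusive, matching the inequalities in the text. */
double glyph_scale(double ra_deg, double dec_deg)
{
    int in_kepler_field =
        ra_deg >= KEPLER_RA_MIN_DEG && ra_deg <= KEPLER_RA_MAX_DEG &&
        dec_deg >= KEPLER_DEC_MIN_DEG && dec_deg <= KEPLER_DEC_MAX_DEG;
    return in_kepler_field ? SCALE_CROWDED : SCALE_ISOLATED;
}
```

Keeping the limits and scale values as named constants makes the fine-tuning mentioned above a matter of editing a few numbers.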

This modified data set is saved as a csv-format file (exoplanet.csv), with the column order: α (decimal degrees), δ (decimal degrees), r_z, N_p, S_g.
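A minimal reader for one row of exoplanet.csv, in the column order above, can be used to sanity-check the file before loading it. This is not the allskyVR reader. The struct and function names are hypothetical, and treating N_p as the category value is an assumption.

```c
#include <stdio.h>

/* One parsed row of exoplanet.csv, in the documented column order. */
struct exo_row {
    double ra_deg;   /* alpha, decimal degrees */
    double dec_deg;  /* delta, decimal degrees */
    double r_z;      /* radial coordinate (1 for every row here) */
    int n_planets;   /* N_p, assumed to act as the category */
    double scale;    /* S_g */
};

/* Parses "alpha,delta,r_z,N_p,S_g". Returns 1 on success, 0 if a field is
 * missing or a value falls outside its expected range. */
int parse_exo_row(const char *line, struct exo_row *row)
{
    int n = sscanf(line, "%lf,%lf,%lf,%d,%lf",
                   &row->ra_deg, &row->dec_deg, &row->r_z,
                   &row->n_planets, &row->scale);
    if (n != 5) {
        return 0;                             /* too few fields */
    }
    if (row->ra_deg < 0.0 || row->ra_deg >= 360.0) {
        return 0;                             /* alpha out of range */
    }
    if (row->dec_deg < -90.0 || row->dec_deg > 90.0) {
        return 0;                             /* delta out of range */
    }
    return row->n_planets >= 1 && row->r_z > 0.0 && row->scale > 0.0;
}
```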

User control of colours and tags is provided through a text file, format.txt, requiring one line in the file per category. The format is

CAT=R,G,B,Label

where the red, green, and blue colour components, [R,G,B], are integer values in the range [0, 255], and Label is a short, text-only label to appear in the A-Frame menu. Spaces must be avoided in the label, as must symbols such as $ and _, which have particular meanings in LaTeX formatting.
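The sketch below parses and validates one line of format.txt. It assumes that CAT is an integer category value (for this example, matching the N_p column) and that labels are at most 31 characters. Both are assumptions, as is rejecting LaTeX special characters beyond the $ and _ named above. It is not the parser used by allskyVR.

```c
#include <stdio.h>
#include <string.h>

/* One line of format.txt: CAT=R,G,B,Label */
struct category_style {
    int cat;          /* category value (assumed integer) */
    int r, g, b;      /* colour components, 0-255 */
    char label[32];   /* menu label (hypothetical length limit) */
};

/* $ and _ are named in the text; the other LaTeX specials are a
 * conservative assumption. */
static const char LATEX_SPECIALS[] = "$_#%&{}~^\\";

static int in_byte_range(int v) { return v >= 0 && v <= 255; }

/* Parses and validates one line. Returns 1 on success, 0 otherwise. */
int parse_format_line(const char *line, struct category_style *style)
{
    int consumed = 0;
    int n = sscanf(line, "%d=%d,%d,%d,%31s%n", &style->cat,
                   &style->r, &style->g, &style->b, style->label, &consumed);
    if (n != 5) {
        return 0;                       /* malformed line */
    }
    /* Anything after the label (a space, an over-long label) is an error. */
    char next = line[consumed];
    if (next != '\0' && next != '\n' && next != '\r') {
        return 0;
    }
    if (!in_byte_range(style->r) || !in_byte_range(style->g) ||
        !in_byte_range(style->b)) {
        return 0;                       /* colour component out of range */
    }
    return strpbrk(style->label, LATEX_SPECIALS) == NULL;
}
```

For example, the line 2=255,128,0,Two is accepted, whereas 2=255,128,0,Two planets is rejected because of the space.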

Note that the category labels are only used in the fully customisable mode (Section 2.4.2), where the relevant textures are generated on demand. For the quickstart mode, default textures are provided from an asset directory. These can be replaced by the user as required.

After setting the S2PLOT_WIDTH and S2PLOT_HEIGHT environment variables to 800 (pixels), we launch the s2plot application with the following command-line arguments:

templateSpherical -i exoplanet.csv -f format.txt -o exosys

On pressing <shift>-v, the VR experience is generated, with all assets moved to the directory VR-exosys. The exoplanetary system data set is now ready to be deployed on a suitable web server for viewing with a compatible HMD. The two panels in Figure 1 show screenshots captured from a Samsung Galaxy S7 Edge mobile device. The expanded menu, including the category labels, is visible in the lower panel.

B1 Modifying the Sky Cube textures

Suppose the input catalogue included a few thousand additional planetary candidates, reducing the responsiveness of the immersive experience, and that it was sufficient to interact with only a subset of categories. The following workflow lets the user choose which objects have their positions ‘baked’ into the Sky Cube textures.

1. Create two input data files, one which contains only the data items that will be ‘baked’ into the Sky Cube (exoplanet-bake.csv), and one containing only the data items for interactive exploration (exoplanet-interact.csv). Visibility of the latter will be controllable from the HMD using the gaze-based menu system, so use of relevant categories to further subset the data is encouraged.

2. Execute the s2plot application using the smaller of the two data sets, and complete the export step:

templateSpherical -i exoplanet-interact.csv -f format.txt -o exosys

This will create the relevant HTML, JavaScript, and A-Frame files for interactive exploration.

3. Make a back-up (in another location) of the six Sky Cube textures: neg?.png and pos?.png.

4. Execute the s2plot application using the larger of the two data sets, choosing a different export directory name, to avoid over-writing the first export:

templateSpherical -i exoplanet-bake.csv -f format.txt -o exosys-bake

This will create the Sky Cube textures with the additional data set items included.

5. Copy the new set of six Sky Cube textures from the VR-exosys-bake directory to the VR-exosys directory, replacing the original textures.
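Step 1 leaves the choice of which items to bake to the user. One possible approach, sketched below on the assumption that the fourth column (N_p) is the category, routes each row of exoplanet.csv to the interactive or baked file according to a user-supplied list of interactive categories. The function names are hypothetical, and lines that do not parse (such as a header) are skipped.

```c
#include <stdio.h>

/* Returns 1 if category cat appears in interactive[0..n-1], 0 otherwise. */
int is_interactive(int cat, const int interactive[], size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (interactive[i] == cat) {
            return 1;
        }
    }
    return 0;
}

/* Copies each csv line from in to interact_out or bake_out, using the
 * fourth column as the category. Unparseable lines are skipped.
 * Returns the number of lines written to interact_out. */
size_t split_catalogue(FILE *in, FILE *interact_out, FILE *bake_out,
                       const int interactive[], size_t n)
{
    char line[512];
    size_t n_interact = 0;
    while (fgets(line, sizeof line, in) != NULL) {
        double ra, dec, rz;
        int cat;
        if (sscanf(line, "%lf,%lf,%lf,%d", &ra, &dec, &rz, &cat) != 4) {
            continue;                  /* header or malformed line */
        }
        if (is_interactive(cat, interactive, n)) {
            fputs(line, interact_out);
            n_interact++;
        } else {
            fputs(line, bake_out);
        }
    }
    return n_interact;
}
```

Opening exoplanet.csv for reading and exoplanet-interact.csv and exoplanet-bake.csv for writing, then calling split_catalogue once, produces the two input files used in steps 2 and 4.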

The result is an immersive environment with a more detailed Sky Cube, in which the interactive subset of objects can still have its visibility toggled through the menu system.