1. Introduction

A $q$-ary $t$-error correcting code of length $n$ is a subset of $[q]^n$ whose elements pairwise differ in at least $2t+1$ coordinates. Error correcting codes play a central role in coding theory, as they allow us to correct errors in at most $t$ coordinates when sending codewords over a noisy channel. In the course of developing the container method, Sapozhenko in [Reference Sapozhenko10] posed the following natural question: how many error correcting codes (with given parameters) are there?

Given a $t$-error correcting code $\mathcal{C} \subseteq [q]^n$ of maximum size, any subset of $\mathcal{C}$ is also a $t$-error correcting code. Consequently, the number of $q$-ary $t$-error correcting codes of length $n$ is at least $2^{ \lvert \mathcal{C} \rvert }$. It is natural to suspect that the total number of $t$-error correcting codes is not much larger, provided that this maximum size code $\mathcal{C}$ is not too small.
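To make the counting question concrete, the following brute-force sketch enumerates every $t$-error correcting code in $[q]^n$ for very small parameters. It is only an illustration: the function names `hamming_distance` and `count_codes` are ours and do not come from the paper, symbols are represented as $0, \dots, q-1$, and the empty code is included in the count.

```python
from itertools import combinations, product


def hamming_distance(x, y):
    """Number of coordinates on which the words x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def count_codes(q, n, t):
    """Count all t-error correcting codes in [q]^n, including the empty code.

    A code is a set of words that pairwise differ in at least 2t+1
    coordinates. The search is exponential, so this is for tiny q, n only.
    """
    words = list(product(range(q), repeat=n))
    m = len(words)
    # conflict[i] is a bitmask of the words that cannot coexist with word i.
    conflict = [0] * m
    for i, j in combinations(range(m), 2):
        if hamming_distance(words[i], words[j]) <= 2 * t:
            conflict[i] |= 1 << j
            conflict[j] |= 1 << i

    def extend(start, banned):
        # Count the current code plus every way to extend it using words
        # with index >= start, so each code is generated exactly once.
        total = 1
        for i in range(start, m):
            if not (banned >> i) & 1:
                total += extend(i + 1, banned | conflict[i])
        return total

    return extend(0, 0)
```

For $q = 2$, $n = 3$, $t = 1$ the codes are the empty code, the 8 one-element codes and the 4 antipodal pairs, so `count_codes(2, 3, 1)` is 13, compared with the lower bound $2^{\lvert \mathcal{C} \rvert} = 4$ obtained from a maximum code such as $\{000, 111\}$.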

A fundamental problem in coding theory is determining the maximum size of an error correcting code with given parameters (e.g., see [[Reference van Lint12], Chapter 5] and [[Reference MacWilliams and Sloane7], Chapter 17] for classic treatments). There is an easy geometric upper bound on the size of a $q$-ary $t$-error correcting code. We use $d(x,y)$ to denote the Hamming distance between $x$ and $y \in [q]^n$, defined as the number of coordinates on which $x$ and $y$ differ. Let $B_q(x, r) \;:\!=\; \{ y \in [q]^n \;:\; d(x,y) \le r \}$ be the Hamming ball of radius $r$ centred at $x \in [q]^n$ with associated cardinality

\begin{equation*} V_q(n,r) \;:\!=\; \lvert B_q(x,r) \rvert = \sum _{i=0}^{r} \binom{n}{i} (q-1)^i. \end{equation*}

Any $t$-error correcting code in $[q]^n$ has size at most

\begin{equation*} H_q(n,t) \;:\!=\; \frac{q^n}{V_q(n,t)}. \end{equation*}

This simple upper bound is known as the Hamming bound (also known as the sphere packing bound). Codes that attain equality for this upper bound are called perfect codes; the only non-trivial perfect codes are Hamming codes and Golay codes (see [Reference van Lint11]). Except for some special parameters such as these, there is a large gap between the best known upper and lower bounds.
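As a quick numerical illustration of the Hamming bound, the sketch below evaluates the ball volume and the bound exactly, assuming the standard formulas $V_q(n,r) = \sum_{i=0}^{r} \binom{n}{i}(q-1)^i$ and $H_q(n,t) = q^n / V_q(n,t)$. The function names `ball_volume` and `hamming_bound` are ours, chosen for illustration.

```python
from fractions import Fraction
from math import comb


def ball_volume(q, n, r):
    """V_q(n, r): number of words of [q]^n within Hamming distance r of a fixed word."""
    return sum(comb(n, i) * (q - 1) ** i for i in range(r + 1))


def hamming_bound(q, n, t):
    """H_q(n, t) = q^n / V_q(n, t), kept as an exact rational number."""
    return Fraction(q ** n, ball_volume(q, n, t))
```

For the parameters of the perfect codes mentioned above the bound is an integer: `hamming_bound(2, 7, 1)` is 16 (binary Hamming code of length 7), `hamming_bound(2, 23, 3)` is 4096 (binary Golay code) and `hamming_bound(3, 11, 2)` is 729 (ternary Golay code). For most other parameters, such as `hamming_bound(2, 5, 1)`, which equals $16/3$, it is not an integer.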

The Hamming bound can be significantly improved when

![]() $t \ge c \sqrt{n}$

(see [Reference Delsarte3, Reference Robert, Eugene, Howard and Lloyd8, Reference van Lint12]). Most studies on bounds for codes focus on the linear distance case, that is,

$t \ge c \sqrt{n}$

(see [Reference Delsarte3, Reference Robert, Eugene, Howard and Lloyd8, Reference van Lint12]). Most studies on bounds for codes focus on the linear distance case, that is,

![]() $d = \lfloor \delta n \rfloor$

for some constant

$d = \lfloor \delta n \rfloor$

for some constant

![]() $0 \lt \delta \lt 1$

. Curiously, even after consulting with several experts on the subject, we are not aware of any upper bound better than the Hamming bound when

$0 \lt \delta \lt 1$

. Curiously, even after consulting with several experts on the subject, we are not aware of any upper bound better than the Hamming bound when

![]() $t \lt c\sqrt{n}$

. An important family of constructions is BCH codes, which can achieve sizes about

$t \lt c\sqrt{n}$

. An important family of constructions is BCH codes, which can achieve sizes about

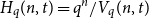

![]() $H_q(n, t)/t!$

for

$H_q(n, t)/t!$

for

![]() $q = 2, t = O(\sqrt{n})$

(see [[Reference MacWilliams and Sloane7], Corollary 9.8]); this is within a subexponential but superpolynomial factor of the Hamming bound. As we are primarily interested in the regime

$q = 2, t = O(\sqrt{n})$

(see [[Reference MacWilliams and Sloane7], Corollary 9.8]); this is within a subexponential but superpolynomial factor of the Hamming bound. As we are primarily interested in the regime

![]() $t \le c\sqrt{n}$

in this paper, we view the Hamming bound

$t \le c\sqrt{n}$

in this paper, we view the Hamming bound

![]() $H_q(n,t)$

as a proxy for the size of the code.

$H_q(n,t)$

as a proxy for the size of the code.

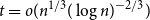

Balogh, Treglown and Wagner [Reference Balogh, Treglown and Wagner2] showed that as long as $t = o(n^{1/3} (\log n)^{-2/3})$, the number of binary $t$-error correcting codes of length $n$ is at most $2^{(1+o(1)) H_2 (n,t)}$ (their paper only considered the $q=2$ case, though the situation for larger fixed $q$ is similar). They conjectured that the same upper bound should also hold for a larger range of $t$. We prove a stronger version of this conjecture.

Theorem 1.1.

Fix integer

![]() $q \ge 2$

. Below

$q \ge 2$

. Below

![]() $t$

is allowed to depend on

$t$

is allowed to depend on

![]() $n$

.

$n$

.

-

(a) For

$0 \lt t \le 10\sqrt{n}$

, the number of

$0 \lt t \le 10\sqrt{n}$

, the number of

$q$

-ary

$q$

-ary

$t$

-error correcting codes of length

$t$

-error correcting codes of length

$n$

is at most

$n$

is at most

$2^{(1+o(1)) H_q (n,t)}$

.

$2^{(1+o(1)) H_q (n,t)}$

. -

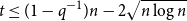

(b) For all

$10\sqrt{n} \lt t \leq (1 - 1/q) n - 2\sqrt{n \log n}$

, the number of

$10\sqrt{n} \lt t \leq (1 - 1/q) n - 2\sqrt{n \log n}$

, the number of

$q$

-ary

$q$

-ary

$t$

-error correcting codes of length

$t$

-error correcting codes of length

$n$

is

$n$

is

$2^{o(H_q(n,t))}$

.

$2^{o(H_q(n,t))}$

. -

(c) There exists some constant

$c_q \gt 0$

such that for all

$c_q \gt 0$

such that for all

$t \gt (1-1/q) n - c_q \sqrt{n \log n}$

, one has

$t \gt (1-1/q) n - c_q \sqrt{n \log n}$

, one has

$H_q(n,t) = O(n^{1/10})$

. (Since there are

$H_q(n,t) = O(n^{1/10})$

. (Since there are

$q^n$

one-element codes, the number of codes is not

$q^n$

one-element codes, the number of codes is not

$2^{O(H_q(n,t))}$

whenever

$2^{O(H_q(n,t))}$

whenever

$H_q(n, t) = O(n^{1/10})$

.)

$H_q(n, t) = O(n^{1/10})$

.)

The most interesting part is (a), where $t \le 10\sqrt{n}$; here, our proof builds on the container argument of [Reference Balogh, Treglown and Wagner2]. The key idea in [Reference Balogh, Treglown and Wagner2] was analysing the container algorithm in two stages depending on the number of remaining vertices. Our work introduces two new ideas. We first give a pair of more refined supersaturation estimates; these estimates arise from understanding Hamming ball intersections and weak transitivity-type properties of a Hamming distance graph we study. Secondly, we employ a variant of the classical graph container algorithm with early stopping. See Section 2 for the container argument as well as the statements of the required supersaturation results, and Section 4 for proofs of the supersaturation estimates.

For the remaining cases when $t \gt 10 \sqrt{n}$, we observe in Section 5 that the Elias bound (a classic upper bound on code sizes) implies the desired result when $t \le (1 - q^{-1})n - 2 \sqrt{n \log n}$ and that $H_q(n, t)$ is very small when $t$ is any larger. Also, note that by the Plotkin bound [[Reference van Lint12], (5.2.4)], if $2t+1 - (1-1/q)n = \alpha \gt 0$, then the size of a $t$-error correcting code in $[q]^n$ is at most $(2t+1)/\alpha$, which is very small, and so (b) is uninteresting when $t$ is large.

A $q$-ary code of length $n$ and distance $d$ is a subset of $[q]^n$ whose elements are pairwise separated by Hamming distance at least $d$. The maximum size of such a code is denoted $A_q(n,d)$. It is natural to conjecture the following much stronger bound on the number of codes of length $n$ and distance $d$, which is likely quite difficult.

Conjecture 1.2. For all fixed $c \gt 0$, the number of $q$-ary codes of length $n$ and distance $d$ is $2^{O(A_q(n,d))}$ whenever $d \lt (1 - q^{-1} - c)n$.

The main difficulty seems to be a lack of strong supersaturation bounds, for example, showing that sets with size much larger than $A_q(n,d)$ must have many pairs of elements with distance at most $d$.

The above conjecture is analogous to the following conjecture of Kleitman and Winston [Reference Kleitman and Winston6]. We use $\operatorname{ex}(n,H)$ to denote the maximum number of edges in an $n$-vertex $H$-free graph.

Conjecture 1.3. For every bipartite graph $H$ that contains a cycle, the number of $H$-free graphs on $n$ labelled vertices is $2^{O_{H}(\operatorname{ex}(n, H))}$.

Kleitman and Winston [Reference Kleitman and Winston6] proved the conjecture for $H = C_4$ and in doing so developed what is now called the graph container method, which is central to the rest of this paper. See Ferber, McKinley, and Samotij [Reference Ferber, McKinley and Samotij4] for recent results and discussion on the problem of enumerating $H$-free graphs.

Let $r_k(n)$ denote the maximum size of a $k$-AP-free subset of $[n]$. Balogh, Liu, and Sharifzadeh [Reference Balogh, Liu and Sharifzadeh1] recently proved the following theorem.

Theorem 1.4 (Balogh, Liu, and Sharifzadeh [Reference Balogh, Liu and Sharifzadeh1]). Fix $k \ge 3$. The number of $k$-AP-free subsets of $[n]$ is $2^{O(r_k(n))}$ for infinitely many $n$.

A notable feature of the above theorem is that the asymptotic order of $r_k(n)$ is not known for any $k \ge 3$, similar to the situation for $A(n,d)$. Ferber, McKinley, and Samotij [Reference Ferber, McKinley and Samotij4] proved analogous results for the number of $H$-free graphs. It remains an open problem to extend the above result to all $n$. Also, Cameron and Erdős asked whether the number of $k$-AP-free subsets of $[n]$ is $2^{(1+o(1))r_k(n)}$. This is unknown, although for several similar questions the answer is no (see [Reference Balogh, Liu and Sharifzadeh1] for discussion). Likewise, one can ask whether the number of $q$-ary error correcting codes of length $n$ and distance $d$ is $2^{(1+o(1))A_q(n,d)}$.

Remarks about notation. For non-negative quantities depending on $n$, we write $f \lesssim g$ to mean that there is some constant $C$ such that $f \le Cg$ for all sufficiently large $n$. Throughout the paper, we view $q$ as a constant, and so hidden constants are allowed to depend on $q$.

Given a finite set $X$, we write $2^X$ for the collection of all subsets of $X$, $\binom{X}{t}$ for the collection of $t$-element subsets of $X$, and $\binom{X}{\le t}$ for the collection of subsets of $X$ with at most $t$ elements. We also write $\binom{n}{\le t} = \sum _{0 \le i \le t} \binom{n}{i}$.

2. Graph container argument

In this section, we analyse the case $t \le 10\sqrt{n}$. As in [Reference Balogh, Treglown and Wagner2], we use the method of graph containers. This technique was originally introduced by Kleitman and Winston [Reference Kleitman and Winston5, Reference Kleitman and Winston6]. See Samotij [Reference Samotij9] for a modern introduction to the graph container method. We will not need the more recent hypergraph container method for this work. Instead, as in [Reference Balogh, Treglown and Wagner2], we refine the graph container algorithm and analysis.

Let $G_{q,n,t}$ be the graph with vertex set $[q]^n$ where two vertices are adjacent if their Hamming distance is at most $2t$. For a subset $S \subseteq [q]^n$, we denote by $G_{q,n,t}[S]$ the induced subgraph of $G_{q, n,t}$ on $S$. For simplicity of notation, we write $G[S] = G_{q,n,t}[S]$, as the tuple $(q,n,t)$ does not change in the proof. Given a graph $G$, we write $\Delta (G)$ for the maximum degree of $G$ and $i(G)$ for the number of independent sets in $G$.

Applications of the container method usually require supersaturation estimates. Our main advance, building on [Reference Balogh, Treglown and Wagner2], is a more refined supersaturation bound for codes. The methods used to prove the first lemma below already allow us to prove the main theorem for $t = o(\sqrt{n}/\log n)$; in concert with the second lemma, we are able to establish the full theorem.

Lemma 2.1 (Supersaturation I). Let $t \le 10 \sqrt{n}$. If $S \subseteq [q]^n$ has size $|S| \ge n^4H_q(n,t)$, then

\begin{equation*} \Delta (G[S]) \gtrsim \frac{n^{3/2} |S|}{H_q(n,t)}. \end{equation*}

Lemma 2.2 (Supersaturation II). Let $\varepsilon \gt 0$. For all sufficiently large $n$, if $60 \le t\le 10\sqrt{n}$ and $S \subseteq [q]^n$ satisfies $\Delta (G[S]) \le n^5$, then $i(G[S]) \le 2^{(1+\varepsilon )H_q(n,t)}$.

We defer the proof of the above two lemmas to subsection 4.1. Assuming these two lemmas, we now run the container argument to deduce the main theorem. The container algorithm we use is a modification of the classical graph container algorithm of Kleitman and Winston [Reference Kleitman and Winston5, Reference Kleitman and Winston6]. As in [Reference Balogh, Treglown and Wagner2], we divide the container algorithm into several stages and analyse them separately. Moreover, we allow ‘early stopping’ of the algorithm if we can certify that the residual graph has few independent sets.

Lemma 2.3 (Container). For every $\varepsilon \gt 0$, the following holds for $n$ sufficiently large. For all $60 \le t \le 10 \sqrt{n}$, there exists a collection $\mathcal{F}$ of subsets of $[q]^n$ with the following properties:

- $|\mathcal{F}| \le 2^{\varepsilon H_q(n,t)}$;

- Every $t$-error correcting code in $[q]^n$ is contained in $S$ for some $S \in \mathcal{F}$;

- $i(G[S]) \le 2^{(1 + \varepsilon )H_q(n, t)}$ for every $S \in \mathcal{F}$.

This container lemma implies Theorem 1.1(a).

Proof of Theorem 1.1(a). If $t \lt 60$, then the result is already known ([Reference Balogh, Treglown and Wagner2] proved it for $t = o(n^{1/3} (\log n)^{-2/3})$). So assume $60 \le t \le 10\sqrt{n}$. Let $\varepsilon \gt 0$. Thus, letting $\mathcal{F}$ be as in Lemma 2.3, the number of $t$-error correcting codes in $[q]^n$ is at most

\begin{equation*} \sum _{S \in \mathcal{F}} i(G[S]) \le \lvert \mathcal{F} \rvert \cdot 2^{(1+\varepsilon )H_q(n,t)} \le 2^{(1+2\varepsilon )H_q(n,t)} \end{equation*}

for sufficiently large $n$. Since $\varepsilon \gt 0$ can be arbitrarily small, we have the desired result.

Proof of Lemma 2.3. Fix $\varepsilon \gt 0$. Let $C \gt 0$ be a sufficiently large constant and assume that $n$ is sufficiently large throughout. We show that there exists some function $f$, sending subsets of $V(G)$ to subsets of $V(G)$, such that for every independent set $I \in \mathcal{I}(G)$, there is some ‘fingerprint’ $P \subseteq I$ that satisfies the following three conditions:

1. $\left \lvert P\right \rvert \le \frac{C H_q(n, t) \log n}{n}$,

2. $i(G[P\cup f(P)])\le 2^{(1+\varepsilon )H_q(n,t)}$,

3. $I \subseteq P \cup f(P)$.

We consider the following algorithm that constructs $P$ and $f(P)$ given some $I \in \mathcal{I}(G)$.

Algorithm 1. Fix an arbitrary order $v_1, \ldots, v_{q^n}$ of the elements of $V(G)$. Let $I \in \mathcal{I}(G)$ be an independent set, as the input.

Given any independent set $I$, the algorithm outputs some $P$ and $f(P)$. Note that $f(P)$ depends only on $P$ (i.e., two different $I$’s that output the same $P$ will always output the same $f(P)$). Also, for any independent set $I$, the output satisfies $P \subseteq I \subseteq P \cup f(P)$.

To prove $\left \lvert P\right \rvert \le \frac{C H_q(n, t) \log n}{n}$, we distinguish stages in the algorithm based on the size of $V(G_i)$.

- Let $P_1$ denote the vertices added to $P$ when $|V(G_{i-1})| \ge n^4 H_q(n, t)$.

- Let $P_2$ denote the vertices added to $P$ when $|V(G_{i-1})| \lt n^4 H_q(n, t)$.

While we are adding vertices to $P_1$, according to Lemma 2.1, we are removing at least a $\beta \gtrsim n^{3/2}/H_q(n,t)$ fraction of vertices with every successful addition. We have

\begin{align*} |P_1| \le \frac{\log \left ( \frac{q^n}{n^4H_q(n,t)}\right )}{\log \left (\frac{1}{1-\beta }\right )} &\lesssim \frac{\log V_q(n,t)-4\log n}{\beta } \le \frac{\log ( (nq)^t)}{\beta } \lesssim \frac{t\log n }{n^{3/2}} H_q(n,t) \lesssim \frac{ \log n}{n} H_q(n, t). \end{align*}

While we are adding vertices to $P_2$, by Lemma 2.2, we are removing at least $n^5$ vertices with every successful addition, as $i(G_{i-1}) \ge 2^{(1+\varepsilon )H_q(n,t)}$ during the associated iteration. Consequently,

These two inequalities imply that

Thus,

Take $\mathcal{F}$ to be the collection of all $P\cup f(P)$ obtained from this procedure as $I$ ranges over all independent sets of $G$. We have just proved that every $t$-error correcting code is contained in some $S\in \mathcal{F}$. We also have $i(G[S])\leq 2^{(1+2\varepsilon )H_q(n,t)}$ for every $S\in \mathcal{F}$ (equivalent to the stated result after replacing $\varepsilon$ by $\varepsilon/2$). Finally, using that $\log \binom{n}{m} \le m \log (O(n)/m)$, we have

\begin{align*} \log \left \lvert \mathcal{F}\right \rvert &\le \log \binom{q^n}{\le \frac{C \log n}{n} H_q(n, t) } \\[5pt] &\lesssim \frac{C \log n}{n} H_q(n, t) \log \left (O\left ( \frac{n V_q(n,t)}{C\log n} \right )\right ) \\[5pt] &\le \frac{C \log n}{n} \log \left ( O\left ( \frac{n (qn)^t}{C\log n} \right )\right ) H_q(n, t) \\[5pt] &\lesssim \frac{C t (\log n)^2}{n} H_q(n,t). \end{align*}

Since $t \le 10 \sqrt{n}$, $ \lvert \mathcal{F} \rvert \le 2^{\varepsilon H_q(n,t)}$ for sufficiently large $n$.

It remains to prove the supersaturation estimates Lemmas 2.1 and 2.2, which we will do in Section 4 after first observing some technical preliminaries in Section 3. The cases when $t \gt 10\sqrt{n}$ are in Section 5.

3. Hamming ball volume estimates

3.1 Hamming ball volume ratios

We first record some estimates about sizes of Hamming balls of different radii, beginning with the following decay estimate.

Lemma 3.1. For every $1 \le i\le t$, we have

\begin{equation*} \frac{V_q(n-i,t-i)}{V_q(n,t)} \le \left (\frac{t}{(q-1)n}\right )^i. \end{equation*}

Proof. Since $\binom{n-1}{j}=\frac{j+1}{n} \binom{n}{j+1}$, we have

\begin{align*} \frac{V_q(n-1,t-1)}{V_q(n,t)}&=\frac{\sum _{j=0}^{t-1}\binom{n-1}{j}(q-1)^j}{1+\sum _{j=0}^{t-1}\binom{n}{j+1}(q-1)^{j+1}}\le \frac{\sum _{j=0}^{t-1}\binom{n-1}{j}(q-1)^{j}}{\sum _{j=0}^{t-1}\binom{n}{j+1}(q-1)^{j+1}}\le \frac{t}{(q-1)n}. \end{align*}

Consequently,

\begin{align*} \frac{V_q(n-i,t-i)}{V_q(n,t)}&=\frac{V_q(n-1,t-1)}{V_q(n,t)}\cdot \dots \cdot \frac{V_q(n-i,t-i)}{V_q(n-i+1,t-i+1)}\\[5pt] &\le \frac{1}{(q-1)^i}\cdot \frac{t}{n}\cdot \frac{t-1}{n-1}\cdot \dots \cdot \frac{t-i+1}{n-i+1}\le \left (\frac{t}{(q-1)n}\right )^i. \end{align*}
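The decay estimate established in this proof, $V_q(n-i,t-i)/V_q(n,t) \le (t/((q-1)n))^i$, can be sanity-checked with exact rational arithmetic. The sketch below assumes $V_q(n,r) = \sum_{j=0}^{r} \binom{n}{j}(q-1)^j$; the function names `ball_volume`, `decay_ratio` and `decay_bound` are ours and purely illustrative.

```python
from fractions import Fraction
from math import comb


def ball_volume(q, n, r):
    """V_q(n, r): size of a Hamming ball of radius r in [q]^n."""
    return sum(comb(n, j) * (q - 1) ** j for j in range(r + 1))


def decay_ratio(q, n, t, i):
    """Left-hand side of the estimate: V_q(n-i, t-i) / V_q(n, t)."""
    return Fraction(ball_volume(q, n - i, t - i), ball_volume(q, n, t))


def decay_bound(q, n, t, i):
    """Right-hand side of the estimate: (t / ((q-1) n))^i."""
    return Fraction(t, (q - 1) * n) ** i
```

For example, with $q = 2$, $n = 100$, $t = 10$ and $i = 3$, the bound is $(1/10)^3$, and `decay_ratio(2, 100, 10, 3)` can be compared against it directly.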

Lemma 3.2. For $1 \le \alpha \le t \le n - \alpha$, we have

Proof. Observe that

\begin{equation*} \frac {V_q(n-\alpha, t)}{V_q(n, t)} = \frac {\sum _{i = 0}^t (q-1)^i \binom{n-\alpha }{i}}{\sum _{i = 0}^t (q-1)^i \binom{n}{i}} \ge \left (\frac {n - \alpha + 1 - t}{n - \alpha + 1}\right )^{\alpha }. \end{equation*}

Also, by Lemma 3.1, we have

Combining this pair of inequalities gives

3.2 Intersection of Hamming balls

We record some estimates about the sizes of intersections of Hamming balls as a function of the distance between their centres.

Let $W_q(n,t,k)$ be the size of the intersection of two Hamming balls in $[q]^n$ of radius $t$, the centres being distance $k$ apart. It is easy to check that

\begin{align*} W_q(n,t,k)&=\sum _{r=0}^k\sum _{s=0}^{k-r}\binom{k}{r} \binom{k-r}{s}(q-2)^{k-r-s}V_q(n-k,t-\max \{k-r,k-s\}). \end{align*}

Indeed, suppose the two balls are centred at $(1^n)$ and $(2^k1^{n-k})$. The intersection then consists of all points with $r$ $1$’s and $s$ $2$’s among the first $k$ coordinates (as $r$ and $s$ range over all possible values), and $\le t - \max \{k-r,k-s\}$ non-$1$ coordinates among the remaining $n-k$ coordinates.
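
The counting argument above can be cross-checked by brute force for small parameters. The sketch below is an illustration under assumptions: the alphabet is relabelled as $\{0,\dots,q-1\}$ with centres $0^n$ and $1^k0^{n-k}$, and the function names are made up for this example. It compares the double-sum formula for $W_q(n,t,k)$ with a direct enumeration of $[q]^n$.

```python
from itertools import product
from math import comb


def ball_size(q, n, t):
    """V_q(n, t); equals 0 when the radius t is negative."""
    if t < 0:
        return 0
    return sum(comb(n, j) * (q - 1) ** j for j in range(min(t, n) + 1))


def intersection_formula(q, n, t, k):
    """W_q(n, t, k) via the double sum over (r, s) from the text."""
    total = 0
    for r in range(k + 1):
        for s in range(k - r + 1):
            # Choose which of the first k coordinates agree with each centre;
            # the remaining k - r - s coordinates take one of q - 2 other symbols.
            ways = comb(k, r) * comb(k - r, s) * (q - 2) ** (k - r - s)
            total += ways * ball_size(q, n - k, t - max(k - r, k - s))
    return total


def intersection_brute_force(q, n, t, k):
    """Count points within distance t of both u = 0^n and v = 1^k 0^(n-k)."""
    u = (0,) * n
    v = (1,) * k + (0,) * (n - k)

    def dist(a, b):
        return sum(x != y for x, y in zip(a, b))

    return sum(
        1
        for x in product(range(q), repeat=n)
        if dist(x, u) <= t and dist(x, v) <= t
    )


agree = all(
    intersection_formula(q, n, t, k) == intersection_brute_force(q, n, t, k)
    for q in (2, 3)
    for n in range(1, 6)
    for t in range(n + 1)
    for k in range(n + 1)
)
print(agree)
```

For $q=2$ the factor $(q-2)^{k-r-s}$ vanishes unless $r+s=k$; Python evaluates `0 ** 0` as `1`, which matches the convention needed here.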

In particular, it is easy to see that

\begin{equation*} W_q(n,t,1) = qV_q(n-1,t-1). \end{equation*}

(As above, it does not matter what happens in the first coordinate, and the remaining $n-1$ coordinates can have at most $t-1$ non-$1$ coordinates.)

Lemma 3.3. $W_q(n,t, k+1) \leq W_q(n,t, k)$ for every integer $0 \le k \lt n$.

Proof. It suffices to consider radius $t$ Hamming balls centred at $u = 1^n$, $v = 2^{k}1^{n-k}$, and $w = 2^{k+1}1^{n-k-1}$. We show that $\lvert B_q(u,t)\cap B_q(w,t) \rvert \leq \lvert B_q(u,t)\cap B_q(v,t) \rvert$. Let us consider the following subsets of $[q]^n$:

\begin{equation*} X = \bigl (B_q(u,t)\cap B_q(w,t)\bigr ) \setminus B_q(v,t) \quad \text{and}\quad Y = \bigl (B_q(u,t)\cap B_q(v,t)\bigr ) \setminus B_q(w,t). \end{equation*}

Observe that every $x = (x_1, \ldots, x_n) \in X$ must have $x_{k+1} = 2$ and $d(x, w) = t$, $d(x, v) = t+1$. Define $\phi \;:\;X \rightarrow Y$ where $\phi (x)$ is obtained from $x$ by changing the $(k+1)$st coordinate from $2$ to $1$. This map is well-defined since for every $x \in X$, $d(\phi (x), u) \lt d(x, u) \le t$, $d(\phi (x), w) = t + 1$ and $d(\phi (x), v) = t$, and so $\phi (x) \in Y$. Since $\phi$ is injective, we have $|X|\leq |Y|$ and therefore $\lvert B_q(u,t)\cap B_q(w,t) \rvert \leq \lvert B_q(u,t)\cap B_q(v,t) \rvert$.
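
The monotonicity statement of Lemma 3.3 can be probed numerically. The following Python sketch is an illustration only; the helper names and the tested range are assumptions of this example. It evaluates $W_q(n,t,k)$ from the double-sum formula given earlier in this subsection and checks that it is non-increasing in $k$.

```python
from math import comb


def ball_size(q, n, t):
    """V_q(n, t); equals 0 when the radius t is negative."""
    if t < 0:
        return 0
    return sum(comb(n, j) * (q - 1) ** j for j in range(min(t, n) + 1))


def intersection_size(q, n, t, k):
    """W_q(n, t, k) computed from the double-sum formula."""
    return sum(
        comb(k, r) * comb(k - r, s) * (q - 2) ** (k - r - s)
        * ball_size(q, n - k, t - max(k - r, k - s))
        for r in range(k + 1)
        for s in range(k - r + 1)
    )


def is_monotone(q, n, t):
    """True if W_q(n, t, k+1) <= W_q(n, t, k) for every 0 <= k < n."""
    values = [intersection_size(q, n, t, k) for k in range(n + 1)]
    return all(values[k + 1] <= values[k] for k in range(n))


monotone_everywhere = all(
    is_monotone(q, n, t)
    for q in range(2, 5)
    for n in range(1, 9)
    for t in range(n + 1)
)
print(monotone_everywhere)
```

In the binary case consecutive values can coincide (for instance $W_2(2,1,1)=W_2(2,1,2)=2$), so the inequality in the lemma cannot be made strict in general.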

Lemma 3.4. For every integer $k\ge 0$, we have

Proof. The first inequality is simply Lemma 3.3. We further have that

\begin{align*} W_q(n,t, 2k+1) \!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\!\\[5pt] &=\sum _{r=0}^{2k+1}\sum _{s=0}^{2k+1-r}\left(\substack{2k+1\\[4pt] r}\right)\left(\substack{2k+1-r\\[4pt] s}\right)(q-2)^{2k+1-r-s}V_q(n-2k-1,t-2k-1+\min \{r,s\})\\[5pt] &\le 2\sum _{r = 0}^{k}\sum _{s=0}^{2k+1-r}\left(\substack{2k+1\\[4pt] r}\right)\left(\substack{2k+1-r\\[4pt] s}\right)(q-2)^{2k+1-r-s}V_q(n-2k-1,t+r-2k-1)\\[5pt] &\le 2\sum _{r = 0}^{k}\sum _{s=0}^{2k+1-r}\left(\substack{2k+1\\[4pt] r}\right)\left(\substack{2k+1-r\\[4pt] s}\right)(q-2)^{2k+1-r-s}V_q(n+r-2k-1,t+r-2k-1). \end{align*}

Using Lemma 3.1 and substituting the above inequality, we deduce that

\begin{align*} \frac{W_q(n,t, 2k+1)}{W_q(n,t,1)} &=\frac{W_q(n,t, 2k+1)}{qV_q(n-1,t-1)}\\[5pt] &\overset{(*)}{\le } \frac{2}{q}\sum _{r = 0}^{k}\sum _{s=0}^{2k+1-r}\left(\substack{2k+1\\[4pt] r}\right)\left(\substack{2k+1-r\\[5pt] s}\right)(q-2)^{2k+1-r-s} \left (\frac{t-1}{(q-1)(n-1)}\right )^{2k-r}\\[5pt] &\leq \left (\frac{t-1}{(q-1)(n-1)}\right )^{k}\cdot \frac{2}{q}\sum _{r = 0}^{k}\sum _{s=0}^{2k+1-r}\left(\substack{2k+1\\[5pt] r}\right)\left(\substack{2k+1-r\\[5pt] s}\right)(q-2)^{2k+1-r-s}\\[5pt] &= \left (\frac{t-1}{(q-1)(n-1)}\right )^{k}\cdot \frac{2}{q}\sum _{r = 0}^{k}\left(\substack{2k+1\\[5pt] r}\right) ((q-2) + 1)^{2k+1-r}\\[5pt] &\le \left (\frac{t-1}{(q-1)(n-1)}\right )^{k}\cdot \frac{2}{q}\sum _{r = 0}^{2k+1}\left(\substack{2k+1\\[5pt] r}\right) (q-1)^{2k+1-r}\\[5pt] &= \left (\frac{t-1}{(q-1)(n-1)}\right )^{k}\cdot \frac{2}{q} \cdot q^{2k+1} \\[5pt] &\leq \left (\frac{t-1}{(q-1)(n-1)}\right )^{k}\cdot 2q^{2k}\le 2\left (\frac{q^2t}{(q-1)n}\right )^k, \end{align*}

where $(*)$ follows from the previous expansion of $W_q(n,t,2k+1)$ combined with Lemma 3.1. This proves the second inequality in the lemma. To prove the last inequality in the lemma, apply Lemma 3.1 again to yield

4. Supersaturation

4.1 Supersaturation I

From now on, let $S \subseteq [q]^n$ and let $G[S] = (S, E)$ be the associated graph with the edge set

\begin{equation*} E = \bigl \{ \{u,v\} \subseteq S \;:\; 1 \le d(u,v) \le 2t \bigr \}. \end{equation*}

For each $1 \le k \le 2t$, write

\begin{equation*} E_k = \bigl \{ \{u,v\} \in E \;:\; d(u,v) = k \bigr \}. \end{equation*}

In other words, $E$ is the set of pairs in $S$ with Hamming distance at most $2t$, and each $E_k$ is the set of pairs in $S$ with Hamming distance exactly $k$. Also, for each $v \in S$, denote the number of edges in $E_k$ incident to $v$ by

\begin{equation*} \deg _k(v) = \bigl \lvert \bigl \{ u \in S \;:\; \{u,v\} \in E_k \bigr \} \bigr \rvert . \end{equation*}

We have the trivial bound $\deg _k(v) \le \binom{n}{k}(q-1)^k$ for every $v \in S$, and hence, $\left \lvert E_k\right \rvert \le \frac{1}{2}\binom{n}{k} (q-1)^k \left \lvert S\right \rvert$.
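
The trivial degree bound rests on the fact that exactly $\binom{n}{k}(q-1)^k$ points of $[q]^n$ lie at Hamming distance $k$ from a given point. A minimal Python sketch of this count, checked by enumeration for small parameters, follows; the function names and the choice of centre $0^n$ over the relabelled alphabet $\{0,\dots,q-1\}$ are assumptions of this example.

```python
from itertools import product
from math import comb


def sphere_size(q, n, k):
    """Number of points of [q]^n at Hamming distance exactly k from a fixed point."""
    return comb(n, k) * (q - 1) ** k


def sphere_brute_force(q, n, k):
    """Same count by enumerating [q]^n around the centre 0^n."""
    return sum(
        1
        for x in product(range(q), repeat=n)
        if sum(1 for coordinate in x if coordinate != 0) == k
    )


counts_agree = all(
    sphere_size(q, n, k) == sphere_brute_force(q, n, k)
    for q in (2, 3, 4)
    for n in range(1, 6)
    for k in range(n + 1)
)
print(counts_agree)
```

Summing $\deg _k(v)$ over $v \in S$ counts every edge of $E_k$ twice, which is where the factor $\frac{1}{2}$ in the bound on $\lvert E_k \rvert$ comes from.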

We first recall the following supersaturation estimate from [Reference Balogh, Treglown and Wagner2] (stated there for binary codes). We include the proof below for completeness. Later on, we will derive new and stronger supersaturation estimates.

Lemma 4.1 ([Reference Balogh, Treglown and Wagner2], Lemma 5.3). If $|S|\ge 2H_q(n,t)$, then

\begin{equation*} \sum _{k=1}^{2t}W_q(n,t,k)|E_k|\ge \frac {|S|^2V_q(n,t)^2}{10\cdot q^n}\quad \textit {and}\quad |E| \ge \frac {n|S|^2}{20tH_q(n,t)}. \end{equation*}

Proof. Define $K_x= \{a \in S \mid d(x, a) \le t\}$ for $x \in [q]^n$. Observe that

\begin{equation*} \sum _{k = 1}^{2t} W_q(n,t,k) |E_k| = \sum _{x \in [q]^n} \left(\substack{|K_x| \\[5pt] 2}\right), \end{equation*}

since both sides count pairs $(x, \{a, b\})$ for $x \in [q]^n$ and distinct $a, b \in S$ such that $d(x,a), d(x, b) \le t$. From Lemmas 3.1 to 3.3, we know that

\begin{equation*} W_q(n,t,k) \le W_q(n,t,1) = qV_q(n-1,t-1) \le \frac {qt}{(q-1)n}\cdot V_q(n,t) \end{equation*}

for $1 \le k \le 2t$. By convexity, since the average value of $|K_x|$ over $x \in [q]^n$ is $|S|V_q(n, t)/q^n \ge 2$, we have

\begin{equation*} \frac {qt}{(q-1)n}\cdot V_q(n, t)|E| \ge W_q(n,t, 1)|E| \ge \sum _{k = 1}^{2t} W_q(n,t,k) |E_k| = \sum _{x \in [q]^n} \left(\substack{|K_x| \\[5pt] 2}\right) \ge \frac {|S|^2 V_q(n, t)^2}{10 q^n}. \end{equation*}

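This proves the first inequality stated in the lemma. Rearranging, and using $H_q(n,t) = q^n/V_q(n,t)$ together with $(q-1)/q \ge 1/2$, we derive the second inequality:

\begin{equation*} |E| \ge \frac {(q-1)n}{qt}\cdot \frac {|S|^2 V_q(n,t)}{10\, q^n} = \frac {(q-1)n|S|^2}{10\, qt\, H_q(n,t)} \ge \frac {n|S|^2}{20tH_q(n,t)}. \end{equation*}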
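
The double-counting identity $\sum _{k} W_q(n,t,k)|E_k| = \sum _{x} \binom{|K_x|}{2}$ used in this proof can be verified directly on a small random set $S$. The Python sketch below is an illustration under assumptions: the alphabet is relabelled as $\{0,\dots,q-1\}$, the set $S$ is drawn with a fixed seed, and all function names are hypothetical.

```python
import random
from itertools import combinations, product
from math import comb


def ball_size(q, n, t):
    """V_q(n, t); equals 0 when the radius t is negative."""
    if t < 0:
        return 0
    return sum(comb(n, j) * (q - 1) ** j for j in range(min(t, n) + 1))


def intersection_size(q, n, t, k):
    """W_q(n, t, k) computed from the double-sum formula of Section 3.2."""
    return sum(
        comb(k, r) * comb(k - r, s) * (q - 2) ** (k - r - s)
        * ball_size(q, n - k, t - max(k - r, k - s))
        for r in range(k + 1)
        for s in range(k - r + 1)
    )


def hamming(a, b):
    """Hamming distance between two equal-length tuples."""
    return sum(x != y for x, y in zip(a, b))


def double_count(q, n, t, S):
    """Return (sum over edges of W_q(n,t,d(a,b)), sum over x of C(|K_x|, 2))."""
    edge_side = 0
    for a, b in combinations(S, 2):
        k = hamming(a, b)
        if k <= 2 * t:  # only pairs in E contribute
            edge_side += intersection_size(q, n, t, k)
    point_side = sum(
        comb(sum(1 for a in S if hamming(x, a) <= t), 2)
        for x in product(range(q), repeat=n)
    )
    return edge_side, point_side


rng = random.Random(0)  # fixed seed so the example is reproducible
S = rng.sample(list(product(range(3), repeat=4)), 20)
edge_side, point_side = double_count(3, 4, 1, S)
print(edge_side == point_side)
```

The equality holds for every choice of $S$, since the number of centres $x$ within distance $t$ of both $a$ and $b$ depends only on $d(a,b)$.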

Now let us prove the first supersaturation estimate, Lemma 2.1, which says that if $t \le 10 \sqrt{n}$ and $|S| \ge n^4H_q(n,t)$, then $\Delta (G[S]) \gtrsim \frac{n^{3/2}}{ H_q(n,t)}|S|$.

Proof of Lemma 2.1. By Lemma 4.1, since $|S| \ge n^4H_q(n,t)$,

Together with $|E_1|+|E_2| \le n^2 q^2 |S|$ and $|E_3|+|E_4|\le n^4 q^4|S|$, we have (recall that hidden constants are allowed to depend on $q$)

Furthermore, by Lemma 3.4,

\begin{align*} W_q(n,t,2) &\le W_q(n,t,1), \\[5pt] W_q(n,t,4) &\le W_q(n,t,3) \lesssim \frac{t}{n} W_q(n,t,1), \qquad \text{and} \\[5pt] W_q(n,t,k) &\le W_q(n,t,5) \lesssim \frac{t^2}{n^2} W_q(n,t,1) \qquad \text{for all $k \ge 5$}. \end{align*}

Hence we have

\begin{align*} \sum _{k=1}^{2t}W_q(n,t,k)|E_k| &\le W_q(n,t,1)(|E_1|+|E_2|)+W_q(n,t,3)(|E_3|+|E_4|)+W_q(n,t,5)|E| \\[5pt] &\lesssim \left ( \frac{t}{n^3} + \frac{t}{n} \cdot \frac{t}{n} + \frac{t^2}{n^2} \right ) W_q(n,t,1)|E| \lesssim \frac{t^2}{n^2}\cdot W_q(n,t,1)|E|. \end{align*}

By Lemma 4.1, we have

\begin{align*} \frac{|S|^2V_q(n,t)^2}{q^n} \lesssim \sum _{k=1}^{2t}W_q(n,t,k)|E_k| \lesssim \frac{t^2}{n^2}\cdot W_q(n,t,1)|E|. \end{align*}

Rearranging and then applying $W_q(n,t,1) \lesssim (t/n) V_q(n,t)$ from Lemma 3.4, and $t \lesssim \sqrt{n}$, we obtain

Thus, the average degree (and hence the maximum degree) in $G[S]$ is $\gtrsim \frac{n^{3/2}}{H_q(n,t)} |S|$.

4.2 Supersaturation II

We maintain the notation from the previous subsection.

Lemma 4.2. Suppose $t\le 10\sqrt{n}$ and $\Delta (G[S])\le n^5$. Fix $\varepsilon \gt 0$. Let

Then $|S_1|\le (1 + O(\varepsilon ))H_q(n,t)$.

Proof. The idea is that the Hamming balls $B_q(v,t)$, $v \in S_1$, are ‘mostly disjoint’, in the sense that the overlap is negligible.

For any $v\in S_1$, the overlap of $B_q(v,t)$ with other balls $B_q(u,t)$, $u \in S_1$, has size

\begin{align*} \left \lvert B_q(v,t)\cap \bigcup _{u\in S_1\setminus \{v\}} B_q(u,t)\right \rvert &\le \sum _{k=1}^{2t}\deg _k(v) W_q(n,t,k)\\[5pt] &\le \biggl (\sum _{k=1}^{20}\deg _k(v) W_q(n,t,k)\biggr ) + n^5 W_q(n,t,21) \end{align*}

by Lemma 3.3. Recall from Lemma 3.4 that $W_q(n,t,k) \le 2(q^2t/((q-1)n))^{ \lceil k/2 \rceil } V_q(n,t)$, which is $\lesssim n^{- \lceil k/2 \rceil/2} V_q(n,t)$ for each $1 \le k \le 20$. Combining with $\deg _k(v) \le \varepsilon n^{ \lceil k/2 \rceil/2}$ for each $v \in S$, the above sum is $\lesssim \varepsilon V_q(n,t)$. In other words, for every $v\in S_1$, the ball $B_q(v,t)$ contains $(1-O(\varepsilon )) V_q(n,t)$ unique points not shared by other such balls, and thus, the union of these balls has size $\ge (1-O(\varepsilon )) V_q(n,t) \lvert S_1 \rvert$. Since the union is contained in $[q]^n$, we deduce $\lvert S_1 \rvert \le (1+O(\varepsilon ))q^n/ V_q(n,t) = (1+O(\varepsilon )) H_q(n,t)$.

Lemma 4.3. Suppose $60 \le t \le 10\sqrt{n}$ and $\Delta (G[S])\le n^{5}$. Fix $\varepsilon \gt 0$. Let

\begin{align*} S_2 &= \left \{ v\in S \;:\; \sum _{k=1}^{20} \deg _k(v) \ge \frac{\log n}{\varepsilon } \right \}. \end{align*}

Then, the number of independent subsets of $S_2$ satisfies

Proof. Pick a maximum size subset $X \subseteq S_2$ where every pair of distinct elements of $X$ has Hamming distance greater than $t$. Using the definition of $S_2$, for each $x \in X$, we can find a $\lceil (\log n)/\varepsilon \rceil$-element subset $A_x \subseteq S \cap B_q(x,20)$. For distinct $x,y \in X$, one has $d(x,y) \gt t \ge 60$, and thus $A_x \cap A_y = \emptyset$.

Consider the balls $B_q(u,t)$, $u \in \bigcup _{x \in X} A_x$. As in the previous proof, we will show that these balls are mostly disjoint. The intersection of one of these balls with the union of all other such balls has size at most

Indeed, for each of the $\lceil (\log n)/\varepsilon \rceil -1$ points $u'$ that lie in the same $A_x$ as $u$, the overlap is $\le W_q(n,t,1)$; each ball $B_q(u',t)$ with $u'$ in some $A_y$ other than $A_x$ contributes $\le W_q(n,t,21)$ to the overlap since $d(u,u') \ge d(x,y) - 40 \ge t-39 \ge 21$. There are at most $\Delta (G[S]) \le n^5$ other balls that intersect a given ball. Here we invoke the monotonicity of $W_q(n,t,\cdot )$ (Lemma 3.3) and also Lemma 3.4.

Thus, the union of the balls $B_q(u,t)$, $u \in \bigcup _{x \in X} A_x$, has size $\lvert X \rvert \lceil (\log n)/\varepsilon \rceil \cdot (1-o(1))V_q(n,t)$. Since this quantity is at most $q^n$,

Note that $S_2 \subseteq \bigcup _{x \in X} B_q(x,t)$ (since if there were some uncovered $x' \in S_2$, then one could add $x'$ to $X$, contradicting the maximality of $X$). Every pair of elements of $B_q(x,t)$ has Hamming distance at most $2t$. It follows that every independent set $I$ in $G[S_2]$ (so the elements of $I$ are separated by Hamming distance $\gt 2t$) can be formed by choosing at most one element from $B_q(x,t) \cap S_2$ for each $x \in X$. Since $\lvert B_q(x,t) \cap S_2 \rvert \le n^5$, we have

Now we prove the second supersaturation estimate, Lemma 2.2, which says that if $60 \le t\le 10\sqrt{n}$ and $\Delta (G[S]) \le n^5$, then $i(G[S]) \le 2^{(1+\varepsilon )H_q(n,t)}$ for all sufficiently large $n$.

Proof of Lemma 2.2. Define $S_1$ and $S_2$ as in the previous two lemmas. One has $S = S_1 \cup S_2$ provided that $n$ is sufficiently large. Thus

This is equivalent to the desired result after changing $\varepsilon$ by a constant factor.

5. Bounds on codes with larger distances

When $t \gt 10\sqrt{n}$, we observe that a maximum sized $t$-error correcting code must be much smaller than the Hamming bound; this implies an associated bound on the number of $t$-error correcting codes. Recall that $A_q(n, 2t+1)$ is the maximum size of a $t$-error correcting code over $[q]^n$. The number of $q$-ary $t$-error correcting codes of length $n$ is at most

If $A_q(n, 2t+1) = o(H_q(n, t)/n)$, then the number of $t$-error correcting codes of length $n$ is $2^{o(H_q(n,t))}$.

In fact, in the regime of very large $t$, we will be able to obtain Theorem 1.1(c), that is, that there exists $c_q \gt 0$ such that for all $t \gt (1-1/q) n - c_q \sqrt{n \log n}$, we have $H_q(n,t) = O(n^{1/10})$.

Proof of Theorem 1.1(c). Let $t=(1-1/q)n-\alpha$ with $\alpha \lt c_q\sqrt{n\log n}$ for some sufficiently small $c_q \gt 0$. Let $\theta =1-q^{-1}$. Let $Y\sim \text{Bin}(n,\theta )$ be a binomial random variable and $Z\sim \mathcal{N}(0,1)$ a standard normal random variable. Observe that for all $0\leq i\leq n$, we have $V_q(n, i) = q^n \mathbf{P}(Y \le i)$. By the Berry–Esseen theorem (a quantitative central limit theorem), for all $x\in \mathbb{R}$,

We have the following standard estimate on the Gaussian tail:

Thus

\begin{align*} \frac{1}{H_q(n,t)} = \frac{V_q(n,\theta n-\alpha )}{q^n} &\geq \mathbf{P}\left [ Z\leq \frac{-c_q\sqrt{n\log n}}{\sqrt{\theta (1-\theta )n}} \right ] -O\left ( \frac{1}{\sqrt{n}}\right ) \\[5pt] &\gtrsim e^{- \Theta (c_q^2 \log n) }-O\left ( \frac{1}{\sqrt{n}}\right ) \\[5pt] &\gtrsim n^{-1/10} \end{align*}

by choosing $c_q$ to be sufficiently small. Therefore $H_q(n,t) = O(n^{1/10})$.
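
The proof above relies on rewriting the ball size as a binomial tail, $V_q(n,i) = q^n\,\mathbf{P}(Y \le i)$ with $Y \sim \text{Bin}(n,\theta )$ and $\theta = 1-q^{-1}$. The following Python sketch checks this identity in exact arithmetic and evaluates $H_q(n,t) = q^n/V_q(n,t)$; the function names and the tested parameters are assumptions chosen for illustration.

```python
from fractions import Fraction
from math import comb


def ball_size(q, n, t):
    """V_q(n, t) = sum_{j <= t} C(n, j) (q - 1)^j."""
    if t < 0:
        return 0
    return sum(comb(n, j) * (q - 1) ** j for j in range(min(t, n) + 1))


def binomial_cdf(n, theta, t):
    """P(Y <= t) for Y ~ Bin(n, theta), exact when theta is a Fraction."""
    return sum(
        comb(n, j) * theta ** j * (1 - theta) ** (n - j) for j in range(t + 1)
    )


def hamming_bound(q, n, t):
    """H_q(n, t) = q^n / V_q(n, t) as an exact rational number."""
    return Fraction(q ** n, ball_size(q, n, t))


def identity_holds(q, n):
    """Check V_q(n, i) = q^n * P(Y <= i) for all 0 <= i <= n."""
    theta = 1 - Fraction(1, q)
    return all(
        ball_size(q, n, i) == q ** n * binomial_cdf(n, theta, i)
        for i in range(n + 1)
    )


print(identity_holds(3, 12))
print(float(hamming_bound(3, 12, 8)))
```

The identity holds term by term, because $\binom{n}{j}\theta ^j(1-\theta )^{n-j} = \binom{n}{j}(q-1)^j/q^n$.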

Remark 5.1. It is possible to give more precise estimates of binomial tails with larger deviations. The above application of the Berry–Esseen theorem is a concise way to obtain what we need.

While we will not get a bound as strong as above for smaller values of $t$, already when $t \gt 10\sqrt{n}$, we have that $A_q(n, 2t+1) = o(H_q(n, t)/n)$, due to the following classic upper bound on $A_q(n,d)$.

Theorem 5.2 (Elias bound [[Reference van Lint12], Theorem 5.2.11]). Let $\theta = 1 - q^{-1}$. For every $r \le \theta n$ satisfying

\begin{equation*} r^2 - 2\theta nr + \theta nd \gt 0, \end{equation*}

we have the upper bound

\begin{equation*} A_q(n,d) \le \frac{\theta nd}{r^2 - 2\theta nr + \theta nd} \cdot \frac{q^n}{V_q(n,r)}. \end{equation*}

We have the following consequence of the above Elias bound.

Proposition 5.3. Let $\theta = 1-q^{-1}$. If $10\sqrt{n} \lt t \leq \theta n - 2\sqrt{n \log n}$, then we have

Proof. We apply the Elias bound with $r = t + \alpha$, where $\alpha = 7$ if $10 \sqrt{n} \lt t \lt n^{4/5}$ and $\alpha = \sqrt{n\log n}$ if $n^{4/5} \le t \leq \theta n - 2\sqrt{n \log n}$. When $t \gt 10\sqrt{n}$, we satisfy the condition

Indeed, when $10\sqrt{n} \lt t \lt n^{4/5}$, we have $\alpha =7$, and thus $\theta (2\alpha - 1)n\leq 2\alpha n = 14 n$ whereas $r^2 \gt (10\sqrt{n})^2 = 100 n$. When $t \ge n^{4/5}$, we have $r^2 \ge n^{8/5}$ whereas $\theta (2\alpha -1)n=O(n^{3/2}\sqrt{\log n})$. By Lemma 3.2, we have

Applying Theorem 5.2 gives that

\begin{align*} A_q(n, 2t+1) &\le \frac{\theta n(2t+1)}{r^2 - 2\theta nr + \theta n(2t+1)} \cdot \frac{q^n}{V_q(n, r)} \\[5pt] &\overset{(5.2)}{\lesssim } \frac{nt}{(t + \alpha )^2} \cdot \frac{q^n}{V_q(n, t+\alpha )} \\[5pt] &\le \frac{n}{t} \left ( \frac{(t+\alpha )(n-\alpha +1)}{(q-1)n(n-\alpha + 1-t)}\right )^{\alpha } H_q(n, t). \end{align*}

When $10\sqrt{n} \lt t \lt n^{4/5}$ and $\alpha = 7$, the above upper bound simplifies to

\begin{align*} & A_q(n, 2t+1) \lesssim \frac {n}{t} \left ( \frac {(t+\alpha )(n-\alpha +1)}{(q-1)n(n-\alpha + 1-t)}\right )^{\alpha } H_q(n, t) \lesssim \frac {t^6}{n^{6}} H_q(n, t) \le \frac {1}{n^{6/5}} H_q(n, t) \\[5pt] & = o\left ( \frac {H_q(n, t)}{n} \right ). \end{align*}

When $n^{4/5} \le t \le \theta n - 2\sqrt{n \log n}$ and $\alpha = \sqrt{n\log n}$, from the fact that $t+\alpha \leq \theta n-\alpha$, the above upper bound simplifies to

\begin{align*} A_q(n, 2t+1) &\lesssim \frac{n}{t} \left ( \frac{(t+\alpha )(n-\alpha +1)}{(q-1)n(n-\alpha + 1-t)}\right )^{\alpha } H_q(n, t)\\[5pt] &\leq n^{1/5} \left ( \frac{(\theta n-\alpha )(n-\alpha +1)}{(q-1)n((1-\theta )n+\alpha +1)}\right )^{\alpha } H_q(n, t). \end{align*}

Since $(n-\alpha +1)/((1-\theta )n+\alpha +1)\leq (n-\alpha )/((1-\theta )n+\alpha )$ and $\theta =(q-1)(1-\theta )$, we get that

\begin{align*} \left ( \frac{(\theta n-\alpha )(n-\alpha +1)}{(q-1)n((1-\theta )n+\alpha +1)}\right )^{\alpha } &\leq \left ( \frac{( n-\alpha/\theta )(n-\alpha )}{n(n+\alpha/(1-\theta ))}\right )^{\alpha }\leq \left ( \frac{( n-\alpha/\theta )(n-\alpha )}{n^2}\right )^{\alpha }\\[5pt] &=(1-(\alpha (1+\theta ^{-1})/n-\alpha ^2\theta ^{-1}/n^2))^\alpha \\[5pt] &\leq e^{-\alpha ^2(1+\theta ^{-1})n^{-1}+\alpha ^3\theta ^{-1}n^{-2}}\\[5pt] &\lesssim e^{-\log n(1+\theta ^{-1})}\\[5pt] &\leq n^{-2}. \end{align*}

This shows that

as desired.
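
The proof above applies the Elias bound with $r = t+\alpha$, in which case the denominator $r^2 - 2\theta nr + \theta n(2t+1)$ appearing in the bound equals $(t+\alpha )^2 - \theta (2\alpha -1)n$. The Python sketch below checks this algebraic identity with exact rationals and confirms positivity in the regime $10\sqrt{n} \lt t \lt n^{4/5}$ with $\alpha = 7$ for one sample value of $n$. The function names and the choice $n = 10^4$ are assumptions for illustration only.

```python
from fractions import Fraction


def elias_denominator(q, n, t, r):
    """r^2 - 2*theta*n*r + theta*n*(2t + 1), where theta = 1 - 1/q."""
    theta = 1 - Fraction(1, q)
    return r * r - 2 * theta * n * r + theta * n * (2 * t + 1)


def simplified_denominator(q, n, t, alpha):
    """(t + alpha)^2 - theta*(2*alpha - 1)*n, i.e. the same quantity with r = t + alpha."""
    theta = 1 - Fraction(1, q)
    return (t + alpha) ** 2 - theta * (2 * alpha - 1) * n


n = 10_000  # here 10*sqrt(n) = 1000 and n^(4/5) is roughly 1584.9
alpha = 7
condition_holds = all(
    elias_denominator(q, n, t, t + alpha) == simplified_denominator(q, n, t, alpha)
    and elias_denominator(q, n, t, t + alpha) > 0
    for q in (2, 3, 5)
    for t in range(1001, 1585)
)
print(condition_holds)
```

In this range $(t+\alpha )^2 \gt 100n$ while $\theta (2\alpha -1)n \lt 13n$, which matches the comparison of $r^2$ with $14n$ made in the proof.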

Proof of Theorem 1.1(b). When $10\sqrt{n} \lt t \le (1 - q^{-1})n - 2 \sqrt{n \log n}$, by Proposition 5.3 the number of $t$-error correcting codes is at most

Acknowledgement

We would like to thank the anonymous referees for helpful feedback and suggestions.