1. Introduction

Rough paths calculus [Reference Friz and Hairer9] and the recent extension [Reference Cont and Perkowski6] of Föllmer’s pathwise Itô calculus [Reference Föllmer8] provide means of dealing with rough trajectories that are not ultimately based on Gaussian processes such as fractional Brownian motion. As observed, e.g., in [Reference Gubinelli, Imkeller and Perkowski12], such a pathwise calculus becomes particularly transparent when expressed in terms of the Faber–Schauder expansions of the integrands. When looking for the Faber–Schauder expansions of trajectories that are suitable pathwise integrators and whose “roughness” is specified in terms of a given Hurst parameter, one is naturally led [Reference Mishura and Schied18] to certain extensions of a well-studied class of fractal functions, the Takagi–Landsberg functions. These functions are defined as

\begin{equation*}f_\alpha(t):=\sum_{m=0}^\infty\frac{\alpha^m}{2^m}\phi(2^m t),\qquad 0\le t\le1,\end{equation*}

where $\alpha\in(-2,2)$ is a real parameter and

\begin{equation*}\phi(t):=\min_{z\in\mathbb Z}|z-t|\end{equation*}

is the tent map. If such functions are used to describe rough phenomena in applications, it is natural to analyse the range of these functions, i.e., to determine the extrema of the Takagi–Landsberg functions.

While the preceding paragraph describes our original motivation for the research presented in this paper, determining the maximum of generalised Takagi functions is also of intrinsic mathematical interest and attracted several authors in the past. The first contribution was by Kahane [Reference Kahane15], who found the maximum and the set of maximisers of the classical Takagi function, which corresponds to

![]() $\alpha=1$

. This result was later rediscovered in [Reference Martynov17] and subsequently extended in [Reference Baba3] to certain van der Waerden functions. Tabor and Tabor [Reference Tabor and Tabor21] computed the maximum value of the Takagi–Landsberg function for those parameters

$\alpha=1$

. This result was later rediscovered in [Reference Martynov17] and subsequently extended in [Reference Baba3] to certain van der Waerden functions. Tabor and Tabor [Reference Tabor and Tabor21] computed the maximum value of the Takagi–Landsberg function for those parameters

![]() $\alpha_n\in(1/2,1]$

that are characterised by

$\alpha_n\in(1/2,1]$

that are characterised by

![]() $1-\alpha_n-\cdots-\alpha_n^{n}=0$

for

$1-\alpha_n-\cdots-\alpha_n^{n}=0$

for

![]() $n\in\mathbb N$

. Galkin and Galkina [Reference Galkin and Galkina10] proved that the maximum for

$n\in\mathbb N$

. Galkin and Galkina [Reference Galkin and Galkina10] proved that the maximum for

![]() $\alpha\in[-1,1/2]$

is attained at

$\alpha\in[-1,1/2]$

is attained at

![]() $t=1/2$

. In the interval (1,2), the case

$t=1/2$

. In the interval (1,2), the case

![]() $\alpha=\sqrt2$

is special, as it corresponds to the Hurst parameter

$\alpha=\sqrt2$

is special, as it corresponds to the Hurst parameter

![]() $H=1/2$

. The corresponding maximum can be deduced from [11, lemma 5] and was given independently in [Reference Galkin and Galkina10,Reference Schied20]. Mishura and Schied [Reference Mishura and Schied18] added uniqueness to the results from [Reference Galkin and Galkina10,Reference Schied20] and extended them to all

$H=1/2$

. The corresponding maximum can be deduced from [11, lemma 5] and was given independently in [Reference Galkin and Galkina10,Reference Schied20]. Mishura and Schied [Reference Mishura and Schied18] added uniqueness to the results from [Reference Galkin and Galkina10,Reference Schied20] and extended them to all

![]() $\alpha\in(1,2)$

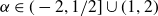

. The various contributions from [Reference Galkin and Galkina10,Reference Kahane15,Reference Mishura and Schied18,Reference Tabor and Tabor21] are illustrated in Figure 1, which shows the largest maximiser of the Takagi–Landsberg function

$\alpha\in(1,2)$

. The various contributions from [Reference Galkin and Galkina10,Reference Kahane15,Reference Mishura and Schied18,Reference Tabor and Tabor21] are illustrated in Figure 1, which shows the largest maximiser of the Takagi–Landsberg function

![]() $f_\alpha$

as a function of

$f_\alpha$

as a function of

![]() $\alpha$

. From Figure 1, it is apparent that the most interesting cases are

$\alpha$

. From Figure 1, it is apparent that the most interesting cases are

![]() $\alpha\in(-2,-1)$

and

$\alpha\in(-2,-1)$

and

![]() $\alpha\in(1/2,1]$

, which are also the ones about which nothing was known beyond the special parameters considered in [Reference Kahane15,Reference Tabor and Tabor21].

$\alpha\in(1/2,1]$

, which are also the ones about which nothing was known beyond the special parameters considered in [Reference Kahane15,Reference Tabor and Tabor21].

Fig. 1. Largest maximiser of $t\mapsto f_\alpha(t)$ in $[0,1/2]$ as a function of $\alpha\in(-2,2)$.

In this paper, we present a completely new approach to the computation of the maximisers of the functions $f_\alpha$. This approach works simultaneously for all parameters $\alpha\in(-2,2)$. It even extends to the entire Takagi class, which was introduced by Hata and Yamaguti [Reference Hata and Yamaguti14] and is formed by all functions of the form

\begin{equation*}f(t):=\sum_{m=0}^\infty c_m\phi(2^mt),\qquad t\in[0,1],\end{equation*}

where $(c_m)_{m\in\mathbb N}$ is an absolutely summable sequence. An example is the choice $c_m=2^{-m}\varepsilon_m$, where $(\varepsilon_m)_{m\in\mathbb N}$ is an i.i.d. sequence of $\{-1,+1\}$-valued Bernoulli random variables. For this example, the distribution of the maximum was studied by Allaart [Reference Allaart1]. Our approach works for arbitrary sequences $(c_m)_{m\in\mathbb N}$ and provides a recursive characterisation of the binary expansions of all maximisers and minimisers. This characterisation is called the step condition. It yields a simple method to compute the smallest and largest maximisers and minimisers of f with arbitrary precision. Moreover, it allows us to give exact statements on the cardinality of the set of maximisers and minimisers of f. For the case of the Takagi–Landsberg functions, we find that, for $\alpha\in(-2,-1)$, the function $f_\alpha$ has either two or four maximisers, and we provide their exact values and the maximum values of $f_\alpha$ in closed form. For $\alpha\in[-1,1/2]$, the function $f_\alpha$ has a unique maximiser at $t=1/2$, and for $\alpha\in(1,2)$ there are exactly two maximisers, at $t=1/3$ and $t=2/3$. The case $\alpha\in(1/2,1]$ is the most interesting. It will be discussed below.

In general, we show that non-uniqueness of maximisers in $[0,1/2]$ occurs if and only if there exists a Littlewood polynomial P (i.e., a polynomial all of whose coefficients belong to $\{-1,+1\}$) for which the parameter $\alpha$ is a special root of P, called a step root. The step roots also coincide with the discontinuities of the functions that assign to each $\alpha\in(-2,2)$ the respective smallest and largest maximiser of $f_\alpha$ in $[0,1/2]$. We show that the polynomials $1-x-\cdots-x^{2n}$ are the only Littlewood polynomials with negative step roots, which in turn all belong to the interval $(-2,-1)$. They correspond exactly to the jumps in $(-2,-1)$ of the function in Figure 1. While there are no step roots in $[-1,1/2]\cup(1,2)$, we show that the step roots lie dense in $(1/2,1]$. Moreover, if n is the smallest degree of a Littlewood polynomial that has $\alpha\in(1/2,1]$ as a step root, then the set of maximisers of $f_\alpha$ is a perfect set of Hausdorff dimension $1/(n+1)$, and the binary expansions of all maximisers are given in explicit form in terms of the coefficients of the corresponding Littlewood polynomial. As a corollary, we show that the closure of the set of all real roots of all Littlewood polynomials is equal to $[-2,-1/2]\cup[1/2,2]$.

This paper is organised as follows. In Section 2, we present general results for functions of the form (2 · 1). In Section 3, we discuss the particular case of the Takagi–Landsberg functions. The global maxima of $f_\alpha$ for the cases in which $\alpha$ belongs to the intervals $(-2,-1)$, $[-1,1/2]$, $(1/2,1]$ and (1,2) are analysed separately in Subsections 3.1, 3.2, 3.3 and 3.4, respectively. We also discuss the global minima of $f_\alpha$ in Subsection 3.5. As explained above, the maxima of the Takagi–Landsberg functions correspond to step roots of the Littlewood polynomials. Our results yield corollaries on the locations of such step roots and on the closure of the set of all real roots of the Littlewood polynomials. These corollaries are stated and proved in Section 4. The proofs of the results from Sections 2 and 3 are deferred to Sections 5 and 6, respectively.

2. Maxima of functions in the Takagi class

The Takagi class was introduced in [Reference Hata and Yamaguti14]. It consists of the functions of the form

\begin{equation}f(t):=\sum_{m=0}^\infty c_m\phi(2^mt),\qquad t\in[0,1],\end{equation}

where $\textbf{c}=(c_m)_{m\in\mathbb N}$ is a sequence in the space $\ell^1$ of absolutely summable sequences and

\begin{equation*}\phi(t):=\min_{z\in\mathbb Z}|z-t|\end{equation*}

is the tent map. Since $0\le\phi\le1/2$ and $\textbf{c}\in\ell^1$, the series in (2 · 1) converges uniformly in t, so that f is a continuous function. The sequence $\textbf{c}\in\ell^1$ will be fixed throughout this section.
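As a quick illustration of the series (2 · 1), here is a minimal Python sketch that evaluates a truncated member of the Takagi class in floating point. The helper names `tent` and `takagi_class` are ours, and truncating after finitely many coefficients is an assumption: since $0\le\phi\le1/2$, the neglected tail is at most $\frac12\sum_{m\ge N}|c_m|$.

```python
def tent(x: float) -> float:
    """Tent map phi(x): distance from x to the nearest integer."""
    return abs(x - round(x))


def takagi_class(c: list[float], t: float) -> float:
    """Truncated series sum_{m < len(c)} c[m] * phi(2**m * t)."""
    return sum(cm * tent(2.0**m * t) for m, cm in enumerate(c))


# c_m = 2**-m (alpha = 1) gives the classical Takagi function.
takagi = [2.0**-m for m in range(60)]
print(takagi_class(takagi, 0.5))    # 0.5
print(takagi_class(takagi, 1 / 3))  # approximately 2/3
```

In double precision, $2^mt$ becomes an integer once m exceeds about 53, so later terms are effectively dropped; this is harmless for geometrically decaying $c_m$ but not for slowly decaying ones.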

For any $\{-1,+1\}$-valued sequence $\boldsymbol\rho=(\rho_m)_{m\in\mathbb N_0}$, we let

\begin{equation}{ T}(\boldsymbol\rho)=\sum_{n=0}^\infty2^{-(n+2)}(1-\rho_n)\in[0,1].\end{equation}

Then $\varepsilon_n:=(1-\rho_n)/2$ are the digits of a binary expansion of $t:={ T}(\boldsymbol\rho)$, i.e., $t=\sum_{n=0}^\infty\varepsilon_n2^{-(n+1)}$. We will call $\boldsymbol\rho$ a Rademacher expansion of t. Clearly, the Rademacher expansion is unique unless t is a dyadic rational number in (0,1), in which case t admits exactly two distinct Rademacher expansions. The one with infinitely many occurrences of the digit $+1$ will be called the standard Rademacher expansion. It can be obtained through the Rademacher functions, which are given by $r_n(t):=(-1)^{\lfloor 2^{n+1} t\rfloor}$. The following simple lemma illustrates the significance of the Rademacher expansion for the analysis of the function f.

Lemma 2.1. Let $\boldsymbol\rho=(\rho_m)_{m\in\mathbb N_0}$ be a Rademacher expansion of $t\in[0,1]$. Then

\begin{equation*}f(t)=\frac14\sum_{m=0}^\infty c_m\bigg(1-\sum_{k=1}^\infty 2^{-k}\rho_m\rho_{m+k}\bigg).\end{equation*}
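Lemma 2.1 is easy to test numerically. The following sketch (all function names are ours) computes the standard Rademacher expansion via $r_n(t)=(-1)^{\lfloor 2^{n+1}t\rfloor}$, the map T from (2 · 2), and both sides of Lemma 2.1 in exact rational arithmetic. It assumes a finite sequence $\rho_0,\dots,\rho_{N-1}$ extended by $\rho_m=+1$ for $m\ge N$, so that $t=T(\boldsymbol\rho)$ is a dyadic rational and every infinite sum reduces to a finite one.

```python
import math
import random
from fractions import Fraction


def rademacher_prefix(t: Fraction, n_terms: int) -> list[int]:
    """First n_terms digits of the standard Rademacher expansion of t in [0, 1)."""
    return [(-1) ** math.floor(2 ** (n + 1) * t) for n in range(n_terms)]


def T(rho: list[int]) -> Fraction:
    """T(rho) = sum_n 2**-(n+2) * (1 - rho_n), summed over the given digits."""
    return sum((Fraction(1 - r, 2 ** (n + 2)) for n, r in enumerate(rho)), Fraction(0))


def tent(x: Fraction) -> Fraction:
    return abs(x - round(x))


def f_direct(c: list[Fraction], t: Fraction) -> Fraction:
    """sum_m c_m * phi(2**m t); exact for dyadic t = T(rho) when len(c) == len(rho)."""
    return sum((cm * tent(2 ** m * t) for m, cm in enumerate(c)), Fraction(0))


def f_lemma(c: list[Fraction], rho: list[int]) -> Fraction:
    """Right-hand side of Lemma 2.1, with rho_m = +1 assumed for m >= len(rho)."""
    N = len(rho)
    total = Fraction(0)
    for m in range(N):
        inner = sum((Fraction(rho[m] * rho[m + k], 2 ** k) for k in range(1, N - m)), Fraction(0))
        # Tail k >= N - m: rho_{m+k} = +1 and sum_{k >= N-m} 2**-k = 2**-(N-m-1).
        inner += Fraction(rho[m], 2 ** (N - m - 1))
        total += c[m] * (1 - inner)
    # Terms with m >= N vanish because 1 - sum_{k>=1} 2**-k = 0.
    return total / 4


t = Fraction(3, 10)
print(float(T(rademacher_prefix(t, 30))))  # close to 0.3 (error below 2**-30)
rho = [1, -1, 1, 1, -1]
c = [Fraction(1, (m + 1) ** 2) for m in range(len(rho))]
print(f_direct(c, T(rho)) == f_lemma(c, rho))  # True
```

Exact rationals are used so that the two sides can be compared with `==` rather than up to a floating-point tolerance.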

The following concept is the key to our analysis of the maxima of the function f.

Definition 2.2. We will say that a $\{-1,+1\}$-valued sequence $(\rho_m)_{m\in\mathbb N_0}$ satisfies the step condition if

\begin{equation*}\rho_{n}\sum_{m=0}^{n-1}2^mc_m\rho_m\le0\qquad\text{for all $n\in\mathbb N$.}\end{equation*}

Now we can state our first main result on the set of maximisers of f.

Theorem 2.3. For $t\in[0,1]$, the following conditions are equivalent:

(a) t is a maximiser of f;

(b) every Rademacher expansion of t satisfies the step condition;

(c) there exists a Rademacher expansion of t that satisfies the step condition.

Theorem 2 · 3 provides a way to construct maximisers of f. More precisely, we define recursively the following pair of sequences $\boldsymbol\rho^{\flat}$ and $\boldsymbol\rho^{\sharp}$. We let $\rho^{\flat}_0=\rho^{\sharp}_0=1$ and, for $n\in\mathbb N$,

\begin{equation}\rho_n^\flat=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}2^mc_m\rho^\flat_m<0$,}\\[3pt]-1&\text{otherwise,}\end{cases}\qquad \qquad\rho_n^\sharp=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}2^mc_m\rho^\sharp_m\le0$,}\\[3pt]-1&\text{otherwise.}\end{cases}\end{equation}

Corollary 2.4. With the above notation, ${ T}(\boldsymbol\rho^\flat)$ is the largest and ${ T}(\boldsymbol\rho^\sharp)$ is the smallest maximiser of f in $[0,1/2]$.
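The recursion (2 · 3) translates directly into code. The sketch below is a minimal Python version under two assumptions of ours: the coefficients are given as exact rationals (`fractions.Fraction`), because the difference between $\boldsymbol\rho^\flat$ and $\boldsymbol\rho^\sharp$ hinges on partial sums being exactly zero, which floating point cannot detect reliably; and the sequences are truncated after `n_terms` digits, so `T` returns each maximiser only up to an error of at most $2^{-n_{\rm terms}}$. The function names are hypothetical.

```python
from fractions import Fraction


def step_sequences(c: list[Fraction], n_terms: int) -> tuple[list[int], list[int]]:
    """First n_terms entries of rho_flat and rho_sharp from recursion (2 · 3)."""
    flat, sharp = [1], [1]
    s_flat = s_sharp = c[0]  # sum_{m=0}^{n-1} 2**m c_m rho_m for n = 1
    for n in range(1, n_terms):
        flat.append(1 if s_flat < 0 else -1)     # strict inequality for rho_flat
        sharp.append(1 if s_sharp <= 0 else -1)  # weak inequality for rho_sharp
        s_flat += 2 ** n * c[n] * flat[n]
        s_sharp += 2 ** n * c[n] * sharp[n]
    return flat, sharp


def T(rho: list[int]) -> Fraction:
    """Truncated version of the map T from (2 · 2)."""
    return sum((Fraction(1 - r, 2 ** (n + 2)) for n, r in enumerate(rho)), Fraction(0))


# Classical Takagi function: c_m = 2**-m.
c = [Fraction(1, 2 ** m) for m in range(60)]
flat, sharp = step_sequences(c, 60)
print(float(T(sharp)), float(T(flat)))  # close to 1/3 and 5/12
```

For the classical Takagi function, the two points obtained are $1/3$ and $5/12$; a direct computation confirms that the Takagi function takes its maximum value $2/3$ at both.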

Remark 2.5. By switching the signs in the sequence $(c_n)_{n\in\mathbb N_0}$, we get analogous results for the minima of the function f. Specifically, if we define sequences $\boldsymbol\lambda^\flat$ and $\boldsymbol\lambda^\sharp$ by $\lambda^\flat_0=\lambda^\sharp_0=+1$ and

\begin{equation*}\lambda_n^\flat=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}2^mc_m\lambda^\flat_m>0$,}\\[3pt]-1&\text{otherwise,}\end{cases}\qquad \qquad\lambda_n^\sharp=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}2^mc_m\lambda^\sharp_m\ge0$,}\\[3pt]-1&\text{otherwise,}\end{cases}\end{equation*}

then ${ T}(\boldsymbol\lambda^\flat)$ is the largest and ${ T}(\boldsymbol\lambda^\sharp)$ is the smallest minimiser of f in $[0,1/2]$.

The following corollary and its short proof illustrate the power of our method.

Corollary 2.6. We have $f(t)\ge0$ for all $t\in[0,1]$ if and only if $\sum_{m=0}^{n}2^mc_m\ge0$ for all $n\ge0$.

Proof. Since $f(0)=0$, we have $f\ge0$ if and only if $t=0$ is the smallest minimiser of f. By Remark 2 · 5, this is equivalent to $\lambda_n^\sharp=+1$ for all n. By the definition of $\boldsymbol\lambda^\sharp$ and induction on n, this holds if and only if $\sum_{m=0}^{n}2^mc_m\ge0$ for all $n\ge0$.
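Corollary 2 · 6 gives a criterion that can be checked term by term. The sketch below (function names are ours) tests the partial sums $\sum_{m=0}^n2^mc_m$ only for $n<N$; a finite check can therefore refute $f\ge0$ but cannot confirm it without an argument covering all n. The second example, $\textbf{c}=(1,-1,0,0,\dots)$, is our own; it fails at $n=1$, and indeed $f(1/4)=\phi(1/4)-\phi(1/2)=-1/4$.

```python
from fractions import Fraction


def partial_sums_nonnegative(c: list[Fraction]) -> bool:
    """Check sum_{m=0}^{n} 2**m c_m >= 0 for every n < len(c)."""
    s = Fraction(0)
    for n, cn in enumerate(c):
        s += 2 ** n * cn
        if s < 0:
            return False
    return True


def tent(x: Fraction) -> Fraction:
    return abs(x - round(x))


def f(c: list[Fraction], t: Fraction) -> Fraction:
    """Finite sum sum_m c_m * phi(2**m t)."""
    return sum((cm * tent(2 ** m * t) for m, cm in enumerate(c)), Fraction(0))


hata = [Fraction(1, (m + 1) ** 2) for m in range(30)]
print(partial_sums_nonnegative(hata))  # True: all c_m are positive
c = [Fraction(1), Fraction(-1)]
print(partial_sums_nonnegative(c), f(c, Fraction(1, 4)))  # False -1/4
```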

Our method also allows us to determine the cardinality of the set of maximisers of f. This is done in the following proposition.

Proposition 2.7. For $\boldsymbol\rho^\sharp$ as in (2 · 3), let

\begin{equation*}{\mathscr Z}:=\left\{n\in\mathbb N_0\,\Big|\,\sum_{m=0}^n2^mc_m\rho_m^\sharp=0\right\}.\end{equation*}

Then the number of $\{-1,+1\}$-valued sequences $\boldsymbol\rho$ that satisfy both the step condition and $\rho_0=+1$ is $2^{|{\mathscr Z}|}$ (where, as usual, $2^{\aleph_0}$ denotes the cardinality of the continuum). In particular, the number of maximisers of f in $[0,1/2]$ is equal to $2^{|{\mathscr Z}|}$, provided that no maximiser is a dyadic rational.
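The set $\mathscr Z$ in Proposition 2 · 7 can be explored along the recursion for $\boldsymbol\rho^\sharp$. The sketch below (names ours, exact rational coefficients assumed) records the indices $n<N$ at which the partial sum vanishes; since it only sees a finite part of $\mathscr Z$, it can suggest, but not prove, that $\mathscr Z$ is empty or infinite.

```python
from fractions import Fraction


def zero_set_prefix(c: list[Fraction]) -> list[int]:
    """Indices n < len(c) with sum_{m=0}^{n} 2**m c_m rho_sharp_m == 0."""
    s = Fraction(0)  # partial sum over m < n
    zeros = []
    for n, cn in enumerate(c):
        rho_n = 1 if s <= 0 else -1  # gives rho_sharp_0 = +1 since s = 0 at n = 0
        s += 2 ** n * cn * rho_n
        if s == 0:
            zeros.append(n)
    return zeros


takagi = [Fraction(1, 2 ** m) for m in range(20)]
print(zero_set_prefix(takagi))  # [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```

For the classical Takagi function the zeros occur at every odd index, consistent with $|\mathscr Z|=\aleph_0$ and hence a continuum of step sequences, whereas for $c_m=1/(m+1)^2$ the list stays empty.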

Example 2.8. Consider the function f with $c_m=1/(m+1)^2$, which was considered in [Reference Hata and Yamaguti14]. We claim that it has exactly two maximisers, at $t={11}/{24}$ and $t={13}/{24}$. See Figure 2 for an illustration. To prove our claim, we need to identify the sequence $\boldsymbol\rho^\sharp$ and show that the sums in (2 · 3) never vanish. A short computation yields that $\rho^\sharp_0=1$, $\rho^\sharp_1=-1=\rho^\flat_1$, and $\rho^\sharp_2=-1=\rho^\flat_2$. To simplify the notation, we let $\boldsymbol\rho:=\boldsymbol\rho^\sharp$ and define

\begin{equation*}R_n:=\sum_{m=0}^n\frac{2^m}{(m+1)^2}\rho_m.\end{equation*}


Next, we prove by induction on n that, for $n\ge2$,

To establish the case $n=2$, note first that $R_2=1/{18}$ and hence $\rho_3=-1$. It follows that $R_3=1/18-8/16=-4/9$. This gives in turn that $\rho_4=+1$ and $R_4=-4/9+16/25=44/225$. This establishes (2 · 4) and (2 · 5) for $n=2$. Now suppose that our claims have been established for all k with $2\le k\le n$. Then the second inequality in (2 · 5) yields $\rho_{2n+1}=-1$ and in turn

and

This yields $\rho_{2n+2}=+1$, from which we get as above that

This proves our claims. In particular, the sums $R_n$ never vanish, so Proposition 2 · 7 shows that $\boldsymbol\rho$ is the only $\{-1,+1\}$-valued sequence with $\rho_0=+1$ that satisfies the step condition. Furthermore, (2 · 2) yields that the unique maximiser in $[0,1/2]$ is given by

\begin{equation*}T(\boldsymbol\rho) = \frac{1}{4} + \frac{1}{8} + \frac{1}{16}\sum_{n = 0}^{\infty} \frac{1}{4^n} = \frac{11}{24}.\end{equation*}

Since $\phi(2^m(1-t))=\phi(2^mt)$ for all m, we have $f(1-t)=f(t)$, so the only other maximiser is $1-11/24=13/24$.
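The computations in Example 2 · 8 can be reproduced exactly. The following sketch (our own names, exact rational arithmetic assumed) runs the recursion for $\boldsymbol\rho^\sharp$ with $c_m=1/(m+1)^2$, records the partial sums $R_n$, and compares the truncated value of $T(\boldsymbol\rho)$ with $11/24$.

```python
from fractions import Fraction


def rho_sharp_with_sums(c: list[Fraction]) -> tuple[list[int], list[Fraction]]:
    """rho_sharp together with R_n = sum_{m=0}^{n} 2**m c_m rho_m."""
    rho, sums = [], []
    s = Fraction(0)
    for n, cn in enumerate(c):
        r = 1 if s <= 0 else -1
        s += 2 ** n * cn * r
        rho.append(r)
        sums.append(s)
    return rho, sums


def T(rho: list[int]) -> Fraction:
    return sum((Fraction(1 - r, 2 ** (n + 2)) for n, r in enumerate(rho)), Fraction(0))


c = [Fraction(1, (m + 1) ** 2) for m in range(40)]
rho, R = rho_sharp_with_sums(c)
print(rho[:8])            # [1, -1, -1, -1, 1, -1, 1, -1]
print(R[2], R[3], R[4])   # 1/18 -4/9 44/225
print(float(T(rho)), 11 / 24)
```

The partial sums alternate in sign from $n=2$ on and never vanish in this range, consistent with the claim that the sums in (2 · 3) never vanish.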

Fig. 2. The function f with $c_m=1/(m+1)^2$ analysed in Example 2 · 8. The vertical lines mark the two maximisers at ${11}/{24}$ and ${13}/{24}$.

3. Global extrema of the Takagi–Landsberg functions

The Takagi–Landsberg function with parameter $\alpha\in(-2,2)$ is given by

\begin{equation}f_\alpha(t):=\sum_{m=0}^\infty\frac{\alpha^m}{2^m}\phi(2^mt),\qquad t\in[0,1].\end{equation}

In the case $\alpha=1$, the function $f_1$ is the classical Takagi function, which was first introduced by Takagi [Reference Takagi22] and later rediscovered many times; see, e.g., the surveys [Reference Allaart and Kawamura2,Reference Lagarias16]. The class of functions $f_\alpha$ with $-2<\alpha<2$ is sometimes also called the exponential Takagi class. See Figure 3 for an illustration.

Fig. 3. Takagi–Landsberg functions $f_{-\alpha}$ (left) and $f_\alpha$ (right) for four different values of $\alpha$.

By letting $c_m:=\alpha^m2^{-m}$, which defines a sequence in $\ell^1$ because $|\alpha|<2$, we see that the results from Section 2 apply to the function $f_\alpha$; note that then $2^mc_m=\alpha^m$. In particular, Theorem 2 · 3 characterises the maximisers of $f_\alpha$ in terms of a step condition satisfied by their Rademacher expansions. Let us restate the corresponding Definition 2 · 2 in our present situation.

Definition 3.1. Let $\alpha\in(-2,2)$. A $\{-1,+1\}$-valued sequence $(\rho_m)_{m\in\mathbb N_0}$ satisfies the step condition for $\alpha$ if

\begin{equation*}\rho_{n}\sum_{m=0}^{n-1}\alpha^m\rho_m\le0\qquad\text{for all $n\in\mathbb N$.}\end{equation*}

As in (2 · 3), we define recursively the following pair of sequences $\boldsymbol\rho^{\flat}(\alpha)$ and $\boldsymbol\rho^{\sharp}(\alpha)$. We let $\rho^{\flat}_0(\alpha)=\rho^{\sharp}_0(\alpha)=1$ and, for $n\in\mathbb N$,

\begin{equation}\rho_n^\flat(\alpha)=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}\alpha^m\rho^\flat_m(\alpha)<0$,}\\[3pt]-1&\text{otherwise,}\end{cases}\qquad \qquad\rho_n^\sharp(\alpha)=\begin{cases}+1&\text{if $\sum_{m=0}^{n-1}\alpha^m\rho^\sharp_m(\alpha)\le0$,}\\[3pt]-1&\text{otherwise.}\end{cases}\end{equation}

Then we define

\begin{equation*}\tau^\flat(\alpha):={ T}(\boldsymbol\rho^\flat(\alpha))\qquad\text{and}\qquad\tau^\sharp(\alpha):={ T}(\boldsymbol\rho^\sharp(\alpha)),\end{equation*}

where T is as in (2 · 2). It follows from Corollary 2 · 4 that $\tau^\flat(\alpha)$ is the largest and $\tau^\sharp(\alpha)$ is the smallest maximiser of $f_\alpha$ in $[0,1/2]$. We start with the following general result.

Proposition 3.2. For $\alpha\in(-2,2)$, the following conditions are equivalent:

(a) the function $f_\alpha$ has a unique maximiser in $[0,1/2]$;

(b) $\tau^\flat(\alpha)=\tau^\sharp(\alpha)$;

(c) there exists no $n\in\mathbb N$ such that $\sum_{m=0}^n\alpha^m\rho_m^\sharp(\alpha)=0$;

(d) the functions $\tau^\flat$ and $\tau^\sharp$ are continuous at $\alpha$.
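For the Takagi–Landsberg functions the recursion only involves powers of $\alpha$, since $2^mc_m=\alpha^m$. The sketch below (names ours) approximates $\tau^\flat(\alpha)$ and $\tau^\sharp(\alpha)$ by truncating after `n_terms` digits; it assumes a rational $\alpha$, so that the test for a vanishing partial sum in condition (c) is exact. For irrational $\alpha$, such as the step roots, a floating-point version would be unreliable precisely where $\tau^\flat$ and $\tau^\sharp$ differ.

```python
from fractions import Fraction


def tau_flat_sharp(alpha: Fraction, n_terms: int = 60) -> tuple[Fraction, Fraction]:
    """Truncated approximations of (tau_flat(alpha), tau_sharp(alpha))."""
    result = []
    for strict in (True, False):  # True: rho_flat (test "< 0"); False: rho_sharp ("<= 0")
        s, t = Fraction(0), Fraction(0)
        for n in range(n_terms):
            if n == 0:
                r = 1
            elif strict:
                r = 1 if s < 0 else -1
            else:
                r = 1 if s <= 0 else -1
            s += alpha ** n * r                 # partial sum sum_{m<=n} alpha**m rho_m
            t += Fraction(1 - r, 2 ** (n + 2))  # accumulate T(rho) from (2 · 2)
        result.append(t)
    return result[0], result[1]


for alpha in (Fraction(3, 2), Fraction(1), Fraction(1, 4), Fraction(-1)):
    flat, sharp = tau_flat_sharp(alpha)
    print(alpha, float(flat), float(sharp))
```

With these inputs one obtains approximately $1/3$ for $\alpha=3/2$, the pair $(5/12,1/3)$ for $\alpha=1$ (where $\tau^\flat\neq\tau^\sharp$), and $1/2$ for $\alpha=1/4$ and $\alpha=-1$, in line with the regimes described in the introduction.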

In the following subsections, we discuss the maximisation of $f_\alpha$ for various regimes of $\alpha$.

3.1 Global maxima for $\alpha\in(-2,-1)$

To the best of our knowledge, the case $\alpha\in(-2,-1)$ has not yet been discussed in the literature. Here, we give an explicit solution for both maximisers and maximum values in this regime. Before stating our corresponding result, we formulate the following elementary lemma.

Lemma 3.3. For $n\in\mathbb N$, the Littlewood polynomial $p_{2n}(x)=1-x-\cdots-x^{2n-1}-x^{2n}$ has a unique negative root $x_{n}$. Moreover, the sequence $(x_{n})_{n\in\mathbb N}$ is strictly increasing, belongs to $(-2,-1)$, and converges to $-1$ as $n\uparrow\infty$.

Note that $-x_1= (1+\sqrt5)/2\approx 1.61803$ is the golden ratio. Approximate numerical values for the subsequent roots are $x_2\approx -1.29065$, $x_3\approx -1.19004$, $x_4\approx -1.14118$, and $x_5\approx -1.11231$.
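The numerical values above are easy to reproduce. The following sketch (our own helper names) locates the negative root $x_n$ of $p_{2n}$ by bisection on $[-2,-1]$. The bracket works because $p_{2n}(-1)=1>0$, while $p_{2n}(-2)=1-\tfrac23(4^n-1)<0$, and Lemma 3 · 3 guarantees that the negative root is unique.

```python
import math


def littlewood_p(n: int, x: float) -> float:
    """p_{2n}(x) = 1 - x - x**2 - ... - x**(2n)."""
    return 1.0 - sum(x**k for k in range(1, 2 * n + 1))


def negative_root(n: int, tol: float = 1e-13) -> float:
    """Negative root x_n of p_{2n}, found by bisection on [-2, -1]."""
    lo, hi = -2.0, -1.0  # p_{2n}(lo) < 0 < p_{2n}(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if littlewood_p(n, mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(negative_root(1), -(1 + math.sqrt(5)) / 2)  # both approximately -1.6180339887
for n in range(2, 6):
    print(n, round(negative_root(n), 5))
```

The printed values should agree with the approximations quoted above to about five decimals and increase towards $-1$, as stated in Lemma 3 · 3.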

Theorem 3.4. Let $(x_{n})_{n\in\mathbb N_0}$ be the sequence introduced in Lemma 3 · 3, complemented by $x_0:=-2$. Then, for $\alpha \in (x_n,x_{n+1})$, the function $f_\alpha$ has exactly two maximisers in [0,1], which are located at

If $\alpha=x_n$ for some $n\in\mathbb N$, then $f_\alpha$ has exactly four maximisers in [0,1], which are located at $t_{n-1}$, $t_{n}$, $1-t_{n-1}$ and $1-t_{n}$. Moreover,

and this is equal to the maximum value of $f_\alpha$ if $\alpha\in[x_n,x_{n+1}]$.

Remark 3.5. It is easy to see that the right-hand side of (3·3) is strictly larger than $1/2$ for $\alpha\in(-2,-1)$. Moreover, it tends to $+\infty$ as $\alpha\downarrow-2$ and to $1/2$ as $\alpha\uparrow-1$.

3.2. Global maxima for $\alpha\in[-1,1/2]$

Galkin and Galkina [10] proved that for $\alpha\in[-1,1/2]$ the function $f_\alpha$ has a global maximum at $t=1/2$ with maximum value $f_\alpha(1/2)=1/2$. Here, we give a short proof of this result by using our method and additionally establish the uniqueness of the maximiser.

Proposition 3.6. For $\alpha\in[-1,1/2]$, the function $f_\alpha$ has the unique maximiser $t=1/2$ and the maximum value $f_\alpha(1/2)=1/2$.

Proof. Since $\phi(1/2)=1/2$ and $\phi(2^{m-1})=0$ for all $m\ge1$, we have $f_\alpha(1/2)=1/2$ for every $\alpha$. Hence the result will follow if we can establish that $\tau^\sharp(\alpha)=1/2$ for all $\alpha\in[-1,1/2]$. This is the case if $\boldsymbol\rho:=\boldsymbol\rho^\sharp(\alpha)$ satisfies $\rho_n=-1$ for all $n\ge1$. We prove this by induction on $n$. The case $n=1$ follows immediately from $\rho_0=1$ and (3·2). If $\rho_1=\cdots=\rho_{n-1}=-1$ has already been established, then

\begin{equation*}\sum_{m=0}^{n-1}\alpha^m\rho_m=\frac{\alpha^n-2\alpha+1}{1-\alpha}.\end{equation*}

If the right-hand side is strictly positive, then $\rho_n=-1$. Since the denominator $1-\alpha$ is strictly positive, it suffices to consider the numerator. Its positivity is obvious for $\alpha\in[-1,0]$ and for $\alpha=1/2$, where it equals $2^{-n}$. For $\alpha\in(0,1/2)$, we take the derivative of the numerator with respect to $\alpha$. This derivative is equal to $n\alpha^{n-1}-2$, which is strictly negative for $\alpha\in(0,1/2)$, because $n\alpha^{n-1}\le n2^{1-n}\le1$ for those $\alpha$. Thus the numerator is strictly decreasing on $(0,1/2]$, and since it is strictly positive at $\alpha=1/2$, it is strictly positive on $(0,1/2)$ as well. This completes the induction, and the result follows.
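The induction can be illustrated with exact rational arithmetic. Rule (3·2) is not restated in this section, so the sketch below assumes that it reads $\rho_0=+1$ and, for $n\ge1$, $\rho_n=-1$ if $\sum_{m<n}\alpha^m\rho_m>0$ and $\rho_n=+1$ otherwise; how a tie is resolved is irrelevant here, because all partial sums turn out to be positive for $\alpha\in[-1,1/2]$. The functions `greedy_signs` and `partial_sum` are hypothetical helpers, not part of the paper.

```python
from fractions import Fraction


def greedy_signs(alpha, N):
    """First N terms of a greedy sign sequence rho_0, rho_1, ...

    Hypothetical reading of rule (3.2): rho_0 = +1 and, for n >= 1,
    rho_n = -1 if S_{n-1} = sum_{m<n} alpha^m rho_m > 0, else +1.
    """
    rho = [1]
    S = Fraction(1)      # S_0 = alpha^0 * rho_0
    power = Fraction(1)  # alpha^0
    for _ in range(1, N):
        r = -1 if S > 0 else 1
        power *= alpha   # now alpha^n
        S += power * r
        rho.append(r)
    return rho


def partial_sum(alpha, rho, n):
    """S_{n-1} = sum_{m=0}^{n-1} alpha^m rho_m, computed directly."""
    return sum(alpha**m * rho[m] for m in range(n))


if __name__ == "__main__":
    for alpha in (Fraction(-1), Fraction(-1, 3), Fraction(0), Fraction(1, 4), Fraction(1, 2)):
        print(alpha, greedy_signs(alpha, 12))
```

For every rational $\alpha$ in $[-1,1/2]$ one should see $\rho=(+1,-1,-1,\dots)$, i.e. the binary expansion $0.0111\ldots$ of $1/2$, whereas for instance $\alpha=3/4$ produces $\rho_3=+1$ because $1-\alpha-\alpha^2<0$.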

3.3. Global maxima for $\alpha\in(1/2,1]$

This is the most interesting regime, as can already be seen from Figure 1. Kahane [15] showed that the maximum value of the classical Takagi function $f_1$ is $2/3$ and that the set of maximisers is equal to the set of all points in [0,1] whose binary expansion satisfies $\varepsilon_{2n}+\varepsilon_{2n+1}=1$ for each $n\in\mathbb N_0$. This is a perfect set of Hausdorff dimension $1/2$. For other values of $\alpha\in(1/2,1]$, we are only aware of the following result by Tabor and Tabor [21]. They found the maximum value of $f_{\alpha_n}$, where $\alpha_n$ is the unique positive root of the Littlewood polynomial $1-x-x^2-\cdots -x^n$. This sequence satisfies $\alpha_1=1$ and $\alpha_n\downarrow1/2$ as $n\uparrow\infty$. The maximum value of $f_{\alpha_n}$ is then given by $C(\alpha_n)$, where

\begin{equation}C(\alpha):=\frac{1}{2-2(2 \alpha -1)^{\frac{\log_2 \alpha-1}{\log_2 \alpha }}}.\end{equation}
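A minimal Python sketch of (3·4) and of the roots $\alpha_n$ (located by bisection on $[1/2,1]$, where $1-x-\cdots-x^n$ equals $2^{-n}>0$ at $x=1/2$ and $1-n\le0$ at $x=1$) may help with experiments. The special case $\alpha=1$ is our own addition: the exponent in (3·4) is undefined there, and a short limit computation gives $(2\alpha-1)^{(\log_2\alpha-1)/\log_2\alpha}\to1/4$, hence $C(\alpha)\to2/3$ as $\alpha\uparrow1$, in agreement with Kahane's value. The names `C` and `alpha_n` are hypothetical.

```python
import math


def C(alpha):
    """Expression (3.4) for alpha in (1/2, 1].

    At alpha = 1 the exponent is singular; we return the limit 2/3
    (our own computation, matching Kahane's maximum of the Takagi function).
    """
    if alpha == 1.0:
        return 2.0 / 3.0
    l = math.log2(alpha)
    return 1.0 / (2.0 - 2.0 * (2.0 * alpha - 1.0) ** ((l - 1.0) / l))


def alpha_n(n, iterations=100):
    """Unique positive root of 1 - x - ... - x^n, by bisection on [1/2, 1]."""
    def poly(x):
        return 1.0 - sum(x**k for k in range(1, n + 1))

    lo, hi = 0.5, 1.0  # poly(1/2) = 2^{-n} > 0 and poly(1) = 1 - n <= 0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if poly(mid) > 0:
            lo = mid
        else:
            hi = mid
    return hi


if __name__ == "__main__":
    for n in range(1, 7):
        a = alpha_n(n)
        print(f"n={n}  alpha_n={a:.6f}  C(alpha_n)={C(a):.6f}")
```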

Tabor and Tabor [21] observed numerically that the maximum value of $f_\alpha$ typically differs from $C(\alpha)$ for other values of $\alpha\in(1/2,1)$. In Example 3·10, we will characterise the set of maximisers of $f_{\alpha_n}$, where $\alpha_n$ is as above. In Example 3·11, we will investigate a specific choice of $\alpha$ for which $C(\alpha)$ is indeed different from the maximum value of $f_\alpha$.

We have seen in Sections 3.1 and 3.2 that for $\alpha\le1/2$ the function $f_\alpha$ has one, two or four maximisers in [0,1]. For $\alpha>1/2$, this situation changes. The following result shows that then $f_\alpha$ has either two or uncountably many maximisers. Moreover, the result quoted in Section 3.4 will imply that the latter case can only happen for $\alpha\in(1/2,1]$.

Theorem 3.7. For $\alpha>1/2$, we have the following dichotomy:

(a) if $\sum_{m=0}^n\alpha^m\rho^\sharp_m\neq0$ for all $n$, then the function $f_\alpha$ has exactly two maximisers in [0,1]. They are given by $\tau^\sharp(\alpha)$ and $1-\tau^\sharp(\alpha)$ and have $\boldsymbol\rho^\sharp$ and $-\boldsymbol\rho^\sharp$ as their Rademacher expansions;

(b) otherwise, let $n_0$ be the smallest $n$ such that $\sum_{m=0}^n\alpha^m\rho^\sharp_m=0$. Then the set of maximisers of $f_\alpha$ consists of all those $t\in[0,1]$ that have a Rademacher expansion consisting of successive blocks of the form $\rho^\sharp_0,\dots, \rho^\sharp_{n_0}$ or $-\rho^\sharp_0,\dots,-\rho^\sharp_{n_0}$. This is a perfect set of Hausdorff dimension $1/(n_0+1)$, and its $1/(n_0+1)$-dimensional Hausdorff measure is finite and strictly positive.

The preceding theorem yields the following corollary.

Corollary 3.8. For $\alpha>1/2$, the function $f_\alpha$ cannot have a maximiser that is a dyadic rational number.

Note that Theorem 3·4 implies that there are no dyadic rational maximisers for $\alpha<-1$ either. However, by Proposition 3·6, the unique maximiser in the case $-1\le \alpha\le1/2$ is the dyadic rational $t=1/2$.

Our next result shows in particular that there is no nonempty open interval in $(1/2,1]$ on which $\tau^\flat$ or $\tau^\sharp$ is constant.

Theorem 3.9. There is no nonempty open interval in $(1/2,1)$ on which $\tau^\flat$ or $\tau^\sharp$ is continuous.

Example 3.10. Tabor and Tabor [21] found the maximum value of $f_{\alpha_n}$, where $\alpha_n$ is the unique positive root of the Littlewood polynomial $1-x-x^2-\cdots -x^n$. The case $n=1$, and in turn $\alpha_1=1$, corresponds to the classical Takagi function as studied by Kahane [15]. We will now determine the corresponding sets of maximisers. Since $\alpha_m>\alpha_n$ for $m<n$ and $x\mapsto1-\sum_{k=1}^mx^k$ is strictly decreasing on $(0,\infty)$, we must have $1-\sum_{k=1}^m\alpha_n^k>0$ for $m=1,\dots, n-1$. Hence,

\begin{align*}\boldsymbol\rho^\sharp(\alpha_n)&=(+1,\underbrace{-1,\dots,-1}_{\text{n times}},+1,\underbrace{-1,\dots,-1}_{\text{n times}},\dots),\\\boldsymbol\rho^\flat(\alpha_n)&=(+1,\underbrace{-1,\dots,-1}_{\text{n times}},-1,\underbrace{+1,\dots,+1}_{\text{n times}},-1,\underbrace{+1,\dots,+1}_{\text{n times}},\dots).\end{align*}

Every maximiser in [0,1] has a Rademacher expansion that is made up of successive blocks of length $n+1$ taking the form $+1,-1,\dots,-1$ or $-1,+1,\dots, +1$. This is a perfect set of Hausdorff dimension $1/(n+1)$. The smallest maximiser is given by

\begin{align*}\tau^\sharp(\alpha_n)&=\sum_{m=0}^\infty\sum_{k=2}^{n+1}2^{-(m(n+1)+k)}=\Big(\frac12-2^{-(n+1)}\Big)\sum_{m=0}^\infty2^{-m(n+1)}=\frac{2^n-1}{2^{n+1}-1}.\end{align*}

The largest maximiser in $[0,1/2]$ is

\begin{equation*}\tau^\flat(\alpha_n)=\frac12-2^{-(n+1)}+\sum_{m=1}^\infty 2^{-m(n+1)-1}=\frac{1}{2} \Big(1-2^{-n}+\frac{1}{2^{n+1}-1}\Big).\end{equation*}
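Both closed forms can be confirmed with exact rational arithmetic. The sketch below assumes the convention $\varepsilon_{m+1}=(1-\rho_m)/2$ linking a Rademacher expansion to the binary digits $\varepsilon_1,\varepsilon_2,\dots$, which is consistent with the double sum for $\tau^\sharp(\alpha_n)$; the helper names are hypothetical.

```python
from fractions import Fraction


def eventually_periodic(prefix, period):
    """Exact value of the binary number 0.(prefix)(period)(period)..."""
    p, L = len(prefix), len(period)
    head = Fraction(int("".join(map(str, prefix)) or "0", 2), 2**p)
    tail = Fraction(int("".join(map(str, period)), 2), 2**L - 1)
    return head + tail / 2**p


def digits(rho_block):
    """Binary digits from Rademacher signs, assuming eps_{m+1} = (1 - rho_m) / 2."""
    return [(1 - r) // 2 for r in rho_block]


def tau_sharp(n):
    # rho^sharp(alpha_n) repeats the block (+1, -1, ..., -1) of length n + 1
    return eventually_periodic([], digits([1] + [-1] * n))


def tau_flat(n):
    # rho^flat(alpha_n): one block (+1, -1, ..., -1), then (-1, +1, ..., +1) repeated
    return eventually_periodic(digits([1] + [-1] * n), digits([-1] + [1] * n))


if __name__ == "__main__":
    for n in range(1, 5):
        print(n, tau_sharp(n), tau_flat(n))
```

For $n=1$ this recovers $1/3$ and $5/12$, the smallest maximiser of the classical Takagi function and the largest one in $[0,1/2]$.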

Example 3.11. Consider the choice $\alpha:=\frac14\big(1+\sqrt{13}-\sqrt{2(\sqrt{13}-1)}\big)\approx 0.580692$.

One checks that $1-\alpha-\alpha^2-\alpha^3+\alpha^4=0$ and that $1-\alpha-\alpha^2-\alpha^3<0$, $1-\alpha-\alpha^2>0$, and $1-\alpha>0$. Therefore, $(\rho^\sharp_0,\dots,\rho^\sharp_4)=(+1,-1,-1,-1,+1)$, the integer $n_0$ in Theorem 3·7(b) equals 4, and every maximiser in [0,1] has a Rademacher expansion that consists of successive blocks of the form $+1,-1,-1,-1,+1$ or $-1,+1,+1,+1,-1$. This is a Cantor-type set of Hausdorff dimension $1/5$. Furthermore,

\begin{align*}\tau^\sharp(\alpha)&=\sum_{n=0}^\infty\big(0\cdot 2^{-(5n+1)}+2^{-(5n+2)}+ 2^{-(5n+3)}+ 2^{-(5n+4)}+0\cdot 2^{-(5n+5)}\big)=\frac{14}{31}\approx 0.451613\end{align*}

is the smallest maximiser, and

\begin{equation*}\tau^\flat(\alpha)=\frac7{16}+\sum_{n=1}^\infty \big(2^{-5n-1}+2^{-5n-5}\big)=\frac{451}{992}\approx 0.454637\end{equation*}

is the largest maximiser in $[0,1/2]$. To compute the maximum value, we can either use Lemma 2·1 or compute $f_\alpha(14/31)$ directly as follows. We note that $\phi(2^{5n+k}\cdot14/31)=b_k/31$ for $k=0,\dots,4$ and $n\in\mathbb N_0$, where $b_0=14$, $b_1=3$, $b_2=6$, $b_3=12$, and $b_4=7$. Thus,

\begin{align*}f_\alpha(14/31)&=\frac1{31}\sum_{n=0}^\infty\Big(\frac\alpha2\Big)^{5n}\Big(14+3\frac\alpha2+6\Big(\frac\alpha2\Big)^2+12\Big(\frac\alpha2\Big)^3+7\Big(\frac\alpha2\Big)^4\Big)\\[3pt]&=\frac{39+3 \sqrt{13}-\sqrt{6 \left(25+7 \sqrt{13}\right)}}{56-2\sqrt{13}+4 \sqrt{7+2 \sqrt{13}}}\approx 0.508155,\end{align*}

where the second identity was obtained by using Mathematica 12.0. For the function in (3·4), however, we get $C(\alpha)\approx 0.508008$, which confirms the numerical observation from [21] that $C(\cdot)$ may not yield correct maximum values if evaluated at arguments different from the positive roots of $1-x-x^2-\cdots -x^n$.
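The numbers in this example can be cross-checked in floating point. In the sketch below, the closed form for $\alpha$ is our own derivation: substituting $u=\alpha+1/\alpha$ turns the palindromic equation $1-\alpha-\alpha^2-\alpha^3+\alpha^4=0$ into $u^2-u-3=0$. The tent map is evaluated exactly on rationals, the series for $f_\alpha(14/31)$ is truncated after 200 terms (the neglected tail is far below double precision since $\alpha/2<0.3$), and the result is compared with the Mathematica closed form and with $C(\alpha)$. All names are hypothetical.

```python
import math
from fractions import Fraction

S13 = math.sqrt(13)
# Root of 1 - a - a^2 - a^3 + a^4 in (1/2, 1), via u = a + 1/a and u^2 - u - 3 = 0.
ALPHA = (1 + S13 - math.sqrt(2 * (S13 - 1))) / 4


def tent(x):
    """Tent map phi(x) = distance from x to the nearest integer (exact for Fractions)."""
    r = x % 1
    return min(r, 1 - r)


def f(alpha, t, terms=200):
    """Truncated series sum_{m<terms} (alpha/2)^m phi(2^m t), with t a Fraction."""
    return sum((alpha / 2) ** m * float(tent(2**m * t)) for m in range(terms))


def C(a):
    """Expression (3.4)."""
    l = math.log2(a)
    return 1.0 / (2.0 - 2.0 * (2.0 * a - 1.0) ** ((l - 1.0) / l))


b = [tent(2**k * Fraction(14, 31)) * 31 for k in range(5)]  # b_0, ..., b_4
value = f(ALPHA, Fraction(14, 31))
closed = (39 + 3 * S13 - math.sqrt(6 * (25 + 7 * S13))) / (
    56 - 2 * S13 + 4 * math.sqrt(7 + 2 * S13))

if __name__ == "__main__":
    print(f"alpha ~ {ALPHA:.6f}, b = {[int(x) for x in b]}")
    print(f"f_alpha(14/31) ~ {value:.6f} (closed form {closed:.6f}), C(alpha) ~ {C(ALPHA):.6f}")
```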

3.4. Global maxima for $\alpha\in(1,2)$

For $\alpha=\sqrt2$, it can be deduced from [11, lemma 5] that $f_{\sqrt2}$ has maximisers at $t=1/3$ and $t=2/3$ and maximum value $(2+\sqrt2)/3$. That lemma was later rediscovered by the second author in [20, lemma 3·1]. The statement on the maxima of $f_{\sqrt2}$ was given independently in [10, 20]. Mishura and Schied [18] subsequently extended this to the following result, which we quote here for the sake of completeness. It is not difficult to prove it with our present method; see [13, example 4·3·1].

Theorem 3.12 (Mishura and Schied [18]). For $\alpha\in(1,2)$, the function $f_\alpha$ has exactly two maximisers, at $t=1/3$ and $t=2/3$, and its maximum value is $(3(1-\alpha/2))^{-1}$.
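A quick numerical sanity check of Theorem 3·12 (an illustration, not a proof): since $\phi(2^m/3)=1/3$ for all $m$, the series gives $f_\alpha(1/3)=\sum_m(\alpha/2)^m/3=(3(1-\alpha/2))^{-1}$, which for $\alpha=\sqrt2$ equals $(2+\sqrt2)/3$. At a dyadic point $k2^{-K}$ all terms with $m\ge K$ vanish, so $f_\alpha$ can be evaluated exactly on the grid $\{k2^{-K}\}$ and compared with this value. The helper names and the grid size $K=12$ are our own choices.

```python
import math
from fractions import Fraction


def tent(x):
    r = x % 1
    return min(r, 1 - r)


def f_series(alpha, t, terms=400):
    """Truncated series for f_alpha(t); t is a Fraction so phi(2^m t) is exact."""
    return sum((alpha / 2) ** m * float(tent(2**m * t)) for m in range(terms))


def f_dyadic(alpha, k, K):
    """f_alpha at the dyadic point k / 2^K; terms with m >= K vanish there."""
    N = 1 << K
    total = 0.0
    for m in range(K):
        r = (k << m) % N  # numerator of the fractional part of 2^m k / 2^K
        total += (alpha / 2) ** m * min(r, N - r) / N
    return total


def peak(alpha):
    """Maximum value (3(1 - alpha/2))^{-1} from Theorem 3.12."""
    return 1.0 / (3.0 * (1.0 - alpha / 2.0))


if __name__ == "__main__":
    K = 12
    for a in (1.2, math.sqrt(2), 1.5, 1.8):
        grid_max = max(f_dyadic(a, k, K) for k in range(2**K + 1))
        print(f"alpha={a:.4f}  f(1/3)={f_series(a, Fraction(1, 3)):.6f}  "
              f"peak={peak(a):.6f}  max on grid={grid_max:.6f}")
```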

3.5. Global minima

In this section, we discuss the minima of the function $f_\alpha$.

Theorem 3.13. For the global minima of the function $f_\alpha$, we have the following three cases:

(a) for $\alpha\in(-2,-1)$, the function $f_\alpha$ has a unique minimiser in $[0,1/2]$, which is located at $t=1/5$. Moreover, the minimum value is

\begin{equation*}f_\alpha(1/5)=\frac{1+\alpha}{5(1-(\alpha/2)^2)}.\end{equation*}

(b) for $\alpha=-1$, the minimum value of $f_\alpha$ is equal to 0, and the set of minimisers is equal to the set of all $t\in[0,1]$ that have a Rademacher expansion $\boldsymbol\rho$ with $\rho_{2n}=\rho_{2n+1}$ for $n\in\mathbb N_0$. This is a perfect set of Hausdorff dimension $1/2$, and its $1/2$-dimensional Hausdorff measure is finite and strictly positive.

(c) for $\alpha\in(-1,2)$, the unique minimiser of $f_\alpha$ in $[0,1/2]$ is at $t=0$, and the minimum value is $f_\alpha(0)=0$.
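The formula in part (a) has a one-line explanation: the binary expansion of $1/5$ is $0.\overline{0011}$, so $\phi(2^m/5)$ alternates between $1/5$ and $2/5$, and $f_\alpha(1/5)=\frac15(1+\alpha)\sum_{k\ge0}(\alpha/2)^{2k}$. The sketch below checks this identity and compares it with exact values of $f_\alpha$ on a dyadic grid, where the series is finite; it is a numerical illustration under these choices (hypothetical helper names, grid size $K=12$), not a proof.

```python
from fractions import Fraction


def tent(x):
    r = x % 1
    return min(r, 1 - r)


def f_series(alpha, t, terms=1000):
    """Truncated series for f_alpha(t); t is a Fraction so phi(2^m t) is exact."""
    return sum((alpha / 2) ** m * float(tent(2**m * t)) for m in range(terms))


def f_dyadic(alpha, k, K):
    """f_alpha at k / 2^K, where all terms with m >= K vanish."""
    N = 1 << K
    total = 0.0
    for m in range(K):
        r = (k << m) % N
        total += (alpha / 2) ** m * min(r, N - r) / N
    return total


def value_at_one_fifth(alpha):
    """Closed form of f_alpha(1/5) from Theorem 3.13(a)."""
    return (1 + alpha) / (5 * (1 - (alpha / 2) ** 2))


if __name__ == "__main__":
    K = 12
    for a in (-1.5, -1.0, -0.5):
        grid_min = min(f_dyadic(a, k, K) for k in range(2**K + 1))
        print(f"alpha={a}: f(1/5)={value_at_one_fifth(a):.6f}, min on grid={grid_min:.6f}")
```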

The preceding theorem and Remark 3.5 yield immediately the following corollary.

Corollary 3.14. The function $f_\alpha(t)$ is nonnegative for all $t\in[0,1]$ if and only if $\alpha\ge-1$. Moreover, there is no $\alpha\in(-2,2)$ such that $f_\alpha$ is nonpositive.

The fact that $f_\alpha\ge0$ for $\alpha\ge-1$ can alternatively be deduced from an argument in the proof of [10, Theorem 4.1].

4. Real (step) roots of Littlewood polynomials

In this section, we link our analysis of the maxima of the Takagi–Landsberg functions to certain real roots of the Littlewood polynomials. Recall that a Littlewood polynomial is a polynomial whose coefficients are all $-1$ or $+1$. By [4, corollary 3·3·1], the complex roots of any Littlewood polynomial must lie in the annulus $\{z\in\mathbb C\,|\,1/2<|z|<2\}$. Hence, the real roots can only lie in $(-2,-1/2)\cup(1/2,2)$. Below, we will show in Corollary 4.5 that the real roots are actually dense in that set. We start with the following simple lemma.

Lemma 4.1. The numbers $-1$ and $+1$ are the only rational roots of Littlewood polynomials.

Proof. Assume $\alpha \in \mathbb{Q}$ is a rational root of some Littlewood polynomial $P_n(x)$. Then the monic polynomial $x - \alpha$ divides $P_n(x)$ in $\mathbb{Q}[x]$. Since $P_n(x)\in\mathbb{Z}[x]$, Gauss's lemma yields that $x - \alpha \in \mathbb{Z}[x]$ and hence $\alpha \in \mathbb{Z}$. By the above-mentioned [4, corollary 3·3·1], we have $1/2<|\alpha|<2$, and so $|\alpha| = 1$.

Definition 4.2. For given $n\in\mathbb N$, let $P_n(x)=\sum_{m=0}^n\rho_mx^m$ be a Littlewood polynomial with coefficients $\rho_m\in\{-1,+1\}$. If $k\le n$, we write $P_k(x)=\sum_{m=0}^k\rho_mx^m$. A number $\alpha\in{\mathbb R}$ is called a step root of $P_n$ if $P_n(\alpha)=0$ and $\rho_{k+1}P_k(\alpha)\le0$ for $k=0,\dots, n-1$.
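Definition 4.2 translates directly into a numerical test. The sketch below is a floating-point approximation: since step roots are typically irrational, both the root condition and the sign conditions are checked up to a tolerance `tol`, an assumption that could misclassify borderline cases. Coefficients are passed as the list $[\rho_0,\dots,\rho_n]$; the function names are hypothetical.

```python
import math


def partial_values(coeffs, x):
    """[P_0(x), ..., P_n(x)] with P_k(x) = sum_{m<=k} coeffs[m] * x**m."""
    values, total, power = [], 0.0, 1.0
    for c in coeffs:
        total += c * power
        values.append(total)
        power *= x
    return values


def is_step_root(coeffs, alpha, tol=1e-9):
    """Numerical test of Definition 4.2 for coeffs = [rho_0, ..., rho_n] in {+1, -1}."""
    P = partial_values(coeffs, alpha)
    if abs(P[-1]) > tol:  # alpha must be a root of P_n
        return False
    # rho_{k+1} * P_k(alpha) <= 0 for k = 0, ..., n - 1 (up to rounding)
    return all(coeffs[k + 1] * P[k] <= tol for k in range(len(coeffs) - 1))


if __name__ == "__main__":
    golden = (1 + math.sqrt(5)) / 2
    print(is_step_root([1, -1, -1], -golden))  # x_1 from Lemma 3.3
    print(is_step_root([1, 1, -1], golden))    # a root, but rho_1 * P_0 = 1 > 0
```

For example, $-(1+\sqrt5)/2=x_1$ is a step root of $1-x-x^2$, while $(1+\sqrt5)/2$ is a root, but not a step root, of $1+x-x^2$.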

The concept of a step root has the following significance for the maxima of the Takagi–Landsberg functions $f_\alpha$ defined in (3·1).

Corollary 4.3. For $\alpha\in(-2,2)$, the following conditions are equivalent:

(a) the function $f_\alpha$ has a unique maximiser in $[0,1/2]$;

(b) there is no Littlewood polynomial that has $\alpha$ as its step root.

Proof. The assertion follows immediately from Proposition 3.2 and Theorem 2·3.

With our results on the maxima of the Takagi–Landsberg function, we thus get the following corollary on the locations of the step roots of the Littlewood polynomials.

Corollary 4.4. We have the following results:

(a) the only Littlewood polynomials admitting negative step roots are of the form $1-x-x^2-\cdots-x^{2n}$ for some $n\in\mathbb N$, and the step roots are the numbers $x_n$ in Lemma 3·3;

(b) there are no step roots in $[-1,1/2]\cup(1,2)$;

(c) the step roots are dense in $(1/2,1]$.

Proof. In view of Corollary 4·3, (a) follows from Theorem 3·4. Assertion (b) follows from Proposition 3·6 and Theorem 3·12. Part (c) follows from Theorem 3·9.

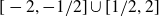

From part (c) of the preceding corollary, we obtain the following result, which identifies $[-2,-1/2]\cup[1/2,2]$ as the closure of the set of all real roots of the Littlewood polynomials. Although the roots of the Littlewood polynomials have been well studied in the literature (see, e.g., [5] and the references therein), we were unable to find the following result in the literature. In [5, E1 on p. 72], it is stated that an analogous result holds if the Littlewood polynomials are replaced by the larger set of all polynomials with coefficients in $\{-1,0,+1\}$. In the student thesis [23], determining the closure of the real roots of the Littlewood polynomials was classified as an open problem. The distribution of the positive roots and step roots of Littlewood polynomials is illustrated in Figure 4.

Fig. 4. Log-scale histograms of the distributions of the positive roots (left) and step roots (right) of the Littlewood polynomials of degree $\le 20$ and with zero-order coefficient $\rho_0=+1$. The algorithm found 2,255,683 roots and 106,682 step roots, where numbers such as $\alpha=1$ were counted each time they occurred as (step) roots of some polynomial.

Corollary 4.5. Let ${\mathscr R}$ denote the set of all real roots of the Littlewood polynomials. Then the closure of ${\mathscr R}$ is given by $[-2,-1/2]\cup[1/2,2]$.

Proof. We know from [4, corollary 3·3·1] that ${\mathscr R}\subset(-2,-1/2)\cup(1/2,2)$. Now denote by ${\mathscr S}$ the set of all step roots of the Littlewood polynomials, so that ${\mathscr S}\subset{\mathscr R}$. Corollary 4.4(c) yields that $[1/2,1]$ is contained in the closure of ${\mathscr S}$, and hence also in the closure of ${\mathscr R}$. Next, note that if $\alpha$ is a root of a Littlewood polynomial, then so is $1/\alpha$ (here $\alpha\neq0$, because every Littlewood polynomial has constant coefficient $\pm1$). Indeed, if $\alpha$ is a root of the Littlewood polynomial $P(x)$ of degree $n$, then $\widetilde P(x):=x^nP(1/x)$ is also a Littlewood polynomial and satisfies $\widetilde P(1/\alpha)=\alpha^{-n}P(\alpha)=0$. Hence, $[1,2]$ is contained in the closure of ${\mathscr R}$. Finally, for $\alpha\in{\mathscr R}$, we clearly also have $-\alpha\in{\mathscr R}$, because $P(-x)$ is again a Littlewood polynomial. This completes the proof.
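The annulus bound and the accumulation of real roots near $\pm1/2$ and $\pm2$ can be observed by brute force. The sketch below simply enumerates the 2046 Littlewood polynomials of degree at most 10 with $\rho_0=+1$ and collects their real roots with `numpy.roots`; it is our own illustration and not the algorithm behind Figure 4, whose details are not given here. The tolerance for treating a computed root as real is an arbitrary choice.

```python
import itertools

import numpy as np


def real_littlewood_roots(max_degree, imag_tol=1e-7):
    """Real roots (with repetition) of all Littlewood polynomials with rho_0 = +1."""
    found = []
    for d in range(1, max_degree + 1):
        for signs in itertools.product((-1, 1), repeat=d):
            coeffs = (1,) + signs       # rho_0, ..., rho_d
            z = np.roots(coeffs[::-1])  # np.roots expects the leading coefficient first
            found.extend(z[np.abs(z.imag) < imag_tol].real)
    return np.array(found)


if __name__ == "__main__":
    r = real_littlewood_roots(10)
    pos = r[r > 0]
    print(len(r), pos.min(), pos.max(), r.min(), r.max())
```

The smallest positive root found is close to $\alpha_{10}$, the root of $1-x-\cdots-x^{10}$, and the largest is its reciprocal, which mirrors the two symmetry arguments in the proof.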

5. Proofs of the results in Section 2

Proof of Lemma 2·1. Take $m\in\mathbb N_0$ and let $t\in[0,1]$ have Rademacher expansion $\boldsymbol\rho$. Then the tent map satisfies

\begin{equation*}\phi(t)=\frac14-\frac14\sum_{k=1}^\infty 2^{-k}\rho_0\rho_k\quad\text{and}\quad \phi(2^mt)=\frac14-\frac14\sum_{k=1}^\infty 2^{-k}\rho_m\rho_{m+k}.\end{equation*}

Plugging this formula into (2·1) gives the result.
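The tent-map identity can be verified with exact arithmetic for non-dyadic rationals, whose binary expansion is unique. The sketch assumes the convention $\rho_m=1-2\varepsilon_{m+1}$, where $\varepsilon_j=\lfloor2^jt\rfloor\bmod2$ is the $j$-th binary digit of $t$; this convention is inferred from the examples in Section 3 and is not restated here. The infinite sum is truncated after $K=80$ terms, so both sides agree up to $2^{-82}$.

```python
import math
from fractions import Fraction


def tent(x):
    r = x % 1
    return min(r, 1 - r)


def rademacher(t, m):
    """rho_m = 1 - 2 * eps_{m+1}, with eps_j = floor(2^j t) mod 2 (assumed convention)."""
    return 1 - 2 * (math.floor(2 ** (m + 1) * t) % 2)


def tent_via_rademacher(t, m, K=80):
    """Right-hand side 1/4 - 1/4 * sum_{k=1}^K 2^{-k} rho_m rho_{m+k}, truncated at K."""
    rm = rademacher(t, m)
    s = sum(Fraction(rm * rademacher(t, m + k), 2**k) for k in range(1, K + 1))
    return Fraction(1, 4) - s / 4


if __name__ == "__main__":
    t = Fraction(14, 31)
    for m in range(5):
        print(m, tent(2**m * t), float(tent_via_rademacher(t, m)))
```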

For $n\in\mathbb N_0$, we denote by

\begin{equation*}f_{n}(t):=\sum_{m=0}^n c_m\phi(2^mt),\qquad t\in[0,1],\end{equation*}

the corresponding truncated function.

Let

\begin{equation*}{\mathbb D}_n:=\{k2^{-n}\,|\,k=0,1,\dots,2^n\}\end{equation*}

be the dyadic partition of [0,1] of generation $n$. For $t\in{\mathbb D}_n$, we define its set of neighbours in ${\mathbb D}_n$ by

\begin{equation*}{\mathscr N}_n(t):=\{s\in{\mathbb D}_n\,|\,|s-t|=2^{-n}\}.\end{equation*}

If $s\in{\mathscr N}_n(t)$, we will say that $s$ and $t$ are neighbouring points in ${\mathbb D}_n$. We are now going to analyse the maxima of the truncated function $f_{n}$. Since this function is affine on all intervals of the form $[k2^{-(n+1)},(k+1)2^{-(n+1)}]$, its maximum must be attained on ${\mathbb D}_{n+1}$. In addition, $f_n$ can have flat parts (e.g., for $n=0$ and $c_0=0$), so that the set of maximisers of $f_n$ may be uncountable. In the sequel, we are only interested in the set

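\begin{equation*}{\mathscr M}_n:=\Big\{t\in{\mathbb D}_{n+1}\,\Big|\,f_n(t)=\max_{s\in{\mathbb D}_{n+1}}f_n(s)\Big\}\end{equation*}

of maximisers located in ${\mathbb D}_{n+1}$.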

Definition 5.1. For $n\in\mathbb N_0$, a pair $(x_n,y_n)$ is called a maximising edge of generation $n$ if the following conditions are satisfied:

(a) $x_n\in{\mathscr M}_n$;

(b) $y_n$ is a maximiser of $f_n$ in ${\mathscr N}_{n+1}(x_n)$, i.e.,

$${y_n} \in \mathop {{\rm{arg }}{\mkern 1mu} {\rm{max}}}\limits_{x \in {{\mathscr N}_{n + 1}}({x_n})} {f_n}(x).$$
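Definition 5.1 translates directly into an enumeration. The Python sketch below lists all maximising edges of generation $n$. It repeats the hypothetical helpers needed to be self-contained, and it assumes $\phi$ is the distance to the nearest integer.

```python
import math
from fractions import Fraction
from typing import List, Sequence, Tuple

Edge = Tuple[Fraction, Fraction]


def tent(t: Fraction) -> Fraction:
    """Tent map: distance from t to the nearest integer (assumed convention)."""
    frac = t - math.floor(t)
    return min(frac, 1 - frac)


def truncated_f(c: Sequence[Fraction], t: Fraction) -> Fraction:
    """f_n(t) = sum_{m=0}^{n} c_m phi(2^m t) with n = len(c) - 1."""
    return sum((cm * tent(2**m * t) for m, cm in enumerate(c)), Fraction(0))


def dyadic(n: int) -> List[Fraction]:
    """D_n = {k 2^{-n} : k = 0, ..., 2^n}."""
    return [Fraction(k, 2**n) for k in range(2**n + 1)]


def neighbours(n: int, t: Fraction) -> List[Fraction]:
    """N_n(t) = {s in D_n : |s - t| = 2^{-n}}."""
    h = Fraction(1, 2**n)
    return [s for s in (t - h, t + h) if 0 <= s <= 1]


def maximisers(c: Sequence[Fraction]) -> List[Fraction]:
    """M_n: maximisers of f_n located in D_{n+1}."""
    grid = dyadic(len(c))
    values = [truncated_f(c, x) for x in grid]
    best = max(values)
    return [x for x, v in zip(grid, values) if v == best]


def maximising_edges(c: Sequence[Fraction]) -> List[Edge]:
    """All maximising edges (x_n, y_n) of generation n = len(c) - 1."""
    n = len(c) - 1
    edges: List[Edge] = []
    for x in maximisers(c):                      # condition (a): x_n in M_n
        nbrs = neighbours(n + 1, x)              # N_{n+1}(x_n)
        best = max(truncated_f(c, y) for y in nbrs)
        # condition (b): y_n maximises f_n over N_{n+1}(x_n)
        edges.extend((x, y) for y in nbrs if truncated_f(c, y) == best)
    return edges


print(maximising_edges([Fraction(1)]))
```

Edges are returned as ordered pairs, matching the asymmetric roles of $x_n$ and $y_n$ in the definition.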

The following lemma characterises the maximising edges of generation $n$ as those pairs of neighbouring points in ${\mathbb D}_{n+1}$ that maximise the sum of the two values of $f_n$. It will be a key result for our proof of Theorem 2.3.

Lemma 5.2. For $n\in\mathbb N_0$, the following conditions are equivalent for two neighbouring points $x_n,\,y_n\in{\mathbb D}_{n+1}$:

(a) $(x_n,y_n)$ or $(y_n,x_n)$ is a maximising edge of generation $n$;

(b) for all neighbouring points $z_0,z_1$ in ${\mathbb D}_{n+1}$, we have $f_n(z_0)+f_n(z_1)\le f_n(x_n)+f_n(y_n)$.
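For small $n$, Lemma 5.2 can be tested by brute force. The Python sketch below is a sanity check, not a proof. It compares the unordered pairs coming from maximising edges (condition (a)) with the neighbouring pairs maximising $f_n(z_0)+f_n(z_1)$ (condition (b)), using random rational coefficients $c_m$ so that ties occur. All helper names are hypothetical, and $\phi$ is assumed to be the distance to the nearest integer.

```python
import math
import random
from fractions import Fraction
from typing import FrozenSet, List, Sequence, Set

Pair = FrozenSet[Fraction]


def tent(t: Fraction) -> Fraction:
    frac = t - math.floor(t)  # distance to the nearest integer (assumed convention)
    return min(frac, 1 - frac)


def truncated_f(c: Sequence[Fraction], t: Fraction) -> Fraction:
    return sum((cm * tent(2**m * t) for m, cm in enumerate(c)), Fraction(0))


def dyadic(n: int) -> List[Fraction]:
    return [Fraction(k, 2**n) for k in range(2**n + 1)]


def neighbours(n: int, t: Fraction) -> List[Fraction]:
    h = Fraction(1, 2**n)
    return [s for s in (t - h, t + h) if 0 <= s <= 1]


def maximisers(c: Sequence[Fraction]) -> List[Fraction]:
    grid = dyadic(len(c))  # D_{n+1}
    values = [truncated_f(c, x) for x in grid]
    return [x for x, v in zip(grid, values) if v == max(values)]


def edge_pairs(c: Sequence[Fraction]) -> Set[Pair]:
    """Condition (a): unordered {x_n, y_n} with (x_n, y_n) a maximising edge."""
    n = len(c) - 1
    pairs: Set[Pair] = set()
    for x in maximisers(c):
        nbrs = neighbours(n + 1, x)
        best = max(truncated_f(c, y) for y in nbrs)
        pairs.update(frozenset((x, y)) for y in nbrs if truncated_f(c, y) == best)
    return pairs


def max_sum_pairs(c: Sequence[Fraction]) -> Set[Pair]:
    """Condition (b): neighbouring {z_0, z_1} in D_{n+1} maximising f_n(z_0) + f_n(z_1)."""
    grid = dyadic(len(c))
    sums = {frozenset((a, b)): truncated_f(c, a) + truncated_f(c, b)
            for a, b in zip(grid, grid[1:])}
    best = max(sums.values())
    return {p for p, s in sums.items() if s == best}


def lemma_holds(c: Sequence[Fraction]) -> bool:
    return edge_pairs(c) == max_sum_pairs(c)


rng = random.Random(0)
for n in range(5):
    for _ in range(200):
        c = [Fraction(rng.randint(-3, 3), rng.choice((1, 2, 4))) for _ in range(n + 1)]
        assert lemma_holds(c), c
print("(a) and (b) select the same pairs in all sampled cases")
```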

Proof. We prove the assertion by induction on $n$. Consider the case $n=0$. If $c_0=0$, then ${\mathscr M}_0={\mathbb D}_1$ and all pairs of neighbouring points in ${\mathbb D}_1$ form maximising edges of generation 0, so the assertion is obvious. If $c_0>0$, then ${\mathscr M}_0=\{1/2\}$, and if $c_0<0$, then ${\mathscr M}_0=\{0,1\}$. In these cases, too, the equivalence of (a) and (b) is obvious.

Now assume that $n\ge1$ and that the equivalence of (a) and (b) has been established for all $m<n$. To show that (a) implies (b), let $(x_n,y_n)$ be a maximising edge of generation $n$. First, we consider the case

$x_n\in{\mathbb D}_{n+1}\setminus{\mathbb D}_n$. Then ${\mathscr N}_{n+1}(x_n)$ contains $y_n$ and another point, say $u_n$, and both $y_n$ and $u_n$ belong to ${\mathbb D}_n$. If $z_0$ and $z_2$ are two neighbouring points in ${\mathbb D}_n$, we let $z_1:=(z_0+z_2)/2$. Since $f_{n-1}$ is affine on $[z_0,z_2]$, $\phi(2^nz)=0$ for $z\in{\mathbb D}_n$, and $\phi(2^nz_1)=\phi(2^nx_n)=1/2$, the fact that $x_n\in{\mathscr M}_n$ gives

\begin{equation*}f_{n-1}(z_0)+f_{n-1}(z_2)+c_n=2f_n(z_1)\le 2f_n(x_n)=f_{n-1}(y_n)+f_{n-1}(u_n)+c_n,\end{equation*}

and hence $f_{n-1}(z_0)+f_{n-1}(z_2)\le f_{n-1}(y_n)+f_{n-1}(u_n)$. The induction hypothesis now yields that $(y_n,u_n)$ or $(u_n,y_n)$ is a maximising edge of generation $n-1$. Moreover, since $(x_n,y_n)$ is a maximising edge of generation $n$, part (b) of Definition 5.1 gives $f_n(u_n)\le f_n(y_n)$. Since both $y_n$ and $u_n$ belong to ${\mathbb D}_n$, where $f_n$ and $f_{n-1}$ coincide, we get that

\begin{equation*}f_{n-1}(u_n)=f_n(u_n)\le f_n(y_n)=f_{n-1}(y_n).\end{equation*}

Therefore, since one of $y_n$ and $u_n$ belongs to ${\mathscr M}_{n-1}$ by part (a) of Definition 5.1, we obtain $y_n\in{\mathscr M}_{n-1}$.
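The reduction from $f_n$ to $f_{n-1}$ in this part of the proof rests on two elementary facts. First, $f_n=f_{n-1}$ on ${\mathbb D}_n$, because $\phi(2^nz)=0$ there. Second, $f_n(z_1)=\tfrac12\big(f_{n-1}(z_0)+f_{n-1}(z_2)\big)+\tfrac{c_n}2$ at the midpoint $z_1$ of neighbouring points $z_0,z_2\in{\mathbb D}_n$. The Python sketch below checks both exactly for random rational coefficients. The helper names are hypothetical, and $\phi$ is assumed to be the distance to the nearest integer.

```python
import math
import random
from fractions import Fraction
from typing import Sequence


def tent(t: Fraction) -> Fraction:
    frac = t - math.floor(t)  # distance to the nearest integer (assumed convention)
    return min(frac, 1 - frac)


def truncated_f(c: Sequence[Fraction], t: Fraction) -> Fraction:
    return sum((cm * tent(2**m * t) for m, cm in enumerate(c)), Fraction(0))


def refinement_identities_hold(c: Sequence[Fraction]) -> bool:
    """Check both identities for n = len(c) - 1 >= 1, comparing f_n with f_{n-1}."""
    n = len(c) - 1
    prev = c[:-1]                      # coefficients of f_{n-1}
    h = Fraction(1, 2**n)              # mesh of D_n
    points = [k * h for k in range(2**n + 1)]
    # (i) f_n and f_{n-1} coincide on D_n.
    if any(truncated_f(c, z) != truncated_f(prev, z) for z in points):
        return False
    # (ii) midpoint relation between neighbouring points z0, z2 of D_n.
    for z0, z2 in zip(points, points[1:]):
        z1 = (z0 + z2) / 2
        rhs = (truncated_f(prev, z0) + truncated_f(prev, z2)) / 2 + c[-1] / 2
        if truncated_f(c, z1) != rhs:
            return False
    return True


rng = random.Random(1)
for n in range(1, 6):
    for _ in range(50):
        c = [Fraction(rng.randint(-5, 5), rng.randint(1, 4)) for _ in range(n + 1)]
        assert refinement_identities_hold(c)
print("both identities hold in all sampled cases")
```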

Now let $z_0$ and $z_1$ be two neighbouring points in ${\mathbb D}_{n+1}$. Then one of the two, say $z_0$,