INTRODUCTION

Over the next decades, a dramatic increase is expected in the number of people living with dementia in developing regions compared to those living in developed regions (Ferri et al., 2005; Prince et al., 2013), due to improvements in life expectancy and rapid population aging, especially in lower- and middle-income countries (World Health Organization, 2011). In addition, non-Western immigrant populations in Western countries, such as people from Turkey and Morocco who immigrated to Western Europe (Nielsen, Vogel, Phung, Gade, & Waldemar, 2011; Parlevliet et al., 2016), or Hispanic people who immigrated to the USA (Gurland et al., 1997), are reaching an age at which dementia is increasingly prevalent.

Most neuropsychological tests were developed to be used in (educated) Western populations. The work by Howard Andrew Knox in the early 1900s at Ellis Island already showed that adaptations are needed to make tests suitable for populations with diverse backgrounds (Richardson, 2003). It is now widely documented that neuropsychological test performance is substantially affected by factors such as culture, language, (quality of) education, and literacy (Ardila, 2005, 2007; Ardila, Rosselli, & Rosas, 1989; Nielsen & Jorgensen, 2013; Nielsen & Waldemar, 2016; Ostrosky-Solis, Ardila, Rosselli, Lopez-Arango, & Uriel-Mendoza, 1998; Teng, 2002). The rising number of patients with dementia from low-educated and non-Western populations therefore calls for an increase in studies addressing the reliability, validity, and cross-cultural and cross-linguistic applicability of neuropsychological instruments used to assess dementia. Furthermore, these studies should include patients with dementia or mild cognitive impairment (MCI) in their sample to determine whether these tests are sufficiently sensitive and specific to dementia.

Recent studies have mostly focused on developing cognitive screening tests, and excellent reviews are available of screening tests that can be used in people who are illiterate (Julayanont & Ruthirago, 2018) and/or low educated (Paddick et al., 2017), as well as reviews about screening tests for specific regions, such as Asia (Rosli, Tan, Gray, Subramanian, & Chin, 2016) and Brazil (Vasconcelos, Brucki, & Bueno, 2007). However, an overview of domain-specific cognitive tests and test batteries that are adapted to or developed for a non-Western, low-educated population is lacking. Domain-specific neuropsychological tests are essential to determine a profile of impaired and intact cognitive functions, providing insights into the underlying etiology of the dementia, something that is not possible with screening tests alone. Furthermore, a comprehensive assessment of the cognitive profile may result in more tailored, personalized care after a diagnosis (Jacova, Kertesz, Blair, Fisk, & Feldman, 2007).

The first aim of this review was to generate an overview of all studies investigating either (1) traditional neuropsychological measures, or adaptations of these measures, in non-Western populations with low education levels, or (2) new, assembled neuropsychological tests developed for non-Western, low-educated populations. The second aim was to determine the quality of these studies, to examine the validity and reliability of the current neuropsychological measures in each cognitive domain, and to determine which measures could be applied cross-culturally and cross-linguistically.

METHOD

Identification of Studies

Search terms and databases

Studies were selected based on the title and the abstract. Medline, Embase, Web of Science, Cochrane, PsycINFO, and Google Scholar were used to identify relevant papers, without restrictions on the year of publication or language (for a list of the search terms used, see Supplementary Material). Studies were included up until August 2018 (no start date). The papers were judged independently by two authors (SF and JMP) according to the inclusion criteria described below. In case of disagreement, consensus was reached together with EvdB.

Inclusion criteria

The inclusion criteria were as follows:

1. The study included patients with dementia and/or patients with MCI/Cognitive Impairment No Dementia (CIND).

2. The study was conducted in a non-Western country, or a non-Western population in a Western country. Western was defined as all EU/EEA countries (including Switzerland), Australia, New Zealand, Canada, and the USA. Hispanic/Latino populations in the USA were included in this review as a non-Western population, as this group likely encompasses people with heterogeneous immigration histories and diverse cultural and linguistic backgrounds (Puente & Ardila, 2000).

3. The study described the instrument in sufficient detail for the authors to judge its applicability in a non-Western context, its validity and/or its reliability, that is, it was not merely mentioned as used during a diagnostic/research process, without any further elaboration.

Exclusion criteria

Studies that focused on medical conditions other than dementia were excluded. Screening tests, defined as tests covering multiple domains but yielding a single total score without individually normed subscores, were also excluded, as several reviews of these already exist (Julayanont & Ruthirago, 2018; Paddick et al., 2017; Rosli et al., 2016; Vasconcelos et al., 2007). Intelligence tests were also excluded from the analysis, except when subtests (e.g. Digit Span) were used to assess dementia in combination with other neuropsychological tests and the study described the cross-cultural applicability. Unpublished dissertations and book chapters were excluded.

Finally, studies that did not include low-educated people were excluded. This was operationalized as studies that did not describe the inclusion of low-educated or illiterate participants in the text, and did not include any education levels lower than primary school in their descriptive tables. An exception was made for studies in which the means and standard deviations of the years of education made it highly likely that low-educated participants were included, defined as a mean number of years of education that did not exceed the length of primary school for the respective country by more than one standard deviation. Data from the UNESCO Institute for Statistics (UNESCO Institute for Statistics, n.d.) were used to determine the length of primary school education for each country.
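
The exception rule above can be illustrated with a short sketch. The Python snippet below is not the procedure used in this review; the function name and its arguments are hypothetical, and it assumes that the standard deviation in the rule refers to the study's own reported standard deviation of years of education.

```python
def likely_includes_low_educated(mean_years: float,
                                 sd_years: float,
                                 primary_school_years: float) -> bool:
    """Return True if the sample mean does not exceed the country's
    primary school length by more than one standard deviation.

    Assumption: sd_years is the study's own SD of years of education.
    """
    if sd_years < 0:
        raise ValueError("standard deviation cannot be negative")
    return (mean_years - primary_school_years) <= sd_years


# Hypothetical values for illustration only (not taken from any included study).
# Mean exceeds primary school length by 1 year, SD is 3 years.
print(likely_includes_low_educated(7.0, 3.0, 6.0))
# Mean exceeds primary school length by 6 years, SD is 2 years.
print(likely_includes_low_educated(12.0, 2.0, 6.0))
```

Under these assumptions, the first hypothetical study would fall under the exception and the second would not.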

Data Analysis

Quality assessment

The quality of the studies and the cross-cultural applicability of the instruments were assessed according to eight criteria. These criteria were developed specifically for this study to reflect important variables in the assessment of low-educated, non-Western persons. Any ambiguous cases with regard to the scoring were resolved in a consensus agreement.

The first criterion was whether any participants who are illiterate were included in the study (“Illiteracy”): 0 = no/not stated, 1 = yes. The second criterion was whether the language in which the test was administered was specified (“Language”): 0 = no, 1 = yes. The administration language can significantly influence performance on neuropsychological tests (Boone, Victor, Wen, Razani, & Ponton, 2007; Carstairs, Myors, Shores, & Fogarty, 2006; Kisser, Wendell, Spencer, & Waldstein, 2012), and is especially important in the assessment of immigrants, or in countries where many languages are spoken, such as China (Wong, 2011). Third, the cross-cultural adaptations were scored (“Adaptations”). For this criterion, a modification was made to the system by Beaton, Bombardier, Guillemin, and Ferraz (2000) to capture the aspects relevant to neuropsychological test development: 0 = no procedures mentioned, 1 = translation (and/or back translation) or other changes to the form, but not the concept of the test, such as replacing letters with numbers or colors, 2 = an expert committee reviewed the (back) translation, or stimuli were chosen by an expert committee, 3 = all of the previous and pretesting, such as a pilot study in healthy controls. Assembled tests were scored either 0, 2, or 3, as no translation and back translation procedures would be required for assembled tests. The fourth criterion was whether the study reported qualitatively on the usefulness of the instrument for clinical practice, such as the acceptability of the material, acceptability of the duration of the test, and/or floor or ceiling effects (“Feasibility”): 0 = no, 1 = yes. Illiterate people are known to be less test-wise than literate people, potentially affecting the feasibility of a test in this population (Ardila et al., 2010). Fifth, the study was scored on the availability of information on reliability and/or validity: 0 = absent, 1 = either validity or reliability data were described, 2 = both validity and reliability were described. Additionally, three criteria were proposed with regard to the final diagnosis. First, “Circularity”: whether the study described preventive measures against circularity, that is, blinding [similar to the domain “The Reference Standard” in the tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews (Whiting, Rutjes, Reitsma, Bossuyt, & Kleijnen, 2003)]. This was scored: 0 = no/not stated, 1 = yes. Second, “Sources”: whether both neuropsychological and imaging data were used for the diagnosis, and whether a consensus meeting was held: 0 = not specified, 1 = only neuropsychological assessment or imaging, 2 = both neuropsychological assessment and imaging, and (C) for consensus meeting. As misdiagnoses are common in non-Western populations (Nielsen et al., 2011), it is important to rely on multiple sources of data to support the diagnosis. Third, “Criteria”: whether the study reported using subtype-specific dementia criteria: 0 = not specified, 1 = general criteria, such as the Diagnostic and Statistical Manual of Mental Disorders (DSM) criteria (American Psychiatric Association, 1987, 1994, 2000) or the International Classification of Diseases and Related Health Problems (ICD) criteria, 2 = extensive clinical criteria, for example, the National Institute on Aging-Alzheimer’s Association (NIA-AA) criteria (McKhann et al., 2011) for Alzheimer’s disease (AD) or the Petersen criteria (Petersen, 2004) for MCI. Although a score of one point on any criterion does not necessarily directly equate with one point on any other criterion, sum scores of these eight quality criteria were calculated for each instrument to provide a general indicator of the quality of the study (with a higher score indicating a higher general quality).
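
As a rough illustration of how such a sum score could be computed, the following Python sketch adds up the eight criteria using the point ranges described above. It is not the scoring procedure used by the authors: the dictionary keys and function name are hypothetical, and it assumes that the consensus marker (C) is recorded separately as a flag and does not contribute points.

```python
# Maximum points per criterion, taken from the ranges described in the text.
# The key names are illustrative labels, not terms defined by the review.
MAX_POINTS = {
    "illiteracy": 1,
    "language": 1,
    "adaptations": 3,
    "feasibility": 1,
    "reliability_validity": 2,
    "circularity": 1,
    "sources": 2,   # assumption: the "(C)" consensus marker adds no points
    "criteria": 2,
}


def quality_sum_score(scores: dict) -> int:
    """Sum the eight criterion scores after checking each is in range."""
    total = 0
    for name, max_points in MAX_POINTS.items():
        value = scores[name]
        if not 0 <= value <= max_points:
            raise ValueError(f"{name} must be between 0 and {max_points}")
        total += value
    return total


# Hypothetical study, for illustration only.
example = {
    "illiteracy": 1, "language": 1, "adaptations": 2, "feasibility": 0,
    "reliability_validity": 1, "circularity": 0, "sources": 2, "criteria": 2,
}
print(quality_sum_score(example))
print(sum(MAX_POINTS.values()))  # highest attainable sum under these assumptions
```

The sketch does not enforce the rule that assembled tests can only score 0, 2, or 3 on “Adaptations”; that check would need an extra argument indicating the type of test.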

In the following sections and tables, the studies are described by cognitive domain, as defined by cognitive theory and according to standard clinical practice (Lezak, Howieson, Bigler, & Tranel, 2012). Although neuropsychological tests often tap multiple cognitive functions (e.g. verbal fluency is a sensitive measure of executive function, but also taps language and memory processes), tests are listed in only one primary cognitive domain. Studies investigating multiple cognitive instruments are described in multiple paragraphs if the tests belong to different cognitive domains. When both Western and non-Western populations are described, only the data for the non-Western group are shown. Discriminative validity is described with the Area Under the Curve (AUC), either for people with dementia versus controls or people with MCI versus controls (when only people with MCI were included in the study). AUC classification follows the traditional academic point system (<.60 = fail, .60–.69 = poor, .70–.79 = fair, .80–.89 = good, .90–.99 = excellent). When multiple studies reported on the same (partial) study cohort, the study with the most detailed information, the largest study population and/or the most comprehensive dataset is described.
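
The AUC classification above amounts to a simple lookup, which can be sketched as follows. The function name is hypothetical, and the snippet assumes that an AUC of exactly 1.0, which the point system does not list, would also be labeled excellent.

```python
def classify_auc(auc: float) -> str:
    """Map an AUC value to the academic point system label used in the text."""
    if not 0.0 <= auc <= 1.0:
        raise ValueError("AUC must lie between 0 and 1")
    if auc < 0.60:
        return "fail"
    if auc < 0.70:
        return "poor"
    if auc < 0.80:
        return "fair"
    if auc < 0.90:
        return "good"
    return "excellent"  # assumption: 1.0 is grouped with .90-.99


# Illustrative inputs only.
for value in (0.55, 0.66, 0.76, 0.84, 0.94):
    print(value, classify_auc(value))
```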

RESULTS

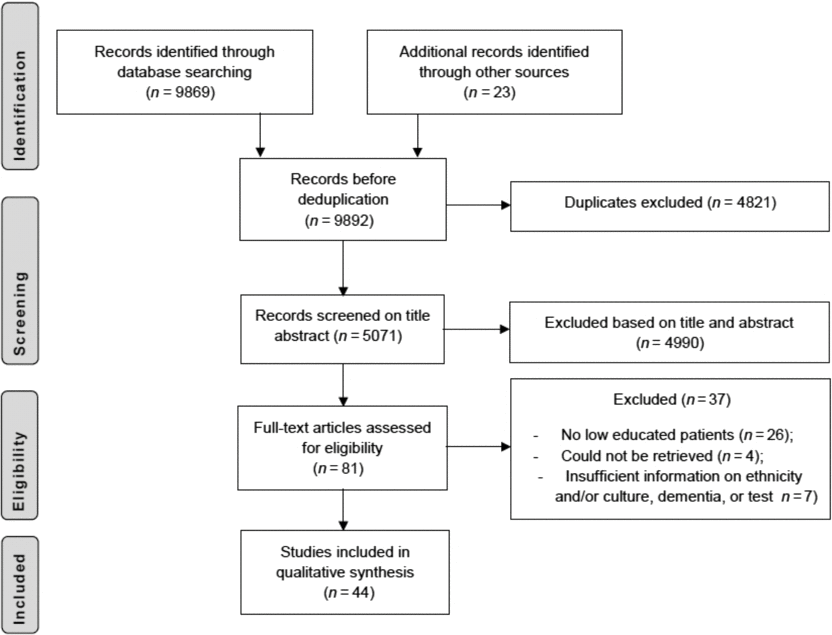

The review process is summarized in Figure 1. The search identified 9869 citations. Furthermore, 23 citations were identified through the reference lists of included studies. After deduplication, 5071 citations remained; these citations were screened on title and abstract. If the topic of the abstract fell within the criteria, but there was insufficient information on the type of population and/or education level that was studied, the participants section and demographic tables in the full text were checked. A total of 81 studies were assessed for eligibility, of which 37 were excluded, 26 of them because low-educated participants were not included in the study sample (see Figure 1).

Fig. 1. Results of database searches and selection process.

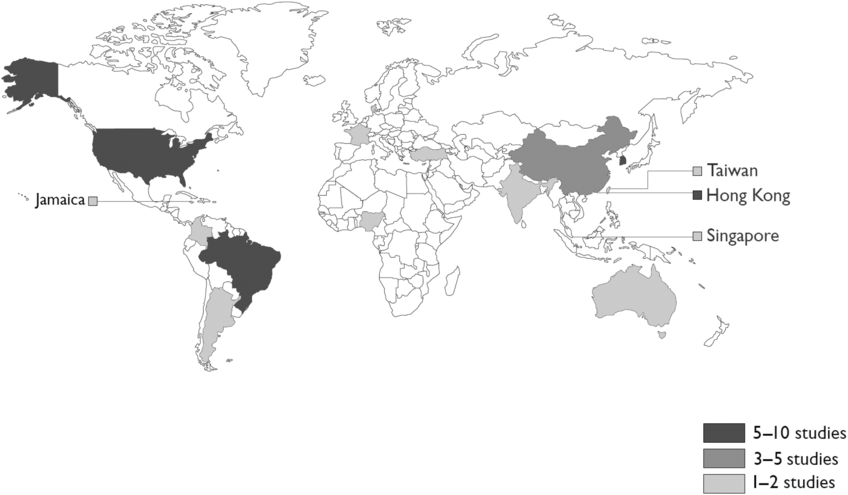

A total of 44 studies were included in this review. As shown in Figure 2, most studies stemmed from Brazil, the USA (Hispanic/Latino population), Hong Kong, and Korea. Primary school education in these countries lasts 5.46 years on average (with a standard deviation of .74 years and range of 4–7 years). Seventeen studies specifically focused on a population of patients with AD, 16 studies investigated an unspecified dementia group or MCI only, and 11 studies investigated a mixed population (mostly AD and smaller groups of other dementias, or AD vs. a “non-AD” group). Of those 11 studies, only one study was specifically aimed at a type of dementia other than AD, that is, Parkinson’s disease dementia (PDD).

Fig. 2. Number of studies per country.

Quality criteria scores are summarized in Supplementary Table 1. People who are illiterate were included in 26 of 44 studies. Regarding the tests that were used, 15 studies did not describe performing any translation procedures, and only five studies using an existing test described a complete adaptation procedure with translation, back translation (or other conceptual changes), review by an expert committee, and pretesting (Chan, Tam, Murphy, Chiu, & Lam, 2002; Kim et al., 2017; Lee et al., 2002; Loewenstein, Arguelles, Barker, & Duara, 1993; Shim et al., 2015). The language the test was administered in, or the fact that it was administered with an interpreter present, was specified in 32 studies. Aspects of the feasibility of the tests were mentioned in 25 studies. With regard to the reference standard, blinding procedures were described in 15 studies. Out of 44 studies, 14 studies made use of both imaging data and neuropsychological assessment to determine the diagnosis, 13 studies used either one of these two and 17 studies did not mention using either imaging data or a neuropsychological assessment to support the final diagnosis. Nearly all studies specified the criteria that were used to determine the diagnosis: the DSM or similar criteria were used in 15 studies, and 25 studies used specific clinical criteria. Out of 44 studies, 12 studies reported on both the reliability and the validity of the test.

Attention

Attention tests were described in eight studies, with a total of five different types of tests: the Five Digit Test, the Trail Making Test, the Digit Span subtest of the Wechsler Adult Intelligence Scale-Revised (WAIS-R) and WAIS-III, the Corsi Block-Tapping Task, and the WAIS-R Digit Symbol subtest (see Table 1). The Five Digit Test is a relatively new, Stroop-like test, in which participants are asked to either read or count the digits one through five, in congruent and incongruent conditions (e.g. counting two printed fives). With regard to the Trail Making Test, two studies reported on its feasibility. The traditional Trail Making Test could not be used in Chinese and Korean populations with low education levels, leading to “frustration” (Salmon, Jin, Zhang, Grant, & Yu, 1995) and to a 100% failure rate, even in healthy controls (Kim, Baek, & Kim, 2014). An adapted version of Trail Making Test part B, in which participants had to switch between black and white numbers instead of numbers and letters, was completed by a higher percentage of both healthy controls and patients with dementia (Kim et al., 2014). Generally, the AUCs in the domain of attention were variable, ranging from poor to good (.66–.84). In particular, the AUCs for the Digit Span test varied across studies (.69–.84).

Table 1. Attention

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; SN = Sensitivity at optimal cut-off; SP = Specificity at optimal cut-off; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; AD = Alzheimer’s Dementia; WAIS-R = Wechsler Adult Intelligence Scale-Revised; PDD = Parkinson’s Disease Dementia; PD = Parkinson’s Disease

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Group total.

b Median instead of mean.

c Entire dataset split into uneducated, educated respectively.

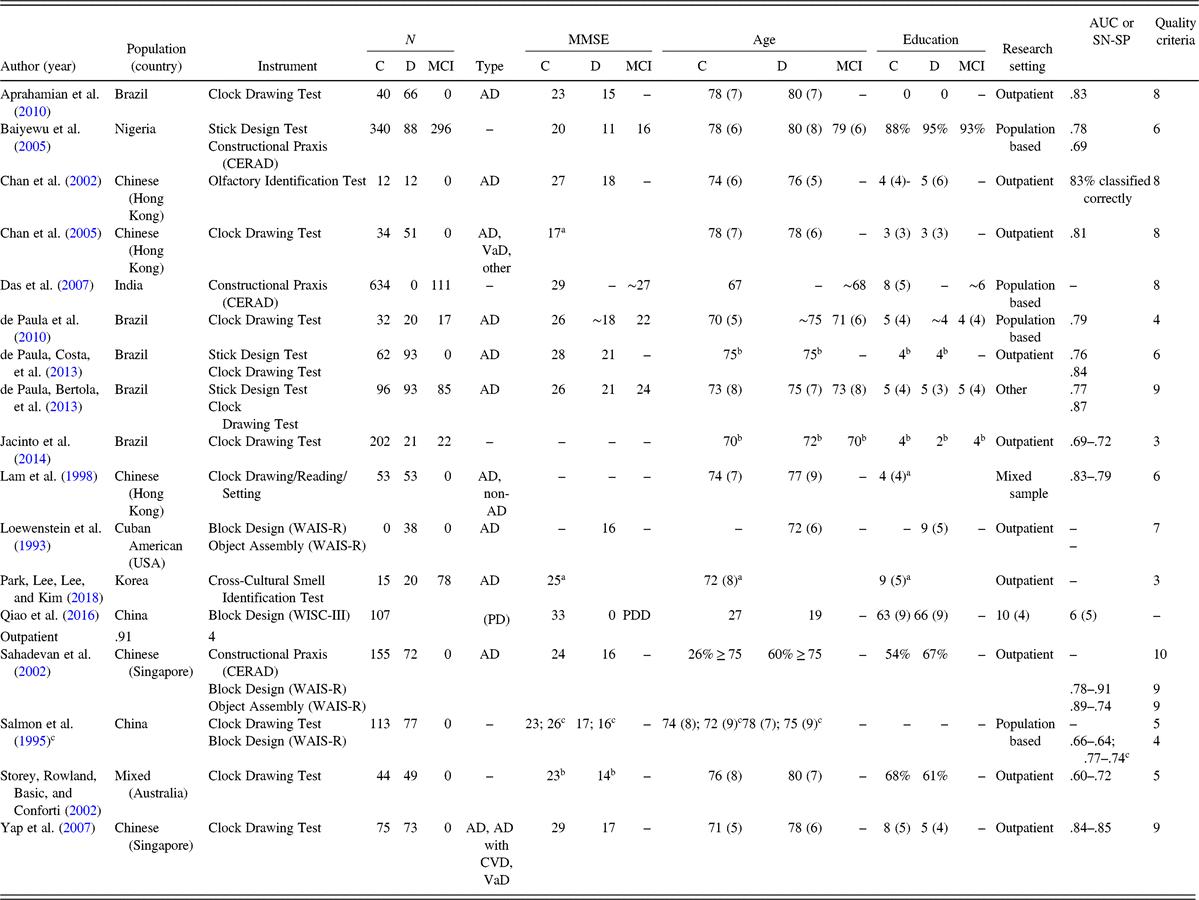

Construction and Perception

Construction tests were investigated in 15 studies, by means of five different instruments: the Clock Drawing Test, the Constructional Praxis Test of the neuropsychological test battery of the Consortium to Establish a Registry for Alzheimer’s Disease (CERAD), the Stick Design Test, the Block Design subtest of the WAIS-R and of the Wechsler Intelligence Scale for Children-III (WISC-III), and the Object Assembly subtest of the WAIS-R (see Table 2). Of these tests, the Clock Drawing Test was studied most often (n = 10). The results with regard to construction tests were mixed. They were described as useful in four studies (Aprahamian, Martinelli, Neri, & Yassuda, 2010; Chan, Yung, & Pan, 2005; Lam et al., 1998; Yap, Ng, Niti, Yeo, & Henderson, 2007), whereas most of the others, such as Salmon et al. (1995), described this cognitive domain as “particularly difficult for uneducated subjects” and noted that some patients “refused to continue because of frustration generated by the difficulty of the task”. The Constructional Praxis Test was evaluated in three studies (Baiyewu et al., 2005; Das et al., 2007; Sahadevan, Lim, Tan, & Chan, 2002), and was compared with the Stick Design Test in one study (Baiyewu et al., 2005). In the Stick Design Test, participants are asked to use matchsticks to copy various printed designs that are similar in complexity to those of the Constructional Praxis Test. The Stick Design Test had lower failure rates (4% vs. 15%) and was also described as “more acceptable” and more sensitive than the Constructional Praxis Test (Baiyewu et al., 2005). Although a study by de Paula, Costa, et al. (2013) also described the Stick Design Test as useful, “eliciting less negative emotional reactions [than the Constructional Praxis Test] and lowering anxiety levels”, it showed ceiling effects in both healthy controls and patients, similar to the Clock Drawing Test. Generally, the Stick Design Test had fair AUCs of .76 to .79 (Baiyewu et al., 2005; de Paula, Costa, et al., 2013; de Paula, Bertola, et al., 2013). AUCs for the Constructional Praxis Test were low (Baiyewu et al., 2005), not reported (Das et al., 2007), or left out of the report due to “low diagnostic ability” (Sahadevan et al., 2002). The AUCs were variable for the Clock Drawing Test, ranging from .60 to .87. The Block Design Test had lower sensitivity and specificity in the low-educated than in the high-educated group in one study (Salmon et al., 1995), and different cutoff scores for low and high education levels were recommended in a second study (Sahadevan et al., 2002), as performance was highly influenced by education.

Table 2. Construction and perception

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; SN = Sensitivity at optimal cut-off; SP = Specificity at optimal cut-off; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; AD = Alzheimer’s Dementia; CERAD = Consortium to Establish a Registry for Alzheimer’s Disease; VaD = Vascular Dementia; WAIS-R = Wechsler Adult Intelligence Scale-Revised; WISC-III = Wechsler Intelligence Scale for Children-III; PD = Parkinson’s Disease; PDD = Parkinson’s Disease Dementia; CVD = Cerebrovascular disease.

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Group total.

b Median instead of mean.

c Entire dataset split into uneducated, educated respectively.

Perception was investigated in two studies, both focusing on olfactory processes. The study by Chan et al. (2002) with the Olfactory Identification Test explicitly describes the adaptation procedure of the test. The authors did a pilot study of 16 odors specific to Hong Kong, and substituted some American items with the items that were most frequently identified as correct in their pilot study. The correct classification rate of the test was 83%. The study by Park et al. (2018) with the Cross-Cultural Smell Identification Test scored positively on only two of the quality criteria and did not provide any sensitivity/specificity data.

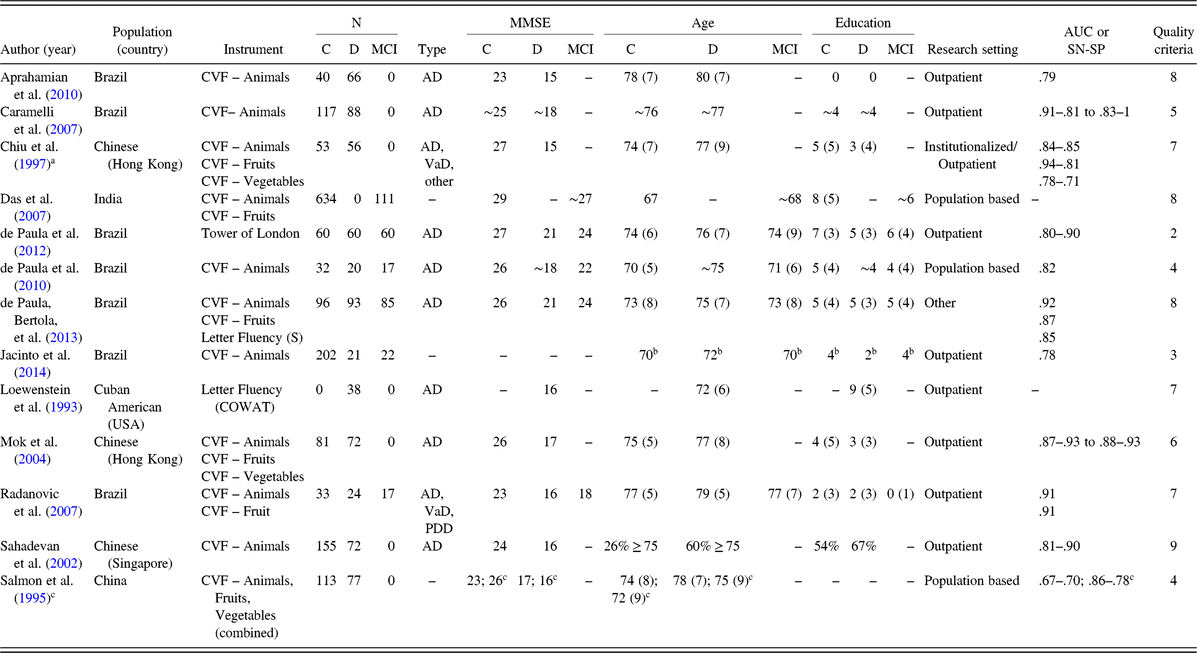

Executive Functions

Measures of executive function were investigated in 13 studies (see Table 3), of which 12 studies used the verbal fluency test, mostly focusing on category fluency (i.e. animals, fruits, vegetables). AUCs were fair to excellent for the fluency test (between .79 and .94), although lower sensitivity and specificity were found for lower-educated participants than higher-educated participants in one study (Salmon et al., 1995). Of the six studies that included people who are illiterate (see Table 3), two observed different optimal cutoff scores for illiterate versus higher-educated groups (Caramelli, Carthery-Goulart, Porto, Charchat-Fichman, & Nitrini, 2007; Mok, Lam, & Chiu, 2004). Only one study investigated another measure of executive function, the Tower of London test, with low scores for the quality criteria (de Paula et al., 2012). The AUCs for the Tower of London test were good (.80–.90).

Table 3. Executive functions

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; SN = Sensitivity at optimal cut-off; SP = Specificity at optimal cut-off; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; CVF = Category Verbal Fluency; AD = Alzheimer’s Dementia; VaD = Vascular Dementia; COWAT = Controlled Oral Word Association Test; PDD = Parkinson’s Disease Dementia.

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Two other fluency categories were described, but not used to assess validity.

b Median instead of mean.

c Entire dataset split into uneducated, educated respectively.

Language

Language tests were investigated in 12 studies, with a total of ten tests, or variations thereof (see Table 4). Of these ten tests, only three measured a language function other than naming: the Token Test, the Comprehension subtest of the WAIS-R, and the Vocabulary subtest of the WAIS-R. Information about the discriminative validity was not reported in three studies that used naming tests (Das et al., 2007; Kim et al., 2017; Loewenstein et al., 1993), as well as in all studies using the Comprehension and Vocabulary subtests of the WAIS-R (Loewenstein et al., 1993; Salmon et al., 1995). The AUCs of the Token Test were fair (.76) in both studies (de Paula, Bertola, et al., 2013; de Paula et al., 2010). The naming tests were frequently adapted from the Boston Naming Test, or similar types of tests making use of black-and-white line drawings. The AUCs of the naming tests varied, ranging from poor to excellent (.61–.90), with lower sensitivity and specificity for low-educated than for high-educated participants in one study (Salmon et al., 1995).

Table 4. Language

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; SN = Sensitivity at optimal cut-off; SP = Specificity at optimal cut-off; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; AD = Alzheimer’s Dementia; TN-LIN = The Neuropsychological Investigations Laboratory Naming Test; BCSB = Brief Cognitive Screening Battery; CERAD = Consortium to Establish a Registry for Alzheimer’s Disease; WAIS-R = Wechsler Adult Intelligence Scale-Revised; VaD = Vascular Dementia; PDD = Parkinson’s Disease Dementia.

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Median instead of mean.

b Group total.

c Entire dataset split into uneducated, educated respectively.

Memory

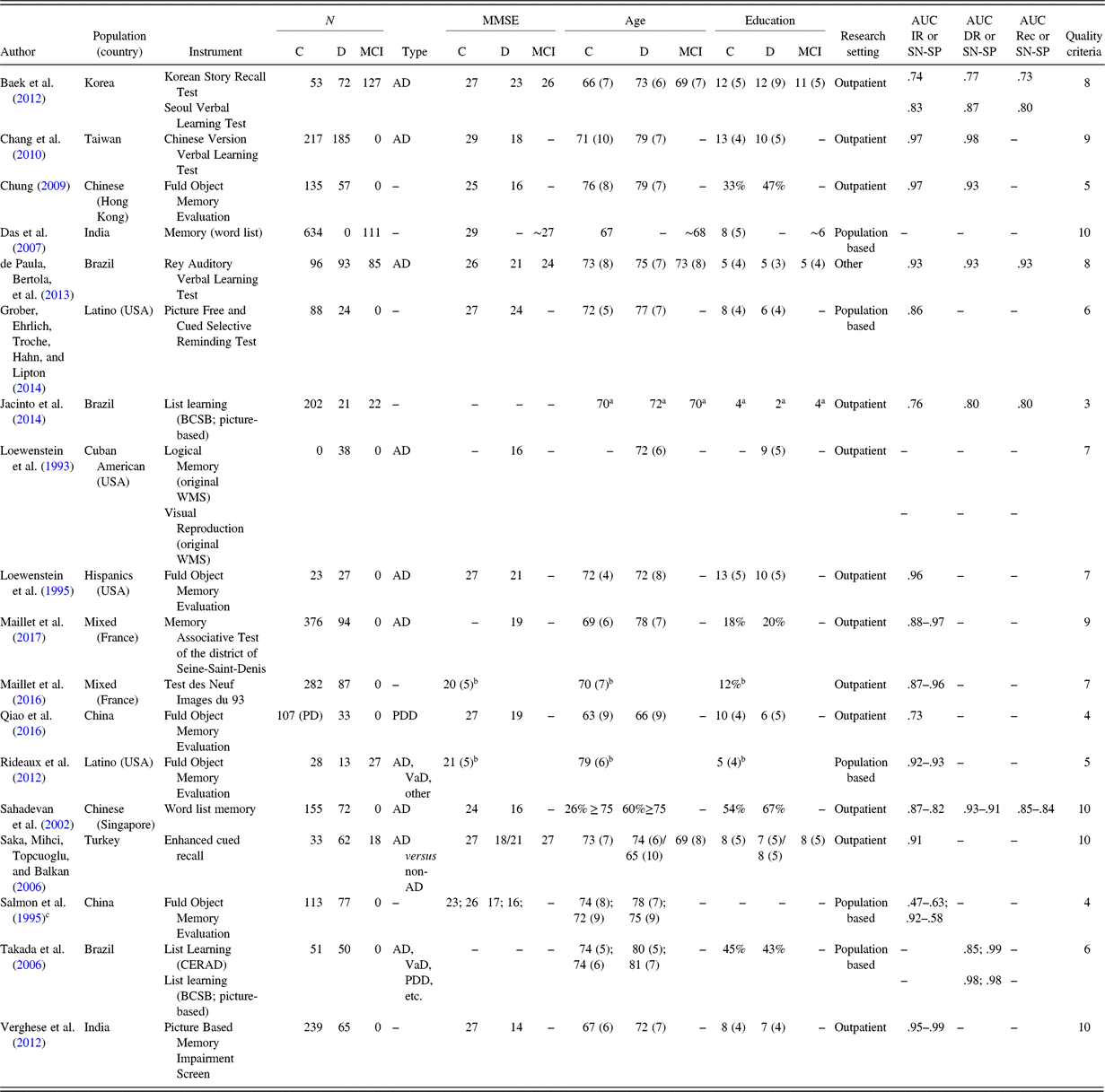

A total of 14 memory tests were investigated in 18 studies, with stimuli presented to different modalities (visual, auditory, and tactile), and in various formats (cued vs. free recall; word lists vs. stories; see Table 5). Both adaptations of existing tests and some assembled tests were studied, such as a picture-based list learning test from Brazil (Jacinto et al., 2014; Takada et al., 2006) and picture-based cued recall tests in France (Maillet et al., 2016, 2017). AUCs were generally fair to excellent (.74–.99). Remarkably, more than half (n = 11) of the studies did not describe blinding procedures (see Table 5). With regard to specific tests, the Fuld Object Memory Evaluation (FOME), using common household objects as stimuli, was used in five studies (Chung, 2009; Loewenstein, Duara, Arguelles, & Arguelles, 1995; Qiao, Wang, Lu, Cao, & Qin, 2016; Rideaux, Beaudreau, Fernandez, & O’Hara, 2012), yielding high sensitivity and specificity rates in most studies, although one found lower sensitivity and specificity in the low-educated group (Salmon et al., 1995). However, the overall quality of the studies investigating this test was relatively low (see Table 5). Tests using a verbal list learning format (Baek, Kim, & Kim, 2012; Chang et al., 2010; de Paula, Bertola, et al., 2013; Sahadevan et al., 2002; Takada et al., 2006) also had good to excellent AUCs (.80–.99). With regard to the modality the stimuli were presented to, one study (Takada et al., 2006) found that a picture-based memory test had better discriminative abilities than a verbal list learning test in the low-educated, but not the higher-educated group.

Table 5. Memory

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; IR = Immediate Recall; SN = Sensitivity at optimal cut-off; SP = Specificity at optimal cut-off; DR = Delayed Recall; Rec = Recognition; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; AD = Alzheimer’s Dementia; BCSB = Brief Cognitive Screening Battery; WMS = Wechsler Memory Scale; PD = Parkinson’s Disease; PDD = Parkinson’s Disease Dementia; VaD = Vascular Dementia; CERAD = Consortium to Establish a Registry for Alzheimer’s Disease.

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Median instead of mean.

b Group total.

c Entire dataset split into uneducated, educated respectively.

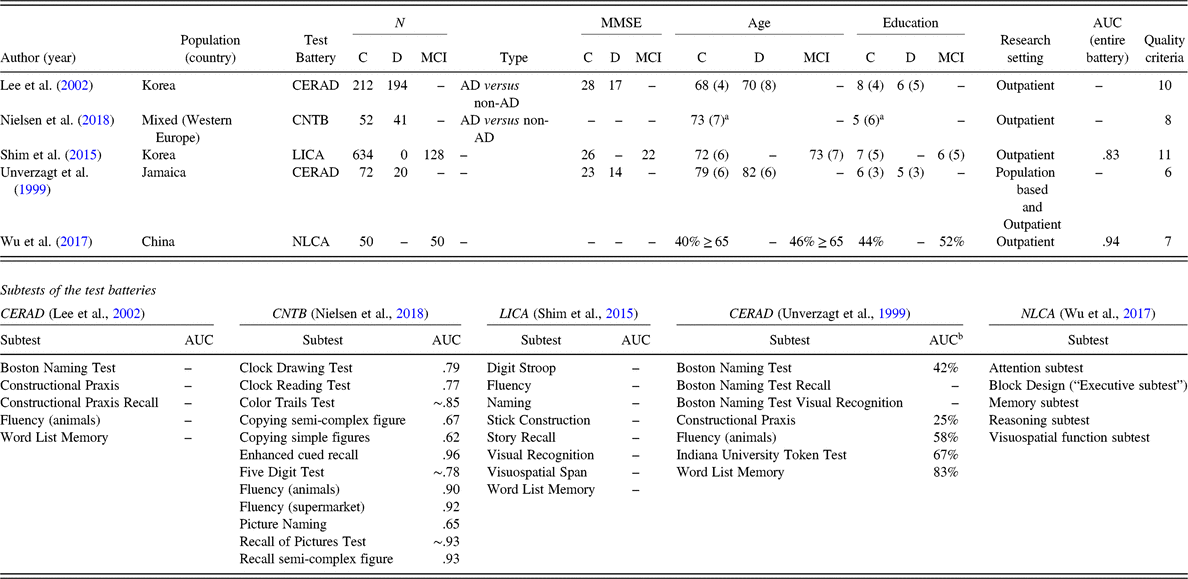

Assessment Batteries

Extensive test batteries were investigated in five studies (see Table 6). The studies by Lee et al. (Reference Levy, Jacobs, Tang, Cote, Louis, Alfaro and Marder2002) and Unverzagt et al. (Reference Unverzagt, Morgan, Thesiger, Eldemire, Luseko, Pokuri and Hendrie1999) looked into versions of the CERAD neuropsychological test battery. The CERAD battery was specifically designed to create uniformity in assessment methods of AD worldwide (Morris et al., Reference Morris, Heyman, Mohs, Hughes, van Belle, Fillenbaum and Clark1989) and contains category verbal fluency (animals), a 15-item version of the Boston Naming Test, the Mini-Mental State Examination, a word list learning task with immediate recall, delayed recall, and recognition trials, and the Constructional Praxis Test, including a recall trial. The study by Lee et al. (Reference Levy, Jacobs, Tang, Cote, Louis, Alfaro and Marder2002) extensively describes the difficulties in designing an equivalent version in Korean, most notably with regard to “word frequency, mental imagery, phonemic similarity and semantic or word length equivalence”. In some cases, an adequate translation proved to be “impossible”. Items that used reading and writing (MMSE) were replaced by items concerning judgment to better suit the illiterate population in Korea. The Trail Making Test was added in this study to assess vascular dementia (VaD) and PDD, but, similar to other studies in the domain of attention, less-educated controls had “great difficulties” completing parts A and B of this test. A second study investigated the CERAD in a Jamaican population (Unverzagt et al., Reference Unverzagt, Morgan, Thesiger, Eldemire, Luseko, Pokuri and Hendrie1999). Remarkably, 8 out of 20 dementia patients were “not testable” with the CERAD battery. No further information was supplied as to the cause.
The correct classification rates for the patients with dementia who did finish the battery were low (ranging from 25% to 67%), except for the word list memory test (83%).

Table 6. Test batteries

Notes: N = number of participants; MMSE = Mini Mental State Examination; AUC = Area Under the Curve; C = healthy controls; D = dementia; MCI = Mild Cognitive Impairment; CERAD = Consortium to Establish a Registry for Alzheimer’s Disease; AD = Alzheimer’s Dementia; CNTB = European Cross-Cultural Neuropsychological Test Battery; LICA = Literacy Independent Cognitive Assessment; NLCA = Non-Language Based Cognitive Assessment.

Age is mean years (standard deviation); education is presented as mean years (standard deviation) or % low educated or illiterate; MMSE is presented as mean unless otherwise specified.

– indicates no data available or not applicable.

a Group total.

b Correct classification rate of dementia patients.

A study by Nielsen et al. (Reference Nielsen, Segers, Vanderaspoilden, Beinhoff, Minthon, Pissiota and Waldemar2018) investigated the European Cross-Cultural Neuropsychological Test Battery (CNTB) in immigrants with dementia from a Turkish, Moroccan, former Yugoslav, Polish, or Pakistani/Indian background. The CNTB consists of the Rowland Universal Dementia Assessment Scale (RUDAS), the Recall of Pictures Test, Enhanced Cued Recall, the copying and recall of a semi-complex figure, copying of simple figures, the Clock Drawing Test, the Clock Reading Test, a picture naming test, category verbal fluency (animal and supermarket), the Color Trails Test, the Five Digit Test, and serial threes. The Color Trails Test and copy and recall of a semi-complex figure were not administered to participants with less than 1 year of education. The study showed excellent discriminative abilities for measures of memory – Enhanced Cued Recall, Recall of Pictures Test, and recall of a semi-complex figure – and category word fluency. Most of the AUCs for these tests were .90 or higher. Attention measures, that is, the Color Trails Test and Five Digit Test, had fair to good discriminative abilities, with AUCs of around .85 and .78, respectively. The diagnostic accuracy was poor for picture naming (AUC .65) and graphomotor construction tests (AUCs of .62 and .67).

A third battery was the Literacy Independent Cognitive Assessment, or LICA (Shim et al., Reference Shim, Ryu, Lee, Lee, Jeong, Choi and Ryu2015), a newly developed cognitive battery for people who are illiterate. Subtests include Story and Word Memory, Stick Construction (similar to, but more extensive than the Stick Design Test), a modified Corsi Block Tapping Task, Digit Stroop, category word fluency (animals), a Color and Object Recognition Test, and a naming test. Performance differed significantly between controls and MCI patients on all subtests except Stick Construction and the Color and Object Recognition Test. The AUC for the entire battery was good (.83) in both the group of people who were literate and the group of people who were illiterate, but no information was provided on the AUCs of the subtests.

The last battery was the Non-Language–based Cognitive Assessment (Wu, Lyu, Liu, Li, & Wang, Reference Wu, Lyu, Liu, Li and Wang2017), a battery primarily designed for aphasia patients, but also validated in Chinese MCI patients. It contains Judgment of Line Orientation, overlapping figures, a visual reasoning subtest, a visual memory test using stimuli chosen to match Chinese culture, an attention task in a cross-out paradigm, and a Block Design test. All demonstrations were nonverbal. The AUC was excellent (.94), but no information was available regarding the subtests.

DISCUSSION

In this systematic review, an overview was provided of 44 studies investigating domain-specific neuropsychological tests used to assess dementia in non-Western populations with low education levels. The quality of these studies was summarized, as were the reliability, validity, and cross-cultural and/or cross-linguistic applicability of the tests. The studies stemmed mainly from Brazil, Hong Kong, and Korea, or concerned Hispanics/Latinos residing in the USA. Most studies focused on AD or unspecified dementia. Memory was studied most often, and various formats of memory tests seem suitable for low-educated, non-Western populations. The traditional Western tests in the domains of attention and construction were unsuitable for low-educated patients; instead, tests such as the Stick Design Test or Five Digit Test may be considered. There was little variety in instruments measuring executive functioning and language. More cross-cultural studies are needed to advance the assessment of these cognitive domains. With regard to the quality of the studies, the most remarkable findings were that many studies did not report a thorough adaptation procedure or blinding procedures.

A main finding of this review was that most studies investigated either patients with AD or a mixed or unspecified group of patients with dementia or MCI. In practice, this means that it remains unknown whether current domain-specific neuropsychological tests can be used to diagnose other types of dementia in non-Western, low-educated populations. Furthermore, only a third of the included studies described procedures to guard against circularity of reasoning, such as blinding; in the remaining studies, the AUC values may therefore be inflated. Only a third of the studies made use of both imaging and neuropsychological assessment to determine the reference standard. This can be problematic considering that misdiagnoses are likely to be more prevalent in a population in which barriers to dementia diagnostics in terms of culture, language, and education are present (Daugherty, Puente, Fasfous, Hidalgo-Ruzzante, & Perez-Garcia, Reference Daugherty, Puente, Fasfous, Hidalgo-Ruzzante and Perez-Garcia2017; Espino & Lewis, Reference Espino and Lewis1998; Nielsen et al., Reference Nielsen, Vogel, Phung, Gade and Waldemar2011). Another remarkable finding in this review was that only a handful of studies applied a rigorous adaptation procedure in which the instrument was translated, back translated, reviewed by an expert committee, and pilot-tested. These studies highlight the difficulty of developing a test that measures a cognitive construct in the same way as the original test in terms of the language used and the difficulty level. Abou-Mrad et al. (Reference Abou-Mrad, Tarabey, Zamrini, Pasquier, Chelune, Fadel and Hayek2015) elegantly describe these difficulties and provide details for the interested reader about the way some of these issues were resolved in their study.

With regard to specific cognitive domains, the tests identified in this review that measured attention were the Trail Making Test, WAIS-R Digit Span, Corsi Block Tapping Task, WAIS-R Digit Symbol, and Five Digit Test. It was apparent that traditional Western paper-and-pencil tests (Trail Making Test, Digit Symbol) are hard for uneducated subjects (Kim et al., Reference Kim, Baek and Kim2014; Lee et al., Reference Levy, Jacobs, Tang, Cote, Louis, Alfaro and Marder2002; Salmon et al., Reference Salmon, Jin, Zhang, Grant and Yu1995). It therefore seems unlikely that these types of tests will be useful in low-educated, non-Western populations. With regard to Digit Span tests, previous studies have indicated that performance levels vary depending on the language of administration, for example, due to the way digits are ordered in Spanish versus English (Arguelles, Loewenstein, & Arguelles, Reference Arguelles, Loewenstein and Arguelles2001), or due to a short pronunciation time in Chinese (Stigler, Lee, & Stevenson, Reference Stigler, Lee and Stevenson1986). This makes Digit Span less suitable as a measure for cross-linguistic evaluations in diverse populations. On the other hand, the Five Digit Test does not seem to suffer from this limitation: it is described by Sedó (Reference Sedó2004) as less influenced by differences in culture, language, and formal education, partially because it only makes use of the numbers one through five, which, according to Sedó, most illiterate people can identify and use correctly.

Western instruments used to assess the domain of construction, such as the Clock Drawing Test, led to frustration in multiple studies and had limited usefulness in clinical practice with low-educated patients. This is in line with the finding by Nielsen and Jorgensen (Reference Nielsen and Jorgensen2013) that even healthy illiterate people may experience problems with graphomotor construction tasks. The Stick Design Test, which does not rely on graphomotor responses, was described as more acceptable for low-educated patients. Given the ceiling effects that were present in one study (de Paula, Costa, et al., Reference de Paula, Costa, Bocardi, Cortezzi, De Moraes and Malloy-Diniz2013), as well as the differences in performance between the samples from Nigeria (Baiyewu et al., Reference Baiyewu, Unverzagt, Lane, Gureje, Ogunniyi, Musick and Hendrie2005) and Brazil (de Paula, Costa, et al., Reference de Paula, Costa, Bocardi, Cortezzi, De Moraes and Malloy-Diniz2013), further studies on this instrument are required.

Interestingly, no studies in the domain of Perception and Construction focused specifically on the assessment of visual agnosias, although a test of object recognition and a test with overlapping figures were included in two test batteries. As agnosia is included in the core clinical criteria of probable AD (McKhann et al., Reference McKhann, Knopman, Chertkow, Hyman, Jack, Kawas and Phelps2011), it is important to have the appropriate instruments available to determine whether agnosia is present. The only tests measuring perception were two smell identification tasks (Chan et al., Reference Chan, Tam, Murphy, Chiu and Lam2002; Park et al., Reference Park, Lee, Lee and Kim2018). In recent years, this topic has received more attention from cross-cultural researchers. Although olfactory identification is influenced by experience with specific odors (Ayabe-Kanamura, Saito, Distel, Martinez-Gomez, & Hudson, Reference Ayabe-Kanamura, Saito, Distel, Martinez-Gomez and Hudson1998), and tests would therefore have to be adapted to specific populations, deficits in olfactory perception have been described in the early stages of AD and PDD (Alves, Petrosyan, & Magalhaes, Reference Alves, Petrosyan and Magalhaes2014). As this task might also be considered to be ecologically valid, it may be an interesting avenue for further research. The study by Chan et al. (Reference Chan, Tam, Murphy, Chiu and Lam2002) with the Olfactory Identification Test explicitly describes the selection procedure of the scents used in the study, making it easy to adapt to other populations.

With regard to executive functioning, nearly all studies examined the verbal fluency test. In addition, the Tower of London test was examined in one study, and some subtests of attention tests tap aspects of executive functioning as well, such as the incongruent trial of the Five Digit Test or the Color Trails Test part 2. This relative lack of executive functioning tests poses significant problems to the diagnosis of Frontotemporal Dementia (FTD) and other dementias influencing frontal or frontostriatal pathways, such as PDD and dementia with Lewy Bodies (DLB) (Johns et al., Reference Johns, Phillips, Belleville, Goupil, Babins, Kelner and Chertkow2009; Levy et al., Reference Levy, Jacobs, Tang, Cote, Louis, Alfaro and Marder2002). Although this review shows that a limited amount of research is available on lower-educated populations, studies in higher-educated populations have given some indication of the clinical usefulness of other types of executive functioning tests in non-Western populations. For example, Brazilian researchers (Armentano, Porto, Brucki, & Nitrini, Reference Armentano, Porto, Brucki and Nitrini2009; Armentano, Porto, Nitrini, & Brucki, Reference Armentano, Porto, Nitrini and Brucki2013) found the Rule Shift, Modified Six Elements, and Zoo Map subtests of the Behavioral Assessment of the Dysexecutive Syndrome to be useful in discriminating Brazilian patients with AD from controls. It would be interesting to see whether these subtests can be modified so they can be applied with patients who have little to no formal education.

The results in the cognitive domain of language showed that (adapted) versions of the Boston Naming Test were most often studied. This is remarkable, as it is known that even healthy people who are illiterate are at a disadvantage when naming black-and-white line drawings, such as those in the Boston Naming Test, compared to people who are literate (Reis, Petersson, Castro-Caldas, & Ingvar, Reference Reis, Petersson, Castro-Caldas and Ingvar2001). This disadvantage disappears when a test uses colored images or, better yet, real-life objects (Reis, Faisca, Ingvar, & Petersson, Reference Reis, Faisca, Ingvar and Petersson2006; Reis, Petersson, et al., Reference Reis, Petersson, Castro-Caldas and Ingvar2001). Considering low-educated patients, Kim et al. (Reference Kim, Lee, Bae, Kim, Kim, Kim and Chang2017) describe an interesting finding: although participants with a low education level scored lower on the naming test, remarkable differential item functioning was discovered; the items “acorn” and “pomegranate” were easier to name for low-educated people than for higher-educated people, and the effect was reversed for “compass” and “mermaid”. The authors suggest that this may be due to these groups growing up in rural versus urban areas, thereby acquiring knowledge specific to these environments. New naming tests might therefore benefit from differential item functioning analyses with regard to education, but also other demographic variables. It was surprising that none of the studies examined a cross-culturally and cross-linguistically applicable test, even though such a test has been developed, that is, the Cross-Linguistic Naming Test (Ardila, Reference Ardila2007).
The Cross-Linguistic Naming Test has been studied in healthy non-Western populations from Morocco, Colombia, and Lebanon (Abou-Mrad et al., Reference Abou-Mrad, Chelune, Zamrini, Tarabey, Hayek and Fadel2017; Galvez-Lara et al., Reference Galvez-Lara, Moriana, Vilar-Lopez, Fasfous, Hidalgo-Ruzzante and Perez-Garcia2015), as well as in Spanish patients with dementia (Galvez-Lara et al., Reference Galvez-Lara, Moriana, Vilar-Lopez, Fasfous, Hidalgo-Ruzzante and Perez-Garcia2015). These studies preliminarily support its cross-cultural applicability, although more research is needed in diverse populations with dementia.
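As an illustration of what a differential item functioning analysis with regard to education could look like, the sketch below computes a Mantel-Haenszel common odds ratio for a single naming item. This is one widely used approach and is offered as an assumption for illustration; it is not stated here which method Kim et al. used. Participants are assumed to be stratified by total naming score, so that low- and higher-educated participants of comparable overall ability are compared. All counts are invented and the function names are hypothetical.

```python
import math
from typing import List, Tuple

# One 2x2 table per ability stratum (e.g., a band of total naming scores):
# (ref_correct, ref_incorrect, focal_correct, focal_incorrect)
# Assumption: reference = higher-educated group, focal = low-educated group.
Stratum = Tuple[int, int, int, int]


def mantel_haenszel_odds_ratio(strata: List[Stratum]) -> float:
    """Common odds ratio across strata.

    Values above 1 mean the item is easier for the reference group,
    values below 1 mean it is easier for the focal group.
    Raises ZeroDivisionError if the denominator sum is zero.
    """
    num = 0.0
    den = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        if n == 0:
            continue  # skip empty strata
        num += a * d / n
        den += b * c / n
    return num / den


def ets_delta(odds_ratio: float) -> float:
    """Rescale the odds ratio to the ETS delta metric (-2.35 * ln(alpha))."""
    return -2.35 * math.log(odds_ratio)


# Invented counts for one item in three total-score strata.
item_strata = [(8, 12, 14, 6), (12, 8, 16, 4), (15, 5, 18, 2)]

alpha = mantel_haenszel_odds_ratio(item_strata)
print(round(alpha, 3), round(ets_delta(alpha), 2))
```

With these invented counts the odds ratio is below 1, the pattern one would expect for an item that is easier for low-educated participants of comparable overall ability. A real analysis would also require a significance test and adequate sample sizes per stratum.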

Memory was the cognitive domain that was most extensively studied, in different formats and with stimuli presented to different sensory modalities: visual, auditory, and tactile. Both adaptations of existing tests and assembled tests were studied. The memory tests in this review generally had the best discriminative abilities of all cognitive domains that were studied. Although this is a positive finding, given that memory tests play a pivotal role in assessing patients with AD, memory tests alone are insufficient to diagnose, or discriminate between, other types of dementia, such as VaD, DLB, FTD, or PDD.

For the majority of the test batteries that were described, information about the validity of the subtests was not provided. An exception is the study of the CNTB (Nielsen et al., Reference Nielsen, Segers, Vanderaspoilden, Beinhoff, Minthon, Pissiota and Waldemar2018). Largely in line with the other findings in this review, the memory tests of the CNTB performed best, whereas the tests of naming and graphomotor construction performed worst. Attention tests, such as the Color Trails Test and Five Digit Test, performed relatively well. In sum, the CNTB encompasses a variety of potentially useful subtests. Similar to the CNTB, the LICA also includes less traditional tests, such as Stick Construction and Digit Stroop, but the lack of information about the discriminative abilities of the subtests makes it hard to judge the relative value of these tests for the cross-cultural assessment of dementia.

In this review, special attention was paid to the influence of education on the performance on neuropsychological tests. Interestingly, the discriminative abilities of the tests were consistently lower for low-educated participants than for high-educated participants (Salmon et al., Reference Salmon, Jin, Zhang, Grant and Yu1995). It has been suggested that tests with high ecological validity may be more suitable for low-educated populations than the (Western) tests that are currently used. Perhaps inspiration can be drawn from the International Shopping List Test (Thompson et al., Reference Thompson, Wilson, Snyder, Pietrzak, Darby, Maruff and Buschke2011) for memory, the Multiple Errands Test for executive functioning (Alderman, Burgess, Knight, & Henman, Reference Alderman, Burgess, Knight and Henman2003), or even its Virtual Reality (VR) version (Cipresso et al., Reference Cipresso, Albani, Serino, Pedroli, Pallavicini, Mauro and Riva2014), or other VR tests, such as the Non-immersive Virtual Coffee Task (Besnard et al., Reference Besnard, Richard, Banville, Nolin, Aubin, Le Gall and Allain2016) or the Multitasking in the City Test (Jovanovski et al., Reference Jovanovski, Zakzanis, Ruttan, Campbell, Erb and Nussbaum2012).

Some limitations must be acknowledged with respect to this systematic review. It can be argued that this review should not have been limited to dementia or MCI, and should have also included studies of healthy people – for example, normative data studies – or studies of patients with other medical conditions. The inclusion criterion of patients with dementia or MCI was chosen as it is important to know if and how the presence of dementia influences test performance, before a test can be used in clinical practice. That is: is the test sufficiently sensitive and specific to the presence of disease and to disease progression? If this is not the case, using the test might lead to an underestimation of the presence of dementia, or problems differentiating dementia from other conditions.

Furthermore, with regard to the definition of the target population of this review, the question may be raised whether African American people from the USA should have been included. Although differences in test performance have indeed been found between African Americans and (non-Hispanic) Whites, these differences mostly appear to be driven by differences in quality of education, as opposed to differences in culture (Manly, Jacobs, Touradji, Small, & Stern, Reference Manly, Jacobs, Touradji, Small and Stern2002; Nabors, Evans, & Strickland, Reference Nabors, Evans, Strickland, Fletcher-Janzen, Strickland and Reynolds2000; Silverberg, Hanks, & Tompkins, Reference Silverberg, Hanks and Tompkins2013). Although this is a very interesting topic for further research, the absence of cultural or linguistic barriers in this population led to its exclusion from this review.

Lastly, a remarkable finding was the relative paucity of studies from regions such as Africa and the Middle East. It is important to note that, although the search was thorough and studies in other languages were not excluded from this review, some studies without titles/abstracts in English, or studies that were published in local databases, may not have been found. For example, a review by Fasfous, Al-Joudi, Puente, and Perez-Garcia (Reference Fasfous, Al-Joudi, Puente and Perez-Garcia2017) describes how Arabic-speaking countries have their own databases (e.g., Arabpsynet) and how an adequate word for “neuropsychology” is lacking in Arabic. Similar databases are known to exist in other regions as well, such as LILACS in Latin America (Vasconcelos et al., Reference Vasconcelos, Brucki and Bueno2007).

A strength of this review is that it provides clinicians and researchers working with non-Western populations with a clear overview of the tests and comprehensive test batteries that may have cross-cultural potential, and could be further studied. For example, researchers might use tests from the CNTB as the basis of the neuropsychological assessment, and supplement it with other tests. If preferred, memory tests can also be chosen from the wide variety of memory tests with good AUCs in this review, such as the Fuld Object Memory Evaluation. Researchers are advised against using measures of attention and construction that are paper-and-pencil based, and instead to use tests such as the Five Digit Test for attention, or the Stick Design Test for construction. With regard to executive functioning, it is recommended to look for new, ecologically valid tests to supplement existing tests such as the category verbal fluency test and the Five Digit Test. Furthermore, it is recommended to use language tests that are not based on black-and-white line drawings, but instead use colored pictures, photographs, or real-life objects. The Cross-Linguistic Naming Test might have potential for such purposes.

Other recommendations for future research are to study patients with a variety of diagnoses, including – but not limited to – FTD, DLB, VaD, and primary progressive aphasias. However, as this review has pointed out, this will remain difficult as long as adequate tests to assess these dementias are lacking. It is therefore recommended that future studies support the diagnosis used as the reference standard by additional biomarkers of disease, such as magnetic resonance imaging scans or lumbar punctures. Another suggestion is to carry out validation studies in patients with dementia for instruments that have only been used in healthy controls or for normative data studies. Lastly, it is recommended that test developers use the most up-to-date guidelines on the adaptation of cross-cultural tests, such as those by the International Test Commission (International Test Commission, 2017) and others (Hambleton, Merenda, & Spielberger, Reference Hambleton, Merenda and Spielberger2005; Iliescu, Reference Iliescu2017), and report in their study how they met the various criteria described in these guidelines.

In conclusion, the neuropsychological assessment of dementia in non-Western, low-educated patients is complicated by a lack of research examining cognitive domains such as executive functioning, non-graphomotor construction, and (the cross-cultural assessment of) language, as well as a lack of studies investigating types of dementia other than AD. However, promising instruments are available in a number of cognitive domains that can be used for future research and clinical practice.

ACKNOWLEDGEMENTS

This study was supported by grant 733050834 from the Netherlands Organization of Scientific Research (ZonMw Memorabel). The authors would like to thank Wichor Bramer from the Erasmus MC University Medical Center Rotterdam for his help in developing the search strategy.

CONFLICT OF INTEREST

The authors have nothing to disclose.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/S1355617719000894.