1. Introduction

The term ‘creativity’ is often misused, especially by industrial practitioners when promoting their own products and/or design processes. However, the problem is not limited to industry, since in academia, the term is sometimes used as a buzzword. Although creativity is often defined in different ways, making a shared definition unlikely (Doheim & Yusof 2020), a plethora of studies provide comprehensive definitions, allowing us to clarify at least the fundamental pillars of creativity. More precisely, in the context of design, creativity has been defined by Amabile (1983) as the ability to create ideas that are novel, useful and appropriate, while Moreno et al. (2016) define creativity as the ability to generate novel and valuable ideas. In other definitions, novelty is always present and can thus be considered the main pillar of any concept of creativity.

Unfortunately, the term ‘novelty’ has a multifaceted meaning, at least in the context of engineering design (Fiorineschi & Rotini 2021). For instance, Verhoeven, Bakker & Veugelers (2016) mentioned ‘technological novelty’, Boden (2004) considered both ‘historical novelty’ and ‘psychological novelty’ and Vargas-Hernandez, Okudan & Schmidt (2012) referred to the concept of ‘unexpectedness’. However, the nature of each of these definitions is quite different, which could generate misunderstandings because some of them refer to the ‘concept of novelty’ (i.e., to what is expected to be novel), while others refer to the ‘novelty type’ (i.e., to the reference to be considered for establishing the novelty of ideas). Indeed, according to Fiorineschi & Rotini (2021), while ‘unexpectedness’ is a concept of novelty, ‘historical novelty’ and ‘psychological novelty’ define two possible novelty types.

The motivation for this work is the perceived need to improve the understanding of how and why novelty metrics are actually used in practice. Unfortunately, it is impossible to perform an in-depth analysis of the literature for all the available metrics in a single paper. Therefore, we decided to focus on one of the most cited contributions related to novelty metrics. More precisely, this literature review is performed by considering all the contributions that cite the article by Shah, Vargas-Hernandez & Smith (2003a), commonly referenced and acknowledged by engineering design scholars (Kershaw et al. 2019), with 739 citations on Scopus (as of March 2021). In particular, Shah et al. (2003a) provided two families of novelty assessment procedures, namely the ‘a priori’ and the ‘a posteriori’ procedures. The ‘a priori’ approach can be associated with ‘historical novelty’ since it requires a reference set of solutions to assess the novelty of ideas. The ‘a posteriori’ approach does not require a set of reference solutions because the ideas to be assessed constitute the reference itself. In this specific procedure, novelty is calculated by counting the occurrences of similar ideas generated in the same session. Therefore, the a posteriori procedure is associated with ‘psychological novelty’ and with the ‘uncommonness’ or ‘unexpectedness’ of a specific idea.

The first article mentioning these two assessment approaches was published more than 20 years ago (Shah, Kulkarni & Vargas-Hernandez 2000), just 3 years before the publication that more comprehensively demonstrates the validity of the metrics (Shah et al. 2003a). However, notwithstanding the wide diffusion of these seminal contributions, it is still not clear whether and to what extent the mentioned metrics are actually used. To bridge this gap, this work presents a comprehensive literature review of the articles and conference papers (in English) that cite the contribution of Shah et al. (2003a) (hereinafter called SVS), since it is acknowledged to be the reference in which the metrics are comprehensively explained and tested. In this way, it is our intention to provide a clear picture of the actual usage of the two novelty assessment procedures (i.e., the a posteriori and the a priori procedures). More specifically, the objective of this work is to determine the acknowledged applications of the mentioned assessment procedures and provide insightful considerations for future developments and/or applications by discussing the observed criticalities.

This work does not perform an in-depth analysis of each paper, as this activity is unnecessary to accomplish the claimed objective. Rather, it performs an analysis of the literature contributions and is focused solely on the extraction of the specific information needed to build a clear representation of the use of SVS novelty metrics. Therefore, the review intentionally overlooks any paper that could present (in our opinion) certain flaws. Indeed, providing a reference to a contribution that presents a questionable application of the metrics without performing a comprehensive discussion about the research work would surely appear defamatory for the authors and not ethically appropriate. The references of the reviewed works are of course listed in the following sections.

The content of the paper is organised as follows. A description of the two SVS metrics is provided in Section 2 together with a brief overview of the works that perform a literature analysis of novelty metrics in the engineering design field (i.e., the context where the work of Shah, Vargas-Hernandez & Smith 2003a is mostly cited). The research methodology is comprehensively described in Section 3 together with a detailed description of the key parameters used to perform the analysis of the literature. Section 4 presents the obtained results, which are subsequently discussed in Section 5. Finally, conclusions are reported in Section 6. It is also important to note that it was not possible to retrieve all the citing documents. Accordingly, a detailed list of the documents that we were unable to retrieve is reported in Appendix Table A1.

2. Background

2.1. The novelty metrics proposed by SVS

The well-acknowledged set of metrics proposed by SVS constitutes a milestone for design creativity research. SVS considered four parameters affecting ‘idea generation effectiveness’ – that is, the novelty, variety, quality and quantity of generated ideas – proposing metrics to facilitate the assessment of these ideas. It is not in the scope of this paper to describe each parameter and the related metrics. For additional information, the reader can refer to the original work of SVS (Shah et al. 2003a), but it is also relevant to acknowledge that important analyses and improvements have been proposed in the literature about some of the SVS metrics (e.g., Nelson, Wilson & Yen 2009; Brown 2014; Fiorineschi, Frillici & Rotini 2020a, 2021).

The key of the SVS novelty metrics is in the equation used to assess the overall novelty of each idea (M) in a specific set of ideas. More precisely, the novelty is calculated through Eq. (1):

$$ {M}_{SNM}=\sum \limits_{i=1}^m{f}_i\sum \limits_{j=1}^n{S}_{ij}{p}_j \tag{1} $$

where $ {f}_i $ is the weight of the $ {i}^{th} $ attribute and $ m $ is the number of attributes characterising the set of analysed ideas. The parameter $ n $ represents the number of design stages characterising the idea generation session, and $ {p}_j $ represents the weight assigned to the $ {j}^{th} $ design stage. Indeed, SVS observed that different attributes or functions can differently impact the overall novelty. Similarly, the same scholars also observed that the contribution to novelty can be affected by the considered design stage [e.g., conceptual design, embodiment design (Pahl et al. 2007)]. The parameter $ {S}_{ij} $ represents the ‘unusualness’ or the ‘unexpectedness’ of the specific solution used by the analysed idea to implement the $ {i}^{th} $ attribute at the $ {j}^{th} $ design stage.
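As an illustration of how Eq. (1) combines its terms, the following minimal Python sketch computes the overall novelty of a single idea. The function name `overall_novelty`, the example weights (chosen here to sum to 1) and the example scores are hypothetical values introduced only for this illustration; they are not taken from SVS.

```python
from typing import Sequence


def overall_novelty(
    attribute_weights: Sequence[float],
    stage_weights: Sequence[float],
    scores: Sequence[Sequence[float]],
) -> float:
    """Return M = sum_i f_i * sum_j S_ij * p_j for a single idea.

    attribute_weights: f_i, one weight per attribute (length m)
    stage_weights:     p_j, one weight per design stage (length n)
    scores:            S_ij, an m x n table of novelty scores
    """
    if len(scores) != len(attribute_weights):
        raise ValueError("scores needs one row per attribute")
    total = 0.0
    for f_i, row in zip(attribute_weights, scores):
        if len(row) != len(stage_weights):
            raise ValueError("each row needs one score per design stage")
        # Inner sum over design stages, then weighting by the attribute.
        total += f_i * sum(s_ij * p_j for s_ij, p_j in zip(row, stage_weights))
    return total


# Hypothetical idea described by two attributes over two design stages.
f = [0.6, 0.4]          # assumed attribute weights
p = [0.7, 0.3]          # assumed design-stage weights
S = [[8.0, 5.0],        # scores of attribute 1 at stages 1 and 2
     [2.0, 10.0]]       # scores of attribute 2 at stages 1 and 2
m_idea = overall_novelty(f, p, S)
```

When a study considers a single design stage, the same function can be called with `stage_weights=[1.0]` and one score per attribute, which reduces Eq. (1) to a weighted sum over attributes.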

From Eq. (1), it is possible to infer that the SVS novelty assessment procedure relies on the concept of functions or key attributes that can be identified within the set of generated ideas. In other words, the novelty of an idea ‘I’ in the SVS approach is relative to a specific universe of ideas {U}. Each idea ‘I’ is considered a composition of the solutions that implement each function (or attribute) used to represent the idea (one solution for each function/attribute). However, according to SVS, the identification of functions and attributes is case sensitive.

The most important parameter of Eq. (1) is $ {S}_{ij} $, and two different approaches have been proposed by SVS to obtain the related value, that is, the ‘a posteriori’ and the ‘a priori’ approaches.

In the a priori approach, the $ {S}_{ij} $ value is ‘assigned’ by judges who compare the idea (decomposed in terms of attributes and related solutions) against a reference set of existing products. More precisely, SVS reports, ‘…a universe of ideas for comparison is subjectively defined for each function or attribute, and at each stage. A novelty score S1 [the value “1” identifies the novelty metric in the SVS set of metrics] is assigned at each idea in this universe’. Therefore, the ‘a priori’ approach is based on subjective evaluations made by referring to subjective universes of ideas. This kind of approach then relies on the personal knowledge of the judges, similar to what occurs with other well-acknowledged novelty assessment approaches used in the context of design studies (e.g., Hennessey, Amabile & Mueller 2011; Sarkar & Chakrabarti 2011; Jagtap 2019).

In contrast, in the a posteriori approach, the $ {S}_{ij} $ for each attribute is calculated by Eq. (2):

$$ {S}_{ij}=\frac{T_{ij}-{C}_{ij}}{T_{ij}}\times 10 \tag{2} $$

where $ {T}_{ij} $ is the total number of solutions (or ideas) conceived for the $ {i}^{th} $ attribute at the $ {j}^{th} $ design stage and $ {C}_{ij} $ is the count of the current solution for the $ {i}^{th} $ attribute at the $ {j}^{th} $ design stage. Therefore, in this case, the reference universe of ideas is the same set of generated ideas. Indeed, the evaluator (it is preferable not to use the term ‘judge’ to avoid confusion with the ‘a priori’ rating approach) is asked to count the number of times a specific solution for a specific attribute (or function) appears within the set of generated ideas (for each design stage). Then, such a value $ {C}_{ij} $ is compared with the total number of solutions generated for the specific attribute in the specific design stage ($ {T}_{ij} $), obtaining a value of the ‘infrequency’ of that solution. Accordingly, the a posteriori approach is heavily affected by the specific set of analysed ideas, and therefore, the novelty value is relative to that specific set of ideas.
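The counting logic of Eq. (2) can be sketched in a few lines of Python. The sketch below is only an illustration under stated assumptions: it handles one attribute at one design stage, the function name `a_posteriori_scores` is hypothetical, and the five example solutions are invented for this purpose.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def a_posteriori_scores(solutions: Sequence[Hashable]) -> List[float]:
    """Score each solution as S = (T - C) / T * 10, following Eq. (2).

    `solutions` lists, idea by idea, the solution that each idea uses for
    one attribute at one design stage. T is the total number of solutions
    in the list and C is how many times the given solution occurs in it.
    """
    total = len(solutions)
    if total == 0:
        return []
    counts = Counter(solutions)
    return [(total - counts[s]) / total * 10 for s in solutions]


# Hypothetical session: five ideas, assessed on an "energy source" attribute.
energy_sources = ["spring", "spring", "spring", "battery", "gravity"]
scores = a_posteriori_scores(energy_sources)
```

In this invented session, the solution shared by three of the five ideas scores lower than the two solutions that appear only once, and the scores would change if ideas were added to or removed from the set, which is the dependence on the analysed set described above.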

2.2. What do we know about SVS novelty assessment approaches?

In the last decade, perhaps due to their widespread success and dissemination through the scientific community, the SVS assessment procedures have been deeply investigated and discussed. For example, Brown (2014) reviewed and discussed some novelty assessment approaches. Concerning SVS, Brown highlighted some problems related to the subjective identification of functions or key attributes, the subjective identification of the weights for each attribute and the additional difficulty of separating the ideas according to the design stages (if the user intends to use Eq. (1) in its complete form). Srivathsavai et al. (2010) reported that the ‘a posteriori’ novelty assessment approach proposed by SVS cannot be used to assess ideas in relation to existing ideas (or products). In other words, considering the definitions provided by Boden (2004), the SVS ‘a posteriori’ novelty assessment approach is not related to historical novelty. However, such a metric can be successfully used to assess psychological novelty (i.e., what is novel for those who actually generate the idea). In theory, the ‘a priori’ approach should be capable of assessing historical novelty; however, its actual use in the scientific community appears unclear.

Furthermore, Sluis-Thiescheffer et al. (2016) observed that in certain circumstances, the ‘a posteriori’ approach can lead to misleading novelty scores (i.e., too high), even if similar solutions appear quite often in the examined set. Vargas-Hernandez et al. (2012) claimed that the ‘a posteriori’ approach could be improved to better address changes within the examined sets of ideas and to be more effectively applied to boundary situations.

These and other observations have been made, which we summarised in comprehensive reviews that accurately describe and discuss each of them (e.g., Fiorineschi & Rotini 2019, 2021; Fiorineschi, Frillici & Rotini 2020b). Additionally, we discovered and examined a particular issue concerning the ‘a posteriori’ approach, that is, the problem of ‘missing’ or ‘extra’ attributes. We examined the problem in depth (Fiorineschi et al. 2020a), and we also proposed a comprehensive solution to the problem (Fiorineschi et al. 2021).

However, despite the comprehensive set of studies that analyse and/or discuss SVS novelty assessment, there are still many questions to be answered. In particular, this work points to the following evident gaps that should be bridged to better apply the metrics in the future:

- Q1) What is the rate of use of the SVS novelty assessment approaches within scientific works?
- Q2) What is the rate of use of the ‘a priori’ and ‘a posteriori’ versions of the SVS novelty assessment approach?
- Q3) What is the level of consciousness of SVS users in terms of the novelty type and concept needed for the specific experiment?
- Q4) What is the rate of use of the ‘multiple design stages’ capability of the SVS novelty assessment approach?
- Q5) What is the range of applicability for the SVS novelty assessment approaches?
- Q6) How many scholars comprehensively use SVS novelty assessment approaches with multiple evaluators and perform an interrater agreement test?
- Q7) How many research works, among those using SVS novelty assessment approaches, comprehensively describe the assessment rationale followed?

The following section provides a description of the investigation targets related to each question, together with a comprehensive description of the research methodology followed.

3. Materials and methods

3.1. Description and motivation of the key investigation targets

The seven questions reported in Subsection 2.2 constitute the key investigation targets on which this literature review hinges. Investigation targets are considered here as information categories that are expected to be extracted from the analysis of the literature. Table 1 reports a short description of each of them.

Table 1. List of the investigation targets related to the questions formulated in Subsection 2.2

More specifically, according to Q1, the review is expected to clarify the extent to which the work of SVS is actually cited for the application of the related novelty assessment procedures. Then, through Q2, we aim to clarify the extent to which each of the two assessment approaches (‘a priori’ and ‘a posteriori’) is used.

It is also important to understand whether and to what extent the scholars who use SVS approaches are conscious of the actual range of applicability of the SVS novelty assessment approaches. A first indication is given by Q3, which is expected to reveal that most of the references are to the definitions provided by SVS. Accordingly, in addition to the considered novelty concept, we intend to search for any reference to the considered novelty type, that is, psychological novelty or historical novelty.

Q4 was formulated because a preliminary look at the literature suggested that the multiple stages capability of Eq. (1) is rarely used. It is important to investigate this issue further since, if the observation is confirmed, the reasons behind this lack of use should also be examined.

Q5 is expected to provide a comprehensive view of the current range of use of SVS metrics. Such information, although not sufficient alone to be considered as a guide, is expected to provide a preliminary indication about the types of application where the SVS novelty metrics have been used.

Q6 and Q7 are also two critical questions (and related investigation targets) that aim to investigate the extent to which the assessment is performed with a robust approach (Q6) and whether the provided information is sufficient to understand and/or to repeat the experiments (Q7).

3.2. Methodological approach for the literature review

To perform a valuable literature review, Fink (2014) reported that it is necessary to conduct the analysis systematically. In other words, it is important to adopt a sound and repeatable method for the identification, evaluation and synthesis of the actual body of knowledge. Purposeful checklists and procedures for supporting comprehensive literature reviews can be found in the literature (e.g., Blessing & Chakrabarti 2009; Okoli & Schabram 2010; Fink 2014), whose main points can be summarised as follows:

1. Clear definition of the review objectives, for example, through the formulation of purposeful research questions.
2. Selection of the literature database to be used as a reference for extracting the documents.
3. Formulation of comprehensive search queries to allow in-depth investigations.
4. Definition of screening criteria to rapidly skim the set of documents from those that do not comply with the research objectives.
5. Use of a repeatable procedure to perform the review.
6. Analysis of documents and extraction of the resulting information according to the research objectives.

Point 1 has been achieved by the questions formulated in Section 2, with the related investigation targets described in Subsection 3.1. According to the second point, we selected the Scopus database as the reference for performing the literature review and extracting the references of the documents to be analysed. The achievement of the third point has been relatively simple in this case since there was no intention to identify the papers through the adoption of complex search queries. Indeed, all documents citing the paper of Shah et al. (2003a,b) were considered (739 in March 2021).

Points 4–6 have been achieved by means of the procedure represented in Figure 1, which is described in the following paragraphs.

Figure 1. Procedure used to perform the literature review described in this paper.

Referring to Figure 1, Step 1 was performed by using the Scopus search engine. In particular, the SVS paper (Shah et al. 2003a) was identified and exploited to access the list of citing documents. Each citing document was downloaded from the publisher, or when this was not possible, a shareable copy was directly sought from the authors. Unfortunately, a nonnegligible number of documents have not been retrieved (a complete list of these documents is provided in Appendix Table A1).

The very first analysis performed on the retrieved documents is represented by Step 2 in Figure 1. In other words, each paper was rapidly screened to verify the presence of SVS metrics or any metric somehow related to SVS. To accomplish this, one of the authors rapidly searched for any reference to SVS in the sections of the paper that provide information about the research methodology. The approach used for this verification was to perform in-text searches within each article (e.g., for the terms ‘novelty’, ‘creativity’, ‘uncommonness’, etc.) and/or to directly search for the SVS citation with a subsequent analysis of ‘why’ it was cited. Although the number of processed documents was high, this analysis was relatively simple and allowed us to perform the screening at a rate of 10–20 papers per day.

The subset of papers obtained by Step 2 was further processed to clearly identify the documents using SVS metrics in their original form (Step 3). This activity was time-consuming, as it required a clear understanding of the rationale behind the adopted novelty assessment approach. Fortunately, as shown in Section 4, the number of papers that passed Step 2 was quite limited. The outcomes of Step 3 were then expected to answer Q1 and Q2 and thus to accomplish the first two investigation targets listed in Table 1. Consequently, the papers using the original form of the novelty assessment procedures were further processed to find the information related to the investigation targets from 3 to 7, as listed in Table 1 (Step 4 in Figure 1).

Similar to Step 2, to retrieve information about the novelty concept (Q3), each document among those that complied with Substep 3.1 (Figure 1) was processed by in-text searches for the words ‘novelty’, ‘originality’, ‘newness’, ‘uncommonness’, ‘unusualness’ and ‘unexpectedness’. In this way, it was possible to rapidly identify the parts of the documents that discuss novelty concepts and to understand which of them (if any) were considered in the analysed work. In addition, the terms ‘psychological’ and ‘historical’ (as well as any citation to the works of Boden) were searched throughout the text to verify the presence of any description of the type of novelty (i.e., psychological novelty or historical novelty).
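The in-text searches described above were performed manually, but a comparable screening could be sketched programmatically as follows. This is only an assumed illustration: the function `find_terms`, the whole-word and case-insensitive matching, and the sample sentence are hypothetical choices and do not reproduce the procedure actually followed in the review.

```python
import re
from typing import Dict, Iterable, List

# Search terms taken from the text above.
NOVELTY_TERMS = ["novelty", "originality", "newness",
                 "uncommonness", "unusualness", "unexpectedness"]
TYPE_TERMS = ["psychological", "historical", "Boden"]


def find_terms(text: str, terms: Iterable[str]) -> Dict[str, List[int]]:
    """Map each term found in `text` to the character offsets of its matches.

    Matching is case-insensitive and restricted to whole words
    (an assumption of this sketch).
    """
    hits: Dict[str, List[int]] = {}
    for term in terms:
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        offsets = [match.start() for match in pattern.finditer(text)]
        if offsets:
            hits[term] = offsets
    return hits


# Invented sentence standing in for the full text of a reviewed paper.
sample = ("We assess the unusualness of each idea; "
          "psychological novelty is the target.")
concept_hits = find_terms(sample, NOVELTY_TERMS)
type_hits = find_terms(sample, TYPE_TERMS)
```

The returned offsets only point to the passages worth reading; deciding which novelty concept or type a paper actually adopts still requires reading those passages.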

To retrieve the information relevant to Q4, it was necessary to deeply analyse the methodological approach used in the reviewed papers. Indeed, it was important to clearly understand the design phases that were actually considered in the experiments.

The extraction of the information related to Q5 required the identification of the motivations and the research context underpinning each analysed document. Often, it was necessary to search in the paper for information about the application type. This was accomplished by focusing on the introduction, the methodology description and the discussion of each reviewed article. The extracted application types were grouped into a set of categories that we formulated by following a subjective interpretation (there was no intention to provide a universally shared set of definitions about the application contexts of the metrics). The set was created on the basis of the differences that we deemed useful to provide a wide overview of the current application range of the SVS approaches for novelty assessment.

To retrieve the information related to Q6, it was necessary to analyse the sections that describe the results and the methodological approach followed in the considered papers. The purpose of this specific analysis was to search for any trace of interrater agreement tests. In the first phase, we verified whether the assessment was performed by more than one evaluator. Then, for the papers presenting more than one evaluator, the analysis focused on the presence of any type of interrater agreement test.
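For readers unfamiliar with interrater agreement tests, the sketch below computes Cohen's kappa for two evaluators who independently classify the same ideas. Cohen's kappa is used here only as one well-known example of such a test; the review searched for any type of agreement test, and the function name `cohen_kappa` and the example labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohen_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Return Cohen's kappa for two raters labelling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each rater's own
    label frequencies.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Invented example: two evaluators classify the solution used by six ideas.
evaluator_1 = ["spring", "spring", "battery", "gravity", "spring", "battery"]
evaluator_2 = ["spring", "battery", "battery", "gravity", "spring", "battery"]
kappa = cohen_kappa(evaluator_1, evaluator_2)
```

In this invented case the evaluators agree on five of six ideas, and kappa corrects that raw agreement for the agreement expected by chance.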

Finally, for Q7, we checked each paper to understand whether and to what extent there was any description of the rationale used to perform the assessment. More specifically, the searched set of information is as follows:

• Availability of the complete set of ideas.
• Description of the method used to identify the attributes (or functions) and the related weights.
• Description of the rationale used to include or exclude ideas with missing or extra attributes.
• Description of the process used to assess each idea in relation to the identified attributes.

Substep 3.2 (Figure 1) was added after the analysis of a first chunk of retrieved documents, which allowed us to highlight that a nonnegligible number of the documents that passed Step 2 did not use the original version of the SVS approaches. The purpose of this step was to collect these modified versions of the metrics and to extract the available information (if any) about the reasons that led the authors to not use the original version.

4. Results

4.1. Results from the investigation target related to Q1

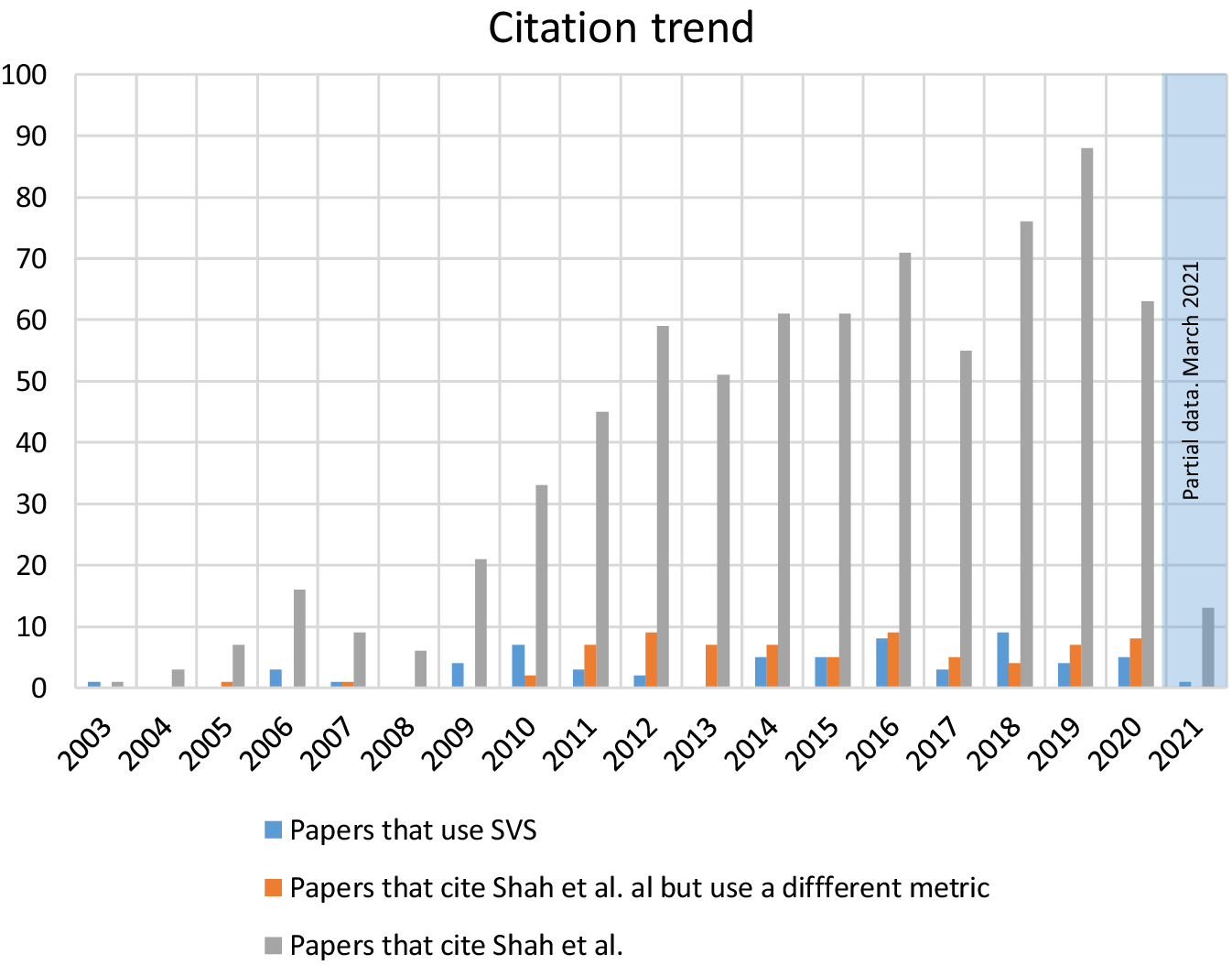

The analysis revealed that only a very small part of the documents that cited the work of Shah et al. (2003a,b) actually used the related metrics for novelty assessments, as shown in Figure 2 for the papers from 2003 to 2020 (the year 2021 was not considered since the data are updated only to March). The figure also shows that many papers did not use an original version of the SVS approaches. More precisely, excluding the 53 papers for which it was impossible to retrieve the document (see Appendix Table A1), the examined set comprises 686 papers. Among them, 61 papers used the original version of the SVS metrics, while 72 used a modified version (see Table 2 for the complete list of references). Therefore, the original versions of the SVS novelty assessment were used in approximately 9% of papers belonging to the examined set.

Figure 2. Citation trend for the work of Shah, Vargas-Hernandez & Smith (2003a,b). The figure also reports the portion of those citations from papers actually using an original version of the SVS approach or a modified version.
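The shares reported for Q1 follow from simple arithmetic on the counts given in the text, as the short Python check below shows. The variable names are introduced only for this illustration, and the share of modified versions is a derived figure that is not stated explicitly in the text.

```python
# Counts reported in the text.
citing_documents = 739      # documents citing SVS on Scopus (March 2021)
not_retrieved = 53          # documents that could not be retrieved
used_original = 61          # papers using an original SVS approach
used_modified = 72          # papers using a modified version

examined = citing_documents - not_retrieved           # size of the examined set
share_original = used_original / examined * 100       # percentage, original SVS
share_modified = used_modified / examined * 100       # percentage, modified (derived)

print(f"examined set: {examined} papers")
print(f"original SVS: {share_original:.1f}%")
print(f"modified version: {share_modified:.1f}%")
```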

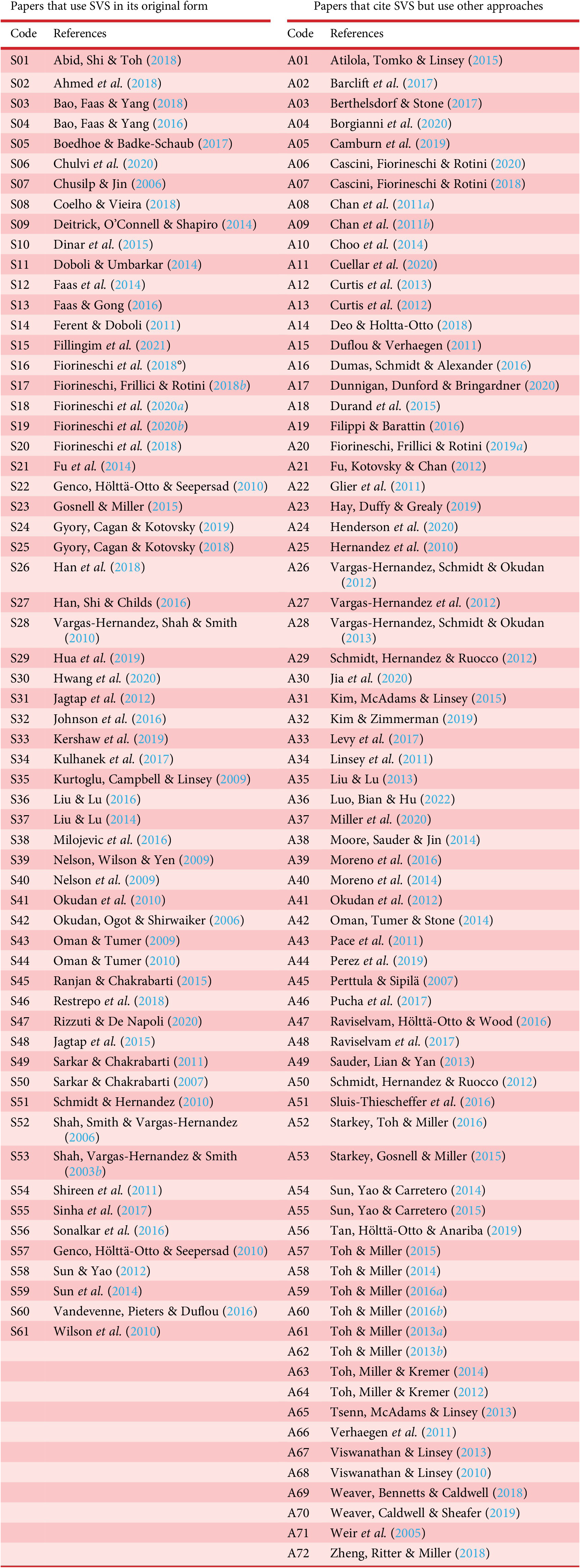

Table 2. List of documents identified according to Substeps 3.1 and 3.2 in Figure 1

To better show the results and to link the contents to the complete set of related references, we used an alphanumeric code (e.g., S10 or A21). The letter indicates whether the document uses an original SVS approach (letter “S”) or an alternative approach (letter “A”). The number identifies the article, according to the order shown in Table 2.

It is not within the scope of this paper to comprehensively analyse the 72 papers that used a modified version. However, a complete list of metrics is reported in Table 3, together with the indication of the papers that used them.

Table 3. List of metrics used in the works that refer to SVS but use different approaches

Note: See Table 2 for reference codes.

Unfortunately, the authors of the papers that are listed in Table 3 often failed to provide comprehensive motivations behind the need to use a metric different from the original SVS. A motivation was provided by Moore, Sauder & Jin (2014), paper A38 in Table 2. The authors stated that they used a different metric because they were focused on the novelty of each design entity and not on the total novelty of the entire process.

Another case is that of Filippi & Barattin (2016), paper A19 in Table 2, who simply report that their metric resembles SVS but actually measures something different from novelty.

4.2. Results from the investigation target related to Q2

Figure 3 graphically reports the results obtained for Q2, according to Substep 4.1 in Figure 1. In particular, the results show that among the 61 papers that use the original version of the SVS, 63.9% use the ‘a posteriori’ approach, and only 8.2% use the ‘a priori’ version. To these percentages, it is also necessary to add the papers that use both approaches (3.3%). However, it is important to observe that a nonnegligible percentage of the examined documents (24.6%) does not provide sufficient information to understand which of the two approaches was used.

Figure 3. Percentages of the papers using the two different novelty assessment approaches originally proposed by SVS.

The latter information is critical. Indeed, while the use of one approach in place of the other (or both) can be a consequence of the experimental requirements (assuming that the authors correctly selected the most suited one), it is unacceptable for a scientific paper to fail to provide the information to understand which assessment procedure was used.

4.3. Results from the investigation target related to Q3

Concerning the awareness that SVS users have of novelty concepts, we found that the terms ‘unusualness’ and ‘unexpectedness’ are used very often. This result was expected since these terms are used in the original SVS work. However, as shown in Figure 4, other terms or a ‘mix of terms’ were also used among the reviewed works. More importantly, Figure 4 also shows that a nonnegligible part (24.6%) of the examined articles (among the 61 that use the original SVS metrics) does not provide any information about the considered novelty concept.

Figure 4. Terms used by the different authors to identify the novelty concept underpinning the assessment performed through the SVS metrics.

While it is often acceptable to use a specific term in place of others (e.g., due to the absence of a shared definition of novelty), it is unacceptable that some scientific works completely fail to provide any reference about the novelty concept. Indeed, the reader needs to understand what the authors intended when referring to novelty and/or when defining the investigation objectives. Independent of the correctness of the provided definitions, this is crucial information that cannot be neglected. Missing any reference to the considered concept necessarily implies that the authors failed to collect sufficient information about the plethora of different ways that actually exist for defining and assessing novelty.

Concerning the novelty type, we observed that almost all the examined papers (among the 61 that use the original SVS metrics) do not provide any specification about the actual need to assess psychological novelty or historical novelty. Only in three cases was the specification correctly included (Gosnell & Miller 2015; Fiorineschi, Frillici & Rotini 2020a,b). This result is also critical. Indeed, the selection of the most suited novelty assessment procedure (and the related metric) should be performed on the basis of ‘what is actually needed’ and not on the basis of naïve motivations such as whether a procedure ‘is the most cited’ or ‘is often used by scholars’. In the specific case of SVS metrics, the authors should always be aware that when using the a posteriori approach, they are actually measuring a psychological novelty, which cannot be interchangeably used with the historical novelty.
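To make this last point concrete, the following is a minimal sketch of the a posteriori score as it is commonly reported for the SVS approach, that is, S = 10 × (T − C) / T, where T is the number of ideas in the assessed set and C is the number of ideas that share the same solution for a given attribute. The function name and the sample labels are hypothetical, and the attribute weights of the complete metric are deliberately left out.

```python
from collections import Counter


def a_posteriori_scores(solutions):
    """Return one novelty score per idea for a single attribute.

    `solutions` holds one label per idea, naming the solution that the idea
    adopts for the attribute under assessment (hypothetical input format).
    Score = 10 * (T - C) / T, with T the number of ideas in the set and
    C the number of ideas sharing the same label.
    """
    total = len(solutions)
    if total == 0:
        return []
    counts = Counter(solutions)
    return [10 * (total - counts[label]) / total for label in solutions]


# Hypothetical set of five ideas, coded for one attribute (e.g., locomotion).
ideas = ["wheel", "wheel", "wheel", "track", "leg"]
scores = a_posteriori_scores(ideas)
print(scores)
```

In this sketch, the score of an idea depends only on how often its solution appears within the assessed set. The same ‘leg’ idea would receive a different score in a different set of ideas, which is why the a posteriori approach cannot stand in for a measure of historical novelty.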

4.4. Results from the investigation target related to Q4

The possibility of applying Equation 1 to multiple design stages was not exploited by the 61 papers collected with Substep 3.1 in Figure 1.

Unfortunately, no explanation for the reasons behind this lack of use was found in the reviewed documents. However, it is possible to infer that the reason for this lack lies in the nature of the SVS metrics. Indeed, the SVS metrics were formulated to assess the effectiveness of idea generation, and it is widely acknowledged that the most creative part of the design process is the conceptual design phase. According to what was observed in the reviewed papers, we can assume that the studies using the SVS metrics are almost totally related to conceptual design activities.

4.5. Results from the investigation target related to Q5

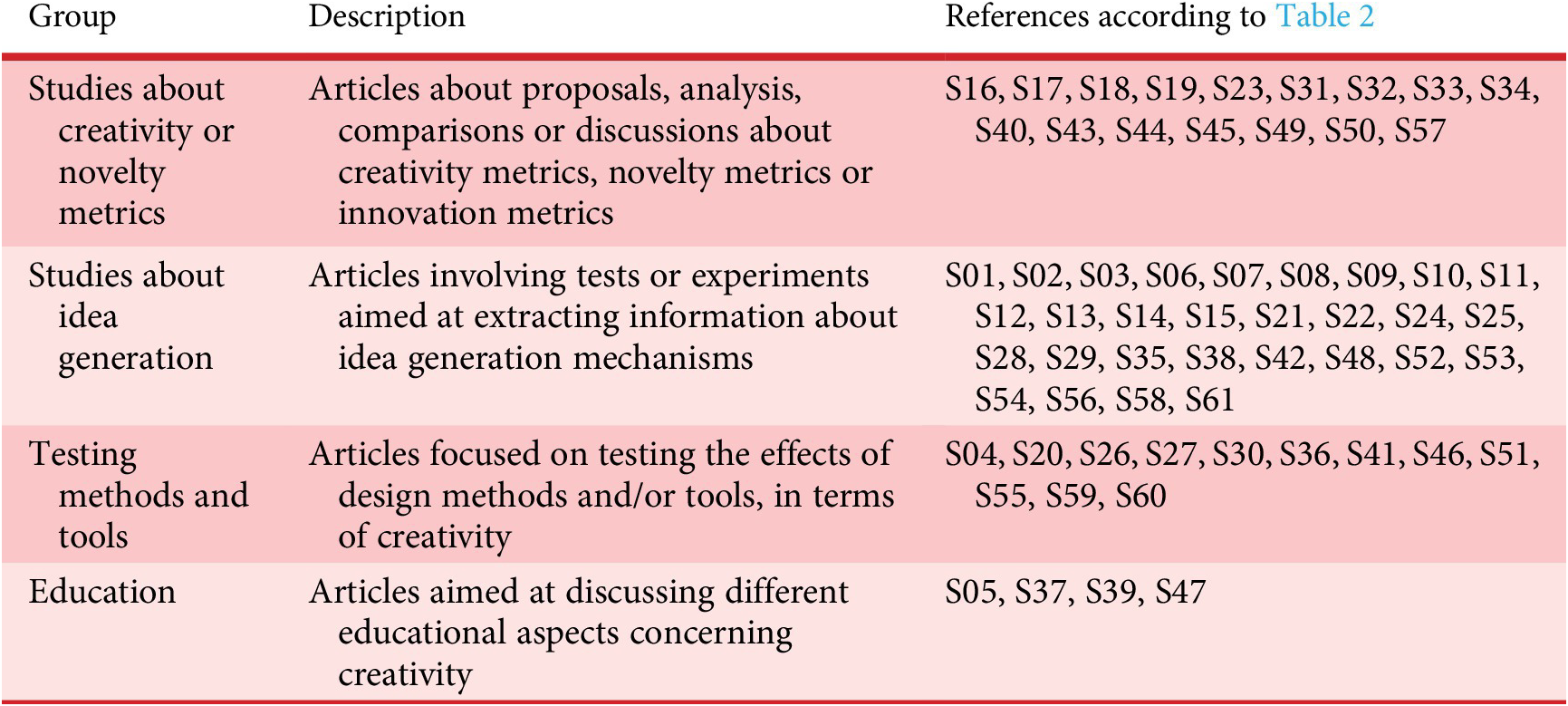

Concerning the types of applications where the SVS metrics were applied, the performed analysis (as described in Section 3) led to four different groups (see Figure 5). A short description of each group is reported in Table 4, together with the related list of coded references.

Figure 5. Types of application identified for the SVS novelty metrics.

Table 4. Application types identified for the SVS novelty metrics

As shown in Figure 5, a majority of works were about idea generation, followed by studies about creativity, innovation or novelty metrics.

All of the reviewed contributions are related to academia. Indeed, even if industry was indirectly mentioned or involved, the application of novelty metrics was always performed by academic staff for academia-related purposes. Therefore, there is no evidence of the use of SVS in industry.

4.6. Results from the investigation target related to Q6

Figure 6 reveals that only a portion of the reviewed works used an interrater agreement (IRR) test to validate the novelty assessment. At first glance, the application rate of the IRR test is quite irregular, without any evident overall trend. Its use appeared to increase at least from 2009 to 2018, but it rapidly decreased thereafter.

Figure 6. Papers that use SVS novelty metrics assessment and apply interrater agreement tests.

Surprisingly, in some specific years, the IRR test was never applied to novelty assessment results (i.e., 2006, 2007, 2011, 2014 and 2020 in Figure 6). Overall, only 18 contributions (among the 61 that use the original SVS novelty assessment approach) mentioned an IRR test (e.g., Shah, Vargas-Hernandez & Smith 2003b; Kurtoglu, Campbell & Linsey 2009; Johnson et al. 2016; Vandevenne, Pieters & Duflou 2016; Bao, Faas & Yang 2018).

4.7. Results from the investigation target related to Q7

It emerged that a large part of the reviewed documents did not provide any information to allow a comprehensive understanding and repeatability of the assessment. We are conscious that due to ethical and/or professional agreements, it is often impossible to share whole sets of ideas (e.g., images, sketches or CAD files). This constraint could be the main reason behind the absence of documents that allow a complete replication of the experiment. Indeed, without the original set of ideas, it is not possible to repeat the same assessment. However, as shown in Figure 7, a nonnegligible number of the reviewed documents report ‘partial’ but important information about the assessment procedure (e.g., description of the procedure used to identify the attributes and the related weights, list of attributes, set of assessed ideas codified in terms of functions and attributes, etc.).

Figure 7. Percentages of articles that describe the rationale for using the original SVS novelty assessment approaches. In particular, the graph shows how many documents do not report, partially report or are not required to report information about the assessment rationale. None of the reviewed papers completely reported the information required to ensure the repeatability of the experiment.

Only in two cases (among the 61 articles that used the original SVS assessment procedure) was the description of the rationale not needed. Indeed, in these cases, the authors do not consider the assessment from a real experiment or design session but use ad hoc data to explain their proposal (Nelson et al. 2009) or to explain potential SVS issues in border cases (Fiorineschi et al. 2018a).

5. Discussion

5.1. Findings

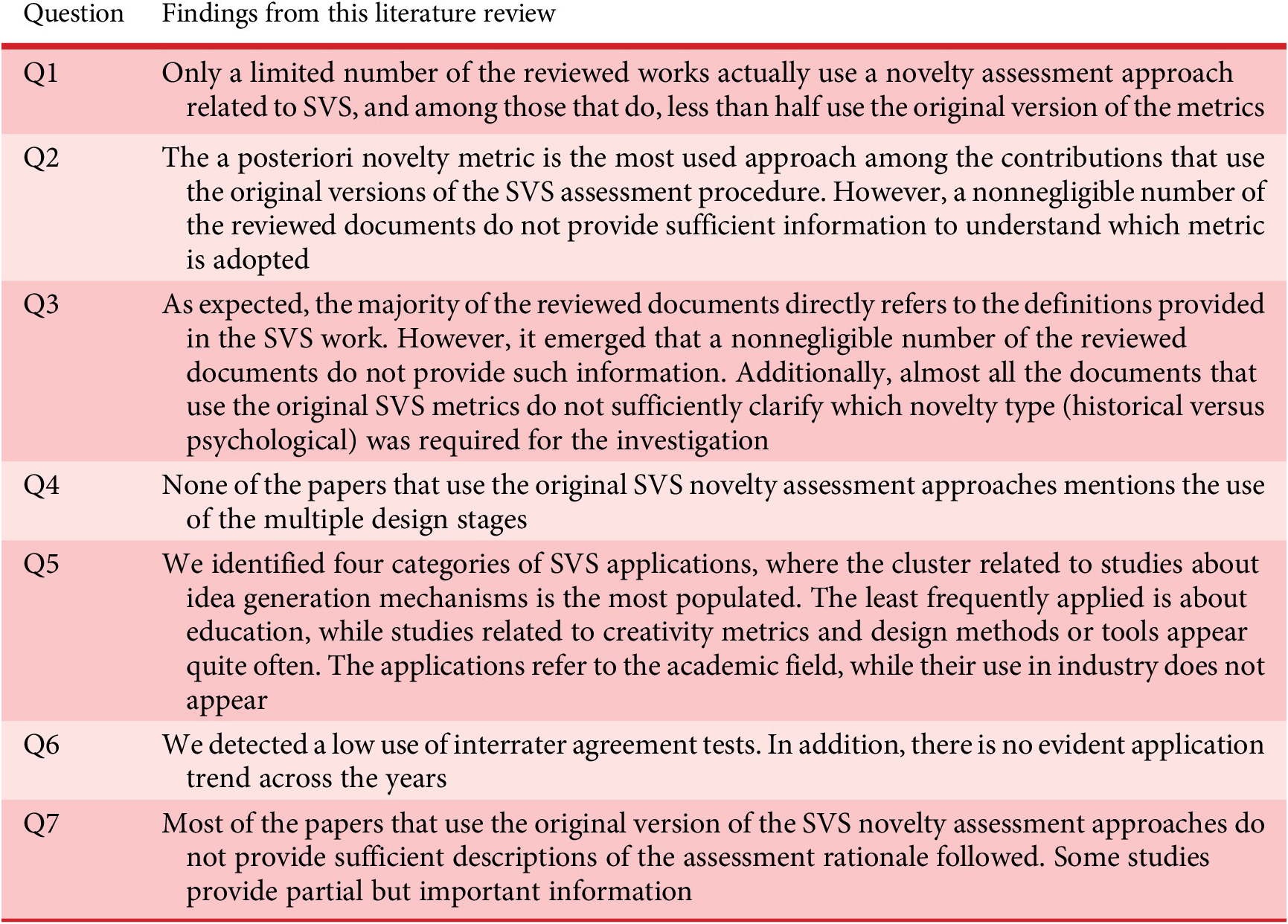

According to the seven research targets reported in Table 1 and then according to the related research questions formulated in Section 2, the outcomes from this review work can be summarised as shown in Table 5.

Table 5. Findings from each of the seven research questions introduced in Section 2

SVS novelty metrics are used by less than 10% of the citing contributions (and almost all the contributions use the ‘a posteriori’ version), notwithstanding the high number of citations received by the work of Shah et al. (2003a). Indeed, many of the reviewed contributions consider other contents of the paper by Shah et al. (2003a). For example, Dym et al. (2005) refer to the SVS concept of variety, while Chandrasegaran et al. (2013) cite Shah et al. (2003a) when discussing creativity-related aspects. In general, the work of Shah et al. (2003a,b) is often mentioned when discussing creativity and idea generation concepts and definitions (e.g., Charyton & Merrill 2009; Linsey et al. 2010; Crismond & Adams 2012; Gonçalves, Cardoso & Badke-Schaub 2014).

The categories of applications are congruent with the original intent of the SVS work, that is, to support design creativity research with a systematic and practical approach to perform repeatable assessments. However, the observed (partial or total) lack of information about the assessment rationale implies that the experiments described in the reviewed papers cannot be repeated (or comprehensively checked) by scholars. Indeed, as highlighted by Brown (2014) and confirmed by our recent works (Fiorineschi et al. 2020a,b), the identification of the functions and/or attributes of the assessed ideas and the definition of the related weights are highly subjective.

Furthermore, it was a disappointing surprise to see that interrater agreement tests are often not performed or even mentioned at all. Due to the previously mentioned issue of subjectivity (which characterises any creativity assessment approach), it is always necessary to involve multiple evaluators (at least 2) and then to carefully check the robustness of the obtained scores. Fortunately, comprehensive interrater agreement approaches (e.g., Cronbach 1951; Cohen 1960; Hayes & Krippendorff 2007) were used in some of the reviewed works (e.g., Shah et al. 2003b; Kurtoglu et al. 2009).
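As an illustration of what such a check involves, the following is a minimal sketch of Cohen's kappa for two evaluators who independently assign a nominal label to each idea. The function name, the labels and the sample data are hypothetical. The choice of kappa over other agreement coefficients is only an assumption made for the example and not a recommendation drawn from the reviewed works.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: fraction of items that received identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: computed from each rater's marginal label frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical coding of six ideas by two evaluators for one attribute.
rater_1 = ["wheel", "wheel", "track", "leg", "wheel", "track"]
rater_2 = ["wheel", "track", "track", "leg", "wheel", "wheel"]
kappa = cohen_kappa(rater_1, rater_2)
print(round(kappa, 3))
```

Here the two evaluators agree on four of the six ideas, but part of that agreement would be expected by chance, so kappa is noticeably lower than the raw agreement rate. Reporting such a coefficient together with the coding procedure lets readers judge how robust the resulting novelty scores are.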

Another important flaw detected in the reviewed documents that use the original SVS approaches is the lack of comprehensive descriptions about the actual type of novelty that the investigation is expected to assess. In our recent review of novelty metrics, we identified a map to orient among the variety of different metrics (Fiorineschi & Rotini 2021), and one of the most important parameters is the type of novelty, intended as historical novelty or psychological novelty. The two SVS novelty assessment approaches belong to two distinct types of novelty but implement the same concept of novelty (uncommonness or unexpectedness). Any comprehensive work using the SVS metrics should demonstrate that their use is actually compatible with the expected measures.

5.2. Implications

The present work provides a clear picture of the actual usage of the SVS novelty assessment approaches, making it possible to understand the main flaws that characterise most of the published works. Implications from the observations extracted for each research question (see Table 5) are summarised in Table 6.

Table 6. Implications of the results presented in Table 5

The implications expressed in Table 6 can be reformulated by better focusing on both readers and external agents. In the bullet list reported below, we try to do so by referring to scholars, teachers, reviewers, PhD supervisors and industrial practitioners:

• Implications for researchers who use the metrics and for paper reviewers:

The implication derived from the findings related to Q6 deserves particular attention. Indeed, these findings imply that most of the reviewed works actually do not follow a robust assessment procedure. It is acknowledged that novelty assessment is necessarily performed by means of subjective interpretations of the assessed design or idea, and this also applies to the SVS metrics. Therefore, any assessment that fails to perform an IRR test among the evaluators cannot be considered scientifically valid.

Additional implications can be extracted from the findings pertaining to Q7. Indeed, the evidence shows that most of the research works do not provide the information required to allow the repetition of the assessment with the same data. To increase the ability to perform comparable experiments, it is necessary to allow the reader to understand any detail about how the experiment has been conducted. First, the assigned design or idea generation task should be carefully described, as should the method used to administer it to the sample (or samples) of participants. In addition, it is important to carefully describe the considered sample of participants in terms of knowledge background, design experience, and, of course, gender, ethnographic and age distributions. Additionally, it is essential to carefully provide in-depth information about the experimental procedure. More specifically, it is crucial to indicate the time chosen to introduce the task, the time allotted to perform it and (if any) the presence of possible pauses (e.g., to enable incubation). Finally, it is necessary to provide indications about how participants worked, for example, whether they worked alone or in groups, whether they worked in a single design session or in subsequent design sessions, whether they worked on a single task or on multiple tasks, etc.

It is also important to provide detailed information about the postprocessing activity (i.e., the novelty assessment for the scope of this work). More specifically, it is crucial to provide information about the evaluators, their expertise and the related background. The assessment procedure also needs to be carefully described in detail. In particular, it is important to mention and describe prealignment sessions among raters, as well as the procedure that each of them followed to identify the attributes from the ideas to be assessed.

Without the information described above, any research work where designs or ideas are assessed in terms of novelty (as is the case for any creativity-related assessment) cannot be considered as a reference.

Generally, these considerations can be extended to each of the acknowledged novelty assessment approaches.

• Implications for teachers:

What emerged in this paper is the need for a better understanding of the available concepts and types of novelty. Teaching creativity in design contexts should not neglect how to measure it. In this sense, due to the plethora of different metrics and assessment procedures, it is important that future designers or scholars start their professional lives with a sound understanding of ‘what can be measured’. Indeed, a clear understanding of the novelty concepts and types to be measured will surely help in selecting the most suitable way to assess novelty and then to assess creativity.

• Implications for PhD supervisors:

The need for more comprehensive descriptions expressed in the previous points should constitute a reference for PhD supervisors. Indeed, PhD students are often expected to perform experimental activities and then to apply (when needed) novelty metrics. First, it is necessary to identify ‘what’ is intended to be assessed. As demonstrated by the flaws highlighted in this paper, this is not a trivial task, and the aim is to clarify both the novelty type and the novelty concept (Fiorineschi & Rotini 2021). Then, before starting any experimental activity, it is important to plan it by considering the parameters required by the assessment procedure (depending on the specific metric). Finally, each experimental step must be appropriately archived and described to allow a comprehensive description of the adopted procedure. It is fundamental to allow reviewers to evaluate the robustness and the correctness of the experiment, as well as to allow scholars to repeat it in comparable boundary conditions.

• Implications for future development of novelty metrics:

A particular criticality observed among the reviewed works concerns the presence of papers that cite SVS but use specifically developed metrics. The criticism is based on the observed absence of sound motivations or justifications about the actual need to use or to propose an alternative metric. As already highlighted in this paper, it is not our intention to denigrate any work, but it is important to provide a clear message for future works. More specifically, it is necessary to stem the trend of creating ‘our own’ metrics to be used in place of already published ones without providing any reasonable motivations and, more importantly, without any proof about the actual improvement provided in relation to existing metrics. Indeed, the field of novelty metrics is already very populated by different alternatives, and it is already quite difficult to navigate them. We are aware of this challenge because we personally tried to review novelty metrics to support our selection (Fiorineschi et al. 2019b; Fiorineschi & Rotini 2020, 2021). This does not mean that new metrics proposals or improvements should be avoided. This would be a hypocritical suggestion from us, since we have also proposed new metrics (Fiorineschi, Frillici & Rotini 2019a, 2021). What is important for future works that deal with novelty assessment is to use metrics that have been tested (in previous works or in the same work) and to comprehensively describe the motivation for the choice. In particular, it is necessary to ensure that the selected metric is compatible with the novelty type and the novelty concept that are expected to be measured according to the experimental objectives.

• Implications for design practice:

Indirectly, this work could also help in design practice. Indeed, the improved understanding of metrics that this paper provides is intended to support both better novelty measurements and more efficient method selection. More precisely, the future possibility of improving the selection and arrangement of ideation methods will ultimately help develop better products.

5.3. Limitations and research developments

The work suffers from two main limitations, which have been partially highlighted in the previous sections. First, the analysis is limited to the citations to the work of Shah et al. (2003a) as of March 2021, and second, we were unable to retrieve a nonnegligible number of documents (see Appendix Table A1). Indeed, our institution did not provide access to all contributions, and we tried to retrieve as many of these as possible by directly asking for a free copy from the authors. Nevertheless, scholars could take inspiration from this work to perform similar analyses on other well-known assessment approaches (e.g., Hennessey et al. 2011; Sarkar & Chakrabarti 2011). With additional works such as this one, it may be possible to generate a clear map of the actual usage of the creativity assessment approaches used in the field of design creativity research.

Concerning the considered research questions, as mentioned above, we have identified the seven questions introduced in Section 2, which arise from our experience with in-depth (theoretical and practical) studies on novelty. We are therefore conscious that this work may not encompass all the doubts and questions about the argument.

An important limitation also concerns the missing analysis of the alternative metrics used in place of the SVS approaches. We believe that this kind of analysis can be considered feasible for future research, for which the list of documents reported in Table 3 could pave the way. The expected outcome from this possible extension of the work is a complete list and analysis of the whole set of metrics somehow inspired by or based on the SVS metrics. For that purpose, the list of metrics summarised in Table 3 could be integrated with other contributions (identified by following a different approach) that were already reviewed in our previous works (Fiorineschi et al. 2019b; Fiorineschi & Rotini 2019).

A further limitation of this work concerns the absence of any comprehensive analysis or discussion about the works that, even if not using the metrics, provide important considerations about the pros and cons of the SVS approach. However, we intentionally avoided this kind of analysis to avoid overlap with our recently published works that are focused on this kind of investigation (Fiorineschi et al. 2018a, 2020a,b, 2021), where the reader can find an updated set of related information.

5.4. Expected impact

The main impact expected from this work is upon academic research on design creativity and can be summarised as follows:

• Help scholars understand the application field of the SVS novelty assessment approach.

• Promote comprehensive assessments through the correct application of the metrics by at least two evaluators, whose scores should always be checked by an IRR test.

• Invite scholars to provide sufficient information about the assessment rationale that has been followed for the application of the selected novelty metric.

• Describe the need for clear indications about the considered novelty type that the selected metric intends to measure (i.e., historical novelty or psychological novelty).

Generally, it is expected that this work could shed further light on the field of design creativity research by focusing on the novelty assessment of generated ideas. In particular, the criticalities highlighted in this work are expected to push scholars towards a more responsible use of metrics and to follow robust assessment procedures. This is fundamental to improving the scientific value of novelty-related studies about design and design methods and tools.

Unfortunately, it is not possible to formulate any ‘short-term’ expectation from the industrial point of view. Indeed, this work highlights that the use of the metrics is limited to academic fields (at least according to what is claimed in the analysed contributions). Therefore, a question may arise: ‘Are novelty metrics from academia useful for the industrial field?’

However, to answer this question, at least two other issues should be considered:

• ‘Are industrial practitioners sufficiently aware of the presence of novelty assessment procedures?’

• ‘Are industrial practitioners conscious of the potential information that can be extracted by the application of novelty and other creativity-related metrics?’

Therefore, even if a direct impact cannot be expected for industry, this work can push scholars to bridge the gap that currently exists between academia and industry in relation to the topic.

6. Conclusions

The main focus of this work is to shed light on the actual use of the two novelty assessment procedures presented by Shah et al. (2003a,b). For that purpose, we formulated seven research questions whose answers have been generated through the analysis of the literature contributions that cite the work of Shah et al. (2003a,b). We found that even if scholars actually use the SVS novelty assessment approaches, the citations to the work of Shah et al. (2003a,b) often do not refer to novelty assessment. In addition, scholars frequently use a different version of the SVS metrics without providing explicit motivations behind this need. However, the most important issue highlighted in this work is the almost total lack of any reference to the type of novelty (historical or psychological) that scholars intend to assess when they use SVS metrics. This kind of information is fundamental and allows the reader to understand whether the selected metrics are suitable to obtain the expected results. Furthermore, the results highlight that, except for a few cases, the information provided in the reviewed works is not sufficient to repeat the experiments and/or the assessments in comparable conditions. Indeed, it emerged that only a few works provide a comprehensive description of the assessment rationale and apply interrater agreement evaluations. This is a critical aspect that should be considered by scholars for improving future literature contributions where novelty assessments are involved. As a further result, the methodology applied in this work can be reused by scholars to perform similar analyses on other metrics, not only limited to the field of creativity assessment. Indeed, several metrics exist in the literature (e.g., the quality of a design, modularity level of a system, sustainability of products and processes, etc.), and similar to what occurs in creativity assessment, comprehensive selection guidelines are still missing. Overall, the expected impact of this work is to help scholars perform novelty assessments with improved scientific value. Eventually, studies such as this can contribute to the discussion about how to use metrics in different branches of engineering design and research.

Acknowledgments

This work was supported by the Open Access Publishing Fund of the University of Florence.

Appendix

A.1. List of the articles for which it was impossible to retrieve the document in this literature review

Table A1. Not retrieved articles