I. INTRODUCTION

Signal-processing research nowadays has a significantly wider scope than it had just a few years ago. It now encompasses many broad areas of information processing, from low-level signals to higher-level, human-centric semantic information [Reference Deng2]. Since 2006, deep learning, which is more recently also referred to as representation learning, has emerged as a new area of machine learning research [Reference Hinton, Osindero and Teh3–Reference Bengio, Courville and Vincent5]. Within the past few years, the techniques developed from deep learning research have already been impacting a wide range of signal- and information-processing work within both the traditional and the new, widened scopes, including machine learning and artificial intelligence [Reference Deng1, Reference Bengio, Courville and Vincent5–Reference Arel, Rose and Karnowski8]; see the recent New York Times coverage of this progress in [Reference Markoff9]. A series of workshops, tutorials, and special issues or conference special sessions have been devoted exclusively to deep learning and its applications to various classical and expanded signal-processing areas. These include: the 2013 International Conference on Learning Representations, the 2013 ICASSP special session on New Types of Deep Neural Network Learning for Speech Recognition and Related Applications, the 2013 ICML Workshop for Audio, Speech, and Language Processing, the 2013, 2012, 2011, and 2010 NIPS Workshops on Deep Learning and Unsupervised Feature Learning, the 2013 ICML Workshop on Representation Learning Challenges, the 2012 ICML Workshop on Representation Learning, the 2011 ICML Workshop on Learning Architectures, Representations, and Optimization for Speech and Visual Information Processing, the 2009 ICML Workshop on Learning Feature Hierarchies, the 2009 NIPS Workshop on Deep Learning for Speech Recognition and Related Applications, the 2012 ICASSP deep learning tutorial, the special section on Deep Learning for Speech and Language Processing in IEEE Trans. Audio, Speech, and Language Processing (January 2012), and the special issue on Learning Deep Architectures in IEEE Trans. Pattern Analysis and Machine Intelligence (2013). The author has been directly involved in the research and in organizing several of the events and editorials above, and has seen the emerging nature of the field; hence the need for a tutorial survey article here.

Deep learning refers to a class of machine learning techniques in which many layers of information-processing stages in hierarchical architectures are exploited for pattern classification and for feature or representation learning. It lies at the intersection of the research areas of neural networks, graphical modeling, optimization, pattern recognition, and signal processing. Three important reasons for the popularity of deep learning today are drastically increased chip processing abilities (e.g., GPUs), the significantly lowered cost of computing hardware, and recent advances in machine learning and signal/information-processing research. Active researchers in this area include those at University of Toronto, New York University, University of Montreal, Microsoft Research, Google, IBM Research, Baidu, Facebook, Stanford University, University of Michigan, MIT, University of Washington, and numerous other places. These researchers have demonstrated successes of deep learning in diverse applications including computer vision, phonetic recognition, voice search, conversational speech recognition, speech and image feature coding, semantic utterance classification, handwriting recognition, audio processing, visual object recognition, information retrieval, and even the analysis of molecules that may lead to discovering new drugs, as reported recently in [Reference Markoff9].

This paper expands my recent overview material on the same topic as presented in the plenary overview session of APSIPA-ASC2011 as well as the tutorial material presented in the same conference [Reference Deng1]. It aims to introduce the readers of the APSIPA Transactions to the emerging technologies enabled by deep learning. I attempt to provide a tutorial review of the research work conducted in this exciting area since the birth of deep learning in 2006 that has direct relevance to signal and information processing. Future research directions are discussed to attract interest from more APSIPA researchers, students, and practitioners in advancing signal and information-processing technology, the core mission of the APSIPA community.

The remainder of this paper is organized as follows:

• Section II: A brief historical account of deep learning is provided from the perspective of signal and information processing.

• Section III: A three-way classification scheme for a large body of the work in deep learning is developed. A growing number of deep architectures are classified into: (1) generative, (2) discriminative, and (3) hybrid categories, and high-level descriptions are provided for each category.

• Sections IV–VI: For each of the three categories, a tutorial example is chosen to provide more detailed treatment. The examples chosen are: (1) deep autoencoders for the generative category (Section IV); (2) DNNs pretrained with DBN for the hybrid category (Section V); and (3) deep stacking networks (DSNs) and a related special version of recurrent neural networks (RNNs) for the discriminative category (Section VI).

• Section VII: A set of typical and successful applications of deep learning in diverse areas of signal and information processing is reviewed.

• Section VIII: A summary and future directions are given.

II. A BRIEF HISTORICAL ACCOUNT OF DEEP LEARNING

Until recently, most machine learning and signal-processing techniques had exploited shallow-structured architectures. These architectures typically contain a single layer of non-linear feature transformations and lack multiple layers of adaptive non-linear features. Examples of shallow architectures are the conventional, commonly used Gaussian mixture models (GMMs) and hidden Markov models (HMMs), linear or non-linear dynamical systems, conditional random fields (CRFs), maximum entropy (MaxEnt) models, support vector machines (SVMs), logistic regression, kernel regression, and multi-layer perceptron (MLP) neural networks with a single hidden layer, including the extreme learning machine. A property common to these shallow learning models is a relatively simple architecture that consists of only one layer responsible for transforming the raw input signals or features into a problem-specific feature space, which may be unobservable. Take the example of SVMs and other conventional kernel methods. They use a shallow linear pattern separation model with one feature transformation layer when the kernel trick is used, and with none otherwise. (Notable exceptions are the recent kernel methods that have been inspired by and integrated with deep learning; e.g., [Reference Cho and Saul10–Reference Vinyals, Jia, Deng and Darrell12].) Shallow architectures have been shown to be effective in solving many simple or well-constrained problems, but their limited modeling and representational power can cause difficulties when dealing with more complicated real-world applications involving natural signals such as human speech, natural sound and language, and natural image and visual scenes.

Human information-processing mechanisms (e.g., vision and speech), however, suggest the need for deep architectures for extracting complex structure and building internal representations from rich sensory inputs. For example, human speech production and perception systems are both equipped with clearly layered hierarchical structures in transforming the information from the waveform level to the linguistic level [Reference Baker13–Reference Deng16]. In a similar vein, the human visual system is also hierarchical in nature, mostly on the perception side but interestingly also on the “generative” side [Reference George17–Reference Poggio, Jacovitt, Pettorossi, Consolo and Senni19]. It is natural to believe that the state of the art can be advanced in processing these types of natural signals if efficient and effective deep learning algorithms are developed. Information-processing and learning systems with deep architectures are composed of many layers of non-linear processing stages, where each lower layer's outputs are fed to its immediate higher layer as the input. The successful deep learning techniques developed so far share two additional key properties: the generative nature of the model, which typically requires adding a top layer to perform discriminative tasks, and an unsupervised pretraining step that makes effective use of large amounts of unlabeled training data for extracting structures and regularities in the input features.

Historically, the concept of deep learning originated from artificial neural network research. (Hence, one may occasionally hear the discussion of “new-generation neural networks”.) Feed-forward neural networks or MLPs with many hidden layers are indeed a good example of models with a deep architecture. Backpropagation, popularized in the 1980s, has been a well-known algorithm for learning the weights of these networks. Unfortunately, backpropagation alone did not work well in practice for learning networks with more than a small number of hidden layers (see a review and analysis in [Reference Bengio4, Reference Glorot and Bengio20]). The pervasive presence of local optima in the non-convex objective function of the deep networks is the main source of difficulty in the learning. Backpropagation is based on local gradient descent and usually starts at some random initial point. It often gets trapped in poor local optima, and the severity increases significantly as the depth of the networks increases. This difficulty is partially responsible for steering most of the machine learning and signal-processing research away from neural networks toward shallow models that have convex loss functions (e.g., SVMs, CRFs, and MaxEnt models), for which the global optimum can be efficiently obtained at the cost of less powerful models.
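The dependence of local gradient descent on its starting point can be illustrated with a minimal sketch. The one-dimensional objective below is a hypothetical toy function chosen only because it has two minima of different depth; it is not a neural network loss, and the step size and iteration count are likewise illustrative assumptions.

```python
def f(x):
    # Hypothetical non-convex toy objective with two minima of different depth.
    return x**4 - 3.0 * x**2 + x


def grad_f(x):
    # Analytic derivative of f.
    return 4.0 * x**3 - 6.0 * x + 1.0


def gradient_descent(x0, lr=0.01, steps=2000):
    # Plain gradient descent from the starting point x0.
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


# Two starting points on opposite sides of the local maximum.
x_left = gradient_descent(-2.0)
x_right = gradient_descent(2.0)
print("start -2.0 -> x = %.3f, f(x) = %.3f" % (x_left, f(x_left)))
print("start +2.0 -> x = %.3f, f(x) = %.3f" % (x_right, f(x_right)))
```

Both runs end at a stationary point, but the run started on the right settles in the shallower minimum. In a deep network the same effect arises in a space of very high dimension, where the starting point is a random weight configuration.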

The optimization difficulty associated with the deep models was empirically alleviated when a reasonably efficient, unsupervised learning algorithm was introduced in the two papers of [Reference Hinton, Osindero and Teh3, Reference Hinton and Salakhutdinov21]. In these papers, a class of deep generative models was introduced, called deep belief network (DBN), which is composed of a stack of restricted Boltzmann machines (RBMs). A core component of the DBN is a greedy, layer-by-layer learning algorithm, which optimizes DBN weights at a time complexity linear in the size and depth of the networks. Separately and with some surprise, initializing the weights of an MLP with a correspondingly configured DBN often produces much better results than initializing with random weights. As such, MLPs with many hidden layers, or deep neural networks (DNNs), which are learned with unsupervised DBN pretraining followed by backpropagation fine-tuning, are sometimes also called DBNs in the literature (e.g., [Reference Dahl, Yu, Deng and Acero22–Reference Mohamed, Dahl and Hinton24]). More recently, researchers have been more careful in distinguishing DNN from DBN [Reference Hinton6, Reference Dahl, Yu, Deng and Acero25], and when a DBN is used to initialize the training of a DNN, the resulting network is called DBN–DNN [Reference Hinton6].

In addition to supplying good initialization points, the DBN comes with additional attractive features. First, the learning algorithm makes effective use of unlabeled data. Second, it can be interpreted as a Bayesian probabilistic generative model. Third, the values of the hidden variables in the deepest layer are efficient to compute. And fourth, the overfitting problem, which is often observed in models with millions of parameters such as DBNs, and the under-fitting problem, which occurs often in deep networks, can both be effectively addressed by the generative pretraining step. An insightful analysis of what speech information DBNs can capture is provided in [Reference Mohamed, Hinton and Penn26].

The DBN-training procedure is not the only one that makes effective training of DNNs possible. Since the publication of the seminal work [Reference Hinton, Osindero and Teh3, Reference Hinton and Salakhutdinov21], a number of other researchers have been improving and applying the deep learning techniques with success. For example, one can alternatively pretrain DNNs layer by layer by considering each pair of layers as a denoising autoencoder regularized by setting a subset of the inputs to zero [Reference Bengio4, Reference Vincent, Larochelle, Lajoie, Bengio and Manzagol27]. Also, “contractive” autoencoders can be used for the same purpose by regularizing via penalizing the gradient of the activities of the hidden units with respect to the inputs [Reference Rifai, Vincent, Muller, Glorot and Bengio28]. Further, Ranzato et al. [Reference Ranzato, Boureau and LeCun29] developed the sparse encoding symmetric machine (SESM), which has a very similar architecture to RBMs as building blocks of a DBN. In principle, SESM may also be used to effectively initialize the DNN training.

Historically, the use of the generative model of DBN to facilitate the training of DNNs played an important role in igniting interest in deep learning for speech feature coding and for speech recognition [Reference Hinton6, Reference Dahl, Yu, Deng and Acero22, Reference Dahl, Yu, Deng and Acero25, Reference Deng, Seltzer, Yu, Acero, Mohamed and Hinton30]. After this effectiveness was demonstrated, further research showed many alternative but simpler ways of doing pretraining. With a large amount of training data, we now know how to learn a DNN by starting with a shallow neural network (i.e., with one hidden layer). After this shallow network has been trained discriminatively, a new hidden layer is inserted between the previous hidden layer and the softmax output layer, and the full network is again discriminatively trained. One can continue this process until the desired number of hidden layers is reached in the DNN. Finally, full backpropagation fine-tuning is carried out to complete the DNN training. With more training data and with more careful weight initialization, the above process of discriminative pretraining can also be omitted without sacrificing effective DNN training.
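The layer-growing schedule described above can be sketched as a control-flow skeleton. Everything named here is hypothetical: `init_layer`, `grow_dnn`, and the `train_fn` callback (which stands in for a real backpropagation trainer) are illustrative, and re-initializing the softmax layer after each insertion is an assumption, since the text does not specify it.

```python
import random


def init_layer(n_in, n_out, rng):
    # Hypothetical helper: small random weights for one fully connected layer.
    return [[rng.gauss(0.0, 0.01) for _ in range(n_out)] for _ in range(n_in)]


def grow_dnn(n_input, n_hidden, n_classes, n_hidden_layers, train_fn, seed=0):
    # train_fn(hidden, output, stage) is a placeholder for discriminative
    # (backpropagation) training of the whole current network.
    rng = random.Random(seed)
    hidden = [init_layer(n_input, n_hidden, rng)]
    output = init_layer(n_hidden, n_classes, rng)
    train_fn(hidden, output, "pretrain")  # shallow network, one hidden layer
    while len(hidden) < n_hidden_layers:
        # Insert a new hidden layer between the last hidden layer and softmax.
        hidden.append(init_layer(n_hidden, n_hidden, rng))
        # Assumption: the softmax layer is re-initialized after each insertion.
        output = init_layer(n_hidden, n_classes, rng)
        train_fn(hidden, output, "pretrain")
    train_fn(hidden, output, "fine-tune")  # final full backpropagation pass
    return hidden, output


# Usage with a stand-in trainer that only records the schedule.
calls = []


def record_only(hidden, output, stage):
    calls.append((len(hidden), stage))


hidden, output = grow_dnn(8, 4, 3, n_hidden_layers=3, train_fn=record_only)
print(calls)
```

The recorded schedule shows one training pass per network depth followed by a single fine-tuning pass over the full network.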

In the next section, an overview is provided on the various architectures of deep learning, including and beyond the original DBN published in [Reference Hinton, Osindero and Teh3].

III. THREE BROAD CLASSES OF DEEP ARCHITECTURES: AN OVERVIEW

As described earlier, deep learning refers to a rather wide class of machine learning techniques and architectures, with the hallmark of using many layers of non-linear information-processing stages that are hierarchical in nature. Depending on how the architectures and techniques are intended for use, e.g., synthesis/generation or recognition/classification, one can broadly categorize most of the work in this area into three main classes:

(1) Generative deep architectures, which are intended to characterize the high-order correlation properties of the observed or visible data for pattern analysis or synthesis purposes, and/or characterize the joint statistical distributions of the visible data and their associated classes. In the latter case, the use of Bayes rule can turn this type of architecture into a discriminative one.

(2) Discriminative deep architectures, which are intended to directly provide discriminative power for pattern classification, often by characterizing the posterior distributions of classes conditioned on the visible data; and

(3) Hybrid deep architectures, where the goal is discrimination but is assisted (often in a significant way) with the outcomes of generative architectures via better optimization or/and regularization, or when discriminative criteria are used to learn the parameters in any of the deep generative models in category (1) above.

Note the use of “hybrid” in (3) above is different from that used sometimes in the literature, which refers to the hybrid pipeline systems for speech recognition feeding the output probabilities of a neural network into an HMM [Reference Bengio, De Mori, Flammia and Kompe31–Reference Morgan33].
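The remark under category (1), that Bayes rule can turn a generative model of the joint distribution into a discriminative one, can be made concrete with a minimal sketch. The two-class model with one-dimensional Gaussian class-conditional densities, and the names `PRIORS`, `PARAMS`, and `posterior`, are hypothetical and chosen only for illustration; a deep generative architecture would supply a far richer p(x | c).

```python
import math


def gaussian_pdf(x, mean, std):
    # Density of a one-dimensional Gaussian.
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical generative model: class priors p(c) and
# class-conditional densities p(x | c) given as (mean, std).
PRIORS = {"a": 0.5, "b": 0.5}
PARAMS = {"a": (-1.0, 1.0), "b": (1.0, 1.0)}


def posterior(x):
    # Bayes rule: p(c | x) = p(x | c) p(c) / sum over c' of p(x | c') p(c').
    joint = {c: PRIORS[c] * gaussian_pdf(x, *PARAMS[c]) for c in PRIORS}
    evidence = sum(joint.values())
    return {c: joint[c] / evidence for c in joint}


post = posterior(0.8)
print(post)
```

Classification then amounts to picking the class with the largest posterior, even though the model itself was specified purely generatively.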

By machine learning tradition (e.g., [Reference Deng and Li34]), it may be natural to use a two-way classification scheme according to discriminative learning (e.g., neural networks) versus deep probabilistic generative learning (e.g., DBN, DBM, etc.). This classification scheme, however, misses a key insight gained in deep learning research about how generative models can greatly improve learning DNNs and other deep discriminative models via better optimization and regularization. Also, deep generative models may not necessarily need to be probabilistic; e.g., the deep autoencoder. Nevertheless, the two-way classification points to important differences between DNNs and deep probabilistic models. The former is usually more efficient for training and testing, more flexible in its construction, less constrained (e.g., no normalization by the difficult partition function, which can be replaced by sparsity), and is more suitable for end-to-end learning of complex systems (e.g., no approximate inference and learning). The latter, on the other hand, is easier to interpret and to embed domain knowledge, is easier to compose and to handle uncertainty, but is typically intractable in inference and learning for complex systems. This distinction, however, is retained also in the proposed three-way classification, which is adopted throughout this paper.

Below we briefly review representative work in each of the above three classes, where several basic definitions will be used as summarized in Table 1. Applications of these deep architectures are deferred to Section VII.

Table 1. Some basic deep learning terminologies.

A) Generative architectures

Associated with this generative category, we often see “unsupervised feature learning”, since the labels for the data are not of concern. When generative architectures are applied to pattern recognition (i.e., supervised learning), a key concept is (unsupervised) pretraining. This concept arises from the need to learn deep networks when learning the lower levels of such networks is difficult, especially when training data are limited. Therefore, it is desirable to learn each lower layer without relying on all the layers above and to learn all layers in a greedy, layer-by-layer manner from the bottom up. This is the gist of “pretraining” before subsequent learning of all layers together.

Among the various subclasses of generative deep architecture, the energy-based deep models including autoencoders are the most common (e.g., [Reference Bengio4, Reference LeCun, Chopra, Ranzato and Huang35–Reference Ngiam, Chen, Koh and Ng38]). The original form of the deep autoencoder [Reference Hinton and Salakhutdinov21, Reference Deng, Seltzer, Yu, Acero, Mohamed and Hinton30], which we will give more detail about in Section IV, is a typical example in the generative model category. Most other forms of deep autoencoders are also generative in nature, but with quite different properties and implementations. Examples are transforming autoencoders [Reference Hinton, Krizhevsky and Wang39], predictive sparse coders and their stacked version, and denoising autoencoders and their stacked versions [Reference Vincent, Larochelle, Lajoie, Bengio and Manzagol27].

Specifically, in denoising autoencoders, the input vectors are first corrupted, e.g., by randomly selecting a percentage of the inputs and setting them to zero. The hidden encoding nodes are then trained to reconstruct the original, uncorrupted input data using criteria such as the KL distance between the original inputs and the reconstructed inputs. The encoded representations of the uncorrupted inputs are used as the inputs to the next level of the stacked denoising autoencoder.
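The corruption step and the reconstruction criterion can be sketched as follows. This is a minimal illustration rather than a full autoencoder: no encoder or decoder is trained, the helper names `corrupt` and `cross_entropy` are hypothetical, and cross-entropy is used as an assumed stand-in for the KL criterion (for inputs in [0, 1] the two differ only by a term that does not depend on the reconstruction).

```python
import math
import random


def corrupt(inputs, drop_fraction, rng):
    # Masking noise: set a randomly chosen fraction of the inputs to zero.
    n_drop = int(round(drop_fraction * len(inputs)))
    dropped = set(rng.sample(range(len(inputs)), n_drop))
    return [0.0 if i in dropped else v for i, v in enumerate(inputs)]


def cross_entropy(target, reconstruction, eps=1e-12):
    # Reconstruction loss for values in [0, 1]; the target is always the
    # ORIGINAL input, never the corrupted one.
    return -sum(t * math.log(r + eps) + (1.0 - t) * math.log(1.0 - r + eps)
                for t, r in zip(target, reconstruction))


rng = random.Random(0)
clean = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.95]
noisy = corrupt(clean, 0.3, rng)  # this is what the encoder would see

# A perfect reconstruction scores better than simply echoing the noisy input.
print(cross_entropy(clean, clean), cross_entropy(clean, noisy))
```

The key design point is the asymmetry: the network sees `noisy` but is scored against `clean`, so it cannot succeed by learning the identity mapping.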

Another prominent type of generative model is the deep Boltzmann machine or DBM [Reference Salakhutdinov and Hinton40–Reference Srivastava and Salakhutdinov42]. A DBM contains many layers of hidden variables, and has no connections between the variables within the same layer. This is a special case of the general Boltzmann machine (BM), which is a network of symmetrically connected units that make stochastic decisions about whether to be on or off. Although they have a very simple learning algorithm, general BMs are very complex to study and computationally very slow in learning. In a DBM, each layer captures complicated, higher-order correlations between the activities of hidden features in the layer below. DBMs have the potential of learning internal representations that become increasingly complex, which is highly desirable for solving object and speech recognition problems. Furthermore, the high-level representations can be built from a large supply of unlabeled sensory inputs, and very limited labeled data can then be used to only slightly fine-tune the model for the specific task at hand.

When the number of hidden layers of DBM is reduced to one, we have RBM. Like DBM, there are no hidden-to-hidden and no visible-to-visible connections. The main virtue of RBM is that via composing many RBMs, many hidden layers can be learned efficiently using the feature activations of one RBM as the training data for the next. Such composition leads to DBN, which we will describe in more detail, together with RBMs, in Section V.
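The composition of RBMs can be sketched in a few lines. The sketch below assumes binary units and a one-step contrastive divergence (CD-1) update, which is a common choice for training RBMs but is an assumption here, since this section does not name the training rule; the class `RBM`, the function `train_stack`, and all hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


class RBM:
    # Binary RBM: visible-hidden weights only, no within-layer connections.
    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b))]

    def cd1_update(self, v0, lr):
        # One step of contrastive divergence (assumed training rule).
        h0 = self.hidden_probs(v0)
        h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        for i in range(len(self.b)):
            for j in range(len(self.c)):
                self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(len(self.c)):
            self.c[j] += lr * (h0[j] - h1[j])


def train_stack(data, hidden_sizes, epochs=50, lr=0.1, seed=0):
    # Greedy layer-by-layer training: the feature activations of one RBM
    # become the training data for the next.
    rng = random.Random(seed)
    rbms, layer_input = [], data
    for n_hidden in hidden_sizes:
        rbm = RBM(len(layer_input[0]), n_hidden, rng)
        for _ in range(epochs):
            for v in layer_input:
                rbm.cd1_update(v, lr)
        rbms.append(rbm)
        layer_input = [rbm.hidden_probs(v) for v in layer_input]
    return rbms


data = [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
rbms = train_stack(data, hidden_sizes=[3, 2])
top = rbms[1].hidden_probs(rbms[0].hidden_probs(data[0]))
print(top)
```

Each RBM is trained in isolation, so the cost of adding a layer does not depend on the layers above it.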

The standard DBN has been extended to use the factored higher-order BM in its bottom layer, with strong results obtained for phone recognition [Reference Dahl, Ranzato, Mohamed and Hinton43]. This model, called mean-covariance RBM or mcRBM, addresses the limitation of the standard RBM in its ability to represent the covariance structure of the data. However, it is very difficult to train the mcRBM and to use it at the higher levels of the deep architecture. Furthermore, the strong results published are not easy to reproduce. In the architecture of [Reference Dahl, Ranzato, Mohamed and Hinton43], the mcRBM parameters in the full DBN are not as easy to fine-tune using the discriminative information as those of the regular RBMs in the higher layers. However, recent work showed that when better features are used, e.g., cepstral speech features subject to linear discriminant analysis or to fMLLR transformation, the mcRBM is not needed, as the covariance in the transformed data is already modeled [Reference Mohamed, Hinton and Penn26].

Another representative deep generative architecture is the sum-product network or SPN [Reference Poon and Domingos44, Reference Gens and Domingo45]. An SPN is a directed acyclic graph with the data as leaves, and with sum and product operations as internal nodes in the deep architecture. The “sum” nodes give mixture models, and the “product” nodes build up the feature hierarchy. Properties of “completeness” and “consistency” constrain the SPN in a desirable way. The learning of the SPN is carried out using the EM algorithm together with backpropagation. The learning procedure starts with a dense SPN. It then finds an SPN structure by learning its weights, where zero weights remove the connections. The main difficulty in learning is found to be the common one: the learning signal (i.e., the gradient) quickly dilutes when it propagates to deep layers. Empirical solutions that mitigate this difficulty were reported in [Reference Poon and Domingos44], where it was also pointed out that despite the many desirable generative properties of the SPN, it is difficult to fine-tune its weights using the discriminative information, which limits its effectiveness in classification tasks. This difficulty has been overcome in the subsequent work reported in [Reference Gens and Domingo45], where an efficient backpropagation-style discriminative training algorithm for SPNs was presented. It was pointed out that standard gradient descent, computed from the derivative of the conditional likelihood, suffers from the same gradient diffusion problem that is well known for regular deep networks. But when marginal inference is replaced by inferring the most probable state of the hidden variables, such a “hard” gradient descent can reliably estimate the weights of deep SPNs. Excellent results on (small-scale) image recognition tasks are reported.
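The roles of the sum and product nodes can be seen in a minimal sketch of bottom-up SPN evaluation. The network below is a hypothetical two-variable example with hand-set weights (no learning is shown), it is tree-shaped rather than a general directed acyclic graph, and the class names `Leaf`, `Sum`, and `Product` are illustrative.

```python
class Leaf:
    # Indicator leaf for one value of one binary variable.
    def __init__(self, var, value):
        self.var, self.value = var, value

    def evaluate(self, evidence):
        # An unobserved variable has all its indicators set to 1,
        # which marginalizes it out.
        observed = evidence.get(self.var)
        return 1.0 if observed is None or observed == self.value else 0.0


class Sum:
    # Weighted sum node: a mixture over children with the same scope.
    def __init__(self, weighted_children):
        self.weighted_children = weighted_children

    def evaluate(self, evidence):
        return sum(w * child.evaluate(evidence)
                   for w, child in self.weighted_children)


class Product:
    # Product node: combines children defined over disjoint variables.
    def __init__(self, children):
        self.children = children

    def evaluate(self, evidence):
        result = 1.0
        for child in self.children:
            result *= child.evaluate(evidence)
        return result


def bernoulli(var, p_true):
    # Sum node over the two indicators of a binary variable.
    return Sum([(p_true, Leaf(var, 1)), (1.0 - p_true, Leaf(var, 0))])


# Hypothetical SPN: a mixture (root sum) of two factorized components.
spn = Sum([
    (0.7, Product([bernoulli("x1", 0.9), bernoulli("x2", 0.2)])),
    (0.3, Product([bernoulli("x1", 0.1), bernoulli("x2", 0.8)])),
])

p_joint = spn.evaluate({"x1": 1, "x2": 0})
p_marginal = spn.evaluate({"x1": 1})  # x2 summed out in the same single pass
print(p_joint, p_marginal)
```

Because the sum weights are normalized, a joint probability and a marginal are both obtained with one bottom-up pass, which is the tractability property that makes the architecture attractive.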

RNNs can be regarded as a class of deep generative architectures when they are used to model and generate sequential data (e.g., [Reference Sutskever, Martens and Hinton46]). The “depth” of an RNN can be as large as the length of the input data sequence. RNNs are very powerful for modeling sequence data (e.g., speech or text), but until recently they had not been widely used partly because they are extremely difficult to train properly due to the well-known “vanishing gradient” problem. Recent advances in Hessian-free optimization [Reference Martens47] have partially overcome this difficulty using second-order information or stochastic curvature estimates. In the recent work of [Reference Martens and Sutskever48], RNNs that are trained with Hessian-free optimization are used as a generative deep architecture in the character-level language modeling (LM) tasks, where gated connections are introduced to allow the current input characters to predict the transition from one latent state vector to the next. Such generative RNN models are demonstrated to be well capable of generating sequential text characters. More recently, Bengio et al. [Reference Bengio, Boulanger and Pascanu49] and Sutskever [Reference Sutskever50] have explored new optimization methods in training generative RNNs that modify stochastic gradient descent and show these modifications can outperform Hessian-free optimization methods. Mikolov et al. [Reference Mikolov, Karafiat, Burget, Cernocky and Khudanpur51] have reported excellent results on using RNNs for LM. More recently, Mesnil et al. [Reference Mesnil, He, Deng and Bengio52] reported the success of RNNs in spoken language understanding.
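The “vanishing gradient” problem mentioned above can be made tangible with a minimal sketch. It assumes a hypothetical scalar RNN, h_t = tanh(w h_{t-1} + x_t), with an illustrative recurrent weight and input sequence; real RNNs use weight matrices, for which the same argument applies to products of Jacobians.

```python
import math


def backprop_factor(w, inputs, h0=0.0):
    # Returns d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t).
    # By the chain rule this is the product over t of w * (1 - h_t ** 2).
    h, factor = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        factor *= w * (1.0 - h * h)
    return factor


# Illustrative input sequence of length 100 and recurrent weight |w| < 1.
inputs = [0.5, -0.3, 0.8, 0.1] * 25
factors = {T: backprop_factor(0.9, inputs[:T]) for T in (5, 20, 100)}
for T in sorted(factors):
    print(T, factors[T])
```

Since each factor has magnitude at most |w|, the sensitivity of the final state to the initial state shrinks at least geometrically with the sequence length, which is why the effective “depth” of an RNN makes plain gradient descent struggle on long sequences.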

As examples of a different type of generative deep models, there has been a long history in speech recognition research where human speech production mechanisms are exploited to construct dynamic and deep structure in probabilistic generative models; for a comprehensive review, see book [Reference Deng53]. Specifically, the early work described in [Reference Deng54–Reference Deng and Aksmanovic59] generalized and extended the conventional shallow and conditionally independent HMM structure by imposing dynamic constraints, in the form of polynomial trajectory, on the HMM parameters. A variant of this approach has been more recently developed using different learning techniques for time-varying HMM parameters and with the applications extended to speech recognition robustness [Reference Yu and Deng60, Reference Yu, Deng, Gong and Acero61]. Similar trajectory HMMs also form the basis for parametric speech synthesis [Reference Zen, Nankaku and Tokuda62–Reference Shannon, Zen and Byrne66]. Subsequent work added a new hidden layer into the dynamic model so as to explicitly account for the target-directed, articulatory-like properties in human speech generation [Reference Deng15, Reference Deng16, Reference Deng, Ramsay and Sun67–Reference Ma and Deng73]. More efficient implementation of this deep architecture with hidden dynamics is achieved with non-recursive or FIR filters in more recent studies [Reference Deng, Yu and Acero74–Reference Deng and Yu76]. The above deep-structured generative models of speech can be shown as special cases of the more general dynamic Bayesian network model and even more general dynamic graphical models [Reference Bilmes and Bartels77, Reference Bilmes78]. The graphical models can comprise many hidden layers to characterize the complex relationship between the variables in speech generation. 
Armed with powerful graphical modeling tools, the deep architecture of speech has more recently been successfully applied to solve the very difficult problem of single-channel, multi-talker speech recognition, where the mixed speech is the visible variable while the un-mixed speech is represented in a new hidden layer in the deep generative architecture [Reference Rennie, Hershey and Olsen79, Reference Wohlmayr, Stark and Pernkopf80]. Deep generative graphical models are indeed a powerful tool in many applications due to their capability of embedding domain knowledge. However, in addition to the weakness of using non-distributed representations for the classification categories, they are also often implemented with inappropriate approximations in inference, learning, prediction, and topology design, all arising from the inherent intractability of these tasks for most real-world applications. This problem has been partly addressed in the recent work of [Reference Stoyanov, Ropson and Eisner81], which provides an interesting direction for making deep generative graphical models potentially more useful in practice in the future.

The standard statistical methods used for large-scale speech recognition and understanding combine (shallow) HMMs for speech acoustics with higher layers of structure representing different levels of natural language hierarchy. This combined hierarchical model can be suitably regarded as a deep generative architecture, whose motivation and some technical detail may be found in Chapter 7 in the recent book [Reference Kurzweil82] on “Hierarchical HMM” or HHMM. Related models with greater technical depth and mathematical treatment can be found in [Reference Fine, Singer and Tishby83] for HHMM and [Reference Oliver, Garg and Horvitz84] for Layered HMM. These early deep models were formulated as directed graphical models, missing the key aspect of “distributed representation” embodied in the more recent deep generative architectures of DBN and DBM discussed earlier in this section.

Finally, temporally recursive and deep generative models can be found in [Reference Taylor, Hinton and Roweis85] for human motion modeling, and in [Reference Socher, Lin, Ng and Manning86] for natural language and natural scene parsing. The latter model is particularly interesting because the learning algorithms are capable of automatically determining the optimal model structure. This contrasts with other deep architectures such as the DBN, where only the parameters are learned while the architectures need to be predefined. Specifically, as reported in [Reference Socher, Lin, Ng and Manning86], the recursive structure commonly found in natural scene images and in natural language sentences can be discovered using a max-margin structure prediction architecture. Not only are the units contained in the images or sentences identified, but so is the way in which these units interact with each other to form the whole.

B) Discriminative architectures

Many of the discriminative techniques in signal and information processing apply to shallow architectures such as HMMs (e.g., [Reference Juang, Chou and Lee87–Reference Gibson and Hain94]) or CRFs (e.g., [Reference Yang and Furui95–Reference Peng, Bo and Xu100]). Since a CRF is defined with the conditional probability on input data as well as on the output labels, it is intrinsically a shallow discriminative architecture. (An interesting equivalence between CRFs and discriminatively trained Gaussian models and HMMs can be found in [Reference Heigold, Ney, Lehnen, Gass and Schluter101].) More recently, deep-structured CRFs have been developed by stacking the output in each lower layer of the CRF, together with the original input data, onto its higher layer [Reference Yu, Wang and Deng96]. Various versions of deep-structured CRFs have been usefully applied to phone recognition [Reference Yu and Deng102], spoken language identification [Reference Yu, Wang, Karam and Deng103], and natural language processing [Reference Yu, Wang and Deng96]. However, at least for the phone recognition task, the performance of deep-structured CRFs, which are purely discriminative (non-generative), has not been able to match that of the hybrid approach involving DBN, which we will take on shortly.

The recent article of [Reference Morgan33] gives an excellent review on other major existing discriminative models in speech recognition based mainly on the traditional neural network or MLP architecture using backpropagation learning with random initialization. It argues for the importance of both the increased width of each layer of the neural networks and the increased depth. In particular, a class of DNN models forms the basis of the popular “tandem” approach, where a discriminatively learned neural network is developed in the context of computing discriminant emission probabilities for HMMs. For some representative recent works in this area, see [Reference Pinto, Garimella, Magimai-Doss, Hermansky and Bourlard104, Reference Ketabdar and Bourlard105]. The tandem approach generates discriminative features for an HMM by using the activities from one or more hidden layers of a neural network with various ways of information combination, which can be regarded as a form of discriminative deep architectures [Reference Morgan33, Reference Morgan106].

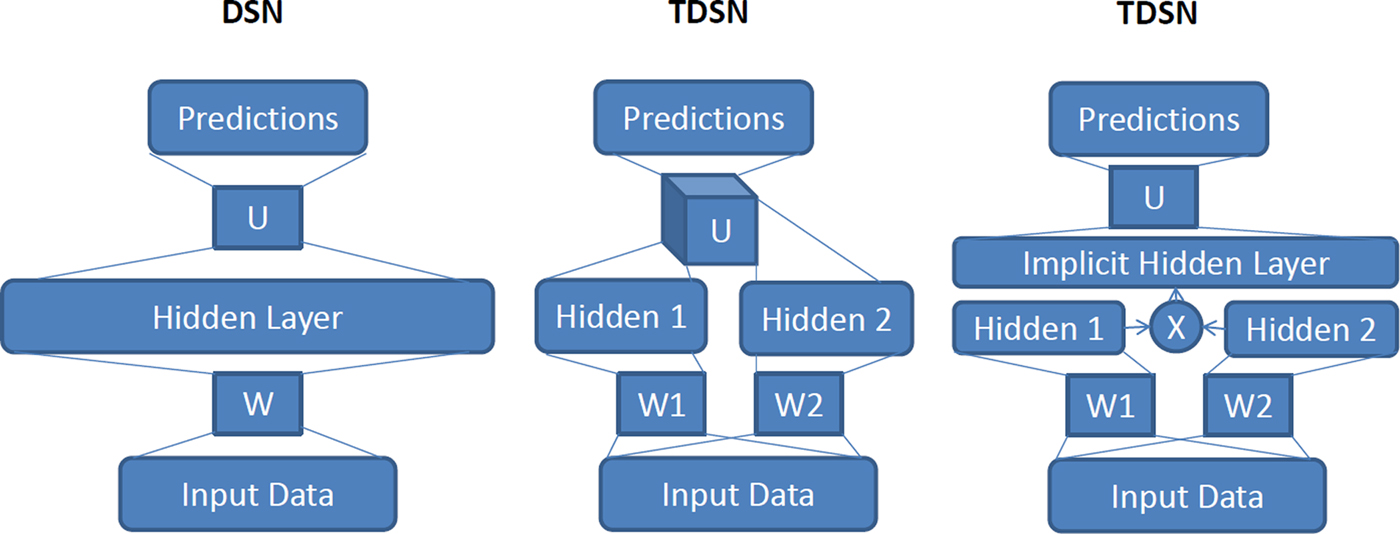

In the most recent work of [Reference Deng, Yu and Platt108–Reference Lena, Nagata and Baldi110], a new deep learning architecture, sometimes called DSN, together with its tensor variant [Reference Hutchinson, Deng and Yu111, Reference Hutchinson, Deng and Yu112] and its kernel version [Reference Deng, Tur, He and Hakkani-Tur11], are developed that all focus on discrimination with scalable, parallelizable learning relying on little or no generative component. We will describe this type of discriminative deep architecture in detail in Section V.

RNNs have been successfully used as a generative model when the “output” is taken to be the predicted input data in the future, as discussed in the preceding subsection; see also the neural predictive model [Reference Deng, Hassanein and Elmasry113] with the same mechanism. They can also be used as a discriminative model where the output is an explicit label sequence associated with the input data sequence. Note that such discriminative RNNs were applied to speech a long time ago with limited success (e.g., [Reference Robinson114]). For training RNNs for discrimination, presegmented training data are typically required. Also, post-processing is needed to transform their outputs into label sequences. It is highly desirable to remove such requirements, especially the costly presegmentation of training data. Often a separate HMM is used to automatically segment the sequence during training, and to transform the RNN classification results into label sequences [Reference Robinson114]. However, the use of HMM for these purposes does not take advantage of the full potential of RNNs.

An interesting method was proposed in [Reference Graves, Fernandez, Gomez and Schmidhuber115–Reference Graves117] that enables the RNNs themselves to perform sequence classification, removing the need for presegmenting the training data and for post-processing the outputs. Underlying this method is the idea of interpreting RNN outputs as the conditional distributions over all possible label sequences given the input sequences. Then, a differentiable objective function can be derived to optimize these conditional distributions over the correct label sequences, where no segmentation of data is required.
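The idea can be illustrated with a small brute-force sketch. It assumes the collapsing rule of connectionist temporal classification (merge repeated symbols, then drop a special blank symbol), which is one way to realize the method cited above; the names `collapse` and `label_sequence_distribution` and the toy per-frame probabilities are illustrative only, and a practical system would use dynamic programming instead of enumerating every path.

```python
import math
from collections import defaultdict
from itertools import product

BLANK = "-"  # assumed "no label" symbol emitted by the network

def collapse(path):
    """Merge repeated symbols, then remove blanks: 'aa-ab' -> 'aab'."""
    merged = [s for i, s in enumerate(path) if i == 0 or s != path[i - 1]]
    return "".join(s for s in merged if s != BLANK)

def label_sequence_distribution(frame_probs):
    """Sum the probability of every frame-level path into its collapsed label sequence.

    frame_probs: one dict per frame, mapping symbol -> probability (e.g. softmax outputs).
    """
    symbols = sorted(frame_probs[0])
    dist = defaultdict(float)
    for path in product(symbols, repeat=len(frame_probs)):
        p = 1.0
        for t, s in enumerate(path):
            p *= frame_probs[t][s]
        dist[collapse(path)] += p
    return dict(dist)

# Toy per-frame output distributions for three frames (made-up numbers).
frame_probs = [
    {"a": 0.6, "b": 0.1, BLANK: 0.3},
    {"a": 0.5, "b": 0.2, BLANK: 0.3},
    {"a": 0.1, "b": 0.7, BLANK: 0.2},
]
dist = label_sequence_distribution(frame_probs)

# Differentiable training objective: negative log probability of the correct labels.
loss = -math.log(dist["ab"])
```

No frame-level alignment of the target "ab" is supplied anywhere: the objective sums over all alignments that are consistent with it.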

Another type of discriminative deep architecture is the convolutional neural network (CNN), with each module consisting of a convolutional layer and a pooling layer. These modules are often stacked one on top of another, or with a DNN on top, to form a deep model. The convolutional layer shares many weights, and the pooling layer subsamples the output of the convolutional layer and reduces the data rate from the layer below. The weight sharing in the convolutional layer, together with appropriately chosen pooling schemes, endows the CNN with some “invariance” properties (e.g., translation invariance). It has been argued that such limited “invariance” or equi-variance is not adequate for complex pattern recognition tasks and more principled ways of handling a wider range of invariance are needed [Reference Hinton, Krizhevsky and Wang39]. Nevertheless, the CNN has been found highly effective and is commonly used in computer vision and image recognition [Reference LeCun, Bottou, Bengio and Haffner118–Reference Krizhevsky, Sutskever and Hinton121, Reference Le, Ranzato, Monga, Devin, Corrado, Chen, Dean and Ng154]. More recently, with appropriate changes from the CNN designed for image analysis to one that takes into account speech-specific properties, the CNN has also been found effective for speech recognition [Reference Abdel-Hamid, Mohamed, Jiang and Penn122–Reference Deng, Abdel-Hamid and Yu126]. We will discuss such applications in more detail in Section VII.
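A minimal one-dimensional sketch of weight sharing and pooling follows. The kernel values, the input signals, and the helper names `conv1d` and `max_pool` are made up for illustration; the point is only that the convolutional output moves with the input (equivariance), while the pooled output stays the same for a shift smaller than the pooling width and changes for a larger one.

```python
def conv1d(signal, kernel):
    """Valid 1-D correlation: the same kernel weights are reused at every position."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def max_pool(features, size):
    """Non-overlapping max pooling; subsamples the feature map by a factor of `size`."""
    return [max(features[i:i + size])
            for i in range(0, len(features) - size + 1, size)]

kernel = [1.0, -1.0, 1.0]                      # hypothetical pattern detector
x        = [0, 0, 1, 0, 1, 0, 0, 0, 0, 0]      # pattern starting at position 2
x_shift1 = [0, 0, 0, 1, 0, 1, 0, 0, 0, 0]      # same pattern shifted by one sample
x_shift2 = [0, 0, 0, 0, 1, 0, 1, 0, 0, 0]      # same pattern shifted by two samples

pooled        = max_pool(conv1d(x, kernel), 2)
pooled_shift1 = max_pool(conv1d(x_shift1, kernel), 2)
pooled_shift2 = max_pool(conv1d(x_shift2, kernel), 2)

print(pooled == pooled_shift1)   # pooling absorbs the one-sample shift
print(pooled == pooled_shift2)   # but not the two-sample shift
```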

It is useful to point out that time-delay neural networks (TDNN, [127, 129]) developed for early speech recognition are a special case of the CNN when weight sharing is limited to one of the two dimensions, i.e., the time dimension. It was not until recently that researchers discovered that time is the wrong dimension along which to impose “invariance” and that the frequency dimension is more effective for sharing weights and pooling outputs [Reference Abdel-Hamid, Mohamed, Jiang and Penn122, Reference Abdel-Hamid, Deng and Yu123, Reference Deng, Abdel-Hamid and Yu126]. An analysis of the underlying reasons is provided in [Reference Deng, Abdel-Hamid and Yu126], together with a new strategy for designing the CNN's pooling layer demonstrated to be more effective than nearly all previous CNNs in phone recognition.

It is also useful to point out that the model of hierarchical temporal memory (HTM, [Reference George17, Reference Hawkins and Blakeslee128, Reference Hawkins, Ahmad and Dubinsky130]) is another variant and extension of the CNN. The extension includes the following aspects: (1) Time or temporal dimension is introduced to serve as the “supervision” information for discrimination (even for static images); (2) both bottom-up and top-down information flow are used, instead of just bottom-up in the CNN; and (3) a Bayesian probabilistic formalism is used for fusing information and for decision making.

Finally, the learning architecture developed for bottom-up, detection-based speech recognition proposed in [Reference Lee131] and developed further since 2004, notably in [Reference Yu, Siniscalchi, Deng and Lee132–Reference Siniscalchi, Svendsen and Lee134] using the DBN–DNN technique, can also be categorized in the discriminative deep architecture category. There is neither intent nor mechanism in this architecture to characterize the joint probability of data and recognition targets of speech attributes and of the higher-level phone and words. The most current implementation of this approach is based on multiple layers of neural networks using backpropagation learning [Reference Yu, Seide, Li and Deng135]. One intermediate neural network layer in the implementation of this detection-based framework explicitly represents the speech attributes, which are simplified entities from the “atomic” units of speech developed in the early work of [Reference Deng and Sun136, Reference Sun and Deng137]. The simplification lies in the removal of the temporally overlapping properties of the speech attributes or articulatory-like features. Embedding such more realistic properties in future work is expected to improve the accuracy of speech recognition further.

C) Hybrid generative–discriminative architectures

The term “hybrid” for this third category refers to the deep architecture that either comprises or makes use of both generative and discriminative model components. In many existing hybrid architectures published in the literature (e.g., [Reference Hinton and Salakhutdinov21, Reference Mohamed, Yu and Deng23, Reference Dahl, Yu, Deng and Acero25, Reference Sainath, Kingsbury and Ramabhadran138]), the generative component is exploited to help with discrimination, which is the final goal of the hybrid architecture. How and why generative modeling can help with discrimination can be examined from two viewpoints:

(1) The optimization viewpoint where generative models can provide excellent initialization points in highly non-linear parameter estimation problems (the commonly used term of “pretraining” in deep learning has been introduced for this reason); and/or

(2) The regularization perspective where generative models can effectively control the complexity of the overall model.

The study reported in [Reference Erhan, Bengio, Courvelle, Manzagol, Vencent and Bengio139] provided an insightful analysis and experimental evidence supporting both of the viewpoints above.

When the generative deep architecture of DBN discussed in Section III-A is subject to further discriminative training using backprop, commonly called “fine-tuning” in the literature, we obtain an equivalent architecture of the DNN. The weights of the DNN can be “pretrained” from stacked RBMs or DBN instead of the usual random initialization. See [Reference Mohamed, Dahl and Hinton24] for a detailed explanation of the equivalence relationship and the use of the often confusing terminology. We will review details of the DNN in the context of RBM/DBN pretraining as well as its interface with the most commonly used shallow generative architecture of HMM (DNN–HMM) in Section IV.

Another example of the hybrid deep architecture is developed in [Reference Mohamed, Yu and Deng23], where again the generative DBN is used to initialize the DNN weights but the fine-tuning is carried out using not frame-level discriminative information (e.g., the cross-entropy error criterion) but sequence-level information. This is a combination of the static DNN with the shallow discriminative architecture of CRF. Here, the overall architecture of DNN–CRF is learned using the discriminative criterion of the conditional probability of full label sequences given the input sequence data. It can be shown that such a DNN–CRF is equivalent to a hybrid deep architecture of DNN and HMM whose parameters are learned jointly using the full-sequence maximum mutual information (MMI) between the entire label sequence and the input vector sequence. A closely related full-sequence training method is carried out with success for a shallow neural network [Reference Kingsbury140] and for a deep one [Reference Kingsbury, Sainath and Soltau141].

Here, it is useful to point out a connection between the above hybrid discriminative training and a highly popular minimum phone error (MPE) training technique for the HMM [Reference Povey and Woodland89]. In the iterative MPE training procedure using extended Baum–Welch, the initial HMM parameters cannot be arbitrary. One commonly used initial parameter set is that trained generatively using Baum–Welch algorithm for maximum likelihood. Furthermore, an interpolation term taking the values of generatively trained HMM parameters is needed in the extended Baum–Welch updating formula, which may be analogous to “fine tuning” in the DNN training discussed earlier. Such I-smoothing [Reference Povey and Woodland89] has a similar spirit to DBN pretraining in the “hybrid” DNN learning.

Along the line of using discriminative criteria to train parameters in generative models as in the above HMM training example, we here briefly discuss the same method applied to learning other generative architectures. In [Reference Larochelle and Bengio142], the generative model of RBM is learned using the discriminative criterion of posterior class/label probabilities when the label vector is concatenated with the input data vector to form the overall visible layer in the RBM. In this way, RBM can be considered as a stand-alone solution to classification problems and the authors derived a discriminative learning algorithm for RBM as a shallow generative model. In the more recent work of [Reference Ranzato, Susskind, Mnih and Hinton146], the deep generative model of DBN with the gated MRF at the lowest level is learned for feature extraction and then for recognition of difficult image classes including occlusions. The generative ability of the DBN model facilitates the discovery of what information is captured and what is lost at each level of representation in the deep model, as demonstrated in [Reference Ranzato, Susskind, Mnih and Hinton146]. A related work on using the discriminative criterion of empirical risk to train deep graphical models can be found in [Reference Stoyanov, Ropson and Eisner81].

A further example of the hybrid deep architecture is the use of the generative model of DBN to pretrain deep convolutional neural networks (deep CNN) [Reference Abdel-Hamid, Deng and Yu123, Reference Lee, Grosse, Ranganath and Ng144, Reference Lee, Largman, Pham and Ng145]. As with the fully-connected DNN discussed earlier, DBN pretraining is also shown to improve discrimination of the deep CNN over random initialization.

The final example given here of the hybrid deep architecture is based on the idea and work of [Reference Ney147, Reference He and Deng148], where one task of discrimination (speech recognition) produces the output (text) that serves as the input to the second task of discrimination (machine translation). The overall system, giving the functionality of speech translation – translating speech in one language into text in another language – is a two-stage deep architecture consisting of both generative and discriminative elements. Both models of speech recognition (e.g., HMM) and of machine translation (e.g., phrasal mapping and non-monotonic alignment) are generative in nature. But their parameters are all learned for discrimination. The framework described in [Reference He and Deng148] enables end-to-end performance optimization in the overall deep architecture using the unified learning framework initially published in [Reference He, Deng and Chou90]. This hybrid deep learning approach can be applied to not only speech translation but also all speech-centric and possibly other information-processing tasks such as speech information retrieval, speech understanding, cross-lingual speech/text understanding and retrieval, etc. (e.g., [Reference Deng, Tur, He and Hakkani-Tur11, Reference Tur, Deng, Hakkani-Tür and He109, Reference Yamin, Deng, Wang and Acero149–Reference He, Deng, Tur and Hakkani-Tur153]).

After briefly surveying a wide range of work in each of the three classes of deep architectures above, in the following three sections, I will elaborate on three prominent models of deep learning, one from each of the three classes. While ideally they should represent the most influential architectures giving state of the art performance, I have chosen the three that I am most familiar with as being responsible for their developments and that may serve the tutorial purpose well with the simplicity of the architectural and mathematical descriptions. The three architectures described in the following three sections should not be interpreted as the most representative and influential work in each of the three classes. For example, in the category of generative architectures, the highly complex deep architecture and generative training methods developed by and described in [Reference Le, Ranzato, Monga, Devin, Corrado, Chen, Dean and Ng154], which are beyond the scope of this tutorial, perform quite well in image recognition. Likewise, in the category of discriminative architectures, the even more complex architecture and learning described in Kingsbury et al. [Reference Kingsbury, Sainath and Soltau141], Seide et al. [Reference Seide, Li and Yu155], and Yan et al. [Reference Yan, Huo and Xu156] gave the state of the art performance in large-scale speech recognition.

IV. GENERATIVE ARCHITECTURE: DEEP AUTOENCODER

A) Introduction

Deep autoencoder is a special type of DNN whose output is the data input itself, and is used for learning efficient encoding or dimensionality reduction for a set of data. More specifically, it is a non-linear feature extraction method involving no class labels; hence generative. An autoencoder uses three or more layers in the neural network:

• An input layer of data to be efficiently coded (e.g., pixels in image or spectra in speech);

• One or more considerably smaller hidden layers, which will form the encoding; and

• An output layer, where each neuron has the same meaning as in the input layer.

When the number of hidden layers is greater than one, the autoencoder is considered to be deep.

An autoencoder is often trained using one of the many backpropagation variants (e.g., conjugate gradient method, steepest descent, etc.). Though often reasonably effective, there are fundamental problems with using backpropagation to train networks with many hidden layers. Once the errors get backpropagated to the first few layers, they become minuscule, and quite ineffective. This causes the network to almost always learn to reconstruct the average of all the training data. Though more advanced backpropagation methods (e.g., the conjugate gradient method) help with this to some degree, they still result in very slow learning and poor solutions. This problem is remedied by using initial weights that approximate the final solution. The process to find these initial weights is often called pretraining.
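The shrinking of backpropagated errors can be seen in a toy calculation. The sketch below assumes a chain of single-unit logistic layers with a fixed weight of 1 (a deliberately simplified stand-in for a real network; `input_gradient` is a hypothetical helper). Because the derivative of the logistic function never exceeds 0.25, the gradient reaching the first layer decays at least geometrically with depth.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def input_gradient(depth, weight=1.0, x=0.5):
    """d(output)/d(input) for a chain of `depth` one-unit logistic layers."""
    grad, activation = 1.0, x
    for _ in range(depth):
        activation = sigmoid(weight * activation)
        # Chain rule: sigma'(z) = sigma(z) * (1 - sigma(z)), which is at most 0.25.
        grad *= weight * activation * (1.0 - activation)
    return grad

for depth in (1, 3, 6, 12):
    print(depth, input_gradient(depth))
```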

A successful pretraining technique developed in [Reference Hinton, Osindero and Teh3] for training deep autoencoders involves treating each neighboring set of two layers as an RBM for pretraining to approximate a good solution and then using a backpropagation technique to fine-tune so as to minimize the “coding” error. This training technique is applied to construct a deep autoencoder to map images to short binary code for fast, content-based image retrieval. It is also applied to coding documents (called semantic hashing), and to coding spectrogram-like speech features, which we review below.

B) Use of deep autoencoder to extract speech features

Here we review the more recent work of [Reference Deng, Seltzer, Yu, Acero, Mohamed and Hinton30] in developing a similar type of autoencoder for extracting bottleneck speech features instead of image features. Discovery of efficient binary codes related to such features can also be used in speech information retrieval. Importantly, the potential benefits of using discrete representations of speech constructed by this type of deep autoencoder can be derived from an almost unlimited supply of unlabeled data in future-generation speech recognition and retrieval systems.

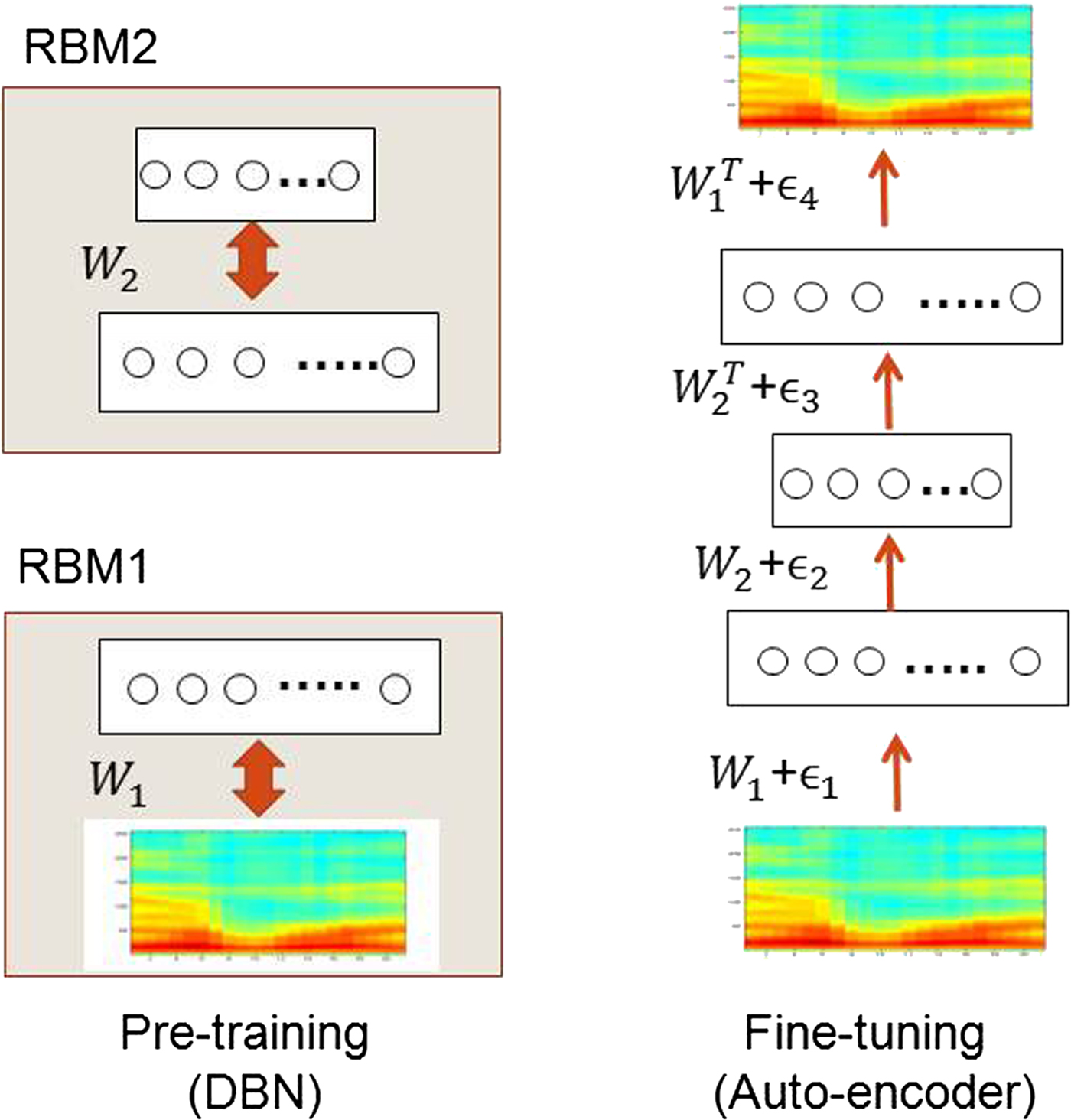

A deep generative model of patches of spectrograms that contain 256 frequency bins and 1, 3, 9, or 13 frames is illustrated in Fig. 1. An undirected graphical model called a Gaussian-binary RBM is built that has one visible layer of linear variables with Gaussian noise and one hidden layer of 500–3000 binary latent variables. After learning the Gaussian-binary RBM, the activation probabilities of its hidden units are treated as the data for training another binary–binary RBM. These two RBMs can then be composed to form a DBN in which it is easy to infer the states of the second layer of binary hidden units from the input in a single forward pass. The DBN used in this work is illustrated on the left side of Fig. 1, where the two RBMs are shown in separate boxes. (See more detailed discussions on RBM and DBN in the next section.)

Fig. 1. The architecture of the deep autoencoder used in [30] for extracting “bottle-neck” speech features from high-resolution spectrograms.

The deep autoencoder with three hidden layers is formed by “unrolling” the DBN using its weight matrices. The lower layers of this deep autoencoder use the matrices to encode the input and the upper layers use the matrices in reverse order to decode the input. This deep autoencoder is then fine-tuned using backpropagation of error-derivatives to make its output as similar as possible to its input, as shown on the right side of Fig. 1. After learning is complete, any variable-length spectrogram can be encoded and reconstructed as follows. First, N consecutive overlapping frames of 256-point log power spectra are each normalized to zero-mean and unit-variance to provide the input to the deep autoencoder. The first hidden layer then uses the logistic function to compute real-valued activations. These real values are fed to the next, coding layer to compute “codes”. The real-valued activations of hidden units in the coding layer are quantized to be either zero or one with 0.5 as the threshold. These binary codes are then used to reconstruct the original spectrogram, where individual fixed-frame patches are reconstructed first using the two upper layers of network weights. Finally, an overlap-and-add technique is used to reconstruct the full-length speech spectrogram from the outputs produced by applying the deep autoencoder to every possible window of N consecutive frames. We show some illustrative encoding and reconstruction examples below.
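The pre- and post-processing around the network can be sketched as follows. This is only an outline under stated assumptions: the trained encoder and decoder are omitted (the windows themselves stand in for the reconstructed patches), overlapping contributions are averaged, an activation exactly at 0.5 is mapped to zero, and the names `normalize`, `binarize`, `windows`, and `overlap_add` are illustrative.

```python
import math

def normalize(frame):
    """Scale one frame of log power spectra to zero mean and unit variance."""
    mean = sum(frame) / len(frame)
    var = sum((x - mean) ** 2 for x in frame) / len(frame)
    std = math.sqrt(var) or 1.0  # guard against a constant frame
    return [(x - mean) / std for x in frame]

def binarize(activations, threshold=0.5):
    """Quantize real-valued coding-layer activations to a binary code."""
    return [1 if a > threshold else 0 for a in activations]

def windows(frames, n):
    """Every window of n consecutive frames."""
    return [frames[t:t + n] for t in range(len(frames) - n + 1)]

def overlap_add(patches, n, total):
    """Add each reconstructed patch back at its offset and average the overlaps."""
    dim = len(patches[0][0])
    acc = [[0.0] * dim for _ in range(total)]
    count = [0] * total
    for start, patch in enumerate(patches):
        for k in range(n):
            count[start + k] += 1
            for d in range(dim):
                acc[start + k][d] += patch[k][d]
    return [[value / count[t] for value in acc[t]] for t in range(total)]

# Six toy "frames" with four frequency bins each (synthetic values).
frames = [normalize([math.sin(t + 2.0 * d) for d in range(4)]) for t in range(6)]
patches = windows(frames, 3)       # stand-in for the autoencoder's reconstructed patches
rebuilt = overlap_add(patches, 3, len(frames))
code = binarize([0.91, 0.08, 0.5, 0.63])
```

With a real autoencoder, each entry of `patches` would be the decoder output for the corresponding window rather than the window itself.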

C) Illustrative examples

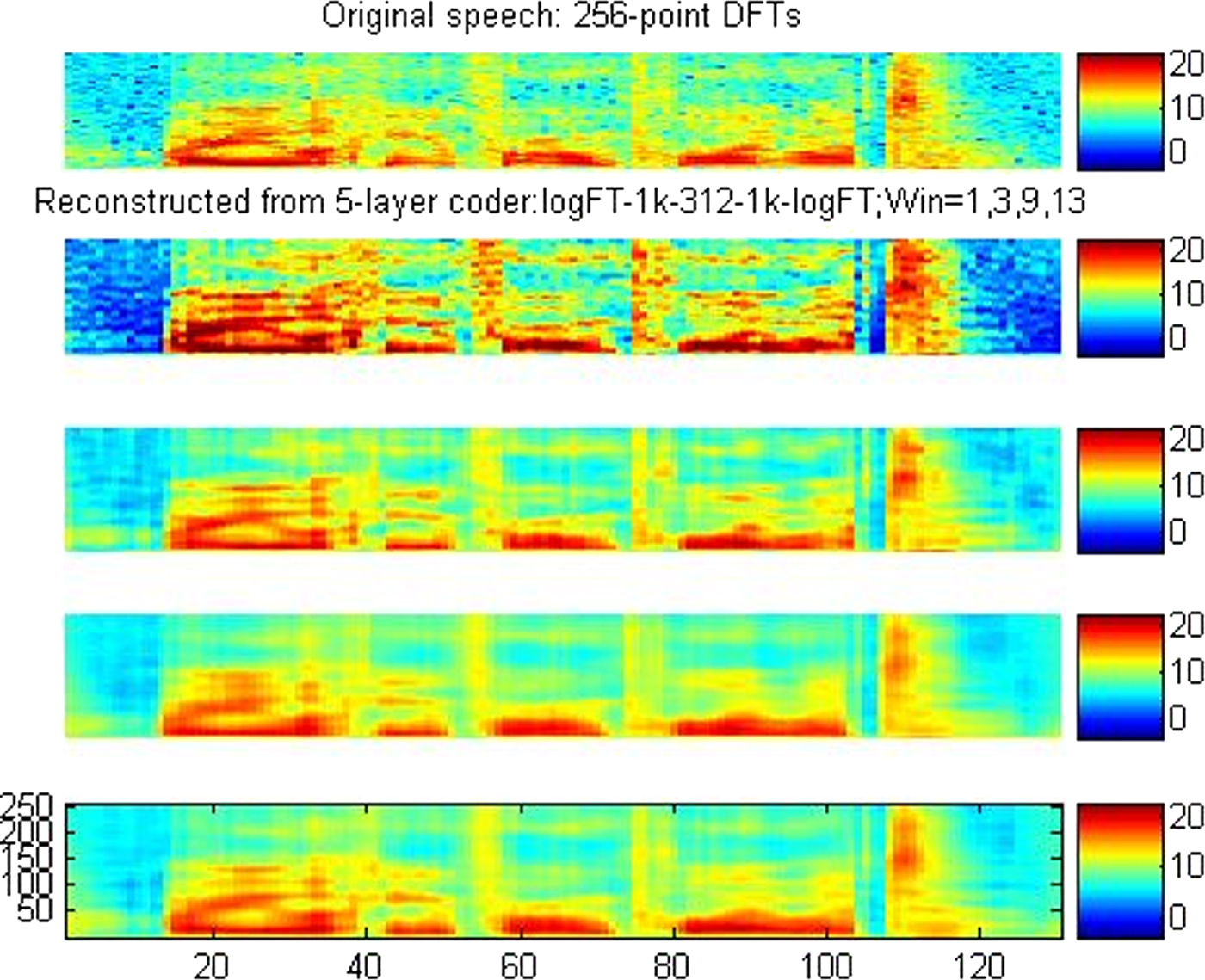

At the top of Fig. 2 is the original speech, followed by the reconstructed speech utterances with forced binary values (zero or one) at the 312 unit code layer for encoding window lengths of N = 1, 3, 9, and 13, respectively. The lower coding errors for N = 9 and 13 are clearly seen.

Fig. 2. Top to Bottom: Original spectrogram; reconstructions using input window sizes of N = 1, 3, 9, and 13 while forcing the coding units to be zero or one (i.e., a binary code). The y-axis values indicate FFT bin numbers (i.e., 256-point FFT is used for constructing all spectrograms).

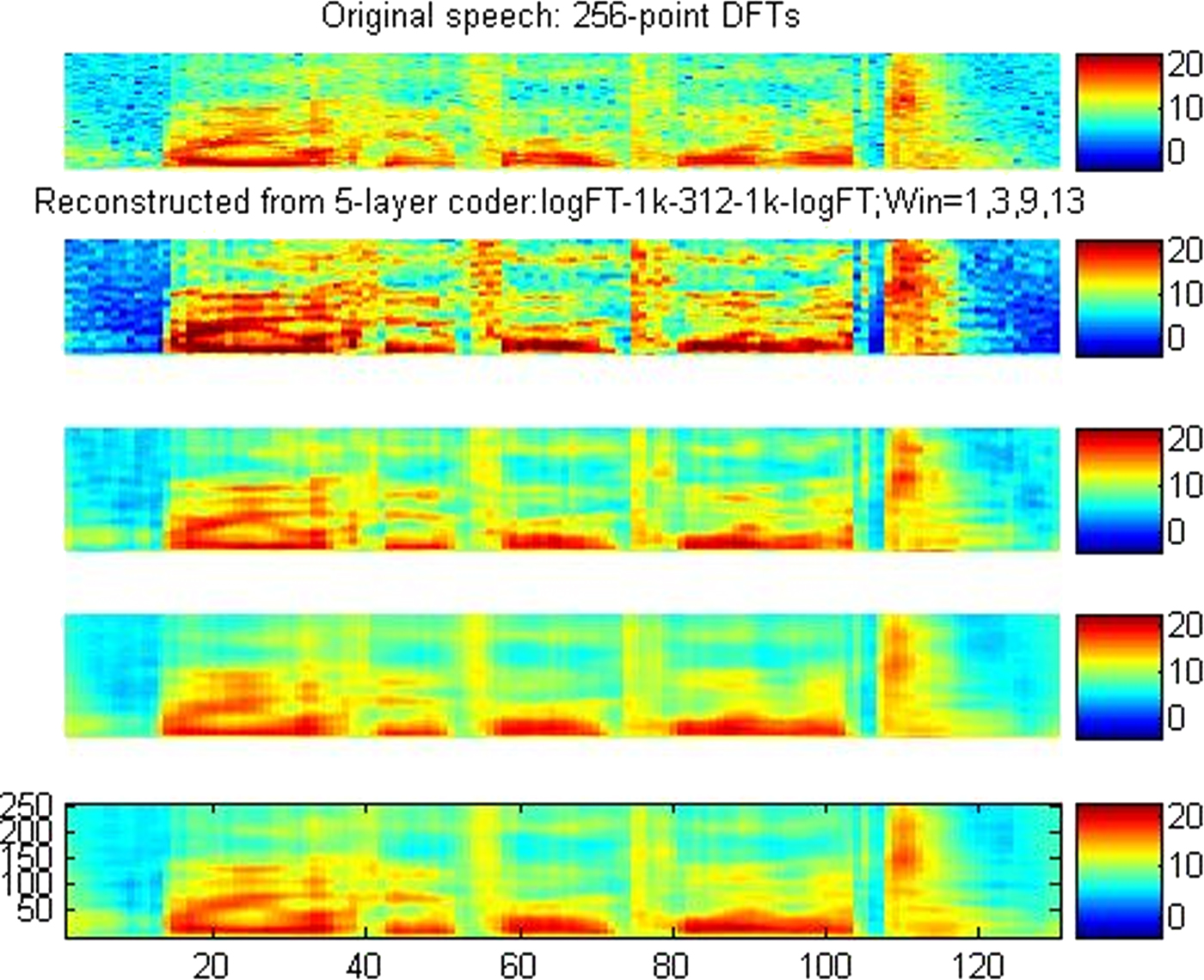

Encoding accuracy of the deep autoencoder is qualitatively examined to compare with the more traditional codes via vector quantization (VQ). Figure 3 shows various aspects of the encoding accuracy. At the top is the original speech utterance's spectrogram. The next two spectrograms are the blurry reconstruction from the 312-bit VQ and the much more faithful reconstruction from the 312-bit deep autoencoder. Coding errors from both coders, plotted as a function of time, are shown below the spectrograms, demonstrating that the autoencoder (red curve) is producing lower errors than the VQ coder (blue curve) throughout the entire span of the utterance. The final two spectrograms show the detailed coding error distributions over both time and frequency bins.

Fig. 3. Top to bottom: Original spectrogram from the test set; reconstruction from the 312-bit VQ coder; reconstruction from the 312-bit autoencoder; coding errors as a function of time for the VQ coder (blue) and autoencoder (red); spectrogram of the VQ coder residual; spectrogram of the deep autoencoder's residual.

D) Transforming autoencoder

The deep autoencoder described above can extract a compact code for a feature vector due to its many layers and the non-linearity. But the extracted code would change unpredictably when the input feature vector is transformed. It is desirable to have the code change predictably, in a way that reflects the underlying transformation, which leaves the perceived content unchanged. This is the goal of the transforming autoencoder proposed in [Reference Hinton, Krizhevsky and Wang39] for image recognition.

The building block of the transforming autoencoder is a “capsule”, which is an independent subnetwork that extracts a single parameterized feature representing a single entity, be it visual or audio. A transforming autoencoder receives both an input vector and a target output vector, which is related to the input vector by a simple global transformation; e.g., the translation of a whole image or frequency shift due to vocal tract length differences for speech. An explicit representation of the global transformation is also known. The bottleneck or coding layer of the transforming autoencoder consists of the outputs of several capsules.

During the training phase, the different capsules learn to extract different entities in order to minimize the error between the final output and the target.

In addition to the deep autoencoder architectures described in this section, there are many other types of generative architectures in the literature, all characterized by the use of data alone (i.e., free of classification labels) to automatically derive higher-level features. Although such more complex architectures have produced state of the art results (e.g., [Reference Le, Ranzato, Monga, Devin, Corrado, Chen, Dean and Ng154]), their complexity does not permit detailed treatment in this tutorial paper; rather, a brief survey of a broader range of the generative deep architectures was included in Section III-A.

V. HYBRID ARCHITECTURE: DNN PRETRAINED WITH DBN

A) Basics

In this section, we present the most widely studied hybrid deep architecture of DNNs, consisting of both pretraining (using generative DBN) and fine-tuning stages in its parameter learning. Part of this review is based on the recent publication of [Reference Hinton6, Reference Yu and Deng7, Reference Dahl, Yu, Deng and Acero25].

The DBN, as the generative component, is a probabilistic model composed of multiple layers of stochastic, latent variables. The unobserved variables can have binary values and are often called hidden units or feature detectors. The top two layers have undirected, symmetric connections between them and form an associative memory. The lower layers receive top-down, directed connections from the layer above. The states of the units in the lowest layer, or the visible units, represent an input data vector.

There is an efficient, layer-by-layer procedure for learning the top-down, generative weights that determine how the variables in one layer depend on the variables in the layer above. After learning, the values of the latent variables in every layer can be inferred by a single, bottom-up pass that starts with an observed data vector in the bottom layer and uses the generative weights in the reverse direction.

DBNs are learned one layer at a time by treating the values of the latent variables in one layer, when they are being inferred from data, as the data for training the next layer. This efficient, greedy learning can be followed by, or combined with, other learning procedures that fine-tune all of the weights to improve the generative or discriminative performance of the full network. This latter learning procedure constitutes the discriminative component of the DBN as the hybrid architecture.

Discriminative fine-tuning can be performed by adding a final layer of variables that represent the desired outputs and backpropagating error derivatives. When networks with many hidden layers are applied to highly structured input data, such as speech and images, backpropagation works much better if the feature detectors in the hidden layers are initialized by learning a DBN to model the structure in the input data as originally proposed in [Reference Hinton and Salakhutdinov21].

A DBN can be viewed as a composition of simple learning modules obtained by stacking them. This simple learning module is the RBM, which we introduce next.

B) Restricted BM

An RBM is a special type of Markov random field that has one layer of (typically Bernoulli) stochastic hidden units and one layer of (typically Bernoulli or Gaussian) stochastic visible or observable units. RBMs can be represented as bipartite graphs, where all visible units are connected to all hidden units, and there are no visible–visible or hidden–hidden connections.

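In an RBM, the joint distribution $p(\mathbf{v}, \mathbf{h}; \theta)$ over the visible units $\mathbf{v}$ and hidden units $\mathbf{h}$, given the model parameters $\theta$, is defined in terms of an energy function $E(\mathbf{v}, \mathbf{h}; \theta)$ as

$$p(\mathbf{v}, \mathbf{h}; \theta) = \frac{\exp(-E(\mathbf{v}, \mathbf{h}; \theta))}{Z},$$

where $Z = \sum_{\mathbf{v}} \sum_{\mathbf{h}} \exp(-E(\mathbf{v}, \mathbf{h}; \theta))$ is a normalization factor or partition function, and the marginal probability that the model assigns to a visible vector $\mathbf{v}$ is

$$p(\mathbf{v}; \theta) = \frac{\sum_{\mathbf{h}} \exp(-E(\mathbf{v}, \mathbf{h}; \theta))}{Z}.$$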

For a Bernoulli (visible)–Bernoulli (hidden) RBM, the energy function is defined as

$$E(\mathbf{v}, \mathbf{h}; \theta) = -\sum_{i=1}^{I} \sum_{j=1}^{J} w_{ij} v_i h_j - \sum_{i=1}^{I} b_i v_i - \sum_{j=1}^{J} a_j h_j,$$

where $w_{ij}$ represents the symmetric interaction term between visible unit $v_i$ and hidden unit $h_j$, $b_i$ and $a_j$ are the bias terms, and $I$ and $J$ are the numbers of visible and hidden units. The conditional probabilities can be efficiently calculated as

$$\begin{aligned} p(h_j = 1 \mid \mathbf{v}; \theta) &= \sigma\left(\sum_{i=1}^{I} w_{ij} v_i + a_j\right), \\ p(v_i = 1 \mid \mathbf{h}; \theta) &= \sigma\left(\sum_{j=1}^{J} w_{ij} h_j + b_i\right), \end{aligned}$$

where $\sigma(x) = 1/(1 + \exp(-x))$.
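For a model small enough to enumerate, these definitions can be checked numerically. The sketch below uses arbitrary toy parameters (three visible and two hidden units; all values are made up) and hypothetical helper names. It computes the partition function by brute force and compares a conditional probability obtained from the joint distribution with the closed-form logistic expression, taking the logistic function as $1/(1 + \exp(-x))$.

```python
import math
from itertools import product

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def energy(v, h, W, b, a):
    """Bernoulli-Bernoulli RBM energy; W[i][j] couples visible unit i and hidden unit j."""
    interaction = sum(W[i][j] * v[i] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    visible_bias = sum(b[i] * v[i] for i in range(len(v)))
    hidden_bias = sum(a[j] * h[j] for j in range(len(h)))
    return -interaction - visible_bias - hidden_bias

# Toy parameters, for illustration only: I = 3 visible units, J = 2 hidden units.
W = [[0.5, -1.0], [1.5, 0.3], [-0.7, 0.8]]
b = [0.1, -0.2, 0.0]
a = [0.4, -0.6]

states_v = list(product([0, 1], repeat=3))
states_h = list(product([0, 1], repeat=2))

# Partition function: sum over all joint configurations (feasible only for tiny models).
Z = sum(math.exp(-energy(v, h, W, b, a)) for v in states_v for h in states_h)

def joint(v, h):
    return math.exp(-energy(v, h, W, b, a)) / Z

def marginal_v(v):
    return sum(joint(v, h) for h in states_h)

v = (1, 0, 1)
# p(h_0 = 1 | v) by brute force from the joint distribution ...
brute = sum(joint(v, h) for h in states_h if h[0] == 1) / marginal_v(v)
# ... and from the closed-form logistic expression.
closed = sigmoid(sum(W[i][0] * v[i] for i in range(3)) + a[0])
print(brute, closed)
```

The enumeration costs $2^{I+J}$ energy evaluations, which is why the factorized conditionals matter in practice.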

Similarly, for a Gaussian (visible)–Bernoulli (hidden) RBM, the energy is

$$E(\mathbf{v}, \mathbf{h}; \theta) = -\sum_{i=1}^{I} \sum_{j=1}^{J} w_{ij} v_i h_j + \frac{1}{2} \sum_{i=1}^{I} (v_i - b_i)^2 - \sum_{j=1}^{J} a_j h_j.$$

The corresponding conditional probabilities become

$$\begin{aligned} p(h_j = 1 \mid \mathbf{v}; \theta) &= \sigma\left(\sum_{i=1}^{I} w_{ij} v_i + a_j\right), \\ p(v_i \mid \mathbf{h}; \theta) &= \mathcal{N}\left(\sum_{j=1}^{J} w_{ij} h_j + b_i,\; 1\right), \end{aligned}$$

where $v_i$ takes real values and follows a Gaussian distribution with mean $\sum_{j=1}^{J} w_{ij} h_j + b_i$ and variance one. Gaussian–Bernoulli RBMs can be used to convert real-valued stochastic variables to binary stochastic variables, which can then be further processed using the Bernoulli–Bernoulli RBMs.
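The Gaussian form of the visible conditional can be verified by completing the square. The short derivation below assumes that the quadratic term enters the energy with a positive sign, as is required for the density over $v_i$ to be normalizable. Collecting the terms of $-E$ that involve a particular $v_i$ gives

$$\begin{aligned} -E(\mathbf{v}, \mathbf{h}; \theta) &= v_i \sum_{j=1}^{J} w_{ij} h_j - \frac{1}{2} (v_i - b_i)^2 + \text{(terms not involving } v_i\text{)} \\ &= -\frac{1}{2} \left(v_i - b_i - \sum_{j=1}^{J} w_{ij} h_j\right)^2 + \text{(terms not involving } v_i\text{)}, \end{aligned}$$

so that $p(v_i \mid \mathbf{h}; \theta) \propto \exp\left(-\frac{1}{2}(v_i - \mu_i)^2\right)$ with $\mu_i = \sum_{j=1}^{J} w_{ij} h_j + b_i$, which is a Gaussian density with mean $\mu_i$ and unit variance.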

The above discussion used the two most common conditional distributions for the visible data in the RBM – Gaussian (for continuous-valued data) and binomial (for binary data). More general types of distributions in the RBM can also be used. See [Reference Welling, Rosen-Zvi and Hinton157] for the use of general exponential-family distributions for this purpose.

Taking the gradient of the log likelihood $\log p(\mathbf{v}; \theta)$, we can derive the update rule for the RBM weights as

$$\Delta w_{ij} = E_{\text{data}}(v_i h_j) - E_{\text{model}}(v_i h_j),$$

where $E_{\text{data}}(v_i h_j)$ is the expectation observed in the training set and $E_{\text{model}}(v_i h_j)$ is that same expectation under the distribution defined by the model. Unfortunately, $E_{\text{model}}(v_i h_j)$ is intractable to compute, so the contrastive divergence (CD) approximation to the gradient is used, where $E_{\text{model}}(v_i h_j)$ is replaced by running the Gibbs sampler initialized at the data for one full step. The steps in approximating $E_{\text{model}}(v_i h_j)$ are as follows:

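• Initialize $\mathbf{v}_0$ at data

• Sample $\mathbf{h}_0 \sim p(\mathbf{h} \mid \mathbf{v}_0)$

• Sample $\mathbf{v}_1 \sim p(\mathbf{v} \mid \mathbf{h}_0)$

• Sample $\mathbf{h}_1 \sim p(\mathbf{h} \mid \mathbf{v}_1)$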

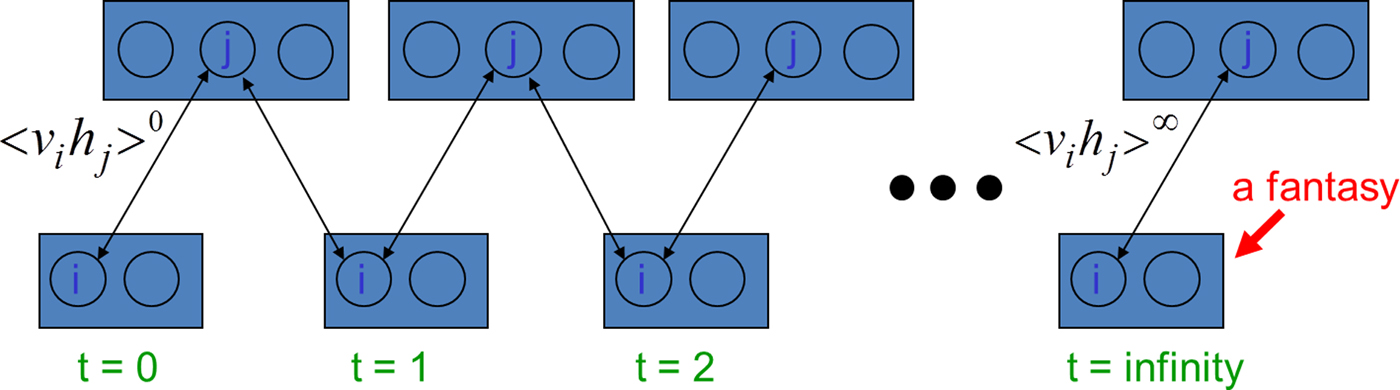

Then $(\mathbf{v}_1, \mathbf{h}_1)$ is treated as a sample from the model and provides a very rough estimate of $E_{\text{model}}(v_i h_j)$; an exact estimate would use $(\mathbf{v}_\infty, \mathbf{h}_\infty)$, which is a true sample from the model. Use of $(\mathbf{v}_1, \mathbf{h}_1)$ to approximate $E_{\text{model}}(v_i h_j)$ gives rise to the algorithm of CD-1. The sampling process is depicted pictorially in Fig. 4.

Fig. 4. A pictorial view of sampling from an RBM during the “negative” learning phase of the RBM (courtesy of G. Hinton).
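The four sampling steps of CD-1 can be sketched as follows for a Bernoulli–Bernoulli RBM. This is a minimal sketch under stated assumptions: binary visible units, a hypothetical learning rate `lr`, single-sample estimates of both expectations, and no bias updates (omitted for brevity). The function names are illustrative.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_h(v, W, a, rng):
    """Sample each h_j ~ Bernoulli(sigmoid(sum_i w_ij v_i + a_j))."""
    return [int(rng.random() < sigmoid(sum(W[i][j] * v[i] for i in range(len(v))) + a[j]))
            for j in range(len(a))]

def sample_v(h, W, b, rng):
    """Sample each v_i ~ Bernoulli(sigmoid(sum_j w_ij h_j + b_i))."""
    return [int(rng.random() < sigmoid(sum(W[i][j] * h[j] for j in range(len(h))) + b[i]))
            for i in range(len(b))]

def cd1_update(v0, W, a, b, rng, lr=0.1):
    """One CD-1 step on a single training vector; updates W in place."""
    h0 = sample_h(v0, W, a, rng)   # positive phase: h_0 ~ p(h | v_0)
    v1 = sample_v(h0, W, b, rng)   # one full Gibbs step ...
    h1 = sample_h(v1, W, a, rng)   # ... yields the "negative" sample (v_1, h_1)
    for i in range(len(v0)):
        for j in range(len(h0)):
            # data statistic minus one-step model statistic
            W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
    return v1, h1

rng = random.Random(0)
W = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]   # 3 visible units, 2 hidden units
a, b = [0.0, 0.0], [0.0, 0.0, 0.0]
for _ in range(100):
    for v0 in ([1, 1, 0], [0, 0, 1]):      # toy training vectors
        cd1_update(v0, W, a, b, rng)
```

In practice the statistics are usually averaged over a mini-batch rather than taken from one sample.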

Careful training of RBMs is essential to the success of applying RBM and related deep learning techniques to solve practical problems. See the Technical Report [Reference Hinton158] for a very useful practical guide for training RBMs.

The RBM discussed above is a generative model, which characterizes the input data distribution using hidden variables, with no label information involved. However, when the label information is available, it can be used together with the data to form the joint “data” set. Then the same CD learning can be applied to optimize the approximate “generative” objective function related to data likelihood. Further, and more interestingly, a “discriminative” objective function can be defined in terms of conditional likelihood of labels. This discriminative RBM can be used to “fine tune” the RBM for classification tasks [Reference Larochelle and Bengio142].

Note that the SESM architecture by Ranzato et al. [Reference Ranzato, Boureau and LeCun29] surveyed in Section III is quite similar to the RBM described above. While they both have a symmetric encoder and decoder, and a logistic non-linearity on top of the encoder, the main difference is that the RBM is trained using (approximate) maximum likelihood, whereas SESM is trained by simply minimizing the average energy plus an additional code sparsity term. SESM relies on the sparsity term to prevent flat energy surfaces, while the RBM relies on an explicit contrastive term in the loss, an approximation of the log partition function. Another difference is in the coding strategy: the code units are “noisy” and binary in the RBM, while they are quasi-binary and sparse in SESM.

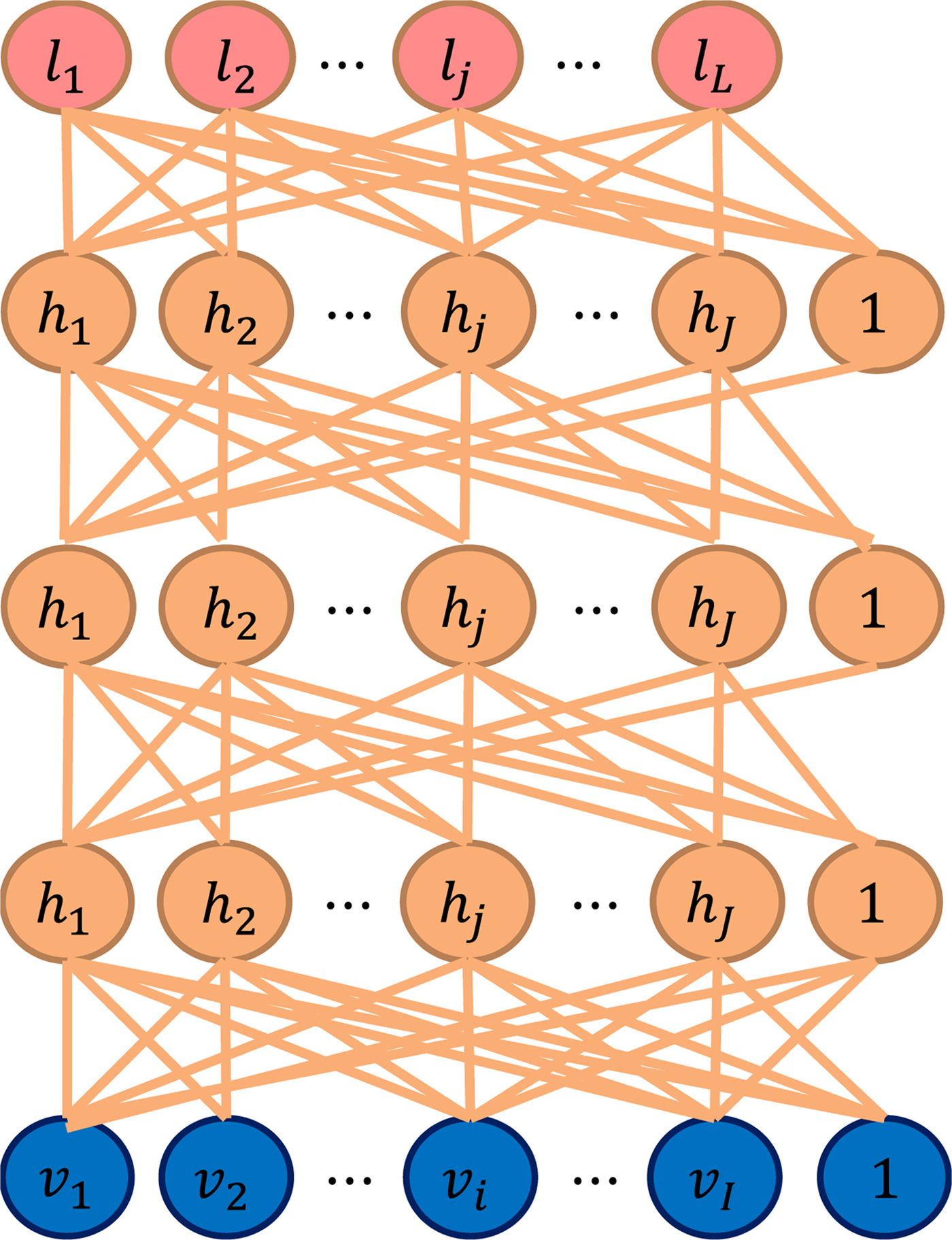

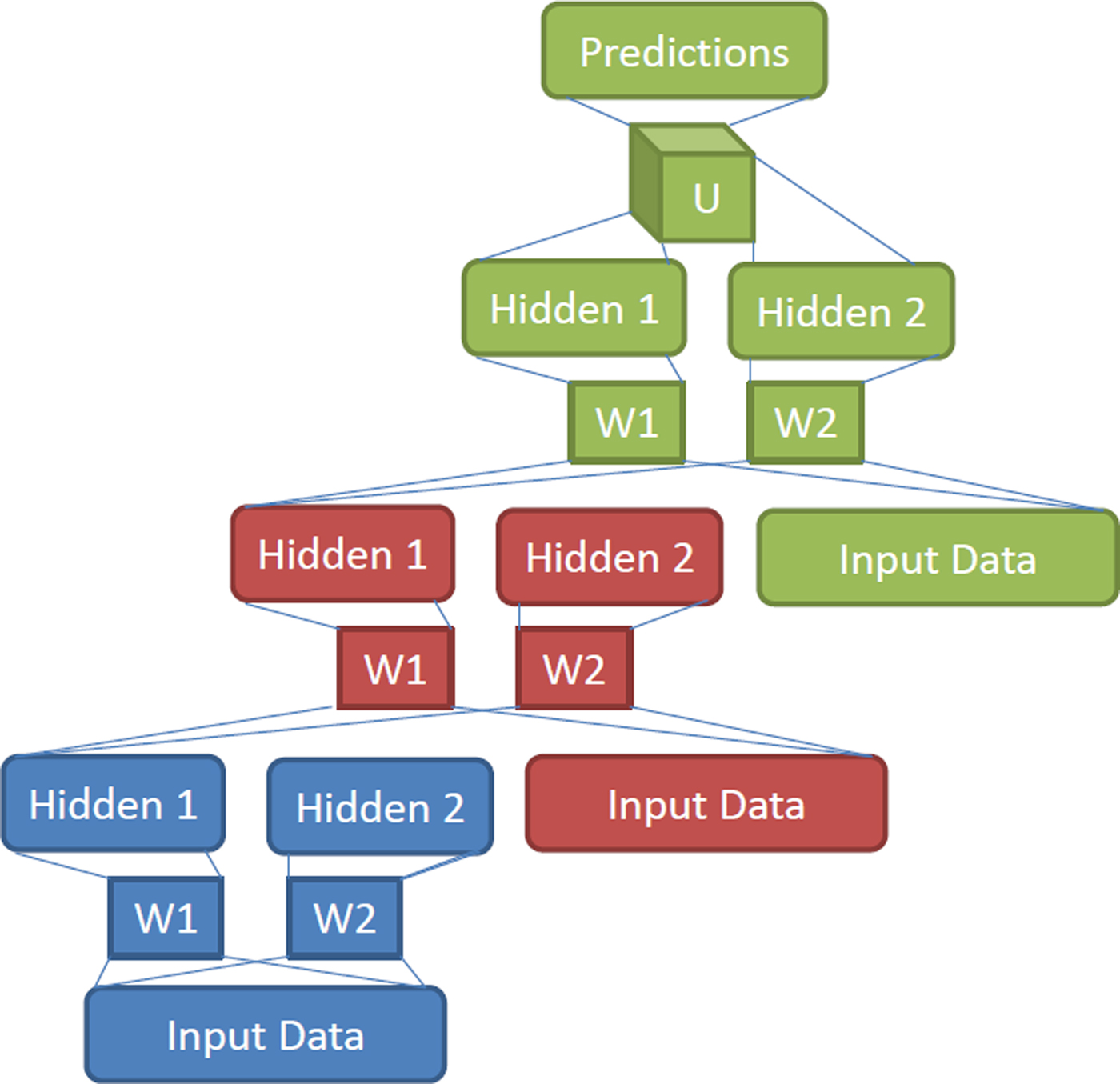

C) Stacking up RBMs to form a DBN/DNN architecture

Stacking a number of the RBMs learned layer by layer from bottom up gives rise to a DBN, an example of which is shown in Fig. 5. The stacking procedure is as follows. After learning a Gaussian–Bernoulli RBM (for applications with continuous features such as speech) or a Bernoulli–Bernoulli RBM (for applications with nominal or binary features such as black–white images or coded text), we treat the activation probabilities of its hidden units as the data for training the Bernoulli–Bernoulli RBM one layer up. The activation probabilities of the second-layer Bernoulli–Bernoulli RBM are then used as the visible data input for the third-layer Bernoulli–Bernoulli RBM, and so on. Some theoretical justification of this efficient layer-by-layer greedy learning strategy is given in [Reference Hinton, Osindero and Teh3], where it is shown that the stacking procedure above improves a variational lower bound on the likelihood of the training data under the composite model. That is, the greedy procedure above achieves approximate maximum-likelihood learning. Note that this learning procedure is unsupervised and requires no class labels.
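The stacking procedure can be sketched as below. This is an illustrative sketch rather than a reference implementation: it assumes binary toy data (so the first layer is also Bernoulli–Bernoulli), uses activation probabilities instead of samples in the CD-1 statistics, and the names `train_rbm` and `pretrain_stack`, the epoch count, and the learning rate are hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(v, W, a):
    return [sigmoid(sum(W[i][j] * v[i] for i in range(len(v))) + a[j])
            for j in range(len(a))]

def visible_probs(h, W, b):
    return [sigmoid(sum(W[i][j] * h[j] for j in range(len(h))) + b[i])
            for i in range(len(b))]

def train_rbm(data, n_hidden, rng, epochs=20, lr=0.1):
    """Train one Bernoulli-Bernoulli RBM with CD-1; returns (W, a)."""
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
    a, b = [0.0] * n_hidden, [0.0] * n_visible
    for _ in range(epochs):
        for v0 in data:
            p_h0 = hidden_probs(v0, W, a)
            h0 = [int(rng.random() < p) for p in p_h0]
            v1 = visible_probs(h0, W, b)      # reconstruction (probabilities)
            p_h1 = hidden_probs(v1, W, a)
            for i in range(n_visible):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * p_h0[j] - v1[i] * p_h1[j])
            for j in range(n_hidden):
                a[j] += lr * (p_h0[j] - p_h1[j])
            for i in range(n_visible):
                b[i] += lr * (v0[i] - v1[i])
    return W, a

def pretrain_stack(data, layer_sizes, rng):
    """Greedy layer-by-layer pretraining: each RBM is trained on the
    hidden activation probabilities of the RBM below it."""
    layers, layer_input = [], data
    for n_hidden in layer_sizes:
        W, a = train_rbm(layer_input, n_hidden, rng)
        layers.append((W, a))
        layer_input = [hidden_probs(v, W, a) for v in layer_input]
    return layers, layer_input

rng = random.Random(0)
data = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
layers, top = pretrain_stack(data, layer_sizes=[3, 2], rng=rng)
```

No labels appear anywhere in the loop, which reflects the unsupervised nature of the procedure.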

Fig. 5. Illustration of a DBN/DNN architecture.

When applied to classification tasks, the generative pretraining can be followed by or combined with other, typically discriminative, learning procedures that fine-tune all of the weights jointly to improve the performance of the network. This discriminative fine-tuning is performed by adding a final layer of variables that represent the desired outputs or labels provided in the training data. Then, the backpropagation algorithm can be used to adjust or fine-tune the DBN weights and use the final set of weights in the same way as for the standard feedforward neural network. What goes to the top, label layer of this DNN depends on the application. For speech recognition applications, the top layer, denoted by “l 1, l 2, … l j , …, l L ,” in Fig. 5, can represent either syllables, phones, subphones, phone states, or other speech units used in the HMM-based speech recognition system.

The generative pretraining described above has produced excellent phone and speech recognition results on a wide variety of tasks, which will be surveyed in Section VII. Further research has also shown the effectiveness of other pretraining strategies. As an example, greedy layer-by-layer training may be carried out with an additional discriminative term added to the generative cost function at each level. And without generative pretraining, purely discriminative training of DNNs from random initial weights using the traditional stochastic gradient descent method has been shown to work very well when the scales of the initial weights are set carefully and the mini-batch sizes used in stochastic gradient descent, which trade off noisy gradients with convergence speed, are adapted prudently (e.g., with an increasing size over training epochs). Also, the randomization order in creating mini-batches needs to be judiciously determined. Importantly, it was found effective to learn a DNN by starting with a shallow neural net with a single hidden layer. Once this has been trained discriminatively (using early stopping to avoid overfitting), a second hidden layer is inserted between the first hidden layer and the labeled softmax output units, and the expanded deeper network is again trained discriminatively. This can be continued until the desired number of hidden layers is reached, after which a full backpropagation “fine tuning” is applied. This discriminative “pretraining” procedure is found to work well in practice (e.g., [Reference Seide, Li and Yu155]).

This type of discriminative “pretraining” procedure is closely related to the learning algorithm developed for the deep architectures called deep convex/stacking network, to be described in Section VI, where interleaving linear and non-linear layers are used in building up the deep architectures in a modular manner, and the original input vectors are concatenated with the output vectors of each module consisting of a shallow neural net. Discriminative “pretraining” is used for positioning a subset of weights in each module in a reasonable space using parallelizable convex optimization, followed by a batch-mode “fine tuning” procedure, which is also parallelizable due to the closed-form constraint between two subsets of weights in each module.

Further, purely discriminative training of the full DNN from random initial weights is now known to work much better than had been thought in early days, provided that the scales of the initial weights are set carefully, a large amount of labeled training data is available, and mini-batch sizes over training epochs are set appropriately. Nevertheless, generative pretraining still improves test performance, sometimes by a significant amount especially for small tasks. Layer-by-layer generative pretraining was originally done using RBMs, but various types of autoencoder with one hidden layer can also be used.

D) Interfacing DNN with HMM

A DBN/DNN discussed above is a static classifier with input vectors having a fixed dimensionality. However, many practical pattern recognition and information-processing problems, including speech recognition, machine translation, natural language understanding, video processing and bio-information processing, require sequence recognition. In sequence recognition, sometimes called classification with structured input/output, the dimensionality of both inputs and outputs is variable.

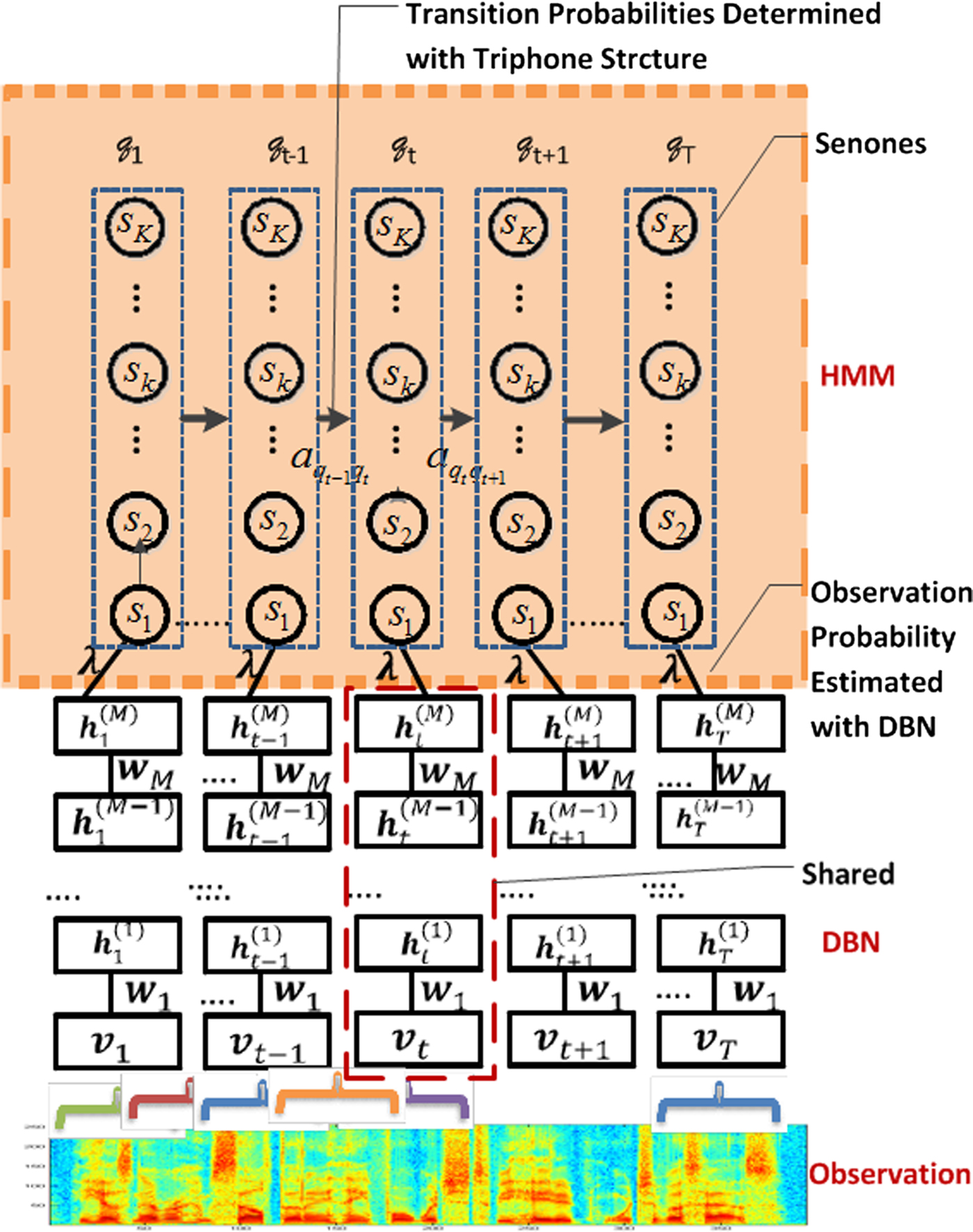

The HMM, based on dynamic programming operations, is a convenient tool to help port the strength of a static classifier to handle dynamic or sequential patterns. Thus, it is natural to combine DBN/DNN and HMM to bridge the gap between static and sequence pattern recognition. An architecture that shows the interface between a DNN and HMM is provided in Fig. 6. This architecture has been successfully used in speech recognition experiments as reported in [Reference Dahl, Yu, Deng and Acero25].

Fig. 6. Interface between DBN–DNN and HMM to form a DNN–HMM. This architecture has been successfully used in speech recognition experiments reported in [Reference Dahl, Yu, Deng and Acero25].
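To make the role of dynamic programming concrete, the sketch below decodes a state sequence from per-frame DNN outputs with the Viterbi algorithm. Several details are assumptions not stated in the text above: the posteriors, priors, and transition probabilities are made-up toy values, and the DNN posteriors are divided by state priors to obtain scaled likelihoods, a common choice in hybrid systems.

```python
import math

def viterbi(log_emit, log_trans, log_init):
    """Most likely HMM state sequence by dynamic programming.

    log_emit[t][s]  : emission score of state s at frame t
    log_trans[r][s] : log transition probability from state r to state s
    log_init[s]     : log initial-state probability
    """
    T, S = len(log_emit), len(log_init)
    score = [log_init[s] + log_emit[0][s] for s in range(S)]
    backptr = []
    for t in range(1, T):
        prev, score, ptr = score, [], []
        for s in range(S):
            best = max(range(S), key=lambda r: prev[r] + log_trans[r][s])
            ptr.append(best)
            score.append(prev[best] + log_trans[best][s] + log_emit[t][s])
        backptr.append(ptr)
    # Trace back from the best final state.
    state = max(range(S), key=lambda s: score[s])
    path = [state]
    for ptr in reversed(backptr):
        state = ptr[state]
        path.append(state)
    return path[::-1]

# Hypothetical per-frame DNN softmax outputs p(state | frame) for 2 HMM states.
posteriors = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.1, 0.9]]
priors = [0.5, 0.5]   # assumed state priors
# Assumption: posterior / prior is used as a scaled likelihood.
log_emit = [[math.log(p / q) for p, q in zip(frame, priors)] for frame in posteriors]
log_trans = [[math.log(0.7), math.log(0.3)],
             [math.log(0.3), math.log(0.7)]]
log_init = [math.log(0.5), math.log(0.5)]
path = viterbi(log_emit, log_trans, log_init)   # [0, 0, 1, 1]
```

The static classifier scores each frame independently; the HMM transition structure is what ties the frame-level scores into a single sequence-level decision.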

It is important to note that the unique elasticity of the temporal dynamics of speech, as elaborated in [Reference Deng53], would require temporally-correlated models better than the HMM for the ultimate success of speech recognition. Integrating such dynamic models having realistic co-articulatory properties with the DNN and possibly other deep learning models to form a coherent dynamic deep architecture is a challenging new research direction.

VI. DISCRIMINATIVE ARCHITECTURES: DSN AND RECURRENT NETWORK

A) Introduction

While the DNN just reviewed has been shown to be extremely powerful in performing recognition and classification tasks including speech recognition and image classification, training a DBN has proven to be more difficult computationally. In particular, conventional techniques for training a DNN at the fine-tuning phase involve a stochastic gradient descent learning algorithm, which is extremely difficult to parallelize across machines. This makes learning at large scale practically impossible. For example, it has been possible to use one single, very powerful GPU machine to train DNN-based speech recognizers with dozens to a few hundred hours of speech training data with remarkable results. It is very difficult, however, to scale up this success with thousands or more hours of training data.

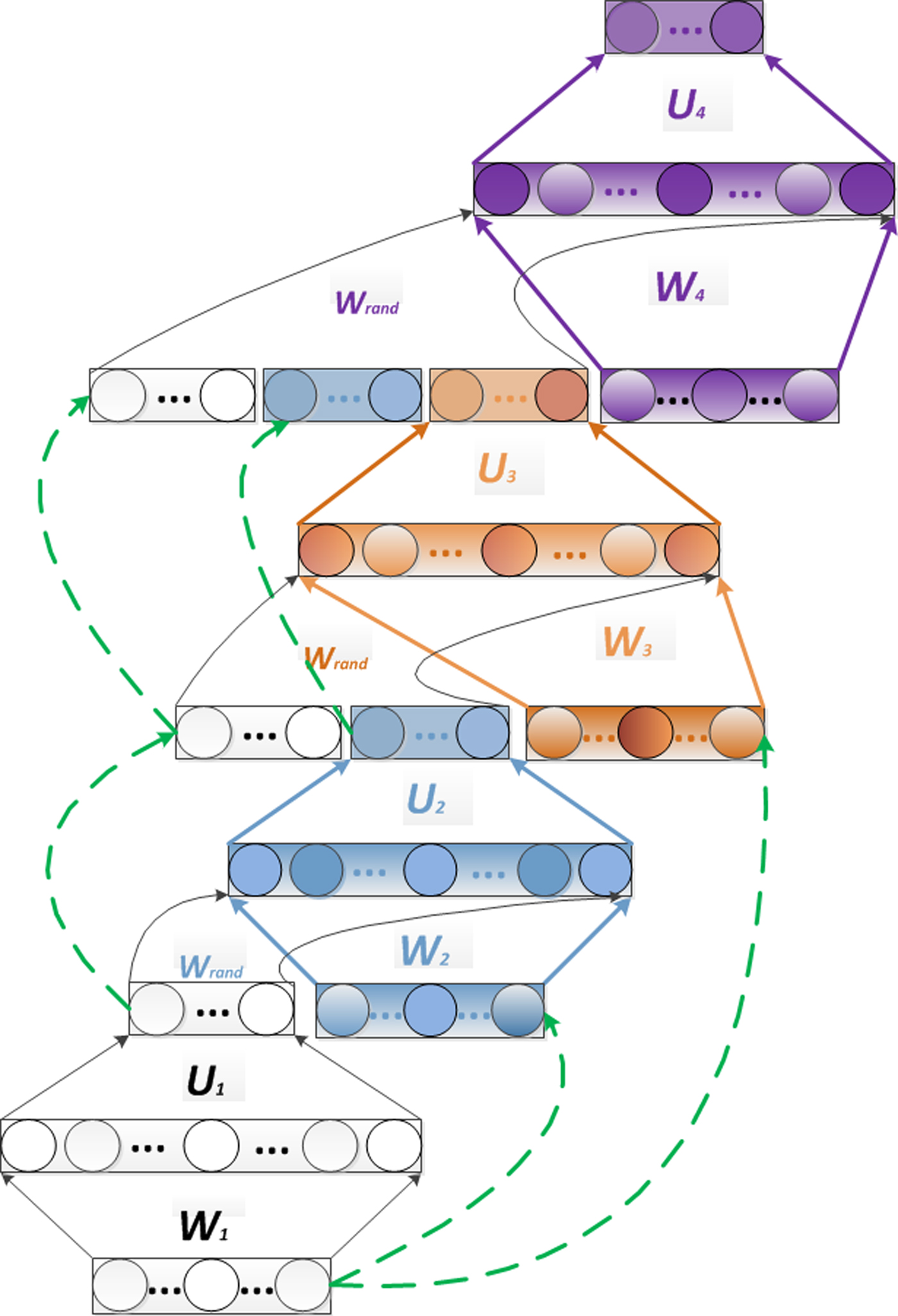

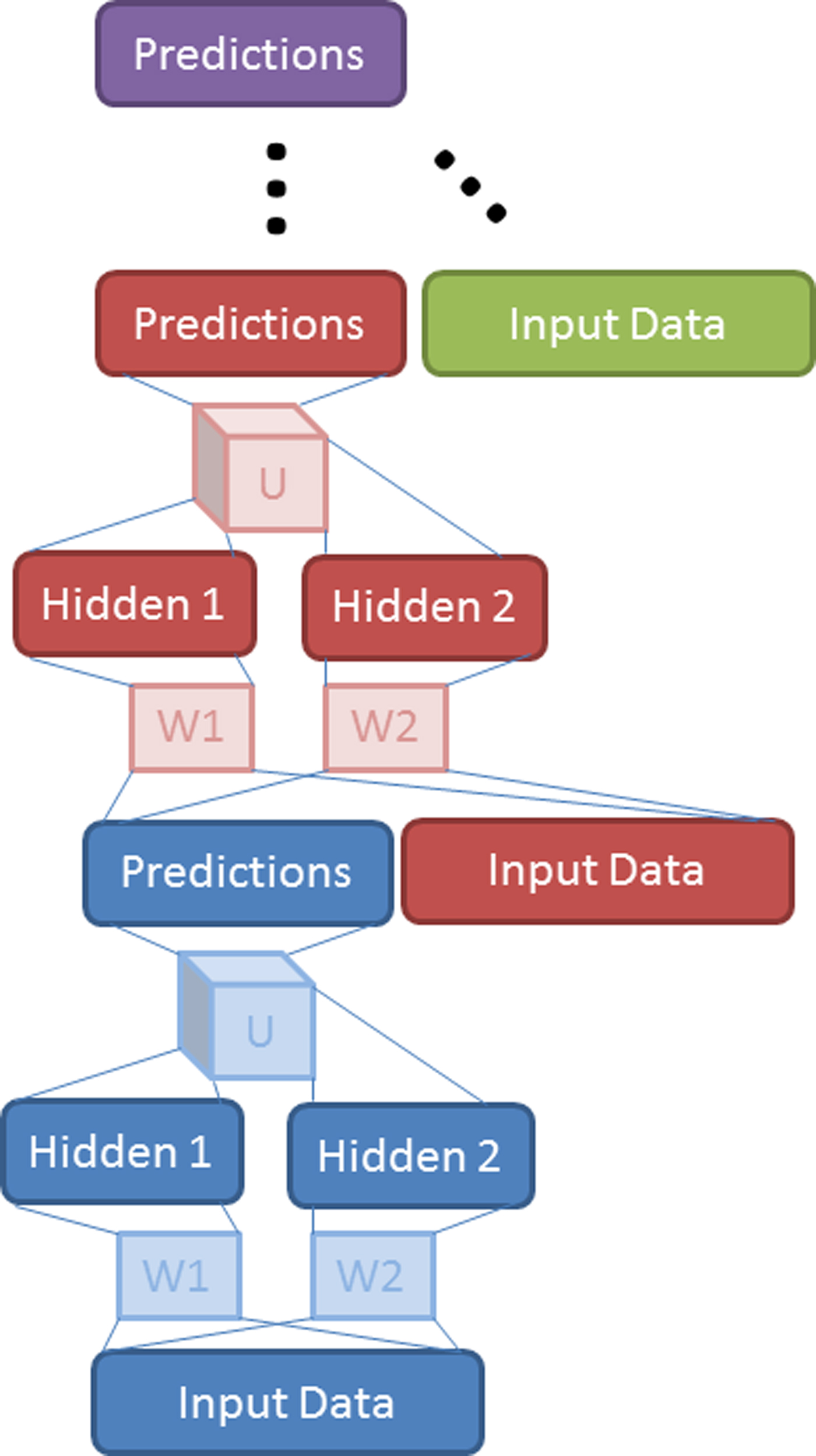

Here we describe a new deep learning architecture, DSN, which attacks the learning scalability problem. This section is based in part on the recent publications of [Reference Deng, Tur, He and Hakkani-Tur11, Reference Deng and Yu107, Reference Hutchinson, Deng and Yu111, Reference Hutchinson, Deng and Yu112] with expanded discussions.

The central idea of DSN design relates to the concept of stacking, as proposed originally in [Reference Wolpert159], where simple modules of functions or classifiers are composed first and then “stacked” on top of each other in order to learn complex functions or classifiers. Various ways of implementing stacking operations have been developed in the past, typically making use of supervised information in the simple modules. The new features for the stacked classifier at a higher level of the stacking architecture often come from concatenation of the classifier output of a lower module and the raw input features. In [Reference Cohen and de Carvalho160], the simple module used for stacking was a CRF. This type of deep architecture was further developed with hidden states added for successful natural language and speech recognition applications where segmentation information is unknown in the training data [Reference Yu, Wang and Deng96]. Convolutional neural networks, as in [Reference Jarrett, Kavukcuoglu and LeCun161], can also be considered as a stacking architecture, but the supervision information is typically not used until the final stacking module.

The DSN architecture was originally presented in [Reference Deng and Yu107], which also used the name Deep Convex Network or DCN to emphasize the convex nature of the main learning algorithm used for learning the network. The DSN discussed in this section makes use of supervision information for stacking each of the basic modules, each of which takes the simplified form of a multi-layer perceptron. In the basic module, the output units are linear and the hidden units are sigmoidal non-linear. The linearity in the output units permits highly efficient, parallelizable, and closed-form estimation (a result of convex optimization) for the output network weights given the hidden units' activities. Owing to the closed-form constraints between the input and output weights, the input weights can also be elegantly estimated in an efficient, parallelizable, batch-mode manner.

The name “convex” used in [Reference Deng and Yu107] accentuates the role of convex optimization in learning the output network weights given the hidden units' activities in each basic module. It also points to the importance of the closed-form constraints, derived from the convexity, between the input and output weights. Such constraints make learning the remaining network parameters (i.e., the input network weights) much easier than otherwise, enabling batch-mode learning of DSN that can be distributed over CPU clusters. In more recent publications, the name DSN was used to emphasize the key operation of stacking.

B) An architectural overview of DSN

A DSN, shown in Fig. 7, includes a variable number of layered modules, wherein each module is a specialized neural network consisting of a single hidden layer and two trainable sets of weights. In Fig. 7, only four such modules are illustrated, where each module is shown with a separate color. (In practice, up to a few hundred modules have been efficiently trained and used in image and speech classification experiments.)

Fig. 7. A DSN architecture with input–output stacking. Only four modules are illustrated, each with a distinct color. Dashed lines denote copying layers.

The lowest module in the DSN comprises a first linear layer with a set of linear input units, a non-linear layer with a set of non-linear hidden units, and a second linear layer with a set of linear output units.

The hidden layer of the lowest module of a DSN comprises a set of non-linear units that are mapped to the input units by way of a first, lower-layer weight matrix, which we denote by W . For instance, the weight matrix may comprise a plurality of randomly generated values between zero and one, or the weights of an RBM trained separately. The non-linear units may be sigmoidal units that are configured to perform non-linear operations on weighted outputs from the input units (weighted in accordance with the first weight matrix W ).

The second, linear layer in any module of a DSN includes a set of output units that are representative of the targets of classification. The non-linear units in each module of the DSN may be mapped to a set of the linear output units by way of a second, upper-layer weight matrix, which we denote by U . This second weight matrix can be learned by way of a batch learning process, such that learning can be undertaken in parallel. Convex optimization can be employed in connection with learning U . For instance, U can be learned based at least in part upon the first weight matrix W , values of the coded classification targets, and values of the input units.
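A minimal sketch of one way to realize this is given below: with the lower-layer weights W held fixed, the upper-layer weights U are obtained from a single linear solve, and a second module is fed the raw input concatenated with the first module's output. The squared-error objective, the small ridge term (added only for numerical stability), the toy data, and all function names are assumptions made for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_layer(X, W):
    """H[n][l] = sigmoid(sum_d X[n][d] * W[d][l]) for every sample n."""
    return [[sigmoid(sum(x[d] * W[d][l] for d in range(len(x))))
             for l in range(len(W[0]))] for x in X]

def solve(A, B):
    """Solve A Z = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[r]) + list(B[r]) for r in range(n)]   # augmented matrix [A | B]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        M[c] = [x / M[c][c] for x in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [row[n:] for row in M]

def fit_upper_weights(H, T, ridge=1e-3):
    """Closed-form least squares: U minimizes ||H U - T||^2 + ridge * ||U||^2."""
    L, C, N = len(H[0]), len(T[0]), len(H)
    A = [[sum(H[n][k] * H[n][l] for n in range(N)) + (ridge if k == l else 0.0)
          for l in range(L)] for k in range(L)]
    B = [[sum(H[n][k] * T[n][c] for n in range(N)) for c in range(C)] for k in range(L)]
    return solve(A, B)

def predict(H, U):
    return [[sum(h[l] * U[l][c] for l in range(len(h))) for c in range(len(U[0]))]
            for h in H]

rng = random.Random(0)
X = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]]   # last column acts as a bias input
T = [[1, 0], [0, 1], [0, 1], [1, 0]]                # one-hot coded targets
n_hidden = 8

# Module 1: W holds random values in [0, 1); U comes from the closed-form solve.
W1 = [[rng.random() for _ in range(n_hidden)] for _ in range(len(X[0]))]
U1 = fit_upper_weights(hidden_layer(X, W1), T)
Y1 = predict(hidden_layer(X, W1), U1)

# Module 2: its input is the raw input concatenated with module 1's output.
X2 = [x + y for x, y in zip(X, Y1)]
W2 = [[rng.random() for _ in range(n_hidden)] for _ in range(len(X2[0]))]
U2 = fit_upper_weights(hidden_layer(X2, W2), T)
Y2 = predict(hidden_layer(X2, W2), U2)
```

Because every sum in `fit_upper_weights` runs over the whole training set at once, the computation is naturally batch-mode and can be split across workers by partitioning the samples.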